A Fish Target Identification and Counting Method Based on DIDSON Sonar and YOLOv5 Model

Abstract

1. Introduction

2. Methodology

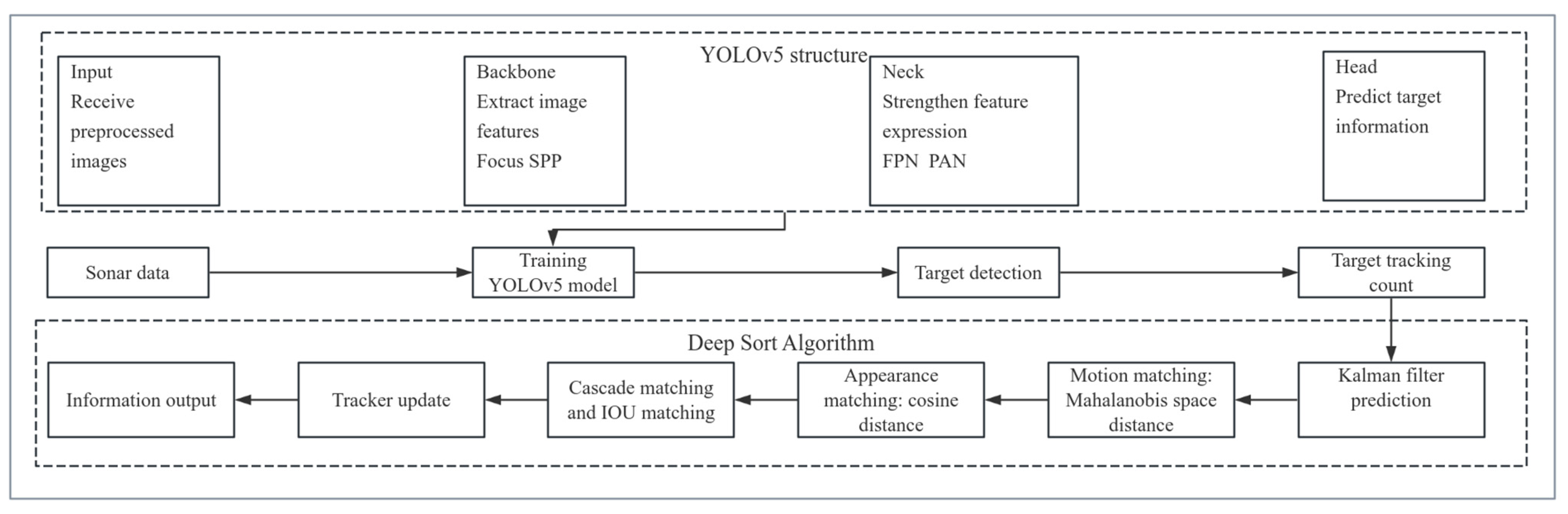

2.1. YOLOv5 Target Detection Model

2.2. DeepSort Multi-Object Tracking Algorithm

2.3. Field Data Acquisition

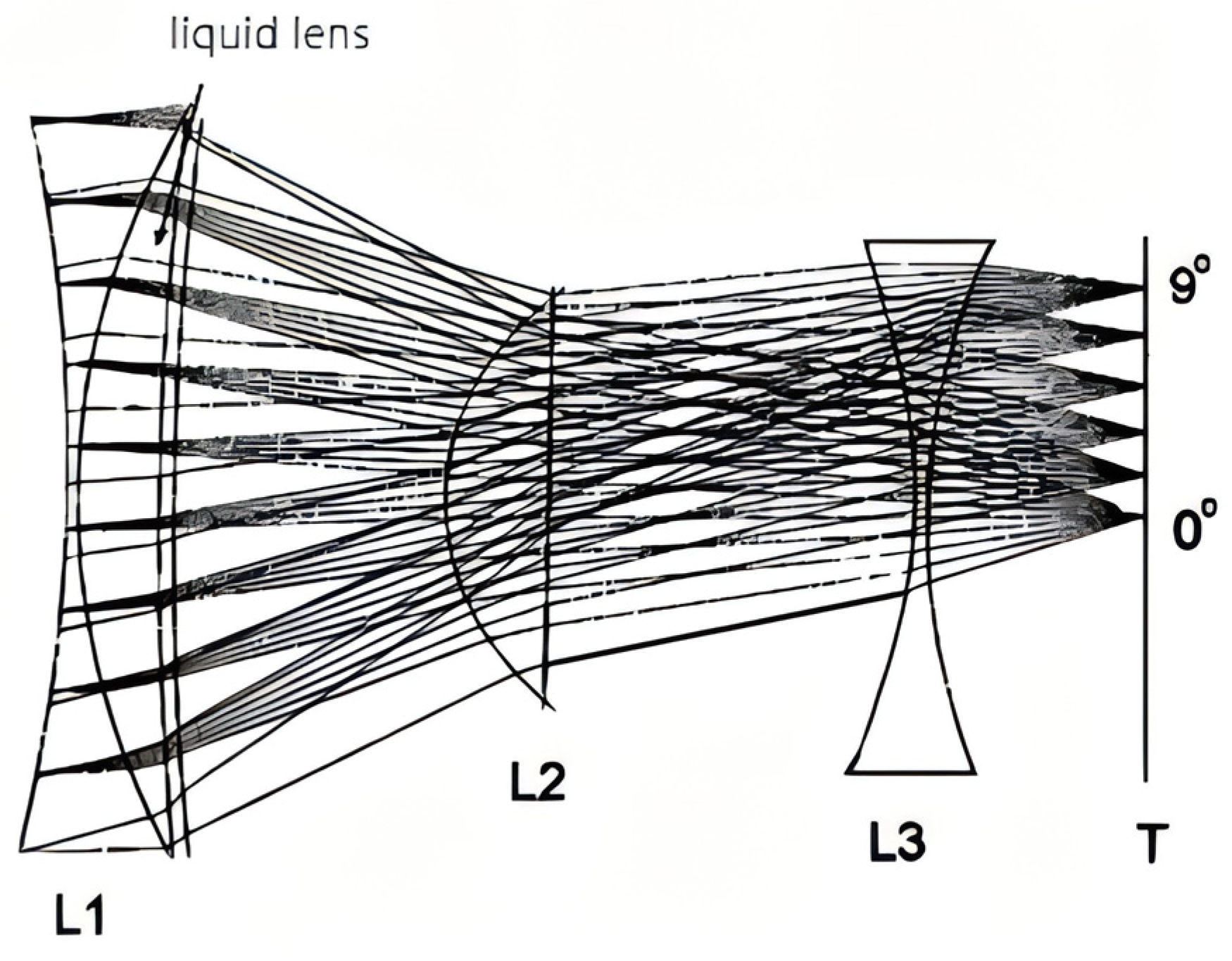

2.3.1. DIDSON Dual-Frequency Identification Sonar

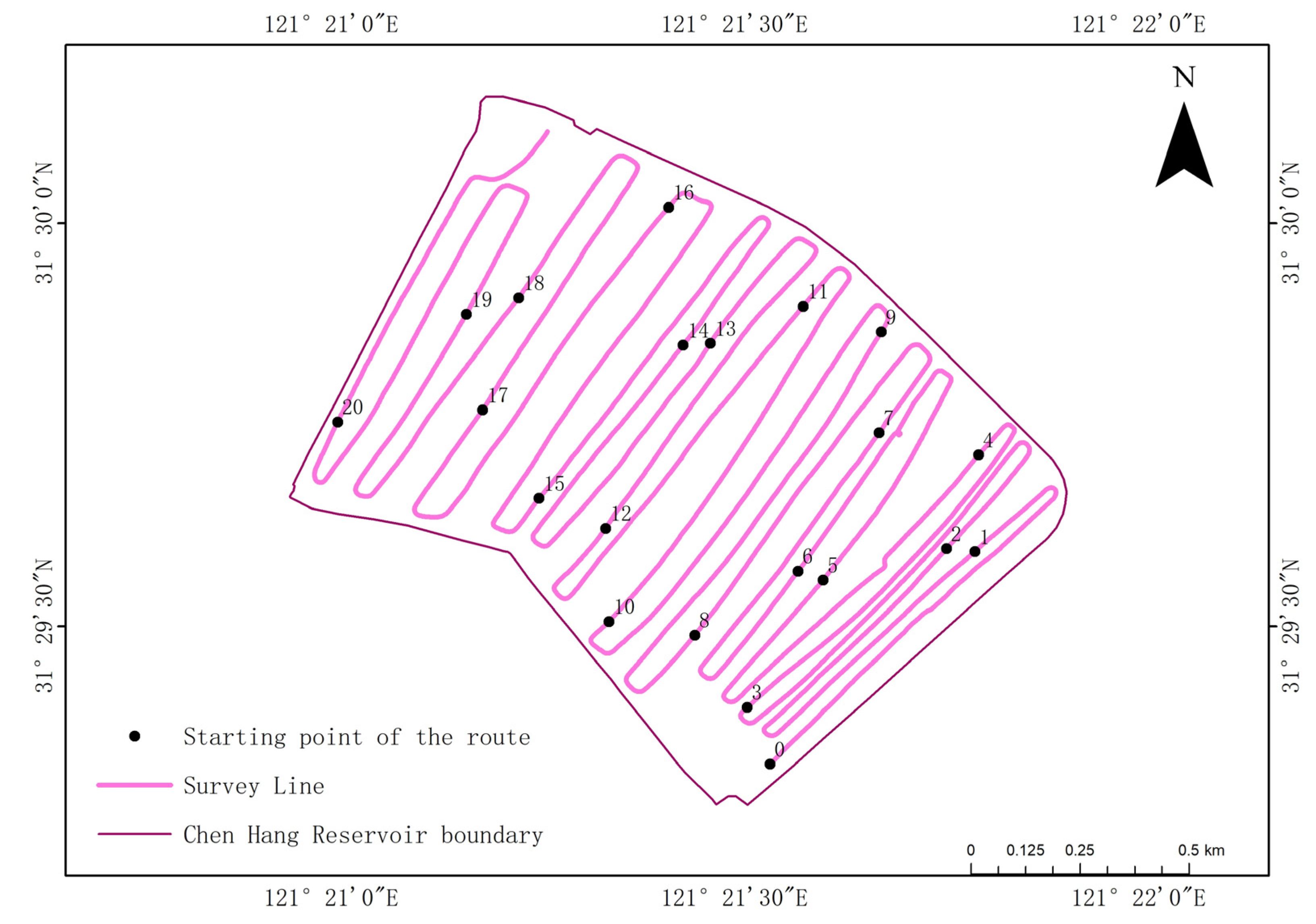

2.3.2. Data Acquisition

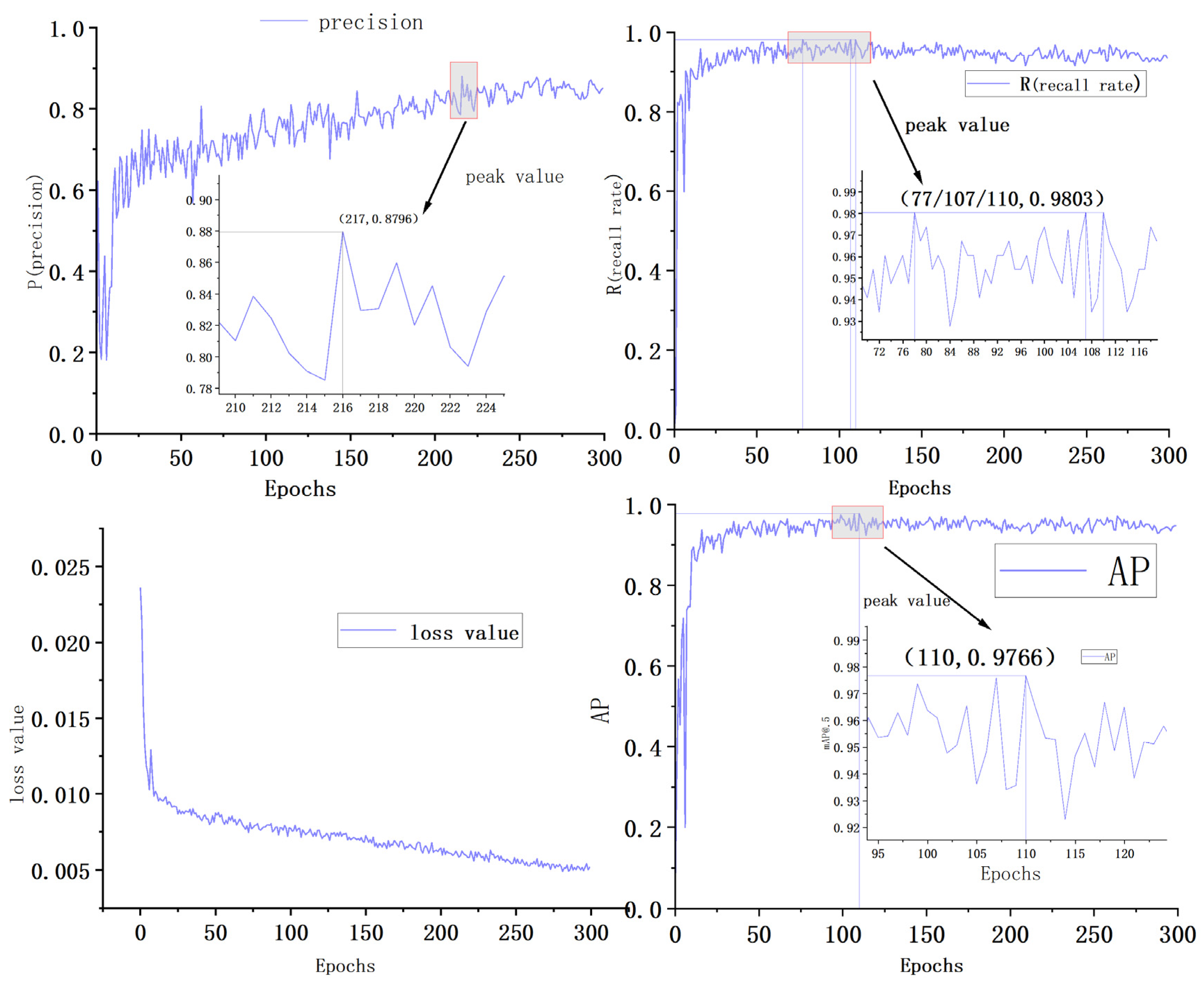

2.4. YOLOv5 Model Training

3. Experiments and Analyses

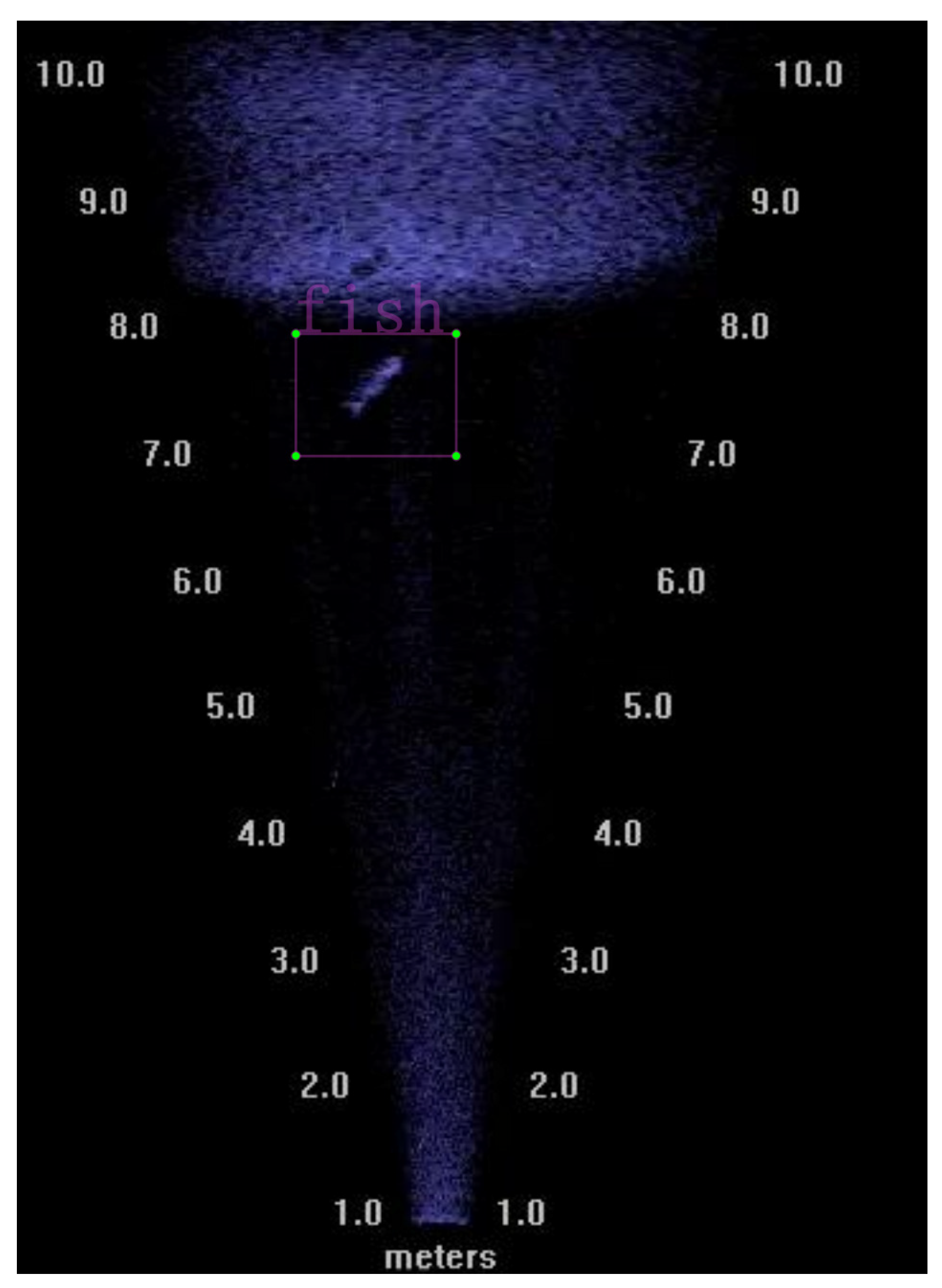

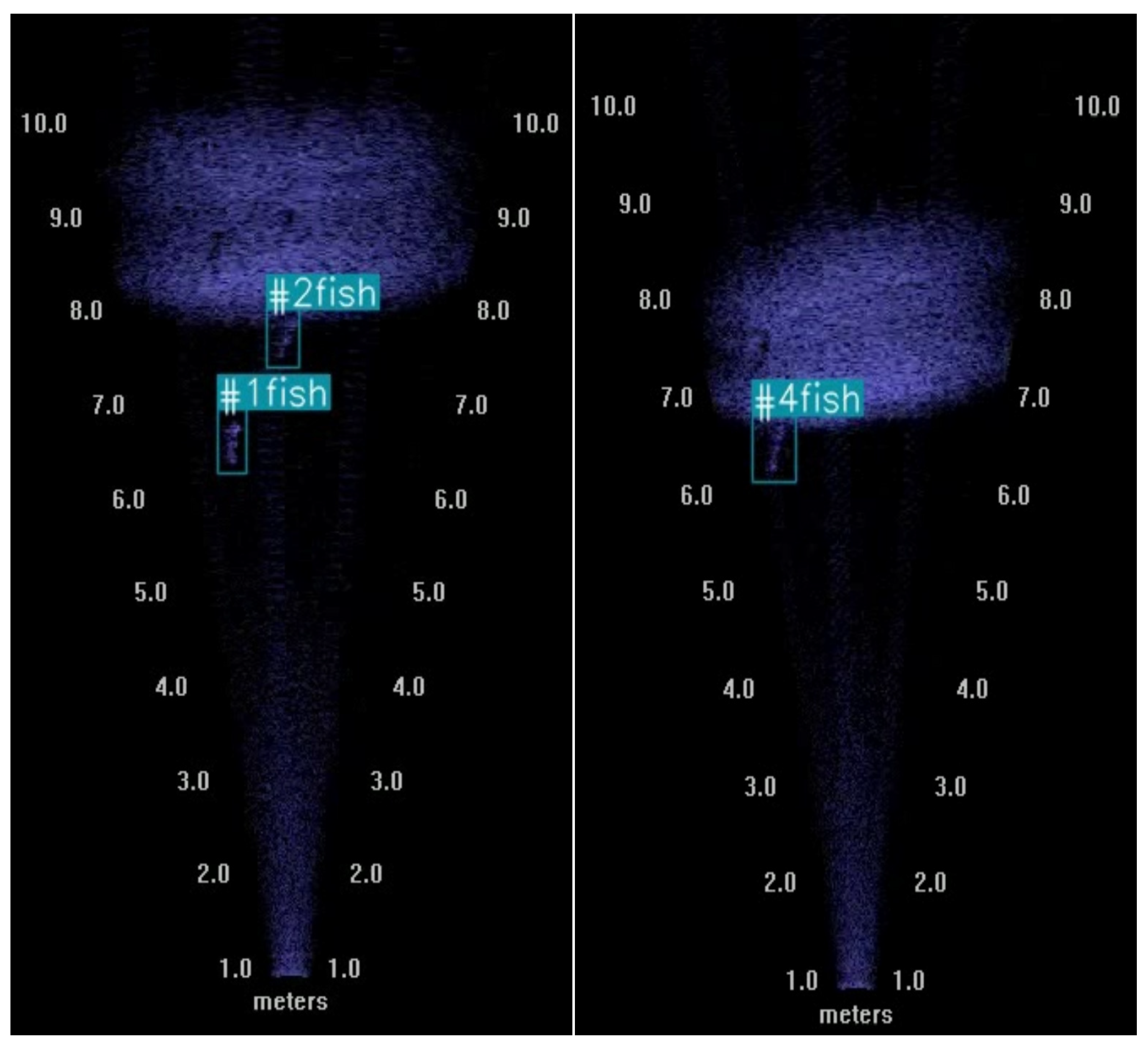

3.1. Target Identification and Counting

3.2. Accuracy Evaluation

3.2.1. Random Sampling

- Excessive clustering of fish led to errors in identification.

- Fish near the bottom were prone to misidentification: because of the linear propagation of sound, their echoes resembled the bottom return.

- Complex underwater terrain contributed to recognition errors.

3.2.2. Line Inspection

4. Discussion

4.1. Sonar Images

- Maintain an appropriate vessel speed, ideally around 5–6 km/h.

- Choose a vessel with minimal noise.

- Ensure the equipment is securely installed to avoid vibrations.

- Whenever possible, select clean water areas to reduce reflections from debris in the water.

4.2. Identification and Counting

4.3. Manual Identification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Specification/Mode | Low Frequency | High Frequency |
|---|---|---|
| Operating Frequency | 1.0 MHz | 1.8 MHz |
| Beam Width | Horizontal 0.4°, Vertical 12° | Horizontal 0.3°, Vertical 12° |
| Number of Beams | 48 | 96 |
| Source Level | 202 dB re 1 μPa at 1 m | 206 dB re 1 μPa at 1 m |
| Start Range | 0.75 m to 40 m | 0.38 m to 11.63 m |
| Maximum Frame Rate | 4–21 frames/s | |
| Field of View | 29° | |
| Remote Focusing | 1 m to maximum range | |
| Power Consumption | Watts typical | |
| Weight in Air | 7.0 kg (15.4 lbs) | |
| Weight in Water | −0.61 kg (1.33 lbs) | |
| Dimensions | 30.7 cm × 20.6 cm × 17.1 cm | |

| Survey Line | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 (fish) | 188 | 216 | 143 | 122 | 145 | 100 | 70 | 49 | 71 | 100 | 63 |

| Survey Line | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 (fish) | 70 | 66 | 12 | 44 | 36 | 48 | 134 | 44 | 22 | 17 | 1760 |

| ID | Frame Count | First Frame | Last Frame | Avg_Depth (m) | Lat | Lon |
|---|---|---|---|---|---|---|
| 1 | 11 | 4 | 14 | 5.87 | 31.49176567 | 121.355733 |
| 2 | 13 | 7 | 19 | 6.70 | 31.49177917 | 121.3557462 |
| 3 | 14 | 21 | 34 | 6.36 | 31.4918065 | 121.3557723 |
| 4 | 7 | 121 | 127 | 6.43 | 31.49198483 | 121.355938 |
| 5 | 4 | 155 | 158 | 5.40 | 31.49204017 | 121.355989 |
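A per-track summary like the one above can be derived from frame-level tracker output. The sketch below is only an assumption about how such a table might be built, not the authors' code: the `Detection` record, its field names, and the sample values are hypothetical, and averaging latitude and longitude over the track is an illustrative choice. Under this scheme the fish count is the number of distinct track IDs.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Detection:
    """One tracked detection in one sonar frame (hypothetical record layout)."""
    frame: int       # sonar frame index
    track_id: int    # ID assigned by the multi-object tracker
    depth_m: float   # target depth in this frame, in metres
    lat: float       # vessel latitude when the frame was recorded
    lon: float       # vessel longitude when the frame was recorded


def summarise_tracks(detections):
    """Group detections by track ID and return one summary row per track."""
    by_track = defaultdict(list)
    for det in detections:
        by_track[det.track_id].append(det)

    rows = []
    for track_id in sorted(by_track):
        dets = sorted(by_track[track_id], key=lambda d: d.frame)
        rows.append({
            "id": track_id,
            "frame_count": len(dets),          # frames in which the track appears
            "first_frame": dets[0].frame,
            "last_frame": dets[-1].frame,
            "avg_depth_m": round(mean(d.depth_m for d in dets), 2),
            "lat": mean(d.lat for d in dets),  # assumption: mean position over the track
            "lon": mean(d.lon for d in dets),
        })
    return rows


# Synthetic detections for two tracks; the values are made up for illustration.
detections = [Detection(f, 1, 5.0 + 0.1 * (f % 2), 31.4917, 121.3557) for f in range(4, 15)]
detections += [Detection(f, 2, 6.5, 31.4918, 121.3558) for f in range(7, 20)]

summary = summarise_tracks(detections)
fish_count = len(summary)  # one fish per distinct track ID
for row in summary:
    print(row)
print("fish counted:", fish_count)
```

In every row of the table, Frame Count equals Last Frame minus First Frame plus one, which is what this sketch produces when a track has no missing frames.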

| | Manual Identification | Total Identifications | Correct Identifications | Unidentified | Misidentifications | Accuracy (%) | Unidentification Rate (%) | Misidentification Rate (%) |
|---|---|---|---|---|---|---|---|---|
| Count (fish) | 675 | 588 | 564 | 87 | 24 | 83.56% | 12.89% | 3.56% |
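The three rates in the table above are consistent with each count being divided by the manual count. That relationship is inferred from the numbers rather than stated in the table, so the following Python sketch should be read as a check under that assumption; the function and parameter names are illustrative.

```python
def identification_rates(manual, correct, misidentified):
    """Return (accuracy, unidentified rate, misidentification rate) in percent.

    Assumes every rate is expressed relative to the manual count, and that
    unidentified fish are those in the manual count the model did not report.
    """
    detected = correct + misidentified   # total model identifications
    unidentified = manual - detected     # fish the model missed

    def to_pct(n):
        return round(100.0 * n / manual, 2)

    return to_pct(correct), to_pct(unidentified), to_pct(misidentified)


accuracy, unidentified_rate, misidentified_rate = identification_rates(
    manual=675, correct=564, misidentified=24
)
print(accuracy, unidentified_rate, misidentified_rate)
```

With these definitions the three rates sum to 100%, since every manually counted fish is either correctly identified, missed, or matched by a misidentification.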

| Survey Line | Manual Identification Count | YOLOv5 Total Identifications Count | Correct Identifications Count | Unidentified Count | Misidentifications Count | Accuracy (%) | Unidentification Rate (%) | Misidentification Rate (%) |
|---|---|---|---|---|---|---|---|---|
| 10 | 71 | 63 | 61 | 8 | 2 | 85.92% | 11.27% | 2.82% |
| 11 | 83 | 70 | 69 | 13 | 1 | 83.13% | 15.66% | 1.20% |
| 12 | 75 | 66 | 63 | 9 | 3 | 84.00% | 12.00% | 4.00% |
| Average | | | | 10 | 3 | 84.28% | 13.10% | 2.62% |
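The percentages in the Average row match rates pooled over the three survey lines (summed counts divided by the summed manual count), not the mean of the per-line percentages. This is an observation from the arithmetic, not something the table states. A short Python sketch of the difference, with illustrative variable names:

```python
# Per-line counts copied from the table above.
lines = {
    10: {"manual": 71, "correct": 61, "unidentified": 8, "misidentified": 2},
    11: {"manual": 83, "correct": 69, "unidentified": 13, "misidentified": 1},
    12: {"manual": 75, "correct": 63, "unidentified": 9, "misidentified": 3},
}

total_manual = sum(row["manual"] for row in lines.values())


def pooled_rate(key):
    """Percentage of all manually counted fish that fall in category `key`."""
    return round(100.0 * sum(row[key] for row in lines.values()) / total_manual, 2)


pooled = {key: pooled_rate(key) for key in ("correct", "unidentified", "misidentified")}

# For comparison: the unweighted mean of the three per-line accuracies.
mean_accuracy = round(
    sum(100.0 * row["correct"] / row["manual"] for row in lines.values()) / len(lines), 2
)

print(pooled)
print(mean_accuracy)
```

Pooling weights each line by its fish count, so a line with more fish (line 11 here) pulls the overall accuracy toward its own value.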

| Method | YOLOv5 | YOLOv6 | YOLOv8 | Echoview | Manual Identification |
|---|---|---|---|---|---|
| Survey Line 10 | 63 | 61 | 59 | 60 | 71 |
| Survey Line 11 | 70 | 63 | 64 | 69 | 83 |
| Survey Line 12 | 66 | 67 | 75 | 70 | 75 |
| Processing Time (single survey line) | 3 min | 3 min | 3 min | Approximately 30 min | Approximately 120 min |
| Deviation (total) | 30 | 38 | 31 | 30 | |
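The Deviation (total) row can be reproduced by summing each method's counts over the three survey lines and taking the difference from the manual total. The sketch below assumes that definition. Because no method exceeds the manual count on any of these lines, summing the per-line absolute differences gives the same figures.

```python
# Counts per survey line (10, 11, 12), copied from the table above.
counts = {
    "YOLOv5": [63, 70, 66],
    "YOLOv6": [61, 63, 67],
    "YOLOv8": [59, 64, 75],
    "Echoview": [60, 69, 70],
}
manual = [71, 83, 75]

# Assumed definition: absolute difference between summed counts and the manual total.
deviation = {method: abs(sum(c) - sum(manual)) for method, c in counts.items()}

# Alternative definition: sum of per-line absolute differences.
per_line_deviation = {
    method: sum(abs(a - b) for a, b in zip(c, manual)) for method, c in counts.items()
}

print(deviation)
print(per_line_deviation)
```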


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, W.; Liu, M.; Lu, Q.; Yin, Z.; Zhang, J. A Fish Target Identification and Counting Method Based on DIDSON Sonar and YOLOv5 Model. Fishes 2024, 9, 346. https://doi.org/10.3390/fishes9090346
