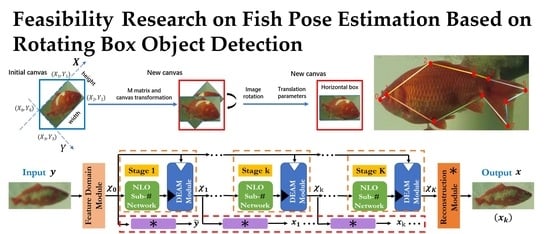

Feasibility Research on Fish Pose Estimation Based on Rotating Box Object Detection

Abstract

1. Introduction

- (1)

- The physiological structure of the golden crucian carp (grass goldfish) is relatively simple: it has no complex human-like joints or limbs with many degrees of freedom, and its postures during purposeful behaviors such as spawning, eating, or reacting to skin infection are highly recognizable.

- (2)

- Although individual golden crucian carp look very similar, the manually annotated dataset was screened and analyzed and its source is reliable, as explained in detail in Sections 2.1 and 2.2.

- (3)

- The ecological fish tank closely reproduces the aquaculture environment. It is therefore more in line with the requirements of the aquaculture industry chain, is free of redundant interference, and allows images to be captured freely from all perspectives.

- (4)

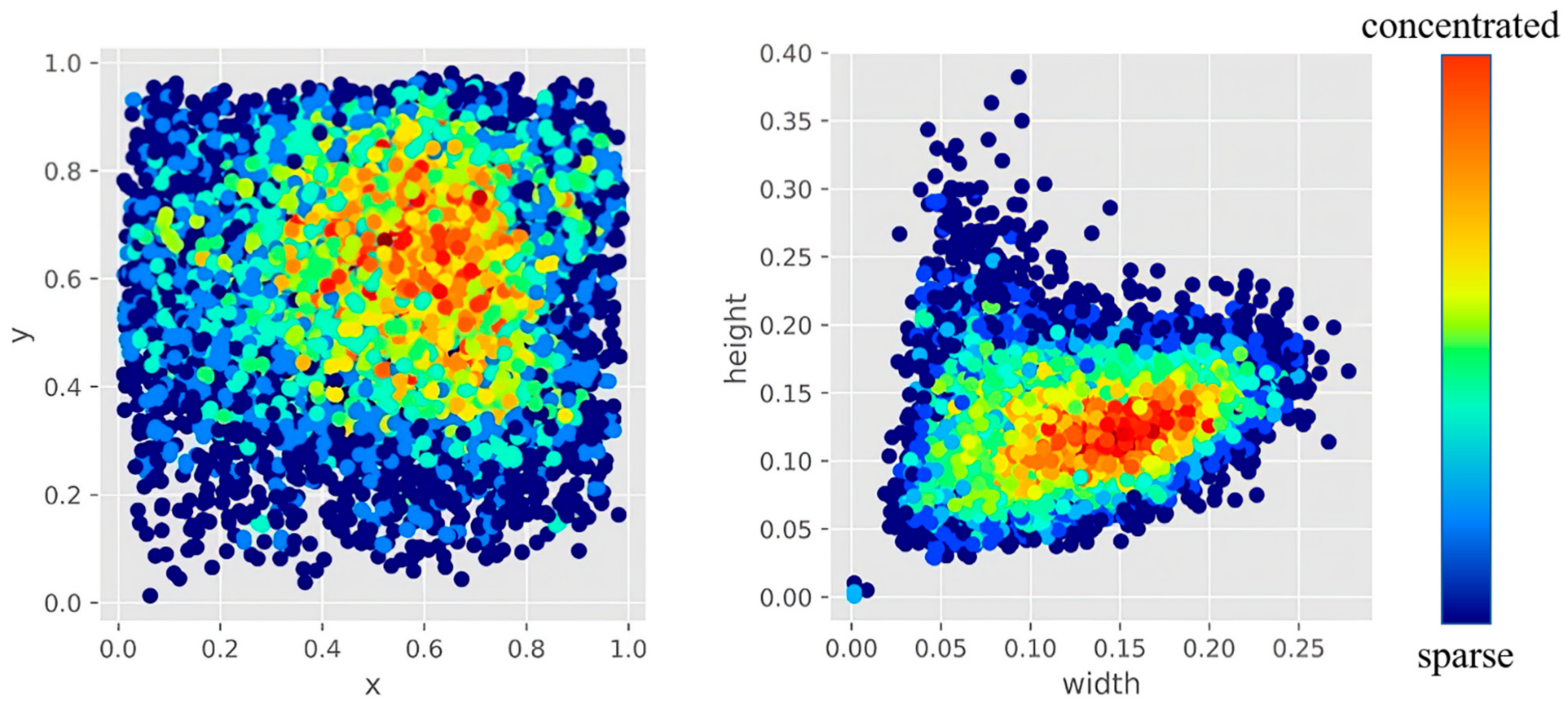

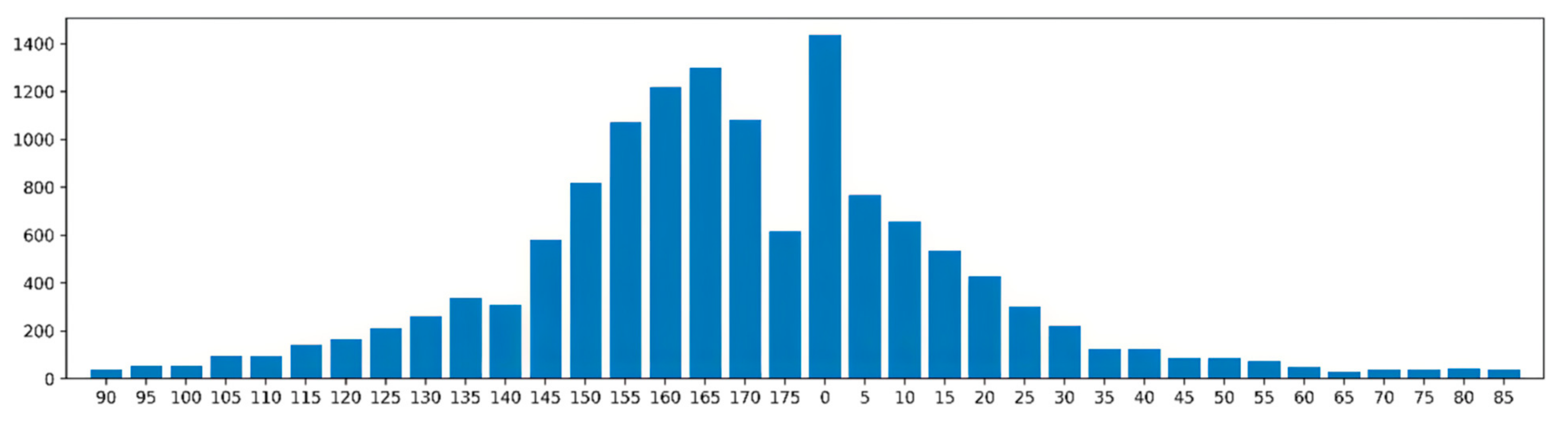

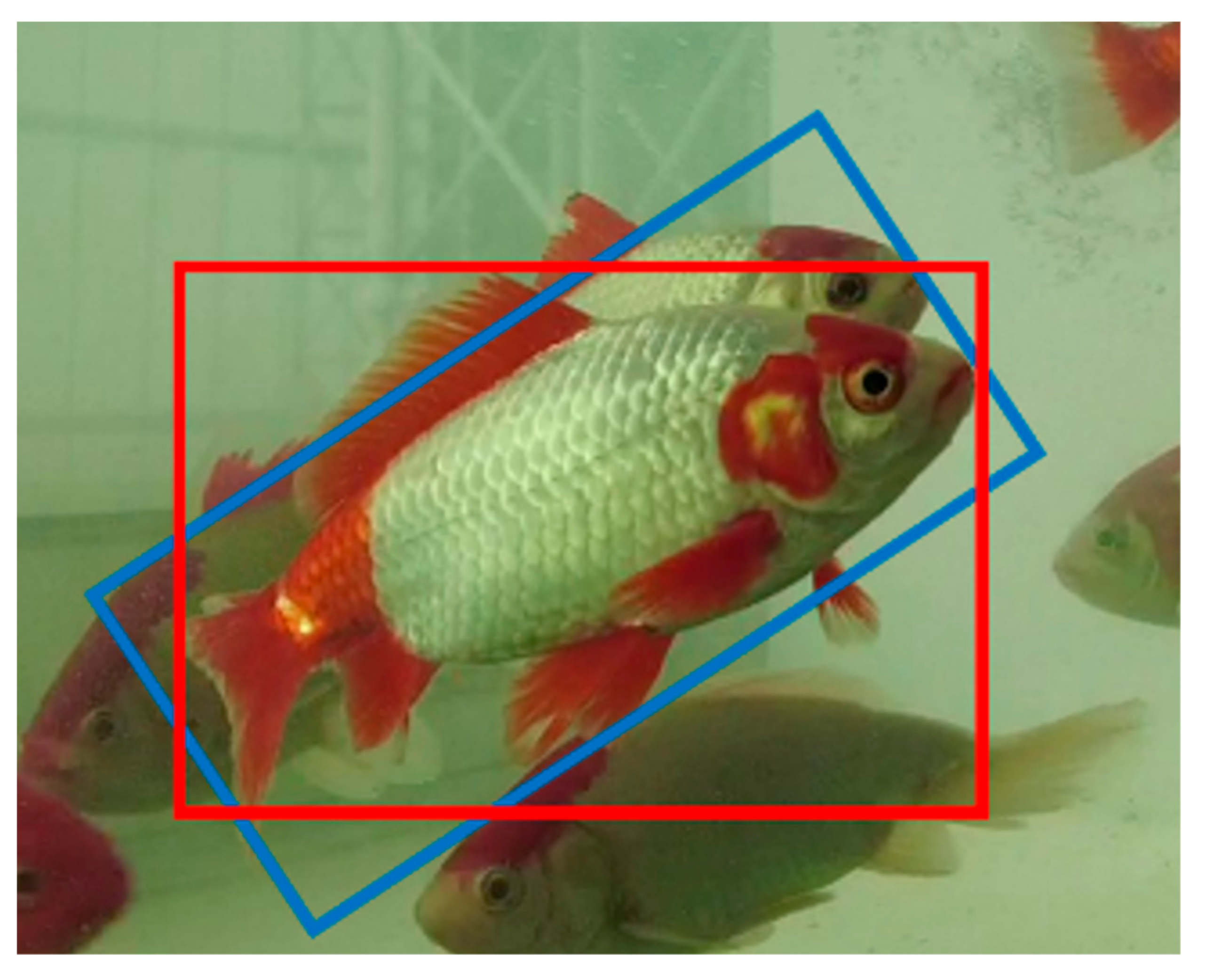

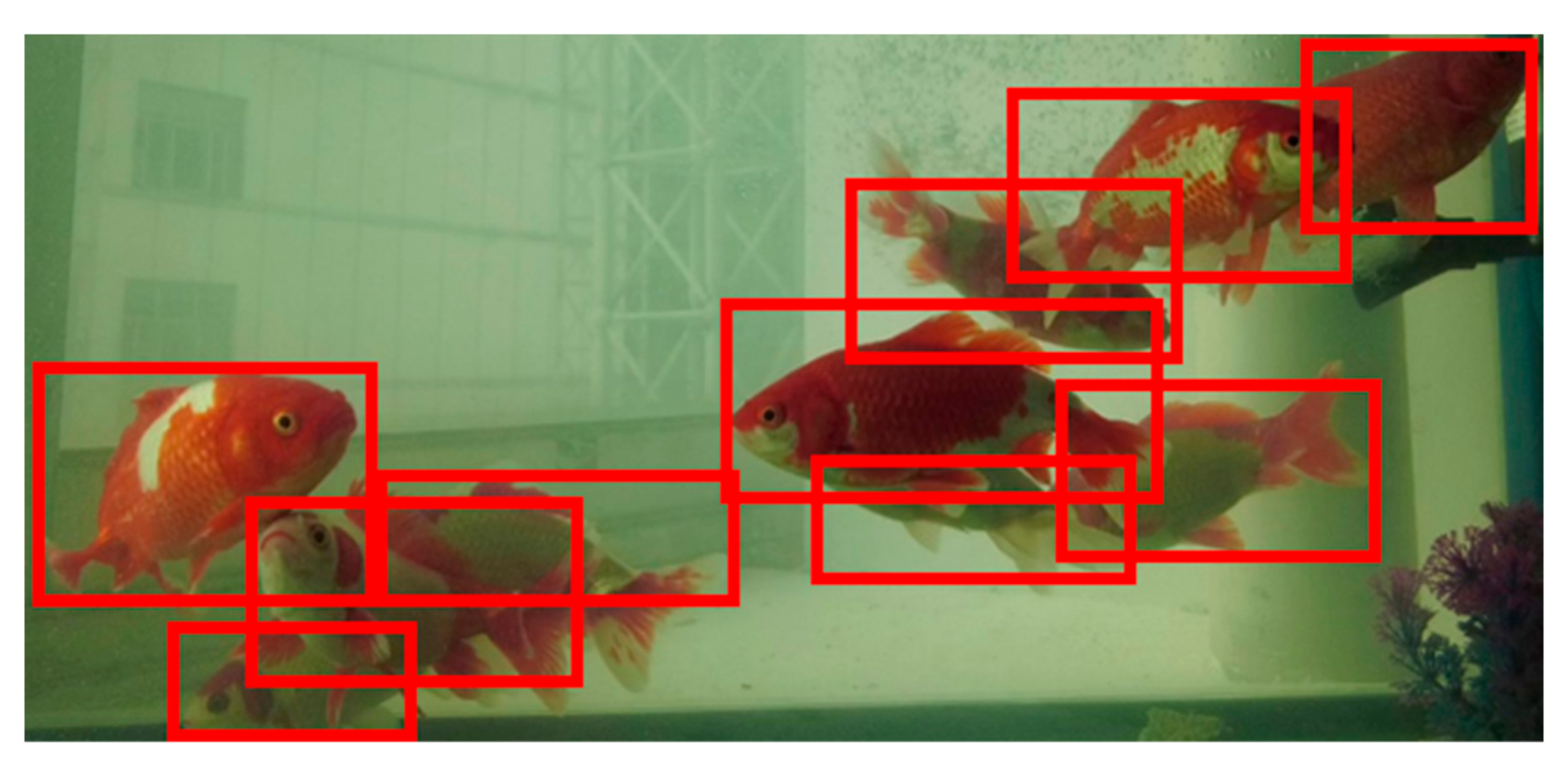

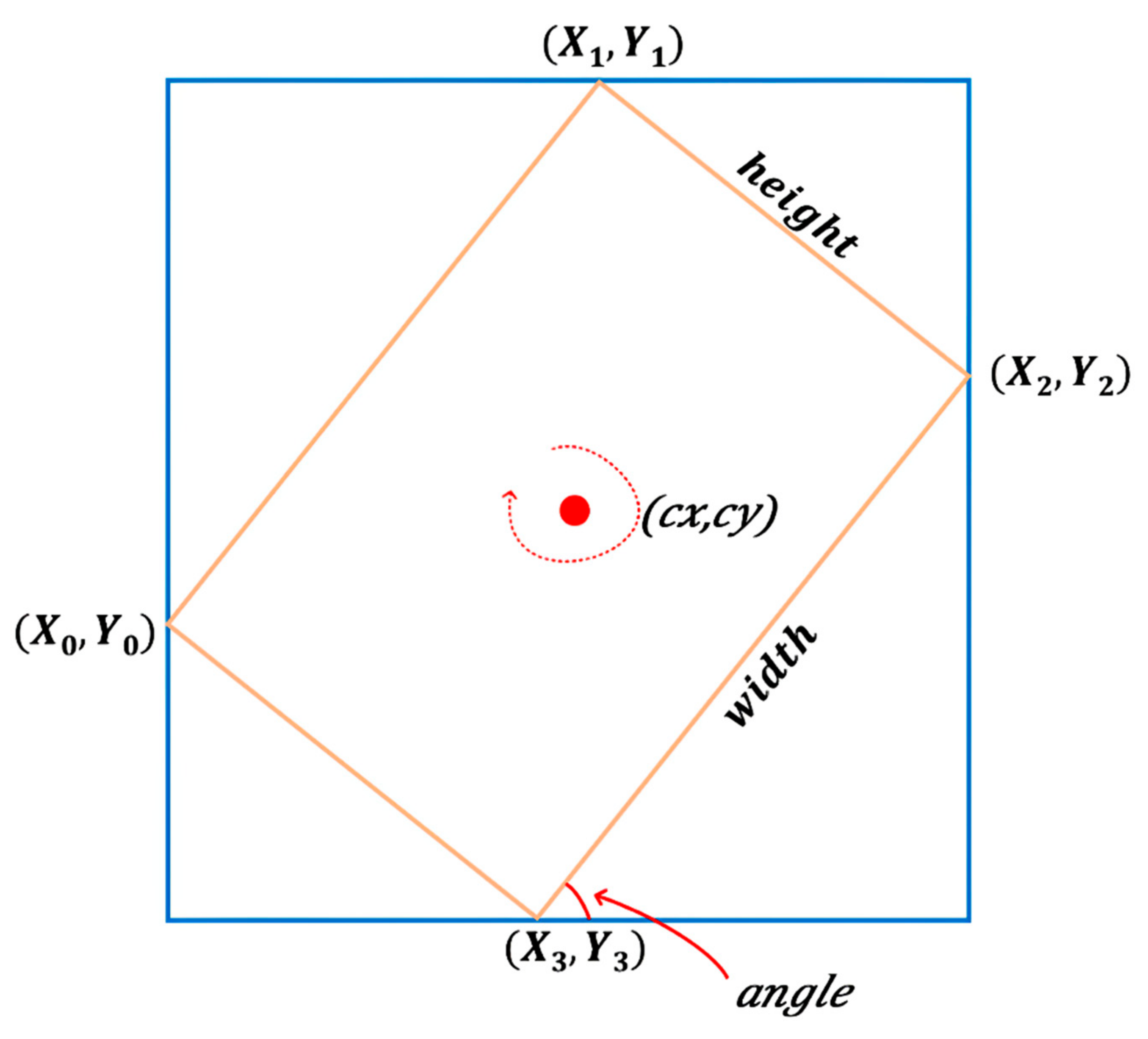

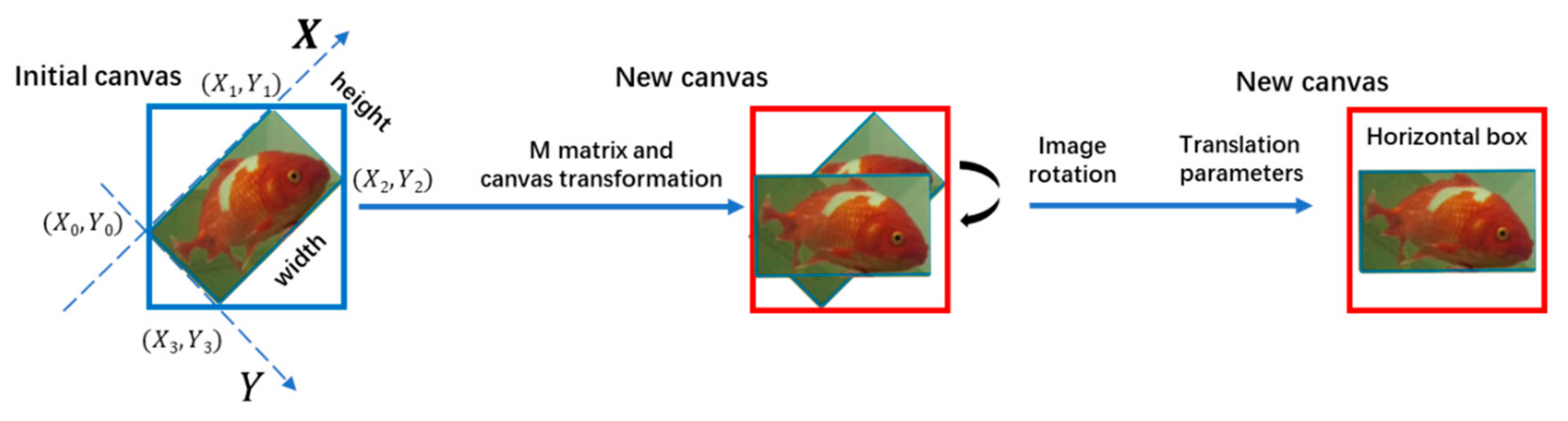

- Golden crucian carp move freely in three-dimensional space in the aquatic environment. According to Figure 1, their turning range is 0° to 180°, so the degree of deformation is generally large. As shown in Figure 2, 80% of the angle changes exceed 40°. Therefore, we abandon the traditional horizontal pre-selection box of object detection and use a rotating box for flexible box selection. This is the innovation of the dataset in our research.
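
The relationship between a rotating box and a traditional horizontal box can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a box is parameterized as center, width, height, and a rotation angle in degrees, and the function names `rotated_box_corners` and `enclosing_horizontal_box` are hypothetical.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle_deg):
    """Return the four corners of a box rotated by angle_deg about its center.

    Assumed parameterization (not taken from the paper): center (cx, cy),
    width w, height h, rotation angle in degrees.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets of the unrotated box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Apply a 2D rotation to each offset, then translate back to the center.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in offsets
    ]


def enclosing_horizontal_box(corners):
    """Return (x_min, y_min, x_max, y_max) of the smallest horizontal box
    that contains all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


# A 40 x 10 box rotated by 90 degrees becomes roughly 10 wide and 40 tall,
# so the horizontal box is approximately (45, 30, 55, 70).
corners = rotated_box_corners(50, 50, 40, 10, 90)
print(enclosing_horizontal_box(corners))
```

For an elongated fish body at an oblique angle, the enclosing horizontal box covers far more background than the rotating box, which is one way to see why the rotating box gives a tighter selection.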

- (1)

- First, we established a new large-scale golden crucian carp dataset. It contains 1541 pose estimation images of 10 golden crucian carp.

- (2)

- Recognition features are extracted from the dataset, and a recognition algorithm based on computer vision is implemented to recognize the golden crucian carp.

- (3)

- A comprehensive baseline is constructed, including golden crucian carp rotating box object detection and golden crucian carp pose estimation, to realize multi-object pose estimation.

2. Materials and Methods

2.1. Acquisition of Materials

2.2. Data Preprocessing

2.2.1. Training Data Enhancement

2.2.2. HSV Color Space Enhancement

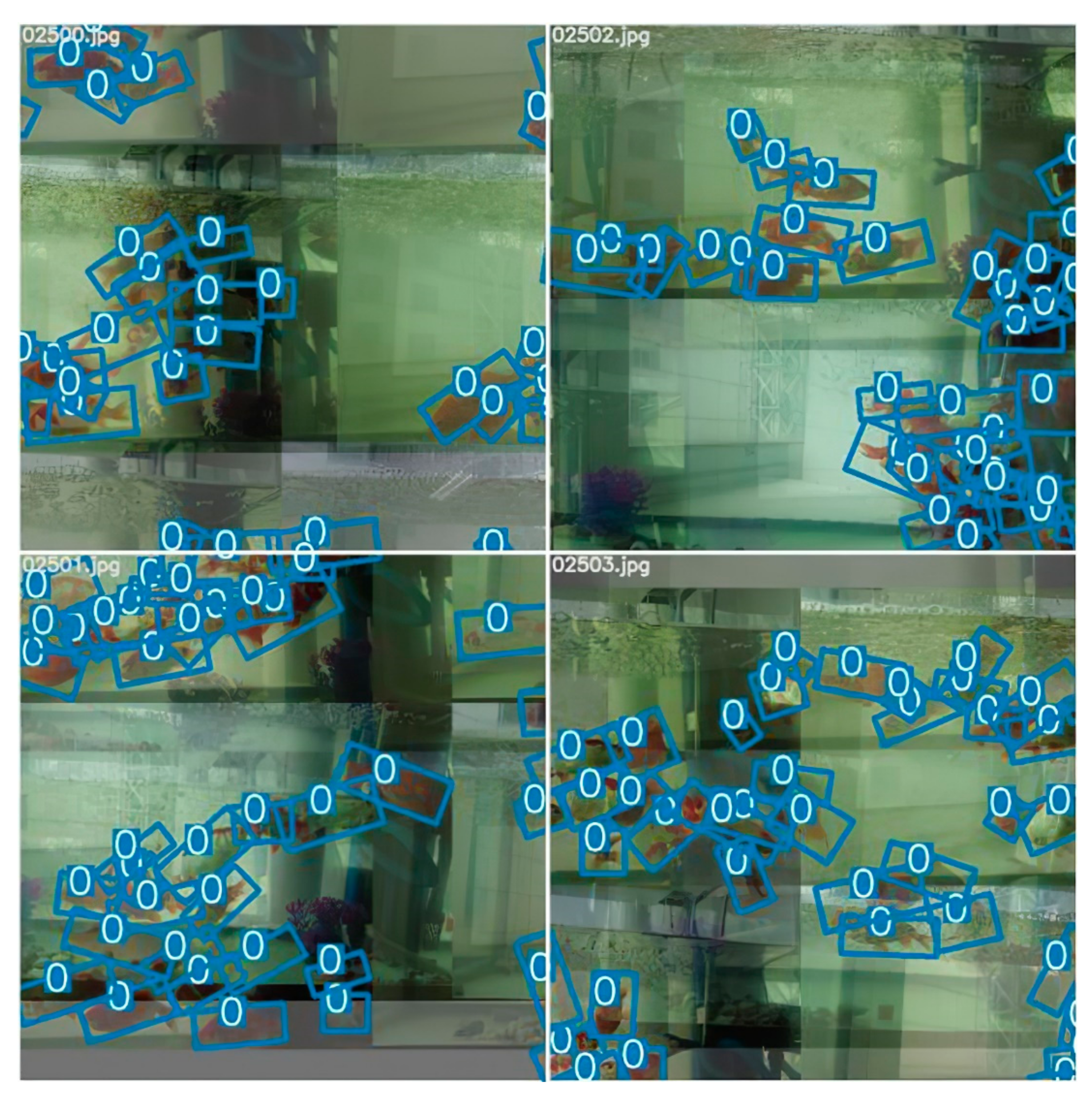

2.2.3. Mosaic

2.2.4. Mixup

2.3. Methods of Detection and Estimation

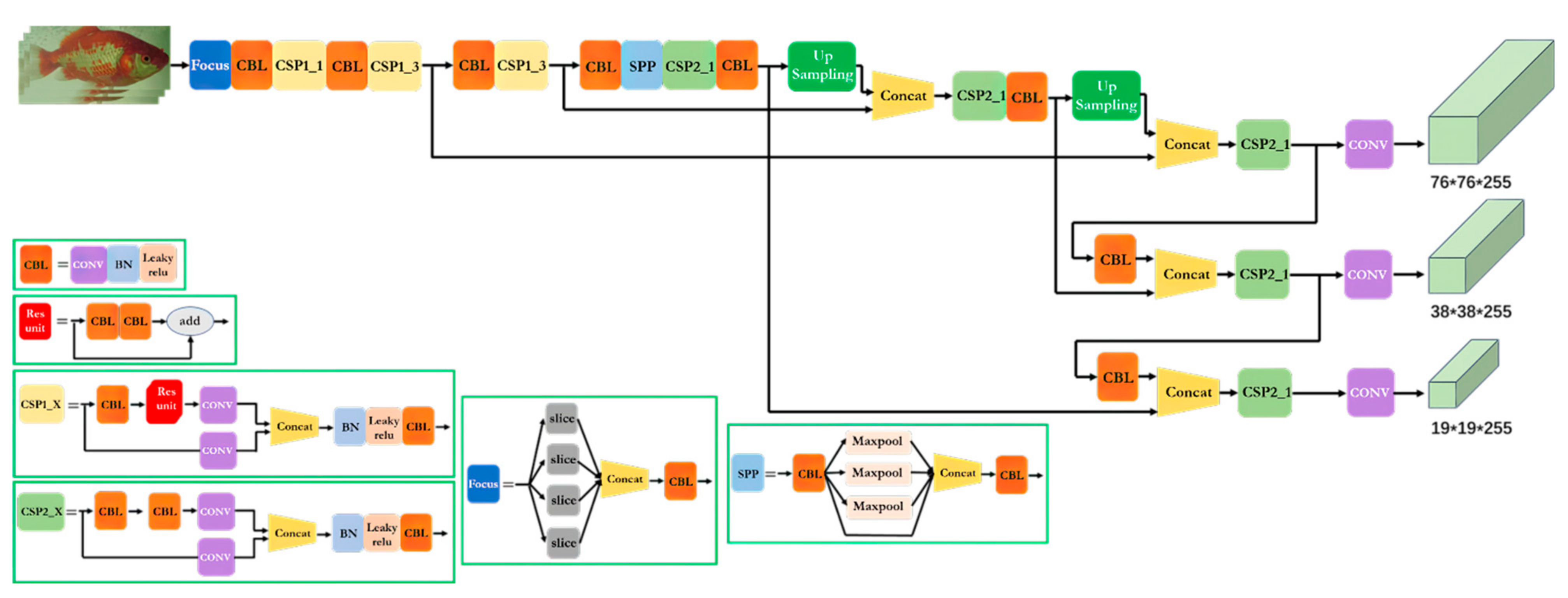

2.3.1. Target Detection

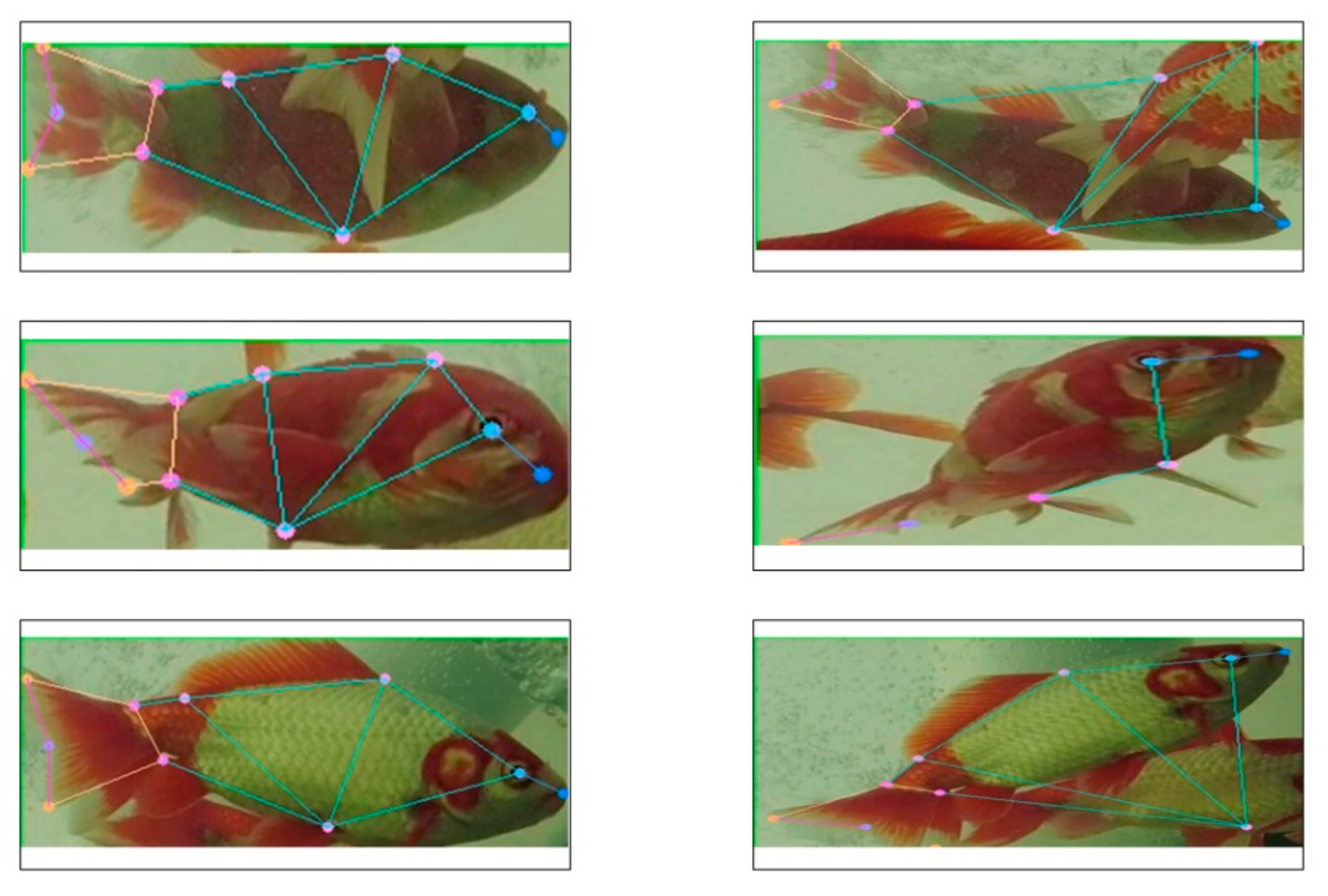

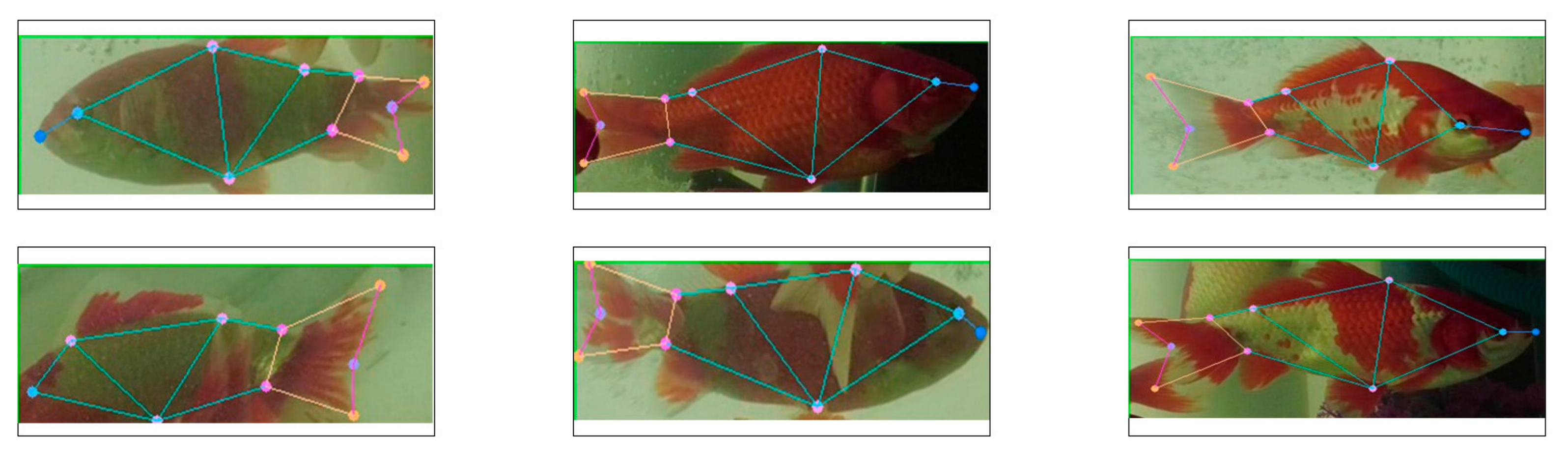

2.3.2. Pose Estimation

- (1)

- Crucian carp move freely in three-dimensional space in the aquatic environment. Their posture flip range is 0° to 180°, and, as shown in Figure 2, 80% of the angle changes exceed 40°, so the overall degree of deformation is relatively large. This makes posture changes more complex and has a greater effect on the subsequent activities of the fish. We also observed that crucian carp are mostly active in groups, with considerable occlusion and crowding. Built on the rotating bounding box, the top-down method can be further optimized to deal better with occlusion and crowding of fish bodies, which helps separate the detection target from the pixel background.

- (2)

- The bottom-up approach first locates the key points and then assigns each key point to an individual. The main assignment criterion is the affinity between key points, which is essentially the distance between them. This scheme can indeed be considerably faster, but when multiple targets are close together, key points are very easily assigned to the wrong individual, which greatly degrades model performance. Therefore, we chose the top-down method.

- (3)

- The detection target of our research is crucian carp. Compared with humans, crucian carp are easier to identify, and their features are more distinctive and easier to extract. The top-down method extracts global features of each target and is trained to output the key points for the complete image, which is highly objective.

- (4)

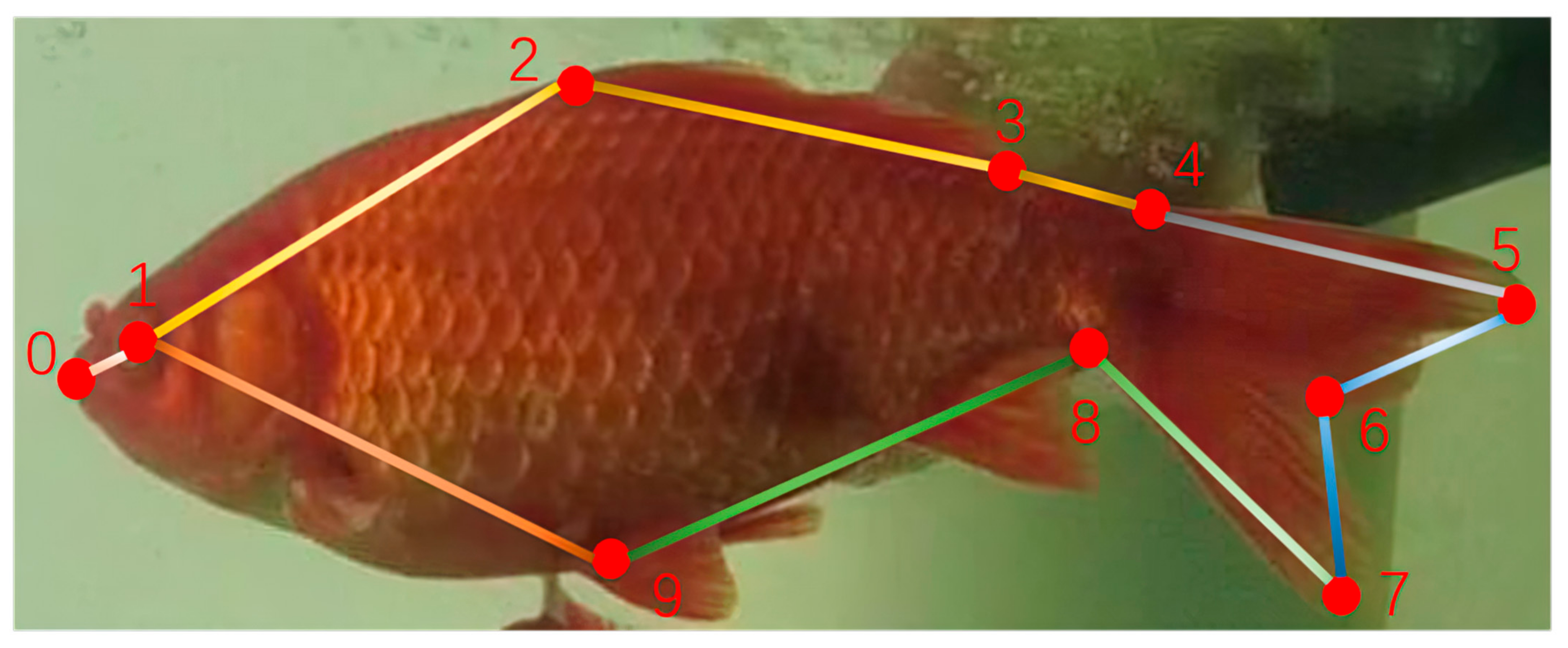

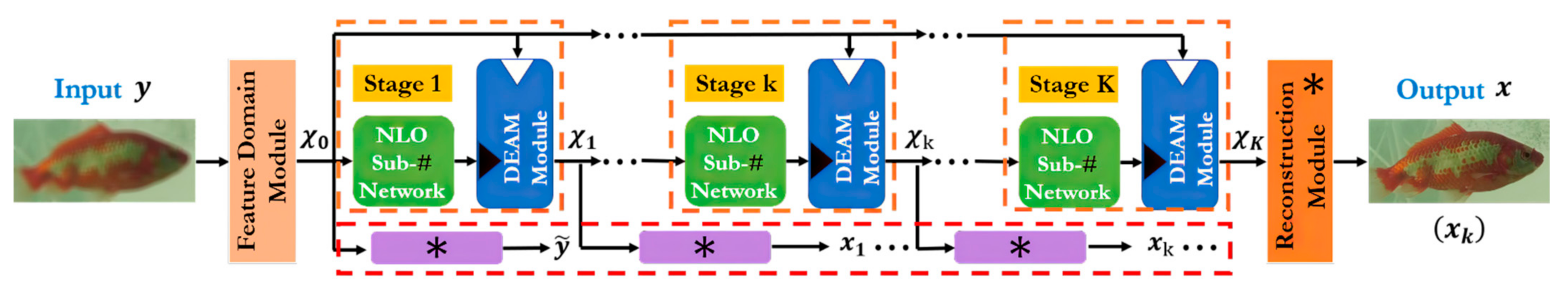

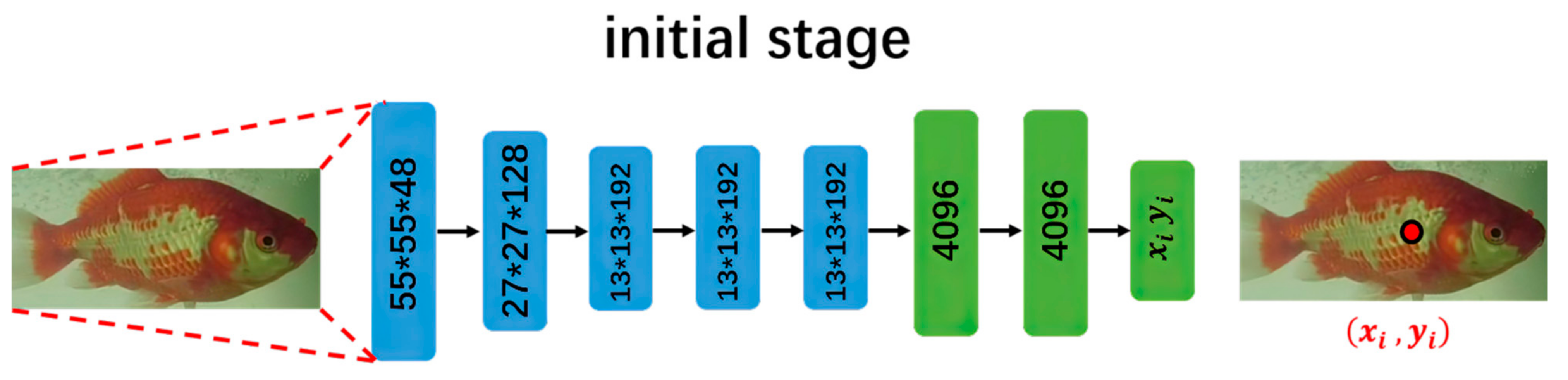

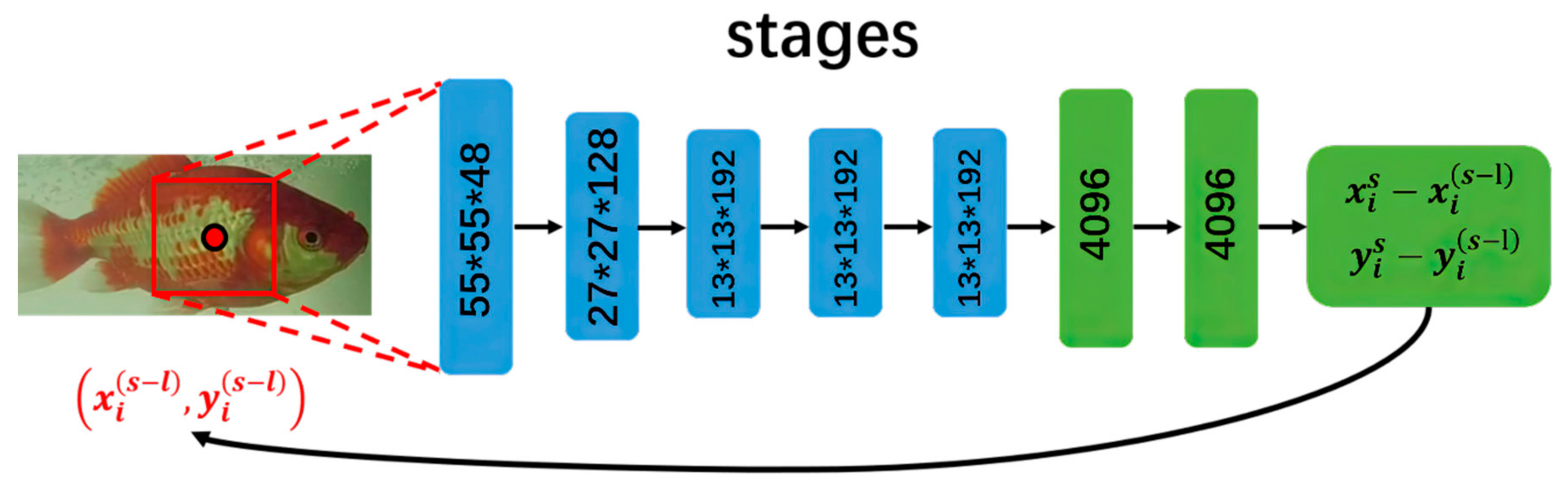

- Top-down methods are more accurate, and bottom-up methods are faster. A top-down pose estimation model used on its own is at a speed disadvantage, so, to balance speed and accuracy, we use the Yolo 5 target detector in the first stage, which gives a significant speed boost. In this way, the model achieves both high precision and high speed.

DeepPose is a method that directly regresses the absolute coordinates of key points [32]. To express the posture of the fish body, we use the following notation. We encode the positions of all $k = 10$ fish body joints in a pose vector $\mathbf{y} = (\ldots, \mathbf{y}_i^{T}, \ldots)^{T}$, $i \in \{1, \ldots, k\}$, where $\mathbf{y}_i$ contains the horizontal and vertical coordinates of the $i$-th joint. A labeled image is denoted by $(x, \mathbf{y})$, where $x$ is the image data and $\mathbf{y}$ is the ground-truth pose vector of the fish body.
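
The pose encoding described above can be illustrated with a short sketch. It only shows how 10 key points, each with a horizontal and a vertical coordinate, are flattened into a single vector of length 20 and recovered again. The helper names `encode_pose` and `decode_pose` and the interleaved coordinate order are assumptions made for illustration, not details given in the text.

```python
NUM_KEYPOINTS = 10  # k = 10 fish body joints, as stated in the text


def encode_pose(keypoints):
    """Flatten [(x_1, y_1), ..., (x_k, y_k)] into [x_1, y_1, ..., x_k, y_k].

    The interleaved ordering is an illustrative assumption.
    """
    if len(keypoints) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} key points, got {len(keypoints)}")
    return [coord for point in keypoints for coord in point]


def decode_pose(pose_vector):
    """Recover the list of (x, y) key points from a flattened pose vector."""
    if len(pose_vector) != 2 * NUM_KEYPOINTS:
        raise ValueError(f"expected {2 * NUM_KEYPOINTS} values, got {len(pose_vector)}")
    return list(zip(pose_vector[0::2], pose_vector[1::2]))


# A labeled sample pairs image data with its ground-truth pose vector.
keypoints = [(10 * i, 5 * i) for i in range(NUM_KEYPOINTS)]  # made-up coordinates
pose = encode_pose(keypoints)
image = None  # placeholder for the image data
labeled_sample = (image, pose)

print(len(pose))                       # 20
print(decode_pose(pose) == keypoints)  # True
```

A regression model in this setting predicts the 20 numbers of the pose vector directly from the cropped image of one detected fish.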

2.3.3. Convert a Rotating Frame to a Horizontal Frame

3. Experiment and Result

3.1. Train Settings

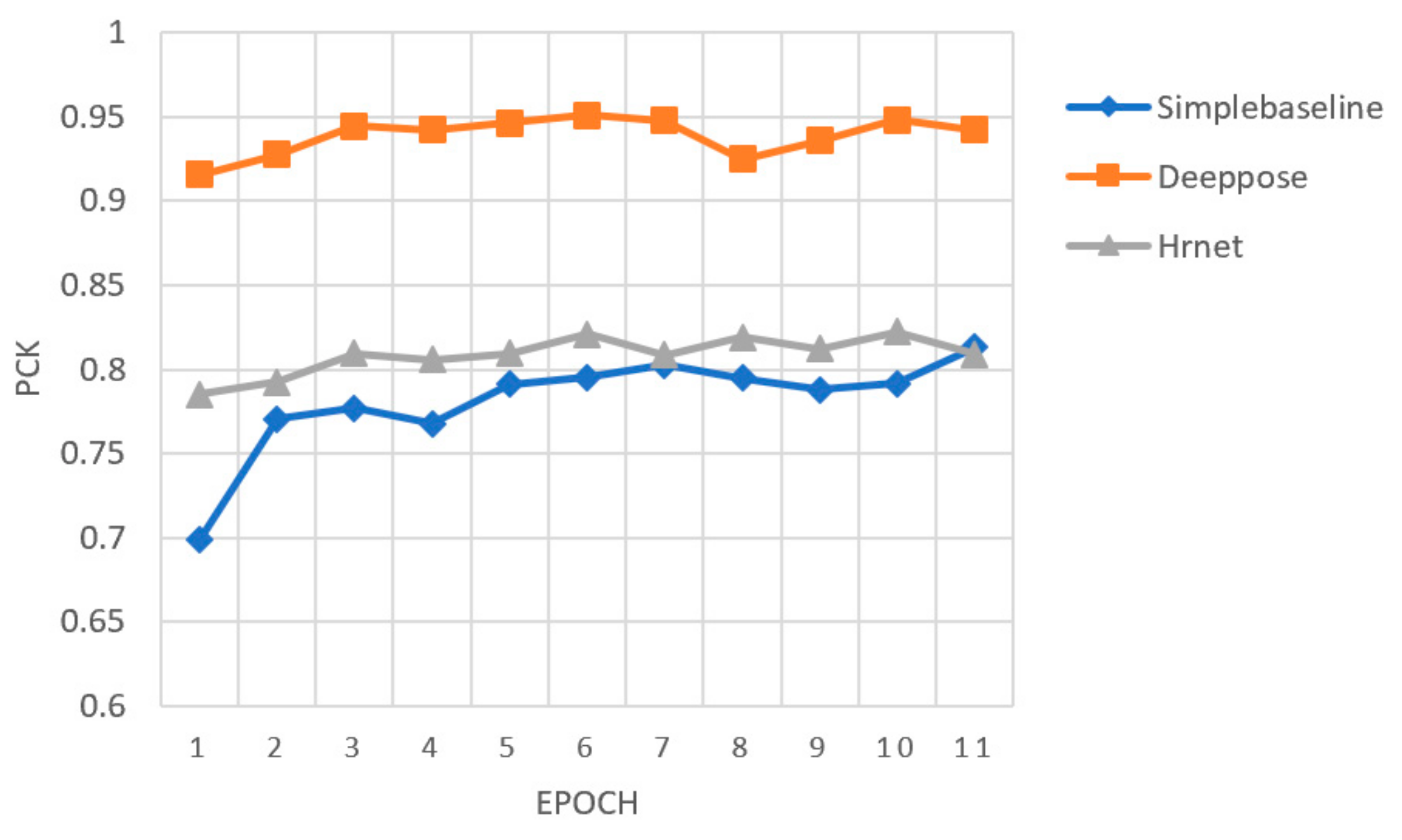

3.2. Benchmark Results

4. Discussion

4.1. Contribution to Pose Estimation of Fish

4.2. Contribution to Underwater Real-Time Detection

4.3. Different from Existing Methods

4.3.1. Benefits of the Rotating Frame

4.3.2. Compare with Other Methods

4.4. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Sun, D.W. Computer Vision Technology for Food Quality Evaluation; Academic Press: Cambridge, MA, USA, 2016.

- Vimala, J.S.; Natesan, M.; Rajendran, S. Corrosion and Protection of Electronic Components in Different Environmental Conditions - An Overview. Open Corros. J. 2009, 2, 105–113.

- Walther, D.; Edgington, D.R.; Koch, C. Detection and tracking of objects in underwater video. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), Washington, DC, USA, 27 June–2 July 2004.

- Rashid, M.; Gu, X.; Yong, J.L. Interspecies Knowledge Transfer for Facial Keypoint Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. arXiv 2019, arXiv:1905.05055.

- Oksuz, K.; Cam, B.C.; Kalkan, S.; Akbas, E. Imbalance Problems in Object Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3388–3415.

- Hechun, W.; Xiaohong, Z. Survey of deep learning based object detection. In Proceedings of the 2nd International Conference on Big Data Technologies, Jinan, China, 28–30 August 2019; pp. 149–153.

- Zhao, Y. Improved SSD Algorithm Based on Multi-scale Feature Fusion and Residual Attention Mechanism. In Proceedings of the 2021 3rd International Conference on Advances in Computer Technology, Information Science and Communication (CTISC), Shanghai, China, 23–25 April 2021; pp. 87–91.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Rajaram, R.N.; Ohn-Bar, E.; Trivedi, M.M. RefineNet: Iterative refinement for accurate object localization. In Proceedings of the IEEE International Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2016.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE Transactions on Pattern Analysis & Machine Intelligence, Venice, Italy, 7 August 2017; pp. 2999–3007.

- Li, J.; Su, W.; Wang, Z. Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI-20), New York, NY, USA, 7–12 February 2020; pp. 11354–11361.

- Olmos, A.; Trucco, E. Detecting man-made objects in unconstrained subsea videos. In Proceedings of the British Machine Vision Conference (BMVC), Wales, UK, 2–5 September 2002; pp. 1–10.

- Wang, C.Y.; Liao, H.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020.

- Xiu, L.; Min, S.; Qin, H.; Chen, L. Fast accurate fish detection and recognition of underwater images with Fast R-CNN. In Proceedings of OCEANS 2015-MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2015.

- Xu, C.; Govindarajan, L.N.; Zhang, Y.; Cheng, L. Lie-X: Depth Image Based Articulated Object Pose Estimation, Tracking, and Action Recognition on Lie Groups. Int. J. Comput. Vis. 2016, 123, 454–478.

- Xu, W.; Matzner, S. Underwater Fish Detection using Deep Learning for Water Power Applications. In Proceedings of the 5th Annual Conf. on Computational Science & Computational Intelligence (CSCI’18), Las Vegas, NV, USA, 13–15 December 2018.

- Knausgård, K.M.; Wiklund, A.; Sørdalen, T.K.; Halvorsen, K.T.; Kleiven, A.R.; Jiao, L.; Goodwin, M. Temperate fish detection and classification: A deep learning based approach. Appl. Intell. 2021. Available online: https://link.springer.com/article/10.1007/s10489-020-02154-9#citeas (accessed on 1 October 2021).

- Su, H.; Kong, W.; Jiang, K.; Liu, D.; Gong, X.; Lin, B.; Li, J.; Wang, H.; Xu, C. Gold crucian carp identification based on Siamese network. In Proceedings of the International Conference on Image Processing and Intelligent Control (IPIC 2021); SPIE: Bellingham, WA, USA, 2021; pp. 191–194.

- Kong, W.; Li, D.; Li, J.; Liu, D.; Liu, Q.; Lin, B.; Su, H.; Wang, H.; Xu, C. Detection of golden crucian carp based on YOLOV5. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Education (ICAIE), Dali, China, 18–20 June 2021; pp. 283–286.

- Zheng, L.; Zhang, H.; Sun, S.; Chandraker, M.; Yang, Y.; Tian, Q. Person re-identification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1367–1376.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-identification: A Benchmark. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Salman, A.; Siddiqui, S.A.; Shafait, F.; Mian, A.; Shortis, M.R.; Khurshid, K.; Ulges, A.; Schwanecke, U. Automatic fish detection in underwater videos by a deep neural network-based hybrid motion learning system. ICES J. Mar. Sci. 2019, 77, 1295–1307.

- ultralytics/yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 7 May 2020).

- Boyat, A.K.; Joshi, B.K. A Review Paper: Noise Models in Digital Image Processing. Signal Image Process. Int. J. 2015, 6, 63–75.

- Kong, Z.; Yang, X.; He, L. A Comprehensive Comparison of Multi-Dimensional Image Denoising Methods. arXiv 2020, arXiv:2011.03462.

- Toshev, A.; Szegedy, C. DeepPose: Human pose estimation via deep neural networks. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660.

- Hsiao, Y.H.; Chen, C.C.; Lin, S.I.; Lin, F.P. Real-world underwater fish recognition and identification, using sparse representation. Ecol. Inform. 2014, 23, 13–21.

- Cutter, G.; Stierhoff, K.; Zeng, J. Automated Detection of Rockfish in Unconstrained Underwater Videos Using Haar Cascades. In Proceedings of the Applications and Computer Vision Workshops (WACVW), 2015 IEEE Winter, Waikoloa, HI, USA, 6 January 2015.

- Xiu, L.; Tang, Y.; Gao, T. Deep but lightweight neural networks for fish detection. In Proceedings of the OCEANS 2017-Aberdeen, Aberdeen, UK, 19–22 June 2017.

| Model | P | R | F1 | mAP@0.5 | mAP@0.5:0.95 | Inference @ Batch Size 1 (ms) |
|---|---|---|---|---|---|---|
| CenterNet | 95.21% | 92.48% | 0.94 | 94.96% | 56.38% | 32 |
| Yolo 4s | 84.24% | 94.42% | 0.89 | 95.28% | 52.75% | 10 |
| Yolo 5s | 92.39% | 95.38% | 0.94 | 95.38% | 58.31% | 8 |
| EfficientDet | 88.14% | 91.91% | 0.90 | 95.19% | 53.43% | 128 |
| RetinaNet | 88.16% | 93.21% | 0.91 | 96.16% | 57.29% | 48 |
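
The F1 column in the table above is consistent with the usual definition of F1 as the harmonic mean of precision (P) and recall (R). The short check below recomputes two rows from the reported P and R values. The function name `f1_score` is only an illustrative helper, and the assumption that the authors used this standard definition is ours.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# P and R taken from the table above, converted from percentages to fractions.
print(round(f1_score(0.9521, 0.9248), 2))  # CenterNet -> 0.94
print(round(f1_score(0.8424, 0.9442), 2))  # Yolo 4s   -> 0.89
```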

| Model | P | R | F1 | mIOU | mAngle | Inference @ Batch Size 1 (ms) |
|---|---|---|---|---|---|---|
| R-CenterNet | 88.72% | 87.43% | 0.88 | 70.68% | 8.80 | 76 |
| R-Yolo 5s | 90.61% | 89.45% | 0.90 | 75.15% | 8.26 | 43 |

| HSV_Aug | FocalLoss | Mosaic | MixUp | Other Tricks | mAP@0.5 |
|---|---|---|---|---|---|
| × | × | × | × | × | 77.32% |
| √ | × | × | × | × | 77.98% |
| √ | √ | × | × | × | 77.42% |
| √ | √ | √ | × | × | 79.05% |
| √ | √ | √ | √ | × | 81.12% |
| √ | × | × | √ | × | 80.64% |
| √ | √ | √ | × | Fliplrud | 79.68% |
| √ | √ | × | × | Fliplrud | 80.37% |
| √ | × | √ | √ | Fliplrud | 81.46% |
| √ | × | × | × | Fliplrud, RandomScale(0.5~1.5) | 78.99% |
| √ | √ | √ | √ | Fliplrud, RandomScale(0.5~1.5) | 81.88% |
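
To make the MixUp column concrete, the following is a minimal sketch of the general MixUp idea for detection data: two images are blended pixel by pixel with a random weight, and the box labels of both images are kept. It is not the authors' training code. Images are represented as plain nested lists of grayscale values for simplicity, the function name `mixup` is hypothetical, and the Beta(32, 32) sampling is an assumed setting that the source does not specify.

```python
import random


def mixup(image_a, boxes_a, image_b, boxes_b, lam=None, alpha=32.0):
    """Blend two equally sized grayscale images and merge their box labels.

    lam is the weight of image_a; when omitted it is drawn from
    Beta(alpha, alpha) (alpha = 32.0 is an assumption, not from the paper).
    """
    if lam is None:
        lam = random.betavariate(alpha, alpha)
    mixed = [
        [lam * pa + (1.0 - lam) * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]
    # Every object from either image is still visible in the blend,
    # so both label lists are concatenated.
    return mixed, boxes_a + boxes_b


# Tiny 1 x 2 "images" with made-up pixel values and placeholder labels.
mixed, boxes = mixup([[0, 100]], ["fish_a"], [[100, 0]], ["fish_b"], lam=0.25)
print(mixed)  # [[75.0, 25.0]]
print(boxes)  # ['fish_a', 'fish_b']
```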

| Model | Metric |
|---|---|
| Simplebaseline | PCK: 0.8131 |
| hrnet | PCK: 0.8222 |
| DeepPose | PCK: 0.9781 |
| hrnetv2 | PCK: 0.9585, AUC: 0.6994, EPE: 10.4704 |
| Mobilenetv2 + Simplebaseline | PCK: 0.9480, AUC: 0.6878, EPE: 11.5483 |
| udp | PCK: 0.9546, AUC: 0.7124, EPE: 10.2830 |
| darkpose | PCK: 0.9559, AUC: 0.7127, EPE: 9.6965 |
| DeepPose + Wingloss | NME: 0.1250 |
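
PCK (percentage of correct key points) in the table above counts a predicted key point as correct when it lies within a tolerance of its ground-truth position. The sketch below shows the general form of the metric. The tolerance factor `alpha`, the reference length used for normalization, and the function name `pck` are assumptions for illustration, since the exact settings are not given here.

```python
import math


def pck(predicted, ground_truth, ref_length, alpha=0.2):
    """Fraction of key points whose prediction error is at most alpha * ref_length.

    ref_length is a normalizing size such as the longer side of the
    bounding box (an assumed choice); alpha = 0.2 is also an assumption.
    """
    if len(predicted) != len(ground_truth) or not ground_truth:
        raise ValueError("predicted and ground_truth must be non-empty and equal in length")
    threshold = alpha * ref_length
    correct = sum(
        1
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
        if math.hypot(px - gx, py - gy) <= threshold
    )
    return correct / len(ground_truth)


# Three made-up key points with errors of 0, 10, and 30 pixels.
# With ref_length = 100 and alpha = 0.2 the tolerance is 20 pixels,
# so two of the three key points count as correct.
pred = [(0, 0), (10, 0), (0, 30)]
gt = [(0, 0), (0, 0), (0, 0)]
print(pck(pred, gt, ref_length=100))
```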

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, B.; Jiang, K.; Xu, Z.; Li, F.; Li, J.; Mou, C.; Gong, X.; Duan, X. Feasibility Research on Fish Pose Estimation Based on Rotating Box Object Detection. Fishes 2021, 6, 65. https://doi.org/10.3390/fishes6040065
