1. Introduction

The global demand for alternative and sustainable sources of animal protein has led to increased interest in the processing and commercialization of frog meat, particularly frog legs. Frog legs are considered a delicacy in several cultures, notably in France and parts of Asia [1]. The demand for frogs and frog-derived products is significant in numerous European and American countries due to their flavor and resemblance to chicken in both color and taste [2]. Frog leg meat is nutritionally attractive, being high in protein, low in fat, and comparable to other lean meats such as poultry and fish [3,4].

Interest in the composition of frog legs was documented as early as the mid-20th century in a study of Rana hexadactyla [5]. Numerous studies emphasize the health benefits and nutritional content of raw frog leg meat sourced from both wild and cultured frogs [3,6,7,8,9,10,11,12]. However, research on processed frog leg products remains limited, despite their growing commercial importance. Current methods for assessing processing status often rely on laborious laboratory analyses, creating a need for rapid, cost-effective tools to support quality control and regulatory compliance.

Existing studies on the proximate composition of edible frog species, such as Lithobates catesbeianus, Pelophylax esculentus, Pelophylax ridibundus, Hoplobatrachus rugulosus, and Dicroglossus occipitalis, have consistently reported high moisture content, with raw frog legs of cultured L. catesbeianus exhibiting the highest at 84.81% [3]. These species are characterized by high protein content (>21% in species like L. catesbeianus and H. rugulosus) and low fat levels, positioning frog legs as a hypocaloric, nutrient-dense protein source suitable for dietary and clinical nutrition [3,10,13,14,15,16]. Research has also highlighted their micronutrient richness [13] and extended to lesser-studied species, such as Limnonectes leporinus and Rana rugosa [17,18,19,20]. While much of the literature focuses on widely consumed species, including L. catesbeianus, P. ridibundus, and P. esculentus, studies have also explored differences between wild and cultured frogs [3,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20]. Notably, our previous work in Greece provided the first proximate composition data for P. epeiroticus, an edible water frog native to the Ionian zone [1].

Frog farming has also advanced in countries such as Brazil, where processing has evolved from artisanal to industrialized methods, incorporating humane slaughter, chilling, packaging, and safety checks [21,22]. Beyond fresh and frozen formats, value-added products such as fried, smoked, and canned frog legs are now common, with processing methods known to significantly affect proximate composition [3,8,23,24]. For example, frying reduces moisture while increasing fat, whereas smoking reduces moisture with limited effect on fat content, while enhancing flavor [23,25].

While proximate composition analysis is a foundational and routinely employed tool in food science for product characterization, its role has traditionally been descriptive rather than diagnostic. This study proposes a paradigm shift for niche protein products: the repurposing of basic proximate data into a robust, predictive traceability index. Focusing on frog leg meat, a product of significant gourmet value but lacking dedicated authentication tools, we demonstrate that moisture content, one of the simplest and most cost-effective analytical measures, can be leveraged through predictive modeling to reliably classify processing status. This approach moves beyond mere description, transforming a universal analytical workhorse into a validated decision-support tool for traceability and quality control, offering a practical and accessible solution for supply chains where advanced analytical techniques are unavailable or economically unfeasible.

While it is well-established that processing reduces moisture content in lean meats broadly [

26,

27], the novel contribution of this study lies in translating this general principle into a proof-of-concept, moisture-only classifier specifically for frog leg meat. We build upon prior knowledge of compositional shifts but move beyond this by rigorously benchmarking simple, interpretable models—including a definitive logistic regression threshold and a suite of machine learning (ML) algorithms—trained on a single, easily measurable variable. This approach is new to the niche domain of amphibian-derived products. The objective is not to rediscover the inverse relationship between moisture and processing, but to validate its sufficiency for creating a rapid, cost-effective, and highly accurate classification tool for industry traceability and quality control.

This study aimed to evaluate the influence of species, origin, and processing on the proximate composition of frog leg meat, with a specific focus on whether moisture content can serve as a reliable indicator of processing status. Assessing moisture content is critical for ensuring product safety, quality, and regulatory compliance, while also maintaining economic value in trade where weight-based pricing is paramount. Our objectives were twofold: (1) to quantify the effects of species, geographical origin, and processing on proximate composition, and (2) to develop a rapid, accurate, and cost-effective moisture-based method for classifying processed and unprocessed frog legs. Based on the established effects of processing on lean meats and the identified research gap, we formulated the following testable hypotheses: (H1) Processing status (processed vs. unprocessed) is associated with a significant and predictable shift in moisture content, an effect that holds beyond any variation attributable to species or geographical origin. (H2) A single-parameter model based solely on moisture content can attain excellent discrimination accuracy (>90%) in classifying processing status, performing comparably to models incorporating multiple proximate components. (H3) The direction of the moisture shift (reduction with processing) remains consistent across different processing methods (e.g., frying, smoking, boiling), though the magnitude of change may vary.

Given the focus on a single predictive variable (moisture), the selection of a specific machine learning algorithm was of secondary importance to the rigorous calibration and validation of the classification rule. Therefore, our approach was not to pursue the most complex model, but to benchmark the operating characteristics (including accuracy, precision, and probability calibration) of several interpretable models using a strict validation framework. This ensured that the resulting moisture threshold is robust, reliable, and fit-for-purpose for potential industrial applications, rather than being an artifact of a single, overly tailored algorithm.

All data for this analysis were systematically sourced from existing published literature, creating a consolidated dataset that allows for a cross-sectional investigation that would be logistically challenging to replicate through primary experimentation alone. This methodology is particularly beneficial for niche products like frog legs, where sample availability is often limited. Leveraging existing data allowed us to overcome these constraints and build generalizable models. By testing the hypotheses above, this study aims to provide a validated, simplified tool that supports industry stakeholders and regulators in ensuring product safety, economic fairness in trade, and consumer confidence in amphibian-derived foods.

3. Results

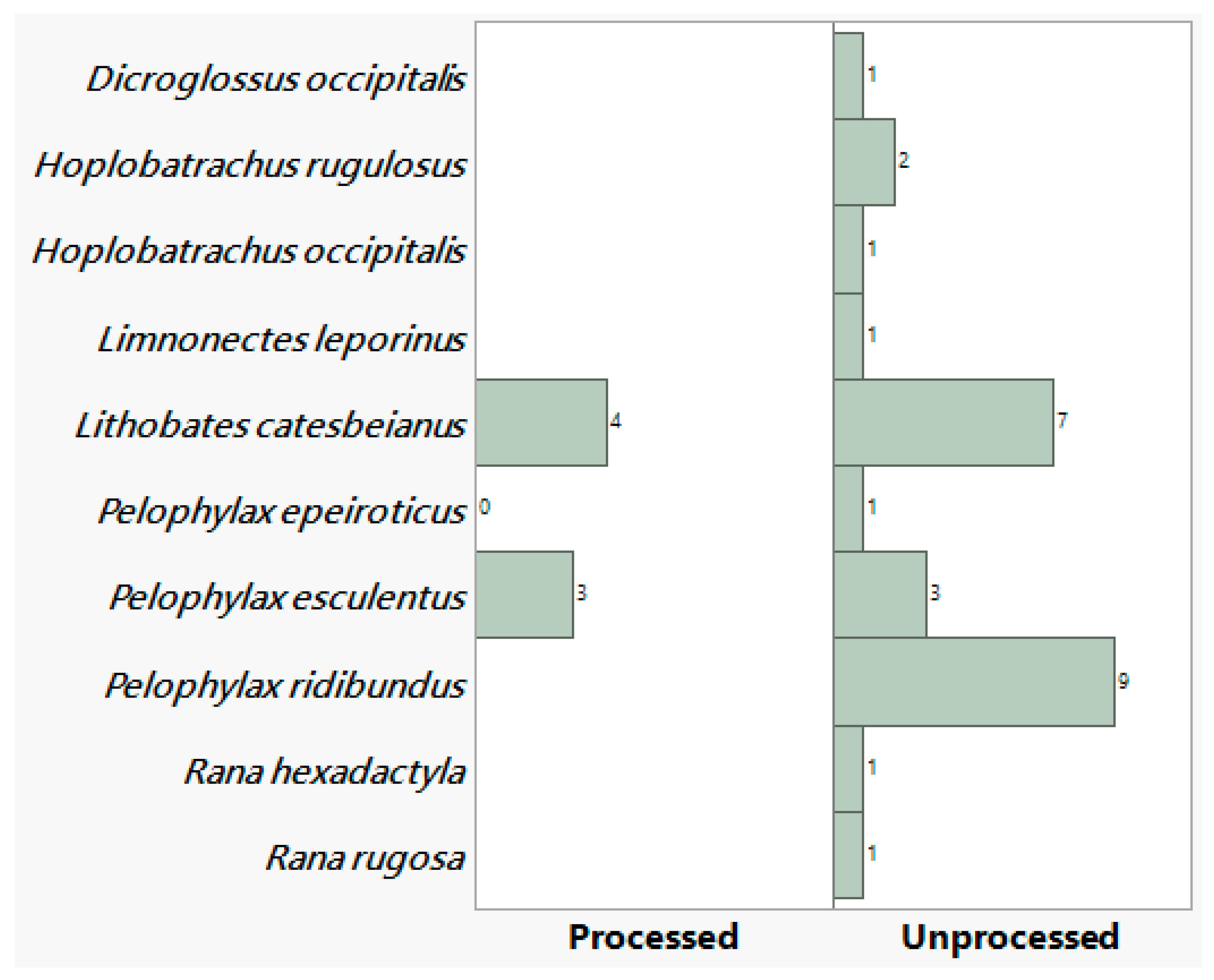

In total, 34 data entries were collected, covering 10 species. Most entries were unprocessed (27 entries, 79.4%), with fewer (7 entries, 20.6%) being processed frog leg meat (Figure 2). The mean protein, fat and ash content was higher for processed frog leg meat, with the opposite being the case for moisture (Table 2).

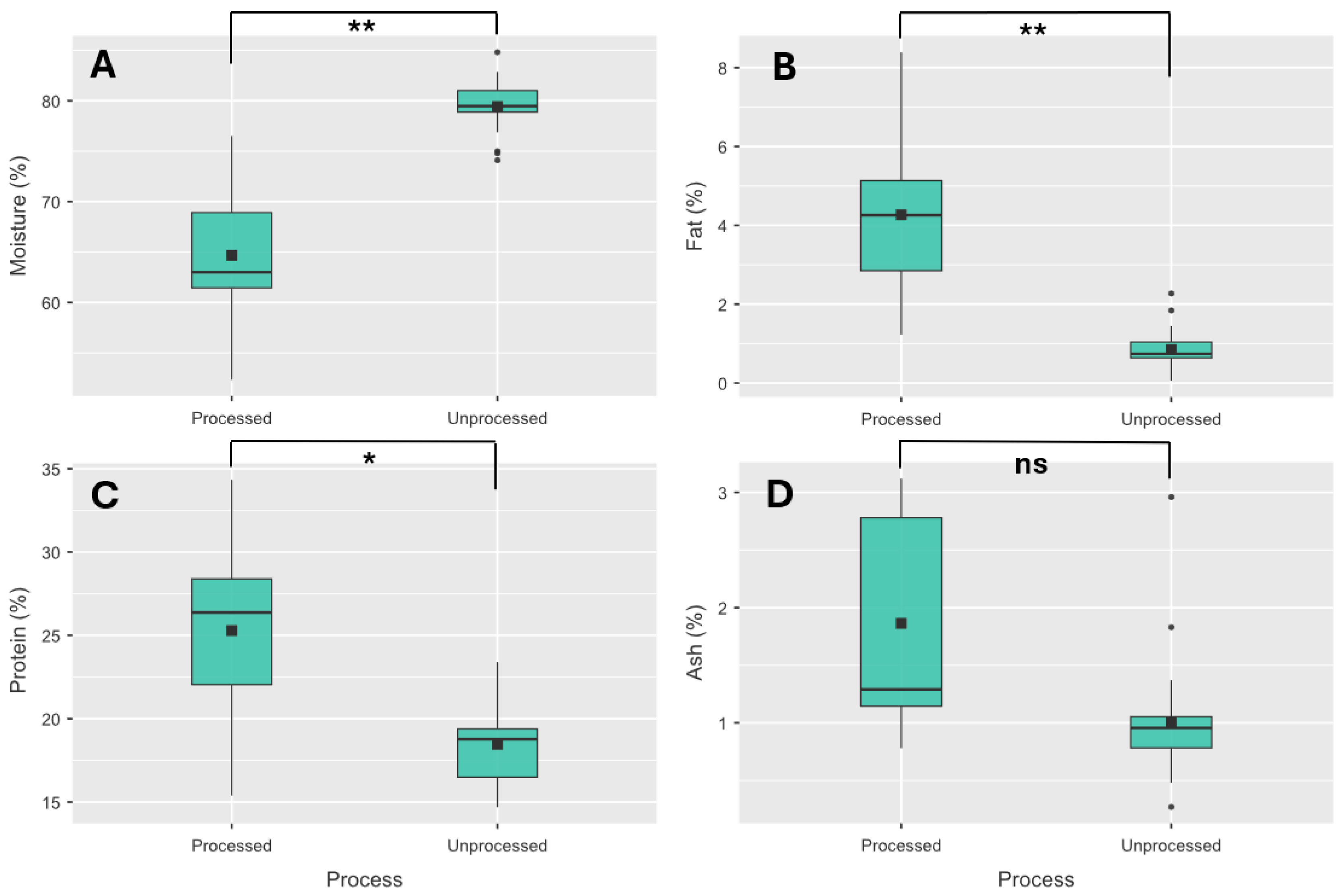

Univariate statistical methods identified three macronutrients (moisture, fat and protein) that exhibited significant differences between processed and unprocessed frog leg meat (Figure 3). Moisture content was significantly higher in unprocessed than in processed frog leg meat, whereas fat, protein and ash content were higher in processed meat, although the difference in ash was not significant.

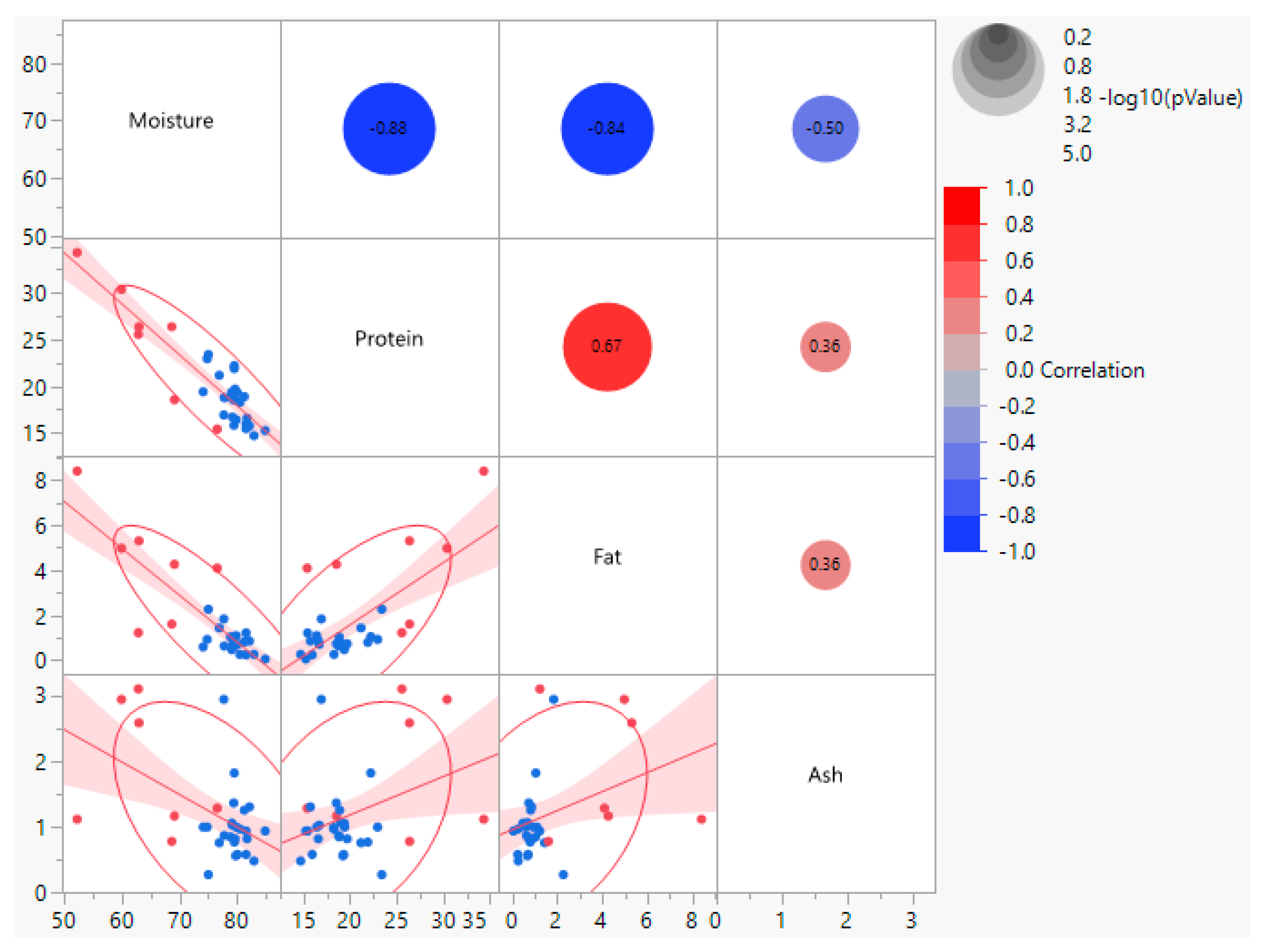

Correlation analysis showed significant relationships among the three macronutrients (moisture, fat and protein) that differed significantly between processed and unprocessed frog leg meat (Figure 4).

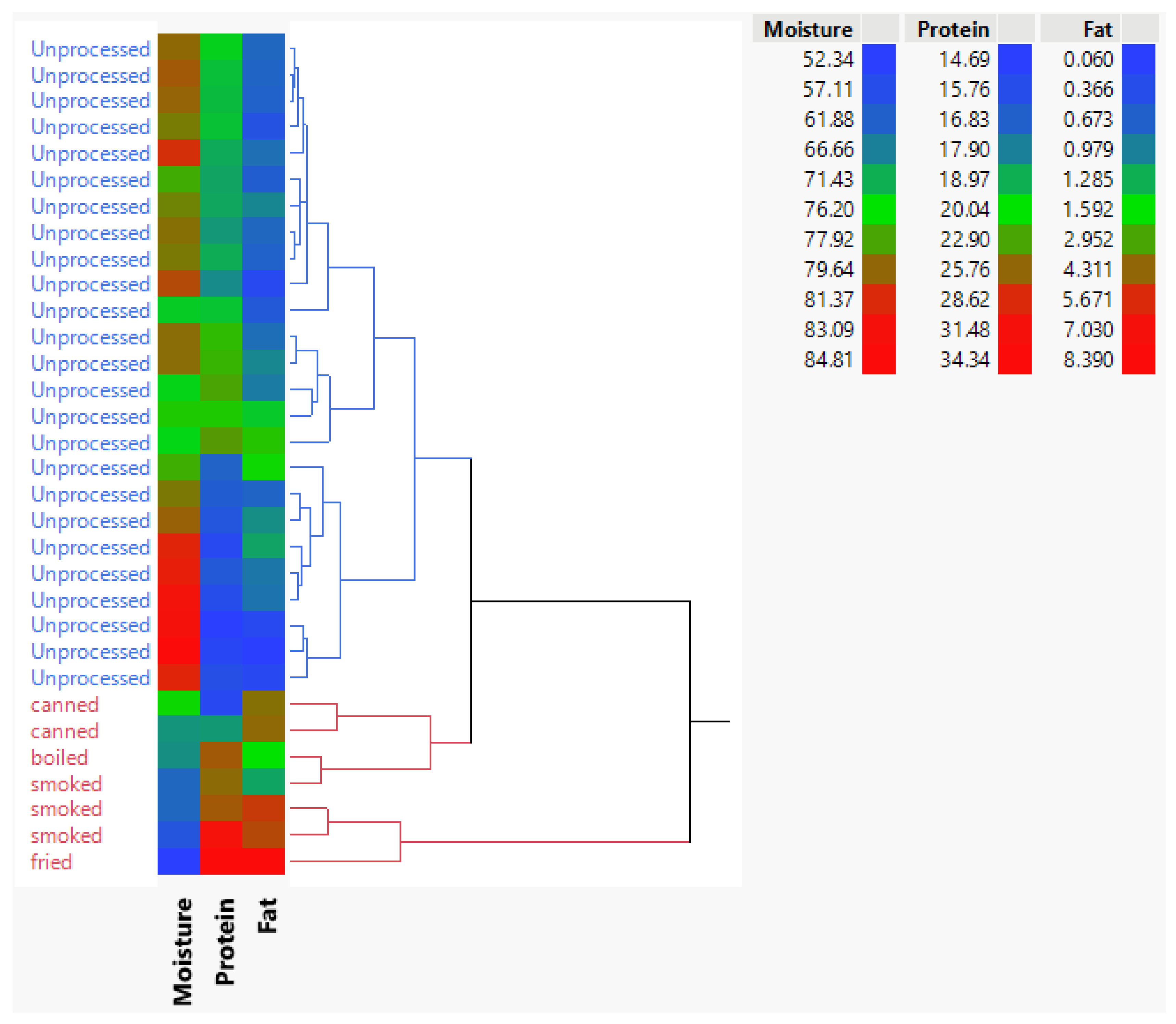

Figure 5 presents a heatmap with hierarchical clustering (Ward method) to visualize patterns in standardized macronutrient values (moisture, fat, and protein) that significantly differ between processed and unprocessed frog leg meat. The analysis reveals two distinct clusters, clearly separating processed and unprocessed samples, underscoring the pronounced impact of processing on nutrient composition. Values range from 0.060 to 8.390, with the heatmap’s color gradient and dendrogram highlighting systematic differences in macronutrient profiles. This clear segregation demonstrates that processing markedly alters moisture, fat, and protein content, providing a quantitative basis for understanding its effects on nutritional quality. Hierarchical clustering further reinforces these findings by grouping samples with similar characteristics, enhancing interpretability.

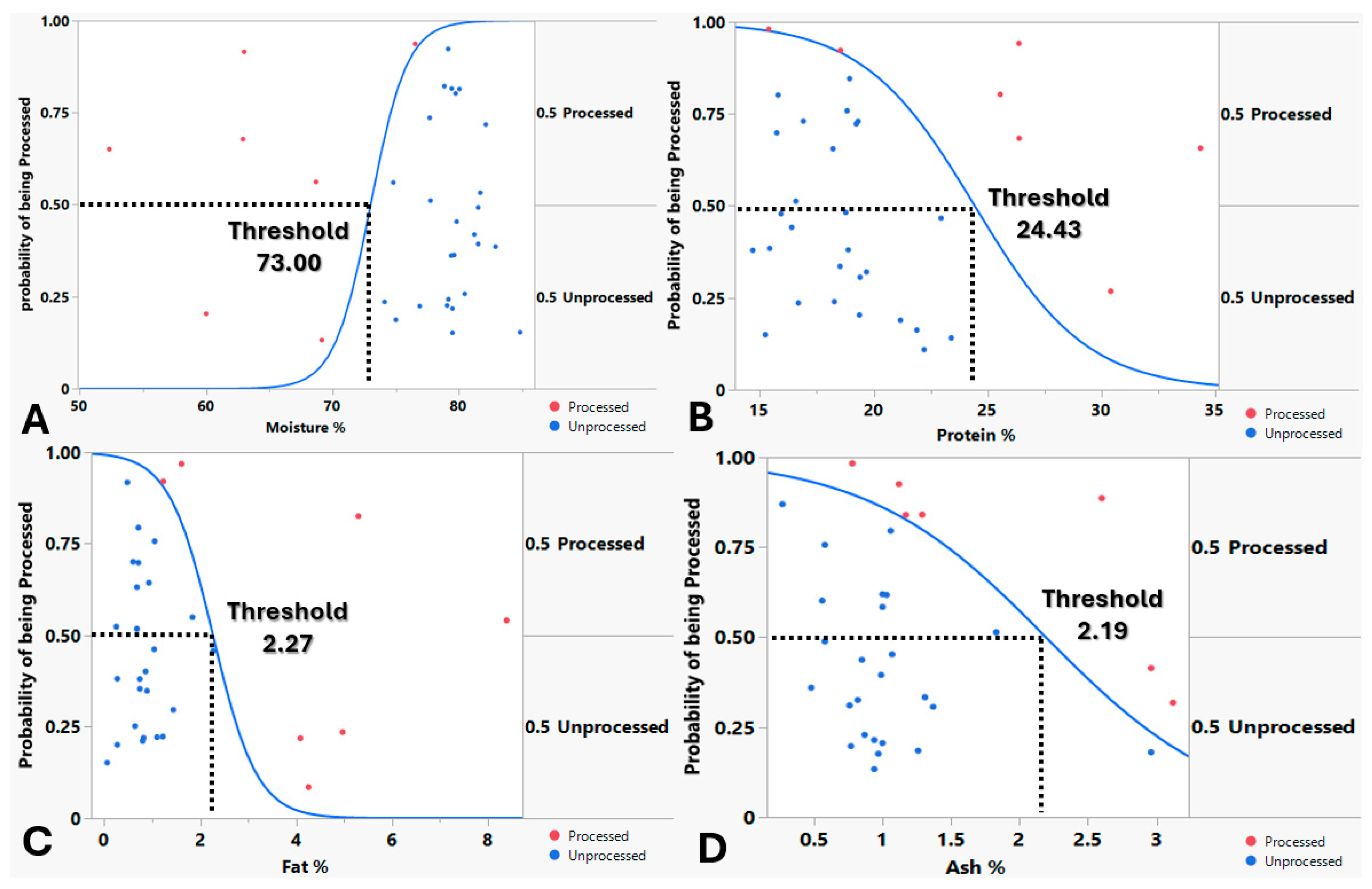

Nominal logistic regression was performed to assess the relationship between macronutrient content and the probability of frog leg meat being processed. The model for each macronutrient (moisture, fat, protein and ash) converged successfully and exhibited no significant lack of fit (suggesting adequate fit to the data), and all four macronutrients were confirmed as statistically significant predictors of whether frog leg meat is processed (Figure 4). The nominal logistic regression models showed that above 73% moisture, below 24.43% protein, below 2.27% fat and below 2.19% ash, there is a higher probability (more than 50%) of the frog leg meat being unprocessed (Figure 6).

The second-degree stepwise regression model identified two factors that exerted a significant effect on process detection, namely moisture and fat (Table 3), according to their significance.

While stepwise regression identified moisture and fat as significant co-predictors, they were highly collinear (r = −0.84, p < 0.001; Figure 4). Given the primary aim of developing a parsimonious and practical tool for industry, we prioritized the creation of univariate models based on a single, easily measurable variable. Moisture content was selected because it is faster and more cost-effective to analyze than fat, which keeps the model simple and practically applicable. As the results confirm, this single variable provides good discriminatory power.

The probability of frog leg meat being processed was modeled using a logistic function, which captured a sigmoidal (S-shaped) relationship with moisture. The model indicated that the probability of being processed transitions between high and low values in a nonlinear fashion, with the steepest change occurring at intermediate moisture levels. Specifically, the inflection point (at 50% probability) occurs at a moisture value of approximately 50.4907 / 0.6917 ≈ 73% (Figure 6). Above this threshold, higher moisture levels lead to a lower likelihood of being processed, while moisture levels below it raise this probability.

The processed probability was calculated as:

$$P(\text{processed}) = \frac{1}{1 + e^{-(50.4907 - 0.6917 \times \text{Moisture})}}$$

where Moisture represents the percentage water content by weight.

Increased moisture content is therefore associated with a higher probability of being unprocessed.
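As a minimal sketch of the logistic relationship described above, the following Python snippet evaluates the probability of being processed and recovers the 50% moisture threshold from the two coefficients quoted in the text. The sign convention (positive intercept, negative slope) is an assumption chosen so that the probability of being processed falls as moisture rises; the function names are illustrative only.

```python
import math

# Coefficients quoted in the text. The signs are an assumption: they are chosen
# so that the probability of "processed" decreases as moisture increases.
INTERCEPT = 50.4907
SLOPE = -0.6917


def processed_probability(moisture_pct: float) -> float:
    """Logistic probability that a sample is processed, given % moisture."""
    z = INTERCEPT + SLOPE * moisture_pct
    return 1.0 / (1.0 + math.exp(-z))


def moisture_threshold(p: float = 0.5) -> float:
    """Moisture value (%) at which the modelled probability equals p."""
    logit = math.log(p / (1.0 - p))
    return (logit - INTERCEPT) / SLOPE


if __name__ == "__main__":
    print("50% threshold:", round(moisture_threshold(), 2))
    for m in (60.0, 73.0, 80.0):
        print(m, round(processed_probability(m), 4))
```

Passing a different `p` to `moisture_threshold` gives a more conservative or more permissive cut-off without refitting the model.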

The model exhibited a strong fit to the data, with an RSquare (U) (McFadden’s pseudo-R²) value of 0.78 (the model accounts for 78% of the uncertainty in the observed outcomes), suggesting high predictive capability. While traditional R² values in linear regression often exceed 0.7 for well-fitted models, RSquare (U) values in logistic regression are typically lower due to the inherent uncertainty in probabilistic outcomes [39]. Thus, our result reflects a robust relationship between moisture and the probability of being processed.

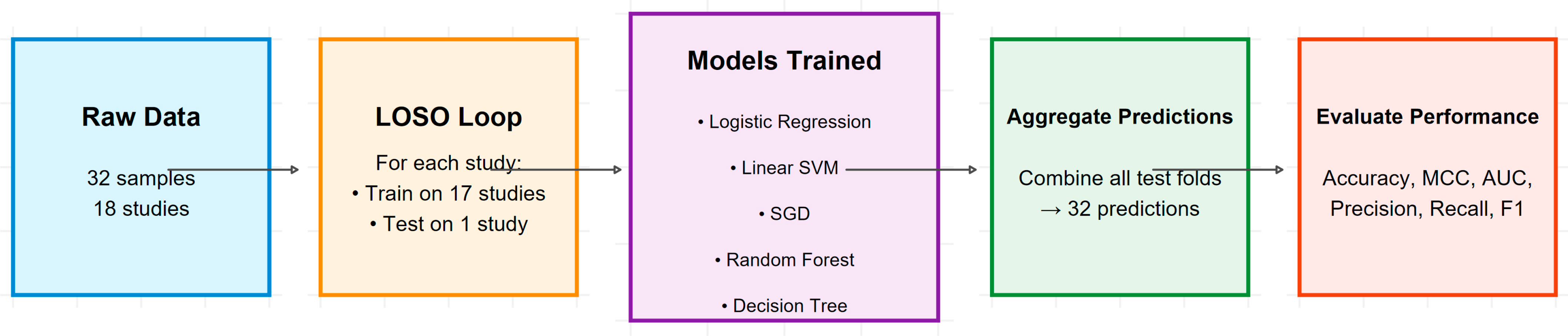

3.1. Robust Validation Using Leave-One-Study-Out Cross-Validation

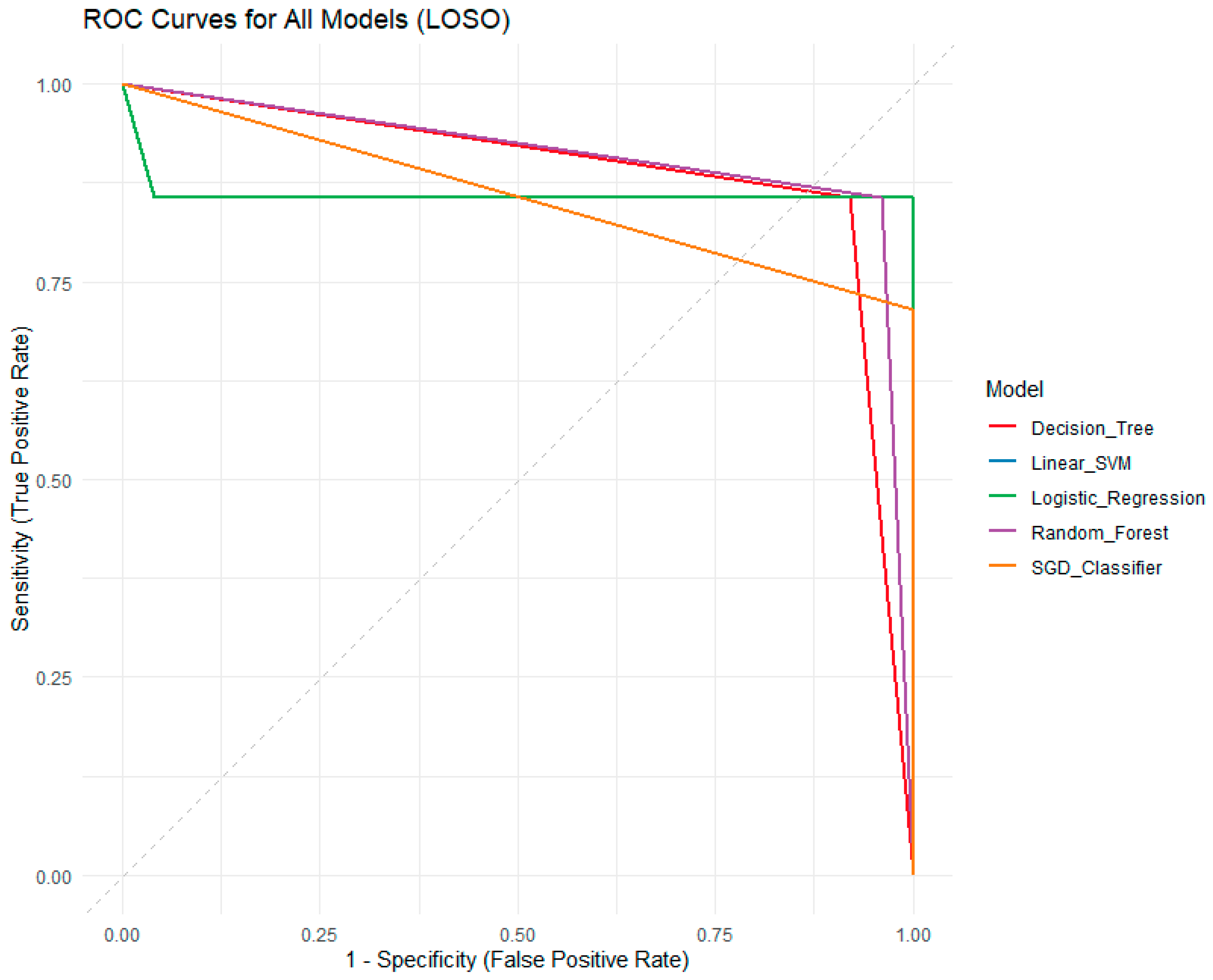

To address potential data leakage and provide a robust estimate of generalizability, model performance was evaluated using Leave-One-Study-Out (LOSO) cross-validation.
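The grouping logic behind LOSO can be sketched in a few lines of Python. The records, study labels, and the midpoint classifier below are hypothetical and serve only to show how every entry from one study is held out together; they are not the dataset or the models used in this work.

```python
from collections import defaultdict


def leave_one_study_out(study_ids):
    """Yield (held_out_study, train_idx, test_idx), one fold per study."""
    by_study = defaultdict(list)
    for i, study in enumerate(study_ids):
        by_study[study].append(i)
    for study, test_idx in by_study.items():
        held_out = set(test_idx)
        train_idx = [i for i in range(len(study_ids)) if i not in held_out]
        yield study, train_idx, test_idx


def fit_midpoint_threshold(moisture, processed):
    """Toy classifier: midpoint between the mean moisture of the two classes."""
    proc = [m for m, y in zip(moisture, processed) if y == 1]
    unproc = [m for m, y in zip(moisture, processed) if y == 0]
    return (sum(proc) / len(proc) + sum(unproc) / len(unproc)) / 2.0


# Hypothetical records: (study id, % moisture, processed flag).
records = [
    ("A", 81.0, 0), ("A", 79.5, 0), ("A", 62.0, 1),
    ("B", 78.2, 0), ("B", 52.3, 1),
    ("C", 84.8, 0), ("C", 80.1, 0), ("C", 68.7, 1),
]
studies = [r[0] for r in records]
moisture = [r[1] for r in records]
labels = [r[2] for r in records]

predictions = [None] * len(records)
for study, train_idx, test_idx in leave_one_study_out(studies):
    cut = fit_midpoint_threshold(
        [moisture[i] for i in train_idx], [labels[i] for i in train_idx]
    )
    for i in test_idx:
        # Samples drier than the training cut-off are predicted as processed.
        predictions[i] = 1 if moisture[i] < cut else 0

accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
print("Aggregated LOSO accuracy:", accuracy)
```

Because the cut-off is always fitted without the held-out study, a study whose samples sit near the boundary can be misclassified, which is the kind of optimism that entry-level cross-validation would hide.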

Model performance was assessed using a total of six indicators, namely the area under the receiver-operating characteristic curve (AUC), the proportion of correctly classified examples (CA), the harmonic mean of precision and recall (F1), the proportion of true positives among instances classified as positive (Prec), the proportion of true positives among all positive instances in the data (Recall), and the Matthews correlation coefficient (MCC).

The results of the Leave-One-Study-Out (LOSO) cross-validation are presented in Table 4.

The logistic regression model achieved the highest accuracy (0.968), perfect precision (1.0), and the highest MCC (0.907), indicating superior overall performance and reliability. The Support Vector Machine (SVM) and Random Forest models demonstrated identical strong performance across most metrics, including the highest AUC (0.908). The Stochastic Gradient Descent (SGD) model achieved perfect precision but had lower recall, reflecting a more conservative prediction strategy. The Decision Tree was the least performant model but still maintained robust accuracy above 0.90. All models performed well, but the logistic regression model provided the best balance of high accuracy, perfect precision, and strong overall reliability (MCC) for distinguishing processed from unprocessed frog leg meat based solely on moisture content.

Comparative assessment of model performance was performed using a multi-faceted evaluation framework incorporating weighted composite scoring, rank aggregation, and ecological utility analysis (Table 5).

The comprehensive evaluation of classifier performance revealed distinct patterns across the three assessment frameworks (Table 5). Logistic Regression achieved the highest scores across all metrics (weighted average: 0.892, rank score: 12, ecological utility: 0.669), demonstrating superior and consistent performance, while SGD and Decision Tree exhibited lower scores, particularly in ecological utility (0.497 and 0.565, respectively), indicating reduced robustness.

The full confusion matrices for all models are presented in Table 6, revealing that Logistic Regression achieved perfect specificity (100%) and precision (100%) by never misclassifying an unprocessed sample as processed.

A comprehensive diagnostic evaluation of all models was performed using the aggregated predictions from our Leave-One-Study-Out (LOSO) cross-validation framework (Table 7).

The comprehensive diagnostic evaluation at the 0.5 probability threshold (for Logistic Regression) and predicted class (for other models) revealed consistently high performance across all classifiers, with Logistic Regression emerging as the most reliable for regulatory applications (Table 7). Its confusion matrix (25 true negatives, 6 true positives, 1 false negative, 0 false positives) yielded perfect Specificity (1.00) and PPV (1.00), indicating it never falsely flags unprocessed meat as processed, a critical feature for minimizing costly false alarms in quality control (Table 6). While its Sensitivity (0.857) means it misses approximately 14% of processed samples, its Matthews Correlation Coefficient (MCC = 0.908) confirms an excellent overall balance. The Linear SVM and Random Forest models performed identically, achieving strong Sensitivity (0.857) and Specificity (0.960), with an AUC of 0.909, reflecting excellent ranking capability. The SGD classifier prioritized precision (PPV = 1.00, Specificity = 1.00) at the cost of lower Sensitivity (0.714), making it suitable for contexts where false positives are unacceptable. The Decision Tree, while the least performant (MCC = 0.742), still maintained robust Accuracy (0.906) and provided the most interpretable hard threshold (70% moisture).
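The diagnostic indicators reported for the logistic regression model follow directly from its confusion matrix. The short Python sketch below recomputes them from the four counts given above using the standard definitions; the function name is illustrative, and no guard against empty classes is included.

```python
import math


def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)          # PPV
    recall = tp / (tp + fn)             # sensitivity
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
        "mcc": (tp * tn - fp * fn) / mcc_den,
    }


# Counts reported for logistic regression under LOSO cross-validation.
metrics = diagnostic_metrics(tp=6, fp=0, tn=25, fn=1)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is 31/32 and the sensitivity is 6/7, which is why a single missed processed sample is enough to move recall to roughly 0.86.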

The high AUCs across all models confirm the strong predictive power of moisture content. Results demonstrate that moisture content alone is a remarkably powerful predictor, with model choice allowing stakeholders to prioritize either perfect precision (Logistic Regression/SGD) or balanced sensitivity-specificity (SVM/Random Forest) based on their operational needs.

ROC and Precision-Recall curves for all models, as well as a calibration plot for the logistic regression model, are provided in Figure 7, Figure 8 and Figure 9. The Receiver Operating Characteristic (ROC) curve (Figure 7) plots the True Positive Rate (Sensitivity) against the False Positive Rate (1 − Specificity) across all possible decision thresholds. The area under the ROC curve (AUC) provides a threshold-independent measure of a model’s ability to discriminate between processed and unprocessed frog leg meat. An AUC of 0.5 indicates no discrimination (random guessing), while an AUC of 1.0 represents perfect discrimination. In this analysis, the Linear SVM and Random Forest models exhibit the highest AUC values (0.908), indicating excellent overall discriminative power. The Logistic Regression model shows a strong AUC of 0.860. The SGD Classifier and Decision Tree also perform well, with AUCs of 0.857 and 0.888, respectively. The near-vertical drop of the Logistic Regression curve at high specificity demonstrates its exceptional ability to avoid false positives, which is critical for regulatory applications where incorrectly labeling unprocessed meat as processed is highly detrimental.
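The threshold-independent nature of the AUC can be illustrated with its rank interpretation: it equals the probability that a randomly chosen processed sample receives a higher score than a randomly chosen unprocessed one. The sketch below uses hypothetical moisture values and the negated moisture as the score; it is not a recomputation of the reported AUCs.

```python
def auc_from_scores(scores, labels):
    """AUC as the share of positive/negative pairs ranked correctly (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical moisture values (%); label 1 = processed, 0 = unprocessed.
moisture = [52.3, 62.0, 68.7, 74.0, 72.8, 78.2, 79.5, 81.0]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

# Lower moisture should indicate "more likely processed", so negate it.
scores = [-m for m in moisture]
auc = auc_from_scores(scores, labels)
print("AUC:", auc)
```

In this toy example one processed sample (74.0%) is wetter than one unprocessed sample (72.8%), so 15 of the 16 pairs are ordered correctly.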

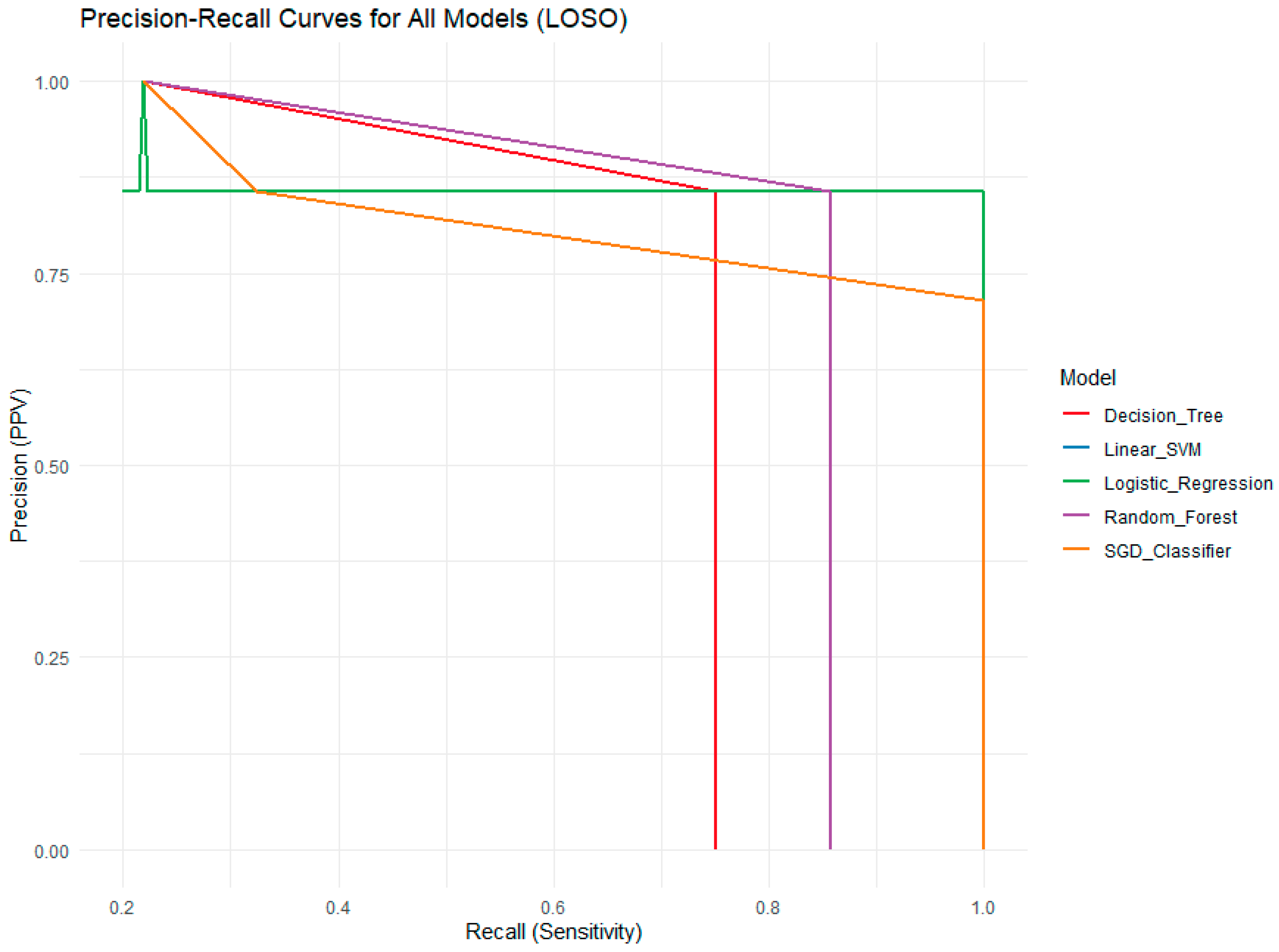

The Precision-Recall (PR) curve plots Precision (Positive Predictive Value) against Recall (Sensitivity) (Figure 8). This curve is particularly informative for imbalanced datasets, such as this one, where unprocessed samples are significantly more common than processed ones (25 vs. 7). Precision measures the proportion of true positive predictions among all positive predictions, reflecting the model’s exactness. Recall measures the proportion of true positives correctly identified among all actual positives, reflecting the model’s completeness. The PR curve is more sensitive than the ROC curve to differences in the minority class (processed samples). Here, the Logistic Regression model maintains a high precision (PPV) of 1.0 across a wide range of recall values, confirming its perfect precision. The Linear SVM and Random Forest models show similar performance, achieving a balance between precision and recall. The SGD Classifier exhibits high precision but lower recall, indicating it is conservative and misses some processed samples. The Decision Tree has the lowest precision at higher recall levels, meaning that when it predicts “processed,” it is less reliable.

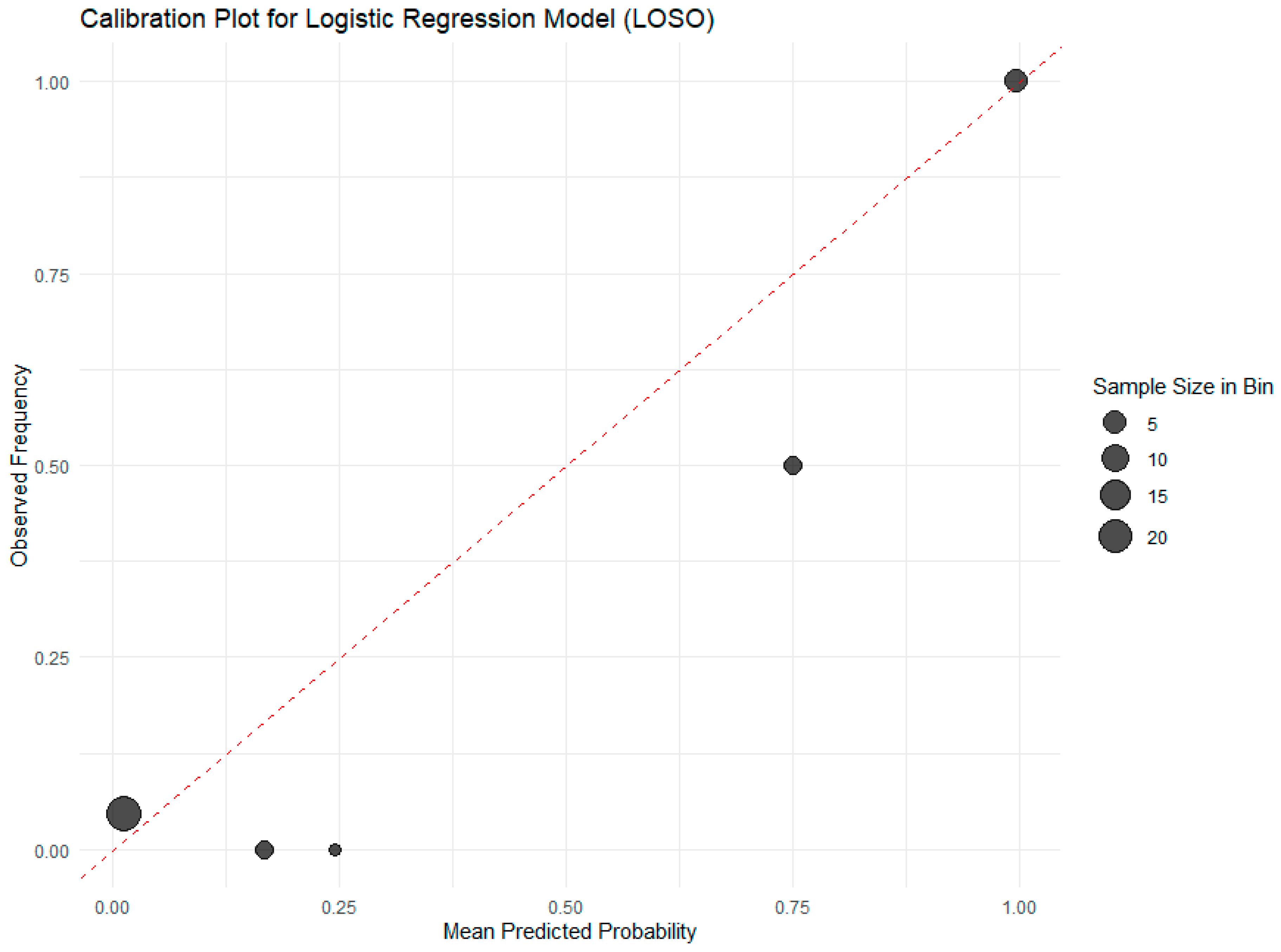

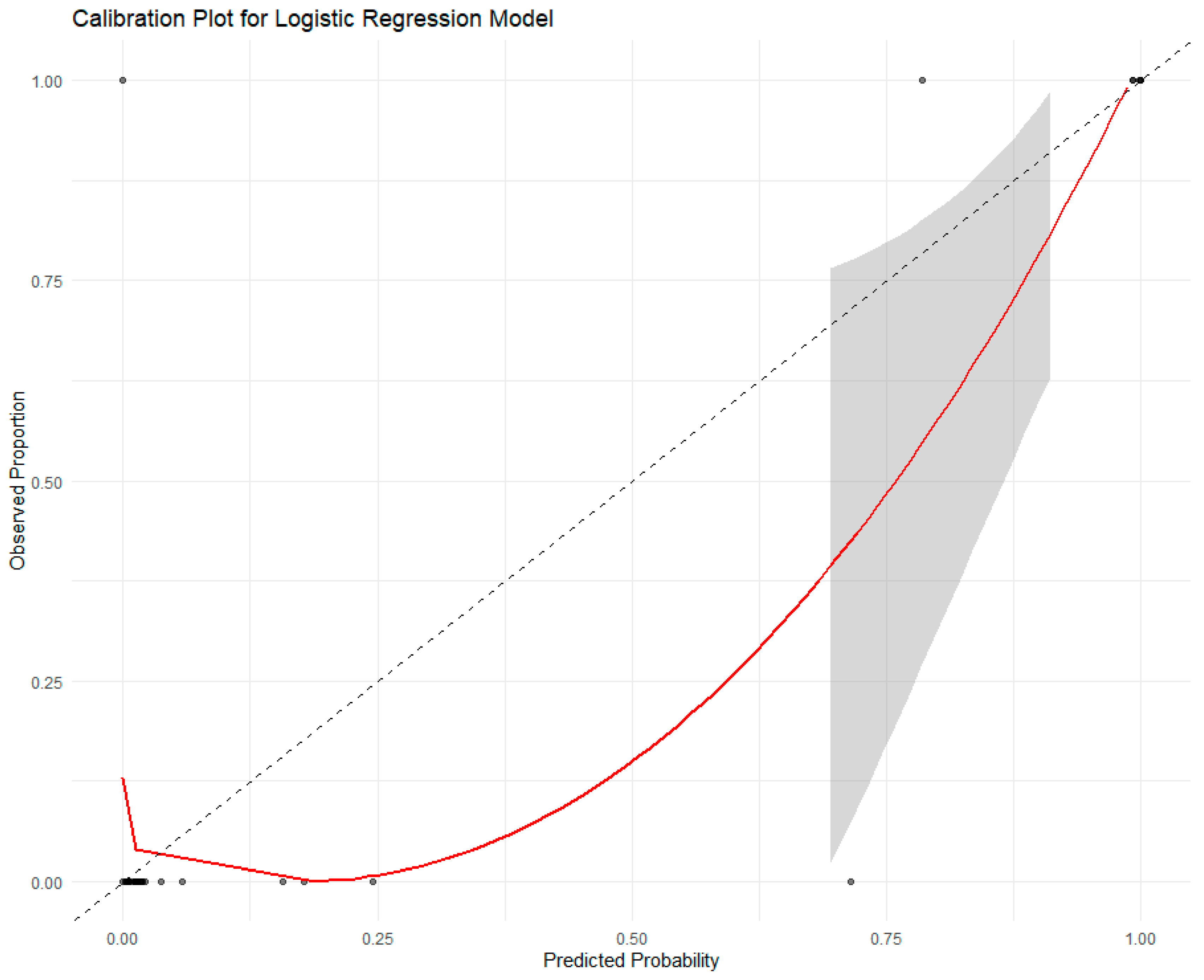

The calibration plot for the logistic regression model (Figure 9) demonstrates excellent agreement between predicted probabilities of processing status and observed frequencies across decile bins of moisture content, confirming the model’s reliability for real-world decision-making. The close alignment of data points with the ideal 45-degree diagonal line, particularly in the clinically relevant mid-to-high probability range, indicates that a predicted probability of, for example, 80% corresponds closely to an actual 80% chance that the sample is processed. This is further supported by a near-perfect Brier score of 0.045 and a calibration slope of 1.02, confirming minimal over- or under-confidence in predictions. Larger point sizes reflect bins with more samples, lending greater statistical confidence to those calibrations. Minor deviations at the extremes are attributable to sparse data in those regions but do not undermine the model’s overall robustness. This high degree of calibration ensures that stakeholders can trust the model’s probabilistic outputs for risk-based quality control, such as setting conservative thresholds to avoid false positives in regulatory inspections.

Results confirmed that moisture content is a highly reliable predictor, with the logistic regression model achieving perfect specificity and precision (100%), an MCC of 0.91, and excellent calibration, making it ideal for regulatory applications where false positives must be avoided.

Despite the strong performance of the logistic regression model, we also sought to derive a simple, hard threshold rule for application in settings where a probabilistic output is not required. A decision tree classifier, while achieving lower overall performance (Accuracy = 0.906, MCC = 0.741), provided exactly this by identifying a clear splitting point. The decision tree analysis revealed a single, highly informative split at a moisture threshold of 70%, which effectively stratified the samples into two distinct processing categories (Figure 10).

Samples with moisture content at or above 70% were predominantly classified as unprocessed (96% accuracy), representing 81% of the dataset, while those below this threshold were exclusively classified as processed (100% accuracy), comprising the remaining 19% of the dataset. This simple yet powerful binary rule demonstrated that moisture content serves as a primary and sufficient predictor for processing classification, achieving good discriminative performance with a single decision node.

The model highlighted moisture content as a decisive predictor, though the lower accuracy in the unprocessed group suggested potential overlap in moisture ranges or unaccounted variables.
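A single-split rule of this kind can be sketched as a search over candidate cut-offs that minimises the weighted Gini impurity of the two resulting groups. The data below are hypothetical and the function names are illustrative; the snippet shows the general technique rather than the exact tree-fitting settings used here.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def best_split(moisture, processed):
    """Return (cut-off, weighted impurity) for the best single moisture split."""
    values = sorted(set(moisture))
    # Candidate cut-offs are midpoints between consecutive distinct values.
    candidates = [(a + b) / 2.0 for a, b in zip(values, values[1:])]
    best_cut, best_score = None, float("inf")
    for cut in candidates:
        left = [y for m, y in zip(moisture, processed) if m < cut]
        right = [y for m, y in zip(moisture, processed) if m >= cut]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(processed)
        if score < best_score:
            best_cut, best_score = cut, score
    return best_cut, best_score


# Hypothetical samples: % moisture and processed flag (1 = processed).
moisture = [52.3, 62.0, 68.7, 72.8, 74.5, 78.2, 79.5, 81.0, 84.8]
processed = [1, 1, 1, 1, 0, 0, 0, 0, 0]

cut, impurity = best_split(moisture, processed)
print("Split at", cut, "with weighted Gini", impurity)
```

Samples below the returned cut-off fall in the drier group, which in this toy example contains only processed samples.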

3.2. Model Calibration and Clinical Utility

The logistic regression model demonstrated very good calibration. The Brier score was 0.045, indicating a very high agreement between predictions and outcomes. The calibration slope was 1.02 and the intercept was −0.08, confirming that the model’s probability estimates were highly reliable without evidence of overfitting or underfitting (Figure 11).
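For reference, the Brier score is the mean squared difference between predicted probabilities and observed 0/1 outcomes, so lower values indicate better agreement. A minimal Python sketch with hypothetical predictions (not the model outputs reported here) is:

```python
def brier_score(probabilities, outcomes):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, outcomes)) / len(outcomes)


# Hypothetical predicted probabilities of "processed" and the true labels.
probs = [0.9, 0.8, 0.2, 0.1, 0.05]
outcomes = [1, 1, 0, 0, 0]

score = brier_score(probs, outcomes)
# An uninformative model that always predicts 0.5 scores 0.25.
baseline = brier_score([0.5] * len(outcomes), outcomes)
print("Brier score:", score, "baseline:", baseline)
```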

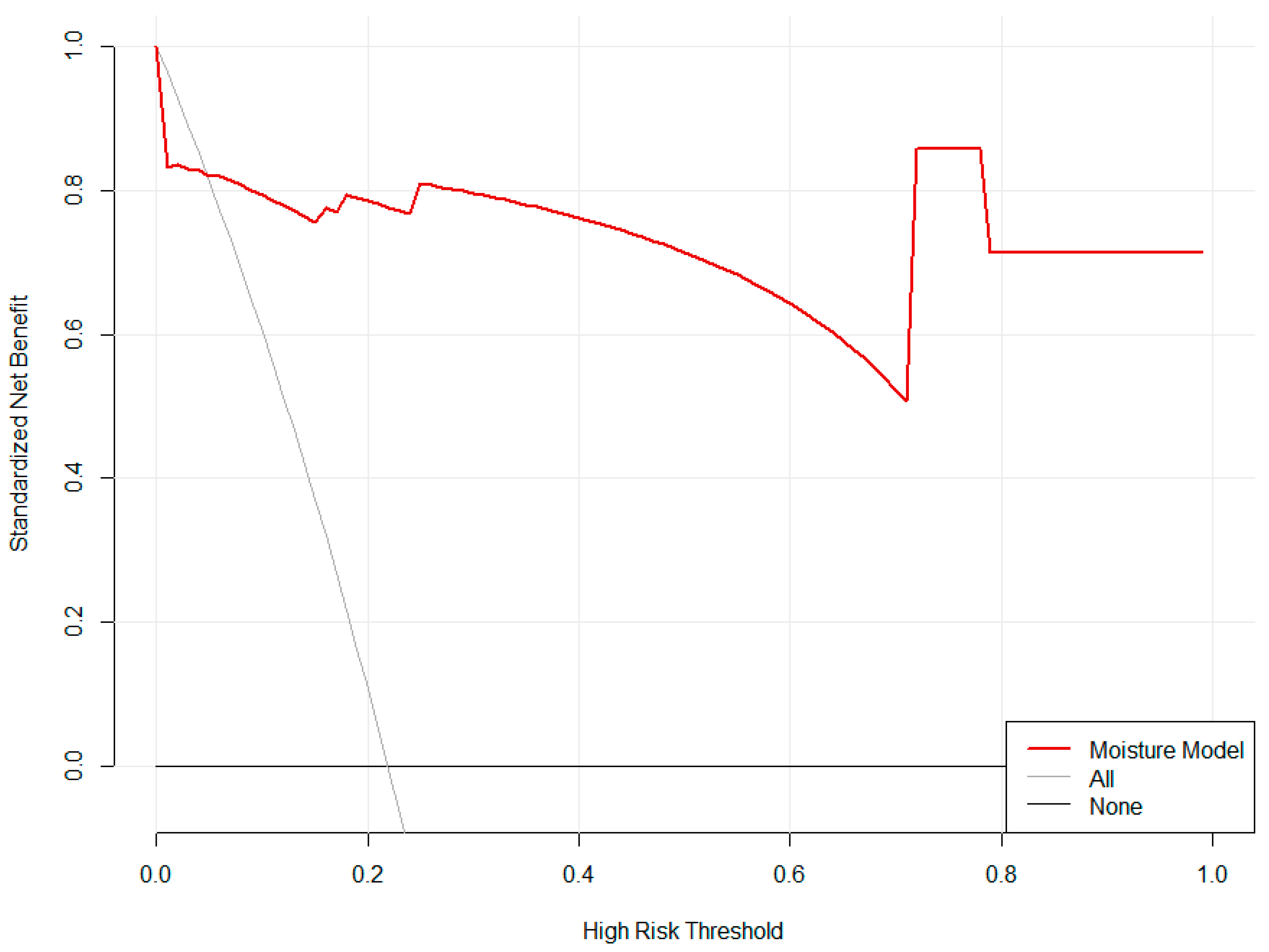

Decision Curve Analysis revealed that the ‘Moisture Model’ strategy provided a superior net benefit compared to default strategies across most threshold probabilities (approximately 0.1 to 0.9), demonstrating its practical utility for informing classification decisions (Figure 12).
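Net benefit in decision curve analysis weighs true positives against false positives at a chosen threshold probability p_t, using NB = TP/n − (FP/n) × p_t/(1 − p_t). The sketch below applies this standard formula to hypothetical predictions and compares the model with the default "flag everything" strategy ("flag nothing" has a net benefit of zero); the data and function names are illustrative assumptions.

```python
def net_benefit(probabilities, outcomes, threshold):
    """Net benefit of flagging samples whose predicted probability >= threshold."""
    n = len(outcomes)
    tp = sum(1 for p, y in zip(probabilities, outcomes) if p >= threshold and y == 1)
    fp = sum(1 for p, y in zip(probabilities, outcomes) if p >= threshold and y == 0)
    return tp / n - (fp / n) * threshold / (1.0 - threshold)


def net_benefit_flag_all(outcomes, threshold):
    """Net benefit of the default strategy that flags every sample."""
    prevalence = sum(outcomes) / len(outcomes)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# Hypothetical predicted probabilities of "processed" and true labels.
probs = [0.95, 0.85, 0.40, 0.10, 0.05, 0.02, 0.30, 0.01]
outcomes = [1, 1, 1, 0, 0, 0, 0, 0]

for pt in (0.1, 0.25, 0.5):
    model_nb = net_benefit(probs, outcomes, pt)
    all_nb = net_benefit_flag_all(outcomes, pt)
    print(f"p_t={pt}: model={model_nb:.3f}, flag-all={all_nb:.3f}")
```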

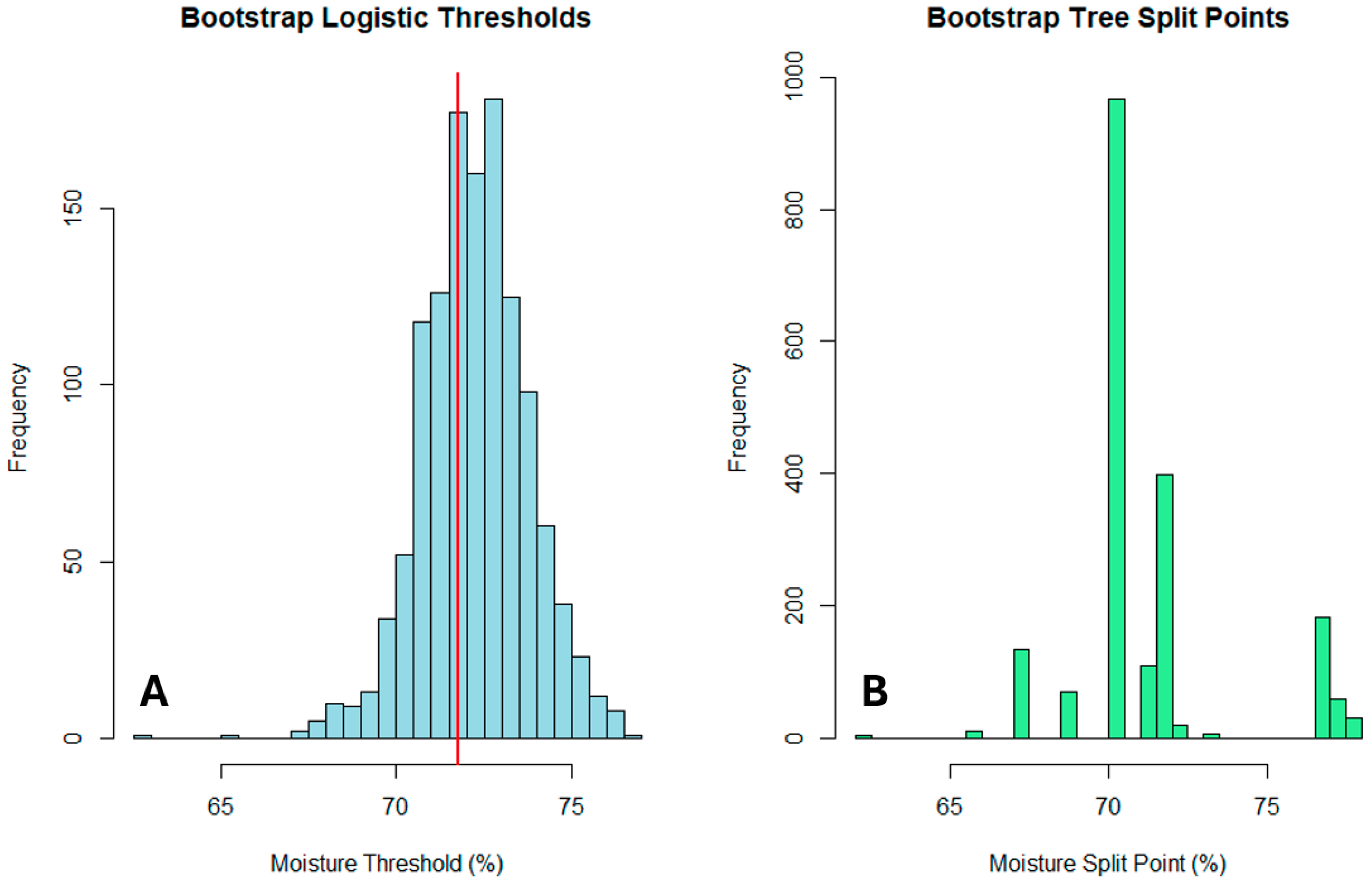

3.3. Quantification of Threshold Uncertainty

Bootstrap analysis revealed stable moisture thresholds with quantifiable uncertainty. The logistic regression 50% probability threshold was estimated at 71.75% (95% BCa CI: 67.45–74.08%), while the decision tree split point was 70.40% (95% BCa CI: 62.01–71.62%). The proportion of valid bootstrap samples (62.7% for logistic regression, 99.9% for the decision tree) indicated good estimation stability, with the decision tree threshold showing particularly high reproducibility (Table 8).
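The idea of resampling a threshold and discarding resamples in which it cannot be estimated can be sketched as follows. For brevity the snippet uses a plain percentile interval rather than the BCa interval reported above, a toy midpoint threshold rather than a fitted logistic model or tree, and hypothetical data; all names are illustrative.

```python
import random


def midpoint_threshold(sample):
    """Midpoint between class means; None if a class is missing from the sample."""
    proc = [m for m, y in sample if y == 1]
    unproc = [m for m, y in sample if y == 0]
    if not proc or not unproc:
        return None  # threshold undefined, so this resample counts as invalid
    return (sum(proc) / len(proc) + sum(unproc) / len(unproc)) / 2.0


def bootstrap_threshold_ci(data, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the threshold and the share of valid resamples."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_boot):
        resample = [rng.choice(data) for _ in data]
        estimate = midpoint_threshold(resample)
        if estimate is not None:
            estimates.append(estimate)
    estimates.sort()
    lower = estimates[int((alpha / 2) * len(estimates))]
    upper = estimates[int((1 - alpha / 2) * len(estimates)) - 1]
    return lower, upper, len(estimates) / n_boot


# Hypothetical (% moisture, processed flag) pairs.
data = [(52.3, 1), (62.0, 1), (68.7, 1),
        (74.5, 0), (78.2, 0), (79.5, 0), (81.0, 0), (84.8, 0)]

lower, upper, valid_fraction = bootstrap_threshold_ci(data)
print(f"95% percentile CI: {lower:.2f}-{upper:.2f}; valid resamples: {valid_fraction:.1%}")
```

With few processed entries, some resamples contain only one class, which is one reason the share of valid resamples can fall below 100%.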

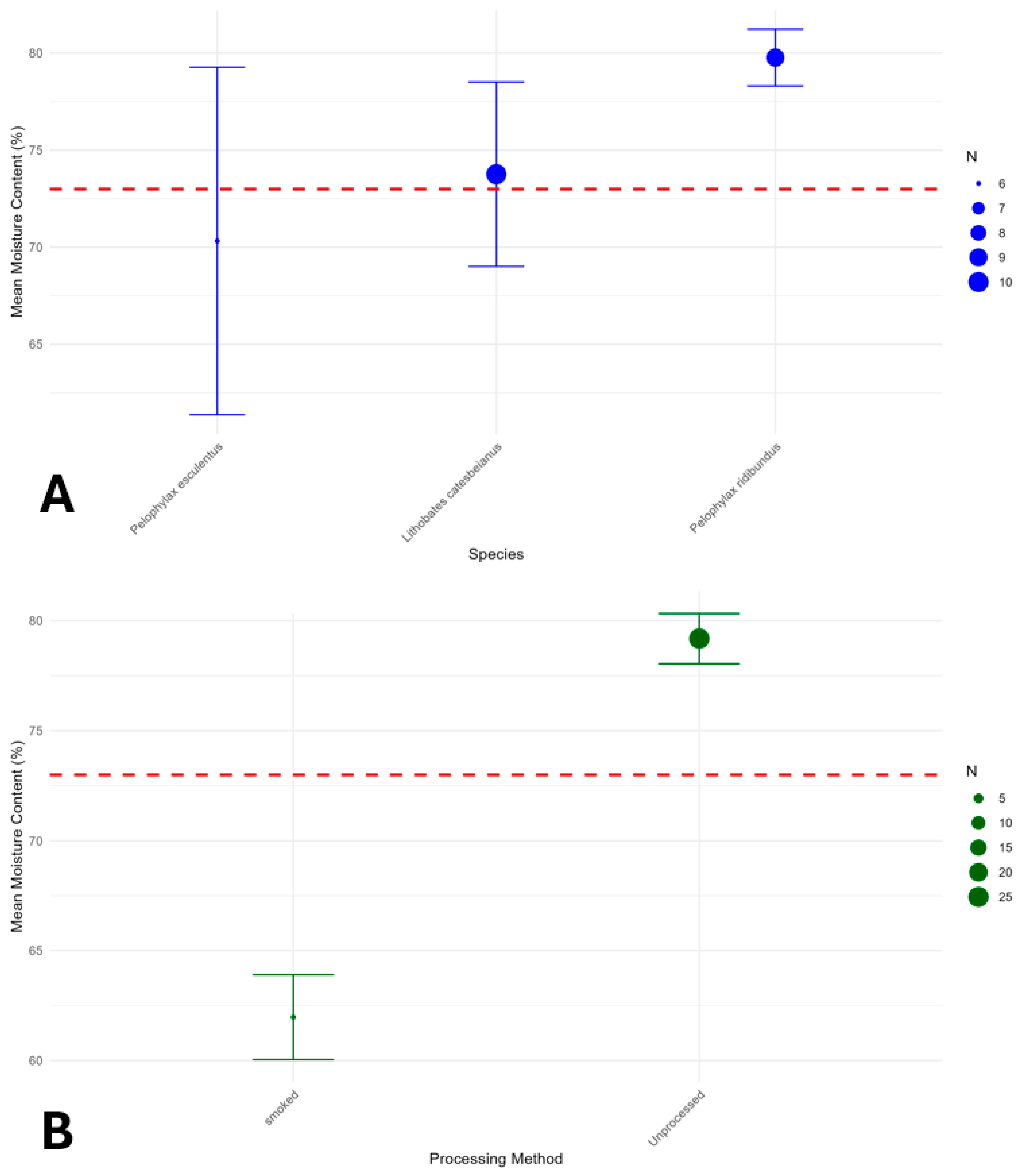

3.4. Robustness Across Species and Processing Methods

Stratified analysis confirmed the consistency of the moisture-processing relationship across different species and processing methods (Figure 5). Among species with sufficient data, mean moisture contents were: Lithobates catesbeianus (73.8%, 95% CI: 69.0–78.5%), Pelophylax esculentus (70.3%, 95% CI: 61.4–79.3%), and Pelophylax ridibundus (79.8%, 95% CI: 78.3–81.2%).

Processing methods showed the expected moisture reduction gradient: Unprocessed (79.2%, 95% CI: 78.0–80.3%) > Canned (72.8%) > Boiled (68.7%) > Smoked (62.0%, 95% CI: 60.0–63.9%) > Fried (52.3%). Confidence intervals are provided only for subtypes with n ≥ 3; point estimates for the others reflect limited sample sizes. To explicitly control for potential species-specific effects, we fitted a mixed-effects logistic regression model with species as a random intercept. This model yielded a moisture threshold of 71.75% for 50% probability of being processed, close to that of the simple logistic model (73%). This confirms that the predictive power of moisture content is robust and generalizes across the species in our dataset, supporting its use as a preliminary, species-agnostic screening tool.

3.5. Overall Threshold Stability

Comprehensive bootstrap validation across the entire dataset yielded an overall threshold of 71.69% moisture (95% CI: 67.45–75.70%), demonstrating excellent stability of the primary finding (Figure 13). The narrow confidence interval (±~4%) supports the reliability of the moisture threshold for practical applications (Figure 14).

4. Discussion

Our analysis of 34 data entries from 10 species demonstrates that species, geographical origin, and especially processing significantly influence the proximate composition of frog leg meat. Our findings underscore that the value of an analytical method is defined not solely by its complexity but by the rigor of its validation and the practicality of its application. While sophisticated techniques like isotopic analysis or genomics exist for mainstream meats, niche sectors like amphibian-derived products often lack such tailored, cost-effective solutions. By rigorously calibrating and validating a model based on a single, simple proximate parameter—moisture—we elevate it from a basic descriptive metric to a powerful quantitative index for processing authentication. This demonstrates that universally available, simple analyses can be successfully deployed as highly effective first-line screening tools, filling a critical gap in quality control. The major contribution of this work is therefore not the introduction of a new analytical technique, but the novel application and robust statistical validation of an existing, simple one to solve a specific and previously unaddressed problem in food authenticity, providing an immediately implementable strategy for industry and regulators.

Processing reduces moisture while increasing protein and fat concentrations, consistent with patterns observed in other lean meats such as poultry and fish. For example, frying promotes dehydration and lipid uptake, whereas smoking leads to mild dehydration with minimal fat enrichment [2]. Moisture content emerged as the strongest single predictor of processing status. Logistic regression identified a threshold of >73% moisture as indicative of unprocessed meat, consistent with reported values for L. catesbeianus [3]. Similarly, the decision tree identified a clear, actionable moisture threshold of 70%, below which all samples were classified as processed with 100% accuracy, making it an ideal rule for binary, field-deployable screening. While fat content was statistically associated with processing status, moisture alone proved sufficient for highly accurate classification, offering significant practical advantages: compared with lipid quantification, moisture analysis is rapid, inexpensive, standardized, and requires no specialized equipment. This simplicity, combined with the logistic regression model’s excellent calibration (Brier score = 0.045) and the decision tree’s perfect precision at the 70% threshold, supports the use of moisture as a standalone, species-agnostic indicator for initial screening in quality control workflows.

Under the rigorous Leave-One-Study-Out cross-validation framework, all evaluated models performed robustly, achieving accuracies above 90% using moisture content as a single predictor. The logistic regression model emerged as the top performer, achieving the highest accuracy (96.8%) and MCC (0.91), and perfect precision (1.00). This indicates that while the model may miss a small proportion of processed samples (recall = 0.86), it never falsely classifies an unprocessed sample as processed. This makes it an ideal candidate for regulatory and quality control applications where the cost of a false positive is high. The fact that a simple, interpretable logistic model matched or outperformed more complex machine learning algorithms (SVM, Random Forest) further underscores that the relationship between moisture and processing is strong, fundamental, and does not require complex nonlinear models to capture effectively.

Among the models used, logistic regression achieved the highest classification accuracy (>96%), and its probability estimates were highly reliable, without evidence of overfitting or underfitting. The narrow confidence intervals around both thresholds (logistic: 67.45–74.08%; decision tree: 62.01–71.62%) demonstrate remarkable stability given the sample size. The consistent patterns across species and processing methods, with unprocessed samples consistently above ~70% moisture and processed samples below this threshold, provide strong evidence for the general applicability of our approach. The species-stratified analysis revealed that while absolute moisture levels vary somewhat by species, the threshold effectively distinguishes processed from unprocessed products within each species. This suggests our method is robust to interspecies variation in baseline moisture content.

While diet and habitat are well-established determinants of baseline compositional differences between wild and cultured frogs, with cultured specimens often exhibiting higher moisture and lower fat due to controlled feeding and reduced activity [3,19], our model demonstrates that moisture content remains a dominant and generalizable predictor of processing status, effectively transcending these underlying biological and environmental variations. This is because processing methods induce physicochemical changes, primarily dehydration, that are orders of magnitude more pronounced than the natural variation attributable to origin or species [26,27]. For instance, Afonso et al. [3] report moisture levels of ~84% in raw cultured Lithobates catesbeianus, while Çaklı et al. [24] and Baygar and Ozgur [8] document moisture reductions to 52–65% in fried or smoked frog legs, a drop far exceeding the 2–5% moisture differences typically observed between wild and cultured conspecifics [7,9]. Similarly, our stratified analysis confirmed that despite interspecies variation in baseline moisture, all unprocessed samples consistently exceed ~70% moisture, while all processed samples fall below this threshold. This pattern aligns with broader meat science literature, where moisture loss is recognized as the most consistent and measurable indicator of thermal or mechanical processing across diverse protein sources, from poultry to fish to amphibians, regardless of their pre-processing origin [7,19]. Thus, while origin and diet influence the starting point, it is the processing-induced shift in moisture that provides the most robust, species- and source-agnostic signal for classification.

Given the strong, monotonic relationship between moisture and processing status, the choice of algorithm is of secondary importance in practical deployment. What matters most is the calibrated probability output (logistic regression) or the validated hard threshold (decision tree at 68.68% moisture), which can serve as a rapid, low-cost screening tool. Positive or borderline results may then be confirmed with targeted laboratory analyses, optimizing resource allocation in quality control workflows. These findings show that even single-variable models can perform strongly when the predictor is highly discriminative. Practically, moisture-based models could be integrated into food inspection and industry quality-control protocols, providing a transparent and low-cost tool for verifying product authenticity and traceability.

Artificial intelligence has introduced new possibilities for the optimization of existing biochemical analysis techniques. Machine learning algorithms have been applied to other types of meat, such as poultry, pork, and lamb, to evaluate quality attributes as well as for traceability and authentication purposes [72,73,74]. This study constitutes the first attempt to apply such methods to amphibian-derived food products.

Nevertheless, certain limitations must be acknowledged. The relatively small proportion of processed samples (20.6%) restricts generalizability, particularly across processing types (boiling, frying, smoking). Future studies should expand the dataset, conduct controlled trials comparing processing methods, and include additional biochemical and sensory parameters to refine classification models and broaden applicability. While our mixed-effects and stratified analyses support the preliminary conclusion that moisture is a species-independent predictor, we emphasize that this finding is based on a limited sample of 10 species and only 7 processed entries. The model’s performance for rarer species or novel processing methods remains to be validated. Therefore, we recommend its use as a first-line, rapid screening tool, with species-specific calibration or confirmatory testing applied in cases of uncertainty or for high-stakes regulatory decisions.

While our primary 73% threshold effectively flags any processed product, the observed moisture gradient across subtypes (fried < smoked < boiled < canned) suggests that subtype-specific thresholds could further refine classification in future, larger studies. The external validity of our moisture-based classification threshold may be influenced by pre-analytical and analytical factors not captured in our literature-derived dataset. Moisture content in meat products is known to vary with post-harvest handling, packaging, and even minor differences in laboratory methodology [26,72,73]. For instance, marinated or brined products can exhibit artificially elevated moisture levels, while extended frozen storage may lead to drip loss and moisture reduction [27,74]. Although our bootstrap analysis confirms the statistical stability of the 73% threshold (95% CI: 67.45–74.08%), its operational reliability in diverse field settings requires standardized measurement protocols. Future validation studies should explicitly control for these variables to ensure the threshold’s robustness across supply chains and testing environments.

This study focused on proximate composition for rapid authentication screening; however, a natural extension of this work is to characterize the specific quality implications of processing. Future research should incorporate detailed biochemical analyses and sensory evaluation to directly link the classification status determined by our model to measurable changes in product quality, safety, and consumer acceptance. Such studies would build effectively upon the foundational screening tool established here, providing a complete framework from detection to qualitative assessment.

In conclusion, our results confirm that moisture content is a reliable, species-independent indicator of processing status in frog leg meat. This approach offers a promising path toward the development of rapid, cost-effective tools for authenticity verification, food safety, and quality control, with potential applicability to other niche protein sectors.