1. Introduction

The robotics community has long been fascinated by biped walkers. Their humanoid form and ability to locomote in human-centered environments have sparked interest in their potential applications. For instance, bipedal robots can replace humans in hazardous situations, such as landmine-ridden fields or radioactive zones. Or consider the possibility of exoskeletons aiding human mobility when compromised by neuromuscular diseases.

However, one of the major challenges in designing bipedal robot walkers is the definition of criteria to generate stable gait trajectories guaranteeing that the robot does not fall during gait. In humans, a combination of neuromechanical factors results in stable, robust, versatile, and energy efficient gait [1,2]. However, since the state of the art of motor control neurophysiology cannot fully model the complete human stability control during walking, the biomimetic translation of these characteristics into biped robots is not an easy task. Moreover, the dynamics of biped locomotion is nonlinear, due to its strong dependency on the system configuration [3], and thus trajectory planning and execution to ensure stability is a complex problem.

In 1969, Vukobratovic et al. introduced one of the first stability criteria, the Zero Moment Point (ZMP), for biped robot walking pattern generation and control [4]. The ZMP is the point on the ground where the resulting torque of inertial and gravitational forces on the robot has no horizontal component. This requires the resulting forces between the feet and the ground to be located within the region defined by the contact between the feet and the ground.

Although this approach allowed the development of many biped walking robots, the constraints imposed by the criterion result in some drawbacks on the robot movement. The need to position the center of pressure (CoP) inside the support polygon implies that the robot cannot fully exploit the inverted pendulum dynamics. This results in high energy consumption with a slow and unnatural motion of the robot compared to human walking. Furthermore, it requires high accuracy in the measurement of the robot joint positions.

Several techniques have been proposed to overcome these limitations. For instance, in [5] the authors extended the ZMP by introducing Preview Control theory to achieve a stable gait that adapts to uneven terrain. The step capturability criterion (SC), proposed by Pratt and Tedrake [6], is based on the definition of a point on the ground for foot placement, the capture point, such that, on reaching this point, the robot can stop in static equilibrium at all joints. This idea has a clear counterpart in the human gait [7], and it is also related to the mechanism employed by humans to recover from a trip [8]. In 2012, Koolen et al. [9] expanded the SC criterion to the N-Step Capturability (N-SC), in such a way that it is possible to reach the capture point in a certain number of steps.
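To make the capture point idea concrete, the following minimal sketch computes the instantaneous capture point under a linear inverted pendulum (LIP) simplification. The LIP model, the function name `capture_point`, and the numeric values are assumptions made here for illustration; the cited works rely on richer formulations.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def capture_point(com_x, com_vx, com_height):
    """Instantaneous capture point of a linear inverted pendulum (assumed model).

    Returns the ground position where the foot would have to be placed
    for the pendulum to come to rest above it.
    """
    omega = math.sqrt(G / com_height)  # natural frequency of the pendulum
    return com_x + com_vx / omega


# Hypothetical state: CoM 0.05 m ahead of the support foot, moving at 0.4 m/s
x_cp = capture_point(com_x=0.05, com_vx=0.4, com_height=0.9)
print(f"capture point at {x_cp:.3f} m from the support foot")
```

In this simplified picture, a faster or lower CoM pushes the capture point further ahead, which is why a larger step is needed to stop.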

Later, the predicted step viability (PSV) was proposed in [10], inspired by the N-SC idea and the human ability to recover from perturbations, such as tripping. In this way, a gait is considered stable if the biped is able to reach a capture point in a finite time. It reduces the constraints imposed on the current step in such a way that it only has to end in a configuration from which future steps are able to bring the robot to a capture point.

To determine the capability of the biped to reach a capture point, the PSV has to plan the desired gait pattern and verify whether this pattern satisfies the stability criterion at the beginning of each step. This is achieved via a multiphase optimization problem, as it is based on the predicted behavior of future steps. Using this criterion, the gait pattern can be non-cyclic, like the human gait while walking on irregular surfaces. Given some desired gait parameters such as step length, center of mass (CoM) height, horizontal velocity, and trunk inclination, the algorithm optimizes the step to get as close to these parameters as possible while reducing the capture point distance and adjusting other parameters such as the step duration, advancing or delaying the foot contact as needed. This means that the algorithm can self-adapt to maintain critical constraints while ensuring fast recoverability, as is found in the human gait when recovering from a trip [8]. The major limitation of the PSV is the complexity of the optimization to plan each step. Since the algorithm uses the complete robot dynamics to assess the recoverability of each possible step, it cannot be directly applied to control a biped robot in real-time.

In summary, the PSV is a powerful stability and trajectory planning criterion that optimizes joint trajectories to minimize consumption and maximize stability, ensuring the existence of subsequent stable steps. However, in its analytic formulation, it is impossible to apply to real-time embedded systems. In this paper, we explore machine-learning-based solutions that are able to implement the PSV stability criterion in real time, i.e., classify whether the step is going to be stable or not. We will analyze a set of classifiers coming from different classification paradigms. We will test and compare them in 5-segment biped walkers, such as robots and exoskeletons.

2. Materials and Methods

2.1. Predicted Step Viability

In the PSV, the current step is planned to guarantee that the next step exists on which the robot is capable of either reducing or maintaining the capture point distance [10]. This multistage optimization problem guarantees the stability of the robot and the viability of subsequent steps, along with minimizing energy consumption.

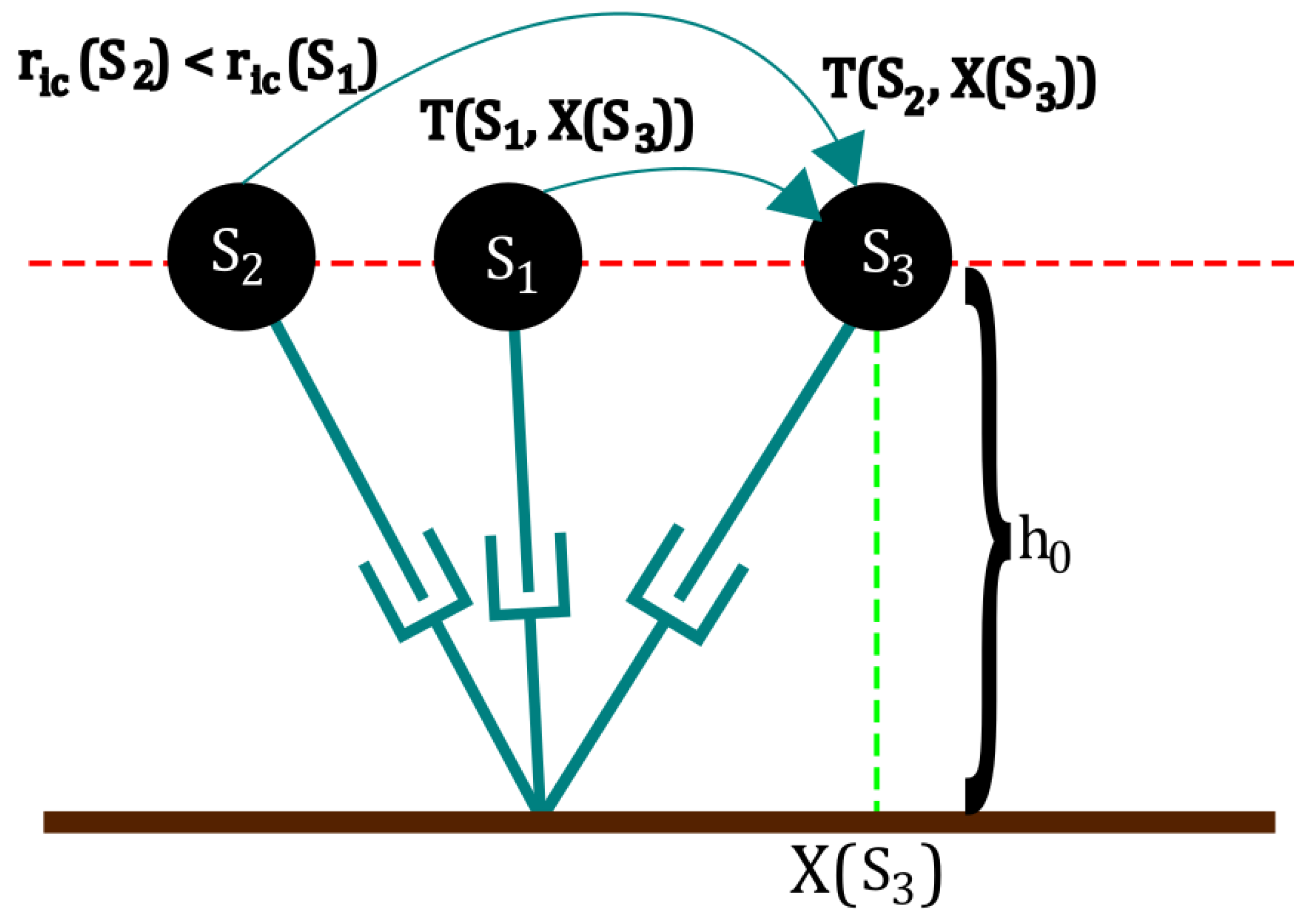

Figure 1 shows a visual explanation of the basis of the PSV criterion.

Given an initial distance to the capture point , on which is the set of initial conditions (positions and velocities) of the robot, and a fixed set of actuations , the robot will drive itself to a given configuration , defined by a fixed distance from the support point , in a given time . This movement is ruled mostly by the exponential diversion of the capture point and thus the passive joint. In this situation, for a second, closer capture point position , the robot will have a time to drive itself to the same configuration using an actuation . Consequently, if the robot can perform the same step, using a higher actuation , we will have and consequently finish the step with a capture point closer than with the previous actuation.

If a possible step exists that reduces the capture point distance in the next step, then the following step that will further reduce it will also exist. Therefore, the robot can eventually bring itself to a full stop, in the absence of external disturbances. Conversely, if there is no possible step that can at least maintain the current distance to the capture point, then a fall is inevitable.
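The viability argument above can be sketched numerically under a linear inverted pendulum (LIP) simplification, in which the capture point diverges exponentially from the support point during the step. This is only an illustrative sketch: the actual PSV uses the complete robot dynamics, and the function names, the LIP model, and the candidate steps below are assumptions introduced here.

```python
import math


def cp_distance_after_step(d0, step_length, duration, com_height, g=9.81):
    """Capture-point distance from the new support foot after one step (LIP assumption)."""
    omega = math.sqrt(g / com_height)
    d_end = d0 * math.exp(omega * duration)  # exponential divergence during the step
    return abs(d_end - step_length)          # measured from the new support foot


def is_recoverable(d0, candidate_steps, com_height):
    """True if some candidate (step_length, duration) keeps or shrinks the distance."""
    return any(
        cp_distance_after_step(d0, length, duration, com_height) <= d0
        for length, duration in candidate_steps
    )


# Hypothetical case: same step length, executed quickly or slowly
fast = cp_distance_after_step(0.1, step_length=0.3, duration=0.3, com_height=0.9)
slow = cp_distance_after_step(0.1, step_length=0.3, duration=0.5, com_height=0.9)
print(f"fast step: {fast:.3f} m, slow step: {slow:.3f} m")
print(is_recoverable(0.1, [(0.3, 0.3), (0.3, 0.5)], com_height=0.9))
```

In this toy setting, completing the same step in less time (higher actuation) leaves the capture point closer to the new support foot, which mirrors the reasoning of the criterion.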

The PSV is a powerful stability and trajectory planning algorithm that guarantees the stability of the current step and the existence of all subsequent steps. Simultaneously, the PSV also aims at enhancing the step feasibility by optimizing a cost function that includes the maximum joint torques, the trunk inclination, and the maximum step length. Notably, other parameters are not needed, such as stepping time, which allows anticipation or delay in contact with the floor as needed to guarantee stability. This maintains the generality of the method while increasing the robustness to external disturbances. However, the main drawback of the method lies in the multistage optimization problem that must be solved to plan the trajectory and check the recoverability of the current step, thus rendering its application in real time impossible with current technology.

2.2. Robot Model

In this work, two different biped robot models were used to assess the capability of the proposed method to work with different bipeds and the generalizability of each set of trained classifiers to work with different models. The parameters of each biped can be seen in Table 1 and Table 2. While the first represents an adult-size full body exoskeleton, the second represents the parameters of a small-sized robot.

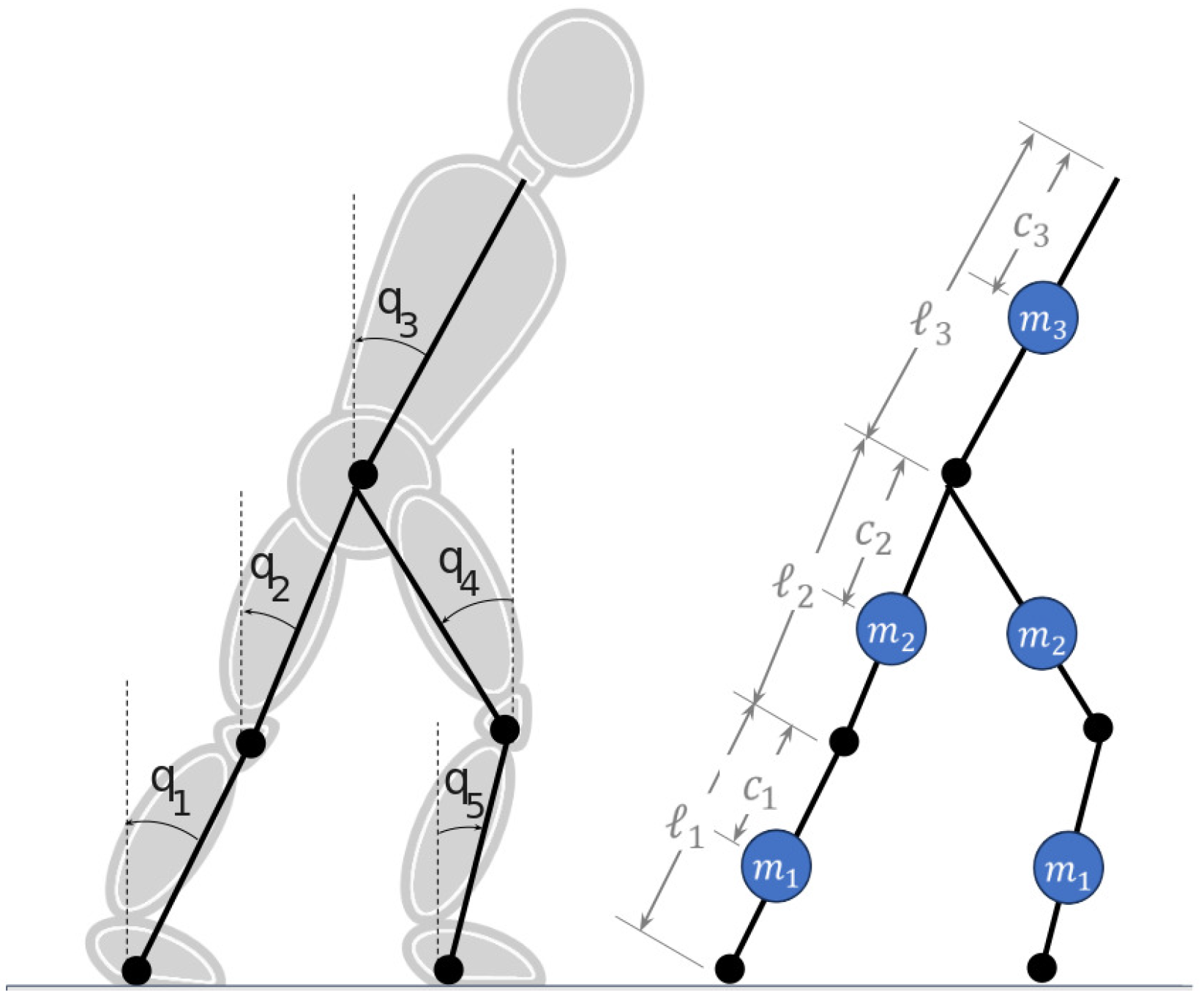

Both models are modified versions of the classic 5-segment robot with point feet RABBIT [11]. They consist of five segments with known center-of-mass positions. The angles are defined with respect to the vertical as shown in Figure 2. All sign conventions follow the trigonometric circle convention. Motion is limited to the sagittal plane, and thus there is no collision between parts of the robot. Also, the feet, as in the original RABBIT model, are point-like and have a collision box with the ground. Moreover, the robot is modular, with the mass, length, CoM position, and inertia of segments 1 and 2 being equal to those of segments 4 and 5. Finally, segment 3 models the torso of the robot, which contains most of the mass and thus governs the CoM position.

The PSV method was implemented as described by Rossi et al. in [10], and a set of simulations was performed to map the initial and end-step conditions of the biped for each initial configuration. The result of the algorithm, that is, whether or not the robot managed to reduce the distance to the capture point (i.e., the step is recoverable), is also recorded for later analysis.

The set of all possible initial conditions for the robot is defined by three constraints. First, the front leg and the torso must not initially be bent backward to prevent a step back from the robot. For the same reason, all joint velocities must be negative according to the angle convention presented in Figure 2. Second, the internal angles between the robot’s thighs and legs must be positive but smaller than

(i.e., the leg cannot be bent forward). And third, both feet must be touching the ground at the beginning of the step and the swing foot starting position must be behind the support foot. They are formulated mathematically using the following equations:

and

and

and

and

and

2.3. Datasets

Three increasingly difficult data sets were generated to assess the viability of the approach and to test the robustness and generalization of each classifier.

The first dataset presents an exploration of the complete set of joint angles for the robot while keeping the initial velocity of every joint constant at −1 rad/s for each point of the dataset. No constraints on the evolution of joint angles and velocities were imposed other than the mechanical limits of the robot and the maximum torque of the motors. This dataset allows for the exploration of which extreme conditions, which could arise after slipping or external influence, for instance, could still be recoverable. The value of −1 rad/s was chosen as a compromise between the fast and slow movements of the swing and stance legs, respectively. At the same time, we analyzed the theoretical initial n-step capturability of the system for each condition and verified whether the chosen value would result in a distribution across all of the theoretically recoverable zones, while minimizing points above . A finer discretization of the workspace is performed around the angles of each segment. The discretization step was set to 0.157 rad. A total of 30,370 different conditions were simulated with the exoskeleton model for this data set, of which 11.4% were recoverable steps.

For the second dataset, both position and velocity were explored. We maintained the same workspace for the position but increased the step to 0.5240 rad. As for velocity, we gradually increased the maximum angular velocity and compared the resulting theoretical n-step capturability of each condition. The maximum velocity was chosen so that most points would fall within the theoretically recoverable zone by the n-step criterion. The chosen range for velocities was from −1 to −10 rad/s, with 2 increments of 4.5 rad/s. However, since the possible configuration of the robot could differ largely from the ideal scenario on which the inverted pendulum model is based, and there was a large excursion of the center of mass both horizontally and vertically, most of the points would result in a fall. A total of 67,068 different conditions were simulated with the robotic model, of which 4.6% were recoverable steps.

These datasets were used to assess the effect of the dataset on the training and the sensitivity to unbalanced data. By analyzing their combined results, we explore how important initial velocity can be for the overall performance of the classifier or if the position mostly dictates the recoverability.

The third dataset was chosen to better represent the operation point of the biped robot. Three constraints were applied to the initial conditions of interest to obtain a balanced dataset, i.e., a dataset with a similar number of recoverable and non-recoverable steps. First, we limit the height of the CoM in the initial configuration to be above 74% of the CoM height that the human gait, resized to the robot’s parameters, would have. Second, we limit the initial vertical velocity of the CoM to be above −0.6 m/s. Third, we calculate the theoretical n-step capturability of each condition and remove all points that would theoretically need more than 3 steps to reach the capture point. Finally, since some of the points were removed by these three rules, we chose a finer step of 0.3140 rad for position exploration and 3 rad/s for velocity exploration. A total of 74,618 different conditions were simulated with the robotic model, of which 44.4% were recoverable steps.
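The three selection rules for the third dataset can be expressed as a simple filter. The sketch below is illustrative only: the function and argument names are hypothetical, and the n-step capturability value is assumed to be computed elsewhere.

```python
def keep_condition(com_height, com_vz, steps_to_capture, reference_com_height):
    """Apply the three dataset-3 rules to one initial condition.

    com_height           -- initial CoM height of the robot (m)
    com_vz               -- initial vertical CoM velocity (m/s)
    steps_to_capture     -- theoretical number of steps needed to reach the capture point
    reference_com_height -- human CoM height resized to the robot (m), assumed given
    """
    high_enough = com_height > 0.74 * reference_com_height
    not_falling_fast = com_vz > -0.6
    capturable = steps_to_capture <= 3
    return high_enough and not_falling_fast and capturable


# Hypothetical conditions: (CoM height, vertical velocity, steps to capture)
conditions = [(0.50, -0.2, 2), (0.30, -0.2, 2), (0.50, -0.9, 1), (0.50, -0.1, 5)]
kept = [c for c in conditions if keep_condition(*c, reference_com_height=0.55)]
print(kept)
```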

2.4. Classification

We trained 11 different classifiers to predict whether a given step falls within either the stability region or the no-recovery zone for each dataset. They are: Naive Bayes (NB), Logistic Regression (LogReg), k-Nearest Neighbors (KNN), Support Vector Machine with Linear Kernel (SVM-L), Support Vector Machine with Radial Basis Function Kernel (SVM-RBF), Decision Tree (DT), Random Forest (RF), Adaboost (ADA), Quadratic Discriminant Analysis (QDA), Linear Discriminant Analysis (LDA), and Multi-Layer Perceptron (MLP). They were selected to explore various classification approaches, spanning from linear to non-linear, parametric to non-parametric, and generative to discriminative solutions [12,13]. In addition, we introduced an ensemble approach that consolidates the outputs generated by each individual classifier. Except for MLP, all of the preceding methods are combined using the stacking technique [14]. Since we are only interested in real-time and simple implementations, we did not consider any deep-learning-based approaches.

The input feature vector comprises the positions and velocities of the five segments illustrated in Figure 2; the center-of-mass (CoM) position and velocity vectors; and the position of the capture point relative to the support foot. This approach indirectly incorporates robot-model-related information without the necessity of including mass or inertia distribution, which would tie the solution to a specific model. Due to the prevalence of unstable conditions stemming from extreme robot configurations, resampling was essential for training the classifiers in datasets 1 and 2. The Synthetic Minority Oversampling Technique (SMOTE) with 9 neighbors was employed to balance these datasets [15].
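The core idea of SMOTE, interpolating between a minority sample and one of its nearest minority neighbors, can be sketched as follows. This is a simplified standard-library illustration rather than the implementation used in the study; the function name `smote` and the toy points are assumptions.

```python
import math
import random


def smote(minority, n_new, k=9, seed=0):
    """Create n_new synthetic minority samples by neighbor interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbors of the base sample (excluding itself)
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbors)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


# Toy minority class with two features per sample
recoverable = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote(recoverable, n_new=10, k=2)
print(new_points[:3])
```

Because every synthetic point lies on a segment between two existing minority samples, the oversampled class stays inside the region already covered by the recoverable steps.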

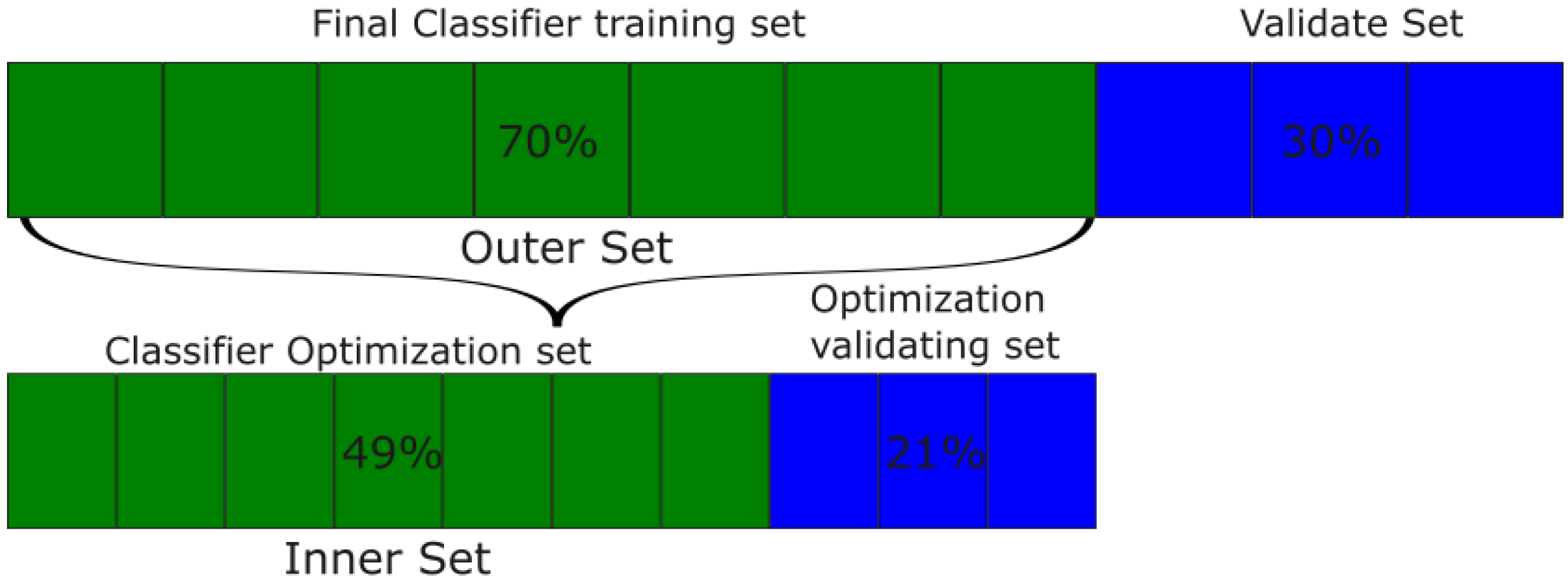

2.5. Model Training and Validation

The nested holdout validation strategy was implemented to find the optimal hyperparameters of each classifier. In total, 70% of the data (Outer Set) was set aside for the optimization of the hyperparameters. From this subset, 70% was used to train each classifier with each hyperparameter combination in a grid search, while the remaining 30% was used to test each classifier and choose the best one. After finding the best hyperparameters, each classifier is retrained on the entire Outer Set, and the remaining data, referred to as the Validate Set, are used to compute the final score of each method.

Figure 3 summarizes the data distribution for each stage. Each of the 11 classifiers follows the same procedure.
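The data partition of the nested holdout strategy can be sketched as an index split. The sketch below is a minimal, unstratified illustration; the function name and the use of a plain random shuffle are assumptions, not details taken from the study.

```python
import random


def nested_holdout(n_samples, outer_frac=0.7, inner_frac=0.7, seed=0):
    """Split sample indices into train, test (both inside the Outer Set) and Validate Set."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_outer = int(round(outer_frac * n_samples))
    outer, validate = indices[:n_outer], indices[n_outer:]
    n_train = int(round(inner_frac * len(outer)))
    train, test = outer[:n_train], outer[n_train:]
    return train, test, validate


train, test, validate = nested_holdout(1000)
# The grid search fits on `train` and selects hyperparameters on `test`;
# the final model is refit on train + test and scored on `validate`.
print(len(train), len(test), len(validate))
```

With 1000 samples, this yields 490 training, 210 test, and 300 validation indices, i.e., 49%, 21%, and 30% of the data.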

It is reasonable to expect that, under normal conditions, undisturbed robotic gait will mostly comprise recoverable points. Nonetheless, it is important that the classifiers are able to identify when the robot has exited this stability zone and should increase gait robustness or prepare for a fall. In this scenario, the prevalence of the stable class differs between the training data and real use. Therefore, the scoring method for the classifiers should be independent of class prevalence so that we do not introduce a bias in our training. In this way, performance in training and in real use should be the same. We must therefore be careful in selecting the metric used for hyperparameter optimization; e.g., in [16] it is explained why the use of the f1-score could lead to problems if there is a large difference between the prevalence of the stable class in training and in application. In our case, we used the balanced accuracy since it is not affected by the prevalence of the classes, so there is no risk of obtaining a classifier biased in favor of one of the two classes. Once the hyperparameters for the corresponding classifier are optimized, each classifier is run (for each dataset) and different metrics are calculated: balanced accuracy, ROC AUC, f1 score, average precision, precision, recall, specificity, and negative predictive value (NPV) [17], as well as the time taken for a prediction for trained classifiers.
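The prevalence argument can be checked with a small worked example. The sketch below computes several of the listed metrics from confusion-matrix counts and applies them to a hypothetical classifier with fixed recall (0.9) and specificity (0.8) evaluated at two different class prevalences; all counts are invented for illustration.

```python
def metrics(tp, tn, fp, fn):
    """Confusion-matrix metrics, with 'positive' meaning a recoverable step."""
    tpr = tp / (tp + fn)  # recall / sensitivity
    tnr = tn / (tn + fp)  # specificity
    return {
        "recall": tpr,
        "specificity": tnr,
        "balanced_accuracy": (tpr + tnr) / 2,
        "precision": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),
    }


# Same recall and specificity, different prevalence of the positive class
balanced = metrics(tp=450, tn=400, fp=100, fn=50)   # 50% positives
skewed = metrics(tp=45, tn=760, fp=190, fn=5)       # 5% positives
for name in ("balanced_accuracy", "f1"):
    print(name, round(balanced[name], 3), round(skewed[name], 3))
```

The balanced accuracy is identical in both cases, whereas the f1 score drops sharply when positives become rare, even though the classifier itself has not changed.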

In the biped gait framework, falsely identifying a condition as belonging to the stability region can lead to damage to the device or, in the case of exoskeletons, to the wearer. On the other hand, falsely identifying a condition as part of the no-recovery zone would lead to increased computational costs as the algorithm would try to increase robustness of the gait to recover from instability or prepare to minimize damage in an eventual fall. Since misidentification is expected to occur around the boundary of regions, increasing the robustness of gait on a false negative (FN) can lead to faster recovery and convergence towards a more stable region in a situation that, while still stable, would be approaching an irrecoverable situation. On the other hand, not trying to do so in a false positive (FP) could distance the robot even further from the boundary, resulting in a completely irrecoverable situation and unavoidable fall. For this reason, while neither is ideal and both should be avoided, FPs are more problematic.

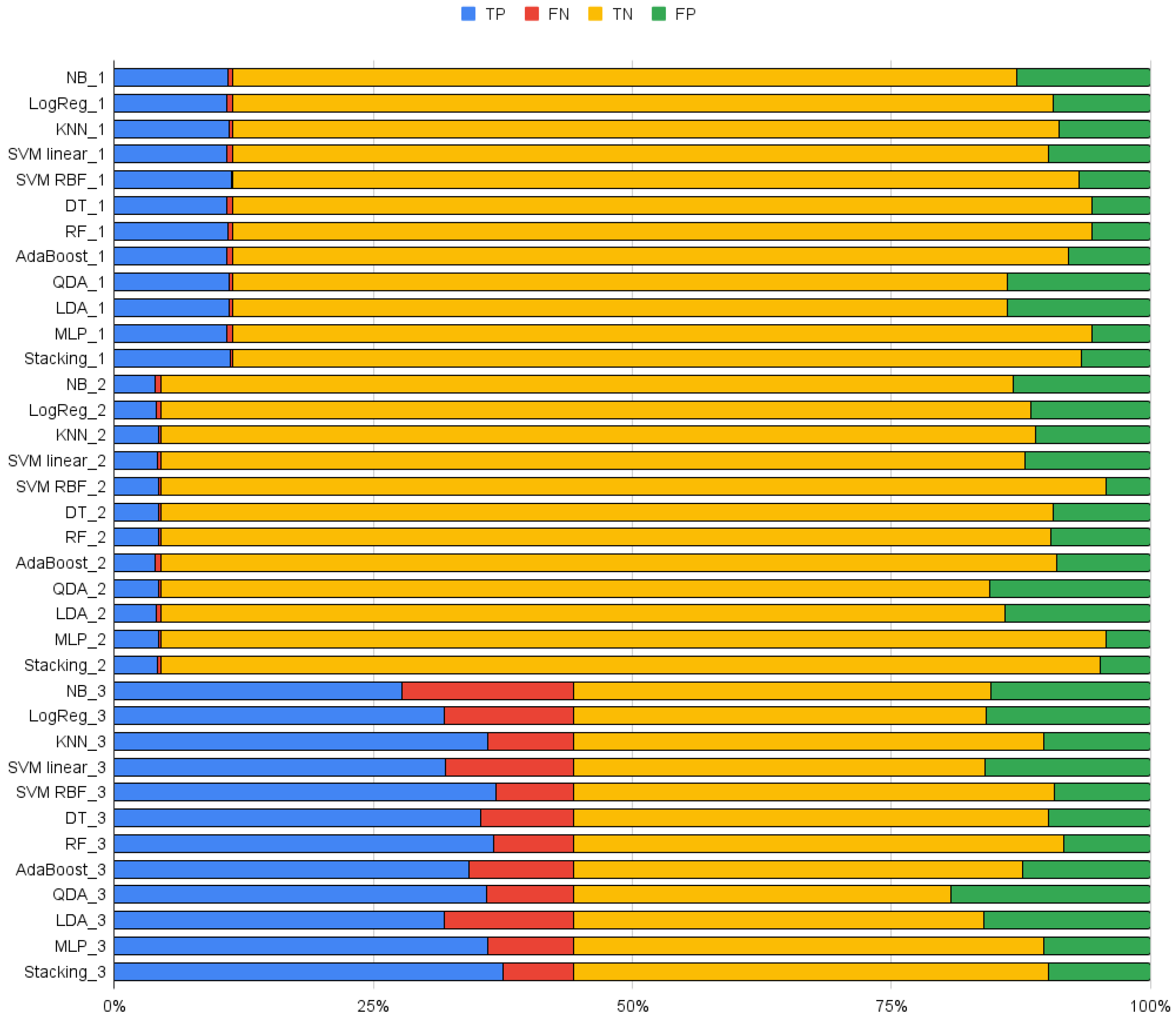

Finally, an out-of-sample evaluation was performed by running the classifiers trained on each dataset on the validation set of every data set and comparing the percentage of TP, TN, FP, and FN within each data set. Since each dataset has different prevalences of positive and negative classes, the percentage of each prediction is given to simplify a direct comparison. This way, we can verify whether models hold their performance when applied to datasets other than those on which they were trained and, thus, whether the classifier could be generalized for a broader range of robots or must be model specific.
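The relation between outcome percentages and per-class rates can be sketched as follows. The helper names and the toy labels are hypothetical; the sketch only illustrates how percentages of TP, TN, FP, and FN over a whole data set map to TPR and TNR.

```python
from collections import Counter


def outcome_percentages(y_true, y_pred):
    """Percentage of TP, TN, FP and FN over all samples (1 = recoverable)."""
    labels = {(1, 1): "TP", (0, 0): "TN", (0, 1): "FP", (1, 0): "FN"}
    counts = Counter(labels[(t, p)] for t, p in zip(y_true, y_pred))
    total = len(y_true)
    return {name: 100.0 * counts[name] / total for name in ("TP", "TN", "FP", "FN")}


def rates_from_percentages(pct):
    """Recover TPR and TNR from the outcome percentages."""
    tpr = pct["TP"] / (pct["TP"] + pct["FN"])
    tnr = pct["TN"] / (pct["TN"] + pct["FP"])
    return tpr, tnr


y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]
pct = outcome_percentages(y_true, y_pred)
print(pct, rates_from_percentages(pct))
```

Because the percentages are normalized by the whole data set, the same FP percentage corresponds to different TNR values in data sets with different class prevalences.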

2.6. Robotic Simulation Validation

Finally, to validate the results obtained with the training of the classifiers on the PSV output, a numerical simulation of the robotic model described in Table 2 and used for datasets 2 and 3 was performed. MATLAB version 9.14.0 (R2023a) (The MathWorks Inc., Natick, MA, USA) was used in this step. At the end of each step, the procedure described in [18] was used to compute the new states of the robot post impact.

A motion planner was implemented as described in [19] to control the robot states. The states controlled in this study are:

Torso inclination with respect to the vertical ();

Vertical position of the center of mass ();

Both vertical and horizontal position of the swing foot ().

Similar to [18], our robot employs Bézier curves to interpolate and smooth the trajectories for motion planning. The use of Bézier curves allows one to accommodate initial derivatives at the beginning of the step, thus minimizing required torque, while at the same time having a controlled evolution to the desired end condition. In this study, the torso reference was kept vertical, and CoM height was kept constant at a height equivalent to the human CoM resized to the robot’s dimensions. Swing foot height initially follows the derivative resulting from the impact dynamics and is then kept at a constant height before being projected to the ground. Finally, on each iteration of the control loop, the divergence of the capture point position at the end of the step is estimated, based on its current position and the planned step duration. The Bézier points are then updated based on the new prediction, to smooth the step trajectory at a fixed distance from it.
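How a Bézier curve can honor an initial derivative while converging smoothly to a target can be sketched with De Casteljau's algorithm. The cubic degree, the control-point placement, and the numeric values below are assumptions chosen for illustration and are not taken from the planner used in the study.

```python
def bezier(control_points, s):
    """Evaluate a 1-D Bézier curve at s in [0, 1] with De Casteljau's algorithm."""
    pts = list(control_points)
    while len(pts) > 1:
        pts = [(1 - s) * a + s * b for a, b in zip(pts, pts[1:])]
    return pts[0]


def control_points_with_initial_slope(y0, dy0, y_end, duration, degree=3):
    """Control points that start at y0 with slope dy0 and settle at y_end."""
    # For a degree-n curve over [0, duration], dy/dt at t = 0 is n * (P1 - P0) / duration
    p1 = y0 + dy0 * duration / degree
    # Repeating the end value makes the curve arrive with zero slope
    return [y0, p1] + [y_end] * (degree - 1)


# Hypothetical swing-foot height: leaves the ground at 0.5 m/s, settles at 0.1 m
points = control_points_with_initial_slope(y0=0.0, dy0=0.5, y_end=0.1, duration=0.4)
trajectory = [bezier(points, i / 10) for i in range(11)]
print([round(y, 3) for y in trajectory])
```

Updating the control points at every control iteration, as described above, then amounts to recomputing this list with the latest prediction and re-evaluating the curve.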

The simulation was repeated with increasing levels of disturbance, arising from model mismatch, sensing noise, terrain irregularities, and pushes. For model mismatch, a random difference of up to 50% was added to or subtracted from the robot model but not the controller model. The mass, inertia, and center of mass positions were varied. Encoder sensing noise was modeled as the sum of two Bernoulli sequences, one positive and one negative, with a 99% probability of being kept at zero, while tachometer noise was modeled as Gaussian noise. Finally, both the push and the terrain inclination were sequentially increased until the control could no longer stabilize the robot, leading to a fall. In all cases, the disturbance was introduced in the 5th step, to give the robot time to reach steady state, and was kept until the 50th step.
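The sensing noise models described above can be sketched as follows. The noise amplitude, the standard deviation, and the interpretation that each Bernoulli sequence independently stays at zero with 99% probability are assumptions made for this illustration.

```python
import random


def encoder_noise(n, amplitude, p_zero=0.99, seed=0):
    """Sum of a positive and a negative Bernoulli sequence (assumed independent)."""
    rng = random.Random(seed)
    noise = []
    for _ in range(n):
        positive = amplitude if rng.random() > p_zero else 0.0
        negative = -amplitude if rng.random() > p_zero else 0.0
        noise.append(positive + negative)
    return noise


def tachometer_noise(n, sigma, seed=0):
    """Zero-mean Gaussian noise with standard deviation sigma."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, sigma) for _ in range(n)]


# Hypothetical magnitudes: one encoder count of 0.001 rad, 0.05 rad/s velocity noise
enc = encoder_noise(10000, amplitude=0.001)
tach = tachometer_noise(10000, sigma=0.05)
print(sum(v != 0.0 for v in enc), "non-zero encoder samples out of", len(enc))
```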

We then proceeded to use the best performing classifiers to predict the recoverability of each step using only the initial conditions of each step.

3. Results

3.1. Real-Time Performance

One of the motivations of this work is to prove that it is possible to obtain an accurate prediction of the PSV criterion in real time. Although in this study only the stability evaluation of the PSV is being reproduced and not the trajectory planning, all of the proposed methods managed to reduce the computation time of the PSV algorithm significantly, allowing for their real-time use. As expected, the neural network classifier requires more time due to the complexity of the model: near 5 ms per prediction when run on a Raspberry Pi 3 Model B (Raspberry Pi Foundation, Pencoed, Wales, UK). KNN and SVM-RBF took 1 ms and 800 μs, respectively. For the rest of the classifiers, the response is even faster. When it comes to the stacking classifier, the total time to compute an answer was close to the sum of the individual times of the involved methods, taking around 6 ms.

3.2. Classification

Table 3,

Table 4 and

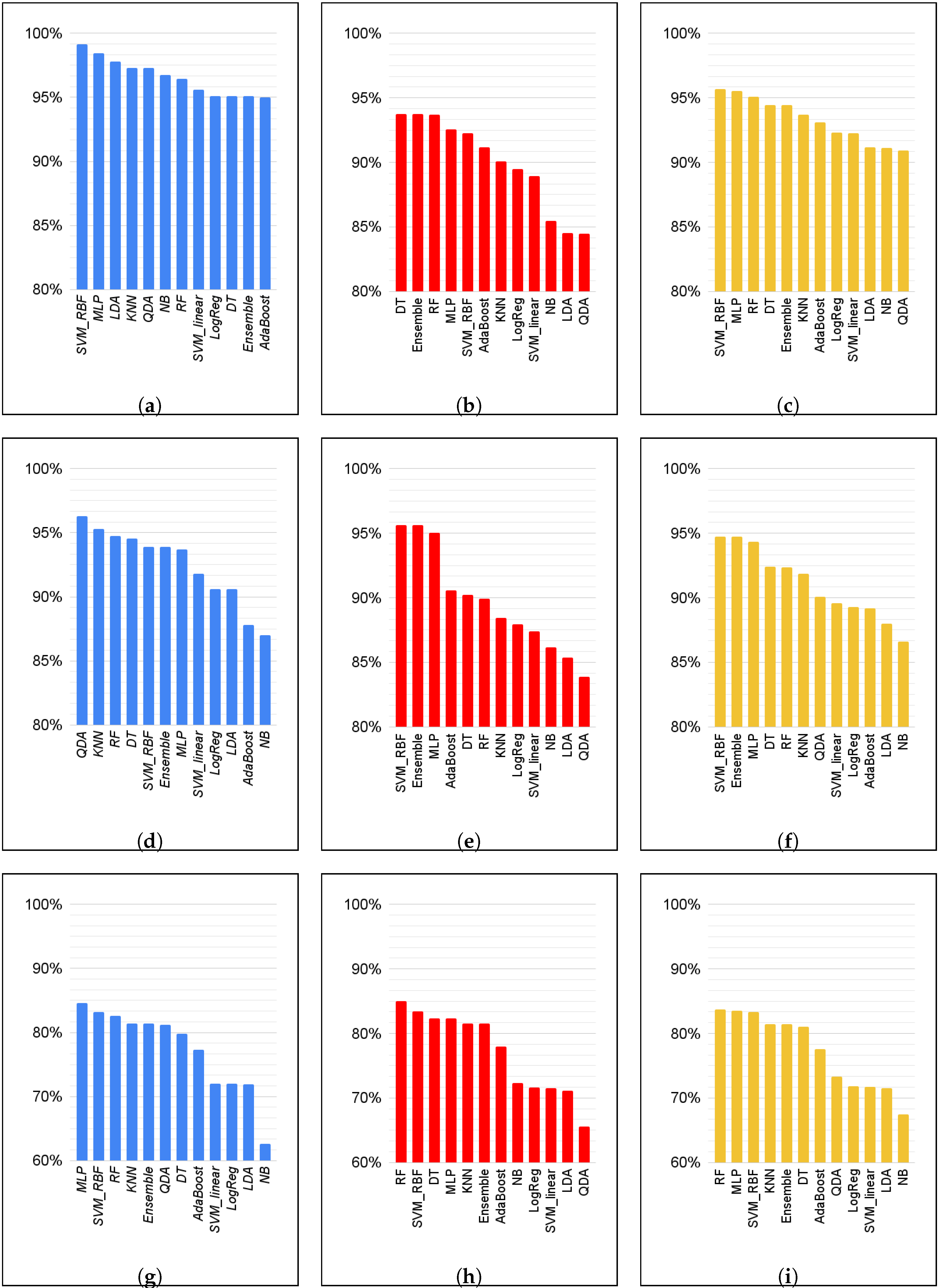

Table 5 summarize the results for each classifier for the validation of the set of data in datasets 1, 2 and 3, respectively. For the comparison of the different results, we will analyze the sensitivity, also known as true positive rate (TPR); the specificity, also known as true negative rate (TNR); and the balanced accuracy (BAcc). A visual representation of these metrics for each algorithm and dataset is given in

Figure 4. As a visual summary, in

Figure 5 we show the overall performance of each classifier.

We can see in Figure 4 that SVM-RBF performs well in all datasets and metrics; it usually ranks among the top three classifiers and is always among the top five. MLP, RF, DT, stacking, and KNN also consistently rank highly. In terms of TNR, DT, RF, and SVM-RBF obtained the best performances in dataset 3; SVM-RBF, stacking, and MLP performed better in dataset 2; and DT, RF, and stacking performed better in dataset 1. Moreover, we can see that there is a clear drop in performance in dataset 3, with the best three classifiers having around 93% TNR in dataset 1, over 95% in dataset 2, and around 84% in dataset 3. Looking exclusively at FP, this translates to around 6%, 4.37%, and 9.14% FP misidentification for datasets 1, 2, and 3, respectively. At the same time, we found that KNN also managed to retain high TNR in all three datasets. When it comes to TPR, SVM-RBF still performed well, scoring 99%, 94%, and 83%, respectively. We found, however, that LDA and QDA performed well in datasets 1 and 2 in this scoring but performed worse for TNR in dataset 3.

While SVM-RBF, MLP, DT, RF, KNN, and the stacking of methods had high balanced accuracies, LDA, QDA, SVM-L, NB, and LogReg performed significantly worse. In all cases, the TNR of these methods was worse, although at times they had high TPR, and their balanced accuracy was consistently worse than that of the other methods. When it came to dataset 3, the NPV of these methods was also lower, indicating that these algorithms found it difficult to properly separate regions within the data set.

All of the methods performed worse in dataset 3, which had a more focused distribution of points and contained more points around the boundary between stability and no-recovery zones, making the problem much harder. DT and the stacking method had the greatest performance reduction when compared to dataset 1. RF, MLP, and SVM-RBF were the algorithms that were more robust to this dataset, maintaining high balanced accuracy.

3.3. Stacking Performance

The use of the stacking strategy to combine the different classifiers did not improve the overall performance. In all cases, the stacking results were worse than the ones obtained with the best single algorithm. Most notably, SVM-RBF outperformed the stacking method for all conditions, except TNR in dataset 1, while having a significantly lower average prediction time.

3.4. Out-of-Sample Validation

Each trained classifier was tested on all data sets to compare the performance on a data set other than the one it was trained on. Table 6, Table 7 and Table 8 summarize the results.

When it comes to model translation, our results show that performance drops when a classifier trained on one model is applied to another, and that the drop is much greater if there is also a significant difference in the form of the data set. Table 6 and Table 7 show that there is a significant increase in either FP or FN for all classifiers, except when trained with dataset 3.

All classifiers trained in dataset 2 performed worse when applied to the other datasets. Out of the best performing ones, DT and RF saw the least significant performance reduction when applied to dataset 1. These algorithms had 4.7% and 3.9% more FP, and 0.4% and 0.3% more FN, respectively, for a total of 14% and 13.5% FP, or 84.1% and 84.8% TNR, and 0.6% and 0.5% FN, or 94.4% and 95.1% TPR, respectively. However, even if the performance decreases of other methods such as SVM-RBF and stacking were greater, since they had better performances in dataset 2, the overall performance was very similar with 14.3% FP and 0.8% FN for both. KNN and MLP saw a much more significant performance decrease, on the other hand. When applied to dataset 3, however, the performance reduction was much more pronounced, with more than 20% extra FP for every method.

When trained in dataset 1, only MLP managed to retain performance when tested with data set 2. It showed a small increase in both misidentifications, having 3.8% extra FP and 0.7% extra FN, for 89.1% TNR and 80.4% TPR. When applied to dataset 3, all methods had significant performance reduction.

Finally, when it comes to dataset 3’s trained classifiers, SVM-RBF and MLP performed well on both datasets. However, since the number of true positives in datasets 1 and 2 is smaller than in dataset 3, the small increase in misidentification resulted in a lower TPR. SVM-RBF had a total of 91.3% TNR and 68.9% TPR in dataset 1, and a similar 99% TNR and 61.1% TPR in dataset 2. MLP, on the other hand, had 88.6% TNR and 89.1% TPR in dataset 1, and 99% TNR and 66.5% TPR in dataset 2.

3.5. Simulation Results

KNN, DT, RF, and SVM-RBF were used to predict the stability of each step in increasingly unstable conditions. All four classifiers managed to successfully identify that the steps were recoverable in all conditions, with all degrees of disturbances. Terrain inclination varied from −3° (−5.2%) to 20° (36.4%), while a constant push of up to 7 N (12% of the robot’s weight) was applied to the robot’s hip.

In conclusion, the results obtained for all these experiments prove that it is possible to provide an embeddable real-time classifier that can replace the PSV analytical formulation in a real robot.

4. Discussion

Accurately and rapidly identifying unstable conditions that could lead to a fall is critical for ensuring the integrity of biped robots and exoskeletons alike. Machine learning techniques have become increasingly common due to their versatility, accuracy, and speed of prediction, managing to combine information from complex and varied sources to overcome the limitations of simpler models and the computational cost of complete dynamic models. Over the years, different groups have studied how to best combine various sensors to correctly classify the stability of the gait. Solutions have ranged from inertial measurement units on multiple body parts [20], to integrating trunk acceleration and CoP position [21], or using physical quantities derived from the robots’ own sensors [22]. Input features and training data are critical for the success of such techniques. As such, using complex models that can extract more information about the robots’ gait and including both kinematic and dynamic information in the input features could be the best approach to ensure the generalization and accuracy of ML-based techniques. Despite researchers’ best efforts, some conditions that may arise during walking are simply irrecoverable. For these, other groups have studied how to minimize potential damage to the system [23,24].

In our work, we thoroughly examined different classifiers to assess the viability of implementing the PSV stability criterion in a real-world robotic system under real-time constraints while also focusing on minimizing computational cost and energy consumption. Additionally, we investigated the generalizability of our findings across two biped models and three datasets. This is crucial because maintaining robustness against model parameters is essential for lower limb exoskeletons. While the number of degrees of freedom remains consistent across different users, variables like mass, segment center of mass positions, and inertia vary significantly. A stability classifier must therefore adapt to these variations to ensure safe exoskeleton operation for different users. To test this adaptability, we evaluated the classifiers by training and testing them on biped systems that have different inertial parameters but the same number of degrees of freedom.

All the classifiers investigated in this study significantly simplified the PSV algorithm complexity, reducing the prediction time to less than 5 ms, which is adequate for real-time applications. Since robotic systems often operate at sampling frequencies of 1 kHz or higher, it is essential to note that this prediction only needs to run once per step. In this scenario, it is possible to assess stability separately and in parallel with trajectory planning computation and execution.

While the joint control loop could be run at 1 kHz, the step period could range from 400 ms to 1 s depending on the biped robot size. In this case, the 0.8 ms classification delay shown by the SVM-RBF corresponds to less than 0.25% of the stepping time. Furthermore, our simulations demonstrated that the strategy is robust to model mismatch, sensing errors, and light disturbances in both the terrain inclination and pushes, further validating the model’s efficacy. In terms of prediction time, SVM-RBF performed better, as its lower computational time with respect to MLP allows the predicted stability of several steps ahead to be computed when planning the trajectory.

Among the classifiers, SVM-RBF, MLP, RF, DT, and KNN obtained the best results, consistently ranking at the top for the TPR, TNR, and BAcc metrics. The performance of the joint method of stacking all classifiers was similar to that of the highest performing individual classifiers. Because of its higher computational cost, the stacking method was excluded from further analysis.

SVM-RBF and MLP demonstrated higher recall and specificity than the other methods. KNN, however, consistently produced more false positives (FP), as shown by its lower precision and specificity, despite its high recall and low number of false negatives (FN). It is important to note that, in this application, FP are much more harmful than FN. While an FN would induce additional resource use by the robot to increase step robustness (e.g., by lowering the center of mass and increasing step length and cadence for a few steps), an FP could result in a fall, possibly damaging hardware or, in the case of exoskeletons, injuring the wearer.
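For reference, the metrics discussed above follow directly from the four confusion-matrix counts. The sketch below assumes that the positive class is "step predicted as recoverable", which matches the reading that an FP is an unrecoverable step labeled as safe. The function name and the example counts are hypothetical and are not results from this study.

```python
def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute TPR (recall), TNR (specificity), precision, NPV, and BAcc.

    Assumption: positive = step predicted as recoverable.
    A ratio with a zero denominator is returned as NaN.
    """
    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else float("nan")

    tpr = ratio(tp, tp + fn)  # recall: recoverable steps correctly identified
    tnr = ratio(tn, tn + fp)  # specificity: unrecoverable steps correctly identified
    return {
        "TPR": tpr,
        "TNR": tnr,
        "precision": ratio(tp, tp + fp),  # reliability of a "recoverable" prediction
        "NPV": ratio(tn, tn + fn),        # reliability of an "unrecoverable" prediction
        "BAcc": (tpr + tnr) / 2.0,        # balanced accuracy
    }


if __name__ == "__main__":
    # Made-up counts, for illustration only.
    for name, value in confusion_metrics(tp=90, fp=20, tn=80, fn=10).items():
        print(f"{name}: {value:.3f}")
```

Because FP are the costly error here, precision and TNR are the two values to watch: both fall as the FP count grows, while recall is unaffected by it.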

KNN, SVM-RBF, DT, and RF demonstrated excellent performance when dealing with distinct boundaries between classes (recoverable and non-recoverable zones). KNN’s non-parametric nature allows it to thrive on local data distributions, making it effective for well-separated classes. DT and RF excel in partitioning informative features recursively, accommodating non-linear relationships and capturing interactions among features, thereby leveraging clear data separation. Meanwhile, SVM-RBF’s capability to create non-linear decision boundaries by mapping the feature space to higher dimensions becomes advantageous when distinct and well-defined regions exist within the feature space, aligning with our well-separable recoverable and non-recoverable zones. The overall high performance of these four methods indicates a clear separation between classes in our data, suggesting the presence of a basin where the trajectory planning algorithm should focus to maximize gait stability and ensure the recoverability of the next step.

In contrast, LDA and SVM-L seek linear decision boundaries for classification, explaining their poorer performance in classifying non-linear or complex relationships between features and classes. However, MLP’s high flexibility in mapping interrelations between features and classes enables it to handle high-dimensional, non-linear, and complex data by approximating any function mapping inputs to outputs. This flexibility allows MLP to maintain high precision and NPV when other methods fail to do so.

Regarding model adaptation, our findings suggest that it is feasible to maintain or even enhance the overall performance of classifiers trained with different models, provided the appropriate classifier is selected for the task and the training data are carefully selected. However, performance consistently diminished when classifiers were evaluated using dataset 3. This result is expected as dataset 3 places greater emphasis on the boundary region. Consequently, the data from dataset 1 or dataset 2, which are more general and lack detailed information around the boundary, failed to adequately train the algorithm to accurately identify this boundary. However, when the training data were concentrated around the boundary and subsequently the test was expanded to include a broader dataset, performance became comparable, regardless of whether datasets 1 or 2 were used.

The strong performance of KNN, DT, RF, and SVM-RBF classifiers suggests that the feature space could be divided into different non-overlapping regions, which could be considered by the trajectory planning algorithm. One possibility would be ranking the regions by feature stability and ensuring that the step ends in the geometric center of these regions. Additionally, to reduce the number of FN, the robot could be programmed to take action only if the last two or more steps were predicted to be unstable. However, this would result in slower reaction time to actual unstable steps, potentially leading to a fall.
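The rule of acting only after two or more consecutive unstable predictions can be sketched as a small counter. This is an illustrative sketch under stated assumptions, not the controller used in the study: the class name `UnstableStepFilter` and its parameter `required_consecutive` are hypothetical.

```python
class UnstableStepFilter:
    """Trigger a recovery action only after N consecutive unstable predictions.

    Hypothetical helper: `required_consecutive` is the number of successive
    steps that must be predicted unstable before the robot reacts.
    """

    def __init__(self, required_consecutive: int = 2) -> None:
        if required_consecutive < 1:
            raise ValueError("required_consecutive must be at least 1")
        self.required_consecutive = required_consecutive
        self._unstable_run = 0  # length of the current run of unstable predictions

    def update(self, predicted_stable: bool) -> bool:
        """Record one per-step prediction; return True if action should be taken."""
        if predicted_stable:
            self._unstable_run = 0
        else:
            self._unstable_run += 1
        return self._unstable_run >= self.required_consecutive


if __name__ == "__main__":
    step_filter = UnstableStepFilter(required_consecutive=2)
    predictions = [True, False, True, False, False, True]  # made-up sequence
    print([step_filter.update(p) for p in predictions])
```

With `required_consecutive=2`, an isolated unstable prediction is ignored, which suppresses single false alarms, but a genuinely unstable step is acted on one step late, which is the trade-off noted above.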

Concerning the robot model, it is important to highlight that the PSV method opts for a detailed model over a simplified one for its multi-stage optimization. This choice is crucial because the datasets used contain configurations that deviate significantly from normal walking patterns and undergo substantial changes during recovery steps. A simplified model, such as the inverted pendulum, would fail to accurately predict most outcomes in this scenario. Despite imposing restrictions on positions and velocities based on the theoretically recoverable limit of step capture, a considerable number of points remain unrecoverable. This observation implies that our approach is better at predicting step recoverability after major disruptions in walking patterns.

Future studies should leverage the trained classifiers to evaluate their effectiveness across various scenarios, including 3D movements in more sophisticated models. Investigating the impact of disparate models, sensor inaccuracies, and terrain inconsistencies on prediction accuracy using a real robot would validate the promising results observed in simulations. It is crucial to highlight the importance of integrating prediction and trajectory planning. This integration can be enhanced by incorporating various clusters within the feature space into trajectory planning, aligning them with stability predictions. This approach ensures a more comprehensive trajectory planning process, optimizing the robot’s performance in varying conditions. Moreover, since each prediction takes only a fraction of a millisecond, once it is determined that the current step is stable, the prediction could be used to plan future steps, similarly to what the PSV algorithm achieves but in real time.