1. Introduction

The gaze function is a crucial biological feature of the human visual system. It enables human eyes to identify targets of interest in the environment and swiftly shift the gaze to them, placing these targets in the foveal region. In this way, humans obtain more detailed information about the targets of interest and less information about uninteresting regions [1,2,3]. Imitating the gaze function holds great significance for the advancement of fields such as human–robot interaction [4,5], autonomous driving [6], and virtual reality [7]. Moreover, imitating the gaze function in a humanoid eye perception system has the potential to filter redundant information from large volumes of data, optimize the use of computing and storage resources, enhance scene comprehension, and improve perception accuracy. This imitation is an important step in advancing humanoid eye intelligent perception.

The primary work in imitating the eye gaze function is to research the eye gaze mechanism. The mechanism of eye movement, which plays a key role in the gaze function, has been widely studied. Marg introduced electro-oculography (EOG) as a method for measuring eye movement by obtaining eye potentials through electrodes around the eyes [8]. However, this contact measurement method suffers from low precision and poor portability. Subsequently, the introduction of video-oculography (VOG) offered a more accurate and portable non-contact eye movement measurement method [9]. VOG used cameras mounted on wearable devices to capture the position of the pupil. Nevertheless, this method lacked a stimulus-presenting monitor and recording device, preventing independent measurements. The design of the all-in-one eye-movement-measuring device overcame this limitation, enabling independent and accurate eye movement measurement [10]. Through the gradual improvement of eye-movement-measurement devices, the factors affecting the eye movement mechanism have been studied [11,12,13,14]. Those studies have found that factors such as gender differences [12], cross-cultural differences in facial features [13], and stimulus contrast and spatial position differences [14] contribute to saccadic pattern differences. Now, many researchers are interested in applying our increasingly robust understanding of the eye movement mechanism to the structural design and motion control of humanoid eye perception systems, which can imitate the gaze function.

In the structural design field, the methods can be divided into two categories. The first method imitates the physiological structure of the extraocular muscles. This usually involves using a spherical link structure [15], a spherical parallel mechanism [16], or a parallel mechanism driven by multiple flexible ropes [17] to design a device that achieves a highly realistic imitation of the physiological structure of the human eye. However, researchers face difficulties in reducing the sizes of these devices. With that goal, some studies have proposed that super-coiled polymers (SCPs) [18] or pneumatic artificial muscles (PAMs) [19] can be used to replace rigid materials or ropes in the design of a device. However, achieving precise control of these devices has remained challenging. The second method imitates the effect of the actual motion of the eye. This method usually uses servo motors as the power source, which can reduce the difficulty of controlling the device. Fan et al. [20] designed a bionic eye that could tilt, pan, and roll with six servo motors. However, a rolling motion is generally not required for a device that imitates the gaze function [21]. Some studies have focused on the design of devices that can tilt and pan with four servo motors [22,23]. However, those four servo motors are not synchronized to reproduce the cooperative motion of human eyes. Thus, a device was designed that could tilt and pan with three servo motors [24]. However, this device lacked a camera position adjustment component. Because of potential assembly errors, it would have been difficult to ensure that the vertical visual fields of the two cameras were consistent, which affects gaze accuracy. Moreover, in a dynamic analysis [25,26] of this device, it was found that the torque of the servo motor responsible for tilting exceeded that of the other two servo motors, impacting the overall performance and efficiency. Therefore, further optimization of the structure is needed.

In regard to motion control, researchers have explored two distinct approaches. One approach is a motion-control strategy driven by a set target [22,27,28,29,30,31]. Mao et al. [28] proposed a control method that could be described as a two-level hierarchical system. This method could imitate the horizontal saccade of human eyes. Subsequently, a control method [30] was designed that employed a hierarchical neural network model based on predictive coding/biased competition with divisive input modulation (PC/BC-DIM) to achieve gaze shifts in 3D space. Although the effect of this control method is consistent with that of human eye movement, the neural network requires far more data than traditional algorithms. As an alternative, traditional control algorithms based on 3D or 2D information of a target have attracted attention [22,31,32]. For example, a visual servo based on 2D images was proposed to control the pose of each camera and achieve the fixation of a target [22]. Rubies et al. [32] calibrated the relationship between the eye positions on a robot’s iconic face displayed on a flat screen and the 3D coordinates of a target point, thereby controlling the gaze toward the target. The motion-control strategy driven by the set target has clear objectives and facilitates precise motion control. However, it falls short in imitating the spontaneous gaze behavior of humans and may ignore other key information, which makes it impossible to fully understand the scene. Another approach is a motion-control strategy driven by a salient point, which can make up for these shortcomings. Researchers have made significant progress in saliency-detection algorithms, including classical algorithms [33,34,35] and deep neural networks [36,37,38]. The results of saliency detection are increasingly aligned with human selective attention. Building on this foundation, Zhu et al. introduced a saccade control strategy driven by binocular attention based on information maximization (BAIM) and a convergence control strategy based on a two-layer neural network [39]. However, this method cannot simultaneously execute saccade control and convergence control to imitate the cooperative motion of human eyes.

Recognizing the limitations of the above work, we have proposed a design and control method of the BBCPS inspired by the gaze mechanism of human eyes. To address the issues of servo motor redundancy and the lack of camera position adjustment components in the existing systems, a simple and flexible BBCPD was designed. The BBCPD consisted of RGB cameras, servo motors, pose adjustment modules, braced frames, calibration objects, transmission frames, and bases. It was assembled according to the innovative principle of symmetrical distribution around the center. A simulation demonstrated that the BBCPD achieved a great reduction in energy consumption and an enhancement in braking response performance. Furthermore, we developed an initial position calibration technique to ensure that the state of the BBCPD could meet the requirement of the subsequent control method. On this basis, we propose a control method of the BBCPS, aiming to fill the gap in binocular cooperative motion-control strategies, driven by interest points in the existing systems. In the proposed control method, a PID controller is introduced to realize precise control of a single servo motor. A binocular interest-point extraction method based on frequency-tuned and template-matching algorithms is presented to identify interest points. A binocular cooperative motion-control strategy is then outlined to coordinate the motion of servo motors in order to move the interest point to the principal point. Finally, we summarize the results of real experiments, which proved that the control method of the BBCPS could control the gaze error within three pixels.

The main contributions of our work are as follows. (a) We designed and controlled the BBCPS to simulate the human eye gaze function. This contributes to deepening our understanding of human eye gaze mechanisms and advancing the field of humanoid eye intelligent perception. (b) Our designed BBCPD features a simple structure, flexibility and adjustability, low energy consumption, and excellent braking performance. (c) We developed an interest-point-driven binocular cooperative motion-control method, extending the research on control strategies for imitating human eye gaze. Additionally, we calibrated the initial position of the BBCPS via our self-developed calibration technique. This eliminates the need for repeated calibration in subsequent applications, improving the operational convenience of the BBCPS. Moreover, our proposed binocular interest-point extraction method based on frequency-tuned and template-matching algorithms enriches the current research in the field of salient point detection.

2. Gaze Mechanisms of Human Eyes

The movement of the eyeball is crucial to the gaze function. As shown in Figure 1, the eyeball is usually regarded as a perfect sphere, and its movement is controlled by the medial rectus, lateral rectus, superior rectus, inferior rectus, superior oblique, and inferior oblique [40,41,42]. These muscles contract and relax to perform different eye movements. The superior and inferior oblique muscles assist in the torsional movement of the eyeball. Torsional eye movements, characterized by minimal overall variability (approximately 0.10°), are an unconscious reflex and strictly physiologically controlled [21]. The superior and inferior rectus muscles rotate the eyeball around the horizontal axis, and the lateral and medial rectus muscles allow the eyeball to rotate around the vertical axis.

Vertical and horizontal movements of the eyeball are important for the line-of-sight shift in the gaze function [43], which refers to the process of shifting the current line of sight to the interest point through eyeball movements during visual observation. This process involves saccade and convergence. Saccade is a conjugate movement that can achieve the line-of-sight shift of human eyes in both horizontal and vertical directions. Convergence describes a non-conjugate movement of human eyes in the horizontal direction, where the two eyes move in opposite directions to help humans observe points at different depths. By coordinating saccade and convergence, the two eyes can shift their line of sight to any point of interest in three-dimensional space.
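The split between conjugate and non-conjugate movement can be made concrete with a small numerical sketch. It assumes that each eye's horizontal rotation is given as a signed angle in degrees with the same sign convention for both eyes; the function names and the "version/vergence" terminology are illustrative choices, not taken from the text above.

```python
def decompose_pan_angles(left_deg, right_deg):
    """Split two horizontal eye angles into a shared and an opposing part."""
    # Conjugate (saccade-like) component: both eyes rotate by this amount.
    version = (left_deg + right_deg) / 2.0
    # Non-conjugate (convergence-like) component: the eyes differ by this amount.
    vergence = left_deg - right_deg
    return version, vergence


def compose_pan_angles(version, vergence):
    """Rebuild the per-eye angles from the two components."""
    left = version + vergence / 2.0
    right = version - vergence / 2.0
    return left, right
```

For example, a left-eye rotation of 10° and a right-eye rotation of 4° correspond to a shared rotation of 7° plus an opposing rotation of 3° in each eye, which is why any horizontal target can be reached by combining the two movement types.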

To better understand the movement mechanism of the gaze function, we have created a schematic diagram of the human eye cooperative movement, shown in Figure 2. From a physiological point of view, the human eye changes from the gaze point

to the gaze point

through the coordination of saccade and convergence. We assume that the eye movement is sequential, and the process

is decomposable into

,

, and

. Specifically, the shift from the gaze point

to the gaze point

is first achieved through the horizontal saccadic movement

. The shift from the gaze point

to the gaze point

is then accomplished through the vertical saccadic movement

. In the end, the convergent movement

is employed to shift the gaze point

to the gaze point

.

3. Structural Design

Inspired by the gaze mechanism of human eyes, the mechanical structure of the BBCPD was designed. Its 3D model is shown in Figure 3. The device is composed of two RGB cameras, three servo motors, two pose adjustment modules, two braced frames, two calibration objects, a transmission frame, and a base. RGB cameras capture images, and servo motors act as the power source. Pose adjustment modules are used to accurately adjust the pose of cameras toward different desired locations, which increases the flexibility of the BBCPD. The transmission frame is designed to transmit motion. Calibration objects are used to calibrate the initial position of the BBCPD. Braced frames serve to ensure the suspension of the transmission frame, guaranteeing the normal operation of the upper servo motor. The role of the base is to ensure the stable operation of the BBCPD.

In the component design, the transmission frame and the base are designed as parallel symmetrical structures motivated by the stability of the symmetrical structure. The braced frame adopts an L-shaped structure because this is highly stable and its different ends can be used to connect various other components. The design inspiration for the pose adjustment module is derived from the screw motion mechanism and the turbine worm drive. Based on the former, the camera can be adjusted in three directions: front–back, left–right, and up–down. Simultaneously, inspired by the turbine worm drive to change the direction of rotation, the roll, pan, and tilt adjustment of the camera are skillfully realized. The top of the calibration object is designed as a thin-walled ring, as the circle center is easy to detect, facilitating the subsequent zero-position adjustment of the servo motor. Furthermore, lightweight and high-strength aluminum alloy is selected as the component material.

During the assembly of the components, the principle of symmetrical distribution about the center of the transmission frame is followed, although this sacrifices some biomimetic morphology compared to the classic principle of a symmetrical low center of gravity [2]. The torque required of the upper servo motor in the BBCPD is markedly smaller than that in a system assembled according to the symmetrical low center of gravity principle. This means that the power consumption is lower in the BBCPD. In addition, the rotational inertia of the upper servo motor load around the rotation axis in the BBCPD is smaller than that in the system assembled according to the symmetrical low center of gravity principle, so the BBCPD also has better braking performance. The detailed reasons are given in the following dynamic analysis of the upper servo motor.

In

Figure 4,

is the center of mass of the whole load, and

represents the vertical distance from

to the rotation axis. The rotation axis serves as the boundary, and the load is divided into upper and lower parts. The center of mass of the upper part is denoted as

, and the vertical distance from

to the rotation axis is defined as

. The symbol

is the center of mass of the lower part, and the vertical distance from

to the rotation axis is expressed as

.

(

i = 0, 1, …) represents the location of the motor, and

denotes the rotation angle of the motor at

. The mass of the upper part is represented as

,

represents the mass of the lower part, and

g means the acceleration of gravity.

According to the parallel-axis theorem, the rotational inertia of the whole load around the rotation axis

is given by Equation (1).

where

m represents the mass of the load of the upper servo motor, and

is the rotational inertia of the whole load around the center-of-mass axis.

Next, we conducted the force analysis on the motor and derived the torque of the load on the motor at

.

Thus, the torque of the motor at

could be obtained by combining Equations (1) and (2).

where

is the angular acceleration of the motor. According to Equation (3), it can be observed that as

and the difference between

and

decrease,

and

become smaller. In the BBCPD,

and the difference between

and

are close to 0. However, in the system assembled according to the symmetrical low center of gravity principle, both

and the difference between

and

are greater than 0.
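The effect of the center-of-mass offset on the upper servo motor can be illustrated numerically. The sketch below is not the paper's Equations (1)–(3), whose symbols are not reproduced here. It assumes a rigid load, the parallel-axis theorem for the inertia about the rotation axis, and a gravitational torque equal to mass × g × offset × sin(tilt angle). All function names and numeric values are hypothetical.

```python
import math

G = 9.81  # acceleration of gravity in m/s^2


def axis_inertia(j_center, mass, offset):
    """Parallel-axis theorem: inertia about an axis `offset` metres from the COM axis."""
    return j_center + mass * offset ** 2


def required_torque(j_center, mass, offset, alpha, theta):
    """Torque needed to accelerate the load and hold it against gravity.

    alpha: angular acceleration in rad/s^2
    theta: tilt of the COM offset away from the vertical, in radians
    """
    inertial = axis_inertia(j_center, mass, offset) * alpha
    gravity = mass * G * offset * math.sin(theta)
    return inertial + gravity


# Hypothetical load: 0.5 kg, 0.002 kg*m^2 about its own center-of-mass axis.
centered = required_torque(0.002, 0.5, 0.00, 10.0, math.radians(30))
low_cog = required_torque(0.002, 0.5, 0.05, 10.0, math.radians(30))
```

With these illustrative numbers, the centered layout needs only the inertial term, while the layout with a 50 mm offset needs additional torque for both the larger inertia and the gravity term. This matches the qualitative argument that a center of mass on the rotation axis reduces both the torque and the rotational inertia.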

Afterward, we performed stress–strain analyses on the BBCPD using software and refined the dimensions of the components. The initial position of the BBCPD was defined as the state that imitates the approximately symmetrical distribution of eyeballs about the midline of the face when humans gaze at infinity. In other words, the camera optical center coincides with the rotation center, and the cameras are parallel to each other. Finally, the spatial layout of the components is described below, from left to right.

The bottom of the left servo motor is installed at the bottom of the transmission frame, 64 mm to the left side of the transmission frame. Its shaft end is connected to the bottom of the pose adjustment module L, and the top of the pose adjustment module L is linked to the bottom of the left camera. The optical center of the left camera lies on the axis of the left servo motor shaft. The bottom of the right servo motor is installed at the top of the transmission frame, 64 mm to the right side of the transmission frame. Its shaft end is connected to the bottom of the pose adjustment module R, and the top of the pose adjustment module R is linked to the bottom of the right camera. The optical center of the right camera lies on the axis of the right servo motor shaft. The shaft end of the upper servo motor is connected to the right side of the transmission frame, 180 mm above the bottom of the transmission frame, and the axis of its shaft passes through the optical centers of the two cameras. The bottom of the upper servo motor is connected to the top of the braced frame. Braced frames are installed on the left and right sides of the base in opposite poses to make full use of the space. The left and right calibration objects are vertically fixed to the front of the base, located at 125 mm and 245 mm on the left side of the base, respectively. The plane they lie on is parallel to the planes containing the shafts of the three servo motors.

The BBCPD with three degrees of freedom can effectively imitate the cooperative motion of human eyes. Specifically, the left servo motor and the right servo motor drive the left camera and the right camera to pan, respectively, thereby realizing the imitation of horizontal saccade and convergence. The upper servo motor drives the left camera and the right camera to tilt simultaneously through the transmission frame to imitate the vertical saccade. The design fully considers the human eye gaze mechanism and provides hardware support for imitating the gaze function of human eyes.

4. Initial Position Calibration

The initial position calibration of the BBCPD is a crucial step for achieving the control of the BBCPS. Its purpose is to make the initial position of the BBCPD meet the requirements of the subsequent control method, namely that the camera optical center coincides with the rotation center and the cameras are parallel to each other. In addition, once the initial position is determined, the BBCPD does not need to be recalibrated during subsequent applications, saving time and resources. In the design of the BBCPD, we assume by default that the initial position is in line with the requirements of the subsequent control method. However, due to inevitable errors during the manufacturing and assembly processes, it is difficult to guarantee that the real BBCPD meets these requirements. Additionally, the zero-positions of the servo motors may not coincide with the ideal initial position of the real BBCPD. Therefore, we developed an initial position calibration technique for the BBCPD that determines the initial position by calibrating and adjusting the camera poses and the zero-positions of the servo motors.

4.1. Camera Pose Calibration and Adjustment

Camera pose calibration and adjustment refers to calibrating the rotation and translation parameters from the base coordinate frame to the camera coordinate frame, and then changing the camera pose using the pose adjustment module. The camera coordinate frame is a right-handed coordinate frame with the optical center as the origin and straight lines parallel to the length and width of the photosensitive plane as the horizontal axis and the vertical axis. The base coordinate frame is defined as a right-handed coordinate frame with the rotation center as the origin point, the horizontal rotation axis as the horizontal axis, and the vertical rotation axis as the vertical axis.

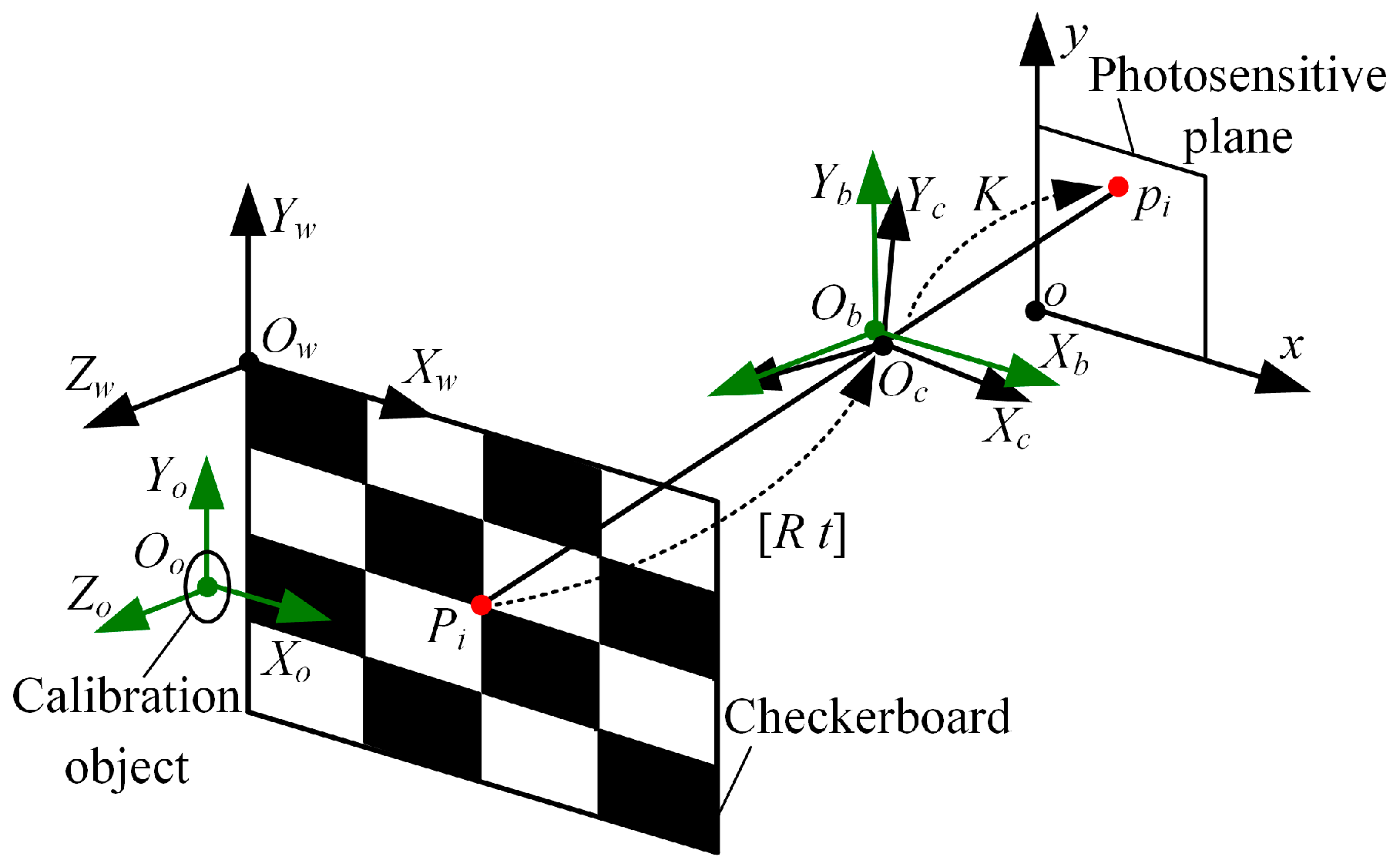

First, we calibrated the rotation parameters in order to adjust the camera poses so that the photosensitive planes of the two cameras are parallel to each other. Taking the left camera as an example, and with lens distortion corrected, the calibration principle of the rotation parameters is shown in Figure 5. The camera coordinate frame is denoted as

. The base coordinate frame is represented by

, and the calibration object coordinate frame is described by

. The original point

is the ring center of the left calibration object, and the plane

is the vertical center plane of the ring.

is the world coordinate frame. The original point

is set at the upper-right corner of the checkerboard. The horizontal and vertical directions of the checkerboard are the directions of the axis

and the axis

, respectively.

The checkerboard is placed against the calibration objects so that the plane

is parallel to the plane

. In the real BBCPD, the plane

is parallel to the plane

. Thus, by deriving the rotation relationship between the coordinate frame

and the coordinate frame

, the rotation parameters can be calibrated. According to the linear projection principle [44], the relationship between the 3D corner point

and the 2D corner point

is expressed via Equation (4).

where

is the intrinsic matrix,

is the scale factor,

is the rotation matrix, and

is the translation matrix. We use all corners of the checkerboard to solve

with known

,

, and

. Based on Equation (4), Equation (5) is obtained, and then

is calculated using the least-squares method.

where

n (

n > 4) is the number of corners. Due to the small errors caused by the manufacturing and assembly processes in normal cases, the absolute values of the parameters

,

, and

are generally no more than 90°. The rotation parameters

,

, and

can be uniquely calculated by using Equation (6) based on the rotation order of

.

According to the above principle, the rotation parameters of the right camera can be solved. The poses of the left and right cameras are changed by sequentially adjusting the tilting, panning, and rolling angles of the pose adjustment module L and the pose adjustment module R. The tilting, panning, and rolling angles are the corresponding negative rotation parameters. At this time, the camera coordinate frame is parallel to the base coordinate frame, and the photosensitive planes are parallel to each other.

Next, we calibrated the translation parameters to adjust the camera poses so that the camera optical center coincided with the rotation center [45]. Based on the above steps, the calibration principle of the translation parameters, taking the left camera as an example, is depicted in Figure 6. The camera coordinate frame at the initial position

is parallel to the base coordinate frame

.

represents the camera coordinate frame after motion. The end-of-motion coordinate frame at the initial position

is established by taking the line connecting the point

and the point

as the

-axis, the

-axis as the

-axis, and the point

as the origin. The end-of-motion coordinate frame after motion is denoted as

. The rotation angles of the camera around the

-axis and

-axis are

and

. The translation parameters in

,

, and

are denoted as

,

, and

.

Let

represent the transformation from the coordinate frame

to the coordinate frame

. Based on the transformation relationship between the coordinate frame

and the coordinate frame

in

Figure 6a, Equation (7) can be obtained.

where the expressions of

,

,

, and

are in Equation (8).

Equation (9) can be obtained by joining Equations (7) and (8).

where

can be solved using Equation (4), and

is the known rotation angle. Therefore,

and

can be calculated using Equation (10).

According to the above principle, partial translation parameters of the left and right cameras can be solved. The pose adjustment module L and the pose adjustment module R are adjusted in the left–right and front–back directions according to the corresponding negative calculated translation parameters. Upon completion of the adjustments, the remaining translation parameters in the vertical direction continue to be calibrated, which is similar to the principle of calibrating and .

We also take the left camera as an example. Based on the transformation relationship between the coordinate frame

and the coordinate frame

in

Figure 6b, Equation (11) can be obtained.

Therefore, the translation parameter

in the

direction is

where

is the translation component in the

direction of

, which can be solved using Equation (4). The translation parameters of the left and right cameras in the vertical direction are solved. Once the camera pose is adjusted through the adjustment module, it reaches the state where the optical center coincides with the rotation center.

4.2. Servo Motor Zero-Position Calibration and Adjustment

The calibration and adjustment of the zero-position of the servo motor refers to calculating the angle at which the zero-position of the servo motor rotates to the initial position, and then resetting the zero-position of the servo motor. This can ensure that the BBCPS returns to the initial position no matter what movement it performs.

Considering that the optical center coincides with the rotation center, the calibration principle of the angle for the left and upper motors is shown in Figure 7. The projection point of the ring center of the left calibration object in the left camera is

when the servo motors are at their zero-position. The projection point of the ring center of the left calibration object in the left camera is

when the servo motors are in the initial position of the BBCPS. The projection point of the ring center of the left calibration object in the left camera is

when the angle of the upper servo motor is

. The horizontal difference between

and

is represented by

, and the vertical difference is

. According to the equal vertex angle theorem, the angle of the left servo motor

and the angle of the upper servo motor

can be calculated using Equation (13).

where

f is the focal length of the camera. In addition, the rotation direction of the left servo motor is defined as positive rotation when

is positive. The definition of the direction of the upper servo motor is the same as the left servo motor.

However, Equation (13) will fail when

or

is greater than a certain angle. When the servo motors are in their zero-position, the ring center may not be detected in the image because it lies beyond the field of view (FOV) of the left camera. To handle this situation, we developed the procedure for servo motor zero-position calibration and adjustment shown in Figure 8. To prevent multiple circles from being detected, the background of the ring in the image should be kept simple, such as a plain-colored wall. First,

is determined using the Hough circle detection algorithm [46] and recorded as the target point. Next, the left and upper servo motors are returned to their zero-position. To ensure that the ring is in the FOV, the left servo motor is required to rotate positively

n and the upper servo motor needs to rotate positively

m. The values of

n and

m are from 0° to 360°. Subsequently,

can be detected using the Hough circle detection algorithm and the angles

and

can be calculated. The angles at which the left and upper servo motors rotate to the initial position are

n + and

m + , respectively. Finally, the zero-positions of the left and upper servo motors are reset. The zero-position of the right servo motor can also be reset according to the above procedure.

5. Control Method

In this section, the gaze mechanism of human eyes is imitated from the perspective of control. First, the motion-control method of a single servo motor based on a PID controller is introduced. Then, we develop a binocular interest-point extraction method based on frequency-tuned and template-matching algorithms. Furthermore, we propose a binocular cooperative motion strategy to move the interest point to the principal point. Finally, real experiments are conducted to verify the effectiveness of the control method.

5.1. Motion Control of a Single Servo Motor

A PID controller is widely used in servo motor control because of its relative simplicity, easy tuning, and good performance [47]. The control principle is shown in Equation (14).

$$u(t) = K_p e(t) + K_i \int_0^t e(\tau)\,d\tau + K_d \frac{de(t)}{dt} \qquad (14)$$

where $u(t)$ is the control output, $K_p$ is the proportional gain, $K_i$ is the integral gain, $K_d$ is the differential gain, $t$ is the time, and $e(t)$ is the error.

By tuning $K_p$, $K_i$, and $K_d$, the motion of the servo motor can better follow the expectation, which is necessary for the control of the BBCPS. The requirement for parameter tuning is that the servo motor can achieve fast and stable motion and keep its motion error at about 0.1%. In response to this requirement, we experimentally determined the optimal $K_p$, $K_i$, and $K_d$ (the specific tuning process is described in Section 6.2.1).
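As an illustration of how such a controller behaves in discrete time, the following sketch implements a textbook PID update and drives a deliberately simple, hypothetical plant in which the control output is treated as an angular velocity command. The gains, the time step, and the plant model are assumptions chosen for the example. They are not the tuned values or the servo model used for the BBCPS.

```python
class PID:
    """Textbook discrete PID controller (rectangular integration, backward difference)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative action on the first sample, since there is no previous error yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target_deg, steps=2000, dt=0.001):
    """Drive a hypothetical velocity-commanded joint toward target_deg."""
    pid = PID(kp=20.0, ki=1.0, kd=0.1)  # illustrative gains only
    angle = 0.0
    for _ in range(steps):
        command = pid.update(target_deg - angle, dt)
        angle += command * dt  # assumed plant: angle rate equals the command
    return angle
```

Calling `simulate(30.0)` returns an angle close to 30°. On a real servo motor the plant dynamics differ, which is why the gains have to be determined experimentally.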

5.2. Binocular Interest-Point Extraction Method

The frequency-tuned salient point detection algorithm is a classical method that analyzes an image from a frequency perspective to identify salient points in the image [34]. In this algorithm, the image is first Gaussian smoothed. Then, according to Equation (15), the saliency value of each pixel is calculated, and the pixel with the largest saliency value is the salient point.

$$S(x, y) = \left\| I_{\mu} - I_{\omega hc}(x, y) \right\| \qquad (15)$$

where $I_{\mu}$ represents the average feature of the image in Lab color space, $I_{\omega hc}(x, y)$ denotes the feature of the pixel point $(x, y)$ in Lab color space, and $S(x, y)$ refers to the saliency value of the pixel point $(x, y)$. However, this algorithm can only determine the salient point in a single image. What we need to extract are interest points in binocular images.
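The single-image step can be sketched in a few lines of dependency-free Python. The sketch assumes that the image has already been converted to Lab and is stored as a list of rows of `(L, a, b)` tuples. It replaces the Gaussian filter with a 3 × 3 binomial kernel for brevity, and it uses the Euclidean distance to the mean Lab vector as the saliency value. The function names are hypothetical.

```python
def blur3(img):
    """Smooth a Lab image with a 3x3 binomial kernel, clamping at the borders."""
    h, w = len(img), len(img[0])
    k = (1, 2, 1)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = [0.0, 0.0, 0.0]
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    weight = k[dy + 1] * k[dx + 1] / 16.0
                    for c in range(3):
                        acc[c] += weight * img[yy][xx][c]
            row.append(tuple(acc))
        out.append(row)
    return out


def most_salient_point(lab_img):
    """Return ((x, y), saliency) of the pixel farthest from the mean Lab vector."""
    h, w = len(lab_img), len(lab_img[0])
    mean = [sum(px[c] for row in lab_img for px in row) / (h * w) for c in range(3)]
    blurred = blur3(lab_img)
    best_xy, best_s = (0, 0), -1.0
    for y in range(h):
        for x in range(w):
            s = sum((blurred[y][x][c] - mean[c]) ** 2 for c in range(3)) ** 0.5
            if s > best_s:
                best_xy, best_s = (x, y), s
    return best_xy, best_s
```

On a uniform image containing one differently colored pixel, the function returns the coordinates of that pixel. A production implementation would typically rely on an image library for the color conversion and the Gaussian filter.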

When humans perceive a scene, one eye plays a leading role [48]. Inspired by this, we used the left camera as the leading eye, and proposed a binocular interest-point extraction method based on frequency-tuned and template-matching algorithms [49]. The flow of this method is shown in Algorithm 1.

The detailed description of Algorithm 1 is as follows. After inputting the left camera image , the right camera image , the image width , and the template image width , the frequency-tuned salient point detection algorithm is first used. The interest point in the image is obtained. With the point as the center, the template image with a size of × is determined. A template-matching algorithm is used to match the corresponding interest point in the image . Since the vertical visual field angles of the left and right cameras are consistent in the calibrated BBCPS, a matching algorithm through local search is performed to improve the speed. The starting location of the sliding window [, ] in the image is defined as [, ], the ending location [, ] is [, ], and the sliding step d is 1. The similarity S between the sliding window and the template image at each position in the traversal interval is calculated using the mean square error. We find the maximum S and record the corresponding location of the sliding window [, ]. Finally, the interest point in the right camera image is obtained.

| Algorithm 1: Binocular interest-point extraction method based on frequency-tuned and template-matching algorithms. |

| Input: , , pixels, pixels |

| Output: , |

| Obtain in using the frequency-tuned algorithm [34] |

| Extract a template image , with a size of × , centered on |

| for to step d do |

| for ← to step d do |

| Compute S and record [S, , ] |

| end for |

| end for |

| Find the maximum S and record the corresponding and |

| Obtain |
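The matching stage of Algorithm 1 can be sketched as follows. The sketch assumes grayscale images stored as lists of rows, an odd template width, and an interest point far enough from the image border for the template to fit. Because the symbols and search bounds of Algorithm 1 are not reproduced above, the search region here (all columns, within a small band of rows around the left-image row) is an assumption. The similarity is taken to be the negative mean square error so that the best match is the maximum. The function name and the `band` parameter are hypothetical.

```python
def match_in_right(left, right, point, tw, band=1):
    """Find the right-image location that best matches the left-image template.

    left, right: grayscale images as lists of rows
    point: (x, y) interest point in the left image
    tw: odd template width in pixels
    band: number of rows above and below y that are searched
    """
    half = tw // 2
    px, py = point
    template = [row[px - half:px + half + 1] for row in left[py - half:py + half + 1]]
    h, w = len(right), len(right[0])
    best_s, best_xy = None, None
    # Local search: only rows near the left-image row are visited.
    for cy in range(max(half, py - band), min(h - half, py + band + 1)):
        for cx in range(half, w - half):
            err = 0.0
            for j in range(tw):
                for i in range(tw):
                    d = right[cy - half + j][cx - half + i] - template[j][i]
                    err += d * d
            s = -err / (tw * tw)  # similarity: higher is better
            if best_s is None or s > best_s:
                best_s, best_xy = s, (cx, cy)
    return best_xy
```

Restricting the search to a narrow row band is what makes the local search cheaper than scanning the whole right image. It relies on the two cameras having consistent vertical fields of view after calibration.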

5.3. Binocular Cooperative Motion Strategy

To imitate the movement mechanism of the gaze function, we developed a binocular cooperative motion strategy. The implementation process of the strategy is shown in Figure 9. First, we used Algorithm 1 to extract the binocular interest point. Afterward, the rotation angle of the left servo motor

, the rotation angle of the right servo motor

, and the rotation angle of the upper servo motor

were calculated. The principle of calculating the rotation angles of the three servo motors is shown in Figure 10.

In this paper, we use

to define the left camera coordinate frame and

to describe the right camera coordinate frame. The points

and

are located at the left and right rotation centers. The 2D points

and

are the projection points of the 3D interest point

in the left and right images, respectively.

and

are 3D points located on the optical axes of the left and right cameras. Their corresponding 2D points are the principal point of the left camera

and the principal point of the right camera

. The difference between

and

is denoted by

and

, and the difference between

and

is described by

and

. The rotation angles of the three servo motors can be obtained via Equation (16). The rotation direction is specified in Section 4.2.

Finally, the calculated rotation angles are sent to the three servo motors at the same time to realize the cooperative motion of the left and right cameras.
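A simplified version of the angle computation is sketched below. It is not Equation (16), which is not reproduced above. It assumes a pinhole camera whose optical center coincides with the rotation center, treats pan and tilt as decoupled, expresses focal lengths in pixels, and derives the shared tilt angle from the mean vertical offset of the two images. The sign conventions and all names are hypothetical.

```python
import math


def gaze_angles(p_left, p_right, c_left, c_right, f_left, f_right):
    """Approximate pan angles for both cameras and one shared tilt angle, in degrees.

    p_left, p_right: (u, v) interest points in the left and right images
    c_left, c_right: (u, v) principal points of the left and right cameras
    f_left, f_right: focal lengths in pixels
    """
    du_l, dv_l = p_left[0] - c_left[0], p_left[1] - c_left[1]
    du_r, dv_r = p_right[0] - c_right[0], p_right[1] - c_right[1]
    pan_left = math.degrees(math.atan2(du_l, f_left))
    pan_right = math.degrees(math.atan2(du_r, f_right))
    # Both cameras share one tilt motor, so a single angle is derived (assumption: mean offset).
    tilt = math.degrees(math.atan2((dv_l + dv_r) / 2.0, (f_left + f_right) / 2.0))
    return pan_left, pan_right, tilt
```

When the interest point already lies on both principal points, all three angles are zero and no motion is commanded. Sending the three values at the same time, rather than one after another, is what makes the motion cooperative.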

7. Conclusions

In this study, motivated by the eye gaze mechanism, we designed the flexible BBCPD. The device was assembled according to the principle of symmetrical distribution around the center based on dynamic analysis. The innovative principle offers distinct advantages by enhancing braking performance and reducing energy consumption in comparison to the classic symmetrical low center of gravity principle (as shown in Figure 11). A simulation was conducted to verify the advantages. The results showed that the innovative principle could reduce the torque of the upper servo motor by more than 97%, which leads to a reduction in energy consumption of the BBCPD. The results also demonstrated that the principle could lead the BBCPD to have smaller rotational inertia of the load of the upper servo motor, thus enhancing the braking performance of the BBCPD.

Furthermore, we developed an initial position calibration technique for the BBCPD. Based on the calibration results, the BBCPD, after adjusting the pose adjustment modules and resetting the zero-positions of the servo motors, meets the requirement of the control method. Subsequently, the control method was proposed, where a binocular interest-point extraction method based on frequency-tuned and template-matching algorithms was applied to detect the interest points. Then, we crafted a binocular cooperative motion-control strategy for how servo motors could coordinate their movements and thus set the gaze upon an interest point. Last, real experiments were conducted, and the results showed that the control method of the BBCPS could achieve a gaze error within 3 pixels.

The proposed BBCPS can advance the development of humanoid intelligent perception, with application prospects in fields such as intelligent manufacturing [52,53], human–robot interaction [5], and autonomous driving [54]. However, the gaze accuracy of the BBCPS may constrain its further development. In the future, we aim to reduce gaze errors by optimizing our control algorithm. For instance, by referring to previous research on image matching under viewpoint changes [55,56], we plan to improve the matching algorithm in order to enhance the precision of the binocular interest-point extraction algorithm.