1. Introduction

The set packing problem is a classical NP-complete combinatorial optimization problem and one of the 21 NP-complete problems famously established by Karp [1]. In the set packing problem, we are given a base set containing m elements, along with a collection of n subsets of the base set. The objective is to find the maximum number of pairwise disjoint sets within this collection [2]. The problem has been investigated for decades in both academic research and engineering, and it has extensive practical applications, such as scheduling [3], combinatorial auctions [4], communication networks [5], product management [6], and computer vision [7], to name a few. Set packing models the allocation of resources, the scheduling of tasks, and the optimization of operations by selecting a subset of items that maximizes the overall value or minimizes the costs involved. Research on the set packing problem therefore has both theoretical and practical significance.

Given the inherent complexity of the set packing problem, researchers have devoted considerable effort to finding efficient approaches. However, unless $P = NP$, it is unlikely that an algorithm can solve the set packing problem in polynomial time. Therefore, in practice we usually settle for satisfactory or approximate solutions to this NP-hard problem. Hurkens and Schrijver [

8] introduced a local search method that incorporates a p-neighborhood for solving the set packing problem. Their results indicate that the local search technique obtains $(k/2 + \varepsilon)$-approximate solutions on this problem for any $\varepsilon > 0$. Sviridenko and Ward [

9] proposed a local improvement technique that incorporates the color-coding technique, resulting in an improved approximation ratio from

[

8] to

. Cygan [

10] considered local search techniques with swaps of bounded pathwidth, which resulted in a polynomial time approximation algorithm with an approximation ratio of

. Later, Fürer and Yu [

11] achieved the same approximation ratio

as [

10]. However, the runtime of [

11] is singly exponential in

while it is doubly exponential in

for [

10], so the time complexity is reduced.

When a weight is assigned to each subset, the problem is called the weighted set packing problem. Bafna et al. [

12] studied a local improvement heuristic for the weighted set packing problem, resulting in a tight approximation ratio of

. Arkin and Hassin [

13] obtained the same approximation guarantee for the problem by using a local improvement method, and extended to a general bound

for any fixed

. Furthermore, Chandra and Halldórsson [

14] proposed a greedy strategy called

for the

k-set packing problem, which combines greedy with local improvement and achieved an approximation guarantee of

. They also proposed another greedy strategy called

which accepts any improvement that exceeds a specified threshold rather than seeking the best improvement. The

algorithm can obtain an approximation ratio

for the optimal threshold selection. Recently, based on the local search procedure of Berman’s algorithm [

15], Thiery and Ward [

16] proposed an improved squared-weight local search algorithm with a large exchange for the weighted

k-set packing problem. It is shown that, for the weighted

k-set packing problem, when performing exchanges of size reaching to

, the algorithm can attain an approximation ratio of

for

. The authors presented the analysis and design of a local search algorithm that incorporates extensive exchanges within a large neighborhood for this problem. Recently, Neuwohner [

17] also studied the weighted

k-set packing problem and obtained approximation guarantees by combining local search with a black-box algorithm. Gadekar [

18] investigated the parameterized complexity of the set packing problem and constructed a gadget called the compatible intersecting set system pair to obtain running time bounds for the parameterized set packing problem. Moreover, Duppala et al. [

19] proved that randomized algorithms can achieve a probabilistically fair solution with provable guarantees for a fair

k-set packing problem.

Evolutionary algorithms (EAs) [20,21,22] belong to a broad category of stochastic heuristic search methods that draw inspiration from natural processes and can solve optimization problems without any prior knowledge. Owing to their simplicity and ease of implementation, EAs have been widely used to solve various complex problems, where they have shown superior search capabilities compared to local search and greedy algorithms [23,24,25,26,27,28,29]. Generally, EAs solve optimization problems by updating a population via selection (to choose the better individuals for the next generation), crossover and mutation (to generate new offspring), and fitness evaluation (to assess the performance of different individuals) [30,31,32,33]. Because EAs have shown remarkable success in practice, theoretical underpinnings are needed to understand their behavior, convergence properties, and performance guarantees. Many scholars have therefore carried out theoretical research on EAs in the past few years. In particular, He and Yao [34,35,36,37], Neumann [38,39], Qian, Yu and Zhou [40,41], and Xia et al. [42,43,44] obtained a series of theoretical results on evolutionary algorithms, including average convergence rate, noisy evolutionary optimization, evolutionary Pareto optimization, and evolutionary discrete optimization. EAs have also been widely applied to the set packing problem, and experimental studies indicate that they perform favorably on it [45,46,47].

However, there has been limited theoretical analysis of the performance of EAs on the set packing problem. It is therefore natural to ask whether evolutionary algorithms can obtain performance guarantees on this problem. We concentrate on the worst-case approximation guarantee achieved by stochastic algorithms on any problem instance; that is, what solution quality EAs can reach within what expected runtime, and how solution quality and runtime are related. Answering this question helps strengthen the theoretical foundation of EAs and provides guidance for algorithm design.

In this study, we address this question by analyzing the approximation performance of evolutionary algorithms on the set packing problem. Our primary emphasis lies on the approximation ratio achieved by the algorithms and on the runtime needed to reach it. Specifically, we focus on a simplified EA known as the (1+1) EA, which has a population size of one and employs only the mutation operator to iteratively generate improved solutions. Our analysis reveals that the (1+1) EA can achieve an approximation ratio of on the k-set packing problem within an expected runtime of , where and is an integer. Moreover, we construct a specific instance of the k-set packing problem and demonstrate that the (1+1) EA can find the global optimum within an expected polynomial runtime, whereas local search algorithms tend to become trapped in local optima on this instance.

The rest of this paper is structured as follows. Section 2 introduces the set packing problem, gives an overview of the relevant algorithms such as local search and greedy approaches, and presents the analysis tools used in this study. In Section 3, we conduct a theoretical analysis of the approximation guarantees of the (1+1) EA for the k-set packing problem. Section 4 provides a performance analysis of the (1+1) EA on a problem instance that we construct. Finally, the conclusions are presented in Section 5.

2. Preliminaries

This section begins by providing a comprehensive description of set packing problems and the corresponding heuristic algorithms. Moreover, we introduce pertinent concepts and analysis tools that will be utilized throughout this paper.

2.1. Set Packing Problem

To begin, we present the concept of an independent set before introducing the specific set packing problem addressed within this work.

Definition 1. (Independent set) For an undirected graph $G = (V, E)$, where V represents the set of vertices and E the set of edges, an independent set $I \subseteq V$ is defined as a subset of vertices in which no two vertices are adjacent to each other. In other words, there are no edges in E connecting any two vertices within the set I.

The set packing problem can be considered as an extension or generalization of the independent set problem [48].

In the set packing problem, we are given a universal set $U$ of n elements, along with a collection $C$ consisting of subsets of U. The problem is to identify a sub-collection $C' \subseteq C$ of sets that are pairwise disjoint. The goal is to maximize the cardinality of $C'$, represented as $|C'|$.

A set packing corresponds to an independent set in the intersection graph. Hence, we give the formal definitions of the set packing problem in the context of an undirected graph.
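To make this correspondence concrete, the following minimal sketch builds the intersection graph of a small collection of subsets and checks that a sub-collection is pairwise disjoint exactly when the matching vertices form an independent set. The function names and the toy instance are illustrative assumptions, not part of the original construction.

```python
from itertools import combinations

def intersection_graph(subsets):
    """Edge set of the intersection graph: one vertex per subset,
    and an edge (i, j) with i < j whenever subsets i and j share an element."""
    edges = set()
    for i, j in combinations(range(len(subsets)), 2):
        if subsets[i] & subsets[j]:
            edges.add((i, j))
    return edges

def is_packing(subsets, chosen):
    """True if the chosen subsets are pairwise disjoint."""
    return all(not (subsets[i] & subsets[j]) for i, j in combinations(chosen, 2))

def is_independent(edges, chosen):
    """True if no edge of the graph joins two chosen vertices."""
    return all((min(i, j), max(i, j)) not in edges for i, j in combinations(chosen, 2))

# Hypothetical toy instance over the base set {1, ..., 6}.
subsets = [frozenset({1, 2}), frozenset({2, 3}), frozenset({4, 5}), frozenset({5, 6})]
edges = intersection_graph(subsets)
```

On this toy instance, subsets 0 and 2 form a packing and vertices 0 and 2 are independent, while subsets 0 and 1 share the element 2 and are therefore joined by an edge.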

Definition 2. (Set packing problem) For an undirected graph $G = (V, E)$ with vertex set $V$ and a collection $C$ consisting of subsets of V, we aim to find a sub-collection $C' \subseteq C$ of sets that are pairwise disjoint. The goal is to maximize the cardinality of $C'$, denoted as $|C'|$.

Definition 3. (k-Set packing problem) In the set packing problem, when the size of every set in C is bounded by k, i.e., $|S_i| \le k$ for every $S_i \in C$, it is referred to as the k-set packing problem.
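As a small illustration of these definitions, the sketch below checks the size bound of a k-set packing instance and finds a maximum packing by exhaustive search. The helper names and the example collection are assumptions made for illustration; the exhaustive search is exponential and only meant for tiny instances.

```python
from itertools import combinations

def is_k_set_instance(subsets, k):
    """True if every subset has at most k elements."""
    return all(len(s) <= k for s in subsets)

def max_packing_bruteforce(subsets):
    """Return indices of a maximum collection of pairwise disjoint subsets.
    Tries sub-collections from largest to smallest; exponential time."""
    n = len(subsets)
    for size in range(n, 0, -1):
        for chosen in combinations(range(n), size):
            if all(subsets[i].isdisjoint(subsets[j])
                   for i, j in combinations(chosen, 2)):
                return list(chosen)
    return []

# Hypothetical 3-set packing instance; consecutive subsets overlap cyclically.
subsets = [{1, 2, 3}, {3, 4}, {4, 5, 6}, {6, 7}, {1, 7}]
packing = max_packing_bruteforce(subsets)
```

Here every subset has at most three elements, and because the overlaps form a cycle of length five, no three subsets are pairwise disjoint, so the maximum packing has size two.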

2.2. Local Search Algorithm

The local search algorithm is a commonly used heuristic approach for solving the set packing problem. It operates by iteratively modifying the current solution in a local manner to improve its quality. A local search approach for the k-set packing problem was introduced by Hurkens and Schrijver [8]. The algorithm begins with an empty set I and examines whether there exist $t \le p$ disjoint sets that are not part of the current solution and that intersect at most $t - 1$ sets from the current solution. If such a collection S of t sets is found, the algorithm improves the current solution by adding S while simultaneously removing all sets from the current solution that intersect any set in S (up to $t - 1$ sets). This local search algorithm is given in Algorithm 1 below. The search continues until no further improvement can be made. The resulting solution is referred to as p-optimal (p-opt).

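| Algorithm 1: p-local search algorithm for the k-set packing problem |
| Input: Graph $G = (V, E)$; |
| Output: A p-optimal independent set I; |
| 1: Initialize: $I := \emptyset$; |
| 2: while termination condition does not hold do |
| 3: Find a set $S \subseteq V \setminus I$ such that $|S| \le p$ and the sets in S are disjoint. |
| 4: $I' := (I \setminus N(S)) \cup S$, where $N(S)$ denotes the sets in I that intersect some set in S; |
| 5: if $|I'| > |I|$ then |
| 6: $I := I'$; |
| 7: end if |
| 8: end while |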

As Algorithm 1 shows, the p-local search algorithm iteratively improves the current solution until no further p-local improvement exists.
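The procedure can be sketched in a few lines. The following is a minimal, unoptimized version under stated assumptions: subsets are Python sets, a move adds t at most p pairwise disjoint outside subsets that intersect fewer than t subsets of the current solution, and the first improving move found is applied. The function names are hypothetical and the enumeration order is a design choice of this sketch, not something prescribed by the description above.

```python
from itertools import combinations

def pairwise_disjoint(sets):
    """True if no two of the given sets share an element."""
    return all(a.isdisjoint(b) for a, b in combinations(sets, 2))

def p_local_search(subsets, p):
    """Apply p-local improvements until none exists.
    Returns the sorted indices of a p-optimal packing."""
    current = set()
    improved = True
    while improved:
        improved = False
        outside = [i for i in range(len(subsets)) if i not in current]
        for t in range(1, p + 1):
            for cand in combinations(outside, t):
                if not pairwise_disjoint([subsets[i] for i in cand]):
                    continue
                # Subsets of the current solution hit by the candidate move.
                hit = {j for j in current
                       if any(not subsets[j].isdisjoint(subsets[i]) for i in cand)}
                if len(hit) < t:  # t added, at most t - 1 removed: strict gain
                    current = (current - hit) | set(cand)
                    improved = True
                    break
            if improved:
                break
    return sorted(current)

# Hypothetical instance: subset 0 blocks the two disjoint subsets 1 and 2.
subsets = [{1, 2}, {1}, {2}]
```

On this instance, `p_local_search(subsets, 1)` stops at the packing consisting of subset 0 alone, whereas `p_local_search(subsets, 2)` exchanges it for subsets 1 and 2. Each accepted move increases the packing size by at least one, so the loop terminates after at most as many moves as there are subsets.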

2.3. Analysis Method

This section presents the fitness-level method (also known as fitness-based partitions) [49], a theoretical analysis technique. The fitness-level method is a straightforward yet useful approach for estimating the expected runtime of evolutionary algorithms and other randomized search heuristics applied to combinatorial optimization problems. Next, we provide the formal definition of the method.

Definition 4. (Fitness-level method). Let $S$ be a finite search space, and let $f: S \to \mathbb{R}$ be a fitness function that needs to be maximized. For any two sets A and B that are subsets of S, we define $A <_f B$ if $f(a) < f(b)$ holds for all a in A and all b in B. To analyze the search process, we partition the search space S into disjoint and non-empty sets $A_1, A_2, \ldots, A_m$ satisfying the ordering

$$A_1 <_f A_2 <_f \cdots <_f A_m.$$

The set $A_m$ exclusively comprises optimal search points. We denote by $s_i$ a lower bound on the probability that, in the subsequent iteration, a new search point $x' \in A_{i+1} \cup \cdots \cup A_m$ will be generated from the current search point x, where x belongs to $A_i$. Additionally, we define $E(T_f)$ as the expected number of generations required by the (1+1) EA to discover an optimal search point for the fitness function f; then we have

$$E(T_f) \le \sum_{i=1}^{m-1} \frac{1}{s_i}.$$

The fitness-level method is a simple yet effective approach to the runtime analysis of EAs. However, it only yields an upper bound, and a general lower-bound method is difficult to establish. To apply the method, one has to give an appropriate partition of the search space S, and the number of levels must not be too large (i.e., not exponential). Furthermore, with such a partition it is relatively straightforward to estimate the probability of moving from the current level to a better one by generating a new offspring.
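As an illustration of how the bound is evaluated, the sketch below applies it to the textbook OneMax function (the number of one-bits in a string), which is not studied in this paper and is used here only as an assumed example. Level i contains the strings with exactly i ones, and a level is left whenever exactly one of the remaining zero-bits is flipped; the function names are hypothetical.

```python
import math

def fitness_level_bound(level_probs):
    """Upper bound on the expected runtime: sum of 1/s_i over non-optimal levels."""
    return sum(1.0 / s for s in level_probs)

def onemax_level_probs(n):
    """Lower bounds s_i for OneMax under bitwise mutation with rate 1/n.
    From a string with i ones, flipping exactly one of the n - i zero-bits
    (and nothing else) reaches a higher level."""
    keep_others = (1.0 - 1.0 / n) ** (n - 1)
    return [(n - i) / n * keep_others for i in range(n)]

n = 50
bound = fitness_level_bound(onemax_level_probs(n))
```

Since $(1 - 1/n)^{n-1} \ge 1/e$, the computed value is at most $e \cdot n \cdot (\ln n + 1)$, which is the familiar $O(n \log n)$ upper bound for this example.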

3. Theoretical Analysis of a Simple Evolutionary Algorithm for the k-Set Packing Problem

In this section, we focus on analyzing the approximation guarantee of evolutionary algorithms applied to the set packing problem. An approximation algorithm is designed to provide a solution for a combinatorial optimization problem, such that its objective value is guaranteed to be within a bounded factor of the optimal solution’s value. The approximation ratio serves as a fundamental metric for evaluating the quality of an approximation algorithm.

Let us begin by introducing the notion of approximation ratio. Without loss of generality, we consider a maximization problem with an objective function denoted as f. For any given instance I, the value of an optimal solution is represented by $OPT(I)$. An algorithm is considered a $\rho$-approximation algorithm if the best solution x obtained by the algorithm satisfies $OPT(I) \le \rho \cdot f(x)$, where $\rho \ge 1$. In other words, the algorithm achieves a solution with a value that is at least $1/\rho$ times the value of the optimal solution. We refer to $\rho$ as the approximation ratio for the problem. If the value of $\rho$ is equal to 1, the algorithm is optimal. It is worth noting that a higher approximation ratio indicates a worse solution in terms of quality.

Next, we present the (1+1) EA used in this study as shown in Algorithm 2.

| Algorithm 2: The (1+1) EA for the set packing problem |
| Input: A graph $G = (V, E)$; |
| Output: A vertex set C whose cardinality is as large as possible. |
| 1: Initialization: Randomly select an initial bit string $x \in \{0, 1\}^n$; |
| 2: while termination condition is not met do |
| 3: Generate an offspring $x'$ by independently flipping each bit of x with a probability of $1/n$; |
| 4: if $f(x') > f(x)$ then |
| 5: $x := x'$; |
| 6: end if |
| 7: end while |

The (1+1) EA begins with an arbitrary solution and proceeds iteratively by generating an offspring solution using the mutation operator but without crossover. The current solution is replaced by the new solution only if it is strictly superior to the current one.

To utilize the (1+1) EA for finding an optimal or approximately optimal solution to the k-set packing problem, we employ a bit string $x = (x_1, \ldots, x_n) \in \{0, 1\}^n$ to encode the problem's solution. Each bit $x_i$ corresponds to a subset $S_i$, where $i = 1, \ldots, n$. If $x_i = 1$, it indicates that the subset $S_i$ is selected. Conversely, if $x_i = 0$, it implies that $S_i$ is not selected. Consequently, a solution or bit string x represents a collection of subsets, and $|x|$ represents the count of subsets contained in x.

In the following, we define the fitness function for the

k-set packing problem, denoted as

We aim to maximize the fitness function

. In the above Equation (

1), the item

represents the number of subsets with an intersection. The penalty term in the fitness function,

, serves to encourage the algorithm to minimize the value of

. This, in turn, leads to a reduction in the number of intersecting subsets within the current solution. The primary goal is to obtain a solution where all subsets

are disjoint. The first part of Equation (

1) ensures that the number of disjoint subsets is maximized if all subsets are disjoint.
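The interplay of the encoding, the penalized fitness, and the (1+1) EA can be sketched as follows. The exact form of Equation (1) is not reproduced here; the sketch assumes a fitness of the form "number of selected subsets minus (n + 1) times the number of selected subsets that intersect another selected subset", which matches the description above (negative on infeasible solutions, equal to the packing size on feasible ones) but is an assumption. All function names and the toy instance are hypothetical.

```python
import random
from itertools import combinations

def conflicts(subsets, x):
    """Number of selected subsets that intersect at least one other selected subset."""
    chosen = [i for i, bit in enumerate(x) if bit]
    bad = set()
    for i, j in combinations(chosen, 2):
        if not subsets[i].isdisjoint(subsets[j]):
            bad.update((i, j))
    return len(bad)

def fitness(subsets, x):
    """ASSUMED penalized fitness, not the paper's Equation (1):
    |x| - (n + 1) * (number of conflicting selected subsets)."""
    n = len(subsets)
    return sum(x) - (n + 1) * conflicts(subsets, x)

def one_plus_one_ea(subsets, max_iters, rng):
    """(1+1) EA: flip each bit with probability 1/n, accept strict improvements."""
    n = len(subsets)
    x = [rng.randint(0, 1) for _ in range(n)]
    for _ in range(max_iters):
        y = [bit ^ 1 if rng.random() < 1.0 / n else bit for bit in x]
        if fitness(subsets, y) > fitness(subsets, x):
            x = y
    return x

# Hypothetical instance: five subsets overlapping like a path.
subsets = [{1, 2}, {2, 3}, {3, 4}, {4, 5}, {5, 6}]
best = one_plus_one_ea(subsets, max_iters=20000, rng=random.Random(0))
```

Under this assumed fitness, removing a conflicting subset always raises the fitness, so the search first repairs infeasibility and then only moves between feasible packings of increasing size. A fixed iteration budget stands in for the unspecified termination condition.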

Hurkens and Schrijver [

8] demonstrated that a simple local search algorithm can attain a polynomial time approximation ratio on the

k-set packing problem.

Lemma 1 ([

8]).

For any , let p be an integer and . The local search algorithm with local improvement can achieve an approximation ratio when applied to any instance of the k-set packing problem. Next, we demonstrate that the (1+1) EA can effectively discover a

-approximation solution for the unweighted

k-set packing problem, while simulating the aforementioned result established by Hurkens and Schrijver [

8]. Additionally, the expected runtime for this achievement is bounded by

.


Theorem 1. The (1+1) EA has the capability to discover a packing with a number of sets that is at least for the k-set packing problem. This achievement can be accomplished within an expected runtime of , where represents the global optimum.

Proof. Note that the value of the fitness function never decreases during the optimization process of the (1+1) EA. To facilitate the analysis, the search space

is divided into three distinct sets, namely

,

, and

, based on their respective fitness values.

It is obvious that represents the set of infeasible solutions, and and represent the set of feasible solutions.

In accordance with the fitness function (

1), the fitness values in the evolutionary process of the (1+1) EA are guaranteed to never decrease. The (1+1) EA initiates by randomly selecting an initial solution from the search space. Suppose the current solution

x belongs to

, then

x is a collection of subsets containing intersection, i.e., an infeasible solution. At least two subsets in the current solution are intersecting. The algorithm only accepts the event that reduces the number of subsets with an intersection. In this scenario, the (1+1) EA has the capability to enhance the fitness value by at least one by removing such a subset with an intersection from the solution. In this case, the probability of removing such a specific subset is

; this suggests that the fitness value is expected to increase by at least one within a runtime of

. Note that the maximum fitness value is

n. Consequently, the solutions from set

will be transformed into set

by the (1+1) EA, and this transformation will be accomplished within an expected runtime of

.

Let us assume that the current solution x belongs to set . On the basis of Lemma 1, if some solution satisfies , then the algorithm evolves towards increasing the number of disjoint subsets. In this case, there exist p disjoint sets that are not included in the current solution, and these p subsets intersect at most $p - 1$ subsets in the current solution. Consequently, by performing a p-local improvement operation, the (1+1) EA increases the fitness value by at least 1. The probability of performing such operation for the (1+1) EA is . Since there are at most n elements in the solution, according to the fitness-level method, at most n such operations are needed, and the (1+1) EA will transform the solutions into set within an expected runtime of .
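The probability of such a multi-bit move can be bounded by a standard calculation for bitwise mutation with rate $1/n$. The sketch below assumes that a p-local improvement flips at most $2p - 1$ specific bits (p subsets added and at most $p - 1$ removed); the symbol q and the resulting $O(n^{2p})$ estimate are consequences of that assumption and are not quoted from the bounds stated in this paper.

```latex
% Probability that one mutation flips exactly a fixed set of q bits, 1 <= q <= n
\Pr[\text{flip exactly } q \text{ fixed bits}]
  = \left(\frac{1}{n}\right)^{q} \left(1 - \frac{1}{n}\right)^{n-q}
  \ge \frac{1}{n^{q}} \left(1 - \frac{1}{n}\right)^{n-1}
  \ge \frac{1}{e\, n^{q}}.
% With q <= 2p - 1, each improvement occurs with probability at least 1/(e n^{2p-1}),
% so the expected waiting time per improvement is at most e n^{2p-1};
% at most n improvements then take expected time O(n^{2p}).
```

The first inequality uses $q \ge 1$, and the second uses the standard estimate $(1 - 1/n)^{n-1} \ge 1/e$.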

Combining the above analysis for different phases, we complete the proof. □

As shown in Theorem 1, the (1+1) EA can achieve a performance guarantee equivalent to that of the local search algorithm in the worst case. Understanding the theoretical properties of different algorithms can guide researchers and practitioners in choosing the most suitable approach based on the problem characteristics. Applying theoretical guarantees to real-world optimization problems can help in predicting algorithm behavior and performance outcomes. By leveraging theoretical insights, practitioners can tailor optimization algorithms to specific problem domains and optimize them for practical applications.

4. The Power of Evolutionary Algorithms on Some Instances of the k-Set Packing Problem

Local search and greedy algorithms are widely used, practical approaches for efficiently searching for approximate solutions in various optimization problems. However, both techniques are prone to becoming stuck in local optima. In this section, we present a constructed instance of the k-set packing problem. We demonstrate that the simple evolutionary algorithm can find the global optimum of this instance within an expected polynomial runtime, whereas the 2-local search algorithm may become trapped in a local optimum.

First, we give two sets with base elements as follows.

and

where

s is an integer.

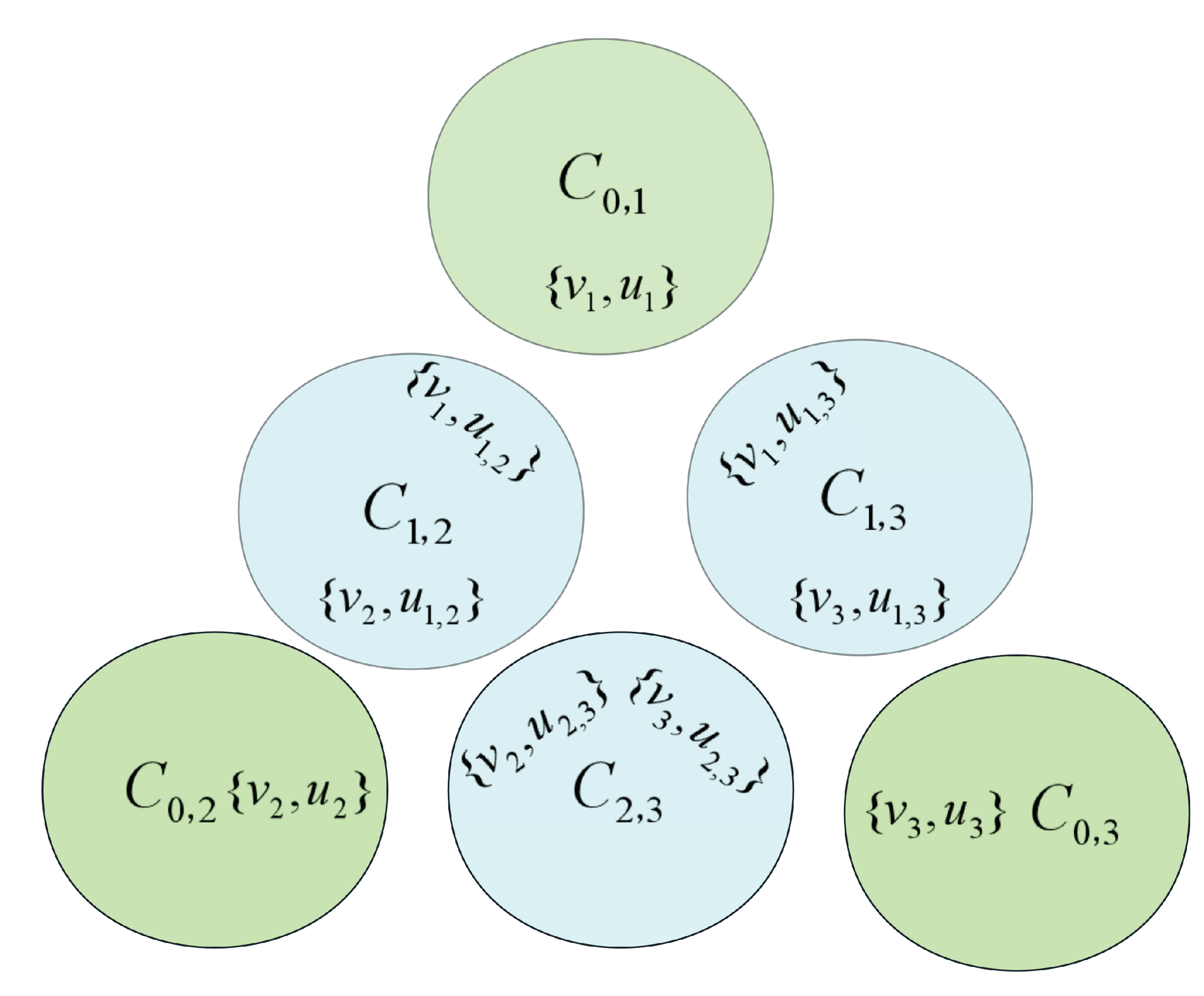

The instance

is constructed using the following steps. First, we construct three groups of sets

,

and

. Let

Here, , and .

If the subscript of u is 0, it means that the element does not exist.

For

, we set

and

After that, we define two sets

X and

Y. For any

, let

,

and

. The set

. We can see that the size of set

X is

s, i.e.,

, and

. The number of all elements contained in sets

X and

Y is

n. The constructed instance

is shown in

Figure 1.

In the following analysis, we demonstrate that the (1+1) EA can reach a global optimum for instance within an expected polynomial runtime.

Theorem 2. For instance , the (1+1) EA can efficiently obtain the global optimum starting from any initial solution within an expected runtime of .

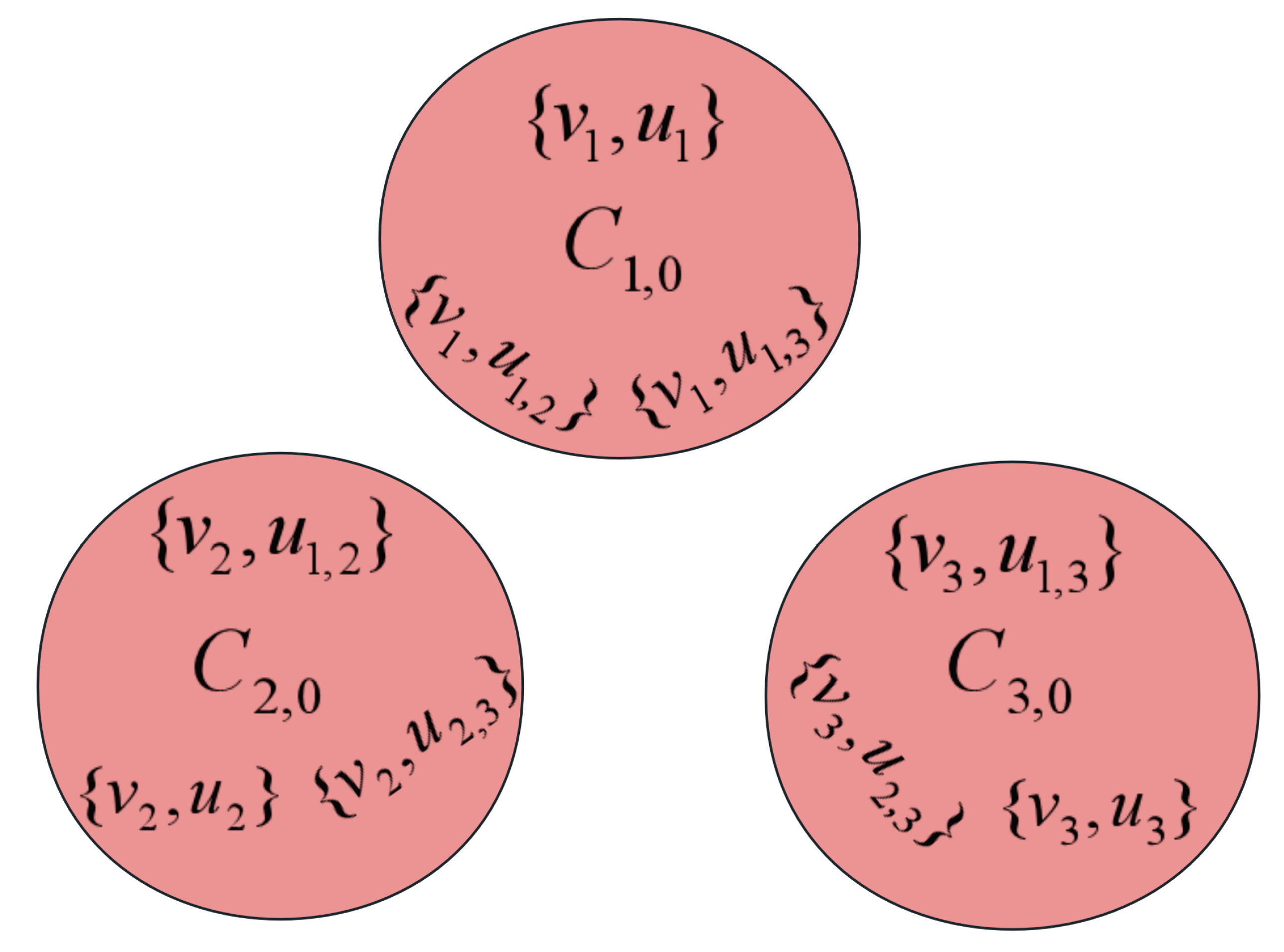

Proof. Obviously, the global optimum of

is

as shown in

Figure 2. We can divide the optimization process of the algorithm on

into two stages. In the first stage, the fitness value is strictly negative, while in the second stage, the fitness value becomes positive.

In the first stage, since the fitness value is strictly less than 0, some subsets in the current solution intersect. In this case, the (1+1) EA will only accept operations that reduce the number of intersecting subsets. The probability that the algorithm removes a particular intersecting subset is . Note that the total set contains n elements. By employing the fitness-level method, the (1+1) EA transitions from the first stage to the second stage within an expected runtime of .

During the second stage, the subsets within the current solution are mutually disjoint. It is necessary to consider four distinct cases in this context.

Case 1: All the elements from

X are included in the current solution, i.e., a 2-optimal solution, as shown in

Figure 3. In this case, any subset in

Y cannot be added to the current solution. Because the algorithm only accepts better solutions, this case occurs only once during the optimization process. We claim that the (1+1) EA can increase the fitness value by at least one by deleting two subsets

and

in

X and simultaneously adding three subsets

,

and

in

Y. In the following, we estimate the probability that the algorithm performs this operation. We first choose one element from the set

, where the probability for this operation is

. We assume that we choose the element

, then we need to remove the elements

and

from set

X while adding the elements

and

from set

Y simultaneously. Such an improvement will occur with probability

, which implies that the fitness value can be increased by at least one in the expected runtime

.

Case 2: The current solution contains, at most, elements selected from X, but there are no elements from Y contained in the current solution. In this scenario, the fitness value can be increased by adding either elements from set X or elements from set Y that are disconnected from the selected elements in set X. Such an operation is executed by the (1+1) EA with probability , which implies that the fitness value can be increased by at least one in expected runtime .

Case 3: The current solution contains at most elements selected from X, also there exist some elements from Y contained in the current solution. For this case, we can divide it into two subcases for further consideration.

Subcase 3.1: The current solution contains less than elements selected from X. Let us assume that the subset is included in the current solution but without and for the sake of simplicity. Based on the aforementioned analysis, we can conclude that the current solution forms an independent set. Therefore, neither subset nor is present in the current solution. Through adding subsets and while simultaneously removing subset from set X by the (1+1) EA, the fitness value will be increased. Such an operation is executed by the (1+1) EA with probability , which implies that the fitness value can be increased by at least one in the expected runtime .

Subcase 3.2: The current solution contains exactly elements from X, meaning that only a single element in X is excluded from the current solution. For the sake of simplicity, let us assume that the element from X is not included in the current solution. Furthermore, since the current solution is an independent set, only the element in Y is contained in the current solution. By adding two subsets and and simultaneously deleting the subset from X, the (1+1) EA increases the fitness value. The probability of the (1+1) EA executing such an operation is , which implies that the fitness value can be increased by at least one in the expected runtime .

Case 4: The current solution exclusively consists of the elements from set Y. For this case, through adding any other element from Y into the current solution by the (1+1) EA, the fitness value can be increased by at least one. Such an operation is executed by the (1+1) EA with probability , which implies that the fitness value can be increased by at least one in the expected runtime .

Note that there are at most n elements in the solution, i.e., the maximum fitness value is n. Therefore, according to the above analysis, starting with an arbitrary solution, the (1+1) EA can obtain the global optimal solution of instance in an expected runtime of . □

Theorem 2 shows that, as a global optimization method, the evolutionary algorithm can escape the local optimum of this instance, whereas the local search algorithm easily becomes trapped in it.