Abstract

This paper proposes a multi-strategy enhanced Honey Badger Algorithm (EHBA) to address two weaknesses of the Honey Badger Algorithm (HBA): its tendency to converge to local optima and its difficulty in converging quickly. A dynamic opposite learning strategy broadens the search area of the population, enhances global search ability, and improves population diversity. In the honey harvesting (exploitation) stage, differential mutation is combined with one of two selectively applied operators: a local quantum search strategy, which strengthens local search and improves optimization accuracy, or a dynamic Laplacian crossover operator, which improves convergence speed. Both reduce the odds of HBA sinking into local optima. EHBA is compared with eight other algorithms on the CEC2017, CEC2020, and CEC2022 test sets and on three engineering examples. The convergence curves, box plots, and performance tests show that EHBA achieves better results than the other eight algorithms, with clearly improved optimization ability and convergence speed, and that it has good application prospects for optimization problems.

1. Introduction

With the continuous innovation and development of technology in recent years, engineering problems in fields ranging from social life to scientific research have generated many complex optimization needs [1,2,3,4], characterized by dynamic change, nonlinearity, uncertainty, and high dimensionality. Traditional methods, including gradient descent, the conjugate gradient method, the variational method, and Newton’s method, struggle to obtain optimal solutions to these problems within an acceptable time or accuracy and can no longer meet practical needs. Their efficiency is also relatively low on real-world engineering problems with large, nonlinear search spaces. In contrast, meta-heuristics (MHs) are stochastic optimization methods that do not require gradients. Owing to their self-organizing, adaptive, and self-learning characteristics, they have demonstrated their ability to solve real-world engineering design problems in different fields. Therefore, with the continuous advancement of society and the development of artificial intelligence, many MH-based optimization methods have been developed.

MH methods solve optimization problems by simulating biological behavior, physical laws, and chemical phenomena. They are divided into four categories: Swarm Intelligence (SI) algorithms, Evolutionary Algorithms (EA) [5], Physics-based Algorithms (PhA) [6,7,8], and human-based algorithms [9,10,11]. These behaviors have been widely modeled in various optimization techniques, and representative algorithms are summarized in Table 1. Among them, SI is a kind of meta-heuristic that performs optimization by mimicking the collective behavior of biological and non-living systems in nature [12]. Its advantages include good parallelism, autonomous exploration, easy implementation, strong flexibility, and few parameters. First, the structure of a good SI algorithm is generally simple: its theory and mathematical model originate from nature and solve practical problems by simulating nature, and variants are easy to build on top of state-of-the-art algorithms. Second, these algorithms can be treated as black boxes that map given input values to output values. Third, an important characteristic of SI algorithms is their randomness, which lets them search the entire variable space and effectively escape local optima. They seek the optimal result through probabilistic search, without requiring much prior knowledge or analysis of the internal laws and correlations of the data. They only need to learn from the data itself, self-organize, and adapt, which makes them well suited to NP-complete problems [13].

In the last few years, SI, a branch of artificial intelligence, has been widely used in areas such as path planning, mechanical control, engineering scheduling, feature extraction, image processing, and MLP training [14,15,16,17,18,19,20,21], and has developed significantly. The No Free Lunch (NFL) theorem proposed by Wolpert et al. [22] proves that no single algorithm is best for all optimization problems. Research on SI algorithms is therefore very active, with many scholars improving current algorithms and proposing new ones. Typical examples include Particle Swarm Optimization (PSO) [23] and Ant Colony Optimization (ACO) [24], which were inspired by the cooperative foraging behavior of bird flocks and ant colonies, respectively. Over the past few years, researchers have proposed various algorithms that simulate the habits of natural organisms. Yang et al. [25] presented the Bat Algorithm (BA), which simulates how bats use sonar for detection and localization. Gandomi et al. [26] developed the Cuckoo Search algorithm (CS) according to the reproductive characteristics of cuckoo birds; the solutions obtained by CS were reported to be much better than those of existing methods because of its unique search features. References [27,28,29] proposed the Grey Wolf Optimizer (GWO), Whale Optimization Algorithm (WOA), and Salp Swarm Algorithm (SSA) by simulating the hunting behavior of grey wolves, humpback whales, and salps, respectively. Compared with well-known meta-heuristic algorithms, GWO provides highly competitive results, and results on classical engineering design problems and practical applications have shown that it is suitable for challenging problems with unknown search spaces. WOA is strongly competitive with existing meta-heuristic algorithms and traditional methods. SSA can effectively improve the initial random solutions and converge to the optimum, and actual case studies demonstrate its advantages on real-world problems with difficult and unknown search spaces.

Mirjalili et al. [30] proposed the Sea-horse Optimizer (SHO) from the movement, predation, and breeding behavior of the sea horse. These three intelligent behaviors are expressed mathematically to balance the local exploitation and global exploration of SHO. The experimental results indicate that SHO is a high-performance optimizer that adapts well to constrained problems. Abualigah et al. [31] introduced the Reptile Search Algorithm (RSA), derived from the hunting activity of crocodiles; its search method is unique, and it achieves better results. The Sine Cosine Algorithm (SCA) [32], based on mathematical models of the sine and cosine functions, can effectively explore different regions of the search space, avoid local optima, converge to the global optimum, and exploit the promising regions of the search space during optimization. SCA has obtained smooth airfoil shapes with very low drag, indicating its effectiveness on practical problems with constraints and unknown search spaces. Subsequently, the Tunicate Swarm Algorithm (TSA) [33], Wild Horse Optimizer (WHO) [34], Archimedes Optimization Algorithm (AOA) [35], and Moth Flame Optimization (MFO) [36] were successively proposed.

Table 1.

A brief review of meta-heuristic algorithms.


| Algorithms | Abbrev. | Inspiration |
|---|---|---|
| Particle Swarm Optimization | PSO [23] | The predation behavior of birds |
| Genetic algorithms | GA [5] | Darwin’s theory |
| Gravitational Search Algorithm | GSA [6] | The interaction law |
| Teaching Learning-Based Optimization | TLBO [8] | The effect of influence of a teacher on learners |
| Ant Colony Optimization | ACO [24] | The foraging behavior of ants |
| Bat Algorithm | BA [25] | The echolocation behavior of bats |
| Cuckoo Search algorithm | CS [26] | The reproductive characteristics of cuckoo birds |
| Gray Wolf Optimization | GWO [27] | The leadership hierarchy and hunting mechanism |
| Whale Optimization Algorithm | WOA [28] | The bubble-net hunting behavior of humpback whales |
| Salp Swarm Algorithm | SSA [29] | The swarming behaviour of salps when navigating and foraging in oceans |
| Sea-horse Optimizer | SHO [30] | The movement, predation, and breeding behaviors of sea horses |
| Reptile Search Algorithm | RSA [31] | The hunting behavior of crocodiles |
| Tunicate Swarm Algorithm | TSA [33] | The group behavior of tunicates in the ocean |
| Sine Cosine Algorithm | SCA [32] | Based on mathematical models of sine and cosine functions |
| Wild Horse Optimizer | WHO [34] | The decency behaviour of the horse |
| Arithmetic Optimization Algorithm | AOA [35] | The main arithmetic operators in mathematics |
| Moth Flame Optimization | MFO [36] | The navigation method of moths |
| Honey Badger Algorithm | HBA [37] | The intelligent foraging behavior of honey badger |

The basic framework of the MH algorithms mentioned above is built on two stages, namely exploration and exploitation. An MH algorithm needs a good balance between these stages to be efficient and robust, thereby ensuring the best results in one or more specific applications. Exploration searches distant regions of the feasible space to ensure that better candidate solutions are obtained. After the exploration phase, exploiting the search space is crucial: the algorithm converges towards a promising solution and is expected to find the best solution through a local convergence strategy [19].

A good balance of exploration and exploitation and the avoidance of local optima are the key requirements for MH algorithms in engineering optimization. They ensure that a large search space is covered and that the global optimum is obtained. Existing research mainly follows two routes: (1) Hybridizing two or more strategies. The improved meta-heuristic weighs the advantages and disadvantages of each algorithm and adopts the corresponding strategies in a targeted manner to improve optimization efficiency. (2) Proposing new heuristic optimization algorithms that are better suited to complex engineering optimization problems. However, a newly proposed algorithm must be mature and general enough to carry over to new optimization problems.

The Honey Badger Algorithm (HBA) [37] was developed by Hashim et al. First, what distinguishes HBA from other meta-heuristic algorithms is its use of two mechanisms to update individual positions, modeled on the foraging behavior of honey badgers in digging and honey modes, which gives it strong search ability and good performance on complex practical problems. The dynamic search behavior of the honey badger, with its digging and honey-finding approaches, is formulated into the exploration and exploitation phases of HBA.

Second, compared with algorithms such as PSO, WOA, and GWO, HBA has attracted wide attention and been used in various fields because of its high flexibility, simple structure, high convergence accuracy, and operability. HBA has successfully solved the speed reducer design problem, the tension/compression spring design problem, and other constrained engineering problems.

Researchers have therefore recently improved HBA to varying degrees to better adapt it to various problems. For example, Akdag et al. proposed a developed honey badger algorithm (DHBA) to solve the optimal power flow problem [38]. Han et al. proposed an improved chaotic honey badger algorithm to optimize and efficiently model proton exchange membrane fuel cells [39]. Reference [40] proposes an enhanced HBA (LHBA) based on a Levy flight strategy and applies it to the optimization of robot grippers. The results show that LHBA obtains the minimum difference between the minimum force and the maximum force and successfully solves this optimization problem. To improve the overall optimization performance of the basic HBA, reference [41] proposes an improved HBA named SaCHBA_PDN, based on the Bernoulli shift map, a segmented optimal decreasing neighborhood, and strategy-adaptive horizontal crossing, and applies it to path planning for unmanned aerial vehicles (UAVs). Test results show that SaCHBA_PDN performs better than other optimization algorithms, and simulation results show that it obtains more feasible and efficient paths in different obstacle environments. However, HBA is still prone to falling into local optima and has limited solving accuracy on problems with multiple local solutions [37], and the experimental results in this paper also show room for improvement in its optimization accuracy and stability. Therefore, this paper attempts to address some of these limitations of HBA.

To further improve the performance of the original HBA, this paper combines four strategies (dynamic opposite learning, differential mutation, local quantum search, and dynamic Laplacian crossover) to form the enhanced Honey Badger Algorithm (EHBA). EHBA is also applied successfully to several typical practical engineering problems. The main contributions are as follows:

- (a) A dynamic opposite learning strategy is adopted for HBA initialization to enhance population diversity and the quality of candidate solutions, which improves on the original HBA and increases its convergence speed.

- (b) Differential mutation operations are incorporated to increase population diversity, enhance HBA’s ability to jump out of local optima, and to some extent increase its precision.

- (c) Local quantum search and dynamic Laplacian crossover operators are selectively used in the digging and honey harvesting stages to balance the exploitation and exploration stages of the algorithm.

- (d) EHBA is tested and analyzed on the CEC2017, CEC2020, and CEC2022 test sets, which verify the feasibility, stability, and high accuracy of the proposed method. EHBA is then used to solve three typical practical engineering cases, further verifying its practicality.

The rest of this article is organized as follows. Section 2 outlines the basic theory of the original honey badger algorithm. Section 3 combines multiple strategies to establish the enhanced honey badger algorithm (EHBA) and gives the specific steps of the improved algorithm. Section 4 evaluates the effectiveness of EHBA through numerical experiments and statistical analysis on the CEC2017, CEC2020, and CEC2022 test sets. Section 5 presents three engineering examples and their analysis to validate the engineering utility of EHBA. Finally, Section 6 concludes the article and discusses future research.

2. Theoretical Basis of Honey Badger Algorithm

HBA simulates the foraging behavior of honey badgers in both digging and honey modes. In the former mode, the honey badger uses its sense of smell to approach the prey’s position. As it approaches, it moves around the prey to select a suitable place to dig and capture it. In the latter mode, the honey badger directly tracks the honeycomb under the guidance of the honeyguide bird. In theory, HBA has both exploration and exploitation stages, so it can be called a global optimization algorithm. Modeling the foraging activity of the honey badger gives the algorithm strong optimization capacity and a rapid convergence rate.

2.1. Population Initialization Stage

As with all meta-heuristics, HBA starts the optimization process by generating a uniformly distributed random population within a set boundary range. The population and the individual positions of the honey badgers are initialized according to Equation (1).

$$P_i = Lob + r_1 \times (Upb - Lob) \tag{1}$$

where r1 is a random value within [0, 1], and Lob and Upb represent the lower and upper bounds of the problem to be solved, respectively.

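The initial population matrix P is then formed as in Equation (2).

$$P = \begin{bmatrix} P_{1,1} & P_{1,2} & \cdots & P_{1,D} \\ P_{2,1} & P_{2,2} & \cdots & P_{2,D} \\ \vdots & \vdots & \ddots & \vdots \\ P_{N,1} & P_{N,2} & \cdots & P_{N,D} \end{bmatrix} \tag{2}$$

where Pi (i = 1, 2, …, N) represents the position vector of the i-th candidate solution, Pi,j represents the position of the i-th candidate honey badger in the j-th dimension, and D is the problem dimension.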
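As a minimal sketch of this initialization step, the following Python function draws N candidates uniformly inside the bounds. The function name `init_population`, the list-of-lists layout, the choice of one random value per coordinate, and the seeded `random.Random` generator are illustrative assumptions, not part of the original HBA description.

```python
import random


def init_population(n, dim, lob, upb, rng):
    """Return n positions, each coordinate uniform between lob[j] and upb[j]."""
    return [
        [lob[j] + rng.random() * (upb[j] - lob[j]) for j in range(dim)]
        for _ in range(n)
    ]


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
P = init_population(n=5, dim=3, lob=[-10.0] * 3, upb=[10.0] * 3, rng=rng)
```

Each row of `P` plays the role of one position vector Pi in the population matrix.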

As mentioned earlier, there are two parts to update the position process in the HBA, namely the “digging stage” and the “honey stage”.

2.2. Digging Stage (Exploration)

Some honey badgers approach their prey through their sense of smell, and this unique foraging behavior provides us with the direction of the digging stage. In addition to the location update formula, the digging stage also defines three related concepts: intensity operator, trend modifier, and density factor.

2.2.1. Definition of the Intensity I

The intensity I depends on the concentration strength of the prey and the distance between the prey and the honey badger, and follows the inverse square law [42] in Equation (4).

$$I_i = r_2 \times \frac{S}{4\pi d_i^2} \tag{4}$$

$$S = (P_i - P_{i+1})^2, \qquad d_i = P_{prey} - P_i \tag{5}$$

where Ii is the intensity of the prey’s odor: if the odor is strong, the honey badger moves quickly, and vice versa. S is the source (concentration) strength, di is the distance between the current honey badger candidate and the prey, Pprey is the location of the prey, and r2 is a random number between 0 and 1.

2.2.2. Update Density Factor α

The density factor (α) governs the time-varying stochasticity to guarantee a steady shift from exploration to exploitation. It is updated with Equation (6) so that it decreases with the iterations, lowering the stochasticity over time.

$$\alpha = C \times \exp\left(\frac{-t}{T}\right) \tag{6}$$

where T and t are the maximum number of iterations and the current number of iterations, respectively, and C is a constant with a default value of 2.

2.2.3. Definition of the Search Orientation F

The following steps are all used to escape local optima. Here, HBA uses a flag F that switches the search direction, giving individuals more opportunities to scan the search space rigorously.

$$F = \begin{cases} 1, & r_3 \le 0.5 \\ -1, & \text{otherwise} \end{cases} \tag{7}$$

where r3 is a random number in the range 0–1.

2.2.4. Update Location of Digging Stage

During the digging stage, the honey badger depends strongly on the scent intensity I, the distance di between it and the prey, and the density factor α. A badger may be disturbed during digging, which can hinder its search for a better prey location. The honey badger’s movement resembles a cardioid, which Equation (8) mimics.

$$P_{New} = P_{prey} + F \times \beta \times I_i \times P_{prey} + F \times r_4 \times \alpha \times d_i \times \left| \cos(2\pi r_5) \times \left[ 1 - \cos(2\pi r_6) \right] \right| \tag{8}$$

where PNew is the new position of the honey badger, Pprey is the best prey location found so far (the global best), β ≥ 1 (default 6) is the honey badger’s ability to get food, di is given in Equation (5), r4, r5, and r6 are three different random numbers between 0 and 1, and F is the flag that changes the search direction.
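The digging stage can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the reference implementation: the function names are hypothetical, S and the squared distance in the intensity are taken as squared Euclidean norms so that the intensity is one scalar per badger, a small `eps` guards against division by zero, and the random numbers r4 to r6 are redrawn per dimension.

```python
import math
import random


def density_factor(t, T, C=2.0):
    """Density factor alpha: starts at C and decays as t approaches T."""
    return C * math.exp(-t / T)


def intensity(p_i, p_next, p_prey, rng, eps=1e-12):
    """Smell intensity for one badger, following the inverse square law.

    Assumption: S and d_i**2 are squared Euclidean norms (scalars).
    """
    s = sum((a - b) ** 2 for a, b in zip(p_i, p_next))
    d_sq = sum((a - b) ** 2 for a, b in zip(p_prey, p_i))
    return rng.random() * s / (4.0 * math.pi * (d_sq + eps))


def dig_update(p_i, p_prey, inten, alpha, rng, beta=6.0):
    """One digging-stage move around the prey along a cardioid-like path."""
    flag = 1.0 if rng.random() <= 0.5 else -1.0  # search-direction flag F
    new_pos = []
    for x, prey in zip(p_i, p_prey):
        d = prey - x  # per-dimension distance to the prey
        r4, r5, r6 = rng.random(), rng.random(), rng.random()
        wobble = abs(math.cos(2 * math.pi * r5) * (1 - math.cos(2 * math.pi * r6)))
        new_pos.append(prey + flag * beta * inten * prey
                       + flag * r4 * alpha * d * wobble)
    return new_pos


rng = random.Random(1)
alpha = density_factor(t=10, T=100)
inten = intensity([1.0, 2.0], [0.5, 1.5], [0.0, 0.0], rng)
moved = dig_update([1.0, 2.0], [0.0, 0.0], inten, alpha, rng)
```

Because α shrinks with t, the random term around the prey contracts over the run, which is the intended shift from exploration to exploitation.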

2.3. Honey Harvesting Stage (Exploitation)

The case where the honey badger follows the honeyguide bird to the hive is described by Equation (9).

$$P_{New} = P_{prey} + F \times r_7 \times \alpha \times d_i \tag{9}$$

where PNew and Pprey represent the new location of the individual and the location of the prey, and r7 is a random number in the range 0–1. F and α are defined by Equations (7) and (6), respectively. Equation (9) shows that the honey badger searches near Pprey according to the distance di.
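A short Python sketch of the honey stage update follows. The function name `honey_update` and the per-dimension draw of r7 are assumptions made for illustration; the flag and density factor are used as described in the text.

```python
import random


def honey_update(p_i, p_prey, alpha, rng):
    """Honey-stage move: a step from the prey, scaled by alpha and the distance."""
    flag = 1.0 if rng.random() <= 0.5 else -1.0  # search-direction flag F
    return [prey + flag * rng.random() * alpha * (prey - x)
            for x, prey in zip(p_i, p_prey)]


rng = random.Random(2)
near = honey_update([1.0, -2.0], [0.0, 0.0], alpha=0.1, rng=rng)
```

The new position never lies farther from the prey than α times the current distance in any dimension, so the move stays local once α has become small.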

3. An Enhanced Honey Badger Algorithm Combining Multiple Strategies

The original HBA suffers from inadequate solution precision, insufficient global exploration capability, and difficulty in jumping out of local extrema. To address these issues, an enhanced honey badger algorithm is proposed that combines a dynamic opposite learning strategy, a differential mutation strategy, Laplacian crossover, and a quantum local search strategy. First, dynamic opposite learning is used in population initialization to enrich the initial population. The differential mutation operation increases population diversity and prevents HBA from falling into local optima. In addition, quantum local search or Laplacian operators for dynamic crossover are introduced in the local exploitation stage, so that the optimal honey badger individual adopts different crossover strategies at different stages: a larger step in the early stage to better jump out of local extrema, and a finer search range in the later stage.

3.1. Dynamic Opposite Learning Strategy

Opposite learning, a relatively new technique in intelligent computing, finds the opposite solution corresponding to the current solution and then selects and saves the better of the two through fitness calculation. Initializing with an opposite learning strategy can effectively improve population diversity and help the population escape from local optima.

In meta-heuristic optimization algorithms, population initialization is usually random, which ensures only that the population is spread over the search space, not that the initial solutions are of good quality. However, studies have shown that initialization quality significantly affects the convergence rate and precision of the algorithm. Scholars have therefore introduced various strategies into the initialization step to improve initial performance, commonly including chaotic initialization, opposite learning, and Cauchy random generation. This section introduces a dynamic opposite learning strategy to strengthen the quality of the initial solutions [43] with Equation (10).

where PInit and PDobl are the randomly created population and the opposite initial population, and r8 and r9 are different random numbers within (0, 1). First, PInit and PDobl are generated. They are then merged into a new population PNew = {PDobl ∪ PInit}. The objective function is evaluated for PNew, and a greedy strategy lets all 2N individuals compete, with the best N candidate honey badgers selected as the initial population. This brings the population closer to the optimal solution more quickly, thereby accelerating the convergence of HBA.
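The merge-and-select procedure can be sketched as follows. The opposite position used here, `x + r8 * (r9 * (lob + upb - x) - x)`, is one commonly used form of dynamic opposite learning and is an assumption of this sketch; it is not confirmed to be identical to Equation (10). The names `dol_init` and `sphere` are hypothetical, and minimization is assumed.

```python
import random


def sphere(x):
    """Toy objective for the example (minimization)."""
    return sum(v * v for v in x)


def dol_init(n, dim, lob, upb, objective, rng):
    """Random population plus its dynamic opposites; keep the best n of 2n."""
    init = [[lob[j] + rng.random() * (upb[j] - lob[j]) for j in range(dim)]
            for _ in range(n)]
    dobl = []
    for p in init:
        r8, r9 = rng.random(), rng.random()  # drawn once per individual
        # Assumed dynamic-opposite form; the opposite point is lob + upb - x.
        q = [x + r8 * (r9 * (lob[j] + upb[j] - x) - x) for j, x in enumerate(p)]
        # Check the boundaries.
        dobl.append([min(max(v, lob[j]), upb[j]) for j, v in enumerate(q)])
    merged = init + dobl
    merged.sort(key=objective)  # greedy competition among all 2n candidates
    return merged[:n]


rng = random.Random(3)
pop = dol_init(n=6, dim=2, lob=[-5.0, -5.0], upb=[5.0, 5.0],
               objective=sphere, rng=rng)
```

Sorting the merged set and truncating it is the simplest way to realize the greedy selection; it costs 2N objective evaluations before the main loop starts.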

3.2. Differential Mutation Operation

The differential evolution algorithm (DE) is a parallel evolutionary algorithm. It consists of three operations: mutation, crossover, and selection [44,45,46]. DE keeps the best individuals and eliminates the worst through successive iterations, leading the search towards the global optimal solution. The three operations are described below:

- Mutation. Mutation calculates the weighted position difference between two individuals in the population and adds it to the position of a random individual to generate a mutated individual. The specific mutation procedure is described by Equation (11).

- Crossover. Some components of the present population are exchanged with the corresponding components of the mutant population according to certain rules, producing a crossed population that enriches the variety of individuals in the population.

- Selection. If the fitness value of the cross vector is not inferior to the fitness value of the target individual, the cross vector replaces the target individual in the next generation.

DE uses the difference information between individuals to perturb them, thereby increasing the variety of individuals while searching for the optimum. It is simple to process, stable in search, and easy to implement. In this contribution, the new individual obtained by DE is substituted for the optimal individual in the original HBA and then drives the evolution of the individuals. This not only enhances precision and exploration, but also secures the convergence rate of HBA.
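The three operations described above can be sketched as one generation of the classic DE/rand/1/bin scheme. Choosing this particular variant, the scale factor `scale = 0.5`, and the crossover rate `cr = 0.9` are assumptions of the sketch; Equations (11)–(13) may use different symbols or settings. Minimization is assumed, and the population needs at least four individuals.

```python
import random


def sphere(x):
    """Toy objective for the example (minimization)."""
    return sum(v * v for v in x)


def de_step(pop, fit, objective, rng, scale=0.5, cr=0.9):
    """One DE generation: mutation, binomial crossover, greedy selection."""
    n, dim = len(pop), len(pop[0])
    new_pop, new_fit = [], []
    for i in range(n):
        # Mutation: random base individual plus a weighted difference.
        a, b, c = rng.sample([k for k in range(n) if k != i], 3)
        mutant = [pop[a][j] + scale * (pop[b][j] - pop[c][j]) for j in range(dim)]
        # Crossover: mix target and mutant, forcing at least one mutant gene.
        j_rand = rng.randrange(dim)
        trial = [mutant[j] if (rng.random() < cr or j == j_rand) else pop[i][j]
                 for j in range(dim)]
        # Selection: keep the trial only if it is not worse than the target.
        f_trial = objective(trial)
        if f_trial <= fit[i]:
            new_pop.append(trial)
            new_fit.append(f_trial)
        else:
            new_pop.append(pop[i])
            new_fit.append(fit[i])
    return new_pop, new_fit


rng = random.Random(4)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(8)]
fit = [sphere(p) for p in pop]
new_pop, new_fit = de_step(pop, fit, sphere, rng)
```

Because selection is greedy, no individual's fitness can get worse from one generation to the next.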

3.3. Quantum Local Search

First, calculate the adaptive expansion coefficient of the current generation [47]:

where βmax = 1 and βmin = 0.5 are the maximum and minimum values of the preset adaptive expansion coefficient. Generate an attraction point Qi based on individual historical average optimal position and group historical optimal position:

Assuming that the position vector of each honey badger has quantum behavior, the state of the position vector is described using the wave function φ(x, t). The position equation of the new position vector obtained through Monte Carlo random simulation can be seen in Equation (16).

In Equations (15) and (16), φ and u are D-dimensional vectors of random numbers uniformly distributed between 0 and 1. The quantum local search strategy generates an attraction point according to Equation (15), and the honey badger population moves in a one-dimensional potential well centered on this attraction point, which increases the variety of new individuals and gives the population a better distribution. Finally, the position is updated according to Equation (16), which decreases the possibility of HBA becoming trapped in local optima and improves its exploration performance, helping to balance exploration and exploitation.
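The idea can be illustrated with a sketch modeled on quantum-behaved particle swarm optimization. Everything specific here is an assumption: the linear decrease of the expansion coefficient from βmax to βmin, the attraction point as a φ-weighted mix of the mean best and global best positions, and the ± ln(1/u) step. Equations (14)–(16) may differ in detail, and the function names are hypothetical.

```python
import math
import random


def expansion_coefficient(t, T, beta_max=1.0, beta_min=0.5):
    """Assumed schedule: linear decrease from beta_max to beta_min."""
    return beta_max - (beta_max - beta_min) * t / T


def quantum_local_search(p_i, mean_best, global_best, beta, rng):
    """Sample a new position around an attraction point (QPSO-style sketch)."""
    new_pos = []
    for x, m, g in zip(p_i, mean_best, global_best):
        phi = rng.random()
        u = 1.0 - rng.random()  # in (0, 1], so log(1 / u) is finite
        q = phi * m + (1.0 - phi) * g  # attraction point
        step = beta * abs(m - x) * math.log(1.0 / u)
        new_pos.append(q + step if rng.random() < 0.5 else q - step)
    return new_pos


rng = random.Random(5)
beta = expansion_coefficient(t=50, T=100)
candidate = quantum_local_search([1.0, 2.0], [0.5, 1.0], [0.0, 0.0], beta, rng)
```

The logarithmic term occasionally produces long jumps, which is what allows such an update to leave a local basin even late in the run.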

3.4. Dynamic Laplace Crossover

The Laplace crossover operator was proposed by Deep et al. [48,49]. The density function of the Laplace distribution is given in Equation (17).

$$f(x \mid a, b) = \frac{1}{2b} \exp\left(-\frac{|x - a|}{b}\right), \quad -\infty < x < \infty \tag{17}$$

In Equation (17), a ∈ R is the location parameter, usually taken as 0, and b > 0 is the scale parameter. First, a random number uniformly distributed on the interval [0, 1] is generated; the random number λ is then generated from it by Equation (18).

In Laplacian crossover, a pair of parents generates two offspring by Equations (19) and (20).

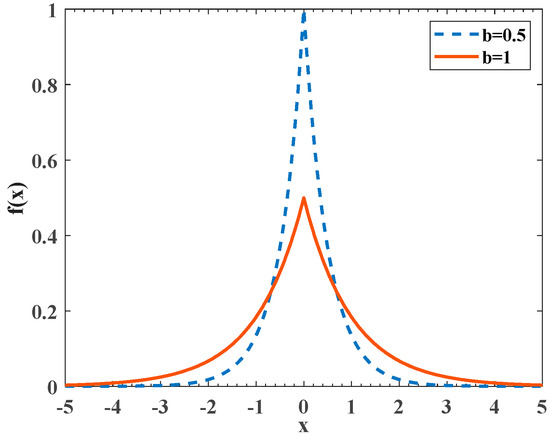

To match the iterative behavior of the algorithm, this article adopts a dynamic Laplacian crossover strategy. Figure 1 shows the Laplace density function curves for different values of b, where the solid line and the dashed line correspond to b = 1 and b = 0.5, respectively. Vertically, the dashed line has a higher peak near the center and lower tails than the solid line; horizontally, the solid line descends more slowly towards both ends of the horizontal axis, while the dashed line descends more quickly.

Figure 1.

Laplace density function curve.

To keep the algorithm simple and general, this article dynamically introduces the Laplace crossover operator in the local exploitation stage to help the honey badger population break free from local extrema and avoid premature convergence. Because b = 1 is more likely than b = 0.5 to generate random numbers far from the origin and has a wider distribution range, b = 1 is selected for Laplace crossover in the early stage of local exploitation by Equation (21), which allows honey badger individuals to search the solution space with a larger step size and better escape local extrema.

Because b = 0.5 generates random numbers near the central value with high probability, b = 0.5 is chosen for Laplacian crossover in the later stage of local exploitation. This allows honey badger individuals to move around the optimal solution with a smaller step length, search the region finely, and improve the probability of finding the global optimum. The specific expression is given in Equation (22).

In Equations (21) and (22), 1 − t/T is a monotonically decreasing function with values in [0, 1]. In the early stage of exploitation, t is small, so 1 − t/T is large; when 1 − t/T is greater than the random number r, the algorithm randomly selects a honey badger and crosses it with the current optimal individual according to Equation (21). In the later stage, t is large, so 1 − t/T is small; when 1 − t/T is less than r, the crossover between the randomly selected honey badger and the current optimal individual follows Equation (22). The dynamic Laplacian crossover therefore generates offspring in the early stage that explore the search space with a larger step size, which increases the probability of jumping out of local extrema and avoids premature convergence. In the later stage, it generates offspring that are closer to their parents and search the space near the optimal solution finely with a smaller step size, which increases the probability of finding the global optimal solution and helps honey badger individuals converge to the global optimum faster. Overall, introducing the Laplace crossover operator in the exploitation stage enables the honey badger population to perform adaptive dynamic crossover according to the iterative process, improving the convergence rate and solving ability.
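A minimal Python sketch of the dynamic switch between b = 1 and b = 0.5 is given below. Several details are assumptions: λ is drawn by inverse-transform sampling of the Laplace density, which may differ in form from Equation (18); the offspring are built as parent + λ·|parent1 − parent2|, the usual Laplace crossover form; one λ is shared by all dimensions; and the function names are hypothetical.

```python
import math
import random


def laplace_sample(b, rng, a=0.0):
    """Draw one Laplace(a, b) number by inverting its distribution function."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    if u < 0.5:
        return a + b * math.log(2.0 * u)
    return a - b * math.log(2.0 * (1.0 - u))


def laplace_crossover(parent1, parent2, b, rng):
    """Two offspring shifted from their parents by lambda * |parent1 - parent2|."""
    lam = laplace_sample(b, rng)
    child1 = [p + lam * abs(p - q) for p, q in zip(parent1, parent2)]
    child2 = [q + lam * abs(p - q) for p, q in zip(parent1, parent2)]
    return child1, child2


def dynamic_laplace_crossover(p_rand, p_best, t, T, rng):
    """Use b = 1 (wide steps) early and b = 0.5 (fine steps) late."""
    b = 1.0 if rng.random() < 1.0 - t / T else 0.5
    return laplace_crossover(p_rand, p_best, b, rng)


rng = random.Random(6)
c1, c2 = dynamic_laplace_crossover([1.0, 4.0], [2.0, 2.0], t=10, T=100, rng=rng)
```

Since the switch compares a fresh random number with 1 − t/T, the wide-step variant is not turned off abruptly but becomes steadily less likely as the iterations proceed.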

3.5. The Specific Steps of the Enhanced Honey Badger Algorithm

EHBA initializes the population using dynamic opposite learning in place of HBA’s purely random method, which reduces the uncertainty of the algorithm. This initialization strategy generates a high-quality population with good diversity, laying the foundation for subsequent iterations. Introducing the Laplacian crossover strategy or the local quantum search strategy in the local exploitation stage lets the honey badger population adaptively select its update strategy during the iterations, helping it converge to the global optimum faster and decreasing the probability of premature convergence.

Step One. Initialize the population by Equation (1), perform the dynamic opposite learning population with Equation (10), and retain the optimal individuals according to the greedy strategy to enter the main program iteration.

Step Two. Calculate the objective function value of each honey badger, and record the optimal objective function value FBest and the optimal individual position XBest based on the results.

Step Three. Define intensity I with Equation (4) and density factor by Equation (6).

Step Four. Perform differential mutation operation Equations (11)–(13).

Step Five. If , update the values through the digging stage with Equation (8).

Step six. If the random number , update the individual position of the population based on the quantum local search Equation (16); otherwise, if the random number , replace the individual position based on the dynamic Laplace crossover Equation (22); if , update the individual position following the Equation (21).

Step Seven. After updating, check whether each individual exceeds the upper or lower bound of the position. If a dimension of the individual exceeds the upper bound, replace its value with the upper bound Upb. If a dimension of the individual falls below the lower bound, replace its value with the lower bound Lob.

Step Eight. Evaluate the fitness value Fnew and judge whether it is better than FBest; if it is, the fitness value of this candidate solution is recorded as the new FBest, and the individual position is updated as PBest.

Step Nine. As the number of iterations increases, if t < T, return to Step Three; otherwise, output the optimal value FBest and the corresponding position PBest.
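Steps One and Seven can be illustrated with a minimal Python sketch. Equations (1) and (10) are not reproduced in this section, so the sketch assumes uniform random initialization and a commonly used dynamic-opposite form, P + w·r8·(r9·(Lob + Upb − P) − P); the weight `w`, the function names, and the sphere objective are illustrative assumptions rather than the authors' implementation.

```python
import random

def clamp(position, lob, upb):
    """Step Seven: pull every out-of-range dimension back to its bound."""
    return [min(max(x, lo), hi) for x, lo, hi in zip(position, lob, upb)]

def dol_initialize(objective, n, lob, upb, w=3.0, rng=None):
    """Step One (sketch): random population plus dynamic-opposite population,
    then keep the best n of the 2n candidates (greedy selection)."""
    rng = rng or random.Random()
    dim = len(lob)
    # Assumed uniform random initialization inside [lob, upb].
    population = [[lob[j] + rng.random() * (upb[j] - lob[j]) for j in range(dim)]
                  for _ in range(n)]
    opposite = []
    for p in population:
        r8, r9 = rng.random(), rng.random()
        # Assumed dynamic-opposite form; the paper's Equation (10) may differ.
        cand = [p[j] + w * r8 * (r9 * (lob[j] + upb[j] - p[j]) - p[j])
                for j in range(dim)]
        opposite.append(clamp(cand, lob, upb))
    merged = population + opposite
    merged.sort(key=objective)  # minimization: smaller objective is better
    return merged[:n]

def sphere(x):
    """Toy objective used only for illustration."""
    return sum(v * v for v in x)
```

Calling `dol_initialize(sphere, 30, [-10.0] * 5, [10.0] * 5)` returns 30 individuals sorted from best to worst, all inside the bounds.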

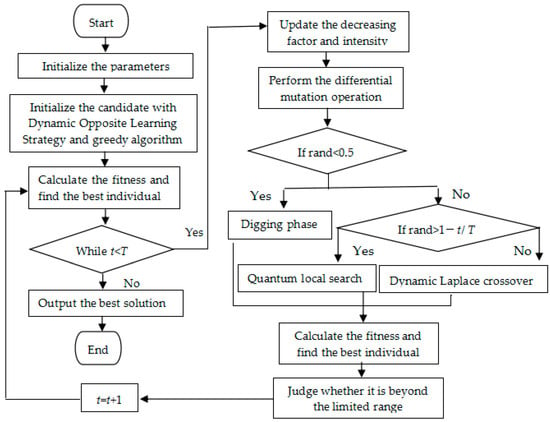

To express the EHBA more clearly, its pseudo-code is listed in Algorithm 1, and Figure 2 gives its flowchart.

| Algorithm 1: The Proposed EHBA |
| --- |
| Input: The parameters of HBA such as β, C, N, Dim, and maximum iterations T. |
| Output: Optimal fitness value. |
| Random initialization. |
| Construct the new population through the dynamic opposite learning strategy: |
| For i = 1 to N do |
| r8 = rand(0,1), r9 = rand(0,1) |
| For j = 1 to Dim do |
| Check the boundaries. |
| Use the greedy strategy to select the best initial population from the 2N candidate individuals. |
| Evaluate all fitness values F(Pi), i = 1, 2, …, N. Save the best position PBest and FBest. |
| While (t < T) do |
| Renew the decreasing factor α by Equation (6). |
| For i = 1 to N do |
| Calculate the intensity Ii by Equation (4). |
| Perform the differential mutation operation with Equations (11)–(13): |
| For i = 1 to N do: perform mutation by Equation (11); End |
| For i = 1 to N, For j = 1 to Dim do: perform crossover by Equation (12); End; End |
| For i = 1 to N, For j = 1 to Dim do: perform selection by Equation (13); End; End |
| If r < 0.5 then |
| Replace the location Pnew by Equation (8). |
| Else |
| Quantum Local Search: perform Equations (14)–(16). |
| Else |
| Dynamic Laplace Crossover: |
| If r1 < 1 − t/T then |
| Renew the honey badger location with Equation (21). |
| Else |
| Renew the honey badger location with Equation (22). |
| End if |
| End if |
| Evaluate the new position. |
| If Fnew ≤ F(Pi) then |
| Let Pi = Pnew and Fi = Fnew. |
| End if |
| If Fnew ≤ FBest then |
| Make PBest = Pnew and FBest = Fnew. |
| End if |
| End For |
| Verify the honey badger’s boundaries. |
| Refresh the honey badger’s location and the best location PBest. |
| t = t + 1 |
| End while |

Figure 2.

Flowchart for the proposed EHBA optimization algorithm.

3.6. The Complexity Analysis

The computational complexity of EHBA is determined mainly by three operations: dynamic opposite learning initialization, fitness evaluation, and population position update. The initialization stage is executed only once at the beginning, while the other two operations are performed in every iteration. In the analysis, N denotes the population size, T the maximum number of iterations, and D the dimension.

The computational complexity of the HBA is O(TND). The computational complexity of the dynamic opposite learning initialization is O(2N). Quantum local search and Laplace crossover replace the honey harvesting stage of the original HBA, which changes the per-iteration cost only by a constant factor c, giving O(cTND). Constant factors have little effect in big-O notation. In summary, the total computational complexity of EHBA is O(cTND + 2N).
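As a quick check that the initialization term does not change the order of growth, the total can be simplified as below. The numbers use the values N = 30 and T = 1000 from the experiments in this paper and assume D = 30 purely for illustration.

$$
O(cTND + 2N) = O(TND), \qquad \frac{2N}{TND} = \frac{2}{TD} = \frac{2}{1000 \times 30} \approx 6.7 \times 10^{-5}.
$$

Under these settings the one-off initialization therefore accounts for well under 0.01% of the iteration cost.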

4. Numerical Experiment and Analysis Results

In this section, we test the performance of the EHBA on the CEC2017, CEC2020, and CEC2022 test sets to demonstrate its effectiveness. CEC2017 contains 30 single-objective optimization functions; it is a classic function set and one of the most widely used for evaluating the optimization ability of intelligent algorithms. The F2 function in CEC2017 was later officially removed, leaving 29 functions. The CEC2020 test suite includes 10 benchmark problems, which are a combination of functions selected from CEC2014 and CEC2017. CEC2022 was also selected from the 2017 and 2014 function sets and is the latest test set for algorithm performance testing. These three test sets contain different uni-modal, multi-modal, hybrid, and composition functions, which can better measure the performance of the new EHBA method.

In this study, the experiments were run under Windows 10 (64-bit) on an Intel(R) Core(TM) i5-6500 processor at 3.2 GHz with 8 GB of main memory. EHBA was implemented in MATLAB R2019a, and the same environment was used throughout to keep the comparison fair.

The results were compared with those of the algorithms mentioned in the introduction, namely SHO [30], AOA [35], WOA [28], MFO [36], HBA [37], TSA [32], SCA [33], and GWO [27], to verify the efficiency of the EHBA on the test problems. These algorithms include both early classical algorithms and recent algorithms with better applicability and performance, which makes the comparison with EHBA more informative.

Parameter settings for all comparison algorithms are kept consistent with those in the original literature; see Table 2. The maximum number of iterations T and the population size N for all methods are 1000 and 30, respectively.

Table 2.

Parameter settings.

The randomness of meta-heuristics makes the result of a single run unreliable. To ensure a fair comparison, every algorithm is run 30 times independently. The average accuracy (Mean), standard deviation (Std), best value (Min), worst value (Max), Rank, and Wilcoxon’s rank sum test are selected as the evaluation criteria to assess the effectiveness and feasibility of the algorithm. Here, “+” denotes that the result of the other method is superior to EHBA, “−” denotes that it is inferior to EHBA, and “=” means that there is no significant difference between EHBA and the other method. The minimum values obtained among the compared algorithms are shown in bold. Rank represents the ranking of the average value of the different algorithms; the lower the rank, the better the performance of the algorithm in terms of precision and stability.
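The evaluation criteria above can be sketched with the Python standard library. This is an illustrative sketch, not the authors' MATLAB code: the function names are hypothetical, the sample standard deviation is assumed, and the rank sum p-value uses the normal approximation with continuity correction (average ranks for ties, no tie correction of the variance).

```python
import math
import statistics

def summarize(values):
    """Mean, Std, Min (best), and Max (worst) over independent runs."""
    return {
        "Mean": statistics.mean(values),
        "Std": statistics.stdev(values),  # sample standard deviation (assumed)
        "Min": min(values),
        "Max": max(values),
    }

def rank_sum_p(a, b):
    """Two-sided Wilcoxon rank sum p-value (normal approximation)."""
    pooled = sorted((v, k) for k, v in enumerate(list(a) + list(b)))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1             # average 1-based rank of a tie group
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(ranks[:n1])                   # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = max((abs(w - mu) - 0.5) / sigma, 0.0)
    return math.erfc(z / math.sqrt(2))    # equals 2 * (1 - Phi(z))
```

A difference between two algorithms on a function is then treated as significant when `rank_sum_p` returns a value below the chosen significance level, for example 0.05.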

4.1. Experiment and Analysis on the CEC2017 Test Set

The CEC2017 test set contains 29 functions that are often used to test the effectiveness of algorithms, with at least half being challenging hybrid and composition functions [50]. Table 3 presents the test results of EHBA and the other eight methods on CEC2017. The bold data in the table represent the best average values among all the compared algorithms. In addition, Table 4 shows the Wilcoxon rank sum test results of the eight comparative algorithms at a significance level of 0.05.

Table 3.

Comparison results of EHBA and other methods on CEC2017.

Table 4.

Wilcoxon rank sum test values of each comparison algorithm (30-dimensional CEC2017 test set).

From Table 3, we can observe that the average rank of EHBA is 1.2069, the best among all compared algorithms, so its overall solution results are better. Observing the bold data, EHBA achieved the smallest values on 86% of the test functions, which were distributed across the various function types (uni-modal, multi-modal, hybrid, and composition). In contrast, HBA and GWO achieved the smallest values on 3 and 1 functions, respectively. Therefore, EHBA obtained the smallest value far more often than the other comparison algorithms. This shows that the local quantum search and dynamic Laplacian crossover strategies have improved the search capability of the HBA in seeking the best solution: EHBA finds superior solutions with a higher convergence speed than the original algorithm.
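The average rank reported here can be computed as in the following Python sketch, which ranks the algorithms by their mean value on each function and averages the ranks. The function name, the input layout, and the tie-breaking rule are assumptions for illustration; the numbers in the usage note are not taken from Table 3.

```python
def average_ranks(mean_table):
    """mean_table[alg] is a list of mean values, one per test function.
    Returns the average rank of each algorithm (rank 1 = smallest mean)."""
    algs = list(mean_table)
    n_funcs = len(next(iter(mean_table.values())))
    totals = {a: 0.0 for a in algs}
    for f in range(n_funcs):
        ordered = sorted(algs, key=lambda a: mean_table[a][f])
        for rank, a in enumerate(ordered, start=1):
            totals[a] += rank  # ties are broken by listing order (assumption)
    return {a: totals[a] / n_funcs for a in algs}
```

For example, with hypothetical means `{"A": [1.0, 2.0, 5.0], "B": [2.0, 1.0, 6.0], "C": [3.0, 3.0, 4.0]}`, algorithm A ranks 1, 2, and 2 on the three functions, giving an average rank of 5/3. As a consistency check on the reported figure, an average rank of 1.2069 over 29 functions corresponds to a rank sum of 35, since 35/29 ≈ 1.2069.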

In Table 4, many p-values are identical, so for ease of reading “—” represents the common value 6.79562 × 10^−8. Combined with Table 3, the final results show that the numbers of functions on which each comparison method is superior/similar/inferior to EHBA are 3/13/13, 0/12/17, 0/0/29, 0/0/29, 0/0/29, 0/0/29, 1/4/24, and 0/1/28, respectively. HBA, the best of the comparison algorithms, outperforms EHBA on 3 test functions and is inferior to EHBA on 13 test functions. GWO, the second best, outperforms EHBA only on function F10 and is inferior to EHBA on 24 test functions. Overall, on the CEC2017 test functions, the performance of EHBA is superior to that of the other eight comparative algorithms, and the experimental results show that the proposed algorithm can effectively solve the CEC2017 problems.
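The superior/similar/inferior counts can be tallied as in the sketch below. The text only states that the counts combine the p-values with the mean results, so the decision rule used here (not significant means similar; otherwise the smaller mean wins) is an assumption, as are the function and parameter names.

```python
def tally(p_values, ehba_means, other_means, alpha=0.05):
    """Count functions where the other method is superior, similar, or
    inferior to EHBA on a minimization problem."""
    superior = similar = inferior = 0
    for p, m_ehba, m_other in zip(p_values, ehba_means, other_means):
        if p >= alpha:
            similar += 1        # no significant difference
        elif m_other < m_ehba:
            superior += 1       # other method significantly better
        else:
            inferior += 1       # other method significantly worse
    return superior, similar, inferior
```

The three counts always sum to the number of test functions, which matches the reported triples (for instance, 3 + 13 + 13 = 29 for the first method).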

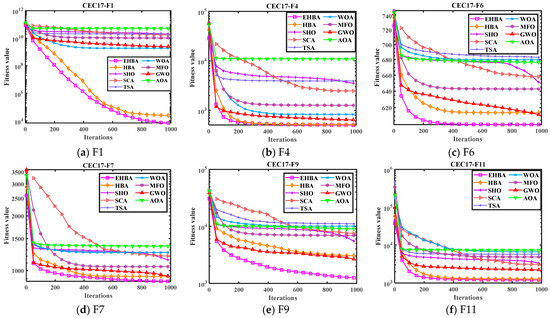

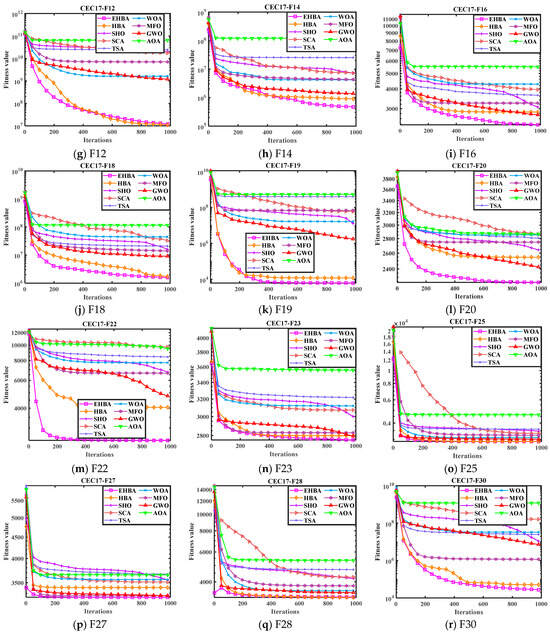

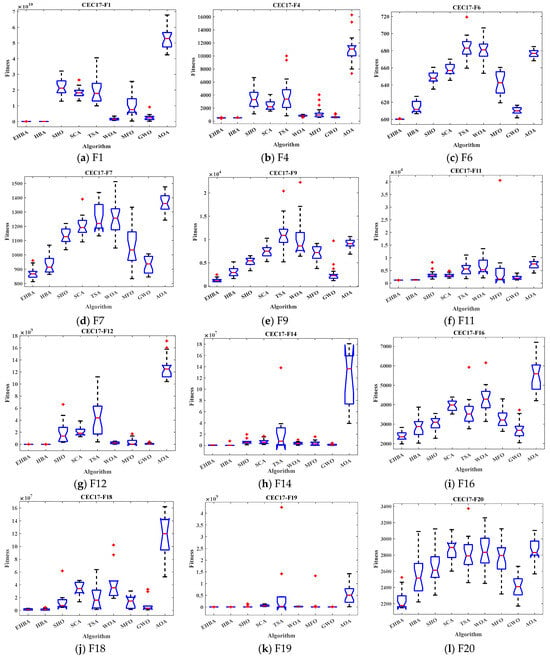

To display the optimization performance of the EHBA more intuitively, including its convergence rate and its capability to escape from local optima, Figure 3 and Figure 4 show the convergence curves and box plots on some CEC2017 test functions. In the convergence curves, the horizontal axis is the number of iterations and the vertical axis is log10(F), which makes the trend of the curves easier to observe. The figure shows that EHBA keeps converging toward the optimal solution within 1000 iterations, indicating strong exploration and exploitation capability. The convergence curves indicate that EHBA has significantly improved convergence accuracy and speed compared to the other algorithms. The iteration curve of EHBA escapes local solutions in the early stages of iteration and approaches a near-optimal solution, and it stays close to the optimal solution in the subsequent exploitation period. Specifically, EHBA shows more significant convergence effects on F1, F6, F7, F9, F16, F18, F20, F22, and F30, mainly because it can jump out of local solutions and find the optimal position quickly.
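The quantity plotted on the vertical axis can be sketched as follows: a running minimum of the objective value per iteration, converted to log10. The function name and the input (one objective value per iteration) are illustrative assumptions, and the values must be positive for the logarithm to exist.

```python
import math

def convergence_curve(values_per_iteration):
    """Best-so-far objective value per iteration on a log10 scale."""
    curve, best = [], math.inf
    for value in values_per_iteration:
        best = min(best, value)           # best value found up to this iteration
        curve.append(math.log10(best))    # requires best > 0
    return curve
```

Because the running minimum never increases, the resulting curve is non-increasing, which is why convergence curves of this kind only move downward or stay flat.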

Figure 3.

Convergence curves of EHBA and other algorithms on CEC2017 partial test functions.

Figure 4.

Box plots of EHBA and other algorithms on CEC2017 partial test functions.

Box plot analysis shows how the results are distributed, based on their quartiles. Figure 4 shows the box plots of the results of EHBA and the other optimization algorithms. The red median lines of EHBA and HBA are the lowest, with EHBA being more pronounced. The narrow interquartile range of the results obtained by EHBA indicates that its solutions are more tightly clustered than those of the other algorithms, and there are few outliers, further demonstrating the stability of EHBA due to the incorporation of the improved strategies. Overall, EHBA is a competitive algorithm that deserves further exploration in practical engineering applications.
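The quantities that a box plot displays can be computed with the Python standard library, as in this sketch. The quartile convention (`method="inclusive"`) and the 1.5 × IQR outlier rule are common defaults assumed here; plotting tools, including the one used for Figure 4, may use slightly different conventions.

```python
import statistics

def box_stats(values):
    """Quartiles, interquartile range, and 1.5 * IQR outliers of a sample."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"q1": q1, "median": median, "q3": q3, "iqr": iqr, "outliers": outliers}
```

A small `iqr` with an empty `outliers` list corresponds to the narrow, outlier-free boxes described above.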

4.2. Experiment and Analysis on the CEC2020 Test Set

4.2.1. The Ablation Experiments of EHBA

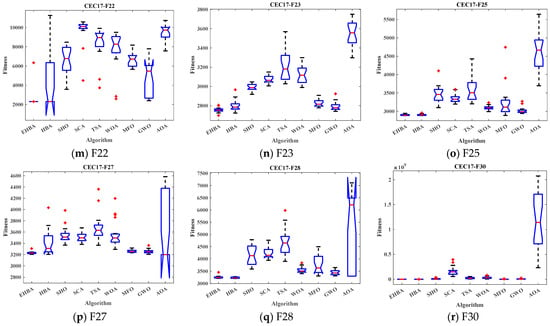

To verify the effectiveness of the different strategies of EHBA, EHBA is compared with six incomplete variants and with HBA. EHBA1, EHBA2, and EHBA3 each use a single strategy: the dynamic opposite learning strategy, the differential mutation strategy, and the quantum local search or dynamic Laplace crossover strategy, respectively. EHBA4, EHBA5, and EHBA6 use combinations of two strategies: EHBA4 combines the dynamic opposite learning strategy and the differential mutation strategy, EHBA5 combines the dynamic opposite learning strategy and the quantum local search or dynamic Laplace crossover strategy, and EHBA6 combines the differential mutation strategy and the quantum local search or dynamic Laplace crossover strategy. These variants are used to evaluate the impact of each strategy on convergence speed and accuracy. Due to space constraints, this paper only gives the convergence curves of some CEC2020 test functions in Figure 5.
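The ablation design can be written down as a small configuration table, sketched here in Python. The flag names (`dol`, `dm`, `qls_dlc`) are hypothetical labels for the three strategy groups, and the mapping follows the description of the variants given above.

```python
# dol = dynamic opposite learning, dm = differential mutation,
# qls_dlc = quantum local search or dynamic Laplace crossover.
VARIANTS = {
    "HBA":   dict(dol=False, dm=False, qls_dlc=False),
    "EHBA1": dict(dol=True,  dm=False, qls_dlc=False),
    "EHBA2": dict(dol=False, dm=True,  qls_dlc=False),
    "EHBA3": dict(dol=False, dm=False, qls_dlc=True),
    "EHBA4": dict(dol=True,  dm=True,  qls_dlc=False),
    "EHBA5": dict(dol=True,  dm=False, qls_dlc=True),
    "EHBA6": dict(dol=False, dm=True,  qls_dlc=True),
    "EHBA":  dict(dol=True,  dm=True,  qls_dlc=True),
}

def enabled(name):
    """Return the strategy flags switched on for a given variant."""
    return [flag for flag, on in VARIANTS[name].items() if on]
```

Written this way, HBA, the six incomplete variants, and the full EHBA together cover all 2³ = 8 on/off combinations of the three strategy groups, so each strategy's contribution can be isolated.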

Figure 5.

Convergence curves of incomplete algorithms on CEC2020.

For F4 in CEC2020, all comparison algorithms have almost the same convergence accuracy and speed, so their curves overlap into a single curve and are not shown here. Figure 5a,d indicate that for functions F1 and F5, EHBA has a slower convergence speed than EHBA2 and EHBA4, but better convergence accuracy. Figure 5c,f–i indicate that for F7–F10, EHBA has a slightly faster convergence speed than the other algorithms and significantly better convergence accuracy. For F2, the convergence speed of EHBA is lower than that of EHBA1 and EHBA5 in the early stage of iteration, but in the later stage its convergence speed and accuracy are significantly better than those of the other algorithms. In general, every improvement strategy of EHBA is effective, and the incomplete variants all improve HBA to different degrees in both exploration and exploitation. Overall, applying all strategies gives a better convergence effect than any of the ablation variants, which further proves the effectiveness of the added strategies.

The experimental results show that these four strategies have a certain effect on improving the performance of HBA, especially the quantum local search and dynamic Laplace strategy introduced.

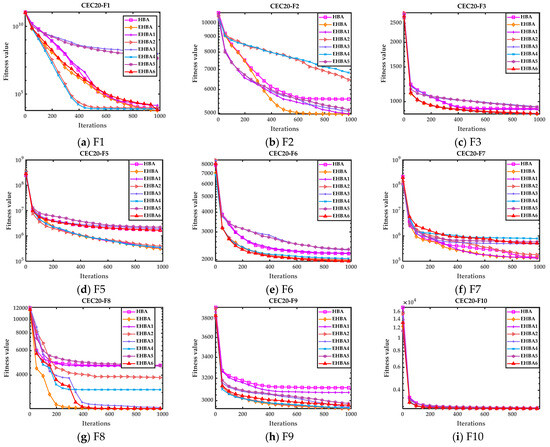

4.2.2. Comparison Experiment between Other HBA Variant Algorithms and EHBA

The proposed EHBA is also compared with other HBA variants to verify its performance. Two recently improved variants were selected, LHBA [40] and SaCHBA_PDN [41]. To save space, only the convergence curves on CEC2020 are presented in Figure 6.

Figure 6.

Convergence curves of other HBA variant algorithms and EHBA on CEC2020.

Figure 6 shows that for function F2, although EHBA does not converge as fast as the two variants LHBA and SaCHBA_PDN in the early iterations, its speed and accuracy are better than theirs in the later stage. For the other test functions in Figure 6, the convergence accuracy and speed of the improved algorithm in this paper are significantly better than those of the other variants, which further indicates that the strategies introduced in this paper significantly improve the original algorithm and give EHBA high convergence efficiency.

4.2.3. Comparison Experiments of EHBA and Other Intelligent Algorithms

Similarly, Table 5 presents the comparison results of EHBA and the other eight methods on the CEC2020 test set [51], which contains 10 functions. Here, the bold data in the table represent the best average values among all the compared algorithms. In addition, Table 6 lists the Wilcoxon rank sum test results of the eight comparative methods at a significance level of 0.05.

Table 5.

Comparison results of EHBA and other methods on CEC 2020 test sets.

Table 6.

Wilcoxon rank sum test values of each comparison algorithm on CEC2020 test set.

From Table 5, we can observe that the average rank of EHBA is 1.4, the best among all compared algorithms, so its overall solution results are better. Observing the data in bold, EHBA achieved the best values on 80% of the test functions, which were distributed across the various function types. In contrast, HBA and GWO each achieved the smallest value on one function. Therefore, EHBA obtained the smallest value far more often than the other comparison algorithms. This shows that the local quantum search and dynamic Laplacian crossover strategies have improved the search capability of the HBA in seeking the best solution: EHBA finds superior solutions with a higher convergence speed than the original algorithm.

In Table 6, many p-values are identical, so for ease of reading “—” represents the common value 6.7956 × 10^−8. Combined with Table 5, the final results show that the numbers of functions on which each comparison method is superior/similar/inferior to EHBA are 1/5/4, 0/2/8, 0/0/10, 0/0/10, 0/1/9, 0/1/9, 3/3/4, and 0/1/9, respectively. HBA, the best of the comparison algorithms, outperforms EHBA on F5 and is inferior to EHBA on four test functions. GWO, the second best, outperforms EHBA on three test functions and is inferior to EHBA on four test functions. Overall, on the ten test functions, the performance of EHBA is superior to that of the other eight comparative algorithms, and the experimental results show that the proposed algorithm can effectively solve the CEC2020 problems.

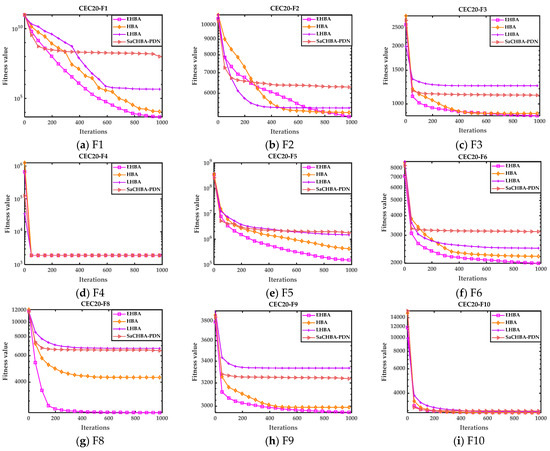

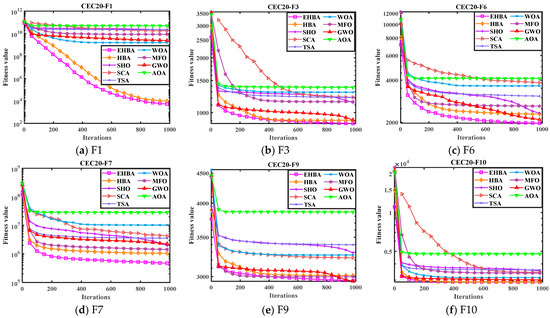

As for CEC2017, the convergence curves and box plots are provided in Figure 7 and Figure 8. The convergence curves indicate that EHBA has significantly improved convergence accuracy and speed compared to the other algorithms. The iteration curve of EHBA escapes local solutions in the early stages of iteration and approaches a near-optimal solution, and it stays close to the optimal solution in the later exploitation phase. Specifically, the convergence curve of EHBA shows more significant convergence effects on F1, F6, and F7. Therefore, on the CEC2020 test functions, the proposed strategies mainly improve the convergence speed of the algorithm, help it avoid local stagnation in the optimization process, and strengthen its exploration and exploitation capabilities. Overall, EHBA obtains competitive convergence results, and its overall convergence is better than that of the other comparative algorithms.

Figure 7.

Convergence curves of EHBA and other algorithms on CEC2020 partial test functions.

Figure 8.

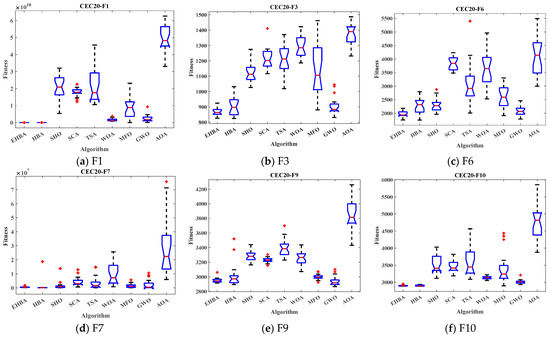

Box plots of EHBA and other algorithms on CEC2020 partial test functions.

Figure 8 shows that the box plots of the test functions are in line with Table 5. The red median lines of EHBA and HBA are the lowest, with EHBA being more pronounced. The narrow interquartile range of the results obtained by EHBA indicates that its solutions are more concentrated than those of the other methods, and there are few outliers, further demonstrating the stability of the EHBA due to the improved strategies. Overall, EHBA is a competitive algorithm that deserves further exploration in practical engineering applications.

4.3. Experiment and Analysis on the CEC2022 Test Set

In the same way, Table 7 presents the results of EHBA and the other algorithms on the CEC2022 test set [52], which contains 12 functions. Here, the bold data in the table represent the best average values among all the compared algorithms. Table 8 shows the Wilcoxon rank sum test results of the eight comparative algorithms at a significance level of 0.05.

Table 7.

Comparison results of EHBA and other methods on CEC2022 test set.

From Table 7, the average rank of EHBA is 1.25, the top ranking, so its overall solution results are better. Observing the bold data, EHBA achieved the smallest values on 83% of the test functions, which were distributed across the various function types. In contrast, HBA and GWO achieved the smallest values on F11 and F4, respectively. Therefore, EHBA obtained the smallest value far more often than the other comparison algorithms. This shows that the local quantum search and dynamic Laplacian crossover strategies have improved the search capability of the HBA in seeking the best solution: EHBA finds superior solutions with a higher convergence speed than the original algorithm.

In Table 8, many p-values are identical, so for ease of reading “—” represents the common value 6.79562 × 10^−8. Combined with Table 7, the final results show that the numbers of functions on which each comparison method is superior/similar/inferior to EHBA are 1/4/7, 0/0/12, 0/0/12, 0/0/12, 0/0/12, 1/1/10, 0/2/10, and 0/1/11, respectively. HBA, the best of the comparison algorithms, outperforms EHBA on one test function and is inferior to EHBA on seven test functions. MFO, the second best, outperforms EHBA on one test function and is inferior to EHBA on ten test functions. Overall, on the 12 test functions, the performance of EHBA is superior to that of the other 8 comparative algorithms, and the experimental results show that the proposed algorithm can effectively solve the CEC2022 problems.

Table 8.

Wilcoxon rank sum test values of each comparison algorithm on CEC2022 test set.

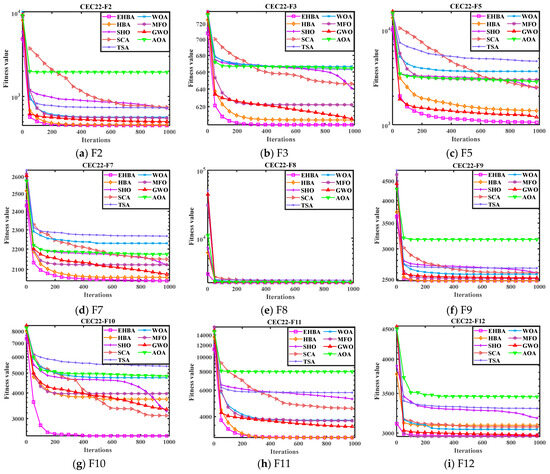

As for CEC2020, Figure 9 provides the convergence curves of EHBA compared to the other meta-heuristics. The curves indicate that EHBA has significantly improved convergence accuracy and speed compared to the other algorithms. The iteration curve of EHBA avoids local solutions in the early stages of iteration and converges to an approximately optimal solution, and it stays close to the optimal solution in the subsequent exploitation phase. Specifically, the convergence curve of EHBA shows more significant convergence effects on F3, F5, and F10. Therefore, on the CEC2022 test functions, the proposed strategies mainly improve the convergence speed of the algorithm, help it avoid local stagnation in the optimization process, and strengthen its exploration and exploitation capabilities.

Figure 9.

Convergence curves of EHBA and other algorithms on CEC2022 partial test functions.

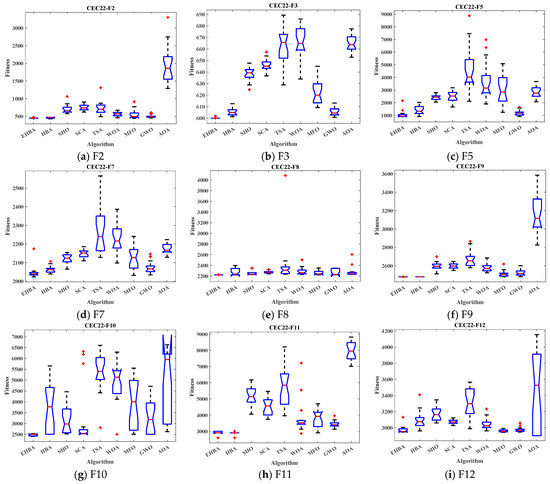

The box plots of the test functions in Figure 10 are compact, which indicates strong data consistency. The red median lines of EHBA and HBA are the lowest, with EHBA being more pronounced. The narrow interquartile range of the results obtained by EHBA indicates that its solutions are more tightly distributed. Moreover, there are few outliers, which is further evidence of the stability of the EHBA. EHBA has a thinner box than the other algorithms, which indicates the improved performance over the HBA due to the incorporation of the improved strategies. Overall, EHBA is a competitive algorithm that deserves further exploration in practical engineering applications.

Figure 10.

Box plots of EHBA and other algorithms on CEC2022 partial test functions.

In summary, we can see that the EHBA has good convergence, stability, and effectiveness, which provides a solid foundation for solving practical problems.

5. The Application of EHBA in Engineering Design Issues

To verify its ability to solve practical problems, the EHBA was used to solve three practical engineering design problems. Before using each algorithm to solve practical engineering optimization problems, a penalty function [53] was used to transform the constrained problem into an unconstrained problem.
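The penalty-function transformation can be sketched in Python as follows. The exact penalty form of reference [53] is not given in this section, so the sketch assumes a static quadratic penalty on constraints written as g_i(x) ≤ 0; the function name and the penalty coefficient are illustrative.

```python
def penalized(objective, constraints, penalty=1e6):
    """Wrap a constrained minimization problem with constraints g_i(x) <= 0
    as an unconstrained one (assumed static quadratic penalty)."""
    def wrapped(x):
        # Only violated constraints (g_i(x) > 0) contribute to the penalty.
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty * violation
    return wrapped
```

For instance, minimizing x² subject to x ≥ 1 can be written as `penalized(lambda x: x[0] ** 2, [lambda x: 1.0 - x[0]])`; feasible points keep their original objective value, while infeasible points are heavily penalized.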

5.1. Welding Beam Design Issues

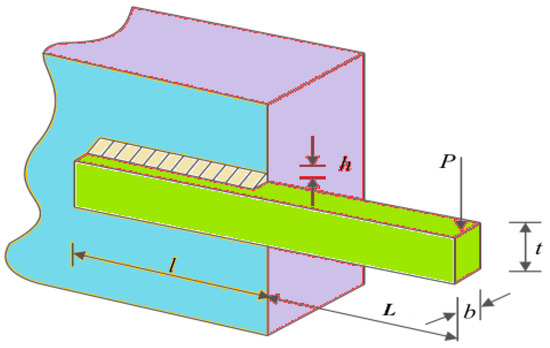

Designing welded beams with the lowest manufacturing cost is an effective way to achieve green manufacturing [26]; the schematic view is shown in Figure 11. The thickness (b), length (l), and height (t) of the electrode and the weld thickness (h) are the four optimization variables. A load is imposed on the top of the reinforcement, which leads to seven constraints, as detailed in Equation (24). With these four variables collected in a design vector, the mathematical model is expressed in Equation (23). The meanings of the relevant variables can be found in reference [26].

Figure 11.

Schematic view of welded beam problem.

The constraint conditions are listed in Equation (24).

The range of variable values is given below, in Equation (25).

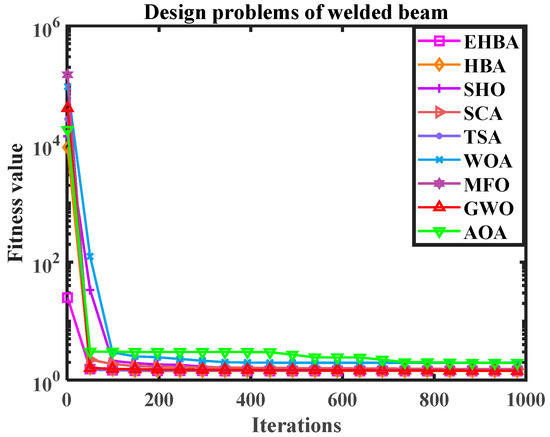

EHBA, HBA, SHO, SCA, TSA, WOA, MFO, GWO, and AOA are used to solve the welded beam design problem. Table 9 shows the mean, standard deviation, worst, and best values over 20 independent runs. Table 10 summarizes the best solution generated by each of the above algorithms. The convergence curves of the algorithms are provided in Figure 12, where the vertical axis is the logarithm of the numerical results; they indicate the efficiency of the EHBA developed in this paper.

Table 9.

Statistical results of the welded beam problem.

Table 10.

Optimal results of the welded beam problem.

Figure 12.

The convergence curve diagram (Design problems of welded beam).

From the data in the tables, the objective fitness values acquired by MFO and HBA are the same and smaller, meaning that they have high solving precision. Observing the bold data, it is clear that EHBA performs well on the four indicators, with small best values, worst values, average values, and standard deviations. Therefore, overall, EHBA has high accuracy in solving this problem and its results are relatively stable.

5.2. Vehicle Side Impact Design Issues

The goal of the car side impact design problem is to minimize the weight of the car. According to the mathematical model of car side impact established in reference [54], this problem has 11 design variables; the mathematical expression of the objective is given below.

The constraint conditions that the objective function needs to meet are shown in Equation (27).

The range of variable values is as follows in Equation (28).

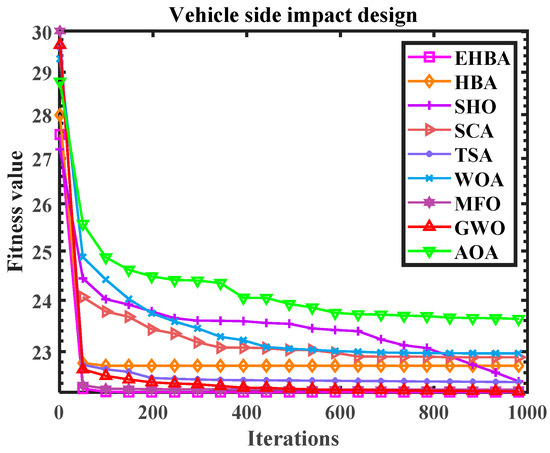

EHBA was implemented to deal with this case and the calculation values were compared with those of HBA, SHO, SCA, TSA, WOA, MFO, GWO, and AOA. Table 11 shows the best values and corresponding variable values for solving the car side impact design problem.

Table 11.

Optimal results of side impact design problems for cars.

The objective fitness values obtained by MFO, HBA, and EHBA are the same and smaller, indicating that they have high solving accuracy. In addition, Table 12 presents the statistical results over 20 runs. The bold data in the table represent the best values among all algorithms under each evaluation indicator. Observing the bold data, EHBA achieves good results under all four indicators, with small best values, worst values, average values, and standard deviations. Therefore, EHBA has high accuracy and is relatively stable in solving this case. The convergence curves of the above methods are provided in Figure 13, where the vertical axis is the logarithm of the numerical solution; they add further evidence of the efficiency of the EHBA developed in this paper.

Table 12.

Statistical results of vehicle side impact design issues.

Figure 13.

The convergence curve diagram (Vehicle side impact design).

5.3. Parameter Estimation of Frequency Modulated (FM) Sound Waves

Finding the optimal combination of the six parameters of a frequency modulation synthesizer is the central issue in the problem of frequency modulated sound waves [55]. This is a multi-modal problem. Here, the objective is to minimize the sum of squared errors between the estimated sound wave and the target sound wave. With the six parameters collected in a vector, the mathematical description of the problem is given in Equation (29).

where

In Equation (30), o(t) and o0(t) are the estimated sound wave and the target sound wave, respectively.

The range of variable values is defined with Equation (31).
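Since Equations (29)–(31) are not reproduced in this text, the following Python sketch uses the widely used benchmark form of the FM sound wave problem as an assumption: three nested sine terms with θ = 2π/100, a target wave with parameters (1.0, 5.0, −1.5, 4.8, 2.0, 4.9), and the squared error summed over t = 0, …, 100. The constant and function names are illustrative, and the paper's exact formulation may differ.

```python
import math

THETA = 2 * math.pi / 100
# Target parameters (a1, w1, a2, w2, a3, w3) of the common benchmark (assumed).
TARGET = (1.0, 5.0, -1.5, 4.8, 2.0, 4.9)

def fm_wave(params, t):
    """Nested frequency-modulated wave evaluated at time step t."""
    a1, w1, a2, w2, a3, w3 = params
    inner = a3 * math.sin(w3 * t * THETA)
    middle = a2 * math.sin(w2 * t * THETA + inner)
    return a1 * math.sin(w1 * t * THETA + middle)

def fm_error(params):
    """Sum of squared errors between the estimated and target waves."""
    return sum((fm_wave(params, t) - fm_wave(TARGET, t)) ** 2
               for t in range(101))
```

By construction `fm_error(TARGET)` is zero, which is the global minimum of this assumed formulation; any other parameter vector gives a non-negative error.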

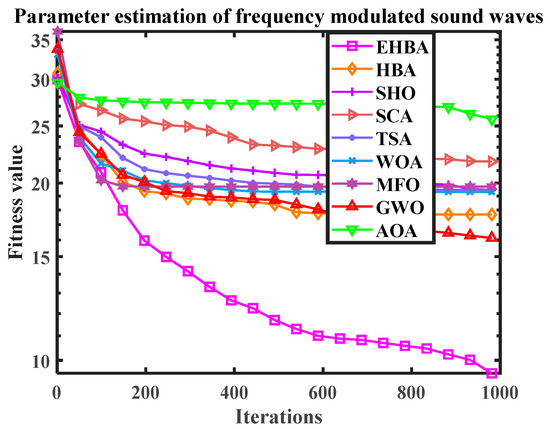

EHBA is applied to this parameter estimation problem, and its results are compared with those of the original HBA, SHO, SCA, TSA, WOA, MFO, GWO, and AOA. Table 13 lists the best results obtained by all comparison methods. The result obtained by the EHBA is relatively small, indicating that the algorithm has high solving accuracy. In addition, Table 14 presents the statistical results of all methods over 20 runs. The bold data are the best values among the compared algorithms under each indicator (optimal value, worst value, average value, standard deviation). Observing the bold data, it can be seen that although EHBA has a large standard deviation, it obtains smaller average, optimal, and worst values. Therefore, overall, the solution quality of EHBA is relatively good. The convergence curves of the above methods are provided in Figure 14, where the vertical axis is the logarithm of the numerical results; they add further evidence of the efficiency of the EHBA developed in this paper.

Table 13.

Optimal results for parameter estimation of FM sound waves.

Table 14.

Statistical results of FM sound wave parameter estimation problem.

Figure 14.

The convergence curve diagram (Parameter estimation of frequency modulated sound waves).

6. Conclusions and Future Research

A multi-strategy fusion enhanced Honey Badger algorithm (EHBA) is proposed, based on dynamic opposite learning, differential mutation, and the selective use of local quantum search or dynamic Laplacian crossover operators, to address the issues of local optima and slow convergence speed in the HBA. The adoption of a dynamic opposite learning strategy broadens the search area of the population, enhances global search ability, and improves population diversity and the quality of solutions. The differential mutation operation not only enhances precision and exploration, but also secures the convergence rate of the HBA. Introducing a local quantum search strategy during the honey harvesting stage (development) enhances the local search capabilities and improves the population optimization precision. Alternatively, introducing dynamic Laplacian crossover operators can improve convergence speed, which reduces the probability of EHBA sinking into local optima to a certain extent. Through comparative experiments with other algorithms on the CEC2017, CEC2020, and CEC2022 test sets, along with three engineering examples, EHBA has been verified to have good solving performance compared with other intelligent algorithms and other HBA variants. From the convergence curves, box plots, and comparative analysis of the algorithm performance tests, it can be seen that, compared with the other eight comparative methods, EHBA has significantly improved optimization ability and convergence speed, and it is expected to prove useful in solving optimization problems.

Owing to its good performance, EHBA may in the future be applied to multi-objective problems in more scientific research areas, such as robot movement, missile trajectory, image segmentation, predictive modeling, feature selection [56], geometry optimization [57], and engineering design [58,59]. In addition, the original HBA can be further improved not only by adding different effective strategies, but also by integrating other excellent algorithms.

Author Contributions

Conceptualization, J.H.; methodology, J.H. and H.H.; software, J.H.; validation, J.H. and H.H.; formal analysis, H.H.; investigation, J.H. and H.H.; resources, H.H.; data curation, J.H.; writing—original draft, J.H. and H.H.; writing—review and editing, J.H. and H.H.; visualization, J.H.; supervision, H.H.; project administration, H.H.; funding acquisition, H.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received financial support from the National Natural Science Foundation of China (72072144, 71672144, 71372173, 70972053); Shaanxi soft Science Research Plan (2019KRZ007); Science and Technology Research and Development Program of Shaanxi Province (2021KRM183, 2017KRM059, 2017KRM057, 2014KRM282); Soft Science Research Program of Xi’an Science and Technology Bureau (21RKYJ0009); Fundamental Research Funds for the Central Universities, CHD (300102413501); Key R&D Program Project in Shaanxi Province (2021SF-458).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data generated or analyzed during the study are included in this published article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Jia, H.M.; Li, Y.; Sun, K.J. Simultaneous feature selection optimization based on hybrid sooty tern optimization algorithm and genetic algorithm. Acta Autom. Sin. 2022, 48, 15.

2. Jia, H.M.; Jiang, Z.C.; Li, Y. Simultaneous feature selection optimization based on improved bald eagle search algorithm. Control Decis. 2022, 37, 3.

3. Jia, H.M.; Jiang, Z.C.; Peng, X.X. Multi-threshold color image segmentation based on improved spotted hyena optimizer. Comput. Appl. Soft. 2020, 37, 261–267.

4. Zhang, F.Z.; He, Y.Z.; Liu, X.J.; Wang, Z.K. A novel discrete differential evolution algorithm for solving D{0-1} KP problem. J. Front. Comput. Sci. Technol. 2022, 16, 12.

5. Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

6. Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

7. Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

8. Faramarzi, A.; Heidarinejad, M.; Stephens, B.E.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

9. Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching-learning-based optimization: An optimization method for continuous non-linear large scale problems. Inf. Sci. 2012, 183, 1–15.

10. Moghdani, R.; Salimifard, K. Volleyball premier league algorithm. Appl. Soft Comput. 2018, 64, 161–185.

11. Abualigah, L.; Yousri, D.; Elaziz, M.A.; Ewees, A.A.; Al-qaness, M.A.A.; Gandomi, A. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

12. Lin, S.J.; Dong, C.; Chen, M.Z.; Zhang, F.; Chen, J.H. Summary of new group intelligent optimization algorithms. Comput. Eng. Appl. 2018, 54, 1–9.

13. Feng, W.T.; Song, K.K. An Enhanced Whale Optimization Algorithm. Comput. Simul. 2020, 37, 275–279, 357.

14. Chen, Y.; Chen, S. Research on Application of Dynamic Weighted Bat Algorithm in Image Segmentation. Comput. Eng. Appl. 2020, 56, 207–215.

15. Xue, B.; Zhang, M.; Browne, W.N.; Yao, X. A survey on evolutionary computation approaches to feature selection. IEEE Trans. Evol. Comput. 2016, 20, 606–626.

16. Gong, G.; Chiong, R.; Deng, Q.; Gong, X. A hybrid artificial bee colony algorithm for flexible job shop scheduling with worker flexibility. Int. J. Prod. Res. 2019, 58, 4406–4420.

17. Tharwat, A.; Elhoseny, M.; Hassanien, A.E.; Gabel, T.; Kumar, A. Intelligent Bézier curve-based path planning model using Chaotic Particle Swarm Optimization algorithm. Clust. Comput. 2019, 22 (Suppl. S2), 4745–4766.

18. Askarzadeh, A.; Rezazadeh, A. Artificial neural network training using a new efficient optimization algorithm. Appl. Soft Comput. 2013, 13, 1206–1213.

19. Irmak, B.; Karakoyun, M.; Gülcü, Ş. An improved butterfly optimization algorithm for training the feed-forward artificial neural networks. Soft Comput. 2023, 27, 3887–3905.

20. Ang, K.M.; Chow, C.E.; El-Kenawy, E.-S.M.; Abdelhamid, A.A.; Ibrahim, A.; Karim, F.K.; Khafaga, D.S.; Tiang, S.S.; Lim, W.H. A Modified Particle Swarm Optimization Algorithm for Optimizing Artificial Neural Network in Classification Tasks. Processes 2022, 10, 2579.

21. Ang, K.M.; Lim, W.H.; Tiang, S.S.; Ang, C.K.; Natarajan, E.; Ahamed Khan, M.K.A. Optimal Training of Feedforward Neural Networks Using Teaching-Learning-Based Optimization with Modified Learning Phases. In Proceedings of the 12th National Technical Seminar on Unmanned System Technology 2020. Lecture Notes in Electrical Engineering; Springer: Singapore, 2022; Volume 770.

22. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

23. Eberhart, R.; Kennedy, J. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 4, pp. 1942–1948.

24. Dorigo, M.; Maniezzo, V.; Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. B 1996, 26, 29–41.

25. Yang, X.S.; Gandomi, A.H. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483.

26. Gandomi, A.H.; Yang, X.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35.

27. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

28. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

29. Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

30. Zhao, S.; Zhang, T.; Ma, S.; Wang, M. Sea-horse optimizer: A novel nature-inspired meta-heuristic for global optimization problems. Appl. Intell. 2022, 53, 11833–11860.

31. Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

32. Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2015, 96, 120–133.

33. Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate swarm algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intel. 2020, 90, 103541.

34. Naruei, I.; Keynia, F. Wild horse optimizer: A new meta-heuristic algorithm for solving engineering optimization problems. Eng. Comput. 2021, 38, 3025–3056.

35. Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

36. Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

37. Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey badger algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simulat. 2021, 192, 84–110.

38. Akdağ, O. A Developed Honey Badger Optimization Algorithm for Tackling Optimal Power Flow Problem. Electr. Power Compon. Syst. 2022, 50, 331–348.

39. Han, E.; Ghadimi, N. Model identification of proton-exchange membrane fuel cells based on a hybrid convolutional neural network and extreme learning machine optimized by improved honey badger algorithm. Sustain. Energy Technol. Assess. 2022, 52, 102005.

40. Zhong, J.Y.; Yuan, X.G.; Du, B.; Hu, G.; Zhao, C.Y. An Lévy Flight Based Honey Badger Algorithm for Robot Gripper Problem. In Proceedings of the 7th International Conference on Image, Vision and Computing (ICIVC), Xi’an, China, 26–28 July 2022; pp. 901–905.

41. Hu, G.; Zhong, J.Y.; Wei, G. SaCHBA_PDN: Modified honey badger algorithm with multi-strategy for UAV path planning. Expert Syst. Appl. 2023, 223, 119941.

42. Kapner, D.; Cook, T.; Adelberger, E.; Gundlach, J.; Heckel, B.R.; Hoyle, C.; Swanson, H. Tests of the gravitational inverse-square law below the dark-energy length scale. Phys. Rev. Lett. 2007, 98, 021101.

43. Jia, H.M.; Liu, Q.G.; Liu, Y.X.; Wang, S.; Wu, D. Hybrid Aquila and Harris hawks optimization algorithm with dynamic opposition-based learning. CAAI Trans. Intell. Syst. 2023, 18, 104–116.

44. Hua, Y.; Sui, X.; Zhou, S.; Chen, Q.; Gu, G.; Bai, H.; Li, W. A novel method of global optimization for wavefront shaping based on the differential evolution algorithm. Opt. Commun. 2021, 481, 126541.

45. Li, X.; Wang, L.; Jiang, Q.; Li, N. Differential evolution algorithm with multi-population cooperation and multi-strategy integration. Neurocomputing 2021, 421, 285–302.

46. Cheng, J.; Pan, Z.; Liang, H.; Gao, Z.; Gao, J. Differential evolution algorithm with fitness and diversity ranking-based mutation operator. Swarm Evol. Comput. 2021, 61, 100816.

47. Xu, C.H.; Luo, Z.H.; Wu, G.H.; Liu, B. Grey wolf optimization algorithm based on sine factor and quantum local search. Comput. Eng. Appl. 2021, 57, 83–89.

48. Deep, K.; Bansal, J.C. Optimization of directional over current relay times using Laplace Crossover Particle Swarm Optimization (LXPSO). In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 288–293.

49. Wan, Y.G.; Li, X.; Guan, L.Z. Improved Whale Optimization Algorithm for Solving High-dimensional Optimization Problems. J. Front. Comput. Sci. Technol. 2021, 112, 107854.

50. Awad, N.H.; Ali, M.Z.; Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC2017 Special Session and Competition on Single Objective Bound Constrained Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2016.

51. Yue, C.T.; Price, K.V.; Suganthan, P.N.; Liang, J.J.; Ali, M.Z.; Qu, B.Y.; Awad, N.H.; Biswas, P.P. Problem Definitions and Evaluation Criteria for the CEC2020 Special Session and Competition on Single Objective Bound Constrained Numerical Optimization; Technical Report; Computational Intelligence Laboratory, Zhengzhou University, Zhengzhou China and Technical Report; Nanyang Technological University: Singapore; Glasgow, UK, 2020.

52. Yazdani, D.; Branke, J.; Omidvar, M.N.; Li, X.; Li, C.; Mavrovouniotis, M.; Nguyen, T.T.; Yang, S.; Yao, X. IEEE CEC 2022 Competition on Dynamic Optimization Problems Generated by Generalized Moving Peaks Benchmark. arXiv 2021, arXiv:2106.06174.

53. Wu, L.H.; Wang, Y.N.; Zhou, S.W.; Yuan, X.F. Differential evolution for nonlinear constrained optimization using non-stationary multi-stage assignment penalty function. Syst. Eng. Theory Pract. 2007, 27, 128–133.

54. Youn, B.D.; Choi, K.K.; Yang, R.J.; Gu, L. Reliability-based design optimization for crash worthiness of vehicle side impact. Struct. Multidiscip. Optim. 2004, 26, 272–283.

55. Gothania, B.; Mathur, G.; Yadav, R.P. Accelerated artificial bee colony algorithm for parameter estimation of frequency-modulated sound waves. Int. J. Electron. Commun. Eng. 2014, 7, 63–74.

56. Hu, G.; Du, B.; Wang, X.F.; Wei, G. An enhanced black widow optimization algorithm for feature selection. Knowl.-Based Syst. 2022, 235, 107638.

57. Zheng, J.; Ji, X.; Ma, Z.; Hu, G. Construction of Local-Shape-Controlled Quartic Generalized Said-Ball Model. Mathematics 2023, 11, 2369.

58. Hu, G.; Guo, Y.X.; Wei, G.; Abualigah, L. Genghis Khan shark optimizer: A novel nature-inspired algorithm for engineering optimization. Adv. Eng. Inform. 2023, 58, 102210.

59. Hu, G.; Zheng, Y.X.; Abualigah, L.; Hussien, A.G. DETDO: An adaptive hybrid dandelion optimizer for engineering optimization. Adv. Eng. Inform. 2023, 57, 102004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).