Abstract

The growing complexity of problems in engineering, energy, and geology poses substantial challenges for decision makers and calls for efficient solution methods in real-world production. The water flow optimizer (WFO) is an advanced metaheuristic algorithm proposed in 2021, but it can still become trapped in local optima. To adapt WFO more effectively to specific domains and solve optimization problems more efficiently, this paper introduces an enhanced water flow optimizer (CCWFO) that integrates a crisscross search strategy to improve the convergence speed and accuracy of the algorithm. Comparative experiments on the CEC2017 benchmarks show that CCWFO has better global optimization capability than other metaheuristic algorithms. CCWFO is then applied to the production optimization of a three-channel reservoir model and compared with several classical evolutionary algorithms. The experimental results show that CCWFO achieves a higher net present value (NPV) within the same limited number of evaluations, making it a compelling alternative for reservoir production optimization.

1. Introduction

In engineering, energy production, and other industries, decision makers face increasingly complex challenges [1,2,3], and the need for effective optimization strategies has grown accordingly. Optimized solutions are essential because of the interplay of factors such as resource scarcity, economic constraints, and technological advances [4]. From global optimization [5] to oil reservoir production [6], efficient solution methods are indispensable.

Optimization methods play a central role in tackling these challenges. They can be broadly divided into deterministic methods and metaheuristic algorithms [7]. Deterministic methods, characterized by a structured and precise approach, offer advantages in convergence and reliability; examples include conjugate gradient methods [8], linear programming [9], interior point methods [10], and simplex methods [11]. However, they usually require the problem to be convex, differentiable, or continuous, and they struggle with complex high-dimensional problems. Metaheuristic algorithms, on the other hand, place almost no requirements on the properties of the problem and offer a different perspective on optimization owing to their adaptive and stochastic nature. Nevertheless, their high computational cost and lack of guaranteed convergence remain notable challenges.

Two prominent classes of metaheuristic algorithms are swarm intelligence algorithms (SIs) and evolutionary algorithms (EAs). SIs and EAs share a similar structure: a set of solutions is first randomly initialized, new offspring are then generated with a given update strategy, and finally the solutions of the next generation are chosen with a specific selection strategy. This process is repeated until the maximum number of iterations is reached. SIs are mainly inspired by the collective behavior of biological populations; classical examples include the Particle Swarm Optimizer (PSO) [12] and Ant Colony Optimizer (ACO) [13], and more recent ones include the Harris Hawks Optimizer (HHO) [14], Grey Wolf Optimizer (GWO) [15], artificial bee colony (ABC) algorithm [16], Hunger Games Search (HGS) [17], Slime Mould Algorithm (SMA) [18], Runge–Kutta optimizer (RUN) [19], and weighted mean of vectors algorithm (INFO) [20]. EAs mainly simulate the evolutionary process of natural selection [12] and survival of the fittest, and they typically involve three operators: crossover, mutation, and selection. Classical EAs include the Genetic Algorithm (GA) [21], Genetic Programming (GP) [22], Spherical Evolution (SE) [23], and differential evolution (DE) [24].

The No Free Lunch (NFL) theorem [25] further underscores the difficulty of problem solving across diverse industrial settings: it states that no optimization algorithm is universally superior, so the performance of any algorithm depends on the characteristics of the problem at hand. Consequently, algorithms tailored to specific industrial challenges are needed, and the limitations of existing algorithms motivate continued algorithmic improvement.

In the field of oil reservoir development, creating an effective production scheme is essential for efficient recovery and sustained production. Optimizing the injection and production processes in oil reservoirs involves considering various dynamic factors, such as reservoir heterogeneity, fluid properties, and operational constraints. Striking an optimal balance in injection rates and production strategies is vital for maximizing hydrocarbon recovery and minimizing operational costs [26]. The inherent complexities of this domain call for innovative approaches to address the multifaceted challenges posed by the dynamic nature of oil reservoirs.

Numerous scholars have worked on improving the optimization of oil injection and production. Foroud et al. [27] applied eight different optimization algorithms to oil and gas production optimization in the Brugge field; the results show that Guided Pattern Search (GPS) was the most effective, yielding the highest NPV with the fewest evaluations. Ying et al. [28] proposed a multi-fidelity migrated differential evolution algorithm (MTDE), which exchanges and migrates information between results at different fidelity levels to accelerate convergence and improve the quality of the optimal solutions. The method was validated on the Egg model and two real field cases, and the results show that MTDE converges faster and yields higher-quality well control strategies than single-fidelity and greedy multi-fidelity methods. Zhang et al. [29] proposed a two-model differential evolution algorithm (CSDE) for constrained waterflooding optimization, which uses a support vector machine to model the boundary of the feasible region and a radial basis function (RBF) to approximate the objective function. In a case study, CSDE handled the constraints effectively and obtained a higher NPV than other single-model algorithms. Desbordes et al. [30] proposed a transfer learning-based optimization framework for dynamic production optimization problems. The framework was integrated into three well-known multi-objective evolutionary algorithms, the Non-dominated Sorting Genetic Algorithm II (NSGA-II), Multi-Objective Particle Swarm Optimization (MOPSO), and the decomposition-based multi-objective evolutionary algorithm (MODE), as well as into PSO. Tested on 12 benchmark functions and a real field case, the method effectively reduced the number of calls to the numerical simulator and achieved a better NPV than the original algorithms. There are many similar studies, but they usually focus on constructing the surrogate model and neglect the choice of the optimization framework (DE or PSO is usually chosen). However, an optimizer adapted to the specific task is crucial for achieving better optimization results.

The water flow optimizer (WFO) is a nature-inspired evolutionary algorithm introduced in 2021 [31]. Its convergence was proved using limit theory, and it has been successfully applied to spacecraft trajectory optimization. Chen et al. [32] proposed an improved water flow optimizer combined with a refined maximally similar path localization algorithm (IWFO-IMSP) for precise localization in wireless sensor networks. Integrating strategies such as Halton sequences and Cauchy mutation significantly improves the convergence speed and search capability of WFO, and IWFO-IMSP achieves considerably higher localization accuracy than four traditional methods. Xue et al. [33] proposed an enhanced WFO that adaptively tunes the hyperparameters of a theory-guided neural network. An adversarial learning technique improves the quality of the initial population, and a nonlinear convergence factor added to the laminar flow operator improves population diversity. This framework performs well in solving stochastic partial differential equations. In another study, Fagner et al. [34] proposed a binary variant of WFO that outperforms other classical dimensionality reduction methods. These studies indicate that, despite the strong optimization performance of WFO, further enhancements are needed to tailor it to specific problem domains.

In this paper, we propose CCWFO, an enhanced WFO that introduces the crisscross (CC) mechanism to strengthen information exchange among individuals and enrich population diversity. This significantly improves the global optimization capability of the original algorithm and makes it effectively applicable to oil production optimization.

The main contributions of this paper are as follows:

- An enhanced WFO algorithm is proposed by introducing the CC mechanism.

- The performance of CCWFO is verified in detail through comparison experiments with 10 other conventional and state-of-the-art optimization algorithms on the CEC2017 benchmark functions, and the results are further analyzed with the Wilcoxon signed-rank test and the Friedman test.

- The proposed algorithm is applied to production optimization of a three-channel reservoir model.

The structure of the paper is as follows: Section 1 introduces the background of this research and motivation, briefly performs a literature review, and concludes with a summary of the main contributions of this paper. Section 2 briefly describes the original WFO algorithm. Section 3 describes the CC mechanism and presents the proposed CCWFO algorithm in detail. Section 4 describes the flow, results, and analysis of the global optimization experiments. Section 5 presents an example of the application of CCWFO in a three-channel reservoir. Section 6 summarizes the whole paper.

2. Overview of the Original WFO

WFO, a swarm intelligence (SI) algorithm proposed by Prof. Kaiping Luo in 2021 [31], draws inspiration from the two distinctive types of water flow found in nature: laminar and turbulent. In nature, water flows from high to low places, which resembles the search for the optimum in an optimization problem. In WFO, each water particle represents a candidate solution, the particle's position gives the values of the decision variables, and the potential energy of the water corresponds to the fitness value of the objective function. The algorithm models laminar and turbulent flow mathematically and finds the optimal solution through continuous iteration. The two operators are described as follows:

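1. Laminar Operator: In laminar flow, all particles move parallel to each other in the same direction, but at different speeds because of their surroundings. The rule of motion is given by Equation (1):

$$
\mathbf{y}_i^{t} = \mathbf{x}_i^{t} + s\,\mathbf{d}, \qquad i = 1, 2, \ldots, N \qquad (1)
$$

where $t$ is the current iteration number, $N$ is the population size, $\mathbf{x}_i^{t}$ is the position of the $i$-th particle at the $t$-th iteration, $\mathbf{y}_i^{t}$ is the candidate position of the $i$-th particle at the $t$-th iteration, $s$ is a random number between 0 and 1, and the vector $\mathbf{d}$ represents the common direction of movement of all individuals at the current iteration. $\mathbf{d}$ is defined in Equation (2):

$$
\mathbf{d} = \mathbf{x}_{best}^{t} - \mathbf{x}_{k}^{t} \qquad (2)
$$

where $\mathbf{x}_{best}^{t}$ is the best particle at iteration $t$ and $k$ is a randomly selected particle index with $k \neq best$.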

In the laminar flow operator, individuals in the population use a regular parallel unidirectional search, where the same direction vector d ensures that the search is unidirectional, and the randomness of s ensures that different individuals have different move steps.
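As a minimal sketch (Python with NumPy, not the authors' MATLAB code), the laminar operator can be written as below. It assumes that Equation (1) has the form y_i = x_i + s·d with one step length s ∈ [0, 1) per particle, and that Equation (2) sets d = x_best − x_k for a random k ≠ best, as in Luo's original WFO. The function name `laminar_flow` is hypothetical, and boundary handling is omitted.

```python
import numpy as np

def laminar_flow(X, fitness, rng):
    """Sketch of the WFO laminar operator, Eqs. (1)-(2); boundary handling omitted.

    X       : (N, D) array, row i is x_i^t
    fitness : (N,) array of objective values (minimisation)
    rng     : numpy.random.Generator
    Returns the (N, D) array of candidate positions y_i^t.
    """
    N = X.shape[0]
    best = int(np.argmin(fitness))
    # Random particle k different from the best one (assumes N >= 2).
    k = int(rng.integers(N - 1))
    if k >= best:
        k += 1
    d = X[best] - X[k]          # common direction shared by all particles, Eq. (2)
    s = rng.random((N, 1))      # one random step length s per particle
    return X + s * d            # y_i = x_i + s * d, Eq. (1)


rng = np.random.default_rng(42)
X = rng.uniform(-5.0, 5.0, size=(6, 3))
fitness = np.sum(X**2, axis=1)  # sphere function as a toy objective
Y = laminar_flow(X, fitness, rng)
```

Because every particle moves along the same vector d, all displacement rows of Y − X point in the same direction; only their lengths differ, which is exactly the "parallel, unidirectional" behavior described above.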

2. Turbulent Operator: In turbulent flow, water particles are disturbed by obstacles and exhibit irregular rotational movements. The candidate position of the $i$-th particle is generated by changing one randomly selected dimension through Equation (3) (the iteration index $t$ is omitted for brevity):

$$
y_i^{j_1} =
\begin{cases}
x_i^{j_1} + \left|x_k^{j_1} - x_i^{j_1}\right| \theta \cos\theta, & r < p_e \\[4pt]
lb^{j_1} + \left(ub^{j_1} - lb^{j_1}\right) \dfrac{x_k^{j_2} - lb^{j_2}}{ub^{j_2} - lb^{j_2}}, & \text{otherwise}
\end{cases}
\qquad (3)
$$

The upper branch of Equation (3) describes the eddying (vortex) motion of water particles within the same layer, and the lower branch describes the general cross-layer movement of particles. Here, $j_1$ denotes a dimension randomly selected from the particle, $j_2$ denotes a randomly selected dimension different from $j_1$, and $x_k^{j}$ denotes the value of the $j$-th dimension of a randomly chosen particle $k \neq i$ at the $t$-th iteration. $\theta$ is a random number in the range $[-\pi, \pi]$, $ub^{j}$ and $lb^{j}$ denote the upper and lower bounds of dimension $j$, respectively, $r$ is a random number in the range $[0, 1]$, and $p_e$ is a control parameter called the eddying (vortex) probability.
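The turbulent operator can be sketched in the same style, assuming Equation (3) follows Luo's original WFO: with probability p_e the chosen dimension j1 performs an eddying move around a partner particle k, and otherwise the value of dimension j2 of particle k is rescaled into the range of dimension j1. The names `turbulent_flow` and `_other` are hypothetical, and clipping the eddying result to the bounds is an assumption of this sketch.

```python
import numpy as np

def _other(rng, n, exclude):
    """Random integer in [0, n) different from `exclude` (assumes n >= 2)."""
    k = int(rng.integers(n - 1))
    return k + 1 if k >= exclude else k

def turbulent_flow(X, lb, ub, p_e, rng):
    """Sketch of the WFO turbulent operator, Eq. (3).

    Each particle changes exactly one randomly chosen dimension j1.
    """
    N, D = X.shape
    Y = X.copy()
    for i in range(N):
        k = _other(rng, N, i)              # partner particle k != i
        j1 = int(rng.integers(D))          # dimension to be changed
        if rng.random() < p_e:
            # Eddying flow within the same layer (upper branch of Eq. (3)).
            theta = rng.uniform(-np.pi, np.pi)
            v = X[i, j1] + abs(X[k, j1] - X[i, j1]) * theta * np.cos(theta)
            Y[i, j1] = np.clip(v, lb[j1], ub[j1])
        else:
            # Over-layer move: map dimension j2 of particle k into the range of j1.
            j2 = _other(rng, D, j1)
            Y[i, j1] = lb[j1] + (ub[j1] - lb[j1]) * (X[k, j2] - lb[j2]) / (ub[j2] - lb[j2])
    return Y


rng = np.random.default_rng(1)
lb, ub = np.full(4, -10.0), np.full(4, 10.0)
X = rng.uniform(lb, ub, size=(8, 4))
Y = turbulent_flow(X, lb, ub, p_e=0.7, rng=rng)
```

Unlike the laminar operator, each call perturbs a single coordinate per particle, which gives the algorithm its irregular, dimension-wise exploration.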

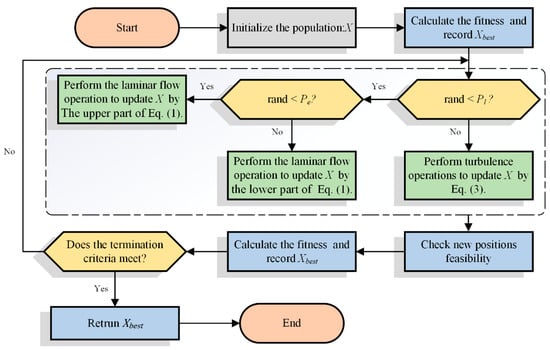

During the run, the algorithm stochastically simulates the two behaviors, laminar and turbulent flow, and the choice between them is controlled by the laminar probability $p_l$: laminar flow is performed when a random number in $[0, 1]$ is less than $p_l$, and turbulent flow is performed otherwise. All generated solutions are evaluated, each new particle is compared with its parent, and the particle with the better fitness value is retained. The iteration is repeated until the termination condition is met; the flowchart of WFO is shown in Figure 1.

Figure 1.

Flowchart of WFO.

3. Proposed CCWFO

3.1. Crisscross Strategy

The crisscross mechanism is inspired by the crisscross optimization algorithm (CSO) proposed by Meng et al. in 2014 [35]. It incorporates two operations, horizontal crossover search (HCS) and vertical crossover search (VCS): HCS exchanges information between different particles in the same dimension, and VCS exchanges information between different dimensions of the same particle. The core idea of the crisscross strategy is to generate new particles by exchanging information between randomly paired particles or dimensions; the fitter of each offspring and its parent is retained in the population. This mechanism exhibits strong global search capability. Shan et al. [36] enhanced CSA by integrating the crisscross strategy and a combined mutation strategy, where the crisscross strategy effectively helped the population escape local optima. Hu et al. [37] introduced the crisscross strategy into SCA and showed experimentally that it accelerated global convergence, improved population diversity, and helped particles escape local optima.

In this study, we introduce the crisscross mechanism into WFO to diversify its search pattern and enrich population diversity. The information exchange between particles accelerates convergence and improves the algorithm's ability to escape local optima. HCS and VCS are described as follows.

3.1.1. Horizontal Crossover Search

HCS performs a crossover between the same dimension of two randomly paired particles, which makes fuller use of population information, refines the search process, and improves the global exploration capability of the algorithm. HCS is defined by Equations (4) and (5):

$$
MS_{hc}(i,d) = r_1 X(i,d) + (1 - r_1) X(j,d) + c_1 \left(X(i,d) - X(j,d)\right) \qquad (4)
$$

$$
MS_{hc}(j,d) = r_2 X(j,d) + (1 - r_2) X(i,d) + c_2 \left(X(j,d) - X(i,d)\right) \qquad (5)
$$

where $r_1$ and $r_2$ are random numbers within the range [0, 1], $c_1$ and $c_2$ are random numbers within the range [−1, 1], $X(i,d)$ is the value of the $d$-th dimension of the $i$-th particle of population $X$, and $X(j,d)$ is the value of the $d$-th dimension of the $j$-th particle of population $X$. $MS_{hc}(i,d)$ and $MS_{hc}(j,d)$ are the new offspring of the two particles generated by the HCS operation. After the HCS operation, the new offspring compete with their parent particles, and the particles with better fitness are retained. The pseudo-code for HCS is shown in Algorithm 1.

| Algorithm 1 Horizontal crossover search |

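| Bhc = randperm(N); For i = 1 to N/2: no1 = Bhc(2i − 1); no2 = Bhc(2i); For d = 1 to D: generate four random numbers r1, r2 ∈ (0, 1) and c1, c2 ∈ (−1, 1); generate MShc(no1, d) and MShc(no2, d) by Equations (4) and (5); End For; End For; For i = 1 to N: IF f(MShc(i)) < f(X(i)) then X(i) = MShc(i); End IF; End For |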
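Algorithm 1 can be sketched as follows, assuming minimization and drawing r1, r2, c1, c2 independently for every dimension, as the inner loop of Algorithm 1 indicates. The name `horizontal_crossover` is hypothetical, and clipping offspring to [lb, ub] (the c terms can push them outside) is an assumption of this sketch rather than part of the stated method.

```python
import numpy as np

def horizontal_crossover(X, fobj, lb, ub, rng):
    """Sketch of horizontal crossover search (Eqs. (4)-(5), Algorithm 1).

    Particles are paired at random; each pair produces two offspring dimension by
    dimension, and an offspring replaces its parent only if it is fitter.
    """
    N, D = X.shape
    MS = X.copy()
    perm = rng.permutation(N)                      # Bhc = randperm(N)
    for a, b in zip(perm[0::2], perm[1::2]):       # N // 2 random pairs
        r1, r2 = rng.random(D), rng.random(D)      # in [0, 1)
        c1, c2 = rng.uniform(-1, 1, D), rng.uniform(-1, 1, D)
        MS[a] = r1 * X[a] + (1 - r1) * X[b] + c1 * (X[a] - X[b])   # Eq. (4)
        MS[b] = r2 * X[b] + (1 - r2) * X[a] + c2 * (X[b] - X[a])   # Eq. (5)
    MS = np.clip(MS, lb, ub)
    f_old = np.array([fobj(x) for x in X])
    f_new = np.array([fobj(x) for x in MS])
    better = f_new < f_old                         # greedy competition with parents
    X_next = X.copy()
    X_next[better] = MS[better]
    return X_next


def sphere(x):
    return float(np.sum(x**2))

rng = np.random.default_rng(0)
X = rng.uniform(-10, 10, size=(10, 5))
X_next = horizontal_crossover(X, sphere, -10.0, 10.0, rng)
```

The greedy replacement means HCS can never worsen any individual, so it adds exploration without sacrificing the quality already reached by WFO.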

3.1.2. Vertical Crossover Search

The VCS operation is performed for each particle by randomly selecting a pair of dimensions and crossing them to obtain a new particle. As with HCS, after the VCS operation the offspring particles compete with their parents, and the better ones are retained. VCS is defined by Equation (6):

$$
MS_{vc}(i,d_1) = r\,X(i,d_1) + (1 - r)\,X(i,d_2) \qquad (6)
$$

where $r$ is a random number within the range [0, 1], $X(i,d_1)$ and $X(i,d_2)$ are the values of the two dimensions $d_1$ and $d_2$ randomly selected for the $i$-th individual, and $MS_{vc}(i,d_1)$ is the new value of dimension $d_1$ of the $i$-th particle generated from these two dimensions. In Algorithm 2, $P_{vc}$ denotes the vertical crossover probability. The pseudo-code for VCS is shown in Algorithm 2.

| Algorithm 2 Vertical crossover search |

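| Bvc = randperm(D); generate a random number p ∈ (0, 1); For i = 1 to D/2: IF p < P_vc: no1 = Bvc(2i − 1); no2 = Bvc(2i); For j = 1 to N: generate a random number r ∈ (0, 1); generate MSvc(j, no1) by Equation (6); End For; End IF; End For; For i = 1 to N: IF f(MSvc(i)) < f(X(i)) then X(i) = MSvc(i); End IF; End For |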
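A sketch of Algorithm 2 under the following assumptions: the random number p is drawn once per call (as the pseudo-code places it outside the loop), p_vc = 0.8 is a hypothetical default since its value is not stated here, and no normalization is applied, so all dimensions are assumed to share the same bounds (as in CEC2017). The name `vertical_crossover` is hypothetical.

```python
import numpy as np

def vertical_crossover(X, fobj, rng, p_vc=0.8):
    """Sketch of vertical crossover search (Eq. (6), Algorithm 2).

    Dimensions are paired at random; dimension d1 of every particle is replaced by
    a random convex combination of its own dimensions d1 and d2, followed by
    greedy selection against the parents.
    """
    N, D = X.shape
    MS = X.copy()
    if rng.random() < p_vc:                        # single draw of p, as in Algorithm 2
        perm = rng.permutation(D)                  # Bvc = randperm(D)
        for d1, d2 in zip(perm[0::2], perm[1::2]):
            r = rng.random(N)                      # one r per particle
            MS[:, d1] = r * X[:, d1] + (1 - r) * X[:, d2]   # Eq. (6)
    f_old = np.array([fobj(x) for x in X])
    f_new = np.array([fobj(x) for x in MS])
    better = f_new < f_old
    X_next = X.copy()
    X_next[better] = MS[better]
    return X_next


rng = np.random.default_rng(3)
X = rng.uniform(-10, 10, size=(10, 6))
X_next = vertical_crossover(X, lambda x: float(np.sum(np.abs(x))), rng, p_vc=1.0)
```

Because Eq. (6) is a convex combination, a dimension that is stuck (for example, one lagging behind in a local optimum) can borrow information from another dimension of the same particle without leaving the common search range.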

3.2. The Proposed CCWFO

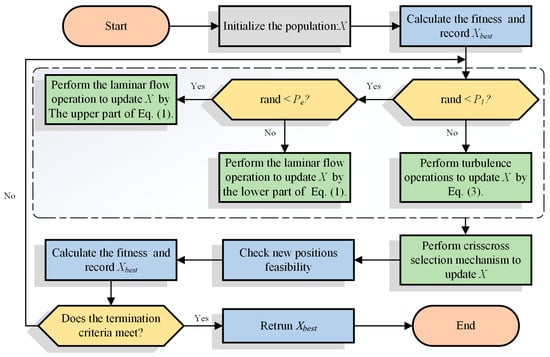

In this subsection, the specific workflow of CCWFO is described. Firstly, CCWFO initializes the initial population of the algorithm and the required parameters, after which the algorithm sequentially updates the particles in the population according to the laminar and turbulent operations of the original WFO. At the end of the update strategy for laminar and turbulent flow, the algorithm will execute the CC strategy to enhance the information exchange between the population particles through HCS and VCS operations to explore the search space in more detail. Finally, this process will be iterated until the termination condition of the algorithm is reached and the current globally optimal particle is finally returned. The flowchart of the algorithm is shown in Figure 2.

Figure 2.

Flowchart of CCWFO.

The pseudo-code of CCWFO is given in Algorithm 3.

| Algorithm 3 Pseudo-code of CCWFO |

| Set parameters: the maximum iteration number T, the problem dimension D, and the population size N; initialize population X; t = 1; For i = 1 to N: evaluate the fitness value of X_i; End For; find the global best X_best; While (t ≤ T): IF rand < p_l: For i = 1 to N /* Laminar flow */: generate Y_i by Equations (1) and (2); evaluate the fitness value of Y_i; update X_i and X_best; End For; Else: For i = 1 to N /* Turbulent flow */: generate Y_i by Equation (3); evaluate the fitness value of Y_i; update X_i and X_best; End For; End IF; /* CC */ perform horizontal crossover search to update X; perform vertical crossover search to update X; update X_best; t = t + 1; End While; Return X_best |
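Putting Algorithm 3 together, a compact, self-contained sketch might look as follows. It is an illustration under stated assumptions, not the authors' implementation: the defaults p_l = 0.3, p_e = 0.7, p_vc = 0.8 and the seed are hypothetical, candidates are clipped to the bounds, VCS is applied without normalization (so all dimensions are assumed to share the same range), and the function name `ccwfo` is our own.

```python
import numpy as np

def ccwfo(fobj, lb, ub, N=20, T=100, p_l=0.3, p_e=0.7, p_vc=0.8, seed=0):
    """Minimal CCWFO sketch: one WFO step (laminar or turbulent), then HCS and VCS."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, float), np.asarray(ub, float)
    D = lb.size
    X = rng.uniform(lb, ub, size=(N, D))
    f = np.array([fobj(x) for x in X])

    def other(n, exclude):                 # random index in [0, n) other than `exclude`
        k = int(rng.integers(n - 1))
        return k + 1 if k >= exclude else k

    def greedy(Y):                         # keep a candidate only if it beats its parent
        Y = np.clip(Y, lb, ub)
        fy = np.array([fobj(y) for y in Y])
        mask = fy < f
        X[mask] = Y[mask]
        f[mask] = fy[mask]

    for _ in range(T):
        if rng.random() < p_l:             # laminar flow, Eqs. (1)-(2)
            b = int(np.argmin(f))
            d = X[b] - X[other(N, b)]
            Y = X + rng.random((N, 1)) * d
        else:                              # turbulent flow, Eq. (3)
            Y = X.copy()
            for i in range(N):
                k, j1 = other(N, i), int(rng.integers(D))
                if rng.random() < p_e:
                    th = rng.uniform(-np.pi, np.pi)
                    Y[i, j1] = X[i, j1] + abs(X[k, j1] - X[i, j1]) * th * np.cos(th)
                else:
                    j2 = other(D, j1)
                    Y[i, j1] = lb[j1] + (ub[j1] - lb[j1]) * (X[k, j2] - lb[j2]) / (ub[j2] - lb[j2])
        greedy(Y)

        # CC strategy, part 1: horizontal crossover search, Eqs. (4)-(5).
        Y = X.copy()
        perm = rng.permutation(N)
        for a, c in zip(perm[0::2], perm[1::2]):
            r1, r2 = rng.random(D), rng.random(D)
            c1, c2 = rng.uniform(-1, 1, D), rng.uniform(-1, 1, D)
            Y[a] = r1 * X[a] + (1 - r1) * X[c] + c1 * (X[a] - X[c])
            Y[c] = r2 * X[c] + (1 - r2) * X[a] + c2 * (X[c] - X[a])
        greedy(Y)

        # CC strategy, part 2: vertical crossover search, Eq. (6).
        if rng.random() < p_vc:
            Y = X.copy()
            perm = rng.permutation(D)
            for d1, d2 in zip(perm[0::2], perm[1::2]):
                r = rng.random(N)
                Y[:, d1] = r * X[:, d1] + (1 - r) * X[:, d2]
            greedy(Y)

    b = int(np.argmin(f))
    return X[b].copy(), float(f[b])


x_best, f_best = ccwfo(lambda x: float(np.sum(x**2)), lb=[-10] * 5, ub=[10] * 5, N=20, T=200)
```

This sketch counts iterations rather than function evaluations; a budget-based stop (as used when comparing algorithms at equal evaluation counts) would require tracking the number of `fobj` calls.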

The time complexity of CCWFO can be decomposed into four stages: population initialization, the laminar operator, the turbulent operator, and the CC strategy. The parameters that dominate the time complexity are the dimensionality (D), the total number of iterations (T), and the population size (N). Initialization costs O(N × D). In each iteration, the laminar or turbulent operator costs at most O(N × D), and the CC strategy also costs O(N × D), because HCS processes N/2 particle pairs over D dimensions and VCS processes D/2 dimension pairs over N particles. Consequently, excluding the cost of fitness evaluations, the time complexity of CCWFO is O(CCWFO) = O(initialization) + O(WFO) + O(CC) ≈ O(N × D) + O(T × N × D) + O(T × N × D) ≈ O(T × N × D).

4. Global Optimization Experimental Results and Analysis

This section evaluates the proposed CCWFO from a global optimization perspective using different types of benchmark functions. All algorithms were tested under the same conditions on a standard benchmark suite. The experiments were run on a computer with an Intel Xeon Silver 4110 CPU and 128 GB RAM running Windows 10, and all algorithms were implemented in MATLAB R2020b. The common parameter settings for all algorithms are listed in Table 1.

Table 1.

Main parameters of the test experiment.

4.1. Benchmark Function

This subsection briefly introduces the 29 benchmark functions of the IEEE Congress on Evolutionary Computation (CEC) 2017 test suite [38]. Function F2 has been officially excluded because of its unstable behavior. The 29 functions are grouped into four types, namely unimodal, multimodal, hybrid, and composition functions, which ensures a comprehensive and fair assessment. A brief description of CEC2017 is given in Table 2.

Table 2.

CEC2017 benchmark functions.

4.2. Performance Comparison with Other Algorithms

In this subsection, the comparative results of CCWFO and 10 other algorithms on the CEC 2017 benchmark are presented. These 10 algorithms encompass a mix of classical metaheuristics and advanced algorithms that have emerged in recent years. Specifically, the algorithms considered are WFO [31], SMA [18], WOA [39], PSO [12], GWO [15], MFO [40], BMWOA [41], RCBA [42], SCADE [43], and OBSCA [44]. The hyperparameters associated with each algorithm are presented in Table 3.

Table 3.

Hyperparameters of the compared algorithms.

The experimental results obtained by CCWFO and the other algorithms on each CEC2017 benchmark function are given in Table 4, where 'Rank' denotes the Friedman test rank of the algorithm, 'AVG' denotes the average ranking obtained by the algorithm over all CEC2017 functions, and '+/−/=' indicates the number of functions on which CCWFO is better than, worse than, or equal to the compared algorithm.

Table 4.

Experimental results of CCWFO and other algorithms on CEC2017.

Table 4 shows that the average ranking of CCWFO on the benchmark functions is 1.3793, the best among all competitors, indicating that CCWFO has a significant advantage over the other algorithms. CCWFO obtained the global optimum in all 30 runs on F3 and F6 and came close to the global optimum on F5, F7, F8, F9, F11, F14, F15, F18, F19, and F20, which shows that it produces stable results. Among the compared algorithms, WFO performs closest to CCWFO, but it is still worse than the proposed algorithm on 14 functions.
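The AVG value is a mean Friedman rank over the benchmark functions. A small sketch of that computation is given below, assuming a table of mean errors with one row per function and one column per algorithm (lower is better) and the usual convention that tied algorithms share the mean of their ranks. The function name `average_ranks` and the example numbers are hypothetical.

```python
import numpy as np

def average_ranks(errors):
    """Mean Friedman rank of each algorithm.

    errors : (n_functions, n_algorithms) array of mean errors, lower is better.
    Within each function, algorithms are ranked 1..k; ties share the mean rank.
    """
    errors = np.asarray(errors, float)
    ranks = np.empty_like(errors)
    for row_idx, row in enumerate(errors):
        order = np.argsort(row, kind="stable")
        r = np.empty(len(row))
        r[order] = np.arange(1, len(row) + 1)
        for v in np.unique(row):              # average the ranks of tied values
            tie = row == v
            r[tie] = r[tie].mean()
        ranks[row_idx] = r
    return ranks.mean(axis=0)


# Hypothetical mean errors of three algorithms on three functions.
errors = [[1.0e2, 3.5e2, 2.0e3],
          [5.0e1, 4.0e1, 9.0e1],
          [0.0,   0.0,   1.2e1]]
avg = average_ranks(errors)   # average ranks 1.5, 1.5 and 3.0
```

With 29 functions, an average rank of 1.3793 corresponds to a rank sum of 40, that is, first place on most functions.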

Table 5 reinforces the findings of Table 4. In the Wilcoxon signed-rank test, p < 0.05 means that the null hypothesis of no difference can be rejected, that is, the performance of CCWFO differs significantly from that of the compared algorithm. Table 5 shows that p < 0.05 on most functions, which provides strong evidence that CCWFO significantly outperforms the other algorithms on these benchmarks.

Table 5.

The p-values of CCWFO versus other algorithms on CEC2017.

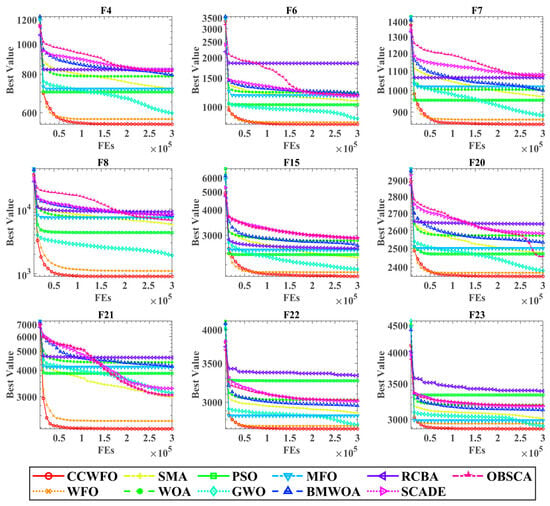

Figure 3 displays the convergence curves of all algorithms on selected functions. The horizontal axis is the number of function evaluations, and the vertical axis is the best fitness value found so far. The legend at the bottom of Figure 3 identifies the algorithms. The red curve (CCWFO) remains below the other curves on all function types, indicating that CCWFO escapes local optima and finds better solutions than the other algorithms. In conclusion, the CC strategy effectively improves the search performance of WFO and gives it a significant advantage over the other algorithms on the benchmark.

Figure 3.

Convergence curves of CCWFO on benchmarks with other algorithms.

5. Application to Oilfield Production

The objective of reservoir production optimization is to determine the optimal control settings of each well so as to maximize NPV. Because the number of optimization variables grows with the number of wells and control periods, the number of candidate schemes explodes combinatorially. The problem can therefore be regarded as a typical NP-hard problem, which motivates the use of evolutionary algorithms. In this section, CCWFO is coupled with the reservoir numerical simulator Eclipse 2010.1, applied to a three-channel reservoir model, and compared with several classical evolutionary algorithms.

In this study, the nonlinear constraints of oilfield production are disregarded, and the net present value (NPV) is taken as the objective function to be maximized, as defined in Equation (7):

$$
\mathrm{NPV}(\mathbf{x}, \mathbf{z}) = \sum_{t=1}^{n_t} \frac{\left(r_o Q_{o,t} - r_w Q_{w,t} - r_{wi} Q_{wi,t}\right)\Delta t_t}{(1+b)^{p_t}} \qquad (7)
$$

where $\mathbf{x}$ is the vector of variables to be optimized (in this experiment, the injection and production rates of each well); $\mathbf{z}$ is the vector of state parameters describing the numerical reservoir model; $n_t$ is the total number of time steps in the simulation; $Q_{o,t}$, $Q_{w,t}$, and $Q_{wi,t}$ are the oil production rate, water production rate, and water injection rate, respectively, at time step $t$; $\Delta t_t$ is the length of time step $t$; $r_o$ is the oil revenue; $r_w$ and $r_{wi}$ are the costs of treating and injecting water, respectively; $b$ is the average annual interest rate; and $p_t$ is the number of years elapsed.
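A minimal sketch of Equation (7) is given below. It assumes that the rates are field totals summed over all wells (STB/day), that each step length is given in days, and that p_t is the elapsed time in years at the end of step t (with a hypothetical 365-day year). The default prices are the values used for the three-channel model; the function name `npv` is hypothetical.

```python
def npv(q_oil, q_water, q_inj, dt, r_o=80.0, r_w=5.0, r_wi=5.0, b=0.0,
        days_per_year=365.0):
    """Sketch of Eq. (7).

    q_oil, q_water, q_inj : field oil production, water production and water
        injection rates per time step (STB/day)
    dt : length of each time step (days)
    r_o, r_w, r_wi : oil price, water-treatment cost, water-injection cost (USD/STB)
    b  : average annual interest rate
    """
    total, elapsed = 0.0, 0.0
    for qo, qw, qi, h in zip(q_oil, q_water, q_inj, dt):
        elapsed += h                                       # days since start
        cash_flow = (r_o * qo - r_w * qw - r_wi * qi) * h  # USD earned in this step
        total += cash_flow / (1.0 + b) ** (elapsed / days_per_year)
    return total


# One 360-day step: 100 STB/day oil, 50 STB/day water, 200 STB/day injected.
value = npv([100.0], [50.0], [200.0], [360.0])   # (8000 - 250 - 1000) * 360 = 2,430,000 USD
```

With b = 0, as assumed later for the three-channel model, the discount factor is 1 and the NPV reduces to the undiscounted cumulative cash flow.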

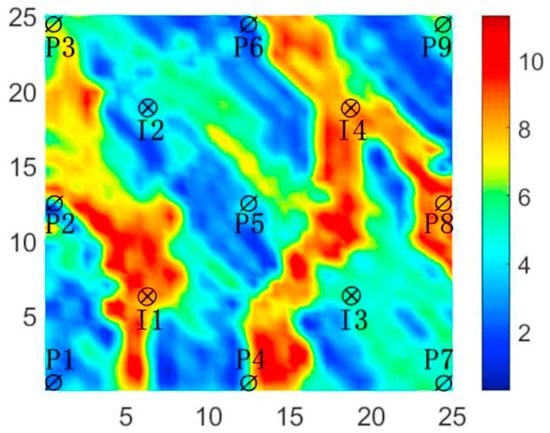

5.1. Three-Channel Model

The three-channel reservoir model is a typical heterogeneous two-dimensional reservoir with four injection wells and nine production wells arranged in a five-spot pattern. The model is discretized into 25 × 25 × 1 grid blocks, each 100 ft long and 20 ft thick, and all grid blocks have a porosity of 0.2. The physical properties of the reservoir are summarized in Table 6, and the permeability distribution of the model is shown in Figure 4.

Table 6.

Properties of three-channel model.

Figure 4.

Log-permeability distribution of the three-channel model.

In this production optimization problem, the optimization variables are the water injection rate of each injection well and the liquid production rate of each production well. Injection rates range from 0 to 500 STB/day, and production rates range from 0 to 200 STB/day. The production period is 1800 days, and the control time step is 360 days, giving 1800/360 = 5 control steps. Consequently, the decision vector has (4 + 9) × 5 = 65 dimensions.
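The dimension count and bounds can be made concrete with the short sketch below. The layout of the decision vector (all injector rates first, then all producer rates, each well's control steps stored contiguously) is an assumption for illustration, and the helper `decode` is hypothetical.

```python
import numpy as np

N_INJ, N_PROD = 4, 9            # injection and production wells in the three-channel model
HORIZON, STEP = 1800, 360       # production period and control time step (days)
n_steps = HORIZON // STEP       # 5 control steps
dim = (N_INJ + N_PROD) * n_steps

# Bounds of the decision vector (assumed layout: injectors first, then producers).
lb = np.zeros(dim)
ub = np.concatenate([np.full(N_INJ * n_steps, 500.0),    # water injection rate, STB/day
                     np.full(N_PROD * n_steps, 200.0)])  # liquid production rate, STB/day

def decode(x):
    """Split a flat decision vector into (well, control step) schedules."""
    x = np.asarray(x, float)
    inj = x[:N_INJ * n_steps].reshape(N_INJ, n_steps)
    prod = x[N_INJ * n_steps:].reshape(N_PROD, n_steps)
    return inj, prod

inj, prod = decode(ub)
print(dim, inj.shape, prod.shape)   # 65 (4, 5) (9, 5)
```

The `lb` and `ub` vectors are what an optimizer such as CCWFO would use as box constraints, and `decode` shows how one candidate maps back to per-well schedules before being passed to the simulator.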

The fitness function for this optimization problem is the NPV, with the following economic parameters: the oil price is 80.0 USD/STB, the water injection cost is 5.0 USD/STB, and the water treatment cost is also 5.0 USD/STB. To simplify the model, the average annual interest rate is assumed to be 0%.

5.2. Analysis and Discussion of Experimental Results

To demonstrate the effectiveness of the enhancements, we compare the optimization results of CCWFO with those of several classical evolutionary algorithms, namely WFO, GWO, MFO, SMA, WOA, and PSO, using the settings in Table 3. To ensure fairness, each algorithm was run five times, and the final NPV values were averaged.

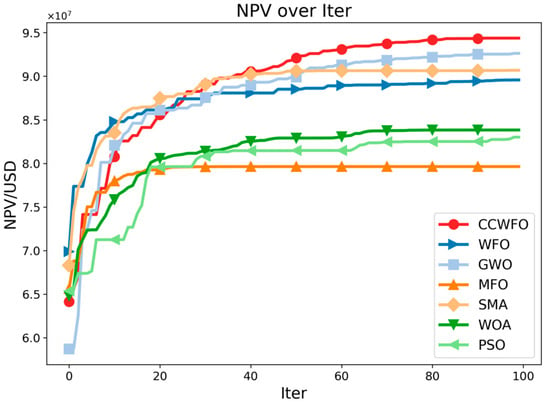

Figure 5 shows the best NPV obtained by each algorithm as a function of the number of iterations, with the red line representing CCWFO. CCWFO clearly outperforms the other algorithms, consistently achieving higher NPV values within the same number of iterations.

Figure 5.

NPV obtained by the algorithms with iteration.

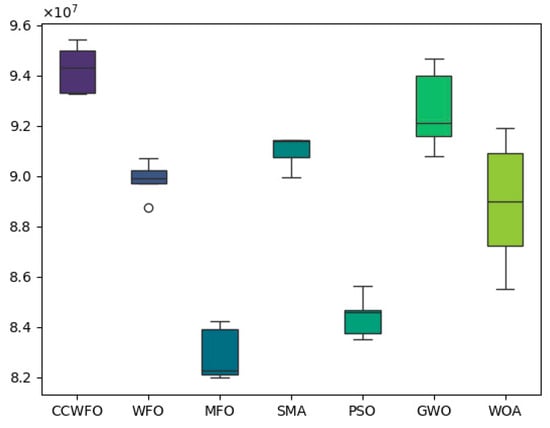

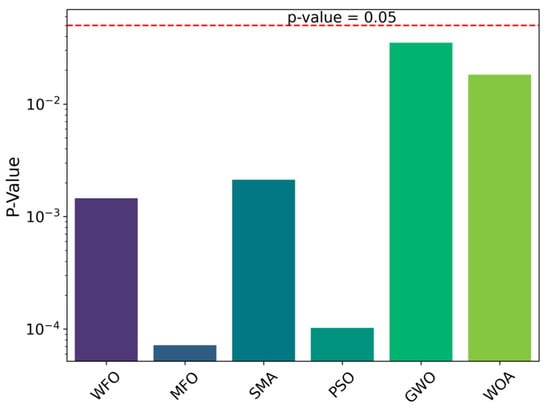

Figure 6 compares, in box plots, the best NPV values obtained by CCWFO and the other algorithms over five runs; CCWFO obtains a higher NPV than the other algorithms. Figure 7 shows that the p-values of CCWFO versus each of the other algorithms are below 0.05, which shows that CCWFO has a statistically significant advantage over the six classical algorithms.

Figure 6.

CCWFO vs. other algorithms: boxplot of best NPV values across 5 experiments.

Figure 7.

p-Value comparison between CCWFO and other algorithms.

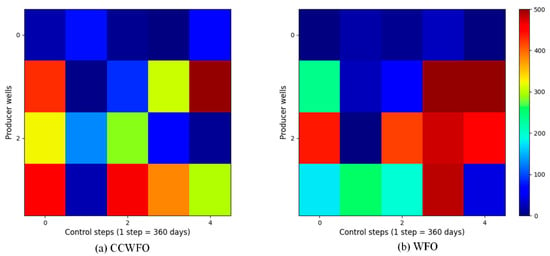

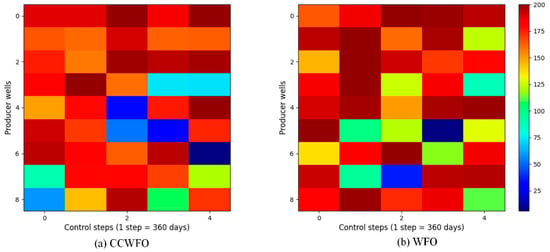

For reasons of space, only the well control schemes of CCWFO and WFO are shown; Figure 8 and Figure 9 illustrate the final optimized water injection rates and liquid production rates obtained by CCWFO and WFO. The horizontal axis represents the control time step, and the vertical axis represents the well number.

Figure 8.

The optimal water-injection rate obtained by each algorithm for the three-channel model.

Figure 9.

The optimal liquid-production rate obtained by each algorithm for the three-channel model.

Figure 8b displays the injection well schedule obtained by WFO. The injection rate of the same well changes sharply between adjacent control steps, resulting in an unstable scheme that is difficult to implement in the field. In addition, such fluctuating injection rates can cause excessive changes in bottomhole pressure, potentially damaging the reservoir and hindering sustainable development.

On the other hand, CCWFO, as shown in Figure 8a, yields a smoother production scheme compared to WFO. This smoother scheme is more favorable for implementation in the field, as it minimizes abrupt changes in injection rates and reduces the potential for negative impacts on the reservoir.

Overall, CCWFO generates more stable and smoother production schemes than WFO.
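The smoothness compared above is judged visually from Figures 8 and 9. One simple way to quantify it, offered here only as an illustrative assumption rather than a metric used in this study, is the mean absolute rate change between adjacent control steps; the function name `mean_step_change` and the example schedules are hypothetical.

```python
import numpy as np

def mean_step_change(schedule):
    """Mean absolute rate change between adjacent control steps.

    schedule : (n_wells, n_steps) array of rates (STB/day). Smaller values
    indicate a smoother, easier-to-implement scheme.
    """
    s = np.asarray(schedule, float)
    return float(np.abs(np.diff(s, axis=1)).mean())


smooth = [[300, 310, 320], [100, 100, 90]]   # small step-to-step adjustments
jumpy = [[0, 500, 0], [200, 0, 200]]         # large swings between steps
print(mean_step_change(smooth), mean_step_change(jumpy))   # 7.5 350.0
```

Applied to the injection schedules of Figure 8, such a number would allow the visual comparison between CCWFO and WFO to be stated quantitatively.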

6. Conclusions

In this study, we propose the CCWFO optimizer by combining the CC mechanism with the WFO algorithm. The CC strategy enhances population diversity by promoting information exchange among individuals, which improves global exploration capability. Comparative experiments on the CEC2017 benchmark functions show that CCWFO consistently outperforms 10 other metaheuristic algorithms, yielding higher-quality solutions across different types of functions.

Furthermore, CCWFO is applied to the production optimization problem of a three-channel reservoir model, using a numerical simulator as the evaluator. Compared with several classical evolutionary algorithms, CCWFO achieves a higher NPV within the same number of iterations. In addition, CCWFO generates smoother production schemes, which are easier to implement in field development.

In future research, we plan to develop further improved optimization methods and to integrate machine learning techniques closely with reservoir production scenarios, aiming to find effective surrogate model strategies for complex large-scale production optimization problems.

Author Contributions

Z.Z.: Conceptualization, Software, Data Curation, Investigation, Writing—Original Draft, Project Administration; S.L.: Methodology, Writing—Original Draft, Writing—Review and Editing, Validation, Formal Analysis, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

During the revision of this work, the authors used ChatGPT in order to enhance the English grammar and paraphrase some sentences. After using this tool/service, the authors reviewed and edited the content as needed, and they take full responsibility for the content of the publication.

Data Availability Statement

The numerical and experimental data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have influenced the work reported in this paper.

References

- Lin, A.; Wu, Q.; Heidari, A.A.; Xu, Y.; Chen, H.; Geng, W.; Li, C. Predicting intentions of students for master programs using a chaos-induced sine cosine-based fuzzy K-nearest neighbor classifier. IEEE Access 2019, 7, 67235–67248.

- Huang, H.; Heidari, A.A.; Xu, Y.; Wang, M.; Liang, G.; Chen, H.; Cai, X. Rationalized sine cosine optimization with efficient searching patterns. IEEE Access 2020, 8, 61471–61490.

- Zhu, W.; Li, Z.; Heidari, A.A.; Wang, S.; Chen, H.; Zhang, Y. An Enhanced RIME Optimizer with Horizontal and Vertical Crossover for Discriminating Microseismic and Blasting Signals in Deep Mines. Sensors 2023, 23, 8787.

- A vertical and horizontal crossover sine cosine algorithm with pattern search for optimal power flow in power systems. Energy 2023, 271, 127000.

- Lin, C.; Wang, P.; Heidari, A.A.; Zhao, X.; Chen, H. A Boosted Communicational Salp Swarm Algorithm: Performance Optimization and Comprehensive Analysis. J. Bionic Eng. 2023, 20, 1296–1332.

- Zhang, K.; Zhao, X.; Chen, G.; Zhao, M.; Wang, J.; Yao, C.; Sun, H.; Yao, J.; Wang, W.; Zhang, G. A double-model differential evolution for constrained waterflooding production optimization. J. Pet. Sci. Eng. 2021, 207, 109059.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Polyak, B.T. The conjugate gradient method in extremal problems. USSR Comput. Math. Math. Phys. 1969, 9, 94–112.

- Dantzig, G.B. Linear Programming. Oper. Res. 2002, 50, 42–47.

- Potra, F.A.; Wright, S.J. Interior-point methods. J. Comput. Appl. Math. 2000, 124, 281–302.

- Nelder, J.A.; Mead, R. A simplex method for function minimization. Comput. J. 1965, 7, 308–313.

- Poli, R.; Kennedy, J.; Blackwell, T. Particle swarm optimization. Swarm Intell. 2007, 1, 33–57.

- Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Karaboga, D.; Basturk, B. Artificial Bee Colony (ABC) Optimization Algorithm for Solving Constrained Optimization Problems. In Foundations of Fuzzy Logic and Soft Computing; Melin, P., Castillo, O., Aguilar, L.T., Kacprzyk, J., Pedrycz, W., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4529, pp. 789–798. ISBN 978-3-540-72917-4.

- Yang, Y.; Chen, H.; Heidari, A.A.; Gandomi, A.H. Hunger games search: Visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Syst. Appl. 2021, 177, 114864.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. Run beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 2021, 181, 115079.

- Ahmadianfar, I.; Heidari, A.A.; Noshadian, S.; Chen, H.; Gandomi, A.H. INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Syst. Appl. 2022, 195, 116516.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Tang, D. Spherical evolution for solving continuous optimization problems. Appl. Soft Comput. 2019, 81, 105499.

- Price, K.; Storn, R.M.; Lampinen, J.A. Differential Evolution: A Practical Approach to Global Optimization; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Golzari, A.; Haghighat Sefat, M.; Jamshidi, S. Development of an adaptive surrogate model for production optimization. J. Pet. Sci. Eng. 2015, 133, 677–688.

- Foroud, T.; Baradaran, A.; Seifi, A. A comparative evaluation of global search algorithms in black box optimization of oil production: A case study on Brugge field. J. Pet. Sci. Eng. 2018, 167, 131–151.

- Yin, F.; Xue, X.; Zhang, C.; Zhang, K.; Han, J.; Liu, B.; Wang, J.; Yao, J. Multifidelity Genetic Transfer: An Efficient Framework for Production Optimization. SPE J. 2021, 26, 1614–1635.

- Desbordes, J.K.; Zhang, K.; Xue, X.; Ma, X.; Luo, Q.; Huang, Z.; Hai, S.; Jun, Y. Dynamic production optimization based on transfer learning algorithms. J. Pet. Sci. Eng. 2022, 208, 109278.

- Luo, K. Water Flow Optimizer: A Nature-Inspired Evolutionary Algorithm for Global Optimization. IEEE Trans. Cybern. 2022, 52, 7753–7764.

- Cheng, M.-M.; Zhang, J.; Wang, D.-G.; Tan, W.; Yang, J. A Localization Algorithm Based on Improved Water Flow Optimizer and Max-Similarity Path for 3-D Heterogeneous Wireless Sensor Networks. IEEE Sens. J. 2023, 23, 13774–13788.

- Xue, X.; Gong, X.; Mańdziuk, J.; Yao, J.; El-Alfy, E.-S.M.; Wang, J. Theory-Guided Convolutional Neural Network with an Enhanced Water Flow Optimizer. In Proceedings of the Neural Information Processing, Changsha, China, 20–23 November 2023; Luo, B., Cheng, L., Wu, Z.-G., Li, H., Li, C., Eds.; Springer Nature: Singapore, 2024; pp. 448–461.

- de Matos Macêdo, F.J.; da Rocha Neto, A.R. A Binary Water Flow Optimizer Applied to Feature Selection. In Proceedings of the Intelligent Data Engineering and Automated Learning–IDEAL 2022, Manchester, UK, 24–26 November 2022; Yin, H., Camacho, D., Tino, P., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 94–103.

- Meng, A.; Chen, Y.; Yin, H.; Chen, S. Crisscross optimization algorithm and its application. Knowl.-Based Syst. 2014, 67, 218–229.

- Shan, W.; Hu, H.; Cai, Z.; Chen, H.; Liu, H.; Wang, M.; Teng, Y. Multi-strategies Boosted Mutative Crow Search Algorithm for Global Tasks: Cases of Continuous and Discrete Optimization. J. Bionic Eng. 2022, 19, 1830–1849.

- Hu, H.; Shan, W.; Tang, Y.; Heidari, A.A.; Chen, H.; Liu, H.; Wang, M.; Escorcia-Gutierrez, J.; Mansour, R.F.; Chen, J. Horizontal and vertical crossover of sine cosine algorithm with quick moves for optimization and feature selection. J. Comput. Des. Eng. 2022, 9, 2524–2555.

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; Technical Report; National University of Defense Technology: Changsha, China; Kyungpook National University: Daegu, Republic of Korea; Nanyang Technological University: Singapore, 2017.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Heidari, A.A.; Aljarah, I.; Faris, H.; Chen, H.; Luo, J.; Mirjalili, S. An enhanced associative learning-based exploratory whale optimizer for global optimization. Neural Comput. Appl. 2019, 32, 5185–5211.

- Liang, H.; Liu, Y.; Shen, Y.; Li, F.; Man, Y. A hybrid bat algorithm for economic dispatch with random wind power. IEEE Trans. Power Syst. 2018, 33, 5052–5061.

- Nenavath, H.; Jatoth, R.K. Hybridizing sine cosine algorithm with differential evolution for global optimization and object tracking. Appl. Soft Comput. 2018, 62, 1019–1043.

- Abd Elaziz, M.; Oliva, D.; Xiong, S. An improved opposition-based sine cosine algorithm for global optimization. Expert Syst. Appl. 2017, 90, 484–500.

- Chen, G.; Zhang, K.; Xue, X.; Zhang, L.; Yao, J.; Sun, H.; Fan, L.; Yang, Y. Surrogate-assisted evolutionary algorithm with dimensionality reduction method for water flooding production optimization. J. Pet. Sci. Eng. 2020, 185, 106633.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).