Abstract

Visual perception equips unmanned aerial vehicles (UAVs) with increasingly comprehensive and instant environmental perception, rendering it a crucial technology in intelligent UAV obstacle avoidance. However, the rapid movements of UAVs cause significant changes in the field of view, affecting the algorithms’ ability to extract the visual features of collisions accurately. As a result, algorithms suffer from a high rate of false alarms and a delay in warning time. During the study of visual field angle curves of different orders, it was found that the peak times of the curves of higher-order information on the angular size of looming objects are linearly related to the time to collision (TTC) and occur before collisions. This discovery implies that encoding higher-order information on the angular size could resolve the issue of response lag. Furthermore, the fact that the image of a looming object adjusts to meet several looming visual cues compared to the background interference implies that integrating various field-of-view characteristics will likely enhance the model’s resistance to motion interference. Therefore, this paper presents a concise A-LGMD model for detecting looming objects. The model is based on image angular acceleration and addresses problems related to imprecise feature extraction and insufficient time series modeling to enhance the model’s ability to rapidly and precisely detect looming objects during the rapid self-motion of UAVs. The model draws inspiration from the lobula giant movement detector (LGMD), which shows high sensitivity to acceleration information. In the proposed model, higher-order information on the angular size is abstracted by the network and fused with multiple visual field angle characteristics to promote the selective response to looming objects. Experiments carried out on synthetic and real-world datasets reveal that the model can efficiently detect the angular acceleration of an image, filter out insignificant background motion, and provide early warnings. These findings indicate that the model could have significant potential in embedded collision detection systems of micro or small UAVs.

1. Introduction

Real-time robust collision detection provides critical environment sensing and decision support for animal and robot behaviors and tasks. However, the current mainstream collision detection algorithms based on distance and image trends have proven inadequate for meeting UAVs’ low-power sensitive collision detection requirements. This limitation significantly impairs the performance and applicability of UAVs in tasks such as collision avoidance and navigation. For instance, the technique of utilizing laser [1], ultrasonic [2], and other sensors to measure the relative distance trend between the UAV and the obstacle to caution against collisions is inadequate in presenting adequate information about the obstacle’s position, shape, and boundary. This shortfall will impede the UAVs’s accuracy and flexibility in avoidance maneuvers. Methods that use visual sensing capture rich information about the natural world (including the obstacle shape, position, and boundary information) and combine it with deep learning [3] to analyze the relationship between the position of the calibrated obstacle in the pre-collision image and the TTC, as well as the relative distance, to warn of collisions. However, this method does not clarify the relationship between the looming result and the image. Its interpretability and environmental adaptability are poor, and it performs poorly in the face of new background environments and obstacles. On the other hand, if we can quickly extract the critical looming cues from image changes during looming to predict collisions like insects [4], combined with the rich object information given to us by vision, We then have the potential to create a low-power, real-time, robust looming detection algorithm.

A substantial amount of biobehavioral experiments have demonstrated that looming-sensitive neurons in insects [4,5,6], which are responsible for alerting them of potential collisions, can detect and react to various looming visual stimuli. It is thought that the neural computations that encode these diverse looming visual characteristics and generate avoidance commands are crucial for developing efficient looming perception in insects. LGMD neurons are particularly useful in constructing looming-sensing systems due to their maximum response to looming [4,7] and continuously increasing visual stimuli [8], their transient response to retreating objects [9], and their resistance to interference from random motion backgrounds [10]. However, the implementation of these bionic algorithms in fast-moving UAVs is impeded by changes in images caused by the UAV’s motion. This affects the model’s ability to accurately extract collision visual features, resulting in a high false alarm rate and warning time lag.

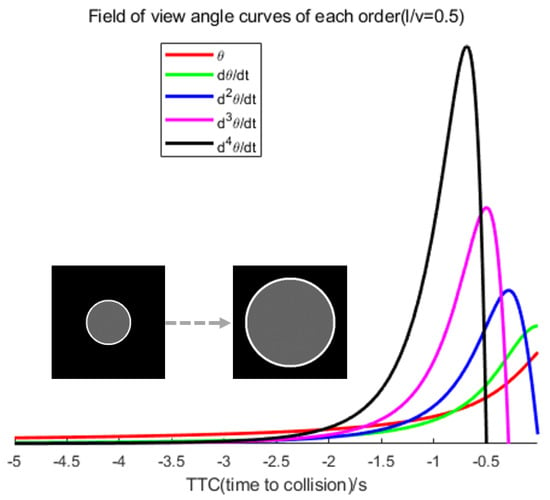

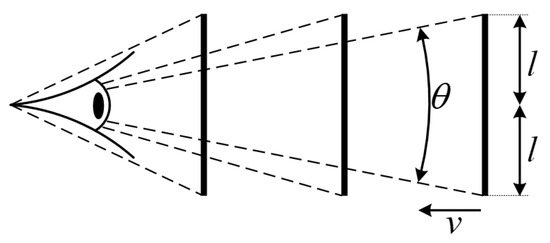

Therefore, we must identify a prominent visual cue that is not affected by the angular velocity of the image to counteract the interference caused by the observer’s motion and advance the warning time. Before the collision, it was observed that peaks emerged in the higher-order field of view curves (second order and above) while plotting the curves for each order. The time of the peaks appeared to be linearly related to . Additionally, the higher the field of view curves, the earlier the peaks occurred (for example, the second-order derivative of Equation (A5), where , and the third-order derivative, where ). This indicates that encoding higher-order field-of-view data from the image and issuing an early warning when the peaks in the higher-order field-of-view curves manifest would theoretically grant the controller ample time to evade. In Figure 1, objective observation is that, unlike other higher-order field-of-view curves, the second-order field-of-view curve (field-of-view angular acceleration) consistently has a numerical value greater than zero. This implies an uninterrupted increase in the field-of-view angular velocity and angle. On the other hand, organisms can sense displacement (visual field angle) through photoreceptors and then indirectly sense changes in velocity (visual field angular velocity) based on the passage of time, and there are also peaks in the true potential curves in locust LGMD neurons. It is probable that biological neurons sensitive to looming encode angular acceleration through the angle and velocity and utilize peaks in the angular acceleration curve as collision warnings.

Figure 1.

Visual field angle curves of different orders based on Equations (A1)–(A3) (only plotting the segments where the curves are greater than 0). Here, . The and curves monotonically increase until a collision occurs. For second-order and higher visual field angle curves, there will be a peak, exhibiting a linear relationship with .

Inspired by this, we propose the A-LGMD model, which can extract acceleration information and combine the angular size and angular velocity information in real-time to accomplish the task of collision detection in a motion scene. Specifically, we propose a two-channel distributed synaptic structure to filter two sets of image angular velocity information with a time delay. We then activate and aggregate the two sets of angular velocity information in the local spatiotemporal domain to obtain the image angular acceleration information. Finally, we fuse multiple looming visual cues and adopt the “peak” triggering method to warn the image of a significant collision. The model serves to warn of substantial looming objects within the image. A systematic evaluation of the model is conducted, encompassing the retrieval of angular acceleration information from the picture, as well as a comparison with other bionic looming detection models in simulated and real UAV flight videos. The study’s main contributions comprise several facets.

- We deduce that there exist peaks in the curves of the high-order (≥2) differentials of the angular size of a looming object. In particular, the peaks of these curves occur earlier as the differential order increases. This suggests that it is worth increasing the alarming TTC of a looming detection model by introducing higher-order information on the angular size.

- Based on the D-LGMD model, which is an angular velocity-focused algorithm, we introduce the angular acceleration cues, intending to increase sensibility and acquire an earlier alarm time before a collision.

- We have conducted a systematic analysis and comparison of the performance of the proposed A-LGMD model with various other bionic looming detection models in different scenarios. The experimental results demonstrate that the proposed model has a distinct tendency toward looming objects in the observer’s motion and is capable of fulfilling the collision detection task of UAVs.

This paper is organized into several sections. Section 2 outlines the related research. Section 3 provides a comprehensive explanation of the A-LGMD model. Section 4 presents the results of experiments that demonstrate that the algorithm proposed in this paper surpasses other bionic looming algorithms in identifying looming objects amidst camera self-motion. Lastly, our conclusions are drawn in Section 5.

2. Related Work

In this section, we review the bionic looming detection algorithms based on motion vision, followed by an introduction to the properties of LGMD neurons and their bionic looming detection models, which are relatively well-researched, and finally a summary of the related research on looming visual cues.

2.1. Looming Visual Cues

Looming detection is a scientifically inspired principle for identifying potential collision risks by observing the visual processes of approaching objects [11]. Animals have been observed to be able to predictively perceive collisions through different sensitive neurons. For instance, in pigeons, certain neurons, namely , , and [12], respond to the time to collision (TTC) at distance [13]. In locusts, LGMD neurons are sensitive to image angles and angular velocities, while in Drosophila, lobula plate/lobula columnar type II (LPLC2) [4] is sensitive to radially symmetric motion.

In investigating looming processes, numerous scholars have examined the correlation between the looming bias of responsive neurons and changes in obstacle images whilst looming. For instance, Rind and Zhao contend that the angular size of obstacles on the retina and the angular velocity consistently increase during looming. Rind utilized the LGMD model [14] to extract the fast-moving edges of the looming objects and set the angular size threshold for collision identification. Zhao, on the other hand, extracted the image angular velocity using the D-LGMD model [15] and established the angular velocity threshold for collision identification. Both approaches were employed for collision detection. Baohuazhou and John Stowers conducted a study on the Drosophila visual system and proposed that during the looming process, the motion of an obstacle on the observer’s retina creates locally symmetric information. Based on the Drosophila’s opponency ultra-selectivity [16], Baohuazhou developed a shallow neural network model [17] that is constrained anatomically to recognize the looming signal. Similarly, Zhao asserted that Drosophila determine collisions based on the radial symmetry of objects and developed the opponency model [18]. John Stowers utilized the dispersion of optical flow vectors at the focus of expansion to determine the approximate position of looming objects, based on the characteristic that Drosophila strongly avoid the focus of the optical flow expansion when moving toward an object [19]. This allowed for achieving the obstacle avoidance function of UAVs.

These bionic algorithms accurately depict the pre-collision process by analyzing the characteristics of the looming object in the image, which is superior to distance-based collision detection algorithms.

2.2. Bionic Looming Detection Algorithms

Researchers have harnessed the biological mechanism of looming neurons to develop multiple bionic proximity detection algorithms, which utilize the image difference principle. These algorithms aim to effectively detect looming by encoding visual cues in the differences between consecutive frames and establishing early warnings based on different visual cues.

Rind put forward the concept of excitatory-inhibitory critical competition, drawing on the preference of locust LGMD neurons for visual stimuli that are looming and continuously increasing. Rind developed a computational model of LGMD1 which aligns with the locust LGMD neuron characteristics, such as transient excitation due to changes in light [14], lateral inhibition [4], and global inhibition [20]. LGMD1 extracts the velocity and number of object motion edges from differential images to indicate the degree of approaching and enable selectivity for approaching objects. Scholars have made various improvements and refinements to the LGMD model due to biologists’ deeper exploration of LGMD properties and the challenges encountered by the LGMD model in robotic applications. These include the following. To enhance the resilience of the LGMD model to ambient light interference, Fu and Yue [21] constructed the LGMD2 model based on its characteristic persecution selectivity for changes between light and dark. This was achieved by including on and off channels in the photoreceptor layer of the LGMD2 model, which separates the image’s brightness changes into on and off channels (on meaning from dark to light and off meaning from light to dark). Lei [22] proposed a new model for LGMD1 that relies on neural competition between the on and off pathways to discern fast translational motion objects. The model is not receptive to paired on-off responses triggered by translational motion, hence improving collision selectivity. To adapt the LGMD1 model for UAV flight scenarios, Zhao proposed a distributed presynaptic connectivity structure [15] grounded in LGMD1. The structure effectively removes background motion noise by precisely extracting the image’s angular velocities.

Inspired by the ultra-selective, looming-sensitive neuron LPLC2 in the Drosophila visual system, Zhao [18] proposed a definition for radially symmetric motion, utilizing the lateral inhibition mechanism to extract the motion velocity and radial symmetry in four directions. This generates a map of the self-attention mechanism. The opponency-based looming detector (OppLoD) model, as proposed by Zhao, integrates the D-LGMD output with the map to extract radially symmetrical motion information that contains faster motion speeds.

2.3. LGMD Neuron Properties and Model Applications

The LGMD is a visual neuron specializing in collision detection in the locust visual system. Its characteristic looming selectivity derives from its dendritic fan [23]. This area responds maximally to visual stimuli that are looming [4] and consistently increasing in magnitude [8]. It responds only briefly to retreating objects [9] and is also resistant to interference from random motion backgrounds [10,24], and it is closely linked to downstream motor neurons and the downstream contralateral motion detector (DCMD) [4] to initiate an escape response [25].

In robotics experiments, Yue and Rind included group excitation and attenuation processing layers in the LGMD1 model [26] to increase the robustness and adaptability to dynamic backgrounds [11]. For UAV applications, Zhao et al. suggested an LGMD1 model built on distributed presynaptic connectivity (D-LGMD1) [15], which employs spatiotemporal filters in lateral inhibition and is useful in situations involving high-speed camera movement. Lei et al. exhibited an improved LGMD1 model featuring on and off dual paths [22]. By assessing the outcome of the fusion of both routes, the model proficiently subdued the reaction to translational movement.

3. Method

In this section, we present an acceleration looming motion detector (A-LGMD) that is based on the D-LGMD model. To extract acceleration information from the velocity space, the model introduces a dual-channel distributed synaptic front-end connection for encoding two sets of angular velocity information. Afterward, the model undergoes spatiotemporal delay activation aggregation for the angular velocity information to obtain angular acceleration information from the image. Considering the continuity of neural processes, the model has been transformed into a continuous integration form. Additionally, the threshold processing has been optimized and adjusted to improve its performance and stability while maintaining the model’s proximity detection selectivity in the presence of complex background motion, as shown in Table 1.

Table 1.

Constant parameters.

3.1. Mechanism and Schematic

From the previous analyses, it is evident that the image of the ideal looming object displays a sharp, nonlinear expansion prior to a collision. The angular velocity and acceleration of the image both exhibit nonlinear increases, with the moment of the sharp peak of the angular acceleration curve being linearly linked to , as displayed in Figure 1. Although the angular acceleration and angular velocity present information differently, the acceleration information is considered to be of a higher order than the velocity information. To perceive the angular acceleration, algorithms that encode the angular velocity can be used as inspiration. We have developed a method of excitation inspired by the D-LGMD model which competes with excitation and inhibition to filter and retain different velocity information [20]. Subsequently, delayed activation and aggregation of various velocity information are executed to generate data on the image’s angular acceleration. Furthermore, the field of view, image angular velocity, and image angular acceleration are nonlinearly coupled and taken as inputs to the Izhikevich neuron model [27]. A “peak” triggering mechanism is utilized to indicate the existence of noteworthy looming objects in the frame.

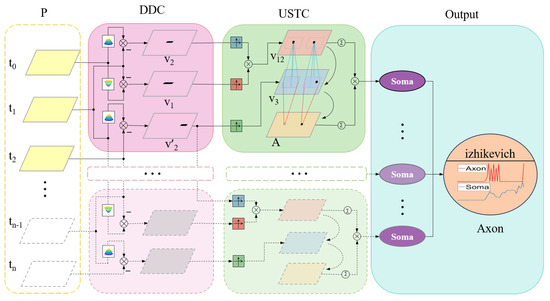

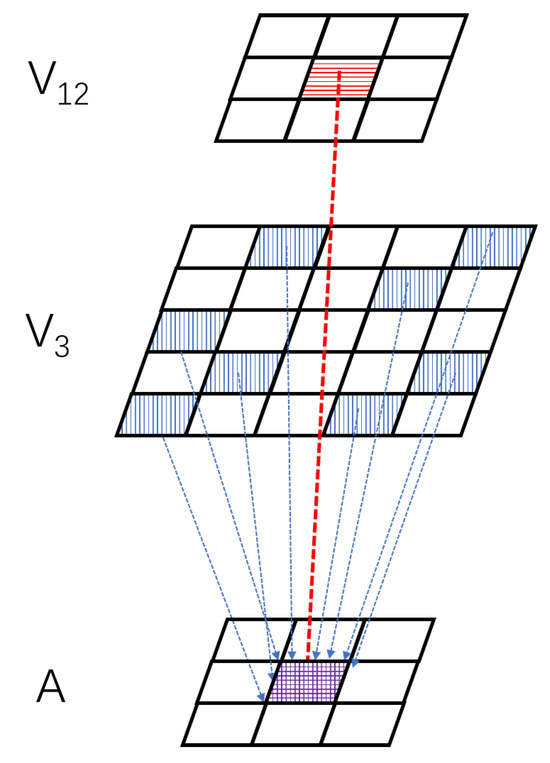

Through the above design, the A-LGMD model presents several advantages, such as improved accuracy in recognizing looming objects, faster response to looming objects, and better resistance to interference from background motion. The structure of the A-LGMD model is explained in detail in Figure 2. This model consists of three image-processing steps.

Figure 2.

Model schematic. P: photo-receptor; DDC: distribution dual channel; USTC: ultra-spatiotemporal connection. The Output comprises two layers: the Soma layer and the Axon layer. The Soma layer integrates the looming information from the USTC layer, while the Izhikevich impulse neurons in the Axon layer generate the impulse information. This model extracts potential danger from image sequences and produces a sequence of pulses that warn of obstacles.

- Dual-channel extraction of angular velocity from images;

- The activation of angular velocity information is delayed, allowing for the aggregation of angular acceleration information;

- Multiple cues for looming stimuli are fused, and warning signals are triggered by peaks.

In the P layer of photoreceptors, image motion information is extracted. Within the DDC layer, motion information undergoes excitation and inhibition competition based on the rind key competition concept, resulting in two distinct sets of image angular velocity information. In the USTC layer, delay activation and aggregation are utilized on two distinct velocity information sets to acquire insights into the angular acceleration of the image. Various looming clues are combined and outputted in the Soma layer. Lastly, the axon layer adopts a “peak” triggering mechanism to signify objects of importance looming in the scene.

3.2. Photoreceptor Layer

The initial layer of the model comprises the photoreceptor layer (P layer). Track alterations are in the overall luminosity at every pixel point:

where is a unit impulse function, denotes the brightness alteration at a certain time, and represents the overall brightness alteration of the pixel over a time t. Layer P embodies variations in the brightness of the image motion regardless of direction.

3.3. Distribution Dual-Channel Layer

In the DDC layer, two pathways are present: one with distributed excitatory properties and the other with distributed inhibitory properties, which compete over time to extract two distinct angular velocities and transmit them to the subsequent USTC layer. By employing a mechanism of temporal competition between excitatory and inhibitory signals, two distinct angular velocity representations, and , can be obtained for each pixel point.

where and represent the signals of excitation and inhibition for each pixel extracted from the edge information of the image motion, respectively, and

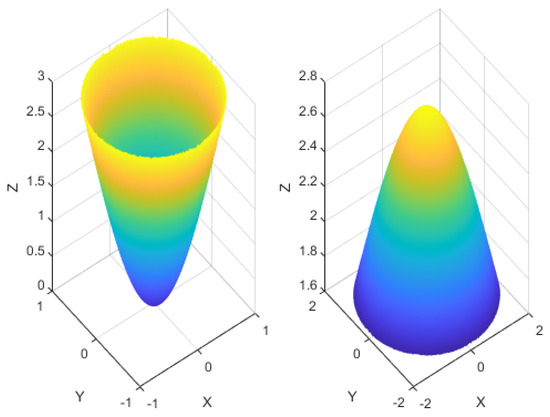

where and are the distribution functions of excitation and inhibition, respectively, and is distributed over time and space. Given the global and local inhibition properties of LGMD neurons, it is physiologically inferred that the presynaptic neighbors transmit inhibition. We have theorized that the dendritic inhibition strength of LGMD neurons possesses a shape characteristic that gradually decreases from the tip of the branch crystal to the root along its diameter [28,29]. This characteristic could account for the local inhibition at the distal end [9]. Consequently, we have chosen to illustrate the spatial distribution of by using an inverted Gaussian kernel with equivalent traits. The inverted Gaussian kernel obstructs faraway motion information, therefore favoring low speeds. The Gaussian kernel obstructs nearby motion information, thus opting for high speeds. The low-speed and high-speed inhibitory as shown in Figure 3.

where and represent the standard deviation between the excitation and inhibition, respectively, while is the time-mapped function of the inhibition pathway. The delay is determined by r and increases as the transmission distance increases as shown in the subsequent equation:

Figure 3.

Examples of the low-speed and high-speed inhibitory kernels and , respectively. The left image shows the kernel grid of , while the right image displays the kernel grid of , where and .

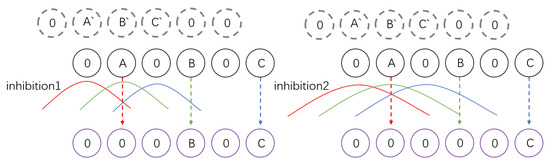

In the tao function, r denotes the spatial range of inhibition, which sets the lowest speed of the inhibition channel during the competition with excitation. By adjusting the width of tao for various inhibitory channels, it is viable to screen out data with velocities exceeding r as depicted in Figure 4.

Figure 4.

position from the previous time step is denoted. Pixels A, B, and C move at speeds of 1, 2, and 3 pixels/ms, respectively. The inhibition1 and inhibition2 radii indicate the inhibition ranges of inhibition pathways 1 and 2. The inhibition values generated by , , and are represented by red, green, and blue colors, respectively. When the movement of the pixel generates a level of stimulation beyond its inhibition threshold, the DDC layer will receive speed data above the threshold (resulting in a purple output).

3.4. Ultra-Spatiotemporal Connection Layer

To extract information on the angular acceleration, the USTC layer receives angular velocity data from the DDC layer at various points in time. This velocity data are then delayed and compiled to form “acceleration information” in the local space, as shown in Figure 5. In the USTC layer, the coordinates of the pixel with an angular velocity between and are determined by processing the two velocities at the same time using two opposite polarity activations, as detailed in Equation (12). Next, the possible acceleration location of the pixel is aggregated within the DDC layer’s aggregation region and outputted to the A layer as the weight of the pixel’s acceleration impact, as shown in Equation (9). Equation (9) can also be interpreted as the pixel points where the image angular velocity jumps within a specified velocity interval (–) over a given time interval. With this design, it is possible to capture the information on accelerated motion in the image effectively. See the following equation for more information:

Figure 5.

Schematic for collecting acceleration data within the USTC layer. The USTC layer generates acceleration information by aggregating data on high-speed moving pixels (blue pixels) near the pre-accelerated pixels (red pixels).

Since the radius of () is larger than , Equation (9) aggregates the pixel points () with velocities less than at the previous moment within the radius of around the noteworthy pixel points () with angular velocities greater than . Here, indicates the noteworthy pixel points, and V12 represents pixel points with angular velocities between and , as shown in Equations (10) and (11):

where and represent the angular velocity data for the pixel with varying velocity limits at the preceding instant. is used to exclude minor background movements, while signals the presence of a significant movement that merits attention:

3.5. Soma Layer

To mitigate the impact of the robot’s motion on the encoded angular velocity, we employed the image angular acceleration (higher-order information) to offset the image angular velocity (lower-order information). This is akin to applying triple correlation to eliminate variability in the second-order components and enhance the motion estimation accuracy [30]. From Equation (A3), it is clear that the estimation has a positive correlation with the angular velocity and an inverse proportion to the field of view angle. Therefore, including both angular velocity and field of view angle information is essential for warning about collisions earlier at TTC moments in the proximity detection model. On the other hand, estimating the field of view angle significantly relies on the robot’s motion. Consequently, we only combined the angular velocity (which includes motion edge information (i.e., the field of view angle)) and angular acceleration into the output of the Soma layer as illustrated in Equation (13):

This leads to a Soma output that is more attuned to objects with a wider field of view angle and faster motion while being less responsive to extraneous objects and background noise. Ultimately, the global acceleration motion information and velocity information in the USTC layer are nonlinearly merged to form the output of the Axon layer.

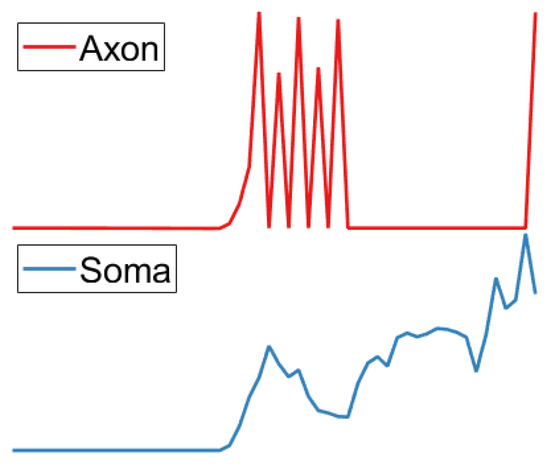

3.6. Axon Layer

Based on the evidence that the generation of action potentials in LGMD neurons necessitates a prolonged period of high-potential pulse [20], an Izhikevich class 2 excitability model [27] has been devised to produce high-potential pulse signals. This model produces high-potential pulse signals, which we subsequently utilize as the present input to the Izhikevich neuron model in the Soma layer. The pulse sequence generated by the Axon layer is shown in Figure 6. The mathematical expression of the model is provided below:

where u is the membrane recovery variable, comprising activation of the K-ion current and deactivation of the Na-ion current. v represents the membrane potential, and the expression of was selected based on the behavioral characteristics of a pulsed signal, which is a nonlinear activation, and the resulting magnitude to guarantee that the membrane potential is measured in mV and that time is measured in ms. An early warning signal is sent out when there is a spike in v. When the membrane potential v exceeds the threshold value of 30 mV, the model emits a pulse and resets the potential v as shown in Equation (15). Additionally, the model simulates the opening of a potassium channel during a decrease in action potential as demonstrated in Equation (16). Table 1 presents the conventional parameters.

Figure 6.

Soma layer input to the Izhikevich model produces an impulse sequence.

4. Experiments and Results

Considering the complexity of real UAV flight environments, this paper focuses on the collision warning capability of A-LGMD in scenarios with a high probability of collision. This section presents a comprehensive evaluation of the A-LGMD model in various UAV flight scenarios both indoors and outdoors. The evaluation concentrates on the model’s capacity to extract visual cues, its sensitivity to background motion, and the warning time. Quantitative analysis was conducted to compare its performance with other models, demonstrating the efficiency of the proposed synaptic mapping and the robust real-time warning capability of the model.

4.1. Experimental Set-up

The stimulus input to the neural network comprised a simulated simulation and an actual first-person view (FPV) video of a drone in flight captured using OSMO Pocket (with a recorded video frame rate of 30 fps). The neural network was executed on a computer with a 2.1 GHz Intel Core i7 CPU and 16 GB of RAM.

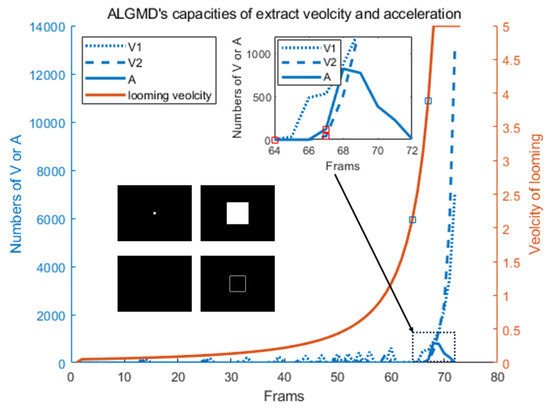

4.2. Model Characteristic Analysis

For the performance of the A-LGMD model in synthetic looming scenarios, this experiment aimed to validate the capability of the A-LGMD model to extract the image velocity and acceleration in synthetic looming scenarios and its pre-collision warning performance. We constructed an OpenMV4 model using Matlab and the following camera parameters: a focal length of 2.8 mm, CMOS dimensions of 4.8 mm × 3.6 mm, a horizontal field of view of 70.8°, a vertical field of view of 55.6°, a frame rate of 30 fps, and image dimensions of 240 × 320 pixels.

In the experiment, a cube with a side length of 0.2 m was simulated. It was positioned at a distance of 5 m from the camera and moved toward it at a speed of 2 m per second until the cube’s edge reached the field of view’s edge as illustrated in Figure 7. According to the results of the experiment, the A-LGMD model is capable of effectively extracting the image motion velocity. Specifically, we extracted the values of velocities v1 and v2 from frames 65 and 68, respectively. In frame 68, a change in velocity was detected when the motion velocity of the image rapidly increased and exceeded v2’s threshold (120 pixels/s).

Figure 7.

The capabilities of the A-LGMD model for extracting the angular speed and acceleration are demonstrated. The number of hazardous pixels in the velocity and acceleration layers is represented on the left y-axis, while the right y-axis displays the ideal magnitude of the angular velocity for a cube looming at a ratio of 0.1 () per frame. During the 64th and 67th frames, the ideal angular velocities exceeded 60 and 120 pixels/s, respectively. Technical abbreviations are explained upon the first usage. The position changes of the cube in the field of view, and the variation in acceleration information extracted by the USTC layer of the model are illustrated in the diagram located in the lower left corner. The small diagram in the upper right corner offers a local magnification of the changing trend in the number of hazardous pixels in the velocity and acceleration layers of the A-LGMD neural network between frames 64 and 70.

As the velocity of the cube surpassed v2 throughout the given timeframe, there was no area for acceleration. As a result, the model extracted angular acceleration data, displaying an abrupt peak within the angular velocity data from frames 67 to 70 and activating the alarm mechanism of the model’s Axon layer.

4.3. Real UAV Experiment

Finally, the model was tested on actual drone recordings, and indoor tests were carried out to assess the model’s performance in different obstacle textures and real collision scenarios. Several drone video sequences featuring collisions with various obstacles, including static chairs, QR code boards, off-road vehicles, and basketball poles, were collected, and the comprehensive information concerning the sequences can be found in Table 2. These experiments aim to evaluate the real-world applicability of the model.

Table 2.

Details of FPV image sequence.

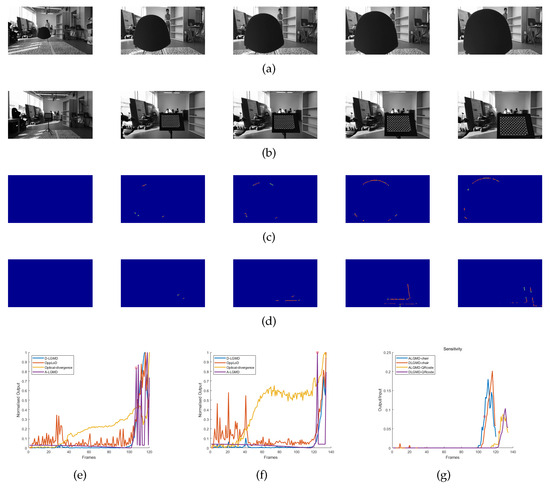

4.3.1. Indoor Simulated Collision Flight Experiments

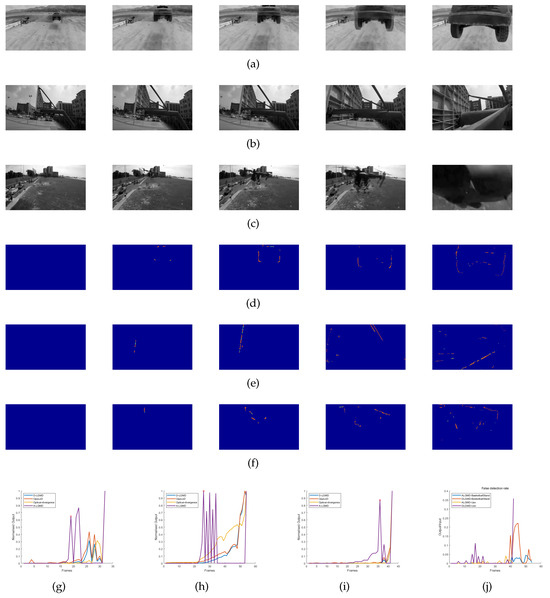

The datasets for Group 1 and Group 2 were obtained through the use of an OSMO Pocket camera while the drones were flown indoors. These experiments comprised five stages for the drones: hovering, pitching, accelerating, looming obstacles (looming), and program-controlled deceleration. Our experiments aimed to evaluate the performance of the A-LGMD algorithm on drones and the ability of biomimetic algorithms to adjust to varying obstacle textures. From Figure 8c,f, it is clear that the A-LGMD model efficiently extracted obstacle motion edges without being affected by the obstacle features. Furthermore, a sensitivity comparison test was conducted on the A-LGMD and D-LGMD models in response to the motion edges of a looming object during UAV motion. Sensitivity was defined using the following equation:

where represents the number of pixel points depicted on the model’s resulting image within the looming region and corresponds to the number of pixel points depicted in the initial difference image within the same region. In Figure 8g, we can observe that the sensitivity of the A-LGMD model emerged earlier when compared with the D-LGMD model. Therefore, the alarm event of A-LGMD will occur before D-LGMD’s.

Figure 8.

Indoor looming detection experimental data were recorded using cameras, and the performance of various looming detection models was evaluated. Grayscale images of Group 1 and Group 2 are shown in (a–d), displaying the output images of the A-LGMD model for Group 1 and Group 2 with corresponding sample images provided in Table 2. The normalized output curves of different models in the Group 1 and Group 2 datasets are presented in (e–g), showing the sensitivity of A-LGMD and D-LGMD to the looming region of the differential input image.

During the experiments conducted with Group 1 and Group 2, it was observed that both the OppLoD and Optical-divergence models registered a high number of false alarms. This occurrence can be attributed to the OppLoD model’s inability to accurately distinguish between the moving contours of the background and the symmetrical contours of the impending object during UAV motion, ultimately resulting in the generation of a false alarm signal. The high incidence of false alarms generated by the Optical-divergence model resulted from the strong influence of the background on the optical flow dispersion in the two selected regions. When the camera moved forward rapidly, the difference between the backgrounds of these regions caused a marked variation in the calculated optical divergence. As a result, this divergence was misinterpreted as a sign of an approaching object, leading to a false alarm situation.

4.3.2. Outdoor Real Collision Tests

To assess the sensitivity of various biomimetic models in real collision scenarios, we used drone-captured videos as input data from a first-person perspective. Our objective was to evaluate their real-time performance by observing the warning and processing times and assessing their performance in identifying looming objects.

Groups 3, 4, and 5 depicted recordings of drones colliding in genuine flight circumstances. The A-LGMD model proved its capability to generate trustworthy early warning indicators (initiating avoidance commands while establishing the avoidance direction through the discerned motion contours of neighboring objects) during these experiments and portrayed the swiftest processing speed, rendering it more appropriate for the obstacle avoidance necessities of low-power drones operating in real time.

The normalized output curves indicate that both the D-LGMD model and the OppLoD model could perceive the oncoming UAV and basketball hoop. However, they exhibited early alarm times to collision (ATTCs) that were less than 0.18 s, as shown in Table 3. It is noteworthy that Group 3 barely recorded any near-collision incidents with the quadcopter. This implies that the D-LGMD and OppLoD models encountered challenges in delivering prompt cautionary notifications when genuine collisions were imminent. In addition, we conducted a comparison test between the A-LGMD and D-LGMD models for false detection backgrounds under the UAV’s motion, and we defined the false detection rate (FDR) as

where represents the number of pixel points depicted in the model’s resulting image within the background region and corresponds to the number of pixel points depicted in the initial difference image within the same region. Figure 9j shows that the FDR of the D-LGMD model was earlier and larger than that of the A-LGMD model. This implies that the persecution signals provided by the A-LGMD model were more trustworthy than those provided by the D-LGMD model. Based on this finding, combined with Figure 8g, we have reason to believe that the persecution motion information and warning information extracted by the A-LGMD model are reliable and valid. From the normalized output curves and the ATTC values in Table 3, it is evident that our proposed A-LGMD model was highly sensitive to forthcoming collisions. The model could detect the location of collision danger about 0.3 s before the collision occurred, consequently issuing a warning signal. Even in scenarios involving drone movement (Group 1, Group 2, Group 4, and Group 5), the A-LGMD model demonstrated efficient performance and displayed a significant advantage in ATTC when compared with other models, emphasizing its potential for widespread application in the drone industry.

Table 3.

Alarm time to collision (ATTC; unit = ms).

Figure 9.

Experiments were conducted using real drone flights involving collisions, focusing on an objective perspective. Grayscale images from Groups 3, 4, and 5 are displayed in (a–c), respectively. In Group 3, a stationary drone collided with an off-road vehicle at high speed. In Group 4, the drone rapidly approached a basketball stand. In Group 5, a quadcopter collided with a uniformly moving drone. (d–f) The output images of the A-LGMD model in Groups 3, 4, and 5, respectively, with corresponding sample images listed in Table 2. (g–i) The normalized output curves of the A-LGMD model and other models in Group 3, 4, and 5 datasets. (j) The rate of false detections by A-LGMD and D-LGMD in the background section of the differential input image.

4.4. Discussion

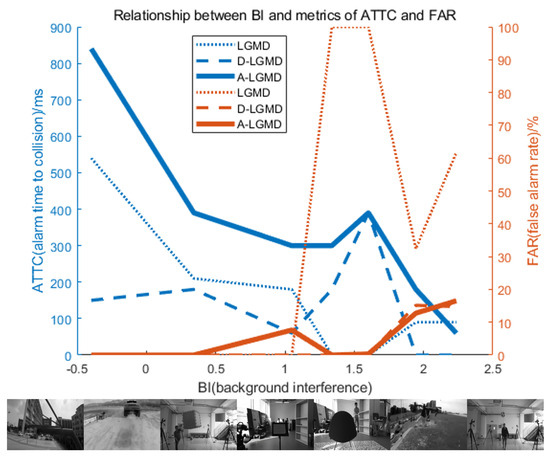

This study examined the use of bio-inspired motion vision-based algorithms for detecting obstacles in autonomous drones and avoiding collisions, particularly in the presence of self-motion interference. The A-LGMD model was proposed based on an analysis of the characteristics of the angular acceleration information in images. The qualitative and quantitative analysis of the A-LGMD model, along with a comparison to other bionic approach detection models, validate the A-LGMD model’s capability to extract angular acceleration information and its feasibility for real-time early warnings, as shown in Figure 10, where the BI and FAR are defined as follows.

Figure 10.

Alarm time to collision (ATTC) and false alarm rate (FAR) performance of different biomimetic looming detection models based on the field of view theory under different background interference (BI). The LGMD algorithm had a high false alarm rate when the UAV was moving. The D-LGMD algorithm was only sensitive to fast-moving objects and performed well in resolving the background interference caused by its motion, but this may result in a lower ATTC, which may not give the UAV enough time to avoid obstacles. In contrast, the A-LGMD algorithm had a high ATTC and a low false alarm rate, making it ideal for UAV looming detection.

Compared with other bio-inspired looming algorithms relying on motion vision, our algorithm incorporates higher-order motion information, specifically angular acceleration information, to alleviate interference caused by the observer’s self-motion and improve the perception of nearby objects. Furthermore, our caution system no longer relies on a singular threshold setting; instead, it incorporates numerous pieces of data and produces results via peaks in the output curve, resulting in heightened stability and dependability.

It should be noted that our current model cannot strictly extract an image’s angular acceleration. Instead, it only initially extracts the pixels of the image velocity jump as the “acceleration”. Improving the image angular velocity extraction may help obtain more accurate information on the image angular acceleration. Additionally, the model has many parameters that must be adjusted according to different scenarios. In the future, the model’s parameters could be combined with the regularity of natural scenes, and a deep learning method could be used to achieve adaptive adjustments based on different scenes.

5. Conclusions

In this paper, we present the A-LGMD neural network model, a bio-inspired proximity detection algorithm, to tackle the problem of UAVs experiencing delays in warnings or being unable to function during their motion. We introduced and examined the features of higher-order information on the angular size curves and integrated the angular acceleration information of images into proximity detection for the first time. This model can accurately extract information on image angular acceleration and fuse real-time multiple proximity visual cues to provide early warnings of looming objects of interest. The experiments conducted on the system indicate that the model can successfully extract the image angular acceleration, eliminate irrelevant background motion, forecast the ideal angular acceleration curve trend, and raise early alarms.

In conclusion, the prospects for the A-LGMD neural network model are promising. Several critical areas have been identified for further development and enhancement. Firstly, the scalability and computational efficiency of the A-LGMD model represent a key research focus. Advancements in methods to scale up the model, enabling it to handle larger datasets and computational workloads, will ensure efficient processing and real-time performance across diverse UAV environments. Integrating multi-sensor fusion techniques, such as cameras, lidar, and radar, into the A-LGMD model presents a significant opportunity to enhance its looming detection capabilities. This will improve the model’s adaptability to complex scenarios, making it more effective in real-world applications. Moreover, image angular acceleration data, a less easily perceivable high-order visual feature, could have a significant role in proximity detection and other areas of robot motion, including posture recognition and grasping tasks.

Author Contributions

F.S. directed and funded this study as corresponding author. J.Z., Q.X. and S.Y. were responsible for writing, surveying related studies, proposing and modelling the A-LGMD looming detection model, experimental results and analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the Bagui Scholar Program of Guangxi; in part by the National Natural Science Foundation of China, grant numbers 62206065, 61773359, and 61720106009; and in part by the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement (No. 778062 ULTRACEPT).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data of simulation and the source codes can be found via https://github.com/chasen-xqs/ALGMD (accessed on 6 October 2023).

Conflicts of Interest

All authors declare that the research was conducted in the absence of any commercial or financial relationships.

Appendix A. Problem Formulation

Figure A1.

Object looming process. Black squares of different sizes approach at a constant speed and eventually collide with the observer. The object size and value close to the speed were considered to be constant, and thus = was constant.

The visual system performs a fundamental function in biological perception and response. During an object’s approach, its area in the visual field progressively increases, a characteristic that can be simplified as the angle of the visual field expands. Gabbiani [7] derived a relationship between the process of change in the imaging angle and angular velocity of an obstacle in the retina and the ratio of the real size of the obstacle to the approximation velocity (refer to Equation (A1) and Figure 1). This relationship portrays the imaging angle and angular velocity change process of the obstacle in the retina, which provides an important theoretical basis for the encoding of the post-approaching visual cues. Based on this, Zhao [15] proposed the distributed presynaptic connection (DPC) structure to quantify the encoded image angular velocity and described the pre-collision process as a nonlinear increase in the image angular velocity. Additionally, collisions are reflected by the nonlinear increase in many image variation-based visual features that loom (such as dilatancy, radial symmetry, and angular velocity). However, the change in angular acceleration in higher-order visual fields is quite different, with a sharp peak in the angular acceleration curve and a linear correlation with the object size and motion speed before the TTC approaches zero, which is critical for early warnings of collisions. This section provides a detailed explanation of the need for angular acceleration to achieve early responses in collision detection algorithms:

To address image interference due to the robot’s movement and achieve a prompt response by the model, Equation (A1) denotes detecting obstacles when the object imaging angle theta is smaller so that the model can emit early warning signals for larger TTC values. Therefore, for the algorithm of detecting the angle, setting the threshold of theta to be smaller can theoretically achieve an early response, but this also leads the model to false alarms for various small objects, and the performance is not robust:

Based on the above analysis, following the derivation of Equation (A1), Equation (A2) provides the relationship between the TTC and image angular velocity and angle. The algorithm for detecting the angular velocity can achieve an early response by setting a small motion speed threshold when the object’s imaging angle is small, as demonstrated by Equation (A2). However, even slight winds in the scene or the robot’s motion caused by changes in the image can lead to false alarms and reduced model performance, thereby decreasing its robustness:

By deriving Equation (A2), we can obtain the relationship between the image angular acceleration and angle as expressed in Equation (A3). The combination of the , , and states can express the TTC, where the TTC is inversely proportional to and directly proportional to and . If a model for detecting the angular acceleration exists, then a small angular acceleration threshold can be set during the detection of objects with small imaging angles and . This would lead to an early response, indicating the importance of acceleration in estimating the TTC for looming detection:

According to the higher-order field-of-view curves shown in Figure 1, the moment of the spike appears earlier with an increasing field-of-view curve order, and this is linearly related to . The expression for different higher-order field-of-view curves can be derived from Equation (A1) as shown in Equation (A4):

Let and be zero. This provides us with the angular acceleration curves and the third-order field-of-view curve, with peaks occurring at times and as shown in Equation (A5). Consequently, if we can encode high field-of-view information, then we can provide collision warnings at the earliest possible moment, based on curves of spikes in the higher-order field-of-view.

References

- Bachrach, A.; He, R.; Roy, N. Autonomous flight in unknown indoor environments. Int. J. Micro Air Veh. 2009, 1, 217–228. [Google Scholar] [CrossRef]

- Xiang, Y.; Zhang, Y. Sense and avoid technologies with applications to unmanned aircraft systems: Review and prospects. Prog. Aerosp. Sci. 2015, 74, 152–166. [Google Scholar]

- Zhou, B.; Li, Z.; Kim, S.; Lafferty, J.; Clark, D.A. Shallow neural networks trained to detect collisions recover features of visual loom-selective neurons. eLife 2022, 11, e72067. [Google Scholar] [CrossRef] [PubMed]

- Rind, F.C.; Simmons, P.J. Orthopteran DCMD neuron: A reevaluation of responses to moving objects. I. Selective responses to approaching objects. J. Neurophysiol. 1992, 68, 1654–1666. [Google Scholar] [CrossRef] [PubMed]

- Temizer, I.; Donovan, J.C.; Baier, H.; Semmelhack, J.L. A Visual Pathway for Looming-Evoked Escape in Larval Zebrafish. Curr. Biol. 2015, 25, 1823–1834. [Google Scholar]

- Sato, K.; Yamawaki, Y. Role of a looming-sensitive neuron in triggering the defense behavior of the praying mantis Tenodera aridifolia. J. Neurophysiol. 2014, 25, 671–683. [Google Scholar]

- Gabbiani, F.; Krapp, H.G.; Laurent, G. Computation of Object Approach by a Wide-Field, Motion-Sensitive Neuron. J. Neurosci. 1999, 19, 1122–1141. [Google Scholar] [CrossRef] [PubMed]

- Rind, F.C. Motion detectors in the locust visual system: From biology to robot sensors. Microsc. Res. Tech. 2002, 56, 256–269. [Google Scholar] [CrossRef] [PubMed]

- Rind, F.C.; Simmons, P.J. Seeing what is coming: Building collision-sensitive neurones. Trends Neurosci. 1999, 22, 215–220. [Google Scholar] [CrossRef]

- Yakubowski, J.M.; Mcmillan, G.A.; Gray, J.R. Background visual motion affects responses of an insect motion-sensitive neuron to objects deviating from a collision course. Physiol. Rep. 2016, 4, e12801. [Google Scholar] [CrossRef]

- Yue, S.; Rind, F.C. Collision detection in complex dynamic scenes using an LGMD-based visual neural network with feature enhancement. IEEE Trans. Neural Netw. 2006, 17, 705–716. [Google Scholar]

- Fotowat, H.; Gabbiani, F. Collision Detection as a Model for Sensory-Motor Integration. Annu. Rev. Neurosci. 2011, 34, 1. [Google Scholar] [CrossRef]

- Wang, Y.; Frost, B.J. Time to collision is signaled by neurons in the nucleus rotundus of pigeons. Nature 1992, 356, 236–238. [Google Scholar] [CrossRef] [PubMed]

- O’Shea, M.; Rowell, C. The neuronal basis of a sensory analyser, the acridid movement detector system. II. response decrement, convergence, and the nature of the excitatory afferents to the fan-like dendrites of the LGMD. J. Exp. Biol. 1976, 65, 289–308. [Google Scholar] [CrossRef] [PubMed]

- Zhao, J.; Wang, H.; Bellotto, N.; Hu, C.; Peng, J.; Yue, S. Enhancing LGMD’s Looming Selectivity for UAV With Spatial-Temporal Distributed Presynaptic Connections. IEEE Trans. Neural Netw. Learn. Syst. 2021, 34, 2539–2553. [Google Scholar] [CrossRef] [PubMed]

- Klapoetke, N.C.; Nern, A.; Peek, M.Y.; Rogers, E.M.; Breads, P.; Rubin, G.M.; Reiser, M.B.; Card, G.M. Ultra-selective looming detection from radial motion opponency. Nature 2017, 551, 237–241. [Google Scholar] [CrossRef] [PubMed]

- Biggs, F.; Guedj, B. Non-Vacuous Generalisation Bounds for Shallow Neural Networks. In Proceedings of the International Conference on Machine Learning, Baltimore, MA, USA, 17–23 July 2022. [Google Scholar]

- Shuang, F.; Zhu, Y.; Xie, Y.; Zhao, L.; Xie, Q.; Zhao, J.; Yue, S. OppLoD: The Opponency based Looming Detector, Model Extension of Looming Sensitivity from LGMD to LPLC2. arXiv 2023, arXiv:2302.10284. [Google Scholar] [CrossRef]

- Reiser, M.B.; Dickinson, M.H. Drosophila fly straight by fixating objects in the face of expanding optic flow. J. Exp. Biol. 2010, 213, 1771–1781. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Rind, F.C.; Bramwell, D. Neural network based on the input organization of an identifiedneuron signaling impending collision. J. Neurophysiol. 1996, 75, 967–985. [Google Scholar] [CrossRef] [PubMed]

- Fu, Q.; Yue, S. Modelling lgmd2 visual neuron system. In Proceedings of the 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 17–20 September 2015; pp. 1–6. [Google Scholar]

- Lei, F.; Peng, Z.; Liu, M.; Peng, J.; Cutsuridis, V.; Yue, S. A Robust Visual System for Looming Cue Detection Against Translating Motion. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8362–8376. [Google Scholar] [CrossRef]

- Graham, L. How not to get caught. Nat. Neurosci. 2002, 5, 1256–1257. [Google Scholar] [CrossRef]

- Silva, A.C.; Mcmillan, G.A.; Santos, C.P.; Gray, J.R. Background complexity affects response of a looming-sensitive neuron to object motion. J. Neurophysiol. 2015, 113, 218–231. [Google Scholar] [CrossRef][Green Version]

- Fotowat, H.; Harrison, R.R.; Gabbiani, F. Multiplexing of motor information in the discharge of a collision detecting neuron during escape behaviors. Neuron 2011, 69, 147–158. [Google Scholar] [CrossRef] [PubMed]

- Hu, C.; Arvin, F.; Yue, S. Development of a bio-inspired vision system for mobile micro-robots. In Proceedings of the Joint IEEE International Conferences on Development and Learning and Epigenetic Robotics, Genoa, Italy, 13–16 October 2014; pp. 81–86. [Google Scholar]

- Izhikevich, E. Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 2004, 15, 1063–1070. [Google Scholar] [CrossRef] [PubMed]

- Cuntz, H.; Borst, A.; Segev, I. Optimization principles of dendritic structure. Theor. Biol. Med. Model. 2007, 4, 1–8. [Google Scholar] [CrossRef] [PubMed]

- Cuntz, H.; Forstner, F.; Borst, A.; Häusser, M.; Morrison, A. One Rule to Grow Them All: A General Theory of Neuronal Branching and Its Practical Application. PLoS Comput. Biol. 2010, 6, e1000877. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Mandel, H.B.; Fitzgerald, J.E.; Clark, D.A. Asymmetric ON-OFF processing of visual motion cancels variability induced by the structure of natural scenes. eLife Sci. 2019, 8, e47579. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).