Bio-Inspired Proprioceptive Touch of a Soft Finger with Inner-Finger Kinesthetic Perception

Abstract

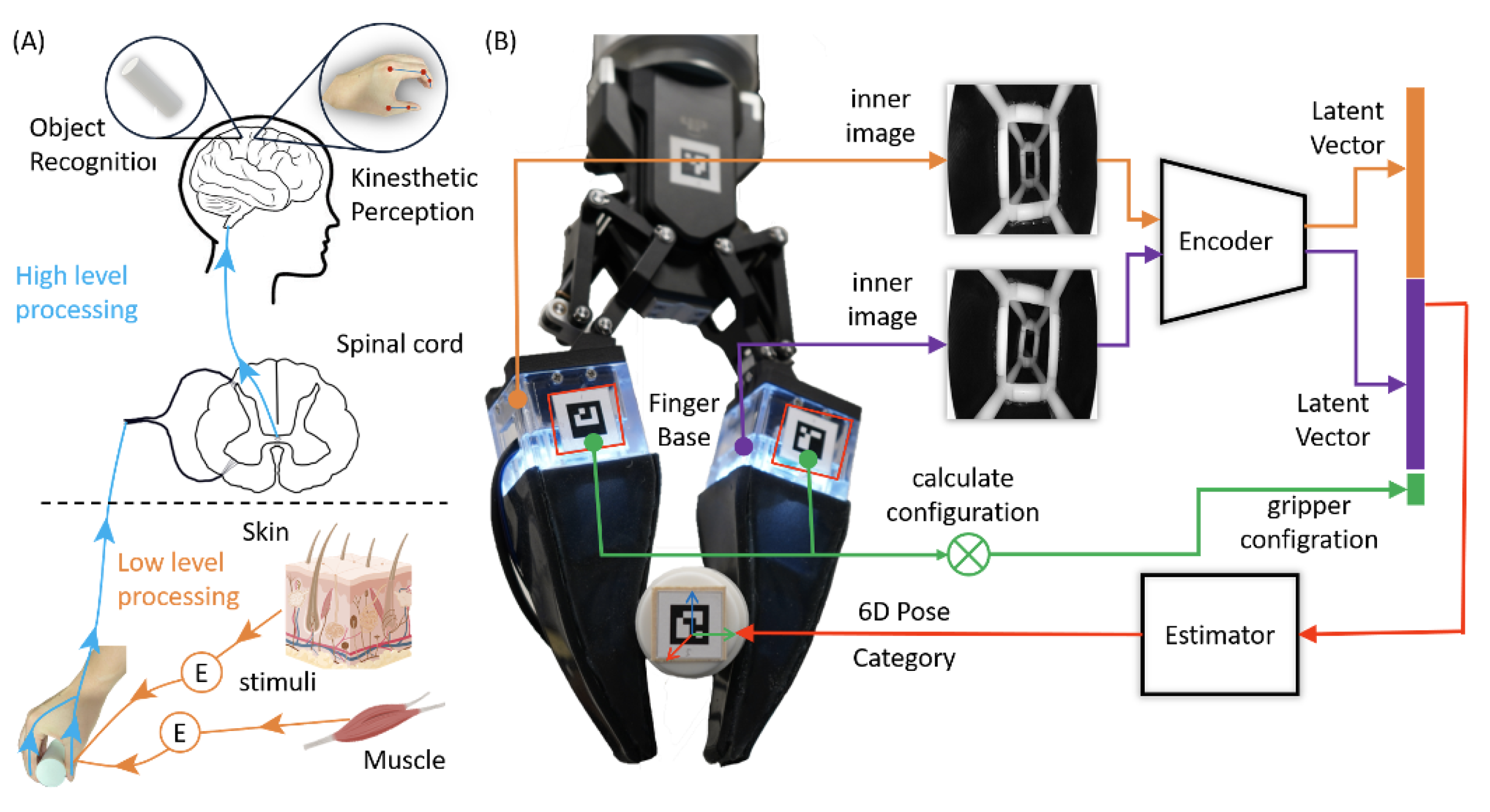

1. Introduction

2. Materials and Methods

2.1. Design and Fabrication of the Soft Finger with Inner Vision

- Added a silicone skin over the finger to shut out the outside environment and provide a clear background.

- Added an LED for illumination, because the skin blocks outside light.

- Removed the ArUco marker and used the finger’s skeleton as the deformation feature instead.

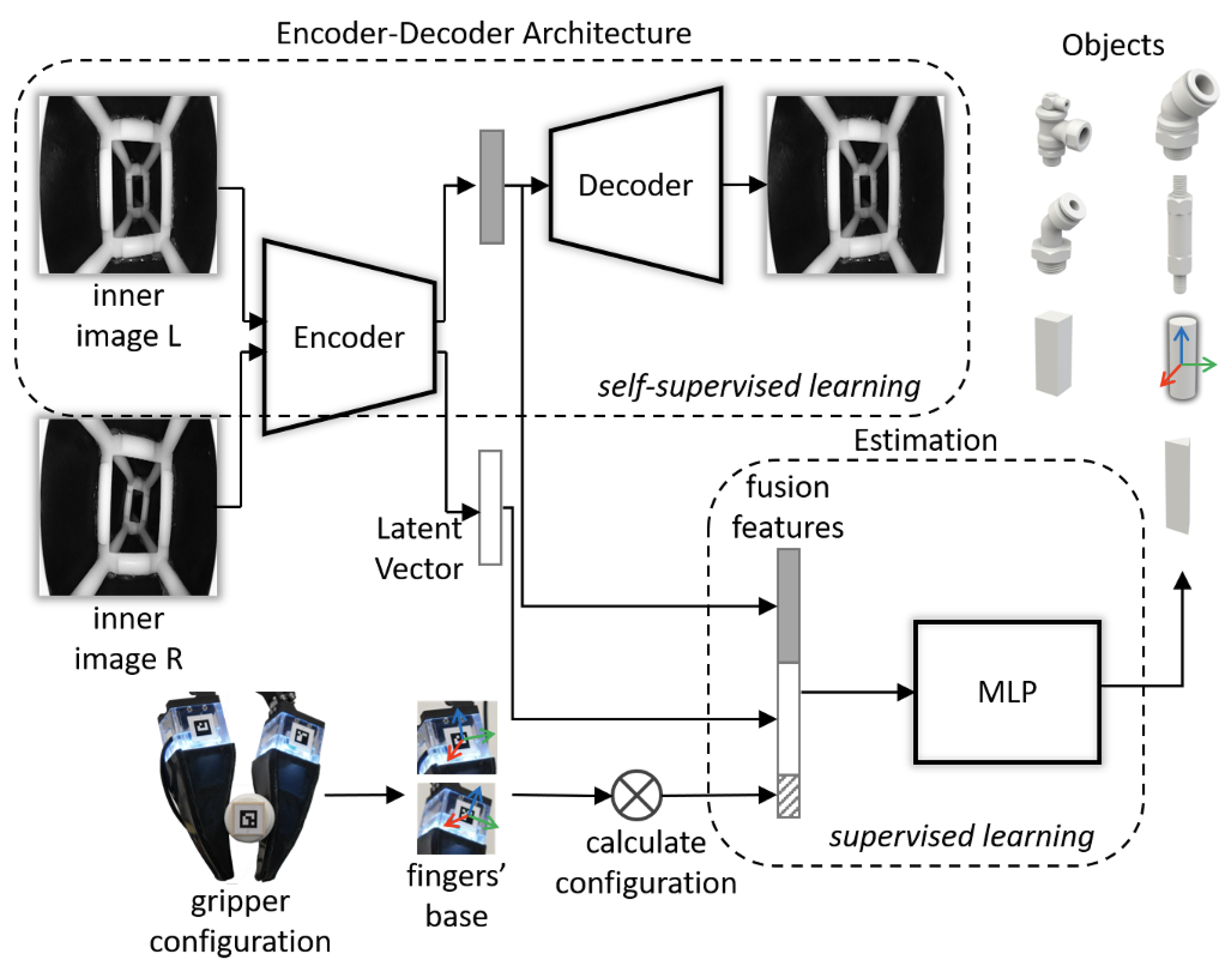

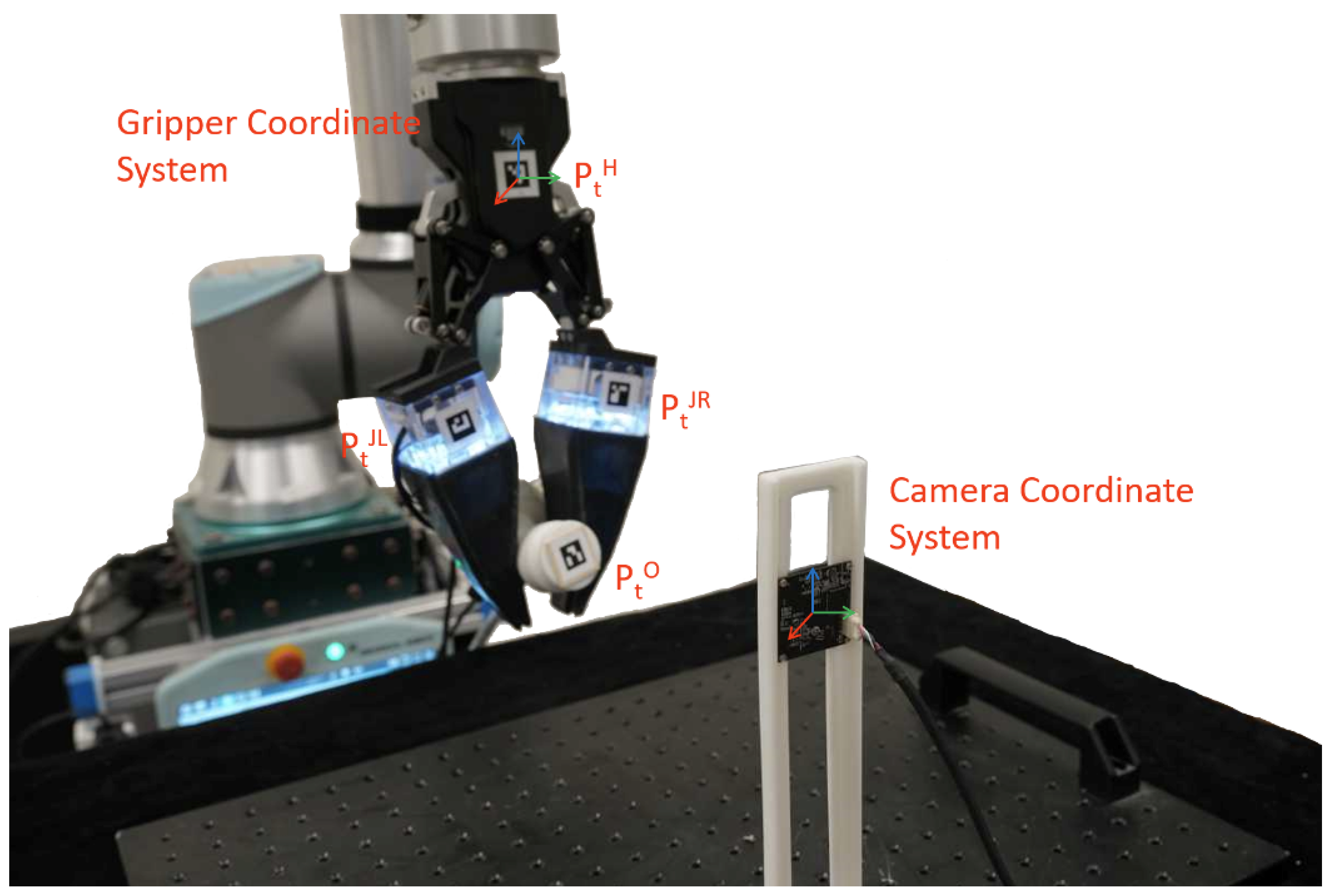

2.2. Framework for Handled Object Recognition with the Soft Finger

2.2.1. Encoder–Decoder Architecture

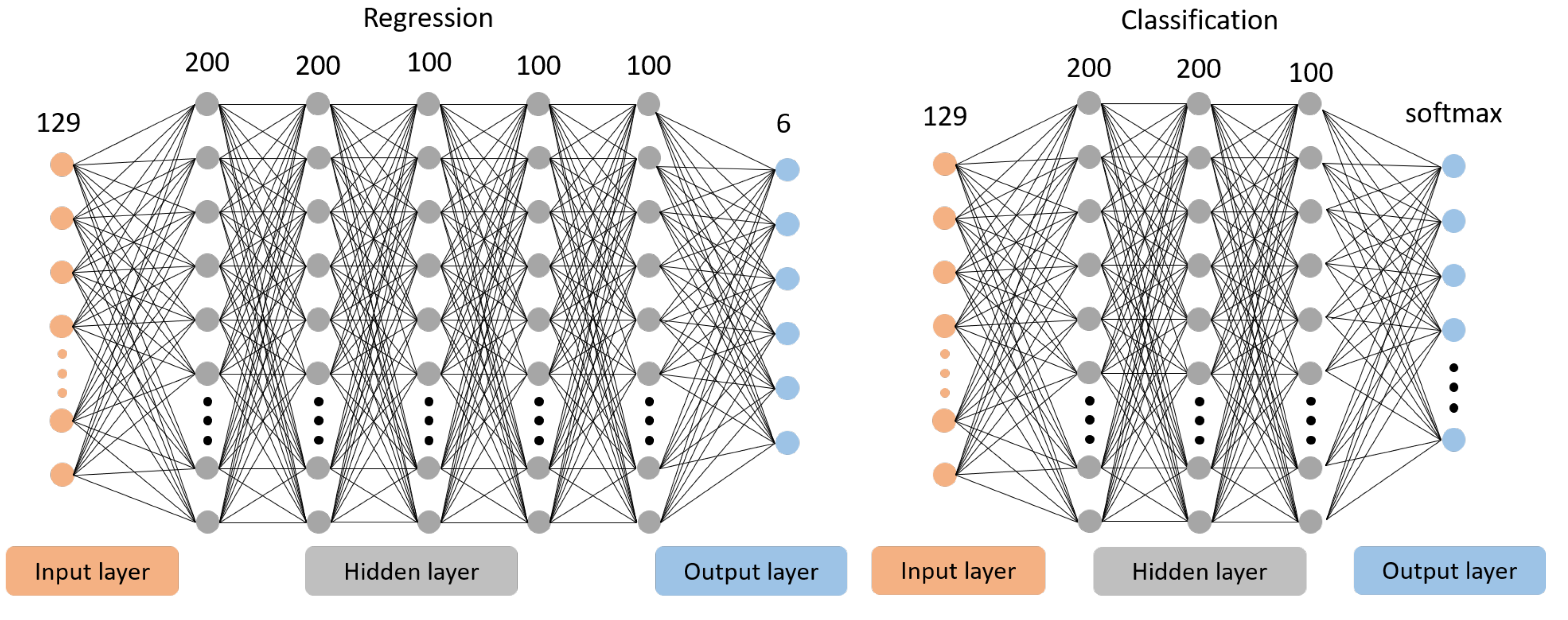

2.2.2. Pose Estimation and Classification

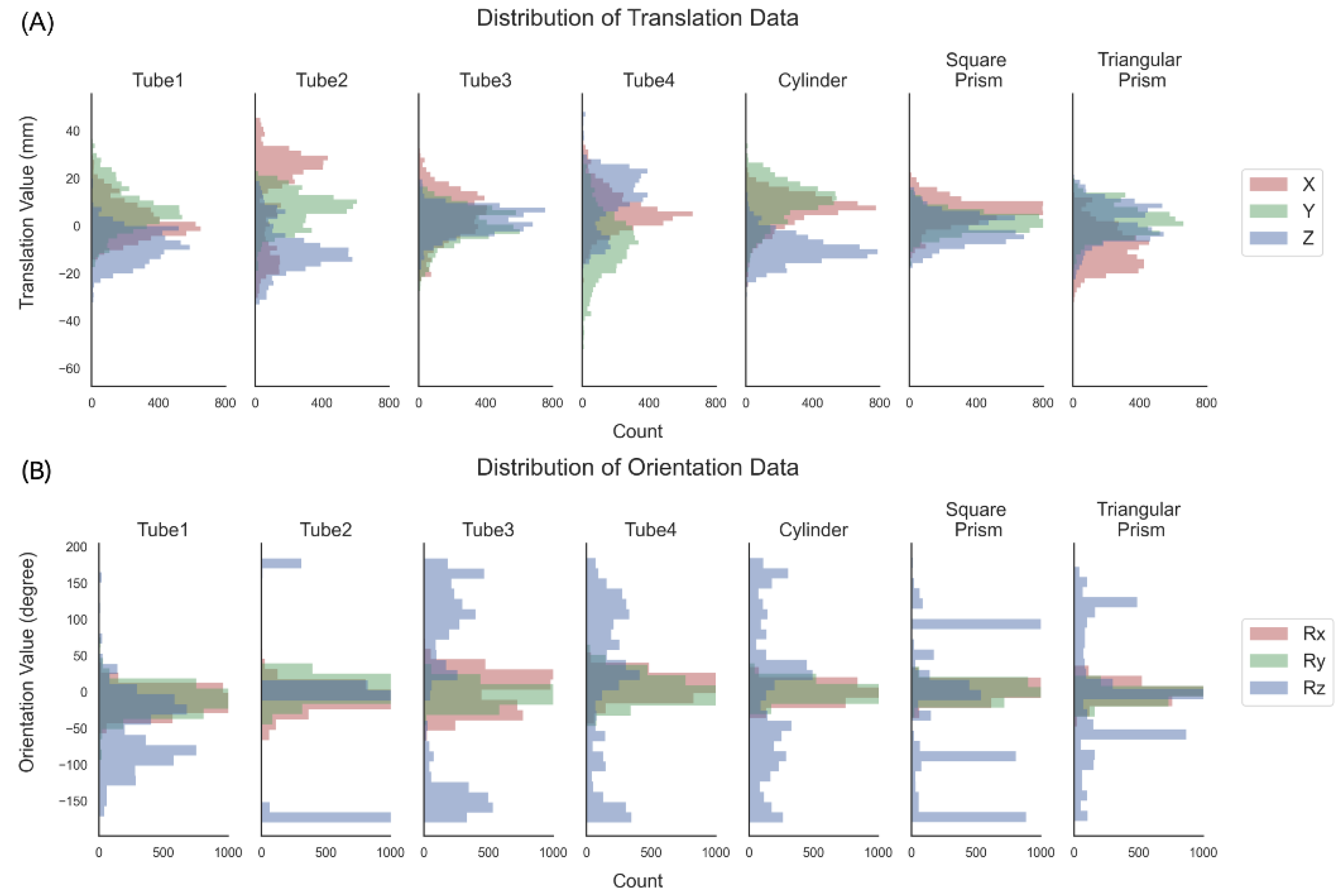

2.3. Data Collection and Training Setups

2.3.1. Data Collection Setup

2.3.2. Network Training Setup

3. Results and Discussion

3.1. Dimension of the Latent Vector

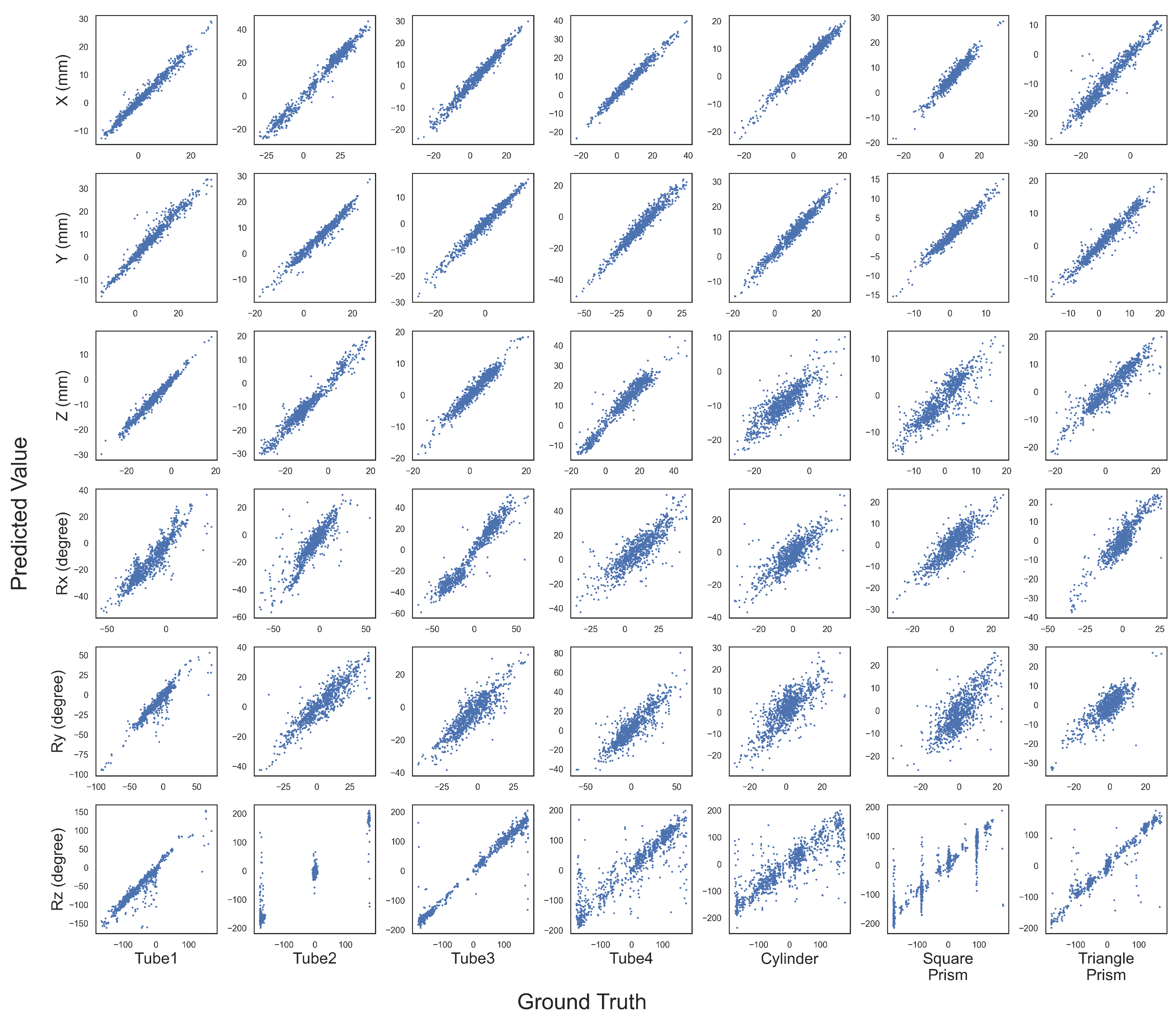

3.2. Quantitative Evaluation of Object Recognition

3.3. Reusability and Expansibility of the Framework

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Appendix A


| Latent Vector Dimension | 8 | 16 | 32 | 64 | 128 | 256 |
|---|---|---|---|---|---|---|
| Normalized MSELoss | 1.37 | 1.74 | 1.36 | 1.09 | 1.35 | 1 |
| Parameters (M) | 0.57 | 0.67 | 0.88 | 1.29 | 2.11 | 3.74 |
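The trade-off in the table between reconstruction loss and model size can be explored with a short script. The sketch below is illustrative only: the numbers are copied from the table, while the function names and the 10% loss tolerance are assumptions introduced here and are not the selection criterion stated by the authors.

```python
# Values copied from the table; variable names are illustrative.
latent_dims = [8, 16, 32, 64, 128, 256]
normalized_mse = [1.37, 1.74, 1.36, 1.09, 1.35, 1.0]
params_millions = [0.57, 0.67, 0.88, 1.29, 2.11, 3.74]


def summarize(dims, losses, params):
    """Build one record per latent dimension, with size relative to the largest model."""
    largest = max(params)
    return [
        {"dim": d, "loss": l, "params_m": p, "size_ratio": round(p / largest, 2)}
        for d, l, p in zip(dims, losses, params)
    ]


def smallest_within(rows, tolerance):
    """Return the smallest model whose loss is within `tolerance` of the best loss.

    `tolerance` is a hypothetical knob (0.10 means 10% above the best loss).
    """
    best_loss = min(r["loss"] for r in rows)
    candidates = [r for r in rows if r["loss"] <= best_loss * (1 + tolerance)]
    return min(candidates, key=lambda r: r["params_m"])


rows = summarize(latent_dims, normalized_mse, params_millions)
best = min(rows, key=lambda r: r["loss"])
compact = smallest_within(rows, tolerance=0.10)

print("lowest loss:", best)
print("smallest model within 10% of lowest loss:", compact)
```

Under this assumed tolerance, the script reports the 256-dimensional latent vector as having the lowest loss and the 64-dimensional one as the smallest model within 10% of it, at roughly a third of the parameter count.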


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Han, X.; Guo, N.; Wan, F.; Song, C. Bio-Inspired Proprioceptive Touch of a Soft Finger with Inner-Finger Kinesthetic Perception. Biomimetics 2023, 8, 501. https://doi.org/10.3390/biomimetics8060501
