Abstract

In this study, we introduce an innovative hybrid artificial neural network model incorporating astrocyte-driven short-term memory. The model combines a convolutional neural network with dynamic models of short-term synaptic plasticity and astrocytic modulation of synaptic transmission. The model’s performance was evaluated using simulated data from visual change detection experiments conducted on mice. Comparisons were made between the proposed model, a recurrent neural network simulating short-term memory based on sustained neural activity, and a feedforward neural network with short-term synaptic depression (STPNet) trained to achieve the same performance level as the mice. The results revealed that incorporating astrocytic modulation of synaptic transmission enhanced the model’s performance.

1. Introduction

Short-term memory, also referred to as working memory, is a fundamental cognitive process crucial for temporary storage and manipulation of information. It plays an important role in attention, learning, problem-solving, and decision-making. The multi-component model proposed by Baddeley and Hitch [1] suggested that short-term memory consists of the phonological loop, visuospatial sketchpad, and central executive. Empirical studies, such as Miller’s research [2], supported the concept of limited capacity in short-term memory, which can be understood in terms of “working memory chunks” [3]. Neuroimaging studies employing fMRI identified brain regions such as the dorsolateral prefrontal cortex, parietal cortex, and posterior regions involved in short-term memory [4]. Various factors such as interference, time, and individual differences can influence short-term memory performance [5,6,7].

Recent experimental and computational research indicated that persistent neural activity, facilitated by local recurrent connections or cortical–subcortical loops, was capable of storing information [8,9,10]. Sustained and sequential persistent activity has been observed in various tasks and brain regions, including the prefrontal cortex (PFC) [11]. Another mechanism for maintaining short-term memories involves short-term synaptic facilitation, utilizing residual calcium as a memory buffer [12,13]. However, recent experiments indicated that excitatory synapses in early sensory areas, such as the mouse primary visual cortex, primarily undergo synaptic depression [14]. This synaptic depression influenced visual processing by providing temporal context to distinguish between familiar and novel stimuli [14]. Additionally, adaptation at the synaptic level and intrinsic firing rate adaptation [15,16] may play a significant role in brain functioning.

Visual change detection tasks were extensively employed in cognitive psychology and neuroscience to investigate short-term memory for visual information. In these tasks, participants were presented with a set of visual stimuli that briefly disappear, and one or more stimuli undergo changes. Participants must detect and identify the item(s) changed. This paradigm was utilized in numerous studies exploring various aspects of short-term memory, including capacity, encoding processes, and the effects of attention. For example, Luck and Vogel [17] found that participants could reliably remember and detect changes in approximately three to four objects in a display. Hollingworth and Henderson [18] demonstrated a crucial role of attention in maintaining and updating visual information in short-term memory. Neuroimaging studies utilized visual change detection tasks to investigate the neural mechanisms underlying short-term memory, with Vogel et al. [19] using electroencephalography (EEG) to examine the neural correlates of visual change detection. Visual change detection tasks have provided valuable insights into the capacity, attentional processes, and neural mechanisms involved in visual short-term memory.

Astrocytes, traditionally considered as supportive cells in the brain, have emerged as active participants in various brain functions, including memory processes. Recent research suggested that astrocytes play a role in modulating short-term memory [20]. Various studies demonstrated their involvement in regulating synaptic transmission [21], promoting metabolic interactions for long-term memory formation [22], and organizing inhibitory circuits in the cerebellum [23]. These findings indicated that astrocytes exert influence on short-term memory processes participating in the complex dynamics of memory formation and maintenance.

Convolutional neural networks (CNNs) are a type of deep learning architecture specifically designed for processing grid-like data, such as images and videos. CNNs are inspired by the visual processing mechanism of the human brain and are particularly effective in tasks such as image recognition, object detection, and image segmentation [24]. The applications of CNN are extremely wide and include medical data analysis [25,26], autonomous vehicles [27], natural language processing [28], image style transfer [29], computational chemistry [30], and environmental monitoring control systems [31]. There is no direct relationship between CNNs and short-term memory (STM) in the cognitive sense. CNNs do not have memory components that function as human STM. However, recurrent neural networks (RNNs) [24] are a different type of neural network architecture that is often used for tasks involving sequential data and can exhibit memory-like behavior. Unlike CNNs, RNNs have internal memory cells that can store and process information across time steps, which makes them suitable for tasks where the order and context of data matter, such as natural language processing. Adding memory-like behavior to CNNs can expand the possibilities of their applications.

This study proposes a novel hybrid model of short-term memory that incorporates short-term synaptic plasticity, astrocytic modulation of synaptic transmission, and a convolutional neural network. When compared to the recurrent neural network, the proposed model demonstrates better efficiency in the implementation of short-term memory.

The structure of the paper is as follows. Section 2 outlines the mathematical model and methodology employed in this investigation. In Section 3, we present the primary findings from our examination of the model, both with and without the influence of astrocytic modulation on neuronal activity. We also compare our model to a recurrent neural network and experimental data, demonstrating that incorporating astrocytic modulation yields better alignment with the experimental results. Section 4 engages in a discussion of the obtained results and proposes potential avenues for further research. Finally, Section 5 provides a concise summary of the study’s outcomes.

2. Materials and Methods

We used experimental data obtained from mice during the visual change detection task using natural “Go”/“Catch” images, as described in the study by Hu et al. [32]. In that paper, the mice underwent a multi-step learning process, progressing from static bars to flashing bars, and eventually to natural images [33]. Spherically deformed stimuli were employed to account for variations in the distance from the eye to the monitor periphery (undistorted stimuli were used for simplicity in visualization). A set of eight contrast-normalized natural images, all with the same average brightness, was presented in grayscale (Figure 1). All figures were taken from the CIFAR-10 dataset.

Figure 1.

Sample of 8 images used for training.

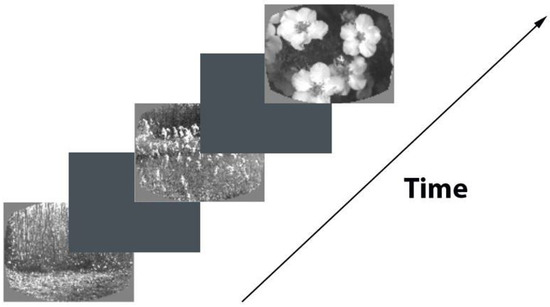

During the training phase, the mice were trained to drink water whenever a change occurred in the presented picture sequence. Each image was shown for 250 ms, followed by a 500 ms inter-stimulus interval with a medium gray brightness (see Figure 2). A small percentage (5%) of image presentations were randomly omitted, ensuring that these omissions never happened before an actual change in the image (referred to as “Go” trials) or a fictitious change in the image (referred to as “Catch” trials). Importantly, these omissions were only present during visualization sessions and not during the actual training sessions. Correct responses were rewarded with water, while premature licking triggered a “time-out” period during which the probe logic timer was reset. In “Go” trials, the images were switched, and the mice had to indicate the change to receive a water reward. Conversely, “Catch” trials involved unchanged images and were used to measure false alarms.

Figure 2.

Scheme of image recognition task.

2.1. Dynamic Synapse Model

In this study, we employed an approach described in several references [34,35,36] to investigate the dynamics of astrocytes, which are a type of glial cell. In this context, the activation of excitatory neurons triggers the release of neurotransmitters, represented by the variable , with the probability of neurotransmitter release denoted as u. Subsequently, when the neurotransmitter binds to receptors on the astrocyte membrane, a series of biochemical reactions occur, leading to the release of gliotransmitters. The dynamics of gliotransmitters are captured by the variable . As a result, the proposed model can be formulated as a system of ordinary differential equations (ODEs).

In Equation (1), the variable represents the time constant governing synaptic depression. The term signifies the presynaptic activity at time , and corresponds to the relaxation time of the gliotransmitter. The activation function is determined by Equation (2), and denotes the activation threshold.

As a dynamic synapse model (Equation (1)), we employ a mean-field model based on the Wilson–Cowan formalism [37] (which does not consider spiking dynamics but focuses on averaged neuronal activity) along with the Tsodyks–Markram model [38]. In this context, presynaptic activity, , essentially reflects the average neuronal activity, which, within our framework, can be described as follows:

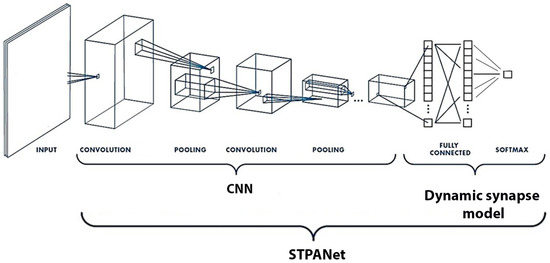

In Equation (4), the variables are interpreted as follows: represents a vector denoting presynaptic activity at distinct time points . Each element of the vector corresponds to a specific time point. The variable n signifies the total number of experiments conducted. refers to the activation function of the i-th element within the FC-64 layer of the convolutional neural network (depicted in Figure 3). Equation (4) describes how changes over time, influenced by the number of experiments conducted (n) and the output from the FC-64 layer of the CNN ().

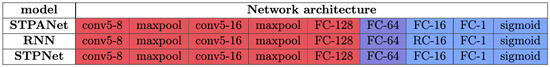

Figure 3.

Model STPNet includes only short-term synaptic adaptation, whereas STPANet also contains astrocytic regulation in modulating synaptic dynamics. RNN refers to a recurrent neural network. The convolutional layers are denoted as “conv<receptive field size>-<number of channels>”. The term “maxpool” indicates the use of max pooling with a 2 × 2 window and a stride of 2. “FC” represents fully connected layers with a specified number of units, while “RC” signifies recurrent layers with a specified number of units.

The activity of astrocytes leads to the release of gliotransmitter, which, upon binding to the membrane receptors of the presynaptic neuron, modulates the probability of neurotransmitter release according to Equation (3). In this context, represents the influence of astrocytes on the likelihood of glutamate release from the presynaptic neuron. Additionally, denotes the probability of neurotransmitter release in the absence of glial interaction, signifies the change in release probability influenced by astrocytes, and represents the activation threshold.

We adopted the standard parameters from the Tsodyks–Markram model [38], which are widely used to characterize neural activity. For the neurotransmitter and gliotransmitter parameters, we followed the tripartite synapse model proposed in previous studies [36,39]. Specifically, we set to 6. The values for the neurotransmitter and gliotransmitter parameters were the following: = 0.23, = 0.305, = 1.8, = 0.4375, = 0.5, and = 0.573.

2.2. Connection of the Dynamic Synapse Model with an Artificial Neural Network

Differential Equations (1)–(3) are used in this model, including astrocytic regulation, to describe the dynamics of chemical concentrations and their interactions in a neural network. In this model, diffuse equations describe the distribution of neuromodulators that act as mediators between neurons and astrocytes.

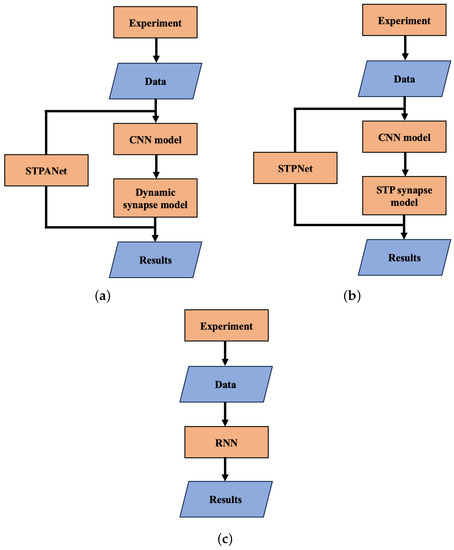

As can be seen in Figure 4a, the STPANet model consists of two submodels: a CNN and a dynamic synapse model. The output layer with CNN Equation (14) with stored weights plays the role of presynaptic activity Equation (1). Further, according to Algorithm 1, we train a dynamic synapse model, which consists of three layers: an input layer with 64 neurons, a hidden layer with 16 neurons, and an output neuron. The model weights were trained using backpropagation. Equations (1)–(3) are used in the conversion step between layers of 64 neurons and 16 neurons. We emphasize that this plasticity is presynaptic, but it can interact with the weight updates from backpropagation during neural network training.

| Algorithm 1 Learning algorithm for dynamic synapse model |

Input: matrix of preprocessed input objects, initial distribution of weights, neuron parameters, plasticity parameters, vector of classes. Parameter: N_epochs, Adam optimizer, BSE loss function, threshold, patience. Output: distribution of weights of the neural network, spike times of the output.

|

Figure 4.

Block diagram of the work carried out. (a) STPANet, (b) STPNet, (c) RNN.

By incorporating differential equations, the STPANet model captures the temporal dynamics and spatial distribution of chemical substances, enabling a more comprehensive understanding of how neuromodulators propagate and affect neural activity.

In summary, the inclusion of differential equations, along with astrocytic regulation, in the STPANet model provides a more comprehensive framework for studying the dynamics of chemical interactions within neural networks. These equations enable the modeling of neuromodulator diffusion, synaptic plasticity, and the impact of astrocytes, contributing to a deeper understanding of the complex mechanisms underlying neural network behavior and plasticity.

2.3. Numerical Simulation Method

Numerical integration was performed using the Euler method. The implementation of numerical methods and data analysis utilized the Python [40] programming language, along with the PyTorch library [41] for model simulation, NumPy [42] for arrays, and the Matplotlib library [43] for data visualization and analysis. As a discretization step, a value was obtained, which is derived from this equation:

where is the duration of each experiment in milliseconds = 50,000 ms and is simulation time step, which is numerically equal to the time one image is shown = 250 ms. Therefore, we compare models in the range of 200 conventional units, where 1 conventional unit corresponds to the sampling step, i.e., the time of displaying one image.

2.4. D-Prime Metric

To evaluate the efficacy of our model compared to a neural network architecture that incorporates only short-term synaptic depression and a recurrent network, we utilized the d-prime metric (detectability index). A higher value of this index indicates better signal recognition. This metric, originally introduced in the study by Hu et al. [32], was employed to assess the capacity to retain and recall a sequence of images.

The d-prime index is calculated using the following formula:

where is the standard deviation and and are “Go” and “Catch” trial distributions, respectively.

Distributions of “Go” and “Catch” are obtained in several stages:

- A sigmoid activation function is fed to the output layer from the model so that the output takes values strictly from 0 to 1:where is an array of the “Go” values or “Catch” values.

- After applying the activation function, draw binary random numbers (0 or 1) from a Bernoulli distribution. The i-th element of the will draw a value according to the i-th probability value given in :

- Introduce the notation for “Go” and “Catch” experiments:“Go”:“Catch”:After that, we can evaluate and :

2.5. Calculate Matrix Asymmetry

The metric utilized is the ratio of the difference between the symmetric and anti-symmetric matrices to the sum of their norms. The formula for this metric is as follows:

- Compute the symmetric matrix () by taking the average of the input matrix and its transpose:

- Compute the anti-symmetric matrix () by taking the difference between the input matrix and its transpose divided by 2:

The resulting asymmetry metric (Q) provides a measure of the asymmetry between the symmetric and anti-symmetric components of the matrix. A higher value indicates greater asymmetry, while a value close to zero suggests a more balanced or symmetric matrix.

3. Results

In general, the work can be divided into several parts, as presented in the block diagram below (Figure 4).

In study [32], an experiment was conducted from which we obtained data for further research. Subsequently, two neural network models were constructed: a convolutional neural network and a dynamic synapse model. Based on the predictions obtained from these models, the results were summarized.

In this paper, we study the STPANet model, which consists of a convolutional neural network (CNN) model and a dynamic synapse model. The dynamic synapse model, Equations (1)–(3), is a short-term memory model with the addition of astrocytic regulation.

3.1. Hybrid Artificial Neural Network Model

A feed-forward neural network was utilized to encode a collection of natural images into a lower-dimensional feature space (see Figure 3 and Figure 5). The convolutional neural network (CNN) underwent training using a grayscale version of the CIFAR-10 dataset [44] and consisted of two convolutional layers followed by two fully connected layers. Once the training process was completed, the weights of the network were saved. For the dynamic synapse model, the input data were obtained by extracting the output of the last fully connected layer, which precedes the classifier.

Figure 5.

CNN: Image features were extracted from the last fully connected layer of a pre-trained convolutional neural network (CNN) trained on a grayscale version of the CIFAR-10 object recognition task. This CNN serves as an encoder network, mapping the input image to a lower-dimensional feature space, which is used as input data for the model. Dynamic synapse model: The model represents a neuron–glia network with short-term synaptic plasticity. It consists of three layers: an input layer with 64 neurons, a hidden layer with 16 neurons, and an output neuron. The model weights were trained using backpropagation.

The STPNet model incorporates only short-term synaptic adaptation, while the STPANet model includes additional astrocytic regulation for modulating synaptic dynamics. The RNN abbreviation stands for recurrent neural network, and the convolutional layers are labeled as “conv<receptive field size>-<number of channels>”. The term “maxpool” indicates the utilization of max pooling with a 2 × 2 window and a stride of 2. “FC” denotes fully connected layers with a specific number of units, while “RC” represents recurrent layers with a specified number of units. At the output of the convolutional neural network, we have a layer of size 64, all weights are saved, and in this form the data are fed to the input of one of three models: STPANet, RNN, STPNet.

CNN: In order to obtain image features, a pre-trained convolutional neural network (CNN) was utilized, which had been trained on a grayscale variation of the CIFAR-10 object recognition task. This CNN functioned as an encoder network, responsible for mapping the input image to a lower-dimensional feature space. These extracted features were then employed as input data for the subsequent stages of the model. By leveraging the pre-trained CNN’s learned representations, the model could effectively capture and utilize the rich visual information present in the images, enhancing its overall performance.

Dynamic synapse model: The architecture of the model encompasses a neuron–glia network that incorporates short-term synaptic plasticity. It comprises 3 distinct layers, starting with an input layer comprising 64 neurons, followed by a hidden layer housing 16 neurons, and culminating in an output neuron. The model’s weights were trained using the backpropagation algorithm, which facilitated the iterative adjustment of the weights based on the computed error signals. This training process enabled the model to learn and adapt its synaptic connections, optimizing its ability to process and generate accurate outputs. The utilization of short-term synaptic plasticity within the model allows for the dynamic modulation of synaptic strength, leading to more sophisticated information processing capabilities and enhancing the model’s overall performance.

The dynamic synapse model received an input represented by a matrix denoted as M:

In Equation (14), the variables have the following interpretations: presynaptic activity and s indicate the size of the output layer obtained from the CNN.

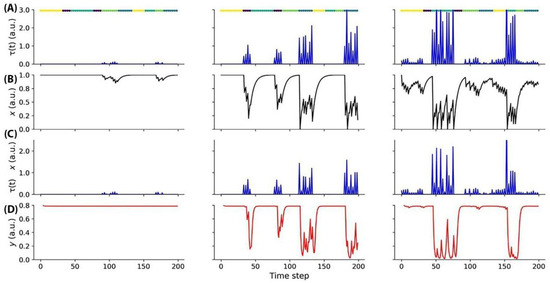

Our model was evaluated in the context of the change detection task (Figure 2). The input activity provided to the model was sparse, aligning with the observed responses in a comprehensive survey conducted on the mouse visual cortex at a large scale (Figure 6).

Figure 6.

(A) The figure illustrates the input activity during the change detection task. Each image was presented for a duration of 250 ms (one time step), followed by a 500 ms (two time steps) gray screen. In the left block, weak responses to a single image are observed. The central block shows responses to two images, with one image eliciting a stronger response than the other. The right block gradually responds to four images. The time of image presentation is indicated by color-coded segments displayed above each plot. (B) The synaptic efficacy (depression) of the units depicted in panel A undergoes changes based on the received input. (C) The input activity of the units shown in panel A is modulated by short-term activity. (D) The dynamics of gliotransmitters.

Figure 6A depicts the activity patterns observed during the change detection task in response to the input stimuli. Each image in the sequence was presented for a duration of 250 ms, which corresponds to a single time step, followed by a 500 ms interval of a gray screen, spanning two time steps. In the left block of Figure 6A, the responses to a single image are relatively weak, indicating lower levels of neural activity. Moving to the central block, responses to two images are illustrated, with one of the images evoking a stronger response compared to the other. This discrepancy in response strengths signifies the neural discrimination between the presented stimuli. The right block of Figure 6A showcases a gradual increase in responses to four images, suggesting a progressive accumulation of neural activity over time. This gradual response pattern indicates the integration of information from multiple image presentations. To facilitate interpretation, color-coded segments displayed above each plot indicate the specific time points of image presentation, aiding in the visualization of the temporal dynamics of neural activity. By examining these activity patterns, we can gain insights into the temporal processing and encoding of visual information within the neural network involved in the change detection task.

The units depicted in panel A exhibit dynamic changes in synaptic efficacy, specifically through a process of synaptic depression, which is influenced by the input they receive (Figure 6B). These changes in synaptic efficacy are crucial for shaping the overall functioning of the neural network. As the units receive input, their synaptic connections undergo modifications, leading to the attenuation or reduction in the strength of synaptic transmission. This process of synaptic depression allows for the network to adapt to varying levels of input and maintain stability by preventing excessive neural activity. By dynamically adjusting synaptic efficacy, the network can effectively regulate the flow of information and optimize its response to the incoming stimuli. Understanding the mechanisms underlying synaptic depression provides valuable insights into the neural circuit’s ability to process and encode information, ultimately contributing to our understanding of neural computation. The activity of the units displayed in panel A is subject to modulation by short-term activity dynamics (Figure 6C). Short-term activity refers to the transient changes in neural firing rates and synaptic efficacy that occur over relatively brief time intervals. In the context of the depicted units, the input activity they receive is influenced and regulated by these short-term activity dynamics. By incorporating short-term activity modulation, the units are capable of flexibly adjusting their responsiveness to incoming input, allowing for dynamic processing and adaptation to changing environmental conditions. This modulation enhances the network’s ability to encode and integrate information, enabling more nuanced and context-dependent computations. The dynamics of gliotransmitters refers to the temporal changes and interactions of signaling molecules released (Figure 6D).

3.2. Learning Process

Algorithm 2 trains a convolutional neural network model on the CIFAR-10 dataset. It utilizes the stochastic gradient descent (SGD) algorithm to optimize the model parameters. The model comprises several convolutional layers, pooling layers, and fully connected layers. Training is conducted using the cross-entropy loss function.

| Algorithm 2 Learning algorithm for CNN model. |

Input: a grayscale image, represented as a single-channel image. Parameter: N_epochs, Batch_size, SGD optimizer, Cross-entropy loss function. Output: the probabilities of the image belonging to each of the classes.

|

Algorithm 1 presents the implementation of a training algorithm for a neural network. The algorithm incorporates the definition of the dynamic synapse model class, which is a neural network model that incorporates the dynamics of STPNet, astrocytic regulation, and spike generation. Additionally, the algorithm calculates the d-prime index.

3.3. Experimental Simulation

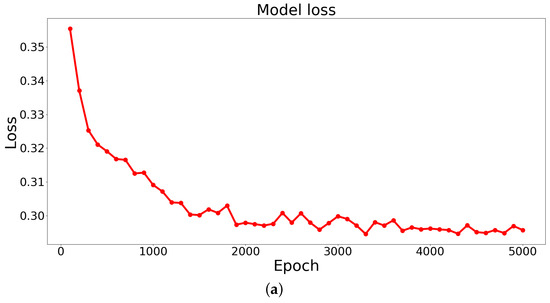

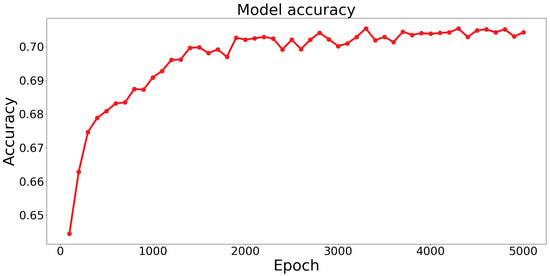

Based on the results obtained from training the STPANet model, accuracy and error graphs were generated (Figure 7).

Figure 7.

The graphs display the model’s (a) loss (error dependence on the number of epochs) and (b) accuracy (accuracy dependence on the number of epochs).

From these graphs, the following conclusions can be drawn:

- Overtraining and undertraining are not observed.

- The optimal value of error and accuracy is reached at about 4000 epochs.

After around 4000 epochs, the model reached its peak performance, achieving the optimal balance between error reduction and accuracy improvement. This implies that further training iterations did not significantly contribute to enhancing the model’s performance.

The identification of this optimal point is crucial, as it allows for the selection of an appropriate stopping criterion during the training process, preventing unnecessary computational costs and saving resources. Additionally, it highlights the importance of monitoring the training progression and evaluating the model’s performance at different epochs to determine the point of convergence and achieve the desired level of accuracy.

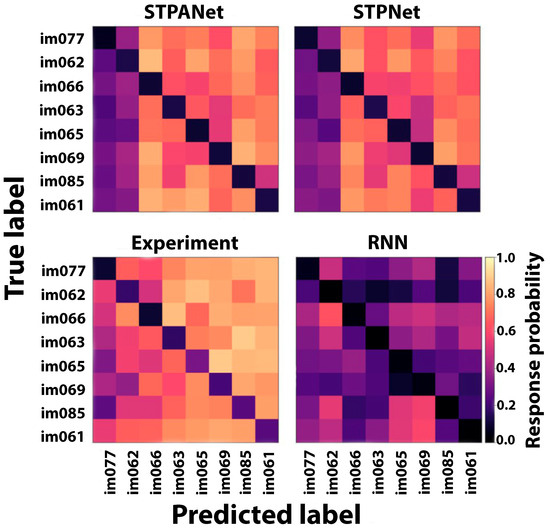

Furthermore, in addition to generating plots for model comparison, we present the mean response probability matrices that illustrate the likelihood of a response for each of the 64 potential image transitions during task execution. The STPANet response probability matrix reveals an asymmetry in image detectability, which is also partially captured by the STPNet model but remains unaccounted for by the RNN model (Figure 8). The experimental data were obtained from the study by Hu et al. [32].

Figure 8.

A confusion matrix is presented, which indicates the probability of responding to each of the possible 64 image transitions during the task. In the matrix, a value of 1 indicates that the image has not been shown before, while a value of 0 signifies that the image has already been shown.

The confusion matrix serves as a valuable tool for evaluating the model’s performance in correctly identifying and distinguishing between different image transitions. This information aids in understanding the model’s capacity to learn and remember the visual patterns associated with specific image transitions. The inclusion of the confusion matrix allows for a comprehensive analysis of the model’s performance, facilitating the identification of potential areas of improvement and informing further training strategies.

After constructing confusion matrices to compare the presented models with the experiment, the asymmetry of the matrices was calculated for each model using Equation (11) (Table 1).

Table 1.

The table shows the asymmetry metrics of the matrices.

Based on the obtained values (Table 1), it can be observed that the STPANet model exhibits the strongest correlation with the experiment.

The calculated d-prime metric using Equation (6) for the three types of models is presented in Table 2 below:

Table 2.

The average d-prime metric values, accompanied by their respective standard deviations, were computed for three models: RNN, STPNet, and STPANet.

The purpose of employing this metric was to demonstrate better classification accuracy achieved by our neural network architecture, which incorporates astrocytic modulation, for both familiar and novel images.

By utilizing this metric, we aimed to showcase the enhanced performance of our model in accurately categorizing not only images that were previously encountered but also those that were novel and unseen during the training phase. This ability to effectively generalize and classify unfamiliar images is a significant advantage of our neural network architecture.

The integration of astrocytic modulation within our architecture offers additional regulatory mechanisms that contribute to improved classification accuracy. The astrocytic modulation allows for dynamic adjustments of synaptic strength and facilitates efficient information processing and discrimination between different image categories.

4. Discussion

Based on analyzing neural and behavioral data related to the visual change detection problem [32], we constructed hybrid neuron network models targeting the implementation of the short-term memory features. Specifically, these models were utilized to test predictions of various short-term memory mechanisms. Our findings verified that adaptation mechanisms, rather than sustained activity, were employed for detecting changes in natural images. Additionally, we eventually demonstrated that accounting for the astrocytic regulation in the dynamical synapses led to better performance in the implementation of image processing tasks.

Our models indicate that short-term plasticity may support short-term memory in early sensory cortex neural circuits, acting as the main memory source in the change detection task. Multiple lines of evidence support our proposed model, including behavioral responses, neural response adaptation, and responses to omitted image presentations. Image repetition causes synapse adaptation, reducing information about image identity. Presentation of a change image activates a new set of input units, influencing hidden unit responses and facilitating image change decoding. Plasticity with astrocyte modulation acts as a temporal filter, enabling comparison of repeated and novel stimuli. Behavioral asymmetry results from different saliency levels, impacting stimulus processing. Models without bottom-up attention lack this behavior. While models with persistent neural activity can solve the task, they are less consistent with observed data. Recurrent neural networks tend to show symmetric responses, requiring further investigation. Depressing synapses on sensory input neurons may sufficiently capture neural dynamics in early sensory cortex for the change detection task. Causal optogenetic perturbations are needed to confirm our results.

In the context of the proposed approach, which involves the integration of an artificial neural network and a dynamic model, which can be used for constructing a spiking neural network, it is pertinent to delve into the distinctions between an artificial neural network and a spiking neural network. Spiking neural networks (SNNs) are distinctive due to their utilization of spiking neuron models, which transmit information through discrete spikes. These spikes carry temporal information and are well-suited for tasks involving precise timing, such as event-based vision. SNNs often employ spike-timing-dependent plasticity (STDP) for learning, capturing biological learning mechanisms [45,46,47].

On the other hand, artificial neural networks (ANNs), particularly convolutional neural networks (CNNs), are widely used for various image tasks such as image classification, object detection, and image segmentation. ANNs rely on continuous activation functions and gradient-based optimization methods for training [24]. This allows them to capture complex features and patterns within images, but they might lack the temporal precision inherent in SNNs.

While SNNs offer advantages in handling temporal information and energy efficiency through sparse spiking, they may require specialized hardware for efficient computation [48]. ANNs, on the other hand, are more established and practical due to their familiarity and widespread adoption. Furthermore, approaches are under development regarding the utilization of supervised, biologically plausible perceptron-based learning for spiking neural networks (SNNs). These approaches aim to construct deep SNNs and create hybrid models that combine convolutional neural networks (CNNs) with spiking neural networks [45].

5. Conclusions

Short-term memory, also known as working memory, is an essential cognitive process that involves temporarily storing and manipulating information. It plays a crucial role in attention, learning, problem-solving, and decision-making. This study presents a new hybrid model for short-term memory that combines short-term synaptic plasticity, astrocytic modulation of synaptic transmission, and a convolutional neural network. By comparing it with a recurrent neural network, the research demonstrates that the proposed model offers improved efficiency in accurately representing short-term memory.

Currently, convolutional neural networks are extensively utilized and, specifically, are actively employed in analyzing medical data related to socially significant diseases [26,49]. The suggested architecture enables the incorporation of a short-term memory effect into a convolutional neural network, thereby enhancing the capabilities of these architectures.

A possible future research direction could involve incorporating the regulation of synaptic transmission by the brain’s extracellular matrix into the model. Experimental and model studies suggest that this regulation can influence processes associated with memory and neural network activity [50,51,52].

Author Contributions

Conceptualization, S.V.S.; methodology, S.V.S.; software, S.V.S. and I.A.Z.; validation, S.V.S.; formal analysis, S.V.S. and I.A.Z.; investigation, S.V.S. and I.A.Z.; resources, S.V.S.; data curation, S.V.S. and I.A.Z.; writing—original draft preparation, S.V.S., I.A.Z. and V.B.K.; writing—review and editing, S.V.S., I.A.Z. and V.B.K.; visualization, S.V.S. and I.A.Z.; supervision, S.V.S.; project administration, S.V.S.; and funding acquisition, S.V.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Russian Science Foundation (Project No. 22-71-00074).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| STPNet | Convolutional neural network with short-term plasticity |

| STPANet | Convolutional neural network with short-term plasticity and astrocyte modulation |

| RNN | Recurrent neural network |

| CNN | Convolutional neural network |

References

- Baddeley, A. Working memory. Curr. Biol. 2010, 20, R136–R140. [Google Scholar] [CrossRef] [PubMed]

- Miller, G. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81. [Google Scholar] [CrossRef] [PubMed]

- Cowan, N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behav. Brain Sci. 2001, 24, 87–114. [Google Scholar] [CrossRef]

- Wager, T.; Smith, E. Neuroimaging studies of working memory. Cogn. Affect. Behav. Neurosci. 2003, 3, 255–274. [Google Scholar] [CrossRef] [PubMed]

- Engle, R. Working memory capacity as executive attention. Curr. Dir. Psychol. Sci. 2002, 11, 19–23. [Google Scholar] [CrossRef]

- Park, D.; Polk, T.; Mikels, J.; Taylor, S.; Marshuetz, C. Cerebral aging: Integration of brain and behavioral models of cognitive function. Dialogues Clin. Neurosci. 2001, 3, 151–165. [Google Scholar] [CrossRef]

- Postle, B. Working memory as an emergent property of the mind and brain. Neuroscience 2006, 139, 23–38. [Google Scholar] [CrossRef]

- Wang, X. Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 2001, 24, 455–463. [Google Scholar] [CrossRef]

- Guo, Z.; Inagaki, H.; Daie, K.; Druckmann, S.; Gerfen, C.; Svoboda, K. Maintenance of persistent activity in a frontal thalamocortical loop. Nature 2017, 545, 181–186. [Google Scholar] [CrossRef]

- Zylberberg, J.; Strowbridge, B. Mechanisms of persistent activity in cortical circuits: Possible neural substrates for working memory. Annu. Rev. Neurosci. 2017, 40, 603–627. [Google Scholar] [CrossRef]

- Romo, R.; Brody, C.; Hernández, A.; Lemus, L. Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 1999, 399, 470–473. [Google Scholar] [CrossRef] [PubMed]

- Barak, O.; Tsodyks, M. Working models of working memory. Curr. Opin. Neurobiol. 2014, 25, 20–24. [Google Scholar] [CrossRef] [PubMed]

- Mongillo, G.; Barak, O.; Tsodyks, M. Synaptic theory of working memory. Science 2008, 319, 1543–1546. [Google Scholar] [CrossRef] [PubMed]

- Seeman, S.; Campagnola, L.; Davoudian, P.; Hoggarth, A.; Hage, T.; Bosma-Moody, A.; Baker, C.; Lee, J.; Mihalas, S.; Teeter, C. Others Sparse recurrent excitatory connectivity in the microcircuit of the adult mouse and human cortex. Elife 2018, 7, e37349. [Google Scholar] [CrossRef] [PubMed]

- Grill-Spector, K.; Henson, R.; Martin, A. Repetition and the brain: Neural models of stimulus-specific effects. Trends Cogn. Sci. 2006, 18, 14–23. [Google Scholar] [CrossRef]

- Whitmire, C.; Stanley, G. Rapid sensory adaptation redux: A circuit perspective. Neuron 2016, 92, 298–315. [Google Scholar] [CrossRef]

- Luck, S.; Vogel, E. The capacity of visual working memory for features and conjunctions. Nature 1997, 390, 279–281. [Google Scholar] [CrossRef]

- Hollingworth, A.; Henderson, J. Accurate visual memory for previously attended objects in natural scenes. J. Exp. Psychol. Hum. Percept. Perform. 2002, 28, 113. [Google Scholar] [CrossRef]

- Vogel, E.; Woodman, G.; Luck, S. Pushing around the locus of selection: Evidence for the flexible-selection hypothesis. J. Cogn. Neurosci. 2005, 17, 1907–1922. [Google Scholar] [CrossRef]

- Perez-Catalan, N.; Doe, C.; Ackerman, S. The role of astrocyte-mediated plasticity in neural circuit development and function. Neural Dev. 2021, 16, 1. [Google Scholar] [CrossRef]

- Perea, G.; Araque, A. Astrocytes potentiate transmitter release at single hippocampal synapses. Science 2007, 317, 1083–1086. [Google Scholar] [CrossRef]

- Suzuki, A.; Stern, S.; Bozdagi, O.; Huntley, G.; Walker, R.; Magistretti, P.; Alberini, C. Astrocyte-neuron lactate transport is required for long-term memory formation. Cell 2011, 144, 810–823. [Google Scholar] [CrossRef]

- Ango, F.; Wu, C.; Want, J.; Wu, P.; Schachner, M.; Huang, Z. Bergmann glia and the recognition molecule CHL1 organize GABAergic axons and direct innervation of Purkinje cell dendrites. PLoS Biol. 2008, 6, e103. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Litjens, G.; Kooi, T.; Bejnordi, B.; Setio, A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.; Van Ginneken, B.; Sánchez, C. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Podder, P.; Das, S.; Mondal, M.; Bharati, S.; Maliha, A.; Hasan, M.; Piltan, F. Lddnet: A deep learning framework for the diagnosis of infectious lung diseases. Sensors 2023, 23, 480. [Google Scholar] [CrossRef] [PubMed]

- Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to end learning for self-driving cars. arXiv 2016, arXiv:1604.07316. [Google Scholar]

- Kim, Y. Convolutional neural networks for sentence classification. arXiv 2014, arXiv:1408.5882. [Google Scholar]

- Gupta, V.; Sadana, R.; Moudgil, S. Image style transfer using convolutional neural networks based on transfer learning. Int. J. Comput. Syst. Eng. 2019, 5, 53–60. [Google Scholar] [CrossRef]

- Goh, G.; Hodas, N.; Vishnu, A. Deep learning for computational chemistry. J. Comput. Chem. 2017, 38, 1291–1307. [Google Scholar] [CrossRef]

- Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep learning earth observation classification using ImageNet pretrained networks. IEEE Geosci. Remote Sens. Lett. 2015, 13, 105–109. [Google Scholar] [CrossRef]

- Hu, B.; Garrett, M.; Groblewski, P.; Ollerenshaw, D.; Shang, J.; Roll, K.; Manavi, S.; Koch, C.; Olsen, S.; Mihalas, S. Adaptation supports short-term memory in a visual change detection task. PLoS Comput. Biol. 2021, 17, e1009246. [Google Scholar] [CrossRef] [PubMed]

- Garrett, M.; Manavi, S.; Roll, K.; Ollerenshaw, D.; Groblewski, P.; Ponvert, N.; Kiggins, J.; Casal, L.; Mace, K.; Williford, A.; et al. Experience shapes activity dynamics and stimulus coding of VIP inhibitory cells. Elife 2020, 9, e50340. [Google Scholar] [CrossRef]

- Stasenko, S.; Kazantsev, V. Dynamic Image Representation in a Spiking Neural Network Supplied by Astrocytes. Mathematics 2023, 11, 561. [Google Scholar] [CrossRef]

- Stasenko, S.; Lazarevich, I.; Kazantsev, V. Quasi-synchronous neuronal activity of the network induced by astrocytes. Procedia Comput. Sci. 2020, 169, 704–709. [Google Scholar] [CrossRef]

- Barabash, N.; Levanova, T.; Stasenko, S. Rhythmogenesis in the mean field model of the neuron–glial network. Eur. Phys. J. Spec. Top. 2023, 232, 529–534. [Google Scholar] [CrossRef]

- Wilson, H.; Cowan, J. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 1972, 12, 1–24. [Google Scholar] [CrossRef]

- Tsodyks, M.; Pawelzik, K.; Markram, H. Neural networks with dynamic synapses. Neural Comput. 1998, 10, 821–835. [Google Scholar] [CrossRef]

- Olenin, S.; Levanova, T.; Stasenko, S. Dynamics in the Reduced Mean-Field Model of Neuron–Glial Interaction. Mathematics 2023, 11, 2143. [Google Scholar] [CrossRef]

- Van Rossum, G.; Drake, F., Jr. Python Tutorial; Centrum voor Wiskunde en Informatica: Amsterdam, The Netherlands, 1995. [Google Scholar]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L. Others PyTorch: An Imperative Style, High-Performance Deep Learning Library. arXiv 2019, arXiv:1912.01703. [Google Scholar]

- Harris, C.; Millman, K.; Van Der Walt, S.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N. Others Array programming with NumPy. Nature 2020, 585, 357–362. [Google Scholar] [CrossRef] [PubMed]

- Bisong, E. Matplotlib and seaborn. In Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners; Apress: Berkeley, CA, USA, 2019; pp. 151–165. [Google Scholar]

- Ho-Phuoc, T. CIFAR10 to compare visual recognition performance between deep neural networks and humans. arXiv 2018, arXiv:1811.07270. [Google Scholar]

- Tsur, E. Neuromorphic Engineering: The Scientist’s, Algorithms Designer’s and Computer Architect’s Perspectives on Brain-Inspired Computing, 1st ed.; CRC Press: Boca Raton, FL, USA, 2021; p. 330. [Google Scholar] [CrossRef]

- Sterratt, D.; Graham, B.; Gillies, A.; Willshaw, D. Principles of Computational Modelling in Neuroscience, 1st ed.; Cambridge University Press: Cambridge, UK, 2011; p. 390. [Google Scholar] [CrossRef]

- Caporale, N.; Dan, Y. Spike timing–dependent plasticity: A Hebbian learning rule. Annu. Rev. Neurosci. 2008, 31, 25–46. [Google Scholar] [CrossRef] [PubMed]

- Davies, M.; Srinivasa, N.; Lin, T.; Chinya, G.; Cao, Y.; Choday, S.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S. Others Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- Stasenko, S.; Kovalchuk, A.; Eremin, E.; Drugova, O.; Zarechnova, N.; Tsirkova, M.; Permyakov, S.; Parin, S.; Polevaya, S. Using Machine Learning Algorithms to Determine the Post-COVID State of a Person by Their Rhythmogram. Sensors 2023, 23, 5272. [Google Scholar] [CrossRef]

- Stasenko, S.; Kazantsev, V. Bursting Dynamics of Spiking Neural Network Induced by Active Extracellular Medium. Mathematics 2023, 11, 2109. [Google Scholar] [CrossRef]

- Kazantsev, V.; Gordleeva, S.; Stasenko, S.; Dityatev, A. A homeostatic model of neuronal firing governed by feedback signals from the extracellular matrix. PLoS ONE 2012, 7, e41646. [Google Scholar] [CrossRef]

- Dityatev, A.; Rusakov, D. Molecular signals of plasticity at the tetrapartite synapse. Curr. Opin. Neurobiol. 2011, 21, 353–359. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).