A Novel Underwater Image Enhancement Using Optimal Composite Backbone Network

Abstract

1. Introduction

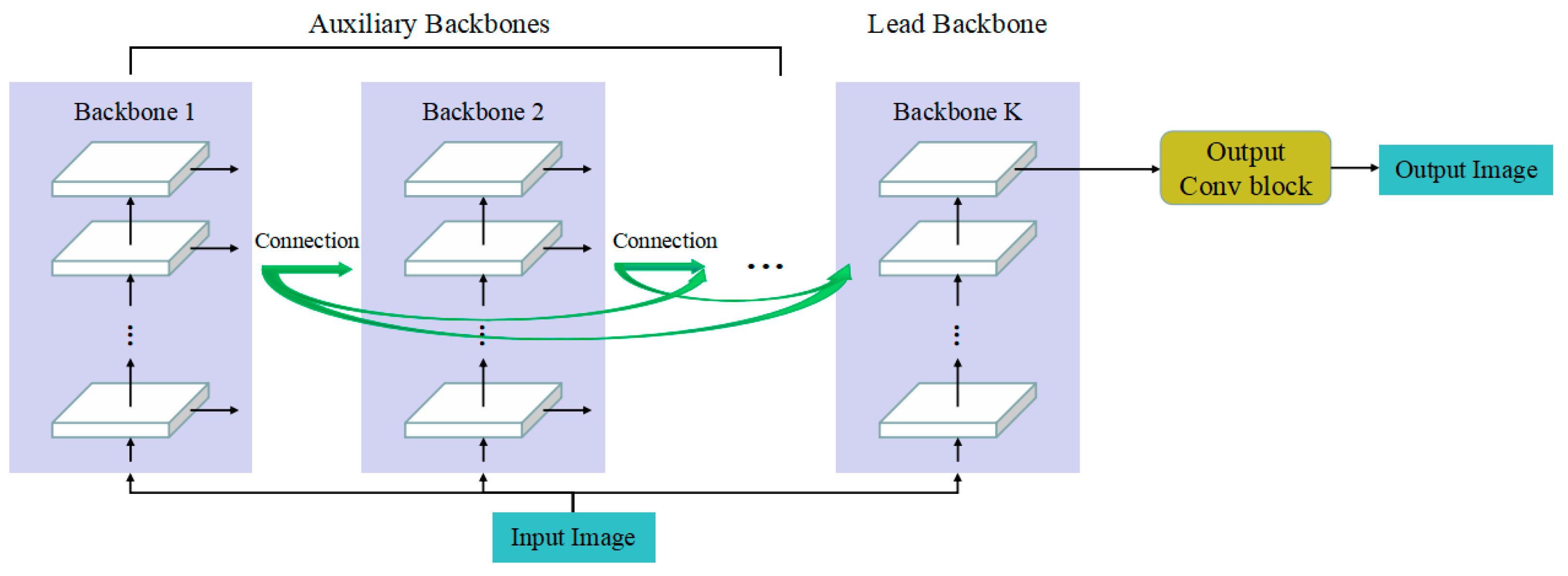

- We introduced a composite backbone into CNN-based underwater image enhancement. The composite backbone network consists of several identical end-to-end CNN backbones linked by specific connection strategies, and it is applied throughout the enhancement network;

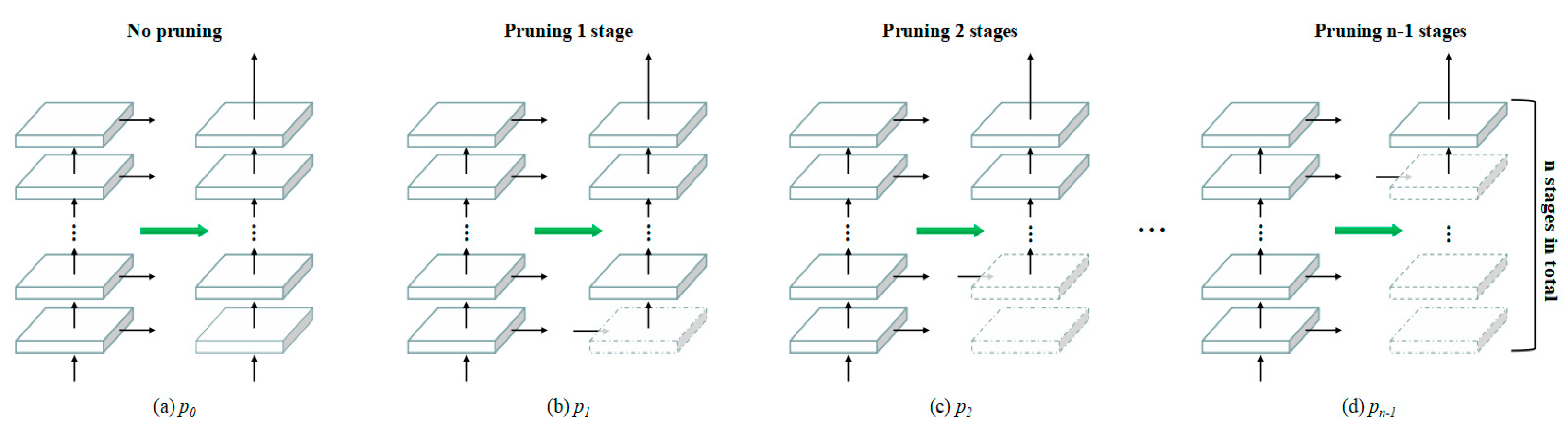

- We conducted a comprehensive study of CBNet variants for underwater image enhancement. We first investigated how the number of backbones affects the enhancement results, then compared different connection strategies between backbones together with corresponding pruning strategies, and finally evaluated the effect of auxiliary losses;

- We proposed an optimal composite backbone network for underwater image enhancement. The optimized network consists of two backbones in full-connected composition (FCC), with two stages pruned in the lead backbone. It accurately renders objects as well as background colors and is well suited to engineering applications.
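The connection strategies compared in this work differ only in which feature levels of an earlier (assisting) backbone are added to the input of each stage of the next backbone. A minimal sketch of those rules, following our reading of the CBNet and CBNetV2 papers listed in the references, is given below. The function names, the 1-indexed level convention, the choice that FCC draws on every level, and the NumPy matrices standing in for CNN stages are illustrative assumptions, not the authors' implementation. In a real network the fusion step would be a 1×1 convolution with resampling; here it is replaced by the identity because all toy stages share one width.

```python
import numpy as np

STRATEGIES = ("SLC", "AHLC", "ALLC", "DSLC", "DHLC", "FCC")


def composition_sources(strategy, k, level, num_levels):
    """Return the (backbone, level) pairs fused into the input of stage `level`
    of backbone `k` (both 1-indexed; the last backbone is the lead backbone)."""
    if k == 1:
        return []  # the first backbone has no predecessor to receive from
    prev = k - 1
    if strategy == "SLC":  # same level of the previous backbone
        pairs = [(prev, level)]
    elif strategy == "AHLC":  # adjacent higher level of the previous backbone
        pairs = [(prev, level + 1)]
    elif strategy == "ALLC":  # adjacent lower level of the previous backbone
        pairs = [(prev, level - 1)]
    elif strategy == "DSLC":  # same level of every earlier backbone
        pairs = [(j, level) for j in range(1, k)]
    elif strategy == "DHLC":  # all higher levels of the previous backbone
        pairs = [(prev, i) for i in range(level + 1, num_levels + 1)]
    elif strategy == "FCC":  # every level of the previous backbone (our reading)
        pairs = [(prev, i) for i in range(1, num_levels + 1)]
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    # Drop levels that do not exist, e.g. AHLC at the top stage.
    return [(b, i) for b, i in pairs if 1 <= i <= num_levels]


def composite_forward(x, weights, strategy):
    """Toy forward pass: weights[k][l] is a square matrix standing in for
    stage l of backbone k. Backbones run in order, so every feature an
    assisting backbone provides is ready before the next backbone needs it."""
    num_backbones, num_levels = len(weights), len(weights[0])
    feats = {}  # (backbone, level) -> stage output
    for k in range(1, num_backbones + 1):
        h = x
        for level in range(1, num_levels + 1):
            for src in composition_sources(strategy, k, level, num_levels):
                h = h + feats[src]  # identity stand-in for the fusion operator
            h = np.tanh(weights[k - 1][level - 1] @ h)
            feats[(k, level)] = h
    return feats[(num_backbones, num_levels)]  # output of the lead backbone


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    num_backbones, num_levels, width = 2, 4, 8
    weights = [[rng.normal(scale=0.3, size=(width, width)) for _ in range(num_levels)]
               for _ in range(num_backbones)]
    x = rng.normal(size=width)
    for s in STRATEGIES:
        out = composite_forward(x, weights, s)
        print(s, composition_sources(s, 2, 2, num_levels), out.shape)
```

With two backbones, DSLC and SLC produce the same connections because only one earlier backbone exists; the dense same-level variant differs only once three or more backbones are stacked.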

2. Related Work

2.1. Deep Learning-Based Underwater Image Enhancement

2.2. Underwater Dataset

2.3. Backbone Network

3. Backbone Network Details

3.1. Global Architecture

3.2. Backbone Architecture

3.3. Output Convolution Block

4. Research on CBNet-Based Underwater Image Enhancement

4.1. Implementation Details

4.2. Backbone Number Analysis

4.3. Connection Strategy Analysis

4.3.1. Adjacent Higher-Level Composition (AHLC)

4.3.2. Adjacent Lower-Level Composition (ALLC)

4.3.3. Dense Same-Level Composition (DSLC)

4.3.4. Dense Higher-Level Composition (DHLC)

4.3.5. Full-Connected Composition (FCC)

4.4. Pruning Strategy Analysis

4.5. Auxiliary Loss Analysis
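The auxiliary-loss configuration studied here is not spelled out in this section. One common form of auxiliary supervision for composite backbones, in the spirit of the assisting-backbone supervision described in the CBNetV2 paper listed in the references, adds a down-weighted loss on an extra output taken from each assisting backbone to the loss on the lead backbone's output. The sketch below assumes an MSE reconstruction loss and a hypothetical weight `aux_weight = 0.5`; neither the loss type nor the weight is taken from this paper.

```python
import numpy as np


def mse(pred, target):
    """Mean squared error over all pixels and channels."""
    return float(np.mean((np.asarray(pred, dtype=np.float64) - target) ** 2))


def total_loss(lead_out, assist_outs, target, aux_weight=0.5):
    """Loss on the lead backbone's output plus a down-weighted auxiliary loss
    on each assisting backbone's output. `aux_weight` is a hypothetical
    hyperparameter; aux_weight=0 recovers training without auxiliary losses."""
    loss = mse(lead_out, target)
    for out in assist_outs:
        loss += aux_weight * mse(out, target)
    return loss


if __name__ == "__main__":
    target = np.zeros((4, 4, 3))
    lead = np.full_like(target, 0.1)    # lead-backbone prediction
    assist = np.full_like(target, 0.2)  # prediction from an auxiliary head
    print(round(total_loss(lead, [assist], target), 6))  # 0.01 + 0.5 * 0.04 = 0.03
```

At inference time only the lead backbone's output would be used, so an auxiliary loss of this kind changes training but not runtime.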

4.6. Summary

5. Cross-Sectional Research

5.1. Reference Subset Evaluation

5.2. Challenging Subset Evaluation

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Cong, Y.; Fan, B.; Hou, D.; Fan, H.; Liu, K.; Luo, J. Novel event analysis for human-machine collaborative underwater exploration. Pattern Recognit. 2019, 96, 106967.

- Zhou, Y.; Li, B.; Wang, J.; Rocco, E.; Meng, Q. Discovering unknowns: Context-enhanced anomaly detection for curiosity-driven autonomous underwater exploration. Pattern Recognit. 2022, 131, 108860.

- Zhang, W.; Zhuang, P.; Sun, H.-H.; Li, G.; Kwong, S.; Li, C. Underwater Image Enhancement via Minimal Color Loss and Locally Adaptive Contrast Enhancement. IEEE Trans. Image Process. 2022, 31, 3997–4010.

- Li, W.; Hu, W.; Dong, T.; Qu, J. Depth Image Enhancement Algorithm Based on RGB Image Fusion. In Proceedings of the 2018 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 8–9 December 2018; Volume 2, pp. 111–114.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color Balance and Fusion for Underwater Image Enhancement. IEEE Trans. Image Process. 2018, 27, 379–393.

- Peng, Y.-T.; Cao, K.; Cosman, P.C. Generalization of the Dark Channel Prior for Single Image Restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Lv, X.; Sun, Y.; Zhang, J.; Jiang, F.; Zhang, S. Low-light image enhancement via deep Retinex decomposition and bilateral learning. Signal Process. Image Commun. 2021, 99, 116466.

- Gao, S.-B.; Zhang, M.; Zhao, Q.; Zhang, X.-S.; Li, Y.-J. Underwater Image Enhancement Using Adaptive Retinal Mechanisms. IEEE Trans. Image Process. 2019, 28, 5580–5595.

- Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

- Li, C.; Guo, C.; Loy, C.C. Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4225–4238.

- Guo, Y.; Li, H.; Zhuang, P. Underwater Image Enhancement Using a Multiscale Dense Generative Adversarial Network. IEEE J. Ocean. Eng. 2020, 45, 862–870.

- Liu, Y.; Wang, Y.; Wang, S.; Liang, T.; Zhao, Q.; Tang, Z.; Ling, H. CBNet: A Novel Composite Backbone Network Architecture for Object Detection. Proc. AAAI Conf. Artif. Intell. 2020, 34, 11653–11660.

- Liang, T.; Chu, X.; Liu, Y.; Wang, Y.; Tang, Z.; Chu, W.; Chen, J.; Ling, H. CBNet: A Composite Backbone Network Architecture for Object Detection. IEEE Trans. Image Process. 2022, 31, 6893–6906.

- Anwar, S.; Li, C. Diving deeper into underwater image enhancement: A survey. Signal Process. Image Commun. 2020, 89, 115978.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Yan, L.; Zhao, M.; Liu, S.; Shi, S.; Chen, J. Cascaded transformer U-net for image restoration. Signal Process. 2023, 206, 108902.

- Liu, X.; Gao, Z.; Chen, B.M. MLFcGAN: Multilevel Feature Fusion-Based Conditional GAN for Underwater Image Color Correction. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1488–1492.

- Xue, X.; Li, Z.; Ma, L.; Jia, Q.; Liu, R.; Fan, X. Investigating intrinsic degradation factors by multi-branch aggregation for real-world underwater image enhancement. Pattern Recognit. 2023, 133, 109041.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2020, 29, 4376–4389.

- Wu, J.; Liu, X.; Lu, Q.; Lin, Z.; Qin, N.; Shi, Q. FW-GAN: Underwater image enhancement using generative adversarial network with multi-scale fusion. Signal Process. Image Commun. 2022, 109, 116855.

- Wang, Y.; Guo, J.; Gao, H.; Yue, H. UIEC^2-Net: CNN-based underwater image enhancement using two color space. Signal Process. Image Commun. 2021, 96, 116250.

- Chen, Y.; Ke, W.; Kou, L.; Li, Q.; Lu, D.; Bai, Y.; Wang, Z. Multiple Channel Adjustment based on Composite Backbone Network for Underwater Image Enhancement. Int. J. Bio Inspired Comput. 2023; to be published.

- Chen, Y.; Li, H.; Yuan, Q.; Wang, Z.; Hu, C.; Ke, W. Underwater Image Enhancement based on Improved Water-Net. In Proceedings of the 2022 IEEE International Conference on Cyborg and Bionic Systems (CBS), Wuhan, China, 24–26 March 2023; pp. 450–454.

- Hambarde, P.; Murala, S.; Dhall, A. UW-GAN: Single-Image Depth Estimation and Image Enhancement for Underwater Images. IEEE Trans. Instrum. Meas. 2021, 70, 5018412.

- Lin, P.; Wang, Y.; Wang, G.; Yan, X.; Jiang, G.; Fu, X. Conditional generative adversarial network with dual-branch progressive generator for underwater image enhancement. Signal Process. Image Commun. 2022, 108, 116805.

- Lu, J.; Li, N.; Zhang, S.; Yu, Z.; Zheng, H.; Zheng, B. Multi-scale adversarial network for underwater image restoration. Opt. Laser Technol. 2019, 110, 105–113.

- Chen, R.; Cai, Z.; Cao, W. MFFN: An Underwater Sensing Scene Image Enhancement Method Based on Multiscale Feature Fusion Network. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4205612.

- Liu, R.; Fan, X.; Zhu, M.; Hou, M.; Luo, Z. Real-World Underwater Enhancement: Challenges, Benchmarks, and Solutions Under Natural Light. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4861–4875.

- Panetta, K.; Kezebou, L.; Oludare, V.; Agaian, S. Comprehensive Underwater Object Tracking Benchmark Dataset and Underwater Image Enhancement With GAN. IEEE J. Ocean. Eng. 2022, 47, 59–75.

- Li, S.; Song, W.; Qin, H.; Hao, A. Deep variance network: An iterative, improved CNN framework for unbalanced training datasets. Pattern Recognit. 2018, 81, 294–308.

- Li, G.; Zhang, M.; Li, J.; Lv, F.; Tong, G. Efficient densely connected convolutional neural networks. Pattern Recognit. 2021, 109, 107610.

- Gao, S.-H.; Cheng, M.-M.; Zhao, K.; Zhang, X.-Y.; Yang, M.-H.; Torr, P.H. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 652–662.

- Liang, M.; Hu, X. Recurrent convolutional neural network for object recognition. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Deng, Y.; Sun, J. DetNet: Design Backbone for Object Detection. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 339–354.

- Fang, L.; Jiang, Y.; Yan, Y.; Yue, J.; Deng, Y. Hyperspectral Image Instance Segmentation Using Spectral–Spatial Feature Pyramid Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5502613.

- Zhang, J.; Wang, Y.; Wang, H.; Wu, J.; Li, Y. CNN Cloud Detection Algorithm Based on Channel and Spatial Attention and Probabilistic Upsampling for Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5404613.

- Yi, S.; Liu, X.; Li, J.; Chen, L. UAVformer: A Composite Transformer Network for Urban Scene Segmentation of UAV Images. Pattern Recognit. 2023, 133, 109019.

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish Detection and Species Recognition from Low-Quality Underwater Videos. IEEE Trans. Image Process. 2021, 30, 4719–4734.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

| Method | SSIM (RGB) | SSIM (gray) | MSE (RGB) | MSE (gray) | PSNR (RGB, dB) | PSNR (gray, dB) |
|---|---|---|---|---|---|---|
| SLC | 0.9025 | 0.9323 | 172.95 | 390.66 | 27.224 | 24.374 |
| AHLC | 0.9017 | 0.9314 | 164.52 | 358.08 | 27.322 | 24.523 |
| ALLC | 0.9045 | 0.9344 | 170.36 | 369.54 | 26.983 | 24.107 |
| DSLC | 0.9059 | 0.9350 | 164.38 | 371.45 | 27.201 | 24.201 |
| DHLC | 0.9024 | 0.9318 | 163.32 | 362.24 | 27.370 | 24.445 |
| FCC | 0.9080 | 0.9375 | 173.81 | 384.59 | 27.026 | 24.047 |
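The MSE and PSNR columns are linked: for 8-bit images, PSNR = 10 log10(255² / MSE) dB. Applying this to the SLC row's mean RGB MSE (172.95) gives about 25.75 dB, lower than the reported 27.224 dB. That gap is consistent with PSNR being computed per image and then averaged, because the mean of per-image PSNR is never below the PSNR of the mean MSE (Jensen's inequality). The averaging protocol is not stated here, so this is an interpretation. The sketch below assumes a peak value of 255 and uses synthetic images with a hypothetical noise model to illustrate the effect.

```python
import numpy as np


def mse(a, b):
    """Mean squared error between two images of equal shape."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr_from_mse(m, peak=255.0):
    """PSNR in dB for a given MSE; peak=255 assumes 8-bit images."""
    return 10.0 * np.log10(peak ** 2 / m)


def dataset_scores(preds, targets):
    """Return (mean per-image MSE, mean per-image PSNR, PSNR of the mean MSE)."""
    mses = [mse(p, t) for p, t in zip(preds, targets)]
    mean_mse = float(np.mean(mses))
    mean_psnr = float(np.mean([psnr_from_mse(m) for m in mses]))
    return mean_mse, mean_psnr, float(psnr_from_mse(mean_mse))


if __name__ == "__main__":
    print(round(float(psnr_from_mse(172.95)), 2))  # 25.75 (PSNR of SLC's mean MSE)
    rng = np.random.default_rng(0)
    targets = [rng.integers(0, 256, size=(32, 32, 3)) for _ in range(4)]
    # Hypothetical predictions: ground truth plus Gaussian noise of growing strength.
    preds = [np.clip(t + rng.normal(0, s, t.shape), 0, 255)
             for t, s in zip(targets, (2, 5, 10, 20))]
    m, p_avg, p_of_avg = dataset_scores(preds, targets)
    print(m, p_avg, p_of_avg)  # p_avg >= p_of_avg whenever per-image MSEs differ
```

Reported PSNR values should therefore not be recomputed from the averaged MSE column.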

| Method | Average runtime (s) |
|---|---|
| SLC | 0.00261 |
| AHLC | 0.04381 |
| ALLC | 0.00255 |
| DSLC | 0.13251 |
| DHLC | 0.13596 |
| FCC | 0.00259 |

| Method | SSIM (RGB) | SSIM (gray) | MSE (RGB) | MSE (gray) | PSNR (RGB, dB) | PSNR (gray, dB) | Runtime (s) |
|---|---|---|---|---|---|---|---|
| FCC(p2) | 0.911 | 0.941 | 162.4 | 349.3 | 27.09 | 24.32 | 0.0023 |
| DHLC(p2) | 0.904 | 0.933 | 162.6 | 345.9 | 27.38 | 24.72 | 0.0514 |
| AHLC(s-a) | 0.905 | 0.935 | 161.2 | 347.7 | 27.23 | 24.44 | 0.0438 |

| Method | SSIM (RGB) | SSIM (gray) | MSE (RGB) | MSE (gray) | PSNR (RGB, dB) | PSNR (gray, dB) |
|---|---|---|---|---|---|---|
| UWCNN | 0.8443 | 0.8799 | 496.32 | 1191.6 | 22.550 | 19.470 |
| Water-Net | 0.8840 | 0.9188 | 380.14 | 874.80 | 23.738 | 20.946 |
| UIEC2Net | 0.9034 | 0.9336 | 168.35 | 368.49 | 27.039 | 24.238 |
| IW-Net | 0.9062 | 0.9348 | 223.10 | 473.09 | 27.098 | 24.496 |
| MC-CBNet | 0.9048 | 0.9349 | 168.11 | 385.16 | 27.375 | 24.553 |
| OECBNet | 0.9112 | 0.9409 | 162.40 | 349.29 | 27.091 | 24.322 |

| Method | UCIQE | UIQM | UICM | UISM | UIConM |
|---|---|---|---|---|---|
| Raw | 0.4525 | 1.9821 | 4.9778 | 3.5095 | 0.2252 |
| UWCNN | 0.4416 | 2.5893 | 4.0856 | 4.6135 | 0.3109 |
| Water-Net | 0.5341 | 2.5043 | 5.4714 | 4.5885 | 0.2783 |
| UIEC2Net | 0.5288 | 2.6618 | 6.5597 | 4.8831 | 0.2895 |
| IW-Net | 0.5559 | 2.5874 | 6.8383 | 4.7999 | 0.2733 |
| MC-CBNet | 0.5520 | 2.6580 | 6.9278 | 4.8821 | 0.2856 |
| OECBNet | 0.5612 | 2.7178 | 7.1059 | 5.0357 | 0.2882 |
| Reference | 0.5595 | 2.5024 | 7.5522 | 4.6337 | 0.2576 |
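UIQM is a weighted sum of its three components, UIQM = c1·UICM + c2·UISM + c3·UIConM, with c1 = 0.0282, c2 = 0.2953 and c3 = 3.5753 as proposed by Panetta et al. for the human-visual-system-inspired measure. The paper does not restate these weights, so the check below is an assumption tested against the data: it recomputes UIQM from the component columns of the table above, and every row agrees with the reported UIQM to within about 3×10⁻⁴, presumably the effect of rounding the components to four decimals.

```python
# UIQM weights proposed by Panetta et al. (2016); assumed, not restated in this paper.
C1, C2, C3 = 0.0282, 0.2953, 3.5753


def uiqm(uicm, uism, uiconm):
    """Combine colorfulness (UICM), sharpness (UISM) and contrast (UIConM)."""
    return C1 * uicm + C2 * uism + C3 * uiconm


# (reported UIQM, UICM, UISM, UIConM), copied from the reference-subset table.
ROWS = {
    "Raw": (1.9821, 4.9778, 3.5095, 0.2252),
    "UWCNN": (2.5893, 4.0856, 4.6135, 0.3109),
    "Water-Net": (2.5043, 5.4714, 4.5885, 0.2783),
    "UIEC2Net": (2.6618, 6.5597, 4.8831, 0.2895),
    "IW-Net": (2.5874, 6.8383, 4.7999, 0.2733),
    "MC-CBNet": (2.6580, 6.9278, 4.8821, 0.2856),
    "OECBNet": (2.7178, 7.1059, 5.0357, 0.2882),
    "Reference": (2.5024, 7.5522, 4.6337, 0.2576),
}

if __name__ == "__main__":
    for name, (reported, *parts) in ROWS.items():
        print(f"{name:10s} reported={reported:.4f} recomputed={uiqm(*parts):.4f}")
```

Because c3 is two orders of magnitude larger than c1, a 0.01 change in UIConM moves UIQM as much as a change of about 1.27 in UICM, which helps explain why UWCNN scores well on UIQM despite its low colorfulness.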

| Method | Average runtime (s) |
|---|---|
| UWCNN | 0.00143 |
| Water-Net | 0.23477 |
| UIEC2Net | 0.11010 |
| IW-Net | 0.31910 |
| MC-CBNet | 0.37713 |
| OECBNet | 0.00230 |
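Average runtimes convert directly into throughput (1/runtime) and into a speedup relative to OECBNet. The short script below performs that arithmetic on the values in the runtime table above; it assumes the figures are per-image seconds measured under identical hardware and settings, which the table does not state explicitly.

```python
# Average per-image runtimes in seconds, copied from the runtime table.
RUNTIME_S = {
    "UWCNN": 0.00143,
    "Water-Net": 0.23477,
    "UIEC2Net": 0.11010,
    "IW-Net": 0.31910,
    "MC-CBNet": 0.37713,
    "OECBNet": 0.00230,
}


def throughput(runtime_s):
    """Images processed per second for a given per-image runtime."""
    return 1.0 / runtime_s


def speedup(baseline_s, candidate_s):
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s


if __name__ == "__main__":
    ours = RUNTIME_S["OECBNet"]
    for name, t in RUNTIME_S.items():
        print(f"{name:10s} {throughput(t):8.1f} img/s  OECBNet speedup x{speedup(t, ours):.2f}")
```

By this arithmetic OECBNet runs at roughly 435 images per second and is about 164 times faster than MC-CBNet (0.37713 / 0.00230), while UWCNN remains faster still (a speedup below 1, about 0.62).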

| Method | UCIQE | UIQM | UICM | UISM | UIConM |
|---|---|---|---|---|---|
| Raw | 0.3907 | 1.6933 | 3.1112 | 2.4060 | 0.2504 |
| UWCNN | 0.3877 | 2.1985 | 2.5470 | 3.3318 | 0.3196 |
| Water-Net | 0.5132 | 2.1455 | 4.4568 | 3.2896 | 0.2932 |
| UIEC2Net | 0.5056 | 2.3049 | 4.7146 | 3.7595 | 0.2970 |
| IW-Net | 0.5369 | 2.2320 | 4.7800 | 3.4878 | 0.2985 |
| MC-CBNet | 0.5302 | 2.2942 | 5.0663 | 3.7356 | 0.2932 |
| OECBNet | 0.5503 | 2.3240 | 5.3689 | 3.7981 | 0.2940 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Li, Q.; Lu, D.; Kou, L.; Ke, W.; Bai, Y.; Wang, Z. A Novel Underwater Image Enhancement Using Optimal Composite Backbone Network. Biomimetics 2023, 8, 275. https://doi.org/10.3390/biomimetics8030275

Chen Y, Li Q, Lu D, Kou L, Ke W, Bai Y, Wang Z. A Novel Underwater Image Enhancement Using Optimal Composite Backbone Network. Biomimetics. 2023; 8(3):275. https://doi.org/10.3390/biomimetics8030275

Chicago/Turabian Style: Chen, Yuhan, Qingfeng Li, Dongxin Lu, Lei Kou, Wende Ke, Yan Bai, and Zhen Wang. 2023. "A Novel Underwater Image Enhancement Using Optimal Composite Backbone Network" Biomimetics 8, no. 3: 275. https://doi.org/10.3390/biomimetics8030275

APA Style: Chen, Y., Li, Q., Lu, D., Kou, L., Ke, W., Bai, Y., & Wang, Z. (2023). A Novel Underwater Image Enhancement Using Optimal Composite Backbone Network. Biomimetics, 8(3), 275. https://doi.org/10.3390/biomimetics8030275