A Survey on Reinforcement Learning Methods in Bionic Underwater Robots

Abstract

1. Introduction

2. Overview of Reinforcement Learning

2.1. Statement of Reinforcement Learning

2.2. Taxonomy of the Reinforcement Learning Algorithm

2.2.1. Model-Based and Model-Free Algorithms

2.2.2. Algorithms for Prediction Tasks and Control Tasks

2.2.3. On-Policy and Off-Policy Algorithms

2.2.4. Approximator-Based Reinforcement Learning Algorithms

2.2.5. Inverse Reinforcement Learning and Imitative Learning

2.3. Advanced Version of Reinforcement Learning Algorithms

2.4. Advantages of Reinforcement Learning

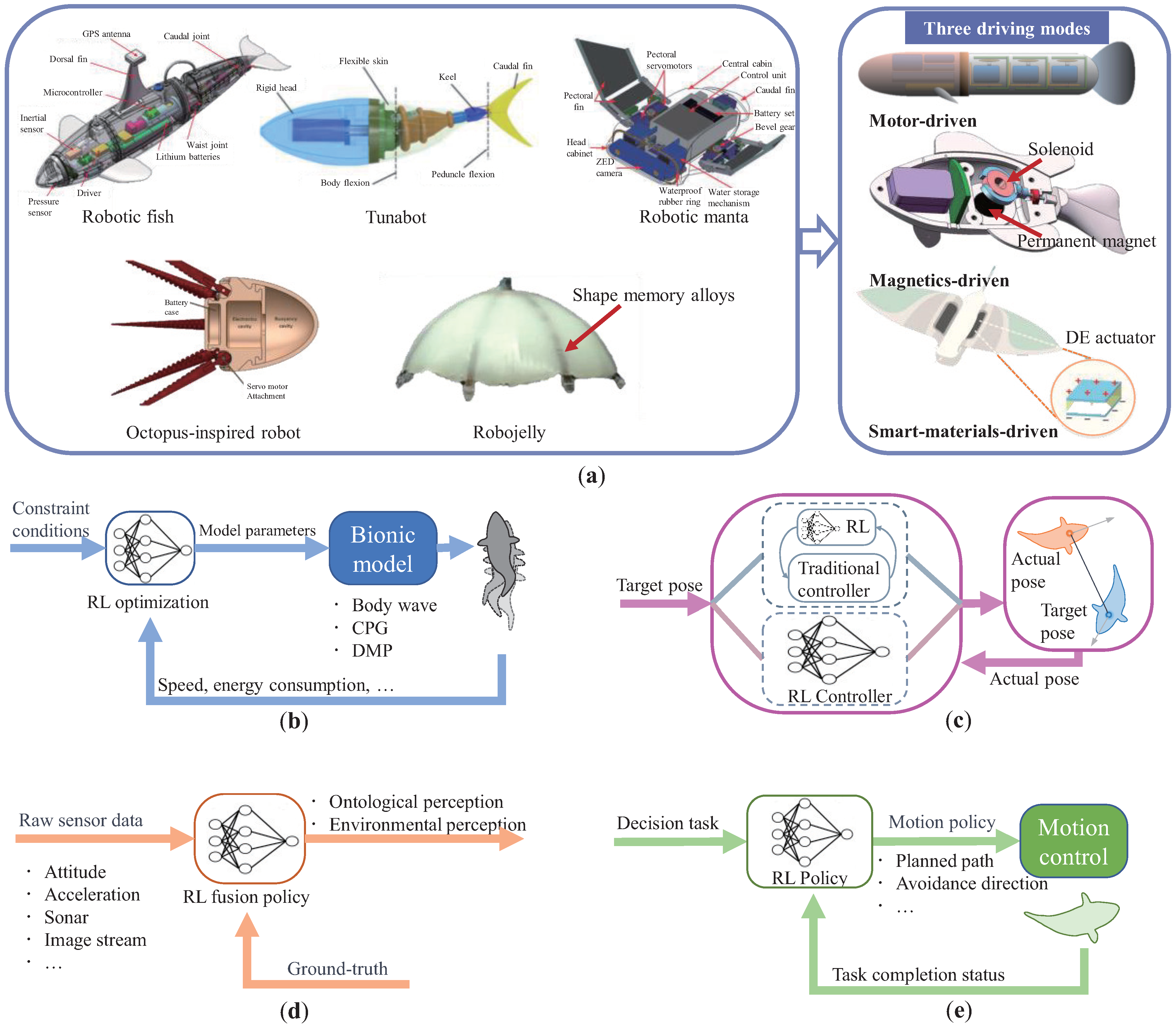

3. Task Spaces of Bionic Underwater Robots

3.1. Common Structure of Bionic Underwater Robots

3.2. Task Spaces

3.2.1. Bionic Action Control Task

3.2.2. Motion Control Task

3.2.3. Perception Fusion Task

3.2.4. Planning and Decision Making Task

3.3. Advantages and Challenges of RL-Based Methods

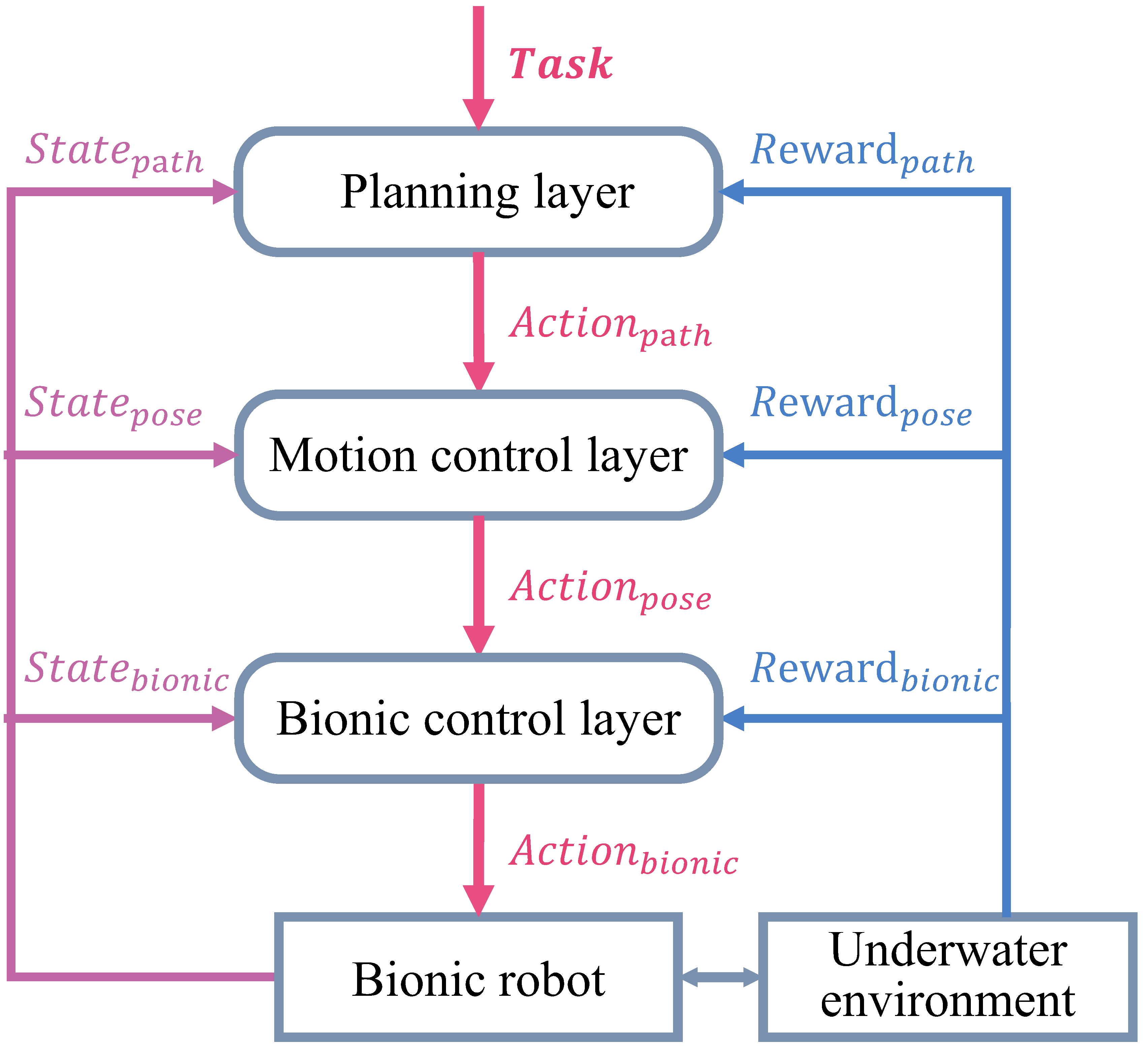

4. RL-Based Methods in Task Spaces of Bionic Underwater Robots

4.1. RL for Bionic Action Control Tasks

4.2. RL for Motion Control Tasks

4.3. RL for Planning and Decision Making Tasks

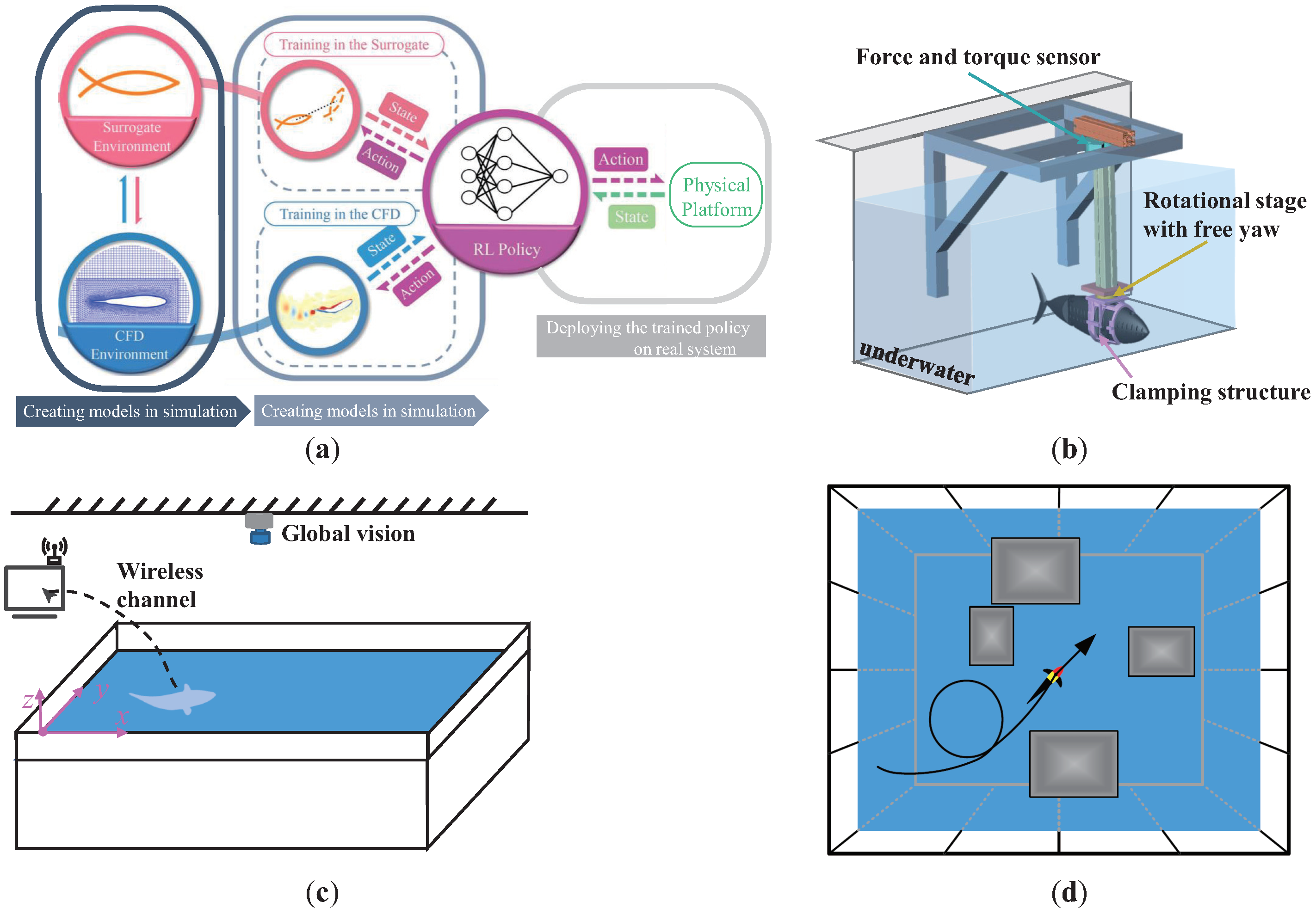

5. Training and Deployment Methods of RL on Bionic Underwater Robots

5.1. Training and Deployment Framework

5.2. Training Techniques

5.3. Computational Complexity

6. Challenges and Future Trends

6.1. Challenges

6.1.1. Inevitable Modeling

6.1.2. Transferability to the Real-World Environment

6.1.3. Sample Efficiency of Training

6.1.4. Security of Deploying RL in Underwater Environments

6.1.5. Robustness and Adaptability for Continuous Disturbances in Underwater Environments

6.1.6. Computational Complexity and Online Deployment

6.2. Future Trends

6.2.1. Multiple Bionic Motion Combination Control

6.2.2. Applicable Training Schemes for Underwater Environments

6.2.3. Improving the Transferability and Generalization of RL

6.2.4. General Simulation Training Environment

6.2.5. Performance Evaluation System of RL in the Field of Bionic Underwater Robots

6.2.6. Complete RL-Based Algorithm Framework for Bionic Underwater Robots

6.2.7. Individual Intelligence of Bionic Underwater Robots

6.2.8. Multi-Agent Collaboration and Coordination

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Videler, J.J.; Wardle, C.S. Fish swimming stride by stride: Speed limits and endurance. Rev. Fish Biol. Fish. 1991, 1, 23–40. [Google Scholar] [CrossRef]

- Bainbridge, R. The speed of swimming of fish as related to size and to the frequency and amplitude of the tail beat. J. Exp. Biol. 1958, 35, 109–133. [Google Scholar] [CrossRef]

- Mitin, I.; Korotaev, R.; Ermolaev, A.; Mironov, V.; Lobov, S.A.; Kazantsev, V.B. Bioinspired propulsion system for a thunniform robotic fish. Biomimetics 2022, 7, 215. [Google Scholar] [CrossRef]

- Baines, R.; Patiballa, S.K.; Booth, J.; Ramirez, L.; Sipple, T.; Garcia, A.; Wallin, E.; Williams, S.; Oppenheimer, D.; Rus, D.; et al. Multi-environment robotic transitions through adaptive morphogenesis. Nature 2022, 610, 283–289. [Google Scholar] [CrossRef]

- Zhong, Y.; Li, Z.; Du, R. A novel robot fish with wire-driven active body and compliant tail. IEEE ASME Trans. Mechatron. 2017, 22, 1633–1643. [Google Scholar] [CrossRef]

- Li, T.; Li, G.; Liang, Y.; Cheng, T.; Dai, J.; Yang, X.; Sun, Z.; Zhang, X.; Zhao, Y.; Yang, W. Fast-moving soft electronic fish. Sci. Adv. 2017, 3, e1602045. [Google Scholar] [CrossRef]

- Meng, Y.; Wu, Z.; Yu, J. Mechatronic design of a novel robotic manta with pectoral fins. In Proceedings of the 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Suzhou, China, 29 July–2 August 2019; pp. 439–444. [Google Scholar]

- Meng, Y.; Wu, Z.; Dong, H.; Wang, J.; Yu, J. Toward a novel robotic manta with unique pectoral fins. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 1663–1673. [Google Scholar] [CrossRef]

- Thandiackal, R.; White, C.H.; Bart-Smith, H.; Lauder, G.V. Tuna robotics: Hydrodynamics of rapid linear accelerations. Proc. Biol. Sci. 2021, 288, 20202726. [Google Scholar] [CrossRef] [PubMed]

- Du, S.; Wu, Z.; Wang, J.; Qi, S.; Yu, J. Design and control of a two-motor-actuated tuna-inspired robot system. IEEE Trans. Syst. Man Cybern. B Cybern. 2019, 51, 4670–4680. [Google Scholar] [CrossRef]

- White, C.H.; Lauder, G.V.; Bart-Smith, H. Tunabot Flex: A tuna-inspired robot with body flexibility improves high-performance swimming. Bioinspir. Biomim. 2021, 16, 026019. [Google Scholar] [CrossRef] [PubMed]

- Zheng, C.; Ding, J.; Dong, B.; Lian, G.; He, K.; Xie, F. How non-uniform stiffness affects the propulsion performance of a biomimetic robotic fish. Biomimetics 2022, 7, 187. [Google Scholar] [CrossRef] [PubMed]

- Ren, Q.; Xu, J.; Li, X. A data-driven motion control approach for a robotic fish. J. Bionic Eng. 2015, 12, 382–394. [Google Scholar] [CrossRef]

- Wang, R.; Wang, S.; Wang, Y.; Tan, M.; Yu, J. A paradigm for path following control of a ribbon-fin propelled biomimetic underwater vehicle. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 482–493. [Google Scholar] [CrossRef]

- Manderson, T.; Higuera, J.C.G.; Wapnick, S.; Tremblay, J.F.; Shkurti, F.; Meger, D.; Dudek, G. Vision-based goal-conditioned policies for underwater navigation in the presence of obstacles. arXiv 2020, arXiv:2006.16235. [Google Scholar]

- Zhang, T.; Li, Y.; Li, S.; Ye, Q.; Wang, C.; Xie, G. Decentralized circle formation control for fish-like robots in the real-world via reinforcement learning. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 8814–8820. [Google Scholar]

- Zheng, J.; Zhang, T.; Wang, C.; Xiong, M.; Xie, G. Learning for attitude holding of a robotic fish: An end-to-end approach with sim-to-real transfer. IEEE Trans. Robot. 2022, 38, 1287–1303. [Google Scholar] [CrossRef]

- Zhang, T.; Tian, R.; Yang, H.; Wang, C.; Sun, J.; Zhang, S.; Xie, G. From simulation to reality: A learning framework for fish-like robots to perform control tasks. IEEE Trans. Robot. 2022, 38, 3861–3878. [Google Scholar] [CrossRef]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef]

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of go without human knowledge. Nature 2017, 550, 354–359. [Google Scholar] [CrossRef]

- Hwangbo, J.; Lee, J.; Dosovitskiy, A.; Bellicoso, D.; Tsounis, V.; Koltun, V.; Hutter, M. Learning agile and dynamic motor skills for legged robots. Sci Robot. 2019, 4, eaau5872. [Google Scholar] [CrossRef]

- Cui, R.; Yang, C.; Li, Y.; Sharma, S. Adaptive neural network control of auvs with control input nonlinearities using reinforcement learning. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 1019–1029. [Google Scholar] [CrossRef]

- Lee, J.; Hwangbo, J.; Wellhausen, L.; Koltun, V.; Hutter, M. Learning quadrupedal locomotion over challenging terrain. Sci. Robot. 2020, 5, eabc5986. [Google Scholar] [CrossRef] [PubMed]

- Andrychowicz, O.M.; Baker, B.; Chociej, M.; Jozefowicz, R.; McGrew, B.; Pachocki, J.; Petron, A.; Plappert, M.; Powell, G.; Ray, A.; et al. Learning dexterous in-hand manipulation. Int. J. Robot. Res. 2020, 39, 3–20. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Lagoudakis, M.G.; Parr, R. Least-squares policy iteration. J. Mach. Learn. Res. 2003, 4, 1107–1149. [Google Scholar]

- Niu, S.; Chen, S.; Guo, H.; Targonski, C.; Smith, M.; Kovačević, J. Generalized value iteration networks: Life beyond lattices. Proc. Conf. AAAI Artif. Intell. 2018, 32, 1. [Google Scholar]

- Yu, J.; Wang, M.; Tan, M.; Zhang, J. Three-dimensional swimming. IEEE Robot. Autom. Mag. 2011, 18, 47–58. [Google Scholar] [CrossRef]

- Ryuh, Y.S.; Yang, G.H.; Liu, J.; Hu, H. A school of robotic fish for mariculture monitoring in the sea coast. J. Bionic Eng. 2015, 12, 37–46. [Google Scholar] [CrossRef]

- Watkins, C.J.; Dayan, P. Q-Learning. Mach. Learn. 1992, 8, 279–292. [Google Scholar] [CrossRef]

- Hasselt, H. Double Q-learning. Adv. Neural Inf. Process. Syst. 2010, 23, 2613–2621. [Google Scholar]

- Riedmiller, M. Neural fitted q iteration–first experiences with a data efficient neural reinforcement learning method. In Proceedings of the 16th European Conference on Machine Learning, Porto, Portugal, 3–7 October 2005; Springer: Berlin, Germany, 2005; Volume 16, pp. 317–328. [Google Scholar]

- Xu, X.; He, H.G.; Hu, D. Efficient reinforcement learning using recursive least-squares methods. J. Artif. Intell. Res. 2002, 16, 259–292. [Google Scholar] [CrossRef]

- Boyan, J.A. Least-squares temporal difference learning. In Proceedings of the Sixteenth International Conference on Machine Learning, Bled, Slovenia, 27–30 June 1999; pp. 49–56. [Google Scholar]

- Williams, R.J. Simple statistical gradient-following algorithms for connectionist reinforcement learning. Reinf. Learn. 1992, 8, 229–256. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602. [Google Scholar]

- Gu, S.; Holly, E.; Lillicrap, T.; Levine, S. Deep reinforcement learning for robotic manipulation with asynchronous off-policy updates. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3389–3396. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef]

- van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double q-learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30, pp. 2094–2100. [Google Scholar]

- Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1995–2003. [Google Scholar]

- Babaeizadeh, M.; Frosio, I.; Tyree, S.; Clemons, J.; Kautz, J. Reinforcement learning through asynchronous advantage actor-critic on a gpuR. arXiv 2016, arXiv:1611.06256. [Google Scholar]

- Schulman, J.; Levine, S.; Abbeel, P.; Jordan, M.; Moritz, P. Trust region policy optimization. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1889–1897. [Google Scholar]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347. [Google Scholar]

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 387–395. [Google Scholar]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971. [Google Scholar]

- Gao, J.; Shen, Y.; Liu, J.; Ito, M.; Shiratori, N. Adaptive traffic signal control: Deep reinforcement learning algorithm with experience replay and target network. arXiv 2017, arXiv:1705.02755. [Google Scholar]

- Ng, A.Y.; Russell, S. Algorithms for inverse reinforcement learning. In Proceedings of the Seventeenth International Conference on Machine Learning, Stanford, CA, USA, 29 June–2 July 2000; Volume 1, pp. 663–670. [Google Scholar]

- Arora, S.; Doshi, P. A survey of inverse reinforcement learning: Challenges, methods and progress. Artif. Intell. 2021, 297, 103500. [Google Scholar] [CrossRef]

- Abbeel, P.; Ng, A.Y. Apprenticeship learning via inverse reinforcement learning. In Proceedings of the Twenty-First International Conference on Machine Learning, Banff, AB, Canada, 4–8 July 2004; Volume 1, pp. 1–8. [Google Scholar]

- Ratliff, N.D.; Bagnell, J.A.; Zinkevich, M.A. Maximum margin planning. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 729–736. [Google Scholar]

- Ziebart, B.D.; Maas, A.L.; Bagnell, J.A.; Dey, A.K. Maximum entropy inverse reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Chicago, IL, USA, 13–17 July 2008; Volume 8, pp. 1433–1438. [Google Scholar]

- Ho, J.; Ermon, S. Generative adversarial imitation learning. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29. [Google Scholar]

- Ross, S.; Gordon, G.; Bagnell, D. A reduction of imitation learning and structured prediction to no-regret online learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 11–13 April 2011; pp. 627–635. [Google Scholar]

- Haarnoja, T.; Tang, H.; Abbeel, P.; Levine, S. Reinforcement learning with deep energy-based policies. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 1352–1361. [Google Scholar]

- Tamar, A.; Wu, Y.; Thomas, G.; Levine, S.; Abbeel, P. Value iteration networks. In Proceedings of the 29th Annual Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2154–2162. [Google Scholar]

- Van Seijen, H.; Fatemi, M.; Romoff, J.; Laroche, R.; Barnes, T.; Tsang, J. Hybrid Reward Architecture for reinforcement learning. In Proceedings of the 30th Annual Conference on Neural Information Processing Systems, Barcelona, Spain, 4–9 December 2017; pp. 4882–4893. [Google Scholar]

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. In Proceedings of the 30th Annual Conference on Neural Information Processing Systems, Barcelona, Spain, 4–9 December 2017; pp. 5048–5058. [Google Scholar]

- Raffin, A.; Hill, A.; Gleave, A.; Kanervisto, A.; Ernestus, M.; Dormann, N. Stable-baselines3: Reliable reinforcement learning implementations. J. Mach. Learn. Res. 2021, 22, 1–8. [Google Scholar]

- Yu, J.; Wu, Z.; Su, Z.; Wang, T.; Qi, S. Motion control strategies for a repetitive leaping robotic dolphin. IEEE ASME Trans. Mechatron. 2019, 24, 913–923. [Google Scholar] [CrossRef]

- Zhu, J.; White, C.; Wainwright, D.K.; Di Santo, V.; Lauder, G.V.; Bart-Smith, H. Tuna Robotics: A high-frequency experimental platform exploring the performance space of swimming fishes. Sci. Robot. 2019, 4, eaax4615. [Google Scholar] [CrossRef]

- Sfakiotakis, M.; Kazakidi, A.; Tsakiris, D.P. Octopus-inspired multi-arm robotic swimming. Bioinspir. Biomim. 2015, 10, 035005. [Google Scholar] [CrossRef] [PubMed]

- Villanueva, A.; Smith, C.; Priya, S. A biomimetic robotic jellyfish (robojelly) actuated by shape memory alloy composite actuators. Bioinspir. Biomim. 2011, 6, 036004. [Google Scholar] [CrossRef] [PubMed]

- Du, R.; Li, Z.; Youcef-Toumi, K.; Alvarado, P.V. (Eds.) Robot Fish: Bio-Inspired Fishlike Underwater Robots; Springer: Berlin/Heidelberg, Germany, 2015. [Google Scholar]

- Ijspeert, A.J. Central pattern generators for locomotion control in animals and robots: A review. Neural Netw. 2008, 21, 642–653. [Google Scholar] [CrossRef]

- Zhong, Y.; Li, Z.; Du, R. The design and prototyping of a wire-driven robot fish with pectoral fins. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 1918–1923. [Google Scholar]

- Wang, J.; Wu, Z.; Tan, M.; Yu, J. Model predictive control-based depth control in gliding motion of a gliding robotic dolphin. IEEE Trans. Syst. Man Cybern. B Cybern. 2021, 51, 5466–5477. [Google Scholar] [CrossRef]

- Katzschmann, R.K.; DelPreto, J.; MacCurdy, R.; Rus, D. Exploration of underwater life with an acoustically controlled soft robotic fish. Sci. Robot. 2018, 3, eaar3449. [Google Scholar] [CrossRef] [PubMed]

- Zhang, P.; Wu, Z.; Meng, Y.; Dong, H.; Tan, M.; Yu, J. Development and control of a bioinspired robotic remora for hitchhiking. IEEE ASME Trans. Mechatron. 2022, 27, 2852–2862. [Google Scholar] [CrossRef]

- Liu, J.; Hu, H.; Gu, D. A Hybrid control architecture for autonomous robotic fish. In Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 312–317. [Google Scholar]

- Crespi, A.; Ijspeert, A.J. Online optimization of swimming and crawling in an amphibious snake robot. IEEE Trans. Robot. 2008, 24, 75–87. [Google Scholar] [CrossRef]

- Ijspeert, A.J.; Nakanishi, J.; Hoffmann, H.; Pastor, P.; Schaal, S. Dynamical movement primitives: Learning attractor models for motor behaviors. Neural Comput. 2013, 25, 328–373. [Google Scholar] [CrossRef]

- Lighthill, M.J. Note on the swimming of slender fish. J. Fluid Mech. 1960, 9, 305–317. [Google Scholar] [CrossRef]

- Sengupta, S.; Basak, S.; Peters, R.A. Particle swarm optimization: A survey of historical and recent developments with hybridization perspectives. Mach. Learn. Knowl. Extr. 2018, 1, 157–191. [Google Scholar] [CrossRef]

- Tian, Q.; Wang, T.; Wang, Y.; Wang, Z.; Liu, C. A Two-level optimization algorithm for path planning of bionic robotic fish in the three-dimensional environment with ocean currents and moving obstacles. Ocean Eng. 2022, 266, 112829. [Google Scholar] [CrossRef]

- Zhang, P.; Wu, Z.; Dong, H.; Tan, M.; Yu, J. Reaction-wheel-based roll stabilization for a robotic fish using neural network sliding mode control. IEEE ASME Trans. Mechatron. 2020, 25, 1904–1911. [Google Scholar] [CrossRef]

- Wang, T.; Wen, L.; Liang, J.; Wu, G. Fuzzy vorticity control of a biomimetic robotic fish using a flapping lunate tail. J. Bionic Eng. 2010, 7, 56–65. [Google Scholar] [CrossRef]

- Verma, S.; Shen, D.; Xu, J.X. Motion control of robotic fish under dynamic environmental conditions using adaptive control approach. IEEE J. Ocean. Eng. 2017, 43, 381–390. [Google Scholar] [CrossRef]

- Gao, Z.P.; Song, X.R.; Chen, C.B.; Gao, S.; Qian, F.; Ren, P.F. The study for path following of robot fish based on ADRC. In Proceedings of the 2019 IEEE International Conference on Unmanned Systems and Artificial Intelligence (ICUSAI), Xi’an, China, 15–17 November 2019; pp. 194–199. [Google Scholar]

- Liu, Q.; Ye, Z.; Wang, Y.; Zhang, Y.; Yin, G.; Yang, J.X. Research on active disturbance rejection control of multi-joint robot fish path tracking. In Proceedings of the 2021 Chinese Intelligent Automation Conference, Zhanjiang, China, 5–7 November 2022; pp. 141–149. [Google Scholar]

- Fu, R.; Li, L.; Xu, C.; Xie, G. Studies on energy saving of robot fish based on reinforcement learning. Beijing Da Xue Xue Bao 2019, 55, 405–410. [Google Scholar]

- Li, G.; Shintake, J.; Hayashibe, M. Deep reinforcement learning framework for underwater locomotion of soft robot. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 12033–12039. [Google Scholar]

- Rajendran, S.K.; Zhang, F. Learning based speed control of soft robotic fish. In Proceedings of the Dynamic Systems and Control Conference, American Society of Mechanical Engineers, Atlanta, GA, USA, 30 September–3 October 2018; Volume 51890, p. V001T04A005. [Google Scholar]

- Aggarwal, S.; Kumar, N. Path planning techniques for unmanned aerial vehicles: A review, solutions, and challenges. Comput. Commun. 2020, 149, 270–299. [Google Scholar] [CrossRef]

- Azar, A.T.; Koubaa, A.; Ali Mohamed, N.; Ibrahim, H.A.; Ibrahim, Z.F.; Kazim, M.; Ammar, A.; Benjdira, B.; Khamis, A.M.; Hameed, I.A.; et al. Drone deep reinforcement learning: A review. Electronics 2021, 10, 999. [Google Scholar] [CrossRef]

- Duan, J.; Eben Li, S.; Guan, Y.; Sun, Q.; Cheng, B. Hierarchical reinforcement learning for self-driving decision-making without reliance on labelled driving data. IET Intell. Transp. Syst. 2020, 14, 297–305. [Google Scholar] [CrossRef]

- Zhu, K.; Zhang, T. Deep reinforcement learning based mobile robot navigation: A review. Tsinghua Sci. Technol. 2021, 26, 674–691. [Google Scholar] [CrossRef]

- Yan, S.; Wu, Z.; Wang, J.; Huang, Y.; Tan, M.; Yu, J. Real-world learning control for autonomous exploration of a biomimetic robotic shark. IEEE Trans. Ind. Electron. 2022, 70, 3966–3974. [Google Scholar] [CrossRef]

- Vu, Q.T.; Pham, M.H.; Nguyen, V.D.; Duong, V.T.; Nguyen, H.H.; Nguyen, T.T. Optimization of central pattern generator-based locomotion controller for fish robot using deep deterministic policy gradient. In Proceedings of the International Conference on Engineering Research and Applications, ICERA 2022, Cairo, Egypt, 6–8 March 2022; pp. 764–770. [Google Scholar]

- Deng, H.; Burke, P.; Li, D.; Cheng, B. Design and experimental learning of swimming gaits for a magnetic, modular, undulatory robot. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 9562–9568. [Google Scholar]

- Hong, Z.; Wang, Q.; Zhong, Y. Parameters optimization of body wave control method for multi-joint robotic fish based on deep reinforcement learning. In Proceedings of the 2022 IEEE International Conference on Robotics and Biomimetics (ROBIO), Xishuangbanna, China, 5–9 December 2022; pp. 604–609. [Google Scholar]

- Hameed, I.; Chao, X.; Navarro-Alarcon, D.; Jing, X. Training dynamic motion primitives using deep reinforcement learning to control a robotic tadpole. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 6881–6887. [Google Scholar]

- Li, L.; Liu, D.; Deng, J.; Lutz, M.J.; Xie, G. Fish can save energy via proprioceptive sensing. Bioinspir. Biomim. 2021, 16, 056013. [Google Scholar] [CrossRef]

- Dong, H.; Wu, Z.; Meng, Y.; Tan, M.; Yu, J. Gliding motion optimization for a biomimetic gliding robotic fish. IEEE ASME Trans. Mechatron. 2021, 27, 1629–1639. [Google Scholar] [CrossRef]

- Liu, J.; Hu, H.; Gu, D. RL-Based Optimisation of Robotic Fish Behaviours. In Proceedings of the 2006 6th World Congress on Intelligent Control and Automation, Dalian, China, 14–16 June 2006; Volume 1, pp. 3992–3996. [Google Scholar]

- Zhang, J.; Zhou, L.; Cao, B. Learning swimming via deep reinforcement learning. arXiv 2022, arXiv:2209.10935. [Google Scholar]

- Chen, G.; Lu, Y.; Yang, X.; Hu, H. Reinforcement learning control for the swimming motions of a beaver-like, single-legged robot based on biological inspiration. Rob. Auton. Syst. 2022, 154, 104116. [Google Scholar] [CrossRef]

- Wang, Q.; Hong, Z.; Zhong, Y. Learn to swim: Online motion control of an underactuated robotic eel based on deep reinforcement learning. Biomimetics 2022, 2, 100066. [Google Scholar] [CrossRef]

- Zhang, T.; Tian, R.; Wang, C.; Xie, G. Path-following control of fish-like robots: A deep reinforcement learning approach. IFAC-PapersOnLine 2020, 53, 8163–8168. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, R.; Wang, Y.; Wang, S. Locomotion control of a hybrid propulsion biomimetic underwater vehicle via deep reinforcement learning. In Proceedings of the 2021 IEEE International Conference on Real-Time Computing and Robotics (RCAR), Xining, China, 15–19 July 2021; pp. 211–216. [Google Scholar]

- Yu, J.; Li, X.; Pang, L.; Wu, Z. Design and attitude control of a novel robotic jellyfish capable of 3d motion. Sci. China Inf. Sci. 2019, 62, 194201. [Google Scholar] [CrossRef]

- Wu, Q.; Wu, Y.; Yang, X.; Zhang, B.; Wang, J.; Chepinskiy, S.A.; Zhilenkov, A.A. Bipedal walking of underwater soft robot based on data-driven model inspired by octopus. Front. Robot. AI 2022, 9, 815435. [Google Scholar] [CrossRef] [PubMed]

- Su, Z.Q.; Zhou, M.; Han, F.F.; Zhu, Y.W.; Song, D.L.; Guo, T.T. Attitude control of underwater glider combined reinforcement learning with active disturbance rejection control. J. Mar. Sci. Technol. 2019, 24, 686–704. [Google Scholar] [CrossRef]

- Zhang, D.; Pan, G.; Cao, Y.; Huang, Q.; Cao, Y. Depth control of a biomimetic manta robot via reinforcement learning. In Proceedings of the Cognitive Systems and Information Processing: 7th International Conference, ICCSIP 2022, Fuzhou, China, 17–18 December 2022; pp. 59–69. [Google Scholar]

- Pan, J.; Zhang, P.; Wang, J.; Liu, M.; Yu, J. Learning for depth control of a robotic penguin: A data-driven model predictive control approach. IEEE Trans. Ind. Electron. 2022. [Google Scholar] [CrossRef]

- Youssef, S.M.; Soliman, M.; Saleh, M.A.; Elsayed, A.H.; Radwan, A.G. Design and control of soft biomimetic pangasius fish robot using fin ray effect and reinforcement learning. Sci. Rep. 2022, 12, 21861. [Google Scholar] [CrossRef]

- Wang, Y.; Tang, C.; Wang, S.; Cheng, L.; Wang, R.; Tan, M.; Hou, Z. Target tracking control of a biomimetic underwater vehicle through deep reinforcement learning. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 3741–3752. [Google Scholar] [CrossRef] [PubMed]

- Rajendran, S.K.; Zhang, F. Design, modeling, and visual learning-based control of soft robotic fish driven by super-coiled polymers. Front. Robot. AI 2022, 8, 431. [Google Scholar] [CrossRef] [PubMed]

- Yu, J.; Wu, Z.; Yang, X.; Yang, Y.; Zhang, P. Underwater target tracking control of an untethered robotic fish with a camera stabilizer. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 6523–6534. [Google Scholar] [CrossRef]

- Yan, S.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. Efficient cooperative structured control for a multijoint biomimetic robotic fish. IEEE ASME Trans. Mechatron. 2021, 26, 2506–2516. [Google Scholar] [CrossRef]

- Liu, J.; Liu, Z.; Wu, Z.; Yu, J. Three-dimensional path following control of an underactuated robotic dolphin using deep reinforcement learning. In Proceedings of the 2020 IEEE International Conference on Real-Time Computing and Robotics (RCAR), Virtual Event, 28–29 September 2020; pp. 315–320. [Google Scholar]

- Ma, L.; Yue, Z.; Zhang, R. Path tracking control of hybrid-driven robotic fish based on deep reinforcement learning. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020; pp. 815–820. [Google Scholar]

- Qiu, C.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. Multi-agent reinforcement learning based stable path tracking control for a bionic robotic fish with reaction wheel. IEEE Trans. Ind. Electron. 2023. [Google Scholar] [CrossRef]

- Duraisamy, P.; Nagarajan Santhanakrishnan, M.; Rengarajan, A. Design of deep reinforcement learning controller through data-assisted model for robotic fish speed tracking. J. Bionic Eng. 2022, 20, 953–966. [Google Scholar] [CrossRef]

- Zhang, T.; Yue, L.; Wang, C.; Sun, J.; Zhang, S.; Wei, A.; Xie, G. Leveraging imitation learning on pose regulation problem of a robotic fish. IEEE Trans. Neural Netw. Learn. Syst. 2022. [Google Scholar] [CrossRef]

- Yan, L.; Chang, X.; Wang, N.; Tian, R.; Zhang, L.; Liu, W. Learning how to avoid obstacles: A numerical investigation for maneuvering of self-propelled fish based on deep reinforcement learning. Int. J. Numer. Methods Fluids 2021, 93, 3073–3091. [Google Scholar] [CrossRef]

- Verma, S.; Novati, G.; Koumoutsakos, P. Efficient collective swimming by harnessing vortices through deep reinforcement learning. Proc. Natl. Acad. Sci. USA 2018, 115, 5849–5854. [Google Scholar] [CrossRef]

- Sun, Y.; Yan, C.; Xiang, X.; Zhou, H.; Tang, D.; Zhu, Y. Towards end-to-end formation control for robotic fish via deep reinforcement learning with non-expert imitation. Ocean Eng. 2023, 271, 113811. [Google Scholar] [CrossRef]

- Yu, J.; Wang, C.; Xie, G. Coordination of multiple robotic fish with applications to underwater robot competition. IEEE Trans. Ind. Electron. 2015, 63, 1280–1288. [Google Scholar] [CrossRef]

- Borra, F.; Biferale, L.; Cencini, M.; Celani, A. Reinforcement learning for pursuit and evasion of microswimmers at low reynolds number. Phys. Rev. Fluid 2022, 7, 023103. [Google Scholar] [CrossRef]

- Stastny, J. Towards Solving the Robofish Leadership Problem with Deep Reinforcement Learning. Bachelor Thesis, Freie University, Berlin, Germany, 2019. [Google Scholar]

- Zhang, T.; Wang, R.; Wang, S.; Wang, Y.; Cheng, L.; Zheng, G. Autonomous skill learning of water polo ball heading for a robotic fish: Curriculum and verification. IEEE Trans. Cogn. Develop. Syst. 2022. [Google Scholar] [CrossRef]

- Cheng, L.; Zhu, X.; Chen, J.; Kai, J.; Yang, C.; Li, X.; Lei, M. A novel decision-making method based on reinforcement learning for underwater robots. In Proceedings of the 2019 2nd International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 20–22 December 2019; pp. 364–369. [Google Scholar]

- Tong, R.; Qiu, C.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. NA-CPG: A robust and stable rhythm generator for robot motion control. Biomim. Intell. Robot. 2022, 2, 100075. [Google Scholar] [CrossRef]

- Nguyen, V.D.; Vo, D.Q.; Duong, V.T.; Nguyen, H.H.; Nguyen, T.T. Reinforcement learning-based optimization of locomotion controller using multiple coupled CPG oscillators for elongated undulating fin propulsion. Math. Biosci. Eng. 2022, 19, 738–758. [Google Scholar] [CrossRef]

- Tong, R.; Wu, Z.; Wang, J.; Tan, M.; Yu, J. Online optimization of normalized cpgs for a multi-joint robotic fish. In Proceedings of the 2021 40th Chinese Control Conference (CCC), Shanghai, China, 27–29 July 2021; pp. 4205–4210. [Google Scholar]

- Min, S.; Won, J.; Lee, S.; Park, J.; Lee, J. SoftCon: Simulation and control of soft-bodied animals with biomimetic actuators. ACM Trans. Graph. 2019, 38, 1–12. [Google Scholar] [CrossRef]

- Ishige, M.; Umedachi, T.; Taniguchi, T.; Kawahara, Y. Exploring behaviors of caterpillar-like soft robots with a central pattern generator-based controller and reinforcement learning. Soft Robot. 2019, 6, 579–594. [Google Scholar] [CrossRef]

- Sola, Y.; Le Chenadec, G.; Clement, B. Simultaneous control and guidance of an auv based on soft actor–critic. Sensors 2022, 22, 6072. [Google Scholar] [CrossRef]

- Snyder, S. Using spiking neural networks to direct robotic fish towards a target. J. Stud.-Sci. Res. 2022, 4. [Google Scholar] [CrossRef]

- Liu, W.; Jing, Z.; Pan, H.; Qiao, L.; Leung, H.; Chen, W. Distance-directed target searching for a deep visual servo sma driven soft robot using reinforcement learning. J. Bionic Eng. 2020, 17, 1126–1138. [Google Scholar] [CrossRef]

- Chu, Z.; Sun, B.; Zhu, D.; Zhang, M.; Luo, C. Motion control of unmanned underwater vehicles via deep imitation reinforcement learning algorithm. IET Intell. Transp. Syst. 2020, 14, 764–774. [Google Scholar] [CrossRef]

- Yin, H.; Guo, S.; Shi, L.; Zhou, M.; Hou, X.; Li, Z.; Xia, D. The vector control scheme for amphibious spherical robots based on reinforcement learning. In Proceedings of the 2021 IEEE International Conference on Mechatronics and Automation (ICMA), Portland, OR, USA, 3–6 October 2021; pp. 594–599. [Google Scholar]

- Mao, Y.; Gao, F.; Zhang, Q.; Yang, Z. An AUV target-tracking method combining imitation learning and deep reinforcement learning. J. Mar. Sci. Eng. 2022, 10, 383. [Google Scholar] [CrossRef]

- Behrens, M.R.; Ruder, W.C. Smart magnetic microrobots learn to swim with deep reinforcement learning. Adv. Intell. Syst. 2022, 4, 2200023. [Google Scholar] [CrossRef]

- Tian, R.; Li, L.; Wang, W.; Chang, X.; Ravi, S.; Xie, G. CFD based parameter tuning for motion control of robotic fish. Bioinspir. Biomim. 2020, 15, 026008. [Google Scholar] [CrossRef]

- Hess, A.; Tan, X.; Gao, T. CFD-based multi-objective controller optimization for soft robotic fish with muscle-like actuation. Bioinspir. Biomim. 2020, 15, 035004. [Google Scholar] [CrossRef] [PubMed]

- Parras, J.; Zazo, S. Robust Deep reinforcement learning for underwater navigation with unknown disturbances. In Proceedings of the ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3440–3444. [Google Scholar]

- Li, W.K.; Chen, H.; Cui, W.C.; Song, C.H.; Chen, L.K. Multi-objective evolutionary design of central pattern generator network for biomimetic robotic fish. Complex Intell. Syst. 2023, 9, 1707–1727. [Google Scholar] [CrossRef]

- Liu, W.; Bai, K.; He, X.; Song, S.; Zheng, C.; Liu, X. Fishgym: A high-performance physics-based simulation framework for underwater robot learning. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 6268–6275. [Google Scholar]

- Drago, A.; Carryon, G.; Tangorra, J. Reinforcement learning as a method for tuning CPG controllers for underwater multi-fin propulsion. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 11533–11539. [Google Scholar]

- Sun, Y.; Ran, X.; Zhang, G.; Xu, H.; Wang, X. AUV 3D path planning based on the improved hierarchical deep q network. J. Mar. Sci. Eng. 2020, 8, 145. [Google Scholar] [CrossRef]

| Algorithms | Model-Based/Model-Free | Learning Task | On-Policy/Off-Policy |

|---|---|---|---|

| Policy iteration | Model-based | State value | — |

| Value iteration | Model-based | State value | — |

| Monte Carlo method (MC) | Model-free | State value | On-policy |

| Temporal difference method (TD) | Model-free | State value | On-policy |

| Sarsa | Model-free | Optimal value function | On-policy |

| Q-learning | Model-free | Optimal value function | Off-policy |

| Q() | Model-free | Optimal value function | Off-policy |

| Double Q-learning | Model-free | Optimal value function | Off-policy |

| DQN | Model-free | Optimal value function | Off-policy |

| A3C | Model-free | Optimal policy | On-policy |

| TRPO | Model-free | Optimal policy | On-policy |

| PPO | Model-free | Optimal policy | On-policy |

| DPG | Model-free | Interpolating between policy optimization and optimal value function | Off-policy |

| DDPG | Model-free | Interpolating between policy optimization and optimal value function | Off-policy |

| Platform | Task | RL Method | Performance |

|---|---|---|---|

| Fish robot (2022) [88] | CPG optimization | DDPG | Higher CPG convergence speed |

| Bot (2021) [89] | CPG-based gait learning | PGPE | Speed optimization |

| Five-jointed robotic fish (2022) [90] | Body-wave-based control | SAC | Wave parameter optimization |

| Robotic tadpole (2022) [91] | DMP-based motion control | TRPO | Validating method effectiveness |

| Robotic fish (2021) [92] | Bionic control | Q-learning | Energy saving |

| Gliding robotic fish (2022) [93] | Bionic gliding control | double DQN | Energy saving |

| MT1 Profile (2006) [94] | Bionic control | PG-RL | Effective steering |

| Tail fin (2022) [95] | Bionic flapping motion | On-policy RL | High hydrodynamic efficiency |

| Beaver-like robot (2022) [96] | Bionic control | Q-learning | Multiple bionic actions control |

| Robotic fish (2022) [17] | Attitude holding control | DDPG | Holding desired angle of attack |

| Fish-like robot (2022) [18] | Pose, path-following control | A2C | A general learning framework |

| Soft robot (2021) [81] | Motion control | SAC | Line tracking under disturbances |

| SCP fish robot (2018) [82] | Speed control | Q-learning | Effective control method |

| Robotic eel (2022) [97] | Motion control | SAC | Effective online control |

| Fish-like robot (2020) [98] | Path-following control | A2C | Dynamics-free control |

| RoboDact (2021) [99] | Yaw, speed control | SAC | Effective control method |

| Robotic jellyfish (2019) [100] | Attitude control | Q-learning | Yaw maneuverability |

| Soft octopus(2022) [101] | Single-arm attitude control | DQN | Forward and turning motion |

| OUC-III (2019) [102] | Attitude control | ADRC + NAC | High-precision adaptive control |

| Bionic manta (2023) [103] | Depth control | Q-learning | Effective control method |

| Robotic penguin (2022) [104] | Depth control | MPC-LOS + DDPG | Effective control method |

| Soft bionic Pangasius (2022) [105] | Path-following control | PPO, A2C, DQN | Effective control method |

| Bionic vehicle (2022) [106] | Target-following control | DPG-AC | Effective control method |

| SCP fish robot (2022) [107] | Yaw, path-following control | DDPG | Effective control method |

| Three-jointed fish robot (2021) [108] | Target-following control | DDPG | Real-time 2D target tracking |

| Bionic robotic fish (2021) [109] | Tracking control | DDPG | Energy-efficient control |

| Robotic Dolphin (2022) [110] | Path-following control | Improved DDPG | Effective control method |

| Hybrid fish robot (2022) [111] | Path-following control | DDQN | Better tracking accuracy |

| Wire-driven robotic fish (2023) [112] | Path-following control | MARL | Improved accuracy and stability |

| Robotic fish (2022) [113] | Speed control | DDPG and TD3 | Improved speed tracking |

| Robotic fish (2022) [114] | Pose control | DDPG-DIR | Pose control under disturbances |

| G9 robotic fish (2006) [69] | Underwater searching | Q-learning | Tank lap swimming |

| Robotic shark (2022) [87] | Underwater searching | DDPG | Exploration efficiency boost |

| Self-propelled fish (2021) [115] | Obstacle avoidance | One-step AC | Complex obstacles avoidance |

| Swarm simulator (2018) [116] | Formation control | DDPG+LSTM | Formation energy-saving |

| CFD-based fish (2023) [117] | Formation control | D3QN | Leader–follower topology |

| Fish-like robots (2021) [16] | Formation control | MARL | Effective circle formation control |

| Multiple robotic fish (2017) [118] | Coordination control | Fuzzy RL | Improving game winning chances |

| Microswimmers (2022) [119] | Pursue evasion game | NAC + MARL | Pursue or evasion decision |

| Simulated agents (2019) [120] | Leadership decision | PPO | Swarm interaction groundwork |

| RoboDact (2022) [121] | Water Polo Ball Heading | SAC | Self-heading water polo ball |

| Underwater robot (2019) [122] | Behavior decision | Q-learning | Better decision making |

| ID | Training | Deployment | Primary Computational Cost |

|---|---|---|---|

| 1 | Simulation-based training | Simulation-based deployment | Robot modeling cost |

| 2 | Simulation-based training | Real-world deployment | Robot modeling cost |

| 3 [18] | Numerically driven simulation training ⇒ CFD-based training | Real-world deployment | CFD modeling cost, high-precision simulation cost |

| 4 [131] | Imitation-learning-based teaching ⇒ Simulation-based training | Real-world deployment | Physical data acquisition cost |

| 5 [87] | Imitation-learning-based pre-training ⇒ Real-world training | Real-world deployment | Supervision cost for underwater training, safety risks of robot motion |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tong, R.; Feng, Y.; Wang, J.; Wu, Z.; Tan, M.; Yu, J. A Survey on Reinforcement Learning Methods in Bionic Underwater Robots. Biomimetics 2023, 8, 168. https://doi.org/10.3390/biomimetics8020168

Tong R, Feng Y, Wang J, Wu Z, Tan M, Yu J. A Survey on Reinforcement Learning Methods in Bionic Underwater Robots. Biomimetics. 2023; 8(2):168. https://doi.org/10.3390/biomimetics8020168

Chicago/Turabian StyleTong, Ru, Yukai Feng, Jian Wang, Zhengxing Wu, Min Tan, and Junzhi Yu. 2023. "A Survey on Reinforcement Learning Methods in Bionic Underwater Robots" Biomimetics 8, no. 2: 168. https://doi.org/10.3390/biomimetics8020168

APA StyleTong, R., Feng, Y., Wang, J., Wu, Z., Tan, M., & Yu, J. (2023). A Survey on Reinforcement Learning Methods in Bionic Underwater Robots. Biomimetics, 8(2), 168. https://doi.org/10.3390/biomimetics8020168