A Day/Night Leader-Following Method Based on Adaptive Federated Filter for Quadruped Robots

Abstract

1. Introduction

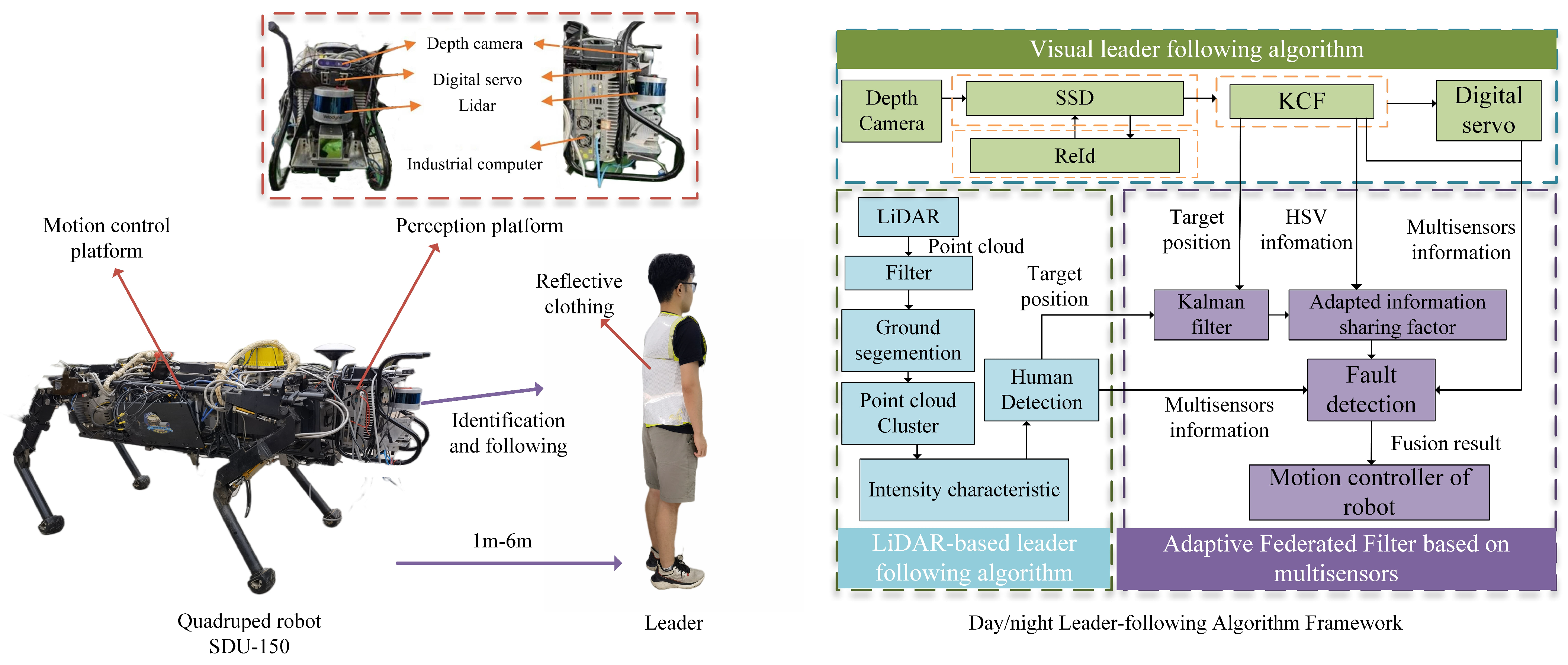

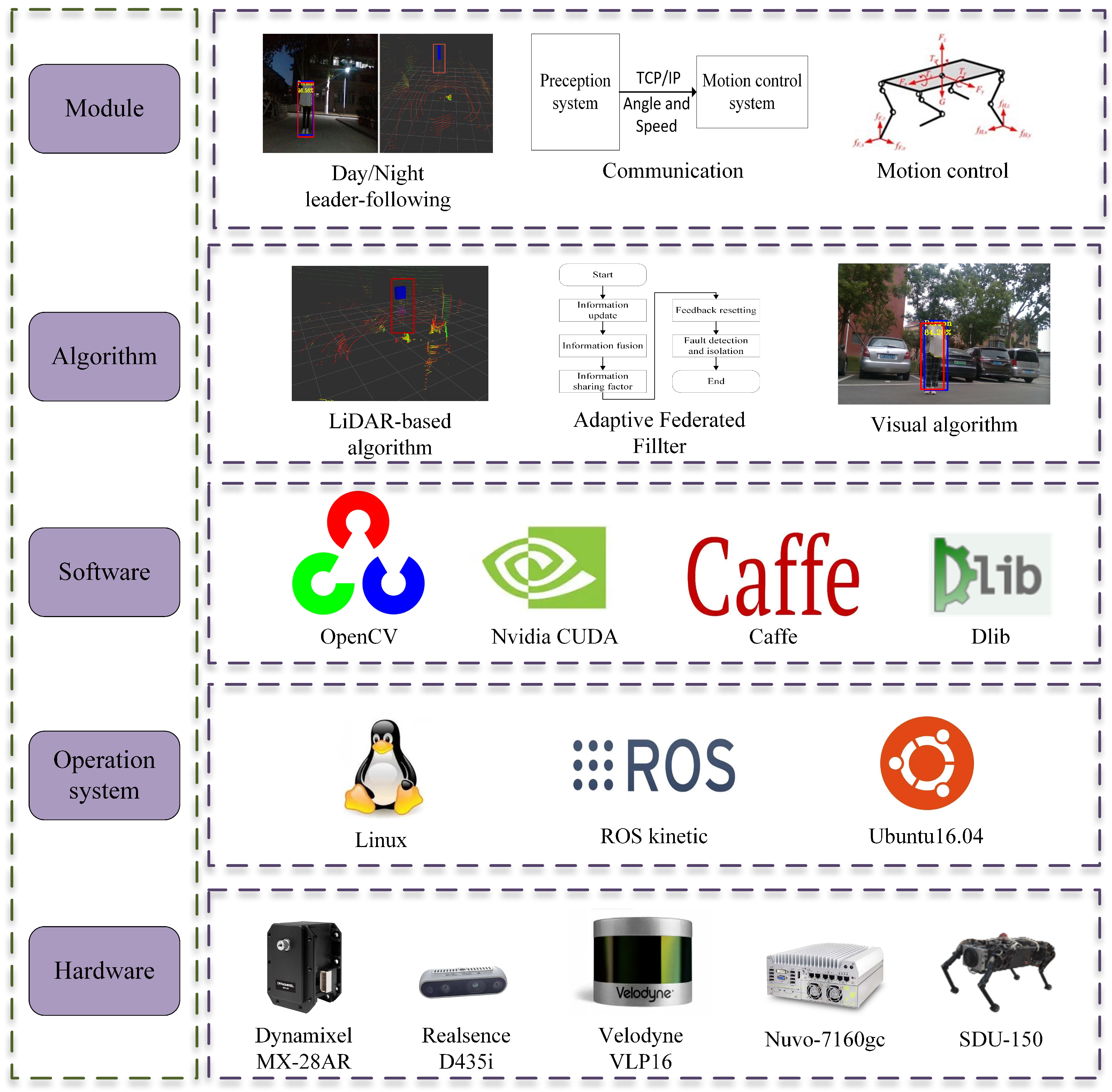

- We build a leader-following system comprising person detection, communication, and motion control modules. This system enables the quadruped robot to follow a leader in real time.

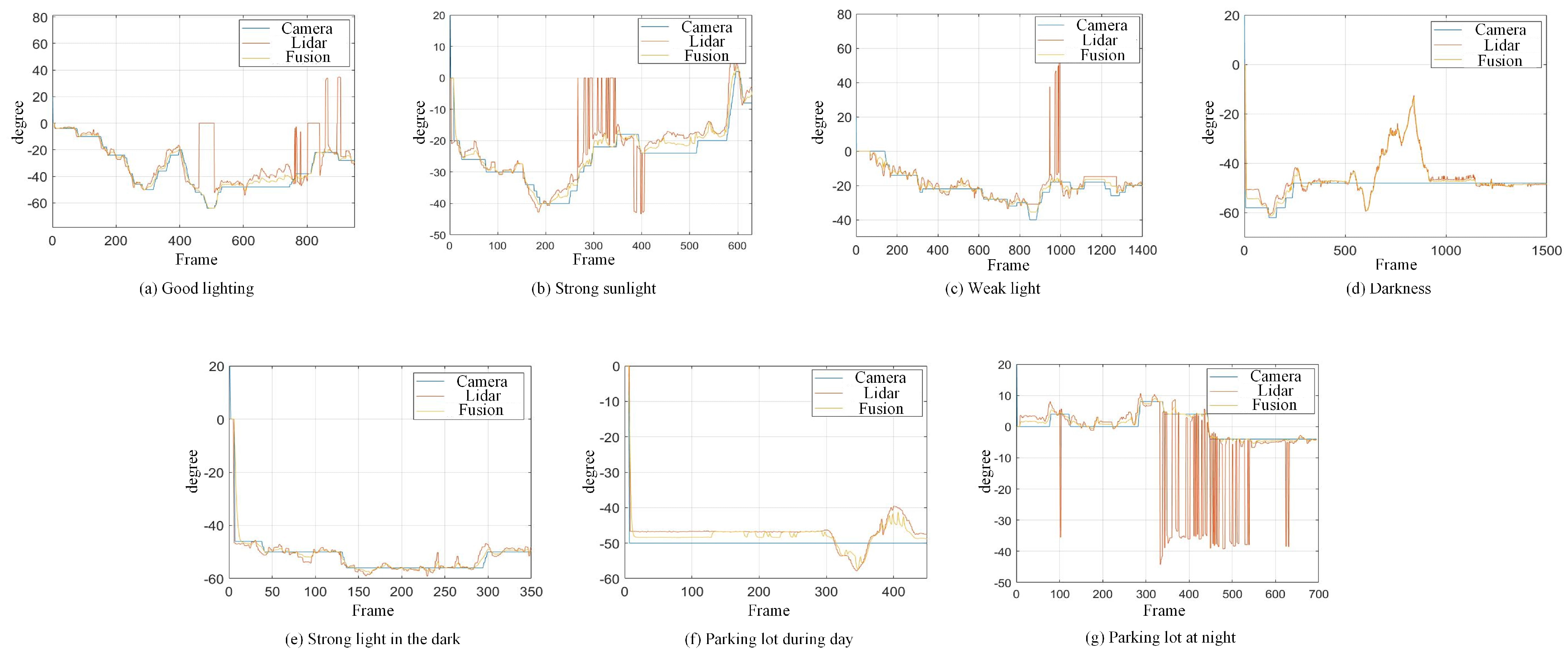

- We propose an adaptive federated filter framework that adjusts the information sharing factors according to lighting conditions. The framework fuses visual and LiDAR-based detection, which enables quadruped robots to follow a leader by day and by night.

- We establish a fault detection and isolation algorithm that improves the stability and robustness of day/night leader-following. The algorithm makes full use of the information from multiple sensors and detection algorithms, and it copes with high-frequency vibrations, illumination variations, and interference from reflective materials.
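As a rough illustration of the fusion idea in the second contribution, the sketch below shows a textbook federated-filter fusion step and feedback reset in Python. It is not the authors' implementation: the function names `fuse` and `reset_local_variances`, the diagonal-covariance simplification, and the example numbers are all assumptions made for illustration.

```python
def fuse(estimates, variances):
    """Information-weighted fusion of local estimates, one axis at a time.

    estimates: per-sensor position tuples, e.g. [(x, y), (x, y)]
    variances: per-sensor variance tuples (diagonal covariance is assumed)
    """
    dims = len(estimates[0])
    fused_pos, fused_var = [], []
    for d in range(dims):
        # Total information on this axis is the sum of the inverse variances.
        info = sum(1.0 / v[d] for v in variances)
        fused_var.append(1.0 / info)
        fused_pos.append(
            sum(e[d] / v[d] for e, v in zip(estimates, variances)) / info
        )
    return tuple(fused_pos), tuple(fused_var)


def reset_local_variances(global_var, betas):
    """Feedback reset: local filter i restarts from global_var / beta_i."""
    return [tuple(g / b for g in global_var) for b in betas]


# Hypothetical example: a visual estimate and a LiDAR estimate of the leader.
visual = (1.0, 0.0)
lidar = (3.0, 0.0)
pos, var = fuse([visual, lidar], [(1.0, 1.0), (3.0, 3.0)])

# Assumed sharing factors (visual, LiDAR) that sum to one.
local_vars = reset_local_variances(var, [0.9, 0.1])
```

When the sharing factors sum to one, the reset conserves information: the inverse variances of the local filters add up to the inverse of the global variance.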

2. Related Works

3. Methods

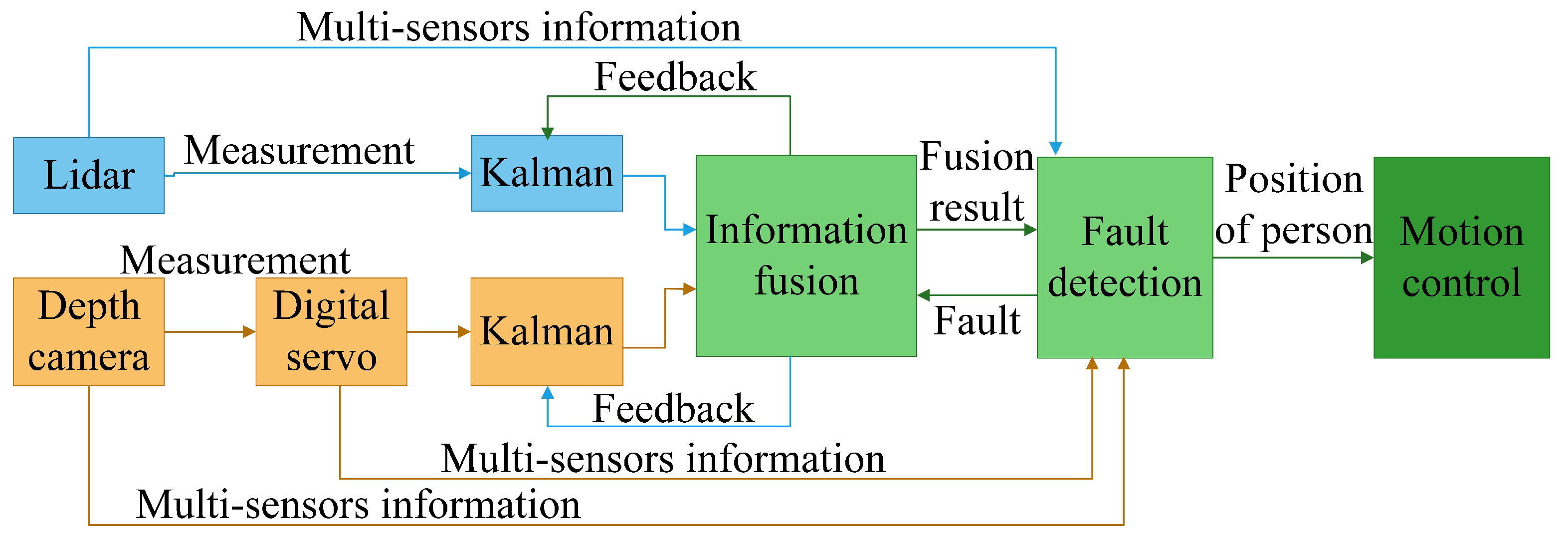

3.1. Information Update

3.2. Information Fusion

3.3. Information Sharing Factor

3.4. Feedback Resetting

3.5. Fault Detection and Isolation

| Algorithm 1: Fault detection and isolation |
|---|
| Input: motor measurements; the person position detected by the LiDAR algorithm; the number of persons detected by the visual algorithm |
| Output: robot motion control parameters |

4. Experiments

4.1. Experimental Setup

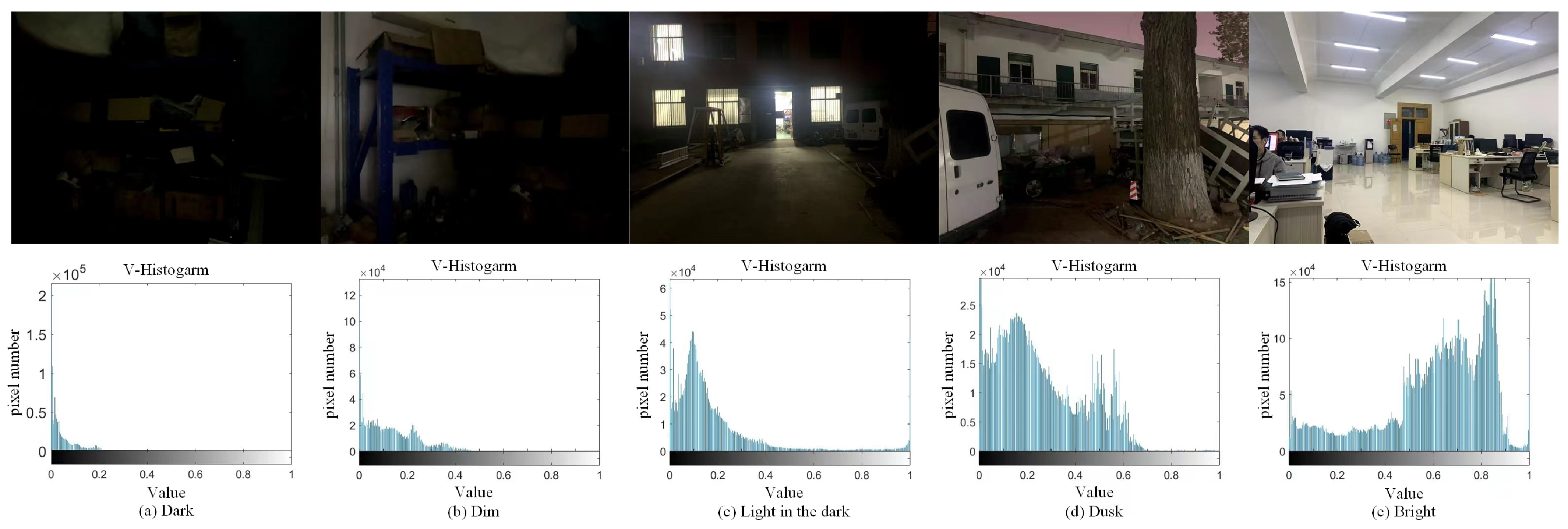

4.2. Information Sharing Factor Construction

4.3. Effectiveness Verification

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Median of Value | Lighting Condition | Visual Information Sharing Factor |
|---|---|---|
| 0–0.2 | darkness | 0.9 |
| 0.2–0.4 | weak light | 0.7 |
| 0.4–1 | good light | 0.5 |
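The table above can be read as a simple lookup, sketched below in Python. Several details are assumptions rather than statements from the source: that the "value" is a per-pixel brightness normalized to [0, 1], that each boundary (0.2, 0.4) belongs to the brighter interval, and that the LiDAR factor is the complement of the visual factor so the two sum to one. All function names are hypothetical.

```python
import statistics


def median_brightness(values):
    """Median of per-pixel brightness values, assumed normalized to [0, 1]."""
    return statistics.median(values)


def visual_sharing_factor(median_value):
    """Map the median value to (lighting condition, visual sharing factor)."""
    if not 0.0 <= median_value <= 1.0:
        raise ValueError("median value must lie in [0, 1]")
    if median_value < 0.2:
        return "darkness", 0.9
    if median_value < 0.4:  # assumption: 0.2 itself counts as weak light
        return "weak light", 0.7
    return "good light", 0.5  # assumption: 0.4 itself counts as good light


def lidar_sharing_factor(visual_factor):
    """Assumed complement so that the two sharing factors sum to one."""
    return 1.0 - visual_factor


condition, beta_visual = visual_sharing_factor(median_brightness([0.05, 0.1, 0.3]))
beta_lidar = lidar_sharing_factor(beta_visual)
```

Here the hypothetical sample has a median of 0.1, so the lookup returns the darkness row of the table.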

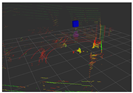

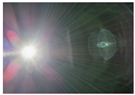

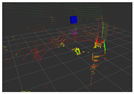

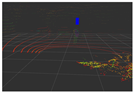

| Lighting Condition | Experimental Scenes | Visual Detection | LiDAR-Based Detection |
|---|---|---|---|
| Good light |  |  |  |
| Strong sunlight |  |  |  |
| Weak light |  |  |  |
| Darkness |  |  |  |
| Strong flashlight in the dark |  |  |  |
| Parking lot during day |  |  |  |
| Parking lot at night |  |  |  |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, J.; Guo, J.; Chai, H.; Zhang, Q.; Li, Y.; Wang, Z.; Zhang, Q. A Day/Night Leader-Following Method Based on Adaptive Federated Filter for Quadruped Robots. Biomimetics 2023, 8, 20. https://doi.org/10.3390/biomimetics8010020
