2. Space Salvage

An alternative approach to space debris mitigation is to recover the debris and, rather than de-orbiting it, exploit it as a resource, that is, as salvage. This might be regarded as a more sustainable approach to space debris control. Only robotic manipulation is flexible enough to deal with both large and small debris, unlike harpoons and nets, which generate complex uncontrollable dynamic interactions between the robotic freeflyer, the target and the flexible umbilical connecting the two. This favours space debris mitigation through the deployment of freeflyer spacecraft mounted with dextrous manipulators which provide controlled interaction with the target [4]. Robot manipulators have been the workhorse of industrial applications for a range of tasks where precision positioning is required, including machining, welding, sanding, spraying and assembly. For machining applications, parallel kinematic machines such as the six degree of freedom Stewart platform are unnecessary given that three or five degrees of freedom are sufficient and can offer high position accuracy [5]. However, it has been recommended that one degree of freedom of redundancy above six degrees of freedom be included to compensate for joint failure [6]. The 75 kg Baxter with two seven degree-of-freedom arms is a new industrial standard which has a teach-and-follow facility. The arms are driven by series elastic actuators which give it high compliance. We propose that a minimum of two arms is required for grappling space debris targets. It is presumed that capture of defunct spacecraft will occur using either specialised tooling applied through the apogee thruster or at attachment points on the launch adaptor ring. We propose a bio-inspired freeflyer concept that specifically addresses the requirement for adaptability to a range of space debris sizes, offering a salvage solution that is robust to any orbital band. For robotic manipulation, there are three major manoeuvre requirements: (i) controlling freeflyer stability whilst manoeuvring the arms to grapple the target; (ii) manoeuvring the composite object once grappling is completed and then warehousing the captured assets (such as at the International Space Station); (iii) salvaging parts from the debris target for re-processing into new space assets.

During the initial manoeuvre for grappling, there is dynamic coupling between the manipulator arm(s) and the spacecraft base on which they are mounted. The freefloating mode involves controlling the manipulator arm but allowing the spacecraft base attitude to be uncontrolled. There are a host of reasons why this is undesirable, the most prominent being the existence of unpredictable dynamic singularities [7]. It has been proposed that a controlled floating mode be adopted that simultaneously controls the manipulator arm trajectory and the spacecraft attitude trajectory so the spacecraft attitude is altered controllably [8]. However, it is usually desirable that the spacecraft attitude remains fixed to maintain nominal pointing of antennas, solar arrays and sensors. For this reason, we suggest that the freeflying mode be adopted in which the spacecraft base is stabilised and the manipulator arm trajectory is controlled with respect to it [9]. This eliminates dynamic singularities. Furthermore, traditional rigid-body manipulator controllers such as the computed torque robotic controller [10] can be readily adapted to modal control to suppress vibrations by using a virtual rigid manipulator approach, in which actual endpoint kinematic variables are replaced by those of a virtual rigid manipulator [11]. This approach, despite the increased degrees of freedom introduced by flexibility, allows the use of the smaller number of joint actuators to enforce tracking of the desired end effector trajectory. The feedback gains in the computed torque controller can be reduced by introducing feedforward torques learned through Gaussian process regression [12]. On the transition from phase (i) to phase (ii), traditional feedback control systems are limited in their ability to deal with rapid complex dynamic responses whilst grappling the target, i.e., current techniques employed in space manipulators are insufficiently adaptive and robust to handle forces of interaction from widely varying space object geometries, sizes and manipulability [13]. Rapidly changing interaction forces during manipulation can introduce instabilities in the feedback control loop due to insufficient reactivity. This can be particularly acute if the payload dynamics are only partially known. It is crucial that robust adaptive robotic manipulation is developed to solve the problem of space debris mitigation. Phase (ii) is the conventional problem of orbital manoeuvring into different orbits through the consumption of fuel; we do not address this here. Force control issues will also be essential for phase (iii): salvage is in fact an extension of on-orbit servicing which will involve a suite of complex operations involving the control of interaction forces between tooling and target. Indeed, salvage goes beyond servicing and repair of satellites to incorporate space manufacturing processes.

The salvage and recycling of space debris involves producing feedstock for manufacturing new spacecraft such as standardised cubesat designs. The idea of salvaging spacecraft goes back to proposals for orbiting and refurbishing the shuttle external tank as a useful volume. Here, we are proposing salvaging space debris, especially intact spacecraft, as an approach to sustainability. Salvage recovers high quality materials and systems from intact but dysfunctional spacecraft. It is essential to recover everything to prevent debris creation. We propose recovering large decommissioned equipment items that can easily be separated and refurbished as a resource using powered tooling that can be exploited safely: (i) aluminium tankage, plates, radiators and frames, which are typically monolithic structures that can be removed and/or cut if necessary and re-used as-is; (ii) thermal blankets, which are typically on the spacecraft surface and linked through standardised folds, which should reduce cutting requirements, but which require sophisticated handling; (iii) wiring harnesses, which are wound around the internal cavity of the spacecraft and restrained by secondary brackets and cable ties that can be cut, permitting wholesale removal of the harness; (iv) motors/gearing drives for deployment mechanisms and motorised pumps, where motors and gears will require re-lubricating with silicone grease or dry lubrication; (v) solar array panels, whose solar cells may be refurbished using laser annealing; (vi) reaction/momentum wheels and gimbals, which are located internally and recovered intact for propellantless attitude control; (vii) payload instruments/attitude sensors, which are located externally and recovered intact (though external camera optics may be degraded due to radiation exposure, dust, etc., and may be re-buffed using abrasives); (viii) antennae/travelling wave tubes/transponders/radiofrequency electronics, which are located externally/internally as a module and recovered intact for direct re-use.

Aluminium foam structures are commonly adopted as the core between welded aluminium sheets for lightweight space structures and do not require heat-limited adhesives typical of common sandwich materials [14]. We assume that the majority of tasks to dismantle the target spacecraft involve tasks similar to those employed during the Solar Maximum Repair Mission (1984), which comprised two main tasks: (i) orbital replacement exchange of a standardised externally-mounted attitude control module box through bolt manipulation (a variation on the peg-in-hole task) using standard power tools; (ii) replacement of an interior-mounted main electronics box that required manipulation of thermal blankets using specialised tooling [15]. Designing spacecraft for servicing involves the use of standardised modules (ORUs, orbital replacement units) with capture-compatible interfaces such as grapple pins, handrails, tether points, foot restraint sockets, standardised access doors, and makeable/breakable electrical/mechanical connectors. Standardised module exchange involves the manipulation of standard bolts, the preferred bolts being M8 and M10 hexagonal bolts with double head height. Captive fasteners should be employed, or the tooling should employ captive devices to prevent the loss of bolts as a further source of debris. Connector plugs should require no more than one single-handed turn to disconnect [16]. Standard power tools are automated threaded fastening systems with a typical torque range of 0.5–3.0 Nm. However, few spacecraft have been designed for servicing (the 1993 Hubble space telescope repair mission involved 150 types of tooling), necessitating substitution of standard tooling with adaptable tooling.

For components that cannot be disassembled, robot manipulators with milling tools are essentially a development from CNC (computer numerically-controlled) machines employed for a wide range of manufacturing operations [17]. Laser machining is a subtractive manufacturing process in which a laser is employed to ablate material locally in cutting. Alternatively, NASA’s Universal Hand Tool (UHT) utilises electron beams at only 8 kV for welding or cutting thin metal sheets without producing dangerous X-rays. We do not recover onboard computers, batteries or propellant as these are likely to be in a depleted, unusable or dangerous state. An important exception is field programmable gate array (FPGA) processors, which can be reprogrammed despite physical degradation (and for this reason have been proposed for employment on long-duration starship projects [18]). Thermal heat pipes represent an unknown factor: they have high utility but will require containment of the fluid medium, rendering them a challenge for robotic handling. Excess aluminium from secondary structure may be melted by solar Fresnel lens and powdered for 3D printing by selective solar melting (in particular, for the production of solar sail segments [19] to provide propellantless propulsion to compensate for the lack of recovered propellant). Such solar sails may also be deployed as drag sails in low Earth orbit or as orbit-raising propulsors in geostationary orbit, though this is disposal by traditional means.

The left-overs once these bulk items have been stripped will be dominated by aluminium, lithium compounds from batteries, and silicon and other minor materials in computer chips. The explosivity of lithium can be exploited to heat and melt the silumin-like alloy using solar Fresnel lenses which may then be powdered or drawn into wire. This requires considerably higher temperatures than aluminium smelting. Silumin alloy is a high-performance alloy used in high wear applications, but it is unclear what the effects of minor contaminants such as lithium (which may be readily excluded) and copper might be. These provide resources that can be 3D printed into any variety of joining structures to build new satellites in situ fitted with recovered panels, frames, components, etc. Additive manufacturing of complex net-shaped parts of polymer, metal, ceramic and composites has been proposed for microwave and millimetre-wave radiofrequency component manufacture in satellites [20]. The primary metrics for assessing the relative merits of 3D printing methods are dimensional accuracy, surface finish, build time and build cost [21,22,23]. Laser additive manufacturing is one approach to layer-by-layer construction, though we propose a Fresnel lens-based approach. The only aspect that requires re-supply for new spacecraft is new computer chips and associated electronics from Earth (unless FPGA processors are recovered for their reconfigurability). An alternative approach is to grind mixed materials into a powder for pyrolytic/electrostatic/magnetic separation into its component materials. This would permit exploitation of 3D printing to print de novo cubesat constellations without the restrictions imposed by pre-existing plates, frames, etc. The most complex components of the spacecraft are computer chips which comprise aluminium metal strips, doped silicon semiconductor, silica insulation (especially in silicon-on-insulator technology), copper interconnects and a host of more exotic materials. Although Al can be separated through liquation at 660 °C, recovery of the other materials will be more problematic. Silica can be recrystallised and purified through zone refining but this is a complex process. This favours processing simplicity: chips are reprocessed mixed with secondary structure, exploiting a silumin-type alloy as 3D printing feedstock without prior separation.

Salvage is a sustainable form of disposal. It is rarely discussed in the context of active debris removal but it is entirely consistent with the growing sophistication of space missions. It would be far more cost-effective to convert these potentially valuable resources into high-utility commodities than to burn them up in the atmosphere or emplace them into graveyard orbits. It is also consistent with current developments in in-situ resource utilisation (ISRU) of the Moon and asteroids [24,25]. Indeed, it shares much of the same fundamental technology of materials processing, e.g., complex assembly/disassembly manipulation, physicochemical purification, Fresnel lens pyrolysis, electrolytic processing, 3D printing, etc. Much of the equipment already in Earth orbit would have high value if transported to the Moon. Copper, so useful for electrical cabling, is extremely rare on the Moon yet miles and miles of wiring harness reside within defunct spacecraft in GEO and elsewhere. Commerce is a far more effective debris removal strategy than recommendations or regulations (which are ignored anyway). This solution will effectively convert a disaster into a bonanza: it eliminates the dangers of debris re-entry and converts what is essentially waste into recycled assets. The key to this capability will be sophisticated robotic manipulation.

3. Lessons from the Factory

Compliant manipulation is a fundamental requirement in manufacturing robotics. The importance of mechanical design in easing the complexity of control systems was illustrated by McGeer with a purely mechanical bipedal walking machine [26]. The RCC (remote centre of compliance) device is designed to ensure passive mechanical compliance in manipulator end effector behaviour during peg-in-chamfered hole (or screw, which is essentially a helical peg) assembly tasks, the most common of assembly tasks encountered in manufacturing [27,28]. The phases of a peg-in-hole task are (i) gross motion approach; (ii) chamfer crossing; (iii) single-point contact; (iv) two-point contact; (v) final alignment. The maximum angular error is determined by the peg diameter d, the hole diameter D and the coefficient of friction µ. The RCC is mounted at the interface between the tool and the wrist mount and has a nominal lateral stiffness of 25 N/mm and rotational stiffness of 325 Nm/rad. Any peg-in-hole error displaces the axis of the peg with respect to the axis of the hole, thereby preventing jamming. The RCC acts as a multi-axis “float” to allow mechanical linear and angular misalignments of up to 10–15% between parts by deflecting laterally and/or rotationally to permit assembly. Indeed, when the tool experiences a contact force or torque, the RCC deflects laterally and/or rotationally in proportion to the contact forces/torques, with the RCC internal stiffness providing the constant of proportionality. The RCC as a mechanical device offers zero-delay response. However, the RCC is limited in size range, does not allow for high-speed dynamics and requires chamfered holes. The instrumented RCC (IRCC) is based on the RCC but includes three strain gauges and three LED-detector pairs to provide active compliance across all three axes to cope with non-chamfered holes [29]. The RCC and IRCC provide a fixed stiffness and fixed location for the centre of compliance, limiting their use to specific scales of assembly operations. The variable RCC (VRCC) offers variable mechanical impedance and remote centre location through the addition of three motors for adaptive positioning with high precision [30]. These provide greater flexibility for variable peg-in-hole operations that are ubiquitous in assembly. There are detailed algorithms for computing the state of the peg-in-hole task with associated force measurements [31]. Exploratory guarded moves allow discovery-based behaviour to be implemented; indeed, most compliant exploratory tasks are in fact variations on the peg-in-hole task.
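The proportional deflection of the RCC described above can be illustrated with a short calculation. This is only a sketch assuming an ideal linear spring model with the nominal stiffness values quoted in the text; the function name and the contact load are hypothetical and chosen purely for illustration.

```python
# Nominal RCC stiffness values quoted in the text.
K_LATERAL_N_PER_MM = 25.0        # lateral stiffness, N/mm
K_ROTATIONAL_NM_PER_RAD = 325.0  # rotational stiffness, Nm/rad


def rcc_deflection(lateral_force_n, contact_torque_nm):
    """Return (lateral deflection in mm, angular deflection in rad).

    Assumes the RCC behaves as an ideal linear spring, so each deflection
    is the contact load divided by the corresponding stiffness.
    """
    lateral_mm = lateral_force_n / K_LATERAL_N_PER_MM
    angular_rad = contact_torque_nm / K_ROTATIONAL_NM_PER_RAD
    return lateral_mm, angular_rad


# Hypothetical contact load: 5 N sideways and 0.65 Nm about the compliance centre.
lateral_mm, angular_rad = rcc_deflection(5.0, 0.65)
print(f"lateral deflection: {lateral_mm:.3f} mm, angular deflection: {angular_rad:.4f} rad")
```

Under these assumptions the device yields by roughly 0.2 mm and 0.002 rad, which gives a feel for the small misalignments that passive compliance absorbs without any sensing or control delay.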

Designing and fabricating fixtures to flexibly secure workpieces for machining is an important aspect of the manufacturing process; indeed, the difference between manipulator grippers and dynamic fixtures is subtle. Robotic manipulators can exploit visual monitoring of automated microelectronic component assembly with actuated jigs [32]. Jigs and fixtures are mechanisms to impose structure into the work environment. They are devices to position, orient and constrain the workpiece to ensure fidelity of manufacturing operations which subject the workpiece to external forces and torques. The functional requirements of a fixture include location, constrained degrees of freedom, rigidity, repeatability, distortion and tolerances. Traditionally, fixtures are single purpose, designed for a specific part, and require significant human involvement for setup and reconfiguration. There are certain design constraints to fixtures [33]: (i) form closure in which position/orientation wrenches are balanced to ensure workpiece stability to perturbations; (ii) accessibility/detachability subject to geometric constraints without interference; (iii) no deformation during fixture clamping. These constraints require kinematic, dynamic force and deformation analysis. There are four basic types of fixture (baseplate, locators, clamps and connections) and there are four major module fixturing interfaces (non-threaded hole, T-slot, dowel pin and reconfigurable system). There are 12 degrees of freedom to any workpiece (±x, ±y, ±z, ±R, ±P, ±Y). The 3:2:1 fixturing principle determines the locating points: (i) three support points are assigned to the first plane (restricting five degrees of freedom); (ii) two points are assigned to the second plane (restricting three degrees of freedom); (iii) one point is assigned to the third reference plane (restricting one degree of freedom), i.e., nine degrees of freedom are restricted by supports with the last three degrees of freedom restricted by clamps. Ideally, a single fixturing system must be automatic, flexible, adjustable and reconfigurable to accommodate different workpiece sizes and shapes. This will require the reconfigurable fixture to be composed of standard modules which can be reconfigured robotically with the fixture design automatically determined by the requirements. An example fixturing system comprised four fixture modules mounted onto a baseplate including vertical supports that were fixed first followed by horizontal supports [34]. The supports guided the emplacement of the horizontal and vertical clamps. They were all based on a vertical threaded bolt with a pair of locking levers which could be manipulated by a single-handed manipulator. There were at least three vertical supports constraining the centre of gravity for maximum stability. The horizontal supports were emplaced as far apart as possible to impose kinematic constraints. The clamps permitted adjustment of clamping forces. End point surface geometries may be solid cone, hollow cone, flat face or spring-loaded bracket. Intelligent fixturing is enabled through sensors (position and force) to measure clamping positions and motors to actuate the fixtures. In addition, visual imagers may be used to plan fixture grasping. These are robotic fixtures with robot manipulators for loading and unloading of the fixtures. Pins can become solenoid-driven actuators. There are several approaches to fixing arbitrarily shaped parts: (i) modular fixtures, electromagnetic fixtures, and electro/magnetorheological fluid fixtures; (ii) adaptable fixtures (3D gripper); (iii) self-adapting fixtures (vices with movable jaws). These all have limitations that restrict their range of applicability, and they require positioning set-up which is usually accomplished manually. The self-adapting jaw with pin arrays that mould to the geometry of the part provides high adaptability. Adaptive fixtures such as two or three-fingered adaptive fixtures are based on manipulator grippers. Compliance may be implemented through shape memory alloy wires and electrorheological fluids, e.g., [35]. Finally, fixtureless assembly adopts robotic tools and grippers without the use of fixtures. Grippers can form a hook grip, a scissor grip, a multi-fingered chuck, a squeeze grip and multiple geometric grips through their capability to mould to many geometries.
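The degree-of-freedom bookkeeping behind the 3:2:1 fixturing principle can be tallied in a few lines. This is a minimal sketch of the arithmetic stated in the text only; the dictionary keys and variable names are illustrative labels rather than established terminology.

```python
# Twelve directed degrees of freedom of a free workpiece: ±x, ±y, ±z, ±R, ±P, ±Y.
TOTAL_DOF = 12

# Degrees of freedom restricted by the locating points on each reference plane,
# as stated for the 3:2:1 principle.
restricted_per_plane = {
    "first plane (3 support points)": 5,
    "second plane (2 points)": 3,
    "third plane (1 point)": 1,
}

restricted_by_supports = sum(restricted_per_plane.values())
left_for_clamps = TOTAL_DOF - restricted_by_supports

for plane, dof in restricted_per_plane.items():
    print(f"{plane}: restricts {dof}")
print(f"restricted by supports: {restricted_by_supports}, left for clamps: {left_for_clamps}")
```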

5. The Nature of Sensorimotor Control

In a robotic or biological manipulator, a set of actuators (muscles or motors) at the joints are driven to effect Cartesian movement of the end effector (hand or tool). Motor control relates actions on the environment to their sensory effects through a transformation function. Any planned end effector trajectory for a multi-joint arm must undergo sensorimotor transformation into joint coordinates. In the human brain, the primary motor cortex and supplementary motor area encode adaptation of kinematic-dynamic transformations of movements. Voluntary movement requires three main computational processes to be implemented in the brain: (i) determination of the Cartesian trajectory in visual coordinates; (ii) transformation of visual coordinates into body coordinates in which proprioceptive feedback occurs (within the association cortex); (iii) conversion of the Cartesian trajectory in body coordinates $\theta_d$ into motor commands τ (within the motor cortex) that are sent to the muscles through the spinal cord. Internal models are used as neural models of aspects of the sensorimotor loop, including interaction with the environment, to predict and track motor behaviour. The primary motor cortex (M1) implements inverse models that convert desired end effector Cartesian trajectories into patterns of muscle contractions at the joints (output), i.e., motor commands. These coordinate transformations from external world coordinates to joint/muscle coordinates appear to be implemented between M1 and the ventral premotor cortex (PMV) [46]. The first mapping that must be achieved is the nonlinear transformation of task coordinates of the end effector q in terms of joint coordinates θ:

$$q = f(\theta)$$

where f(θ) = 4 × 4 Denavit–Hartenberg matrix (an element of the SE(3) Lie group). This may be differentiated to yield the relation of Cartesian velocities to joint velocities through the Jacobian matrix J(θ):

$$\dot{q} = J(\theta)\,\dot{\theta}$$

where J(θ) = 6 × n Jacobian matrix for n degrees of freedom. From virtual work arguments, the transpose of the Jacobian relates joint torques τ to Cartesian end effector forces F:

$$\tau = J^{T}(\theta)\,F$$

If the manipulator is kinematically redundant (i.e., n > 6), the Moore–Penrose pseudoinverse is the generalised inverse:

$$J^{+} = J^{T}\left(J J^{T}\right)^{-1}$$

The inverse dynamic model for a robotic manipulator is given by the Lagrange–Euler equations describing the output torque τ required to realise the observable kinematic state of the manipulator joints $(\theta, \dot{\theta}, \ddot{\theta})$:

$$\tau = D(\theta)\,\ddot{\theta} + C(\theta,\dot{\theta}) + G(\theta) + J^{T}F$$

where D(θ) = inertia parameter of the manipulator, $C(\theta,\dot{\theta})$ = Coriolis and centrifugal parameter of the manipulator, G(θ) = gravitational parameter of the manipulator (in the case of space manipulators, this term vanishes), F = external force at the manipulator end effector, J = Jacobian matrix. The adaptive finite impulse response (FIR) filter may be used to approximate the inverse dynamic model of a process through mean square error minimisation [47]. Several regions of the brain project into motor area M1, providing feedback signals from the primary somatosensory cortex, posterior area 5 and from the thalamus via the cerebellum. In feedback control, the actual trajectory is compared with the desired trajectory, thereby defining the tracking error. This error is fed back to the motor command system to permit adjustments to reduce this error. Control systems exploit inverse models to compute the desired motor action required to achieve the desired effects on the environment (such as a desired trajectory). The feedback controller computes the motor command based on the error between the desired and estimated states. The motor command is the sum of the feedback controller command and the inverse model output. Inverse internal modelling of the kinematics and dynamics of motion is similar to adaptive sliding control [48]. The internal model constitutes an observer and essentially represents the reference model employed in adaptive controllers. An inverse model may be generated by inverting a forward model neural network representing the causal process of the plant [49]. Forward models define the causal relationship between the torque inputs and the output states of the motor trajectory (position/velocity) and the sensory states given these estimated states. The parietal cortex is concerned with visual control of hand movements; it requires 135–290 ms to process visual feedback. It computes the error between the desired Cartesian position and the current Cartesian position, the latter computed from proprioceptive feedback measurements from muscle spindles [50]. This requires an efference copy of the motor commands to create a feedforward compensation model. An efference copy (corollary discharge) of these motor commands is passed to an emulator that models the input-output behaviour of the musculoskeletal system. A hierarchical neural network model can emulate the function of the motor cortex [51]. There is an error between the actual motor patterns θ (and $\dot{\theta}$) measured by proprioceptors and the commanded motor patterns τ from the motor cortex, which is fed back as an error signal with a time delay of 40–60 ms. A forward dynamics model of the musculoskeletal system resides within the spinocerebellum-magnocellular red nucleus system. The forward model receives feedback from the proprioceptors θ and an efference copy of the motor command τ. Thus, the forward model takes the motor command τ as input and outputs the predicted trajectory θ*. The predicted movement θ* is used in conjunction with the motor command τ to compute a predicted error which is transmitted to the motor cortex with a much shorter time delay of 10–20 ms. The forward model predicts the sensory consequences of the motor commands. This top-down prediction is based on a statistical generative model of the causal structure of the world learned through input-output relations. In humans, this forward model of the musculoskeletal system has been learned since the earliest motor babbling that begins after birth. In athletes, who refer to it as muscle memory, it is refined through physical training. An inverse dynamics model of the musculoskeletal system resides within the cerebrocerebellum-parvocellular red nucleus system; it does not receive sensory inputs. The inverse dynamics model has the desired trajectory $\theta_d$ as input from which it computes motor commands τ as output. The inverse dynamics model must learn to match the forward model to generate accurate motor commands τ in order to compensate for variable external forces. The integral forward model paradigm places the forward model at the core of all perception–action processes, with sensor and motor functions fully integrated [52]. Forward models are employed to make predictions that provide top-down expectations to incoming bottom-up sensory information. Mismatch generates a prediction error that induces refinement of expectations.
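The kinematic mappings discussed in this section (task coordinates from joint angles, the Jacobian velocity relation, the Jacobian-transpose force relation and the pseudoinverse for a redundant arm) can be sketched numerically. The example below is illustrative only: it assumes a planar three-link arm with hypothetical link lengths and a two-dimensional position task, so the Jacobian is 2 × 3 rather than the 6 × n spatial case, and closed-form trigonometry stands in for the Denavit–Hartenberg matrices.

```python
import numpy as np

LINK_LENGTHS = np.array([1.0, 0.8, 0.5])  # hypothetical link lengths (m)


def forward_kinematics(theta):
    """End effector position q = f(theta) of a planar serial arm."""
    angles = np.cumsum(theta)  # absolute orientation of each link
    return np.array([np.sum(LINK_LENGTHS * np.cos(angles)),
                     np.sum(LINK_LENGTHS * np.sin(angles))])


def jacobian(theta):
    """2 x n Jacobian J(theta), the derivative of f with respect to theta."""
    angles = np.cumsum(theta)
    n = len(theta)
    J = np.zeros((2, n))
    for i in range(n):
        # Joint i rotates every link from i outwards.
        J[0, i] = -np.sum(LINK_LENGTHS[i:] * np.sin(angles[i:]))
        J[1, i] = np.sum(LINK_LENGTHS[i:] * np.cos(angles[i:]))
    return J


theta = np.array([0.3, 0.4, -0.2])  # joint angles (rad)
J = jacobian(theta)

# Velocity mapping: Cartesian velocity from joint rates.
theta_dot = np.array([0.1, -0.05, 0.2])
q_dot = J @ theta_dot

# Force mapping from virtual work: joint torques balancing an end effector force.
F = np.array([2.0, -1.0])
tau = J.T @ F

# Redundant arm (3 joints, 2 task dimensions): minimum-norm joint rates
# from the right pseudoinverse J^T (J J^T)^-1.
J_pinv = J.T @ np.linalg.inv(J @ J.T)
q_dot_desired = np.array([0.05, 0.02])
theta_dot_min_norm = J_pinv @ q_dot_desired

print("q =", forward_kinematics(theta))
print("tau =", tau)
print("minimum-norm joint rates =", theta_dot_min_norm)
```

The explicit pseudoinverse formula is valid only away from kinematic singularities, where J Jᵀ is invertible; in practice a singular-value-based routine such as `numpy.linalg.pinv` is the more robust choice.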

8. Models—Backwards and Forwards

Large delays in neural feedback signals from sensors make pure feedback strategies implausible, so predictive feedforward control is necessary with feedback being used to correct the trajectory. Two copies of a motor command are generated by the inverse model, the efference copy being passed to the forward model to simulate the expected sensory consequences which are compared with actual sensory feedback. The forward predictive model is essential for skilled motor behaviour; it models how the motor system responds to motor commands. In the forward model, motor commands are the input and are transformed into their sensory consequences as the output, i.e., forward models capture the causal relationship between input actions and their effects on the environment as measured by the sensors. The forward internal model acts as a simulator of the body and its interaction with the environment, i.e., it constitutes a predictor. The forward dynamic model of a robotic manipulator is given by:

$$\ddot{\theta} = D^{-1}(\theta)\left[\tau - C(\theta,\dot{\theta}) - G(\theta) - J^{T}F\right]$$

Joint acceleration $\ddot{\theta}$ may be integrated to yield joint rate $\dot{\theta}$ and joint rotation θ as the predicted output sensory states for torque input τ. Rather than actual accelerations, it has been suggested that desired accelerations may be employed to train these models [75]. The forward dynamic model imitates the body’s musculature, which generates a predicted trajectory output from an efference copy of input motor commands [76]. Feedforward control thus uses a model of the plant process to anticipate its response to disturbances to compensate for time delays [77]. The predicted trajectory output may be fed to the input of the feedback controller to compensate for time delays. Forward models adapt 7.5 times more quickly than inverse models alone [78]. This forward predictive control scheme has been proposed as a model of cerebellar learning from proprioceptive feedback from muscle spindles and Golgi tendon organs, which measure muscle stretch and tension. The forward model may be implemented as a neural network function approximator to the forward dynamics. This may be represented as a look-up table with weights learned from input-output pairs of visuomotor training data, e.g., CMAC (cerebellar model articulation controller) [79]. CMAC has been applied as lookup tables to reproduce input-output functions defined by the kinematics of a robotic manipulator [80]. CMAC has been applied to the grasping control of a robotic manipulator using CCD camera images transposed to object locations that were passed to a conventional robot controller [81]. The look-up table representation is not consistent with biology, however: it appears that motor adaptation does not involve the composition of look-up tables but rather the formation of a full and adaptable model which can extrapolate beyond the initial training data [82]. The Marr–Albus–Ito theory of cerebellar function represents a motor learning system similar to a multilayer perceptron [83]. Biology favours Gaussian radial basis function network representation [84], so the feedforward model may be implemented as a radial basis function network as a biomimetic representation [85]. Without calibration from actual sensory feedback, forward models will accumulate errors. The combination of feedforward (exploration) and feedback (exploitation) control provides an efficient approach to control systems. It is apparent that predictive forward models are learned prior to inverse models for the application of control [78].
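The interplay of a forward dynamic model, an inverse model and a feedback controller can be illustrated with a toy simulation. The sketch below is not the manipulator model itself: it assumes a single joint with constant inertia and viscous damping (with no gravity term, as for a space manipulator), hypothetical parameter values, a deliberately imperfect inverse model and low-gain proportional-derivative feedback. The motor command is the sum of the feedback command and the inverse model output, and the forward model is integrated numerically to obtain joint rate and joint rotation.

```python
import math

I_TRUE, B_TRUE = 0.5, 0.1   # hypothetical plant inertia (kg m^2) and damping (Nm s/rad)
I_EST, B_EST = 0.45, 0.12   # imperfect estimates held by the inverse model
KP, KD = 20.0, 2.0          # deliberately low feedback gains
DT, STEPS = 0.001, 5000     # 5 s of simulated time


def forward_model(theta_dot, tau):
    """Forward dynamics: joint acceleration produced by torque tau."""
    return (tau - B_TRUE * theta_dot) / I_TRUE


def inverse_model(theta_dot_d, theta_ddot_d):
    """Inverse dynamics: torque expected to produce the desired motion."""
    return I_EST * theta_ddot_d + B_EST * theta_dot_d


def rms_tracking_error(use_inverse_model):
    """Simulate tracking of theta_d(t) = 1 - cos(t) and return the RMS error."""
    theta, theta_dot, sq_sum = 0.0, 0.0, 0.0
    for k in range(STEPS):
        t = k * DT
        # Desired trajectory starts at rest, matching the initial joint state.
        theta_d, theta_dot_d, theta_ddot_d = 1.0 - math.cos(t), math.sin(t), math.cos(t)
        sq_sum += (theta_d - theta) ** 2
        # Feedback command from the tracking error.
        tau = KP * (theta_d - theta) + KD * (theta_dot_d - theta_dot)
        if use_inverse_model:
            # Add the feedforward torque from the inverse model.
            tau += inverse_model(theta_dot_d, theta_ddot_d)
        # Integrate acceleration to joint rate, then joint rate to joint rotation.
        theta_dot += forward_model(theta_dot, tau) * DT
        theta += theta_dot * DT
    return math.sqrt(sq_sum / STEPS)


rms_fb = rms_tracking_error(use_inverse_model=False)
rms_ff = rms_tracking_error(use_inverse_model=True)
print(f"RMS error, feedback only: {rms_fb:.4f} rad")
print(f"RMS error, feedback plus inverse model: {rms_ff:.4f} rad")
```

Even with a mismatched inverse model, the feedforward torque removes most of the predictable load from the feedback loop, which is why low feedback gains can still give good tracking in this toy setting.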

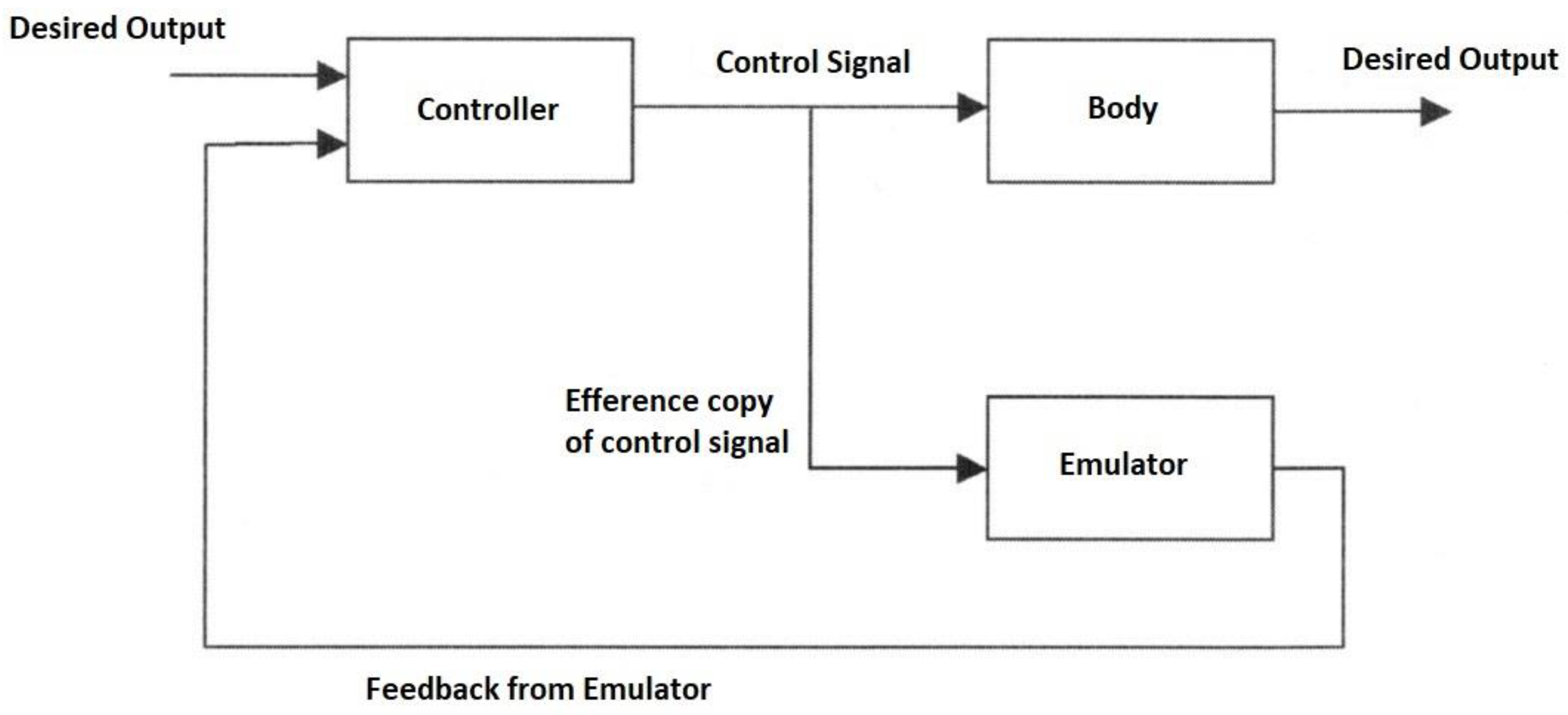

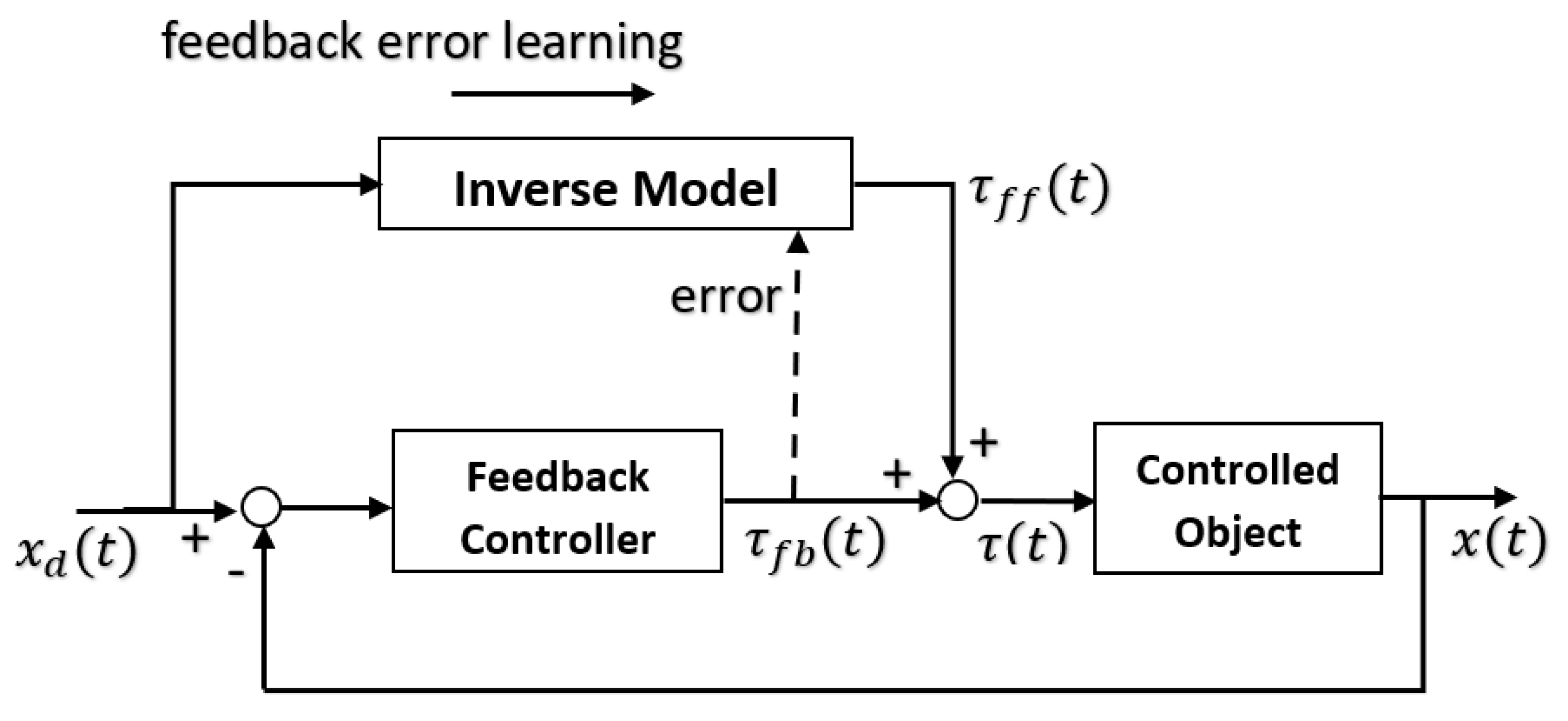

The forward model can use an efference copy of the motor command as input to cancel the sensory effects of movement (

Figure 1).

The same process cancels the effects of self-motion on the senses to distinguish it from environmental effects (e.g., self-tickling). For each forward model, there is a paired inverse model to generate the required motor command for that context cued by sensory signals. In motor control, the full internal model comprises a paired set of forward and inverse models requiring two network pathways through an inverse model and forward model, respectively, with the latter acting as a predictor. The feedback controller converts desired effects into motor commands while the feedforward predictor converts motor commands into expected sensory consequences. The feedforward and feedback components interact continuously by combining both efferent and afferent signals in the forward model, i.e., sensory feedback is essential for the forward model [

86]. The learning rule such as the delta rule of a neural network is similar to a model reference adaptation law and learns the inverse dynamic model [

87]. The feedforward neural model can be used as a nonlinear internal model for a model reference adaptive controller [

88]. The repertoire of motor skills requires multiple different internal models of smaller scope than a single large monolithic model can accommodate. Multiple paired forward and inverse models are necessary to cope with the large number of kinematic-dynamic situations that can occur. Hence, the MOSAIC (modular selection and identification for control) model proposes multiple pairs of forward predictor and inverse controller models to represent different motor behaviours [

89, 90]. This modular approach employs multiple tightly coupled inverse/forward model pairs for generating motor behaviour under widely disparate situations: 32 inverse model primitives can yield $2^{32} \sim 10^{10}$ behavioural combinations [

91]. A multitude of such modular paired forward-inverse models exist for different environmental conditions. Each predictor represents a different hypothesis test to determine which context is most appropriate with the smallest prediction error. The current context determines the selection of the predictor–controller pair. Rather than hard switching, modular forward-inverse model pairs are selected with weights. The set of sensory prediction errors from the forward models determine the probabilities that weight the outputs of the paired controllers—the combined output is the weighted sum of the outputs of the individual controllers. Multiple models may be active simultaneously whose outputs may be summed vectorially to construct more complex behaviours. The mixture-of-experts is a divide-and-conquer strategy reducing complex goals into subgoals that are selected through a gating mechanism. The mixture-of-experts approach is a statement of Ashby’s principle of requisite variety [

92]. Adaptability ensures that functionality is retained in the face of environmental perturbations—it does so by monitoring the environment and adjusting its internal parameters in response to maintain its behaviour. As the complexity of the environment increases, a greater diversity of more specialised internal control systems is required. Bayesian gating selection is accomplished from the likelihood of the forward-inverse pair with minimum prediction error

:

where σ = scaling constant. The prior probability for each sensory context $y_i$ is given by

where $f(\cdot)$ = forward model approximator (nominally, a neural network) and $w_i$ = forward model weight parameters. Bayes theorem multiplies this likelihood with the prior followed by normalisation to generate posterior probabilities:

This soft-max function transforms errors through the exponential function which is then normalised to form a “responsibility” predictor. The posterior probability is used to train the predictive forward model to ensure that priors approach posteriors. Selection between expert modules may be learned to partition the input space into different forward model regions. A gradient descent rule estimates the probability of the suitability of each expert [

91]:

Alternatively, expectation maximisation such as the Baum–Welch algorithm as used in hidden Markov models (the discrete-state analogue of the Kalman filter) offers superior performance to gradient descent. The inverse model paired with the selected forward model is then computed to implement the controller. The paired inverse model generates the required motor command through the same weighting [93]:

Similarly, learning of the inverse models is weighted:

Prediction errors from the forward model are thus used by the inverse model to generate the muscle contractions that act on the world. Prediction errors can also be used to update the predictive forward model by comparing the actual and predicted sensory data from a motor command.
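The soft-max gating and weighted controller output described above can be illustrated with a minimal sketch. It assumes scalar sensory feedback and a Gaussian likelihood with scaling constant σ; the function names (`responsibilities`, `blended_command`) and all numerical values are hypothetical and are not taken from the cited models.

```python
import math

def responsibilities(predictions, observed, priors, sigma=1.0):
    """Posterior weight of each forward model given one sensory observation.

    Assumption: Gaussian likelihood exp(-(y - y_hat)^2 / (2 sigma^2)); the
    common normalising factor cancels in the soft-max, so it is omitted.
    Priors must be strictly positive.
    """
    # Work with log(prior * likelihood) and subtract the maximum for stability.
    log_w = [
        math.log(p) - ((observed - y_hat) ** 2) / (2.0 * sigma ** 2)
        for p, y_hat in zip(priors, predictions)
    ]
    m = max(log_w)
    unnorm = [math.exp(v - m) for v in log_w]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def blended_command(commands, weights):
    """Weighted sum of the paired inverse-model (controller) outputs."""
    return sum(w * u for w, u in zip(weights, commands))

# Three hypothetical forward models predict the next sensory value.
predictions = [0.9, 0.2, -0.5]
observed = 1.0
priors = [1.0 / 3.0] * 3
lam = responsibilities(predictions, observed, priors, sigma=0.5)

# Motor commands proposed by the three paired inverse models (illustrative).
u = blended_command([2.0, 0.5, -1.0], lam)
```

The model with the smallest prediction error receives the largest weight, but the other controllers still contribute, which is the soft rather than hard switching described in the text.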

Any action sequence may be represented as a set of schemas organised hierarchically. The highest schema activates component schemas representing units of movement comprising the specific action sequence [

94]. A motor schema is an encapsulated control system module with neural maps to define spatial-temporal control actions of muscles according to proprioceptive feedback signals [

95]. Motor schemas appear to be represented in cortical areas 6 and 7a. The forward model constitutes a body schema representation that maps its sensorimotor behaviour for simulating actions without motor execution [

96]. This emphasises forward and inverse kinematic transforms that can be updated through learning by recognising the correlation between visual images, proprioceptive and tactile feedback and motor joint commands. A Bayesian network model of manipulator kinematic structure, in which nodes represent body part poses, has been shown to learn and represent both the forward and inverse kinematic structure of a robotic manipulator [97]. The phantom limb syndrome, in both congenital limb absence and subsequent amputation, is due to a neural network representation in the somatosensory cortex and connected areas with a genetic origin that is modified by sensory experience [

98]. This is part of the body schema that emulates the human body. We can exploit tools to extend our senses to detect objects as if the tools were parts of our body [

99]. Skilled tool use demonstrates that forward models are adaptively trained using prediction errors [

100]. This sensory embodiment is enabled by the learning capacity of predictor–controller models of Euler–Bernoulli vibrating beams in the cerebellum. The weight of an object to be grasped must be predicted to determine the required grip force 150 ms prior to contact [

101]—it is estimated as the weighted mean force from previous trials, i.e., a predictive model [

102].
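A weighted mean of previous trials, as described above for grip force prediction, can be sketched as follows. The geometric recency weighting and the `decay` parameter are assumptions made for illustration; the source only states that a weighted mean of previous forces is used.

```python
def predict_grip_force(previous_forces, decay=0.5):
    """Predict the next grip force as a weighted mean of earlier trials.

    Assumption: weights fall off geometrically with trial age, so the most
    recent trial has weight 1, the one before it `decay`, and so on.
    `previous_forces` is ordered oldest first.
    """
    if not previous_forces:
        raise ValueError("at least one previous trial is required")
    n = len(previous_forces)
    weights = [decay ** (n - 1 - k) for k in range(n)]
    return sum(w * f for w, f in zip(weights, previous_forces)) / sum(weights)

# Illustrative grip forces in newtons from three earlier lifts, oldest first.
trials = [4.0, 4.0, 6.0]
predicted = predict_grip_force(trials)
```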

Grasping is an object-oriented action that requires an object’s size and shape be transformed into a pattern of finger movements (grip)—there is evidence that these processes are performed in the parietal lobe [

103]. The fingers begin to pre-shape during large scale movements of the hands whereby the fingers straighten to open the hand followed by closure of the grip until it matches the object size. Such a process may be emulated by perceptual and motor schemas, i.e., a pre-shape schema selects the finger configuration prior to grasping. The Kalman filter has been applied to emulate synchronised human arm and finger movements prior to grasping [

104]. Fingers open in a pre-shape configuration halfway through the arm reach. The two movements of finger shaping and arm reaching are synchronised and are determined by the object size and location. Both the ventral “what” visual pathway and the dorsal “where” visual pathway are required to transform visual information into motor acts [

105,

106]. In active vision, the motor system is a fundamental part of the visual system by orienting the visual field. Nevertheless, visual processing is inherently slow due to high computational resource requirements of processing images. Perception is predictive, illustrated by the size–weight illusion that a small object with the same weight as a large object is “felt” to be heavier. It appears that the F5 neurons of the premotor cortex involved in grasping are selective for different types of hand prehension: 85% of grasping neurons are selective to one of two types of grip (precision grip and power/prehension grip). The power grip is relatively invariant involving the enveloping of the object but the precision grip is more complex with a variety of finger/hand configurations. There is also specificity in F5 neurons for different finger configurations suggesting the validity of the motor schema model. Prehensile gripping allows the holding of an object in a controlled state relative to the hand and the application of sufficient force on the object to hold it stationary. It imposes constraints through contact via structural and frictional effects. The grip force must exceed the slip ratio though it is preferred to minimise reliance on friction in favour of geometric closure. A condition for force closure is that the grip Jacobian G contains the null space of the wrenches $w_i$ from contact forces. Adaptive gripping requires tactile feedback [

107]. Feedforward predictive models in conjunction with cutaneous sensory feedback are instrumental in adapting grip forces to different object shapes, weight and frictional surfaces [

108]. The feedforward component provides rapid adaptability through prediction rather than just reactivity through pure cutaneous feedback. It is the combination of predictive feedforward and reflexive feedback that yields skilled manipulation. Both the cerebellum and cutaneous feedback are critical for the formation of grip force predictions, the latter being essential for detecting slipping. Predictive models provide the basis for haptic grasping indicated by preadaptations to learn complex motor skills [

109]. Anticipatory grip-to-load force ratios precede arm movements and more generally, prediction always precedes movement. Hence, when lifting an unknown object, multiple forward models constructed from prior experience are active generating sensory predictions. The forward model that generates the lowest prediction error is selected activating its paired inverse model-based controller. A forward kinematic model of a robotic hand and arm has been learned through its own exploratory motions observed by a camera based on visual servoing [

110]. Continuous self-modelling by inferring its own structure from sensorimotor causal relationships provides the ability to engage in autonomous compensatory behaviours due to injury [

45]. Forward models are thus essential for robust behaviour in animals.

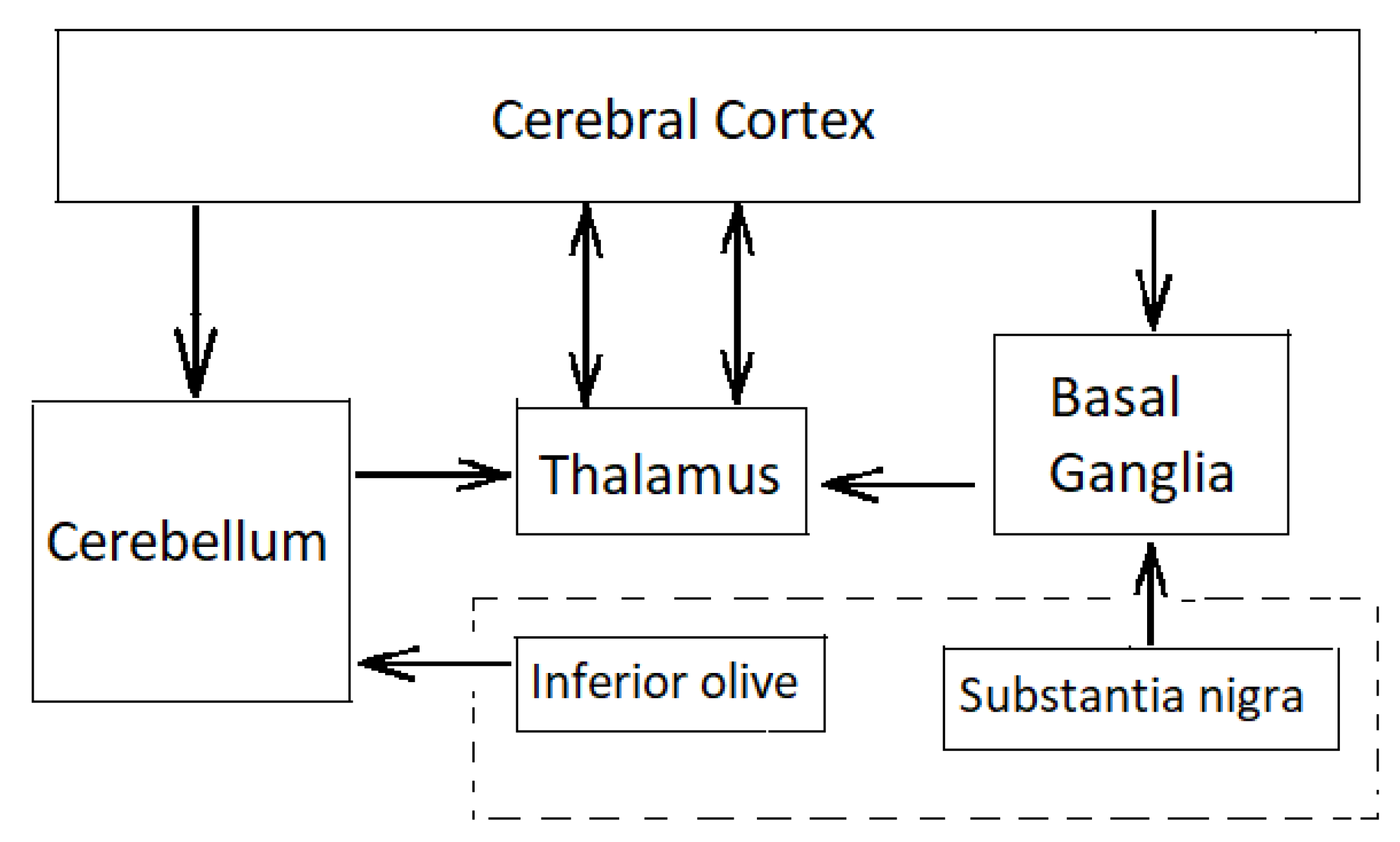

9. Role of the Cerebellum

The cerebellum is one of the most widely connected structures of the brain with connections into all the major systems of the brain (Figure 2).

The cerebellum implements fine motor coordination, balance, motor timing and motor learning, particularly in initiating and coordinating smooth fine-scale movements [

111,

112]. Cerebellar dysfunction has been identified as the cause of tremor and ataxia involving loss of motor coordination. The cerebellum comprises four major parts with a highly regular architecture: flocculus, vermis, intermediate hemisphere and lateral hemisphere. With $1.6 \times 10^{10}$ neurons in a well-ordered architecture, it performs the same computation with different modules projecting widely into different parts of the brain as well as the motor cortex. The cerebellum possesses more neurons than any other structure in the human brain: it comprises rows of 10 million Purkinje cells, each receiving around 200,000 synapses from parallel fibre inputs from proprioceptors and climbing fibres carrying error signals. Converging onto each Purkinje cell are around 200,000 parallel fibres firing at a rate of around 60 Hz and a single climbing fibre firing at a rate of just 1–2 Hz. Lesions of the cerebellum cause disruptions to the coordination of limb movement such as jerky movements, poor accuracy, poor timing, tremors, etc. implicating it in the regulation of movement. On this view, the cerebellum serves to coordinate the timing of the elements of muscular movement rather than the muscle movements themselves [

113]. It achieves this through the modulation of gain control parameters. Inherent in this hypothesis is the existence of a somatotopic map of the body within the three cerebellar nuclei. Each cerebellar nucleus controls a different mode of body movement. Parallel fibres linking Purkinje cells somatotopically encode and control specific combinations of the body’s musculature. Purkinje cells linked by parallel fibres project onto cerebellar nuclei imposing different control modes to generate coordinated movement. These muscular pattern synergies control and coordinate different parts of the body. However, it is reckoned that the cerebellum has a far more central role to play in motor control.

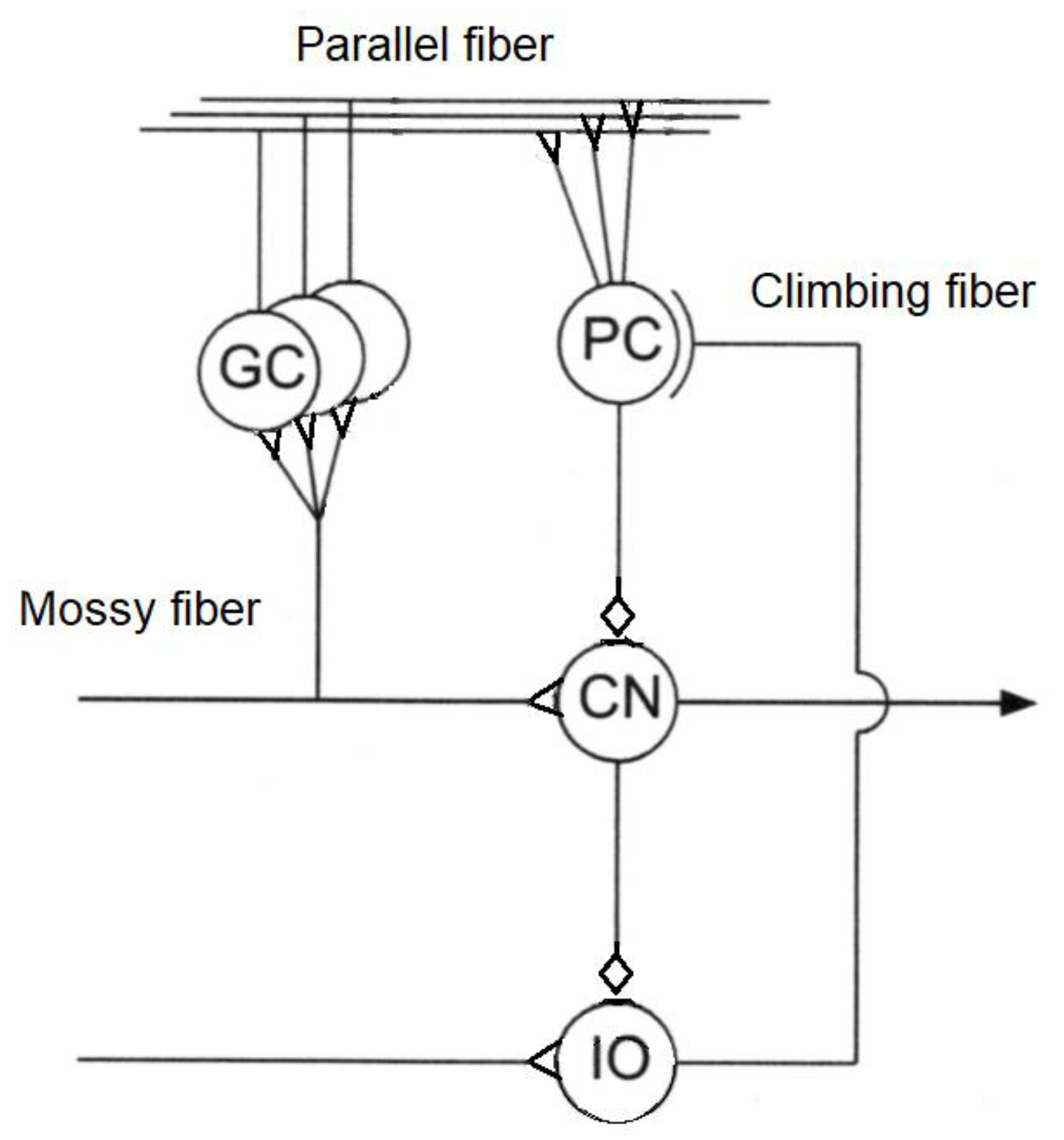

The cerebellum acts as an associative memory between input patterns on granule cells and output patterns on the Purkinje cells (Figure 3).

Cerebellar input is based on two input channels—parallel fibres from mossy fibres and climbing fibres from olivary neurons. Output from the granule cells—parallel fibres—converge onto Purkinje cells with climbing fibres from the inferior olive. Synapses of parallel fibres connecting granule cells to Purkinje cells are modified by inputs from climbing fibres from the inferior olive to the Purkinje cells. The climbing fibre synapses onto the Purkinje cells reside in the cerebellar flocculus. As granule cells form a recurrent inhibitory network with Golgi cells, they represent a recurrent circuit. The cerebellum comprises a feedforward circuit in which inputs are converted into the output of Purkinje neurons that implement the predictor model. Its feedforward structure comprises a divergence of mossy fibres onto a massive number of granule cells that converge back onto Purkinje cells, i.e., it is highly integrative [

114]. Granule cells are the most numerous neurons at around $10^{10}$–$10^{11}$ in the human brain; the ratio of granule cells to Purkinje cells is around 2000:1. Granule cells are small glutamatergic cells which receive feedback inhibition from Golgi cells. Each granule cell has 3–5 dendrites and its axon bifurcates into parallel fibres which cross over orthogonally onto fan-like dendritic inputs to Purkinje cells. Granule cells provide context to sensorimotor information; indeed, the granule cells convey a topographically rich and fine-grained sensory map to the cerebellum necessary for fine motor control [

115]. Purkinje cells are GABAergic neurons and are the only outputs from the cerebellar cortex. Mossy fibres carry sensory (from different sensory modalities such as the vestibular system) and efferent signals in different combinations to a massive number of granule cells. Mossy fibres convey low speed neural signals via the parallel fibres that intersect the Purkinje cells and impose time buffers of 50–100 ms. These delays require the predictions of the forward model. The Fujita model of the cerebellum comprises an adaptive filter that learns to compensate for time delays with parallel fibres forming delay lines but they are too short for this purpose [

116]. Excitatory mossy fibres input an efference copy of motor commands to the forward model of the cerebellum. Climbing fibres from the inferior olive carry the motor error signals rather than input signals—each inhibitory Purkinje cell receives one climbing fibre but each climbing fibre may contact several Purkinje cells. Purkinje cell outputs from the cerebellar cortex project into the deep cerebellar nuclei which output to other parts of the brain. Outputs from the cerebellar nuclei project through the thalamus into the primary motor cortex (face, arm and leg), premotor cortex (arm), frontal eye field, and several areas of the prefrontal cortex [

117]. In fact, those areas of the cerebral cortex that project to the cerebellum are also the targets of cerebellar outputs. The inverse dynamics model learning is located in the Purkinje cells to which the climbing fibre inputs carry error signals in motor torque coordinates thus acting as readout neurons.

The cerebellum implements an associative learning algorithm involved in both motor and non-motor functions including higher cognition and language [

118,

119]. The cerebellum’s role in higher cognitive functions such as attention is less clear than its motor roles, but it performs the same computation on all its inputs [

120,

121,

122]. The Marr–Albus–Ito cerebellar model emphasises motor learning aspects [

123,

124]—parallel fibre/Purkinje cell synaptic weights are modified by climbing fibre inputs from the inferior olive, i.e., learning input-output patterns. Long-term depression (LTD) alters synaptic connections onto Purkinje neurons during motor learning [

125,

126]. The cerebellum is a pattern recognition learning machine that learns predictive associative relationships between events (such as classical and operant conditioning) [

127]. Associative learning involves extracting rules that predict the effects of stimuli with a given association weight. Classical conditioning involves a repeated presentation of a neutral conditioned stimulus (CS) with a reinforcing unconditioned stimulus (US). In classical conditioning, the CS (bell) is repeatedly paired with the US (food) to generate a conditioned response (CR, salivation) so that the CS becomes the predictor of the US. Similarly, eyeblink conditioning involves a tone (CS) and an airpuff to the eye (US) that generates a blink reflex [

128]. The airpuff generates the eyeblink without learning (UR). The US (airpuff) is conveyed from the inferior olive by the climbing fibres while the CS (tone) is conveyed from the pontine nuclei by the mossy fibres. Both the pontine nucleus and inferior olivary nucleus (via the red nucleus) receive inputs from the cerebral cortex. The climbing fibres (US) “teach” the Purkinje cells to respond to parallel fibre inputs (CS). The animal learns to respond to the CS alone. After 100–200 training pairings, the tone is sufficient to induce the eyeblink response (CR that mimics UR). Temporal association is made only between the US and a preceding CS if the CR occurs prior to the US. The CS must precede the US by at least 100 ms with efficient conditioning occurring 150–500 ms before the US [

129]. Surprising events (new evidence) imply increased uncertainty in prior beliefs about the world which incite animals to learn faster through classical conditioning, i.e., such learning is Bayesian [

130]. In Bayesian analysis, the prior establishes expectations which if violated by observed evidence induces surprise. Hence, different prior models yield different rates of Bayesian learning.

Inverse models are acquired through motor learning via Purkinje cell synaptic weight adjustment in the cerebellum. Motor learning involves the construction of an internal model representation in the cerebellum of the brain. Cause–effect relationships of each action must be learned inductively using training data generated by random movements [

131]. Probabilistic forward models appear to influence random limb movements in infants (motor babbling) into specific motor spaces to reduce learning time [

132]. Forward modelling involves training the transformation of joint torques to hand Cartesian coordinates onto a neural network. The forward model is a Bayesian network with learned probability distributions that also models prediction errors updated by observational feedback. This forward model can be used as a look-up table that computes the inverse model relating the joint torques given the hand coordinates. Furthermore, the forward model predicts the sensory outputs of motor inputs. The cerebellum implements paired forward and inverse models. Cerebellar-based motor deficiencies are some of the earliest indicators of autism prior to 3 years of age which appears to be due to over-reliance on proprioceptive feedback over visual feedback resulting in improper fine-tuning of internal models [

133]. This favouring of internal stimuli over external stimuli manifests itself as stereotypical behaviour, social isolation and other cognitive impairments. The cerebellum appears to be a core hub that implements predictive capabilities across different cortical regions. The cerebellar cortex receives extensive inputs from the cerebral cortex but also sends its output to the dentate nucleus which projects widely to the different regions of the brain including to the prefrontal areas via the thalamus. The cerebellum receives inputs from the pontine nuclei that relay information from the cerebral cortex and outputs information back through the dentate nucleus and thalamus to the cerebral cortex. This cerebro-cerebellar loop may mediate tool use in humans [

134].

It has been suggested that the cerebellum implements more complex forward models such as multitudes of Smith predictors [

135]. The Smith predictor includes a forward model within an internal feedback loop to model a time delay. The latter delays a copy of the fast sensor predictions so that it can be compared directly with the actual sensory feedback to compensate for the time delay [

136,

137]. The forward model provides an estimate of the sensory effects of an input motor command which can be used within a negative feedback loop. The forward model acts as a predictive state estimator and a copy of the sensory estimate from the forward model is delayed to permit synchronisation and comparison with the actual feedback sensory effects of movement which are delayed by the feedback loop. Any errors between the predicted and actual sensory signal serve to improve performance and train the forward model. The linearised discrete system dynamics are given by:

Time delays in the sensory feedback pathways require the use of predictive compensation such as a Smith predictor [

84]. The inner loop of the Smith predictor is based on the estimated state and the estimated dynamics:

A predicted sensory estimate is used instead of the actual sensory measurement in a rapid internal high gain feedback loop to drive the system towards the desired sensory state. The linear control law is based on the difference between the desired state, estimated state, delayed state and estimated delayed state:

Subtraction yields the error dynamics:

If the forward model is perfect,

and

:

The Smith predictor also incorporates an outer loop with an explicit delay in the predicted sensory estimate to permit synchronisation with the actual sensory measurement—this is required to correct internal forward model errors. Hence, there are two forward models—one of the dynamics of the effector and the other of the delay in the afferent signal, the former with a faster learning rate of 100–200 ms and the latter with a slower learning rate of 200–300 ms [

138]. This provides adaptability to the forward model through supervised learning. The Smith predictor utilises both a forward predictive model and a feedback delay model but the control loop time delay allows the delayed predictive model to be registered synchronously with actual sensory feedback to correct the estimated value through delay cancellation. Which model the cerebellum performs is determined by the climbing fibre inputs, i.e., whether they carry predictive sensory information or motor command error information. An alternative approach to dealing with time delays is through wave variables. The cerebrospinal tract may represent control signals as wave variables of a standing wave formed by forward and return signals separated by a transmission delay T [139]. The cerebellum acts as a master wave variable that accepts $u_s(t-T)$ from the spinal cord and a signal from the cerebral cortex via the lateral reticular nucleus. These are combined with $(x_m - x_{md})$ to generate a reafferent wave variable that ascends to the cerebral cortex and $u_m$ that descends to the spinal cord. The signals $(x_m - x_{md})$ are generated through a reverberating interaction between the cerebellum and the magnocellular red nucleus by integration. Feedback control is stable due to the passivity of the slave system. There are several theories of cerebellar function but all point towards the necessity for implementing predictive forward models.
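The two-loop structure of the Smith predictor described above can be made concrete with a small simulation. This is a sketch only: it assumes a scalar first-order plant $x_{k+1} = a x_k + b u_k$, a pure sensory delay of a fixed number of steps, a perfect forward model and a proportional gain. None of these values or names come from the source.

```python
from collections import deque

def simulate_smith(a=0.9, b=0.1, gain=5.0, delay=10, target=1.0, steps=200):
    """Proportional control of a delayed scalar plant using a Smith predictor.

    Assumptions: plant x[k+1] = a*x[k] + b*u[k]; feedback arrives `delay`
    steps late (delay >= 1); the forward model uses the same a and b.
    """
    x = 0.0       # true plant state
    x_hat = 0.0   # forward-model state (undelayed prediction)
    plant_line = deque([0.0] * delay)  # delay in the afferent pathway
    model_line = deque([0.0] * delay)  # internal model of that delay
    for _ in range(steps):
        y_delayed = plant_line[0]      # actual sensory feedback x[k - delay]
        y_hat_delayed = model_line[0]  # delayed copy of the prediction
        # Inner loop acts on the undelayed prediction; the outer loop adds
        # the mismatch between actual and predicted delayed feedback.
        error = target - x_hat - (y_delayed - y_hat_delayed)
        u = gain * error
        plant_line.popleft()
        plant_line.append(x)
        model_line.popleft()
        model_line.append(x_hat)
        x = a * x + b * u
        x_hat = a * x_hat + b * u
    return x

final_state = simulate_smith()
```

With a perfect model the mismatch term is zero, so the loop behaves as if the delay were absent and settles at the steady state of the undelayed proportional loop, $bK/(1 - a + bK)$ times the target.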

11. The Centrality of Bayesian Inferencing

In the neocortex, feedforward connections follow from early processing areas to higher areas; feedback connections follow from higher areas to earlier processing areas. Lateral connections link areas within the same processing level. In the cortical column, outer layers implement predominantly (ascending) feedforward connections while inner layers implement predominantly (descending) feedback connections implying a predictive Bayesian inferencing process [

143]. Feedforward connections convey prediction errors while feedback connections suppress prediction errors. The cerebral cortex has a uniform laminar architecture of six layers divided into specialised modalities with highly recurrent connections suggesting that the same fundamental algorithm may operate across the entire cortex. A candidate algorithm for the cerebral cortex is a Bayesian algorithm. Bayesian inference integrates top-down contextual priors implemented through feedback with bottom-up observations processed through the feedforward chain. The brain attempts to match bottom-up sensory data from the environment with top-down predictions by minimising the prediction errors (surprise) [

144]. This Bayesian manifestation is equivalent to the free energy minimisation/maximum entropy model of expected energy minus the prediction entropy in the brain [

145]. Free energy is minimised by choosing sensorimotor actions that reduce prediction errors (surprise)—this requires a Bayesian capability of updating predictive world models that cause the sensory data. The brain is indeed a Bayesian inferencing machine that estimates the contextual causes of its sensory inputs based on internal (forward) models which are essential for interpreting that sensory data [

146,

147]. The brain is a maximum a posteriori (MAP) estimator based on likelihoods modified by learned prior (forward) models that create expectations. Prior expectations are updated with new sensory evidence yielding a posterior estimate of the state of the world. The sensory data provides “grounding” to the prior model. Bayesian inferencing has been proposed as a model of memory retrieval, categorisation, causal inference and planning, i.e., Bayesian inferencing is implicated in all human cognition [

148]. Bayesian inferencing is dependent on prior models of the world in memory which are upgraded with experience. The prior encapsulates information on the past history of inferences while the sensory data provides contextual framing. The tradeoff is between the predictability of future events (gain) against the cost of new hypothesis formation. Prediction defines the probability of an event given a set of features or cues—this constitutes the basis for defining causal laws with high probabilities (confidence) associated between the event and the cues, i.e., conditional learning.

The Bayesian approach provides the basis for probabilistic inductive inferencing. The joint probability of hypothesis h and observed data d is the product of the conditional probability of the hypothesis h given data d and the marginal probability of data d:

$$P(h, d) = P(h \mid d)\,P(d)$$

We employ probabilities to represent degrees of belief in hypotheses, $P(h)$, prior to any observational data d; this is the prior probability, which has a strong effect on the posterior probability. The posterior probability $P(h \mid d)$ represents the probability of the hypothesis given the observational data d. Bayes theorem states that the revised (posterior) probability of an hypothesis is proportional to the product of its prior (former) probability and the probability of the sensory data if the hypothesis were true (likelihood). The likelihood and prior are combined and normalised using Bayes rule. Bayes rule relates the posterior probability of the hypothesis in terms of the prior probability:

$$P(h \mid d) = \frac{P(d \mid h)\,P(h)}{P(d)}$$

where $P(d \mid h)$ = probability of data given the hypothesis (likelihood). The prior smooths fluctuations in observed data. Likelihood weights the prior according to how well the hypothesis predicts the observed data. Maximum likelihood estimation (MLE) selects the parameters that maximise the probability of the observed data under the hypothesis, $P(d \mid h)$. The marginal probability $P(d)$ is given in terms of competing hypotheses $h'$:

$$P(d) = \sum_{h'} P(d \mid h')\,P(h')$$

The likelihood is the probability (prediction) of sensory feedback based on the forward model given that context. There are multiple predictive forward models, each applicable in different contexts with a given likelihood in which the prior constitutes the probability of a context.
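As a worked illustration of Bayes rule with marginalisation over competing contexts, the following sketch computes a posterior from a prior and a likelihood. The two contexts and their probabilities are invented for the example.

```python
def posterior(priors, likelihoods):
    """Bayes rule: P(h|d) = P(d|h) P(h) / sum over h' of P(d|h') P(h')."""
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(joint.values())  # marginal probability of the data, P(d)
    return {h: p / evidence for h, p in joint.items()}

# Hypothetical contexts for a lifting task, with assumed prior beliefs.
priors = {"light object": 0.7, "heavy object": 0.3}
# Assumed likelihood of the observed sensory feedback under each context.
likelihoods = {"light object": 0.1, "heavy object": 0.8}

post = posterior(priors, likelihoods)
```

Although the prior favours the light object, the observed feedback is far more probable under the heavy-object context, so the posterior shifts towards it.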

Object perception may be regarded as a statistical visual inference process in which Bayesian techniques are employed to make optimal decisions based on prior knowledge and current noisy visual measurements [

149]. The maximum a posteriori (MAP) estimate is proportional to the product of prior probability and the likelihood: p(S|I) = p(S,I)/p(I) where p(S,I) = p(I|S)p(S), S = scene description and I = image features. The Bayesian brain constructs complex models of the world despite sparse, noisy sensory data using a range of optimal filtering, estimation and control methods [

150]. Bayesian probability enables inferencing with uncertain information by combining one or more sources of noisy sensory data with imperfect prior information. Bayesian estimation provides the vehicle for sensory fusion of multiple sensory modalities to enhance the quality of data for estimation. Predictive coding involves the elimination of redundancy by transmitting only unpredicted aspects of the sensory signal [

151]. Top-down predictions filter out expected sensory inputs thereby reducing neuronal firing. This affords enhanced coding efficiency in processing sensory signals within the nervous system. This implicates prior predictive models as central to parsimonious information processing in the brain. The prediction error is processed to enhance unexpected (salient) stimuli. The predictive component represents hypotheses that explain sensory data while the prediction error that is transmitted further up the cortical hierarchy represents the mismatch between predicted and actual sensory data. Thus, the efficient minimum description length (MDL) neural code

is equivalent to the Bayesian MAP estimation

where

I = input image,

f = neural firing frequency representing visual features. The salient prediction error invokes better hypotheses (predictive models) further up the processing hierarchy—this requires that multiple hypotheses are available until the correct forward model has suppressed overall neural activity. The probability distributions involve the principle of least commitment but collapse to a single MAP estimate. In its simplest form, this is the basis of habituation in which repetitive stimuli invoke reduced neural response, i.e., conscious attention is not required. When the precision of noisy sensory data is low, attention is deployed to focus on ambiguous sensory data to increase its precision [

152].

The brain combines visual information in retinal coordinates with proprioceptive information in head and body coordinates. The brain must compute coordinate transformations of Cartesian limb postures into limb joint movements in order to interact with objects in the environment. Coordinate transformations are performed in the posterior parietal cortex which represents multiple reference frames [

149]. In Brodmann’s area 7, reference frames are allocentric but in Brodmann’s area 5, they are both eye- and hand-centred. In the lateral intraparietal area, reference frames are both eye-centred and body-centred. These indicate multiple reference frames for coordinate transformations for relating visual stimuli in eye-centred frames to motor movements of the hand and arm in hand-centred frames through body-centred and head-centred reference frames. Sensorimotor transformations can be implemented through radial basis function neural networks using Gaussian function basis sets to associate sensory map coordinates with motor map coordinates [

153]. This involves a one-to-many transformation as there are many sets of muscles that can implement a given Cartesian trajectory. A solution is to use two networks—an inverse model and a forward model which acts as a predictor.
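A Gaussian radial basis function network of the kind mentioned above can be sketched in a few lines. The example assumes a one-dimensional sensory coordinate, a scalar motor coordinate and delta-rule training of the output weights only; the target mapping, centres, width and learning rate are arbitrary illustrative choices.

```python
import math

def rbf_features(x, centres, width):
    """Gaussian basis function activations for a scalar input x."""
    return [math.exp(-((x - c) ** 2) / (2.0 * width ** 2)) for c in centres]

def predict(x, weights, centres, width):
    """Network output: weighted sum of the basis function activations."""
    return sum(w * p for w, p in zip(weights, rbf_features(x, centres, width)))

def train_rbf(samples, centres, width, rate=0.1, epochs=500):
    """Fit the output weights with the delta rule (per-sample updates)."""
    weights = [0.0] * len(centres)
    for _ in range(epochs):
        for x, target in samples:
            phi = rbf_features(x, centres, width)
            error = target - sum(w * p for w, p in zip(weights, phi))
            weights = [w + rate * error * p for w, p in zip(weights, phi)]
    return weights

# Hypothetical sensory-to-motor map to be learned on the interval [0, 2].
samples = [(0.1 * k, math.sin(math.pi * 0.1 * k / 2.0)) for k in range(21)]
centres = [0.25 * k for k in range(9)]
width = 0.2

weights = train_rbf(samples, centres, width)
estimate = predict(1.0, weights, centres, width)  # the true map gives 1.0 here
```

Only the forward direction is learned in this sketch; the one-to-many inverse problem noted in the text would need a second, paired network.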

A cerebral cortex neural network architecture has implemented Bayesian inferencing in the presence of noise [

154]. It allows estimation of log posterior probabilities by quantitatively modelling the interaction between prior knowledge and sensory evidence. This Bayesian computation was implemented through a recurrent neural network with feedforward and feedback connections:

where $I$ = network input, $y$ = network output, $W_{ff}$ = feedforward weight matrix, $W_{fb}$ = feedback weight matrix, $\eta$ = learning rate. The posterior probability of the hidden states of a hidden Markov model can also be computed through Bayes rule:

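$$p(x_t \mid y_t, \ldots, y_1) = k\, p(y_t \mid x_t) \sum_{x_{t-1}} p(x_t \mid x_{t-1})\, p(x_{t-1} \mid y_{t-1}, \ldots, y_1)$$

where $x$ = hidden states with transition probabilities $p(x_t \mid x_{t-1})$, $y$ = observable output with emission probability $p(y_t \mid x_t)$ and $k$ = normalisation constant. These log likelihoods may be implemented on a recurrent neural network: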

The neural firing rates may be related to log posteriors computed by the neurons. The likelihood function is stored in the feedforward connections while the priors provide expected inputs encoded in feedback connections. Thus, knowledge of the statistics of the environment is stored in both sets of weights.
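The recursive computation of log posteriors for a hidden Markov model can be sketched as follows. This is a generic forward filter in the log domain, not the neural implementation of the cited work; the function names, the two-state example and all probability values are illustrative assumptions. The emission term plays the role of the feedforward (likelihood) input and the propagated previous posterior plays the role of the feedback (prior) input.

```python
import math

def log_sum_exp(values):
    """Numerically stable log of a sum of exponentials."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def forward_log_posterior(log_prior, log_trans, log_emit, observations):
    """Return log p(x_t | y_1..y_t) for every time step.

    log_trans[i][j] = log p(x_t = j | x_{t-1} = i)
    log_emit[j][y]  = log p(y_t = y | x_t = j)
    """
    n = len(log_prior)
    log_post = list(log_prior)
    history = []
    for y in observations:
        # Prior term: previous posterior propagated through the transition model
        predicted = [
            log_sum_exp([log_post[i] + log_trans[i][j] for i in range(n)])
            for j in range(n)
        ]
        # Likelihood term added in the log domain
        unnorm = [log_emit[j][y] + predicted[j] for j in range(n)]
        # Normalisation constant k (here as log k)
        log_k = -log_sum_exp(unnorm)
        log_post = [u + log_k for u in unnorm]
        history.append(log_post)
    return history

# Illustrative two-state, two-symbol model (all values assumed)
to_log = lambda rows: [[math.log(p) for p in row] for row in rows]
log_prior = [math.log(0.5), math.log(0.5)]
log_trans = to_log([[0.9, 0.1], [0.2, 0.8]])
log_emit = to_log([[0.8, 0.2], [0.3, 0.7]])

history = forward_log_posterior(log_prior, log_trans, log_emit, [0, 0, 1])
```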

12. What Are Bayesian Networks?

To accommodate a large number of probability distributions, graphical Bayesian networks may be employed. They comprise nodes representing variables and directed edges, associated with conditional probability distributions, representing causal dependencies between nodes. If a directed edge exists from node A to node B, A is the parent of child B. Every node is conditionally independent of its non-descendants given its parents. Bayesian networks are acyclic, so no node can be reached from itself via any combination of directed edges. Acyclicity ensures that the descendants of node $x_i$ are all reachable from $x_i$. Singly connected Bayesian nets have any pair of nodes linked by only one path; multiply-connected Bayesian nets contain at least one pair of nodes connected by more than one path. A Bayesian network exhibits factorisation of the joint probability distribution within a directed acyclic graph [

155]. Hence, the Bayesian network may be represented as:

$$P(X_1, \ldots, X_n) = \prod_{i=1}^{n} P(X_i \mid Pa(X_i))$$

where $Pa(X_i)$ = set of parents of $X_i$. Bayesian networks represent the dependency structure of a set of random variables. A full joint probability distribution on $n$ binary variables requires $2^n - 1$ independent probabilities. Dynamic Bayesian networks are Bayesian networks that represent temporal probability models by being partitioned into discrete temporal slices representing temporal states of the world. The edges in a Bayesian network define probabilistic dependencies but causal Bayesian networks assume that statistical correlation between variables does imply causation supported by probabilistic inferences [

156]. The causal Bayesian network describes a causal model of the world in which parameter estimation determines the strength of causal relations. In this case, Bayes rule computes to what degree cause C has the effect of E. The degree of causation is given by:

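$$\Delta P = P(E \mid C) - P(E \mid \neg C)$$

where $P(E \mid C)$ = conditional probability of the effect given the cause, $P(E \mid \neg C)$ = conditional probability of the effect in the absence of the cause. An alternative measure is causal power (or weight) given by:

$$w = \frac{\Delta P}{1 - P(E \mid \neg C)}$$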

These are both maximum likelihood estimates of causal strength relationships. Causal relations between multiple causes (including background causes) and a single effect may be implemented through the noisy-OR distribution. Children from the age of 2 can construct graphical causal (Bayesian) maps of the world based on causal relations between events [

157]. Biological recurrent neural networks can implement Bayesian computations in the presence of noise.
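The factorisation of the joint distribution and the two causal strength measures can be illustrated with a small sketch. The three-node network (Rain and Sprinkler as parents of WetGrass) and all of its probabilities are hypothetical values chosen for illustration. Causal strength is computed in the commonly used forms, namely the difference between the probability of the effect with and without the cause, and that difference divided by one minus the probability of the effect without the cause.

```python
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler (all values assumed)
p_rain = {True: 0.2, False: 0.8}
p_sprinkler = {True: 0.1, False: 0.9}
# P(WetGrass = True | Rain, Sprinkler)
p_wet_given = {
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.05,
}

def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node given its parents."""
    p_wet = p_wet_given[(rain, sprinkler)]
    return p_rain[rain] * p_sprinkler[sprinkler] * (p_wet if wet else 1.0 - p_wet)

# The factorised joint sums to one over all 2**3 assignments
total = sum(joint(r, s, w) for r, s, w in product([True, False], repeat=3))

def p_wet_given_rain(rain):
    """P(WetGrass = True | Rain), marginalising over Sprinkler."""
    return sum(joint(rain, s, True) for s in (True, False)) / p_rain[rain]

def delta_p(p_e_c, p_e_not_c):
    """Degree of causation: P(E | C) - P(E | not C)."""
    return p_e_c - p_e_not_c

def causal_power(p_e_c, p_e_not_c):
    """Causal power: delta P normalised by 1 - P(E | not C)."""
    return delta_p(p_e_c, p_e_not_c) / (1.0 - p_e_not_c)

p_e_c = p_wet_given_rain(True)
p_e_not_c = p_wet_given_rain(False)
strength = delta_p(p_e_c, p_e_not_c)
power = causal_power(p_e_c, p_e_not_c)
```

Only seven conditional probabilities are stored here, which happens to equal the count for an explicit joint table over three binary variables; the saving from factorisation grows with the number of nodes when each node has few parents.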

Predictiveness learned through frequency-based statistical probabilities is a stronger influence on decision-making than Bayesian (conditional) probability estimates [

158]. Associative learning is based on the frequency of associations between predictive cue-outcome pairs, e.g., the Rescorla–Wagner rule which adjusts the association weight between cue-outcome pairs by minimising the prediction error [

159]. The association weight establishes a causal relation between the cue and outcome according to the statistical coincidence between them. The Rescorla–Wagner learning rule iteratively computes the associative strength $W_i$ of a possible cause C with an effect E, which after trial n is given by:

where

if cause C does not occur;

= if both cause C and effect E occur;

= if cause C occurs but not effect E.
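The three cases of the Rescorla–Wagner update can be sketched for a single cue as follows. The parameter names are assumptions of this sketch rather than the notation of the source: `alpha` is a learning rate and `lam` is the maximum associative strength supported by the effect.

```python
def rescorla_wagner(trials, alpha=0.3, lam=1.0, w0=0.0):
    """Associative strength after each trial for a single cause.

    trials: iterable of (cause_present, effect_present) booleans.
    alpha, lam, w0 are illustrative assumptions.
    """
    w = w0
    history = []
    for cause, effect in trials:
        if cause:
            # Target is lam when the effect occurs and zero when it does not
            target = lam if effect else 0.0
            # Weight moves in proportion to the prediction error
            w += alpha * (target - w)
        # No change when the cause does not occur
        history.append(w)
    return history

# Two reinforced trials, one trial without the cause, one unreinforced trial
strengths = rescorla_wagner(
    [(True, True), (True, True), (False, True), (True, False)]
)
```

The strength rises on reinforced trials, is unchanged when the cause is absent and decays when the cause occurs without the effect.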

In this way, causal relations are learned through experience that adjusts prior probabilities. Prior probability represents the structured background knowledge which is modified by new sensory information to enable inductive inferencing. A common model for the prior is the beta distribution given by:

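$$p(\theta \mid \alpha, \beta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\, \theta^{\alpha - 1} (1 - \theta)^{\beta - 1}$$

where $\Gamma(\cdot)$ = gamma function. The parameters $\theta_i$ of the prior distribution may be learned by integrating out $\alpha$ and $\beta$: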

Exact Bayesian network inferencing is NP-hard so the integral is approximated using either Sequential Monte Carlo (such as recursive particle filters) or Markov Chain Monte Carlo methods.
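A minimal sketch of a beta prior and of approximating an integral by sampling is given below. It makes two assumptions that are not in the source: observations are treated as Bernoulli outcomes, so that the beta prior updates in closed form, and plain Monte Carlo sampling (rather than a sequential or Markov chain sampler) is used to approximate the posterior mean so that it can be compared with the exact value.

```python
import random
from math import gamma

def beta_pdf(theta, a, b):
    """Beta density with shape parameters a (alpha) and b (beta)."""
    norm = gamma(a + b) / (gamma(a) * gamma(b))
    return norm * theta ** (a - 1) * (1.0 - theta) ** (b - 1)

def update_beta(a, b, successes, failures):
    """Closed-form update of a beta prior (assumes Bernoulli observations)."""
    return a + successes, b + failures

def monte_carlo_mean(a, b, n_samples=20000, seed=0):
    """Approximate the integral of theta * p(theta) by averaging samples."""
    rng = random.Random(seed)
    return sum(rng.betavariate(a, b) for _ in range(n_samples)) / n_samples

# Uniform prior Beta(1, 1) updated with 7 occurrences and 3 non-occurrences
a_post, b_post = update_beta(1.0, 1.0, successes=7, failures=3)
exact_mean = a_post / (a_post + b_post)
approx_mean = monte_carlo_mean(a_post, b_post)
```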

Particle filters use a weighted set of samples to approximate probability density—they approximate the posterior probability distribution as a weighted sum of random samples from the prior distribution with weights given by the likelihood of measurement. Sequential Monte Carlo inferencing has been applied to selecting minimum prediction error solutions from multiple inverse-forward model pairs [

160]. The posterior probability may be computed recursively:

where $p(x_0)$ = prior distribution, $p(z_i \mid x_i)$ = observation model. Each particle represents a weighted hypothesis of an internal model, the weight being determined by the prediction error. The particles maintain an ensemble of hypotheses (principle of least commitment). This permits the detection of novelty when observations differ from predictions. The Kullback–Leibler divergence (relative entropy) between weighted particles and current observations defines the novelty. Markov Chain Monte Carlo methods determine the expected value of the function $f(x)$ over a probability distribution $p(x)$ of $k$ random variables $x = (x_1, x_2, \ldots, x_k)$:

$$E[f(x)] = \int f(x)\, p(x)\, dx$$

In a Markov chain, the values of variables are dependent only on their immediate predecessor states and independent of previous states:

$$p(x_{i+1} \mid x_i, x_{i-1}, \ldots, x_1) = p(x_{i+1} \mid x_i)$$

Markov Chain Monte Carlo uses a Markov chain to select random samples according to a Markovian transition probability (kernel) function. As the length of the Markov chain increases, the distribution of the samples generated by the transition kernel $K(x_{i+1} \mid x_i)$, which gives the probability of moving from state $x_i$ to $x_{i+1}$, converges to a stationary distribution $p(x)$:

The Gibbs sampler is a special case of the Metropolis–Hastings algorithm employed for successive sampling. A two-layer recurrent spiking network with a sensory layer of noisy inputs connected to a hidden layer can compute the posterior distribution $p(x_i \mid z_i)$ among a population of neurons, i.e., Bayesian inferencing [

161]. The forward weights encode the observation (emission) probabilities of sensory neurons. The recurrent weights from hidden neurons encode transition probabilities to capture the dynamics over time. Each spike in the posterior distribution represents an independent Monte Carlo sample (particle) of states with the variance of the spike count representing the mean value. Hebbian learning implements an expectation maximisation (EM) algorithm that maximises the log likelihood of hidden parameters given a set of prior observations. A neural particle filter model of the cerebellum exhibits a probability distribution of spiking which approximates a Bayesian posterior distribution in sensory data [

162]. From this, it is possible to construct an optimal state estimate through Bayes rule to give the posterior distribution of spike measurement function in the cerebellum,

$$p(x \mid s) = \frac{p(s \mid x)\, p(x)}{p(s)}$$

where $x$ = environment state estimate, $s$ = sensory spike measurement.
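The particle filter recursion described in this section can be sketched as a bootstrap filter for a scalar state. It is a generic illustration, not the neural model of the cited works: the standard normal prior, the random-walk forward model, the Gaussian observation model and all numerical values are assumptions. Each particle is one hypothesis, and its weight falls as its prediction error grows.

```python
import math
import random

def particle_filter(observations, n_particles=500, process_std=0.5,
                    obs_std=1.0, seed=0):
    """Bootstrap particle filter returning the posterior mean at each step."""
    rng = random.Random(seed)
    # Initial hypotheses drawn from the prior p(x_0) (assumed standard normal)
    particles = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    estimates = []
    for z in observations:
        # Predict: propagate each hypothesis through the assumed forward model
        particles = [x + rng.gauss(0.0, process_std) for x in particles]
        # Weight: Gaussian likelihood of the prediction error z - x
        weights = [math.exp(-0.5 * ((z - x) / obs_std) ** 2) for x in particles]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Estimate: posterior mean as a weighted sum of the samples
        estimates.append(sum(w * x for w, x in zip(weights, particles)))
        # Resample: keep hypotheses in proportion to their weights
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates

# Repeated observations of the same value pull the ensemble towards it
estimates = particle_filter([2.0] * 20)
```

Resampling at every step is the simplest choice. It discards low-weight hypotheses quickly, which trades some of the diversity of the ensemble for computational economy.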

13. The Importance of Kalman Filters

The accommodation of noise in forward-inverse models and sensory feedback requires state estimation using observers. State estimation by an observer monitors an efference copy of motor commands and sensory feedback. The observer uses a recursive update process to estimate the state over time. The forward model is used to fuse sensory and motor information for predictive state estimation similarly to a Kalman filter model. Noisy sensory feedback combined with noisy forward models may be used to estimate the current state—an example is the Kalman filter that optimally estimates the current state by combining information from input signals and expectation-based signals [

163]. The Kalman filter is a recursive counterpart of the Wiener filter: the latter uses all previous data in batch mode while the former uses only the immediately preceding estimate. It is the optimal state estimator for linear systems under conditions of Gaussian noise. It is a Bayesian method that computes the posterior probability of a situation based on the likelihood and the prior. The relative weighting between the prior model and the sensory data is the Kalman filter gain, a weighting that quantifies cognitive attention by balancing prior predictive models against the likelihood of sensory data. The innovation (residual) term compares sensory input with the current prediction, i.e., it is a prediction error. The forward model acts as the predictor step while the sensory information provides the correction step.
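The predictor and correction steps can be sketched as a single function. This is the textbook discrete-time Kalman filter under assumed notation (`A` state transition matrix, `H` measurement matrix, `Q` and `R` process and measurement noise covariances), with the control input omitted for brevity. The constant-velocity example and its numerical values are illustrative assumptions.

```python
import numpy as np

def kalman_step(x_est, P, z, A, H, Q, R):
    """One predict/correct cycle of a linear Kalman filter."""
    # Predictor step (forward model): prior estimate and its covariance
    x_prior = A @ x_est
    P_prior = A @ P @ A.T + Q
    # Innovation: prediction error between measurement and prediction
    innovation = z - H @ x_prior
    S = H @ P_prior @ H.T + R
    # Kalman gain: weighting between the prior model and the sensory data
    K = P_prior @ H.T @ np.linalg.inv(S)
    # Correction step: posterior estimate and its covariance
    x_post = x_prior + K @ innovation
    P_post = (np.eye(len(x_est)) - K @ H) @ P_prior
    return x_post, P_post, K

# Assumed example: state = [position, velocity], only position is measured
dt = 1.0
A = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 0.01 * np.eye(2)
R = np.array([[0.5]])

x, P = np.zeros(2), np.eye(2)
rng = np.random.default_rng(1)
for t in range(1, 31):
    # Noisy position measurement of a target moving at 2 units per step
    z = np.array([2.0 * t]) + rng.normal(0.0, np.sqrt(0.5), size=1)
    x, P, K = kalman_step(x, P, z, A, H, Q, R)
```

A large measurement covariance `R` lowers the gain so that the estimate leans on the forward model, whereas a large process covariance `Q` raises the gain so that the estimate leans on the sensory data.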

There are several approaches to sensor fusion (Bayesian probability, fuzzy sets and Dempster–Shafer evidence [

164]) and the Kalman filter falls into the Bayesian approach. Dempster–Shafer theory is an extension of Bayes theorem to apportion beliefs and plausibility to hypotheses but is complex to compute. Fuzzy sets adopt partial set membership as a measure of imprecision to represent possibility rather than probability but they lack the precision of Bayesian probability. In the Kalman filter model, the visual neural streams feed environmental information into an environment emulator (mental model) to generate expectations (predictions) for early visual processing to filter incoming data by directing attention and to fill in missing information. The superior colliculus integrates multisensory inputs in a manner consistent with Bayes rule to reduce uncertainty in orienting towards multisensory stimuli [

165]. It receives inputs from visual, auditory and somatosensory brain systems and integrates them to initiate orienting responses such as saccadic eye movements. Neurons in the superior colliculus are arranged topographically according to their receptive fields. Shallow neurons of the superior colliculus tend to be unimodal while deep neurons are commonly multi-modal, e.g., visual-auditory. Deep neurons may compute the conditional probability that the source of a stimulus is present within their receptive fields given their sensory input, using Bayes rule to reduce the ambiguity that arises from individual senses alone. For a single (e.g., visual) input alone, the posterior probability of a target $t$ given a visual stimulus $v$ in the receptive field is given by:

$$p(t \mid v) = \frac{p(v \mid t)\, p(t)}{p(v)}$$

where $p(v \mid t)$ = likelihood of a visual stimulus given the target (characteristic of the vision system) modelled as a Poisson distribution of neural impulses, $p(v)$ = probability of a visual stimulus regardless of a target (characteristic of the vision system), $p(t)$ = prior probability of the existence of the target (property of the environment), $f$ = number of input neural impulses per 250 ms, $\lambda$ = mean number of spontaneous neural impulses. The bimodal case (e.g., visual and auditory inputs) is computed similarly:

$$p(t \mid v, a) = \frac{p(v, a \mid t)\, p(t)}{p(v, a)}$$

It is assumed that the sensory modalities are conditionally independent given the target, so $p(v, a \mid t)$ may be computed as the product of the corresponding individual likelihoods $p(v \mid t)$ and $p(a \mid t)$. The multisensory case follows similarly.
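The unimodal and bimodal posteriors can be compared in a short sketch. It assumes Poisson impulse counts for each modality, with one mean for spontaneous activity (target absent) and a higher mean for driven activity (target present), and it expands the probability of the stimulus by total probability over target present and target absent. The function names, the means and the prior are illustrative assumptions.

```python
from math import exp, factorial

def poisson(f, lam):
    """Probability of f impulses in the window for a Poisson mean lam."""
    return lam ** f * exp(-lam) / factorial(f)

def posterior_target(counts, spontaneous, driven, prior=0.1):
    """Posterior probability of a target given impulse counts per modality.

    counts:      impulses observed in each modality
    spontaneous: assumed Poisson means with no target present
    driven:      assumed Poisson means with a target present
    """
    like_target, like_no_target = 1.0, 1.0
    # Conditional independence: joint likelihood is a product over modalities
    for f, lam0, lam1 in zip(counts, spontaneous, driven):
        like_target *= poisson(f, lam1)
        like_no_target *= poisson(f, lam0)
    # Evidence by total probability over target present and absent
    evidence = like_target * prior + like_no_target * (1.0 - prior)
    return like_target * prior / evidence

prior = 0.1
visual_only = posterior_target([8], [5.0], [8.0], prior)
visual_auditory = posterior_target([8, 8], [5.0, 5.0], [8.0, 8.0], prior)
```

With these assumed values, two moderately active inputs raise the posterior above the level reached by either input alone, which is the reduction in ambiguity attributed to multisensory integration.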

In the brain, the integration of multisensory data (such as visual and vestibular data) occurs in the medial superior temporal (MST) area in which population coding implements sensory cue weighting [

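166]. The Bayesian estimator computes the posterior probability distribution of state $x_i$ at time $i$ given the prior distribution $p(x_i \mid z_{i-1})$, likelihood function $p(z_i \mid x_i)$ and history of measurements $z_1, \ldots, z_i$:

$$p(x_i \mid z_1, \ldots, z_i) \propto p(z_i \mid x_i)\, p(x_i \mid z_{i-1})$$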

This is recursive so new measurements constantly update the posterior probability of the state. The Kalman filter assumes a linear Bayesian system corrupted by Gaussian noise. The Kalman filter estimates the state

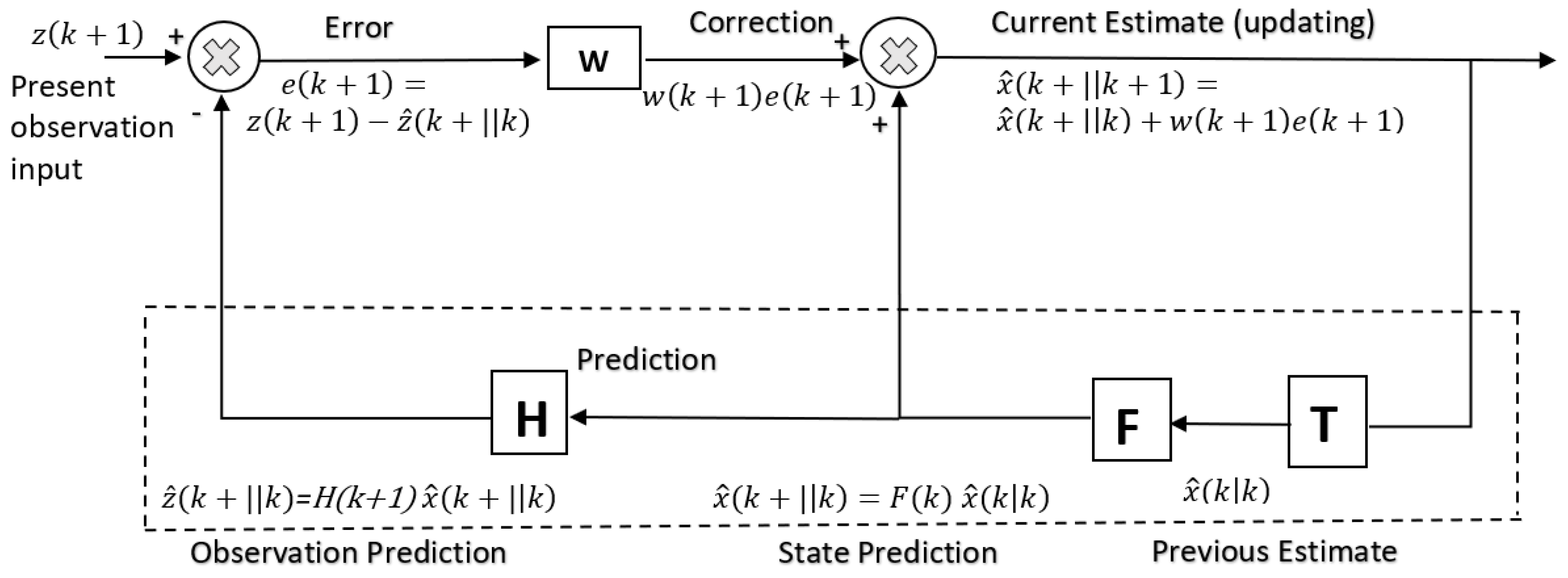

x(

t) of the system (notationally, caps indicate estimates) (

Figure 4).

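The model prediction step is given by a prior model $\hat{x}^-(t)$:

$$\hat{x}^-(t) = A\,\hat{x}(t-1) + B\,u(t-1)$$

$$P^-(t) = A\,P(t-1)\,A^T + Q$$

where $A$ = state transition matrix, $B$ = control input matrix, $u$ = control input, Q = cov(w(t)) of process noise, P = error covariance. The measurement update step is given by the posterior estimate $\hat{x}(t)$ based on the prediction error $z(t) - H\hat{x}^-(t)$:

$$K(t) = P^-(t)\,H^T \left( H\,P^-(t)\,H^T + R \right)^{-1}$$

$$\hat{x}(t) = \hat{x}^-(t) + K(t) \left( z(t) - H\,\hat{x}^-(t) \right)$$

$$P(t) = \left( I - K(t)\,H \right) P^-(t)$$

where $z$ = measurement, $H$ = measurement matrix, R = cov(v(t)) of measurement noise. Hence, the state estimate $\hat{x}(t)$ is given by the process model estimate $\hat{x}^-(t)$ subject to a correction of the Kalman gain $K(t)$ applied to the prediction error $z(t) - H\hat{x}^-(t)$ with an uncertainty in the estimate given by the covariance $P(t)$. The Kalman filter has a diversity of uses. It may be exploited as an adaptive observer for parameter estimation in system identification for a control system; model reference adaptive control has been exploited to perform such parameter adaptation [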

167]. The active observer algorithm reformulates the Kalman filter as a model reference adaptive control system [