1. Introduction

Identifying appropriate sites for landing a spacecraft or building permanent structures is critical for extraterrestrial exploration, including that of asteroids, moons, and planets. By tracking the movement of land masses or structures on a planetary surface, scientists can better predict issues that could affect the integrity and safety of the site or the structures. A fault in the crust of a planetary body is a planar fracture or discontinuity in a volume of rock across which the rock has been displaced. If a landing site or structure is located near a fault, scientists will need to monitor the movement of land on either side of the fault to assess the hazard it poses. Environmental conditions on extraterrestrial surfaces are vastly different from those found on Earth; hence, scientists and engineers will need to closely monitor structures built in these uncertain environments for movement that could signify support or structural issues. The National Aeronautics and Space Administration (NASA) has suggested that “advancements in additive manufacturing, or 3-D printing, may make it possible to use regolith harvested on the Moon, Mars and its moons, and asteroids to construct habitation elements on extraterrestrial surfaces, such as living quarters and storage facilities” [1]. Construction methods that use raw materials from the body being explored, known as in situ resource utilization, are motivated in part by the high cost of transporting materials. Structures built with extraterrestrial materials not found on Earth would require close monitoring because their material properties are incompletely known. The sensor reported here could be used for these remote sensing applications, since it can remotely measure small displacements (millimeters to centimeters) at long distances (1–60 m). Here, we focus on the application of monitoring fault movement, although the sensor and algorithms presented in this work could be used to remotely measure the displacement of many other objects.

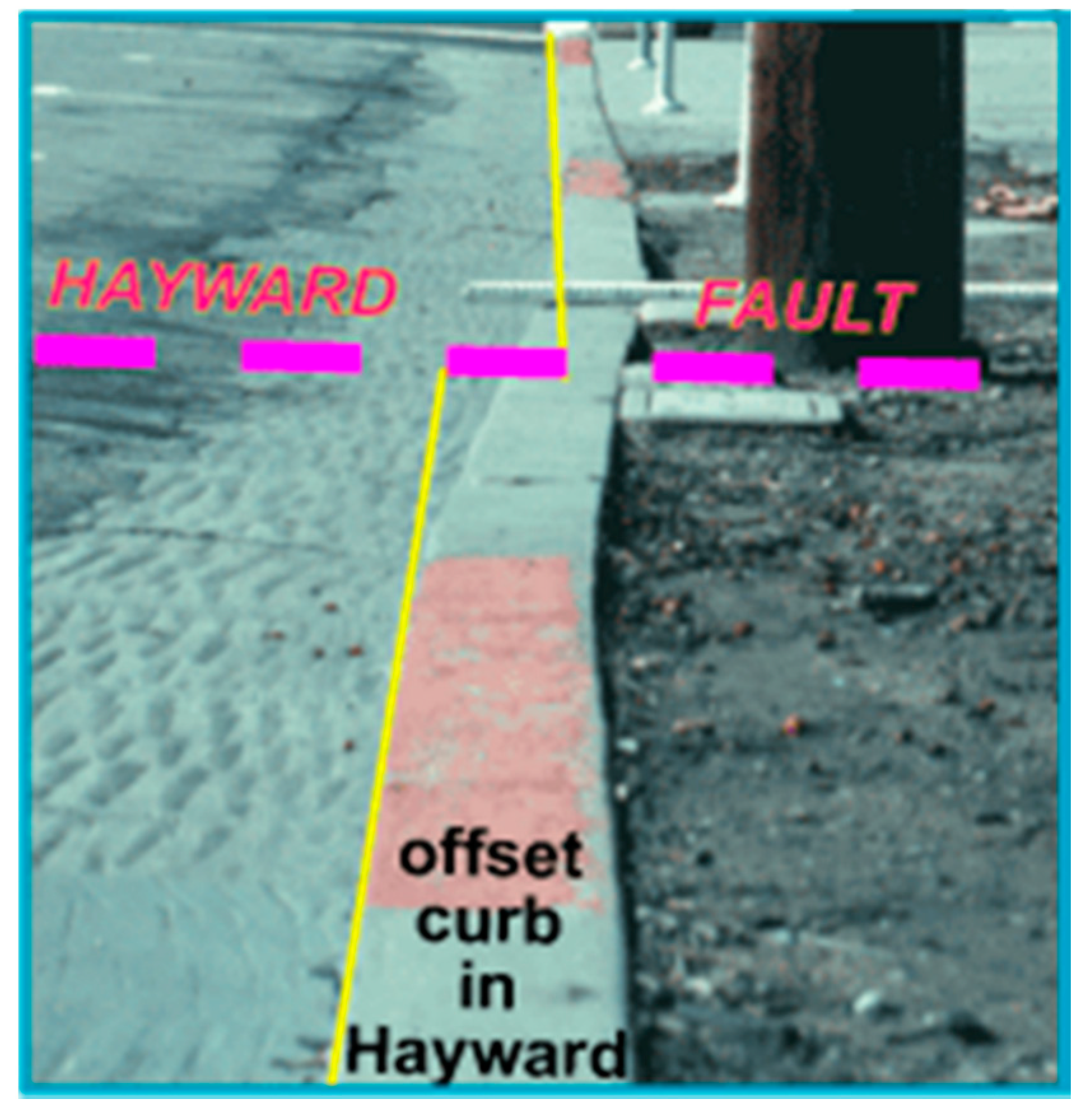

Faults in the Earth’s crust can move slowly without detectable tremors, a process called aseismic deformation or fault creep (Figure 1). Some active faults move a few millimeters at a time, with a cumulative movement of a few centimeters a year. Fault creep can relieve strain on a fault, potentially reducing its hazard. Measurements of these small displacements at many locations along the fault are needed to accurately model inter-seismic and post-seismic fault creep and to estimate fault hazard. However, the cost of current technology for measuring fault creep limits how widely it can be deployed. In particular, one of the most promising fault creep meters uses an Invar wire (or a graphite rod) anchored to concrete monuments on either side of a fault to measure strain, which is then converted to displacement [2]. The weight and installation requirements of this system make it impractical for use on other planetary bodies, whereas the bioinspired sensor described here weighs only a small fraction as much, making it a good alternative.

A novel low-cost, low-power bioinspired optical sensor, which could be deployed at many locations, is being developed at NASA Ames Research Center (NASA Ames) for measuring fault creep with funding from the United States Geological Survey Innovation Center for Earth Sciences, United States Department of the Interior. This sensor could play an important role in the monitoring of landslides (especially shear failures at landslide margins) and could help monitor volcanic deformation over short spatial scales. Additionally, the bioinspired sensor could be used in many other applications requiring displacement measurements, such as the movement of civil structures.

The bioinspired sensor for fault monitoring builds upon a bioinspired sensor developed for near real-time estimation of the position of an aircraft wing under load [4,5,6,7]. For the aircraft application, it was shown that the bioinspired sensor and associated tracking algorithms could outperform a traditional digital camera, whose images are blurred by motion. Additional studies using a sensor of similar design demonstrated the advantages of a compound eye-inspired sensor over a digital camera for processing certain types of moving targets [8]. Because the bioinspired sensor has only seven photodetectors, its power and bandwidth requirements for signal acquisition and data processing are much lower than those of a standard digital camera.

Inspiration for NASA’s bioinspired sensor comes from the compound eye-based vision system found in many insects, including the common housefly (Musca domestica) [7,8,9,10]. The insect compound eye consists of many light-sensitive units (ommatidia) with overlapping fields of view. Each ommatidium gives a quasi-Gaussian response to visual stimuli [11]. These characteristics of the vision system, along with neural superposition, enable the insect to quickly detect very small movements of an object. The sensor design reported in this paper draws inspiration from the quasi-Gaussian response, multiple apertures, and overlapping fields of view of the insect eye. It does not mimic the neural superposition properties or the arrangement of multiple photoreceptors within each ommatidium found in a neural superposition compound eye.

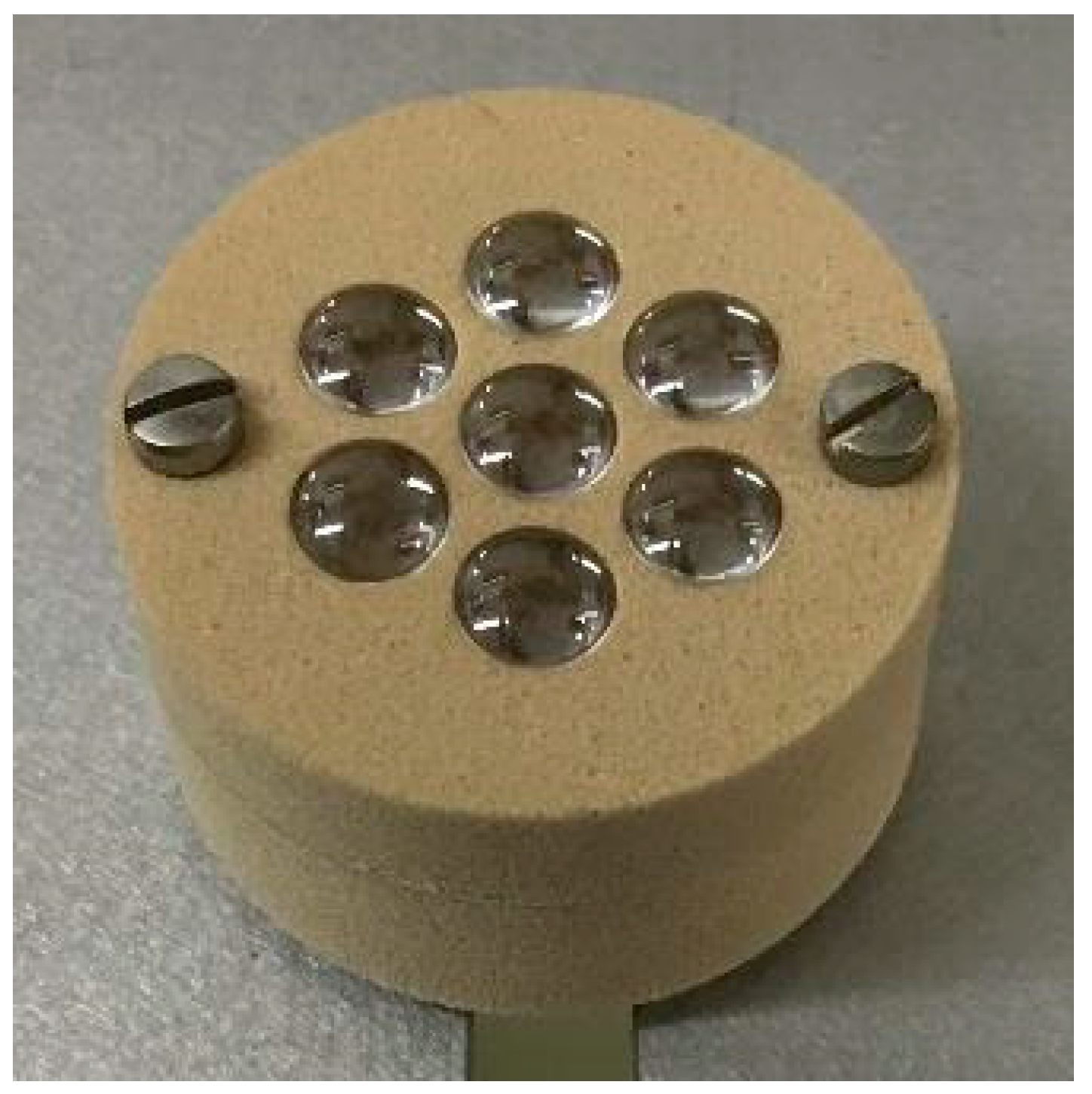

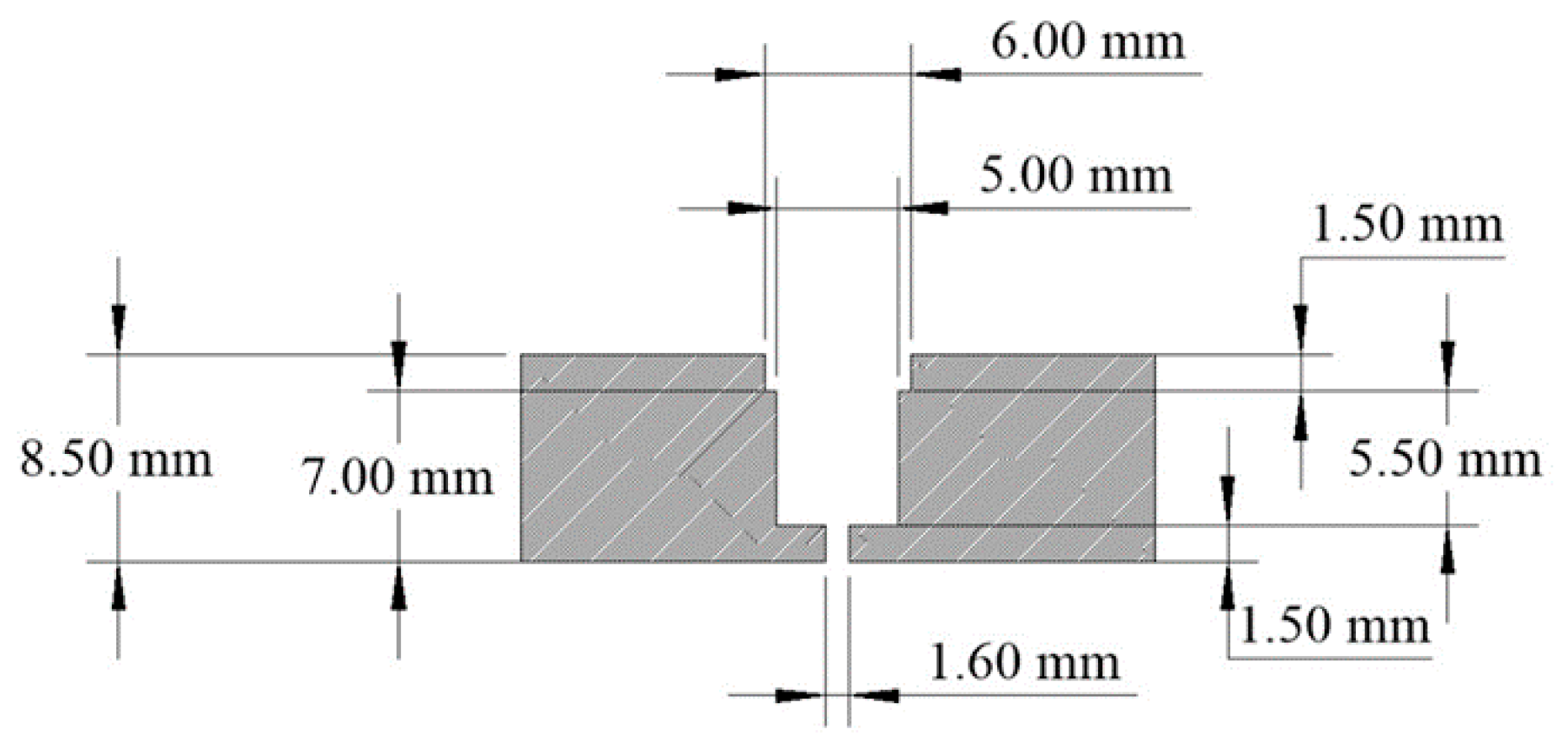

The seven-lens compound eye-inspired sensor designed and built at NASA Ames consists of seven individual sensors with overlapping fields of view (Figure 2). Each individual sensor has a lens that focuses incoming light through an airgap onto the face of a plastic optical fiber (POF). The light passes through the POF to a photodetector on a custom printed circuit board (PCB) designed and built by researchers at the University of Wyoming, Laramie, WY, USA [7,9]. The PCB converts the photodetector current to a voltage, amplifies it, and filters the signals. The individual sensors, whose airgaps blur the image, and the electronics on the PCB are designed to produce the desired quasi-Gaussian response to stimuli. The overlap of the sensors’ fields of view is achieved through the arrangement of the sensors in the sensor head. Together, the quasi-Gaussian response and the overlapping fields of view enable accurate estimation of target movement.

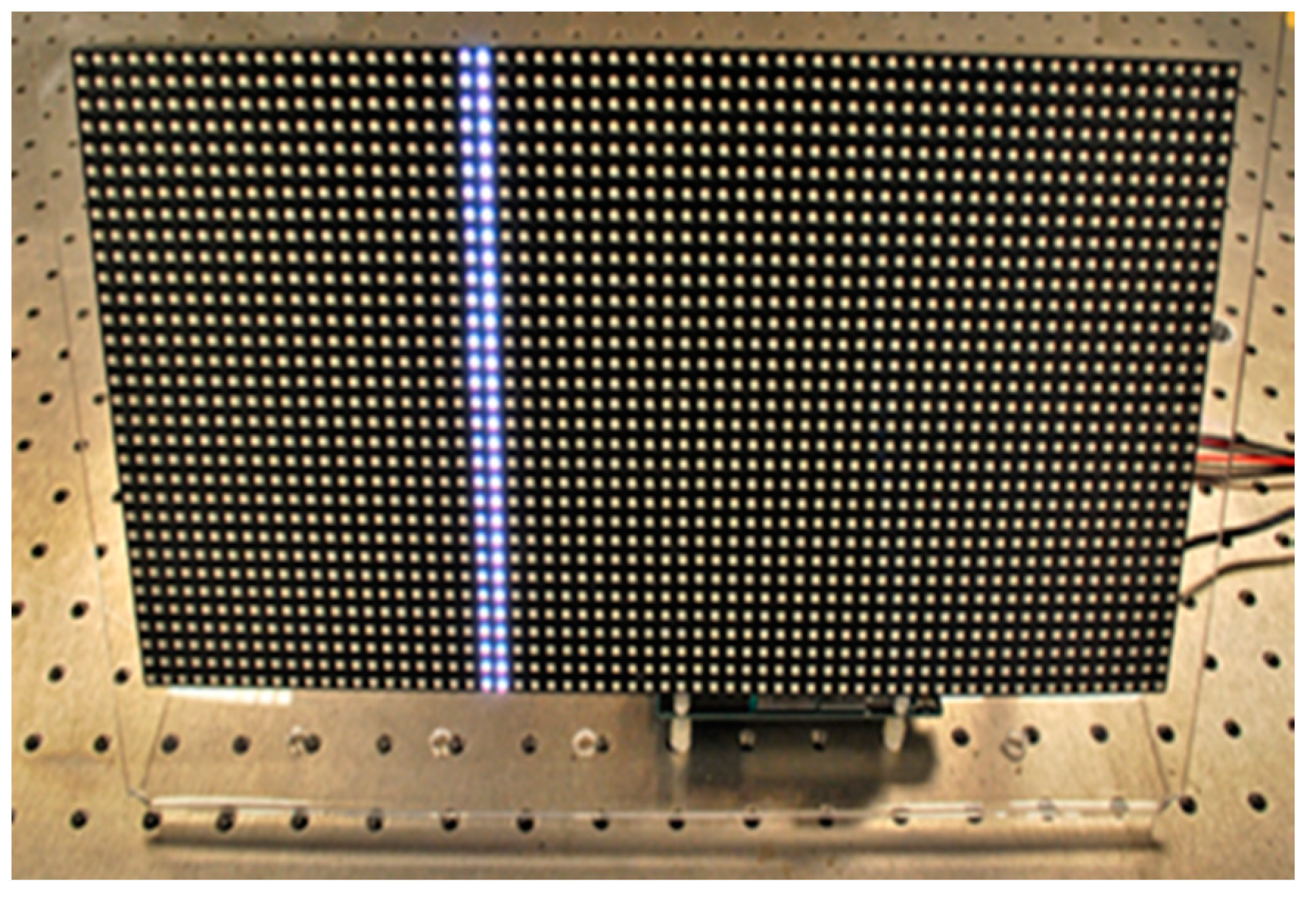

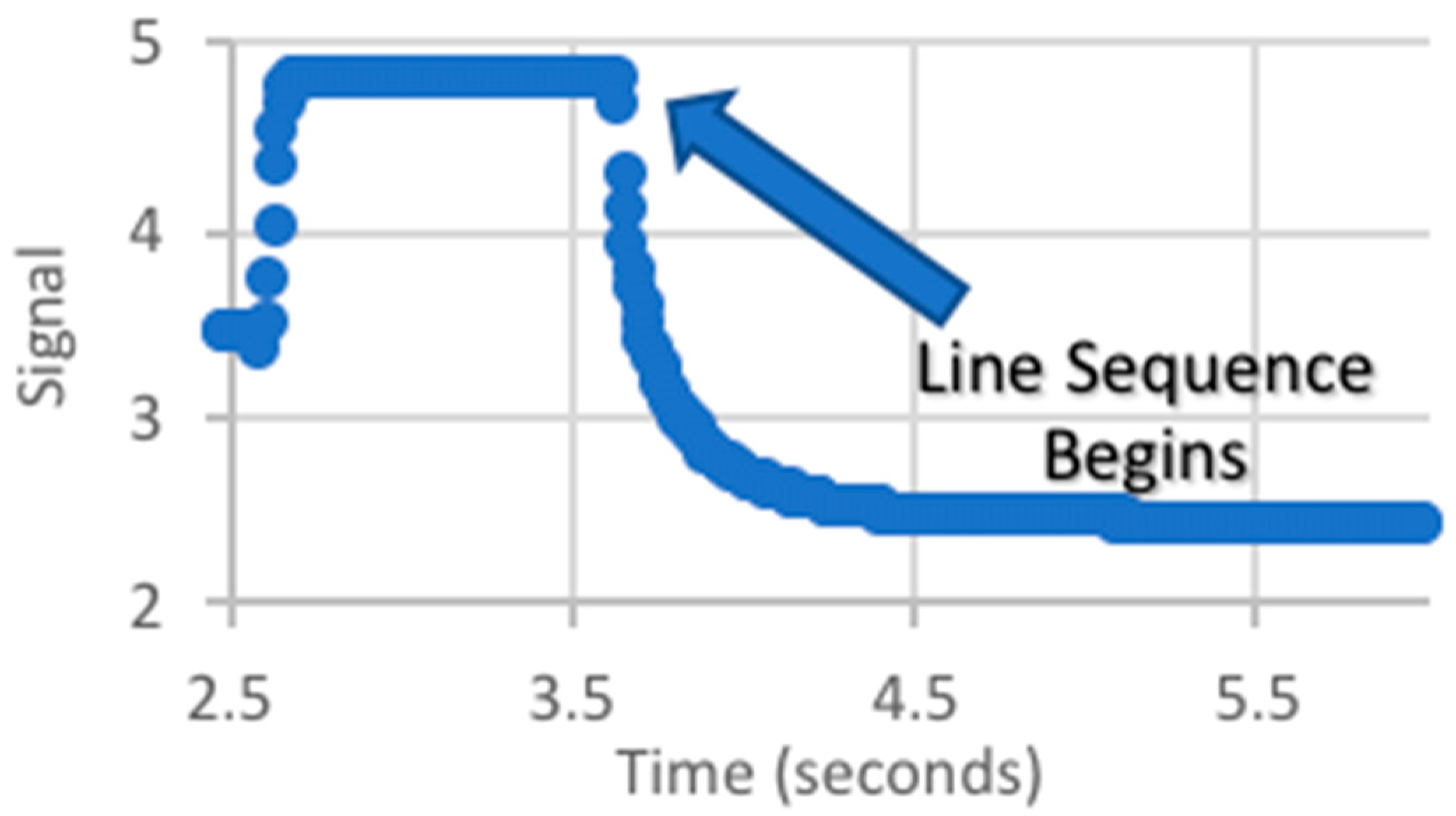

The bioinspired sensor is well suited to identifying displacements of moving targets; however, slow, long-duration movements, such as slippage along a fault line, are almost static. To exploit the strengths of the bioinspired sensor, a pseudo-moving target is employed so that the sensor responds to motion in its field of view even when the target is not moving. The prototype pseudo-moving target is an array of light-emitting diodes (LEDs) programmed to display a sequence of lines marching across the array (Figure 3).
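The pseudo-moving target can be thought of as a schedule that maps time to the position of the currently lit line. The sketch below is a hypothetical timing model, not the prototype's firmware: each line is lit for a fixed on-time, the whole array goes dark briefly, and then the next line lights up. Only the 5 mm line spacing comes from this paper (Section 3.1); the number of lines, on-time, and dark gap are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LineSequence:
    """Hypothetical timing model of the pseudo-moving LED target.

    Each line is lit for `on_s` seconds; the whole array is then dark for
    `off_s` seconds before the next line is lit. Only the 5 mm line spacing
    comes from the text; the other values are illustrative assumptions.
    """
    n_lines: int = 16          # assumed number of lines per sweep
    spacing_mm: float = 5.0    # LED line spacing quoted in Section 3.1
    on_s: float = 0.10         # assumed on-time per line (s)
    off_s: float = 0.02        # assumed dark gap between lines (s)

    @property
    def step_s(self) -> float:
        """Time from one line switching on to the next one switching on."""
        return self.on_s + self.off_s

    @property
    def cycle_s(self) -> float:
        """Duration of one full sweep across the array."""
        return self.n_lines * self.step_s

    def lit_position_mm(self, t: float) -> Optional[float]:
        """Position of the lit line at time t (s) after the sequence start,
        or None while the array is dark between lines."""
        t_in_cycle = t % self.cycle_s
        index = int(t_in_cycle // self.step_s)
        if t_in_cycle - index * self.step_s >= self.on_s:
            return None  # array is off between lines
        return index * self.spacing_mm


seq = LineSequence()
print(seq.lit_position_mm(0.25), seq.lit_position_mm(0.23))  # -> 10.0 None
```

In this model, mislocating the sequence start by one full step shifts every position label by one line spacing, which illustrates why the start-time estimate discussed in Section 3.3 matters.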

In a fault monitoring application, the sensor and target would be located on opposite sides of the fault being monitored. The sensor would be mounted on a geodetic monument built on stable ground outside the moving fault zone, with line-of-sight to the target, and the target would be mounted inside the fault zone. Depending on the fault or landslide being monitored, the sensor-to-target distance could be as little as 6 m or as much as 100 m. External front-end optics are required for the sensor to obtain useful signals from targets at these distances. Because of cost considerations, driven primarily by the front-end optics, testing of the proposed system has been limited to 30 m. This enabled the use of relatively inexpensive astronomical telescopes (Figure 4) to increase the sensor’s range. The sensor head was designed at NASA Ames to fit a standard telescope interface (31.75 mm diameter eyepiece tube). Algorithms were developed to process the sensor data and determine the relative displacement of the target.

3. Results and Discussion

Three sets of tests were performed: (1) indoor tests with no front-end optics; (2) outdoor tests with an Orion 70 mm Multi-Use Finder Scope (70 mm optical diameter, 279 mm focal length); and (3) outdoor tests with an Orion Apex 102 mm Maksutov-Cassegrain Telescope (102 mm aperture, 1300 mm focal length).
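To give a sense of scale for the two telescope configurations, the following sketch estimates how far the target image moves in the image plane when the target shifts by 10 mm. It uses the ideal thin-lens magnification m = f/(d − f), which is an idealization we assume here rather than a model from this paper; it also treats the 2× Barlow lens used at 30 m (Section 3.3) as a nominal doubling of the 1300 mm focal length.

```python
def image_shift_mm(target_shift_mm: float, distance_mm: float,
                   focal_mm: float) -> float:
    """Lateral image-plane shift produced by a lateral target shift.

    Uses the ideal thin-lens magnification m = f / (d - f) for an object at
    distance d. This idealization ignores aberrations, the real multi-element
    optics, and where the sensor head actually sits relative to focus.
    """
    if distance_mm <= focal_mm:
        raise ValueError("object must lie beyond the focal length")
    return target_shift_mm * focal_mm / (distance_mm - focal_mm)


# 6 m test: finder scope, 279 mm focal length.
finder = image_shift_mm(10.0, 6_000.0, 279.0)
# 30 m test: 1300 mm Maksutov-Cassegrain with a 2x Barlow, treated here as
# a nominal 2600 mm effective focal length (an assumption).
maksutov = image_shift_mm(10.0, 30_000.0, 2 * 1300.0)
print(f"{finder:.2f} mm, {maksutov:.2f} mm")  # -> 0.49 mm, 0.95 mm
```

These numbers are only an order-of-magnitude guide: the 343 mm lens-to-sensor distance quoted in Section 3.2, for example, differs from the thin-lens image distance for a 279 mm lens, so the real geometry is not the idealized one assumed here.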

3.1. Indoor Tests

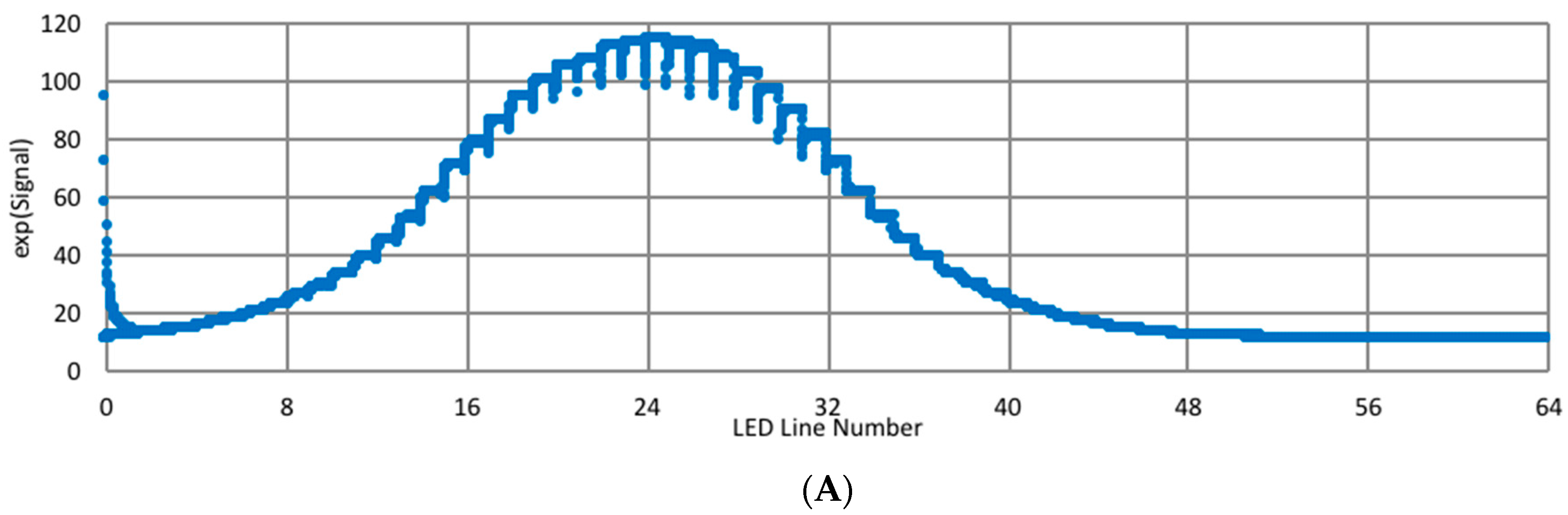

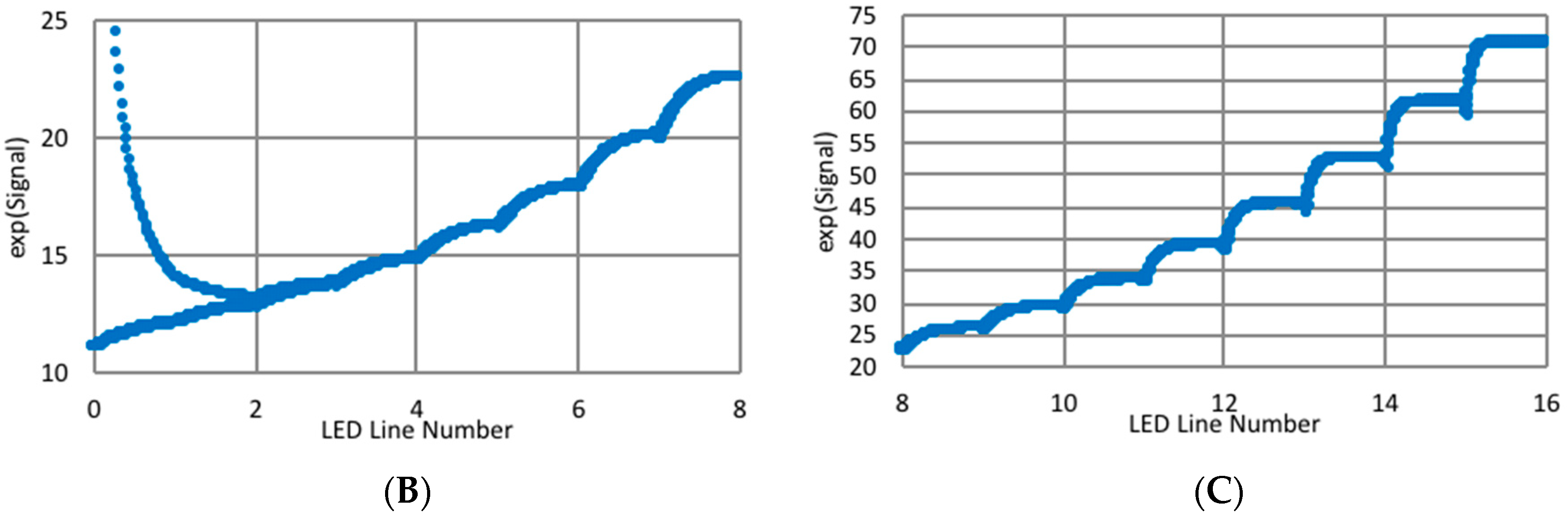

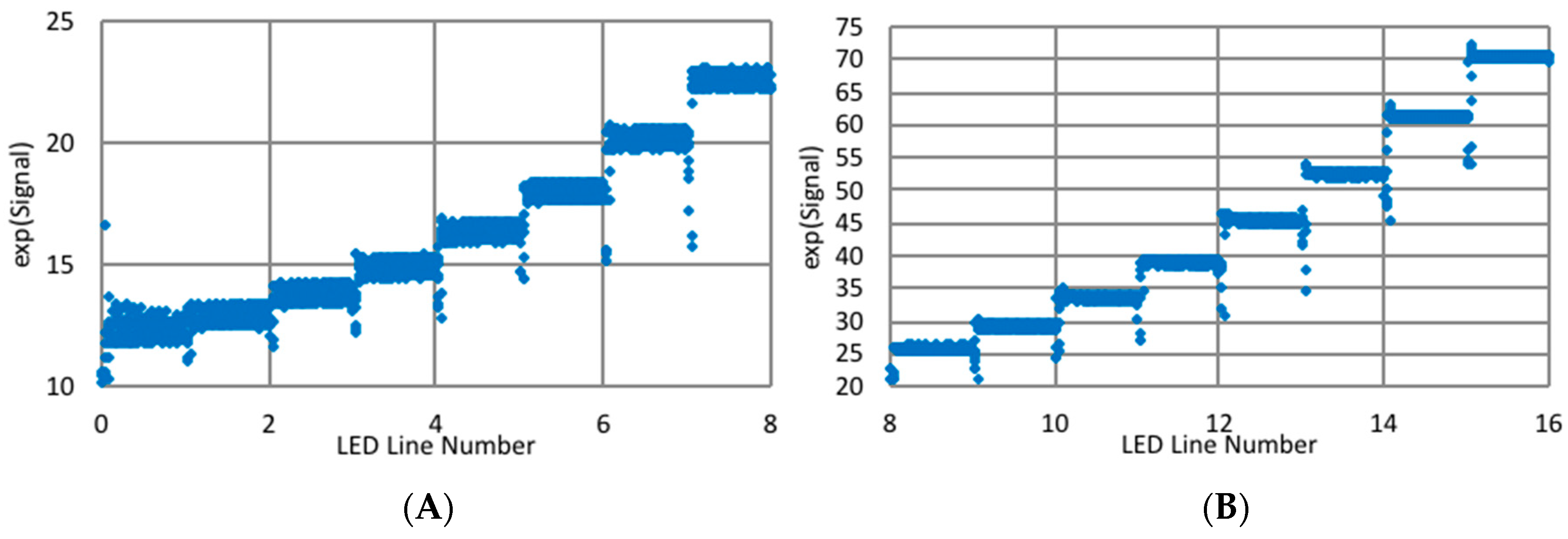

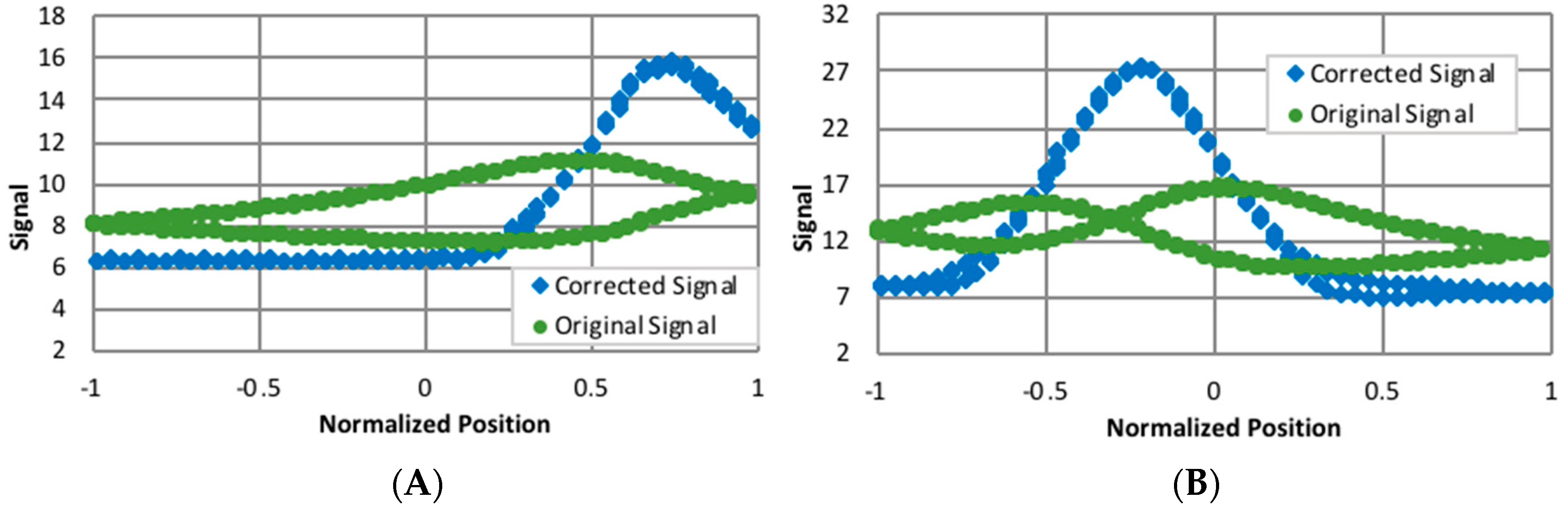

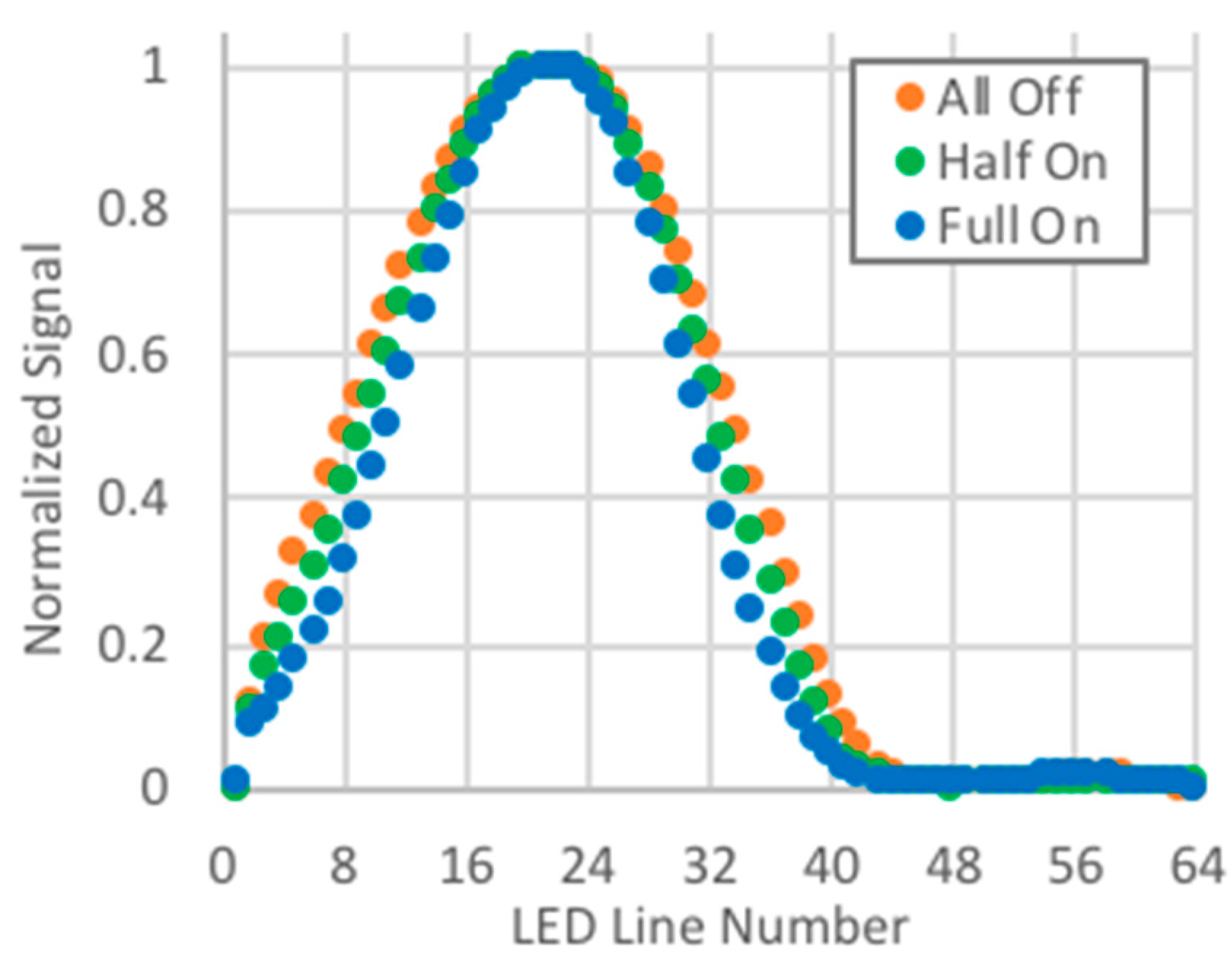

Indoor tests were conducted with the interior overhead lights fully on, half on, and all off. The distance between the sensor head and the target was 35 mm, and the target was placed at three locations: on the centerline, 10 mm to the left of the centerline, and 20 mm to the left of the centerline. Approximately 3.5 cycles of pseudo-motion were captured. The estimated displacements for each channel (individual sensor) and the averaged displacement are given in Table 3; the averaged value excludes channel 7, whose data were noisier and whose displacement estimates were often outliers. With channel 7 excluded, the displacements estimated from the individual sensors are within 0.12 mm of the average, and the averages are within 0.25 mm of the nominal displacement. Note that the nominal displacement is not exact, so the differences between the estimated and nominal displacements are not true measures of the uncertainty. A better estimate of the error may be obtained by comparing the displacements (19.91 and 19.88 mm) calculated for the reference at 0 mm and the sample at 20 mm with the sums (19.86 and 19.91 mm) of the displacements for the reference at 0 mm and the sample at 10 mm (9.75 and 9.80 mm) and for the reference at 10 mm and the sample at 20 mm (10.11 mm). The maximum difference between these two sets of estimates is 0.05 mm; it is therefore not unreasonable to expect uncertainties in the displacement estimates on the order of 0.1 mm in a controlled environment. This is significantly less than the LED line spacing of 5 mm.
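The closure check in the paragraph above is simple arithmetic: a direct 0 to 20 mm estimate should equal the chained 0 to 10 mm plus 10 to 20 mm estimates. The short sketch below reproduces it using only the values quoted in the text, pairing the direct and chained estimates in the order they are listed.

```python
# Displacements (mm) quoted in Section 3.1 for the two indoor estimates.
direct_0_to_20 = [19.91, 19.88]   # reference at 0 mm, sample at 20 mm
step_0_to_10 = [9.75, 9.80]       # reference at 0 mm, sample at 10 mm
step_10_to_20 = 10.11             # reference at 10 mm, sample at 20 mm

# Chain the two shorter steps and compare with the direct estimates.
chained = [s + step_10_to_20 for s in step_0_to_10]
differences = [abs(d - c) for d, c in zip(direct_0_to_20, chained)]
print([round(c, 2) for c in chained], round(max(differences), 2))
# -> [19.86, 19.91] 0.05
```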

3.2. Outdoor Tests at 6 m

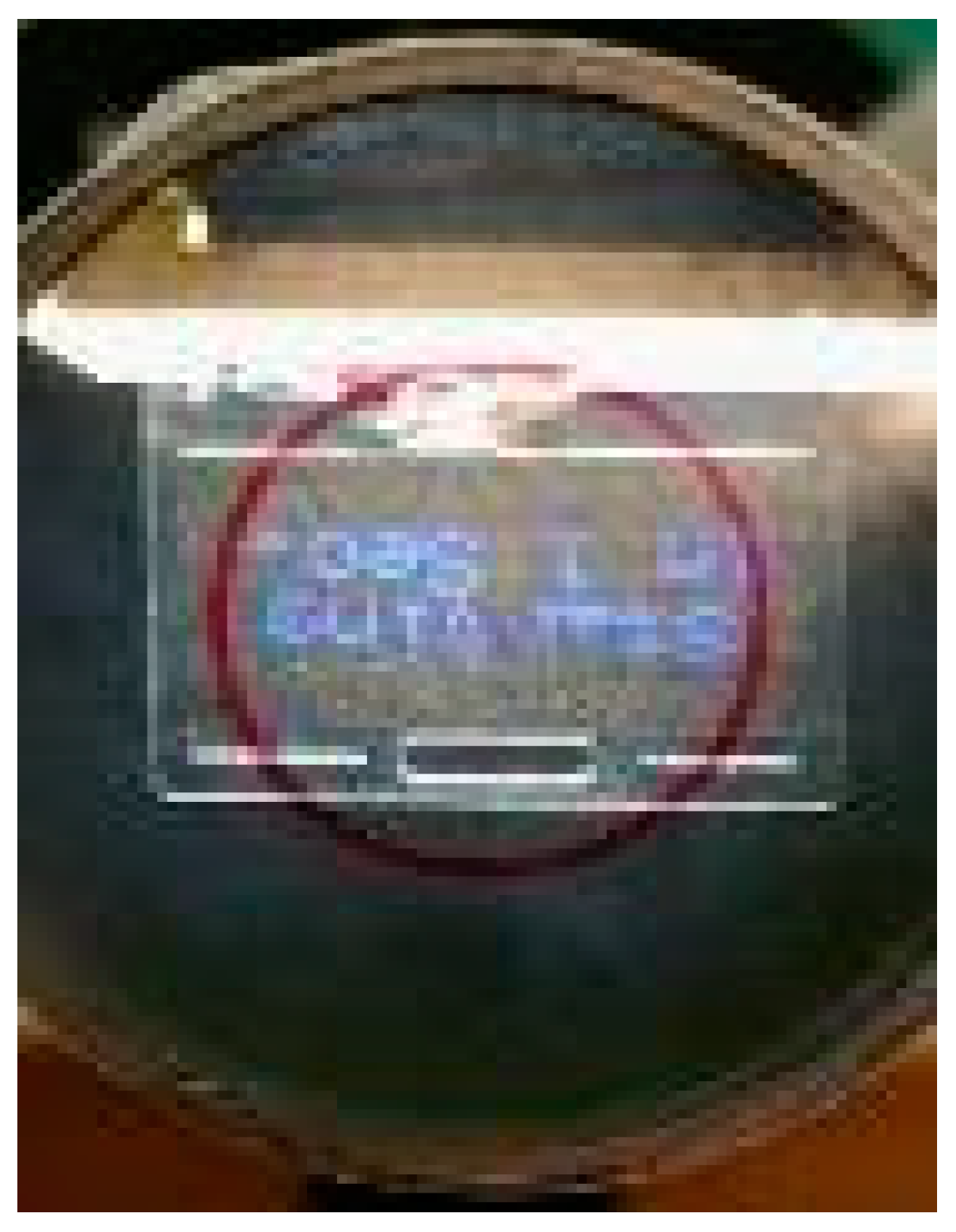

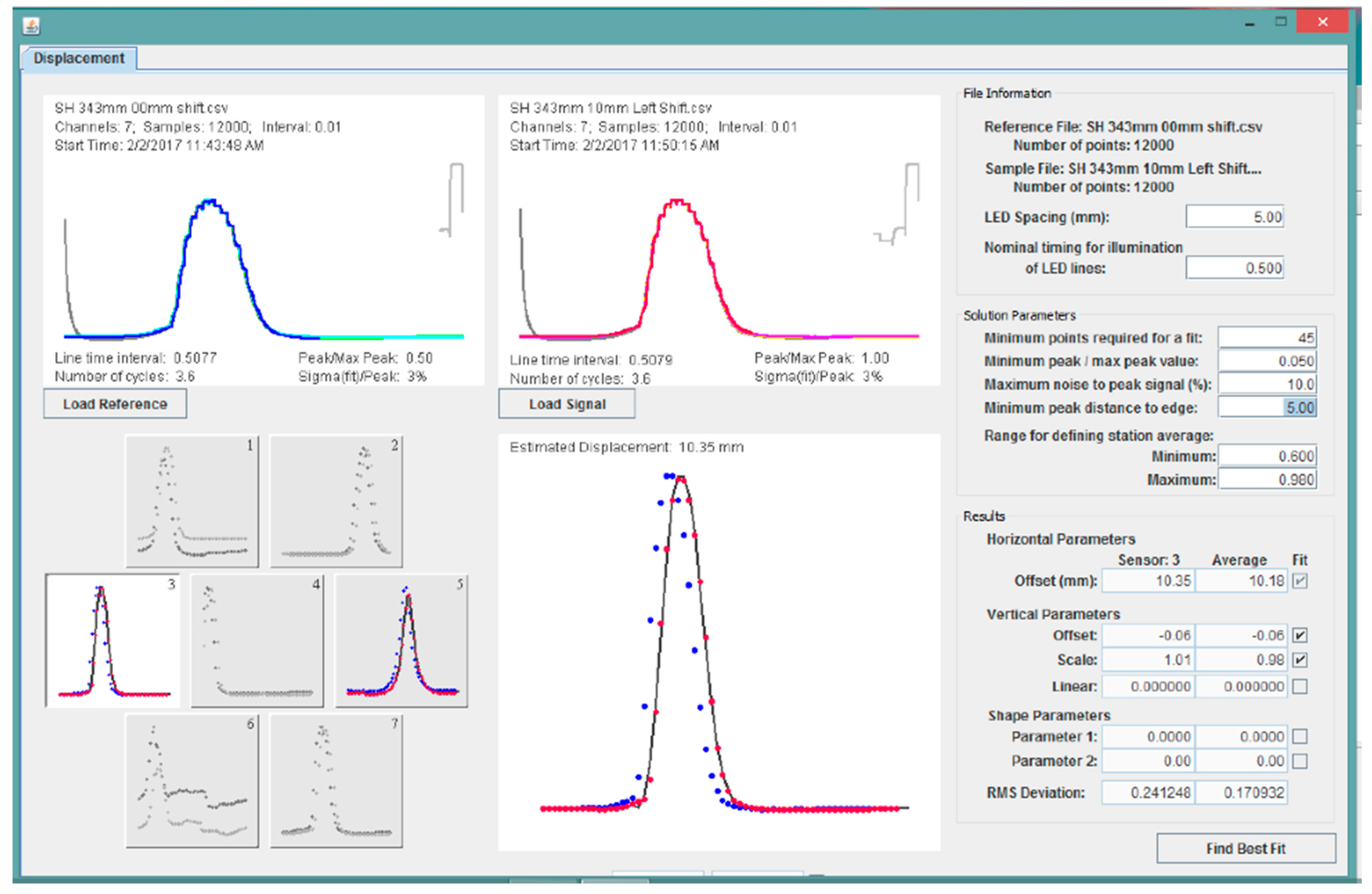

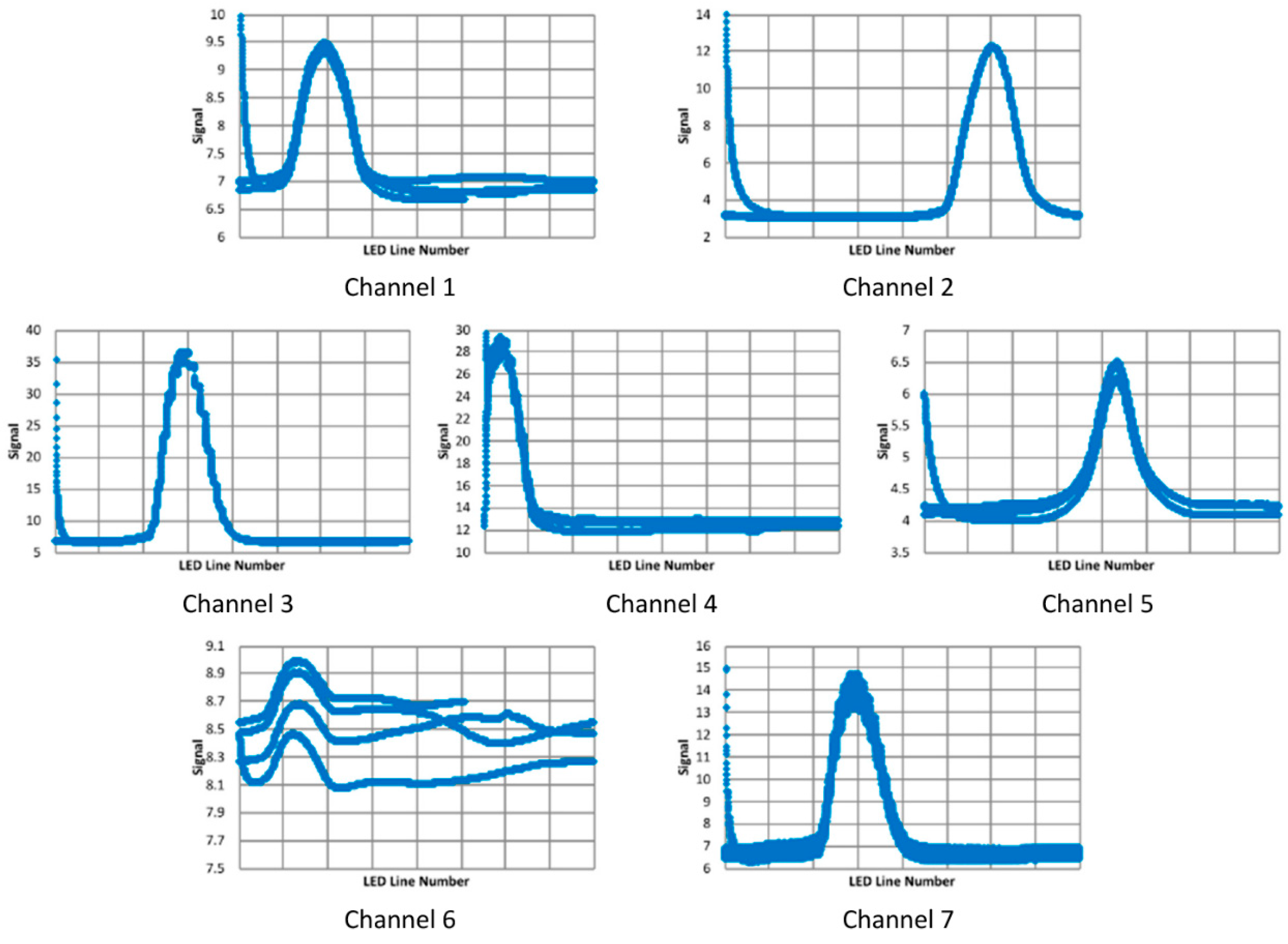

Outdoor tests were performed with the target at 6 m and the Orion 70 mm Multi-Use Finder Scope as the front-end optics. Data were collected with a distance of 343 mm between the front-end lens and the sensor and with the target at two locations: a reference location and a location offset to the left by a nominal 10 mm. The responses for the reference location are shown in Figure 20, in which 3.6 cycles of pseudo-motion are overlaid. Channels 1, 4, 5, and 7 show definite repeatability issues, and channel 6 deviates significantly from a quasi-Gaussian response.

When identifying the displacement, constraints were placed on the data: the noise-to-peak ratio was limited to a maximum of 20%, and both weak signals (channels whose peak value was less than 5% of the maximum peak across all seven channels) and signals with peaks too close to the edge were discarded. The estimated target displacements (channel 1: 10.49 mm; channel 2: 10.58 mm; channel 3: 10.35 mm; channel 4: N/A; channel 5: 10.0 mm; channel 6: N/A; channel 7: 10.32 mm), with an average of 10.35 mm, are surprisingly consistent, although the displacement for channel 5, which has a fairly weak signal, appears to be smaller than those for the other channels. Again, the nominal displacement was approximate, so no conclusions can be drawn regarding the accuracy of the displacement estimates; the consistency of the measurements suggests uncertainties on the order of a few tenths of a millimeter.
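The three screening constraints described above can be expressed as a small filter applied before averaging. This is a sketch under stated assumptions: the `Channel` structure and the `usable` and `average_displacement` helpers are hypothetical, and the edge margin is an illustrative value because the text does not say how close to the edge a peak may be. The 20% and 5% limits and the per-channel displacements are taken from the text.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Channel:
    """Summary of one channel's quasi-Gaussian (hypothetical structure)."""
    peak: float                        # peak amplitude of the quasi-Gaussian
    noise: float                       # noise amplitude, same units as peak
    peak_index: int                    # sample index of the peak in the record
    n_samples: int                     # number of samples in the record
    displacement_mm: Optional[float]   # per-channel estimate, if one exists


def usable(ch: Channel, max_peak_all: float,
           max_noise_to_peak: float = 0.20,   # 20% limit from Section 3.2
           min_relative_peak: float = 0.05,   # 5% of strongest channel's peak
           edge_margin: int = 5) -> bool:     # hypothetical margin (samples)
    """Apply the three screening constraints described in Section 3.2."""
    if ch.peak <= 0 or ch.peak < min_relative_peak * max_peak_all:
        return False  # weak signal
    if ch.noise / ch.peak > max_noise_to_peak:
        return False  # noise-to-peak ratio too high
    if not edge_margin <= ch.peak_index < ch.n_samples - edge_margin:
        return False  # peak too close to the edge of the record
    return True


def average_displacement(channels: List[Channel]) -> float:
    """Mean displacement over the channels that pass screening."""
    max_peak = max(ch.peak for ch in channels)
    kept = [ch.displacement_mm for ch in channels
            if ch.displacement_mm is not None and usable(ch, max_peak)]
    if not kept:
        raise ValueError("no channel passed screening")
    return sum(kept) / len(kept)


# Reported 6 m estimates for the five retained channels (4 and 6 were N/A).
reported = {1: 10.49, 2: 10.58, 3: 10.35, 5: 10.0, 7: 10.32}
print(round(sum(reported.values()) / len(reported), 2))  # -> 10.35
```

Averaging the five reported channel estimates reproduces the 10.35 mm mean given in the text.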

3.3. Outdoor Tests at 30 m

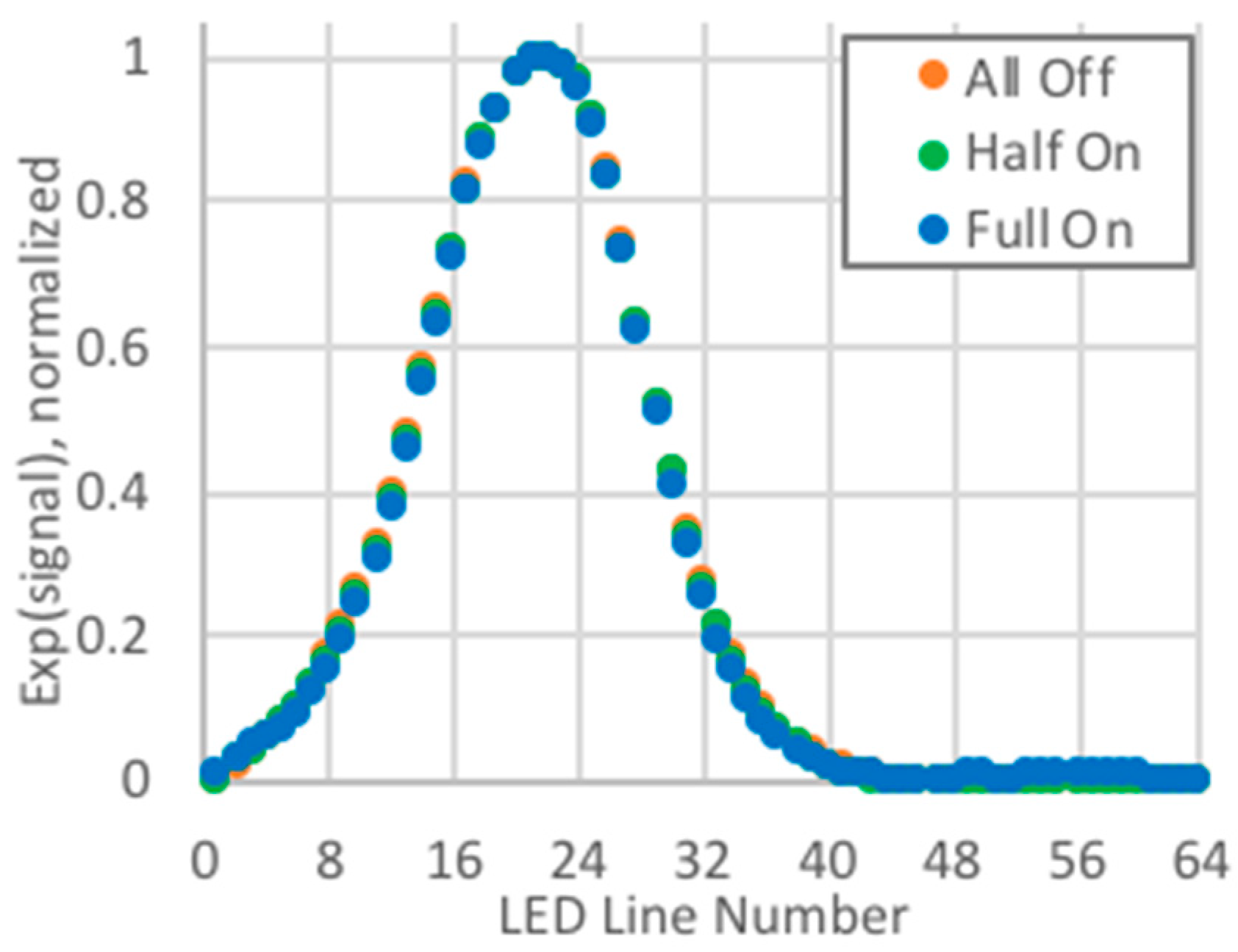

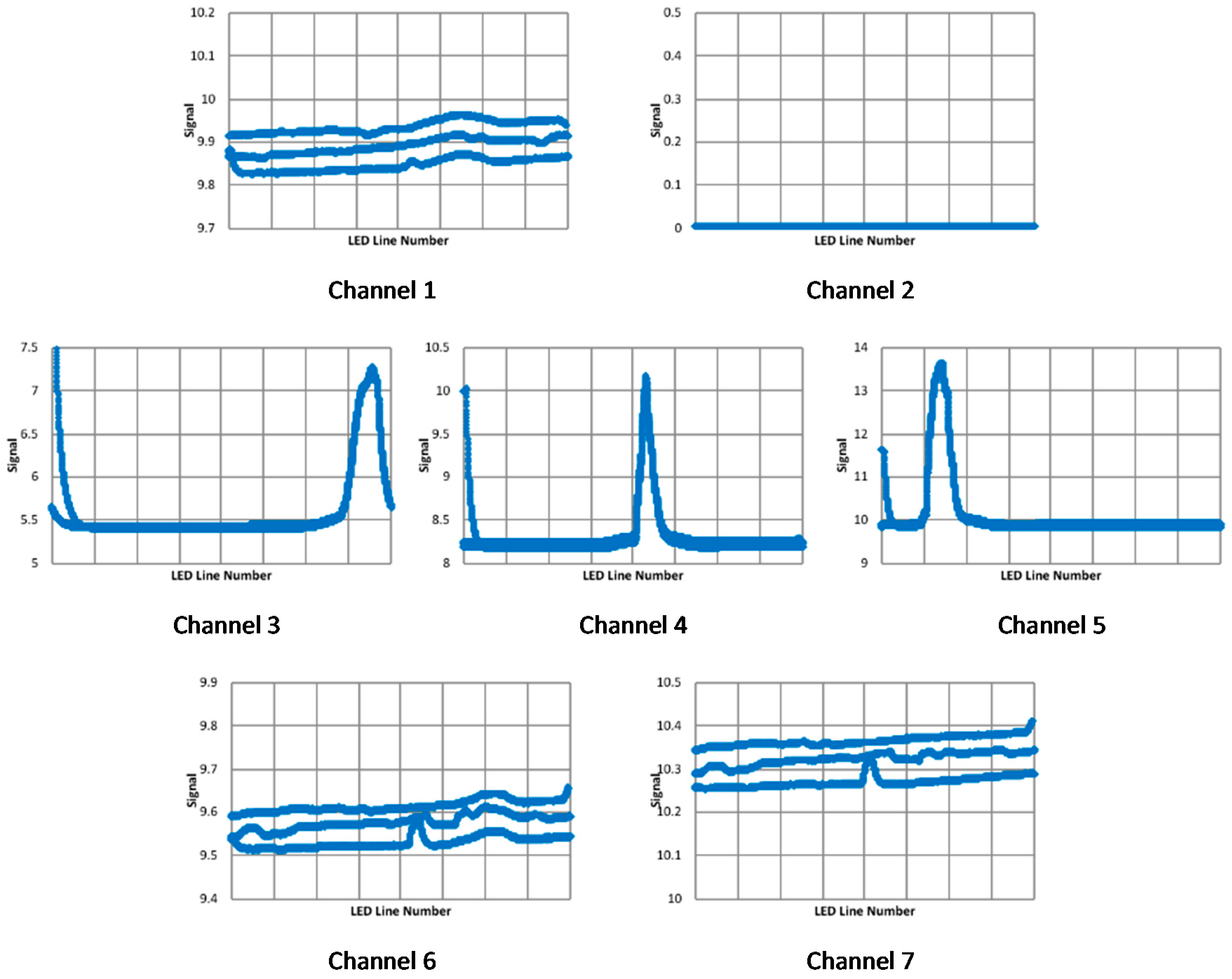

Outdoor tests were performed with the target at 30 m, and the Orion Apex 102 mm Maksutov-Cassegrain Telescope and a 2× Barlow lens as the front-end optics. At this distance, and with the specified front-end optics, the target image was not in view of all of the individual sensors, and only three of the seven channels exhibited quasi-Gaussian behavior (

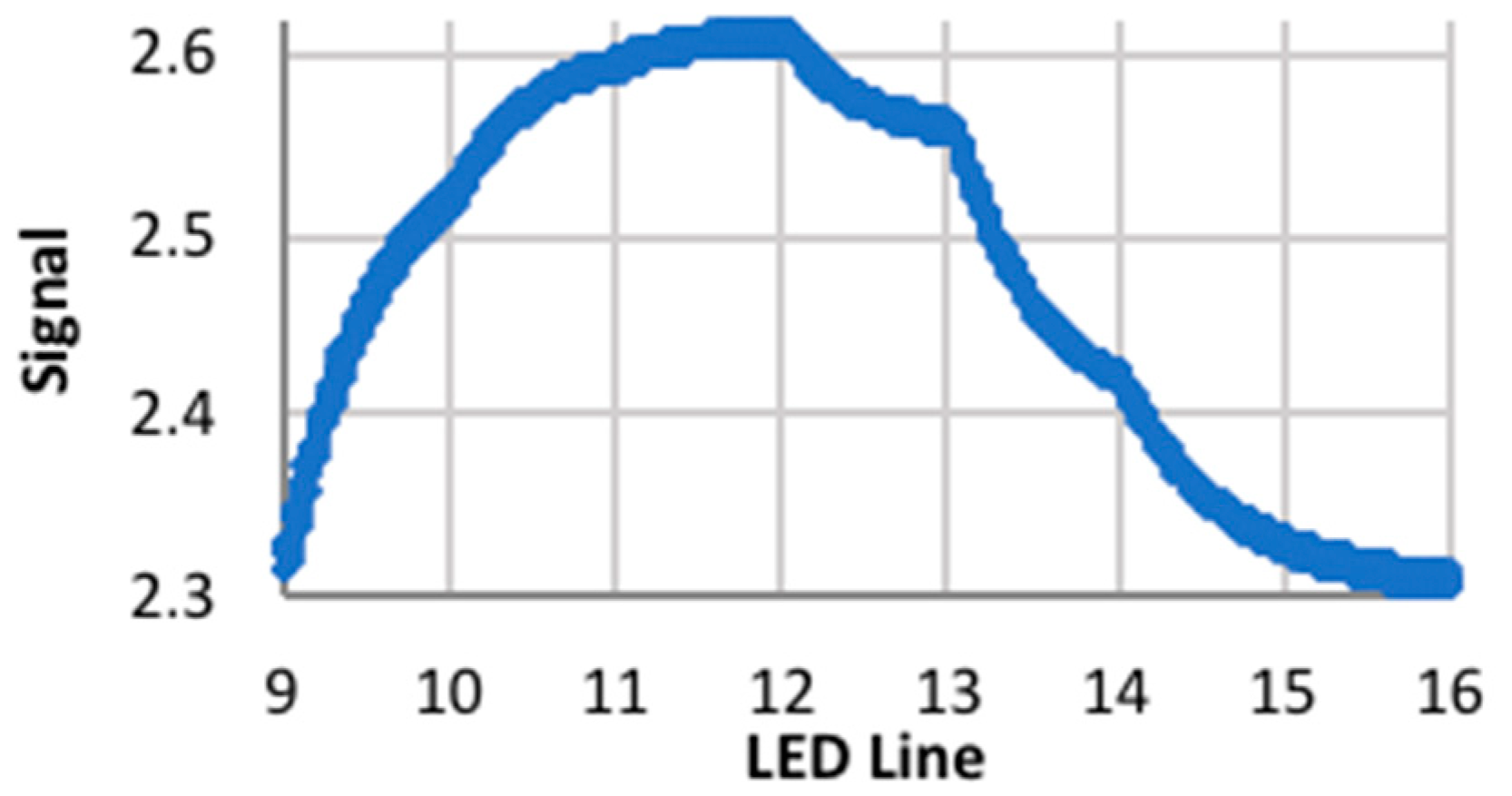

Figure 21). The target was placed at five locations, including on the centerline, and 10 and 20 mm to the left and right of the centerline. For this configuration, the signals were fairly weak and in the amplitude region where there was significant smoothing of the signal (

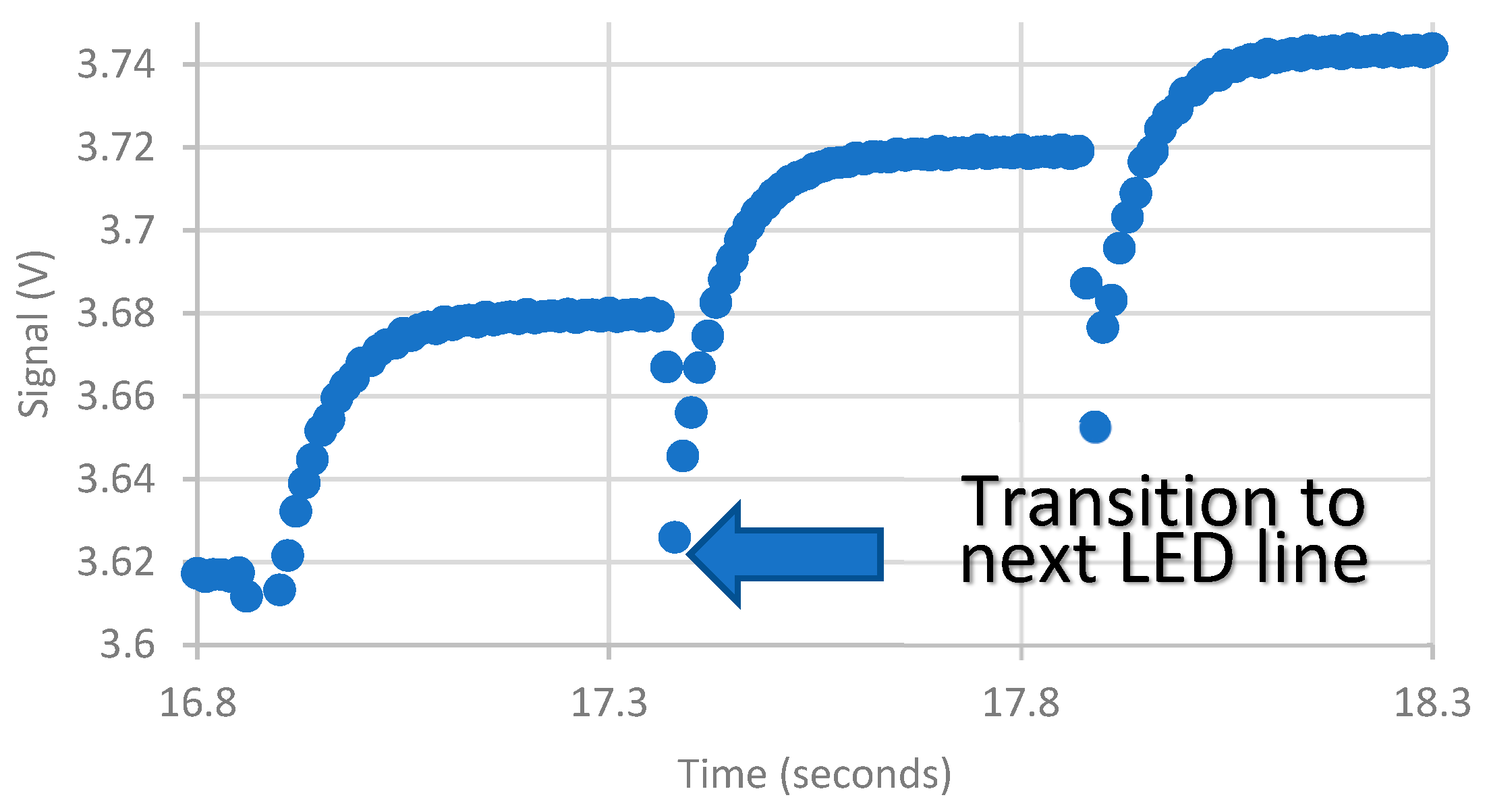

Figure 22). The signal was either monotonically increasing or decreasing, and there were no sudden falls followed by sudden rises that could be identified with turning the LED array off and then illuminating the next line in the sequence. Therefore, the exposure time was fixed to an appropriate value.

Estimated target displacements are shown in Table 4. Two issues are immediately apparent. First, for the three usable channels, the scatter in the measured displacements is larger than at the shorter distances. A major contributor to this increase is that the peaks are narrower: fewer points define each quasi-Gaussian, so the interpolated values of the translated reference quasi-Gaussian have larger uncertainties.
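The paragraph above refers to translating a reference quasi-Gaussian and interpolating it to compare with the displaced-target signal. The sketch below illustrates that idea under our own assumptions, which may differ from the authors' algorithm: signals are taken to be sampled against target-line position in millimeters, the translated reference is evaluated by linear interpolation, and the shift is chosen by a least-squares grid search. The Gaussian profile and all numbers are synthetic stand-ins, not measured data.

```python
import bisect
import math
from typing import List


def interp(x: float, xs: List[float], ys: List[float]) -> float:
    """Linear interpolation on ascending xs, clamped to the end values."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect.bisect_right(xs, x)          # xs[i-1] <= x < xs[i]
    t = (x - xs[i - 1]) / (xs[i] - xs[i - 1])
    return ys[i - 1] + t * (ys[i] - ys[i - 1])


def estimate_shift(xs: List[float], reference: List[float],
                   sample: List[float], max_shift: float,
                   step: float) -> float:
    """Grid-search the shift s minimizing sum((sample(x) - reference(x - s))^2),
    evaluating the translated reference by linear interpolation."""
    n = int(round(max_shift / step))
    best_s, best_err = 0.0, math.inf
    for k in range(-n, n + 1):
        s = k * step
        err = sum((y - interp(x - s, xs, reference)) ** 2
                  for x, y in zip(xs, sample))
        if err < best_err:
            best_s, best_err = s, err
    return best_s


def quasi_gaussian(x: float, center: float, width: float) -> float:
    """Stand-in Gaussian profile for a channel response (illustrative only)."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)


xs = [0.5 * i for i in range(121)]                  # 0-60 mm in 0.5 mm steps
ref = [quasi_gaussian(x, 25.0, 4.0) for x in xs]    # reference target position
moved = [quasi_gaussian(x, 35.0, 4.0) for x in xs]  # target displaced by 10 mm
print(estimate_shift(xs, ref, moved, max_shift=15.0, step=0.02))  # -> 10.0
```

With noise-free synthetic data the 10 mm shift is recovered exactly. Reducing `width` while keeping the same sampling puts fewer samples on the peak, so noise and interpolation error weigh more heavily on the fit, which is the effect the text attributes to the narrower peaks at 30 m.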

The second issue is that the average displacement differs from the nominal value by a few millimeters rather than a few tenths of a millimeter. The start time of the LED line sequence is initially identified from the sudden reduction in amplitude and is then adjusted using the rapid falls and rises associated with turning off the LED array and progressing to the next LED line. Because the smoothing of the data generally removed these falls and rises, the adjustments were not accurate.
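Why smoothing defeats the start-time adjustment can be illustrated with a toy signal. In the sketch below, a sudden fall is flagged when one sample drops by more than half of the signal's peak-to-peak range; this relative threshold is a hypothetical rule, not the authors' criterion, and the centered moving average is only a stand-in for the smoothing observed in the acquired data, whose mechanism the text does not specify. The synthetic signal mimics one line being lit, the array going dark for two samples, and the next line being lit.

```python
from typing import List, Optional


def moving_average(signal: List[float], window: int) -> List[float]:
    """Centered moving average over 2*(window//2)+1 samples; the edges use
    the shorter windows that fit inside the record."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - half), min(len(signal), i + half + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def first_sudden_fall(signal: List[float],
                      rel_drop: float = 0.5) -> Optional[int]:
    """Index of the first sample-to-sample drop larger than rel_drop times the
    signal's peak-to-peak range (hypothetical threshold), or None."""
    span = max(signal) - min(signal)
    for i in range(1, len(signal)):
        if signal[i - 1] - signal[i] > rel_drop * span:
            return i
    return None


# Synthetic record: line lit, array dark for two samples, next line lit.
raw = [0.5] * 10 + [1.0] * 10 + [0.0] * 2 + [0.8] * 10
print(first_sudden_fall(raw), first_sudden_fall(moving_average(raw, 9)))
# -> 20 None
```

The dark interval is found at sample 20 in the raw record, but after a 9-sample moving average no single-step drop exceeds the threshold, so an adjustment that relies on these falls has nothing to lock onto.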

Changes in the signal associated with progression to the next LED line were therefore identified by visual inspection and used to adjust the start time of the target sequence. This was done for two of the data sets: (1) the target on the centerline; and (2) the target shifted to the right by 10 mm. With these corrections, the displacement estimates are within 0.8 mm of the nominal displacement (Table 5).

4. Conclusions

Identifying appropriate sites for landing a spacecraft or building permanent structures is critical for the exploration of planetary bodies, including asteroids, moons, and planets. By tracking the movement of land masses or structures on a planetary surface, scientists can better predict issues that could affect the integrity of the site or structures. Planetary missions will need new lightweight creep meters to monitor land movement near faults and thereby assess the hazard those faults pose to nearby structures. Additionally, scientists and engineers will need to monitor structures constructed on planetary surfaces for movement that could signify support or structural issues. In this work, we focused on the application of monitoring the movement of faults, although the sensor and algorithms presented here could be used to remotely measure the displacement of many other objects of interest for the exploration of planetary bodies.

A new bioinspired optical sensor head with front-end optics, designed and built at NASA Ames, was described, along with its use for remotely tracking small displacements (1–10 mm) of targets 1–60 m from the sensor. A pseudo-moving target, consisting of sequentially illuminated lines in an LED array, was used to generate signals that mimic those of a moving target. Algorithms were developed that relate these signals to quasi-Gaussians and identify the displacement by comparing the quasi-Gaussians for a reference target position with those for the displaced target. This process was tested at three sensor-to-target distances: 0.35, 6, and 30 m. Front-end optics in the form of telescopes were used to magnify the target image for the tests at 6 and 30 m. For the 0.35 m tests, estimated uncertainties were on the order of a tenth of a millimeter. At 6 m, the estimated error was on the order of a few tenths of a millimeter. For the target at 30 m, difficulties were introduced by the extreme smoothing of the signal and the narrowness of the quasi-Gaussian peak. However, accuracies of 1 mm or better were obtained when corrections were made to the estimated line-illumination duration and to the start of the target sequence. These difficulties could be alleviated by broadening the quasi-Gaussian peak, possibly by moving the sensor farther from the image plane, and by reducing the smoothing observed in the acquired data. Future work will address the signal plateau and data smoothing issues, as well as the signal-strength problems encountered at longer distances. Work has started on designing and building a new PCB with an improved amplification circuit and on-board analog-to-digital conversion.