Abstract

To address the low convergence accuracy, poor population diversity, and susceptibility to local optima of the Red-billed Blue Magpie Optimization Algorithm (RBMO), this study proposes a multi-strategy improved Red-billed Blue Magpie Optimization Algorithm (SWRBMO). First, an adaptive T-distribution-based sinh–cosh search strategy is used to enhance global exploration and speed up convergence. Second, a neighborhood-guided reinforcement strategy helps the algorithm avoid local optima. Third, a crossover strategy is introduced to improve convergence accuracy. SWRBMO is evaluated on 15 benchmark functions selected from the CEC2005 test suite, with ablation studies on 12 of them, and is further validated on the CEC2019 and CEC2021 test suites. On all test sets, its convergence behavior is analyzed and the statistical significance of the results is assessed with the Wilcoxon rank-sum test. Comparative experiments on CEC2019 and CEC2021 demonstrate that SWRBMO achieves faster convergence and higher accuracy than RBMO and other competitive algorithms. Finally, four engineering design problems further confirm its practicality: SWRBMO outperforms the compared methods by up to 99%, 38.4%, 2.4%, and nearly 100% in the respective cases, highlighting its strong potential for real-world engineering applications.

1. Introduction

Optimization problems involve selecting the best solution from a set of candidates [1] and are widely applied to meet the growing demands of modern economic and industrial development. With advances in science and technology, real-world optimization problems have become more complex. They often feature high dimensionality, non-differentiability, non-convexity, and computationally expensive objective functions. These characteristics make traditional optimization methods ineffective or impractical in many cases. In contrast, metaheuristic algorithms are simple, easy to implement, and robust. They have therefore emerged as a promising alternative and have been successfully applied in areas such as robot navigation [2], job-shop scheduling [3], neural network tuning [4], and feature selection [5].

Metaheuristic algorithms can be broadly categorized into four types based on their sources of inspiration: swarm intelligence-based, biological evolution-based, physical law-based, and human behavior-based algorithms. Among these, swarm intelligence algorithms form a core subclass. They are widely recognized for solving complex optimization problems efficiently and accurately without relying on global information. Representative population-based examples include Differential Evolution (DE) [6], Particle Swarm Optimization (PSO) [7], Sparrow Search Algorithm (SSA) [8], Grey Wolf Optimizer (GWO) [9], Harris Hawks Optimization (HHO) [10], Butterfly Optimization Algorithm (BOA) [11], Black Widow Optimization (BWO) [12], and Whale Optimization Algorithm (WOA) [13]. As research interest in optimization grows, improving existing algorithms to enhance their performance has become a major focus.

For instance, Mingjun Ye et al. [14] proposed a Multi-strategy Enhanced Dung Beetle Optimization algorithm (MDBO). MDBO replaces the original random initialization with Latin Hypercube Sampling (LHS) [15], a typical initialization strategy that samples the solution space more uniformly. This helps MDBO achieve superior optimization accuracy, stability, and convergence speed. Similarly, Fu Hua et al. [16] introduced a multi-strategy enhanced Sparrow Search Algorithm that uses an elite-driven chaotic reverse learning mechanism for population initialization and follower position refinement. These examples show that new improvement strategies can effectively strengthen swarm intelligence algorithms, and they offer useful guidance for further work.

The Red-billed Blue Magpie Optimization algorithm (RBMO) [17] was proposed in 2024 by Shengwei Fu, Ke Li, et al. It is a swarm intelligence optimization algorithm inspired by the cooperative and efficient hunting behavior of red-billed blue magpies. It stands out for its strong adaptability, small number of parameters, and biologically grounded design. RBMO switches between small-group and large-group search behaviors, which lets it adapt its search to complex and changing problem landscapes. Using this mechanism, RBMO has shown promising performance on complex engineering optimization tasks such as polymer electrolyte membrane fuel cell modeling [18], antenna parameter optimization [19], and unmanned aerial vehicle (UAV) path planning [20].

Like many swarm intelligence optimization algorithms, RBMO still exhibits non-negligible limitations when addressing complex optimization problems: it suffers from relatively weak global search ability, tends to get trapped in local optima, and its final accuracy is often compromised in later iterations due to the loss of population diversity. According to the No Free Lunch theorem [21], no single algorithm performs best across all problems, which has motivated continuous efforts to enhance or hybridize existing methods. Meanwhile, it should be acknowledged that some metaheuristic algorithms outside the swarm intelligence family, such as the Survival of the Fittest Algorithm (SoFA) [22], have been proven to achieve global convergence under certain theoretical conditions. Nevertheless, within the domain of swarm intelligence optimization, practical improvements that balance exploration, exploitation, and population diversity remain a key research focus. Therefore, many scholars remain committed to proposing new algorithms or improving existing ones to enhance their applicability and optimization performance in real-world scenarios.

To address these limitations of RBMO, this paper proposes a multi-strategy improved Red-billed Blue Magpie Optimization Algorithm (SWRBMO). SWRBMO integrates three complementary strategies, each targeting a specific shortcoming of RBMO:

Adaptive T-distribution-Based Sinh–Cosh Search Strategy: The sinh and cosh operators are introduced to coordinate exploration and exploitation and to enhance global search capability. The strategy also integrates an adaptive T-distribution perturbation mechanism. Its perturbations shrink from large to small over the iterations, which further improves the balance between global exploration and local exploitation and accelerates convergence.

Neighborhood-Guided Reinforcement Strategy: This strategy leverages information from neighboring individuals to guide the mutation process, generating diverse candidate solutions and strengthening the algorithm’s ability to explore unknown regions, thus improving its capability to escape from local optima.

Crossover Strategy: This mechanism introduces crossover perturbations among individuals and enables high-quality information exchange, thereby preserving population diversity in the late optimization stage and improving the final optimization accuracy.

To further clarify the novelty of SWRBMO, Table 1 compares relevant algorithms in terms of inspiration source, main strategies, strengths, and limitations. The comparison covers classic population-based algorithms (DE, PSO, and SSA), the original RBMO, and MDBO, a recently developed and representative multi-strategy improved algorithm.

Table 1.

Comparison of SWRBMO with other algorithms.

The structure of this paper is as follows. Section 2 describes the optimization process of the RBMO algorithm. Section 3 introduces the SWRBMO algorithm in detail, including the integration of the proposed strategies and a complexity analysis. Section 4 presents extensive experimental results on benchmark functions and the CEC2019 and CEC2021 test suites, together with ablation studies and comparative analysis. Section 5 applies SWRBMO to four real-world engineering optimization problems: the robot gripper optimization problem, the industrial refrigeration system optimization problem, the reinforced concrete beam design optimization problem, and the step cone pulley problem. On these four problems, SWRBMO outperforms the comparative algorithms by up to 99%, 38.4%, 2.4%, and nearly 100%, respectively, confirming its strong potential for engineering applications. Section 6 concludes the study and discusses future directions.

2. Red-Billed Blue Magpie Optimization Algorithm

The RBMO algorithm [17] is a swarm intelligence optimization algorithm inspired by the adaptive foraging strategies of red-billed blue magpies. These birds dynamically adjust their group size and search patterns according to the available environmental resources. In this study, a population balance coefficient α (set to 0.5) is introduced to dynamically coordinate the algorithm's exploration and exploitation.

2.1. Initial Population

In the RBMO algorithm, a population of N individuals is randomly initialized in a D-dimensional search space. Each individual's position is sampled uniformly between the predefined lower and upper bounds:

$$X_{i,j} = \left(ub_j - lb_j\right) \times Rand_1 + lb_j \quad (1)$$

where $X_{i,j}$ is the coordinate of the i-th red-billed blue magpie in the j-th variable, $ub_j$ and $lb_j$ are the upper and lower bounds of the search space, respectively, and $Rand_1$ is a uniformly distributed random number in [0, 1].
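The uniform initialization in Formula (1) can be sketched in plain Python as follows. The function name `init_population` and the use of per-dimension bound lists are illustrative choices, not part of the original RBMO code.

```python
import random


def init_population(n, lb, ub, seed=None):
    """Uniformly sample n individuals: X[i][j] = (ub[j] - lb[j]) * rand + lb[j]."""
    rng = random.Random(seed)
    dim = len(lb)
    return [
        [(ub[j] - lb[j]) * rng.random() + lb[j] for j in range(dim)]
        for _ in range(n)
    ]


# Example: 30 magpies in the 5-dimensional box [-100, 100]^5.
pop = init_population(30, lb=[-100.0] * 5, ub=[100.0] * 5, seed=1)
print(len(pop), len(pop[0]))  # 30 5
```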

2.2. Search for Food

In natural settings, red-billed blue magpies employ various strategies to obtain food resources, such as hopping, walking on the ground, or probing tree branches. These birds tend to forage in either small groups (usually 2 to 5) or large groups (10 or more). This behavioral flexibility enables the magpies to dynamically adjust their foraging tactics in response to changes in environmental conditions and food availability. Inspired by this adaptive foraging strategy, the RBMO algorithm simulates both small-group and large-group search behaviors to enhance its exploration capabilities in the solution space.

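The mathematical model for foraging in small groups is

$$X_i(t+1) = X_i(t) + \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_{rs}(t)\right) \times Rand_1 \quad (2)$$

The mathematical model for foraging in large groups is

$$X_i(t+1) = X_i(t) + \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_{rs}(t)\right) \times Rand_2 \quad (3)$$

where $X_i(t+1)$ and $X_i(t)$ denote the updated and current positions of the i-th individual, respectively. The variables $p$ (2 to 5) and $q$ (10 to N) are the sizes of the small and large groups, respectively. $X_m(t)$ denotes the position of the m-th magpie selected at random from the population, and $X_{rs}(t)$ denotes the position of another randomly chosen individual in the current iteration. $Rand_1$ and $Rand_2$ are uniformly distributed within [0, 1].

The combined model is expressed as

$$X_i(t+1) = \begin{cases} X_i(t) + \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_{rs}(t)\right) \times Rand_1, & \varepsilon < \alpha \\ X_i(t) + \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_{rs}(t)\right) \times Rand_2, & \text{otherwise} \end{cases} \quad (4)$$

where $\varepsilon$ is a uniformly distributed random number in [0, 1] and $\alpha$ is the balance coefficient.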
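The food-search phase (Formulas (2)–(4)) can be sketched as follows. This sketch rests on several assumptions. Group members are sampled without replacement. $X_{rs}$ is drawn independently of the group. $Rand$ is drawn separately for each dimension. The population must contain at least 10 individuals so that a large group can be formed. The function names are hypothetical.

```python
import random


def group_mean(pop, k, rng):
    """Mean position of k randomly chosen magpies (the (1/k) * sum X_m(t) term)."""
    members = rng.sample(pop, k)
    return [sum(m[j] for m in members) / k for j in range(len(pop[0]))]


def search_for_food(pop, alpha=0.5, rng=None):
    """One food-search update per individual, switching between small and large groups."""
    rng = rng or random.Random()
    n = len(pop)
    new_pop = []
    for x in pop:
        # Small group (p in 2..5) with probability alpha, otherwise large group (q in 10..n).
        k = rng.randint(2, 5) if rng.random() < alpha else rng.randint(10, n)
        centre = group_mean(pop, k, rng)
        x_rs = pop[rng.randrange(n)]  # another randomly chosen individual
        new_pop.append(
            [xj + (cj - rj) * rng.random() for xj, cj, rj in zip(x, centre, x_rs)]
        )
    return new_pop
```

A quick sanity check: if every individual sits at the same point, the group mean and $X_{rs}$ coincide with that point, so the update leaves the population unchanged.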

2.3. Attacking Prey

When attacking prey, red-billed blue magpies hunt efficiently and cooperatively. Depending on the characteristics of the prey, they adopt diverse hunting strategies, such as rapid pecking, agile jumping, and aerial interception. In small groups (usually 2 to 5), magpies mainly target small plants and animals. In large groups (10 or more), individuals collaborate closely to pursue larger prey, including sizable insects and small vertebrates. This flexible use of multiple cooperative strategies significantly enhances hunting efficiency. The mathematical model for small-group hunting is formulated as follows:

$$X_i(t+1) = X_{food}(t) + CF \times \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_i(t)\right) \times Randn_1 \quad (5)$$

where $X_{food}(t)$ is the location of the food source, i.e., the current optimal solution, and $Randn_1$ is a random number drawn from the standard normal distribution, with a mean of 0 and a standard deviation of 1.

CF is the step control factor (from the original literature [17]), defined as

$$CF = \left(1 - \frac{t}{T}\right)^{\left(2 \times \frac{t}{T}\right)} \quad (6)$$

where t is the current iteration number and T is the maximum number of iterations.
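To make Formula (6) concrete, the short Python function below evaluates CF at a few iterations. The function name is illustrative. CF starts at 1 and decays monotonically to 0 at t = T, so the attack steps shrink as the search proceeds.

```python
def step_control_factor(t, T):
    """CF = (1 - t/T) ** (2 * t/T), the RBMO step control factor."""
    ratio = t / T
    return (1.0 - ratio) ** (2.0 * ratio)


T = 1000
print([round(step_control_factor(t, T), 3) for t in (0, 250, 500, 750, 1000)])
# [1.0, 0.866, 0.5, 0.125, 0.0]
```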

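The mathematical model for large-group hunting is

$$X_i(t+1) = X_{food}(t) + CF \times \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_i(t)\right) \times Randn_2 \quad (7)$$

where $Randn_2$ is a standard normally distributed random number with a mean of 0 and a standard deviation of 1.

The complete prey-attacking stage is expressed as

$$X_i(t+1) = \begin{cases} X_{food}(t) + CF \times \left(\frac{1}{p}\sum_{m=1}^{p} X_m(t) - X_i(t)\right) \times Randn_1, & \varepsilon < \alpha \\ X_{food}(t) + CF \times \left(\frac{1}{q}\sum_{m=1}^{q} X_m(t) - X_i(t)\right) \times Randn_2, & \text{otherwise} \end{cases} \quad (8)$$

where $\varepsilon$ is a uniformly distributed random number in [0, 1].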
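The prey-attacking phase (Formulas (5)–(8)) can be sketched as follows, using the same group-sampling assumptions as the food-search step. Drawing $Randn$ separately for each dimension is an additional assumption, and the function name is hypothetical. Because CF = 0 at t = T, every individual lands exactly on the food source in the final iteration, which the usage lines check.

```python
import random


def attack_prey(pop, x_food, t, T, alpha=0.5, rng=None):
    """Move each magpie towards the food source with CF-scaled Gaussian steps."""
    rng = rng or random.Random()
    n, dim = len(pop), len(pop[0])
    ratio = t / T
    cf = (1.0 - ratio) ** (2.0 * ratio)  # step control factor, Formula (6)
    new_pop = []
    for x in pop:
        # Small group (2..5) with probability alpha, otherwise large group (10..n).
        k = rng.randint(2, 5) if rng.random() < alpha else rng.randint(10, n)
        members = rng.sample(pop, k)
        centre = [sum(m[j] for m in members) / k for j in range(dim)]
        new_pop.append(
            [x_food[j] + cf * (centre[j] - x[j]) * rng.gauss(0.0, 1.0) for j in range(dim)]
        )
    return new_pop


rng = random.Random(0)
pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(12)]
final = attack_prey(pop, x_food=[0.5, -1.0, 2.0], t=1000, T=1000, rng=rng)
print(all(x == [0.5, -1.0, 2.0] for x in final))  # True
```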

2.4. Food Storage

In addition to foraging and hunting, red-billed blue magpies have a distinctive food storage habit. They conceal surplus food in hidden locations as an emergency reserve, which ensures a stable energy supply during times of scarcity. In the algorithm, this behavior corresponds to preserving the best information found so far, which helps the algorithm progressively approach the global optimum. For a minimization problem, it is expressed as

$$X_i(t+1) = \begin{cases} X_i(t), & fitness_{old,i} < fitness_{new,i} \\ X_i(t+1), & \text{otherwise} \end{cases} \quad (9)$$

where $fitness_{old,i}$ is the fitness value of the i-th individual before the position update and $fitness_{new,i}$ is its fitness value after the update.
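The greedy retention rule in Formula (9) can be sketched as follows for a minimization problem. The sphere objective in the usage lines is only an example, and the function name is illustrative.

```python
def food_storage(old_pop, old_fit, new_pop, objective):
    """Keep each updated position only if it improves (lowers) the objective."""
    kept_pop, kept_fit = [], []
    for x_old, f_old, x_new in zip(old_pop, old_fit, new_pop):
        f_new = objective(x_new)
        if f_new < f_old:
            kept_pop.append(x_new)
            kept_fit.append(f_new)
        else:
            kept_pop.append(x_old)
            kept_fit.append(f_old)
    return kept_pop, kept_fit


def sphere(x):
    return sum(v * v for v in x)


old = [[1.0, 1.0], [0.0, 0.5]]
new = [[0.5, 0.0], [2.0, 2.0]]
pop, fit = food_storage(old, [sphere(x) for x in old], new, sphere)
print(pop, fit)  # [[0.5, 0.0], [0.0, 0.5]] [0.25, 0.25]
```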

3. Improved Optimization Algorithm for Red-Billed Blue Magpie

3.1. Adaptive T-Distribution-Based Sinh–Cosh Search Strategy

The original RBMO adopts a monotonic perturbation mechanism for position updating. While this mechanism ensures stability, it has two major drawbacks: first, the weak information exchange among individuals reduces global exploration ability; second, its fixed disturbance pattern lacks adaptability in later iterations, which slows down convergence and makes it difficult to handle dynamic and complex search landscapes. These limitations motivate the introduction of a more flexible strategy to address the aforementioned shortcomings.

In recent years, mathematics-inspired metaheuristics such as the Sine–Cosine Algorithm (SCA) [23] and the Arithmetic Optimization Algorithm (AOA) [24] have opened new directions for the development of metaheuristic strategies. In this context, the Sinh–Cosh Optimizer (SCHO) [25] is particularly noteworthy. Its mathematical characteristics could help overcome the limitations of RBMO, especially by strengthening global exploration and improving adaptability across diverse search landscapes. To add randomness, enhance search dynamics, and relax the rigidity of RBMO's monotonic perturbation mechanism, a random variable uniformly distributed in [0, 1] is integrated into the improved strategy. The enhancement is based on the hyperbolic sine and cosine functions, defined as

$$\sinh(x) = \frac{e^{x} - e^{-x}}{2} \quad (10)$$

$$\cosh(x) = \frac{e^{x} + e^{-x}}{2} \quad (11)$$

To regulate the adjustment intensity, an intermediate control variable c is designed as

The update factor w, which determines the scale of position adjustment, is defined as

The critical parameters in these formulas are inherited from the validated settings of SCHO, which ensures theoretical consistency and empirical reliability. Specifically, the constant term 0.45 in Formula (12) and the sensitivity coefficient μ (commonly set to 0.388) in Formula (13) follow the standard configuration reported in [25] rather than being tuned arbitrarily. In addition, the random variable in these formulas is uniformly distributed in [0, 1]. For such an argument, cosh is always at least 1 (ranging from 1 to about 1.543), which supports exploration. Sinh stays small and bounded (ranging from 0 to about 1.175), which keeps perturbations controlled. These complementary effects improve the balance between exploration and exploitation and motivate incorporating the sinh–cosh search strategy into RBMO.
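The ranges quoted above can be checked numerically. The snippet below evaluates the standard hyperbolic functions on a grid over [0, 1] with Python's `math` module. It only illustrates the function ranges and does not reproduce Formulas (12) and (13).

```python
import math

grid = [k / 1000 for k in range(1001)]  # r sampled uniformly over [0, 1]
cosh_vals = [math.cosh(r) for r in grid]
sinh_vals = [math.sinh(r) for r in grid]

print(f"cosh(r) in [{min(cosh_vals):.4f}, {max(cosh_vals):.4f}]")  # cosh(r) in [1.0000, 1.5431]
print(f"sinh(r) in [{min(sinh_vals):.4f}, {max(sinh_vals):.4f}]")  # sinh(r) in [0.0000, 1.1752]
# Identity check: cosh^2 - sinh^2 = 1 at every grid point (up to rounding).
print(max(abs(c * c - s * s - 1.0) for c, s in zip(cosh_vals, sinh_vals)) < 1e-12)  # True
```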

Depending on the balance coefficient α, one of two update rules is applied:

where $X_i(t+1)$ denotes the updated position and $X_i(t)$ the current position of the i-th individual in the search space, and $p$ and $q$ are the sizes of the small and large groups. The sinh–cosh search strategy improves the exploration–exploitation balance, but its convergence speed can still be limited. To address this issue, an adaptive T-distribution strategy is proposed, expressed as follows:

where trnd(freen) is a random number drawn from a T-distribution with freen degrees of freedom (trnd is the corresponding MATLAB random-number function). This term introduces the fluctuation characteristics of the distribution into the algorithm. To make the perturbation adaptive, freen, the degrees-of-freedom parameter, varies with the iteration count and is defined as follows:

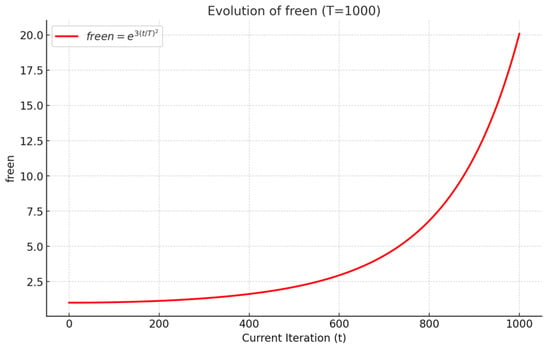

During the iterations, the degrees of freedom of the T-distribution change adaptively with the ratio of the current iteration t to the maximum number of iterations T. Figure 1 shows the T-distribution perturbation curve across iterations. In early iterations (small t/T), the distribution is heavy-tailed. It therefore produces occasional large jumps that favor global exploration and escape from local optima, compensating for the insufficient exploration of RBMO's original monotonic disturbance. As t/T → 1, the T-distribution gradually approaches the normal distribution. This enables finer local search around promising regions and accelerates convergence, addressing the slow convergence of the original algorithm.
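The sketch below illustrates the claim that a T-distribution is heavy-tailed for few degrees of freedom and approaches the normal distribution as the degrees of freedom grow. It draws Student's t samples using the standard construction $Z/\sqrt{\chi^2_\nu/\nu}$ with only Python's `random` module, then reports the fraction of draws with $|x| > 3$. The `df` values are illustrative and are not the adaptive freen schedule of SWRBMO.

```python
import math
import random


def student_t(df, rng):
    """One draw from Student's t with df degrees of freedom: Z / sqrt(chi2_df / df)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) equals Gamma(shape=df/2, scale=2)
    return z / math.sqrt(chi2 / df)


def tail_fraction(df, n=50_000, threshold=3.0, seed=0):
    """Fraction of |t| samples exceeding the threshold (a proxy for tail heaviness)."""
    rng = random.Random(seed)
    return sum(abs(student_t(df, rng)) > threshold for _ in range(n)) / n


for df in (1, 3, 30, 1000):
    print(f"df={df:5d}  P(|t| > 3) ~ {tail_fraction(df):.4f}")
```

For df = 1 (the Cauchy case), roughly one draw in five exceeds 3 in magnitude. For large df, the fraction approaches the normal-distribution value of about 0.0027. Assuming freen grows with t/T as described above, a perturbation of this kind produces occasional long jumps early in the search and mostly small refinements late in the search.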

Figure 1.

Iteration variation curve of T-distribution perturbation.

Finally, the adaptive T-distribution strategy is integrated with the sinh–cosh search strategy to construct a more robust position update rule:

In summary, the sinh–cosh search strategy introduces diversity and achieves a balance between exploration and exploitation, while the adaptive T-distribution introduces dynamic perturbations shifting from global to local focus. Their combination complements RBMO’s original monotonic perturbation, enhancing global optimization and convergence speed.

3.2. Neighborhood-Guided Reinforcement Strategy

In the standard RBMO, individuals are strongly guided by the current global best solution. This reliance makes the algorithm prone to premature convergence: as iterations progress, individuals crowd around the same region, diversity diminishes, and the search stagnates. To address this limitation, a neighborhood-guided reinforcement strategy inspired by [26] is introduced. Instead of relying solely on the global best, this mechanism incorporates information from neighboring individuals. These local interactions generate a richer set of candidate solutions, maintain population diversity, and enhance the ability to escape local optima.

Depending on the balance coefficient α, the position update rule of the neighborhood-guided reinforcement strategy is formulated as follows:

where is the optimal position of the i-th individual and is the neighboring individual of the current position. When , the individual is considered as the neighboring individual; when , the first individual itself is taken as the neighboring individual. I is a randomly generated integer within {1, 2}. Additionally, is a random number following the standard normal distribution with a mean of 0 and a standard deviation of 1.
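Formulas (19) and (20) are not reproduced here, so the sketch below is only a hypothetical illustration of neighborhood guidance in general, not the SWRBMO update rule. It makes three assumptions. A ring topology supplies the neighbor, and a random integer chooses the left or right side. The candidate moves toward the individual's own best position with a standard-normal step and toward the neighbor with a uniform step. The candidate is kept only if it improves the objective. All names (`ring_neighbour`, `neighbour_guided_step`) are hypothetical.

```python
import random


def ring_neighbour(pop, i, rng):
    """Hypothetical ring topology: return the left or right neighbour of individual i."""
    side = rng.randint(1, 2)  # 1 -> left neighbour, 2 -> right neighbour
    return pop[(i - 1) % len(pop)] if side == 1 else pop[(i + 1) % len(pop)]


def neighbour_guided_step(pop, pbest, i, objective, rng):
    """Hypothetical neighbour-guided move with greedy acceptance (not Formulas (19)-(20))."""
    x, nb = pop[i], ring_neighbour(pop, i, rng)
    candidate = [
        xj + rng.gauss(0.0, 1.0) * (pj - xj) + rng.random() * (nj - xj)
        for xj, pj, nj in zip(x, pbest[i], nb)
    ]
    return candidate if objective(candidate) < objective(x) else x


def sphere(v):
    return sum(c * c for c in v)


rng = random.Random(3)
pop = [[rng.uniform(-2, 2) for _ in range(4)] for _ in range(6)]
pbest = [list(x) for x in pop]
updated = [neighbour_guided_step(pop, pbest, i, sphere, rng) for i in range(len(pop))]
print(all(sphere(u) <= sphere(x) for u, x in zip(updated, pop)))  # True
```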

3.3. Crossover Strategy

As iterations proceed, red-billed blue magpies tend to congregate around the current optimal solution. This clustering reduces population diversity, which restricts the search capability and lowers convergence accuracy. To counter this loss of diversity and the resulting decline in convergence performance, this paper introduces a crossover strategy [27] into the RBMO algorithm. Its crossover operations maintain diversity and improve convergence accuracy.

The crossover strategy consists of two components: horizontal crossover and vertical crossover. Horizontal crossover allows individuals in the population to exchange information comprehensively, promoting extensive exploration of the solution space and enhancing global search capability. Vertical crossover performs crossover operations between different dimensions within an individual, effectively preventing the algorithm from becoming trapped in local optima in certain dimensions. Through the inherent randomness and diversity of these crossover operations, the algorithm continuously produces novel solutions. When the population is trapped in local optima, these new solutions provide alternative search directions, increasing the probability of escaping stagnation and progressing toward the global optimum. After each crossover, offspring compete with their parents, and the individuals with superior fitness are retained, ensuring both diversity maintenance and convergence accuracy.

3.3.1. Horizontal Crossing

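In each iteration, individuals are randomly paired to perform arithmetic crossover across all dimensions. Given parent individuals $X(i)$ and $X(j)$, the offspring in the d-th dimension are generated as follows:

$$MS_{hc}(i,d) = r_1 \times X(i,d) + (1 - r_1) \times X(j,d) + c_1 \times \left(X(i,d) - X(j,d)\right) \quad (21)$$

$$MS_{hc}(j,d) = r_2 \times X(j,d) + (1 - r_2) \times X(i,d) + c_2 \times \left(X(j,d) - X(i,d)\right) \quad (22)$$

where $r_1$ and $r_2$ are random cross coefficients in [0, 1], and $c_1$ and $c_2$ are random numbers in [0, 1]. $X(i,d)$ and $X(j,d)$ are the d-th dimensions of the parents $X(i)$ and $X(j)$, and $MS_{hc}(i,d)$ and $MS_{hc}(j,d)$ are the d-th dimensions of their D-dimensional offspring, respectively. After crossover, the fitness of each offspring is compared with that of its parent, and the individual with better fitness is preserved.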
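A minimal sketch of horizontal crossover following Formulas (21) and (22) is given below. Individuals are shuffled and paired, each pair produces two offspring dimension by dimension, and greedy selection keeps the better of each parent and its offspring. The coefficient range follows the text above ([0, 1]) but is exposed as the `c_range` parameter. The function name and the per-dimension sampling of the coefficients are assumptions.

```python
import random


def horizontal_crossover(pop, objective, rng, c_range=(0.0, 1.0)):
    """Arithmetic crossover between randomly paired individuals, with greedy selection."""
    n = len(pop)
    order = list(range(n))
    rng.shuffle(order)
    result = [list(x) for x in pop]
    for a in range(0, n - 1, 2):  # with odd n, the last shuffled individual stays unpaired
        i, j = order[a], order[a + 1]
        xi, xj = pop[i], pop[j]
        child_i, child_j = [], []
        for d in range(len(xi)):
            r1, r2 = rng.random(), rng.random()
            c1, c2 = rng.uniform(*c_range), rng.uniform(*c_range)
            child_i.append(r1 * xi[d] + (1 - r1) * xj[d] + c1 * (xi[d] - xj[d]))
            child_j.append(r2 * xj[d] + (1 - r2) * xi[d] + c2 * (xj[d] - xi[d]))
        # Each offspring competes with its own parent; the fitter one survives.
        if objective(child_i) < objective(xi):
            result[i] = child_i
        if objective(child_j) < objective(xj):
            result[j] = child_j
    return result


def sphere(v):
    return sum(c * c for c in v)


rng = random.Random(7)
pop = [[rng.uniform(-10, 10) for _ in range(3)] for _ in range(10)]
new_pop = horizontal_crossover(pop, sphere, rng)
print(all(sphere(a) <= sphere(b) for a, b in zip(new_pop, pop)))  # True
```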

3.3.2. Vertical Crossing

Vertical crossover is an arithmetic crossover applied between two different dimensions of a single individual. It recombines information across two randomly selected dimensions $d_1$ and $d_2$, which prevents the algorithm from getting stuck in local optima restricted to specific dimensions. After dimensions $d_1$ and $d_2$ are normalized, the offspring is updated as follows:

$$MS_{vc}(i,d_1) = r \times X(i,d_1) + (1 - r) \times X(i,d_2) \quad (23)$$

where the cross parameter $r$ is a random number in [0, 1], and $MS_{vc}(i,d_1)$ is the offspring of $X(i,d_1)$ and $X(i,d_2)$ in dimensions $d_1$ and $d_2$. After vertical crossover, the fitness of the offspring is compared with that of its parent, and the better individual is retained.
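Vertical crossover as described by Formula (23) can be sketched as below. The sketch normalizes with the search bounds, recombines dimension $d_1$ with dimension $d_2$, and maps the result back to the original range. Normalizing with `lb`/`ub`, the greedy acceptance step, and the function name are illustrative assumptions. The individual must have at least two dimensions.

```python
import random


def vertical_crossover(x, lb, ub, objective, rng):
    """Recombine two randomly chosen dimensions of one individual, then keep the better."""
    d1, d2 = rng.sample(range(len(x)), 2)  # two distinct dimensions
    # Normalise both dimensions to [0, 1] so they are on a common scale.
    n1 = (x[d1] - lb[d1]) / (ub[d1] - lb[d1])
    n2 = (x[d2] - lb[d2]) / (ub[d2] - lb[d2])
    r = rng.random()
    child = list(x)
    # Only dimension d1 changes; map the recombined value back to its own range.
    child[d1] = lb[d1] + (r * n1 + (1 - r) * n2) * (ub[d1] - lb[d1])
    return child if objective(child) < objective(x) else list(x)


def sphere(v):
    return sum(c * c for c in v)


rng = random.Random(11)
lb, ub = [-5.0, 0.0, -1.0], [5.0, 10.0, 1.0]
x = [4.0, 9.0, -0.5]
y = vertical_crossover(x, lb, ub, sphere, rng)
print(sum(a != b for a, b in zip(x, y)) <= 1, all(l <= v <= u for v, l, u in zip(y, lb, ub)))
# True True
```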

3.4. The Pseudocode of SWRBMO Algorithm

The specific implementation steps and pseudocode of the SWRBMO algorithm are shown below:

Step 1. Initialization: Set the maximum number of iterations T, population size N, problem dimension D, balance coefficient α, and the search bounds lb and ub, and initialize other parameters as needed. Then randomly generate N individuals (magpie positions) to form the initial population.

Step 2. Best Solution Update: At the beginning of each iteration, evaluate the fitness of all individuals with the objective function and update the current best solution.

Step 3. Adaptive T-Distribution-based sinh–cosh Search Strategy: For each individual in the population, if rand < α, the position is updated using Formula (17). Otherwise, the position is updated using Formula (18).

Step 4. Neighborhood-guided Reinforcement Strategy: If rand < α, the individual’s position is updated according to Formula (19), where interactions with neighboring individuals increase diversity and reduce stagnation. Otherwise, update its position using Formula (20).

Step 5. Crossover Strategy: Perform crossover operations on the updated individuals using Formulas (21)–(23). Specifically, horizontal crossover is implemented by exchanging information between different individuals, while vertical crossover is achieved by recombining information within a single individual.

Step 6. Food Storage Update: After the crossover operation, apply the retention rule in Formula (9) and keep the best-performing solution of the current iteration to guide the subsequent search.

Step 7. Iteration Update: Increment the iteration counter and repeat Steps 2 to 6 until the maximum number of iterations T is reached.

The pseudocode of the SWRBMO algorithm is shown in Algorithm 1.

| Algorithm 1 The pseudocode of the SWRBMO algorithm |
| --- |
| Input: The dimension D, maximum number of iterations T, and population size N |
| Output: Global optimal solution |
| 1: Procedure SWRBMO |
| 2: Initialize the key parameters T, D, N, t, and α, and generate the initial population |
| 3: while t ≤ T |
| 4: Calculate the fitness of each individual |
| 5: Update the optimal solution |
| 6: Exploration: |
| 7: for i = 1: N |
| 8: if rand < α |
| 9: Modify the individual's position using Equation (17) |
| 10: else |
| 11: Modify the individual's position using Equation (18) |
| 12: end if |
| 13: Exploitation: |
| 14: if rand < α |
| 15: Modify the magpie's position using Equation (19) |
| 16: else |
| 17: Update the magpie's position using Equation (20) |
| 18: end if |
| 19: Execute the crossover strategy using Equations (21)–(23) |
| 20: end for |
| 21: Refresh the food storage using Equation (9) |
| 22: t = t + 1 |
| 23: end while |
| 24: Return the best solution |

3.5. Time Complexity Analysis

Following the complexity analysis framework of the original RBMO algorithm, RBMO's computational complexity depends on two core stages. The first is the solution initialization stage, O(N), where N is the number of search agents. The second is the solution update stage, O(T × N) + O(2 × T × N × D), where T is the maximum number of iterations and D is the problem dimension. SWRBMO follows the same framework: its initialization stage is identical, and its three new strategies are embedded in the solution update stage without changing the core complexity dependence.

In the initialization stage, SWRBMO generates N search agents in the same way as RBMO, so its time complexity remains O(N). In the solution update stage, SWRBMO retains RBMO's original operations, which contribute O(T × N) + O(2 × T × N × D). It also embeds three new strategies: adaptive T-distribution-based sinh–cosh search, neighborhood-guided reinforcement, and crossover. Each new strategy operates on N agents across D dimensions per iteration, contributing O(T × N × D), O(T × N × D), and O(1.5 × T × N × D), respectively. The solution update stage therefore costs O(T × N) + O(2 × T × N × D) + O(T × N × D) + O(T × N × D) + O(1.5 × T × N × D). Merging like terms gives O(T × N) + O(5.5 × T × N × D). Adding the initialization stage O(N), the total complexity of SWRBMO is O(N) + O(T × N) + O(5.5 × T × N × D).

By the usual rules of complexity analysis, the constant factor 5.5 and the lower-order terms O(N) and O(T × N) are ignored, since they are negligible compared with the high-order term O(T × N × D). The total complexity therefore simplifies to O(T × N × D).

Although SWRBMO adds three strategies to RBMO, its total computational complexity remains of the same order, O(T × N × D), as that of the original RBMO. The added cost appears only as a larger constant factor in the dominant term (5.5 versus 2). The improved algorithm therefore enhances performance without increasing the asymptotic computational burden, which supports its practical applicability to complex optimization problems.

4. Algorithm Performance Test and Analysis

The experimental study has two aims: to assess the effect of the adaptive T-distribution-based sinh–cosh search strategy, neighborhood-guided reinforcement strategy, and crossover strategy on RBMO, and to systematically evaluate SWRBMO. It proceeds sequentially from strategy-level validation to comprehensive performance comparison. Accordingly, the experiments were divided into two stages.

4.1. Experimental Design and Parameter Settings

4.1.1. Division of the Experimental Stages

Validation of the individual strategies: To disentangle and assess the contribution of each improvement, comparisons were performed between the original RBMO, its single-strategy enhanced variants, and the proposed SWRBMO across 15 benchmark functions from the CEC2005 test suite. In addition, ablation experiments were conducted on 12 of these functions to further substantiate the rationale for subsequent multi-strategy integration.

Comprehensive performance comparison: After validating the effectiveness of the individual strategies, the proposed SWRBMO was benchmarked against both classical and recently developed metaheuristic algorithms using the CEC2019 and CEC2021 test suites. Compared to CEC2005, these two suites incorporate more intricate function constructions and thus present more challenging optimization landscapes, making them well suited for a rigorous assessment of algorithmic performance. To further enhance statistical reliability, the Wilcoxon rank-sum test was applied across all three test suites (CEC2005, CEC2019, and CEC2021). The inclusion of CEC2005 not only provides a widely recognized historical reference but also complements the analysis, ensuring that the conclusions regarding SWRBMO’s performance are comprehensive and statistically robust.
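The sketch below implements the two-sided Wilcoxon rank-sum test for readers who want to reproduce this kind of statistical comparison. It uses the large-sample normal approximation, assigns average ranks to ties, and applies no tie correction to the variance. This is the same approximation used by SciPy's `scipy.stats.ranksums`. The sample values are synthetic placeholders standing in for 30 independent runs per algorithm, not results from this study.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, average ranks for ties)."""
    values = sorted((v, k) for k, v in enumerate(list(x) + list(y)))
    ranks = [0.0] * len(values)
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and values[j + 1][0] == values[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of a block of ties
        for k in range(i, j + 1):
            ranks[values[k][1]] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])  # rank sum of the first sample
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p


# Synthetic placeholder data: final errors of 30 runs for two hypothetical algorithms.
errors_a = [1e-3 * (k + 1) for k in range(30)]
errors_b = [0.5 + 1e-2 * k for k in range(30)]
z, p = rank_sum_test(errors_a, errors_b)
print(f"z = {z:.3f}, p = {p:.2e}, significant at 0.05: {p < 0.05}")
```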

In summary, the CEC2005, CEC2019, and CEC2021 test suites were selected so that the evaluation moves from single-strategy validation to comprehensive performance comparison, and the two experimental stages complement each other.

4.1.2. Experimental Parameters and Environment

To ensure fairness and reduce parameter-related bias, all experiments used the same settings: population size N = 30 and maximum number of iterations T = 1000. Each algorithm was run independently 30 times to mitigate stochastic effects. Experiments were carried out on 64-bit Windows 10 with an Intel Core i7-8750H CPU, 16 GB RAM, and MATLAB R2022b.

4.2. Effectiveness Analysis of the Improvement Strategy

4.2.1. Selection of Test Functions

To verify the fundamental accuracy of SWRBMO, the CEC2005 test suite (see Table 2) was employed. CEC2005 is one of the most canonical and widely cited benchmark sets in swarm intelligence research. Because its optima are known, it is particularly suitable for measuring the error to the optimum. Other benchmarks, such as the GKLS generator (ND type) [28], can generate test classes with controllable global minima, but they have been adopted less frequently in recent studies. In contrast, CEC2005 enables fair and direct comparison with a large body of related work, which is crucial for consistency and benchmarking validity.

Table 2.

CEC2005 benchmark function.

The selected 15 functions cover three representative categories. F1–F7 are unimodal functions, used to assess exploitation capability and convergence speed. F8–F13 are multimodal functions with numerous local optima, commonly used to evaluate global search ability and robustness against local entrapment. F14 and F15 are composite multimodal problems with fixed dimensions, representing more complex and challenging optimization scenarios.

For CEC2005, F1–F13 were tested at fixed dimensions of 30 and 100, while F14–F15 used their inherent dimensionality. Fixing the dimension keeps the evaluation focused on the strategies themselves and allows their stability and robustness to be validated across diverse function types.

4.2.2. Effectiveness Analysis of Different Improvement Strategies

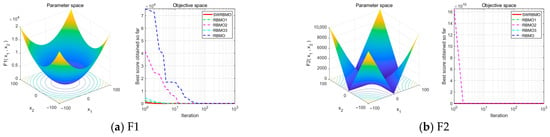

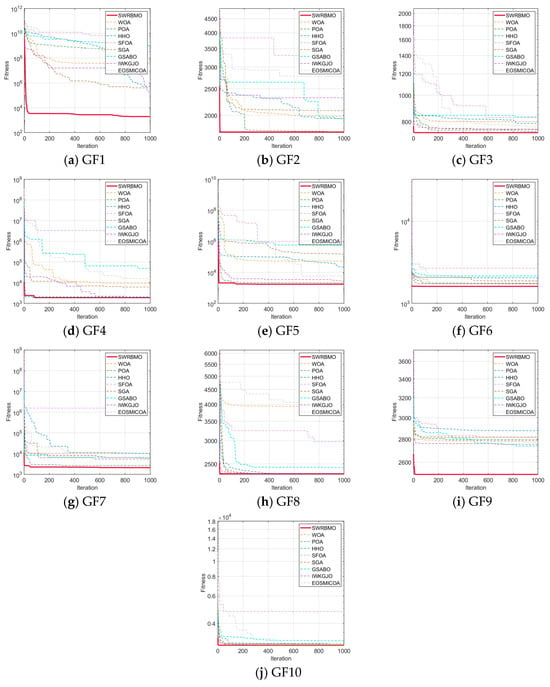

To rigorously assess the effectiveness of the proposed SWRBMO algorithm, three enhanced variants of RBMO were developed: RBMO1, incorporating the adaptive T-distribution-based sinh–cosh search strategy; RBMO2, integrating the neighborhood-guided reinforcement strategy; and RBMO3, embedding the crossover strategy. Comparative experiments were conducted among the original RBMO, its three single-strategy variants, and the full SWRBMO across 15 benchmark functions with diverse characteristics. The numerical optimization results are reported in Table 3 and Table 4, while the convergence behaviors under the 30-dimensional setting are depicted in Figure 2.

Table 3.

Results of the CEC2005 benchmark suite for 30 dimensions and 100 dimensions.

Table 4.

Results of the CEC2005 benchmark suite for fixed dimensions.

Figure 2.

Comparison of convergence curves of different improvement strategies.

Under the 30-dimensional setting, the evaluation on unimodal functions (F1–F7) reveals that all strategy-enhanced variants (RBMO1, RBMO2, and RBMO3) markedly outperform the original RBMO in terms of best values, mean values, and standard deviations. Among them, SWRBMO exhibits the most competitive overall performance, achieving faster convergence, better optima, improved mean results, and reduced standard deviations on most functions. Specifically, for functions F1–F4, SWRBMO, RBMO1, and RBMO2 all attain the theoretical optima across all metrics, while RBMO3 also improves significantly over the baseline. On the more challenging F5, SWRBMO’s best value and standard deviation are slightly inferior to those of RBMO3 and RBMO2, respectively, yet it still outperforms RBMO, reflecting the effectiveness of the integrated strategies. For F6, both SWRBMO and RBMO3 reach the theoretical optimum, highlighting the utility of the crossover strategy on more complex functions. Although SWRBMO does not reach the global optimum on F7, it still surpasses RBMO on all indicators.

The results on multimodal functions (F8–F13) further corroborate the efficacy of the proposed strategies. For F8, F12, and F13, SWRBMO and RBMO3 exhibit comparable performance, indicating that the crossover strategy is instrumental in escaping local optima. For F9–F11, SWRBMO, RBMO1, and RBMO2 reach the theoretical optima, while RBMO3 reaches the optimum on F9 and F11 and closely approaches it on F10. Collectively, these findings indicate that the integrated multi-strategy framework of SWRBMO substantially enhances both convergence efficiency and global exploration capability.

For the fixed-dimensional multimodal functions F14 and F15, SWRBMO shows only a small gap between its best and mean results, indicating stable performance and suggesting its potential applicability to more complex and challenging optimization scenarios.

When the dimension is increased to 100, the relative ranking on best values, mean values, and standard deviations varies slightly from function to function, but SWRBMO achieves better best values, mean values, and stability indicators in most cases. This indicates that the proposed strategies remain effective and stable under high-dimensional conditions.

Further analysis of the algorithm’s stability is conducted by examining the convergence curves presented in Figure 2. The iterative patterns across the benchmark functions exhibit a high degree of similarity. In particular, the SWRBMO algorithm, enhanced with multiple strategies, demonstrates nearly linear convergence trajectories on functions F1–F3, F5, F7, and F11–F15, rapidly approaching the optimal solution. This behavior highlights the algorithm’s strong global optimization capability and fast convergence speed.

Similarly, RBMO3, which incorporates the crossover strategy, also shows nearly straight-line convergence on functions F1, F2, F5, F7, and F11–F15. This further suggests that the crossover mechanism accelerates convergence and improves solution quality. For functions F4, F6, F8, F9, and F10, the convergence curves show a steep, approximately linear descent, with fitness values dropping sharply as iterations progress, indicating an ability to approach near-optimal solutions quickly while avoiding local optima.
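Convergence curves of this kind are conventionally the best-so-far fitness at each iteration, usually drawn on a logarithmic fitness axis. Assuming that convention (the axis scaling is not stated in this section), a "nearly linear" trajectory corresponds to geometric error decay, and its rate can be summarized by the least-squares slope of log10(fitness) against the iteration index. The helper names below are hypothetical.

```python
import math
from itertools import accumulate
from typing import List, Sequence


def best_so_far(fitness_per_iter: Sequence[float]) -> List[float]:
    """Convergence curve: best fitness found up to and including each iteration."""
    return list(accumulate(fitness_per_iter, min))


def log10_slope(curve: Sequence[float], floor: float = 1e-300) -> float:
    """Least-squares slope of log10(fitness) against the iteration index.

    A steady negative slope means the curve is a straight line on a
    semi-log plot, i.e. the error shrinks by a constant factor per iteration.
    Values are clipped at `floor` so that exact zeros do not break log10.
    """
    if len(curve) < 2:
        raise ValueError("need at least two points")
    ys = [math.log10(max(v, floor)) for v in curve]
    n = len(ys)
    x_mean = (n - 1) / 2.0
    y_mean = sum(ys) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(ys))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


if __name__ == "__main__":
    raw = [10.0 ** (-0.5 * t) for t in range(20)]   # synthetic geometric decay
    curve = best_so_far(raw)
    print(f"slope = {log10_slope(curve):.3f} decades per iteration")  # about -0.5
```

The `floor` clip matters in practice: once an algorithm hits a theoretical optimum of exactly 0, the log-scale curve would otherwise be undefined from that iteration on.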

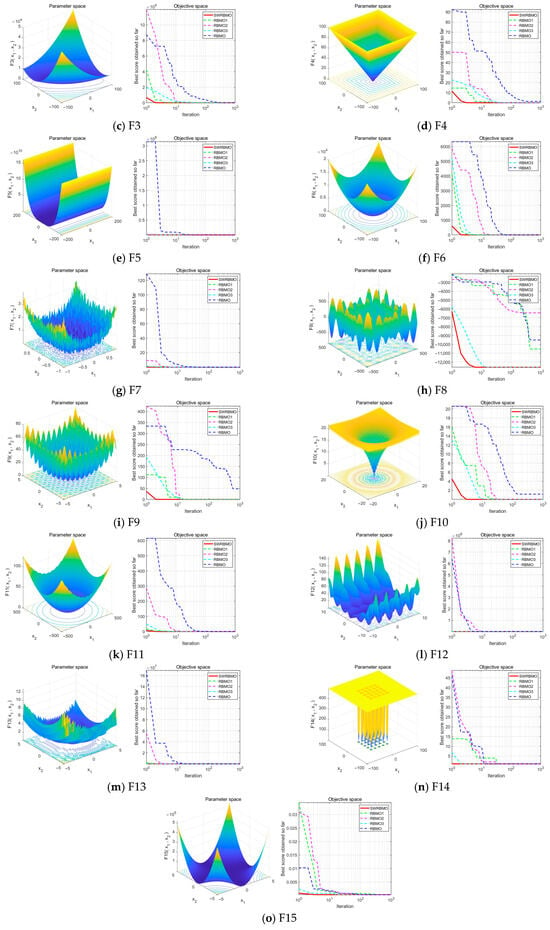

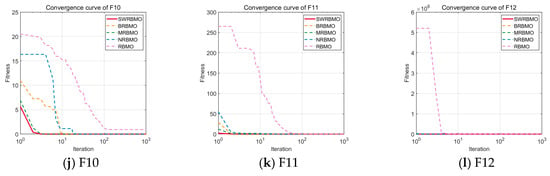

4.3. Ablation Study

To rigorously evaluate the individual contributions and potential synergistic effects of the three optimization strategies incorporated into SWRBMO, an ablation study was carried out on the first 12 benchmark functions of the CEC2005 test suite. In addition to the full SWRBMO, four algorithmic settings were considered: the baseline RBMO and three ablation variants, each obtained by removing a single strategy, namely BRBMO (without the crossover strategy), MRBMO (without the neighborhood-guided reinforcement strategy), and NRBMO (without the adaptive T-distribution-based sinh–cosh search strategy). All ablation experiments were conducted with the problem dimension set to 30.
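The single-strategy variants of Section 4.2.2 (RBMO1–RBMO3) and the leave-one-out variants used here (BRBMO, MRBMO, NRBMO) are easy to confuse. The mapping below records, following the definitions in the text, which strategies each variant enables. The string labels are hypothetical, and how each strategy is switched on inside the RBMO update loop is not shown.

```python
from typing import Dict, FrozenSet

# Hypothetical short labels for the three strategies described in the text.
TDIST_SINH_COSH = "adaptive T-distribution sinh-cosh search"
NEIGHBORHOOD = "neighborhood-guided reinforcement"
CROSSOVER = "crossover"
ALL_STRATEGIES: FrozenSet[str] = frozenset({TDIST_SINH_COSH, NEIGHBORHOOD, CROSSOVER})

# Which strategies each variant enables, following the definitions in the text.
VARIANTS: Dict[str, FrozenSet[str]] = {
    "RBMO": frozenset(),                          # baseline
    "RBMO1": frozenset({TDIST_SINH_COSH}),        # single-strategy variants
    "RBMO2": frozenset({NEIGHBORHOOD}),
    "RBMO3": frozenset({CROSSOVER}),
    "BRBMO": ALL_STRATEGIES - {CROSSOVER},        # leave-one-out variants
    "MRBMO": ALL_STRATEGIES - {NEIGHBORHOOD},
    "NRBMO": ALL_STRATEGIES - {TDIST_SINH_COSH},
    "SWRBMO": ALL_STRATEGIES,                     # full algorithm
}


def removed(variant: str) -> FrozenSet[str]:
    """Strategies of SWRBMO that the given variant does not use."""
    return ALL_STRATEGIES - VARIANTS[variant]


if __name__ == "__main__":
    for name, enabled in VARIANTS.items():
        missing = ", ".join(sorted(removed(name))) or "none"
        print(f"{name:>6}: {len(enabled)} strategies enabled; missing: {missing}")
```

Reading the two families side by side makes the experimental logic explicit: a single-strategy variant measures what one strategy adds to RBMO, while a leave-one-out variant measures what SWRBMO loses without it.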

As shown in Table 5, SWRBMO consistently outperforms or matches the performance of other algorithms across most test functions, demonstrating strong solution accuracy and robustness. In contrast, RBMO exhibits the weakest overall performance.

Table 5.

Performance comparison in ablation study.

Performance across function types: For the unimodal functions (F1–F7), all algorithms except RBMO reach the theoretical optima on F1–F4, indicating that the individual strategies have limited impact in low-complexity scenarios. However, BRBMO shows significant increases in mean error and standard deviation on F5–F7, revealing the vulnerability of the variant without the crossover strategy in moderately complex cases. For the multimodal functions (F8–F12), the performance gaps widen. BRBMO suffers severe degradation with high variability on F8 and F12, underscoring the critical role of the crossover strategy in maintaining convergence stability. SWRBMO, along with NRBMO and BRBMO, achieves theoretical or near-optimal results on multiple functions with minimal standard deviations, confirming the synergistic effects of the strategy combinations.

Insights from the ablation studies (Table 5): RBMO1 (with only the T-distribution strategy) maintains stable performance on most functions. However, it gives mediocre results on F6 and F12, where insufficient exploration leads to entrapment in local optima; this bottleneck is overcome when it is combined with the neighborhood strategy. RBMO2 (with only the neighborhood-guided reinforcement strategy) shows limited performance on F2, F4–F8, and F12, but all of its metrics improve significantly when it is combined with other strategies. RBMO3 (with only the crossover strategy) performs poorly on F2–F5, F7, F10, and F11, but its performance rises sharply once additional strategies are integrated. This illustrates the core value of ablation experiments: isolating single-strategy bottlenecks through controlled variables, quantifying the contribution of each strategy, and providing evidence for the necessity of multi-strategy integration.

In summary, the integration of multiple strategies significantly enhances the overall performance of the algorithm, underscoring the inherent advantages of complementarity and synergy among the strategies. The ablation study, conducted via controlled variable analysis and comparative evaluation, validates the effectiveness of the proposed strategy combination in solving complex optimization problems.

Based on the convergence curves of F1–F12 in Figure 3, the SWRBMO algorithm shows a clear advantage. It converges to near-optimal values faster than other methods in most cases and maintains low fluctuations after convergence, indicating excellent stability. In contrast, the ablation algorithms generally show slower convergence, lower accuracy, and less stability than SWRBMO.

Figure 3.

Iteration curves of the ablation study.

The ablation results confirm the soundness of the algorithm design in two ways. First, removing any strategy leads to performance degradation. Only the combined use of multiple strategies improves convergence speed, accuracy, and stability. Second, the ablation tests reveal the weaknesses of individual strategies. NRBMO (without the adaptive T-distribution-based sinh–cosh search strategy) has poor early exploration on unimodal functions. BRBMO (without the crossover strategy) tends to get stuck in local optima on multimodal functions. These results highlight the unique roles of each strategy. The adaptive T-distribution-based sinh–cosh search strategy enhances global exploration, while the crossover strategy maintains population diversity and prevents premature convergence. Together, they create a cooperative mechanism that compensates for the shortcomings of single strategies. This further confirms the importance and necessity of multi-strategy collaboration in the SWRBMO algorithm.

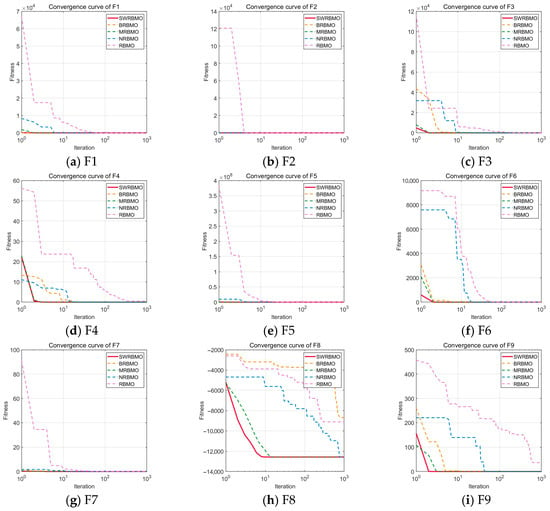

4.4. Comparison and Analysis of SWRBMO Optimization Results with Other Optimization Algorithms

To further evaluate the optimization ability and robustness of the proposed SWRBMO algorithm, the CEC2019 and CEC2021 benchmark test suites were adopted. For comparative analysis, three classical metaheuristics, two recently developed optimization algorithms, and three advanced improved algorithms are included, namely the Whale Optimization Algorithm (WOA) [13], Pelican Optimization Algorithm (POA) [29], Harris Hawks Optimization (HHO) [10], Superb Fairy-wren Optimization Algorithm (SFOA) [30], Snow Geese Algorithm (SGA) [31], Golden Sine-based Average Best Optimizer (GSABO) [32], improved Golden Jackal Optimization Algorithm (IWKGJO) [33] and Multi-Strategy Chimp Optimization Algorithm (EOSMICOA) [34]. The problem dimensionality is set to 10 for CEC2019 and 20 for CEC2021, thereby enabling evaluation under both low- and medium-dimensional scenarios.

The results in Table 6 and Table 7 show that SWRBMO outperforms the eight competing algorithms on the majority of functions, further validating its effectiveness and practicality.

Table 6.

Results on CEC2019 test suite.

Table 7.

Results on CEC2021 test function suite.

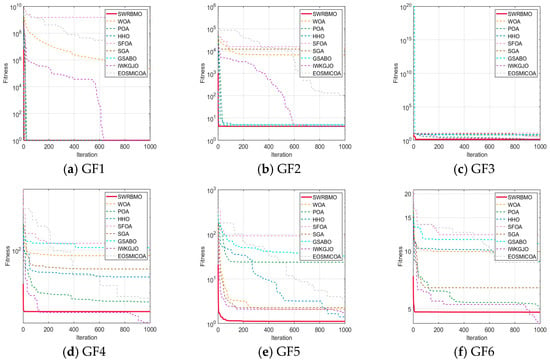

4.4.1. Performance Analysis Using the CEC2019 Test Function Suite

As shown in Table 6, SWRBMO demonstrates better overall optimization performance than the other eight advanced algorithms. Note that the theoretical global optimum of every function in the CEC2019 test suite is 1. SWRBMO is the only algorithm that reaches this value on GF1 and GF3, indicating excellent search capability. For functions GF2, GF8, and GF9, its best and mean fitness values are closer to the theoretical optimum, reflecting stronger global search ability and higher precision on medium-difficulty problems. For GF4, although SWRBMO’s best value, mean value, and standard deviation are slightly higher than those of IWKGJO, its overall performance still surpasses most other algorithms, reflecting good stability. On GF5 and GF7, SWRBMO achieves more stable results, with lower mean values and standard deviations. On GF6, its best value and standard deviation are inferior to those of IWKGJO and GSABO, respectively, but it achieves a better mean value. For GF10, IWKGJO achieves the best optimal value and mean value, while SWRBMO has a more favorable standard deviation.

Although some algorithms perform better on individual functions, SWRBMO shows more consistent advantages across most test functions. In summary, SWRBMO exhibits significant advantages in global search ability, convergence speed, and optimization precision. These results further confirm the effectiveness of its multi-strategy collaborative design.

As shown in Figure 4, SWRBMO demonstrates clear advantages in convergence speed and solution accuracy across functions GF1 to GF10. In most cases, it approaches the theoretical optimum rapidly in the early iterations, indicating strong convergence performance. On GF4, GF6, and GF8, although its best value is slightly worse than that of IWKGJO, the SWRBMO curve remains almost flat after convergence, with minimal fluctuations, which suggests that SWRBMO is more reliable in maintaining the optimal solution.

Figure 4.

Curve comparison with other optimization algorithms on CEC2019.

Overall, the SWRBMO algorithm performs well in both convergence speed and stability, highlighting its strong capability in solving complex optimization problems.

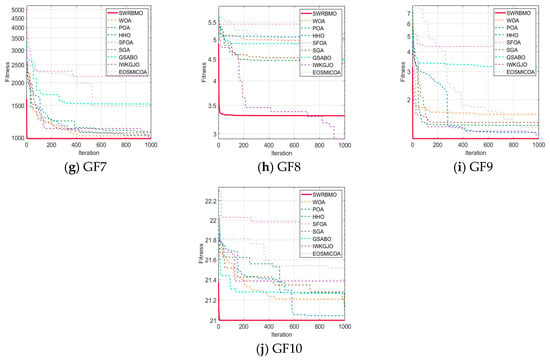

4.4.2. Performance Analysis Using the CEC2021 Test Function Suite

As shown in Table 7, SWRBMO exhibits significant advantages in optimization performance. Note that the theoretical global optimum of every function in the CEC2021 test suite is 0. On functions GF1, GF4, and GF8, SWRBMO achieves the best results on all three metrics (best value, mean value, and standard deviation), reflecting its robust overall optimization capability. On functions GF2, GF3, and GF10, SWRBMO also obtains the best optimal value and mean value, further confirming its global search ability. However, on GF5, its mean value and standard deviation are inferior to those of IWKGJO and POA, respectively. On GF6, its mean value and standard deviation are inferior to those of IWKGJO. On GF7, its mean value and standard deviation are inferior to those of POA. On GF9, its mean value and standard deviation are inferior to those of POA and EOSMICOA, respectively. These cases indicate a slight performance gap for SWRBMO in some specific scenarios.

Overall, SWRBMO demonstrates superior performance across most functions in all three key metrics. By integrating strong global exploration with stable convergence, the algorithm presents an effective and promising solution for addressing complex optimization problems.

As illustrated by the convergence curves in Figure 5, SWRBMO shows a rapid reduction in fitness values during the early iterations on all functions GF1–GF10, reaching low-fitness regions earlier than the comparative algorithms. This reflects its faster convergence toward the theoretical optimum. In particular, GF4, GF5, and GF7 show a characteristic inflection point, where a second decline in fitness follows an initial stagnation. This pattern suggests that SWRBMO can overcome premature convergence by renewing exploration after stagnating near local optima.

Figure 5.

Curve comparison with other optimization algorithms on CEC2021.

4.5. Wilcoxon Rank-Sum Test

When evaluating algorithmic performance, relying solely on indicators such as the best value, mean, and standard deviation gives an incomplete assessment. To strengthen the statistical validity of the comparisons, this study further applies the Wilcoxon rank-sum test to the results of SWRBMO and the other optimization algorithms on the CEC2005, CEC2019, and CEC2021 benchmark suites. In this analysis, the p-value measures the statistical significance of the performance differences: a p-value below 0.05 indicates a statistically significant difference between SWRBMO and the compared algorithm, whereas a p-value of 0.05 or greater implies no significant difference. For clarity, “+” indicates that SWRBMO significantly outperforms the compared algorithm, “=” indicates comparable performance, “−” indicates significantly inferior performance, and “NaN” indicates that the test cannot be computed from the data, typically because both algorithms return identical values in every run (for example, when both reach the theoretical optimum).
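The test and the “+/=/−/NaN” labelling can be sketched in a few lines of pure Python. This sketch assumes a two-sided test at α = 0.05 on the final fitness values of repeated runs, using the normal approximation with a tie correction; the software and number of runs used for Tables 8–10 are not stated in this section, and some statistical packages apply a continuity correction or an exact test for small samples, so their p-values may differ slightly. Function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def ranksum_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test via the tie-corrected normal approximation.

    Returns (z, p). Both are NaN when every observation is identical, because
    the tie-corrected variance of the rank sum is then zero.
    """
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    w = sum(ranks[:n1])                       # rank sum of sample a
    mean_w = n1 * (n + 1) / 2.0
    counts = {}
    for v in pooled:                          # sizes of tied groups
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var_w = n1 * n2 / 12.0 * ((n + 1) - ties / (n * (n - 1)))
    if var_w <= 0.0:
        return math.nan, math.nan
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2.0))    # two-sided p-value
    return z, p


def significance_label(sw: Sequence[float], other: Sequence[float],
                       alpha: float = 0.05) -> str:
    """'+', '=', '-' or 'NaN' from SWRBMO's perspective (minimization).

    ASCII '-' stands for the '−' used in the tables.
    """
    z, p = ranksum_test(sw, other)
    if math.isnan(p):
        return "NaN"
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"            # lower ranks = lower fitness = better


if __name__ == "__main__":
    sw = [0.10, 0.12, 0.09, 0.11, 0.10]
    other = [0.30, 0.25, 0.28, 0.35, 0.31]
    print(ranksum_test(sw, other), significance_label(sw, other))
```

Because a p-value alone does not say which algorithm is better, the sketch takes the direction from the sign of z: a negative z means SWRBMO's runs occupy the lower (better) ranks.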

4.5.1. Wilcoxon Rank-Sum Test on CEC2005

Table 8 reports the results of the Wilcoxon rank-sum test on the CEC2005 benchmark functions (F1–F15), which assess the statistical significance of performance differences between SWRBMO and the comparison algorithms. For most functions, the p-values fall below the 5% significance level, and those for F2, F4–F6, F8, F12, and F13 approach zero, indicating a highly significant advantage for SWRBMO. Conversely, the relatively larger p-values for F9, F10, and F11 suggest that the performance differences on these functions are not statistically significant. Overall, these results show that SWRBMO achieves statistically superior optimization performance on the majority of CEC2005 functions.

Table 8.

Results of the rank-sum test on CEC2005.

4.5.2. Wilcoxon Rank-Sum Test on CEC2019

The results in Table 9 show that, for the CEC2019 test suite, most p-values obtained from the Wilcoxon rank-sum test are below 5%, indicating significant performance differences between SWRBMO and the compared algorithms. In particular, for functions GF2, GF3, GF5, GF6, GF8, and GF10, the p-values are nearly zero, demonstrating a highly significant advantage of SWRBMO. It is worth noting that only on GF1 does the relatively higher p-value suggest no significant difference, whereas on all other functions, SWRBMO consistently achieves statistically significant improvements. Overall, these results highlight the statistical superiority of SWRBMO and its strong potential for solving complex optimization problems.

Table 9.

Results of the rank-sum test on CEC2019.

4.5.3. Wilcoxon Rank-Sum Test on CEC2021

As shown in Table 10, the rank-sum results on the CEC2021 test suite differ depending on the compared algorithm. Against SFOA and SGA, SWRBMO shows statistically significant differences on all functions GF1–GF10, highlighting its consistency and competitive advantage. Against WOA, POA, HHO, GSABO, and EOSMICOA, the performance differences are statistically significant on most test functions. Notably, against the high-performing IWKGJO, SWRBMO shows smaller performance differences on GF1, GF2, GF3, and GF8, where the two algorithms perform comparably.

Table 10.

Results of the rank-sum test on CEC2021.

5. Engineering Applications of SWRBMO

In the field of mechanical optimization, various engineering problems are closely intertwined with mathematical modeling. As highlighted in references [35,36], the construction of an effective optimization model primarily involves the identification of design variables, the definition of the objective function, and the systematic formulation of constraint conditions.

To validate the effectiveness and applicability of the proposed multi-strategy enhanced Red-billed Blue Magpie Optimization algorithm (SWRBMO) in solving engineering design problems, four representative case studies are selected: the robot gripper problem, the industrial refrigeration system problem, the reinforced concrete beam design problem, and the step cone pulley problem. Each problem is mathematically modeled, with constraints handled by a penalty function approach, allowing a rigorous evaluation of the algorithm’s optimization capabilities.

The performance of SWRBMO is benchmarked against six well-known optimization algorithms: the Whale Optimization Algorithm (WOA) [13], Harris Hawks Optimization (HHO) [10], the Subtraction-Average-Based Optimizer (SABO) [37], the Osprey Optimization Algorithm (OOA) [38], the rime optimization algorithm (RIME) [39], and the Red-billed Blue Magpie Optimizer (RBMO) [17]. All algorithms are run under identical conditions, with a maximum of T = 1000 iterations and a population size of N = 30.

5.1. Robot Gripper Problem

The optimization of robotic grippers poses a complex problem in the field of mechanical engineering. The main goal is to reduce the force disparity—specifically, the gap between the maximum and minimum forces—within a defined displacement interval at the gripper’s endpoint. The design process incorporates seven continuous decision variables and must comply with seven nonlinear constraints. The mathematical representation of this problem is given below:

Minimize

Constraint condition:

where

Variable interval:

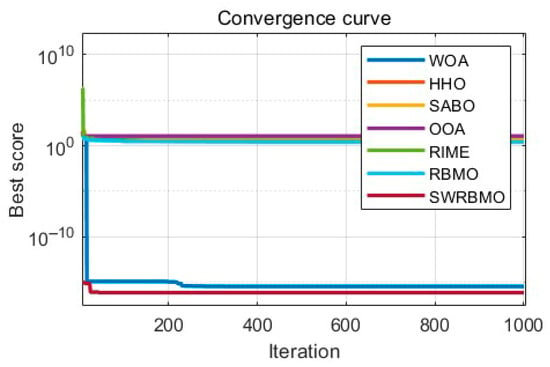

As shown in Table 11, SWRBMO achieves the best result with the smallest deviation, 7.27 × 10⁻¹⁷. Compared with HHO, SABO, OOA, RIME, and RBMO, this is an improvement of nearly 99%, and even compared with the best-performing competitor, WOA, SWRBMO improves the result by approximately 78.8%. Figure 6 shows that SWRBMO converges faster and more stably in the early iterations, allowing it to approach the optimal design more efficiently. These results indicate that SWRBMO has clear advantages on the robot gripper optimization problem.
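The improvement percentages quoted for the engineering problems are not defined explicitly in the text. A common convention for minimization, assumed in the sketch below, is the relative reduction (f_ref − f_new)/|f_ref| × 100; averaging this quantity over the competitors and measuring the gap in decades with log10 are likewise illustrative assumptions, not the authors' stated procedure.

```python
import math
from typing import Sequence


def improvement_pct(f_new: float, f_ref: float) -> float:
    """Relative reduction of a minimization objective, in percent.

    Assumed definition: (f_ref - f_new) / |f_ref| * 100.
    """
    if f_ref == 0.0:
        raise ValueError("reference value must be non-zero")
    return (f_ref - f_new) / abs(f_ref) * 100.0


def mean_improvement_pct(f_new: float, refs: Sequence[float]) -> float:
    """Average of the per-competitor improvements (one assumed aggregation)."""
    return sum(improvement_pct(f_new, r) for r in refs) / len(refs)


def orders_of_magnitude(f_new: float, f_ref: float) -> float:
    """Gap in decades between two positive objective values."""
    return math.log10(f_ref / f_new)


if __name__ == "__main__":
    # Illustrative numbers only, not values from Tables 11-14.
    print(improvement_pct(1.0, 4.0))               # 75.0
    print(mean_improvement_pct(1.0, [2.0, 4.0]))   # 62.5
    print(improvement_pct(1.0, 1e6))               # approaches 100 as the gap widens
```

Under this definition the percentage saturates at 100% as the gap grows, which is why a result many orders of magnitude below a competitor's, as reported for the step cone pulley problem, corresponds to an improvement close to 100%.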

Table 11.

Optimization results of robot gripper problem.

Figure 6.

Convergence curve for robot gripper problem.

5.2. Industrial Refrigeration System Problem

The problem aims to minimize the total annual cost (TAC) of the system, considering capital investment and operational expenses. This is a complex nonlinear programming problem involving both continuous and discrete decision variables. The system consists of multiple refrigeration stages and involves decision-making in areas such as compressor capacity, heat exchanger sizing, and refrigerant flow distribution. The mathematical model incorporates thermodynamic performance constraints, equipment selection rules, and energy balance equations.

Minimize

Subject to

With bounds

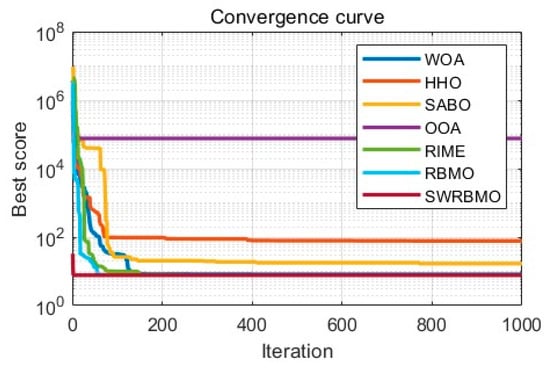

The results in Table 12 show that SWRBMO outperforms the other six algorithms; on average, its result improves on those of the other algorithms by approximately 38.4%. Figure 7 further shows that SWRBMO converges faster and more stably. These characteristics indicate that SWRBMO is practical for optimization problems such as industrial refrigeration system design, providing an efficient and precise solution for such complex scenarios.

Table 12.

Optimization results of industrial refrigeration system design problem.

Figure 7.

Convergence curve for industrial refrigeration system problem.

5.3. Reinforced Concrete Beam Design Problem

The reinforced concrete beam design problem aims to minimize the total structural cost while ensuring structural safety and compliance with design standards. To achieve the lowest total cost, the area of reinforcement, the beam width, and the beam height must be determined, with the height-to-width ratio of the beam restricted to no more than 4. The problem is subject to several nonlinear constraints. Given the nonlinear behavior of reinforced concrete and the multi-constraint nature of the problem, it serves as a practical and challenging benchmark for evaluating heuristic optimization algorithms.

Minimize

Subject to

With bounds

To handle inequality constraints, this paper uses a penalty function method. Specifically, the constraint violation is incorporated into the objective function. The augmented penalty function is as follows.

where the constraint-violation term is weighted by a penalty coefficient, and the violation term is calculated as follows.

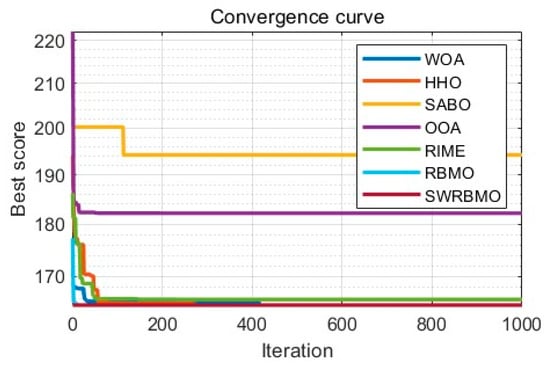
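The penalty-function approach used for the engineering problems can be sketched as follows. The paper's exact penalty expression and coefficient are those given in its own equation; this sketch assumes a common static quadratic penalty, with constraints written as g_i(x) ≤ 0 and an illustrative coefficient of 10⁶. Only the height-to-width limit of 4 comes from the text; the cost function and the variable ordering [area, width, height] are hypothetical placeholders.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]   # convention: feasible when g(x) <= 0


def penalized(f: Callable[[Vector], float], constraints: List[Constraint],
              coeff: float = 1e6) -> Callable[[Vector], float]:
    """Static quadratic penalty: F(x) = f(x) + coeff * sum(max(0, g_i(x))**2)."""
    def wrapped(x: Vector) -> float:
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return f(x) + coeff * violation
    return wrapped


def placeholder_cost(x: Vector) -> float:
    """Hypothetical objective (not the paper's cost model): width * height."""
    return x[1] * x[2]


def height_to_width(x: Vector) -> float:
    """Ratio limit from the text, h / b <= 4, written as g(x) = h / b - 4 <= 0."""
    return x[2] / x[1] - 4.0


if __name__ == "__main__":
    F = penalized(placeholder_cost, [height_to_width])
    print(F([1.0, 3.0, 6.0]))    # feasible (ratio 2): no penalty
    print(F([1.0, 2.0, 10.0]))   # infeasible (ratio 5): penalty added
```

Wrapping the objective this way lets an unconstrained optimizer such as SWRBMO be applied unchanged; the coefficient must be large enough that infeasible designs never look cheaper than feasible ones.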

As shown in Table 13, for the reinforced concrete beam design problem, SWRBMO’s results differ only slightly from those of the comparative algorithms (WOA, HHO, RIME, and RBMO), but it still holds a quantifiable advantage: its overall average result improves by 2.4%. Although this difference seems small in isolation, in large-scale projects such as large bridges and buildings it can translate into material cost savings or structural performance improvements. Figure 8 further shows that SWRBMO converges faster and more stably in the early iterations, significantly outperforming the other methods, which is a key advantage in engineering settings with limited design time or iteration budgets.

Table 13.

Optimization results of reinforced concrete beam design problem.

Figure 8.

Convergence curve for reinforced concrete beam design problem.

5.4. Step Cone Pulley Problem

The step cone pulley optimization problem focuses on minimizing the weight of a four-step cone pulley by adjusting five design variables. Among these variables, one defines the pulley’s width, while the remaining four represent the diameters of each individual step. The optimization process is subject to eight nonlinear constraints and three linear constraints, which collectively define the feasible range for the design variables. The mathematical formulation of this problem is presented below.

Minimize

where

Constant values:

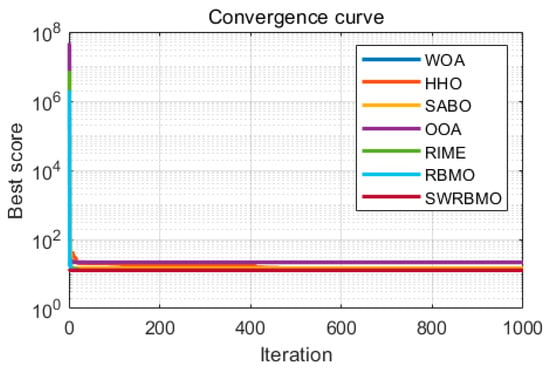

Table 14 shows that SWRBMO achieves the minimum weight for the step cone pulley, 17.1, which is far lower than the values obtained by the other algorithms. Compared with WOA, HHO, SABO, OOA, RIME, and RBMO, SWRBMO’s result is on average 85 orders of magnitude lower, corresponding to an improvement close to the theoretical limit of 100%. As depicted in Figure 9, SWRBMO consistently outperforms the other methods in both convergence accuracy and speed. This demonstrates that SWRBMO has substantial potential for practical applications, especially in mechanical engineering.

Table 14.

Optimization results of step cone pulley problem.

Figure 9.

Convergence curve for step cone pulley problem.

6. Conclusions

This study presents a multi-strategy improved Red-billed Blue Magpie Optimization Algorithm (SWRBMO) that aims to improve the effectiveness and adaptability of RBMO in solving complex optimization problems. The algorithm incorporates three strategies: an adaptive T-distribution-based sinh–cosh search strategy to accelerate convergence, a neighborhood-guided reinforcement strategy to help avoid local optima, and a crossover strategy to enhance population diversity and accuracy.

To evaluate the performance of SWRBMO, a series of comprehensive experiments was conducted. The effectiveness of the individual strategies was first validated on 15 standard benchmark functions. Ablation studies were then performed, which not only gave further insight into the contribution of each strategy but also examined the synergistic effects of combining them, showing how they complement and enhance one another within the algorithm framework. SWRBMO was subsequently tested on the CEC2019 and CEC2021 test suites and compared with eight representative algorithms: the commonly used swarm intelligence algorithms WOA, POA, and HHO; the recently proposed SFOA and SGA; and the improved algorithms GSABO, IWKGJO, and EOSMICOA. The results show that SWRBMO achieves better accuracy and convergence on most test functions. Furthermore, the results on all three test suites (CEC2005, CEC2019, and CEC2021) were subjected to the Wilcoxon rank-sum test, which confirmed that in most cases SWRBMO has a statistically significant advantage over the compared algorithms, providing reliable empirical evidence of its optimization capability.

To further verify its practical applicability, SWRBMO was applied to four real-world engineering optimization problems: the robot gripper problem, the industrial refrigeration system problem, the reinforced concrete beam design problem, and the step cone pulley problem. Although the optimal objective values obtained by SWRBMO are sometimes similar to those achieved by other algorithms, its main advantages lie in faster convergence, greater solution diversity, and higher accuracy. Nevertheless, the application of SWRBMO to more complex scenarios still requires further investigation. Future research will aim to address these limitations by exploring engineering problems with higher complexity, dimensionality, constraints, or dynamic characteristics, and by extending the algorithm’s validated performance advantages to challenging fields such as wind power generation, intelligent construction, and disaster prevention.

Author Contributions

Conceptualization, Y.L.; software, J.Z.; validation, J.Z., X.W. and B.S.; formal analysis, Y.L. and J.Z.; resources, X.W. and B.S.; data curation, J.Z.; writing—review and editing, J.Z.; writing—review and editing, Y.L. and J.Z.; supervision, X.W.; project administration, B.S.; funding acquisition, Y.L.; All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (grant no. 52278171).

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to thank the anonymous reviewers for helping us improve this paper’s quality. Thanks to the National Natural Science Foundation of China (grant no. 52278171).

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Rahkar, F.T. Battle Royale Optimization Algorithm. Neural Comput. Appl. 2021, 33, 1139–1157. [Google Scholar] [CrossRef]

- Li, J.-Y.; Zhan, Z.-H.; Tan, K.C.; Zhang, J. A Meta-Knowledge Transfer-Based Differential Evolution for Multitask Optimization. IEEE Trans. Evol. Comput. 2022, 26, 719–734. [Google Scholar] [CrossRef]

- Yankai, W.; Shilong, W.; Dong, L.; Chunfeng, S.; Bo, Y. An Improved Multi-Objective Whale Optimization Algorithm for the Hybrid Flow Shop Scheduling Problem Considering Device Dynamic Reconfiguration Processes. Expert Syst. Appl. 2021, 174, 114793. [Google Scholar] [CrossRef]

- Yang, B.; Liang, B.; Qian, Y.; Zheng, R.; Su, S.; Guo, Z.; Jiang, L. Parameter Identification of PEMFC via Feedforward Neural Network-Pelican Optimization Algorithm. Appl. Energy 2024, 361, 122857. [Google Scholar] [CrossRef]

- Song, X.; Ma, H.; Zhang, Y.; Gong, D.; Guo, Y.; Hu, Y. A Streaming Feature Selection Method Based on Dynamic Feature Clustering and Particle Swarm Optimization. IEEE Trans. Evol. Comput. 2024. [Google Scholar] [CrossRef]

- Storn, R.; Price, K. Differential Evolution—A Simple and Efficient Heuristic for Global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948. [Google Scholar] [CrossRef]

- Xue, J.; Shen, B. A Novel Swarm Intelligence Optimization Approach: Sparrow Search Algorithm. Syst. Sci. Control. Eng. 2020, 8, 22–34. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris Hawks Optimization: Algorithm and Applications. Future Gener. Comput. Syst. 2019, 97, 849–872. [Google Scholar] [CrossRef]

- Arora, S.; Singh, S. Butterfly Optimization Algorithm: A Novel Approach for Global Optimization. Soft Comput. 2019, 23, 715–734. [Google Scholar] [CrossRef]

- Hayyolalam, V.; Pourhaji Kazem, A.A. Black Widow Optimization Algorithm: A Novel Meta-Heuristic Approach for Solving Engineering Optimization Problems. Eng. Appl. Artif. Intell. 2020, 87, 103249. [Google Scholar] [CrossRef]

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67. [Google Scholar] [CrossRef]

- Ye, M.; Zhou, H.; Yang, H.; Hu, B.; Wang, X. Multi-Strategy Improved Dung Beetle Optimization Algorithm and Its Applications. Biomimetics 2024, 9, 291. [Google Scholar] [CrossRef] [PubMed]

- Yu, H.; Chung, C.Y.; Wong, K.P.; Lee, H.W.; Zhang, J.H. Probabilistic Load Flow Evaluation With Hybrid Latin Hypercube Sampling and Cholesky Decomposition. IEEE Trans. Power Syst. 2009, 24, 661–667. [Google Scholar] [CrossRef]

- Fu, H.; Liu, H. Improved sparrow search algorithm with multi-strategy integration and its application. Control. Decis. 2022, 37, 87–96.

- Fu, S.; Li, K.; Huang, H.; Ma, C.; Fan, Q.; Zhu, Y. Red-Billed Blue Magpie Optimizer: A Novel Metaheuristic Algorithm for 2D/3D UAV Path Planning and Engineering Design Problems. Artif. Intell. Rev. 2024, 57, 134.

- El-Fergany, A.A.; Agwa, A.M. Red-Billed Blue Magpie Optimizer for Electrical Characterization of Fuel Cells with Prioritizing Estimated Parameters. Technologies 2024, 12, 156.

- Lu, B.; Xie, Z.; Wei, J.; Gu, Y.; Yan, Y.; Li, Z.; Pan, S.; Cheong, N.; Chen, Y.; Zhou, R. MRBMO: An Enhanced Red-Billed Blue Magpie Optimization Algorithm for Solving Numerical Optimization Challenges. Symmetry 2025, 17, 1295.

- Sharma, A. Improved Red-Billed Blue Magpie Optimizer for Unmanned Aerial Vehicle Path Planning. In Proceedings of the 2024 International Conference on Computational Intelligence and Network Systems (CINS), Dubai, United Arab Emirates, 28–29 November 2024; pp. 1–6.

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Morozov, A.Y.; Kuzenkov, O.A.; Sandhu, S.K. Global Optimisation in Hilbert Spaces Using the Survival of the Fittest Algorithm. Commun. Nonlinear Sci. Numer. Simul. 2021, 103, 106007.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Bai, J.; Li, Y.; Zheng, M.; Khatir, S.; Benaissa, B.; Abualigah, L.; Abdel Wahab, M. A Sinh Cosh Optimizer. Knowl.-Based Syst. 2023, 282, 111081.

- Wang, Y.; Cheng, P. Whale Optimization Algorithm with Improved Multi-Strategy. Comput. Eng. Appl. 2024, 61, 83.

- Liu, K.; Zhao, L.L.; Wang, H. Whale Optimization Algorithm Based on Elite Opposition-Based and Crisscross Optimization. J. Chin. Comput. Syst. 2020, 41, 2092–2097.

- Gaviano, M.; Kvasov, D.E.; Lera, D.; Sergeyev, Y.D. Software for generation of classes of test functions with known local and global minima for global optimization. ACM Trans. Math. Softw. 2003, 29, 469–480.

- Trojovský, P.; Dehghani, M. Pelican Optimization Algorithm: A Novel Nature-Inspired Algorithm for Engineering Applications. Sensors 2022, 22, 855.

- Jia, H.; Zhou, X.; Zhang, J.; Mirjalili, S. Superb Fairy-Wren Optimization Algorithm: A Novel Metaheuristic Algorithm for Solving Feature Selection Problems. Clust. Comput. 2025, 28, 1–62.

- Tian, A.-Q.; Liu, F.-F.; Lv, H.-X. Snow Geese Algorithm: A Novel Migration-Inspired Meta-Heuristic Algorithm for Constrained Engineering Optimization Problems. Appl. Math. Model. 2024, 126, 327–347.

- Li, Y.; Yu, Q.; Du, Z. Sand Cat Swarm Optimization Algorithm and Its Application Integrating Elite Decentralization and Crossbar Strategy. Sci. Rep. 2024, 14, 8927.

- Li, Y.; Yu, Q.; Wang, Z.; Du, Z.; Jin, Z. An Improved Golden Jackal Optimization Algorithm Based on Mixed Strategies. Mathematics 2024, 12, 1506.

- Huang, Q.; Liu, S.; Li, M.; Guo, Y. Multi-strategy chimp optimization algorithm and its application of engineering problem. Comput. Eng. Appl. 2022, 58, 174–183.

- Zhong, R.; Yu, J.; Zhang, C.; Munetomo, M. SRIME: A Strengthened RIME with Latin Hypercube Sampling and Embedded Distance-Based Selection for Engineering Optimization Problems. Neural Comput. Appl. 2024, 36, 6721–6740.

- Kumar, A.; Wu, G.; Ali, M.Z.; Mallipeddi, R.; Suganthan, P.N.; Das, S. A Test-Suite of Non-Convex Constrained Optimization Problems from the Real-World and Some Baseline Results. Swarm Evol. Comput. 2020, 56, 100693.

- Trojovský, P.; Dehghani, M. Subtraction-Average-Based Optimizer: A New Swarm-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 149.

- Dehghani, M.; Trojovský, P. Osprey Optimization Algorithm: A New Bio-Inspired Metaheuristic Algorithm for Solving Engineering Optimization Problems. Front. Mech. Eng. 2023, 13, 8775.

- Su, H.; Zhao, D.; Heidari, A.A.; Liu, L.; Zhang, X.; Mafarja, M.; Chen, H. RIME: A Physics-Based Optimization. Neurocomputing 2023, 532, 183–214.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).