1. Introduction

Biomimetics is an interdisciplinary field devoted to the study and imitation of biological organisms and systems, with the aim of developing bioinspired solutions to a wide range of complex problems. Biomimetics research has found applications in many fields of science and engineering, including the design of bioinspired mechanisms, sensors and materials, the imitation/modeling of self-organized and cooperative behavior, robotics design, and biomedicine, among others. One of the most important developments to have arisen from biomimetics research is the family of Metaheuristic Optimization Algorithms (MOAs). MOAs (also known as Nature-Inspired Metaheuristics or bio-inspired algorithms) are heuristic computational methods designed to mimic some natural behavior, mechanism, or law and apply it to solve optimization problems [1]. Many different MOAs have been developed based on a wide variety of phenomena, including natural (Darwinian) evolution (with techniques such as Differential Evolution (DE), Genetic Algorithms (GA), and Evolution Strategies (ES)), swarm behavior (with methods such as Particle Swarm Optimization (PSO), Grey Wolf Optimizer (GWO), Social Spider Optimization (SSO), and many others), and even the laws of physics (with algorithms such as the Gravitational Search Algorithm (GSA), States of Matter Search (SMS), or Simulated Annealing (SA), to name a few). Over the last two decades, MOAs have been successfully applied to a plethora of real-world problems from many different areas, such as engineering design, image processing and computer vision, communications, networks, energy/power management, machine learning, data science, medical diagnosis assistance, and robotics [1].

Video surveillance is a very popular research topic, which is not surprising considering its importance for both security and crime deterrence in indoor/outdoor environments. Video surveillance requires the distribution of several image sensors within the target surveillance space (SS). The placement of these devices should aim at optimal coverage of the SS; that is, the deployed sensors (camera network) should be able to “observe” as much of the environment as possible at all times [2].

Optimal Camera Placement (OCP) is an optimization problem that consists of finding an optimal number of image sensors and their locations so that they achieve maximum coverage of the SS while satisfying other task-specific constraints (e.g., maximum number of available sensors, spatial resolution requirements, area visibility time, etc.). OCP can become quite challenging depending on the characteristics of the SS, as well as on the number, type, and specifications of the available image sensors. Cameras used for surveillance can be classified into three types: 1. Fixed Perspective (FP), 2. Pan-Tilt-Zoom (PTZ), and 3. Omnidirectional. An FP camera consists of an image sensor whose field of view (FoV) is modeled as a frustum (a pyramid-like shape); once mounted, such cameras usually keep a fixed position, orientation, and focal length. FP sensors are often preferred over other camera types due to their low cost and ease of implementation, particularly for indoor surveillance. PTZ cameras, on the other hand, also have a frustum-shaped FoV, but its orientation and focal length can be adjusted thanks to their ability to rotate horizontally (Pan) and vertically (Tilt) and to perform optical zoom (up to 40× for most modern cameras). PTZs are often deployed for surveillance in large outdoor environments, such as construction sites or highways, although they can also be placed in indoor locations, where security staff can control their movement remotely. While PTZs have useful features, they may suffer from issues such as command latency (during remote operation) and gaps in security coverage (due to the use of automatic preset motions or when auto-tracking errors occur). Finally, there are omnidirectional cameras (OCs), which are mostly used in large interior spaces. The main feature of OCs is their ultra-wide-angle lens, which can provide viewing angles ranging from 180° up to 360°.

The images produced by OCs are usually circular and distorted (which can be corrected by using appropriate de-warping software) and have relatively high resolution (2 to 5 megapixels in most commercial models, although higher resolutions are also available). Unlike PTZs, the FoV of an OC usually remains fixed on a certain region of interest (ROI). While this may be a limitation compared to PTZs, it is largely compensated by their wider FoV (which helps prevent security gaps) and their lack of moving parts (which eliminates failures due to mechanical wear). In addition, OCs are usually smaller and more discreet than PTZs, and thus more difficult to spot by the human eye [2].

Recently, a wide range of OCP solutions oriented to specific surveillance tasks have been proposed. Among these approaches, the use of MOAs for the OCP problem is of special interest. For example, in [3], FP cameras are randomly scattered within a ROI, and then PSO is deployed to find a set of sensor orientations that maximizes the coverage of the camera network. This approach was compared against the Potential Field-based Coverage Enhancing Algorithm (PFCEA), achieving better coverage. In [4], a variant of Binary Particle Swarm Optimization (BPSO) known as BPSO Inspired Probability (BPSO-IP) was proposed for the automated placement of FP camera networks, with emphasis on both locations and orientations. In that study, the authors compared the performance of BPSO-IP against other well-known BPSO-based techniques, as well as against methods such as binary GA (BGA), SA, and Tabu Search (TB), achieving good results in terms of both deployment cost and coverage. In [5], an OCP approach based on a variant of SA known as Trans-Dimensional Simulated Annealing (TDSA) was presented and compared against camera placement methods based on Binary Integer Programming (BIP) and two other heuristics. Both omnidirectional and FP cameras were considered in the experiments, and the results show that TDSA can deliver placement configurations that maximize coverage with fewer sensors, making it more attractive than the other methods. In [6], a GA variant called Guided Genetic Algorithm (GGA) is applied to OCP in multi-camera motion capture systems. In this approach, a distribution/estimation technique is first applied to restrict the search space prior to GGA initialization. Furthermore, an error metric (optimization function) is proposed to evaluate the quality of sensor placement in the camera network. This approach was compared with one based on the traditional GA, demonstrating better performance. In [7], an approach for minimizing the total cost of camera placement for bridge surveillance was presented. There, the authors apply a greedy algorithm and GA, along with a visibility test, to explore possible solutions effectively. In addition, a heuristic called Uniqueness score with Local Search Algorithm (ULA) was developed and compared against the greedy algorithm and GA, outperforming them in terms of average cost, coverage, and computation time. In [8], an OCP approach for the surveillance of indoor work areas based on GWO was proposed. Its objective was to place three PTZ cameras within a certain SS (priority area) so as to maximize information content and minimize the effects of occlusions caused by randomly moving objects. This approach was compared against methods based on GA and PSO, proving preferable to both in terms of computational complexity and convergence speed. In [9], an improved GA was proposed for OCP in metro station construction sites, with the aim of deploying a minimum number of PTZ sensors while achieving total coverage of the construction site and tackling occlusions caused by the various dynamic elements present during construction.

The current literature suggests that a significant amount of research has been devoted to MOA-based OCP for either FP or PTZ cameras; in contrast, works on the MOA-assisted optimal placement of OCs seem to be scarce. To address this research gap, in this paper we present a comparative study of several popular MOAs applied to the OCP of OCs in indoor environments. The aim of this comparison is to observe the performance of different MOAs in several OCP tasks. For our experiments, we first model the OCP problem in terms of sensor characteristics (particularly FoV) and the target SS (including sources of occlusion such as walls or columns), and then MOAs are applied to deliver an optimal set of locations for a prespecified number of image sensors. Our comparisons include camera placement configurations delivered by Particle Swarm Optimization (PSO) [10,11], Grey Wolf Optimizer (GWO) [12,13], Social Spider Optimization (SSO) [14,15], Gravitational Search Algorithm (GSA) [16,17], States of Matter Search (SMS) [18], Simulated Annealing (SA) [19], Differential Evolution (DE) [20,21], Genetic Algorithms (GA) [22,23], and Covariance Matrix Adaptation Evolution Strategy (CMA-ES) [24].

The rest of this paper is organized as follows. In Section 2, we present the problem formulation for OCP. In Section 3, a review of metaheuristic optimization algorithms emphasizing their main attributes is presented. In Section 4, we present our experimental results. Finally, in Section 5, conclusions are drawn.

2. Problem Formulation

The main objective in OCP is to maximize the amount of area observed by a camera network (set of image sensors) within a target surveillance space (SS). For a 2-D SS, the placement configuration of the camera network may be expressed as

$$Z = \{z_1, z_2, \ldots, z_N\}, \tag{1}$$

where each pair $z_j = (x_j, y_j)$ denotes the location of the $j$-th camera within the 2-D space, while $N$ stands for the number of available image sensors. Since the amount of area observed by the camera network depends on both the structure of the SS and the locations of the visual sensors themselves, the optimization problem to solve may be expressed as follows:

$$\max_{Z} \; f(Z) = \frac{\mathcal{A}\big(C(Z)\big)}{\mathcal{A}\Big(P \setminus \bigcup_{h=1}^{m} H_h\Big)}, \tag{2}$$

where $\mathcal{A}(\cdot)$ is the area operator. Furthermore, $P$ denotes the main polygon describing the target SS (deployment area), while $H_1, \ldots, H_m$ denote $m$ simple holes (polygons within the SS representing potential sources of visual obstruction, such as walls, columns, furniture, etc.). Also, $C(Z)$ is the polygon representing the area of the surveillance space that is observed by the camera network, as given by

$$C(Z) = \bigcup_{j=1}^{N} C_j, \tag{3}$$

where $C_j$ is the polygon representing the area within the SS that is observed by the $j$-th camera according to its respective location $z_j$ and sensor parameters/restrictions $\mathcal{S}_j$, as given by

$$C_j = V(z_j) \cap F(z_j, \mathcal{S}_j), \tag{4}$$

with

denoting the visibility polygon of the visual sensor (extension of the SS that is visible from a certain location when assuming a full 360° field of view, unlimited spatial resolution and infinite depth of field) computed at point

, whereas

stands for the polygon describing the default field of view (FoV) of a camera sensor placed at point

and regarding its corresponding set of characteristics

, which may include properties such as orientation (as in the case of Fixed or PTZ cameras) or minimum required resolution (which may be relevant for tasks such as detection and/or recognition). For example, if we consider a PTZ camera placed for human recognition purposes within a 2-D SS, its default FoV will be determined by the orientation angle

(azimuth) of its viewing frustum at point

, as well as the maximum distance

at which the minimum resolution required for recognition is satisfied (see

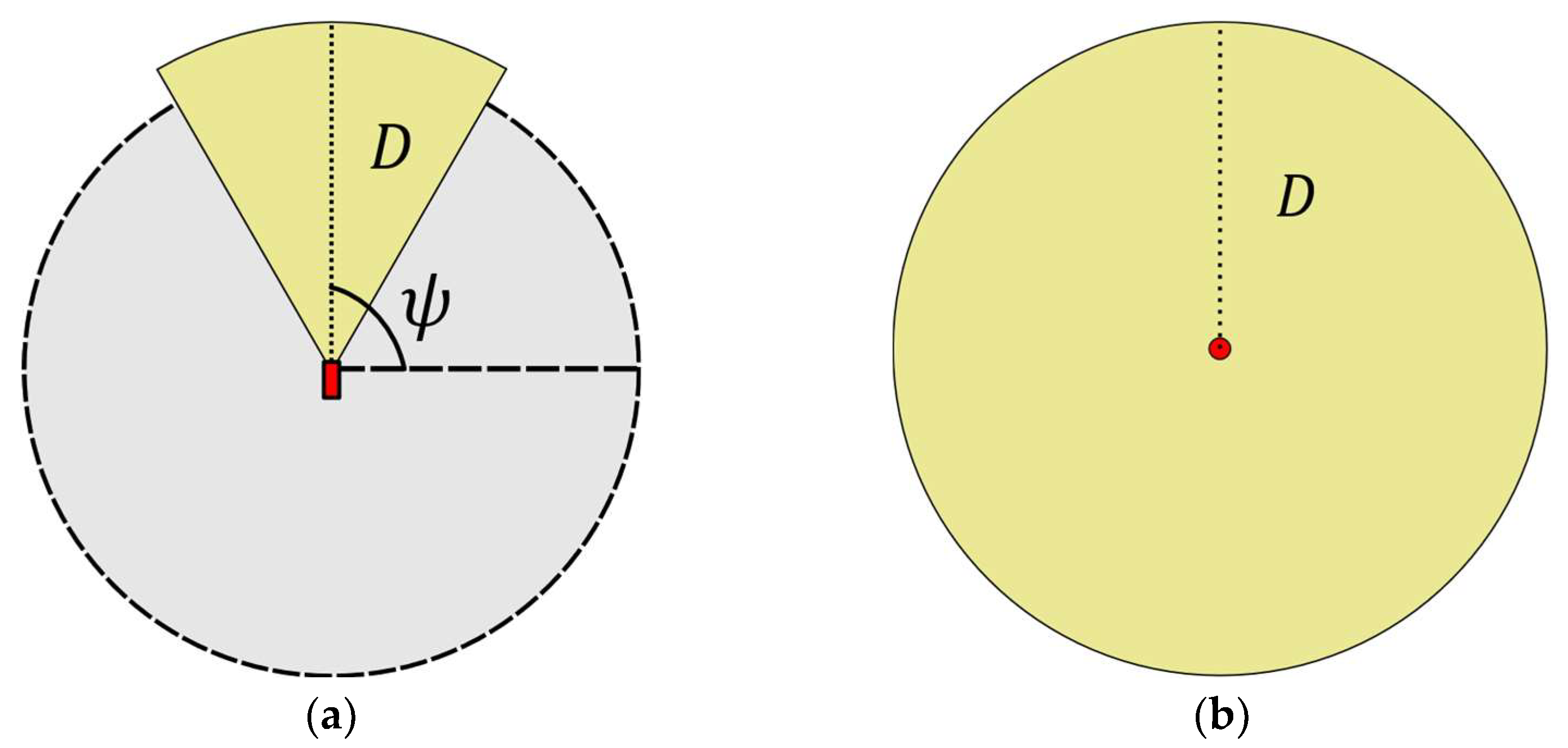

Figure 1a). On the other hand, if an omnidirectional visual sensor is considered instead, then its default FoV will be given as a circular region of radius

centered a point z (see

Figure 1b).
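The two default FoV models described above can be sketched as point-membership tests. This is a minimal illustration, not the authors' implementation: the PTZ frustum is approximated in 2-D as a circular sector, its angular half-width `half_aperture` is a hypothetical parameter that the text does not specify, and `d_max` stands for the maximum recognition distance.

```python
import math

def in_omni_fov(z, q, d_max):
    """Omnidirectional camera: the default FoV is a disk of radius d_max centred at z."""
    return math.dist(z, q) <= d_max

def in_ptz_fov(z, q, azimuth, half_aperture, d_max):
    """PTZ/FP camera in 2-D: circular sector of radius d_max centred on the azimuth
    (radians), spanning +/- half_aperture (hypothetical parameter) on each side."""
    dx, dy = q[0] - z[0], q[1] - z[1]
    dist = math.hypot(dx, dy)
    if dist > d_max:
        return False
    if dist == 0.0:
        return True  # the camera location itself
    # angular offset between direction z->q and the azimuth, wrapped to [-pi, pi)
    offset = (math.atan2(dy, dx) - azimuth + math.pi) % (2 * math.pi) - math.pi
    return abs(offset) <= half_aperture
```

Both tests share the same range limit; only the omnidirectional model is needed for the experiments in this paper, since an OC has no azimuth restriction.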

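As for the procedure for determining the visibility polygon $V(z)$, it can be formulated as follows. Given the simple polygon $P$, a set of $m$ simple holes $H_1, \ldots, H_m$, and the camera placement point $z$ such that

$$z \in P \setminus \bigcup_{h=1}^{m} H_h \quad \text{and} \quad z \notin \partial P \cup \bigcup_{h=1}^{m} \partial H_h$$

(with $\partial$ denoting the boundary operator), the visibility polygon $V(z)$ comprises the set of all points $q$ within $P$ such that

$$\overline{zq} \subseteq P \setminus \bigcup_{h=1}^{m} \operatorname{int}(H_h), \tag{5}$$

that is, the segment joining $z$ and $q$ neither leaves $P$ nor enters the interior of any hole.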
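The visibility condition can be checked for a single point with elementary segment-intersection tests. The sketch below is a simplification under stated assumptions, not the radial-sweep algorithm of [1]: a point `q` counts as visible from `z` when it lies in the free space and the segment from `z` to `q` does not properly cross any edge of the outer polygon or of a hole. Polygons are plain lists of `(x, y)` vertices (a representation chosen here for illustration), and grazing contacts at vertices or segments running along an edge are not handled.

```python
def _orient(a, b, c):
    """Twice the signed area of triangle abc (> 0 if counterclockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def _properly_cross(p1, p2, q1, q2):
    """True if segments p1p2 and q1q2 cross at a single interior point of both."""
    d1, d2 = _orient(q1, q2, p1), _orient(q1, q2, p2)
    d3, d4 = _orient(p1, p2, q1), _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0

def _inside(pt, poly):
    """Even-odd ray-casting point-in-polygon test (boundary behaviour unspecified)."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def is_visible(z, q, outer, holes):
    """Approximate membership test for the visibility polygon of z."""
    if not _inside(q, outer) or any(_inside(q, h) for h in holes):
        return False
    for poly in [outer, *holes]:
        n = len(poly)
        for i in range(n):
            if _properly_cross(z, q, poly[i], poly[(i + 1) % n]):
                return False
    return True
```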

To compute the visibility polygon at point $z$, we can apply the algorithm proposed in [1]. For a simple polygon $P$ without holes ($m = 0$), this methodology consists of performing a counterclockwise (CCW) radial sweep of the polygon over the range $[0, 2\pi)$ with point $z$ as the center. The purpose of this is to compute the visible line segments emanating from $z$ and then represent $V(z)$ as the union of all such segments. To apply this procedure, it is first required to represent the polygon $P$ (deployment area) as an edge list in Cartesian coordinates as follows:

$$E_L = \big\{ e_i = (v_i^{s}, v_i^{e}) \;\big|\; i = 1, \ldots, n \big\}, \tag{6}$$

where $n$ is the number of edges, $i$ the edge index ordered CCW, $v_i^{s}$ and $v_i^{e}$ are the start ($s$) and end ($e$) vertices of edge $i$, and $v_i^{e} = v_{i+1}^{s}$ for $i < n$, with $v_n^{e} = v_1^{s}$.
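Building the counterclockwise edge list from a plain vertex list can be sketched as follows. The helper names are hypothetical; orientation is checked with the shoelace formula and the vertex order is reversed when needed, so that each edge's end vertex is the next edge's start vertex. Passing `ccw=False` yields the clockwise lists that the text uses for holes.

```python
def signed_area(vertices):
    """Shoelace formula: positive for counterclockwise vertex order."""
    n = len(vertices)
    return 0.5 * sum(vertices[i][0] * vertices[(i + 1) % n][1]
                     - vertices[(i + 1) % n][0] * vertices[i][1]
                     for i in range(n))


def edge_list(vertices, ccw=True):
    """Return [(v_start, v_end), ...] in the requested orientation, so that the
    end vertex of edge i is the start vertex of edge i + 1 (cyclically)."""
    verts = list(vertices)
    if (signed_area(verts) > 0) != ccw:
        verts.reverse()
    n = len(verts)
    return [(verts[i], verts[(i + 1) % n]) for i in range(n)]
```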

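Once the Cartesian edge list $E_L$ is defined, it is converted to its polar coordinate representation, taking $z$ as the pole; this is

$$E_{LP} = \Big\{ \big((\theta_i^{s}, r_i^{s}), (\theta_i^{e}, r_i^{e})\big) \;\Big|\; i = 1, \ldots, n \Big\}, \tag{7}$$

where $\theta_i^{s}, \theta_i^{e}$ and $r_i^{s}, r_i^{e}$ are the polar angles and radii of the $i$-th edge's start and end vertices, respectively.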
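The conversion to polar form, with the camera location as the pole, might look like this. It is a sketch: angles are normalized to [0, 2π) here, which is an assumption about the convention used in [1], and the function name is hypothetical.

```python
import math

def to_polar(edges, z):
    """Convert Cartesian edges ((xs, ys), (xe, ye)) into polar form
    ((theta_s, r_s), (theta_e, r_e)) with z as the pole; angles lie in [0, 2*pi)."""
    def polar(v):
        dx, dy = v[0] - z[0], v[1] - z[1]
        return (math.atan2(dy, dx) % (2 * math.pi), math.hypot(dx, dy))
    return [(polar(vs), polar(ve)) for vs, ve in edges]
```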

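With $E_{LP}$ now defined, the algorithm proceeds to discard all edges that cannot be visible (i.e., those that do not advance counterclockwise around $z$, for which $\theta_i^{e} \le \theta_i^{s}$), and the remaining vertices are then sorted in ascending lexicographical order as follows:

$$V_L = \big[ (v_k, \theta_k, r_k, e_k) \big]_{k=1}^{K}, \qquad (\theta_k, r_k) \le_{\text{lex}} (\theta_{k+1}, r_{k+1}), \tag{8}$$

where $v_k$, $\theta_k$, $r_k$, and $e_k$ are the vertices, polar angles, radii, and edge pointers, respectively, and $K$ is the number of remaining vertices. The resulting vertex list $V_L$ is finally used by the algorithm to sweep the polygon in CCW order, outputting the visibility polygon $V(z)$.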
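The filtering and sorting step can be sketched as below. Instead of comparing polar angles directly, this hypothetical helper uses the sign of the cross product of the two vertex vectors (taken relative to the camera), which expresses the same "does the edge advance counterclockwise around the camera" condition without angle wraparound at 2π. Each kept edge contributes both of its vertices, tagged with the edge index as its edge pointer.

```python
import math

def visible_candidates(edges, z):
    """Drop edges that cannot be seen from z during a CCW sweep and return the
    vertex list [(vertex, theta, r, edge_index), ...] sorted by (theta, r)."""
    def polar(v):
        dx, dy = v[0] - z[0], v[1] - z[1]
        return math.atan2(dy, dx) % (2 * math.pi), math.hypot(dx, dy)

    vertex_list = []
    for i, (vs, ve) in enumerate(edges):
        # cross product of (vs - z) and (ve - z): a value <= 0 means the edge does
        # not advance counterclockwise around z (it faces away or is collinear)
        cross = (vs[0] - z[0]) * (ve[1] - z[1]) - (vs[1] - z[1]) * (ve[0] - z[0])
        if cross <= 0:
            continue
        for v in (vs, ve):
            theta, r = polar(v)
            vertex_list.append((v, theta, r, i))
    # ascending lexicographic order: polar angle first, radius as tie-breaker
    vertex_list.sort(key=lambda item: (item[1], item[2]))
    return vertex_list
```

With holes listed clockwise, as the text prescribes, only the hole edges facing the camera survive this filter.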

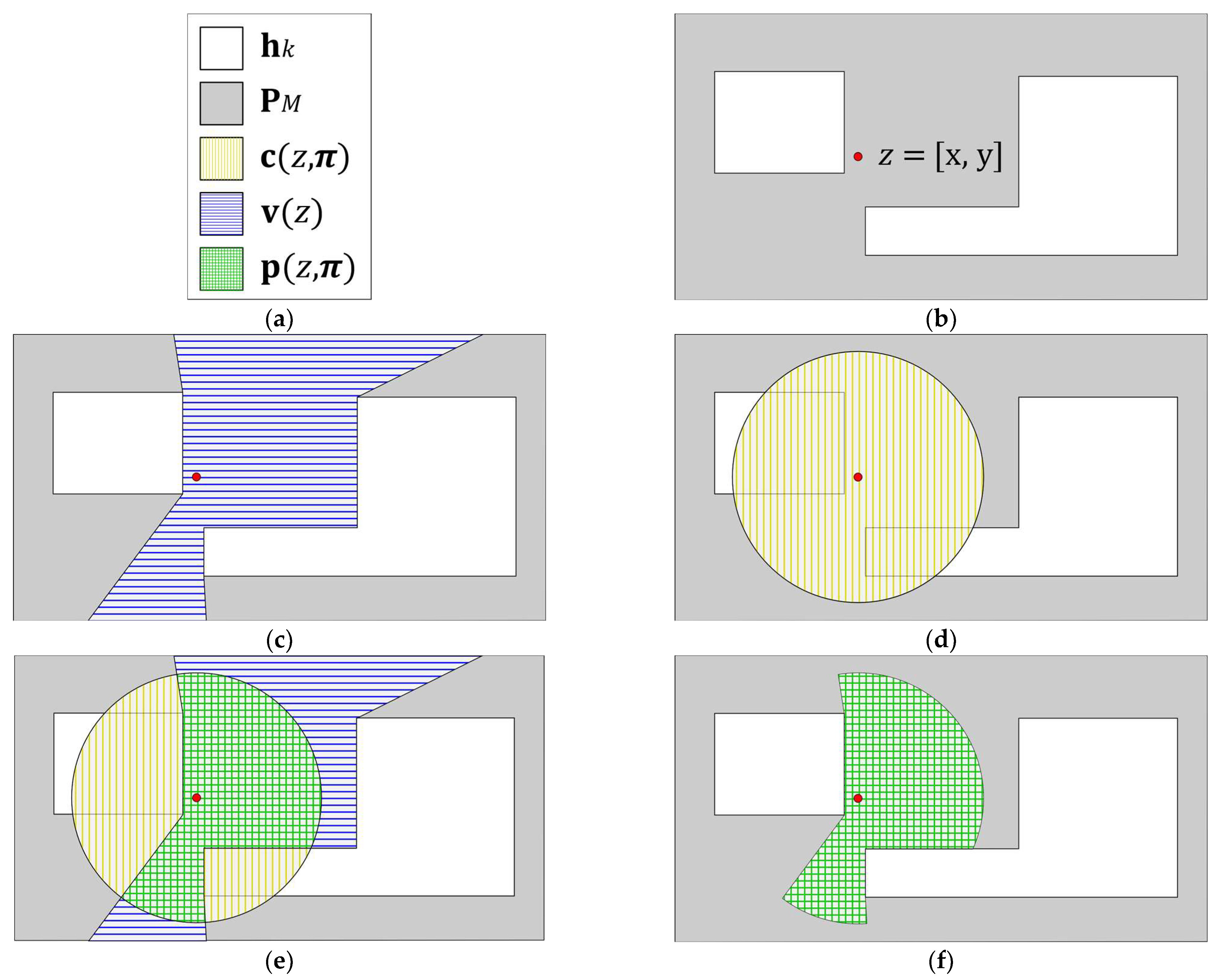

The extension of this algorithm to a polygon $P$ with polygonal holes $H_1, \ldots, H_m$ is straightforward. Since the outer boundaries of the holes are also well defined by their respective edge lists (in clockwise order), it is only required to append such lists to $P$'s edge list and then apply the algorithm as described above [1] (see Figure 2).
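For quick experimentation, the fitness can also be approximated without exact polygon clipping. The sketch below is a swapped-in technique, not the polygon-based procedure described above: it samples the free space on a regular grid and counts a cell as covered when at least one omnidirectional camera lies within `d_max` of the cell center and the connecting segment properly crosses no obstacle edge. It assumes the fitness of Equation (2) is the covered fraction of the obstacle-free deployment area; the grid `step` and all function names are hypothetical.

```python
import math

def _orient(a, b, c):
    # twice the signed area of triangle abc
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def _crosses(p1, p2, q1, q2):
    # proper (interior) crossing of segments p1p2 and q1q2
    return (_orient(q1, q2, p1) * _orient(q1, q2, p2) < 0
            and _orient(p1, p2, q1) * _orient(p1, p2, q2) < 0)

def _inside(pt, poly):
    # even-odd ray casting; poly is a list of (x, y) vertices
    x, y, inside = pt[0], pt[1], False
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        if (y1 > y) != (y2 > y) and x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
            inside = not inside
    return inside

def coverage_ratio(cameras, d_max, outer, holes, step=1.0):
    """Grid estimate of the covered fraction of the free space (outer minus holes)
    for omnidirectional cameras with recognition radius d_max."""
    edges = [(p[i], p[(i + 1) % len(p)]) for p in [outer, *holes] for i in range(len(p))]
    xs, ys = [v[0] for v in outer], [v[1] for v in outer]
    free = covered = 0
    y = min(ys) + step / 2
    while y < max(ys):
        x = min(xs) + step / 2
        while x < max(xs):
            q = (x, y)
            if _inside(q, outer) and not any(_inside(q, h) for h in holes):
                free += 1
                # covered if some camera is within range and has a clear line of sight
                if any(math.dist(z, q) <= d_max
                       and not any(_crosses(z, q, a, b) for a, b in edges)
                       for z in cameras):
                    covered += 1
            x += step
        y += step
    return covered / free if free else 0.0
```

A finer `step` trades run time for accuracy; since this function would be called once per fitness evaluation, the grid resolution directly drives the cost of every MOA run.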

3. Metaheuristic Optimization Algorithms

Metaheuristic optimization algorithms (MOAs) have become quite popular over the last decades due to their diversity in terms of design and applications; they have been widely applied to problems pertaining to different areas of interest, such as engineering design, communications, energy management, data analysis, computer vision, and robotics, among others [2]. MOAs are often modeled as population-based optimization strategies, in which a set of search agents representing candidate solutions is iteratively updated by following certain rules until a satisfactory result can be delivered [25,26]. Most of these optimization approaches are composed of three main steps: 1. initialization, 2. main loop, and 3. solution presentation. In the initialization step, it is common for MOAs to start by generating a population of random individuals $\mathbf{S} = \{\mathbf{s}_1, \mathbf{s}_2, \ldots, \mathbf{s}_{N_p}\}$ (where $N_p$ is the population size), where each element $\mathbf{s}_i$ denotes a candidate solution for a given optimization problem. The main loop is where MOAs start to diverge: during this step, a search strategy characteristic of each algorithm is applied to iteratively update the population of solutions, aiming to improve their quality. Once the main loop comes to an end (due to meeting a prespecified stop criterion), the algorithm enters its final step, in which it chooses the best solution found during the search process and reports it as the best approximation to the global optimum [27].
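The three-step structure can be illustrated with a generic skeleton. Everything here is hypothetical scaffolding: `update` stands for whatever algorithm-specific search strategy is plugged in, the stop criterion is a budget of fitness evaluations, and fitness is maximized. The example update rule (Gaussian perturbation with individual greedy acceptance) is only a placeholder, not one of the algorithms compared in this paper.

```python
import random

def run_moa(fitness, lb, ub, pop_size, max_evals, update, seed=None):
    """Generic population-based MOA skeleton for maximization.

    `update(pop, fit, fitness, lb, ub, rng)` is a hypothetical hook returning the
    next population and its fitness values (one evaluation per individual).
    """
    rng = random.Random(seed)
    d = len(lb)
    # 1. Initialization: N_p random candidate solutions inside the bounds
    pop = [[rng.uniform(lb[k], ub[k]) for k in range(d)] for _ in range(pop_size)]
    fit = [fitness(s) for s in pop]
    evals = pop_size
    i = max(range(pop_size), key=fit.__getitem__)
    best, best_fit = pop[i][:], fit[i]
    # 2. Main loop: apply the algorithm-specific search strategy until the
    #    evaluation budget (stop criterion) would be exceeded
    while evals + pop_size <= max_evals:
        pop, fit = update(pop, fit, fitness, lb, ub, rng)
        evals += pop_size
        i = max(range(pop_size), key=fit.__getitem__)
        if fit[i] > best_fit:
            best, best_fit = pop[i][:], fit[i]
    # 3. Solution presentation: best solution found during the whole search
    return best, best_fit


def perturb_update(pop, fit, fitness, lb, ub, rng, sigma=0.1):
    """Placeholder strategy: Gaussian perturbation plus individual greedy acceptance."""
    new_pop, new_fit = [], []
    for s, f in zip(pop, fit):
        cand = [min(max(x + rng.gauss(0.0, sigma * (u - l)), l), u)
                for x, l, u in zip(s, lb, ub)]
        f_cand = fitness(cand)
        if f_cand > f:
            new_pop.append(cand); new_fit.append(f_cand)
        else:
            new_pop.append(s); new_fit.append(f)
    return new_pop, new_fit
```

For example, `run_moa(lambda p: -sum(c * c for c in p), [-5, -5], [5, 5], 20, 2000, perturb_update, seed=1)` runs the placeholder strategy for 2000 evaluations; swapping `perturb_update` for another rule changes the algorithm while the surrounding three steps stay the same.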

Search strategies may be quite different from one algorithm to another, which of course has an impact on the performance of these methods on certain optimization problems. In MOAs such as DE or GA [22,28], for example, solutions may be generated either by “mixing” information from randomly chosen solutions (crossover) or by adding perturbations to currently existing ones (mutation) [21,29,30]. On the other hand, there are techniques in which new solutions are produced by accounting for a series of “attraction” effects among certain individuals in the population. Such is the case of algorithms like PSO [10,11,31], Artificial Bee Colony (ABC) [32,33], or GSA [16,34], where solutions move toward seemingly good solutions in the present population and/or the current global best solution found by the search process.

Another trait impacting the performance of MOAs' search strategies is their population selection mechanism; in this sense, MOAs can be classified as either greedy or non-greedy. In greedy algorithms (such as GA), the population for the next iteration of the search process is formed by selecting the best elements among the current population and a set of newly generated candidate solutions, and then discarding all remaining elements. This type of selection mechanism allows fast convergence toward promising solutions, but usually at the cost of compromising exploration capabilities [2,35]. A variation of this mechanism (individual greedy) can be found in algorithms such as DE or ABC, where a newly generated solution is accepted only if it improves on the solution from which it was generated. In contrast to typical greedy selection, this approach has the appeal of being more balanced in terms of the exploration–exploitation ratio, as the elements of the initial population are forced to improve individually from their own starting points [2,35]. On the other hand, non-greedy algorithms (such as PSO, GSA, or GWO) do not consider the relative quality of newly generated solutions, accepting all of them (regardless of their quality) as replacements for the current population at the end of each iteration. Non-greedy selection, which lacks elitism, is favored when exploration is prioritized over exploitation [2,35].

It is worth noting that the performance of an MOA cannot be predicted by only analyzing the characteristics of its search strategy; rather, it depends on how that strategy interacts with the features of a specific problem (such as the number of decision variables, bounds, constraints, modality, objective(s), etc.). From a practical point of view, this suggests that certain search strategies may perform better than others on some problems while being outperformed by other algorithms on others [35,36]; this fact has inspired researchers to keep developing new and more efficient search strategies, aimed at offering better solutions to an ever-increasing variety of problems. Notable examples of this continuous search for improvement include modifications/hybridizations of popular MOAs such as PSO (with variants that incorporate crossover [37] and mutation [38] operations, and hybridizations such as Clustering-Based Hybrid Particle Swarm Optimization (CBHPSO) [39] and Real-Coded Genetic Algorithms with PSO (RCGA-PSO) [40]), GA variants (such as the hybrid with Ant Colony Optimization (GA-ACO) [41] and the Grey Wolf Optimizer/Genetic based optimizer (GWO-GA) [42]), and many others.
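The three selection mechanisms can be contrasted in a few lines. These are simplified sketches for a maximization problem, assuming that candidate `i` was generated from parent `i`; real algorithms embed them in richer update rules, and the function names are hypothetical.

```python
def greedy_selection(pop, fit, cand, cand_fit):
    """GA-style greedy selection: keep the len(pop) best of parents + offspring."""
    merged = sorted(zip(pop + cand, fit + cand_fit), key=lambda t: t[1], reverse=True)
    kept = merged[:len(pop)]
    return [s for s, _ in kept], [f for _, f in kept]


def individual_greedy_selection(pop, fit, cand, cand_fit):
    """DE/ABC-style: candidate i replaces parent i only if it improves on it."""
    pairs = [(c, cf) if cf > f else (s, f)
             for s, f, c, cf in zip(pop, fit, cand, cand_fit)]
    return [s for s, _ in pairs], [f for _, f in pairs]


def non_greedy_selection(pop, fit, cand, cand_fit):
    """PSO/GSA/GWO-style: the new population is accepted regardless of quality."""
    return list(cand), list(cand_fit)
```

Only the first mechanism lets a single strong offspring displace a weak, unrelated parent; the second keeps each lineage separate, and the third never looks at fitness at all.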

4. Experimental Results

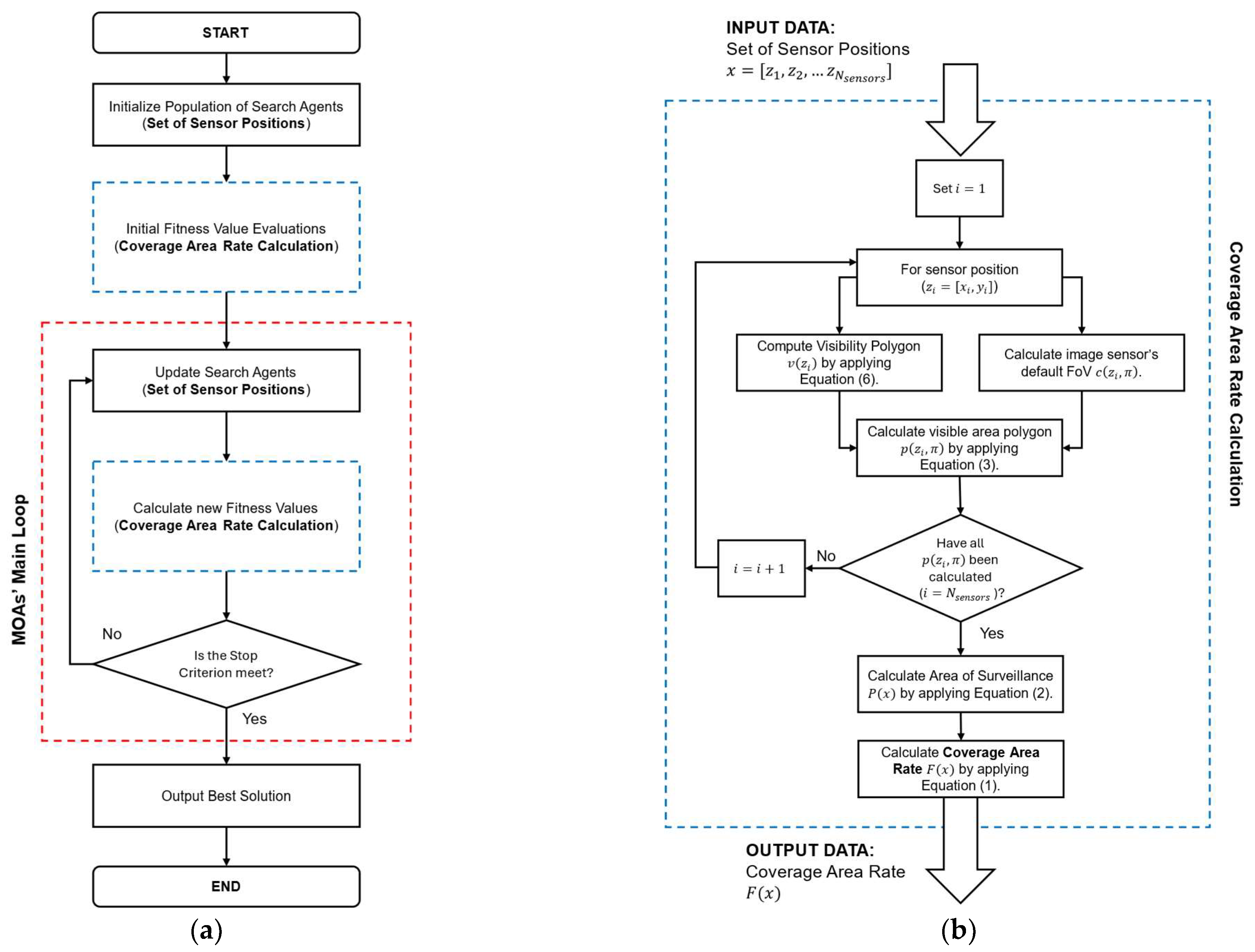

Our proposed MOA-based OCP sensor deployment scheme consists of applying MOAs to optimize the location configuration of a set of OCs so that the coverage area rate (the amount of area that is observed by the sensor network) is maximized. In this approach, the function $f(Z)$ presented in Equation (2) is used as the fitness function by each MOA as part of its search process (see Figure 3). Here, it is important to remember that $Z$ is composed of $N$ pairs of sensor locations (i.e., $[x_j, y_j]$ being the $(x, y)$ location associated with the $j$-th image sensor); in order to match this with the representation of search agents in MOAs, let $\mathbf{s} = [s_1, s_2, \ldots, s_d]$ represent a single search agent (with $d$ being the number of decision variables of a given optimization problem). The matching between the elements of $Z$ ($N$ locations) and $\mathbf{s}$ is given as follows:

$$Z = \{(x_1, y_1), \ldots, (x_N, y_N)\} \;\longleftrightarrow\; \mathbf{s} = [s_1, s_2, \ldots, s_{2N-1}, s_{2N}], \qquad (x_j, y_j) = (s_{2j-1}, s_{2j}). \tag{9}$$

This means that for each pair $z_j = (x_j, y_j)$ there will be a corresponding pair of successive elements $(s_{2j-1}, s_{2j})$. In order to match each pair $z_j$ with a pair of elements from $\mathbf{s}$, the number of elements $d$ represented by each search agent should be twice the number of available image sensors $N$; that is

$$d = 2N. \tag{10}$$

In other words, the dimensionality (number of decision variables) of the optimization problem (maximization of coverage area rate) is conditioned by the number of available image sensors (the greater the number of sensors, the higher the dimensionality).
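The mapping between sensor locations and a search agent amounts to flattening and regrouping coordinate pairs. A minimal sketch with hypothetical function names; indices are 0-based in code, so the 1-based pair (2j − 1, 2j) of the text becomes `s[2k]`, `s[2k + 1]`.

```python
def encode(Z):
    """Flatten sensor locations [(x1, y1), ..., (xN, yN)] into s = [s1, ..., s_2N]."""
    return [c for z in Z for c in z]


def decode(s):
    """Regroup a search agent into sensor locations, one (x, y) pair per sensor."""
    if len(s) % 2:
        raise ValueError("a search agent must have d = 2N elements")
    return [(s[k], s[k + 1]) for k in range(0, len(s), 2)]
```

An MOA then only ever manipulates flat vectors; `decode` is applied inside the fitness function before the coverage is evaluated.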

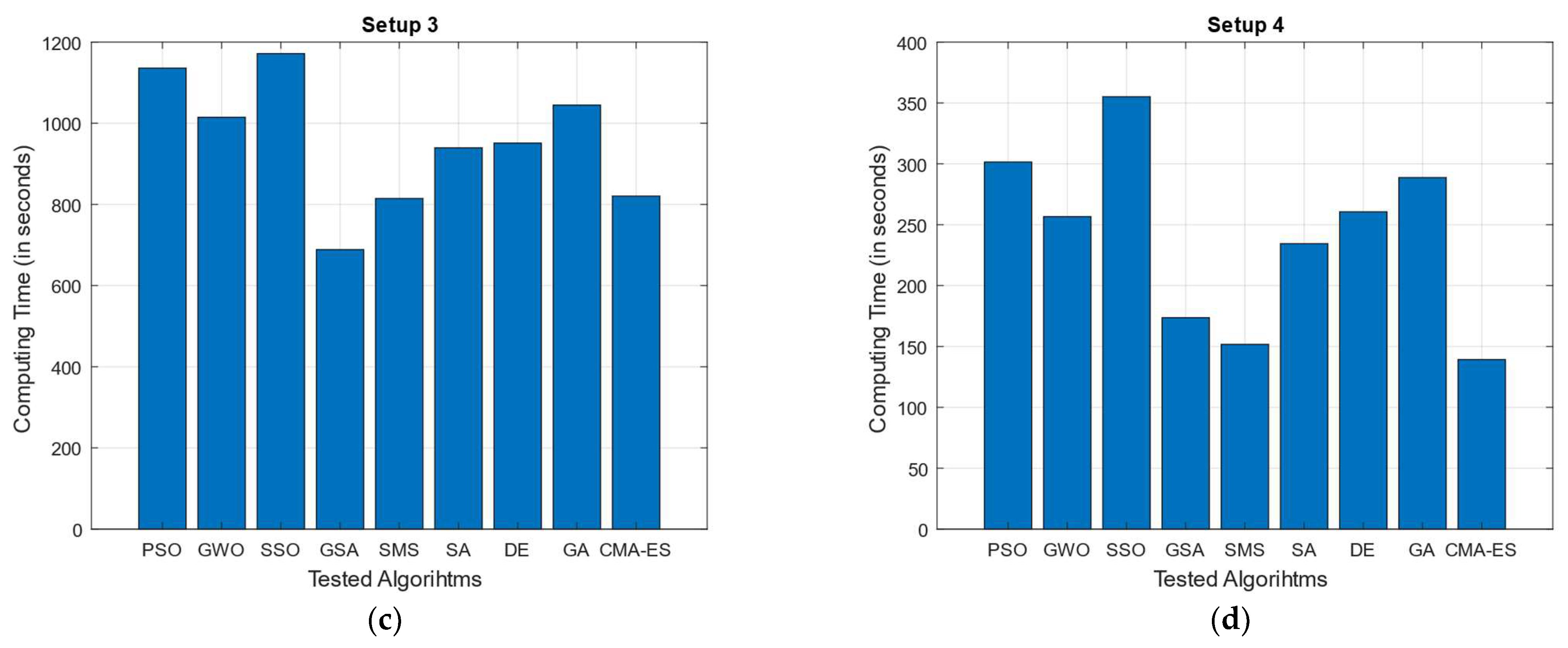

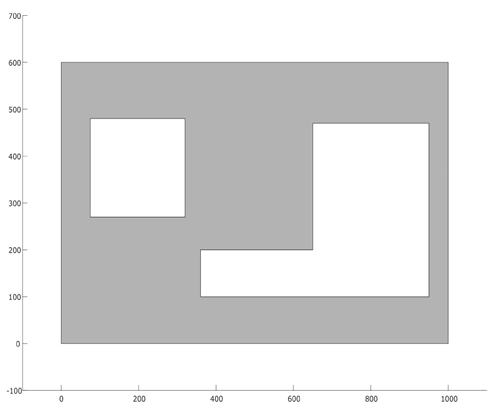

For our experiments, we have considered two different deployment layouts (see Table 1). The first layout consists of a square deployment area (polygon) of 100 m × 100 m with a rectangular hole (representing a visual occlusion) in the middle. The second layout is a rectangular polygon of 100 m × 60 m containing two holes (a square polygon and a J-shaped polygon). The region to be covered by the camera network in each layout is shown as the gray background, and its area is 9200 m² for the first layout and 4085.5 m² for the second. The image sensors considered in our experiments are of two types: the first is a simple OC with a horizontal resolution of 1280 px (common for most “economic” cameras), and the second is an OC with a horizontal resolution of 3584 px (common for “premium” image sensors). Since both cameras are meant to be applied for human recognition in indoor spaces, it is of special interest to know the maximum distance for recognition (minimum-resolution distance) associated with each sensor; for the first sensor type this distance is up to 10.24 m, whereas for the second it is up to 28.67 m (see Table 2).
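The two recognition distances are consistent with a single pixel density: 1280 px / 10.24 m = 125 px/m and 3584 px / 28.67 m ≈ 125 px/m. The text does not state how these distances were obtained, so the relation below is an inference from the reported numbers rather than the authors' stated method, and the 125 px/m default is that inferred value.

```python
def pixel_density(h_resolution_px, distance_m):
    """Horizontal pixels per metre implied by a resolution and a distance."""
    return h_resolution_px / distance_m


def recognition_distance(h_resolution_px, density_px_per_m=125.0):
    """Distance at which a camera still delivers the given pixel density.
    The 125 px/m default is inferred from the two reported distances."""
    return h_resolution_px / density_px_per_m
```

Under this reading, any other OC model could be slotted into the experiments by computing its recognition radius from its horizontal resolution.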

By combining the proposed deployment layouts and camera models, we can build four different experimental setups, each consisting of one of the two layouts, one of the two camera models, and a maximum number of sensors $N$ (see Table 3). Since each camera model has a different maximum recognition distance (and thus a different FoV size), the number of sensors required to maximize the coverage of a given deployment space also differs. To estimate the appropriate number of cameras $N$ for each experimental setup, we simulated a manual placement of image sensors over the deployment layout, seeking maximum coverage and minimum overlap of the sensors' FoVs.
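The reported areas and recognition distances also allow a quick sanity check on sensor counts. Assuming unobstructed circular FoVs, N disks of radius d cover at most Nπd², so fully covering an area A requires at least ⌈A / (πd²)⌉ sensors. This crude lower bound ignores occlusions, walls, and the overlap needed to tile a region with disks, so it is not the procedure used to choose the sensor counts in Table 3 (which relied on a simulated manual placement); the function name is hypothetical.

```python
import math

def min_sensors_lower_bound(area_m2, d_max_m):
    """Smallest N with N * pi * d_max^2 >= area: a necessary (not sufficient)
    condition for N unobstructed disks of radius d_max to cover the area."""
    return math.ceil(area_m2 / (math.pi * d_max_m ** 2))
```

With the figures given above, this yields at least 28 (first sensor type) or 4 (second sensor type) cameras for the 9200 m² layout, and at least 13 or 2 cameras for the 4085.5 m² layout.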

In our experiments, nine different MOAs were tested: PSO, GWO, SSO, GSA, SMS, SA, DE, GA, and CMA-ES. Each of these algorithms follows a different search strategy, whose performance can be slightly modified by changing its distinctive parameters; more importantly, these algorithms were chosen to ensure variety with respect to certain performance-influencing characteristics: 1. selection mechanism, 2. type of attractors (when applied), 3. dependence on the iterative process, 4. use of a population sorting mechanism, 5. use of measurements related to the population/agents, and 6. additional memory requirements. A summary of these characteristics is presented in Table 4.

In preparation for our experiments, an exhaustive tuning process was applied to find the best parameter configuration for each of the chosen MOAs. These configurations are described in Table 5.

As for the test settings, to ensure a fair comparison among all tested MOAs, the same population size was used for every algorithm, and the stop criterion for each of them is dictated by the same maximum number of allowed fitness function evaluations (function accesses). The remaining test settings (number of decision variables and search space bounds) depend strictly on each of the proposed experimental setups (Setup 1, Setup 2, Setup 3, and Setup 4, as described in Table 3); specifically, the number of decision variables $d$ is set to twice the maximum number of available image sensors for each setup. This is because the solution vector is coded to represent the $(x, y)$ coordinates corresponding to each image sensor, as described earlier in this section. As for the bounds of the search space, these are dictated by the size of the deployment area (layout) modeled in each experimental setup; e.g., for Setup 3, the lower bounds correspond to the origin location $(0, 0)$, whereas the upper bounds are given by the maximum horizontal and vertical lengths of the deployment area. The settings for the number of decision variables and the lower/upper bounds corresponding to each experimental setup are summarized in Table 6.
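The bounds follow directly from the layout dimensions, with one (width, height) pair per sensor. A minimal sketch with a hypothetical function name; the sensor count in the usage line is arbitrary and not a value from Table 6.

```python
def search_bounds(n_sensors, width, height):
    """Bounds for s = [x1, y1, ..., xN, yN] on a width x height layout whose
    lower-left corner is the origin (0, 0)."""
    lb = [0.0] * (2 * n_sensors)
    ub = [float(width), float(height)] * n_sensors
    return lb, ub
```

For example, `search_bounds(3, 100, 60)` gives six lower bounds of 0 and upper bounds alternating between 100 and 60 for the 100 m × 60 m layout.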

All chosen MOAs were applied to each of the proposed experimental setups. For each case, each MOA was run a total of 30 times, and the results (the best fitness value delivered by each search process) were recorded and reported in terms of five performance indexes: 1. the mean of the best fitness values found over each set of 30 independent runs, 2. the median of those fitness values, 3. their standard deviation, 4. the lowest fitness value found in each set of runs, and 5. the highest fitness value delivered in each set of runs. The performance results delivered by all tested MOAs on each experimental setup are reported in Table 7, Table 8, Table 9, and Table 10, where the best value for each performance index is shown in boldface. The rank assigned to each algorithm according to its performance on each setup is also shown at the bottom of each of these tables.
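The five indexes can be computed from the 30 best-fitness values of each algorithm/setup pair with Python's standard `statistics` module. Whether the reported standard deviation is the sample (n − 1) or the population version is not stated; the sketch assumes the sample version (`statistics.stdev`), and the function name is hypothetical.

```python
import statistics

def performance_indexes(best_fitness_per_run):
    """Mean, median, standard deviation, minimum, and maximum of the best
    fitness values obtained over a set of independent runs."""
    runs = list(best_fitness_per_run)
    return {
        "mean": statistics.mean(runs),
        "median": statistics.median(runs),
        "std": statistics.stdev(runs),  # sample std (n - 1); an assumption
        "min": min(runs),
        "max": max(runs),
    }
```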

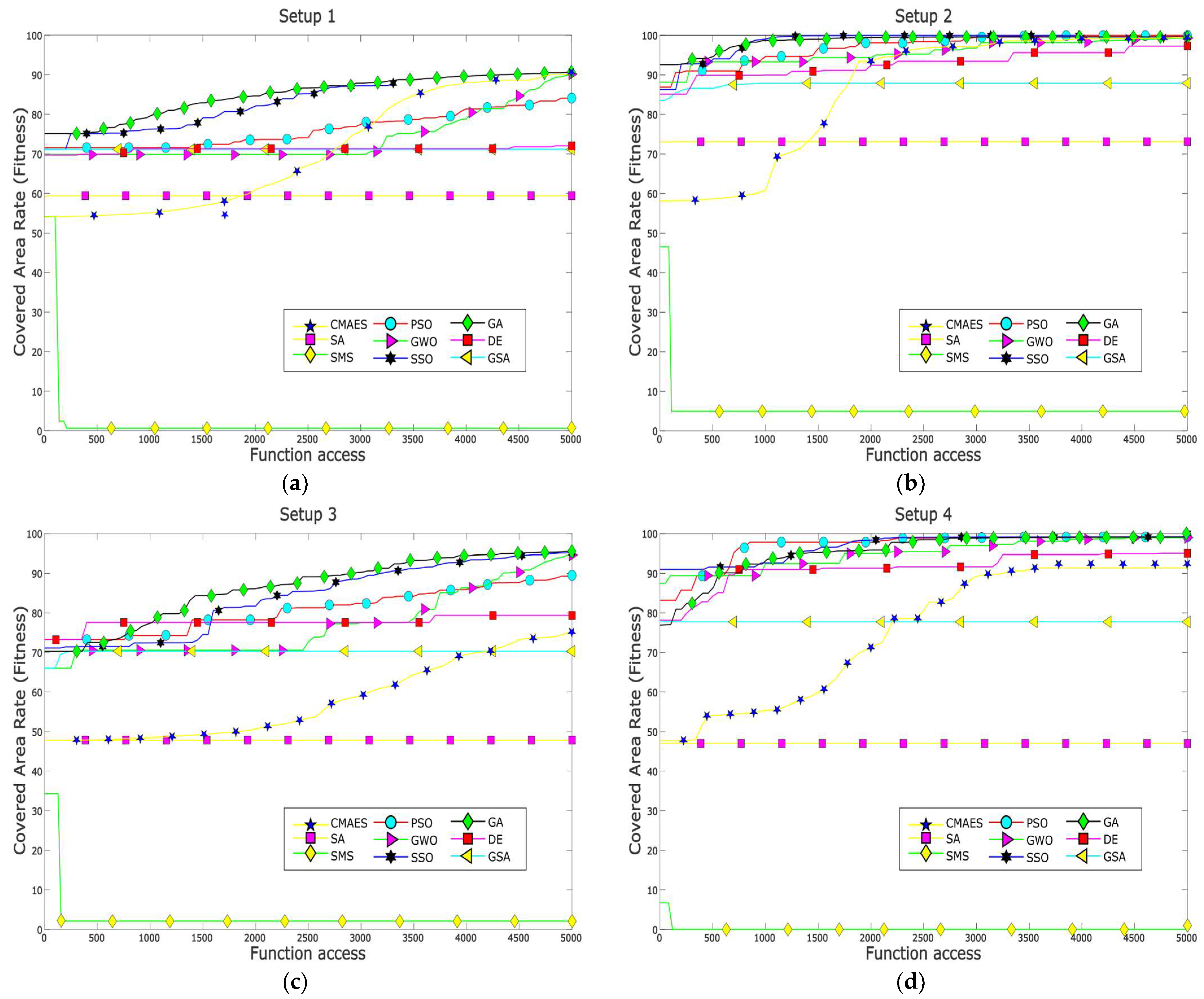

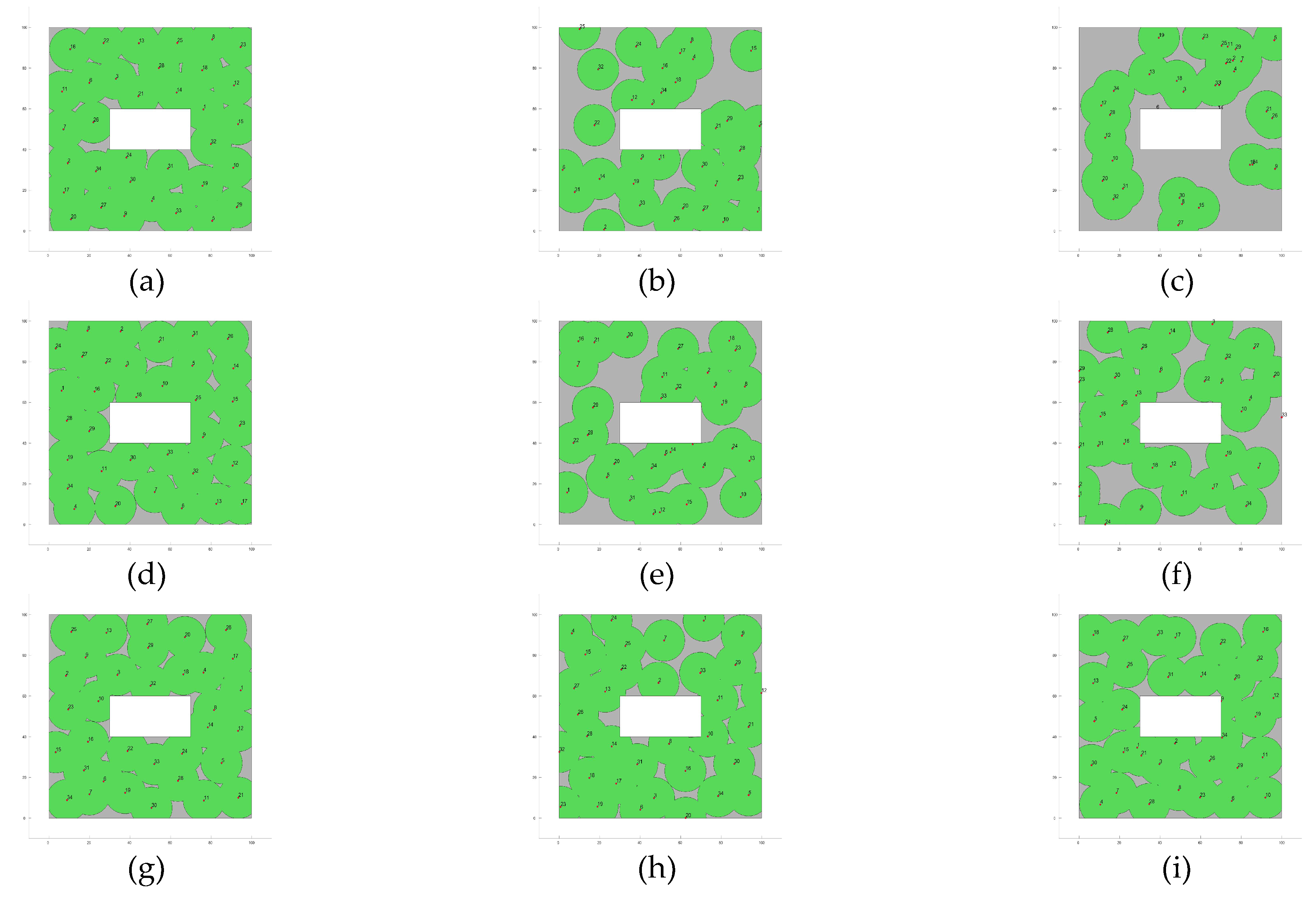

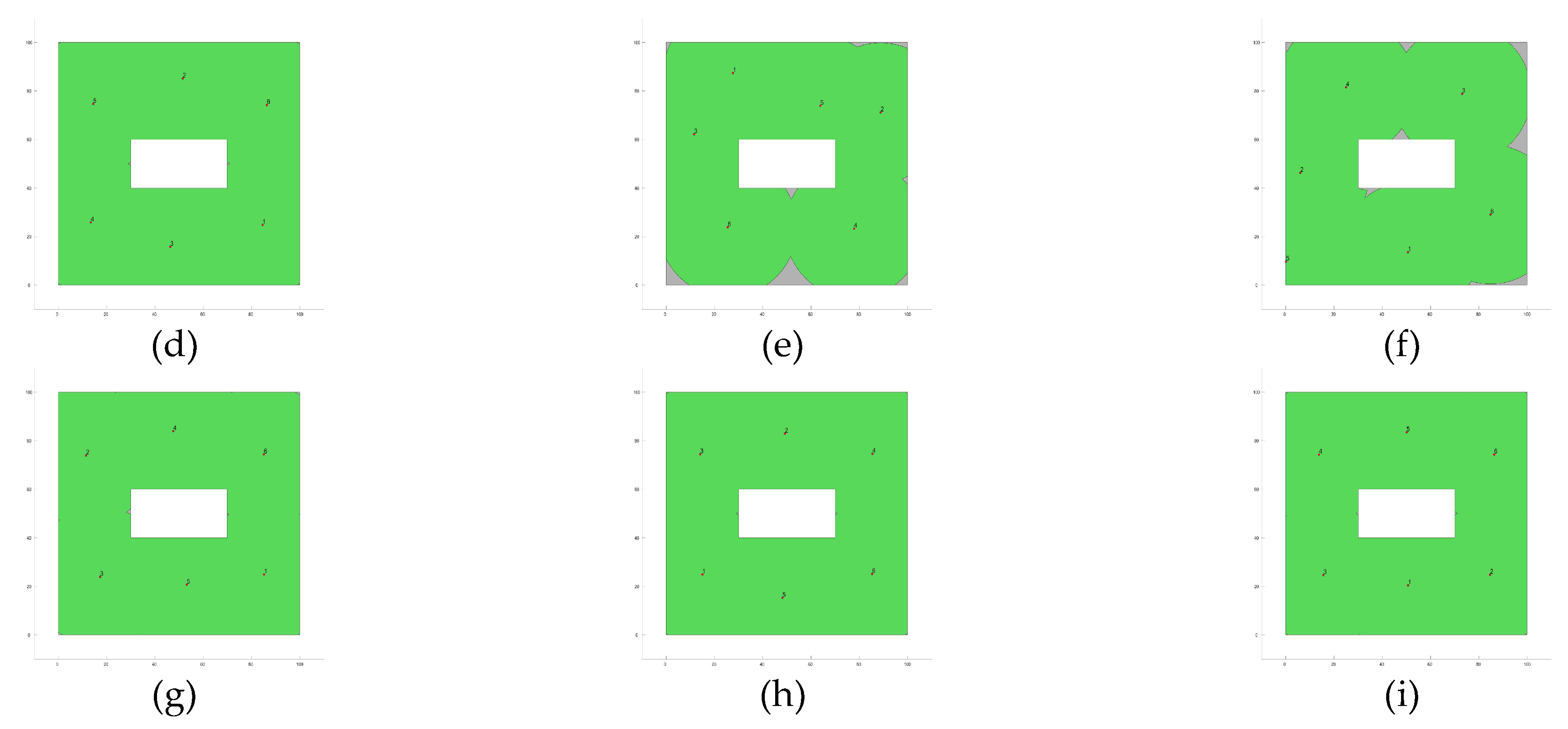

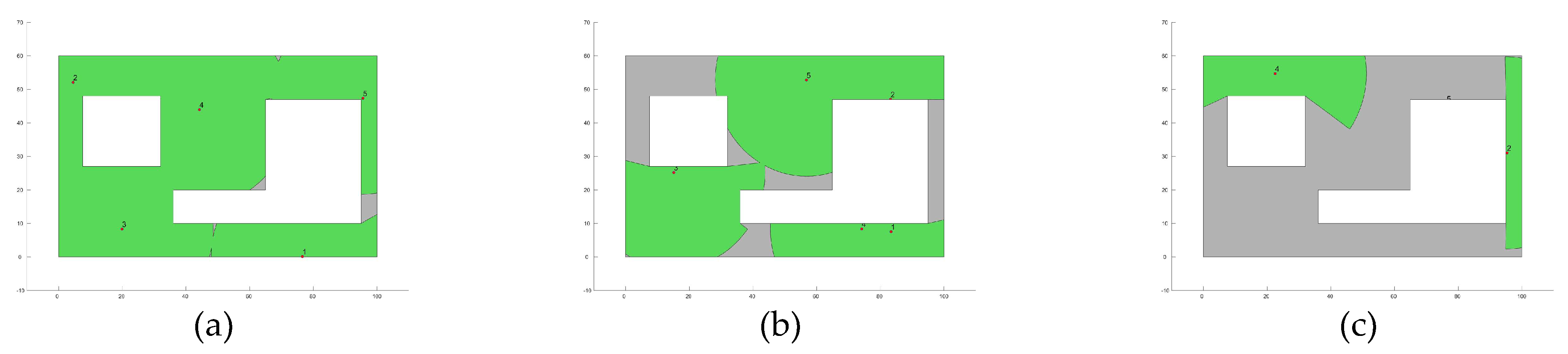

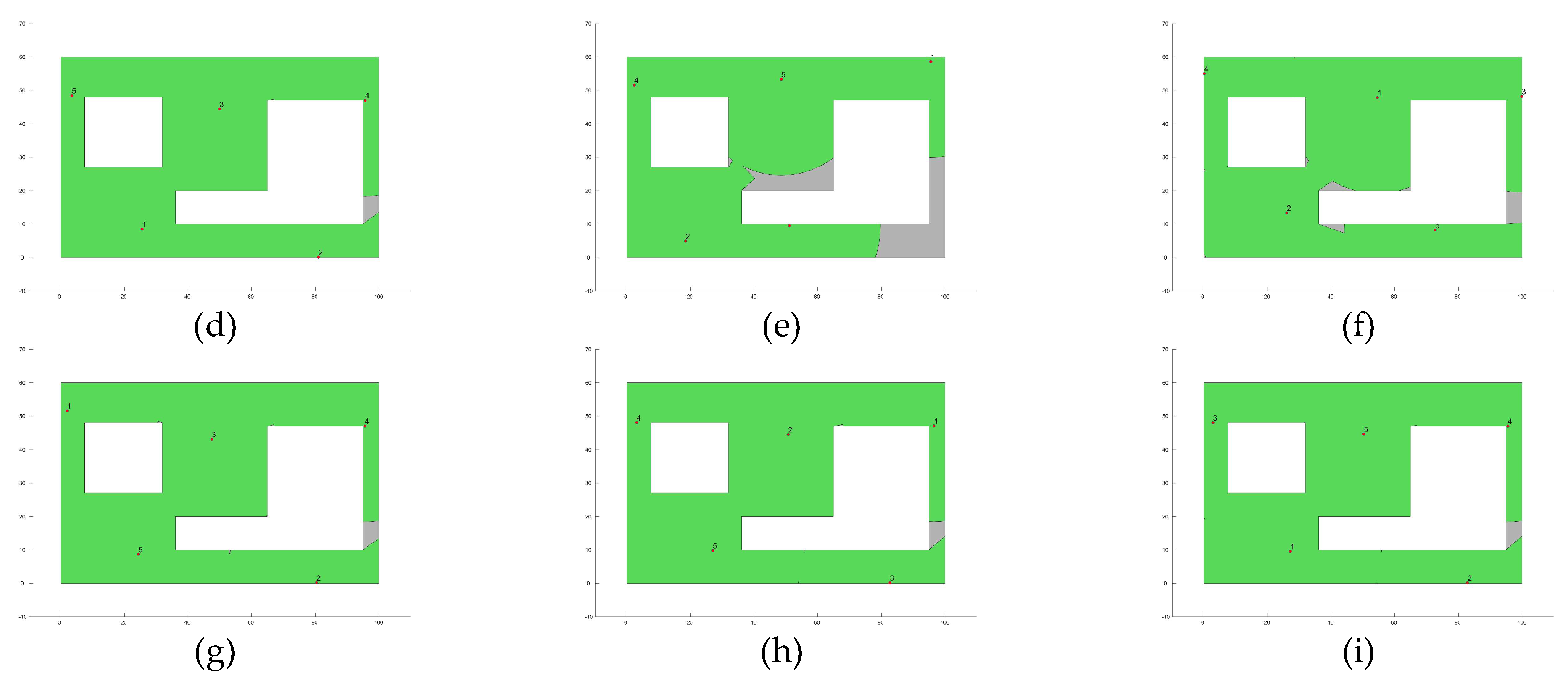

For Setup 1, the best performing algorithm is GA, followed by CMA-ES and SSO. For Setup 2, the best performing algorithms are CMA-ES, GA, and GWO. For Setup 3, GA is once again the top-ranked algorithm, followed by SSO and GWO. Finally, for Setup 4, PSO ranks best, followed by GA and SSO.

Furthermore, Figure 4 presents the fitness evolution curves showing the average performance of each applied MOA on each of the proposed experimental setups. These plots once again show the strong performance of algorithms such as GA, CMA-ES, SSO, and PSO, which are able to reach significantly high fitness values by the end of the search process; however, they also expose the underperformance of algorithms such as DE and GSA, which stagnate very early in their respective search processes, and SMS, which is unable to deliver any competitive solution for the proposed experimental setups.

Figure 5, Figure 6, Figure 7, and Figure 8 show the best image sensor placement obtained by the tested MOAs for each experimental setup. Finally, Table 11 presents the overall ranking assigned to each algorithm according to its performance over the whole set of experimental setups. In general, the best performing algorithm is GA (which is not surprising, considering its presence among the top three algorithms across all experimental setups), followed by PSO and SSO. On the other hand, the worst performing algorithms are SMS, SA, and GSA, each of which consistently attained low rankings in all experimental setups.

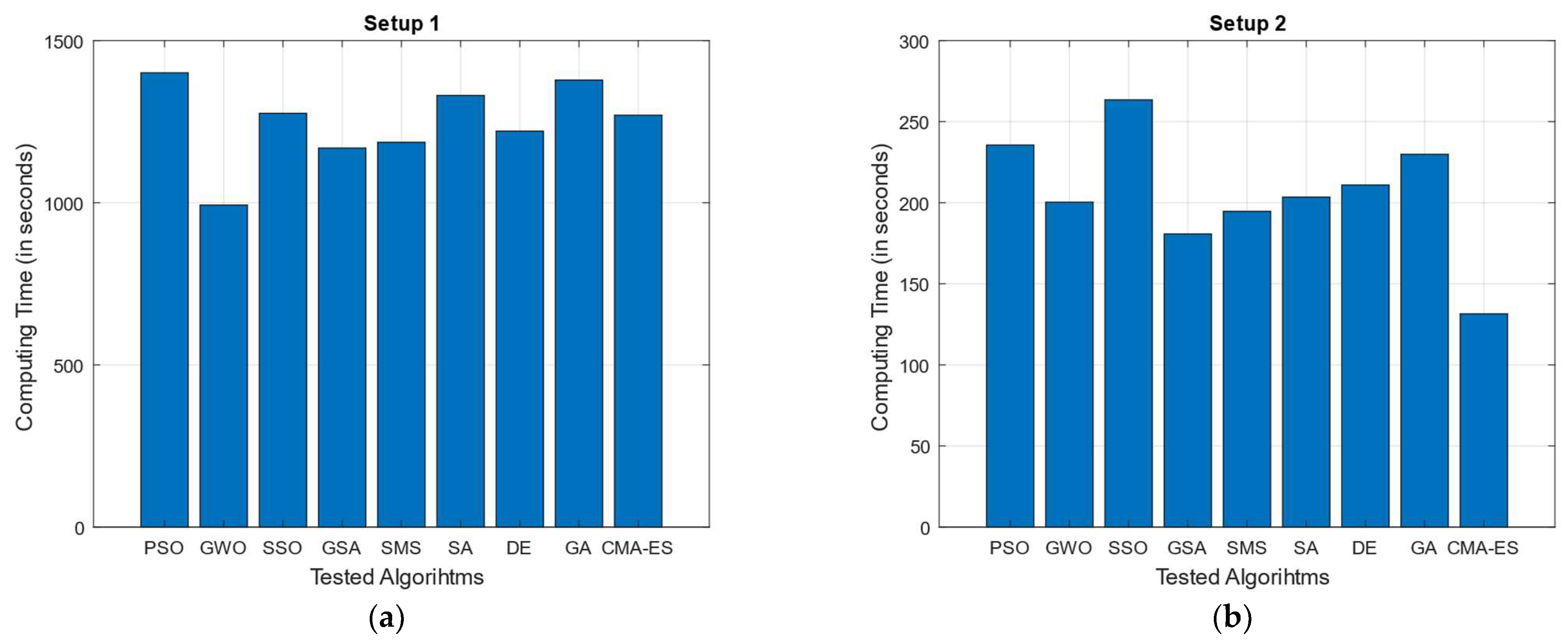

In addition to the performance tests, the processing times of each tested MOA on each experimental setup have also been documented. The average processing time (in seconds) for each MOA/experimental setup pair is shown in Table 12 (numerically) and Figure 9 (graphically).

5. Discussion

As previously illustrated in Table 11, the best overall performing algorithm for the proposed OCP problems is GA. GA is an evolutionary algorithm (a class that also includes algorithms such as DE and CMA-ES) [2]. Evolutionary algorithms (EAs) distinguish themselves from other MOAs by the use of operators that simulate phenomena observed in natural evolution, such as crossover, mutation, and selection. The way in which these operators are modeled and applied usually differs among EAs. In the case of GA, for example, these operators generate a new set of solutions by applying crossover and mutation to the current population; the new individuals are then compared with the previously existing ones in terms of fitness, and the best individuals among both groups define the population for the next generation (a greedy selection criterion) [22]. In DE, on the other hand, a mutation operator is applied first to generate a new candidate (mutant) solution from each member of the population, and then crossover is performed to mix the information of each current solution with its corresponding mutant, forming a set of trial solutions. Finally, each trial solution is compared against its originating individual, and the better of each pair is kept for the next generation while the other is discarded (an individual greedy selection criterion) [29]. Lastly, in CMA-ES (a variant of ES), while recombination and mutation operators are applied (according to the algorithm's own approach), no specific selection operator compares new solutions against the old ones, so any new solution generated by these operators is accepted regardless of its quality (a non-greedy selection mechanism) [36]. From a selection mechanism perspective, it seems that the greedy selection criterion of GA offers an advantage for OCP problems over non-greedy methods (as in the case of CMA-ES). The good performance of GA on OCP may also be related to the nature of its crossover and mutation operators. In contrast to DE and CMA-ES, the probabilistic criterion GA applies to select parent solutions and the way its crossover recombines information seem to be more effective at promoting solution diversity, thus allowing better exploration of the feasible solution space; for OCP problems, this translates into an ability to find better placement distributions for the available image sensors, and thus better coverage of the target surveillance space.
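The DE generation described above (mutation first, then crossover, then a one-to-one comparison) can be sketched as the classic DE/rand/1/bin scheme. This is a generic textbook version for maximization, not necessarily the variant or parameter values used in the experiments: `F` and `CR` are common defaults, out-of-bounds mutants are clipped (an assumption), and, following the description above, a trial replaces its parent only if it is strictly better.

```python
import random

def de_generation(pop, fit, fitness, lb, ub, F=0.5, CR=0.9, rng=random):
    """One DE/rand/1/bin generation for maximization (needs at least 4 individuals)."""
    n, d = len(pop), len(pop[0])
    new_pop, new_fit = [], []
    for i in range(n):
        # mutation: three distinct individuals, all different from i
        r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
        mutant = [min(max(pop[r1][k] + F * (pop[r2][k] - pop[r3][k]), lb[k]), ub[k])
                  for k in range(d)]
        # binomial crossover: index j_rand always comes from the mutant
        j_rand = rng.randrange(d)
        trial = [mutant[k] if (k == j_rand or rng.random() < CR) else pop[i][k]
                 for k in range(d)]
        # individual greedy selection: the trial replaces its parent only if better
        f_trial = fitness(trial)
        if f_trial > fit[i]:
            new_pop.append(trial); new_fit.append(f_trial)
        else:
            new_pop.append(pop[i]); new_fit.append(fit[i])
    return new_pop, new_fit
```

Because each slot in the population can only improve, the per-individual fitness never decreases from one generation to the next, which is the "forced to improve individually" property discussed in Section 3.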

The second- and third-best MOAs for OCP according to our experiments are PSO and SSO. Interestingly, these two methods (both classified as swarm-based algorithms) employ search operators that model attraction toward previously defined locations or individuals. In the case of PSO, for example, the attraction movements performed by each particle in the population are dictated by both the location of the global best solution (stored in the algorithm's “memory” at the current iteration) and the particle's personal best solution. Modeling the attraction movements in terms of these two specific solutions allows search agents not only to converge toward the location of the global best solution but also to move toward other prominent solutions, which enhances their exploration capabilities [10,11]. Similarly, in SSO, movements are modeled so that search agents move toward other prominent members of the population. The key difference from PSO, though, is that SSO models a population composed of two types of search agents: female and male individuals (the latter further classified as either dominant or non-dominant according to their aptitude). Under this scheme, SSO can deploy different movement strategies depending on each agent's gender designation and on how it interacts with other agents (according to their specific movement rules), which gives it flexibility for exploring the feasible search space. SSO also includes a mating and selection mechanism, similar to the crossover operators of EAs, that generates new candidate solutions by mixing information from female and dominant male individuals and then compares them against some of their originating solutions to keep the best among them (thus performing a sort of greedy selection) [15]. While PSO and SSO share some similarities in their search strategies, the clearest difference lies in how the movement of search agents is guided. PSO uses “memory” to store and update the information of prominent solutions found during the search process (namely, the global best solution and the personal best solution of each particle) and uses this information to guide the population toward those prominent locations. Conversely, SSO does not implement any process to record prominent solutions, and instead updates the population using only the information currently provided by the individuals themselves (which could cause search agents to drift away from the global optimum, depending on how the search process has developed). Finally, it is worth discussing a key difference between GA (the best-ranked algorithm) and PSO (the second best). As previously discussed, GA generates new solutions by applying crossover and mutation operators, followed by a selection process in which old and new solutions are compared against each other to keep the most prominent among them. In contrast, PSO applies attraction-based operators that drive the population toward the currently known global and personal best solutions, updating the particles' positions accordingly. The differentiating factor here is the presence of an actual (greedy) selection process in GA: while PSO solutions are driven toward selected locations of the search space at each iteration, they are always updated regardless of whether their new locations are better than their previous ones (which is why PSO is classified as a non-greedy algorithm).
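
The interplay between PSO's memory and its non-greedy position update can be illustrated with a minimal sketch of a standard inertia-weight PSO iteration. The coefficients (w = 0.7, c1 = c2 = 1.5), the clipping of positions to the bounds, the asynchronous update of the global best, and the function name `pso_step` are assumptions made for illustration; they are not the parameter settings used in our tests.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def pso_step(x: List[Vector], v: List[Vector], pbest: List[Vector], pbest_fit: List[float],
             gbest: Vector, gbest_fit: float, fitness: Callable[[Vector], float],
             rng: random.Random, w: float = 0.7, c1: float = 1.5, c2: float = 1.5,
             lo: float = -5.0, hi: float = 5.0) -> Tuple[Vector, float]:
    """One inertia-weight PSO iteration (maximization); x, v, pbest, pbest_fit change in place."""
    for i in range(len(x)):
        for d in range(len(x[i])):
            r1, r2 = rng.random(), rng.random()
            # Attraction toward the particle's own best and the swarm's global best
            v[i][d] = (w * v[i][d]
                       + c1 * r1 * (pbest[i][d] - x[i][d])
                       + c2 * r2 * (gbest[d] - x[i][d]))
            # Non-greedy move: the new position is always accepted (clipped to the bounds)
            x[i][d] = min(max(x[i][d] + v[i][d], lo), hi)
        f = fitness(x[i])
        # Memory: personal and global bests change only on improvement
        # (asynchronous variant: later particles already see an updated gbest)
        if f > pbest_fit[i]:
            pbest[i], pbest_fit[i] = list(x[i]), f
            if f > gbest_fit:
                gbest, gbest_fit = list(x[i]), f
    return gbest, gbest_fit


def toy_fitness(p: Vector) -> float:
    """Hypothetical stand-in objective with values in (0, 1]."""
    return 1.0 / (1.0 + sum(c * c for c in p))


rng = random.Random(1)
n, dim = 15, 4
x = [[rng.uniform(-5.0, 5.0) for _ in range(dim)] for _ in range(n)]
v = [[0.0] * dim for _ in range(n)]
pbest = [list(p) for p in x]
pbest_fit = [toy_fitness(p) for p in x]
g = max(range(n), key=lambda i: pbest_fit[i])
gbest, gbest_fit = list(pbest[g]), pbest_fit[g]
initial_gbest_fit = gbest_fit
for _ in range(50):
    gbest, gbest_fit = pso_step(x, v, pbest, pbest_fit, gbest, gbest_fit, toy_fitness, rng)
print(f"global best fitness: {initial_gbest_fit:.4f} -> {gbest_fit:.4f}")
```

Positions in `x` are overwritten unconditionally, whereas `pbest` and `gbest` only change on improvement; in this sketch it is the memory, not the position update, that preserves the best solutions found.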

In summary, for problems like OCP, in which the distribution of image sensors must be carefully defined to ensure a maximum coverage rate, the unsupervised nature of non-greedy selection schemes seems to make good solutions harder to obtain than with greedy selection schemes. This is evidenced by the performance shown during our experiments by algorithms such as GSA, SMS, GWO, and SA, all of which are, notably, non-greedy algorithms. While PSO and SSO also fall into this category, PSO's memory of prominent solutions and SSO's mating-based (quasi-greedy) selection, along with the attraction-based nature of their search strategies, compensate for their lack of elitism, allowing them to deliver good results when applied to the OCP of OC-type sensors.
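
The distinction between greedy and non-greedy replacement can be isolated with a deliberately simple single-solution search in which only the replacement rule changes. This is a hypothetical toy (`perturbation_search`, Gaussian perturbations, a quadratic-based objective), not one of the MOAs tested here; it illustrates the mechanism rather than demonstrating that greedy schemes are superior in general.

```python
import random
from typing import Callable, List

Vector = List[float]


def perturbation_search(fitness: Callable[[Vector], float], x0: Vector, steps: int,
                        sigma: float, greedy: bool, rng: random.Random) -> List[float]:
    """Gaussian-perturbation search (maximization).

    Returns the fitness of the *current* solution after every step, so the effect of the
    replacement rule is visible: greedy replacement never moves to a worse point, while
    non-greedy replacement always accepts the new candidate.
    """
    x, fx = list(x0), fitness(x0)
    trace: List[float] = []
    for _ in range(steps):
        cand = [xi + rng.gauss(0.0, sigma) for xi in x]
        fc = fitness(cand)
        if not greedy or fc >= fx:
            x, fx = cand, fc
        trace.append(fx)
    return trace


def toy_fitness(p: Vector) -> float:
    """Hypothetical stand-in objective with values in (0, 1]."""
    return 1.0 / (1.0 + sum(c * c for c in p))


start = [3.0, -2.0, 4.0]
greedy_trace = perturbation_search(toy_fitness, start, 200, 0.5, True, random.Random(7))
free_trace = perturbation_search(toy_fitness, start, 200, 0.5, False, random.Random(7))
print(f"greedy final: {greedy_trace[-1]:.4f}, non-greedy final: {free_trace[-1]:.4f}")
```

With `greedy=True` the fitness of the current solution never decreases; with `greedy=False` it can, which is why non-greedy methods typically need a separate record of the best-so-far solution (as PSO keeps through its memory).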

As for the computing time tests, Table 12 and Figure 9 show that the processing time (in seconds) of all tested MOAs is drastically higher in Setups 1 and 3 than in Setups 2 and 4. This is expected, since in those experimental configurations the number of deployed sensors is significantly greater (34 and 20 vs. 6 and 5, respectively). Here, it is important to remember that the dimensionality (number of decision variables) of the optimization problem is determined by the number of sensors to be allocated (twice the number of image sensors, as shown in Equation (11)); thus, the more sensors available, the more complex the optimization of their arrangement. In addition, most of the processing time of a single optimization test is spent evaluating the fitness function itself, because calculating the coverage rate under the proposed radial sweep approach involves steps that are complex (and slow) to compute: computing each sensor's visibility polygon and visible area, followed by calculating the total surveillance area and the coverage rate with respect to the total area of the deployment polygon. Together with the complexity of optimizing numerous sensors, this makes the whole process relatively slow, taking at least 131 s (approximately 2.2 min) even in the fastest documented test (CMA-ES on Setup 2). Among the tested MOAs, a tendency toward increased computing time can be observed in algorithms such as SSO and PSO (both swarm algorithms with attractor-based operators), as well as in GA.
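
The link between the number of sensors, the problem dimensionality, and the cost of a fitness evaluation can be sketched as follows. We assume, for illustration only, that the two decision variables per sensor are its planar (x, y) coordinates; moreover, `coverage_rate` is a hypothetical grid-sampling stand-in with circular sensing and no occlusions, not the radial sweep and visibility-polygon procedure used in this work.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def decode(z: Sequence[float]) -> List[Point]:
    """Split a 2n-dimensional decision vector [x1, y1, ..., xn, yn] into n sensor positions."""
    if len(z) % 2 != 0:
        raise ValueError("decision vector length must be even (two variables per sensor)")
    return [(z[2 * k], z[2 * k + 1]) for k in range(len(z) // 2)]


def coverage_rate(z: Sequence[float], width: float, height: float,
                  radius: float, step: float = 0.5) -> float:
    """Fraction of grid sample points within `radius` of at least one sensor.

    Hypothetical simplification: rectangular area, circular sensing range, no occlusions.
    The cost grows with (number of sample points) x (number of sensors).
    """
    sensors = decode(z)
    nx, ny = int(width / step), int(height / step)
    covered = 0
    for i in range(nx):
        for j in range(ny):
            px, py = (i + 0.5) * step, (j + 0.5) * step  # centre of the grid cell
            if any((px - sx) ** 2 + (py - sy) ** 2 <= radius ** 2 for sx, sy in sensors):
                covered += 1
    return covered / (nx * ny)


# Problem dimensionality for the sensor counts reported in the text (2 variables per sensor)
sensors_per_setup: Dict[int, int] = {1: 34, 2: 6, 3: 20, 4: 5}
dims = {setup: 2 * n for setup, n in sensors_per_setup.items()}
print(dims)  # {1: 68, 2: 12, 3: 40, 4: 10}
```

Even in this simplified form, each evaluation visits every sample point and checks it against up to n sensors, so the evaluation cost grows with the number of sensors, which is consistent with the longer times observed for Setups 1 and 3.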
The lengthier computing times of SSO (ranked first, on average, in this category) may be related to the varied and more complex operators it uses to update solutions, the different designations adopted by search agents within the algorithm (male and female, as well as dominant and non-dominant individuals, all of which require additional memory), and the integration of a mating-like mechanism (which, compared to most other MOAs, could demand additional fitness function evaluations within a single iteration). In the case of PSO (ranked, on average, second highest), the computing time could be attributed to its memory mechanisms, which store the local (personal) and global promising solutions that are then used to calculate the updated population. Finally, GA (ranked third, on average, in computing time) is, unlike SSO and PSO, an evolutionary algorithm. In this case, the increased computing time might be associated with the crossover, mutation, and selection operators (and their respective parameter configurations) applied in its iterative process; in particular, since the mutation rate in our tested GA is set to a relatively high value ( ), the algorithm is more prone to altering elements of the available search agents, thus increasing the number of operations performed.
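
Since most of the running time is spent inside the fitness function, counting evaluations is a simple way to compare the cost of different update schemes. The wrapper below is a generic profiling aid; the class name `CountingFitness` and the numbers used (10 agents, 3 iterations, 4 extra offspring evaluations per iteration for a mating-like step) are hypothetical and do not reproduce SSO's actual mating rules.

```python
import time
from typing import Callable, Sequence


class CountingFitness:
    """Wraps a fitness function, counting calls and accumulating the wall-clock time spent in it."""

    def __init__(self, fn: Callable[[Sequence[float]], float]) -> None:
        self.fn = fn
        self.calls = 0
        self.seconds = 0.0

    def __call__(self, x: Sequence[float]) -> float:
        t0 = time.perf_counter()
        try:
            return self.fn(x)
        finally:
            self.seconds += time.perf_counter() - t0
            self.calls += 1


def sphere(x: Sequence[float]) -> float:
    """Cheap placeholder objective."""
    return sum(v * v for v in x)


# Hypothetical budget comparison: one evaluation per agent per iteration, versus the same
# plus a few extra evaluations per iteration for offspring of a mating-like step
pop_size, iterations, offspring_per_iter = 10, 3, 4
plain = CountingFitness(sphere)
mating = CountingFitness(sphere)
agent = [0.5, -1.0]
for _ in range(iterations):
    for _ in range(pop_size):
        plain(agent)
        mating(agent)
    for _ in range(offspring_per_iter):
        mating(agent)  # extra evaluations from the hypothetical mating-like step
print(plain.calls, mating.calls)  # 30 42
```

With an expensive objective such as a visibility-based coverage rate, the ratio of evaluation counts becomes a reasonable first-order proxy for the ratio of running times.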