Abstract

In this paper, an Enhanced Greylag Goose Optimization Algorithm (EGGO) based on evolutionary game theory is presented to address the limitations of the traditional Greylag Goose Optimization Algorithm (GGO) in global search ability and convergence speed. By incorporating dynamic strategy adjustment from evolutionary game theory, EGGO improves global search efficiency and convergence speed. Furthermore, EGGO employs dynamic grouping, random mutation, and local search enhancement to boost efficiency and robustness. Experimental comparisons on standard test functions and the CEC 2022 benchmark suite show that EGGO outperforms other classic algorithms and variants in convergence precision and speed. Its effectiveness in practical optimization problems is also demonstrated through applications in engineering design, such as the design of tension/compression springs, gear trains, and three-bar trusses. EGGO offers a novel solution for optimization problems and provides a new theoretical foundation and research framework for swarm intelligence algorithms.

1. Introduction

Meta-heuristic algorithms are highly effective for solving non-deterministic problems. They find approximate optimal solutions by imitating biological or physical phenomena, using simple structures and limited computational resources [1]. Their strong adaptability and robustness make them a key focus in computational intelligence research. Scholars have proposed numerous meta-heuristic algorithms [2], such as Particle Swarm Optimization (PSO) [3] and the Bat Algorithm (BA) [4]. Valdez and Castillo et al. noted around 150 available algorithms for optimization problems [5]. As a crucial subset of meta-heuristic algorithms, swarm intelligence (SI) algorithms are known for their adaptability, robustness, and flexibility [6]. Notable examples include Grey Wolf Optimizer (GWO) [7], Ant Colony Optimization (ACO) [8], Whale Optimization Algorithm (WOA) [9], and Greylag Goose Optimization (GGO) [10]. Due to their strengths, SI algorithms are widely used in engineering, biomedicine, and other fields [11]. However, they also have limitations such as premature convergence [12], poor balance between exploration and exploitation [13], and susceptibility to local optima [14].

In 2023, Kenawy and Nima Khodadadi proposed the GGO algorithm. This novel meta-heuristic, inspired by the collective behavior and social structure of greylag geese during migration, falls under swarm intelligence [10]. Its dynamic grouping and exploration strategies enable it to escape local optima and enhance the likelihood of finding the global optimum. However, similar to other swarm intelligence algorithms, GGO has limitations such as premature convergence in high-dimensional problems and high computational complexity due to maintaining two groups. To address these issues, Hossein Najafi Khosrowshahi et al. proposed a modified GGO with adaptive mechanisms like dynamic balanced partitioning and stasis detection, improving its robustness and convergence speed [15]. Amal H et al. introduced a Parallel GGO with Restricted Boltzmann Machines (PGGO-RBM), boosting the model’s dynamic balance and accuracy [16]. Ahmed El-Sayed Saqr et al. combined GGO with a Multi-Layer Perceptron (MLP) to enhance accuracy [17]. Nikunj Mashru et al. integrated an elite non-dominated sorting method and archiving mechanism into a single-objective GGO-based algorithm, maintaining its advantages while improving convergence and diversity [18]. Dildar Gürses et al. improved GGO by adding a Lévy flight mechanism and artificial neural network (ANN) strategy, balancing exploration and exploitation, and validated its effectiveness in engineering problems like heat exchanger design, automotive side-impact design, and spring design optimization [19]. These modifications demonstrate GGO’s effectiveness in solving uncertain problems and its great potential. However, challenges such as local optima, premature convergence, and issues with diversity and balance remain.

This paper integrates evolutionary game theory (EGT) into the Greylag Goose Optimization (GGO) algorithm to address its limitations. By imitating competition and cooperation among individuals, EGT guides population evolution. This integration allows for dynamic adjustment of strategy frequencies, enhancing search efficiency and solution quality.

Firstly, the dynamic population structure adjustment mechanism from EGT is introduced to maintain population diversity and exploration ability. A dynamic grouping mechanism adjusts the size of exploration and exploitation groups based on fitness distribution, balancing global and local searching.

Secondly, random mutation and partial re-initialization of individuals are applied to preserve population diversity and avoid premature convergence. The local search scope and intensity are also dynamically adjusted according to fitness distribution.

Finally, the local search capability from EGT is incorporated to strengthen the algorithm’s exploitation. Individuals with high fitness are selected for local searching to further optimize solution quality.

2. Materials and Methods

2.1. Overview of the Greylag Goose Optimization Algorithm

The GGO algorithm is a meta-heuristic algorithm inspired by the migratory behavior of greylag geese. During migration, these geese fly in a V-formation to reduce air resistance and enhance efficiency, demonstrating remarkable collaboration. In the GGO algorithm, the population is divided into an exploration group and an exploitation group. The exploration group focuses on the global search, while the exploitation group handles local optimization. This structure helps to balance exploration and exploitation capabilities.

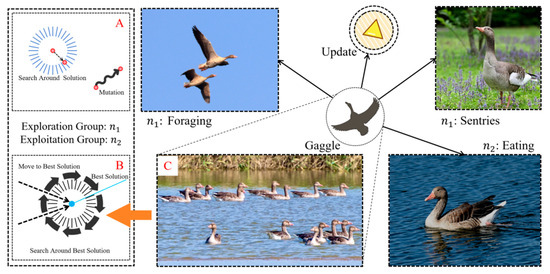

Within a greylag goose gaggle, individuals enhance survival efficiency through division of labor. Some members serve as sentries monitoring environmental risks, while others focus on foraging (Figure 1A). Integrating this dynamic grouping mechanism (Figure 1B) with natural migratory behavior (Figure 1C) creates an efficient search framework for algorithms.

Figure 1.

Greylag Goose Optimization exploration, exploitation, and dynamic groups. (A) Exploration group; (B) Exploitation group; (C) Dynamic group in real life.

2.2. Improvement of Greylag Goose Optimization Based on Evolutionary Game Theory (EGGO)

Evolutionary game theory (EGT) is a framework for studying the evolution of biological populations and their adaptive behaviors [20]. By simulating competition and cooperation among individuals, it offers guidance for population evolution. Integrating EGT into the GGO algorithm enables dynamic adjustment of strategy usage. This, in turn, enhances the algorithm’s search efficiency and solution quality.

2.2.1. Strategy Selection and Update

In the standard GGO, the patrol group, guard group, and foraging group are three types of auxiliary search agents that expand the exploration scope. Within the EGT framework, the agents assigned to these different tasks can be regarded as distinct “strategies”. The strategy selection mechanism in EGT dynamically adjusts the usage frequency of these strategies. By following the leaders of the different auxiliary search agent groups, a wider search scope and better solutions can be obtained.

The dynamic population structure adjustment mechanism of EGT is incorporated into the GGO to maintain population diversity and exploration ability. A dynamic grouping mechanism adjusts the size of exploration and exploitation groups based on fitness distribution, balancing global and local searching. Random mutation or partial individual re-initialization is introduced to preserve diversity and prevent premature convergence. Meanwhile, the local search capability of EGT is integrated into the GGO to enhance its exploitation. Individuals with high fitness are selected for local searching to optimize solution quality, with the local search range and intensity dynamically adjusted according to fitness distribution.
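The partial re-initialization step mentioned above can be illustrated with a minimal sketch. It assumes a uniform re-draw inside box bounds and a user-chosen fraction of individuals; the function name `reinitialize_fraction`, the `fraction` parameter, and the uniform distribution are illustrative assumptions and are not specified in this paper.

```python
import numpy as np

def reinitialize_fraction(population, lower, upper, fraction, rng):
    """Re-draw a random fraction of individuals uniformly within the bounds.

    Hypothetical helper: the selection rule and the uniform re-draw are
    assumptions made for illustration only.
    """
    pop = np.array(population, dtype=float, copy=True)  # leave the input untouched
    n, dim = pop.shape
    k = int(round(fraction * n))                   # number of individuals to reset
    idx = rng.choice(n, size=k, replace=False)     # distinct individuals
    pop[idx] = rng.uniform(lower, upper, size=(k, dim))
    return pop, idx

rng = np.random.default_rng(1)
population = np.zeros((10, 3))
new_population, reset_idx = reinitialize_fraction(population, -5.0, 5.0, 0.2, rng)
```

In practice the best individual would usually be excluded from the re-draw so that the incumbent solution is not lost; that choice is left open here.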

2.2.2. Fitness Assessment and Evolutionary Stable Strategy (ESS)

In EGT, fitness assesses individuals’ quality within a population. In the GGO, an individual’s fitness value reflects its position in the search space. Comparing these values identifies superior individuals and guides population evolution.

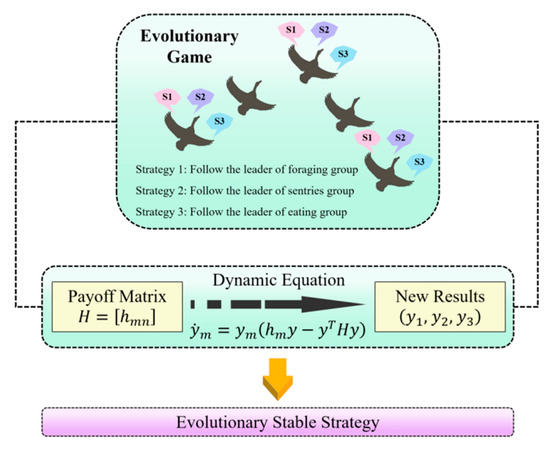

In evolutionary game theory, an Evolutionary Stable Strategy (ESS) is a key concept that describes a strategy prevailing in a population. Within the GGO framework, an ESS guides population evolution, ensuring convergence to a stable strategy distribution. To clarify the proposed method’s workings, a detailed schematic of the Evolutionary Game Theory Greylag Goose Optimization (EGGO) is presented in Figure 2.

Figure 2.

Schematic diagram of EGGO in detail.

In the mixed game method, the following settings can be made:

(1) Each greylag goose is mapped to a player in the evolutionary game.

(2) The three operators are regarded as three available strategies S1, S2, and S3, with the state space given by the strategy proportions $y = (y_1, y_2, y_3)$, where $y_m$ represents the proportion of strategy m in the population.

(3) The average behavior obtained by following a specific strategy constitutes the payoff matrix H.

Subsequently, the game proceeds based on the fundamental evolutionary dynamics mechanism proposed by Taylor and Jonker [20], which is written as:

Here, Hm represents the m-th row of the payoff matrix H and stores all the fitness information of the population, that is, the combined result of each individual using a single strategy. Therefore, H can be defined as:

Here, each entry of H represents the benefit that a goose obtains by using strategy m. As a first-order ordinary differential equation that describes the difference between the fitness of a strategy and the average fitness in the group, Equation (1) depicts the evolution of the strategy frequency $y_m$. Once (2) is established, (1) is executed to generate the related Evolutionary Stable Strategy (ESS), which is the output of the game [21]. This yields the ESS candidate at iteration t, in which $y_m$ represents the proportion of strategy m in the t-th iteration. It should be noted that the initial strategy proportions are set to a uniform distribution, and during the update process the proportions are normalized to ensure that they sum to one. If a proportion falls below a preset lower threshold, it is reset and the proportions are re-normalized. This process effectively prevents boundary absorption.
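For orientation, the replicator dynamics of Taylor and Jonker are commonly written in the textbook form below. The symbols $f_m$ (payoff of strategy m) and $\bar{f}$ (population-average payoff) are introduced here for illustration and are assumptions; in a matrix game the payoff is $f_m(y) = (Hy)_m$. This is the standard form and may differ in detail from Equation (1).

```latex
\dot{y}_m = y_m \bigl( f_m(y) - \bar{f}(y) \bigr),
\qquad
\bar{f}(y) = \sum_{k=1}^{3} y_k \, f_k(y),
\qquad m = 1, 2, 3 .
```

Summing over m gives $\sum_m \dot{y}_m = \bar{f} - \bar{f}\sum_m y_m = 0$ whenever the proportions sum to one, so the continuous dynamics keep the state on the simplex; a strategy whose payoff exceeds the average grows, and one below the average shrinks.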

Taking into account the iterative process, the benefit of the strategy is presented as:

Among them, represents the average value during the iteration process, and indicates the cost of goose X in the j-th iteration. Clearly, (3) reflects the average performance of the gain obtained through a specific strategy. During the process of solving the dynamic equation, better results are continuously generated to replace the previous solutions, and the game eventually converges to an ESS that no mutation strategy can invade [21]. The replicator dynamics are discretized via the forward Euler method, , with step size per algorithm iteration. This discretization preserves evolutionary directionality while maintaining computational efficiency, and empirical results confirm its efficacy in driving convergence toward ESS.
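The discretized update described above can be sketched as follows. This is a minimal illustration, assuming a per-strategy payoff vector in which larger values are better, a step size `dt`, and a lower bound `floor` on each proportion; the numeric defaults and the function name `replicator_step` are hypothetical and are not the values used in this paper.

```python
import numpy as np

def replicator_step(y, payoff, dt=0.1, floor=1e-3):
    """One forward Euler step of the replicator dynamics with renormalization.

    y      : current strategy proportions (sums to one)
    payoff : payoff of each strategy, larger is better (assumed convention)
    dt     : Euler step size (hypothetical default)
    floor  : lower bound that keeps every strategy alive (hypothetical default)
    """
    y = np.asarray(y, dtype=float)
    payoff = np.asarray(payoff, dtype=float)
    mean_payoff = float(y @ payoff)                  # population-average payoff
    y_new = y + dt * y * (payoff - mean_payoff)      # forward Euler update
    y_new = np.maximum(y_new, floor)                 # avoid boundary absorption
    return y_new / y_new.sum()                       # re-normalize to the simplex

y0 = np.full(3, 1.0 / 3.0)                           # uniform initial proportions
y1 = replicator_step(y0, payoff=[1.0, 2.0, 3.0])
```

Starting from the uniform distribution, the strategy with the highest payoff gains share after one step, while the floor guarantees that a strategy whose share has collapsed can still be selected later.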

Through the optimization of strategy selection, population structure, and local searching using evolutionary game theory, EGGO significantly improves the convergence speed and global optimization ability while maintaining the biological behavior simulation of the greylag goose algorithm. Experimental results show that this improved algorithm has higher solution accuracy and stability in complex optimization problems.

2.3. Lyapunov Stability Theory

To verify the convergence of the EGGO algorithm, we constructed a Lyapunov function to prove the stability of the system through dynamic adjustment of the strategy.

2.3.1. Dynamic System Modeling

In EGGO, the evolution of strategy proportions follows a first-order ordinary differential equation, as expressed in Equation (4). Here, represents the vector of strategy proportions, is the payoff function of strategy m, and is the average payoff.

Assumption 1: The payoff function is continuously differentiable within the strategy space, and the payoff of each strategy decreases as the proportion of a competing strategy grows (indicating that there is a competitive relationship among the strategies).

The assumption stems from resource competition in optimization:

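- Strategy similarity: an increase in the proportion of one strategy reduces the payoff of another if the two strategies exploit overlapping regions.

- Resource dilution: a fixed population size implies that a higher proportion of one strategy diminishes the resources available to the other strategies.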

We emphasize that ESS convergence guarantees only local stability of the strategy distribution, not global optimality. EGGO mitigates local optima through three mechanisms: dynamic group adjustment, which increases the exploration ratio upon solution stagnation; random mutation, which perturbs agents so that they can escape locally optimal solutions; and persistent multi-strategy coexistence, which retains exploratory strategies to sustain global search potential.

2.3.2. Lyapunov Stability Proof

The candidate Lyapunov function is defined as the negative entropy function of the strategy distribution, as given by Equation (5). This function is positive definite within the strategy simplex and attains its minimum value at the equilibrium point (ESS).

The time derivative of along the system trajectory is calculated as follows:

According to Assumption 1, when the payoff of strategy m is , its proportion increases (). At this time, increases, but () is negative, so the product term ; conversely, the same reasoning holds. Therefore, , and only when .

According to Lyapunov’s stability theorem:

(1) The candidate Lyapunov function is positive definite within the strategy space;

(2) Its time derivative is non-positive and vanishes only at the equilibrium point (ESS).

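Therefore, under Assumption 1, the strategy proportions converge asymptotically to an ESS. This shows that the strategy dynamics of the EGGO algorithm, enhanced by evolutionary game theory, have a theoretical convergence guarantee; as noted in Section 2.3.1, this guarantee concerns the strategy distribution and not the global optimality of the solution.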

2.4. EGGO Algorithm Model and Analysis

This section updates the GGO’s mathematical model based on the improvements outlined in Section 2.2. It includes the EGGO mathematical model, algorithmic complexity analysis, pseudocode for EGGO, and a visualization of the algorithmic process.

2.4.1. Mathematical Model

The exploration group () in the gaggle will search for promising new locations near its current position. This is achieved by repeatedly comparing numerous potential nearby options to find the best one based on fitness. The EGGO algorithm achieves this through the following equation to update vectors A and C to and during iterations, where parameter a linearly changes from 2 to 0, and .

Here, represents the agent in iteration t. denotes the position of the best solution (the leader). is the updated position of the agent. The values of and randomly vary within the range of .

Three random search agents are selected and named , and to prevent the agents from being influenced by a leader position, thereby achieving greater exploration. This stage is improved through evolutionary game theory. The positions of the improved search agents will be updated as follows, where .

Among them, the values of , , and are updated in [0, 2]. , , and satisfy . represents the conversion factor and is a random number within [0, 1]. Parameter z decreases exponentially, and the calculation method is as follows.

During the second update process, the values of , and the vector values of and have decreased, as follows:

In the formula, parameter is a constant, and is a random value in [−1, 1]. The parameter is updated in [0, 2]. and are updated in [0, 1].

The exploitation group () focuses on refining existing solutions. At the end of each cycle, individuals with the highest fitness are identified and rewarded. Three sentries (1, 2, and 3) guide other individuals to adjust their positions toward the estimated prey location. The following equation illustrates this position-updating process.

Among them, , , and are calculated as , while , , and are calculated as . The updated position is represented as the average of the three solutions, , , and , as shown below.

The most promising option is to stay close to the leader during flight. This prompts some greylag geese to investigate and approach the area with the most desirable response in order to find a better solution. EGGO implements the above process using the following equation.
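The leader-following and sentry-averaging updates described in this subsection can be sketched as follows. The sketch assumes the Grey-Wolf-style forms A = 2a·r1 − a and C = 2·r2 with r1 and r2 drawn uniformly from [0, 1], and a position update of the form X* − A·|C·X* − X|; these forms, and the function names, are assumptions consistent with the definitions above and are not a reproduction of the equations of this paper.

```python
import numpy as np

def linear_a(t, t_max):
    """Parameter a decreasing linearly from 2 to 0 over the run."""
    return 2.0 * (1.0 - t / t_max)

def follow_leader(x, leader, a, rng):
    """Move agent x relative to a leader position (assumed update form)."""
    r1 = rng.random(x.shape)              # r1 in [0, 1)
    r2 = rng.random(x.shape)              # r2 in [0, 1)
    A = 2.0 * a * r1 - a                  # assumed form of vector A
    C = 2.0 * r2                          # assumed form of vector C
    return leader - A * np.abs(C * leader - x)

def sentry_average(x, sentries, a, rng):
    """Average of the three candidate moves guided by the three sentries."""
    moves = [follow_leader(x, s, a, rng) for s in sentries]
    return np.mean(moves, axis=0)

rng = np.random.default_rng(0)
x = np.array([1.0, 2.0])
leader = np.array([0.5, -0.5])
sentries = [np.array([0.0, 0.0]), np.array([1.0, 1.0]), np.array([2.0, -1.0])]
candidate = sentry_average(x, sentries, linear_a(10, 500), rng)
```

As a decreases toward 0, A shrinks toward 0 and each move collapses onto its guide, which is how the late iterations shift from exploration to exploitation.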

2.4.2. Analysis of Algorithm Complexity

To evaluate the computational efficiency of the EGGO algorithm, an analysis is conducted from both temporal and spatial complexity perspectives to quantify the asymptotic behavior of resource consumption during the iterative process.

(1) Temporal Complexity: Compared with the standard GGO, the introduction of payoff matrix updates results in a temporal complexity of . When solving the ordinary differential equation in Equation (4), the complexity is . Additionally, performing mutation operations on k individuals incurs a temporal complexity of . Overall, although EGGO maintains the same order of temporal complexity as GGO, there is an increase in overall complexity. Considering that the total complexity of EGGO is , which aligns with mainstream algorithms, it is capable of meeting the demands for rapid optimization.

(2) Spatial Complexity: Compared with the standard GGO, storing the strategy proportion vector y, payoff matrix H, and historical payoff records leads to a spatial complexity of . Retaining intermediate solutions for neighborhood searching contributes a spatial complexity of . In summary, the overall spatial complexity of EGGO is . This indicates that the algorithm’s memory consumption is primarily dominated by population size and problem dimensionality, demonstrating good scalability.

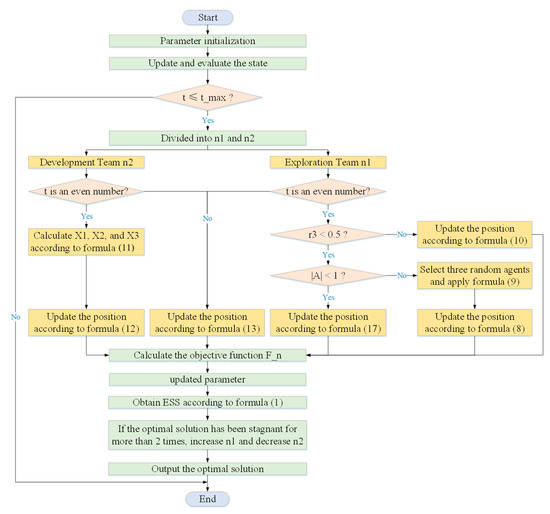

2.4.3. Algorithm Pseudocode and Flowchart

Based on the previous content, we compiled the pseudo-code of EGGO, as shown in Algorithm 1, which demonstrates the operation logic of EGGO. On this basis, we visualized the algorithm flow to further explain the operation logic of EGGO and the collaborative relationships among multiple processes in the mathematical model. The visualized algorithm flow is shown in Figure 3.

| Algorithm 1 Pseudocode of EGGO |
| --- |
| 1. Initialize EGGO population , size , iterations , and objective function |
| 2. Initialize EGGO parameters, |
| 3. Calculate objective function for each agent |
| 4. Set best agent position |
| 5. Update solutions in exploration group () and exploitation group () |
| 6. while do |
| 7. Initialize the transition factor , strategy proportion |
| 8. Divide the exploration group members into three parts |
| 9. Calculate the average fitness values of each part , , and . |
| 10. Initialize the payoff matrix |
| 11. Update the strategy proportion based on Equation (1) |
| 12. for () do |
| 13. if () then |
| 14. if () then |
| 15. if () then |
| 16. Update position of current search agent as Equation (7) |
| 17. else |
| 18. Select three random search agents , , and |
| 19. Update (z) by the exponential form of Equation (9) |
| 20. Update position of current search agent as Equation (8) |
| 21. end if |
| 22. else |
| 23. Update position of current search agent as Equation (10) |
| 24. end if |
| 25. else |
| 26. Update position of current search agent as Equation (13) |
| 27. end if |
| 28. end for |
| 29. for () do |
| 30. if () then |
| 31. Calculate , , and by Equation (11) |
| 32. Update individual position as Equation (12) |
| 33. else |
| 34. Update position of current search agent as Equation (13) |
| 35. end if |
| 36. end for |
| 37. Calculate objective function for each agent |
| 38. Update parameters |
| 39. Set |
| 40. Adjust solutions that fall outside the search space |
| 41. if (Best is same as previous two iterations) then |
| 42. Increase solutions of exploration group () |
| 43. Decrease solutions of exploitation group () |
| 44. end if |
| 45. end while |
| 46. Return best agent |

Figure 3.

Flowchart of EGGO.
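Steps 41 to 44 of Algorithm 1, in which the exploration group grows when the best solution has not changed over the previous two iterations, can be sketched as follows. The transfer size `step`, the tolerance `tol`, and the function name `adjust_groups` are illustrative assumptions; the paper does not state how many agents are moved per adjustment.

```python
def adjust_groups(n_explore, n_exploit, best_history, step=1, tol=0.0):
    """Shift agents toward exploration when the best value has stalled.

    best_history : best objective value recorded at each iteration so far
    step         : number of agents moved per adjustment (hypothetical)
    tol          : tolerance for treating two best values as equal (hypothetical)
    """
    stalled = (
        len(best_history) >= 3
        and abs(best_history[-1] - best_history[-2]) <= tol
        and abs(best_history[-1] - best_history[-3]) <= tol
    )
    # Keep at least one agent in the exploitation group.
    if stalled and n_exploit - step >= 1:
        return n_explore + step, n_exploit - step
    return n_explore, n_exploit

print(adjust_groups(50, 50, [3.0, 3.0, 3.0]))   # stalled: one agent is moved
print(adjust_groups(50, 50, [3.0, 2.5, 2.0]))   # still improving: unchanged
```

The total population size is preserved by construction, so the adjustment changes only the balance between global and local search.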

3. Results

3.1. Comparison and Analysis of Test Functions

This section compares EGGO with seven classic algorithms (standard GGO [10], GWO [7], MFO [22], SSA [23], WOA [9], HHO [24], and PSO [3]) using benchmark functions and the CEC 2022 test suite. Simulations are run on an Intel(R) Core(TM) i7-14650HX processor (2.2 GHz) with 32 GB RAM under Windows 11. The algorithms are implemented in MATLAB R2024b, with parameters set as shown in Table 1.

Table 1.

Settings of algorithm parameters under 500 iterations and a population size of 100.

3.1.1. Benchmark Test Functions

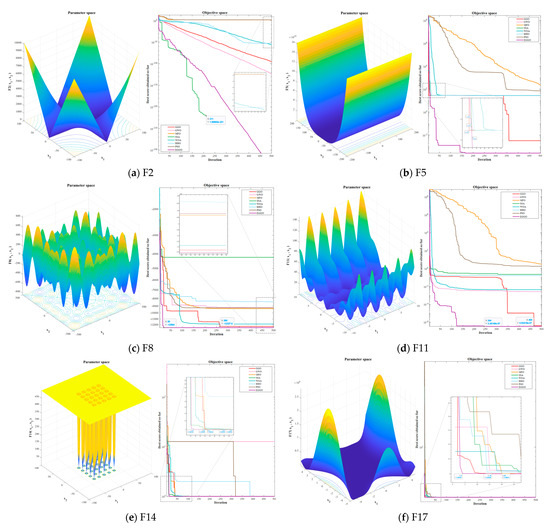

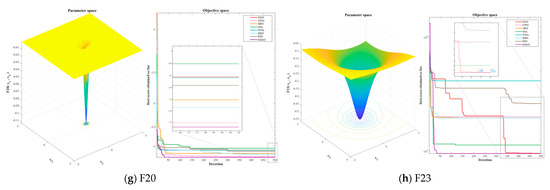

Among the 23 benchmark test functions, we selected 8 at regular intervals, taking every third function (F2, F5, F8, F11, F14, F17, F20, and F23), as the comparison test functions for this stage. The 3D images of the test functions and the convergence curves of the algorithms are shown in Figure 4.

Figure 4.

Test results in benchmark test function.

From the results shown in Figure 4, it can be observed that EGGO significantly outperforms the other algorithms on the unimodal (F2, F5) and multimodal functions (F8, F11). Its advantage is smaller on the hybrid functions (F14, F17) and composite functions (F20, F23). Notably, across the 30 repeated runs of each benchmark function, the performance of SSA is unstable. For example, in Figure 4a, SSA stops converging once the number of iterations reaches 211. For every benchmark function, EGGO ranks first in more than 28 of the 30 repeated runs, demonstrating strong performance.

3.1.2. CEC 2022 Test Suite

In this section, the above eight algorithms are compared using the CEC 2022 test suite. The function information of the test suite is shown in Table 2 below.

Table 2.

Details of the CEC 2022.

The test suite dimension is set to 10 dimensions (10D), and the experimental results are shown in Table 3. According to Table 3, in the 10D tests of 12 functions, EGGO achieves the following rankings:

Table 3.

Results of the CEC 2022 (10D).

Average Value (Ave.): first place in eight functions (F2, F3, F4, F6, F7, F9, F11, F12); second place in two functions (F8, F10); third place in one function (F5); sixth place in one function (F1).

Standard Deviation (Std.): first place in ten functions (F1, F3, F4, F5, F6, F7, F8, F9, F11, F12); second place in two functions (F2, F10).

Execution Time (Time): first place in two functions (F7, F11); fifth place in three functions (F8, F9, F12); sixth place in one function (F10); seventh place in three functions (F3, F4, F5); eighth place in three functions (F1, F2, F6).

Best Value (Best): first place in nine functions (F3, F4, F5, F6, F7, F8, F9, F11, F12); third place in two functions (F1, F2); seventh place in one function (F10).

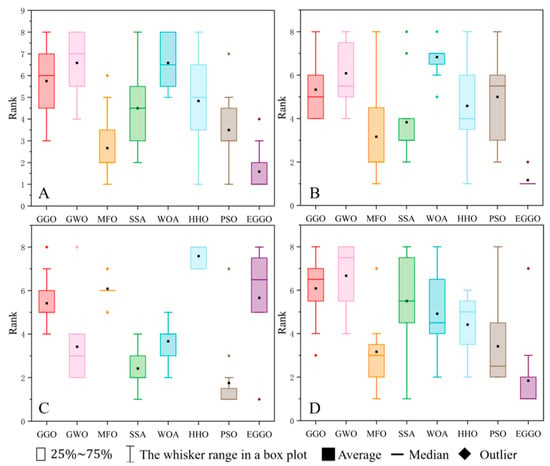

Based on the rankings in Table 3, Figure 5 visualizes the performance of all eight algorithms across four metrics: Ave., Std., Time, and Best.

Figure 5.

Test result (CEC 2022 10D) ranking: (A) Ave.; (B) Std.; (C) Time; (D) Best.

The test suite dimension is set to 20 dimensions (20D), and the experimental results are shown in Table 4. According to Table 4, in the 20D tests of 12 functions, EGGO achieves the following rankings:

Table 4.

Results of the CEC 2022 (20D).

Average Value (Ave.): first place in eleven functions (F1, F2, F3, F4, F5, F6, F7, F8, F10, F11, F12); second place in one function (F9).

Standard Deviation (Std.): first place in seven functions (F2, F3, F6, F7, F8, F10, F12); second place in three functions (F1, F5, F9); third place in two functions (F4, F11).

Execution Time (Time): first place in four functions (F1, F3, F5, F8); second place in one function (F2); fifth place in three functions (F9, F10, F11); sixth place in two functions (F7, F12); seventh place in two functions (F4, F6).

Best Value (Best): first place in eight functions (F2, F4, F5, F6, F8, F9, F10, F12); second place in three functions (F3, F7, F11); third place in one function (F1).

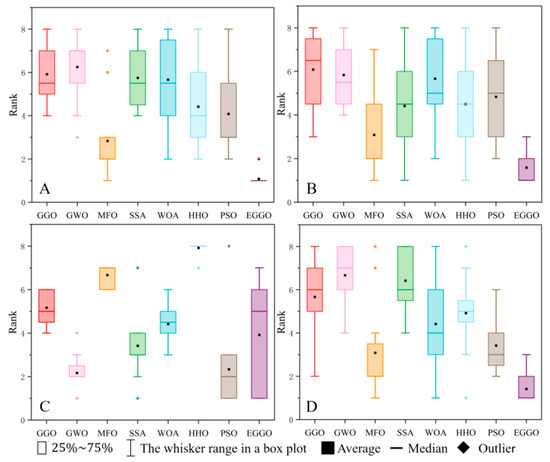

Based on the rankings in Table 4, Figure 6 visualizes the performance of all eight algorithms across four metrics: Ave., Std., Time, and Best.

Figure 6.

Test result (CEC 2022 20D) ranking: (A) Ave.; (B) Std.; (C) Time; (D) Best.

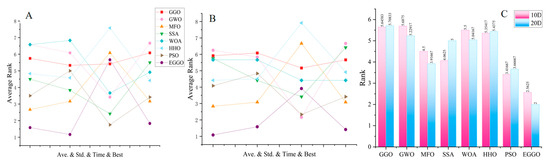

Comprehensive evaluation of algorithm performance across the CEC 2022 benchmark suite indicates that EGGO outperforms the other algorithms, particularly in terms of convergence speed and precision. When the dimensionality of the test suite increases from 10D to 20D, EGGO's advantage becomes more pronounced in most metrics: its rankings for mean value, execution time, and best value in the 20D tests are better than those in the 10D tests, whereas its standard deviation ranking is slightly lower (first place in seven functions rather than ten). This suggests that EGGO copes well with higher-dimensional problems compared to the other algorithms. The visualization results of this analysis are presented in Figure 7.

Figure 7.

Test result: (A) average rank in 10D test; (B) average rank in 20D test; (C) total average rank in 10D and 20D test.
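The average ranks summarized above can be recomputed directly from the per-function placements listed for Tables 3 and 4. The short script below is a worked arithmetic check; the dictionary layout and the helper name `average_rank` are illustrative, while the placement counts are taken from the rankings reported in this section.

```python
def average_rank(place_counts):
    """Mean rank given a mapping {place: number of functions at that place}."""
    total = sum(place_counts.values())
    return sum(place * n for place, n in place_counts.items()) / total

# Placement counts of EGGO over the 12 CEC 2022 functions.
ranks_10d = {
    "Ave":  {1: 8, 2: 2, 3: 1, 6: 1},
    "Std":  {1: 10, 2: 2},
    "Time": {1: 2, 5: 3, 6: 1, 7: 3, 8: 3},
    "Best": {1: 9, 3: 2, 7: 1},
}
ranks_20d = {
    "Ave":  {1: 11, 2: 1},
    "Std":  {1: 7, 2: 3, 3: 2},
    "Time": {1: 4, 2: 1, 5: 3, 6: 2, 7: 2},
    "Best": {1: 8, 2: 3, 3: 1},
}

for metric in ranks_10d:
    r10 = average_rank(ranks_10d[metric])
    r20 = average_rank(ranks_20d[metric])
    print(f"{metric}: 10D {r10:.2f}, 20D {r20:.2f}")
```

This gives average ranks of 1.75 and 1.08 for Ave., 1.17 and 1.58 for Std., 5.67 and 3.92 for Time, and 1.83 and 1.42 for Best in the 10D and 20D tests, respectively.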

3.2. Engineering Applications of EGGO

To further evaluate the EGGO algorithm’s applicability to engineering design problems, this paper assesses its performance on three such problems: gear train design, tension–compression spring design, and three-bar truss design. In the experiments, the design variables of each problem serve as individual information in the algorithm, with the design model acting as the objective function for optimization. The results of the EGGO algorithm are compared with those of other algorithms to demonstrate its superiority in solving engineering problems. These algorithms originate from various sources in the literature and include PSO [3], GWO [7], SSA [23], WOA [9], MFO [22], HHO [24], GMO [25], KABC [26], ALO [27], GOA [28], CS [29], MBA [30], GSA [31], IAPSO [32], and DMMFO [33].

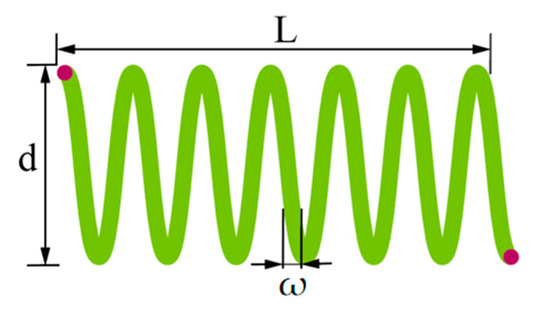

3.2.1. Tension/Compression Spring Design Problem

As shown in Figure 8, the tension/compression spring design (TCSD) problem is a constrained optimization task aimed at minimizing spring volume under constant tension/compression loads, as described by Belegundu and Arora [34]. It involves three design variables: the number of coils (L), the coil diameter (d), and the wire diameter (w).

Figure 8.

Schematic of the tension/compression spring.

The calculation model of the tension/compression spring is as follows:

It is subject to the following constraints:

The value ranges of the three design variables are as follows:

For constrained optimization, EGGO employs a static penalty function method. The fitness function is reformulated as , where is the primary objective; denotes design constraints; and the penalty coefficient ensures strong infeasibility rejection. This mechanism transforms constrained problems into unconstrained optimization via objective space mapping.
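A static penalty of this kind can be sketched on the spring problem as follows. The sketch assumes the widely used formulation of the tension/compression spring benchmark (objective (L + 2)·d·w², four inequality constraints of the form g ≤ 0) and a quadratic penalty with coefficient `penalty`; the coefficient value, the quadratic form, and the function names are assumptions, not the settings of this paper.

```python
def spring_objective(w, d, L):
    """Spring volume measure: wire diameter w, coil diameter d, number of coils L."""
    return (L + 2.0) * d * w ** 2

def spring_constraints(w, d, L):
    """Constraint values g_i; the design is feasible when every g_i <= 0."""
    g1 = 1.0 - (d ** 3 * L) / (71785.0 * w ** 4)
    g2 = ((4.0 * d ** 2 - w * d) / (12566.0 * (d * w ** 3 - w ** 4))
          + 1.0 / (5108.0 * w ** 2) - 1.0)
    g3 = 1.0 - 140.45 * w / (d ** 2 * L)
    g4 = (w + d) / 1.5 - 1.0
    return [g1, g2, g3, g4]

def penalized_fitness(w, d, L, penalty=1e6):
    """Objective plus a static quadratic penalty on violated constraints.

    penalty is a hypothetical coefficient chosen for illustration.
    """
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(w, d, L))
    return spring_objective(w, d, L) + penalty * violation

feasible = penalized_fitness(0.06, 0.5, 10.0)     # all constraints satisfied
infeasible = penalized_fitness(0.05, 1.3, 2.0)    # violates the second constraint
```

For a feasible design the penalty term vanishes and the fitness equals the raw objective, so the optimizer can treat the problem as unconstrained while infeasible designs are pushed far from the optimum.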

As indicated in Table 5, the computational outcomes of the EGGO algorithm for the tension/compression spring design problem are compared with those from seven other algorithms. All variables in the results satisfy the constraints. The EGGO algorithm demonstrates significant superiority in performance.

Table 5.

Comparison of the best solution for tension/compression spring design problem.

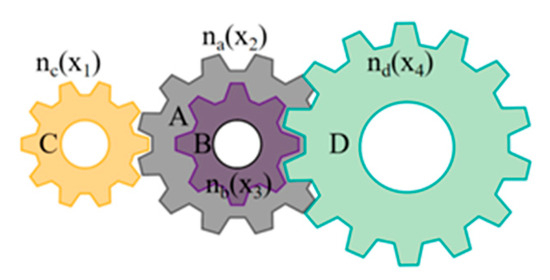

3.2.2. Gear Train Design Problem

Gear train design (GTD) is a classic engineering design problem in mechanical transmission [35,36]. Its objective is to determine the number of teeth on each gear in the transmission system based on a reasonable gear ratio. The gear train structure is shown in Figure 9. The design variables for GTD consist of the number of teeth on four gears, denoted as x1, x2, x3, and x4.

Figure 9.

Transmission diagram of the gear train.

The specific mathematical model is as follows:

It is subject to .

The design variables of the gear train design problem must take integer values. As shown in Table 6, EGGO yields the same optimal results as GMO, ALO, IAPSO, and MBA. This demonstrates that EGGO’s solution is both optimal and feasible.
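The gear train objective can be illustrated with a minimal sketch. It assumes the commonly used form of this benchmark, a squared deviation from the target ratio 1/6.931 with integer tooth counts between 12 and 60; the assignment of x1 to x4 to the numerator and denominator, the bounds, and the function names are assumptions, since they are not restated here.

```python
def gear_ratio_error(x1, x2, x3, x4):
    """Squared deviation of the gear ratio from the target 1/6.931.

    Assumed variable mapping: x2 and x3 in the numerator, x1 and x4 in the
    denominator (a common convention for this benchmark).
    """
    return (1.0 / 6.931 - (x2 * x3) / (x1 * x4)) ** 2

def is_valid_design(teeth, low=12, high=60):
    """Tooth counts must be integers within the assumed bounds [12, 60]."""
    return all(isinstance(t, int) and low <= t <= high for t in teeth)

design = (43, 16, 19, 49)     # a frequently reported best-known design
error = gear_ratio_error(*design)
```

Because the variables are integers, a continuous optimizer typically rounds each candidate to the nearest integer before evaluating the objective.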

Table 6.

Comparison of the best solution for gear train design problem.

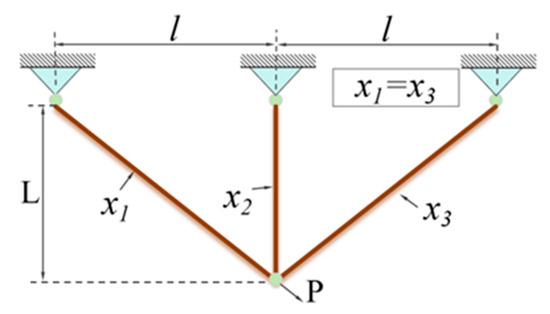

3.2.3. Three-Bar Truss Design Problem

A schematic diagram of the three-bar truss design (T-BTD) problem, a mechanical optimization problem [26], is shown in Figure 10. The objective of this problem is to minimize the weight of the three-bar truss structure while meeting the constraints of stress and loading force. The optimization variables of this problem are the cross-sectional areas of the connecting rods (x1, x2).

Figure 10.

Schematic of three-bar truss mechanism.

The specific optimization objective function is:

In the formula, l represents the distance between the connecting rods, and l = 100 cm, x1, x2 ∈ [0, 1].

During the T-BTD optimization process, the design variables are constrained from three aspects: structural stress, material deflection, and buckling. The three constraint formulas are as follows:

Here, P = 2 kN/cm² and σ = 2 kN/cm².

Based on Equations (18) and (19), the EGGO algorithm was applied to solve the three-bar truss design problem, with results presented in Table 7. When compared to the other algorithms, EGGO achieved the same optimal fitness as GMO, ALO, and GSA. Additionally, the solution values x1 and x2 satisfy the constraints. These experimental results demonstrate the EGGO algorithm’s capability to effectively solve the three-bar truss design problem.
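The objective and constraints of the three-bar truss problem can be sketched as follows. The sketch assumes the standard formulation of this benchmark, with weight (2√2·x1 + x2)·l and three stress constraints of the form g ≤ 0, together with l = 100 cm and P = σ = 2 kN/cm² as stated above; the function names are illustrative, and the expressions are assumed to correspond to Equations (18) and (19).

```python
import math

def truss_weight(x1, x2, l=100.0):
    """Weight measure of the truss for cross-sectional areas x1 and x2."""
    return (2.0 * math.sqrt(2.0) * x1 + x2) * l

def truss_constraints(x1, x2, P=2.0, sigma=2.0):
    """Constraint values g_i; the design is feasible when every g_i <= 0.

    Requires x1 > 0, otherwise the shared denominator is zero.
    """
    s = math.sqrt(2.0)
    denom = s * x1 ** 2 + 2.0 * x1 * x2
    g1 = (s * x1 + x2) / denom * P - sigma
    g2 = x2 / denom * P - sigma
    g3 = 1.0 / (s * x2 + x1) * P - sigma
    return [g1, g2, g3]

weight = truss_weight(0.788675, 0.408248)      # near the best-known design
slack = truss_constraints(1.0, 1.0)            # a clearly feasible design
```

The best-known design lies on the boundary of the first constraint, which is why rounding the design variables can make it appear marginally infeasible.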

Table 7.

Comparison of the best solution for three-bar truss design problem.

4. Conclusions

In response to the shortcomings of the traditional GGO, such as being prone to getting trapped in local optima and having a slow convergence speed, an EGGO algorithm based on evolutionary game theory is proposed. EGGO significantly enhances the global search ability and convergence speed by introducing the dynamic strategy adjustment mechanism from evolutionary game theory. At the same time, it adopts strategies such as a dynamic grouping mechanism, random mutation, and local search enhancement to further improve the efficiency and robustness of the algorithm. The dynamic grouping mechanism of EGGO adjusts the sizes of the exploration group and exploitation group according to the fitness distribution of the population to balance global exploration and local exploitation; random mutation or re-initialization of some individuals maintains the diversity of the population and prevents premature convergence; and the introduction of a local search ability improves the local exploitation ability of the algorithm.

The EGGO algorithm was compared with seven classic algorithms: standard GGO, GWO, MFO, SSA, WOA, HHO, and PSO. The results indicate that EGGO surpasses the others in multiple standard test functions and the CEC 2022 benchmark suite. It shows excellent performance in convergence accuracy and speed. EGGO is significantly better in unimodal and multimodal functions. Though its advantage is slightly less in hybrid and composite functions, it still ranks first in more than 28 of 30 runs per group, demonstrating its strong performance. In the CEC 2022 tests for 10D and 20D, EGGO achieved excellent results. Among the 12 functions in the 10D test, EGGO ranked first on eight functions in mean value, ten in standard deviation, and nine in best value, although it ranked first in execution time on only two. In the 20D test, it ranked first on eleven functions in mean value, seven in standard deviation, eight in best value, and four in execution time. This indicates that EGGO compares favorably with the other algorithms as the problem dimensionality increases.

In engineering applications, EGGO was applied to the engineering design problems of tension/compression spring design, gear train design, and three-bar truss design. The experimental results indicate that EGGO can efficiently solve these problems while satisfying constraints, with results either comparable to or better than other advanced algorithms. This demonstrates the feasibility and effectiveness of EGGO in practical engineering optimization.

The main contributions of this paper are as follows:

1. An EGGO algorithm is proposed. By incorporating dynamic strategy adjustment from evolutionary game theory, it improves the algorithm’s adaptability and strategy selection ability. This significantly boosts global search efficiency and convergence speed.

2. EGGO uses a dynamic grouping mechanism. Based on the population's fitness distribution, it adjusts the sizes of the exploration and exploitation groups to balance global and local search. This helps avoid premature convergence and supports strong performance on high-dimensional problems.

3. Random mutation and local search enhancement strategies are adopted. These maintain population diversity, prevent early convergence, and improve local exploitation ability.
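
The dynamic strategy adjustment named in the first contribution can be sketched with the replicator equation from evolutionary game theory, dx_i/dt = x_i (f_i - f_avg), where x_i is the share of the population using strategy i and f_i is that strategy's payoff. The example below is a hedged illustration, not the paper's update rule: the three strategies, their payoff values, the step size `dt`, and the `floor` that keeps every strategy selectable are all assumptions.

```python
def replicator_step(shares, payoffs, dt=0.1, floor=1e-3):
    """One Euler step of the replicator equation dx_i/dt = x_i * (f_i - f_avg)."""
    avg = sum(x * f for x, f in zip(shares, payoffs))
    updated = [x + dt * x * (f - avg) for x, f in zip(shares, payoffs)]
    # Assumed safeguard: never let a strategy vanish completely.
    updated = [max(u, floor) for u in updated]
    total = sum(updated)
    return [u / total for u in updated]


# Hypothetical payoffs, e.g. the recent success rate of each strategy
# (exploration, exploitation, mutation).
shares = [1 / 3, 1 / 3, 1 / 3]
payoffs = [1.0, 0.5, 0.0]
for _ in range(200):
    shares = replicator_step(shares, payoffs)
print([round(s, 3) for s in shares])
```

Strategies whose payoff exceeds the population average gain share and the others lose it, so the search shifts toward whichever behavior is currently paying off. In an optimizer the payoffs would be re-estimated every iteration rather than held fixed as they are here.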

Despite the remarkable performance improvements achieved by EGGO, there remains scope for further enhancement. Future work will focus on fine-tuning the algorithm’s parameters to accommodate diverse optimization scenarios and integrating additional adaptive strategies to bolster the algorithm’s robustness and applicability. Furthermore, the potential of EGGO will be explored in a broader range of practical applications, such as engineering design, resource allocation, and scheduling problems. In summary, the EGGO algorithm proposed in this paper has significantly advanced the development of GGO and laid a solid foundation for future innovation in the field of computational intelligence and optimization.

Author Contributions

Conceptualization, L.W. and Z.Y.; Methodology, L.W. and X.Z.; Software, Y.Y.; Validation, Y.Y., Z.Y. and Z.Z.; Formal analysis, L.W. and Y.Z.; Investigation, Y.Y. (Yuanting Yang) and Z.Y.; Resources, Y.Z. and Y.Y. (Yuanting Yang); Data curation, L.W., Y.Y. (Yuqi Yao) and Z.Z.; Writing—original draft preparation, L.W.; Writing—review and editing, L.W. and X.Z.; Visualization, Y.Y. (Yuqi Yao) and Z.Y.; Supervision, Y.Z.; Project administration, Y.Z. and X.Z.; Funding acquisition, X.Z. and Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Jilin Province and Changchun City Major Science and Technology Special Project: 20240301008ZD.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Qin, S.; Liu, J.; Bai, X.; Hu, G. A Multi-Strategy Improvement Secretary Bird Optimization Algorithm for Engineering Optimization Problems. Biomimetics 2024, 9, 478.

- Zhang, C.; Song, Z.; Yang, Y.; Zhang, C.; Guo, Y. A Decomposition-Based Multi-Objective Flying Foxes Optimization Algorithm and Its Applications. Biomimetics 2024, 9, 417.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Casella, G.; Murino, T.; Bottani, E. A modified binary bat algorithm for machine loading in flexible manufacturing systems: A case study. Int. J. Syst. Sci. Oper. Logist. 2024, 11, 2381828.

- Valdez, F.; Castillo, O.; Melin, P. Bio-Inspired Algorithms and Its Applications for Optimization in Fuzzy Clustering. Algorithms 2021, 14, 122.

- Mohammadi, A.; Sheikholeslam, F.; Mirjalili, S. Nature-Inspired Metaheuristic Search Algorithms for Optimizing Benchmark Problems: Inclined Planes System Optimization to State-of-the-Art Methods. Arch. Comput. Methods Eng. 2023, 30, 331–389.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Dorigo, M.; Di Caro, G.; Gambardella, L.M. Ant Algorithms for Discrete Optimization. Artif. Life 1999, 5, 137–172.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- El-Kenawy, E.S.M.; Khodadadi, N.; Mirjalili, S.; Abdelhamid, A.A.; Eid, M.M.; Ibrahim, A. Greylag Goose Optimization: Nature-inspired optimization algorithm. Expert Syst. Appl. 2024, 238, 122147.

- Xu, M.; Cao, L.; Lu, D.; Hu, Z.; Yue, Y. Application of Swarm Intelligence Optimization Algorithms in Image Processing: A Comprehensive Review of Analysis, Synthesis, and Optimization. Biomimetics 2023, 8, 235.

- Liu, J.; Shi, J.; Hao, F.; Dai, M. A novel enhanced global exploration whale optimization algorithm based on Lévy flights and judgment mechanism for global continuous optimization problems. Eng. Comput. 2023, 39, 2433–2461.

- Chu, J.; Yu, X.; Yang, S.; Qiu, J.; Wang, Q. Architecture entropy sampling-based evolutionary neural architecture search and its application in osteoporosis diagnosis. Complex Intell. Syst. 2023, 9, 213–231.

- Sun, Y.; Yang, T.; Liu, Z. A whale optimization algorithm based on quadratic interpolation for high-dimensional global optimization problems. Appl. Soft Comput. 2019, 85, 105744.

- Khosrowshahi, H.N.; Aghdasi, H.S.; Salehpour, P. A refined Greylag Goose optimization method for effective IoT service allocation in edge computing systems. Sci. Rep. 2025, 15, 15729.

- Alharbi, A.H.; Khafaga, D.S.; El-Kenawy, E.-S.M.; Eid, M.M.; Ibrahim, A.; Abualigah, L.; Khodadadi, N.; Abdelhamid, A.A. Optimizing electric vehicle paths to charging stations using parallel greylag goose algorithm and Restricted Boltzmann Machines. Front. Energy Res. 2024, 12, 1401330.

- Saqr, A.E.S.; Saraya, M.S.; El-Kenawy, E.S.M. Enhancing CO2 emissions prediction for electric vehicles using Greylag Goose Optimization and machine learning. Sci. Rep. 2025, 15, 16612.

- Mashru, N.; Tejani, G.G.; Patel, P. Reliability-based multi-objective optimization of trusses with greylag goose algorithm. Evol. Intell. 2025, 18, 25.

- Gürses, D.; Mehta, P.; Sait, S.M.; Yildiz, A.R. Enhanced Greylag Goose optimizer for solving constrained engineering design problems. Mater. Test. 2025, 67, 900–909.

- Cheng, L.; Huang, P.; Zhang, M.; Yang, R.; Wang, Y. Optimizing Electricity Markets Through Game-Theoretical Methods: Strategic and Policy Implications for Power Purchasing and Generation Enterprises. Mathematics 2025, 13, 373.

- Taylor, P.D.; Jonker, L.B. Evolutionary stable strategies and game dynamics. Math. Biosci. 1978, 40, 145–156.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Wu, H.; Zhang, X.; Song, L.; Zhang, Y.; Gu, L.; Zhao, X. Wild Geese Migration Optimization Algorithm: A New Meta-Heuristic Algorithm for Solving Inverse Kinematics of Robot. Comput. Intell. Neurosci. 2022, 2022, 5191758.

- El-Sherbiny, A.; Elhosseini, M.A.; Haikal, A.Y. A new ABC variant for solving inverse kinematics problem in 5 DOF robot arm. Appl. Soft Comput. J. 2018, 73, 24–38.

- Mirjalili, S. The Ant Lion Optimizer. Adv. Eng. Softw. 2015, 83, 80–98.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper Optimisation Algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Gandomi, A.H.; Yang, X.-S.; Alavi, A.H. Cuckoo search algorithm: A metaheuristic approach to solve structural optimization problems. Eng. Comput. 2013, 29, 17–35, Erratum in Eng. Comput. 2013, 29, 245.

- Sadollah, A.; Bahreininejad, A.; Eskandar, H.; Hamdi, M. Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Appl. Soft Comput. J. 2013, 13, 2592–2612.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Guedria, N.B. Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl. Soft Comput. 2016, 40, 455–467.

- Ma, L.; Wang, C.; Xie, N.G.; Shi, M.; Ye, Y.; Wang, L. Moth-flame optimization algorithm based on diversity and mutation strategy. Appl. Intell. 2021, 51, 1–37.

- Belegundu, A.D.; Arora, J.S. A study of mathematical programming methods for structural optimization. Part I: Theory. Int. J. Numer. Methods Eng. 1985, 21, 1583–1599.

- Moosavi, S.H.S.; Bardsiri, K.V. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 2019, 86, 165–181.

- Xu, X.; Hu, Z.; Su, Q.; Li, Y.; Dai, J. Multivariable grey prediction evolution algorithm: A new metaheuristic. Appl. Soft Comput. J. 2020, 89, 106086.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).