Abstract

Detecting internal defects in narrow and curved pipelines remains a significant challenge, due to the difficulty of achieving reliable defect perception under low-light conditions and generating collision-free motion trajectories. To address these challenges, this article proposes an event-aware ES-YOLO framework, and develops a pipeline defect inspection experimental environment that utilizes a hyper-redundant manipulator (HRM) to insert an event camera into the pipeline in a collision-free manner for defect inspection. First, to address the lack of datasets for event-based pipeline inspection, the ES-YOLO framework is proposed. This framework converts RGB data into an event dataset, N-neudet, which is subsequently used to train and evaluate the detection model. Concurrently, comparative experiments are conducted on steel and acrylic pipelines under three different illumination conditions. The experimental results demonstrate that, under low-light conditions, the event-based detection model significantly outperforms the RGB detection model in defect recognition rates for both types of pipelines. Second, a pipeline defect detection physical system is developed, integrating a visual perception module based on the ES-YOLO framework and a control module for the snake-like HRM. The system controls the HRM using a combination of Nonlinear Model Predictive Control (NMPC) and the Serpentine Crawling Algorithm (SCA), enabling the event camera to perform collision-free inspection within the pipeline. Finally, extensive pipeline insertion experiments are conducted to validate the feasibility and effectiveness of the proposed framework. The results demonstrate that the framework can effectively identify steel pipeline defects in a 2 Lux low-light environment, achieving a detection accuracy of 84%.

1. Introduction

Pipelines typically present a narrow, complex, and poorly illuminated environment [1], making manual inspection of pipeline defects a considerable challenge. This challenge arises primarily from two factors. First, inadequate lighting conditions hinder sensors from clearly capturing the internal pipeline environment. Second, the narrow and curved geometry of pipelines restricts the effectiveness of traditional manual inspection and remotely operated robotic systems, which are not only inefficient and vulnerable to environmental disturbances but also struggle to detect concealed defects. In recent years, with advancements in sensor technologies and intelligent robotics, pipeline inspection has increasingly evolved toward automation and intelligence.

Visual perception is widely applied in pipeline defect detection. Le et al. [2] developed an online multi-sensor inspection system for pipeline defect detection, employing RGB fusion technology to precisely identify defects such as cracks, corrosion, and blockages. Nevertheless, the limitations of RGB cameras in low-light and high-dynamic-range environments remain a significant challenge in complex pipeline inspections.

In recent years, event cameras, as bio-inspired visual sensors, have become increasingly important in industrial inspection due to their advantages in dynamic range, temporal resolution, low power consumption, and high sensitivity to low-light conditions [3]. This makes event cameras particularly suited for low-light and high-contrast environments. However, existing computer vision algorithms are not directly compatible with event streams, posing challenges for the direct application of event cameras in data processing. In addition, the data format of RGB and event streams differs significantly, and most existing standard datasets are based on RGB images, with a lack of publicly available datasets specifically designed for event data. This further restricts the application and development of event cameras in specific fields. Recreating pipeline defect datasets suitable for event cameras is not only time-consuming but also costly.

To address this issue, Henri Rebecq et al. [4] designed the event camera simulator ESIM. Building on this, Daniel Gehrig et al. [3] introduced frame upsampling technology into this framework, allowing models trained on synthetic event data to better generalize to real event data. Although these methods offer potential for applying event cameras to pipeline defect detection, whether the model can preserve the advantages of event cameras in low-light conditions remains a critical issue. Furthermore, beyond visual perception, ensuring that a pipeline inspection robot can navigate a collision-free trajectory for safe insertion into the pipeline constitutes an additional significant challenge. Currently, pipeline robots have been developed in various structures and configurations [5,6,7]. These technologies offer significant advantages in improving detection accuracy and reducing labor costs.

Among them, snake-like hyper-redundant manipulators (HRMs), due to their high flexibility, adaptability, and multiple degrees of freedom, can operate efficiently in complex pipeline environments. However, a key challenge in their path planning lies in ensuring that the end-effector reaches the target position while avoiding collisions between the manipulator and its surroundings. Although the high redundancy enhances the manipulator’s reachability and spatial exploration capabilities, it also significantly increases the computational time and resource consumption of path planning [8,9]. The E-RRT* algorithm proposed by Ji et al. [10] employed ellipses instead of traditional straight lines to connect path nodes and optimized the sampling process, effectively addressing the path planning problem for HRMs in confined spaces.

Due to their slender and flexible bodies, snakes efficiently explore narrow spaces, making them a key inspiration for bionic design. Inspired by this, previous studies have proposed modular snake-like structures to construct HRMs [11]. Furthermore, snake locomotion exhibits a “head-following” characteristic, whereby body movements are determined by the trajectory of the head. This mechanism can be applied to the motion planning of snake-like robots: if the final configuration of the robot’s end-effector reaching the target point is directly planned, this configuration can be regarded as the head trajectory, and the body joints follow this trajectory. Zhu et al. [12] proposed a pipeline inspection method that combines Nonlinear Model Predictive Control (NMPC) with a Serpentine-inspired Crawling Algorithm (SCA).

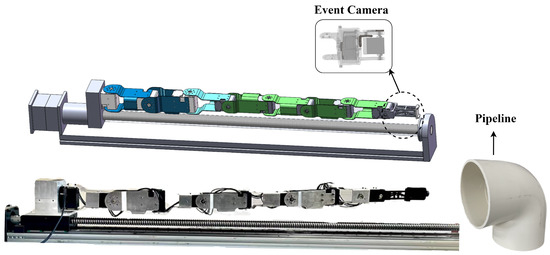

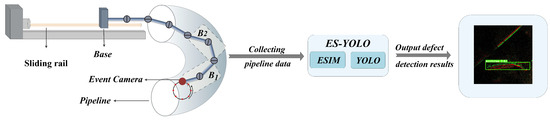

In summary, this article proposes and validates an innovative framework, ES-YOLO, designed to address the core challenges of employing event cameras for defect detection in narrow, low-light pipelines. To this end, we first present a practical method to systematically convert a public RGB dataset (NEU-DET) into event-based data, thereby creating a novel dataset, N-neudet. This dataset is then utilized to train an efficient defect detection model. Furthermore, the proposed perception framework is integrated with the motion control system of a snake-like hyper-redundant manipulator, as illustrated in Figure 1. The feasibility and practical effectiveness of the overall system are validated through physical experiments conducted in pipelines of different materials and under extremely low-light conditions. The main contributions of this article are as follows.

Figure 1.

Schematic diagram of the model of an HRM equipped with an event camera.

- To the best of the authors’ knowledge, this work presents the first system-level integration and validation of event-driven perception with an HRM for pipeline inspection, thereby constructing and experimentally verifying a complete HRM-based defect detection platform. The contribution goes beyond algorithmic testing under idealized conditions by providing a holistic validation of the entire perception and motion framework under real-world physical constraints. These results demonstrate both the feasibility and the potential of this technical approach for complex industrial environments.

- An event-based defect dataset, termed N-neudet, is successfully constructed, with its data synthesized from traditional RGB images using the ES-YOLO framework. The practical utility of this dataset is rigorously validated through comprehensive comparative experiments. A defect detection system trained on N-neudet exhibits remarkable stability and accuracy, particularly under low-light conditions where conventional RGB cameras proved ineffective. These results confirm the robustness of the dataset and its suitability for event-based pipeline defect detection applications.

2. Related Work

2.1. Trajectory Planning for HRM

Due to their high redundancy, HRMs can flexibly avoid obstacles and effectively access narrow, curved spaces.

The nonlinear control of HRMs poses significant challenges due to their high degrees of redundancy. Liu et al. [13] addressed this issue by proposing a novel control framework based on a nonlinear observer, which transformed the system into an interconnected input-to-state stable (ISS) structure, thereby achieving asymptotic stability. Building upon this, Liu et al. [14] employed the small-gain theorem to design distributed optimal controllers that effectively resolved the output consensus problem in multi-agent nonlinear systems. More recently, Jin [15] tackled the global asymptotic stability problem of feedback optimization in nonlinear systems by integrating an enhanced gradient flow optimizer with a nonlinear perturbation function. The proposed method was rigorously validated using tools such as singular perturbation theory and input-to-state stability analysis. These studies provide theoretical guidance and inspiration for the nonlinear control of HRMs.

Currently, prevalent path planning methods primarily include graph search-based algorithms and sampling-based algorithms. Among these, the A* algorithm [16,17,18] and Dijkstra’s algorithm [9] are common representatives of graph search methods. Tang et al. [19] enhanced the A* algorithm by incorporating the artificial potential field method, introducing new node search strategies, and integrating local path optimization. This approach effectively reduced the number of search nodes, improved search efficiency, and simultaneously optimized the manipulator’s obstacle avoidance posture. Conversely, ref. [20] proposed an extended Dijkstra’s algorithm that integrates Delaunay triangulation and plane transformation techniques to optimize paths on complex surfaces, significantly enhancing path planning accuracy in both single-robot and multi-robot tasks.

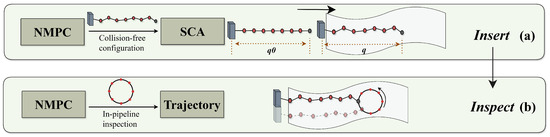

Ref. [12] proposed a pipeline inspection and detection method that integrates Nonlinear Model Predictive Control (NMPC) with a Snake-inspired Heuristic Crawling Algorithm (SCA). The method is divided into three stages: insertion, inspection, and exit. During the insertion and withdrawal stages, the SCA—inspired by snake locomotion—is employed to significantly reduce path planning time. In the inspection stage, NMPC is applied to ensure efficient and collision-free configuration. Specifically, NMPC functions as a high-level planner, generating a global, collision-free path configuration. In contrast, SCA operates as a low-level controller, guiding the manipulator to accurately track the sequence of path points generated by NMPC, thereby executing the physical insertion motion.
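The head-following idea behind snake-inspired insertion can be illustrated with a minimal sketch. This is not the SCA of [12]; it only shows one simple way to make body joints reuse the path already traced by the head. The function name `follow_head_path`, its parameters, and the arc-length spacing of the joints are assumptions made for illustration (rigid links would keep a fixed chord length rather than a fixed arc length).

```python
import numpy as np

def follow_head_path(path, link_length, num_joints, head_arc):
    """Place joints on a polyline at fixed arc-length offsets behind the head.

    path        : (N, D) waypoints of the planned head trajectory
    link_length : spacing between consecutive joints (arc-length approximation)
    num_joints  : number of joints, counted from the head backwards
    head_arc    : arc length the head has travelled along the path
    """
    path = np.asarray(path, dtype=float)
    # Cumulative arc length at every waypoint.
    seg = np.linalg.norm(np.diff(path, axis=0), axis=1)
    cum = np.concatenate([[0.0], np.cumsum(seg)])
    # Joint k sits k * link_length behind the head; joints that have not
    # entered the path yet are clamped to its start.
    targets = np.clip(head_arc - link_length * np.arange(num_joints), 0.0, cum[-1])
    # Interpolate each coordinate along the arc length.
    return np.stack(
        [np.interp(targets, cum, path[:, k]) for k in range(path.shape[1])],
        axis=1,
    )

# Hypothetical L-shaped head path: one unit along x, then one unit along y.
head_path = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
joints = follow_head_path(head_path, link_length=0.5, num_joints=3, head_arc=1.5)
```

Because every joint is constrained to the head path, a path that is collision-free for the head stays approximately collision-free for the body, which is why only the head trajectory needs to be planned explicitly.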

2.2. Deep Learning with Event Data

In the field of event cameras, significant efforts have been made to create datasets and explore their applications, particularly in object detection.

For example, in [21], the authors used a Spiking Neural Network (SNN) to simulate human eye saccadic movements, converting static image datasets into event data, thereby creating semi-synthetic event-based versions of the MNIST and Caltech101 datasets. In [22], the DVS-Gesture dataset, which includes 11 types of gestures, was used to achieve real-time recognition of gesture data streamed by DVS. Additionally, Enrico Calabrese et al. [23] created a 3D human pose dataset, and [24] created the Dynamic and Active-pixel Vision Sensor (DAVIS) Driving Dataset (DDD17), the first driving recording dataset combining DVS and DAVIS. Subsequently, ref. [25] proposed DSEC, a large-scale new dataset that includes data from high-resolution event cameras, traditional cameras, LiDAR, and GPS, and provides disparity ground truth, aimed at promoting and evaluating the development of event-based stereo vision algorithms.

These datasets have laid the foundation for advancements in object detection using event cameras. Cannici et al. [26] proposed two event camera-based object detection models, YOLE and fcYOLE. Maqueda et al. [27] designed a CNN architecture adapted to event camera outputs and proposed an event-frame representation method, successfully predicting steering angles in autonomous driving using the DDD17 [24] dataset based on event cameras. Subsequently, Alonso et al. [28] proposed the first semantic segmentation baseline model for the DDD17 dataset. However, event-based deep learning algorithms still lack support from large-scale event datasets.

2.3. Synthetic Event Data

Currently, there are many event datasets [21,22,23] available for event-driven algorithms. Rebecq et al. [29,30] made progress by using simulated event data to train a recurrent neural network-based model, which succeeded in video reconstruction tasks, demonstrating the potential for expanding event-camera applications. Kaiser et al. [31] proposed a simple event camera simulator that generates events based on image difference thresholds. Refs. [32,33] introduced two event generation simulators. Daniel Gehrig et al. [3] enhanced the event simulator ESIM [4] with frame interpolation technology, which can convert frame data from traditional video sequences into asynchronous event streams. This method allows for the reuse of existing datasets to generate event data and accurately simulates the behavior of real event cameras, ensuring that synthetic data is suitable for training and testing event algorithms.

3. Overall Workflow of the Pipeline Defect Detection Method

To evaluate the performance of the ES-YOLO framework in a real-world pipeline environment, a dedicated experimental platform for defect detection is developed. This platform integrates two core components: first, a visual perception module equipped with the ES-YOLO framework to address the challenge of detection in low-light environments; second, a snake-like HRM motion control system based on NMPC and SCA to achieve collision-free navigation within complex curved pipelines.

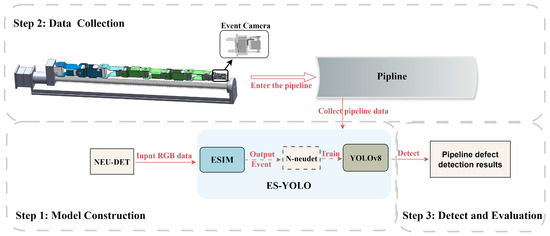

The experimental procedure is primarily divided into three steps.

- Step 1: Model Construction. The ESIM model is employed to synthesize an event-based dataset, designated N-neudet, from the public NEU-DET RGB dataset. This synthesized dataset is then utilized to train a YOLOv8 model, culminating in the final ES-YOLO framework.

- Step 2: Data Collection. An event camera is mounted on the HRM. Guided by the NMPC and SCA algorithms, the HRM first determines a collision-free trajectory and is subsequently inserted into the pipeline to collect in situ data.

- Step 3: Defect Detection and Evaluation. Upon completion of data acquisition, the resulting dataset is fed into the ES-YOLO framework for defect detection, and the performance is quantitatively evaluated.

The comprehensive experimental workflow is illustrated in Figure 2.

Figure 2.

Overall workflow of the pipeline defect detection method based on the ES-YOLO.

4. Methodology of the ES-YOLO

4.1. Overview

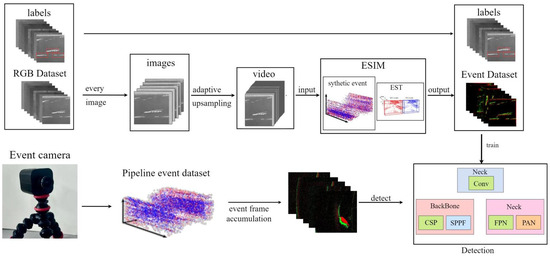

The framework of ES-YOLO, which converts RGB datasets for event cameras, is illustrated in Figure 3.

Figure 3.

ES-YOLO framework architecture. First, large-scale image datasets (NEU-DET) are converted into synthetic event datasets (N-neudet) through the ESIM module; then, the generated event data is passed to the YOLOv8 module for training.

ES-YOLO consists of the ESIM module and the YOLOv8s module, both of which are introduced in this section. First, the RGB dataset is upsampled to convert it into a high-frequency dataset. Second, the ESIM module is used to synthesize the event dataset. Finally, the YOLOv8s model is trained using the N-neudet event dataset, and defect detection is performed on actual pipelines.

4.2. ESIM Module

The ESIM module is used to synthesize event data. Because no large event dataset exists for defect detection, RGB datasets must be converted into event datasets. Equation (1) [3,34,35] describes the event generation model of an ideal sensor. The pixels of an event camera are independent and continuously monitor the logarithmic brightness signal $L(\mathbf{u}, t)$ at their corresponding positions $\mathbf{u}$. When the change in logarithmic brightness at a pixel exceeds a certain threshold $C$ over the time $\Delta t$, an event is triggered.

$$p\,\bigl[L(\mathbf{u}, t) - L(\mathbf{u}, t - \Delta t)\bigr] \geq C \tag{1}$$

In Equation (1), $p \in \{-1, +1\}$ represents the polarity of the event signal, which indicates the sign of the change in brightness, and $\Delta t$ represents the time since the last event at pixel $\mathbf{u}$.
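The thresholding rule described above can be sketched in a few lines. The following is a simplified illustration, not the ESIM implementation: it emits at most one event per pixel per frame and stamps every event with the frame time, whereas a full simulator interpolates timestamps and may emit several events for a large brightness change. The function name `generate_events` and its arguments are assumptions.

```python
import numpy as np

def generate_events(ref_log, new_log, C, t):
    """Compare a new log-brightness frame with the per-pixel reference level.

    ref_log : log brightness stored at each pixel's last event (updated in place)
    new_log : log brightness of the incoming frame
    C       : contrast threshold
    t       : timestamp assigned to all events of this frame
    Returns a list of (x, y, t, polarity) tuples.
    """
    diff = new_log - ref_log
    fired = np.abs(diff) >= C                 # pixels whose change reaches C
    ys, xs = np.nonzero(fired)
    polarity = np.sign(diff[fired]).astype(np.int8)
    ref_log[fired] = new_log[fired]           # reset the reference where events fired
    return [(int(x), int(y), t, int(p)) for x, y, p in zip(xs, ys, polarity)]

# Hypothetical 2 x 2 example: uniform brightness 100, then one brighter and one darker pixel.
ref = np.log(np.full((2, 2), 100.0))
frame = np.array([[100.0, 150.0], [60.0, 105.0]])
events = generate_events(ref, np.log(frame), C=0.2, t=0.005)
```

Only the pixels whose log-brightness change reaches the threshold produce events; the pixel that changes from 100 to 105 stays silent, which is the source of the sparsity of event data.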

The image is processed through an offset function to transform the original image into a low-frame-rate version. Although the ESIM event camera simulator can adaptively render virtual scenes at any temporal resolution, the actual event camera operates on a microsecond timescale. Therefore, we apply frame interpolation technology [36] to enable frame reconstruction at any temporal resolution. Simultaneously, an adaptive upsampling strategy [4] is employed to determine the number of intermediate frames to generate, thus converting the low-frame-rate images into a high-frame-rate sequence. Finally, the event synthesis module (ESIM) is used to convert the high-frame-rate image sequence into event data.

4.3. YOLOv8 Module

The event dataset generated by ESIM in Section 4.2 is then used as input for training the defect detection model. As mentioned in [37], YOLO is the first model to treat the object detection problem as a regression task. Released in 2023, YOLOv8 [38] builds upon the success of earlier versions such as YOLOv5 [37], introducing advanced architectural designs and training strategies, including anchor-free detection, to provide a unified framework with improved accuracy for various computer vision tasks [39,40]. By integrating an anchor-free design with attention mechanisms and dynamic convolution, the model not only simplifies its architecture but also significantly enhances the detection of small objects—an essential factor in many edge deployment scenarios. Given its excellent performance in terms of accuracy, efficiency, and usability, this study adopts YOLOv8 as the core detection module.

4.4. Event Spike Tensor

The synthetic events are combined with the original NEU-DET labels to train the YOLO module. Due to the sparsity of event signals and their non-uniform spatiotemporal distribution, pattern recognition algorithms typically aggregate event data into grid-based representations. To address this, we use the general framework Event Spike Tensor (EST) [41], which converts event streams into grid-based representations. Unlike previous event representation methods, EST retains the four dimensions of event signals and maps events of positive and negative polarities to two independent spatiotemporal grids, thereby enhancing the model’s ability to represent event data.

5. Comparative Experiments Based on the NEU-DET Dataset

To validate the feasibility of the ES-YOLO framework for practical pipeline defect detection, we conducted a series of experiments. These experiments involved synthesizing event datasets, setting model training parameters, evaluating the model, and performing practical defect detection. Finally, we compared the detection results of the event camera with those of the RGB camera.

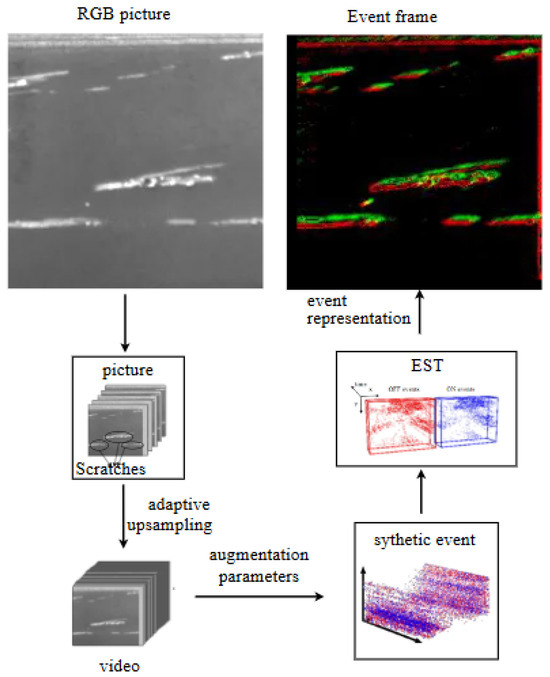

5.1. Dataset Generation

For the experiments, we selected the NEU-DET dataset [42], which contained six typical surface defects of hot-rolled strip steel: Rolled-in Scale (RS), Patches (Pa), Crazing (Cr), Pitted Surface (PS), Inclusions (In), and Scratches (Sc). The dataset consisted of 1800 images, with 300 samples per defect type, and included detailed annotations specifying both the defect categories and their locations. After selecting the NEU-DET dataset, we processed it through the ESIM module to generate a synthetic event-based defect dataset, which we named N-neudet. This event dataset was subsequently used to train the YOLO-based defect detection model, allowing for the evaluation of the model’s performance across different defect categories. As a result, a total of 1800 event defect images were generated, containing six different types of typical defects, with 300 samples for each defect type. The conversion process from RGB images to event frames is illustrated in Figure 4.

Figure 4.

Illustration of the RGB dataset converted into the event dataset.

To improve the recognition accuracy, this article employed a sliding window combined with an adaptive interpolation strategy. Specifically, a 512 × 512 Region of Interest (ROI) window was defined on a 640 × 640 static RGB image and was synchronously shifted in both horizontal and vertical directions with a stride of $s$ pixels, resulting in $N$ sub-regions (with the final incomplete region discarded). Each ROI sequence was synthesized into a 128-frame, 20 fps video, while its original label boundaries were mapped to the local coordinate system. This transformation was given by $\mathbf{b}_{\mathrm{roi}} = \mathbf{b}_{\mathrm{img}} - \mathbf{d}$, where $\mathbf{b}_{\mathrm{img}}$ represented the bounding box coordinates in the original image, $\mathbf{d}$ was the displacement vector of the ROI window’s origin, and $\mathbf{b}_{\mathrm{roi}}$ was the resulting coordinate vector in the local ROI frame. Subsequently, an adaptive frame interpolation technique [36] was applied to upsample the video from 20 fps to 200 fps, producing a high-frame-rate sequence. This sequence was then fed into the ESIM module to generate the corresponding event data. Compared to directly simulating events from the full 640 × 640 image, the proposed sliding window and adaptive interpolation approach significantly increased the target event density, thereby enhancing the recognition accuracy of the ES-YOLO framework.
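The sliding-window step and the label remapping can be sketched as follows. This is an illustrative reading of the procedure, not the authors' code: the stride of 32 pixels is a hypothetical value, the clipping of boxes to the ROI border is an added assumption, and the function names `roi_origins` and `to_local_box` are invented for the example.

```python
def roi_origins(image_size, roi_size, stride):
    """Top-left corners of a square ROI shifted by the same step in x and y.

    Only windows that lie completely inside the image are kept.
    """
    max_shift = image_size - roi_size
    return [(d, d) for d in range(0, max_shift + 1, stride)]

def to_local_box(box, origin, roi_size):
    """Translate (x_min, y_min, x_max, y_max) into ROI coordinates.

    The box is shifted by the ROI origin and clipped to the ROI border
    (clipping is an assumption). Returns None if nothing remains inside.
    """
    dx, dy = origin
    x0, y0, x1, y1 = box
    lx0, ly0 = max(x0 - dx, 0), max(y0 - dy, 0)
    lx1, ly1 = min(x1 - dx, roi_size), min(y1 - dy, roi_size)
    if lx1 <= lx0 or ly1 <= ly0:
        return None
    return (lx0, ly0, lx1, ly1)

# Hypothetical stride of 32 pixels on a 640 x 640 image with a 512 x 512 ROI.
origins = roi_origins(image_size=640, roi_size=512, stride=32)
local = to_local_box((100, 120, 300, 340), origin=origins[1], roi_size=512)
```

Shifting the window turns a static image into a short sequence with apparent motion, which is what allows an event simulator to produce events from it; the label shift keeps each annotation aligned with the moving crop.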

To simulate event generation, we used the open-source simulator ESIM [3]. Before generating events, we needed to carefully select the contrast thresholds. We randomly sampled the contrast thresholds for positive ($C_p$) and negative ($C_n$) events from a uniform distribution, $\mathcal{U}(C_{\min}, C_{\max})$. This random sampling method improved the domain adaptability between simulated and real data, thereby enhancing the generalization of simulated events to real events. In this experiment, we chose fixed values for the bounds $C_{\min}$ and $C_{\max}$. After the ESIM simulation, the final event stream was generated with an event density of 256 events/ms and a temporal resolution of 1.953 ms.

Finally, to match the synthesized event stream with the annotations based on the YOLOv8 model, we converted the asynchronous and sparse event stream into a tensor representation. We selected the Event Spike Tensor (EST) [41] because it performed well in both high-level and low-level tasks. The EST method mapped positive and negative polarity events into separate spatiotemporal grids, which were then stacked along the channel dimension to form the final tensor representation of size $H \times W \times B$. In this representation, $H$ and $W$ were the spatial resolution of the sensor, and $B$ was a hyperparameter that controlled the number of temporal bins used to aggregate events.
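A grid representation of this kind can be sketched with plain event counting. The example below is a simplification: EST as proposed in [41] uses a learned kernel to weight events, whereas this sketch only counts events per pixel, per temporal bin, and per polarity. The function name `events_to_grid`, the channel-first layout, and the channel ordering (negative polarity first) are assumptions.

```python
import numpy as np

def events_to_grid(x, y, t, p, height, width, num_bins):
    """Accumulate events into a (2 * num_bins, height, width) count grid.

    Channels [0, num_bins) hold negative events and channels
    [num_bins, 2 * num_bins) hold positive events, one channel per time bin.
    """
    grid = np.zeros((2 * num_bins, height, width), dtype=np.float32)
    t = np.asarray(t, dtype=np.float64)
    if t.size == 0:
        return grid
    # Normalise timestamps to [0, 1] and map them to bin indices.
    span = t.max() - t.min()
    norm = (t - t.min()) / span if span > 0 else np.zeros_like(t)
    bins = np.minimum((norm * num_bins).astype(int), num_bins - 1)
    pol = (np.asarray(p) > 0).astype(int)
    # np.add.at accumulates correctly when several events hit the same cell.
    np.add.at(grid, (pol * num_bins + bins, np.asarray(y), np.asarray(x)), 1.0)
    return grid

# Three hypothetical events on a 2 x 2 sensor, aggregated into 2 temporal bins.
grid = events_to_grid(
    x=[0, 1, 1], y=[0, 0, 0], t=[0.0, 0.5, 1.0], p=[1, -1, 1],
    height=2, width=2, num_bins=2,
)
```

The resulting array has a fixed shape regardless of how many events arrive, so it can be passed to a convolutional detector in the same way as an image with more channels.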

5.2. Experimental Parameters

This experiment was implemented using the PyTorch framework. The Adam optimizer was used for training, with a learning rate of 0.01. The model was trained for 100 epochs with the momentum parameter set to 0.937 and a batch size of 64. The training parameters used in the experiment are shown in Table 1.

Table 1.

Training parameters.
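For reference, the training settings stated above can be collected in a small configuration dictionary. The key names follow the training arguments of the Ultralytics YOLOv8 package (`epochs`, `batch`, `optimizer`, `lr0`, `momentum`); the text does not confirm which training tooling was used, so this mapping is an assumption, and the dataset file name in the comment is hypothetical.

```python
# Hyperparameters as reported in the text; key names assume Ultralytics-style
# training arguments and are not confirmed by the source.
train_args = {
    "epochs": 100,        # number of training epochs
    "batch": 64,          # batch size
    "optimizer": "Adam",  # optimizer named in the text
    "lr0": 0.01,          # initial learning rate
    "momentum": 0.937,    # momentum parameter
}

# With the Ultralytics package installed, these could be passed as, for example:
#   from ultralytics import YOLO
#   YOLO("yolov8s.yaml").train(data="n_neudet.yaml", **train_args)  # hypothetical dataset file
```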

5.3. Evaluation Metrics

In this experiment, we used multiple metrics to evaluate the model’s detection performance, including precision (P), recall (R), and mean average precision (mAP).

Precision is defined as the ratio of the number of True Positive samples correctly predicted by the model to the total number of samples predicted as positive by the model, as shown in Equation (2).

$$P = \frac{TP}{TP + FP} \tag{2}$$

Recall is defined as the ratio of the number of True Positive samples correctly predicted by the model to the total number of actual positive samples, as shown in Equation (3).

$$R = \frac{TP}{TP + FN} \tag{3}$$

mAP and AP are metrics used to evaluate multi-class classification problems. AP is calculated separately for each class, while mAP is the average of the AP values across all classes. The formulas are shown in Equations (4) and (5).

$$AP = \int_{0}^{1} P(R)\,dR \tag{4}$$

$$mAP = \frac{1}{S}\sum_{i=1}^{S} AP_i \tag{5}$$

In the above formulas, $S$ represents the total number of classes, $TP$ is True Positives, $FP$ is False Positives, and $FN$ is False Negatives. $P(R)$ is the precision–recall curve. These metrics are important for evaluating the performance and adaptability of the model.

The False Negative Rate (FNR) is defined as the proportion of positive samples that the model fails to detect. It reflects the model’s missed detection rate, as shown in Equation (6).

$$FNR = \frac{FN}{TP + FN} \tag{6}$$

In traditional classification tasks, the False Positive Rate (FPR), defined as the proportion of non-defective samples incorrectly classified as defective, is commonly used to evaluate model performance. However, in the context of industrial defect inspection, this metric may not provide a meaningful assessment of model reliability. To more effectively capture the practical impact of false alarms in such scenarios, this study adopts the False Discovery Rate (FDR) as a key evaluation metric. FDR is defined as the proportion of false positives among all samples predicted as defective, thereby directly quantifying the severity of the model’s false alarms. The formulation of FDR is presented in Equation (7).

$$FDR = \frac{FP}{TP + FP} \tag{7}$$
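The metrics defined in this subsection reduce to a few ratios over the counts of true positives, false positives, and false negatives. The sketch below computes them for hypothetical counts; the trapezoidal integration of the precision-recall curve is one common approximation of AP and is an assumption here, since the text does not state which interpolation scheme was used.

```python
import numpy as np

def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Share of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def false_negative_rate(tp, fn):
    """Share of actual positives that are missed (equals 1 - recall)."""
    return fn / (tp + fn) if (tp + fn) else 0.0

def false_discovery_rate(tp, fp):
    """Share of predicted positives that are false alarms (equals 1 - precision)."""
    return fp / (tp + fp) if (tp + fp) else 0.0

def average_precision(recalls, precisions):
    """Area under a precision-recall curve by the trapezoidal rule."""
    r = np.asarray(recalls, dtype=float)
    p = np.asarray(precisions, dtype=float)
    return float(np.sum((r[1:] - r[:-1]) * (p[1:] + p[:-1]) / 2.0))

def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return float(np.mean(ap_per_class))

# Hypothetical counts for one class: 8 correct detections, 2 false alarms, 4 misses.
tp, fp, fn = 8, 2, 4
p_val = precision(tp, fp)
r_val = recall(tp, fn)
ap = average_precision([0.0, 0.5, 1.0], [1.0, 0.8, 0.6])
```

FNR and FDR are the complements of recall and precision, respectively, so reporting them emphasizes the two failure modes that matter in inspection: missed defects and false alarms.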

5.4. Results

5.4.1. Training Visualization

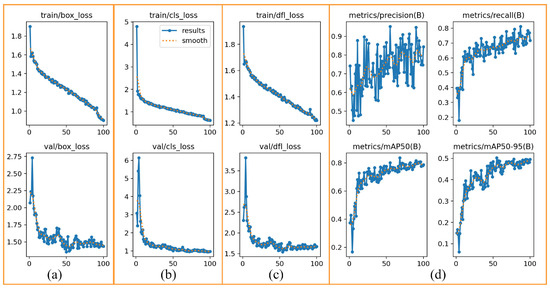

Through the experiments described above, we obtained the ES-YOLO framework’s experimental results on the N-neudet training set, as illustrated in Figure 5.

Figure 5.

Training result figures. (a) The bounding box loss (box_loss), which measures the error in predicted object locations, shows a consistent downward trend, indicating that the model is effectively learning to locate objects; (b) the classification loss (cls_loss), indicating the error in predicting the correct object class, also steadily decreases as training progresses; (c) Distribution Focal Loss (dfl_loss), another component for bounding box regression, follows a similar convergence pattern; (d) Key evaluation metrics, showing the progression of precision, recall, and mean Average Precision (mAP), all exhibit a clear upward trend, signifying significant performance improvement over time.

In Figure 5a, the train/box_loss exhibited a steady downward trend, indicating that the model’s bounding box regression capability was gradually improved. The val/box_loss also showed a similar downward trend, confirming the model’s strong generalization ability on unseen data. In Figure 5b, both train/cls_loss and val/cls_loss decreased significantly, signifying that the model was effectively learning to distinguish between different object classes. The distribution focal loss, another component of bounding box regression, was shown in Figure 5c. Its convergence on both sets further confirmed the stability of the training process.

In Figure 5d, as the number of training epochs increased, the model’s precision gradually improved. The mAP@0.5 (mean average precision at an IoU threshold of 0.5) increased rapidly and stabilized, indicating a significant enhancement in the model’s overall detection performance. Although the mAP@0.5:0.95 (mean average precision across IoU thresholds from 0.5 to 0.95) was lower than mAP@0.5, its upward trend was also evident, further demonstrating the model’s robustness across different IoU thresholds.

Overall, the ES-YOLO framework exhibited good convergence during training, with both training and validation losses showing a downward trend. Additionally, the model’s precision and recall demonstrated significant improvement, highlighting the model’s effectiveness in the defect detection task.

5.4.2. Validating Visualization

We selected a subset of images from the N-neudet event dataset as the validation set. After 100 epochs of training, the model’s performance on the validation set is presented in Table 2.

Table 2.

ES-YOLO validation results.

The results showed that the model achieved a precision of 0.952, a recall of 0.657, and an mAP@0.5 of 0.801 across all six categories, demonstrating good overall performance. However, for the two defect types, Crazing and Rolled-in-scale, although the detection precision exceeded 0.8, the recall rates were notably low. This phenomenon indicated that the model struggled to accurately distinguish between these two specific defect types, often leading to misclassifications. These findings highlighted certain limitations of event cameras in fine-grained defect classification tasks.

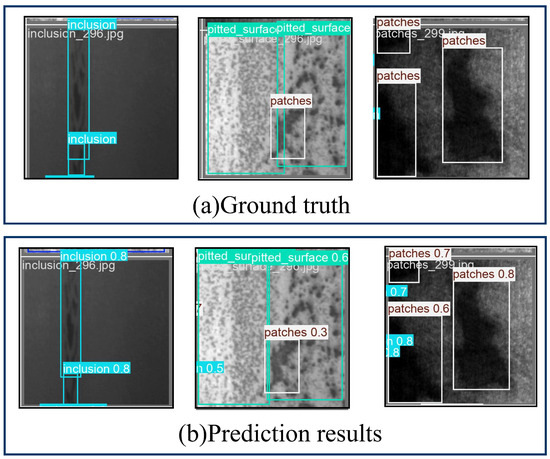

The qualitative results of the validation set are illustrated in Figure 6. The bounding boxes in Figure 6a represent the ground truth. Figure 6b illustrates the prediction results of the validation set.

Figure 6.

Visualization of validation set results. (a) represents the ground truth; (b) represents the prediction results of the validation set.

Meanwhile, a YOLOv8s model was trained on the original NEU-DET dataset, and detection results were obtained on the validation set. Table 3 presents a quantitative comparison of the detection performance between models trained on the original NEU-DET RGB dataset and the synthesized N-neudet event dataset. The evaluation was conducted using four key metrics: precision (P), recall (R), mAP@0.5, and inference time. The results demonstrated that, on the validation set, the ES-YOLO model achieved substantially higher detection accuracy on the event-based dataset compared to the RGB dataset, which validated its effectiveness in defect identification.

Table 3.

Comparison of training results between RGB and event dataset.

6. Performance Evaluation of ES-YOLO Under Different Illuminations

To validate the advantages of event cameras in defect detection, we conducted comparative experiments. Specifically, we used the Intel RealSense L515 RGB camera and the DVXplorer Mini event camera to collect data from steel and acrylic pipelines. The trained ES-YOLO framework was then applied for detection, and the results were analyzed for comparison.

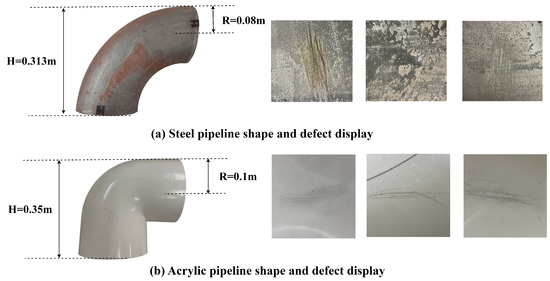

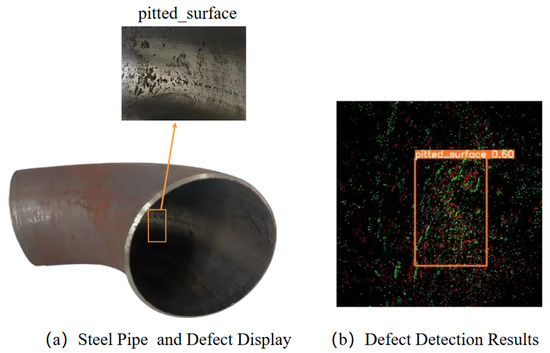

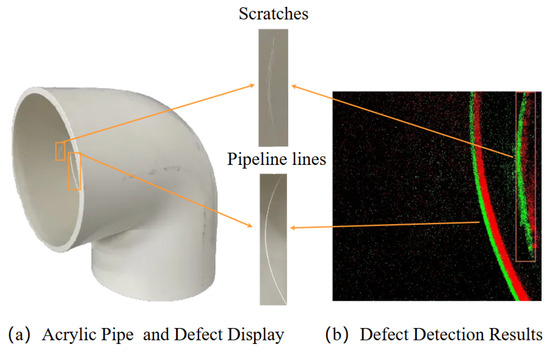

The geometric specifications and defect characteristics of the two pipeline types are depicted in Figure 7. Figure 7a presents the steel pipeline, which was sourced from a decommissioned industrial system and contained naturally occurring defects such as rust patches, surface scratches, and pitting corrosion. In contrast, Figure 7b displays the acrylic pipe, on which scratches were artificially created to simulate common damage, given its material properties. In this experiment, we used the detection rate ($DR$) metric to evaluate the performance of the ES-YOLO framework. The detection rate was defined as the ratio of the number of defect samples successfully detected by the framework to the total number of defect samples captured by the camera, as expressed in Equation (8).

$$DR = \frac{N_d}{N_d + N_u} \tag{8}$$

where $N_d$ represents the number of defect samples successfully detected, and $N_u$ represents the number of defect samples not detected.
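As a small worked illustration of the detection rate defined above, the function below divides the detected samples by all captured defect samples. The counts in the example are hypothetical and are not taken from the reported tables; the function name `detection_rate` is likewise an assumption.

```python
def detection_rate(detected, missed):
    """Detected defect samples divided by all captured defect samples."""
    total = detected + missed
    return detected / total if total else 0.0

# Hypothetical example: 50 captured images of one defect, 42 detected and 8 missed.
rate = detection_rate(detected=42, missed=8)
```

With 50 captured images per defect, each additional detection changes the rate by two percentage points, which sets the resolution of the reported percentages.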

Figure 7.

Diagram showing the radius and height of the experimental pipelines and their defects.

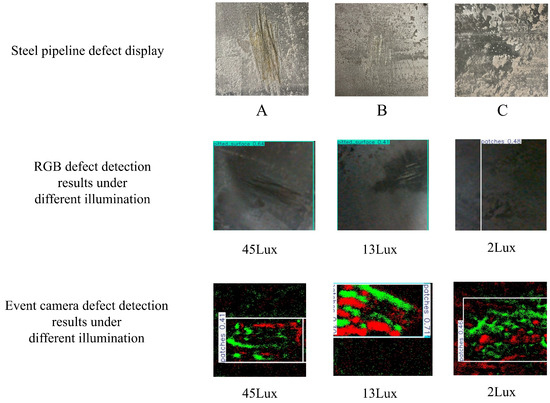

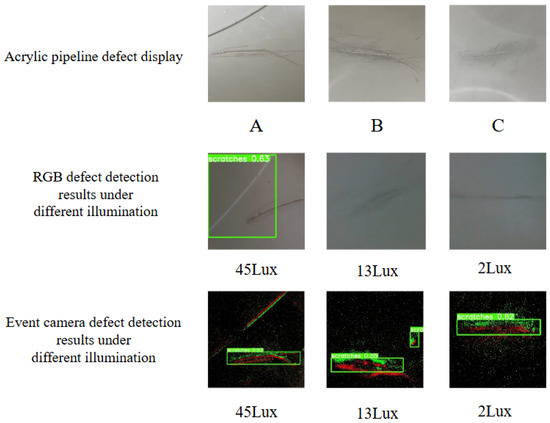

In the actual pipeline inspection experiment, we designed six comparative experiments to evaluate the detection performance of event cameras and RGB cameras under the same target defects and lighting conditions. First, three target defects were selected on both steel and acrylic pipelines, with their specific locations marked as A, B, and C in Figure 8 and Figure 9. Then, we used an optical illuminance meter UT383 to measure the brightness of the target points, where the illuminance of defects A, B, and C was 45 Lux, 13 Lux, and 2 Lux, respectively. Subsequently, both event and RGB cameras were used to capture images of the target defects on both types of pipelines. A total of 50 images were collected for each target defect using the Intel RealSense L515 RGB camera and the DVXplorer Mini event-based camera, respectively. The collected images were then fed into the ES-YOLO framework for detection.

Figure 8.

Comparison experiment of different defects and illumination levels in steel pipes. Among them, A, B, and C represent the target defects in the steel pipe. Both the event camera and RGB camera can detect the defect at all three points (A, B, and C), where the illumination levels are 45 Lux, 13 Lux, and 2 Lux, respectively.

Figure 9.

Comparison experiment of different defects and illumination levels in acrylic pipes. Among them, A, B, and C represent the target defects in the acrylic pipe. At points B and C, where the measured illumination levels are 13 Lux and 2 Lux, respectively, only the event camera successfully detected the defects.

Figure 8 and Figure 9 present the defect images collected from the steel and acrylic pipelines, respectively. To facilitate a direct comparison of the performance between the two sensors, these figures also include the corresponding detection results obtained from the RGB camera and the event camera under three distinct lighting conditions. Notably, in the low-light environment of 2 Lux, the RGB camera exhibited markedly inferior detection performance compared to the event camera, with frequent occurrences of misclassifications and even complete missed detections. The quantitative detection results for the steel and acrylic pipelines are detailed in Table 4 and Table 5, respectively.

Table 4.

Comparison results of event camera and RGB camera detection in steel pipes.

Table 5.

Comparison results of event camera and RGB camera detection in acrylic pipes.

The experimental results showed that in the steel pipeline, the event camera successfully detected all target defects, achieving a detection rate of over 84%. In contrast, the detection rate using RGB images under 2 Lux low-light conditions was only 10%. In the acrylic pipeline, the RGB camera detected defects only under the 45 Lux and 13 Lux lighting conditions, with a maximum detection rate of 34%, whereas the event camera detected the target defects under all lighting conditions, with a detection rate consistently above 60%. These results indicate that, compared to the RGB camera, the event camera could effectively detect pipeline defects in low-light environments. Moreover, the detection rate of the RGB model for acrylic pipelines was significantly lower than that for steel pipelines, which we attribute primarily to the susceptibility of traditional RGB models to interference caused by the optical properties of different materials. The event camera alleviated the impact of material differences by capturing the dynamic characteristics of defects, which indicates that the ES-YOLO framework performed better in cross-material detection.

In summary, the event camera consistently outperformed the RGB camera, particularly in low-light and cross-material scenarios, which makes it more suitable for practical pipeline inspection.

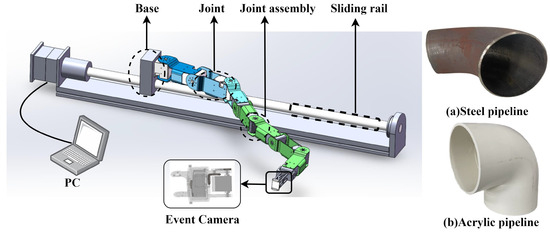

7. Experimental Design for Pipeline Defect Detection via a Snake-like HRM

7.1. Composition of the Pipeline Defect Detection System

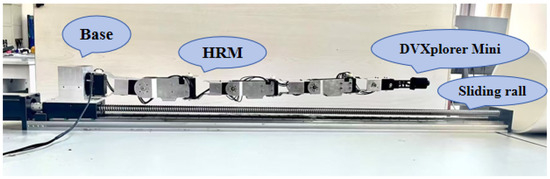

To evaluate the performance of the ES-YOLO framework in real-world pipeline environments, a pipeline defect detection experimental platform is constructed, comprising two main components. The first is a visual perception module based on the ES-YOLO framework, designed to tackle detection challenges under low-light conditions. The second is a modeling and control algorithm for the snake-like HRM, developed to enable collision-free navigation within curved pipeline structures. The system consists of an HRM, a sliding rail, a base, an event camera mounted on the end of the HRM, and a PC-based control system, as illustrated in Figure 10.

Figure 10.

Schematic diagram of the pipeline defect detection system, where (a) represents the steel pipeline and (b) corresponds to the acrylic pipeline.

A key design consideration is achieving a balance between mechanical strength and maneuverability. The HRM adopts a “3 + 5” hierarchical configuration: three high-torque XH540-W270-R motors connected by magnesium–aluminum alloy joints form the base section, while five lightweight XH430-W270-R motors connected via resin connectors comprise the end section. The sliding rail structure employs a ball screw drive system, which consists primarily of a stepper motor, a lead screw, and a mounting base for the HRM. The ball screw system offers a travel distance of 1.1 m and features high load capacity and stable stepping performance. The PC-based control system independently communicates control commands to both the HRM and the sliding rail using the RS485 protocol, ensuring reliable and synchronized motion control. Additionally, the DVXplorer Mini event camera is mounted on the HRM’s end-effector to capture pipeline defects throughout the insertion process. Table 6 lists the system specifications in more detail.

Table 6.

Composition of the pipeline defect detection system.

The overall workflow of the system is illustrated in Figure 11. First, a control algorithm drives the collision-free insertion of the HRM so that it accurately reaches the target detection position. Subsequently, the event camera at the end of the HRM collects data from inside the pipeline. Finally, the acquired data are processed using the ES-YOLO framework, and defect detection results are produced.

Figure 11.

Schematic diagram of the pipeline defect detection experimental workflow.

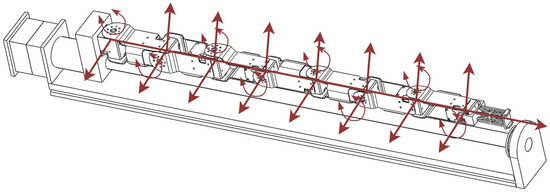

7.2. The Model and Control Algorithm of the HRM

To enable collision-free defect inspection within narrow pipelines, this study employs a motor-driven, 9-degree-of-freedom (DOF) hyper-redundant snake-like robotic arm. The HRM consists of a base, a sliding rail, eight joints, and joint assemblies, with its detailed configuration illustrated in Figure 12.

Figure 12.

Mechanical structure design of HRM and sliding rail.

To characterize the motion properties of the HRM, this article establishes its kinematic model based on forward kinematics and Denavit–Hartenberg (D-H) parameters. The relevant D-H parameters are presented in Table 7. Based on this model, the homogeneous transformation matrix between adjacent joints is derived, as shown in Equation (9), where ‘s’ represents the sine function (sin), and ‘c’ represents the cosine function (cos).

Table 7.

D-H parameters of the snake manipulator.

By combining the transformation matrix from the world coordinate system to the base coordinate system and the homogeneous transformation matrix from the world coordinate system to the end-effector, the forward kinematics equation is obtained, as shown in Equation (10). This equation is expressed in terms of the configuration vector of the HRM.
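The chaining of adjacent-joint transforms into a forward kinematics map can be sketched as follows. This is a minimal illustration that assumes the standard (distal) D-H convention; the article may use a different convention, and the parameter values below are hypothetical rather than the Table 7 values. The names `dh_transform`, `forward_kinematics`, and `world_to_base` are illustrative.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Homogeneous transform between adjacent frames (standard D-H, assumed)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(dh_rows, world_to_base=None):
    """Chain per-joint transforms; each row is (theta, d, a, alpha)."""
    T = world_to_base if world_to_base is not None else [
        [1.0 if i == j else 0.0 for j in range(4)] for i in range(4)
    ]
    for theta, d, a, alpha in dh_rows:
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T


# Hypothetical two-link planar chain with unit link lengths (not the HRM).
pose = forward_kinematics([(0.0, 0.0, 1.0, 0.0), (math.pi / 2, 0.0, 1.0, 0.0)])
print([round(pose[r][3], 6) for r in range(3)])  # end-effector position
```

For this toy chain the end-effector lands at approximately (1, 1, 0). A sliding-rail displacement could be folded into the hypothetical `world_to_base` argument as a pure translation.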

Due to the high degree of redundancy in the HRM and the spatial constraints of narrow pipeline environments, the control complexity of pipeline defect inspection tasks is significantly increased. To ensure collision-free operation of the HRM during inspection, efficient path planning is essential. However, due to kinematic constraints and the inherent complexity of the planning process, finding an optimal path often incurs substantial time and computational costs, and requires precise control of each HRM joint to avoid collisions. To balance planning efficiency and path quality, this article therefore seeks a suboptimal HRM configuration, which reduces computational cost. This strategy is implemented by combining Nonlinear Model Predictive Control (NMPC) with a Snake-inspired Crawling Algorithm (SCA) [12]. The obstacle avoidance strategy for pipeline defect inspection includes two stages, insertion and inspection, as shown in Figure 13. Experimental validation demonstrates that the narrowest pipeline the HRM can successfully traverse is a 90° curved pipe with an inner diameter of 8 cm and a length of 48 cm. This limit is jointly determined by the physical dimensions of the manipulator, the pipeline radius, and the joint bending capability.

Figure 13.

Flow chart of the pipeline inspection process, showing the initial horizontal configuration and the collision-free configuration.

7.3. Generalization Ability of NMPC

To achieve comprehensive data acquisition of the inner pipeline wall, the event camera mounted on the HRM’s end-effector performs a rotational scan along the pipeline cross-section. This scanning trajectory is discretized into key target points. To ensure the HRM can safely and accurately reach each target point, the NMPC algorithm is employed to pre-plan a corresponding collision-free configuration for every target point before the manipulator enters the pipeline.

The optimization objective of this pose planning is to minimize the sum of the absolute values of the joint angles and the sum of the changes in joint angles between adjacent configurations. This objective helps prevent the HRM from moving to its joint limits and avoids abrupt movements caused by local minima. The planning method for the collision-free configuration of the i-th target point is shown in Equation (11). Its terms are the j-th joint angle of the i-th configuration, the desired pose vector of the i-th target point, the upper and lower limits of the HRM’s joint angles, and the minimum safe distance between the HRM and the pipeline.
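The objective and two of the constraints described above can be sketched in a few lines. This is an illustration under stated assumptions, not the article's Equation (11): the two sums are added without weights, the end-effector pose constraint is omitted, and the clearance between the HRM and the pipeline is passed in as a precomputed number. The function names and the default `d_safe_cm=4.0` (the safe distance reported for the experiments) are choices made for this sketch.

```python
def planning_cost(theta_i, theta_prev):
    """Sum of |joint angles| plus sum of |changes| from the previous configuration."""
    magnitude = sum(abs(t) for t in theta_i)
    change = sum(abs(t - p) for t, p in zip(theta_i, theta_prev))
    return magnitude + change


def satisfies_constraints(theta_i, theta_min, theta_max, clearance_cm, d_safe_cm=4.0):
    """Check joint limits and the minimum safe distance (pose constraint omitted)."""
    within_limits = all(theta_min <= t <= theta_max for t in theta_i)
    return within_limits and clearance_cm >= d_safe_cm


# Hypothetical joint angles in radians for two adjacent configurations.
previous = [0.00, 0.10, -0.10]
candidate = [0.05, 0.20, -0.15]
print(planning_cost(candidate, previous))
print(satisfies_constraints(candidate, -1.0, 1.0, clearance_cm=4.5))
```

A nonlinear solver would minimize `planning_cost` over the candidate configuration while enforcing the constraints; the sketch only evaluates them for a given candidate.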

The NMPC is not guaranteed to find a solution, because of multiple constraints, including the desired pose of the HRM end-effector, the pipeline curvature and radius, and the safe distance. In the experiments, the safe distance is set to 4 cm. This threshold accounts for both the physical radius of the HRM links and potential positioning errors during actual operation, thereby ensuring physical safety. Planning is considered to have failed under two conditions.

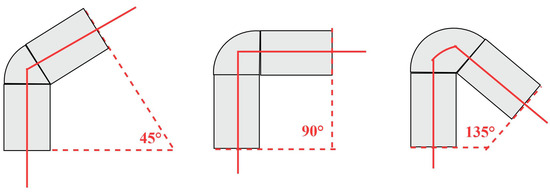

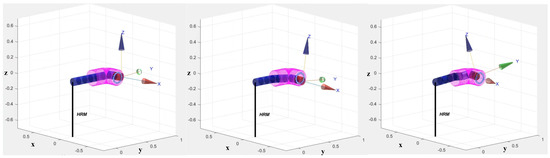

To systematically evaluate the generalization ability of NMPC, this article conducts a series of collision-free configuration simulations in MATLAB. The objective of NMPC is to compute safe, collision-free configurations. For the HRM, configurations closer to the pipeline centerline reduce the risk of collision with the wall and thereby improve safety. Therefore, the simulation calculates the maximum distance by which the HRM’s configuration deviates from the centerline as it passes through pipelines of different curvatures. The minimum pipeline radius for safe passage is then calculated by adding this maximum deviation distance to a predefined safety threshold. Here, curvature is defined as the included angle of the sector forming the bent pipe section. The experiments test three typical pipeline curvatures. The total length of all simulated pipelines is kept consistent, and their shapes are illustrated in Figure 14. Although the simulation results cannot directly quantify the algorithm’s generalization ability, they provide a qualitative evaluation of its adaptability under different geometric constraints. The specific experimental results are detailed in Table 8.

Figure 14.

Schematic diagram of single-bend pipe models with different curvatures, where the red solid line represents the pipeline centerline.

Table 8.

Minimum safe passage radius of the HRM for different pipeline curvatures.

The experimental results indicate that when the NMPC algorithm generates a collision-free configuration for a specific target point, higher pipeline curvature requires a larger radius to ensure safe passage. Specifically, for the lowest of the three tested curvatures, the minimum passage radius required by the collision-free configuration planned by NMPC is 6.53 cm. For the intermediate curvature, the minimum pipeline radius the HRM can pass through is 7.72 cm. For the highest curvature, the pipeline radius must be at least 9.13 cm to ensure the HRM can perform the inspection safely. Figure 15 illustrates a successful planning result for a curved pipeline with a radius of 10 cm, along with the corresponding collision-free configuration of the HRM.
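The relation between the minimum safe radius, the maximum centerline deviation, and the safety threshold can be checked with a short sketch. It assumes that the safety threshold used in the simulations equals the 4 cm safe distance reported for the experiments, which the text does not state explicitly; the function names are hypothetical, and the implied deviations are back-calculated here rather than reported by the article.

```python
D_SAFE_CM = 4.0  # assumption: same value as the safe distance used in the experiments


def min_safe_radius(max_deviation_cm, d_safe_cm=D_SAFE_CM):
    """Minimum pipeline radius = maximum centerline deviation + safety threshold."""
    return max_deviation_cm + d_safe_cm


def implied_max_deviation(min_radius_cm, d_safe_cm=D_SAFE_CM):
    """Invert the relation to recover the deviation implied by a reported radius."""
    return min_radius_cm - d_safe_cm


# Minimum radii reported in the text, in order of increasing curvature.
reported_min_radii_cm = [6.53, 7.72, 9.13]
for radius in reported_min_radii_cm:
    deviation = implied_max_deviation(radius)
    print(f"min radius {radius:.2f} cm -> implied max deviation {deviation:.2f} cm")
```

Under this assumption, the growth of the minimum radius with curvature comes entirely from the larger centerline deviation that the planned configuration needs in a tighter bend.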

Figure 15.

Simulation results for the collision-free inspection of the pipeline defect detection system.

8. Experimental Results for Pipeline Defect Detection via a Snake-like HRM

To validate the practicality and feasibility of the proposed pipeline defect detection system, physical experiments were conducted on both steel and acrylic pipelines, as illustrated in Figure 16. In each pipeline environment, the NMPC optimization algorithm was employed to compute collision-free configurations and generate corresponding motion trajectories.

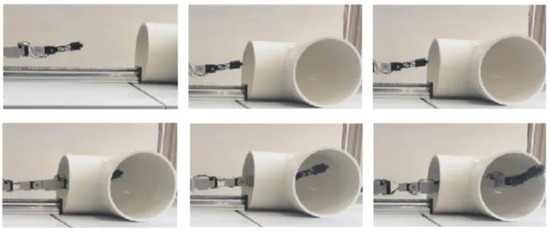

Figure 16.

Experimental platform for pipeline defect detection based on an event camera. The setup comprises the key components of the system, including an HRM, a movable base, a sliding rail, an event-based camera, and the pipeline specimen under inspection.

The HRM’s collision-free configurations were generated through MATLAB simulations. Detailed numerical results are presented in Table 9, which reports the maximum positioning error between the end-effector and the target defect for both the steel and acrylic pipeline environments. To visually demonstrate the insertion process of the HRM, six consecutive visualization frames are presented in Figure 17. During the pipeline insertion process, the illumination from the pipe entrance to the darkest section ranged from 50 Lux down to 10 Lux, corresponding to a low-light environment. Owing to its high dynamic range and asynchronous sensing mechanism, the event camera responded to relative changes in brightness and continuously output an event stream, thereby capturing defect details clearly from the bright pipe entrance to the dim depths of the pipeline. The data collected by the event camera during insertion were then analyzed with ES-YOLO to obtain the detection results.

Table 9.

Geometries of the two pipelines and experimental error results.

Figure 17.

The process of defect detection inside the pipeline using an event camera mounted on the HRM. Six consecutive frames illustrate the collision-free insertion of the HRM into the pipeline. The illuminance at the pipeline entrance is approximately 50 Lux. As the HRM advances deeper into the pipeline, occlusion of the entrance light source by its arm and its own shadow causes the light to diminish, with the minimum illuminance inside the pipeline dropping to approximately 10 Lux.

Based on the aforementioned experiments, the detection results of the proposed pipeline defect detection system are presented in Figure 18 and Figure 19. The results indicate that the target defects were successfully detected by inputting the pipeline data, collected by the event camera mounted on the HRM, into the ES-YOLO framework. These results demonstrate that the bio-inspired vision-based system could effectively identify defects in pipelines made of different materials.

Figure 18.

Steel pipe internal defect detection. (a) shows the shape and defects of the steel pipe; (b) shows the detection results of the steel pipe.

Figure 19.

Acrylic pipe internal defect detection. (a) shows the structure and defects of the acrylic pipe; (b) shows the detection results of the acrylic pipe defects.

In summary, the experimental results demonstrate that the ES-YOLO framework exhibited strong applicability in practical pipeline defect detection and possessed cross-material generalization capability, which validates the robustness and effectiveness of the proposed system.

9. Discussion

In this study, the ES-YOLO framework successfully detects defects in both steel and acrylic pipes, demonstrating that generating event data through simulation is an efficient and feasible strategy to address dataset scarcity in specialized application domains. This finding establishes a reproducible, data-driven paradigm that can be extended to other industrial and scientific fields lacking native event datasets.

Comparative experiments with traditional RGB vision further highlight the advantages of event cameras in low-light conditions. These results not only confirm their technical feasibility but also open new avenues for robotic applications in visually degraded environments such as unlit pipelines, nighttime inspections, and settings with high-dynamic-range illumination changes. Notably, the system achieves defect detection at an illuminance as low as 2 Lux, a task that remains challenging for conventional RGB-based methods.

Moreover, the strong robustness exhibited across both steel and acrylic pipelines warrants deeper investigation. We speculate that this cross-material generalization arises from the intrinsic properties of the RGB-to-event conversion process, which primarily encodes brightness changes rather than absolute color or texture information. By filtering out material-dependent surface details while emphasizing geometric contours of defects, this process enhances detection consistency across diverse environments—a property of considerable practical significance for real-world inspection systems.

In this study, the HRM exhibits notable advantages in confined and complex environments that demand high precision and stability. It enables stable, high-precision, non-contact data acquisition and offers a greater payload capacity for carrying advanced sensor suites. Additionally, its energy consumption is significantly lower than that of UAVs. However, the HRM is less suitable for large-scale environments, such as wide-diameter pipelines or oil storage tanks, where UAVs and other mobile robots demonstrate clear advantages. Notably, the ES-YOLO framework is highly portable and can be deployed across various robotic platforms, including UAVs and ground-based mobile robots.

Ultimately, the HRM, UAV, or other robotic systems serve merely as carriers for executing pipeline inspection tasks. Deploying the ES-YOLO framework on different platforms to support diverse engineering applications presents broad prospects for practical deployment.

However, the current approach has a fundamental limitation: to leverage the mature YOLOv8 architecture, the asynchronous event stream must first be converted into discrete event frames before being input into the ES-YOLO framework. While this conversion step facilitates the application of advanced detection algorithms, it compromises the intrinsic advantages of event cameras, such as microsecond-level temporal resolution and low latency. Therefore, future research should prioritize overcoming this bottleneck by exploring advanced algorithms capable of directly processing raw event streams. A particularly promising direction is the use of Spiking Neural Networks (SNNs), whose event-driven and asynchronous processing characteristics are inherently compatible with the data format of event cameras, offering the potential for low-latency and low-power defect detection. In addition, asynchronous convolutional networks and graph-based methods that operate directly on event streams also warrant further investigation. Ultimately, the goal is to deeply integrate these emerging technologies with the HRM detection system to enhance the generalizability and practical utility of the entire framework.
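The conversion step discussed above, from an asynchronous event stream to discrete event frames, can be illustrated with a minimal sketch. It assumes fixed-length time windows and a signed polarity count per pixel, which is one common representation and not necessarily the one used in ES-YOLO; the event tuple layout `(t_us, x, y, polarity)` and the function name are hypothetical.

```python
from collections import defaultdict


def events_to_frames(events, window_us, width, height):
    """Accumulate (t_us, x, y, polarity) events into one 2D frame per time window."""
    frames = defaultdict(lambda: [[0] * width for _ in range(height)])
    for t_us, x, y, polarity in events:
        frame = frames[t_us // window_us]       # window index for this timestamp
        frame[y][x] += 1 if polarity else -1    # ON events add, OFF events subtract
    # Windows that received no events are skipped in this sketch.
    return [frames[k] for k in sorted(frames)]


# Three hypothetical events on a 2x2 sensor, grouped into 1 ms windows.
stream = [(0, 0, 0, 1), (500, 1, 0, 0), (1500, 0, 1, 1)]
for frame in events_to_frames(stream, window_us=1000, width=2, height=2):
    print(frame)
```

The sketch also shows where temporal resolution is lost: the two events at 0 and 500 microseconds fall into the same frame, so their ordering is no longer available to the detector.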

10. Conclusions

This article proposed an ES-YOLO framework to enhance defect detection in low-light pipeline environments, which leveraged an event camera based on bionic visual perception. To address the issue of scarce event datasets, the RGB NEU-DET dataset was converted into the N-neudet event-based dataset using the ESIM module. Subsequently, the pipeline defect detection model was trained on the generated event data via YOLOv8s. To verify the accuracy of this framework in low-light environments, two sets of comparative experiments were conducted to assess the defect detection performance of RGB cameras versus event cameras within both steel and acrylic pipelines. The results showed that RGB cameras failed to effectively identify defects under low-light conditions, whereas event cameras demonstrated clear advantages. Furthermore, a pipeline defect detection system based on a snake-like HRM was developed, and its feasibility and detection accuracy were validated through physical experiments in realistic pipeline inspection scenarios.

Future work will focus on expanding the breadth and depth of application for the proposed HRM-based detection method. To achieve real-time and efficient system operation, low-power models such as Spiking Neural Networks (SNNs) will be deployed on embedded hardware to reduce inference time and improve energy efficiency. At the system architecture level, migration to the ROS 2 framework will be explored to enhance modularity, stability, and scalability. On the perception level, efforts will target key challenges such as real-time 3D modeling and dynamic path planning in unknown environments—both critical to improving system autonomy. To validate and improve the framework’s generalizability, its application will be extended beyond pipeline interiors to encompass a broader range of industrial geometries, including ventilation ducts, mining tunnels, and confined industrial cavities. The ultimate goal is to develop an automated and intelligent inspection system capable of adapting to diverse environments while maintaining high autonomy, efficiency, and robustness for inspection tasks in various constrained spaces.

Author Contributions

Conceptualization, X.X.; methodology, X.X. and M.S.; software, M.S. and B.G.; validation, M.S., B.G. and J.W. (Jingxue Wu); data curation, B.G.; writing—original draft preparation, M.S.; writing—review and editing, X.X., J.W. (Jianming Wang) and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HRM | Hyper-Redundant Manipulator |
| NMPC | Nonlinear Model Predictive Control |
| SCA | Snake-inspired Crawling Algorithm |
| DOF | Degrees Of Freedom |
| D-H | Denavit–Hartenberg |

References

1. Kazeminasab, S.; Sadeghi, N.; Janfaza, V.; Razavi, M.; Ziyadidegan, S.; Banks, M.K. Localization, mapping, navigation, and inspection methods in in-pipe robots: A review. IEEE Access 2021, 9, 162035–162058.

2. Le, D.V.K.; Chen, Z.; Rajkumar, R. Multi-sensors in-line inspection robot for pipe flaws detection. IET Sci. Meas. Technol. 2020, 14, 71–82.

3. Gehrig, D.; Gehrig, M.; Hidalgo-Carrió, J.; Scaramuzza, D. Video to events: Recycling video datasets for event cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3586–3595.

4. Rebecq, H.; Gehrig, D.; Scaramuzza, D. ESIM: An open event camera simulator. In Proceedings of the Conference on Robot Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 969–982.

5. Shao, L.; Wang, Y.; Guo, B.; Chen, X. A review over state of the art of in-pipe robot. In Proceedings of the 2015 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 2–5 August 2015; pp. 2180–2185.

6. Kazeminasab, S.; Aghashahi, M.; Banks, M.K. Development of an inline robot for water quality monitoring. In Proceedings of the 2020 5th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 20–22 November 2020; pp. 106–113.

7. Liljebäck, P.; Pettersen, K.Y.; Stavdahl, Ø.; Gravdahl, J.T. A review on modelling, implementation, and control of snake robots. Robot. Auton. Syst. 2012, 60, 29–40.

8. Ji, H.; Xie, H.; Yang, H. A Spatial Path Following Method for Hyper-Redundant Manipulators by Step-by-Step Search and Calculating. In Proceedings of the 2022 7th International Conference on Robotics and Automation Engineering (ICRAE), Singapore, 18–20 November 2022; pp. 292–298.

9. Soltani, A.; Tawfik, H.; Goulermas, J.; Fernando, T. Path planning in construction sites: Performance evaluation of the Dijkstra, A*, and GA search algorithms. Adv. Eng. Inform. 2002, 16, 291–303.

10. Ji, H.; Xie, H.; Wang, C.; Yang, H. E-RRT*: Path Planning for Hyper-Redundant Manipulators. IEEE Robot. Autom. Lett. 2023, 8, 8128–8135.

11. Liu, J.; Tong, Y.; Liu, J. Review of snake robots in constrained environments. Robot. Auton. Syst. 2021, 141, 103785.

12. Zhu, J.; Su, M.; Li, L.; Xiang, Y.; Wang, J.; Xiao, X. Snake-inspired trajectory planning and control for confined pipeline inspection with hyper-redundant manipulators. Biomim. Intell. Robot. 2025, 5, 100245.

13. Liu, T.; Jiang, Z.P. Event-based control of nonlinear systems with partial state and output feedback. Automatica 2015, 53, 10–22.

14. Liu, T.; Qin, Z.; Hong, Y.; Jiang, Z.P. Distributed Optimization of Nonlinear Multiagent Systems: A Small-Gain Approach. IEEE Trans. Autom. Control 2022, 67, 676–691.

15. Jin, Z. Global Asymptotic Stability Analysis for Autonomous Optimization. IEEE Trans. Autom. Control 2025, 1–7.

16. Xu, C.; Liu, Z.; Hu, C.; Li, X. Improved Hybrid A* Algorithm Obstacle Avoidance Strategy Based on Reinforcement Learning. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 4077–4082.

17. Song, R.; Liu, Y.; Bucknall, R. Smoothed A* algorithm for practical unmanned surface vehicle path planning. Appl. Ocean Res. 2019, 83, 9–20.

18. Yu, D.; Roh, M.I. Method for anti-collision path planning using velocity obstacle and A* algorithms for maritime autonomous surface ship. Int. J. Nav. Archit. Ocean. Eng. 2024, 16, 100586.

19. Tang, X.; Zhou, H.; Xu, T. Obstacle avoidance path planning of 6-DOF robotic arm based on improved A* algorithm and artificial potential field method. Robotica 2024, 42, 457–481.

20. Luo, M.; Hou, X.; Yang, J. Surface Optimal Path Planning Using an Extended Dijkstra Algorithm. IEEE Access 2020, 8, 147827–147838.

21. Orchard, G.; Jayawant, A.; Cohen, G.K.; Thakor, N. Converting static image datasets to spiking neuromorphic datasets using saccades. Front. Neurosci. 2015, 9, 437.

22. Amir, A.; Taba, B.; Berg, D.; Melano, T.; McKinstry, J.; Di Nolfo, C.; Nayak, T.; Andreopoulos, A.; Garreau, G.; Mendoza, M.; et al. A low power, fully event-based gesture recognition system. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7243–7252.

23. Calabrese, E.; Taverni, G.; Awai Easthope, C.; Skriabine, S.; Corradi, F.; Longinotti, L.; Eng, K.; Delbruck, T. DHP19: Dynamic vision sensor 3D human pose dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

24. Binas, J.; Neil, D.; Liu, S.C.; Delbruck, T. DDD17: End-to-end DAVIS driving dataset. arXiv 2017, arXiv:1711.01458.

25. Gehrig, M.; Aarents, W.; Gehrig, D.; Scaramuzza, D. DSEC: A Stereo Event Camera Dataset for Driving Scenarios. IEEE Robot. Autom. Lett. 2021, 6, 4947–4954.

26. Cannici, M.; Ciccone, M.; Romanoni, A.; Matteucci, M. Asynchronous convolutional networks for object detection in neuromorphic cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

27. Maqueda, A.I.; Loquercio, A.; Gallego, G.; García, N.; Scaramuzza, D. Event-based vision meets deep learning on steering prediction for self-driving cars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5419–5427.

28. Alonso, I.; Murillo, A.C. EV-SegNet: Semantic segmentation for event-based cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

29. Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. Events-to-video: Bringing modern computer vision to event cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 3857–3866.

30. Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. High Speed and High Dynamic Range Video with an Event Camera. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1964–1980.

31. Kaiser, J.; Tieck, J.C.V.; Hubschneider, C.; Wolf, P.; Weber, M.; Hoff, M.; Friedrich, A.; Wojtasik, K.; Roennau, A.; Kohlhaas, R.; et al. Towards a framework for end-to-end control of a simulated vehicle with spiking neural networks. In Proceedings of the 2016 IEEE International Conference on Simulation, Modeling, and Programming for Autonomous Robots (SIMPAR), San Francisco, CA, USA, 13–16 December 2016; pp. 127–134.

32. Li, W.; Saeedi, S.; McCormac, J.; Clark, R.; Tzoumanikas, D.; Ye, Q.; Huang, Y.; Tang, R.; Leutenegger, S. Interiornet: Mega-scale multi-sensor photo-realistic indoor scenes dataset. arXiv 2018, arXiv:1809.00716.

33. Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149.

34. Gallego, G.; Lund, J.E.; Mueggler, E.; Rebecq, H.; Delbruck, T.; Scaramuzza, D. Event-based, 6-DOF camera tracking from photometric depth maps. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2402–2412.

35. Gallego, G.; Forster, C.; Mueggler, E.; Scaramuzza, D. Event-based camera pose tracking using a generative event model. arXiv 2015, arXiv:1510.01972.

36. Jiang, H.; Sun, D.; Jampani, V.; Yang, M.H.; Learned-Miller, E.; Kautz, J. Super slomo: High quality estimation of multiple intermediate frames for video interpolation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 9000–9008.

37. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

38. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8, Version 8.0.0. Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 22 August 2025).

39. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

40. Khanam, R.; Hussain, M.; Hill, R.; Allen, P. A comprehensive review of convolutional neural networks for defect detection in industrial applications. IEEE Access 2024, 12, 94250–94295.

41. Gehrig, D.; Loquercio, A.; Derpanis, K.G.; Scaramuzza, D. End-to-end learning of representations for asynchronous event-based data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5633–5643.

42. Bao, Y.; Song, K.; Liu, J.; Wang, Y.; Yan, Y.; Yu, H.; Li, X. Triplet-graph reasoning network for few-shot metal generic surface defect segmentation. IEEE Trans. Instrum. Meas. 2021, 70, 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).