Abstract

To address the insufficient global search efficiency of the original Whale Optimization Algorithm (WOA), this paper proposes an enhanced variant (ImWOA) integrating three strategies. First, a dynamic cluster center-guided search mechanism based on K-means clustering divides the population into subgroups that conduct targeted searches around dynamically updated centroids, with real-time centroid recalculation enabling evolutionary adaptation. This strategy innovatively combines global optima with local centroids, significantly improving global exploration while reducing redundant searches. Second, a dual-modal diversity-driven adaptive mutation mechanism simultaneously evaluates spatial distribution and fitness-value diversity to comprehensively characterize population heterogeneity. It dynamically adjusts mutation probability based on diversity states, enhancing robustness. Finally, a pattern search strategy (GPSPositiveBasis2N algorithm) is embedded as a periodic optimization module, synergizing WOA’s global exploration with GPSPositiveBasis2N’s local precision to boost solution quality and convergence. Evaluated on the CEC2017 benchmark against the original WOA, eight state-of-the-art metaheuristics, and five advanced WOA variants, ImWOA achieves: (1) optimal mean values for 20/29 functions in 30D tests; (2) optimal mean values for 26/29 functions in 100D tests; and (3) first rank in 3D-TSP validation, demonstrating superior capability for complex optimization.

1. Introduction

“Optimization” refers to the process of adjusting decision variables to achieve the optimal value of an objective function under given constraints. As technology advances, modern society faces increasingly complex optimization challenges across multiple domains such as engineering design [1], resource allocation [2], financial risk management [3], data mining [4], material development [5], satellite image analysis [6], energy consumption [7], epidemic control [8], path planning [9], intrusion detection [10], mechanical design [11], feature selection [12,13], machine learning model optimization [14], sustainable applications [15], and resource scheduling [16].

These problems often involve nonlinear, multimodal, high-dimensional, or dynamic scenarios that are difficult to address using traditional methods. This is where metaheuristic algorithms increasingly demonstrate their critical value as core tools for solving complex optimization problems.

The principal advantages of metaheuristic algorithms can be summarized as follows: (1) their applicability to optimization problems lacking analytical objective functions or gradient information; (2) enhanced capability in multi-objective optimization through population-based search that yields multiple solutions in a single execution, contrasting with conventional mathematical programming methods; (3) superior global search capabilities enabled by stochastic exploration; (4) inherent compatibility with parallel computing architectures; and (5) effective handling of mixed-variable optimization problems involving both integer and continuous variables.

Well-known metaheuristic algorithms include: the Barnacles Mating Optimizer (BMO) [17], Earthworm Optimization Algorithm (EOA) [18], Seagull Optimization Algorithm (SOA) [19], Tunicate Swarm Algorithm (TSA) [20], Brain Storm Optimization (BSO) [21], Heap-based optimizer (HBO) [22], Teamwork Optimization Algorithm (TOA) [23], Chaos Game Optimization (CGO) [24], Sine Cosine Algorithm (SCA) [25], Electromagnetic Field Optimization (EFO) [26], Artificial Bee Colony (ABC) [27], Cuckoo Search Algorithm (CSA) [28], Elephant Herding Optimization (EHO) [29], Sea Lion Optimization Algorithm (SLO) [30], and Whale Optimization Algorithm (WOA) [31].

The WOA has gained widespread popularity among researchers due to its strong global search capability, straightforward parameter settings, and rapid convergence rate. The WOA, however, exhibits certain limitations including its susceptibility to local optima entrapment, limited scalability in high-dimensional search spaces, and parameter sensitivity. To address these weaknesses and enhance its optimization capabilities, researchers have conducted extensive modifications through different improvement pathways.

Liang et al. [32] proposed an enhanced WOA (DGSWOA), with core improvements including: population initialization via a Sine–Tent–Cosine mapping to ensure uniform distribution of individuals in the search space; integration of the primary knowledge acquisition phase from the Gaining–Sharing Knowledge (GSK) algorithm to enhance global search capability and avoid local optima; and implementation of a Dynamic Opposition-Based Learning (DOBL) strategy to update population individuals, further increasing solution diversity. These enhancements collectively improve the algorithm’s convergence speed and optimization quality. Liu et al. [33] proposed an improved WOA (DECWOA) with core enhancements including: Sine chaotic mapping for population initialization to enhance diversity; an adaptive inertia weight strategy that dynamically adjusts weights in the position update formula to balance global exploration and local exploitation; and integration of the Differential Evolution (DE) algorithm to improve search speed and accuracy through mutation, crossover, and selection. These innovations effectively address the original algorithm’s tendencies toward premature convergence and slow convergence. Chakraborty et al. [34] proposed an improved WOA whose core enhancement is an iterative partitioning strategy that dedicates the first half of the iterations to exploration and the latter half to exploitation. During exploration, two modified prey search strategies are introduced to enhance solution diversity. In the exploitation phase, the concept of whale “cooperative hunting” is integrated with the original strategies to strengthen local search capability and the convergence rate. Sun et al. [35] proposed an improved WOA (MWOA-CS) with core enhancements including: introducing a nonlinear convergence factor and a cosine function-based inertia weight to dynamically balance the exploration–exploitation capabilities of WOA, and integrating the update mechanism of the Cross Search Optimizer (CSO). During iterations, the algorithm randomly decides, for each dimension of the optimization problem, whether to execute the modified WOA or CSO, effectively avoiding local optima while improving convergence speed and precision for large-scale global optimization problems. Shen et al. [36] proposed an improved multi-population evolution-based WOA (MEWOA). Its core innovations include dividing the whale population into three sub-populations (exploration-oriented, exploitation-oriented, and balance-oriented), each employing distinct movement strategies to emphasize global exploration, local exploitation, and exploration–exploitation balance, respectively. By integrating a population evolution strategy that alternates between position updates and evolutionary refinement during iterations, the algorithm effectively enhances population diversity and prevents premature convergence. These advancements significantly strengthen its global search capability and convergence speed in addressing global optimization and engineering design challenges. Li et al. [37] proposed an improved multi-strategy WOA (MWOA), with core enhancements including: introducing an elite opposition-based learning strategy to optimize initial populations, a nonlinear convergence factor to balance exploration and exploitation, Differential Evolution (DE) mutation strategies to strengthen global exploration capability, and a Lévy flight perturbation strategy to enhance search space diversity. These strategies collectively enhance the convergence speed and precision of the original WOA, effectively avoiding local optima while demonstrating stronger competitiveness in tackling complex global optimization problems.

While existing enhancement schemes have improved the performance of WOA, there remains room for improvement in its global exploration capability and convergence speed. To address these limitations, this study strengthens WOA with novel strategies, aiming to further advance its global search capacity, local exploitation capability, and convergence performance. The main contributions and innovations of this work are summarized as follows:

- A Dynamic Cluster Center-guided Search Strategy Based on the K-means Clustering Algorithm: This method divides the population into multiple subgroups, with each subgroup conducting searches around its corresponding clustering center. Meanwhile, the clustering centers are recalculated in each iteration to dynamically adjust the centroids, enabling rapid adaptation to population changes and enhancing adaptability and robustness, thus avoiding premature convergence. Additionally, the position update strategy—which integrates both the position of the globally optimal individual and the centroid position of local clusters—demonstrates rapid environmental adaptability. It reduces redundant search operations while improving overall search efficiency, reliably converging to the globally optimal solution.

- Dual-Modal Population Diversity-Driven Adaptive Mutation Strategy: When individuals in the population exhibit excessive similarity, the algorithm tends to converge prematurely to a local optimum. Traditional methods assess diversity mainly by measuring the distances between individuals within the population, which fails to fully capture population diversity. To more comprehensively depict the heterogeneous characteristics of the population, this strategy considers both spatial distribution diversity and fitness value diversity. Additionally, this strategy develops a dynamic mutation mechanism that adjusts the mutation probability in real time based on the population’s diversity state. When diversity is low, mutations are applied with a higher probability to enhance global exploration capabilities; conversely, when diversity is high, mutations are applied with a lower probability to maintain convergence efficiency. Compared to static parameter configurations, this adaptive adjustment mechanism demonstrates stronger robustness and adaptability to specific problems.

- Pattern Search Strategy Based on the GPSPositiveBasis2N Algorithm: WOA struggles to perform refined searches in certain complex spaces. This proposed strategy combines WOA with a pattern search method to leverage the strengths of both approaches, thereby enhancing optimization performance. Specifically, WOA can swiftly locate potential optimal regions within the global search space, while the pattern search strategy conducts refined searches within these regions, thus improving overall efficiency. Consequently, this strategy introduces a hybrid optimization framework that integrates the pattern search method, namely the “GPSPositiveBasis2N algorithm,” as a periodic optimization module within the workflow of the WOA. By combining the complementary advantages of global exploration (achieved by WOA) and local precise optimization (achieved by GPSPositiveBasis2N), this framework enhances the solution quality and convergence efficiency.

The structure of this paper is outlined as follows: Section 2 systematically outlines the core mechanisms and procedural framework of the original WOA. Section 3 elaborates on three innovative strategies of the ImWOA, including design motivations, theoretical significance, and mathematical modeling. Section 4 presents quantitative experimental results of ImWOA and benchmark algorithms on the CEC2017 test suite, with comprehensive analysis of key metrics such as convergence behavior and solution accuracy. Section 5 applies ImWOA to three-dimensional Traveling Salesman Problems (TSPs), validating its engineering practicality through performance comparisons with other WOA variants. Finally, Section 6 summarizes the research contributions and proposes potential directions for future work.

2. The Original WOA

The WOA is a metaheuristic algorithm proposed by Mirjalili and Lewis [31] in 2016, which simulates the bubble-net hunting behavior of humpback whales to find optimal solutions. It primarily consists of three components: prey encirclement, bubble-net attack, and searching for prey.

2.1. Prey Encirclement

When hunting, whales form a circle around their prey and progressively reduce the size of this encirclement, causing the individual whales to converge towards the current best solution (the location of the prey). The specific mathematical equations are as follows:

$$\mathcal{D} = \left| C \cdot X^*(t) - X(t) \right| \tag{1}$$

$$X(t+1) = X^*(t) - A \cdot \mathcal{D} \tag{2}$$

where t represents the current iteration number, $X(t)$ denotes the position of the whale at the t-th iteration, $X(t+1)$ represents the position of the whale at the (t + 1)-th iteration, $X^*(t)$ signifies the current optimal position, and $\mathcal{D}$ is the distance between the whale and the current optimal position. A and C are parameters, and their calculation formulas are as follows:

$$A = 2a \cdot r - a \tag{3}$$

$$C = 2r \tag{4}$$

$$a = 2 - \frac{2t}{T} \tag{5}$$

where r represents a random number uniformly distributed in [0, 1] (drawn independently for A and C), a denotes the convergence coefficient, which decreases linearly from 2 to 0 as iterations increase, and T denotes the maximum number of iterations.

2.2. Bubble-Net Attack

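During this phase, whales trap their prey by creating a spiral bubble net and simultaneously swim along a spiral path to approach the target. The specific mathematical formulations are as follows:

$$\mathcal{D}' = \left| X^*(t) - X(t) \right| \tag{6}$$

$$X(t+1) = \mathcal{D}' \cdot e^{bl} \cdot \cos(2\pi l) + X^*(t) \tag{7}$$

where $\mathcal{D}'$ denotes the distance between the whale and the current optimal position, the constant b governs the shape of the logarithmic spiral, and l represents a random number in the interval [−1, 1].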

2.3. Searching for Prey

During this phase, whales engage in random walking to expand the search range, and the algorithm randomly selects reference individuals to achieve global exploration. The specific mathematical formulations are as follows:

$$\mathcal{D} = \left| C \cdot X_{\text{rand}} - X(t) \right| \tag{8}$$

$$X(t+1) = X_{\text{rand}} - A \cdot \mathcal{D} \tag{9}$$

where $X_{\text{rand}}$ designates the position of a whale selected at random from the current population. The parameters A and C are computed using the relationships defined in Equations (3) and (4), respectively.

The selection of behavioral strategies in the algorithm is controlled by the parameters A and p, where p is a random number uniformly distributed in [0, 1]. When p < 0.5 and |A| ≥ 1, searching for prey is executed; when p < 0.5 and |A| < 1, the prey encirclement strategy is carried out; when p ≥ 0.5, the bubble-net attacking strategy is implemented.
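The three behaviors and the selection rule above can be sketched in Python, the language the experiments in Section 4 report using. This is a minimal illustrative sketch, not the authors' code: `woa_step` is a hypothetical helper, A and C are drawn as scalars per whale (some implementations draw them per dimension), and the spiral constant b = 1 is an assumed default.

```python
import numpy as np

def woa_step(X, X_best, t, T, b=1.0, rng=None):
    """Apply one iteration of the original WOA update to population X (N x D).

    X_best is the current best position (length D), t the current iteration and
    T the maximum number of iterations. A and C are drawn as scalars per whale.
    """
    rng = np.random.default_rng() if rng is None else rng
    N = X.shape[0]
    a = 2.0 - 2.0 * t / T                      # decreases linearly from 2 to 0
    X_new = np.empty_like(X)
    for i in range(N):
        A = 2.0 * a * rng.random() - a         # A lies in [-a, a]
        C = 2.0 * rng.random()                 # C lies in [0, 2]
        p = rng.random()
        if p < 0.5 and abs(A) >= 1.0:
            # Searching for prey: move relative to a randomly chosen whale.
            X_rand = X[rng.integers(N)]
            dist = np.abs(C * X_rand - X[i])
            X_new[i] = X_rand - A * dist
        elif p < 0.5:
            # Prey encirclement: shrink toward the current best position.
            dist = np.abs(C * X_best - X[i])
            X_new[i] = X_best - A * dist
        else:
            # Bubble-net attack: logarithmic spiral around the best position.
            l = rng.uniform(-1.0, 1.0)
            dist = np.abs(X_best - X[i])
            X_new[i] = dist * np.exp(b * l) * np.cos(2.0 * np.pi * l) + X_best
    return X_new

# Example: one step for a random population of 5 whales in 3 dimensions.
rng = np.random.default_rng(0)
X = rng.uniform(-10.0, 10.0, size=(5, 3))
print(woa_step(X, X[0], t=1, T=500, rng=rng))
```

Because |A| ≤ a and a shrinks to 0, the prey-search branch can only fire early in the run, which is how the original WOA shifts from exploration to exploitation.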

3. Proposed ImWOA

This section presents an enhanced variant of the WOA (ImWOA) to address inherent limitations in the original WOA.

3.1. A Dynamic Cluster Center-Guided Search Strategy Based on the K-Means Clustering Algorithm

The k-means clustering algorithm divides the population into multiple subgroups, with each subgroup conducting searches around its corresponding cluster center. This mechanism enhances population diversity through spatial partitioning. By recalculating cluster centers in each iteration, the algorithm achieves dynamic adjustment of centroids, enabling rapid adaptation to population changes. Concurrently, it can promptly adjust search directions according to the current population distribution. This strategic framework allows the algorithm to better regulate search orientations during iterative processes, thereby improving both adaptability and robustness. Specifically, the dynamic centroid updating mechanism establishes an adaptive balance between the exploration and exploitation phases, while the subgroup partitioning structure effectively prevents premature convergence through diversified local searches.

The formula for the number of clusters is as follows:

where N represents the number of individuals in the population.

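For each iteration, the K-means clustering algorithm partitions the population $X = \{X_1, X_2, \ldots, X_N\}$ into K disjoint clusters:

$$X = \mathcal{C}_1 \cup \mathcal{C}_2 \cup \cdots \cup \mathcal{C}_K, \quad \mathcal{C}_k \cap \mathcal{C}_l = \varnothing \ (k \neq l) \tag{11}$$

Each of these K clusters corresponds to a distinct cluster centroid, computed as the mean of its members:

$$c_k = \frac{1}{|\mathcal{C}_k|} \sum_{X_i \in \mathcal{C}_k} X_i, \quad k = 1, 2, \ldots, K \tag{12}$$

For an individual $X_i$ assigned to cluster $\mathcal{C}_k$, its corresponding cluster center is denoted as $c_{k(i)}$. Let D denote the dimensionality of each individual in the population, corresponding to the number of decision variables in the D-dimensional search space.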
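A minimal sketch of the per-iteration partitioning step, assuming plain Lloyd's K-means with random initialization from population members and a fixed cap on refinement passes; the paper does not specify these details here. `kmeans_partition` is a hypothetical helper that only produces cluster labels and centroids; it deliberately leaves out the position update formula, which is not reproduced in this section.

```python
import numpy as np

def kmeans_partition(X, K, n_iter=20, rng=None):
    """Partition population X (N x D) into K clusters with Lloyd's algorithm.

    Returns labels (N,) with values in 0..K-1 and centroids (K x D).
    Requires K <= N. Initial centroids are K distinct randomly chosen individuals.
    """
    rng = np.random.default_rng() if rng is None else rng
    N = X.shape[0]
    centroids = X[rng.choice(N, size=K, replace=False)].astype(float)

    def assign(c):
        # Squared Euclidean distance from every individual to every centroid.
        d2 = ((X[:, None, :] - c[None, :, :]) ** 2).sum(axis=2)
        return d2.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(centroids)
        new_centroids = centroids.copy()
        for k in range(K):
            members = X[labels == k]
            if len(members) > 0:          # an empty cluster keeps its old centroid
                new_centroids[k] = members.mean(axis=0)
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    # Final assignment is always consistent with the returned centroids.
    return assign(centroids), centroids

# Example: cluster a population and look up each individual's own centroid.
rng = np.random.default_rng(0)
X = rng.uniform(-10.0, 10.0, size=(30, 5))
labels, centroids = kmeans_partition(X, K=3, rng=rng)
own_center = centroids[labels]   # row i is the centroid of the cluster containing X[i]
```

The array `own_center` holds, row by row, the cluster center associated with each individual, which is the quantity the dynamic cluster center-guided update combines with the global best.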

The position update formula based on the dynamic cluster center-guided search strategy is presented as follows:

where $i = 1, 2, \ldots, N$ and $j = 1, 2, \ldots, D$. In addition, $X^*_j$ denotes the j-th dimensional component of the current optimal individual in the population, and $c_{k(i),j}$ represents the j-th dimensional component of the center $c_{k(i)}$ of the cluster to which individual $X_i$ belongs. Furthermore, rand represents a random number uniformly distributed in the interval [0, 1], serving to introduce stochastic perturbations that prevent premature convergence, and randn denotes a random number sampled from the standard Gaussian distribution $\mathcal{N}(0, 1)$, whose purpose is to enhance local escape capability through Gaussian noise injection.

In summary, this dynamic cluster center-guided search strategy balances global exploration and local exploitation by partitioning the population into multiple clusters. The clustering step adds only modest computational overhead, while the dynamic centroid adaptation mechanism responds to the evolving population distribution across iterations. The position update strategy, which incorporates both the global best individual’s position and the local cluster centroid’s position, adapts quickly to the search landscape and reduces redundant search operations while enhancing overall search efficiency. Together, these mechanisms help the algorithm converge toward the global optimum on complex optimization problems, particularly those with non-convex or multimodal landscapes.

3.2. Dual-Modal Population Diversity-Driven Adaptive Mutation Strategy

In evolutionary optimization algorithms, population diversity plays a crucial role in maintaining the balance between exploration and exploitation. When individuals in the population exhibit excessive similarity, the algorithm tends to converge prematurely to local optima and fails to discover superior solutions. Traditional methods primarily evaluate diversity by measuring the distances between individuals within the population. However, such approaches fail to fully reflect the diversity of the population because they ignore the fitness values of individuals. To address this limitation, this study proposes a comprehensive diversity evaluation framework that simultaneously incorporates both spatial distribution diversity and fitness value diversity, thereby enabling a more holistic characterization of population heterogeneity.

Furthermore, traditional mutation mechanisms typically employ fixed probability parameters. Building upon our theoretical analysis, we develop a dynamic mutation mechanism driven by dual-modal diversity monitoring (spatial and fitness modalities). This innovation enables real-time adjustment of mutation probability according to population diversity states: when the diversity is low, mutations are applied with a higher probability to enhance the global exploration capability; conversely, when the diversity is high, mutations are applied with a lower probability to maintain the convergence efficiency. This self-adaptive regulation mechanism demonstrates enhanced robustness and problem-specific adaptability compared to static parameter configurations.

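The spatial diversity metric of the population, denoted as $\text{Div}_{\text{pos}}$, is computed through the following procedure:

First, each parameter dimension of the population is independently normalized using the min–max scaling method, computed as:

$$\tilde{x}_{i,j} = \frac{x_{i,j} - x_j^{\min}}{x_j^{\max} - x_j^{\min}} \tag{14}$$

where $i = 1, 2, \ldots, N$ and $j = 1, 2, \ldots, D$. In addition, $x_j^{\min}$ and $x_j^{\max}$, respectively, denote the minimum and maximum values of the j-th column in the population matrix X.

Second, calculate the standard deviation of each column in the column-normalized population matrix $\tilde{X}$:

$$\sigma_j = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(\tilde{x}_{i,j} - \bar{x}_j\right)^2} \tag{15}$$

where $j = 1, 2, \ldots, D$, and $\bar{x}_j$ represents the mean of the j-th column of $\tilde{X}$, computed as:

$$\bar{x}_j = \frac{1}{N} \sum_{i=1}^{N} \tilde{x}_{i,j} \tag{16}$$

Finally, calculate the mean of the standard deviations of all columns:

$$\text{Div}_{\text{pos}} = \frac{1}{D} \sum_{j=1}^{D} \sigma_j \tag{17}$$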

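The fitness diversity metric of the population, denoted as $\text{Div}_{\text{fit}}$, is computed through the following procedure:

First, let $f_i$ denote the fitness value of the i-th individual in the population, where $i = 1, 2, \ldots, N$. The complete fitness set can be expressed as $\text{Fitness} = \{f_1, f_2, \ldots, f_N\}$.

Second, the fitness values are normalized across the population using min–max scaling to establish a unified measurement scale, computed as:

$$\tilde{f}_i = \frac{f_i - \min(\text{Fitness})}{\max(\text{Fitness}) - \min(\text{Fitness})} \tag{18}$$

where $i = 1, 2, \ldots, N$, and min(Fitness) and max(Fitness), respectively, denote the minimum and maximum values within the complete fitness set Fitness.

Finally, the fitness diversity metric is quantified as the standard deviation of the normalized fitness values across the population, computed as:

$$\text{Div}_{\text{fit}} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(\tilde{f}_i - \bar{f}\right)^2} \tag{19}$$

where $\bar{f}$ denotes the mean normalized fitness value, computed as:

$$\bar{f} = \frac{1}{N} \sum_{i=1}^{N} \tilde{f}_i \tag{20}$$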
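The two diversity metrics can be computed in a few lines of NumPy. This sketch assumes the population form of the standard deviation (NumPy's default `ddof=0`), which is the form consistent with an upper bound of 0.5 on each metric. The guards for a zero-range column or constant fitness are assumptions added to avoid division by zero, since the text does not say how that case is handled; both function names are hypothetical.

```python
import numpy as np

def spatial_diversity(X):
    """Mean per-dimension std of the min-max normalized population X (N x D)."""
    X = np.asarray(X, dtype=float)
    col_min, col_max = X.min(axis=0), X.max(axis=0)
    # Assumed guard: a constant column has zero spread, so it normalizes to zeros.
    span = np.where(col_max > col_min, col_max - col_min, 1.0)
    X_norm = (X - col_min) / span
    return float(X_norm.std(axis=0).mean())       # ddof=0: population std

def fitness_diversity(fitness):
    """Std of the min-max normalized fitness values."""
    f = np.asarray(fitness, dtype=float)
    span = f.max() - f.min()
    if span == 0.0:                               # assumed guard: identical fitness
        return 0.0
    f_norm = (f - f.min()) / span
    return float(f_norm.std())

# Example on a random population, using the sphere function as a stand-in objective.
rng = np.random.default_rng(0)
pop = rng.uniform(-100.0, 100.0, size=(30, 10))
fit = np.sum(pop ** 2, axis=1)
print(spatial_diversity(pop), fitness_diversity(fit))
```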

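The dynamic mutation probability threshold $P_m$ is derived through weighted fusion of the spatial diversity ($\text{Div}_{\text{pos}}$) and fitness diversity ($\text{Div}_{\text{fit}}$) metrics:

$$P_m = w_1 \cdot \text{Div}_{\text{pos}} + w_2 \cdot \text{Div}_{\text{fit}} \tag{21}$$

where the weighting coefficients $w_1$ and $w_2$ are set empirically with $w_1 = 2w_2$, reflecting a 2:1 priority ratio for spatial diversity over fitness diversity.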

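Given the normalized population position data $\tilde{x}_{i,j} \in [0, 1]$ and fitness values $\tilde{f}_i \in [0, 1]$, combined with the nature of standard deviation metrics under min–max scaling, we analytically derive the ranges of the diversity metrics:

Position Diversity Bound:

$$0 \le \text{Div}_{\text{pos}} \le 0.5 \tag{22}$$

Fitness Diversity Bound:

$$0 \le \text{Div}_{\text{fit}} \le 0.5 \tag{23}$$

Substituting these bounds into Equation (21), the dynamic mutation probability is theoretically confined to:

$$0 \le P_m \le 0.5\,(w_1 + w_2) \tag{24}$$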
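Both 0.5 bounds follow from an elementary variance inequality, assuming the population (1/N) form of the standard deviation. For any values $z_1, \ldots, z_N \in [0, 1]$ with mean $\bar{z}$, using $z_i^2 \le z_i$ on $[0, 1]$:

$$
\begin{aligned}
\sigma^2 &= \frac{1}{N}\sum_{i=1}^{N} z_i^2 - \bar{z}^2
\;\le\; \frac{1}{N}\sum_{i=1}^{N} z_i - \bar{z}^2
= \bar{z}\,(1 - \bar{z}) \;\le\; \frac{1}{4},
\qquad\text{hence}\qquad \sigma \le \frac{1}{2}.
\end{aligned}
$$

Applying this to each min–max normalized column and averaging over the D columns bounds the spatial metric by 0.5; applying it to the normalized fitness values bounds the fitness metric by 0.5. The bound is attained when half of the values equal 0 and half equal 1 (N even).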

The mutation mechanism is activated stochastically when a uniformly distributed random number exceeds the dynamic threshold $P_m$, as formalized by:

$$\text{rand} > P_m, \quad \text{rand} \sim U(0, 1) \tag{25}$$

Probabilistic Interpretation:

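- Low-Diversity Regime (small $P_m$)

  - Implies reduced population diversity: $\text{Div}_{\text{pos}}$ and $\text{Div}_{\text{fit}}$ are both close to 0.

  - Higher activation probability: $\Pr(\text{rand} > P_m) = 1 - P_m$, which approaches 1.

  - Promotes intensified exploration through frequent mutations.

- High-Diversity Regime ($P_m$ close to its upper bound $0.5\,(w_1 + w_2)$)

  - Indicates sufficient population diversity: $\text{Div}_{\text{pos}}$ and $\text{Div}_{\text{fit}}$ approach 0.5.

  - Lower activation probability: $\Pr(\text{rand} > P_m) = 1 - P_m$, which decreases toward $1 - 0.5\,(w_1 + w_2)$.

  - Prioritizes exploitation by suppressing unnecessary mutations.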
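A sketch of the threshold fusion and the stochastic trigger follows. The weights w1 = 2/3 and w2 = 1/3 are assumed here purely to realize the stated 2:1 ratio; they are not the paper's reported values. Whether the trigger is drawn once per iteration or once per individual is also not stated in this section, so the hypothetical helper `should_mutate` simply makes one draw per call.

```python
import numpy as np

def mutation_probability(div_pos, div_fit, w1=2/3, w2=1/3):
    """Weighted fusion of the two diversity metrics into the threshold P_m.

    w1 = 2/3 and w2 = 1/3 are illustrative values that satisfy the 2:1 ratio.
    """
    return w1 * div_pos + w2 * div_fit

def should_mutate(p_m, rng):
    """Trigger the mutation when a U(0, 1) draw exceeds the threshold p_m."""
    return bool(rng.random() > p_m)

# Monte Carlo check: the empirical firing rate should be close to 1 - p_m.
rng = np.random.default_rng(42)
p_m = mutation_probability(div_pos=0.1, div_fit=0.05)   # low-diversity example
rate = np.mean([should_mutate(p_m, rng) for _ in range(10_000)])
print(f"P_m = {p_m:.3f}, empirical firing rate = {rate:.3f}")
```

Because the trigger fires when a uniform draw exceeds the threshold, its firing probability is 1 − P_m, so a low-diversity population (small P_m) is mutated almost every time.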

If the mutation mechanism is triggered, the mutation formula is as follows:

Here, ub and lb denote the upper and lower bounds of the decision variables, respectively, both structured as row vectors. Moreover, randn(D) is a vector whose D elements are independently sampled from $\mathcal{N}(0, 1)$ (the standard normal distribution). The operator ◯ denotes element-wise exponentiation of the vectors ub − lb and randn(D); that is, each element of ub − lb is raised to the power of the corresponding element of randn(D). Additionally, rand(D) is a vector whose elements are uniformly distributed in [0, 1], and ⊙ denotes element-wise multiplication (the Hadamard product).

This mechanism addresses the limitations of single-indicator approaches by integrating spatial diversity (reflecting the breadth of the population distribution) and fitness diversity (reflecting disparities in solution quality). The mutation probability adjusts automatically to the real-time population state: when the diversity metrics decline (lowering the dynamic threshold so that mutations fire more often), the intensified perturbations help the population escape local optima; conversely, when the diversity metrics increase (raising the threshold so that mutations fire less often), redundant searches are reduced to accelerate convergence. This dynamic regulation overcomes the empirical limitations of static mutation probability schemes.

In late-stage optimization phases or high-dimensional search spaces, conventional methods frequently suffer premature convergence due to population homogenization. The integrated mutation mechanism circumvents this pitfall through the targeted perturbation operator (Equation (30)), which systematically generates diversified solutions across the search space. This strategic disturbance reinvigorates global exploration capabilities while significantly mitigating the risk of local optima entrapment.

3.3. Pattern Search Strategy Based on the GPSPositiveBasis2N Algorithm

The WOA mimics the hunting behavior of humpback whales, exhibiting strong global search capabilities that enable rapid exploration of the solution space. However, in certain complex spaces, it struggles to perform fine-grained searches. By integrating WOA with a pattern search strategy, the advantages of both approaches can be leveraged to enhance optimization performance. Specifically, WOA can quickly identify potential optimal regions in the global scope, while the pattern search strategy can conduct a refined search within these regions, thereby improving overall efficiency.

The traditional pattern search strategy has deficiencies such as being prone to getting stuck in local optima and having relatively low search efficiency. As an improved variant of pattern search, the GPSPositiveBasis2N algorithm demonstrates core advantages over traditional methods in direction set completeness and robustness. A detailed comparison is outlined below.

1. Direction Set Coverage:

Traditional pattern search uses a minimal positive basis (N + 1 directions). For example, in 3D space, it might select [+x, +y, +z] together with the single diagonal direction −(x + y + z). Such a basis still positively spans the space, but only coarsely, so some descent directions are poorly aligned with every available search direction.

The GPSPositiveBasis2N algorithm employs 2N coordinate directions (both positive and negative along each coordinate axis, e.g., [+x, −x, +y, −y, +z, −z] in 3D space). This maximal positive basis provides more uniform directional coverage, which helps avoid stagnation caused by poorly aligned directions, while its symmetric design reduces sensitivity to the search orientation, thereby enhancing search stability.

2. Adaptability to Non-Smooth/Noisy Functions:

Traditional pattern search may frequently become trapped in pseudo-optimal solutions when handling noisy, discontinuous, or non-smooth objective functions because of its sparser direction set.

The GPSPositiveBasis2N algorithm’s broader direction coverage ensures that even if some directions fail due to noise, others may still identify improved points. This yields two key advantages: reduced dependency on function smoothness (making it suitable for black-box models or experimental data in engineering optimization) and robustness that maintains effective search performance in noisy environments.

3. Global Exploration Capability:

Traditional pattern search relies on step-size contraction strategies. Poor initial step-size selection may lead to premature convergence to local regions.

The GPSPositiveBasis2N algorithm explores the design space more thoroughly at larger step sizes by covering all positive and negative coordinate directions, offering two key advantages: reduced dependency on the initial point, which enhances global search capability, and faster escape from local optima during step-size adjustments (e.g., through step enlargement).

4. Convergence Guarantee:

Both traditional pattern search and GPSPositiveBasis2N satisfy the convergence theory of pattern search (for continuously differentiable functions, limit points of the iterates are stationary points). In the latter, the 2N direction set forms a maximal positive basis that positively spans the search space; combined with step-size contraction, this supports progressive convergence and tends to make the search more reliable under non-smooth conditions, although formal guarantees there are weaker than in the smooth case.

The GPSPositiveBasis2N algorithm follows the structured pattern search framework to iteratively refine solutions. Algorithm 1 presents the pseudocode for the GPSPositiveBasis2N algorithm.

Algorithm 1. GPSPositiveBasis2N algorithm

Require: objective function $f$; initial point $x_0$; lower bound $lb$, upper bound $ub$; initial step size $\Delta_0$; tolerance $\varepsilon$; maximum iterations $T_{\max}$

Ensure: optimal solution $x^*$, optimal value $f(x^*)$

In this study, the initial step size is set to 1, the maximum number of iterations is set to 100, and the convergence tolerance is fixed at . Additionally, the initial point is set to the best position $X^*(t)$, where $X^*(t)$ represents the optimal solution identified by the population-based metaheuristic at iteration t. A refinement search with the GPSPositiveBasis2N algorithm is performed every 200 iterations, and its output is assigned as the current optimal position for the subsequent optimization iterations.
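Since the body of Algorithm 1 is not reproduced here, the following is only a generic sketch of a 2N coordinate pattern search using the settings above (initial step 1, at most 100 iterations). It assumes opportunistic polling in the fixed order +e_1, ..., +e_D, −e_1, ..., −e_D, strict-improvement acceptance, step halving after an unsuccessful poll, clipping to [lb, ub], and an arbitrarily chosen tolerance of 1e-6; none of these details is confirmed by the paper, and `gps_positive_basis_2n` is a hypothetical name.

```python
import numpy as np

def gps_positive_basis_2n(f, x0, lb, ub, step=1.0, tol=1e-6, max_iter=100):
    """Generic 2N coordinate pattern search (illustrative, not Algorithm 1 verbatim).

    Polls x + step * d for d in {+e_1..+e_D, -e_1..-e_D}, accepts the first
    strict improvement, and halves the step after an unsuccessful poll.
    """
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    x = np.clip(np.asarray(x0, dtype=float), lb, ub)
    fx = f(x)
    D = x.size
    directions = np.vstack([np.eye(D), -np.eye(D)])   # maximal positive basis
    for _ in range(max_iter):
        if step < tol:                                # mesh is fine enough: stop
            break
        improved = False
        for d in directions:
            y = np.clip(x + step * d, lb, ub)         # keep trial points feasible
            fy = f(y)
            if fy < fx:                               # opportunistic: take first gain
                x, fx, improved = y, fy, True
                break
        if not improved:
            step *= 0.5                               # contract the step size
    return x, fx

# Example: refine a point on the sphere function inside [-5, 5]^2.
sphere = lambda z: float(np.sum(z ** 2))
x_best, f_best = gps_positive_basis_2n(sphere, [3.0, -2.0], lb=-5.0, ub=5.0)
print(x_best, f_best)
```

Inside ImWOA, such a routine would be called every 200 iterations with x0 set to the current best position, and its returned point would replace that position.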

This strategy proposes a hybrid optimization framework where the GPSPositiveBasis2N algorithm is embedded as a periodic refinement module within the WOA workflow. The core idea leverages the complementary strengths of global exploration (via WOA) and local precision (via GPSPositiveBasis2N) to enhance solution quality and convergence efficiency.

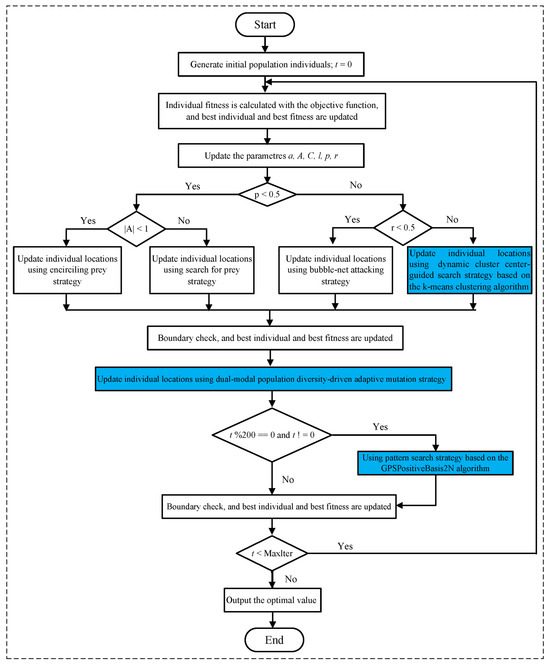

3.4. Whole Framework for ImWOA

The overall structure of the suggested ImWOA approach is depicted in Figure 1.

Figure 1. Framework of ImWOA.

3.5. Computational Complexity Analysis of Algorithms

Time complexity serves as a core metric for evaluating the computational resource demands of an algorithm. For WOA, let the whale population size be N, the maximum number of iterations be T, and the problem dimension be D; the time complexity of WOA is then $O(T \cdot N \cdot D)$. ImWOA adds three components: the dynamic cluster center-guided search strategy based on the K-means clustering algorithm, the dual-modal population diversity-driven adaptive mutation strategy, and the pattern search strategy based on the GPSPositiveBasis2N algorithm. None of them requires a larger population size, more iterations, or a higher problem dimension. The diversity metrics cost $O(N \cdot D)$ per iteration, and each K-means pass costs $O(N \cdot K \cdot D)$. If the number of clusters K and the number of K-means passes are treated as bounded constants, and the GPS refinement (invoked only every 200 iterations, with at most 100 iterations per call) is regarded as lower-order overhead, ImWOA retains the asymptotic time complexity $O(T \cdot N \cdot D)$ of the standard WOA, albeit with a larger constant factor per iteration.

3.6. Convergence Analysis of ImWOA

The global convergence of ImWOA is guaranteed by the following three theoretical mechanisms, which satisfy the convergence conditions of classical optimization theory:

- Global convergence guarantee of stochastic search: According to the Solís–Wets stochastic optimization convergence theorem, ImWOA satisfies two key conditions for global convergence with probability 1:

  - Solution space denseness: Achieved through the diversity-guided mutation mechanism. When population diversity decreases, Gaussian mutation is triggered to ensure coverage of the solution space.

  - Elitism preservation strategy: The strict historical best solution update mechanism ensures that the objective function value is monotonically non-increasing: $f(X^*(t+1)) \le f(X^*(t))$, where $X^*(t)$ denotes the best-so-far solution at iteration t.

- Convergence inheritance of local search: The periodically invoked GPSPositiveBasis2N module conforms to the Torczon pattern search convergence framework:

  - The positive basis search direction set positively spans the search space.

  - The step-size adaptation rule ($\Delta_{k+1} = \theta \Delta_k$ with $0 < \theta < 1$ after an unsuccessful poll) satisfies $\liminf_{k \to \infty} \Delta_k = 0$.

  - A selection strategy that accepts only improved solutions guarantees that the objective value never deteriorates.

- Convergence behavior of hybrid architecture: Based on hybrid optimization theory, the periodic coupling of global exploration (WOA mechanism) and local exploitation (GPS) satisfies:

  where global exploration is controlled by the adaptive mutation probability, and the local search interval (200 iterations) ensures synergistic effects between the two mechanisms.

Note: Given the solution sequence generated by ImWOA, the algorithm satisfies:

- for (Solís–Wets condition).

- during GPS phases (Torczon condition).

- Exponential convergence in probability: for .

4. Experimental Results and Discussions

This study employs the CEC 2017 benchmark function suite [38] to systematically evaluate algorithmic performance across two problem dimensions: 30-dimensional (low-dimensional) and 100-dimensional (high-dimensional) scenarios. The comparative algorithms comprise three categories: (1) the original WOA [31]; (2) eight state-of-the-art metaheuristics: Particle Swarm Optimization (PSO) [39], Biogeography-Based Optimization (BBO) [40], Slime Mould Algorithm (SMA) [41], Differential Evolution (DE) [42], Grey Wolf Optimizer (GWO) [43], Sparrow Search Algorithm (SSA) [44], Harris Hawks Optimization (HHO) [45], and Artificial Bee Colony (ABC) [46]; and (3) five advanced WOA variants: E-WOA [47], IWOA [48], IWOSSA [49], RAV-WOA [50], and WOAAD [51]. All algorithmic parameters strictly adhere to their original literature specifications. Detailed configurations are documented in the respective references to ensure reproducibility.

The experimental settings were a population size of 30, a maximum of 500 iterations, and 30 independent runs per function to account for stochastic variation. All algorithms were implemented in Python 3.12 and executed on an Apple M1 platform (8-core CPU/7-core GPU, 8 GB unified memory) running macOS Sonoma 14.4, using natively compiled ARM64 builds of the scientific computing libraries NumPy 1.26.0 and SciPy 1.11.1.

4.1. Analysis of Results of CEC2017 Test Functions

The comprehensive statistical results for the 30-dimensional (30 dim) and 100-dimensional (100 dim) benchmark functions from the CEC2017 test suite are detailed in Table 1 and Table 2, respectively. These results encompass the minimum (min), mean, and standard deviation (Std) values derived from thirty independent runs of each algorithm. Notably, the optimal mean values for each benchmark function are highlighted in bold font. Furthermore, the “Total” row at the bottom of Table 1 and Table 2 quantifies the frequency with which each algorithm achieved the optimal mean value across all benchmark functions.
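The "Total" row can be reproduced by finding, for each function, the algorithm(s) with the lowest mean and counting wins per algorithm. The sketch below assumes a nested-dictionary layout of the raw run results and credits every algorithm that ties for the lowest mean; both choices are illustrative assumptions rather than the authors' procedure.

```python
from collections import Counter
from typing import Dict, Tuple

import numpy as np

Stats = Tuple[float, float, float]


def summarize(results: Dict[str, Dict[str, np.ndarray]]):
    """results[function][algorithm] -> final values from the independent runs.

    Returns the (min, mean, std) cell for every function/algorithm pair and the
    'Total' row: on how many functions each algorithm attains the lowest mean.
    """
    table: Dict[str, Dict[str, Stats]] = {}
    totals: Counter = Counter()
    for fname, per_alg in results.items():
        row = {alg: (float(v.min()), float(v.mean()), float(v.std(ddof=1)))
               for alg, v in per_alg.items()}
        table[fname] = row
        best_mean = min(cell[1] for cell in row.values())
        for alg, cell in row.items():
            if cell[1] == best_mean:  # the bold cell(s) for this function
                totals[alg] += 1
    return table, dict(totals)
```

Dividing a win count by the number of functions gives the coverage ratios quoted in the text, for example 20/29 ≈ 68.97%.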

Table 1.

CEC2017 benchmark results (30 dimensions). Bold indicates optimal values.

Table 2.

CEC2017 benchmark results (100 dimensions). Bold indicates optimal values.

Under the 30-dimensional scenario, ImWOA shows strong global optimization capability. As detailed in Table 1, ImWOA achieves the optimal mean value on 20 of the 29 benchmark functions (68.97%). On the remaining nine functions, where it does not obtain the optimal mean, ImWOA remains competitive: it ranks 2nd on F10, F18, and F19; 3rd on F21 and F23; 5th on F9, F13, and F26; and 10th on F8. Thus, ImWOA ranks within the top 10 on every non-optimal function and within the top three on more than half of them (5/9). These results indicate that ImWOA escapes local optima effectively on complex problems and delivers more stable solutions than the peer algorithms.

In the more challenging 100-dimensional scenario, ImWOA's advantage becomes more pronounced. As presented in Table 2, ImWOA achieves the optimal mean value on 26 of the 29 benchmark functions (89.66%), an increase of about 20.7 percentage points over the 30-dimensional scenario. On the remaining three functions, it ranks second on F9, fifth on F8, and fourth on F21, so all non-optimal rankings are within the top five. These findings indicate that ImWOA's advantages in solution stability, dimensional scalability, and global exploration grow as problem dimensionality increases, and that its improvement mechanisms help mitigate the “curse of dimensionality”.

Taken together, the 30-dimensional and 100-dimensional results on the CEC2017 benchmark functions show that ImWOA's advantage grows with problem dimensionality. In the 30D setting, ImWOA obtains the optimal mean on 68.97% of the functions (20/29) and ranks within the top 10 on every remaining function. In the 100D setting, the optimal-function ratio rises to 89.66% (26/29), and all three non-optimal functions rank within the top five (best position: second). This positive relationship between dimensionality and relative performance suggests that ImWOA's population coordination mechanism and adaptive search strategies effectively mitigate solution degradation in high-dimensional spaces, making it a promising approach for complex, high-dimensional optimization problems.

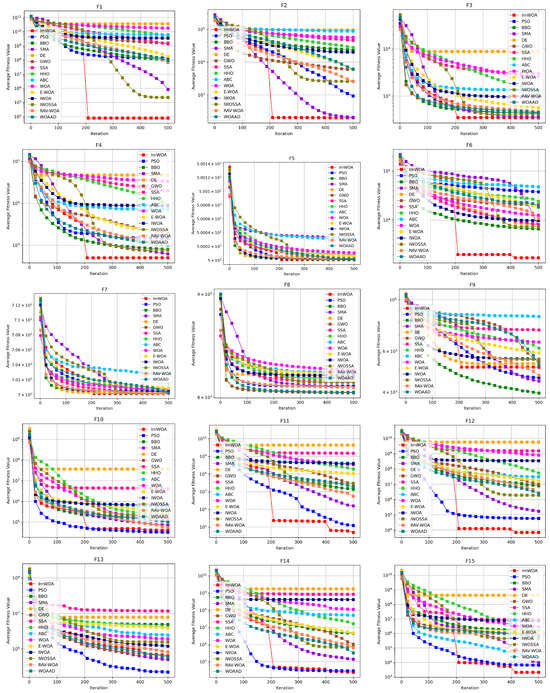

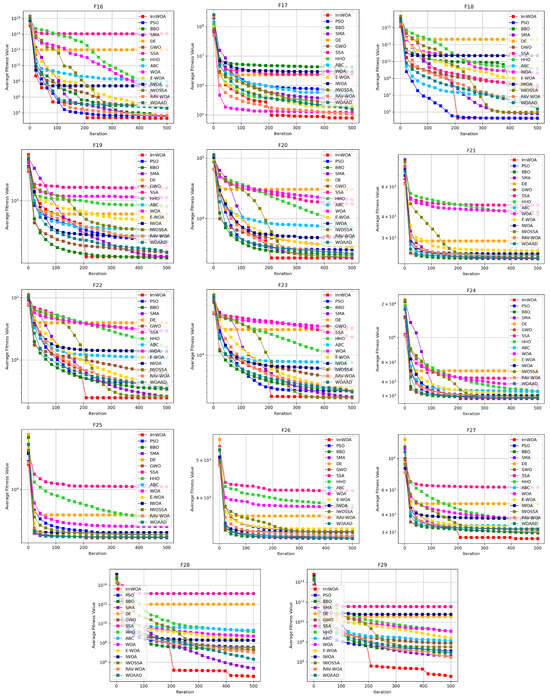

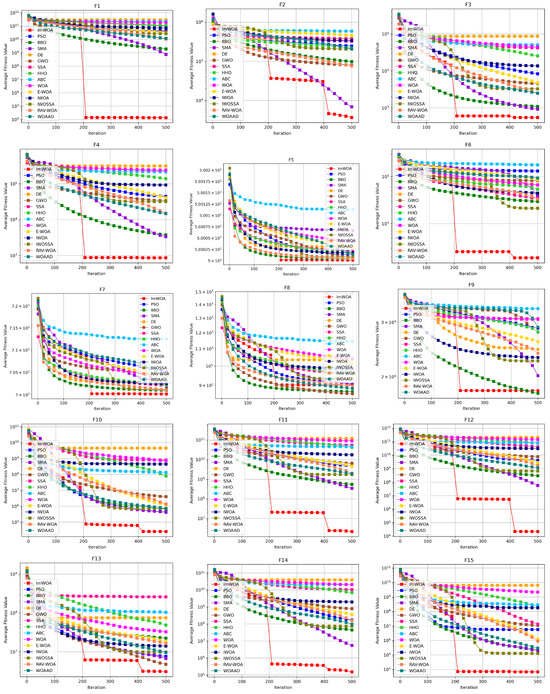

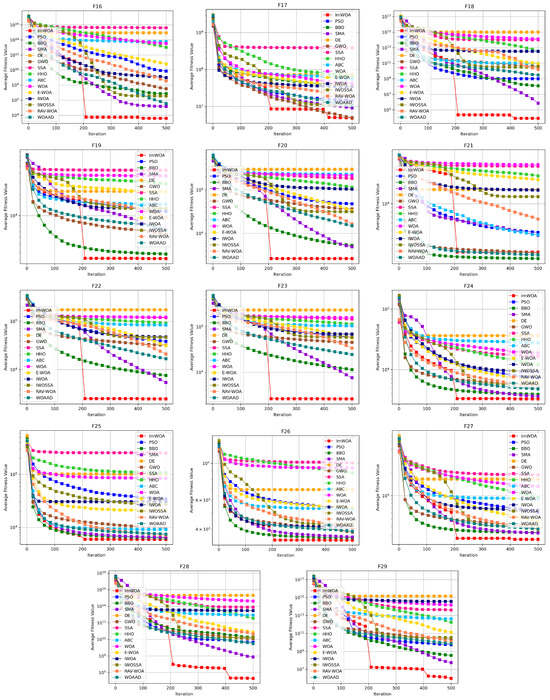

4.2. Analysis of the Convergence Behavior of the Algorithms

To evaluate convergence speed and solution efficiency, this study compares the convergence curves of ImWOA and the comparison algorithms under both 30-dimensional and 100-dimensional scenarios (Figure 2 and Figure 3). The x-axis represents the number of iterations, and the y-axis shows the mean fitness value over 30 independent runs. These curves illustrate each algorithm's search dynamics, and features such as curve slopes and steady-state plateaus reflect differences in how the algorithms balance exploration and exploitation.

Figure 2.

CEC2017 test curves chart (Dim = 30).

Figure 3.

CEC2017 test curves chart (Dim = 100).

The convergence curves in the 30-dimensional scenario (Figure 2) show that ImWOA converges markedly faster on 11 functions (F1, F2, F3, F4, F6, F11, F12, F15, F27, F28, F29): its curves lead the competitors by orders of magnitude in early iterations and reach better final solutions. On functions F5, F7, F10, F14, F16–F20, F22, and F23, ImWOA does not dominate outright, but its curves consistently remain in the top performance tier. On F8, F9, and F21, its advantage diminishes; a plausible explanation is that the smaller search space of the lower-dimensional setting limits the benefit of the cooperative search mechanisms. This suggests that the effectiveness of ImWOA's strategies depends on problem dimensionality.

In the 100-dimensional scenario (Figure 3), ImWOA adapts well to the higher dimensionality. On 19 benchmark functions (F1–F4, F6, F10–F16, F18–F20, F22–F23, F28–F29), it converges markedly faster than the competitors, leading from the initial iterations while also reaching high-quality final solutions. On seven more complex functions (F5, F7, F17, F24–F27), ImWOA continues to refine its solutions throughout the run, converging faster than the peer algorithms and reaching better final solutions. Together, these results demonstrate ImWOA's strong solution-finding capability in high-dimensional search spaces.

In summary, in the 30-dimensional setting, ImWOA converges faster than the competitors on most benchmark functions despite the constraints of the smaller search space. In the 100-dimensional setting, its advantage broadens: it shows superior convergence on 26 of the 29 functions (89.66%), including markedly faster convergence on 19 of them, so that its relative performance improves rather than degrades as dimensionality increases. This scalability from robust low-dimensional performance to strong high-dimensional performance makes ImWOA a promising framework for large-scale black-box optimization.

4.3. Wilcoxon Rank-Sum Test

Each algorithm was independently executed 30 times on each benchmark function, yielding 30 best-found values per method. A Wilcoxon rank-sum test was conducted between ImWOA's results and those of each comparative algorithm. When the p-value fell below the 0.05 significance level (shown in bold), the null hypothesis was rejected, indicating a statistically significant performance difference; p-values above 0.05 indicate no statistically significant difference. For significant cases (p < 0.05), the medians were further compared: “+” marks cases where ImWOA's median was lower (ImWOA better), and “−” marks cases where the competitor's median was lower. Cases without a significant difference (p > 0.05) are marked “=”. The complete statistical results are presented in Table 3 and Table 4.
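The marking rule just described can be sketched with SciPy's two-sided Wilcoxon rank-sum test (`scipy.stats.ranksums`). The function name and the treatment of p exactly equal to 0.05 as "no significant difference" are assumptions; ASCII "-" stands in for the "−" used in the tables.

```python
import numpy as np
from scipy.stats import ranksums


def wilcoxon_mark(imwoa: np.ndarray, rival: np.ndarray, alpha: float = 0.05):
    """Two-sided Wilcoxon rank-sum test plus the +/=/- marker.

    '+' : significant and ImWOA has the lower (better) median
    '-' : significant and the competitor has the lower median
    '=' : no significant difference at level alpha
    """
    p = float(ranksums(imwoa, rival).pvalue)
    if p >= alpha:
        return p, "="
    return p, "+" if np.median(imwoa) < np.median(rival) else "-"
```

Counting the "+" marks over all functions and competitors and dividing by the number of pairwise comparisons gives percentages of the kind reported for Tables 3 and 4.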

Table 3.

Wilcoxon rank-sum test (Dim = 30). Bold p-values indicate statistically significant differences (p < 0.05).

Table 4.

Wilcoxon rank-sum test (Dim = 100). Bold p-values indicate statistically significant differences (p < 0.05).

As Table 3 and Table 4 show, in the 30-dimensional tests ImWOA is significantly better (p < 0.05) in 89.4% of the pairwise comparisons, with no statistically significant difference (p > 0.05) in the remainder. When the dimensionality increases to 100D, the gap widens: ImWOA is significantly better (p < 0.05) in 98% of the comparisons, and no significant difference (p > 0.05) is found in the few remaining cases. This trend indicates that ImWOA's advantages in solution robustness and global convergence become more pronounced as problem complexity increases.

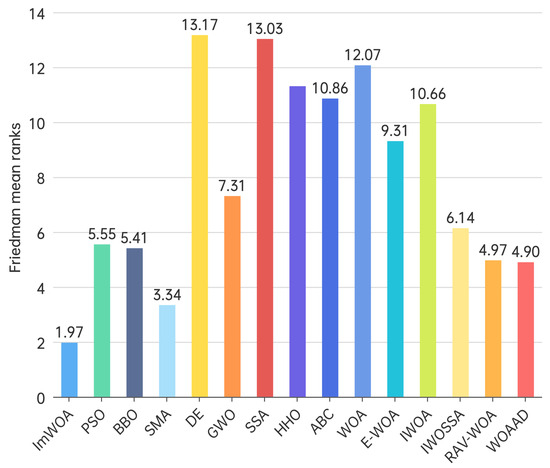

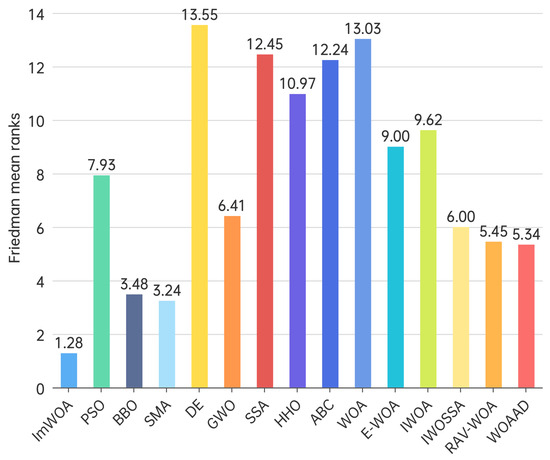

4.4. Friedman Test

To compare the swarm intelligence algorithms collectively, this study applies the Friedman non-parametric test as a multi-algorithm comparison framework. The test ranks the algorithms on each benchmark function and summarizes overall performance by the mean rank across functions, so the comparison does not hinge on any single function and does not assume normally distributed results. This makes it well suited to ranking algorithms over a large, heterogeneous benchmark suite such as CEC2017.
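The mean-rank computation and the test itself can be sketched with SciPy (`scipy.stats.rankdata` and `scipy.stats.friedmanchisquare`). The input layout, a functions-by-algorithms matrix of mean errors where lower is better, is an assumption about how the results are organized.

```python
import numpy as np
from scipy.stats import friedmanchisquare, rankdata


def friedman_mean_ranks(means: np.ndarray):
    """means: (n_functions, n_algorithms) array of mean errors (lower is better).

    Ranks the algorithms within each function (ties receive average ranks),
    averages the ranks over functions, and runs the Friedman test.
    """
    ranks = rankdata(means, axis=1)          # rank 1 = best on that function
    mean_ranks = ranks.mean(axis=0)
    stat, p = friedmanchisquare(*means.T)    # one sample per algorithm
    return mean_ranks, float(stat), float(p)
```

A useful consistency check is that the mean ranks of k algorithms always sum to k(k + 1)/2. For the 15 algorithms compared here that sum is 120, and the 15 mean ranks reported for the 30-dimensional case indeed sum to 120.00.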

In the 30-dimensional scenario (Figure 4), ImWOA achieves a mean rank of 1.97, well below all competitors, indicating highly stable optimization performance. SMA (3.34), WOAAD (4.90), and RAV-WOA (4.97) form the second tier, with mean ranks between 3.3 and 5.0. The middle tier comprises BBO (5.41), PSO (5.55), IWOSSA (6.14), and GWO (7.31); notably, the classical PSO trails newer algorithms such as SMA, WOAAD, and RAV-WOA. In the lower tier, E-WOA (9.31), IWOA (10.66), ABC (10.86), HHO (11.31), and the baseline WOA (12.07) all exceed a mean rank of 9.0, with SSA (13.03) and DE (13.17) occupying the bottom positions. The Friedman test (p < 0.05) rejects the null hypothesis, confirming statistically significant performance differences among the algorithms and supporting the efficacy of ImWOA's design.

Figure 4.

Friedman mean ranks obtained by the employed algorithms (30 dim).

When the dimensionality increases to 100, the Friedman test reveals a more pronounced stratification of algorithm performance (Figure 5). ImWOA consolidates its lead with a mean rank of 1.28, about 35% lower than its 30-dimensional value (1.97), demonstrating strong high-dimensional adaptability. Key findings include the following: (1) SMA (3.24) and BBO (3.48) form the second tier, with BBO improving its mean rank by about 36% (from 5.41); (2) WOAAD (5.34) and RAV-WOA (5.45) remain competitive, but their margin over the middle-ranked algorithms narrows; (3) the classical algorithms diverge: GWO (6.41) improves on its 30-dimensional rank, whereas PSO (7.93) degrades by about 43%; and (4) among the WOA variants, IWOSSA (6.00) and E-WOA (9.00) rank ahead of IWOA (9.62), while the baseline WOA (13.03) and DE (13.55) remain at the bottom. The Friedman test (p < 0.05) confirms that increasing the dimensionality widens the differences among algorithms, highlighting ImWOA's convergence properties in high-dimensional spaces.

Figure 5.

Friedman mean ranks obtained by the employed algorithms (100 dim).

4.5. Sensitivity of ImWOA to Parameter Variations

Because the two weighting coefficients in Equation (21) directly govern the balance between population diversity maintenance and convergence efficiency, a sensitivity analysis of these parameters is essential for evaluating algorithmic performance. To this end, this study assesses performance under weight ratios of 1:1, 2:1, and 3:1 using the CEC 2017 benchmark functions in 100-dimensional search spaces. Configurations are ranked by the average ranking method: the ordinal ranks obtained on all benchmark functions are summed and divided by the number of functions, and the lowest average rank indicates the best performance. As shown in Table 5, ImWOA achieves the best average rank at a ratio of 2:1. These results indicate that the 2:1 ratio best balances exploration capability and exploitation intensity, whereas a lower ratio impairs diversity preservation and a higher ratio increases the risk of premature convergence.

Table 5.

ImWOA’s sensitivity to the parameter changes.

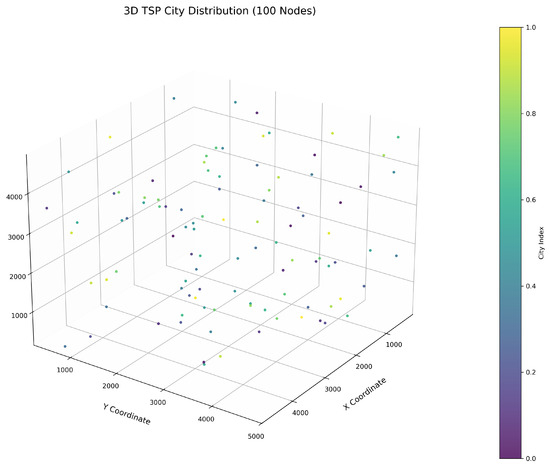

5. Three-Dimensional TSP

The 3D Traveling Salesman Problem (3D-TSP) is both theoretically rich and practically relevant, which makes it a valuable cross-disciplinary test case. By extending conventional 2D path planning into three dimensions, it captures many vertical-space optimization scenarios: urban drone logistics requires 3D obstacle avoidance while balancing energy consumption; industrial-scale additive manufacturing relies on spatial trajectory optimization to improve resource efficiency; autonomous subsea exploration requires efficient visits to dispersed nodes under ocean disturbances; and surgical path planning demands precision and safety for biological tissue. In addition, emerging Urban Air Mobility (UAM) networks require 3D airspace coordination. Motivated by these cross-domain challenges, this section applies ImWOA to the 3D-TSP to assess its global optimization capability in a complex, discrete 3D solution space, with potential relevance to fields such as intelligent manufacturing, precision medicine, and smart cities.

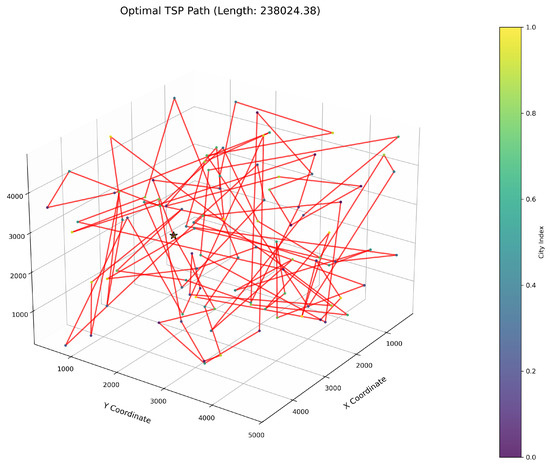

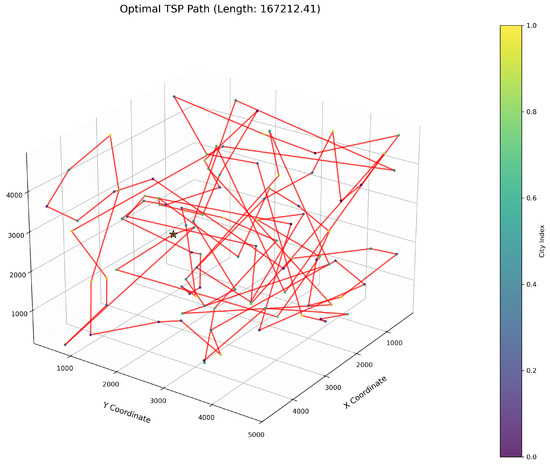

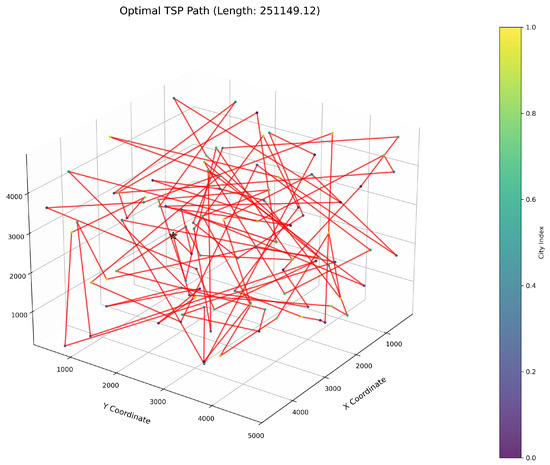

This study applies ImWOA and several comparative algorithms to the 3D-TSP, namely the original WOA and the enhanced variants E-WOA, IWOA, IWOSSA, RAV-WOA, and WOAAD. To mitigate stochastic fluctuations, each algorithm is executed in 30 independent runs, and the averaged results serve as the performance benchmark; for each algorithm, the tour whose length is closest to the mean is also visualized. The number of cities is set to 100. All city coordinates were randomly generated, with the three coordinates (X, Y, Z) drawn independently from a uniform distribution on the closed interval [100, 5000], so that the cities are randomly and uniformly dispersed in space.
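The instance generation and tour evaluation described above can be sketched as follows. The seed is arbitrary, and `numpy`'s `uniform` samples the half-open interval [low, high), which differs from the closed interval only on a set of probability zero. The random-key decoding (ranking a continuous whale position to obtain a city order) is an assumption: the text does not state how ImWOA's continuous positions are mapped to tours.

```python
import numpy as np


def make_cities(n: int = 100, low: float = 100.0, high: float = 5000.0,
                seed: int = 0) -> np.ndarray:
    """n cities whose X, Y, Z coordinates are drawn independently and uniformly."""
    rng = np.random.default_rng(seed)
    return rng.uniform(low, high, size=(n, 3))  # half-open [low, high)


def tour_length(cities: np.ndarray, order: np.ndarray) -> float:
    """Euclidean length of the closed tour visiting `cities` in `order`."""
    path = cities[order]
    # distance from each city to the next, including the return leg to the start
    return float(np.linalg.norm(path - np.roll(path, -1, axis=0), axis=1).sum())


def decode_random_key(position: np.ndarray) -> np.ndarray:
    """Hypothetical random-key decoding: cities visited in ascending key order."""
    return np.argsort(position)
```

Under this encoding, each whale is a 100-dimensional real vector, and its fitness is `tour_length(cities, decode_random_key(position))`.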

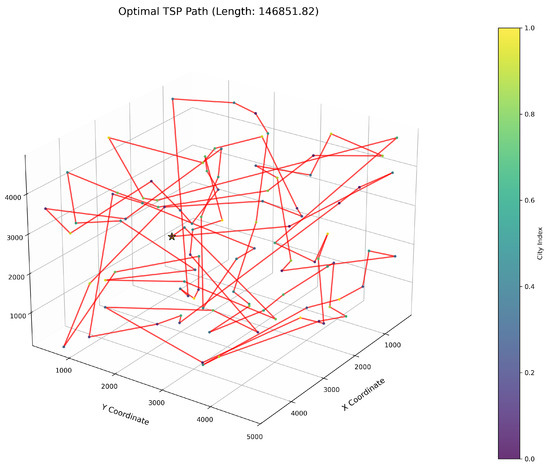

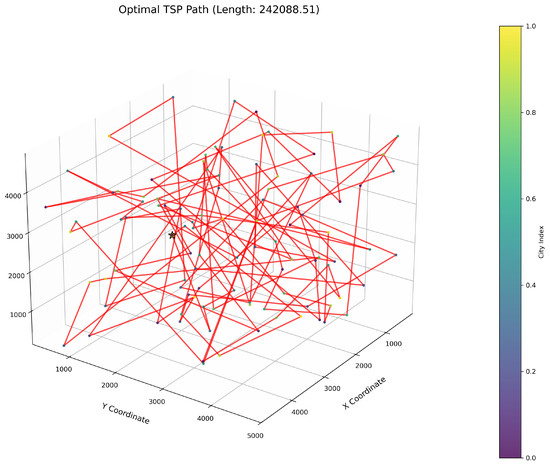

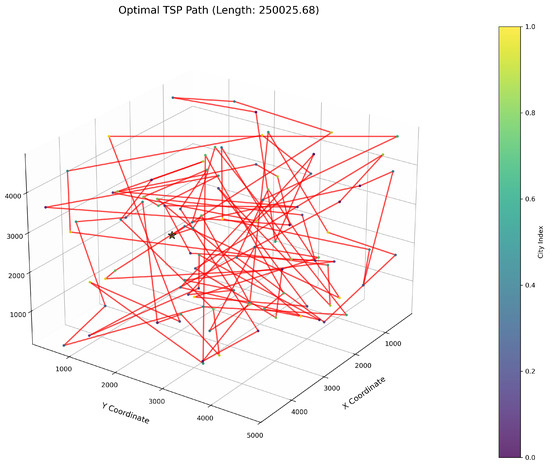

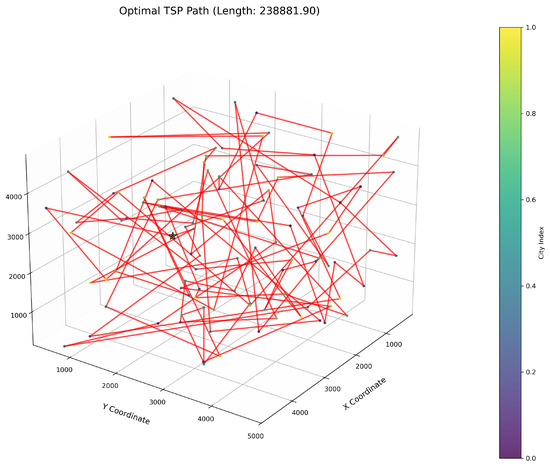

Figure 6 shows the three-dimensional spatial distribution of the 100 city nodes, Table 6 reports the average optimal results of each algorithm over the 30 runs, and Figure 7, Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 and Figure 13 show, for each algorithm, the tour whose length is closest to its average optimal result.

Figure 6.

Three-dimensional spatial distribution heatmap of 100 urban nodes.

Table 6.

Comparative performance statistics of multi-run independent experiments.

Figure 7.

TSP path topology based on average optimal solution (ImWOA).

Figure 8.

TSP path topology based on average optimal solution (WOA).

Figure 9.

TSP path topology based on average optimal solution (E-WOA).

Figure 10.

TSP path topology based on average optimal solution (IWOA).

Figure 11.

TSP path topology based on average optimal solution (IWOSSA).

Figure 12.

TSP path topology based on average optimal solution (RAV-WOA).

Figure 13.

TSP path topology based on average optimal solution (WOAAD).

As shown in Table 6, ImWOA achieves the best mean solution quality on the three-dimensional Traveling Salesman Problem (TSP), with a substantial advantage over the comparison algorithms. It consistently produces high-quality tours, indicating strong exploration capability in complex discrete search spaces. This evidence suggests that ImWOA's population cooperation mechanism effectively avoids local optima in combinatorial optimization problems, underscoring its potential for engineering applications.

6. Conclusions

This study addresses the inherent limitations of the original WOA by proposing an innovative variant, ImWOA, which integrates three synergistic optimization strategies to significantly enhance overall performance:

First Strategy: A dynamic cluster center-guided search mechanism based on K-means clustering. By partitioning the population into multiple subgroups, each subgroup conducts targeted searches around its dynamically updated cluster center. Real-time centroid recalculation during iterations enables dynamic adaptation to population evolution, simultaneously improving adaptability and robustness. This mechanism innovatively integrates global optimum positions with local cluster centroids, effectively suppressing redundant searches while significantly enhancing global exploration efficiency.
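To make the cluster-guided idea concrete, the following is a hypothetical sketch rather than ImWOA's actual update equation. It recomputes centroids with `scipy.cluster.vq.kmeans2` on every call and moves each individual toward a guide point that blends its cluster centroid with the global best. The blending weight `lam`, the number of clusters, and the per-dimension random step are all illustrative assumptions.

```python
import numpy as np
from scipy.cluster.vq import kmeans2


def cluster_guided_step(X: np.ndarray, best: np.ndarray, n_clusters: int = 3,
                        lam: float = 0.5, rng=None):
    """One hypothetical cluster-guided move.

    Each individual moves toward a guide that mixes its own cluster centroid
    (local information) with the global best (global information).
    """
    rng = np.random.default_rng() if rng is None else rng
    # centroids are recomputed from the current population on every call
    centroids, labels = kmeans2(X, n_clusters, minit="++",
                                seed=int(rng.integers(2**31)))
    guide = lam * best + (1.0 - lam) * centroids[labels]
    step = rng.random(X.shape)               # per-dimension step sizes in [0, 1)
    return X + step * (guide - X), centroids, labels
```

Because each step size lies in [0, 1), every individual ends up between its old position and its guide, so the move never overshoots the blended target.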

Second Strategy: A dual-modal population diversity-driven adaptive mutation mechanism. Transcending traditional single-dimensional distance metrics, this strategy employs dual diversity indicators that simultaneously quantify spatial distribution and fitness value differences, comprehensively characterizing population heterogeneity. The dynamic mutation probability adjustment mechanism, constructed based on real-time diversity states, demonstrates superior environmental adaptability and robustness compared to static parameter configurations.
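A hypothetical illustration of a dual-modal diversity measure follows. The paper's own indicators are defined in its methods section and are not reproduced here. In this sketch, spatial diversity is the mean distance to the population centroid normalized by the search-box diagonal, fitness diversity is a normalized fitness range, the two are mixed with illustrative equal weights `w_s` and `w_f`, and lower diversity is mapped linearly to a higher mutation probability. All of these formulas and constants are assumptions.

```python
import numpy as np


def dual_diversity(X: np.ndarray, fit: np.ndarray, lb: np.ndarray, ub: np.ndarray,
                   w_s: float = 0.5, w_f: float = 0.5, eps: float = 1e-12) -> float:
    """Hypothetical dual-modal diversity in [0, 1] (spatial + fitness spread)."""
    diag = np.linalg.norm(ub - lb)                      # search-box diagonal
    spatial = np.mean(np.linalg.norm(X - X.mean(axis=0), axis=1)) / diag
    f_max, f_min = fit.max(), fit.min()
    fitness = (f_max - f_min) / (abs(f_max) + abs(f_min) + eps)
    return float(w_s * spatial + w_f * fitness)


def mutation_probability(diversity: float, p_min: float = 0.01,
                         p_max: float = 0.3) -> float:
    """Lower diversity -> higher mutation probability (illustrative linear map)."""
    return float(p_min + (p_max - p_min) * (1.0 - np.clip(diversity, 0.0, 1.0)))
```

With both components scaled to [0, 1], a fully collapsed population (identical positions and fitness values) receives the maximum mutation probability, which is the qualitative behavior the strategy describes.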

Third Strategy: A pattern search framework incorporating the GPSPositiveBasis2N algorithm. This hybrid optimization paradigm combines WOA's global exploration capability with the local refinement of pattern search: WOA rapidly locates promising regions, while the GPSPositiveBasis2N algorithm serves as a periodic optimization module for precise local search. This collaborative “global exploration–local optimization” framework leverages the complementary strengths of both algorithms, substantially improving solution quality and convergence efficiency.
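The name GPSPositiveBasis2N matches a poll method of MATLAB's `patternsearch`. The sketch below is an independent Python illustration of a generalized pattern search that polls along the 2N positive basis {+e_i, −e_i}; it is not the authors' code. The initial step, the expansion factor 2, the contraction factor 0.5, opportunistic polling (accepting the first improving point), and the stopping rules are all assumptions.

```python
import numpy as np


def gps_positive_basis_2n(func, x0, step: float = 1.0, expand: float = 2.0,
                          contract: float = 0.5, tol: float = 1e-8,
                          max_evals: int = 10_000):
    """Generalized pattern search with the 2N positive basis (sketch).

    Polls x + step * d for d in {+e_i, -e_i}; on success moves to the first
    improving point and expands the mesh, otherwise contracts it.
    """
    x = np.asarray(x0, dtype=float).copy()
    fx = float(func(x))
    n = x.size
    directions = np.vstack([np.eye(n), -np.eye(n)])  # 2N positive spanning set
    evals = 1
    while step >= tol and evals < max_evals:          # budget checked per poll
        improved = False
        for d in directions:
            y = x + step * d
            fy = float(func(y))
            evals += 1
            if fy < fx:                               # opportunistic poll
                x, fx, improved = y, fy, True
                break
        step = step * expand if improved else step * contract
    return x, fx
```

Invoked periodically on the incumbent best solution, a routine like this supplies the precise local refinement, while the population-based search handles global exploration.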

To comprehensively evaluate the efficacy of the ImWOA, this study employs the CEC2017 benchmark suite for multi-dimensional validation. The experimental design establishes a dual comparison framework: a horizontal comparison with the original WOA and eight state-of-the-art metaheuristic algorithms, alongside a vertical benchmark against five advanced WOA variants. Results demonstrate ImWOA’s exceptional optimization capabilities: in 30-dimensional testing, it achieves optimal mean solutions for 20 out of 29 benchmark functions; when scaling to 100-dimensional problems, it attains leading mean values for 26 of 29 tests. Particularly noteworthy is its first-place ranking in solving the 3D-TSP—a representative combinatorial optimization challenge. These experiments collectively verify that ImWOA not only exhibits superior performance in theoretical benchmarks but also effectively addresses real-world complex optimization problems, highlighting its robust multi-scenario adaptability and broad application potential.

Although ImWOA has demonstrated strong optimization performance, several directions remain open for further research. Future work will focus on the following three areas:

First, deepening the theoretical foundations by establishing a rigorous mathematical framework for proving the convergence of ImWOA.

Second, extending ImWOA to heterogeneous computing paradigms by exploring its integration with emerging technologies such as Graph Neural Networks and quantum computing, with the aim of building a cross-modal optimization framework for high-dimensional complex problems.

Third, building industrial-grade applications: developing specialized algorithm engines for typical scenarios such as dynamic scheduling in smart manufacturing and real-time load allocation in smart grids, and establishing open-source platforms to promote industry–academia–research collaborative innovation.

These explorations will propel ImWOA’s evolution from algorithmic innovation into a universal intelligent optimization infrastructure.

Author Contributions

Conceptualization, Y.Z. and Z.H.; methodology, Y.Z. and Z.H.; software, Y.Z.; data curation, Y.Z.; original draft preparation, Y.Z.; writing—review and editing, Y.Z. and Z.H.; visualization, Y.Z.; funding acquisition, Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Fund of Ningxia (2025).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zhang, Y.; Jin, Z. Comprehensive learning Jaya algorithm for engineering design optimization problems. J. Intell. Manuf. 2022, 33, 1229–1253.

- Kalpana, P.; Nagendra Prabhu, S.; Polepally, V.; Rao, D.B.J. Exponentially-spider monkey optimization based allocation of resource in cloud. Int. J. Intell. Syst. 2022, 37, 2521–2542.

- Nai, C. Energy finance risk warning model based on GABP algorithm. Front. Energy Res. 2023, 11, 1235412.

- Rambabu, D.; Govardhan, A. Optimization assisted frequent pattern mining for data replication in cloud: Combining sealion and grey wolf algorithm. Adv. Eng. Softw. 2023, 176, 103401.

- Phan, T.; Sell, D.; Wang, E.W.; Doshay, S.; Edee, K.; Yang, J.; Fan, J.A. High-efficiency, large-area, topology-optimized metasurfaces. Light Sci. Appl. 2019, 8, 48.

- Berger, K.; Rivera Caicedo, J.P.; Martino, L.; Wocher, M.; Hank, T.; Verrelst, J. A survey of active learning for quantifying vegetation traits from terrestrial earth observation data. Remote Sens. 2021, 13, 287.

- Lee, C.C.; Hussain, J.; Chen, Y. The optimal behavior of renewable energy resources and government’s energy consumption subsidy design from the perspective of green technology implementation. Renew. Energy 2022, 195, 670–680.

- Zamir, M.; Abdeljawad, T.; Nadeem, F.; Wahid, A.; Yousef, A. An optimal control analysis of a COVID-19 model. Alex. Eng. J. 2021, 60, 2875–2884.

- Wu, L.; Huang, X.; Cui, J.; Liu, C.; Xiao, W. Modified adaptive ant colony optimization algorithm and its application for solving path planning of mobile robot. Expert Syst. Appl. 2023, 215, 119410.

- Taher, F.; Abdel-Salam, M.; Elhoseny, M.; El-Hasnony, I.M. Reliable machine learning model for IIoT botnet detection. IEEE Access 2023, 11, 49319–49336.

- Llopis-Albert, C.; Rubio, F.; Zeng, S. Multiobjective optimization framework for designing a vehicle suspension system. A comparison of optimization algorithms. Adv. Eng. Softw. 2023, 176, 103375.

- Elhoseny, M.; Abdel-Salam, M.; El-Hasnony, I.M. An improved multi-strategy Golden Jackal algorithm for real world engineering problems. Knowl.-Based Syst. 2024, 295, 111725.

- Askr, H.; Abdel-Salam, M.; Hassanien, A.E. Copula entropy-based golden jackal optimization algorithm for high-dimensional feature selection problems. Expert Syst. Appl. 2024, 238, 121582.

- Abdel-Salam, M.; Kumar, N.; Mahajan, S. A proposed framework for crop yield prediction using hybrid feature selection approach and optimized machine learning. Neural Comput. Appl. 2024, 36, 20723–20750.

- Abdel-Salam, M.; Hassanien, A.E. A novel dynamic chaotic golden jackal optimization algorithm for sensor-based human activity recognition using smartphones for sustainable smart cities. In Artificial Intelligence for Environmental Sustainability and Green Initiatives; Springer: Cham, Switzerland, 2024; pp. 273–296.

- Zhang, J.; Ning, Z.; Ali, R.H.; Waqas, M.; Tu, S.; Ahmad, I. A many-objective ensemble optimization algorithm for the edge cloud resource scheduling problem. IEEE Trans. Mob. Comput. 2023, 23, 1330–1346.

- Sulaiman, M.H.; Mustaffa, Z.; Saari, M.M.; Daniyal, H. Barnacles mating optimizer: A new bio-inspired algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2020, 87, 103330.

- Wang, G.G.; Deb, S.; Coelho, L.D.S. Earthworm optimisation algorithm: A bio-inspired metaheuristic algorithm for global optimisation problems. Int. J. Bio-Inspired Comput. 2018, 12, 1–22.

- Dhiman, G.; Kumar, V. Seagull optimization algorithm: Theory and its applications for large-scale industrial engineering problems. Knowl.-Based Syst. 2019, 165, 169–196.

- Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Shi, Y. Brain storm optimization algorithm. In Proceedings of the International Conference in Swarm Intelligence, Chongqing, China, 12–15 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 303–309.

- Askari, Q.; Saeed, M.; Younas, I. Heap-based optimizer inspired by corporate rank hierarchy for global optimization. Expert Syst. Appl. 2020, 161, 113702.

- Dehghani, M.; Trojovský, P. Teamwork optimization algorithm: A new optimization approach for function minimization/maximization. Sensors 2021, 21, 4567.

- Talatahari, S.; Azizi, M. Chaos game optimization: A novel metaheuristic algorithm. Artif. Intell. Rev. 2021, 54, 917–1004.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Abedinpourshotorban, H.; Shamsuddin, S.M.; Beheshti, Z.; Jawawi, D.N. Electromagnetic field optimization: A physics-inspired metaheuristic optimization algorithm. Swarm Evol. Comput. 2016, 26, 8–22.

- Karaboga, D. Artificial bee colony algorithm. Scholarpedia 2010, 5, 6915.

- Yang, X.S.; Deb, S. Cuckoo search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing, Coimbatore, India, 9–11 December 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 210–214.

- Wang, G.G.; Deb, S.; Coelho, L.S. Elephant herding optimization. In Proceedings of the 2015 3rd International Symposium on Computational and Business Intelligence, Bali, Indonesia, 7–9 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–5.

- Masadeh, R.; Mahafzah, B.A.; Sharieh, A. Sea lion optimization algorithm. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 388–395.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Liang, Z.; Shu, T.; Ding, Z. A novel improved whale optimization algorithm for global optimization and engineering applications. Mathematics 2024, 12, 636.

- Liu, L.; Zhang, R. Multistrategy improved whale optimization algorithm and its application. Comput. Intell. Neurosci. 2022, 2022, 3418269.

- Chakraborty, S.; Sharma, S.; Saha, A.K.; Saha, A. A novel improved whale optimization algorithm to solve numerical optimization and real-world applications. Artif. Intell. Rev. 2022, 55, 4605–4716.

- Sun, G.; Shang, Y.; Zhang, R. An efficient and robust improved whale optimization algorithm for large scale global optimization problems. Electronics 2022, 11, 1475.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.S.; Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 2023, 215, 119269.

- Li, M.; Yu, X.; Fu, B.; Wang, X. A modified whale optimization algorithm with multi-strategy mechanism for global optimization problems. Neural Comput. Appl. 2023, 35, 1–14.

- Wu, G.; Mallipeddi, R.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Competition on Constrained Real-Parameter Optimization; Technical Report; National University of Defense Technology: Changsha, China; Kyungpook National University: Daegu, Republic of Korea; Nanyang Technological University: Singapore, 2017; Available online: https://www.researchgate.net/profile/Guohua-Wu-5/publication/317228117_Problem_Definitions_and_Evaluation_Criteria_for_the_CEC_2017_Competition_and_Special_Session_on_Constrained_Single_Objective_Real-Parameter_Optimization/links/5982cdbaa6fdcc8b56f59104/Problem-Definitions-and-Evaluation-Criteria-for-the-CEC-2017-Competition-and-Special-Session-on-Constrained-Single-Objective-Real-Parameter-Optimization.pdf (accessed on 1 January 2020).

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 4, pp. 1942–1948.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

- Storn, R.; Price, K. Differential evolution—A simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-TR06; Erciyes University: Kayseri, Turkey, 2005.

- Nadimi-Shahraki, M.H.; Zamani, H.; Mirjalili, S. Enhanced whale optimization algorithm for medical feature selection: A COVID-19 case study. Comput. Biol. Med. 2022, 148, 105858.

- Xiong, G.; Zhang, J.; Shi, D.; He, Y. Parameter extraction of solar photovoltaic models using an improved whale optimization algorithm. Energy Convers. Manag. 2018, 174, 388–405.

- Saafan, M.M.; El-Gendy, E.M. IWOSSA: An improved whale optimization salp swarm algorithm for solving optimization problems. Expert Syst. Appl. 2021, 176, 114901.

- Ma, G.; Yue, X. An improved whale optimization algorithm based on multilevel threshold image segmentation using the Otsu method. Eng. Appl. Artif. Intell. 2022, 113, 104960.

- Tang, J.; Wang, L. A whale optimization algorithm based on atom-like structure differential evolution for solving engineering design problems. Sci. Rep. 2024, 14, 795.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).