Hybrid Algorithms Based on Two Evolutionary Computations for Image Classification

Abstract

1. Introduction

- (a)

- This study proposes an adaptive hyperparameter optimization method that combines QIHLOA and NIGAO algorithms. This algorithm is embedded into DenseNet-121, thus achieving efficient image classification. Notably, HGAO can quickly search for the optimal solution by adjusting the ratio of different weights β1 and β2, thereby improving the accuracy and speed of image classification.

- (b)

- Four public datasets about image classification are gathered, which contain fields such as healthcare, agriculture, traditional medicine, remote sensing, and disaster management. Moreover, we make a self-developed traditional Chinese medicine image dataset. These datasets are sufficient for our experiments.

- (c)

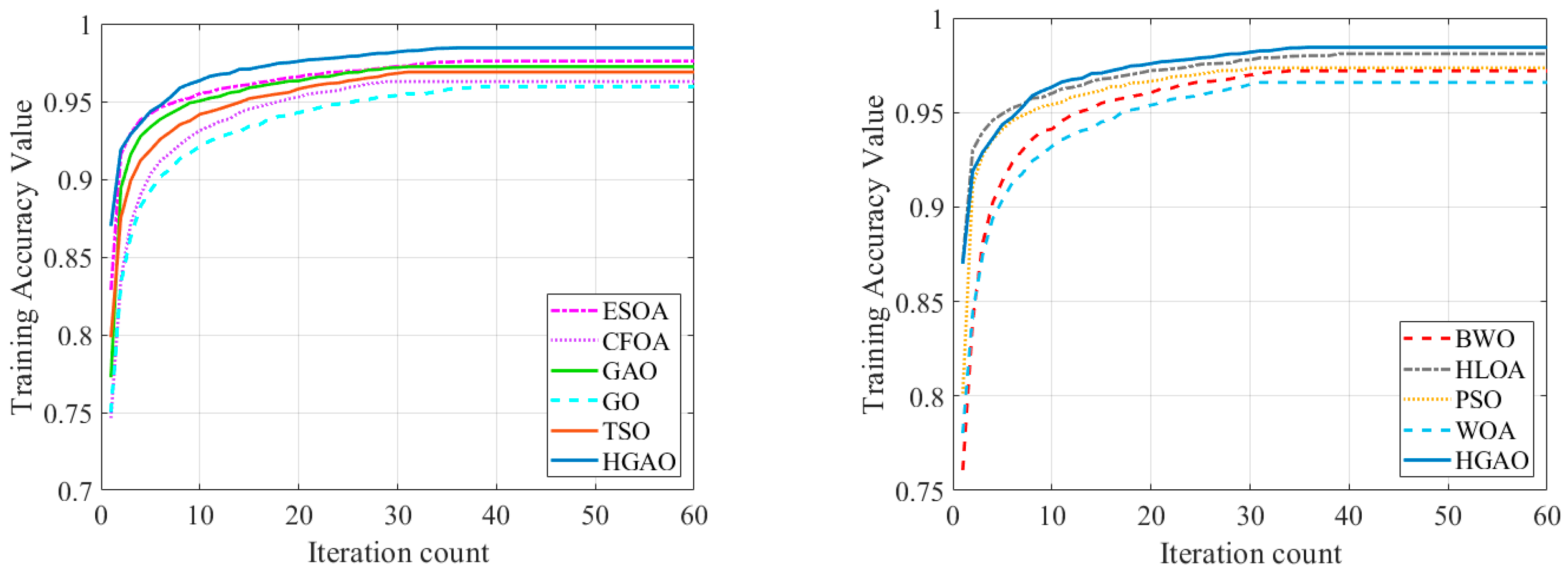

- Extensive experimental results indicate that the proposed method has high accuracy and efficiency in multi-domain image classification tasks. In particular, the proposed HGAO algorithm with DenseNet-121 outperforms several state-of-the-art algorithms—including HLOA, ESOA, PSO, and WOA—in multi-domain image classification tasks.

2. Related Theoretical Description

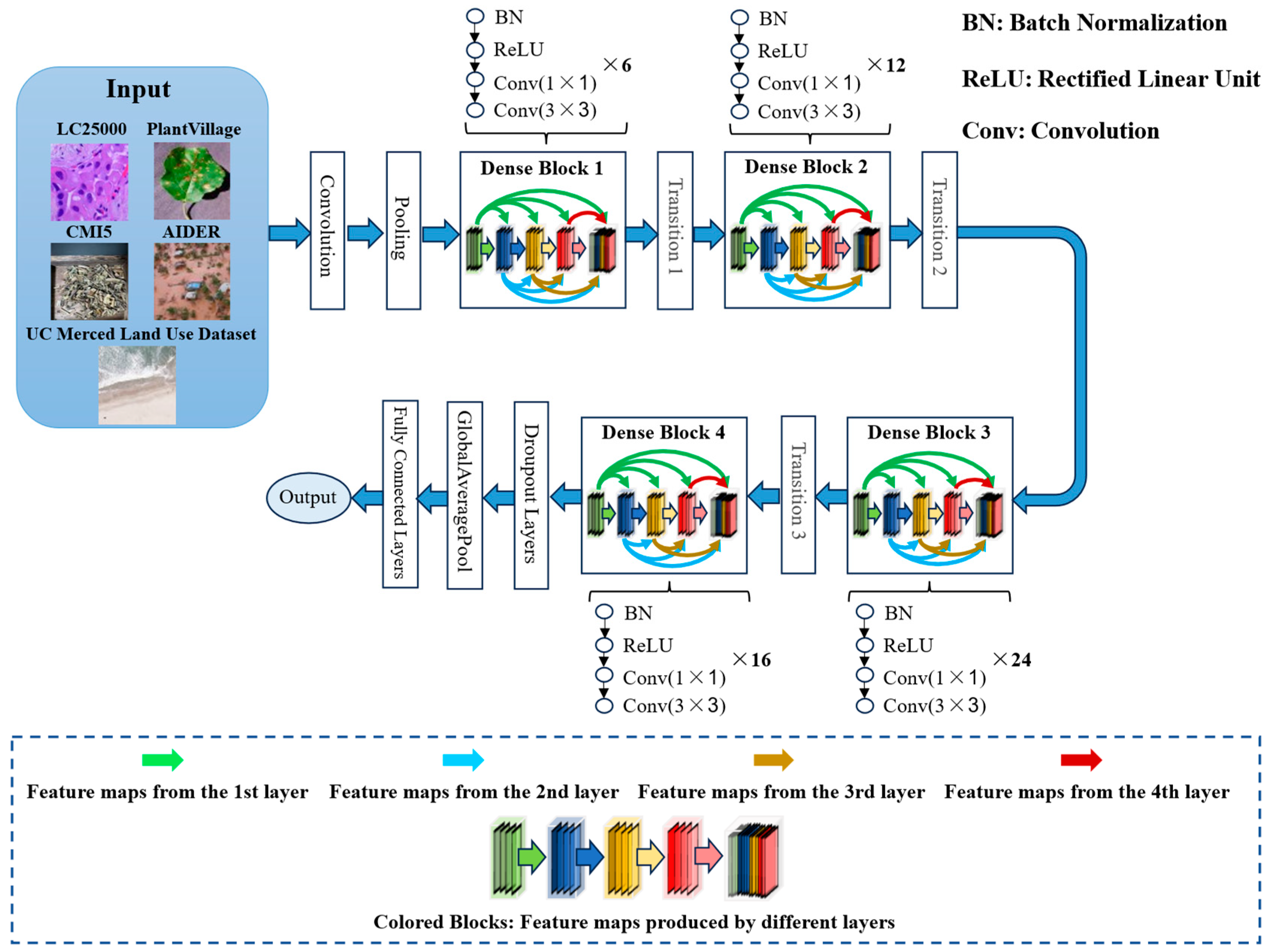

2.1. DenseNet-121

2.2. HLOA Algorithm

2.2.1. Crypsis Behavior Strategy

2.2.2. Skin Darkening or Lightening Strategy

2.2.3. Blood-Squirting Strategy

2.2.4. Move and Escape Strategy

2.2.5. Melanophore-Stimulating Hormone Rate Strategy

2.3. Quadratic Interpolation Method

| Algorithm 1: QIHLOA | ||

| Input: number of search agents D, population size P, maximum number of iterations T | ||

| Operation | ||

| /* Initialization */ | ||

| 1. | Initialize: D, P, T | |

| 2. | Initialize: Population initialization | |

| /* Training Starts */ | ||

| 3. | for t = 1 to T | |

| 4. | for i = 1 to P | |

| 5. | if Crypsis? Then | |

| 6. | with (2). | |

| 7. | else | |

| 8. | if Flee? Then | |

| 9. | with (6). | |

| 10. | else | |

| 11. | with (5). | |

| 12. | end if | |

| 13. | end if | |

| 14. | Strategy 2: Replace the worst population individual with (3) or (4). | |

| 15. | if melanophore(i) ≤ 0.3 Then | |

| 16. | with (8). | |

| 17. | end if | |

| 18. | . | |

| 19. | Use (9) to obtain a new individual xh. | |

| 20. | with (15). | |

| 21. | if F(i) < Fbest Then | |

| 22. 23. | Fbest = F(i) | |

| 24. | end if | |

| 25. | end for | |

| 26. | end for | |

| /* Operation Ending */ | ||

| Output: best individual found, Fbest | ||
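Equation (9), which Algorithm 1 uses to obtain the new individual, is not reproduced in this text. As a point of reference only, the sketch below shows the standard three-point quadratic interpolation step that is commonly used in interpolation-enhanced metaheuristics: for each dimension, it returns the vertex of the parabola through three candidate solutions and their fitness values. The function name, the argument layout, and the fallback for a degenerate denominator are assumptions made for illustration and may differ from the authors' formulation.

```python
from typing import List, Sequence


def quadratic_interpolation(
    a: Sequence[float], b: Sequence[float], c: Sequence[float],
    fa: float, fb: float, fc: float, eps: float = 1e-12,
) -> List[float]:
    """Per-dimension vertex of the parabola through (a, fa), (b, fb), (c, fc).

    Hypothetical helper: a, b, c are three candidate solutions and
    fa, fb, fc are their (scalar) fitness values.
    """
    new_point = []
    for ai, bi, ci in zip(a, b, c):
        num = (bi**2 - ci**2) * fa + (ci**2 - ai**2) * fb + (ai**2 - bi**2) * fc
        den = (bi - ci) * fa + (ci - ai) * fb + (ai - bi) * fc
        if abs(den) < eps:
            # Degenerate case (no unique parabola): keep the middle point.
            new_point.append(bi)
        else:
            new_point.append(0.5 * num / den)
    return new_point


# f(x) = (x - 2)^2 sampled at x = 0, 1, 3 gives fitness values 4, 1, 1;
# the interpolated vertex recovers the minimizer x = 2.
x_new = quadratic_interpolation([0.0], [1.0], [3.0], 4.0, 1.0, 1.0)
```

Note that the vertex is a minimum only when the fitted parabola opens upward, so an implementation would normally keep the new individual only if its fitness improves on the current one.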

2.4. GAO Algorithm

2.4.1. The Stage of Exploration

2.4.2. Digging in Termite Mounds

2.5. Newton Interpolation Method

| Algorithm 2: NIGAO | ||

| Input: number of search agents D, population size P, maximum number of iterations T | ||

| Operation | ||

| /* Initialization */ | ||

| 1. | Initialize: D, P, T | |

| 2. | Initialize: Population initialization | |

| /* Training Starts */ | ||

| 3. | for t = 1 to T | |

| 4. | for i = 1 to P | |

| 5. | Phase 1: Attack on termite mounds | |

| 6. | Determine the termite mound set for the i-th population member Xi | |

| 7. | Randomly select the termite mound of Xi. | |

| 8. | Obtain a new position Xnew with (10). | |

| 9. | Calculate the fitness of Xnew and update Xi according to (11). | |

| 10. | Phase 2: Digging in termite mounds | |

| 11. | Update the position Xnew based on (12). | |

| 12. | Calculate the fitness of Xnew and update Xi according to (13). | |

| 13. | Use (14) to obtain a new individual Xb. | |

| 14. | Update Xbest with (15). | |

| 15. | if Fi < Fbest Then: | |

| 16. | Fbest = Fi | |

| 17. | Xbest = Xi | |

| 18. | end if | |

| 19. | end for | |

| 20. | end for | |

| /* Operation Ending */ | ||

| Output: Xbest, Fbest | ||
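Equation (14), which Algorithm 2 uses to obtain the new individual, is not reproduced in this text, and neither is the way the interpolation nodes are chosen. For orientation, the sketch below shows only the textbook Newton interpolation machinery that such a step would build on: divided-difference coefficients and nested evaluation of the resulting polynomial. The function names are hypothetical.

```python
from typing import List, Sequence


def divided_differences(xs: Sequence[float], ys: Sequence[float]) -> List[float]:
    """Return the Newton divided-difference coefficients for nodes xs, values ys."""
    coef = list(ys)
    n = len(xs)
    for j in range(1, n):
        # Update in place from the end so lower-order entries stay available.
        for i in range(n - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def newton_eval(xs: Sequence[float], coef: Sequence[float], x: float) -> float:
    """Evaluate the Newton-form polynomial at x using nested multiplication."""
    result = coef[-1]
    for k in range(len(coef) - 2, -1, -1):
        result = result * (x - xs[k]) + coef[k]
    return result


# Nodes sampled from p(x) = x^2 + x + 1; the interpolant reproduces p exactly.
nodes = [0.0, 1.0, 2.0]
values = [1.0, 3.0, 7.0]
coef = divided_differences(nodes, values)
p3 = newton_eval(nodes, coef, 3.0)
```

The nodes must be pairwise distinct, otherwise a divided difference divides by zero.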

2.6. Association Optimization Algorithm (HGAO)
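The combination rule of HGAO is not reproduced in this text. The only facts available here are that HGAO combines QIHLOA and NIGAO through two weights, β1 and β2, and that every reported setting satisfies β1 + β2 = 1. One simple reading consistent with those facts is a convex combination of the candidates produced by the two algorithms. The sketch below illustrates that reading only; the function name, the element-wise blend, and the pairing of β1 with QIHLOA are assumptions, not the authors' definition.

```python
from typing import List, Sequence


def blend_candidates(
    x_qihloa: Sequence[float], x_nigao: Sequence[float],
    beta1: float, beta2: float,
) -> List[float]:
    """Hypothetical HGAO-style blend: beta1 * x_qihloa + beta2 * x_nigao."""
    if len(x_qihloa) != len(x_nigao):
        raise ValueError("candidates must have the same dimension")
    if abs(beta1 + beta2 - 1.0) > 1e-9:
        raise ValueError("beta1 and beta2 are assumed to sum to 1")
    return [beta1 * q + beta2 * n for q, n in zip(x_qihloa, x_nigao)]


# Example with the (0.3, 0.7) weight pair that appears in the result tables.
blended = blend_candidates([0.0, 10.0], [1.0, 20.0], 0.3, 0.7)
```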

3. Experiments and Analyses

3.1. Experimental Setting

3.1.1. Evaluation Metrics

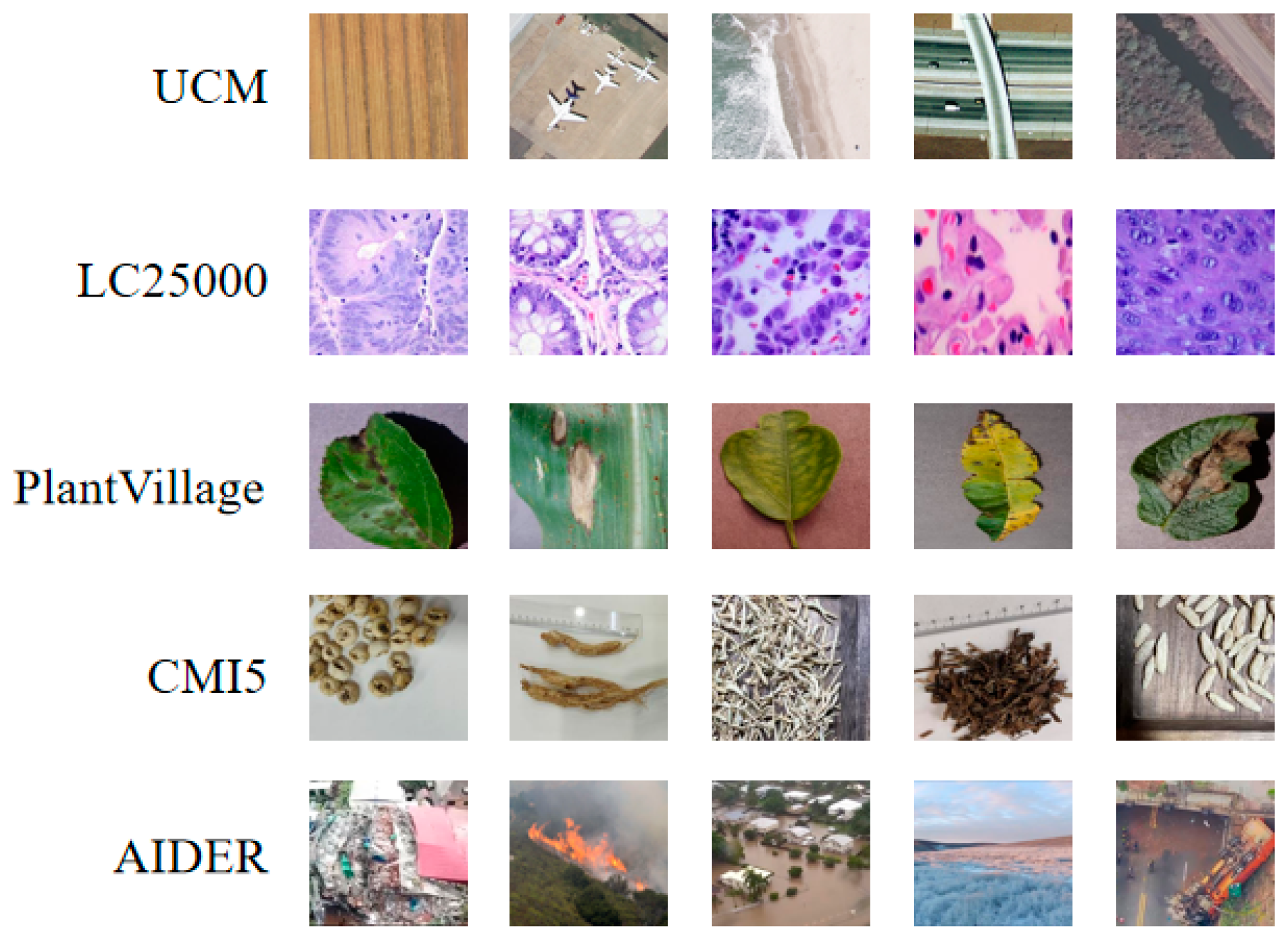
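The result tables report accuracy, precision, recall, and F1-score. The metric definitions themselves are not reproduced in this text, so the sketch below uses the usual confusion-matrix definitions and assumes macro averaging over classes for precision, recall, and F1; the averaging scheme and the function name are assumptions.

```python
from typing import Dict, Sequence


def classification_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], num_classes: int
) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 (assumed averaging)."""
    # cm[t][p] counts samples of true class t predicted as class p.
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1

    accuracy = sum(cm[k][k] for k in range(num_classes)) / len(y_true)
    precisions, recalls, f1s = [], [], []
    for k in range(num_classes):
        tp = cm[k][k]
        fp = sum(cm[r][k] for r in range(num_classes)) - tp
        fn = sum(cm[k]) - tp
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)

    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / num_classes,
        "recall": sum(recalls) / num_classes,
        "f1": sum(f1s) / num_classes,
    }


metrics = classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```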

3.1.2. Datasets

- (a) UC Merced Land Use Dataset (UCM) [22]: A standard dataset for remote sensing image classification, released by the University of California, Merced. It contains 2100 high-resolution aerial images of 256 × 256 pixels covering 21 land use types, including farmland, forests, highways, and parking lots.

- (b) LC25000 [20]: This dataset consists of 25,000 color histopathological images for lung and colon cancer classification. It is evenly divided into five categories: lung adenocarcinoma, lung squamous cell carcinoma, benign lung tissue, colon adenocarcinoma, and benign colon tissue.

- (c) PlantVillage [21]: An image dataset that is widely used in agricultural AI research. It contains 61,486 color images of 256 × 256 pixels in 39 categories, covering healthy and diseased leaf samples from common crops such as vegetables, fruits, and grains.

- (d) CMI5 (Chinese Medicine Identification 5 Dataset): We collected this dataset of traditional Chinese medicinal herb images. It consists of five categories: mint, fritillaria, honeysuckle, ophiopogon, and ginseng. Each category contains 2020 images, for a total of 10,100 images.

- (e) AIDER (Aerial Image Dataset for Emergency Response applications) [23]: An aerial image dataset primarily used for classification tasks in emergency response scenarios. It contains 6433 images in five categories: fire or smoke, floods, building collapse or debris, traffic accidents, and normal situations.

3.2. Compared Algorithms

- (a) M1: Horned Lizard Optimization Algorithm (HLOA) [8].

- (b) M2: Giant Armadillo Optimization Algorithm (GAO) [9].

- (c) M3: Particle Swarm Optimization (PSO) [29].

- (d) M4: Egret Swarm Optimization Algorithm (ESOA) [30].

- (e) M5: Black Widow Optimization (BWO) [31].

- (f) M6: Transient Search Optimization Algorithm (TSO) [32].

- (g) M7: Whale Optimization Algorithm (WOA) [33].

- (h) M8: Catch Fish Optimization Algorithm (CFOA) [34].

- (i) M9: Goose Optimization Algorithm (GO) [35].

3.3. Combination Parameter Selection

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Siuly, S.; Khare, S.K.; Kabir, E.; Sadiq, M.T.; Wang, H. An efficient Parkinson’s disease detection framework: Leveraging time-frequency representation and AlexNet convolutional neural network. Comput. Biol. Med. 2024, 174, 108462.

- Ma, L.; Wu, H.; Samundeeswari, P. GoogLeNet-AL: A fully automated adaptive model for lung cancer detection. Pattern Recognit. 2024, 155, 110657.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yadav, H.; Thakkar, A. NOA-LSTM: An efficient LSTM cell architecture for time series forecasting. Expert Syst. Appl. 2024, 238, 122333.

- Visin, F.; Ciccone, M.; Romero, A.; Kastner, K.; Cho, K.; Bengio, Y.; Matteucci, M.; Courville, A. Reseg: A recurrent neural network-based model for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 27–30 June 2016; pp. 41–48.

- Ovadia, O.; Kahana, A.; Stinis, P.; Turkel, E.; Givoli, D.; Karniadakis, G.E. Vito: Vision transformer-operator. Comput. Methods Appl. Mech. Eng. 2024, 428, 117109.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Kunming, China, 16–18 July 2021; pp. 10347–10357.

- Peraza-Vázquez, H.; Peña-Delgado, A.; Merino-Treviño, M.; Morales-Cepeda, A.B.; Sinha, N. A novel metaheuristic inspired by horned lizard defense tactics. Artif. Intell. Rev. 2024, 57, 59.

- Alsayyed, O.; Hamadneh, T.; Al-Tarawneh, H.; Alqudah, M.; Gochhait, S.; Leonova, I.; Malik, O.P.; Dehghani, M. Giant Armadillo optimization: A new bio-inspired metaheuristic algorithm for solving optimization problems. Biomimetics 2023, 8, 619.

- Chen, T.; Li, S.; Qiao, Y.; Luo, X. A robust and efficient ensemble of diversified evolutionary computing algorithms for accurate robot calibration. IEEE Trans. Instrum. Meas. 2024, 73, 7501814.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Wei, P.; Hu, C.; Hu, J.; Li, Z.; Qin, W.; Gan, J.; Chen, T.; Shu, H.; Shang, M. A Novel Black Widow Optimization Algorithm Based on Lagrange Interpolation Operator for ResNet18. Biomimetics 2025, 10, 361.

- Chang, S.; Yang, G.; Cheng, J.; Feng, Z.; Fan, Z.; Ma, X.; Li, Y.; Yang, X.; Zhao, C. Recognition of wheat rusts in a field environment based on improved DenseNet. Biosyst. Eng. 2024, 238, 10–21.

- Li, Z.; Li, S.; Luo, X. An overview of calibration technology of industrial robots. IEEE/CAA J. Autom. Sin. 2021, 8, 23–36.

- Song, Y.; Dai, Y.; Liu, W.; Liu, Y.; Liu, X.; Yu, Q.; Liu, X.; Que, N.; Li, M. DesTrans: A medical image fusion method based on transformer and improved DenseNet. Comput. Biol. Med. 2024, 174, 108463.

- Wu, D.; Ying, Y.; Zhou, M.; Pan, J.; Cui, D. Improved ResNet-50 deep learning algorithm for identifying chicken gender. Comput. Electron. Agric. 2023, 205, 107622.

- Talukder, M.A.; Layek, M.A.; Kazi, M.; Uddin, M.A.; Aryal, S. Empowering COVID-19 detection: Optimizing performance through fine-tuned efficientnet deep learning architecture. Comput. Biol. Med. 2024, 168, 107789.

- Shafik, W.; Tufail, A.; De Silva Liyanage, C.; Rosyzie Anna Awg Haji Mohd Apong. Using transfer learning-based plant disease classification and detection for sustainable agriculture. BMC Plant Biol. 2024, 24, 136.

- Hamza, A.; Khan, M.A.; Rehman, S.U.; Al-Khalidi, M.; Alzahrani, A.I.; Alalwan, N. A novel bottleneck residual and self-attention fusion-assisted architecture for land use recognition in remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 2995–3009.

- Lee, G.Y.; Dam, T.; Ferdaus, M.M.; Poenar, D.P.; Duong, V.N. Watt-effnet: A lightweight and accurate model for classifying aerial disaster images. IEEE Geosci. Remote Sens. Lett. 2023, 20, 6005205.

- Deng, Y.; Hou, X.; Li, B.; Wang, J.; Zhang, Y. A highly powerful calibration method for robotic smoothing system calibration via using adaptive residual extended Kalman filter. Robot. Comput.-Integr. Manuf. 2024, 86, 102660.

- She, S.; Meng, T.; Zheng, X.; Shao, Y.; Hu, G.; Yin, W. Evaluation of defects depth for metal sheets using 4-coil excitation array eddy current sensor and improved ResNet18 network. IEEE Sens. J. 2024, 24, 18955–18967.

- Qaraad, M.; Amjad, S.; Hussein, N.K.; Farag, M.A.; Mirjalili, S.; Elhosseini, M.A. Quadratic interpolation and a new local search approach to improve particle swarm optimization: Solar photovoltaic parameter estimation. Expert Syst. Appl. 2024, 236, 121417.

- Li, Z.; Li, S.; Luo, X. Using quadratic interpolated beetle antennae search to enhance robot arm calibration accuracy. IEEE Robot. Autom. Lett. 2022, 7, 12046–12053.

- Almutairi, N.; Saber, S. Application of a time-fractal fractional derivative with a power-law kernel to the Burke-Shaw system based on Newton’s interpolation polynomials. MethodsX 2024, 12, 102510.

- Li, Z.; Li, S.; Bamasag, O.O.; Alhothali, A.; Luo, X. Diversified regularization enhanced training for effective manipulator calibration. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 8778–8790.

- Wang, D.; Zhai, L.; Fang, J.; Li, Y.; Xu, Z. psoResNet: An improved PSO-based residual network search algorithm. Neural Netw. 2024, 172, 106104.

- Kong, Z.; Le, D.N.; Pham, T.H.; Poologanathan, K.; Papazafeiropoulos, G.; Vu, Q.V. Hybrid machine learning with optimization algorithm and resampling methods for patch load resistance prediction of unstiffened and stiffened plate girders. Expert Syst. Appl. 2024, 249, 123806.

- Lee, J.H.; Song, J.; Kim, D.; Kim, J.W.; Kim, Y.J.; Jung, S.Y. Particle swarm optimization algorithm with intelligent particle number control for optimal design of electric machines. IEEE Trans. Ind. Electron. 2018, 65, 1791–1798.

- Wei, P.; Shang, M.; Zhou, J.; Shi, X. Efficient Adaptive Learning Rate for Convolutional Neural Network Based on Quadratic Interpolation Egret Swarm Optimization Algorithm. Heliyon 2024, 10, e37814.

- Hayyolalam, V.; Kazem, A.A. Black widow optimization algorithm: A novel meta-heuristic approach for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2020, 87, 103249.

- Shaheen, M.A.; Ullah, Z.; Hasanien, H.M.; Tostado-Véliz, M.; Ji, H.; Qais, M.H.; Alghuwainem, S.; Jurado, F. Enhanced transient search optimization algorithm-based optimal reactive power dispatch including electric vehicles. Energy 2023, 277, 127711.

- Sapnken, F.E.; Tazehkandgheshlagh, A.K.; Diboma, B.S.; Hamaidi, M.; Noumo, P.G.; Wang, Y.; Tamba, J.G. A whale optimization algorithm-based multivariate exponential smoothing grey-holt model for electricity price forecasting. Expert Syst. Appl. 2024, 255, 124663.

- Jia, H.; Wen, Q.; Wang, Y.; Mirjalili, S. Catch fish optimization algorithm: A new human behavior algorithm for solving clustering problems. Clust. Comput. 2024, 27, 13295–13332.

- Hamad, R.K.; Rashid, T.A. GOOSE algorithm: A powerful optimization tool for real-world engineering challenges and beyond. Evol. Syst. 2024, 15, 1249–1274.

- Mahmood, K.; Shamshad, S.; Saleem, M.A.; Kharel, R.; Das, A.K.; Shetty, S.; Rodrigues, J.J.P.C. Blockchain and PUF-based secure key establishment protocol for cross-domain digital twins in industrial Internet of Things architecture. J. Adv. Res. 2024, 62, 155–163.

- Peng, L.; Cai, Z.; Heidari, A.A.; Zhang, L.; Chen, H. Hierarchical Harris hawks optimizer for feature selection. J. Adv. Res. 2023, 53, 261–278.

- Khan, A.T.; Li, S.; Zhou, X. Trajectory optimization of 5-link biped robot using beetle antennae search. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 3276–3280.

- Zhou, S.; Xing, L.; Zheng, X.; Du, N.; Wang, L.; Zhang, Q. A self-adaptive differential evolution algorithm for scheduling a single batch-processing machine with arbitrary job sizes and release times. IEEE Trans. Cybern. 2021, 51, 1430–1442.

- Li, S.; He, J.; Li, Y.; Rafique, M.U. Distributed recurrent neural networks for cooperative control of manipulators: A game-theoretic perspective. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 415–426.

- Li, S.; Zhang, Y.; Jin, L. Kinematic control of redundant manipulators using neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2243–2254.

- Giammarco, M.D.; Martinelli, F.; Santone, A.; Cesarelli, M.; Mercaldo, F. Colon cancer diagnosis by means of explainable deep learning. Sci. Rep. 2024, 14, 15334.

| Dataset | Categories | Size | Image Count |

|---|---|---|---|

| UC Merced Land Use Data | 21 | 418 MB | 2100 |

| LC25000 | 5 | 1.75 GB | 25,000 |

| AIDER | 5 | 263 MB | 6433 |

| PlantVillage | 39 | 898 MB | 61,486 |

| Chinese Medicinal Materials | 5 | 117 MB | 10,100 |

| β1 | β2 | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.9 | 0.992 | 0.029 | 0.950 | 0.153 | 0.950 | 0.950 | 0.950 |

| 0.3 | 0.7 | 0.993 | 0.014 | 0.951 | 0.140 | 0.950 | 0.950 | 0.950 |

| 0.5 | 0.5 | 0.983 | 0.054 | 0.948 | 0.189 | 0.950 | 0.950 | 0.950 |

| 0.7 | 0.3 | 0.989 | 0.039 | 0.949 | 0.223 | 0.950 | 0.950 | 0.950 |

| 0.9 | 0.1 | 0.985 | 0.046 | 0.942 | 0.206 | 0.940 | 0.940 | 0.940 |
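The choice of β1 and β2 from a table like the one above can be expressed as a small selection step. The sketch below copies the five rows of that table (β1, β2, test accuracy, test loss) and picks the pair with the highest test accuracy, breaking ties by lower test loss. The selection criterion is an assumption made for illustration; the text does not state which column the authors used to choose the weights.

```python
from typing import List, Tuple

# (beta1, beta2, test_accuracy, test_loss), copied from the table above.
Row = Tuple[float, float, float, float]

rows: List[Row] = [
    (0.1, 0.9, 0.950, 0.153),
    (0.3, 0.7, 0.951, 0.140),
    (0.5, 0.5, 0.948, 0.189),
    (0.7, 0.3, 0.949, 0.223),
    (0.9, 0.1, 0.942, 0.206),
]


def select_weights(candidates: List[Row]) -> Row:
    """Pick the row with the highest test accuracy, then the lowest test loss."""
    return max(candidates, key=lambda r: (r[2], -r[3]))


best = select_weights(rows)
print(f"beta1={best[0]}, beta2={best[1]}, "
      f"test accuracy={best[2]:.3f}, test loss={best[3]:.3f}")
```

Under this criterion the selected pair is β1 = 0.3, β2 = 0.7, whose test accuracy (0.951) and test loss (0.140) coincide with the HGAO row of the first algorithm comparison table.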

| β1 | β2 | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.9 | 0.998 | 0.006 | 0.969 | 0.104 | 0.970 | 0.970 | 0.970 |

| 0.3 | 0.7 | 0.998 | 0.004 | 0.972 | 0.092 | 0.970 | 0.970 | 0.970 |

| 0.5 | 0.5 | 0.995 | 0.016 | 0.969 | 0.099 | 0.970 | 0.970 | 0.970 |

| 0.7 | 0.3 | 0.998 | 0.006 | 0.970 | 0.104 | 0.970 | 0.970 | 0.970 |

| 0.9 | 0.1 | 0.997 | 0.084 | 0.967 | 0.111 | 0.970 | 0.970 | 0.970 |

| β1 | β2 | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.9 | 0.999 | 0.005 | 0.991 | 0.033 | 0.990 | 0.990 | 0.990 |

| 0.3 | 0.7 | 0.998 | 0.008 | 0.985 | 0.052 | 0.990 | 0.990 | 0.990 |

| 0.5 | 0.5 | 0.998 | 0.007 | 0.987 | 0.390 | 0.990 | 0.990 | 0.990 |

| 0.7 | 0.3 | 0.997 | 0.009 | 0.980 | 0.066 | 0.980 | 0.980 | 0.980 |

| 0.9 | 0.1 | 0.996 | 0.010 | 0.979 | 0.069 | 0.980 | 0.980 | 0.980 |

| β1 | β2 | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.9 | 0.999 | 0.005 | 0.990 | 0.033 | 0.990 | 0.990 | 0.990 |

| 0.3 | 0.7 | 0.998 | 0.008 | 0.985 | 0.052 | 0.990 | 0.990 | 0.990 |

| 0.5 | 0.5 | 0.999 | 0.006 | 0.987 | 0.039 | 0.990 | 0.990 | 0.990 |

| 0.7 | 0.3 | 0.997 | 0.009 | 0.980 | 0.066 | 0.980 | 0.980 | 0.980 |

| 0.9 | 0.1 | 0.996 | 0.009 | 0.979 | 0.069 | 0.980 | 0.980 | 0.980 |

| β1 | β2 | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|---|

| 0.1 | 0.9 | 0.991 | 0.021 | 0.910 | 0.367 | 0.910 | 0.910 | 0.910 |

| 0.3 | 0.7 | 0.998 | 0.004 | 0.917 | 0.339 | 0.920 | 0.920 | 0.920 |

| 0.5 | 0.5 | 0.994 | 0.010 | 0.909 | 0.364 | 0.910 | 0.910 | 0.910 |

| 0.7 | 0.3 | 0.994 | 0.008 | 0.914 | 0.349 | 0.910 | 0.910 | 0.910 |

| 0.9 | 0.1 | 0.992 | 0.016 | 0.905 | 0.386 | 0.910 | 0.900 | 0.900 |

| Compared Algorithm | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|

| HLOA | 0.988 | 0.036 | 0.947 | 0.194 | 0.950 | 0.950 | 0.950 |

| GAO | 0.986 | 0.033 | 0.936 | 0.220 | 0.940 | 0.940 | 0.940 |

| PSO | 0.984 | 0.035 | 0.934 | 0.230 | 0.930 | 0.930 | 0.930 |

| ESOA | 0.985 | 0.032 | 0.932 | 0.250 | 0.930 | 0.930 | 0.930 |

| BWO | 0.981 | 0.082 | 0.918 | 0.254 | 0.920 | 0.920 | 0.920 |

| TSO | 0.982 | 0.048 | 0.925 | 0.240 | 0.930 | 0.930 | 0.930 |

| WOA | 0.979 | 0.061 | 0.903 | 0.313 | 0.900 | 0.900 | 0.900 |

| CFOA | 0.982 | 0.058 | 0.896 | 0.339 | 0.900 | 0.900 | 0.900 |

| GO | 0.976 | 0.063 | 0.923 | 0.258 | 0.920 | 0.920 | 0.920 |

| HGAO | 0.993 | 0.014 | 0.951 | 0.140 | 0.950 | 0.950 | 0.950 |

| Compared Algorithm | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|

| HLOA | 0.997 | 0.009 | 0.970 | 0.102 | 0.970 | 0.970 | 0.970 |

| GAO | 0.996 | 0.011 | 0.969 | 0.105 | 0.970 | 0.970 | 0.970 |

| PSO | 0.991 | 0.026 | 0.964 | 0.112 | 0.960 | 0.960 | 0.960 |

| ESOA | 0.994 | 0.017 | 0.965 | 0.111 | 0.970 | 0.960 | 0.960 |

| BWO | 0.994 | 0.019 | 0.964 | 0.113 | 0.960 | 0.960 | 0.960 |

| TSO | 0.989 | 0.030 | 0.961 | 0.135 | 0.960 | 0.960 | 0.960 |

| WOA | 0.988 | 0.034 | 0.967 | 0.106 | 0.970 | 0.970 | 0.970 |

| CFOA | 0.991 | 0.022 | 0.965 | 0.109 | 0.960 | 0.960 | 0.960 |

| GO | 0.989 | 0.028 | 0.961 | 0.135 | 0.960 | 0.960 | 0.960 |

| HGAO | 0.998 | 0.004 | 0.972 | 0.092 | 0.970 | 0.970 | 0.970 |

| Compared Algorithm | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|

| HLOA | 0.995 | 0.016 | 0.921 | 0.252 | 0.920 | 0.920 | 0.920 |

| GAO | 0.991 | 0.039 | 0.917 | 0.384 | 0.920 | 0.920 | 0.920 |

| PSO | 0.974 | 0.1888 | 0.911 | 0.451 | 0.910 | 0.910 | 0.910 |

| ESOA | 0.993 | 0.028 | 0.882 | 0.620 | 0.880 | 0.880 | 0.880 |

| BWO | 0.984 | 0.104 | 0.890 | 0.575 | 0.890 | 0.890 | 0.890 |

| TSO | 0.974 | 0.172 | 0.871 | 0.670 | 0.870 | 0.870 | 0.870 |

| WOA | 0.966 | 0.241 | 0.852 | 0.783 | 0.850 | 0.850 | 0.850 |

| CFOA | 0.985 | 0.101 | 0.894 | 0.576 | 0.890 | 0.890 | 0.890 |

| GO | 0.978 | 0.136 | 0.898 | 0.521 | 0.900 | 0.900 | 0.900 |

| HGAO | 0.998 | 0.008 | 0.923 | 0.224 | 0.920 | 0.920 | 0.920 |

| Compared Algorithm | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|

| HLOA | 0.997 | 0.008 | 0.987 | 0.446 | 0.990 | 0.990 | 0.990 |

| GAO | 0.994 | 0.030 | 0.973 | 0.092 | 0.970 | 0.970 | 0.970 |

| PSO | 0.991 | 0.064 | 0.957 | 0.131 | 0.960 | 0.960 | 0.960 |

| ESOA | 0.991 | 0.050 | 0.962 | 0.108 | 0.960 | 0.960 | 0.960 |

| BWO | 0.989 | 0.054 | 0.972 | 0.085 | 0.970 | 0.970 | 0.970 |

| TSO | 0.985 | 0.077 | 0.957 | 0.132 | 0.960 | 0.960 | 0.960 |

| WOA | 0.988 | 0.068 | 0.966 | 0.108 | 0.970 | 0.970 | 0.970 |

| CFOA | 0.985 | 0.073 | 0.970 | 0.080 | 0.970 | 0.970 | 0.970 |

| GO | 0.987 | 0.095 | 0.944 | 0.168 | 0.940 | 0.940 | 0.940 |

| HGAO | 0.999 | 0.005 | 0.991 | 0.033 | 0.990 | 0.990 | 0.990 |

| Compared Algorithm | Training Accuracy | Training Loss | Test Accuracy | Test Loss | Precision | Recall | F1-Score |

|---|---|---|---|---|---|---|---|

| HLOA | 0.994 | 0.010 | 0.912 | 0.352 | 0.910 | 0.910 | 0.910 |

| GAO | 0.992 | 0.014 | 0.908 | 0.374 | 0.910 | 0.910 | 0.910 |

| PSO | 0.987 | 0.039 | 0.896 | 0.556 | 0.900 | 0.900 | 0.900 |

| ESOA | 0.987 | 0.035 | 0.904 | 0.392 | 0.900 | 0.900 | 0.900 |

| BWO | 0.978 | 0.063 | 0.872 | 0.613 | 0.870 | 0.870 | 0.870 |

| TSO | 0.978 | 0.068 | 0.893 | 0.555 | 0.890 | 0.890 | 0.890 |

| WOA | 0.983 | 0.057 | 0.870 | 0.745 | 0.870 | 0.870 | 0.870 |

| CFOA | 0.986 | 0.044 | 0.887 | 0.649 | 0.890 | 0.890 | 0.890 |

| GO | 0.977 | 0.061 | 0.873 | 0.687 | 0.870 | 0.870 | 0.870 |

| HGAO | 0.998 | 0.004 | 0.917 | 0.339 | 0.920 | 0.920 | 0.920 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, P.; Zou, R.; Gan, J.; Li, Z. Hybrid Algorithms Based on Two Evolutionary Computations for Image Classification. Biomimetics 2025, 10, 544. https://doi.org/10.3390/biomimetics10080544