1. Introduction

Path planning is a key technology that enables mobile platforms to realize intelligent functions such as environmental sensing and autonomous operation. Path planning algorithms allow a mobile platform to find an optimal feasible path from its initial point to a target location in different spaces, thereby improving operational efficiency [1]. These algorithms can be broadly divided into two classes, traditional algorithms and bionic intelligence-based algorithms [2], and they are widely used in fields such as electric power inspection [3], underwater search [4], and military task assignment [5]. Traditional algorithms can be further divided into global and local path planning algorithms according to how much environmental information they use. Global path planning provides an approximate path, while local path planning corrects the global path within a local range to adapt to environmental changes and special requirements. Global path planning algorithms must take into account the obstacle information in the environmental map; representative examples include the A* and RRT algorithms [6]. Local path planning algorithms must update real-time environmental information via sensors; representative examples include the artificial potential field method and the D* algorithm [7]. With the development of artificial intelligence technologies, bionic swarm intelligence algorithms have been formulated mathematically to simulate biological evolution, group social behavior, and information exchange in nature [8]. Early representative swarm intelligence algorithms are particle swarm optimization (PSO) and ant colony optimization (ACO) [9]; medium-term representatives are the artificial bee colony (ABC) algorithm and the artificial fish swarm algorithm (AFSA) [10]; and recent representatives include the sparrow search algorithm (SSA), the whale optimization algorithm (WOA), and beetle antennae search (BAS) [11]. Swarm intelligence algorithms solve problems independently and can be adjusted adaptively and iteratively to the characteristics and requirements of path planning. Their population-based structure supports parallel computation and improves search efficiency, and it scales well to larger and more complex scenarios [12].

Swarm intelligence algorithms include two phases: global exploration and local optimization. The former performs a highly stochastic global search, while the latter performs increasingly deep optimization within the search area as the number of iterations grows. Regulating the balance between these two phases is particularly important. If exploration is weighted too heavily in the early stage, the search may become disordered, which slows convergence and wastes computing resources. If exploitation is weighted too heavily in the later stage, the algorithm may oscillate around local optima and be unable to escape them [13]. Swarm intelligence algorithms do not depend heavily on initial parameter settings and tend to explore environmental information over a wide search range. The beetle algorithm is highly structured: it can simulate a single beetle that senses the concentration of information in the environment and solves problems independently, or it can be extended to a population of beetles that exchange information to reach a common solution. Some scholars have combined the powerful search ability of swarm algorithms with the low complexity of the beetle algorithm to improve both global search ability and local planning speed [14]. Cui et al. [15] introduced globally optimal individuals into both the employed bee and scout bee stages and introduced the teaching-learning-based optimization algorithm into the peak-peak stage to enhance the algorithm's exploitation ability and the search efficiency of the swarm. Ni et al. [16] proposed a multi-strategy integrated bee colony algorithm that uses the experience of different individuals together with multiple search strategies to update the global path. Li [17] and Comert [18] combined the bee colony algorithm with the ant colony algorithm to improve the quality of the initial solution, reduce the number of path turns, and avoid blind searches. Liang et al. [19] added a virtual goal to the global search points to achieve fast local search optimization. Yu et al. [20] divided the global search process into several segments using the water flow potential field method and used the beetle algorithm to optimize the local search coordinate points according to the obstacles' characteristics, preventing the search from falling into local optima. In addition, introducing chaotic functions and reverse learning mechanisms are among the main strategies for improving the performance of swarm intelligence algorithms. Chen et al. [21] and Geng et al. [22] incorporated non-uniform mutation and chaotic mapping functions, respectively, into the sparrow search algorithm, enhancing the randomness and ergodicity of the populations. Mohapatra et al. [23] introduced a stochastic reverse learning mechanism into the golden jackal algorithm to better balance exploration and exploitation and to improve convergence speed. Hu et al. [24] introduced mutation and greedy strategies into the rabbit algorithm to improve the quality of solution-space exploration and population exploitation. These studies have improved the efficiency of path planning algorithms by hybridizing swarm intelligence algorithms, introducing optimization strategies, and similar means. However, problems remain, such as premature convergence during global exploration, low local optimization accuracy, and high dependence on parameter settings.

According to the No Free Lunch theorem [25], no single intelligent optimization algorithm can solve all path planning problems. Even a well-optimized single algorithm struggles to address the dual challenges of global search and local optimization simultaneously. To tackle this, the IABCBAS algorithm, a hybrid of an improved artificial bee colony (ABC) algorithm and an improved beetle antennae search (BAS) algorithm, is designed for path planning in multi-dimensional environments. It uses swarm intelligence mechanisms for global exploration of path waypoints and the beetle algorithm for local path refinement. The main contributions of this study are summarized as follows.

Firstly, Tent chaotic perturbation is employed to enhance the diversity of the bee population. By non-uniformly adjusting the updated swarm positions, the algorithm broadens the distribution of the solution set, improves the quality of search points, and speeds up convergence. Secondly, a random reversal strategy adds candidate search directions, which expands the optimization range of the beetle algorithm and strengthens its ability to escape local optima, and position-based greedy selection is used to achieve higher convergence accuracy. Thirdly, an adaptive inertia weight factor is introduced to adjust the weight distribution between the two algorithms automatically, balancing global search capability against local optimization performance. Fourthly, path planning simulation experiments are conducted in which the swarm algorithm performs the global path pre-search and the beetle algorithm handles local optimization; two-dimensional, three-dimensional, and real-world environments are used to verify the engineering value of the proposed algorithm.

This paper is structured into five parts.

Section 1 provides a relevant introduction to the research content.

Section 2 elaborates on the artificial bee colony algorithm, beetle swarm algorithm, and their respective improvement strategies.

Section 3 focuses on testing the performance of the proposed algorithm.

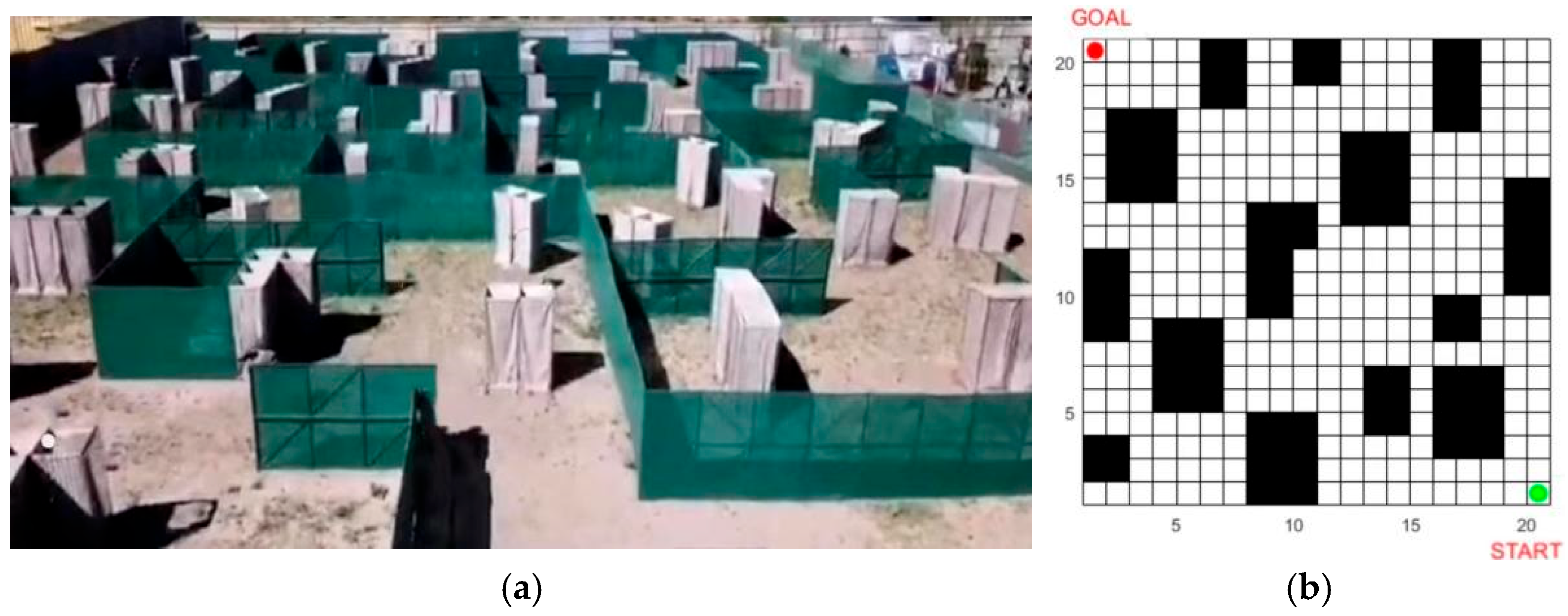

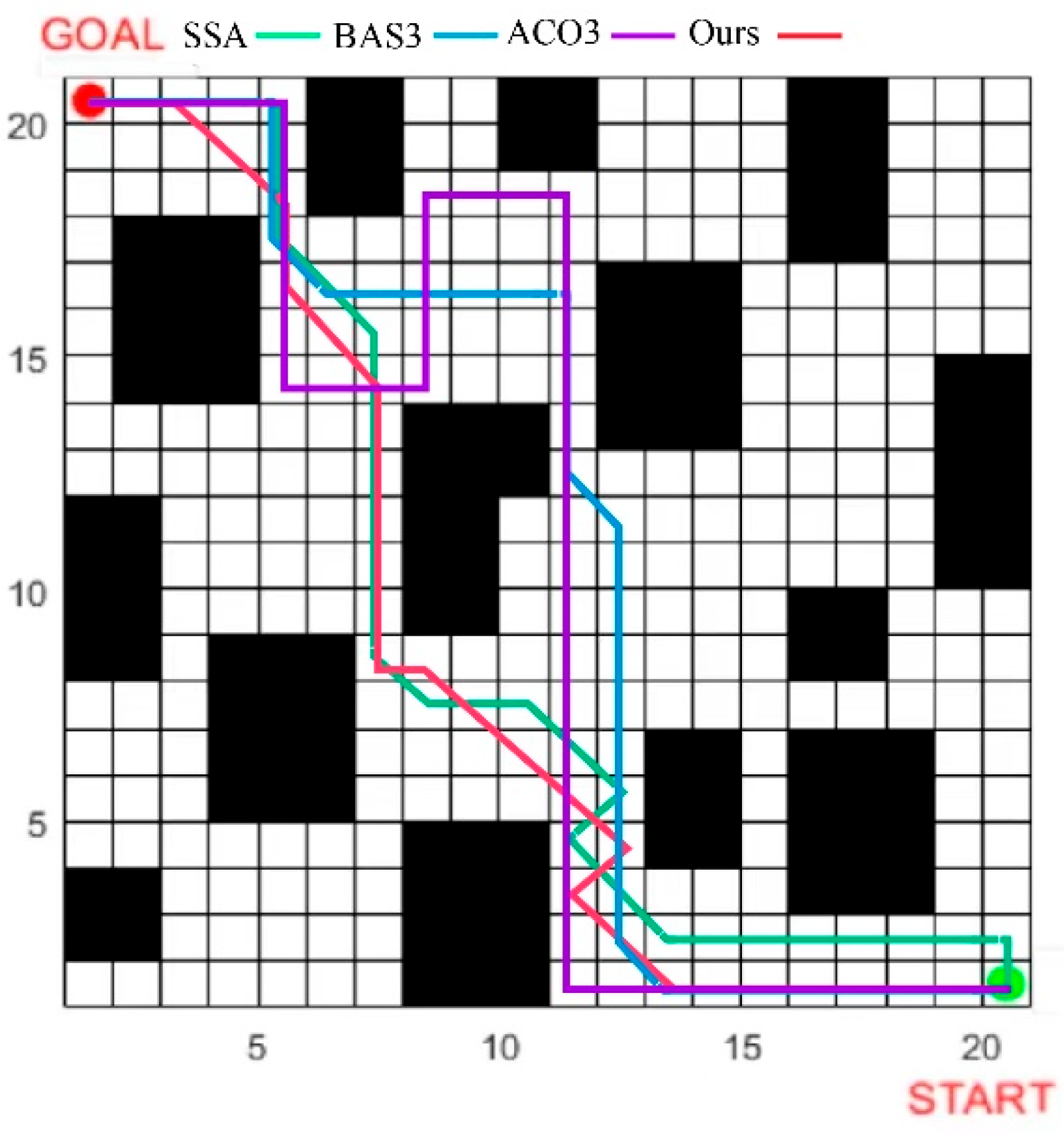

Section 4 presents path planning experiments conducted in 2D, 3D simulation environments, and a real-world environment.

Section 5 includes conclusions and future prospects.

2. Hybrid Path Planning Based on Improved ABC and BAS Algorithms

2.1. ABC Algorithm for Global Path Exploration Optimization

2.1.1. The Optimized ABC Algorithm

The ABC algorithm is organized as an “employed–onlooker–scout” model, which makes full use of the contribution of each type of bee to the global nectar search and determines the location of the next path point based on the fitness value. Because the ABC algorithm relies excessively on the optimization capability of the employed (leading) bees, it tends to neglect the scout bees' exploration of unknown regions, which causes the search to stagnate in its later stages. To address this, the Tent chaotic perturbation mechanism and a non-uniform mutation operator are introduced to enrich population diversity and to strengthen exploration of the search space, respectively, thereby generating more candidate global waypoints for the algorithm to choose from.

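Assuming there are A bees in a B-dimensional search space, the position of the d-th bee is

$$X_d = \left(x_d^1, x_d^2, \ldots, x_d^B\right), \quad d = 1, 2, \ldots, A \tag{1}$$

where $x_d^b$ ($b = 1, 2, \ldots, B$) is the position of the d-th bee in dimension b.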

The employed bees guide the movement of the entire colony and can search for nectar sources anywhere in the region. Their position update is given by Equation (2), where e is the current iteration number; the update also depends on the maximum number of iterations, on a random number drawn from a given interval, on F, a random value drawn from a normal distribution, and on G, a matrix whose elements are all 1. A further random value represents the nectar concentration in the region. When it indicates that the nectar concentration is low, the search range needs to be expanded; when it indicates that the concentration is high, all the bees need to fly to that region quickly.
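The exact form of Equation (2) is not reproduced in this text. Its symbol list (an iteration counter with a maximum, a random number, a normally distributed value F, a matrix of ones G, and a nectar-concentration test) matches the producer update of the sparrow search algorithm (SSA), so the minimal Python sketch below assumes that form. The function name, the `nectar_low` flag, and the exponential decay term are illustrative assumptions, not the authors' verified formula.

```python
import math
import random


def employed_bee_update(position, e, max_iter, nectar_low, rng=random):
    """Assumed SSA-style employed-bee move; NOT the paper's verified Equation (2).

    position   -- one bee's coordinates (list of floats, one per dimension)
    e          -- current iteration number (1-based)
    max_iter   -- maximum number of iterations
    nectar_low -- hypothetical flag: True when the nectar-concentration test
                  reports a low concentration in the bee's region
    """
    if nectar_low:
        # Assumed branch: scale the position by an iteration-dependent decay,
        # where alpha is a random number in (0, 1].
        alpha = rng.uniform(1e-12, 1.0)
        decay = math.exp(-e / (alpha * max_iter))
        return [x * decay for x in position]
    # Assumed branch: x + F * G, where F ~ N(0, 1) is one scalar and G is a
    # row of ones, so every coordinate is shifted by the same amount.
    f = rng.gauss(0.0, 1.0)
    return [x + f for x in position]
```

For example, `employed_bee_update([1.0, -2.0], 10, 500, True, random.Random(0))` applies the decay branch to a two-dimensional bee. Passing a seeded `random.Random` keeps runs reproducible.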

The onlooker bees follow the employed bees in search of nectar and may harvest nectar together with them, raising the nectar concentration of the whole population. Their position update is given by Equation (3), which uses the global worst position of the bees in the b-th dimension at the e-th iteration, the global best position of the employed bees in the b-th dimension at the (e + 1)-th iteration, and rand, a random value within the given range. One branch of Equation (3) covers the d-th onlooker bee with low fitness, which is likely to fail to collect honey because the nectar concentration in its region is low. In the other branch, the d-th onlooker bee moves to a random position near the optimal nectar source where the employed bee is located and collects honey there.

The random initial position of the scout bees is given by Equation (4), in which a step control parameter follows a normal distribution with a mean of 0 and a variance of 1; J is a random value within a given interval; the current fitness value of the bee is compared with the current global optimal fitness value and the worst fitness value; and a small non-zero constant is included. One branch of Equation (4) indicates that the scout bee is located at the edge of the population; the other indicates that the scout bee has found a better nectar source and needs to move to a new region.

2.1.2. Improvement Strategies

The Tent chaotic function [26] is adopted to enrich population diversity, prevent the ABC algorithm from converging prematurely, and improve its global search capability. Chaotic maps are ergodic and random-like, and the chaotic sequences they produce can be mapped into the search space to generate well-spread individuals. Among them, the Tent map iterates quickly and distributes values uniformly, but its sequences can become trapped at fixed points. Therefore, a random term is added to the Tent map to keep the perturbation within a bounded range while preserving the map's regularity. First, during initialization of the bee population, a random initial individual is generated as a b-dimensional vector with each dimension in the interval [0, 1]. Next, each dimension of the current individual is substituted into the Tent chaotic formula to produce the corresponding dimension of the next individual. This process is repeated until (n − 1) new individuals have been produced. All generated individuals are then mapped onto the variable range, yielding the Tent chaotic initial bee population given by Equation (5), where N is the number of particles in the sequence and rand(0,1) is a random number in the interval (0, 1).
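Equation (5) itself is not reproduced here. A common way to add a random term to the Tent map, consistent with the symbols N and rand(0,1) defined above, is $z_{k+1} = (2z_k + \mathrm{rand}(0,1)/N) \bmod 1$ for $z_k \le 1/2$ and $z_{k+1} = (2(1 - z_k) + \mathrm{rand}(0,1)/N) \bmod 1$ otherwise. The Python sketch below assumes that form and follows the generation procedure just described. Taking N equal to the population size n is an assumption, as are the function names.

```python
import random


def tent_step(z, n_particles, rng):
    """One step of a Tent map with an added random term (assumed form of Eq. (5))."""
    if z <= 0.5:
        nxt = 2.0 * z + rng.random() / n_particles
    else:
        nxt = 2.0 * (1.0 - z) + rng.random() / n_particles
    return nxt % 1.0  # wrap the value back into [0, 1)


def tent_population(n, lower, upper, rng=None):
    """Generate n individuals: one random seed vector, then n - 1 chaotic successors,
    each mapped from [0, 1) onto the per-dimension bounds [lower[j], upper[j]]."""
    if rng is None:
        rng = random.Random()
    dim = len(lower)
    z = [rng.random() for _ in range(dim)]  # random initial individual in [0, 1)^dim
    chaotic = [z]
    for _ in range(n - 1):
        z = [tent_step(v, n, rng) for v in z]  # iterate every dimension once
        chaotic.append(z)
    return [[lo + v * (hi - lo) for v, lo, hi in zip(ind, lower, upper)]
            for ind in chaotic]
```

The random term matters in practice. With the plain Tent map, every double-precision value is a dyadic rational, and repeated doubling eventually drives it to the fixed point 0. The small added noise keeps the sequence from collapsing there.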

A non-uniform mutation operator [27] is introduced to enhance solution accuracy. It applies large perturbations in the early iterations, which enhances exploration of the search space, and reduces the perturbation magnitude in later iterations, which drives the algorithm toward the vicinity of the global optimum and improves the quality of the global solution. After each position update, every bee's d-dimensional vector is randomly perturbed with a mutation probability that decreases dynamically with the number of iterations. The mutation of the o-th dimensional component is given by Equations (6) and (7), where the maximum and minimum values of the vector range bound the mutation; a random binary value (0 or 1) selects between the two mutation branches; e is the current iteration number, and the mutation also depends on the maximum number of iterations; s is a random number in the interval [0, 1]; and t determines how strongly the random perturbation depends on the iteration count e. In the early stage of the algorithm, e is small and the operator searches nearly uniformly over the space. In the later stage, as e increases, the perturbation shrinks toward zero, so mutated solutions stay increasingly close to the current solution and the search is refined around the best nectar source.
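Equations (6) and (7) are not reproduced here. The symbols above match the classic non-uniform mutation of Michalewicz, in which a component moves toward the upper bound by Δ(e, x_max − x) or toward the lower bound by Δ(e, x − x_min), with Δ(e, y) = y(1 − s^((1 − e/max_iter)^t)). The Python sketch below assumes that form. The mutation probability `p_m` is passed in by the caller because its decay schedule is not specified in this text. The helper `mutate_if_better` mirrors the accept-if-better rule of step (3) in Section 2.4, assuming a minimization objective. All function names are illustrative.

```python
import random


def delta(e, max_iter, y, t, rng):
    """Non-uniform step in [0, y]: uniform at e = 0, shrinking to 0 as e -> max_iter."""
    s = rng.random()  # random number in [0, 1)
    return y * (1.0 - s ** ((1.0 - e / max_iter) ** t))


def non_uniform_mutate(x, lower, upper, e, max_iter, p_m, t=2.0, rng=None):
    """Mutate each component of x with probability p_m (assumed form of Eqs. (6)-(7))."""
    if rng is None:
        rng = random.Random()
    out = list(x)
    for o, (lo, hi) in enumerate(zip(lower, upper)):
        if rng.random() >= p_m:
            continue  # component o is left unchanged
        if rng.randint(0, 1) == 0:
            out[o] += delta(e, max_iter, hi - out[o], t, rng)  # move toward the upper bound
        else:
            out[o] -= delta(e, max_iter, out[o] - lo, t, rng)  # move toward the lower bound
    return out


def mutate_if_better(f, x, *args, **kwargs):
    """Keep the mutated bee only if its objective value improves (minimization)."""
    y = non_uniform_mutate(x, *args, **kwargs)
    return y if f(y) < f(x) else x
```

Because Δ never exceeds the distance to the relevant bound, a component that starts inside [x_min, x_max] stays inside it. At the final iteration, the exponent becomes 0 and the step vanishes.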

2.2. Local Path Optimization Based on the BAS Algorithm

2.2.1. The Optimized BAS Algorithm

The BAS algorithm is a heuristic algorithm that simulates the foraging and mate-seeking behavior of beetles. When the ABC algorithm produces a large number of global waypoints, the BAS algorithm, with its strong local search capability, refines them into multiple locally optimal waypoints. It determines the optimal solution by comparing the fitness values of the current and next waypoints. This section improves the search direction and fitness confirmation of the BAS algorithm: random reverse learning and a greedy selection mechanism make waypoint selection more reliable and waypoint optimization more accurate. The beetle's search direction vector is defined by the random search formula in Equation (8), where b is the spatial dimension.

The initial position of the beetle in the b-dimensional space is given by Equation (9). At the e-th iteration, the beetle's antennae determine the left and right positions around its current position according to Equation (10), which depends on the distance between the two antennae at the e-th iteration and on the updated antenna length.

The beetle's position in the next iteration is updated by Equation (11), where the iterative step size at the e-th iteration, together with a step factor, determines the convergence speed of the search; sign(·) is the sign function; and the objective function values at the left and right antennae at the e-th iteration are compared to choose the direction of movement. As the number of iterations increases, the position is updated repeatedly until the optimal objective function value is obtained.
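Equations (8) to (11) are not reproduced here. The Python sketch below follows the classic BAS formulation they describe: a random unit direction, two antenna probes at distance d on either side of the current position, and a step of length δ away from the worse antenna via the sign function. The shrink schedule for d and δ (multiply by 0.95, with a small additive term on d) is a commonly used choice and is an assumption here, as are the function names.

```python
import math
import random


def random_direction(dim, rng):
    """Random unit vector (assumed form of Eq. (8))."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(c * c for c in v)) or 1.0  # guard against an all-zero draw
    return [c / norm for c in v]


def bas_step(f, x, d, delta, rng):
    """One classic BAS move for minimization (assumed forms of Eqs. (10)-(11))."""
    b = random_direction(len(x), rng)
    x_right = [xi + d * bi for xi, bi in zip(x, b)]  # right antenna
    x_left = [xi - d * bi for xi, bi in zip(x, b)]   # left antenna
    diff = f(x_right) - f(x_left)
    sign = (diff > 0) - (diff < 0)  # sign(f_right - f_left) as -1, 0 or 1
    # Step away from the antenna with the larger (worse) objective value.
    return [xi - delta * bi * sign for xi, bi in zip(x, b)]


def bas_minimize(f, x0, iters=200, d0=2.0, delta0=1.0, eta=0.95, seed=0):
    rng = random.Random(seed)
    x, d, delta = list(x0), d0, delta0
    best, best_val = list(x), f(x)
    for _ in range(iters):
        x = bas_step(f, x, d, delta, rng)
        val = f(x)
        if val < best_val:  # track the best position seen so far
            best, best_val = list(x), val
        d = eta * d + 0.01   # shrink antenna length, keeping it bounded away from 0
        delta = eta * delta  # shrink step size
    return best, best_val
```

For example, `bas_minimize(lambda v: sum(c * c for c in v), [3.0, -4.0])` drives a single beetle toward the origin of a two-dimensional sphere function. Because classic BAS does not guarantee monotone improvement, the loop records the best position seen.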

2.2.2. The Improvement Strategy

The beetle algorithm has few parameters and a simple structure, but it tends to turn randomly along local paths. Therefore, position-based random reverse learning and a greedy selection strategy [28] are introduced to improve the choice between the left and right directions. In the original algorithm, the beetle can only move left or right, so the remaining position information goes unused; a local search in nearby directions is needed to find better positions and avoid random turning. Random reverse learning generates a random reverse solution in addition to the left and right directions, which increases the number of candidate directions and avoids blind movement. The new random direction is generated from the current optimal position according to Equation (12).

Because the randomly generated position is not guaranteed to have a better fitness value than the left and right antenna positions, a greedy selection of the beetle's direction is performed. Together with the reverse solution, this widens the search range and helps the beetle avoid local optima while accepting only improving moves. In each iteration, the fitness value of the newly generated position is compared with those of the original left and right directions. If the new direction is better than both, the beetle moves toward it according to Equation (12); otherwise, it moves according to Equation (11). Repeating this screening over the iterations yields the next optimal position of the beetle, as given by Equation (13).
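Equations (12) and (13) are not reproduced here. The Python sketch below assumes random opposition-based learning for the reverse solution, x̃ = lb + ub − r·x_best with r uniform in [0, 1), clipped to the bounds. It then implements the decision rule described above: the reverse point replaces the classic antenna-based move only when it beats both antenna probes. A final greedy check keeps whichever of the old and new positions is better. The opposition formula, the clipping, and the function names are assumptions.

```python
import math
import random


def random_reverse(x_best, lower, upper, rng):
    """Random opposition point of the current best, clipped to bounds (assumed Eq. (12))."""
    out = []
    for xb, lo, hi in zip(x_best, lower, upper):
        v = lo + hi - rng.random() * xb
        out.append(min(max(v, lo), hi))
    return out


def improved_bas_move(f, x, x_best, d, delta, lower, upper, rng):
    """One BAS move with a random reverse candidate and greedy selection (assumed Eq. (13))."""
    v = [rng.gauss(0.0, 1.0) for _ in x]
    norm = math.sqrt(sum(c * c for c in v)) or 1.0
    b = [c / norm for c in v]  # random unit direction
    x_right = [xi + d * bi for xi, bi in zip(x, b)]
    x_left = [xi - d * bi for xi, bi in zip(x, b)]
    f_right, f_left = f(x_right), f(x_left)
    x_rev = random_reverse(x_best, lower, upper, rng)
    if f(x_rev) < min(f_right, f_left):
        candidate = x_rev  # the reverse direction beats both antennae
    else:
        sign = (f_right > f_left) - (f_right < f_left)
        candidate = [xi - delta * bi * sign for xi, bi in zip(x, b)]  # classic move
    # Greedy selection: never accept a worse position.
    return candidate if f(candidate) < f(x) else x
```

With a single beetle, the current position can be passed as `x_best`. The greedy step guarantees it is the best position found so far.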

2.3. Adaptive Adjustment of the Two Algorithms

In the early stage of the improved algorithm, the bee population is large and widely scattered, so assigning a larger weight to the ABC algorithm accelerates convergence and strengthens the exploration of global waypoints. As the number of iterations increases, the weight of the ABC algorithm gradually decreases while that of the BAS algorithm gradually increases, which refines the search for local waypoints and helps find the optimal path. A nonlinear inertia weight factor [29] is introduced to adjust the weights of the two algorithms adaptively and to balance global exploration against local exploitation; the weight factor is updated according to a nonlinear formula.

The parameter values in this formula, one of which is 0.84, were determined through extensive experiments. A traditional adaptive weight factor increases linearly, whereas the nonlinear weight factor used here rises slowly in the early iterations, keeping the search range wide and strengthening global exploration, and rises faster in the later iterations, strengthening local exploitation. The variation in ω is illustrated in Figure 1.
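The weight update formula is not reproduced in this text, and only one of its experimentally chosen values (0.84) survives. The Python sketch below is therefore purely illustrative. It uses a hypothetical power-law schedule with exponent k > 1, which rises slowly at first and faster later as described above, and compares it with a linear schedule. The bounds `w_min` and `w_max` and the exponent `k` are assumptions, not the paper's values.

```python
def linear_weight(e, max_iter, w_min=0.1, w_max=0.9):
    """Traditional linearly increasing weight."""
    return w_min + (w_max - w_min) * e / max_iter


def nonlinear_weight(e, max_iter, w_min=0.1, w_max=0.9, k=2.0):
    """Hypothetical convex schedule: slow rise early, faster rise late (k > 1)."""
    return w_min + (w_max - w_min) * (e / max_iter) ** k


if __name__ == "__main__":
    for e in (0, 125, 250, 375, 500):
        print(e, round(linear_weight(e, 500), 3), round(nonlinear_weight(e, 500), 3))
```

Both schedules share their endpoints. For k > 1, the nonlinear curve stays below the linear one in between, and its per-iteration increments grow over time.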

This adaptive nonlinear inertia weight is incorporated into the position update mechanisms of both the swarm and beetle algorithms. In addition, a dynamic coefficient is introduced to adjust the proportion of the two algorithms: at each weight update, it regulates the ratio between the current weight and the weight change from the previous generation, so that global exploration and local exploitation are dynamically balanced over the iterations.
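The equations that incorporate the weight and the dynamic coefficient are not reproduced here. One simple way to realize the described behavior is shown in the hypothetical Python sketch below. It smooths the scheduled weight with the previous generation's weight using a coefficient `lam`, then blends the ABC and BAS position proposals with the result. The names, the exponential-smoothing form, the convex blend, and the illustrative power-law schedule are all assumptions.

```python
def smoothed_weight(w_scheduled, w_prev, lam):
    """Hypothetical dynamic-coefficient update with 0 <= lam <= 1:
    lam = 1 follows the schedule exactly, smaller lam damps changes
    relative to the previous generation's weight."""
    return lam * w_scheduled + (1.0 - lam) * w_prev


def blend_positions(x_abc, x_bas, w):
    """Hypothetical hybrid position: weight w on the BAS proposal, 1 - w on the ABC one."""
    return [(1.0 - w) * a + w * b for a, b in zip(x_abc, x_bas)]


def weight_trajectory(max_iter, lam=0.5, w_min=0.1, w_max=0.9, k=2.0):
    """Smoothed weights over all iterations, starting from w_min."""
    w, out = w_min, []
    for e in range(1, max_iter + 1):
        target = w_min + (w_max - w_min) * (e / max_iter) ** k  # illustrative schedule
        w = smoothed_weight(target, w, lam)
        out.append(w)
    return out
```

Under these assumptions, the BAS share grows monotonically but lags slightly behind the schedule. This damps abrupt shifts between global and local search.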

2.4. Path Planning Based on IABCBAS Algorithm

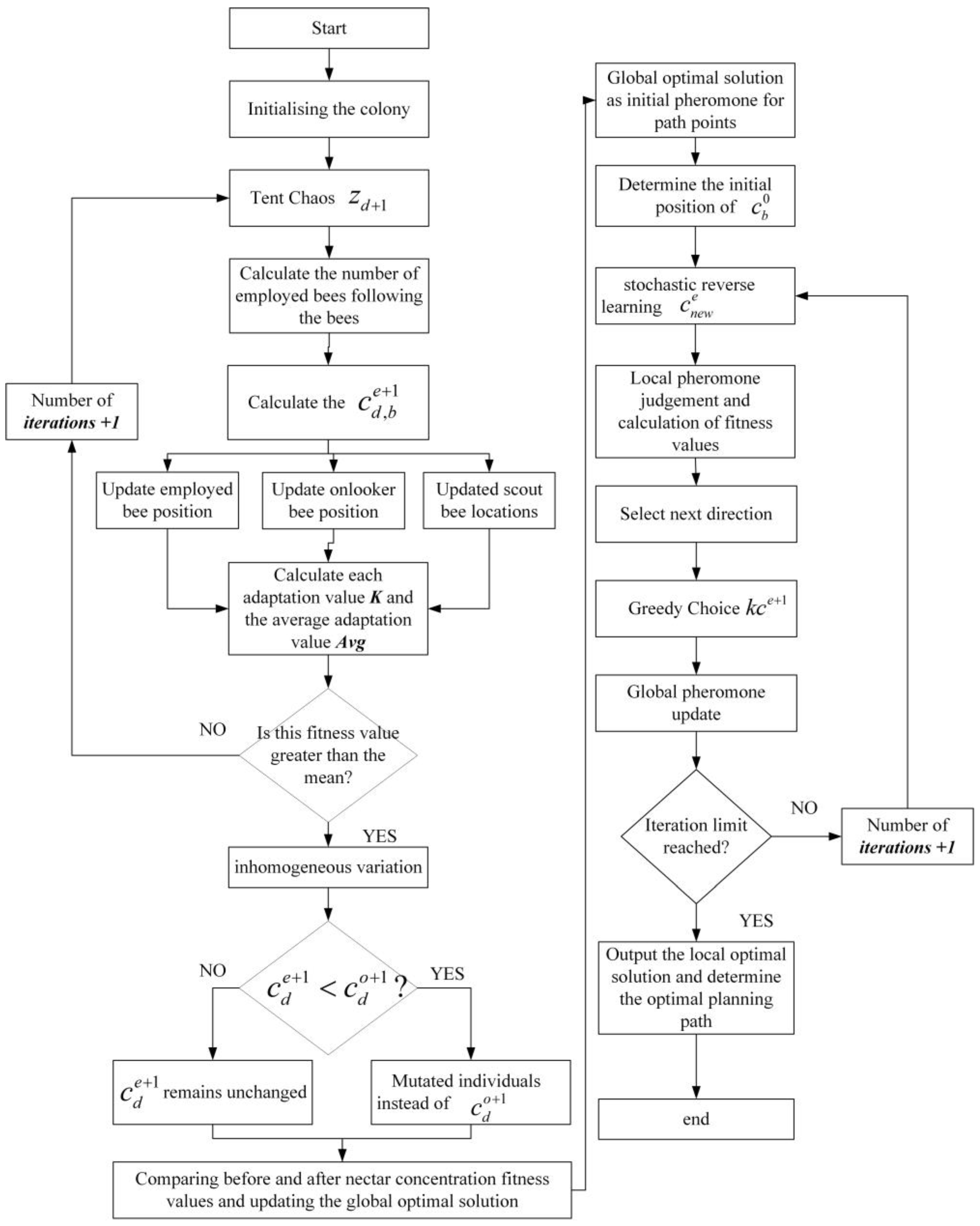

The path planning process based on the IABCBAS algorithm is illustrated in Figure 2, with the steps as follows:

- (1) Set the relevant parameters of the ABC algorithm and generate the bee population with Tent chaotic perturbation using Equation (5).
- (2) With the employed (“leading”) and onlooker (“following”) behaviors as the main behaviors, interspersed with scouting, form a new distribution of the bee population; compute the corresponding nectar concentrations and select the colony's distribution positions based on them.
- (3) Mutate the bees after the position update using Equations (6) and (7). If the nectar concentration after mutation is higher than before, update the position to the mutated one; otherwise, keep the original position.
- (4) If the maximum number of iterations of the bee colony algorithm has not been reached, repeat the above cycle; otherwise, increase the initial pheromone value of the path points corresponding to the current bee colony to form an optimized initial pheromone distribution and improve the quality of the global search points.
- (5) Set the relevant parameters of the BAS algorithm and perform local numerical optimization using the optimized initial pheromone.
- (6) Randomly generate a new solution using Equation (12) and calculate its fitness value. If the new solution outperforms the previous one, adjust the search direction accordingly.
- (7) Retain the optimal solution according to Equation (13), updating the local and global pheromones simultaneously.
- (8) Check whether the maximum number of iterations of the BAS algorithm has been reached. If so, save, analyze, and process the optimal search results.

2.5. Analysis of Algorithm Time Complexity

The time complexity of an algorithm can reflect its feasibility and efficiency in solving path planning problems. Therefore, this section conducts a time complexity analysis of the original ABC and BAS algorithms, as well as the improved IABCBAS algorithm.

In the global waypoint exploration phase, let the size of the artificial bee colony be C, the problem dimension be b, and the maximum number of iterations be E. The time complexity of the original ABC algorithm is then $O(C \cdot b \cdot E)$. In the improved ABC algorithm, the initial population is perturbed by Tent chaos, with an initialization complexity of $O(C \cdot b)$. The mutation operator adds at most $O(C \cdot b)$ work per iteration, so the waypoint exploration phase remains $O(C \cdot b \cdot E)$. Thus, the time complexity of the improved ABC phase is $O(C \cdot b + C \cdot b \cdot E) = O(C \cdot b \cdot E)$.

In the local waypoint optimization phase, the problem dimension is again b. Initializing the beetle costs $O(b)$. During the iterations, judging the directions of the two antennae costs $O(E \cdot b)$ and updating the beetle's position costs $O(E \cdot b)$, so the time complexity of the original BAS algorithm is $O(E \cdot b)$. In the improved BAS algorithm, the beetle's initial position is inherited from the global exploration phase, which reduces the initialization cost. The additional direction candidate introduced by random reverse solutions costs $O(E \cdot b)$, and the greedy selection costs $O(E \cdot b)$, so the time complexity of the improved BAS algorithm remains $O(E \cdot b)$.

In summary, the combined time complexity of the two original algorithms is $O(C \cdot b \cdot E + E \cdot b)$, and that of the IABCBAS algorithm is also $O(C \cdot b \cdot E + E \cdot b)$; since $C \geq 1$, both reduce to $O(C \cdot b \cdot E)$. The IABCBAS algorithm therefore has the same asymptotic complexity as the original combination, while its optimization strategies improve search capability and path planning efficiency without increasing the order of the running time.

3. Experimental Testing of the Search Performance of the IABCBAS Algorithm

3.1. Test Functions and Environment

Four swarm intelligence algorithms from different periods are selected for comparative experiments on nine benchmark test functions to verify the performance and feasibility of the proposed IABCBAS algorithm. All experiments are conducted on the same experimental platform. The comparison algorithms [16] and their parameters are shown in Table 1.

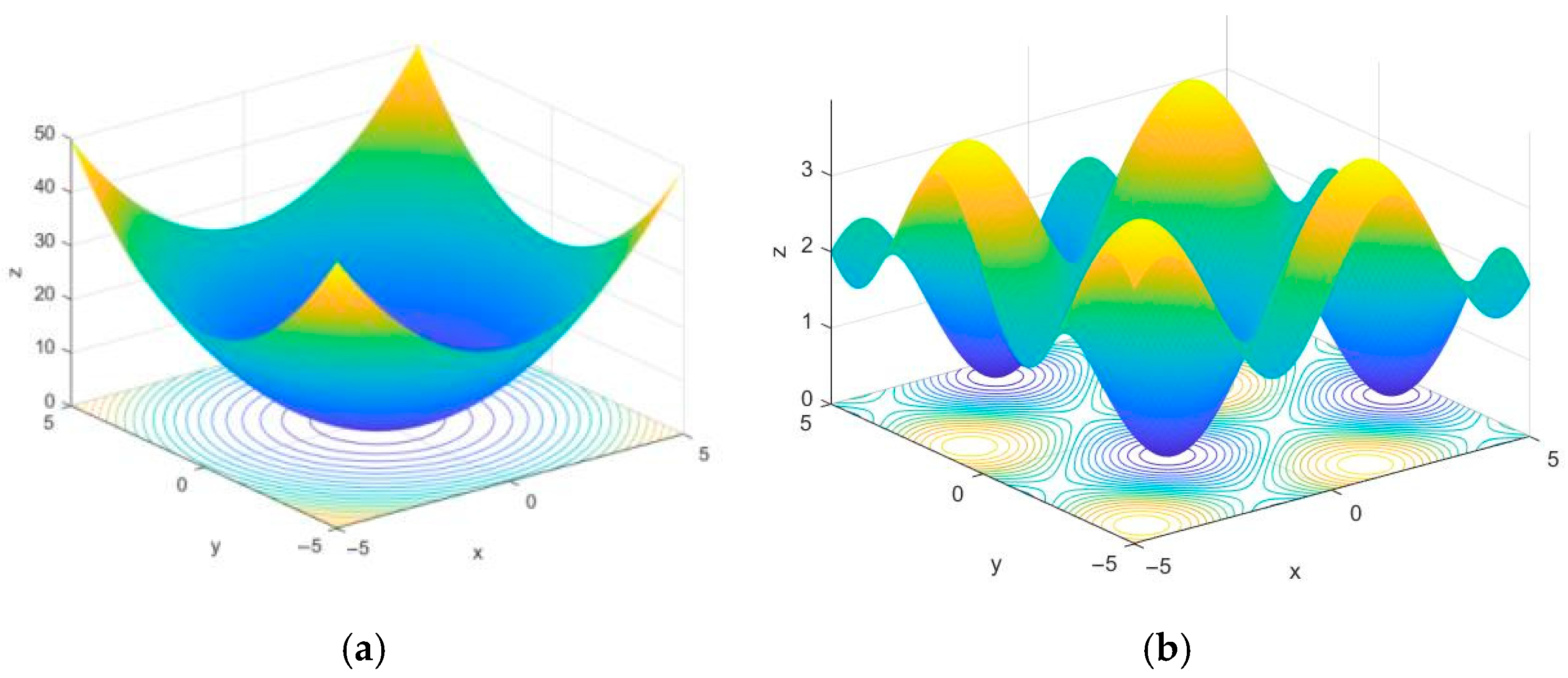

Benchmark functions with different dimensions and numbers of peaks are used to verify the algorithms' convergence speed and their ability to escape local optima. The selected benchmark functions [30] are listed in Table 2, and part of the test function space is illustrated in Figure 3. The Sphere test function is a continuous, convex, unimodal function with a single global minimum and no local minima, so it mainly tests convergence speed and accuracy in high-dimensional spaces. The Foxholes test function is a low-dimensional multimodal function whose landscape is a flat plateau pierced by many narrow, deep local minima, which makes it more challenging for search algorithms.
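Table 2 is not reproduced in this text. For reference, the Python sketch below uses the standard definitions of the two functions named above. Sphere is f(x) = Σ x_i², with minimum 0 at the origin. Shekel's Foxholes is a two-dimensional function whose 25 "foxholes" sit on a 5 × 5 grid with coordinates in {−32, −16, 0, 16, 32}; its global minimum is approximately 0.998 at (−32, −32). The search domains and dimensions used in the experiments are not assumed here.

```python
def sphere(x):
    """Sphere: unimodal and convex, global minimum 0 at the origin."""
    return sum(v * v for v in x)


def shekel_foxholes(x):
    """Shekel's Foxholes (2-D): 25 narrow wells on a flat plateau."""
    grid = (-32.0, -16.0, 0.0, 16.0, 32.0)
    total = 1.0 / 500.0
    for j in range(25):
        a1, a2 = grid[j % 5], grid[j // 5]  # well j sits at (a1, a2)
        total += 1.0 / ((j + 1) + (x[0] - a1) ** 6 + (x[1] - a2) ** 6)
    return 1.0 / total
```

The sixth powers make each well very narrow. An algorithm that lands between wells sees an almost flat surface, which is why this function stresses the ability to escape local optima.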

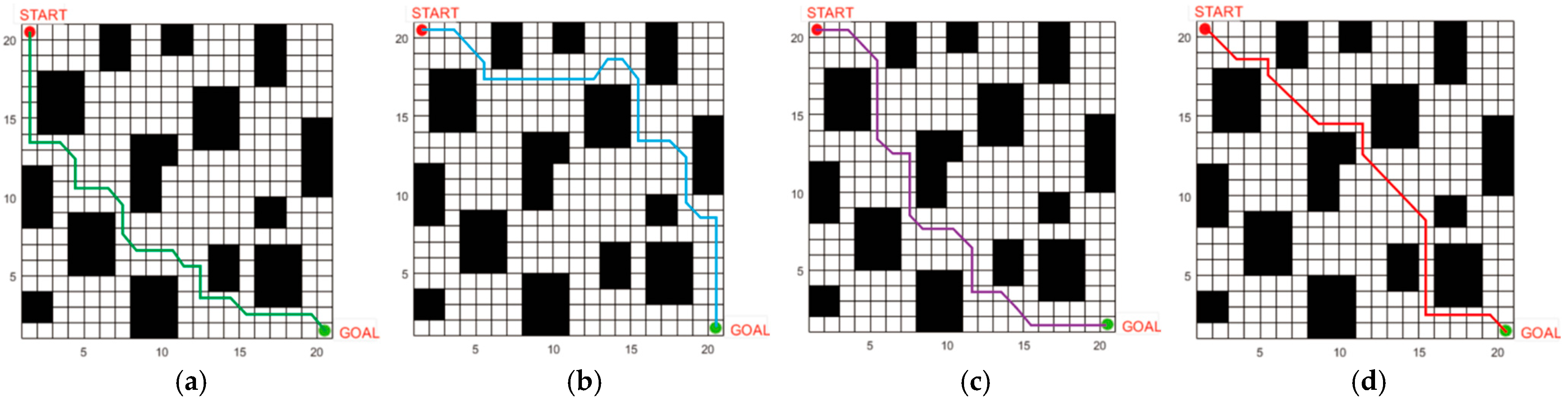

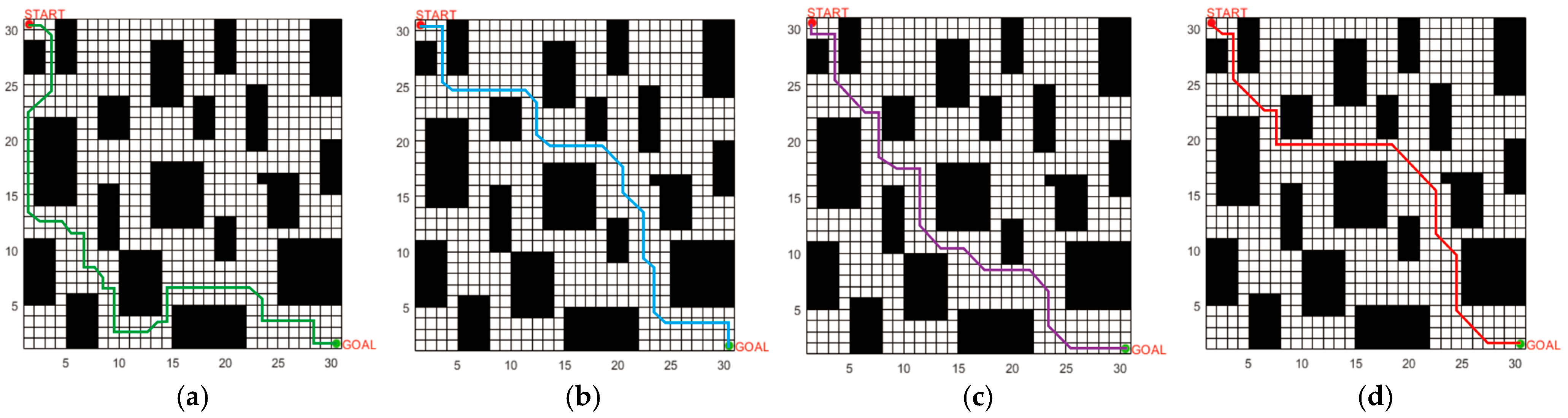

3.2. Convergence and Stability Analysis

Performance comparison experiments are conducted on the five algorithms, and each benchmark function is run independently 50 times. The evaluation metrics are the best value, average value, and standard deviation. The population size is set to 40, and the maximum number of iterations is 500. The experimental results are presented in Table 3.
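The evaluation protocol above can be sketched in Python as follows: independent runs, followed by the best value, average value, and standard deviation of the final results. The optimizer here is a deliberately simple random-search stand-in, not IABCBAS. Using the sample standard deviation (`statistics.stdev`) is an assumption, since the text does not say which estimator was used.

```python
import random
import statistics


def sphere(x):
    return sum(v * v for v in x)


def random_search(f, dim, lower, upper, pop_size, max_iter, rng):
    """Stand-in optimizer (plain random search), NOT the IABCBAS algorithm."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, f(x))
    return best


def summarize_runs(optimizer, f, runs=50, seed=0, **kwargs):
    """Run the optimizer independently `runs` times with different seeds."""
    results = [optimizer(f, rng=random.Random(seed + r), **kwargs) for r in range(runs)]
    return {
        "best": min(results),
        "mean": statistics.mean(results),
        "std": statistics.stdev(results),  # sample standard deviation (assumption)
    }
```

With the stated settings, a call would look like `summarize_runs(random_search, sphere, dim=30, lower=-100.0, upper=100.0, pop_size=40, max_iter=500)`. The dimension and bounds are placeholders, and the call takes a while in pure Python.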

Among these functions, high-dimensional unimodal functions have only one global optimal point and no local extrema, which are mainly used to test convergence speed. Multimodal functions, which have multiple local extrema, are used to evaluate the performance of escaping local extrema across different dimensions (both high and low). For the three high-dimensional unimodal functions, the proposed IABCBAS algorithm achieves the theoretical optimal value of 0 when solving f1 and f3, with the corresponding standard deviation also being 0. In solving f4, the optimal value, average value, and standard deviation of the proposed IABCBAS algorithm are at least three orders of magnitude better than those of the other four algorithms.

Among the three high-dimensional multimodal functions, WOA and SSA find the optimal values for two of them, and the proposed IABCBAS algorithm maintains superior test performance; on the remaining function, the proposed algorithm achieves much better values than the other four algorithms, reflecting improved search capability. For the three low-dimensional multimodal functions, our algorithm reaches the optimal value, demonstrating better optimization performance under low-dimensional conditions. In terms of stability, only ACO, SSA, and our algorithm find the optimal solution for one of the test functions, with stability increasing in that order. Our algorithm has the best stability for another function and ranks second only to WOA for a third. Across all algorithms, accuracy is higher on unimodal functions than on multimodal functions, and higher on low-dimensional functions than on high-dimensional functions. The proposed IABCBAS algorithm achieves superior performance in both accuracy and stability. Regarding running time, the different search mechanisms lead to significant differences among the algorithms. The average running times of the five algorithms are 3.994, 4.563, 5.597, 4.458, and 3.889 s, respectively. Because the proposed IABCBAS algorithm can generate multiple distinct solutions simultaneously, it accelerates numerical comparisons and reduces the average running time by about 2.6% relative to PSO, the fastest of the comparison algorithms (equivalently, PSO takes about 2.7% longer).
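The running-time comparison reduces to two ratios, and the choice of baseline matters. Measured against PSO, the reduction is (3.994 − 3.889)/3.994 ≈ 2.6%. The figure 2.7% is the same 0.105 s difference divided by the IABCBAS time, that is, how much longer PSO takes. The short Python check below uses only the times reported above; the helper name is illustrative.

```python
def relative_changes(baseline, ours):
    """Return (reduction relative to the baseline, extra time the baseline needs)."""
    diff = baseline - ours
    return diff / baseline, diff / ours


# Average running times (s) in the reported order; PSO is the fastest comparison
# algorithm (3.994 s) and IABCBAS is listed last (3.889 s).
times = [3.994, 4.563, 5.597, 4.458, 3.889]
pso, iabcbas = min(times[:-1]), times[-1]
reduction, pso_extra = relative_changes(pso, iabcbas)
print(f"IABCBAS needs {reduction:.1%} less time than PSO; PSO needs {pso_extra:.1%} more")
# prints: IABCBAS needs 2.6% less time than PSO; PSO needs 2.7% more
```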

The average convergence curves on the nine benchmark functions are plotted in Figure 4 to compare the convergence speed, accuracy, and ability to escape local optima of the different algorithms. The vertical axis represents the fitness value, and the horizontal axis denotes the iteration number.

Figure 4a–c demonstrate that the proposed IABCBAS algorithm converges faster on the unimodal functions, which is attributed to the chaotic initialization of the population, and that its convergence accuracy is significantly higher than that of the other algorithms. In both (a) and (c), the proposed algorithm keeps improving, whereas PSO, ACO, and WOA stagnate. On the high-dimensional multimodal functions, ACO, WOA, SSA, and our algorithm achieve the optimal value of 0 on two of the functions; because these algorithms converge early, their convergence curves are not shown. Notably, our algorithm requires significantly fewer iterations than the other three algorithms and exhibits superior convergence performance.

Figure 4d shows that the proposed IABCBAS algorithm stagnates at a local extreme point between the 200th and 300th generations, and after 300 generations a significant gap remains between its value and the optimal value. However, its curve fluctuates less and is more stable than those of the other four algorithms. Among the low-dimensional multimodal functions, two of the functions produce curves of the same type, so only the former is shown, in Figure 4f. All algorithms in Figure 4e,f converge to the optimal solution, but the proposed IABCBAS algorithm converges fastest.

3.3. Comparative Ablation Experiments

The proposed algorithm has been shown to exhibit superior global search capability and local optimization performance. However, additional experiments are required to verify (1) whether the selected improvement mechanisms are the best choices, (2) whether their combination is the best improvement for the proposed algorithm, and (3) whether the combination of the two algorithms generalizes. To make the case for the model improvements more persuasive, comparison algorithms were selected from the latest literature. The ABC algorithm is modified by introducing Bernoulli chaotic mapping, a reverse learning mechanism, and the krill herd optimization (KHO) algorithm, respectively [29,30,31], denoted as ACO1-ACO3; the BAS algorithm is combined with ABC, PSO, and AFSA [32,33,34], denoted as BAS1-BAS3. ACO1 and ACO2 are compared against the Tent chaotic population initialization and the local random direction selection mechanism, respectively, while ACO3 and BAS1-BAS3 are compared against the two algorithm-combination methods used in this work. The results are shown in Table 4.

Table 4 shows that, compared with the original ABC algorithm, each added mechanism improves the algorithm's optimization results. All numerical results of ACO1 are better than those of ACO2 on one high-dimensional function, indicating that enriching the population effectively improves global search efficiency on high-dimensional functions. The results of ACO2 are slightly better than those of ACO1 on a low-dimensional function, indicating that adding random directions increases local search stability on low-dimensional functions. Overall, each hybrid of two algorithms outperforms the variants with a single added mechanism. BAS3 and ACO3 perform better than BAS1 and BAS2, indicating that combination with a new swarm intelligence algorithm yields a wider search range and a stronger enhancement effect. Meanwhile, BAS1 is better overall than BAS2, indicating that combining the bee swarm with the beetle swarm also works well, which indirectly supports the choice of base algorithms. Moreover, compared with the fish swarm and krill swarm algorithms, the beetle swarm algorithm has a simpler structure, giving the IABCBAS algorithm a greater advantage in search and optimization capability. Taking the f1 function as an example, the average value of the IABCBAS algorithm is the lowest among the eight algorithms, indicating a better optimization effect on f1. In addition, only the IABCBAS algorithm has a standard deviation of 0, suggesting that the results of multiple runs are highly stable, with no significant differences between them.