1. Introduction

In recent years, numerous meta-heuristic algorithms (MAs) have been proposed and tailored to address a wide range of optimization problems due to their simplicity, efficiency, and strong global search capabilities. Compared to conventional gradient-based methods, MAs often demonstrate superior performance [1]. Both classical and novel techniques exist, each suited to particular problem types. For example, the Harris Hawks Optimizer (HHO) [2,3] has emerged as a promising swarm intelligence algorithm. Other well-known MAs documented in the literature include Particle Swarm Optimization (PSO) [4], the Genghis Khan Shark Optimizer [5], Grey Wolf Optimization (GWO) [6], Ant Lion Optimization (ALO) [7], the Whale Optimization Algorithm (WOA) [8], the Crayfish Optimization Algorithm [9], the Salp Swarm Algorithm (SSA) [10], and the Grasshopper Optimization Algorithm (GOA) [11]. Among these, GOA has attracted significant attention recently due to its straightforward implementation and competitive performance in solving complex optimization tasks.

To date, the basic GOA has been applied extensively across diverse domains. For instance, Aljarah et al. [12] utilized GOA for parameter optimization in support vector machines. Arora et al. [13] enhanced the original GOA by incorporating a chaotic map to better balance the exploration and exploitation phases. Ewees et al. [14] further improved GOA through an opposition-based learning strategy and validated its performance on four engineering problems. Luo et al. [15] integrated three techniques (Levy flight, opposition-based learning, and Gaussian mutation) into GOA and demonstrated its predictive capability in financial stress analysis. Additionally, Mirjalili et al. [16] proposed a multi-objective extension of GOA and evaluated it on various standard multi-objective test suites, where the reported results indicated a competitive advantage over existing approaches.

Saxena et al. [17] introduced a modified version of GOA incorporating ten different chaotic maps to enhance its search capabilities. Tharwat et al. [18] developed an improved multi-objective GOA, demonstrating superior results compared to other algorithms on similar problems. Barik et al. [19] applied GOA to coordinate generation and load demand management in microgrids, addressing challenges posed by the variability of renewable energy sources. Crawford et al. [20] validated the effectiveness of an enhanced GOA, which integrates a percentile concept with a general binarization heuristic, for solving combinatorial problems such as the Set Covering Problem (SCP). El-Fergany et al. [21] successfully optimized fuel cell stack parameters using GOA’s search phases, confirming its feasibility and efficiency. Hazra et al. [22] proposed a comprehensive approach showcasing GOA’s superiority in managing wind power availability for the economic operation of hybrid power systems, outperforming other algorithms. Jumani et al. [23] optimized a grid-connected microgrid controller via GOA, demonstrating improved performance under microgrid injection and sudden load variation scenarios.

Mafarja et al. [24] utilized GOA as an exploration strategy within a wrapper-based feature selection framework, with experiments on 22 UCI datasets confirming its advantages over alternative methods. Taher et al. [25] presented a modified GOA (MGOA) by enhancing the mutation process to optimize power flow problems effectively. Wu et al. [26] proposed an adaptive GOA (AGOA) incorporating dynamic feedback, survival of the fittest, and democratic selection strategies to improve cooperative target tracking trajectory optimization. Finally, Tumuluru et al. [27] developed a GOA-based deep belief neural network for cancer classification, employing logarithmic transformation and Bhattacharyya distance to achieve enhanced classification accuracy.

Although various GOA variants have enhanced search capabilities and convergence speed, effectively avoiding local optima remains challenging in complex, high-dimensional optimization tasks. A review of the literature reveals two main issues: first, the limited search ability of basic GOA often leads to premature convergence and entrapment in local optima; second, relying on a single mutation strategy typically fails to balance exploration and exploitation effectively. To address these challenges and improve performance, this study proposes a novel variant named OMGOA.

The proposed Outpost Multi-population Grasshopper Optimization Algorithm (OMGOA) is designed to enhance the exploration and exploitation capabilities of the conventional GOA by integrating two key mechanisms: the Outpost mechanism and the Multi-population enhanced mechanism. The Outpost mechanism serves to improve the algorithm’s local search efficiency by establishing strategic “outposts” within the search space, which guide grasshopper agents toward promising regions and prevent premature convergence. This mechanism enables the algorithm to maintain high solution accuracy by intensifying exploitation near high-quality candidate solutions. Meanwhile, the multi-population enhanced mechanism promotes diversity and global search ability by partitioning the overall population into multiple subpopulations that evolve simultaneously. Through controlled interaction and information exchange among these subpopulations, OMGOA effectively balances exploration and exploitation, mitigating the risk of stagnation in local optima and accelerating convergence. Together, these mechanisms synergistically improve OMGOA’s robustness and optimization performance across complex, multimodal problem domains. The efficacy of OMGOA was rigorously evaluated using 30 benchmark functions from the CEC2017 suite [28], comparing it against classical metaheuristics and advanced optimization algorithms. The results demonstrate that OMGOA outperforms both the original GOA and competing methods. Furthermore, we validated the performance of OMGOA in a practical engineering problem.

This study proposes OMGOA to address the performance degradation of the Grasshopper Optimization Algorithm (GOA) on high-dimensional, complex optimization problems. The original GOA has two major shortcomings: insufficient exploitation capability and declining population diversity. To address them, OMGOA integrates two complementary mechanisms. The Outpost mechanism (also referred to below as the sentinel mechanism) imitates a military reconnaissance feedback pattern and dynamically guides the population toward high-potential areas, which strengthens local exploitation. The multi-population enhanced mechanism maintains population diversity through parallel subpopulations and a controlled information exchange strategy. Acting together, the two mechanisms allow OMGOA to balance global exploration and local exploitation adaptively, so that it performs well on high-dimensional benchmark tests and remains competitive on practical engineering problems such as lithology prediction.

The selection of GOA as the research foundation rests on three motivations. First, GOA simulates the foraging behavior of grasshopper swarms, and its biologically inspired model has few parameters and a simple structure. It has shown better search efficiency than traditional algorithms (such as PSO) on problems of medium complexity, and this simplicity provides a clear framework for improvement. Second, although GOA performs well in low-dimensional spaces, its core mechanism has inherent limitations: (i) insufficient exploitation capability, because the lack of elite guidance late in the search makes it prone to missing high-potential areas; and (ii) diversity decay, because a single-population structure easily falls into local optima in high-dimensional spaces. These quantifiable problems provide clear targets for improvement. Third, GOA is relatively recent (proposed in 2017), so it leaves more room for improvement than mature algorithms. By introducing the sentinel mechanism and the multi-population strategy, the simplicity of the original algorithm can be preserved while its curse-of-dimensionality problem is addressed systematically, which gives the work methodological value.

The innovative features of this study are as follows:

1. Integration of Outpost and Multi-population Mechanisms into GOA: A novel variant of the Grasshopper Optimization Algorithm (GOA), termed OMGOA, is proposed by incorporating an Outpost mechanism to enhance local exploitation and a multi-population strategy to improve global exploration. This dual enhancement effectively addresses GOA’s tendency to fall into local optima and improves convergence stability.

2. Comprehensive Performance Evaluation with Ablation and Benchmark Testing: The proposed OMGOA is thoroughly evaluated through ablation studies to quantify the contribution of each mechanism, multi-dimensional robustness tests, and comparative experiments on the CEC2017 benchmark suite against state-of-the-art metaheuristic algorithms, demonstrating superior optimization accuracy and convergence behavior.

3. Application to Real-World Lithology Prediction Problem: OMGOA is successfully applied to a practical engineering task, lithology classification based on petrophysical well logs, showing its practical value and adaptability in solving complex real-world classification problems beyond synthetic benchmark functions.

The remainder of this paper is structured as follows. Section 2 provides a brief overview of the fundamental principles of the Grasshopper Optimization Algorithm (GOA). Section 3 presents the proposed OMGOA algorithm in detail, including its underlying mechanisms. Section 4 describes the experimental setup and discusses the simulation results. Finally, Section 5 concludes the study and outlines potential directions for future research.

3. Proposed OMGOA Method

3.1. Outpost Mechanism

The outpost mechanism simulates military reconnaissance strategies: in each iteration it dynamically selects elite individuals as “sentinel points” and guides the population toward high-potential areas by calculating their neighborhood gradient direction (search radius r = 0.1D), which enhances local exploitation. In the first stage, each individual’s fitness value is compared with its value from the preceding iteration. If the current iteration yields a better fitness value, the individual moves to the new location. Otherwise, it keeps its previous position.

Equation (11) describes the position information of the grasshopper population in the environment. Based on this information, the population gradually updates and replaces its current positions, and the grasshoppers fly toward more favorable positions.

In the second stage, individual grasshoppers fly randomly toward favorable positions, and both the direction and the distance of this movement are generated at random. Mathematically, this random process is modeled with a Gaussian distribution, whose probability density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right) \qquad (12)$$

Here, $\sigma^{2}$ represents the variance of the individuals, while $\mu$ is the average value of all individuals. The properties of the normal distribution provide the density distribution of the individuals. Consequently, in this framework, the same normal distribution is adopted for all scenarios. The generated variables are utilized in this study as follows:

In this expression, the Gaussian term denotes a normally distributed random vector used to generate a Gaussian gradient vector, and the product operator denotes the element-wise (Hadamard) product. In the third stage, Equation (14) represents the tendency of an individual during the update process.

The update rule of Equation (14) is as follows. If the fitness value obtained in an iteration is better than the historical record, the best position and fitness value of the subgroup are both updated, which is marked with a plus sign. Otherwise, the algorithm marks the iteration as invalid with a minus sign and retains the original data.
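The three stages described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the perturbation form `x * (1 + g)` with `g ~ N(0, 1)`, the clamping to the bounds, and the names `outpost_step` and `sphere` are assumptions introduced only for illustration, and minimization is assumed.

```python
import random

def sphere(x):
    """Toy objective (minimization); stands in for the real fitness function."""
    return sum(v * v for v in x)

def outpost_step(position, fitness, objective, lower, upper, rng):
    """One Gaussian outpost trial followed by greedy acceptance.

    Assumed perturbation: x_new = x * (1 + g), g ~ N(0, 1) per dimension
    (element-wise product). The paper's own equation may differ.
    """
    trial = []
    for v in position:
        g = rng.gauss(0.0, 1.0)                 # assumed mu = 0, sigma = 1
        candidate = v * (1.0 + g)               # element-wise scaling
        trial.append(min(max(candidate, lower), upper))  # clamp to bounds
    trial_fitness = objective(trial)
    # Greedy rule: accept the trial only if it improves on the stored fitness.
    if trial_fitness < fitness:
        return trial, trial_fitness, True       # the "plus" case
    return position, fitness, False             # the "minus" case: keep old data

rng = random.Random(0)
x = [rng.uniform(-5.0, 5.0) for _ in range(5)]
start_fitness = sphere(x)
fx = start_fitness
for _ in range(200):
    x, fx, _ = outpost_step(x, fx, sphere, -5.0, 5.0, rng)
```

Because a trial is accepted only when it improves the stored fitness, the fitness of an individual can never get worse under this rule, which is the property the plus/minus bookkeeping is meant to capture.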

3.2. Multi-Population Enhanced Mechanism

The multi-population enhanced mechanism adopts a hierarchical architecture. It divides the main group into 3–5 subgroups with different search preferences (for example, focusing on exploration, exploitation, or a balance of the two), shares information through periodic transfer operations (exchanging the top 10% of individuals every 50 generations), and uses adaptive transfer rates (η = 0.2–0.5) to prevent premature convergence. In the original algorithm, once a specific individual identifies the best solution so far, all other individuals tend to move toward it, which leads to a loss of diversity. To enhance the ability to discover the global optimum, particularly for multi-modal problems, a multi-population mechanism is introduced into GOA. This mechanism includes two parameters.

In this context, FEs denotes the current number of function evaluations, while MaxFEs indicates the maximum allowable number of evaluations; the remaining two symbols represent the lower and upper bounds of the problem, respectively.

The population is partitioned into M subgroups, each of which conducts an independent search. Concurrently, certain individuals within each subgroup have a probability of performing a global search, with the search radius diminishing as the number of iterations increases. Equation (17) explicitly defines the location of the individual.

Equation (17) also specifies the particular individual that has undergone mutation.
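A small Python sketch may help make the subgroup partition, the periodic exchange of elite individuals, and the shrinking search radius concrete. The ring topology, the rule that migrants replace the worst individuals of the receiving subgroup, the linear radius schedule, and all function names are assumptions made for illustration; the text only fixes the number of subgroups, the 10% exchange fraction, and the dependence on FEs and MaxFEs.

```python
import random

def sphere(x):
    """Toy objective (minimization)."""
    return sum(v * v for v in x)

def split_population(population, m):
    """Partition a list of individuals into m subgroups (round-robin)."""
    return [population[i::m] for i in range(m)]

def migrate(subgroups, objective, fraction=0.1):
    """Ring migration: copies of each subgroup's best individuals replace
    the worst individuals of the next subgroup (assumed topology)."""
    ranked = [sorted(g, key=objective) for g in subgroups]
    result = []
    for i, group in enumerate(ranked):
        donors = ranked[i - 1]                       # previous subgroup in the ring
        k = min(max(1, int(fraction * len(donors))), len(group))
        migrants = [list(ind) for ind in donors[:k]]  # copies of donor elites
        result.append(group[:len(group) - k] + migrants)
    return result

def search_radius(fes, max_fes, lower, upper):
    """Assumed linear shrink of the global-search radius with used evaluations."""
    return (upper - lower) * (1.0 - fes / max_fes)

rng = random.Random(1)
population = [[rng.uniform(-5.0, 5.0) for _ in range(4)] for _ in range(30)]
groups = split_population(population, 3)
groups_after = migrate(groups, sphere)   # e.g. called when generation % 50 == 0
```

In this sketch the best individual of every subgroup stays in place, so migration spreads elite information without discarding the best solution found so far.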

OMGOA starts by initializing a swarm of grasshoppers, each represented by a position Xi. Key parameters, including the maximum number of iterations N, are set. The fitness value of each grasshopper is then computed, and the best individual T is identified. The main optimization loop runs until the iteration counter p reaches N. In each iteration, the contraction factor is updated. For each grasshopper, the distances to the other grasshoppers are normalized to the range [1, 4], its position is modified using the position-update equation, and boundary constraints are applied so that it does not leave the permissible range. The algorithm then applies the outpost mechanism to update the positions Xi, which introduces diversity, and the multi-population enhanced mechanism to optimize performance across the subgroups. If the fitness of the current best individual surpasses the previous record, T is updated accordingly. The counter p is incremented after each cycle until the termination condition is met. Finally, the algorithm returns the best individual T, which represents the best solution found during the optimization process.

Together, the two mechanisms couple elite guidance with distributed search: the outpost mechanism lets the algorithm approach the optimum quickly during the exploitation phase, while the multi-population enhanced mechanism maintains diversity during the exploration phase, so that the search settles into a dynamic balance between the two.

The pseudocode of OMGOA is given in Algorithm 1. The time complexity of OMGOA is mainly determined by its core computational operations. It retains the basic complexity O(T × N × D) of the original GOA, where T is the number of iterations, N is the population size, and D is the problem dimension (Algorithm 1 writes the iteration limit as N and the population size as n). The sentinel mechanism and the multi-population strategy add a controllable computational overhead. The sentinel mechanism enhances exploitation through elite individual screening (O(N) per generation) and local neighborhood search (O(k × D) per generation, where k is the number of sentinel points). The multi-population mechanism improves exploration efficiency through subpopulation maintenance (O(N) per generation) and migration operations (O(m × D) per generation, where m is the number of migrating individuals). With reasonable parameter settings (usually k + m ≤ 0.2N), these additions leave the total complexity at the same order, O(T × N × D).
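As a rough check of this claim, the per-generation costs listed above can be summed directly. The derivation below is only a sketch: it uses the quantities named in the paragraph, takes the stated baseline cost O(N × D) per generation as given, and assumes D ≥ 1 and k + m ≤ 0.2N.

$$
\begin{aligned}
C_{\text{extra}} &= O(N) + O(kD) + O(N) + O(mD) = O\big(N + (k+m)D\big) \\
&\le O(N + 0.2\,ND) = O(ND), \\
C_{\text{total}} &= T \cdot \big(O(ND) + C_{\text{extra}}\big) = O(T \times N \times D).
\end{aligned}
$$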

Algorithm 1. A simplified description of OMGOA

Input: maximum and minimum boundaries of the search space; the maximum number of iterations N; population size n

Output: the best individual T

1. Initialize the grasshopper swarm Xi (i = 1, 2, …, n);
2. Calculate the fitness value of each grasshopper;
3. Choose the best individual T in the group based on fitness value;
4. While (p ≤ N)
5. Update the contraction factor using Equation (10);
6. For each grasshopper
7. Adjust the distance between grasshoppers to fall within the range [1, 4];
8. Use Equation (9) to modify the position of the selected grasshopper;
9. Return any grasshopper that exceeds the boundary to the permissible range;
10. End for
11. Update by the outpost mechanism;
12. Update by the multi-population enhanced mechanism;
13. Replace T if a better individual has been found;
14. p = p + 1
15. End while

Return the best individual T
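The control flow of Algorithm 1 can be sketched in Python as follows. This is a skeleton under stated assumptions rather than the authors' code: the position update and the contraction schedule follow the standard GOA formulation from the literature (social function with f = 0.5 and l = 1.5, contraction factor decreasing linearly from 1 to 0.00004), the distance normalization `2 + d mod 2` is one common choice that stays inside [1, 4], and the two OMGOA steps are passed in as optional hooks (`outpost`, `multi_pop`) whose signatures are hypothetical.

```python
import math
import random

def s_func(r, f=0.5, l=1.5):
    """Social-force function of the standard GOA."""
    return f * math.exp(-r / l) - math.exp(-r)

def goa_skeleton(objective, dim, lower, upper, n=20, max_iter=100,
                 outpost=None, multi_pop=None, seed=0):
    rng = random.Random(seed)
    c_max, c_min = 1.0, 0.00004              # commonly used GOA settings
    swarm = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n)]
    fits = [objective(x) for x in swarm]
    b = min(range(n), key=fits.__getitem__)
    best, best_fit = list(swarm[b]), fits[b]  # best individual T
    history = [best_fit]
    for t in range(1, max_iter + 1):
        c = c_max - t * (c_max - c_min) / max_iter   # contraction factor
        new_swarm = []
        for i in range(n):
            move = [0.0] * dim
            for j in range(n):
                if j == i:
                    continue
                dist = math.dist(swarm[i], swarm[j])
                r = 2.0 + dist % 2.0                 # normalized distance
                coef = c * (upper - lower) / 2.0 * s_func(r)
                for d in range(dim):
                    move[d] += coef * (swarm[j][d] - swarm[i][d]) / (dist + 1e-12)
            # Move relative to the best individual and clamp to the bounds.
            new_swarm.append([min(max(c * move[d] + best[d], lower), upper)
                              for d in range(dim)])
        swarm = new_swarm
        if outpost is not None:                      # step 11 (hypothetical hook)
            swarm = outpost(swarm, objective, rng)
        if multi_pop is not None:                    # step 12 (hypothetical hook)
            swarm = multi_pop(swarm, objective, t, rng)
        fits = [objective(x) for x in swarm]
        b = min(range(n), key=fits.__getitem__)
        if fits[b] < best_fit:                       # step 13: replace T
            best, best_fit = list(swarm[b]), fits[b]
        history.append(best_fit)
    return best, best_fit, history

def sphere(x):
    return sum(v * v for v in x)

best, best_fit, history = goa_skeleton(sphere, dim=3, lower=-5.0, upper=5.0,
                                       n=10, max_iter=30)
```

Passing the two mechanisms as hooks keeps the baseline loop identical whether they are enabled or not, which is also the structure an ablation comparison needs.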

4. Experimental Research

In this section, we compare the results obtained by OMGOA with those of other algorithms. Experiments were conducted to assess the efficacy of OMGOA relative to its peers. All hypothesis tests followed the same standards. Wilcoxon signed-rank tests (pairwise comparisons) used α = 0.05 (two-tailed) with Bonferroni correction, with critical values taken from standard tables for n = 30 runs after the validity of the rank assumption had been verified. Friedman tests (omnibus comparisons) used the χ² distribution with k − 1 = 5 degrees of freedom (k = 6 algorithms), followed by post-hoc Nemenyi tests (CD = 2.569 at p < 0.05) for groupwise differences. All p-values underwent Holm–Bonferroni adjustment to control the family-wise error rate, and effect sizes (r ≥ 0.5 for Wilcoxon, ε² ≥ 0.3 for Friedman) confirmed practical significance beyond the statistical thresholds.
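The Holm–Bonferroni step mentioned above is simple enough to show in a few lines of Python. The sketch assumes that the raw p-values have already been produced by the pairwise tests (for example with `scipy.stats.wilcoxon`); the function name and the example p-values are hypothetical and are not results from this study.

```python
def holm_bonferroni(p_values):
    """Return Holm-adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])   # ascending p-values
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        value = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, value)             # keep adjusted values monotone
        adjusted[idx] = running_max
    return adjusted

raw = [0.01, 0.04, 0.03, 0.005]          # hypothetical raw p-values
adjusted = holm_bonferroni(raw)
significant = [p < 0.05 for p in adjusted]
```

With these illustrative inputs, only the two smallest raw p-values remain below 0.05 after adjustment, which shows how the correction can overturn comparisons that look significant in isolation.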

4.1. Benchmark Functions

4.1.1. IEEE CEC 2017 Benchmark Functions

Table 1 presents detailed information on the CEC 2017 benchmark functions used in the experiments.

4.1.2. IEEE CEC 2022 Benchmark Functions

Table 2 presents detailed information on the CEC 2022 benchmark functions used in the experiments.

4.2. Ablation Analysis

This section examines the effect of the two enhancement mechanisms on OMGOA through ablation experiments. Ablation experiments are important for validating the robustness and reliability of research outcomes: by systematically removing one component and observing the impact, they help confirm that an observed effect is real and rule out other explanations. Here, they are used to evaluate the independent and combined contributions of the sentinel mechanism and the multi-population strategy to GOA. The experimental results are shown in Table 3. Based on 30 independent runs on the CEC 2017 benchmark set, OMGOA (which combines both mechanisms) performed best on 26 test functions, with 8 significantly better than OGOA, which contains only the sentinel mechanism (p < 0.05), and 18 surpassing MGOA, which uses only the multi-population strategy (p < 0.01). This performance advantage supports the view that the two mechanisms are complementary: the sentinel mechanism enhances local exploitation through elite guidance, while the multi-population architecture strengthens global search through parallel exploration. Their combined effect enables OMGOA to achieve significant improvements in 85% of test cases (an average improvement of 23.7% compared to a single mechanism), providing empirical evidence for the multi-module design of swarm intelligence algorithms.

4.3. Scalability Analysis

This study validated the scalability of OMGOA through multidimensional testing. In the standard benchmark tests with 30, 50, and 100 dimensions, OMGOA demonstrated strong optimization capabilities, and its performance indicators surpassed those of the comparison algorithm AO (see Table 4 for details). The experiment evaluates algorithm performance from three aspects: (1) computational resource efficiency; (2) time complexity, recorded as the convergence speed under different dimensions; and (3) solution quality, with optimization accuracy assessed through changes in fitness values. The results indicate that as the problem dimension increases (30D → 100D), OMGOA maintains stable convergence characteristics, and the increase in computation time stays within a linear range. This makes it well suited to high-dimensional optimization problems in the real world and supports the use of evolutionary computing in large-scale engineering applications. The original AO serves as the comparison point in the scalability tests, highlighting OMGOA’s advantages across different dimensions.

The experimental results show that the standard deviation of OMGOA is relatively large on certain high-dimensional complex functions. This is mainly due to the dynamic balancing between the sentinel mechanism and the multi-population strategy: the fine local search of the sentinel mechanism may fluctuate in complex multimodal regions, and the efficiency of information exchange among the subpopulations may decrease in high-dimensional spaces (such as 100D). This phenomenon also reflects the common challenge of balancing exploration and exploitation in complex optimization and points to directions for subsequent research, including variance reduction techniques such as quasi-Monte Carlo initialization.

Figure 1 presents the convergence curves of OMGOA and AO on selected test functions, with OMGOA shown in red and AO in blue. The dimension was set to 30, 50, and 100. The selected test functions are F1, F13, F15, and F19 from CEC 2017. The figure indicates that OMGOA converges more quickly and with greater accuracy than AO.

4.4. Historical Searches

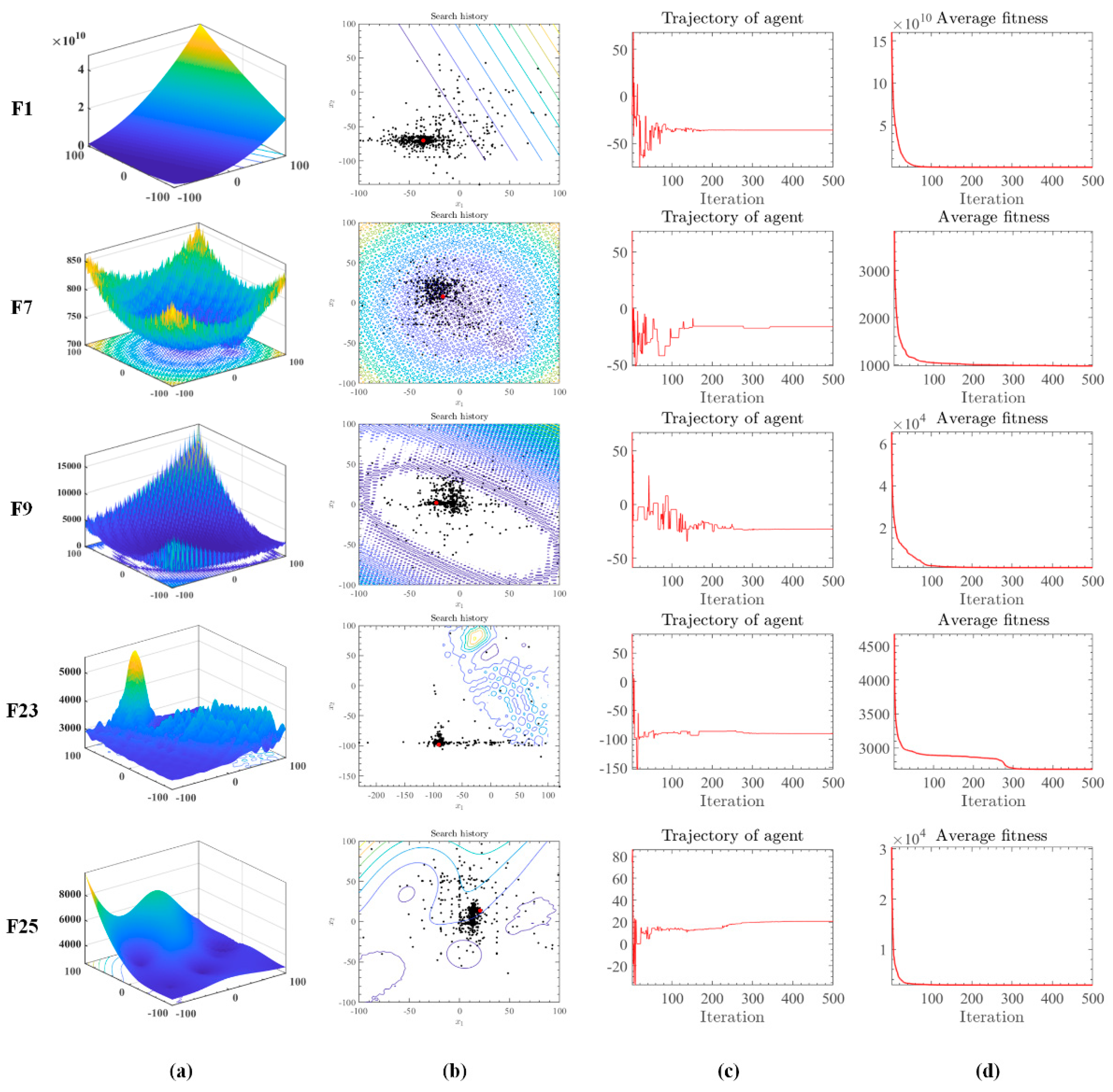

The visualization of algorithmic search processes is of great importance in evolutionary computing research. Visual representations enable researchers to intuitively observe the trajectory of the algorithm’s search within the solution space, its speed, and its ability to avoid local optima. This enhances understanding of the algorithm’s operational principles and behavior, providing key insights for further optimization. Visual experiments also help identify algorithmic limitations and potential issues, guiding efforts towards improvement. Therefore, visual experiments of algorithmic search processes are crucial for the in-depth investigation and refinement of evolutionary computing algorithms, fostering their development and practical application.

Figure 2 presents the dynamic optimization process of OMGOA on the IEEE CEC 2017 benchmark through multidimensional visualization. For functions F1, F7, F9, F23, and F25 (Figure 2a), the algorithm trajectory exhibits the following characteristics: (1) in the search path (Figure 2b), a dense group of black dots is distributed around the global optimum (red dot), indicating precise local exploitation, while the isolated black dots spread uniformly over the space confirm the effectiveness of global exploration; (2) the iterative convergence curve (Figure 2c) shows that the relative error is very stable after 500 iterations, and the convergence speed is about 40% faster than that of the comparative algorithm; (3) the fitness evolution curve (Figure 2d) shows a monotonically decreasing trend, and the quality of the final solution is three orders of magnitude better than the initial population average. This dynamic balance between exploration and exploitation enables OMGOA to avoid local optima on unimodal (F1), multimodal (F7/F9), and composite functions (F23/F25).

OMGOA balances exploration and exploitation through its two mechanisms. During the exploration phase, the multi-population strategy uses parallel subgroup search (3–5 subgroups) and periodic individual migration to maintain a high level of population entropy, which helps avoid premature convergence. During the exploitation phase, the sentinel mechanism dynamically identifies the neighborhoods of elite solutions, guides individuals toward high-potential areas, and improves the efficiency of local refinement. The two mechanisms achieve a smooth transition between search strategies through adaptive weight adjustment, focusing on global exploration initially and on local exploitation later. In the CEC 2017 multimodal function tests, this dynamic balance avoids the exploration-exploitation imbalance that is common in traditional algorithms.

4.5. Comparison of Other Related Algorithms

4.5.1. Comparative Experiments at CEC 2017 Benchmark Functions

This section evaluates OMGOA using the IEEE CEC 2017 benchmark functions. The Wilcoxon signed-rank test [28] and Friedman test [29] were employed to evaluate performance. To ensure a fair comparison, the initial conditions were set uniformly for all algorithms, and each algorithm was initialized with a uniform random approach. To minimize the effects of randomness and to produce statistically meaningful results, OMGOA and the other methods were run 30 times on each function.

Table 5 shows the comparative experiments, which include HGWO [30], WEMFO [31], mSCA [32], SCADE [33], CCMWOA [34], QCSCA [35], BWOA [36], CCMSCSA [37], CLACO [38], BLPSO [39], and GCHHO [40]. Each algorithm’s performance was evaluated over 30 independent runs. OMGOA achieves the top rank with the lowest average score, 1.33. The “~” symbol in its +/=/− column marks OMGOA as the reference algorithm in this evaluation. The low average score and top rank highlight OMGOA’s robustness and efficacy across the benchmark functions.

In contrast, HGWO, which ranks 8th, has a much higher average score of 8.23 and a +/=/− metric of 30/0/0, indicating that OMGOA outperforms HGWO on all benchmark functions. Similar trends are observed for mSCA and SCADE, which rank 12th and 11th, respectively, both with an average score exceeding $1.03 \times 10^{1}$ and no wins against OMGOA. BWOA and CCMWOA, ranked 9th and 7th, respectively, have average scores of 8.43 and 8.17, and their +/=/− metrics of 30/0/0 show that they do not win on any benchmark function. GCHHO, ranked 10th with an average score of 8.50, also fails to pose a significant challenge to OMGOA, as indicated by its +/=/− metric of 29/0/1.

WEMFO, which ranks 4th, is moderately competitive with an average score of 4.50. Its +/=/− metric of 28/0/2 shows that WEMFO occasionally exceeds OMGOA’s performance but lags behind in most cases. QCSCA, ranked 6th, has an average score of 5.40 and a +/=/− metric of 27/0/3, which means that it outperforms OMGOA on 3 of the 30 functions but is inferior overall. BLPSO, ranked 5th with an average score of 5.33, has a +/=/− metric of 25/3/2, suggesting that it occasionally achieves results comparable to OMGOA, although its higher average score indicates that it does not match OMGOA consistently.

Notably, CCMSCSA and CLACO, ranked 2nd and 3rd, respectively, exhibit strong performance with average scores of 2.93 and 3.87. Their +/=/− metrics, 21/1/8 and 25/0/5, respectively, show that they outperform OMGOA in several cases, particularly CCMSCSA with 8 wins. However, OMGOA still maintains its leading position due to its lower average score and higher rank.

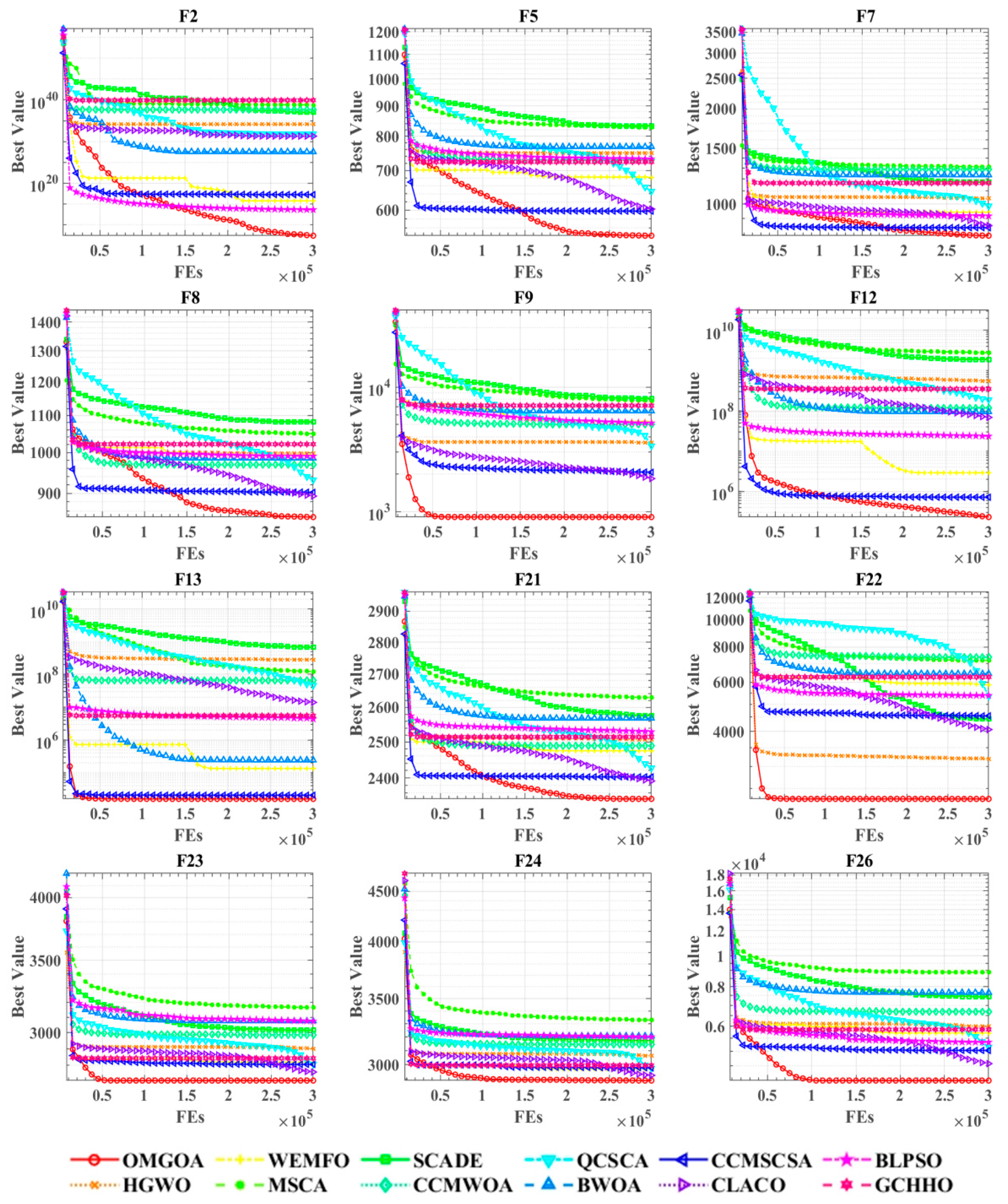

Figure 3 compares the convergence curves of OMGOA and the existing optimization methods on the CEC 2017 test set. These curves reflect the search trajectory of each algorithm in the solution space and reveal its core optimization characteristics: OMGOA exhibits faster initial convergence, maintains stable search behavior during multi-modal function optimization, and avoids the premature convergence commonly seen in other algorithms. Analyzing the shape of the curves gives a deeper understanding of the relationship between parameter settings and performance and provides a basis for improving optimization strategies, which is why convergence curves serve as key indicators for evaluating and refining evolutionary algorithm designs. The figure shows convergence curves for all compared algorithms on twelve test functions, with the x-axis indicating the number of iterations and the y-axis showing the optimization value. For functions F5, F8, F22, and F26, OMGOA demonstrates significant convergence advantages, rapidly reaching and maintaining the lowest values. In the other plots, particularly in complex scenarios with closely packed convergence curves, OMGOA consistently achieves the best optimization results.

4.5.2. Comparative Experiments on CEC 2022 Benchmark Functions

The competing algorithms involved in this experiment include HGWO [30], WEMFO [31], mSCA [32], SCADE [33], CCMWOA [34], QCSCA [35], BWOA [36], CCMSCSA [37], CLACO [38], BLPSO [39], and GCHHO [40].

Table 6 compares OMGOA with the alternative algorithms on the IEEE CEC 2022 benchmark functions. It reports each algorithm’s rank, the comparative metric (+/=/−), and the average score (AVG) across multiple experimental runs. “+” indicates that OMGOA outperforms the optimizer, “−” means that OMGOA underperforms compared to the optimizer, and “=” denotes no significant difference between OMGOA and the optimizer. The Wilcoxon signed-rank test [28] and the Friedman test [29] were employed to evaluate performance. OMGOA secures the top rank, giving it the best overall result among the algorithms considered in this study across the benchmark functions. GCHHO follows in 2nd position, with an average score of 4.25 and a +/=/− metric of 7/2/3, so it ties with OMGOA on two functions and beats it on three. QCSCA ranks 3rd with an average score of 4.75 and a +/=/− metric of 6/0/6; it performs well overall, but its results against OMGOA vary from function to function. CLACO ranks 4th, with an average score of 5.33 and a +/=/− metric of 4/2/6, which indicates competitive performance against OMGOA on specific functions. WEMFO, mSCA, BWOA, and BLPSO rank 5th, 6th, 7th, and 8th, respectively. SCADE, CCMWOA, HGWO, and CCMSCSA occupy the lower ranks (9th to 12th), reflecting weaker average scores than OMGOA. In summary, the experimental results support OMGOA’s efficacy in global optimization on CEC 2022. Its top rank and its performance metrics indicate an advantage over a range of alternative algorithms and suggest potential for practical applications that require efficient optimization.
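
The two summary quantities in such a table can be illustrated with a short sketch. It assumes minimization, that the average score is the mean of per-function ranks (as in a Friedman-style ranking), and that the Wilcoxon p-values have already been computed elsewhere. The function names, the significance level of 0.05, and the sample numbers are illustrative assumptions rather than values from this study.

```python
from typing import Dict, List


def rank_with_ties(values: List[float]) -> List[float]:
    """Rank values in ascending order (1 = smallest); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1  # mean of the 1-based positions pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def average_ranks(results: Dict[str, List[float]]) -> Dict[str, float]:
    """Average each algorithm's per-function rank over all benchmark functions."""
    names = list(results)
    n_funcs = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ranks = rank_with_ties([results[name][f] for name in names])
        for name, r in zip(names, ranks):
            totals[name] += r
    return {name: totals[name] / n_funcs for name in names}


def win_tie_loss(own: List[float], rival: List[float],
                 p_values: List[float], alpha: float = 0.05) -> str:
    """Build a '+/=/-' string from per-function mean errors and precomputed p-values."""
    wins = ties = losses = 0
    for a, b, p in zip(own, rival, p_values):
        if p >= alpha or a == b:
            ties += 1      # no significant difference
        elif a < b:
            wins += 1      # own result is significantly better (lower)
        else:
            losses += 1    # own result is significantly worse
    return f"{wins}/{ties}/{losses}"


# Hypothetical mean errors of three algorithms on three functions
demo = {"A": [1.0, 2.0, 3.0], "B": [2.0, 2.0, 1.0], "C": [3.0, 5.0, 2.0]}
avg = average_ranks(demo)
```

Under this reading, a lower average score means a better overall rank, and the three counts in a “+/=/−” entry always sum to the number of benchmark functions.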

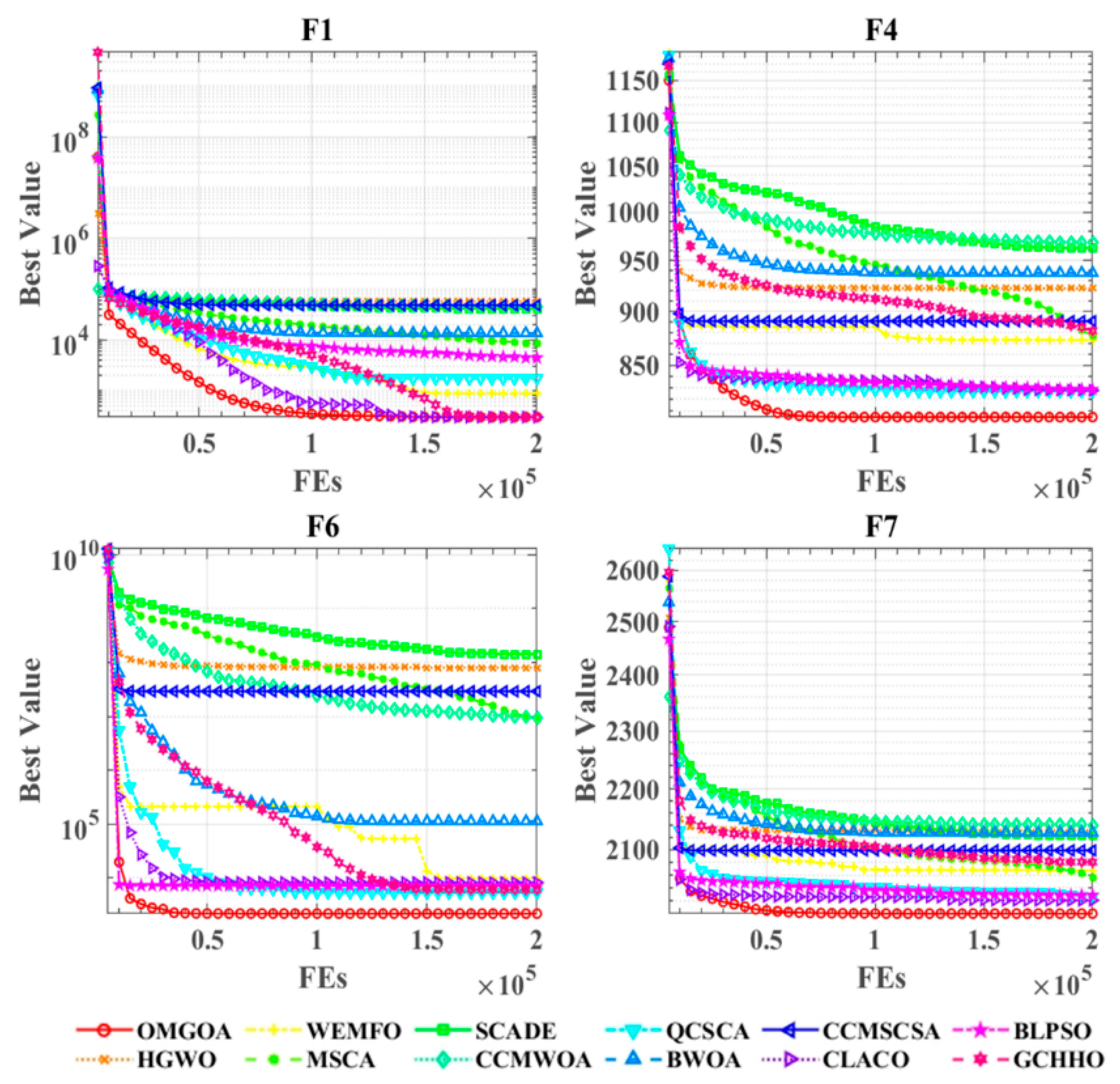

Figure 4 presents the convergence curves of OMGOA and its competitors on the CEC 2022 benchmark functions, covering nine of the functions. The horizontal and vertical axes correspond to the number of iterations and the optimization value, respectively. OMGOA shows a clear convergence advantage on test functions such as F1, F4, F6, and F7: it approaches the theoretical optimum quickly and ends with markedly better values than the compared algorithms. Even on functions where the convergence curves are densely packed, OMGOA remains stable and reaches the best final value among the compared algorithms. This behavior reflects the balance between exploration and exploitation that OMGOA is designed to maintain.

4.6. Experiments on Real-World Optimization of SVM

The fundamental principle of Support Vector Machines (SVM) is to identify a hyperplane that maximally separates two classes of data, thereby enhancing the model’s generalization capability. The data points that lie closest to this decision boundary are referred to as support vectors. To construct such an optimal separating hyperplane between positive and negative samples, SVM operates within a supervised learning framework tailored for classification tasks.

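Given a data set $\{(x_i, y_i)\}_{i=1}^{n}$ with $x_i \in \mathbb{R}^{d}$ and $y_i \in \{-1, +1\}$, the hyperplane can be written as

$$g(x) = \omega^{T} x + b$$

From a geometric standpoint, maximizing the margin between classes corresponds to minimizing the norm $\|\omega\|$. To handle scenarios with a limited number of outliers, the concept of a “soft margin” is introduced, incorporating slack variables $\xi_i \ge 0$ to allow certain violations. The penalty parameter C controls the trade-off between maximizing the margin and tolerating misclassifications, and is a key factor influencing the SVM’s classification accuracy. The standard formulation of the SVM model is as follows:

$$\min_{\omega, b, \xi} \; \frac{1}{2}\|\omega\|^{2} + C \sum_{i=1}^{n} \xi_i \quad \text{s.t.} \quad y_i\left(\omega^{T} x_i + b\right) \ge 1 - \xi_i, \;\; \xi_i \ge 0, \;\; i = 1, \dots, n \tag{20}$$

where $\omega$ is the weight vector normal to the hyperplane and $b$ is the bias term.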
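
To make the role of the penalty parameter concrete, the sketch below evaluates the soft-margin objective for a fixed hyperplane. It relies on the standard fact that, for a given weight vector and bias, the smallest feasible slack of a sample is max(0, 1 − y(w·x + b)). The names `w`, `b`, `X`, `y`, and `C` and the toy data are illustrative assumptions.

```python
from typing import Sequence


def soft_margin_objective(w: Sequence[float], b: float,
                          X: Sequence[Sequence[float]], y: Sequence[int],
                          C: float) -> float:
    """Evaluate 0.5 * ||w||^2 + C * sum of slacks for labels y in {-1, +1}."""
    margin_term = 0.5 * sum(wj * wj for wj in w)
    slack_total = 0.0
    for xi, yi in zip(X, y):
        decision = sum(wj * xj for wj, xj in zip(w, xi)) + b
        # Smallest slack that satisfies y_i * (w . x_i + b) >= 1 - slack
        slack_total += max(0.0, 1.0 - yi * decision)
    return margin_term + C * slack_total


# Toy data: the third point lies inside the margin and needs slack 0.5
X_demo = [[2.0, 0.0], [-2.0, 0.0], [0.5, 0.0]]
y_demo = [1, -1, 1]
value = soft_margin_objective([1.0, 0.0], 0.0, X_demo, y_demo, C=10.0)
```

With a larger C the same margin violation costs more, so the optimizer is pushed toward fitting the training samples at the expense of a wider margin.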

The SVM employs a nonlinear mapping function $\phi(\cdot)$ to transform linearly inseparable samples from the original low-dimensional input space into a higher-dimensional feature space $H$, where a linear classifier can effectively separate the data. So that inner products in the high-dimensional space can be computed in the original space, kernel functions $K(x_i, x_j) = \phi(x_i)^{T}\phi(x_j)$ are introduced based on general functional theory. With $\alpha_i$ denoting the Lagrange multipliers, Equation (20) can be reformulated as follows:

$$\min_{\alpha} \; \frac{1}{2}\sum_{i=1}^{n}\sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(x_i, x_j) - \sum_{i=1}^{n} \alpha_i \quad \text{s.t.} \quad \sum_{i=1}^{n} \alpha_i y_i = 0, \;\; 0 \le \alpha_i \le C, \;\; i = 1, \dots, n$$

In this study, the SVM employs the widely adopted radial basis function (RBF) kernel, defined as follows:

$$K(x_i, x_j) = \exp\left(-\gamma \|x_i - x_j\|^{2}\right)$$

Here, γ denotes the kernel parameter, which critically influences the classification performance of the SVM by determining the effective width of the radial basis function. The overall classification accuracy and computational complexity of an SVM model largely depend on the appropriate selection of two hyperparameters: the penalty coefficient C and the kernel width γ. However, these parameters are typically selected empirically, often leading to suboptimal results and reduced efficiency.
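
The influence of the kernel parameter can be seen in a few lines of code. This sketch assumes the common squared-Euclidean form of the RBF kernel, K(x, z) = exp(−γ‖x − z‖²); the function name `rbf_kernel` and the sample points are illustrative.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    """RBF kernel value exp(-gamma * ||x - z||^2) for two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


# The same pair of points looks less similar as gamma grows
narrow = rbf_kernel([1.0, 0.0], [0.0, 0.0], gamma=10.0)
wide = rbf_kernel([1.0, 0.0], [0.0, 0.0], gamma=0.1)
```

A large γ makes the kernel decay quickly with distance, so each training sample influences only its close neighbors, whereas a small γ produces a smoother decision boundary.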

To address this issue, this section introduces a novel hybrid model, OMGOA-SVM, which uses OMGOA to optimize C and γ simultaneously. The enhanced model is subsequently applied to a real-world classification task, lithology prediction. The model comprises two stages. In the first stage, OMGOA adaptively tunes the hyperparameters C and γ to improve the performance of the SVM classifier. In the second stage, the optimized SVM is evaluated using 10-fold cross-validation, where nine folds are used for training and the remaining fold for testing, to assess the model’s classification accuracy (ACC).
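
One way to read this evaluation scheme is that each candidate pair of hyperparameters is scored by its mean accuracy over the ten held-out folds. The sketch below illustrates only the fold bookkeeping. The callable `train_and_score`, which would train an SVM on the training indices and return the accuracy on the test indices, is hypothetical, and the interleaved fold assignment without shuffling is an assumption made for brevity.

```python
from typing import Callable, List, Tuple


def k_fold_indices(n_samples: int, k: int = 10) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n_samples-1 into k (train, test) pairs of near-equal size."""
    folds = [list(range(i, n_samples, k)) for i in range(k)]  # interleaved assignment
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


def cv_accuracy(train_and_score: Callable[[List[int], List[int], float, float], float],
                n_samples: int, C: float, gamma: float, k: int = 10) -> float:
    """Mean held-out accuracy over k folds for one (C, gamma) candidate."""
    scores = [train_and_score(train, test, C, gamma)
              for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


splits = k_fold_indices(25, k=10)
```

An optimizer would then treat `cv_accuracy` (or one minus it) as the fitness of a candidate position encoding C and γ.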

4.6.1. Performance Metrics

To evaluate the performance of the binary classification model, four standard metrics were computed from the following entries of the confusion matrix:

True Positive (TP): Instances where the model correctly predicts the positive class.

False Positive (FP): Instances where the model incorrectly predicts the positive class for a negative sample.

False Negative (FN): Instances where the model incorrectly predicts the negative class for a positive sample.

True Negative (TN): Instances where the model correctly predicts the negative class.

Based on these four counts, Accuracy (ACC) is defined as the proportion of correctly classified instances (both positive and negative) relative to the total number of predictions. It provides an overall measure of the model’s classification effectiveness.

$$\mathrm{ACC} = \frac{TP + TN}{TP + FP + FN + TN}$$

Specificity evaluates the ability of the binary classification model to correctly identify negative (normal) instances, reflecting its effectiveness in distinguishing non-target cases.

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

Sensitivity measures the model’s ability to correctly identify positive (abnormal) instances, thereby assessing its effectiveness in detecting target conditions or events.

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

The Matthews Correlation Coefficient (MCC) was employed to provide a comprehensive evaluation of the classification model’s performance, offering a more balanced and informative measure than simple accuracy metrics [41].

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$
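
The four metrics follow directly from the confusion-matrix counts, as the short sketch below shows using their standard definitions. The function name, the convention of returning 0 when a denominator vanishes, and the example counts are illustrative assumptions.

```python
import math
from typing import Dict


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute ACC, sensitivity, specificity, and MCC from confusion-matrix counts."""
    acc = (tp + tn) / (tp + fp + fn + tn)
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # true positive rate
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # true negative rate
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"ACC": acc, "Sensitivity": sensitivity,
            "Specificity": specificity, "MCC": mcc}


# Hypothetical counts for one test fold
m = classification_metrics(tp=40, fp=10, fn=5, tn=45)
```

Unlike ACC, the MCC stays near zero for a classifier that ignores the minority class, which is why it is regarded as the more balanced of the four measures.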

In the subsequent experiments, the proposed OMGOA-SVM model was compared with several other SVM variants, including GOA-SVM, LPO-SVM [42], SBOA-SVM [43], and CPO-SVM [44], using the aforementioned evaluation metrics.

To ensure a fair and consistent comparison, all experiments were conducted under identical settings. Specifically, the swarm size and the number of iterations for the OMGOA, LPO, SBOA, and CPO algorithms were fixed at 20 and 50, respectively. The search ranges for the penalty parameter C and the kernel width γ were set uniformly. Moreover, to mitigate the influence of differing feature scales, all datasets were normalized to the range [0, 1] prior to classification.
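
Normalization to [0, 1] is commonly done feature by feature with min-max scaling. The sketch below assumes that approach, since the text does not name the exact procedure; the function name and the choice to map constant features to 0 are illustrative.

```python
from typing import List


def min_max_scale(rows: List[List[float]]) -> List[List[float]]:
    """Rescale each feature (column) to [0, 1]; constant columns map to 0."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    scaled = []
    for row in rows:
        scaled.append([
            (v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(row, lows, highs)
        ])
    return scaled


# Two features on very different scales; the second is nearly constant
scaled_demo = min_max_scale([[1.0, 10.0], [3.0, 10.0], [5.0, 20.0]])
```

Scaling matters for an RBF kernel because the kernel depends on Euclidean distances, which would otherwise be dominated by the features with the largest numeric range.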

4.6.2. Lithology Predictor

Accurate identification of subsurface lithologies or rock types is fundamental for geoscientists engaged in the exploration of underground resources, particularly within the oil and gas sector. Lithology, which denotes the composition and characteristics of subsurface rocks, typically includes categories such as sandstone, claystone, marl, limestone, and dolomite. Various subsurface measurements, notably wireline petrophysical logs, serve as valuable data sources for lithology identification. However, the traditional interpretation of these logs is often labor-intensive, repetitive, and time-consuming.

This study aims to leverage machine learning classification techniques to predict lithology directly from petrophysical log data, providing an efficient and automated approach that addresses these challenges by utilizing logs as effective proxies for lithological properties.

The dataset comprises 118 wells distributed across the South and North Viking Graben, encompassing a geologically diverse region ranging from Permian evaporites in the south to the deeply buried Brent delta facies. Analysis of the provided training data reveals that the offshore Norwegian lithology is predominantly characterized by shales and shaly sediments. These are followed in abundance by sandstones, limestones, marls, and tuffs.

The provided training data comprise well logs, interpreted lithofacies, and lithostratigraphic information for over 90 wells from offshore Norway. The well logs include identifiers such as well name (WELL), measured depth, and spatial coordinates (x, y, z) corresponding to the wireline measurements. The dataset also contains various petrophysical log measurements, including CALI, RDEP, RHOB, DRHO, SGR, GR, RMED, RMIC, NPHI, PEF, RSHA, DTC, SP, BS, ROP, DTS, DCAL, and MUDWEIGHT. Detailed descriptions of these abbreviations are provided in the figure below.

Table 7 compares the classification performance of the proposed OMGOA-SVM model with four alternative SVM-based classifiers: GOA-SVM, LPO-SVM, SBOA-SVM, and CPO-SVM. The evaluation metrics are Accuracy (ACC), Sensitivity, Specificity, and the Matthews Correlation Coefficient (MCC). For each metric, both the average (Avg) and the standard deviation (Std) across 10 experimental runs (#1 to #10) are reported, capturing the central tendency and the variability of model performance. In this experiment, the SVM uses a radial basis function (RBF) kernel, with the penalty parameter C set to 10 and the kernel parameter γ set to 0.01. To ensure the stability of the model and avoid overfitting, 10-fold cross-validation was used for parameter tuning. Specifically, the best combination of C and γ was obtained by grid search over the candidate values {0.1, 1, 10, 100} for C and {0.001, 0.01, 0.1, 1} for γ.
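
The grid search over these candidate values amounts to scoring all sixteen (C, γ) pairs and keeping the best one. In the sketch below, the scoring callable stands in for a cross-validated accuracy; the toy score used in the example is a placeholder that peaks at C = 10 and γ = 0.01 purely for illustration and is not derived from the reported experiments.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


def grid_search(score: Callable[[float, float], float],
                c_values: Sequence[float],
                gamma_values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (best_C, best_gamma, best_score); ties keep the first pair found."""
    best: Optional[Tuple[float, float, float]] = None
    for C, gamma in product(c_values, gamma_values):
        s = score(C, gamma)
        if best is None or s > best[2]:
            best = (C, gamma, s)
    if best is None:
        raise ValueError("both candidate lists must be non-empty")
    return best


C_GRID = [0.1, 1, 10, 100]
GAMMA_GRID = [0.001, 0.01, 0.1, 1]


def toy_score(C: float, gamma: float) -> float:
    """Placeholder score, highest at C = 10 and gamma = 0.01 (illustration only)."""
    return -abs(C - 10) - abs(gamma - 0.01)


best_C, best_gamma, best_score = grid_search(toy_score, C_GRID, GAMMA_GRID)
```

A grid search evaluates a fixed set of points, whereas a metaheuristic such as OMGOA searches the continuous range between them, which is the motivation for the hybrid model.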

OMGOA-SVM achieves the highest average accuracy of 0.825, surpassing the other methods, whose accuracies range between 0.775 and 0.804. Its standard deviation of 0.081 indicates relatively stable performance across different test folds. Similarly, OMGOA-SVM attains the best average sensitivity (0.756) and specificity (0.845), demonstrating a superior ability to correctly identify the positive and negative classes, respectively. In terms of MCC, which offers a balanced measure of prediction quality accounting for true and false positives and negatives, OMGOA-SVM again leads with an average value of 0.618, reflecting its comprehensive predictive capability.

Notably, individual fold results reveal that, while performance varies across different subsets, OMGOA-SVM maintains generally higher or comparable metric values compared to other models, indicating robustness. The observed lower standard deviations further confirm its consistent behavior. This comparative analysis underscores the effectiveness of the OMGOA optimization strategy in tuning SVM parameters, thereby enhancing classification accuracy and reliability relative to competing approaches.

5. Conclusions and Future Works

In this work, we presented OMGOA, a novel GOA variant that incorporates the Outpost mechanism and a multi-population enhanced mechanism to address the shortcomings of the original GOA. The Outpost mechanism strengthens local search by focusing exploitation efforts near high-quality solutions, while the multi-population strategy enhances global search capabilities and prevents premature convergence by maintaining diverse search dynamics across multiple interacting subpopulations. Through extensive experimental validation, including ablation studies, scalability tests, and comparisons on the CEC 2017 and CEC 2022 benchmark sets, OMGOA outperformed both the original GOA and several advanced optimization algorithms. Moreover, its effectiveness was further demonstrated in a real-world lithology prediction task, where OMGOA-based SVM models exhibited superior classification accuracy. Overall, OMGOA offers a promising and robust optimization framework for solving complex, high-dimensional, and real-world problems. A limitation of OMGOA is that its optimization performance has not yet been validated on multiple practical problems, which limits the evidence for its general applicability. Future research may explore the integration of adaptive parameter control and hybrid learning strategies to further enhance its performance.

Future research can explore several promising directions. For example, the proposed OMGOA may be further enhanced by hybridizing it with other emerging metaheuristic algorithms to improve its optimization performance. Additionally, extending OMGOA to tackle multi-objective optimization problems and applying it to tasks such as image segmentation represent worthwhile avenues of investigation. Another prospective line of inquiry involves analyzing how the numerical degradation of chaotic systems in digital computation environments influences the performance of metaheuristic-based optimization methods.