WGGLFA: Wavelet-Guided Global–Local Feature Aggregation Network for Facial Expression Recognition

Abstract

1. Introduction

- We propose a wavelet-guided global–local feature aggregation network (WGGLFA), which integrates multi-scale feature extraction, local region feature aggregation, and key region guidance. We utilize the spatial frequency locality of the wavelet transform to achieve high-/low-frequency feature separation, enhancing fine-grained representation.

- The scale-aware expansion module is designed to enhance the ability to capture multi-scale details of facial expressions by combining wavelet transform with dilated convolution.

- The structured local feature aggregation module is introduced, which dynamically partitions the facial regions based on facial keypoints and enhances the partitioned feature units. These representations are then fused with those extracted from high-response regions by the expression-guided region refinement module, improving the accuracy of fine-grained expression feature extraction.

- Extensive experiments on RAF-DB, FERPlus, and FED-RO demonstrate that our WGGLFA is effective and has more robustness and generalization capability than the SOTA expression recognition methods.

2. Related Work

3. Methodology

3.1. Scale-Aware Expansion Module

- (1)

- Wavelet decomposition: The input image is decomposed using Haar wavelets into one low-frequency component, , along with three directional high-frequency components: , , and . , and correspond to the horizontal, vertical, and diagonal high-frequency components. The process is defined as follows:This decomposition enables the network to capture structural and edge information separately, improving its modeling of subtle facial variations such as wrinkles and mouth corner movements.

- (2)

- Multi-Frequency Convolution: At each layer, the low-/high-frequency parts of the input feature map are convolved with the current convolution kernel , resulting in a low-frequency output and a high-frequency convolution output .By separating convolutions over high- and low-frequency components, the model is able to independently model structural and detailed features, enhancing its perception and discrimination of information across different frequency bands.

- (3)

- Inverse Wavelet Transform (IWT): IWT processes the recombined convolved low- and high-frequency components to reconstruct them into a new feature map, thereby recovering the original spatial information. The process retains the original spatial structure and fuses multi-frequency responses to produce a more informative representation.Finally, after processing the four units with a global average pooling layer, we concatenate them along the channel dimension and apply an FC layer for dimensionality reduction to obtain the local features.

3.2. Structured Local Feature Aggregation Module

3.3. Expression-Guided Region Refinement Module

3.4. Fusion Strategy and Loss Function

4. Experimental Verification

4.1. Datasets

4.2. Experiment Details

- Data Preparation: In all our experiments, we utilized aligned image samples provided by the official dataset. Each input image was resized to , and five predefined facial keypoints were detected using RetinaFace [62].

- Training: ResNet-34 [45] was employed as the backbone network, with its parameters initialized using ImageNet pre-trained weights. Stochastic gradient descent (SGD) was employed as the optimization algorithm, initialized with a learning rate of 0.01, which decayed every 20 epochs. The model was trained for 100 epochs, applying early stopping when appropriate to avoid overfitting. The model was trained using a batch size of 64, a momentum value of 0.9, and a weight decay of 0.0001. Our WGGLFA contains 53.74 million parameters and 1.42 G FLOPs. We implemented the model using PyTorch 1.11.0, and all experiments were performed using an NVIDIA A100 GPU equipped with 40 GB of memory.

4.3. Comparison with State-of-the-Art Methods

4.4. Ablation Study

4.4.1. Effectiveness of the Proposed Modules

4.4.2. The Impact of the Dilation Rate d

4.4.3. The Impact of the Fusion Factor

4.4.4. The Impact of the Region Size M

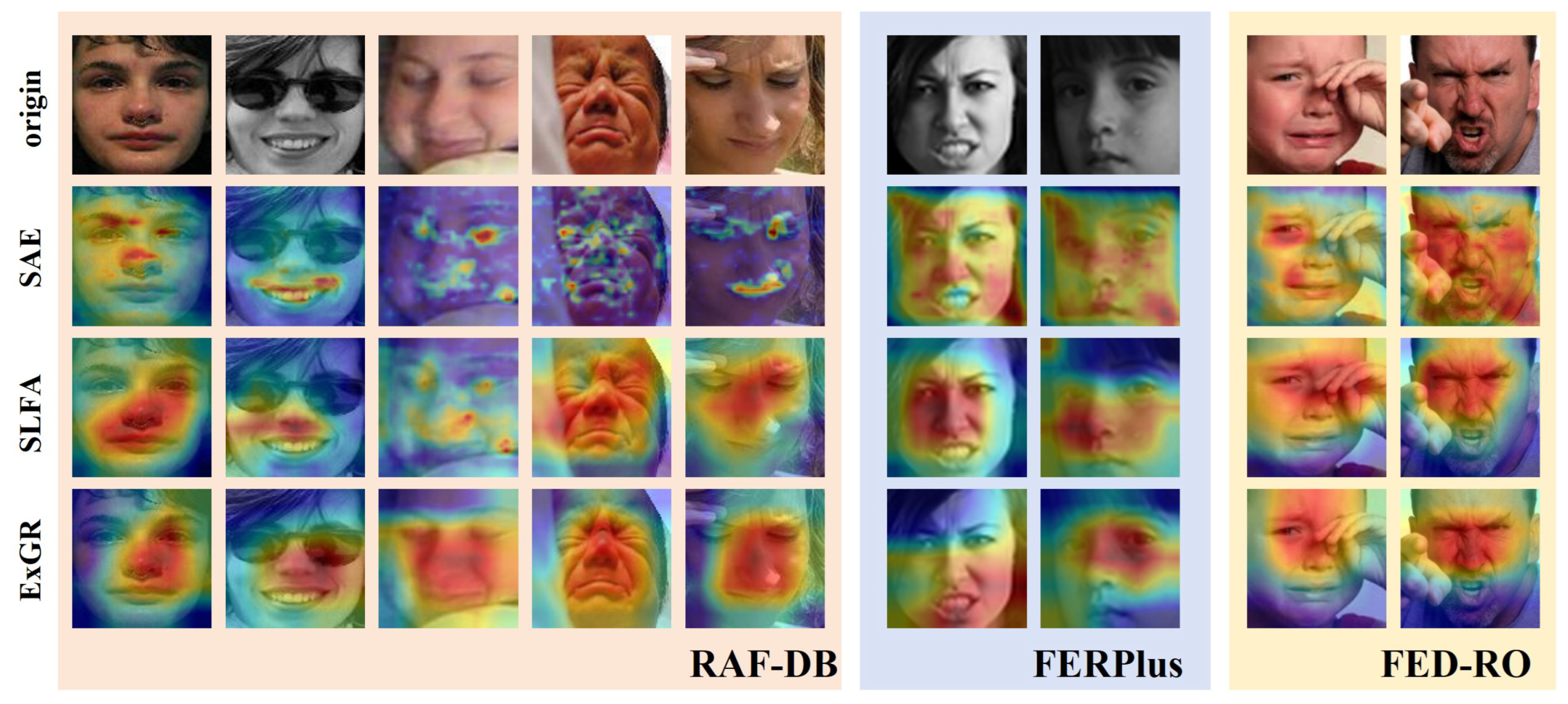

4.5. Visualization Analysis

5. Conclusions

6. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, W.; Zeng, G.; Zhang, J.; Xu, Y.; Xing, Y.; Zhou, R.; Guo, G.; Shen, Y.; Cao, D.; Wang, F.Y. Cogemonet: A cognitive-feature-augmented driver emotion recognition model for smart cockpit. IEEE Trans. Comput. Soc. Syst. 2021, 9, 667–678. [Google Scholar] [CrossRef]

- Cimtay, Y.; Ekmekcioglu, E.; Caglar-Ozhan, S. Cross-subject multimodal emotion recognition based on hybrid fusion. IEEE Access 2020, 8, 168865–168878. [Google Scholar] [CrossRef]

- Thevenot, J.; López, M.B.; Hadid, A. A survey on computer vision for assistive medical diagnosis from faces. IEEE J. Biomed. Health Inform. 2017, 22, 1497–1511. [Google Scholar] [CrossRef]

- Nguyen, C.V.T.; Kieu, H.D.; Ha, Q.T.; Phan, X.H.; Le, D.T. Mi-CGA: Cross-modal Graph Attention Network for robust emotion recognition in the presence of incomplete modalities. Neurocomputing 2025, 623, 129342. [Google Scholar] [CrossRef]

- Guo, L.; Song, Y.; Ding, S. Speaker-aware cognitive network with cross-modal attention for multimodal emotion recognition in conversation. Knowl.-Based Syst. 2024, 296, 111969. [Google Scholar] [CrossRef]

- Wang, Y.; Guo, X.; Hou, X.; Miao, Z.; Yang, X.; Guo, J. Multi-modal sentiment recognition with residual gating network and emotion intensity attention. Neural Netw. 2025, 188, 107483. [Google Scholar] [CrossRef]

- Yang, H.; Ciftci, U.; Yin, L. Facial expression recognition by de-expression residue learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2168–2177. [Google Scholar]

- Chen, Y.; Wang, J.; Chen, S.; Shi, Z.; Cai, J. Facial motion prior networks for facial expression recognition. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP), Sydney, Australia, 1–4 December 2019; pp. 1–4. [Google Scholar]

- Wang, S.; Shuai, H.; Liu, Q. Phase space reconstruction driven spatio-temporal feature learning for dynamic facial expression recognition. IEEE Trans. Affect. Comput. 2020, 13, 1466–1476. [Google Scholar] [CrossRef]

- Hazourli, A.R.; Djeghri, A.; Salam, H.; Othmani, A. Deep multi-facial patches aggregation network for facial expression recognition. arXiv 2020, arXiv:2002.09298. [Google Scholar]

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101. [Google Scholar] [CrossRef]

- Pantic, M.; Valstar, M.; Rademaker, R.; Maat, L. Web-based database for facial expression analysis. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005; pp. 317–321. [Google Scholar] [CrossRef]

- Shan, L.; Deng, W. Reliable crowdsourcing and deep locality-preserving learning for unconstrained facial expression recognition. IEEE Trans. Image Process. 2018, 28, 356–370. [Google Scholar] [CrossRef]

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. Affectnet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31. [Google Scholar] [CrossRef]

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Collecting large, richly annotated facial-expression databases from movies. IEEE Multimed. 2012, 19, 34–41. [Google Scholar] [CrossRef]

- Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450. [Google Scholar] [CrossRef]

- Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069. [Google Scholar] [CrossRef]

- Cornejo, J.Y.R.; Pedrini, H. Recognition of occluded facial expressions based on CENTRIST features. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1298–1302. [Google Scholar]

- Yao, A.; Cai, D.; Hu, P.; Wang, S.; Sha, L.; Chen, Y. HoloNet: Towards robust emotion recognition in the wild. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 472–478. [Google Scholar]

- Bourel, F.; Chibelushi, C.C.; Low, A.A. Recognition of Facial Expressions in the Presence of Occlusion. In Proceedings of the British Machine Vision Conference (BMVC), Manchester, UK, 10–13 September 2001; pp. 1–10. [Google Scholar]

- Zhang, Z.; Lyons, M.; Schuster, M.; Akamatsu, S. Comparison between geometry-based and gabor-wavelets-based facial expression recognition using multi-layer perceptron. In Proceedings of the 3rd IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 454–459. [Google Scholar] [CrossRef]

- Liu, J.; Hu, M.; Wang, Y.; Huang, Z.; Jiang, J. Symmetric multi-scale residual network ensemble with weighted evidence fusion strategy for facial expression recognition. Symmetry 2023, 15, 1228. [Google Scholar] [CrossRef]

- Liu, Z.; Zhu, F.; Xiong, H.; Chen, X.; Pelusi, D.; Vasilakos, A.V. Graph regularized discriminative nonnegative matrix factorization. Eng. Appl. Artif. Intell. 2025, 139, 109629. [Google Scholar] [CrossRef]

- Bendjillali, R.I.; Beladgham, M.; Merit, K.; Taleb-Ahmed, A. Improved facial expression recognition based on DWT feature for deep CNN. Electronics 2019, 8, 324. [Google Scholar] [CrossRef]

- Indolia, S.; Nigam, S.; Singh, R. A self-attention-based fusion framework for facial expression recognition in wavelet domain. Vis. Comput. 2024, 40, 6341–6357. [Google Scholar] [CrossRef]

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122. [Google Scholar] [CrossRef]

- Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet convolutions for large receptive fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 363–380. [Google Scholar]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893. [Google Scholar] [CrossRef]

- Ng, P.C.; Henikoff, S. SIFT: Predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003, 31, 3812–3814. [Google Scholar] [CrossRef]

- Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on local binary patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816. [Google Scholar] [CrossRef]

- Liu, C.; Wechsler, H. Gabor feature based classification using the enhanced fisher linear discriminant model for face recognition. IEEE Trans. Image Process. 2002, 11, 467–476. [Google Scholar] [CrossRef]

- Zhan, Y.; Ye, J.; Niu, D.; Cao, P. Facial expression recognition based on Gabor wavelet transformation and elastic templates matching. Int. J. Image Graph. 2006, 6, 125–138. [Google Scholar] [CrossRef]

- Shao, Y.; Tang, C.; Xiao, M.; Tang, H. Fusing Facial Texture Features for Face Recognition. Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2016, 86, 395–403. [Google Scholar] [CrossRef]

- Huang, Z.; Yang, Y.; Yu, H.; Li, Q.; Shi, Y.; Zhang, Y.; Fang, H. RCST: Residual Context Sharing Transformer Cascade to Approximate Taylor Expansion for Remote Sensing Image Denoising. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–15. [Google Scholar] [CrossRef]

- Huang, Z.; Hu, W.; Zhu, Z.; Li, Q.; Fang, H. TMSF: Taylor expansion approximation network with multi-stage feature representation for optical flow estimation. Digit. Signal Process. 2025, 162, 105157. [Google Scholar] [CrossRef]

- Zhu, Z.; Xia, M.; Xu, B.; Li, Q.; Huang, Z. GTEA: Guided Taylor Expansion Approximation Network for Optical Flow Estimation. IEEE Sens. J. 2024, 24, 5053–5061. [Google Scholar] [CrossRef]

- Zhu, Z.; Huang, C.; Xia, M.; Xu, B.; Fang, H.; Huang, Z. RFRFlow: Recurrent feature refinement network for optical flow estimation. IEEE Sens. J. 2023, 23, 26357–26365. [Google Scholar] [CrossRef]

- Huang, Z.; Zhu, Z.; Wang, Z.; Shi, Y.; Fang, H.; Zhang, Y. DGDNet: Deep gradient descent network for remotely sensed image denoising. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5. [Google Scholar] [CrossRef]

- Huang, Z.; Wang, Z.; Zhu, Z.; Zhang, Y.; Fang, H.; Shi, Y.; Zhang, T. DLRP: Learning deep low-rank prior for remotely sensed image denoising. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Huang, Z.; Lin, C.; Xu, B.; Xia, M.; Li, Q.; Li, Y.; Sang, N. T 2 EA: Target-aware Taylor Expansion Approximation Network for Infrared and Visible Image Fusion. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4831–4845. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 652–662. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Xia, H.; Lu, L.; Song, S. Feature fusion of multi-granularity and multi-scale for facial expression recognition. Vis. Comput. 2024, 40, 2035–2047. [Google Scholar] [CrossRef]

- Zhao, Z.; Liu, Q.; Wang, S. Learning deep global multi-scale and local attention features for facial expression recognition in the wild. IEEE Trans. Image Process. 2021, 30, 6544–6556. [Google Scholar] [CrossRef]

- Ali, H.; Sritharan, V.; Hariharan, M.; Zaaba, S.K.; Elshaikh, M. Feature extraction using radon transform and discrete wavelet transform for facial emotion recognition. In Proceedings of the 2016 2nd IEEE International Symposium on Robotics and Manufacturing Automation (ROMA), Ipoh, Malaysia, 25–27 September 2016; pp. 1–5. [Google Scholar] [CrossRef]

- Wang, T.; Xiao, Y.; Cai, Y.; Gao, G.; Jin, X.; Wang, L.; Lai, H. Ufsrnet: U-shaped face super-resolution reconstruction network based on wavelet transform. Multimed. Tools Appl. 2024, 83, 67231–67249. [Google Scholar] [CrossRef]

- Ezati, A.; Dezyani, M.; Rana, R.; Rajabi, R.; Ayatollahi, A. A lightweight attention-based deep network via multi-scale feature fusion for multi-view facial expression recognition. arXiv 2024, arXiv:2403.14318. [Google Scholar]

- Shahzad, T.; Iqbal, K.; Khan, M.A.; Imran; Iqbal, N. Role of zoning in facial expression using deep learning. IEEE Access 2023, 11, 16493–16508. [Google Scholar] [CrossRef]

- Zhang, K.; Zhang, Z.; Li, Z.; Qiao, Y. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503. [Google Scholar] [CrossRef]

- Tao, H.; Duan, Q. Hierarchical attention network with progressive feature fusion for facial expression recognition. Neural Netw. 2024, 170, 337–348. [Google Scholar] [CrossRef]

- Liu, H.; Cai, H.; Lin, Q.; Li, X.; Xiao, H. Adaptive multilayer perceptual attention network for facial expression recognition. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6253–6266. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Bie, M.; Xu, H.; Gao, Y.; Song, K.; Che, X. Swin-FER: Swin Transformer for Facial Expression Recognition. Appl. Sci. 2024, 14, 6125. [Google Scholar] [CrossRef]

- Xu, R.; Huang, A.; Hu, Y.; Feng, X. GFFT: Global-local feature fusion transformers for facial expression recognition in the wild. Image Vis. Comput. 2023, 139, 104824. [Google Scholar] [CrossRef]

- Wang, X.; Zhao, S.; Sun, H.; Wang, H.; Zhou, J.; Qin, Y. Enhancing Multimodal Emotion Recognition through Multi-Granularity Cross-Modal Alignment. arXiv 2024, arXiv:2412.20821. [Google Scholar]

- Pan, Z.; Luo, Z.; Yang, J.; Li, H. Multi-Modal Attention for Speech Emotion Recognition. In Proceedings of the Interspeech 2020, Shanghai, China, 25–29 October 2020; pp. 364–368. [Google Scholar] [CrossRef]

- Ryumina, E.; Ryumin, D.; Axyonov, A.; Ivanko, D.; Karpov, A. Multi-corpus emotion recognition method based on cross-modal gated attention fusion. Pattern Recognit. Lett. 2025, 190, 192–200. [Google Scholar] [CrossRef]

- Deng, J.; Guo, J.; Ververas, E.; Kotsia, I.; Zafeiriou, S. Retinaface: Single-shot multi-level face localisation in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5203–5212. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018. [Google Scholar]

- Xu, W.; Wan, Y. ELA: Efficient local attention for deep convolutional neural networks. arXiv 2024, arXiv:2403.01123. [Google Scholar] [CrossRef]

- Barsoum, E.; Zhang, C.; Ferrer, C.C.; Zhang, Z. Training deep networks for facial expression recognition with crowd-sourced label distribution. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 279–283. [Google Scholar]

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the Neural Information Processing: 20th International Conference (ICONIP 2013), Daegu, Republic of Korea, 3–7 November 2013; Proceedings, Part III 20. Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124. [Google Scholar]

- Li, H.; Xiao, X.; Liu, X.; Wen, G.; Liu, L. Learning cognitive features as complementary for facial expression recognition. Int. J. Intell. Syst. 2024, 2024, 7321175. [Google Scholar] [CrossRef]

- Devasena, G.; Vidhya, V. Twinned attention network for occlusion-aware facial expression recognition. Mach. Vis. Appl. 2025, 36, 23. [Google Scholar] [CrossRef]

- Li, H.; Wang, N.; Yang, X.; Wang, X.; Gao, X. Unconstrained facial expression recognition with no-reference de-elements learning. IEEE Trans. Affect. Comput. 2023, 15, 173–185. [Google Scholar] [CrossRef]

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626. [Google Scholar]

| Methods | Accuracy (%) | Params (M) | FLOPs (G) | ||

|---|---|---|---|---|---|

| RAF-DB | FERPlus | FED-RO | |||

| RAN [17] | 86.90 | 88.55 | 67.98 | – | – |

| MA-Net [47] | 88.40 | 87.60 | 70.00 | 50.54 | 3.65 |

| AMP-Net [54] | 89.25 | 85.44 | 71.75 | 105.67 | 1.69 |

| MM-Net [46] | 89.77 | 89.34 | 68.75 | 23.11 | 4.70 |

| DENet [69] | 87.35 | 89.37 | 71.50 | – | – |

| Twinned-Att [68] | 86.92 | – | 69.82 | 52.85 | 4.96 |

| LCFC [67] | 89.23 | 89.60 | – | 22.61 | – |

| WGGLFA (Ours) | 90.32 | 91.24 | 71.90 | 53.74 | 1.42 |

| Emotion | RAN [17] | MA-Net [47] | AMP-Net [54] | MM-Net [46] | DENet [69] | Twinned-Att [68] | LCFC [67] | WGGLFA (Ours) |

|---|---|---|---|---|---|---|---|---|

| neutral | 0.81 | 0.85 | 0.89 | 0.88 | 0.88 | 0.88 | 0.89 | 0.92 |

| fear | 0.76 | 0.76 | 0.65 | 0.75 | 0.66 | 0.73 | 0.68 | 0.77 |

| disgust | 0.68 | 0.67 | 0.65 | 0.73 | 0.54 | 0.86 | 0.72 | 0.75 |

| happy | 0.87 | 0.93 | 0.96 | 0.96 | 0.95 | 0.85 | 0.94 | 0.97 |

| sadness | 0.75 | 0.79 | 0.87 | 0.89 | 0.85 | 0.92 | 0.89 | 0.90 |

| angry | 0.85 | 0.84 | 0.82 | 0.85 | 0.80 | 0.87 | 0.83 | 0.87 |

| surprise | 0.78 | 0.86 | 0.86 | 0.86 | 0.87 | 0.86 | 0.87 | 0.89 |

| Emotion | RAN [17] | MA-Net [47] | AMP-Net [54] | MM-Net [46] | DENet [69] | LCFC [67] | WGGLFA (Ours) |

|---|---|---|---|---|---|---|---|

| neutral | 0.83 | 0.85 | 0.83 | 0.88 | 0.92 | 0.92 | 0.89 |

| fear | 0.80 | 0.82 | 0.79 | 0.78 | 0.54 | 0.53 | 0.79 |

| disgust | 0.76 | 0.77 | 0.65 | 0.65 | 0.53 | 0.56 | 0.74 |

| happy | 0.88 | 0.92 | 0.91 | 0.95 | 0.95 | 0.96 | 0.96 |

| sadness | 0.76 | 0.83 | 0.81 | 0.81 | 0.78 | 0.79 | 0.87 |

| angry | 0.87 | 0.90 | 0.88 | 0.91 | 0.89 | 0.86 | 0.92 |

| surprise | 0.82 | 0.86 | 0.84 | 0.89 | 0.92 | 0.93 | 0.90 |

| contempt | 0.50 | 0.51 | 0.68 | 0.62 | 0.38 | 0.31 | 0.68 |

| Emotion | RAN [17] | MA-Net [47] | AMP-Net [54] | Twinned-Att [68] | WGGLFA (Ours) |

|---|---|---|---|---|---|

| neutral | 0.68 | 0.70 | 0.70 | 0.72 | 0.71 |

| fear | 0.65 | 0.75 | 0.76 | 0.67 | 0.77 |

| disgust | 0.62 | 0.48 | 0.47 | 0.66 | 0.50 |

| happy | 0.80 | 0.83 | 0.86 | 0.74 | 0.85 |

| sadness | 0.66 | 0.70 | 0.74 | 0.69 | 0.72 |

| angry | 0.64 | 0.78 | 0.83 | 0.63 | 0.84 |

| surprise | 0.63 | 0.60 | 0.63 | 0.73 | 0.62 |

| Ablation Strategy | Accuracy (%) | ||||

|---|---|---|---|---|---|

| SAE | SLFA | ExGR | RAF-DB | FERPlus | FED-RO |

| 85.00 | 85.20 | 61.20 | |||

| ✓ | 86.88 | 86.72 | 66.74 | ||

| ✓ | 86.14 | 86.49 | 65.80 | ||

| ✓ | 85.91 | 86.02 | 63.44 | ||

| ✓ | ✓ | 88.02 | 88.69 | 68.60 | |

| ✓ | ✓ | 87.60 | 87.24 | 67.50 | |

| ✓ | ✓ | 86.50 | 86.83 | 67.25 | |

| ✓ | ✓ | ✓ | 89.15 | 89.44 | 69.90 |

| Dilation Rates | Accuracy (%) | |||||

|---|---|---|---|---|---|---|

| RAF-DB | FERPlus | FED-RO | ||||

| 1 | 2 | 3 | 4 | 87.35 | 87.89 | 68.85 |

| 1 | 2 | 4 | 6 | 87.81 | 88.33 | 69.12 |

| 1 | 2 | 4 | 8 | 88.50 | 88.75 | 69.30 |

| 1 | 4 | 8 | 12 | 88.14 | 88.50 | 69.50 |

| 1 | 6 | 12 | 18 | 89.15 | 89.44 | 69.90 |

| Region Size | Accuracy (%) | ||

|---|---|---|---|

| RAF-DB | FERPlus | FED-RO | |

| 5 | 88.83 | 89.00 | 69.45 |

| 6 | 88.98 | 89.27 | 69.73 |

| 7 | 89.15 | 89.44 | 69.90 |

| 8 | 89.02 | 89.21 | 69.40 |

| 9 | 88.90 | 89.15 | 69.30 |

| 10 | 88.72 | 89.02 | 69.15 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, K.; Li, X.; Zhang, C.; Xiao, Z.; Nie, R. WGGLFA: Wavelet-Guided Global–Local Feature Aggregation Network for Facial Expression Recognition. Biomimetics 2025, 10, 495. https://doi.org/10.3390/biomimetics10080495

Dong K, Li X, Zhang C, Xiao Z, Nie R. WGGLFA: Wavelet-Guided Global–Local Feature Aggregation Network for Facial Expression Recognition. Biomimetics. 2025; 10(8):495. https://doi.org/10.3390/biomimetics10080495

Chicago/Turabian StyleDong, Kaile, Xi Li, Cong Zhang, Zhenhua Xiao, and Runpu Nie. 2025. "WGGLFA: Wavelet-Guided Global–Local Feature Aggregation Network for Facial Expression Recognition" Biomimetics 10, no. 8: 495. https://doi.org/10.3390/biomimetics10080495

APA StyleDong, K., Li, X., Zhang, C., Xiao, Z., & Nie, R. (2025). WGGLFA: Wavelet-Guided Global–Local Feature Aggregation Network for Facial Expression Recognition. Biomimetics, 10(8), 495. https://doi.org/10.3390/biomimetics10080495