Abstract

This paper introduces a novel metaheuristic algorithm, named the sharpbelly fish optimizer (SFO), inspired by the collective ecological behaviors of the sharpbelly fish. The algorithm integrates four biologically motivated strategies, (1) fitness-driven fast swimming, (2) convergence-guided gathering, (3) stagnation-triggered dispersal, and (4) disturbance-induced escape, which together balance global exploration and local exploitation. To assess its performance, SFO is evaluated on the CEC2022 benchmark suite in 10 and 20 dimensions. The experimental results show that SFO consistently achieves competitive or superior optimization accuracy and convergence speed compared with seven well-established metaheuristic algorithms. Furthermore, the algorithm is applied to three classical constrained engineering design problems: pressure vessel, speed reducer, and gear train design. In these applications, SFO exhibits strong robustness and solution quality, supporting its potential as a general-purpose optimization tool for complex real-world problems. These findings highlight SFO's effectiveness in tackling nonlinear, constrained, and multimodal optimization tasks, with promising applicability in diverse engineering scenarios.

1. Introduction

With the continuous advancement of science and technology, complex optimization problems have become increasingly prevalent in both scientific research and practical applications. Among them, engineering optimization problems are particularly prominent due to their critical role in improving industrial performance and economic efficiency. These problems often involve fine-tuning process parameters and operational data under complex constraints to maximize productivity or minimize cost while satisfying technical requirements. Solving such problems typically requires the analysis of high-dimensional, nonlinear, and constrained objective functions. Traditional numerical methods often fall short in these scenarios, especially when the feasible region is narrow due to strict inequality constraints and mixed-integer decision variables. To address these challenges, researchers have developed a variety of swarm intelligence algorithms (SIAs), which are a class of metaheuristic approaches inspired by the collective behavior of natural organisms. Swarm intelligence algorithms are particularly suitable for engineering optimization due to their simplicity, flexibility, and global search capabilities [1,2,3]. They can effectively explore complex search spaces and avoid local optima by leveraging stochastic population-based mechanisms. As a result, SIAs have been widely applied in fields such as signal processing, image analysis, medical resource scheduling, very large-scale integration design, and structural engineering optimization [4,5].

Swarm intelligence embodies the collective intelligence exhibited by biological populations through collaboration and interaction. Inspired by such natural behaviors, researchers have proposed a variety of swarm intelligence optimization algorithms. These algorithms are characterized by self-organization, distributed processing, and high adaptability, making them well suited to complex optimization problems. One of the earliest and most representative population-based algorithms is the genetic algorithm (GA) [6], proposed by Holland, which has been widely applied to knapsack problems, image processing, and scheduling tasks. Subsequently, Dorigo et al. introduced the ant colony optimization (ACO) algorithm [7], inspired by the foraging behavior of ants, which is particularly effective for shortest-path problems. Kennedy and Eberhart developed the particle swarm optimization (PSO) algorithm [8] by simulating the coordinated flight of bird flocks; PSO guides the search using both personal and global best solutions and is especially suitable for high-dimensional continuous problems. Many other algorithms have also drawn inspiration from collective animal behaviors. For example, the bat algorithm (BA) [9] simulates the echolocation-based hunting strategy of bats, mapping the search and exploitation process to prey tracking. The firefly algorithm (FA) [10] is based on the light-intensity-driven attraction between fireflies, using brightness (fitness) to guide the search direction. The sparrow search algorithm (SSA) [11], a swarm optimization approach inspired by the collective wisdom, foraging strategies, and anti-predation behaviors of sparrows, has shown strong performance in both single-objective and multi-objective optimization tasks. The butterfly optimization algorithm (BOA) [12] mimics how butterflies locate food sources and mates using olfactory cues, enabling effective exploration of the solution space.

Considering the increasing complexity of optimization tasks, recent years have witnessed the development of numerous bio-inspired metaheuristic algorithms. These methods have introduced a diverse range of biological inspirations—from algae aggregation to cuckoo mimicry—to improve convergence speed, exploration adaptability, or constraint handling. To provide an overview of this evolving landscape, Table 1 presents a comparative summary of twelve representative algorithms developed over the past five years. The table highlights their design inspirations, major advantages, and known limitations.

Table 1.

Comparison between existing metaheuristic algorithms and the proposed SFO.

Despite their individual strengths, these algorithms still face common challenges such as premature convergence, insufficient exploration in high-dimensional spaces, and instability under constrained conditions. According to the no free lunch (NFL) theorem (Wolpert and Macready, 1997) [24], all optimization algorithms perform equally well when averaged over all possible problems. Consequently, no single algorithm can outperform every other algorithm on every problem domain. This result underscores the ongoing need for novel and efficient metaheuristic strategies adapted to specific problem characteristics. Motivated by this, this paper proposes a new metaheuristic algorithm, the sharpbelly fish optimizer (SFO). Inspired by the ecological behaviors of sharpbelly fish, including predation, aggregation, dispersal, and escape, SFO integrates four biologically derived mechanisms to achieve a dynamic balance between global exploration and local exploitation in complex optimization landscapes.

The rest of this paper is organized as follows. Section 2 describes the design principles and search mechanisms of the proposed SFO. Section 3 presents numerical experiments on standard benchmark functions to evaluate the performance of SFO. Section 4 applies the algorithm to several classical engineering design problems to verify its practical effectiveness. Finally, Section 5 concludes the paper and discusses potential directions for future research.

2. The Sharpbelly Fish Optimizer (SFO)

2.1. Inspiration

The sharpbelly (Hemiculter leucisculus, Basilewsky, 1855) [25,26,27] is a small, fast-swimming freshwater and brackish-water fish belonging to the family Xenocyprididae, as shown in Figure 1. It is widely distributed in East Asia, including mainland China and Korea, and has been introduced to various countries such as Iran and Afghanistan, where it has demonstrated strong ecological adaptability and invasiveness. This species primarily inhabits low-elevation freshwater bodies, such as streams, lakes, and reservoirs, and typically resides in the upper to middle water column, at depths ranging from 0 to 10 m. The optimal environmental conditions for the sharpbelly include a pH of approximately 7.0, a water hardness of around 15 dH, and a temperature range of 18–22 °C (64–72 °F). Behaviorally, the sharpbelly is active, agile, and highly sensitive. It is a schooling fish often found swimming in shallow waters near the surface, where it exhibits predatory behavior such as chasing flying insects above the water. The species prefers to hover near aquatic vegetation and open gaps in submerged plant beds in search of food. Sharpbellies are omnivorous, feeding on a diverse range of items including zooplankton, aquatic insects, algae, crustaceans, and plant debris. They deposit adhesive eggs on aquatic plants during spawning [28]. Due to their strong reproductive capacity and environmental adaptability, they are considered ecologically resilient, often dominating invaded habitats and outcompeting native species.

Figure 1.

Sharpbelly fish.

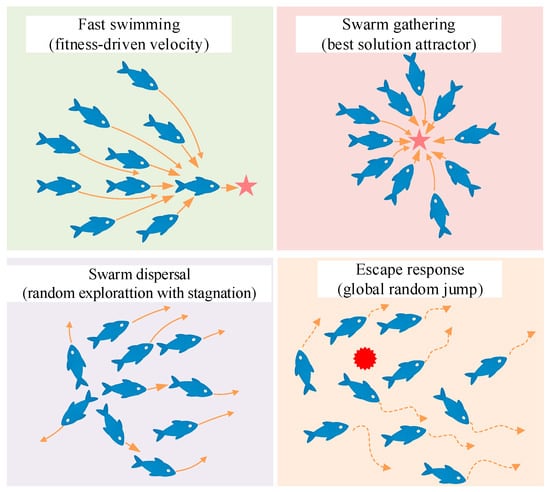

The sharpbelly fish exhibits a range of instinctive group behaviors in its natural habitat that offer rich inspiration for designing swarm-based optimization strategies. These behaviors include active predation near the surface, group aggregation, high sensitivity to environmental stimuli, and evasive maneuvers under disturbance. Such adaptive traits align closely with the fundamental principles of intelligent search and collective decision-making in optimization algorithms. Inspired by these ecological characteristics, this paper proposes a novel metaheuristic algorithm named the sharpbelly fish optimizer (SFO). As illustrated in Figure 2, four core behavioral patterns (fast swimming, swarm gathering, dispersal, and escape response) serve as the foundation for the algorithmic design of SFO.

Figure 2.

Four core behaviors of the sharpbelly fish.

Comparison with other fish-inspired algorithms: to clarify the distinctions between the proposed SFO and other biologically inspired algorithms such as Bitterling Fish Optimization (BFO) and Pufferfish Optimization Algorithm (PFO), a comparison is provided in terms of biological inspiration, search mechanism, and structural design.

(1) Biological inspiration: SFO is derived from the foraging and evasion behaviors of Hemiculter leucisculus (sharpbelly fish), which exhibits cooperative swimming, opportunistic aggregation, and rapid dispersal under threat. In contrast, BFO is inspired by the reproductive behavior of bitterling fish and focuses on host mussel selection and spawning strategy, while PFO models the self-defensive inflation behavior and localized movement of pufferfish. Thus, whereas BFO and PFO focus on reproductive or defensive behaviors, SFO emphasizes swarm intelligence and motion-driven adaptation.

(2) Behavioral modeling and update dynamics: The SFO algorithm integrates four core strategies: swim (directional movement), gather (group-based attraction), disturb (randomized noise injection), and disperse (diversity enhancement under stagnation), forming a balanced exploration–exploitation mechanism. BFO, in contrast, relies on discrete egg-laying decisions and lacks continuous positional updates, limiting its performance in high-dimensional continuous domains. PFO utilizes radial motion and repulsion behavior but lacks collective intelligence modeling or stagnation escape strategies.

(3) Mechanism novelty and algorithm robustness: SFO introduces an explicit stagnation-detection mechanism based on the stagnation threshold Ts, which triggers global dispersal to prevent premature convergence. Additionally, the swimming and gathering velocities are proportional to each fish's distance from the global best, so the step sizes of the velocity-based update adapt dynamically as the population converges. These mechanisms are absent or simplified in BFO and PFO, making SFO more suitable for solving complex numerical optimization problems.

In summary, although all three algorithms are inspired by aquatic species, SFO distinguishes itself through a motion-coordination framework, behavioral diversity, and stagnation-driven adaptivity, which collectively contribute to its superior convergence stability and robustness across various benchmark functions.

2.2. The Mathematical Model of SFO

2.2.1. Population Initialization

In the proposed SFO algorithm, each sharpbelly fish represents a candidate solution within the search space of a given optimization problem. The position of each fish is modeled as a point in a D-dimensional continuous space, where D denotes the number of decision variables. The algorithm starts by initializing a population of N individuals whose positions are randomly distributed within the defined bounds. The initial population matrix $X^0 \in \mathbb{R}^{N \times D}$ is defined as follows:

$$X^0 = lb + \mathrm{rand}(N, D) \odot (ub - lb)$$

where $X^0$ is the initial position matrix at iteration t = 0, rand(N, D) is an N × D matrix of random numbers uniformly distributed in [0, 1], ⊙ denotes element-wise multiplication (with lb and ub applied to every row), and $ub, lb \in \mathbb{R}^D$ are the upper and lower bounds of the search space.

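This initialization ensures that each fish begins its search from a feasible location within the problem domain. The fitness of each individual is evaluated with the objective function, and the results are stored in the fitness vector $F^t \in \mathbb{R}^{N \times 1}$:

$$F^t = \left[f(X_1^t),\, f(X_2^t),\, \ldots,\, f(X_N^t)\right]^{\top}$$

where f(·) is the fitness function and $X_i^t$ is the position of the i-th fish at iteration t.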
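The initialization and fitness-evaluation steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code from the linked repository. The sphere objective and the bounds are assumptions chosen only to make the example runnable.

```python
import random
from typing import Callable, List

Vector = List[float]


def init_population(n: int, lb: Vector, ub: Vector, rng: random.Random) -> List[Vector]:
    """X^0 = lb + rand(N, D) * (ub - lb), computed element-wise for each fish."""
    return [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in range(n)]


def evaluate(pop: List[Vector], f: Callable[[Vector], float]) -> List[float]:
    """Fitness vector F^t = [f(X_1^t), ..., f(X_N^t)]."""
    return [f(x) for x in pop]


def sphere(x: Vector) -> float:
    # Illustrative objective only (assumption); not one of the paper's test functions.
    return sum(v * v for v in x)


rng = random.Random(0)
lb, ub = [-5.0, -5.0, -5.0], [5.0, 5.0, 5.0]
pop = init_population(30, lb, ub, rng)
fitness = evaluate(pop, sphere)
i_best = min(range(len(pop)), key=fitness.__getitem__)  # index of the initial best fish
x_best, f_best = pop[i_best], fitness[i_best]
```

Every generated coordinate lies in [lb_d, ub_d), so the initial population is feasible by construction.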

2.2.2. Swimming and Gathering

To model the innate tendency of sharpbelly fish to seek nutrient-rich zones, a basic swimming behavior is implemented. Each fish moves toward the global best solution with a speed determined by its fitness relative to the worst individual in the population. This mechanism reflects the ecological observation that weaker individuals tend to exhibit more exploratory movement.

$$V_{\mathrm{swim},i}^{t} = s_i^{t} \cdot \mathrm{rand}() \cdot \left(X_{\mathrm{best}}^{t} - X_i^{t}\right)$$

where $V_{\mathrm{swim},i}^{t}$ is the swimming velocity vector of the i-th individual and $s_i^t$ is its swim speed, which is determined by the individual's fitness relative to the worst solution in the population. Specifically, $\max_j f(X_j^t)$ denotes the worst fitness value at iteration t and is used to normalize the swimming speed of each individual. The small positive constant ε prevents division by zero.

Simultaneously, all fish are influenced by a social cohesion mechanism, prompting them to gather near the current global optimum:

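$$V_{\mathrm{gather},i}^{t} = \alpha \cdot \mathrm{rand}() \cdot \left(X_{\mathrm{best}}^{t} - X_i^{t}\right)$$

where $V_{\mathrm{gather},i}^{t}$ is the gathering velocity of the i-th individual, which drives it toward the global best position $X_{\mathrm{best}}^{t}$. The parameter α ∈ (0, 1) controls the strength of the social attraction toward the global best position and promotes exploitation regardless of the individual's fitness.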
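A minimal sketch of the two attraction terms follows. The exact swim-speed formula is not recoverable from the text, so this sketch assumes s_i = f(X_i)/(max_j f(X_j) + ε) for a nonnegative objective. Under that assumption, fish with larger (worse) objective values take larger steps, which matches the ecological motivation. Drawing one scalar rand() per fish and behavior is also an assumption.

```python
import random
from typing import List

Vector = List[float]


def swim_velocity(x: Vector, x_best: Vector, f_i: float, f_worst: float,
                  rng: random.Random, eps: float = 1e-12) -> Vector:
    """Swimming term: s_i * rand() * (X_best - X_i).

    ASSUMPTION: s_i = f_i / (f_worst + eps) for a nonnegative objective.
    """
    s_i = f_i / (f_worst + eps)
    r = rng.random()  # ASSUMPTION: one scalar rand() per fish and behavior
    return [s_i * r * (b - xi) for xi, b in zip(x, x_best)]


def gather_velocity(x: Vector, x_best: Vector, alpha: float,
                    rng: random.Random) -> Vector:
    """Gathering term: alpha * rand() * (X_best - X_i)."""
    r = rng.random()
    return [alpha * r * (b - xi) for xi, b in zip(x, x_best)]


rng = random.Random(1)
x, x_best = [2.0, -1.0], [0.0, 0.0]
v_swim = swim_velocity(x, x_best, f_i=5.0, f_worst=10.0, rng=rng)
v_gather = gather_velocity(x, x_best, alpha=0.5, rng=rng)
```

Both terms point from the fish toward X_best and vanish for a fish that already sits at X_best. Exploration therefore has to come from the escape and dispersal terms.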

2.2.3. Escape Behavior

In nature, sharpbelly fish react strongly to disturbances in their habitat by rapidly changing direction. To emulate this behavior, a stochastic escape mechanism is triggered with a probability Pf, modeled by Gaussian-distributed random motion.

$$V_{\mathrm{dist},i}^{t} = \begin{cases} \delta \cdot \mathcal{N}(0, 1), & \text{if } \mathrm{rand}() < P_f \\ 0, & \text{otherwise} \end{cases}$$

where $V_{\mathrm{dist},i}^{t}$ is the disturbance velocity vector of the i-th individual, δ controls the disturbance magnitude, and N(0, 1) is a D-dimensional vector of independent standard normal samples. Pf ∈ [0, 1] is the disturbance probability, which controls how frequently individuals are perturbed. A higher Pf promotes exploration, whereas a lower Pf preserves stability.
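The escape term can be sketched as below. It returns Gaussian noise scaled by δ with probability Pf and the zero vector otherwise. This is an illustrative sketch, and the function name is hypothetical. The empirical trigger rate is checked over many draws.

```python
import random
from typing import List


def disturbance_velocity(dim: int, delta: float, p_f: float,
                         rng: random.Random) -> List[float]:
    """Escape term: delta * N(0, I) with probability P_f, else the zero vector."""
    if rng.random() < p_f:
        return [delta * rng.gauss(0.0, 1.0) for _ in range(dim)]
    return [0.0] * dim


rng = random.Random(42)
trials = 20_000
# Count how often the escape behavior fires; the fraction should be close to P_f = 0.3.
hits = sum(any(v != 0.0 for v in disturbance_velocity(2, 1.0, 0.3, rng))
           for _ in range(trials))
rate = hits / trials
```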

2.2.4. Dispersal Due to Stagnation

When the algorithm exhibits stagnation—defined as a lack of improvement over a fixed number of iterations Ts—a dispersal mechanism is activated to help individuals escape local optima. In this mode, affected individuals randomly explore the space within a bounded radius:

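$$V_{\mathrm{disp},i}^{t} = \begin{cases} \gamma \cdot \left(2 \cdot \mathrm{rand}() - 1\right) \odot (ub - lb), & \text{if } c_{\mathrm{no\_improve}} \ge T_s \\ 0, & \text{otherwise} \end{cases}$$

where $V_{\mathrm{disp},i}^{t}$ is the dispersal velocity of the i-th individual, introduced to counteract stagnation. The counter $c_{\mathrm{no\_improve}}$ tracks the length of the stagnation period. Once it reaches Ts, the individual is displaced by a random vector whose d-th component lies in [−γ(ub_d − lb_d), γ(ub_d − lb_d)]. Here, γ ∈ [0, 1] determines the dispersal range.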
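A minimal sketch of the dispersal term follows. Drawing each component of (2·rand() − 1) independently is an assumption. The counter is passed in explicitly, so the sketch is agnostic about how it is maintained.

```python
import random
from typing import List

Vector = List[float]


def dispersal_velocity(lb: Vector, ub: Vector, gamma: float, c_no_improve: int,
                       t_s: int, rng: random.Random) -> Vector:
    """Dispersal term: gamma * (2*rand() - 1) * (ub - lb) once stagnation reaches T_s.

    Each component is drawn independently (an assumption), so entry d lies in
    [-gamma * (ub_d - lb_d), gamma * (ub_d - lb_d)].
    """
    if c_no_improve >= t_s:
        return [gamma * (2.0 * rng.random() - 1.0) * (u - l) for l, u in zip(lb, ub)]
    return [0.0] * len(lb)


rng = random.Random(7)
lb, ub = [-10.0, 0.0], [10.0, 5.0]
quiet = dispersal_velocity(lb, ub, gamma=0.2, c_no_improve=3, t_s=10, rng=rng)
kick = dispersal_velocity(lb, ub, gamma=0.2, c_no_improve=10, t_s=10, rng=rng)
```

Because the step is scaled by (ub − lb) rather than by the distance to X_best, dispersal stays large even after the population has collapsed. This is what allows it to break stagnation.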

2.2.5. Position Update

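The final velocity of each fish is the sum of the four behavior components:

$$V_i^t = V_{\mathrm{swim},i}^t + V_{\mathrm{gather},i}^t + V_{\mathrm{dist},i}^t + V_{\mathrm{disp},i}^t$$

where the four terms are the swimming, gathering, disturbance (escape), and dispersal velocities of the i-th fish. The last two terms are zero whenever their triggering conditions are not met.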

Each fish then updates its position using its total velocity $V_i^t$:

$$X_i^{t+1} = X_i^t + V_i^t$$

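To ensure feasibility, each updated position is projected onto the feasible domain, with min and max applied element-wise:

$$X_i^{t+1} = \min\left(\max\left(X_i^{t+1}, lb\right), ub\right)$$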

This projection operation ensures that each dimension of the updated position vector lies within the predefined lower and upper bounds, thereby maintaining feasibility throughout the search process.

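After each position update, the global best solution is updated greedily:

$$X_{\mathrm{best}} \leftarrow \begin{cases} X_i^{t+1}, & \text{if } f(X_i^{t+1}) < f(X_{\mathrm{best}}) \\ X_{\mathrm{best}}, & \text{otherwise} \end{cases}$$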
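The position update, boundary projection, and greedy best update can be sketched as follows. As in Algorithm 1, the stagnation counter is reset when the global best improves and incremented otherwise. The helper names are hypothetical.

```python
from typing import List, Tuple

Vector = List[float]


def move_and_clip(x: Vector, v: Vector, lb: Vector, ub: Vector) -> Vector:
    """X^{t+1} = min(max(X^t + V^t, lb), ub), applied per dimension."""
    return [min(max(xi + vi, l), u) for xi, vi, l, u in zip(x, v, lb, ub)]


def update_best(x_new: Vector, f_new: float, x_best: Vector, f_best: float,
                c_no_improve: int) -> Tuple[Vector, float, int]:
    """Greedy global-best update; resets the stagnation counter on improvement."""
    if f_new < f_best:
        return list(x_new), f_new, 0
    return x_best, f_best, c_no_improve + 1


lb, ub = [-5.0, -5.0], [5.0, 5.0]
print(move_and_clip([4.0, -4.0], [3.0, -3.0], lb, ub))  # [5.0, -5.0]
```

Clipping places an overshooting coordinate exactly on the violated bound, which keeps every evaluated point feasible with respect to the box constraints.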

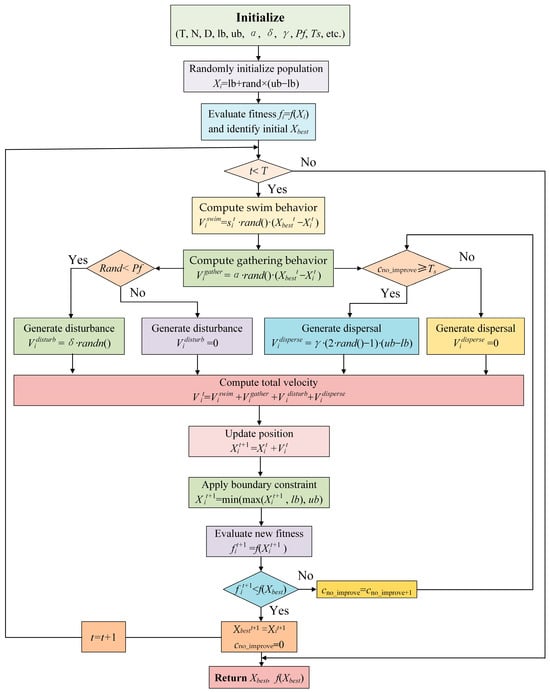

To summarize, in SFO, the optimization process is driven by the integration of four biologically inspired behaviors—swimming, gathering, escape, and dispersal—which collectively determine the search dynamics of each fish. These behaviors are mathematically modeled to guide the individuals toward high-quality solutions while maintaining population diversity. The velocity-based update mechanism enables the algorithm to balance global exploration and local exploitation effectively. Once the position is updated and feasibility is ensured, the global best solution is retained to guide subsequent search. This iterative process continues until the termination criterion is met. The detailed workflow of SFO is illustrated in Figure 3, where each step corresponds to the key equations and mechanisms in Algorithm 1. Please note that the complete source code for the SFO is publicly available at https://github.com/DYHuestc/SFO-project (accessed on 2 July 2025).

Figure 3.

The detailed workflow of SFO.

2.3. Computational Complexity

The computational complexity of the proposed SFO is analyzed based on its main procedures: population initialization, fitness evaluation, and position update. Let N denote the population size, D the problem dimension, and T the maximum number of iterations. Initialization generates N individuals in a D-dimensional search space, which costs O(N × D). In each iteration, the algorithm evaluates the fitness of all N individuals, which requires O(N) operations when each objective evaluation is treated as a unit-cost operation. The velocity update combines four behavior mechanisms (swimming, gathering, escape, and dispersal), each involving vector operations of cost O(D) per individual. The position update and boundary projection are also O(D) per individual. Therefore, the update step in one iteration costs O(N × D), and over T iterations the total becomes O(N × T × D). Updating the global best by comparing fitness values adds only O(N) per iteration. In total, the computational complexity of SFO is O(N × T × D), excluding the cost of evaluating the objective function itself. The algorithm thus scales linearly with the population size, the number of iterations, and the problem dimension, which keeps its overhead low on high-dimensional problems.
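The tally below makes the dependence on the objective explicit. It introduces C_f, the cost of one objective evaluation; this symbol is not part of the original analysis.

$$
\underbrace{O(ND)}_{\text{initialization}} + \underbrace{O(N C_f)}_{\text{initial fitness}} + T\Big[\underbrace{O(ND)}_{\text{velocity, move, clip}} + \underbrace{O(N C_f)}_{\text{fitness}} + \underbrace{O(N)}_{\text{best update}}\Big] = O\big(T N (D + C_f)\big)
$$

When C_f = O(D), this reduces to O(N × T × D). For more expensive objectives, such as a rotated function that multiplies the input by a D × D matrix (C_f = O(D²)), the evaluation term dominates the algorithm's own overhead.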

| Algorithm 1 SFO | |
|---|---|
| Begin | |
| 1. | Initialize parameters (T, N, D, lb, ub, α, δ, γ, Pf, Ts, etc.) |
| 2. | Randomly initialize population Xi = lb + rand × (ub − lb), i = 1, …, N |
| 3. | Evaluate fitness fi = f(Xi), and identify initial Xbest |
| 4. | cno_improve = 0 |
| 5. | for t = 1 to T |
| 6. | for i = 1 to N |
| 7. | Compute swim behavior: Vswim,i = si·rand()·(Xbest − Xi), where si is the fitness-based swim speed |
| 8. | Compute gathering behavior: Vgather,i = α·rand()·(Xbest − Xi) |
| 9. | if rand() < Pf |
| 10. | Generate disturbance: Vdist,i = δ·randn(D) |
| 11. | else |
| 12. | Vdist,i = 0 |
| 13. | end if |
| 14. | if cno_improve ≥ Ts |
| 15. | Generate dispersal: Vdisp,i = γ·(2·rand() − 1)·(ub − lb) |
| 16. | else |
| 17. | Vdisp,i = 0 |
| 18. | end if |
| 19. | Compute total velocity: Vi = Vswim,i + Vgather,i + Vdist,i + Vdisp,i |
| 20. | Update position: Xi = Xi + Vi |
| 21. | Apply boundary constraint: Xi = min(max(Xi, lb), ub) |
| 22. | Evaluate new fitness fi = f(Xi) |
| 23. | if fi < f(Xbest) |
| 24. | Xbest = Xi; cno_improve = 0 |
| 25. | else |
| 26. | cno_improve = cno_improve + 1 |
| 27. | end if |
| 28. | end for |
| 29. | end for |
| 30. | Return Xbest, f(Xbest) |
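Algorithm 1 can be sketched end to end in plain Python as follows. This is a minimal illustration, not the authors' published implementation. The default values of α, δ, γ, Pf, and Ts are hypothetical placeholders. The swim speed s_i = f_i/(f_worst + ε) is an assumed form that presumes a nonnegative objective. As in Algorithm 1, a single stagnation counter is shared by the whole population.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def sfo(f: Callable[[Vector], float], lb: Vector, ub: Vector, n: int = 30,
        t_max: int = 200, alpha: float = 0.5, delta: float = 0.1,
        gamma: float = 0.1, p_f: float = 0.1, t_s: int = 20,
        eps: float = 1e-12, seed: int = 0) -> Tuple[Vector, float]:
    """Sketch of Algorithm 1 for minimizing a nonnegative objective.

    Default alpha, delta, gamma, p_f, t_s are hypothetical; the swim-speed
    form s_i = f_i / (f_worst + eps) is an assumption.
    """
    rng = random.Random(seed)
    # Steps 2-3: random initial population, its fitness, and the initial best
    pop = [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in range(n)]
    fit = [f(x) for x in pop]
    i_best = min(range(n), key=fit.__getitem__)
    x_best, f_best = list(pop[i_best]), fit[i_best]
    c_no_improve = 0                            # Step 4: stagnation counter

    for _ in range(t_max):                      # Step 5
        f_worst = max(fit)                      # normalizer for the swim speed
        for i in range(n):                      # Step 6
            x = pop[i]
            s_i = fit[i] / (f_worst + eps)      # Step 7 (assumed speed form)
            r_swim, r_gather = rng.random(), rng.random()
            # Steps 7-8: swimming + gathering, both directed at X_best
            v = [(s_i * r_swim + alpha * r_gather) * (b - xi)
                 for xi, b in zip(x, x_best)]
            if rng.random() < p_f:              # Steps 9-13: escape
                v = [vi + delta * rng.gauss(0.0, 1.0) for vi in v]
            if c_no_improve >= t_s:             # Steps 14-18: dispersal
                v = [vi + gamma * (2.0 * rng.random() - 1.0) * (u - l)
                     for vi, l, u in zip(v, lb, ub)]
            # Steps 19-21: total velocity, move, and clip to [lb, ub]
            x_new = [min(max(xi + vi, l), u)
                     for xi, vi, l, u in zip(x, v, lb, ub)]
            f_new = f(x_new)                    # Step 22
            pop[i], fit[i] = x_new, f_new
            if f_new < f_best:                  # Steps 23-27
                x_best, f_best, c_no_improve = list(x_new), f_new, 0
            else:
                c_no_improve += 1
    return x_best, f_best                       # Step 30


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


best_x, best_f = sfo(sphere, [-5.0] * 5, [5.0] * 5, n=30, t_max=300, seed=1)
```

The combined swim and gather coefficient lies in [0, 1.5), so the distance to X_best never grows from these two terms alone. Any move away from the current best comes from the escape and dispersal terms.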

3. Experimental Results and Analysis

3.1. Experimental Setting

3.1.1. Benchmark Test Functions

Benchmark test functions provide a standardized and rigorous framework for evaluating the effectiveness and robustness of optimization algorithms. In this study, the CEC2022 benchmark suite is used to assess the performance of the proposed SFO algorithm, with the test functions configured in two dimensional settings: D = 10 and D = 20. As dimensionality increases, the search space grows and the number of local optima typically increases, posing greater challenges for global search algorithms. The CEC2022 suite comprises twelve functions covering a range of difficulty levels and landscape characteristics. A unimodal function (F1) tests exploitation capability, and basic multimodal functions (F2–F5) evaluate the ability to escape local optima. Hybrid (F6–F8) and composition (F9–F12) functions emulate complex real-world optimization scenarios. This diversity allows a thorough evaluation of the algorithm's global exploration and local exploitation under varying search conditions. Table 2 summarizes the CEC2022 benchmark functions adopted in this study.

Table 2.

Summary of CEC2022 benchmark functions used in this study.

3.1.2. Competitor Algorithms and Parameters Setting

To evaluate the optimization performance of the proposed SFO, seven widely recognized metaheuristic algorithms were selected for comparison. These algorithms have demonstrated competitive results on various benchmark and engineering optimization problems. A brief overview of each algorithm is given below:

Particle Swarm Optimization (PSO) [29]: a population-based algorithm inspired by the social behavior of bird flocking, where individuals (particles) adjust their trajectories based on their own experience and that of their neighbors to converge toward promising regions.

Ant Colony Optimization (ACO) [30]: inspired by the foraging behavior of ants, ACO uses pheromone trails and probabilistic decision rules to find optimal paths through combinatorial spaces, making it effective for discrete optimization problems.

Genetic Algorithm (GA) [31]: based on the principles of natural selection and genetics, GA operates through selection, crossover, and mutation operators to evolve a population of solutions toward optimality over generations.

Grey Wolf Optimizer (GWO) [32]: mimics the leadership hierarchy and hunting mechanism of grey wolves in nature, where individuals update positions based on the guidance of alpha, beta, and delta wolves to balance exploration and exploitation.

Sparrow Search Algorithm (SSA) [33]: simulates the foraging and anti-predation behavior of sparrows, dividing individuals into discoverers and joiners to coordinate global search and local refinement effectively.

Bat Algorithm (BA) [34]: inspired by echolocation behavior in bats, this algorithm uses frequency tuning and loudness adjustment to guide individuals toward optimal solutions.

Whale Optimization Algorithm (WOA) [35]: emulates the spiral bubble-net hunting strategy of humpback whales, incorporating both encircling prey and stochastic search components to perform global exploration and local exploitation.

The control parameters of these algorithms, summarized in Table 3, follow the values recommended in the original papers or commonly adopted in benchmark studies, without additional fine-tuning. To ensure a fair comparison, all algorithms shared the same experimental conditions: the population size N was set to 30, and the maximum number of iterations T was fixed at 1000. Each algorithm was executed independently 30 times to account for stochastic variability, and all relevant performance metrics were recorded.
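The evaluation protocol (independent runs with a shared budget, summarized by maximum, mean, and standard deviation) can be sketched as follows. The function `random_search` is a hypothetical stand-in for any of the compared optimizers, and seeding each run by its index is an assumption. The text does not state whether the reported standard deviation is the sample or the population value, so the sketch uses the sample version (`statistics.stdev`).

```python
import random
import statistics
from typing import Callable, Dict, List, Optional, Tuple

Vector = List[float]
Objective = Callable[[Vector], float]


def random_search(f: Objective, lb: Vector, ub: Vector, n: int, t_max: int,
                  seed: int) -> Tuple[Optional[Vector], float]:
    """Hypothetical stand-in optimizer using the same budget of n * t_max evaluations."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    for _ in range(n * t_max):
        x = [l + rng.random() * (u - l) for l, u in zip(lb, ub)]
        fx = f(x)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f


def run_protocol(optimizer, f: Objective, lb: Vector, ub: Vector, n: int = 30,
                 t_max: int = 1000, runs: int = 30) -> Dict[str, float]:
    """Run independent trials (seed = run index) and summarize the final best values."""
    finals = [optimizer(f, lb, ub, n, t_max, seed)[1] for seed in range(runs)]
    return {"max": max(finals),
            "mean": statistics.mean(finals),
            "std": statistics.stdev(finals)}  # sample standard deviation (assumption)


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


stats = run_protocol(random_search, sphere, [-5.0] * 2, [5.0] * 2, n=10, t_max=20, runs=5)
```

With the settings described in the text, the call would use n = 30, t_max = 1000, and runs = 30. Fixing one seed per run makes the whole table reproducible.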

Table 3.

Control Parameter Settings of All Compared Algorithms.

3.2. Results Analysis

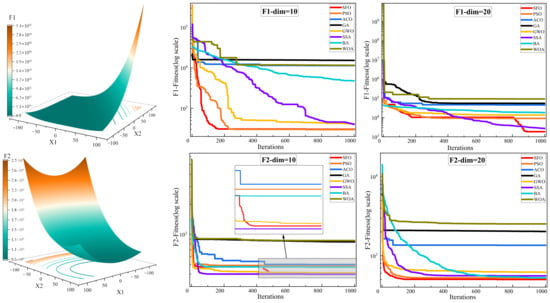

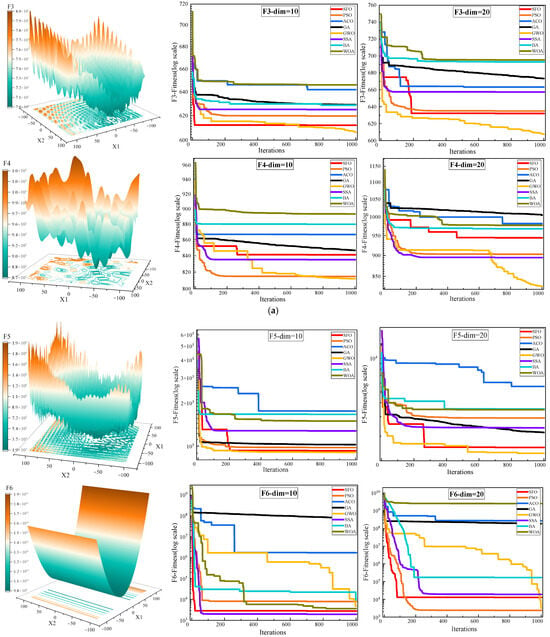

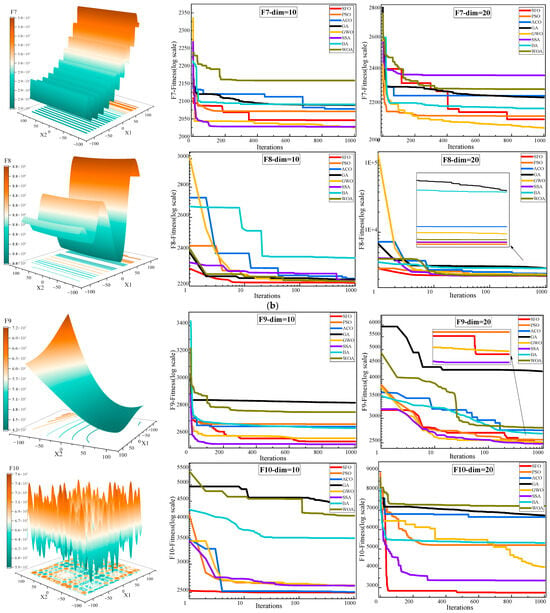

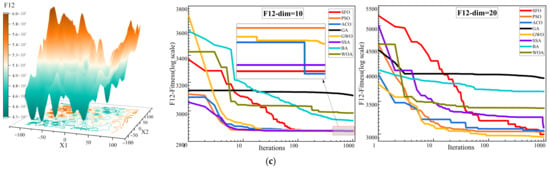

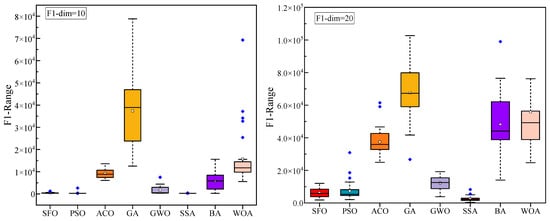

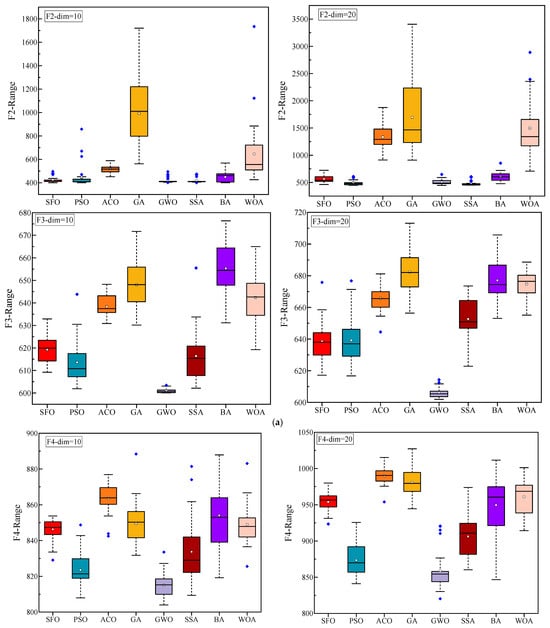

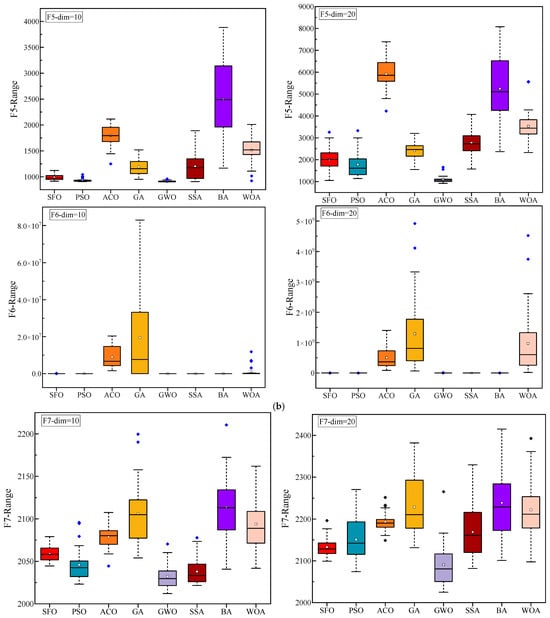

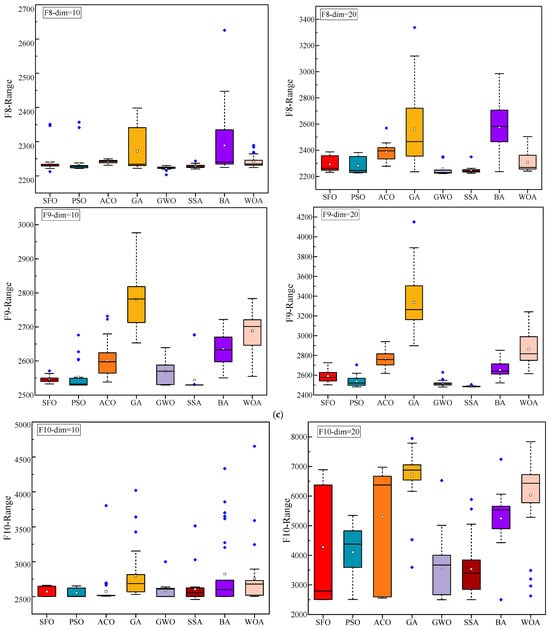

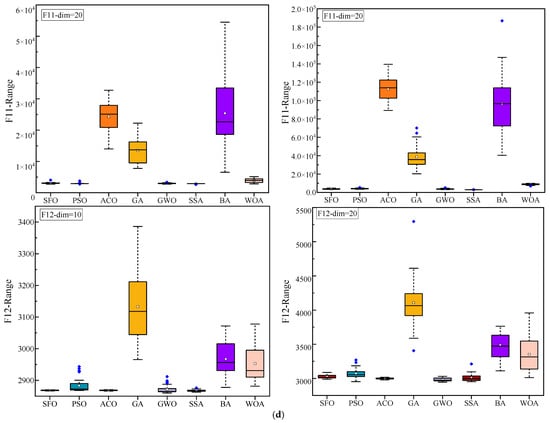

Figure 4 illustrates the convergence curves of various algorithms on the CEC2022 benchmark functions under different dimensional settings (Dim = 10 and Dim = 20), providing an intuitive comparison of their optimization speed and convergence stability. Figure 5 presents the boxplot distributions of the optimization results obtained from 30 independent runs for each algorithm, highlighting the robustness and variability of their performance across the CEC2022 test suite. Table 4 reports the detailed numerical results of the compared algorithms, including the maximum, mean, and standard deviation values over 30 independent runs under both Dim = 10 and Dim = 20. These statistical indicators comprehensively reflect the optimization accuracy, consistency, and reliability of each method across all test functions.

Figure 4.

Convergence behavior analysis of various algorithms on the CEC2022 benchmark. (a) F1–F4; (b) F5–F8; and (c) F9–F12.

Figure 5.

Statistical performance variation (boxplots) of algorithms on CEC2022 functions. (a) F1–F3; (b) F4–F6; (c) F7–F9; and (d) F10–F12.

Table 4.

Comparative performance on CEC2022 under different dimensions.

To comprehensively evaluate the optimization performance of the proposed SFO, Figure 4 and Figure 5, together with Table 4, present a detailed comparison against seven well-established metaheuristic algorithms on the CEC2022 benchmark functions in two dimensional settings (Dim = 10 and Dim = 20).

Figure 4 illustrates the convergence behavior of all competing algorithms. SFO achieves fast convergence and low objective function values on most benchmark functions, for example F1, F6, and F11, demonstrating strong exploitation capability and convergence efficiency. In the higher-dimensional setting (Dim = 20), SFO maintains a clear advantage in both convergence speed and stability and avoids the premature stagnation observed in algorithms such as GA and ACO. However, other algorithms converge better than SFO on several test functions. For example, SSA outperforms SFO on F2, F6, F7, F9, and F11, likely because its dynamic foraging and vigilance behavior adapts well to multimodal or discontinuous landscapes. Similarly, GWO achieves superior results on F3–F5, F7, and F12, benefiting from a hierarchical leadership model that guides the population efficiently through narrow valleys or deceptive basins. PSO, known for its rapid convergence, outperforms SFO on F4 and F6 (Dim = 20), while ACO performs better on F12 (Dim = 10) owing to its memory-based, pheromone-guided search. These results indicate that, although SFO is competitive in most scenarios, other algorithms can surpass it on specific problem classes whose structure matches their search dynamics.

Figure 5 displays the boxplot distributions of the optimization results over 30 independent runs. The compact interquartile ranges and low dispersion of SFO's results indicate robust and stable performance in most cases. On functions such as F2, F7, and F12, SFO achieves lower medians than most competitors and produces fewer outliers, highlighting its reliability under stochastic conditions. Nevertheless, other algorithms show better statistical distributions than SFO on several functions. On F2 (both dimensions), SSA slightly outperforms SFO, likely because its role-switching mechanism between discoverers and joiners provides stronger exploration in early iterations. On F3 and F4, GWO and PSO show better consistency and median values, which can be attributed to their structured exploitation strategies and adaptive velocity updates, respectively. On F6 (Dim = 20), PSO performs better, benefiting from inertia-controlled search behavior that facilitates early convergence in rugged search spaces. On F12, SFO is marginally outperformed by ACO (Dim = 10) and GWO (Dim = 20), whose pheromone reinforcement and hierarchical guidance, respectively, offer improved stability on composition functions. These findings suggest that, while SFO is competitive on most test cases, it can be slightly surpassed by algorithms whose internal dynamics better match the characteristics of certain benchmark functions.

Table 4 provides the statistical metrics (maximum, mean, and standard deviation) of the optimization results over 30 independent runs. SFO frequently ranks among the top performers in both average accuracy and variance control. Compared with classical algorithms such as GA and BA, which exhibit high variability and sensitivity to initialization, SFO attains lower standard deviations and better mean accuracy, reflecting strong repeatability and robustness across diverse landscapes. Nevertheless, other algorithms outperform SFO on several functions:

F2 (Dim = 10, 20): SSA achieves better convergence than SFO. This may be attributed to SSA's adaptive step size and role-division strategy, which strengthen its local search on the shifted and rotated Rosenbrock function F2 in low and moderate dimensions.

F3 and F5 (Dim = 10, 20): GWO surpasses SFO in convergence performance. Its balanced hierarchical leadership structure appears to support efficient navigation, stability, and global search on these multimodal functions.

F4 (Dim = 10, 20): SSA, GWO, and PSO all outperform SFO. F4, the non-continuous Rastrigin function, is multimodal with abrupt landscape changes. PSO's velocity-position memory and SSA's local adaptive behavior may help avoid stagnation more effectively than SFO on such landscapes.

F6 (Dim = 20): PSO slightly surpasses SFO in mean performance. This is likely due to PSO's strong convergence tendency, which favors the fine-tuning around the global optimum that this rugged hybrid landscape requires.

F7 (Dim = 10, 20): GWO and SSA outperform SFO. The social hierarchy of GWO and the vigilance-based dynamic search of SSA may enhance exploration in the heterogeneous, mixed-component landscape of hybrid function F7.

F9 (Dim = 10, 20): SSA again achieves better accuracy than SFO, possibly owing to its anti-predation escape mechanism, which allows efficient exit from deceptive local minima in non-separable problems.

F11 (Dim = 20): SSA slightly outperforms SFO. F11 is known for deceptive local optima, and SSA's local–global trade-off mechanisms may help in this context.

F12 (Dim = 10): ACO achieves better performance than SFO. Its path memory and pheromone update strategies may adapt better to the intricate structure of this composition function.

F12 (Dim = 20): GWO shows superior convergence. This suggests that in higher-dimensional, highly irregular landscapes, GWO's balance between exploration and exploitation can slightly outperform SFO's fish-behavior modeling.

The results indicate that SFO demonstrates competitive and well-balanced performance across convergence speed, solution quality, and robustness. It maintains superiority or near-top rankings in most benchmark functions and dimensional settings. However, the observation that other algorithms occasionally outperform SFO on specific functions aligns with the No-Free-Lunch (NFL) theorem, which states that no optimization algorithm can be universally superior across all problem domains. The varied landscape characteristics of benchmark functions naturally favor different algorithmic behaviors, highlighting the importance of diversity in algorithm design and the potential of hybridization or problem-specific enhancements for future improvements of SFO.

4. Representative Engineering Design Cases

In this section, the proposed SFO is applied to three classical engineering design problems to evaluate its optimization performance in practical scenarios. These problems include the pressure vessel design problem (PVD) [36], the speed reducer design problem [37], and the gear train design problem [38], which are widely recognized benchmarks in the field of structural and mechanical optimization. These design problems are characterized by nonlinear, constrained, and multi-variable objective functions, making them suitable for testing the global search capabilities and convergence robustness of metaheuristic algorithms. For each case, the mathematical formulation, design constraints, and variable bounds are retained in accordance with the respective literature to ensure comparability of results. The effectiveness of SFO in solving these problems is assessed based on solution quality, convergence speed, and constraint-handling performance, and is compared with existing optimization approaches.

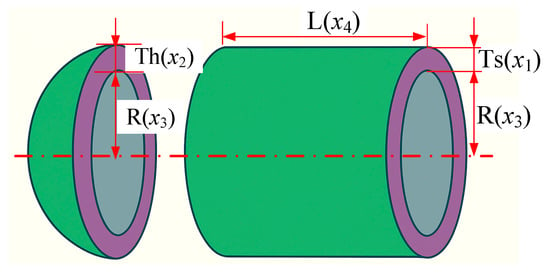

4.1. Pressure Vessel Design Problem

The pressure vessel design problem is a widely cited benchmark in structural engineering optimization. The design consists of a cylindrical vessel capped with two hemispherical heads. The primary objective is to minimize the total fabrication cost, which comprises the material cost, forming cost, and welding cost. To achieve this, the design must determine four key variables: the thickness of the shell and head (denoted as Ts = x1 and Th = x2, respectively), the inner radius of the vessel (R = x3), and the length of the cylindrical section excluding the head (L = x4), as illustrated in Figure 6.

Figure 6.

Schematic diagram of the pressure vessel design problem.

The mathematical formulation of the optimization problem is as follows:
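For illustration, the version of this problem most often used in the literature can be written as a short Python sketch. The cost coefficients, the four inequality constraints (in g(x) ≤ 0 form), and the bounds 0 ≤ x1, x2 ≤ 99 and 10 ≤ x3, x4 ≤ 200 below are that common variant and are assumed here; they may differ in detail from the formulation used in this study. Constraints are handled with a static quadratic penalty, and the function names and penalty weight are hypothetical rather than part of the SFO implementation.

```python
import math
from typing import List, Sequence

# Assumed bounds of the common literature variant: Ts, Th in [0, 99]; R, L in [10, 200].
PV_BOUNDS = [(0.0, 99.0), (0.0, 99.0), (10.0, 200.0), (10.0, 200.0)]


def pv_cost(x: Sequence[float]) -> float:
    """Total fabrication cost (material, forming, welding) for x = (Ts, Th, R, L)."""
    ts, th, r, l = x
    return (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)


def pv_constraints(x: Sequence[float]) -> List[float]:
    """Inequality constraints written as g(x) <= 0 (assumed standard form)."""
    ts, th, r, l = x
    return [
        -ts + 0.0193 * r,    # minimum shell thickness relative to the radius
        -th + 0.00954 * r,   # minimum head thickness relative to the radius
        # minimum enclosed volume: cylinder plus two hemispherical heads
        -math.pi * r ** 2 * l - 4.0 / 3.0 * math.pi * r ** 3 + 1_296_000.0,
        l - 240.0,           # maximum length of the cylindrical section
    ]


def pv_penalized(x: Sequence[float], weight: float = 1e6) -> float:
    """Static quadratic penalty (hypothetical weight); feasible designs keep their raw cost."""
    violation = sum(max(0.0, g) ** 2 for g in pv_constraints(x))
    return pv_cost(x) + weight * violation
```

Under these assumptions, the design (0.778169, 0.384649, 40.3196, 200), frequently quoted as near-optimal for the continuous variant, has a raw cost `pv_cost` of about 5885.33. A static penalty is the simplest choice; adaptive penalties or feasibility rules are common alternatives.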

To evaluate the performance of the proposed SFO on engineering design tasks, a benchmark test was conducted on the pressure vessel design problem. Seven algorithms (SFO, PSO, ACO, GWO, SSA, BA, and WOA) were each run independently 50 times.
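The protocol of 50 independent runs per algorithm can be sketched as a small harness. This is a minimal sketch under stated assumptions: each run gets its own seeded random generator, the same seed sequence is reused for every algorithm so that run r can be paired across algorithms in the statistical tests, the evaluation budget is arbitrary, and `random_search` is a hypothetical placeholder standing in for SFO or any of the competitors.

```python
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple

Bounds = Sequence[Tuple[float, float]]
Objective = Callable[[Sequence[float]], float]
# An optimizer receives (objective, bounds, evaluation budget, RNG) and returns its best value.
Optimizer = Callable[[Objective, Bounds, int, random.Random], float]


def random_search(objective: Objective, bounds: Bounds,
                  max_evals: int, rng: random.Random) -> float:
    """Hypothetical placeholder optimizer: uniform sampling inside the bounds."""
    best = math.inf
    for _ in range(max_evals):
        x = [rng.uniform(lo, hi) for lo, hi in bounds]
        best = min(best, objective(x))
    return best


def run_trials(optimizers: Dict[str, Optimizer], objective: Objective, bounds: Bounds,
               runs: int = 50, max_evals: int = 1000,
               base_seed: int = 0) -> Dict[str, List[float]]:
    """Best value of every independent run, per algorithm; run r always uses seed base_seed + r."""
    return {
        name: [opt(objective, bounds, max_evals, random.Random(base_seed + r))
               for r in range(runs)]
        for name, opt in optimizers.items()
    }
```

Because every run is seeded explicitly, calling `run_trials` twice with the same arguments returns identical result lists, which keeps boxplots and statistical tables reproducible.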

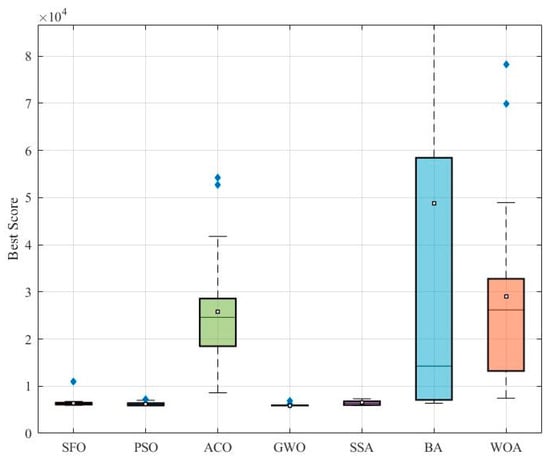

Figure 7 shows the boxplot of the best fitness values achieved by each algorithm. While SFO displays a relatively concentrated distribution with few outliers, it is slightly outperformed by GWO and PSO in terms of median and overall spread. Notably, GWO achieves the smallest variation, indicating highly stable performance, whereas BA and WOA exhibit much larger dispersion.

Figure 7.

Boxplot of optimization results for the pressure vessel problem.

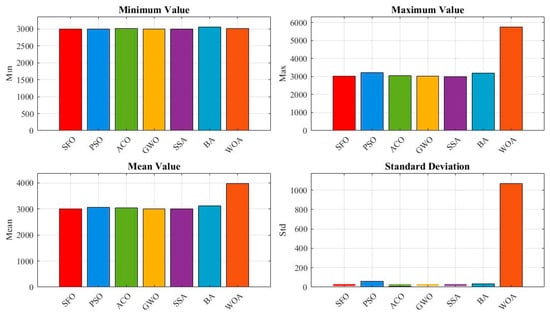

Figure 8 provides a breakdown of four statistical metrics: minimum, maximum, mean, and standard deviation. SFO achieves competitive results, with moderate mean and variance values, but trails behind GWO and PSO, both of which demonstrate lower mean and standard deviation, suggesting better convergence precision and consistency.
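The four indicators in Figure 8, together with the median and quartiles that a boxplot such as Figure 7 displays, can be computed from the 50 best-fitness values of each algorithm. The sketch below uses only the Python standard library; the text does not state whether the sample or the population standard deviation was used, so the sample version (`statistics.stdev`) is an assumption, as is the quartile method.

```python
import statistics
from typing import Dict, Sequence


def summarize(best_values: Sequence[float]) -> Dict[str, float]:
    """Min/max/mean/std of the per-run best values, plus the boxplot median and IQR."""
    q1, median, q3 = statistics.quantiles(best_values, n=4)  # default 'exclusive' method
    return {
        "min": min(best_values),
        "max": max(best_values),
        "mean": statistics.fmean(best_values),
        "std": statistics.stdev(best_values),  # sample standard deviation (assumed)
        "median": median,
        "iqr": q3 - q1,
    }
```

Different quartile conventions (for example `method="inclusive"`) shift the box edges slightly for small samples, but with 50 runs the difference is usually minor.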

Figure 8.

Comparison of statistical indicators for the pressure vessel design optimization.

Table 5 lists the best solutions found by each algorithm. SFO’s best-found solution yields a relatively low objective value, though not the lowest. PSO obtains a slightly better optimum value, while ACO and WOA show significantly higher costs.

Table 5.

Comparative optimization results for the pressure vessel design.

Table 6 summarizes the statistical indicators together with the Friedman ranking and the Wilcoxon signed-rank test results. SFO ranks third overall (F-Rank = 3), behind GWO (Rank 1) and PSO (Rank 2). According to the Wilcoxon test, SFO is statistically superior to several weaker algorithms (e.g., ACO, BA, and WOA), whereas its differences from GWO and PSO are not statistically significant (p > 0.05).
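The F-Rank and Wilcoxon columns can be reproduced in outline with the standard-library sketch below. It rests on assumptions the text does not state: runs are paired by index across algorithms (each run index is one Friedman block), lower fitness is better, and the Wilcoxon p-value uses the two-sided normal approximation with a tie correction rather than an exact distribution, so it may differ slightly from library implementations such as SciPy's.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ascending ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_mean_ranks(results: Sequence[Sequence[float]]) -> List[float]:
    """results[r][a] = best value of algorithm a in run r; returns each algorithm's mean rank."""
    per_run = [average_ranks(row) for row in results]
    n_algs = len(results[0])
    return [sum(ranks[a] for ranks in per_run) / len(per_run) for a in range(n_algs)]


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test (normal approximation); returns (T, p)."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences are discarded
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    var = n * (n + 1) * (2 * n + 1) / 24
    # tie correction: subtract (c^3 - c) / 48 for every group of c equal |differences|
    var -= sum(c ** 3 - c for c in Counter(abs(d) for d in diffs).values()) / 48
    if var <= 0:
        return t, 1.0
    z = (t - n * (n + 1) / 4) / math.sqrt(var)
    return t, math.erfc(abs(z) / math.sqrt(2))
```

Sorting the algorithms by their mean rank gives the F-Rank order, and a pair of algorithms is declared significantly different when the returned p-value falls below 0.05.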

Table 6.

Comparative results and significance analysis for the pressure vessel optimization.

In summary, although SFO does not outperform all competitors on this specific problem, it achieves stable and competitive performance and remains statistically better than several mainstream algorithms. This suggests that SFO is a reliable optimization approach, though further tuning or hybridization may be needed for pressure vessel design tasks with strong structural constraints.

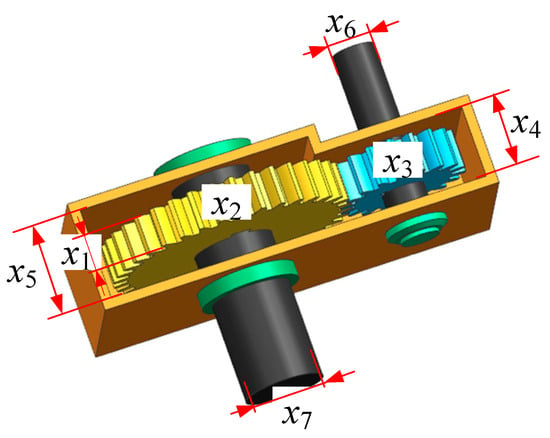

4.2. Speed Reducer Design Problem

The speed reducer design problem is a classical constrained engineering optimization problem. The objective of this problem is to minimize the total weight of the speed reducer. As illustrated in Figure 9, the speed reducer consists of two independent shafts equipped with gears, which are sequentially connected to the main shaft via bearings. The optimization problem involves seven design variables and eleven inequality constraints. The decision variables include the following: face width (x1), module of the teeth (x2), number of teeth on the pinion (x3), length of the first shaft between bearings (x4), length of the second shaft between bearings (x5), diameter of the first shaft (x6), and diameter of the second shaft (x7).

Figure 9.

Schematic diagram of the speed reducer design problem.

The detailed mathematical formulation is provided below:

consider:

Minimize:

Subject to:

Bounds:
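The classical formulation of this problem can likewise be sketched in Python. The coefficients, the eleven constraints in g(x) ≤ 0 form, and the bounds below are the version most widely used in the literature and are assumed here rather than taken from this paper; the function names are hypothetical. They appear consistent with Table 7: the design commonly reported as best known, x ≈ (3.5, 0.7, 17, 7.3, 7.7153, 3.3502, 5.2867), evaluates to about 2994.47 under this formulation, which matches the SSA value.

```python
import math
from typing import List, Sequence

# Assumed bounds for x1..x7 (common literature variant); x3 is a tooth count.
SR_BOUNDS = [(2.6, 3.6), (0.7, 0.8), (17.0, 28.0), (7.3, 8.3),
             (7.3, 8.3), (2.9, 3.9), (5.0, 5.5)]


def sr_weight(x: Sequence[float]) -> float:
    """Total weight of the speed reducer (objective to minimize)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2 ** 2 * (3.3333 * x3 ** 2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6 ** 2 + x7 ** 2)
            + 7.4777 * (x6 ** 3 + x7 ** 3)
            + 0.7854 * (x4 * x6 ** 2 + x5 * x7 ** 2))


def sr_constraints(x: Sequence[float]) -> List[float]:
    """The eleven inequality constraints, each written as g(x) <= 0."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27.0 / (x1 * x2 ** 2 * x3) - 1,                    # bending stress of gear teeth
        397.5 / (x1 * x2 ** 2 * x3 ** 2) - 1,              # surface stress
        1.93 * x4 ** 3 / (x2 * x3 * x6 ** 4) - 1,          # deflection of shaft 1
        1.93 * x5 ** 3 / (x2 * x3 * x7 ** 4) - 1,          # deflection of shaft 2
        math.sqrt((745 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110 * x6 ** 3) - 1,   # stress, shaft 1
        math.sqrt((745 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85 * x7 ** 3) - 1,   # stress, shaft 2
        x2 * x3 / 40 - 1,                                   # space limitation
        5 * x2 / x1 - 1,                                    # face width / module, lower limit
        x1 / (12 * x2) - 1,                                 # face width / module, upper limit
        (1.5 * x6 + 1.9) / x4 - 1,                          # shaft 1 design rule
        (1.1 * x7 + 1.9) / x5 - 1,                          # shaft 2 design rule
    ]
```

Because x3 counts teeth, it is usually rounded to an integer before evaluation; the constraints can then be folded into a single fitness value with a penalty function or a feasibility rule.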

To evaluate the performance of the proposed SFO, we applied it to the classic speed reducer design problem and compared it with six widely used metaheuristic algorithms: PSO, ACO, GWO, SSA, BA, and WOA. The comparative analysis was conducted from multiple perspectives, including solution distribution, statistical indicators, and significance tests.

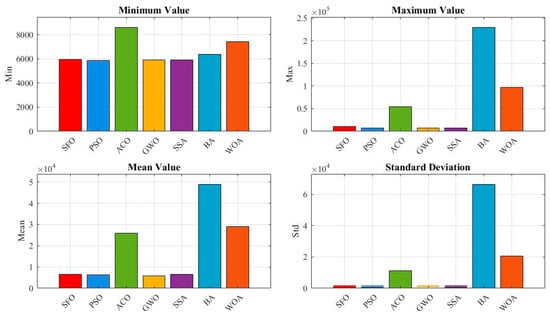

Figure 10 presents a boxplot of the best objective values obtained by each algorithm over 50 independent runs. SSA achieves the lowest median and the tightest overall spread, indicating superior consistency and performance. SFO shows a relatively narrow interquartile range and a low median value, reflecting stable performance and outperforming PSO, ACO, GWO, BA, and WOA.

Figure 10.

Comparative boxplot of algorithms for the speed reducer design.

Figure 11 provides a bar chart comparison of four statistical indicators: minimum, maximum, mean, and standard deviation. SFO ranks second in minimum and mean values, slightly behind SSA, which achieves the best scores across all metrics. The standard deviation of SFO is notably lower than that of most compared algorithms, demonstrating robust convergence.

Figure 11.

Evaluation metrics of optimization algorithms for the speed reducer design.

Table 7 lists the best design variables and corresponding objective values found by each algorithm. The best solution obtained by SFO is f(x) = 3006.9523, only about 0.42% higher than SSA’s f(x) = 2994.4711, which reinforces the conclusion that SFO is a strong competitor but is marginally outperformed by SSA. Nonetheless, SFO yields better solutions than PSO, ACO, GWO, BA, and WOA in this case.

Table 7.

Optimization outcomes of various algorithms for the speed reducer design.

Table 8 presents the statistical comparison results, including Min, Max, Mean, Std, Friedman ranking (F-Rank), Wilcoxon test outcomes, and corresponding p-values. SFO obtains a Friedman Rank of 2, second only to SSA, which ranks first. The Wilcoxon test confirms that the performance difference between SFO and the weaker algorithms (e.g., WOA, BA, and ACO) is statistically significant at the 0.05 level. Although SSA surpasses SFO in terms of optimality, the performance of SFO is statistically superior to most other algorithms.

Table 8.

Evaluation and statistical testing of the algorithms on the speed reducer design.

Overall, SFO demonstrates strong optimization capabilities on the speed reducer design problem. While SSA exhibits the best overall performance, SFO consistently outperforms PSO, ACO, GWO, BA, and WOA across most metrics, showing its effectiveness and stability.

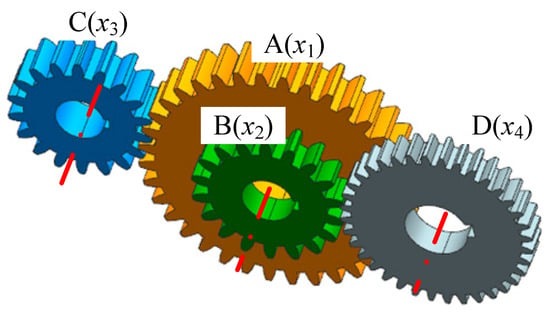

4.3. Gear Train Design Problem

The gear train design problem is a typical unconstrained discrete integer optimization problem in mechanical engineering. The objective is to minimize the gear ratio error between the input and output shafts. The gear ratio is defined as the ratio of the angular velocity of the output shaft to that of the input shaft. Let the number of teeth on gears A, B, C, and D be denoted as x1, x2, x3, and x4, respectively, as shown in Figure 12.

Figure 12.

Schematic diagram of the gear train design problem.

The mathematical model of the optimization problem is formulated as follows:

consider:

Minimize:

Subject to:
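A hedged sketch of this problem, assuming the formulation most commonly used in the literature: minimize f(x) = (1/6.931 − x2·x3/(x1·x4))² over integer tooth counts 12 ≤ xi ≤ 60. The target ratio, the bounds, and the placement of x1 to x4 in the ratio are that common variant and are not taken from this paper. `decode` is a hypothetical rounding-and-clipping step showing one way a continuous optimizer such as SFO could handle the integer variables. Because the error depends only on the products x2·x3 and x1·x4, the whole search space can also be enumerated exactly at negligible cost.

```python
import bisect
from itertools import product
from typing import Dict, Optional, Sequence, Tuple

TARGET_RATIO = 1 / 6.931  # assumed target ratio (common literature value)
LO, HI = 12, 60           # assumed tooth-count bounds


def decode(position: Sequence[float]) -> Tuple[int, ...]:
    """Hypothetical step: round a continuous position and clip it to valid tooth counts."""
    return tuple(min(HI, max(LO, round(v))) for v in position)


def gear_error(x: Sequence[int]) -> float:
    """Squared deviation of the realized ratio x2*x3/(x1*x4) from the target."""
    x1, x2, x3, x4 = x
    return (TARGET_RATIO - (x2 * x3) / (x1 * x4)) ** 2


def exhaustive_optimum() -> Tuple[float, Optional[Tuple[int, int, int, int]]]:
    """Exact search over all tooth counts, exploiting that only two products matter."""
    pairs: Dict[int, Tuple[int, int]] = {}
    for a, b in product(range(LO, HI + 1), repeat=2):
        pairs.setdefault(a * b, (a, b))  # keep one factor pair per product value
    prods = sorted(pairs)
    best_err, best_x = float("inf"), None
    for num in prods:                    # candidate value of x2*x3
        ideal_den = num / TARGET_RATIO   # x1*x4 that would hit the target exactly
        i = bisect.bisect_left(prods, ideal_den)
        for j in (i - 1, i):             # nearest available products on either side
            if 0 <= j < len(prods):
                den = prods[j]
                err = (TARGET_RATIO - num / den) ** 2
                if err < best_err:
                    (x2, x3), (x1, x4) = pairs[num], pairs[den]
                    best_err, best_x = err, (x1, x2, x3, x4)
    return best_err, best_x
```

Under this assumed formulation the minimum error is small but strictly positive: 6.931 = 6931/1000 with 6931 = 29 × 239, and since 239 is prime and larger than 60, no integer tooth counts reproduce the ratio exactly. For instance, (53, 30, 13, 51) gives an error of about 2.3 × 10⁻¹¹.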

To further evaluate the effectiveness of the proposed SFO, this section investigates its performance on the gear train design problem, a discrete, integer, and nonlinear optimization task in mechanical systems. The goal is to minimize the deviation from a target gear ratio. SFO is compared with six established metaheuristic algorithms: PSO, ACO, GWO, SSA, BA, and WOA.

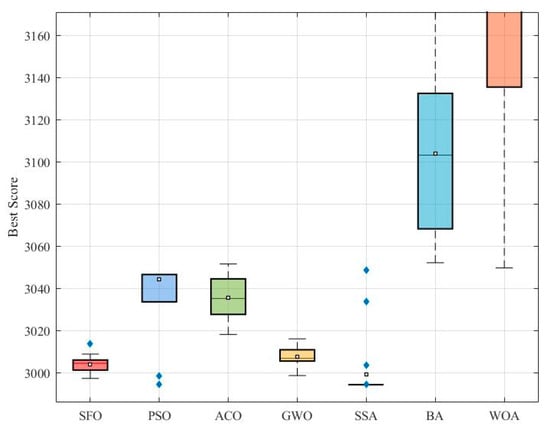

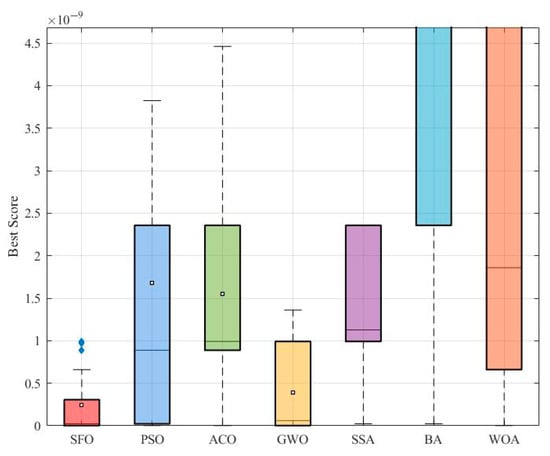

As shown in Figure 13, the boxplot reveals the distribution of the best objective values over 50 independent runs. The SFO algorithm clearly outperforms all other algorithms, achieving the lowest median value and the narrowest spread. In contrast, algorithms such as BA and WOA exhibit much higher variability and worse overall solution quality, with significant outliers. This indicates that SFO not only finds better solutions but also maintains high stability and robustness.

Figure 13.

Comparative boxplot of algorithms for the gear train design problem.

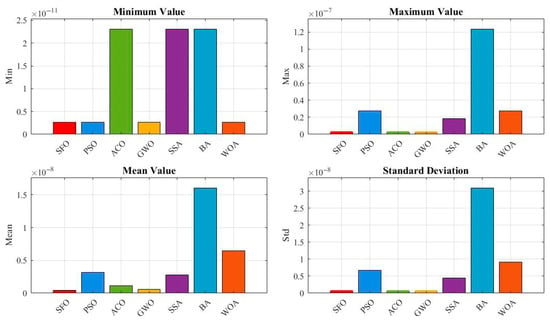

Figure 14 provides a quantitative comparison in terms of minimum, maximum, mean, and standard deviation values. SFO achieves the lowest mean and standard deviation, highlighting its consistent convergence toward the global optimum. Notably, although all algorithms reach a best fitness of 0 at the reported precision (as shown in Table 9), the statistical indicators in this figure show that only SFO consistently converges to near-zero errors with minimal variance, while the other algorithms exhibit higher average errors and wider dispersion.

Figure 14.

Evaluation metrics of the algorithms for the gear train design problem.

Table 9 lists the best gear tooth combinations and corresponding objective values for each algorithm. All algorithms achieve an objective value of 0 (at the reported precision) at least once, demonstrating the solvability of the problem. However, the combination identified by SFO, (53, 30, 13, 51), is a configuration that closely matches the desired gear ratio, and the robustness of SFO in repeatedly finding such solutions is further confirmed by the statistical results in Table 10.

Table 9.

Optimization outcomes of various algorithms for the gear train design problem.

Table 10 presents the statistical analysis of algorithm performance, including minimum, maximum, mean, standard deviation, Friedman ranking (F-Rank), Wilcoxon signed-rank test, and p-values. SFO ranks first (F-Rank = 1), with a mean value of 4.491 × 10⁻¹⁰ and the smallest standard deviation of 4.848 × 10⁻¹⁰. The Wilcoxon test confirms that the performance of SFO is statistically superior to all other competitors at the 0.05 significance level. These results strongly support the effectiveness and reliability of SFO for discrete, nonlinear problems such as gear train design.

Table 10.

Evaluation and statistical testing of the algorithms on the gear train design problem.

Across the three engineering design problems investigated—pressure vessel design, speed reducer design, and gear train design—the proposed SFO consistently demonstrates competitive optimization performance. Although SFO is slightly outperformed by GWO and PSO in the pressure vessel case, it achieves stable convergence and ranks among the top three algorithms. In the speed reducer problem, SFO exhibits superior performance over five of the six compared algorithms, trailing only SSA by a narrow margin. Most notably, in the gear train design problem, SFO ranks first in all statistical indicators, clearly outperforming all competitors in both accuracy and robustness. These results collectively highlight the generalizability, reliability, and strong optimization capability of SFO across a diverse set of engineering scenarios, including both continuous and discrete design domains.

These engineering cases, together with the preceding CEC2022 benchmark tests, demonstrate that SFO is not limited to specific types of problems but exhibits strong versatility across diverse optimization scenarios. It performs reliably on both numerical and real-world design problems, including continuous and discrete domains, unimodal and multimodal landscapes, and problems with complex structural constraints. While no algorithm can guarantee superiority in all possible cases—as asserted by the No-Free-Lunch (NFL) theorem—the experimental results confirm that SFO is a robust and competitive alternative for solving complex engineering and computational optimization tasks.

5. Conclusions

This paper presents the sharpbelly fish optimizer (SFO), a novel bio-inspired metaheuristic algorithm based on the ecological behaviors of the sharpbelly (Hemiculter leucisculus). By integrating four adaptive behaviors (fast swimming, swarm gathering, stagnation-triggered dispersal, and escape under disturbance), SFO achieves an effective balance between global exploration and local exploitation. The main contributions and findings are summarized as follows:

(1) SFO introduces a biologically driven search strategy that combines fitness-based motion and population diversity regulation. The algorithm is computationally efficient, with linear time complexity in terms of population size, problem dimension, and iteration count.

(2) On the CEC2022 benchmark suite, SFO demonstrates competitive or superior convergence accuracy, speed, and robustness compared to several state-of-the-art algorithms, including PSO, ACO, GWO, SSA, and others.

(3) SFO has been validated on three representative engineering design problems covering both continuous and discrete domains. It ranks among the top three algorithms on every problem (third on pressure vessel design, second on speed reducer design, and first on gear train design), confirming its adaptability and reliability in practical engineering scenarios.

These results confirm that SFO is a versatile and effective optimization tool, capable of solving complex real-world problems with various structural characteristics and constraints.

While the SFO algorithm demonstrates strong convergence speed, stability, and adaptability across various benchmark functions, it still has certain limitations. For instance, its performance may degrade in extremely high-dimensional or highly deceptive multimodal landscapes, where more sophisticated memory-based or adaptive mechanisms may be required. Nevertheless, its simple structure, ease of implementation, and competitive performance make it a promising candidate for addressing a wide range of practical optimization problems in engineering and industrial applications.

Future work will focus on enhancing SFO through hybridization with neural or evolutionary mechanisms, extending it to multi-objective, dynamic, or multimodal scenarios, and exploring broader applications such as intelligent scheduling and automated industrial design.

Author Contributions

Conceptualization, J.L. and Y.D.; methodology, J.L.; software, R.W.; validation, R.W. and Y.D.; formal analysis, X.H.; investigation, Z.L.; resources, J.L.; data curation, R.W.; writing—original draft preparation, J.L.; writing—review and editing, Y.D.; visualization, X.H.; supervision, J.L.; project administration, J.L.; and funding acquisition, J.L. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Sichuan, China (No. 25LHJJ0373), the Science Fund of Chengdu Technological University (No. 2023ZR001), the Doctoral Talents Project of Chengdu Technological University (2025RC046), the National Funded Postdoctoral Research Program (No. GZC20241900), the Natural Science Foundation Program of Xinjiang Uygur Autonomous Region (No. 2024D01A141), the Tianchi Talents Program of Xinjiang Uygur Autonomous Region (Li Zhibin), and the Postdoctoral Fund of Xinjiang Uygur Autonomous Region (Li Zhibin).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are available within the article.

Acknowledgments

The authors would like to express their sincere gratitude to the technical staff and research members of the laboratory team for their assistance in conducting the robot calibration experiments and providing valuable support throughout the study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chakraborty, A.; Kar, A.K. Swarm intelligence: A review of algorithms. Nat.-Inspired Comput. Optim. 2017, 475, 475–494.

- Brezočnik, L.; Fister, I., Jr.; Podgorelec, V. Swarm intelligence algorithms for feature selection: A review. Appl. Sci. 2018, 8, 1521.

- Tang, J.; Liu, G.; Pan, Q. A review on representative swarm intelligence algorithms for solving optimization problems: Applications and trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Sun, W.; Tang, M.; Zhang, L.; Huo, Z.; Shu, L. A survey of using swarm intelligence algorithms in IoT. Sensors 2020, 20, 1420.

- Parpinelli, R.S.; Lopes, H.S. New inspirations in swarm intelligence: A survey. Int. J. Bio-Inspired Comput. 2011, 3, 1–16.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Dorigo, M.; Birattari, M.; Stützle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2007, 1, 28–39.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–7 December 1995; Volume 4, pp. 1942–1948.

- Yang, X.S.; He, X. Bat algorithm: Literature review and applications. Int. J. Bio-Inspired Comput. 2013, 5, 141–149.

- Johari, N.F.; Zain, A.M.; Noorfa, M.H.; Udin, A. Firefly algorithm for optimization problem. Appl. Mech. Mater. 2013, 421, 512–517.

- Xue, J.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. 2020, 8, 22–34.

- Deng, Y.; Hou, X.; Li, B.; Wang, J.; Zhang, Y. A highly powerful calibration method for robotic smoothing system calibration via using adaptive residual extended Kalman filter. Robot. Comput.-Integr. Manuf. 2024, 86, 102660.

- Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 2021, 181, 115079.

- Mehta, P.; Yildiz, B.S.; Sait, S.M.; Yildiz, A.R. Hunger games search algorithm for global optimization of engineering design problems. Mater. Test. 2022, 64, 524–532.

- Zhao, W.; Wang, L.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Fang, R.; Zhou, T.; Yu, B.; Li, Z.; Ma, L.; Zhang, Y. Dung Beetle Optimization Algorithm Based on Improved Multi-Strategy Fusion. Electronics 2025, 14, 197.

- El-Kenawy, E.S.M.; Khodadadi, N.; Mirjalili, S.; Abdelhamid, A.A.; Eid, M.M.; Ibrahim, A. Greylag goose optimization: Nature-inspired optimization algorithm. Expert Syst. Appl. 2024, 238, 122147.

- Tripathy, B.K.; Reddy Maddikunta, P.K.; Pham, Q.V.; Gadekallu, T.R.; Dev, K.; Pandya, S.; ElHalawany, B.M. Harris hawk optimization: A survey on variants and applications. Comput. Intell. Neurosci. 2022, 2022, 2218594.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Cai, T.; Zhang, S.; Ye, Z.; Zhou, W.; Wang, M.; He, Q.; Bai, W. Cooperative metaheuristic algorithm for global optimization and engineering problems inspired by heterosis theory. Sci. Rep. 2024, 14, 28876.

- Kouka, S.; Makhadmeh, S.N.; Al-Betar, M.A.; Dalbah, L.M.; Nachouki, M. Recent Applications and Advances of Migrating Birds Optimization. Arch. Comput. Methods Eng. 2024, 31, 243–262.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Wang, T.; Jakovlić, I.; Huang, D.; Wang, J.G.; Shen, J.Z. Reproductive strategy of the invasive sharpbelly, Hemiculter leucisculus (Basilewsky 1855), in Erhai Lake, China. J. Appl. Ichthyol. 2016, 32, 324–331.

- Guo, J.; Mo, J.; Zhao, Q.; Han, Q.; Kanerva, M.; Iwata, H.; Li, Q. De novo transcriptomic analysis predicts the effects of phenolic compounds in Ba River on the liver of female sharpbelly (Hemiculter lucidus). Environ. Pollut. 2020, 264, 114642.

- Chen, W.; Zhong, Z.; Dai, W.; Fan, Q.; He, S. Phylogeographic structure, cryptic speciation and demographic history of the sharpbelly (Hemiculter leucisculus), a freshwater habitat generalist from southern China. BMC Evol. Biol. 2017, 17, 216.

- Dong, X.; Ju, T.; Grenouillet, G.; Laffaille, P.; Lek, S.; Liu, J. Spatial pattern and determinants of global invasion risk of an invasive species, sharpbelly Hemiculter leucisculus (Basilewsky, 1855). Sci. Total Environ. 2020, 711, 134661.

- Wang, D.; Tan, D.; Liu, L. Particle swarm optimization algorithm: An overview. Soft Comput. 2018, 22, 387–408.

- Dorigo, M.; Stützle, T. Ant colony optimization: Overview and recent advances. In Handbook of Metaheuristics; Springer: Cham, Switzerland, 2018; pp. 311–351.

- Mirjalili, S.M. Genetic algorithm. In Evolutionary Algorithms and Neural Networks: Theory and Applications; Springer: Cham, Switzerland, 2019; pp. 43–55.

- Faris, H.; Aljarah, I.; Al-Betar, M.A.; Mirjalili, S. Grey wolf optimizer: A review of recent variants and applications. Neural Comput. Appl. 2018, 30, 413–435.

- Gharehchopogh, F.S.; Namazi, M.; Ebrahimi, L.; Abdollahzadeh, B. Advances in sparrow search algorithm: A comprehensive survey. Arch. Comput. Methods Eng. 2023, 30, 427–455.

- Dao, T.K.; Nguyen, T.T. A review of the bat algorithm and its varieties for industrial applications. J. Intell. Manuf. 2024, 1–23.

- Amiriebrahimabadi, M.; Mansouri, N. A comprehensive survey of feature selection techniques based on whale optimization algorithm. Multimed. Tools Appl. 2024, 83, 47775–47846.

- Hassan, S.; Kumar, K.; Raj, C.D.; Sridhar, K. Design and optimisation of pressure vessel using metaheuristic approach. Appl. Mech. Mater. 2014, 465, 401–406.

- Yuan, R.; Li, H.; Gong, Z.; Tang, M.; Li, W. An enhanced Monte Carlo simulation–based design and optimization method and its application in the speed reducer design. Adv. Mech. Eng. 2017, 9, 1687814017728648.

- Alam, J.; Panda, S.; Dash, P. A comprehensive review on design and analysis of spur gears. Int. J. Interact. Des. Manuf. 2023, 17, 993–1019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).