1. Introduction

Brain-like neuromorphic hardware is commonly used for pattern recognition tasks, and has demonstrated a strong performance in speech recognition, image classification, and obstacle avoidance. Wu et al. [

1] implemented a spiking neural network (SNN) with dynamic memristor-based time-surface neurons on complementary metal–oxide semiconductor (CMOS) hardware network architecture, and applied it in a speech recognition task on the Heidelberg digits dataset. They found that the speech recognition accuracy of this SNN hardware is 95.91%. Han et al. [

2] implemented SNN on Field-Programmable Gate Arrays (FPGA), and applied it in a handwritten digits classification task on the MNIST dataset. They found that the classification accuracy of this SNN hardware is 97.06%, and the power consumption is only 0.477 W. However, electromagnetic interference can damage the components of electronic equipment, resulting in a decline or even failure of their normal function [

3]. As a product of natural selection, the human brain is adaptive to exterior attack [

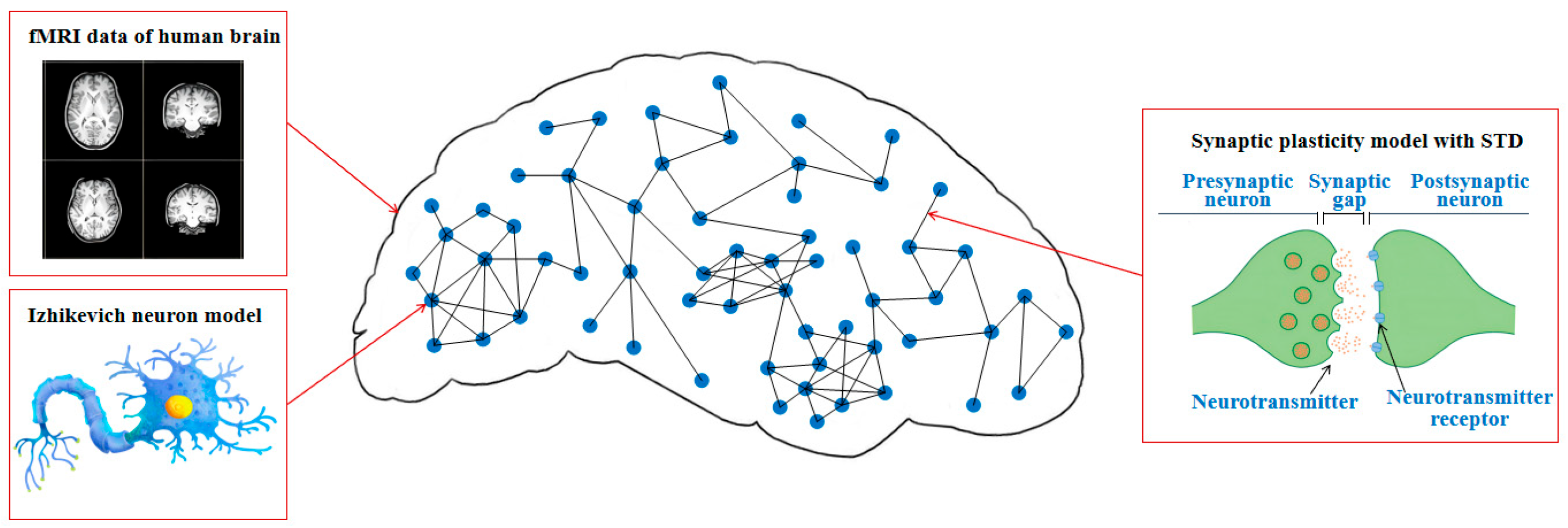

4]. Thus, a brain-like model, by incorporating self-adaptability, could be expected to improve its robustness; however, existing models are limited by their lack of bio-plausibility. As a brain-like model, the SNN contains neuron models, synaptic plasticity models, and topology, which offers a powerful processing capability for temporal-related signals through simulating electrophysiological properties [

5].

Neurons are the fundamental structural and functional units of the brain, and neuron models have been proposed to simulate their electrophysiological characteristics using mathematical equations. As a first-order ordinary differential equation (ODE), the leaky integrate-and-fire (LIF) neuron model reproduces the integration and firing process of the neuronal membrane potential (MP) at a low computing cost, but it does not conform well to the firing characteristics of biological neurons and therefore lacks bio-reality [6]. Owing to the simplicity of the LIF model, Rhodes et al. [7] employed it to construct an SNN on the SpiNNaker neuromorphic hardware platform and measured its computing speed and energy efficiency. They found that both were higher with parallelized spike communication than without it. Conversely, the Hodgkin–Huxley (HH) neuron model conforms well to the firing characteristics of the neuron, but its fourth-order ODE imposes a high computing cost [8]. Ward et al. [9] employed the HH model and the LIF model, respectively, to construct SNNs on the SpiNNaker neuromorphic hardware platform and compared their computing time and firing behaviors. They found that, compared with the hardware using the LIF model, the hardware using the HH model had a longer computation time and more diverse firing behaviors. These results verify that neuromorphic hardware with the LIF model has lower bio-reality, whereas neuromorphic hardware with the HH model has a greater computation cost. The Izhikevich neuron model, as a second-order ODE [10], conforms well to the firing characteristics of the neuron while maintaining a low computing cost, and can be broadly applied in the construction of brain-like models. Faci-Lázaro et al. [11] built an SNN incorporating the Izhikevich model and investigated the network's spontaneous activation, which was influenced by both dynamic and topological factors. Their research demonstrated that higher connectivity, shorter internodal distances, and greater clustering collectively reduce the synaptic strength necessary to sustain network activity. Li et al. [12] employed the Izhikevich model to construct an SNN on an RRAM-based neuromorphic chip and investigated its signal transmission. They found that the spike signals generated by the Izhikevich model have rich firing patterns and a high computation efficiency, which allows encoding efficiency to be maintained even under exterior noise, thereby reducing the signal distortion of the chip. These results demonstrate that a brain-like model built on the Izhikevich model combines higher bio-reality with a lower computation cost.
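To make the cost and behavior trade-off concrete, the following is a minimal sketch of a single Izhikevich neuron integrated with the forward-Euler method. The two coupled equations and the reset rule follow the standard published form of the model, and the default parameters are the commonly used regular-spiking values; the function name, the constant input current, the time step, and the simulation length are illustrative assumptions and are not taken from this paper.

```python
def simulate_izhikevich(current, t_ms=1000.0, dt=0.5,
                        a=0.02, b=0.2, c=-65.0, d=8.0):
    """Integrate one Izhikevich neuron and return its spike times in ms.

    v is the membrane potential (mV) and u is the recovery variable.
    `current` is a constant input current (an assumption for illustration).
    """
    v = c          # start at the reset potential
    u = b * v      # recovery variable consistent with that potential
    spikes = []
    steps = int(t_ms / dt)
    for k in range(steps):
        # dv/dt = 0.04 v^2 + 5 v + 140 - u + I
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        # du/dt = a (b v - u)
        u += dt * a * (b * v - u)
        if v >= 30.0:            # spike cutoff: record the spike and reset
            spikes.append(k * dt)
            v = c
            u += d
    return spikes


if __name__ == "__main__":
    spike_times = simulate_izhikevich(current=10.0)
    print(len(spike_times), "spikes in 1 s of simulated time")
```

Only two state variables are updated per step, which is where the low computing cost comes from; changing `a`, `b`, `c`, and `d` yields other firing patterns without changing the update loop.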

Synapses are important structures for information transfer between neurons, and serve as the foundation for learning and memory [

13]. The results of biological experiments indicated that excitatory synapses (ES) and inhibitory synapses (IS) jointly form the basis of dynamic modulation in the brain [

14]. Drawing on these findings, scholars have incorporated synaptic plasticity models with ES and IS into SNNs. For example, Sun and Si [

15] found that an SNN with the synaptic plasticity models co-modulated by ES and IS has higher coding efficiency than the SNN modulated only by ES or IS during the population coding. In synapses, the neurotransmitters’ diffusion across the synaptic cleft induces synaptic time delay (STD), which augments the processing of neural signals. The dynamic range of bio-STD is not fixed, but rather exhibits a stochastic distribution, varying randomly between 0.1 and 40 ms [

16]. Zhang et al. [

17] employed STD to SNN, and discovered that the learning performance of this SNN surpassed those of SNNs without STD. However, its STD was a fixed value, which did not conform to the bio-STD. The variability in STD is crucial for the modulation of the signal transfer, allowing for an adaptable response to attack. We therefore constructed a complex SNN based on synaptic plasticity models with stochastic STD distributed between 0.1 and 40 ms, conforming to the bio-STD, and discovered that the damage resistance of this SNN was superior to the SNN with fixed STD. In turn, the SNN with a fixed STD was superior to the SNN without an STD against stochastic attack [

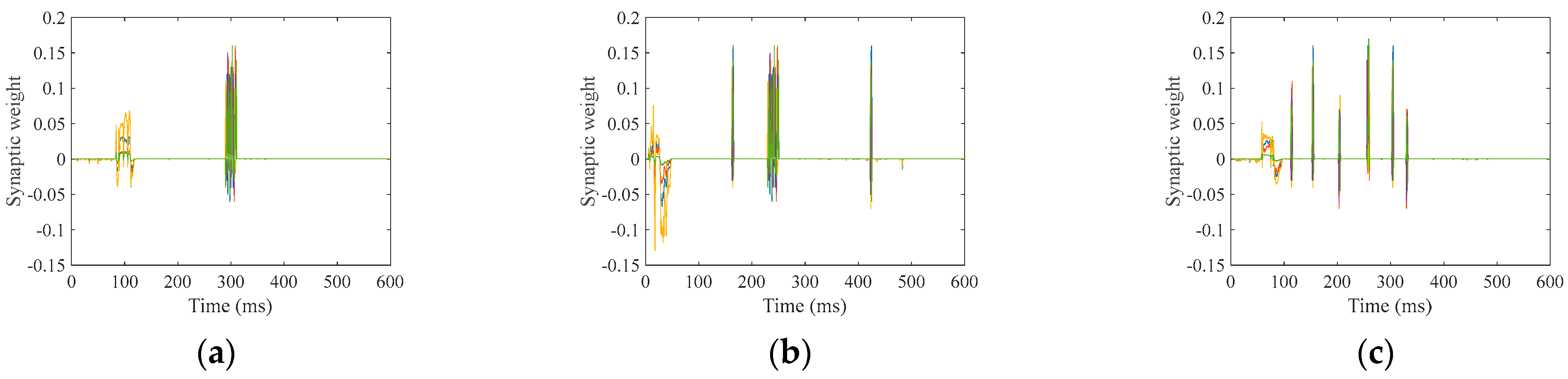

18]. Therefore, a synaptic plasticity model incorporating STD aligns with the bio-STD, is co-modulated by ES and IS, and can enhance the information processing of an SNN.
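As an illustration of what a stochastic STD means in practice, the sketch below draws one delay per synapse from the 0.1 to 40 ms range and shifts a presynaptic spike time by each delay. The source states only that the delay varies randomly within this range, so the uniform distribution used here is an assumption, as are the function names and the fixed seed.

```python
import random


def sample_delays(n_synapses, low_ms=0.1, high_ms=40.0, seed=0):
    """Draw one synaptic time delay (ms) per synapse.

    A uniform distribution over [low_ms, high_ms] is assumed for illustration;
    the actual distribution of the bio-STD is not specified here.
    """
    rng = random.Random(seed)
    return [rng.uniform(low_ms, high_ms) for _ in range(n_synapses)]


def arrival_times(spike_time_ms, delays_ms):
    """Time at which a presynaptic spike reaches each postsynaptic target."""
    return [spike_time_ms + d for d in delays_ms]


if __name__ == "__main__":
    delays = sample_delays(5)
    print(arrival_times(100.0, delays))
```

With a fixed STD every entry of `delays` would be identical, so all targets would receive the spike at the same moment; the stochastic version spreads the arrivals over time.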

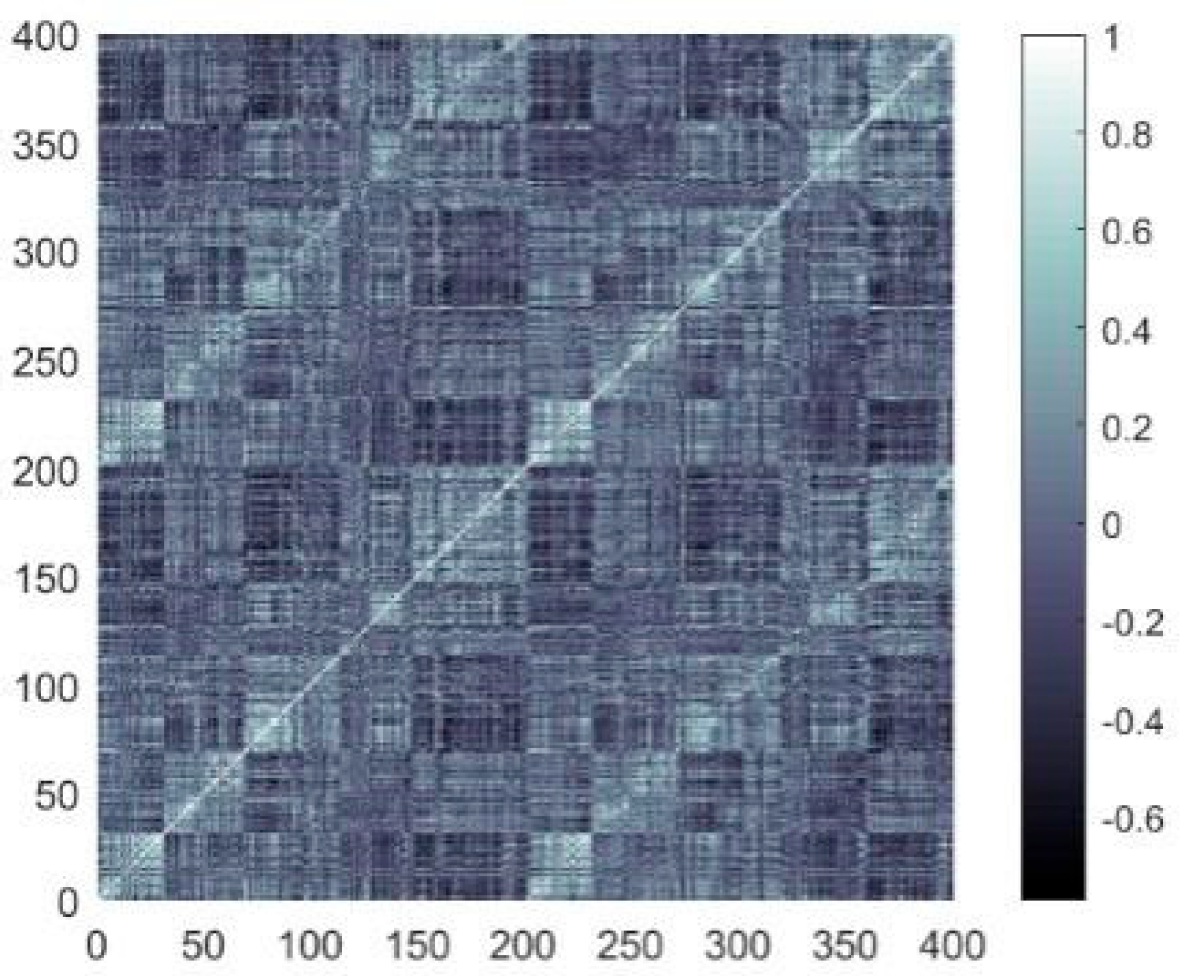

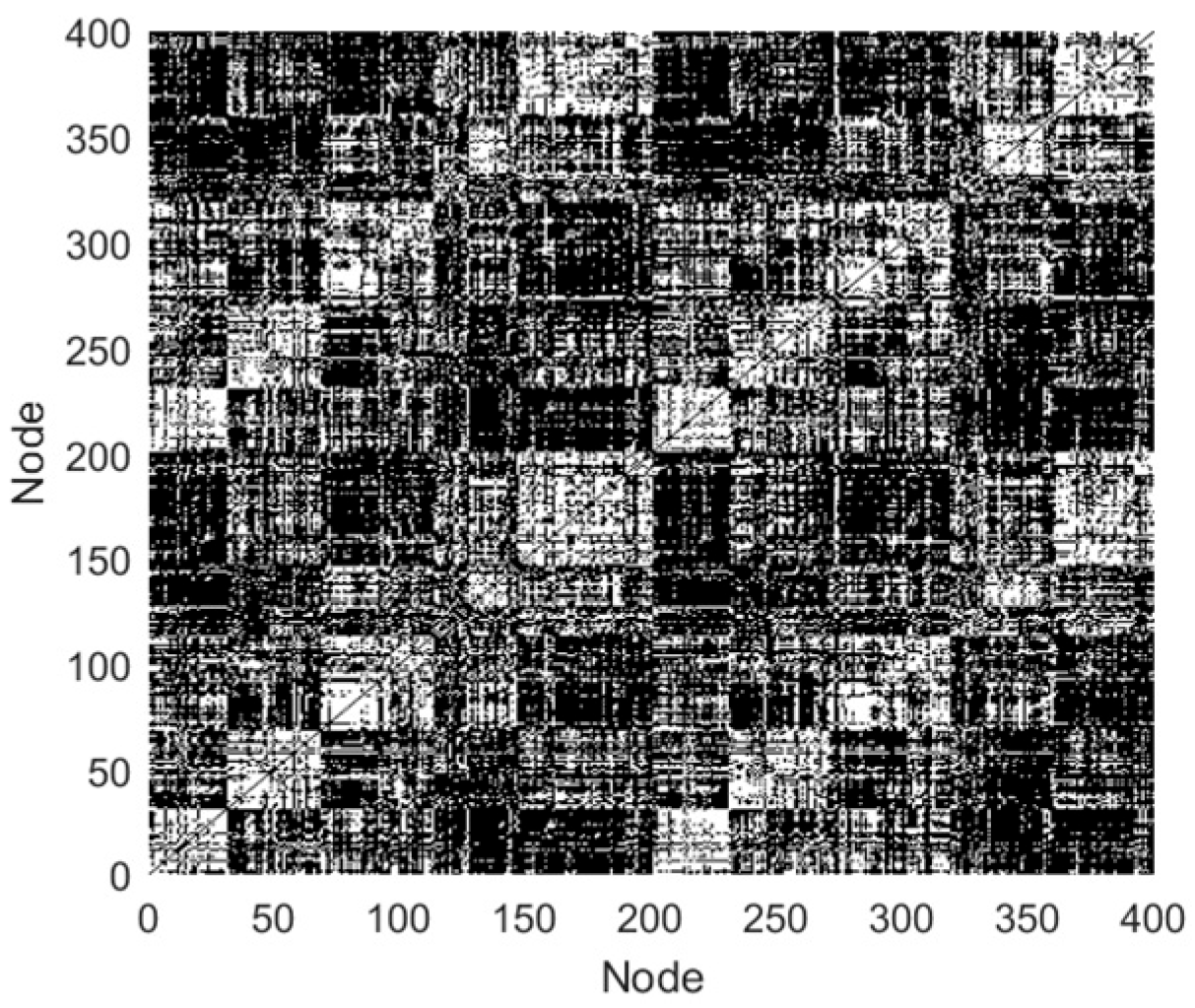

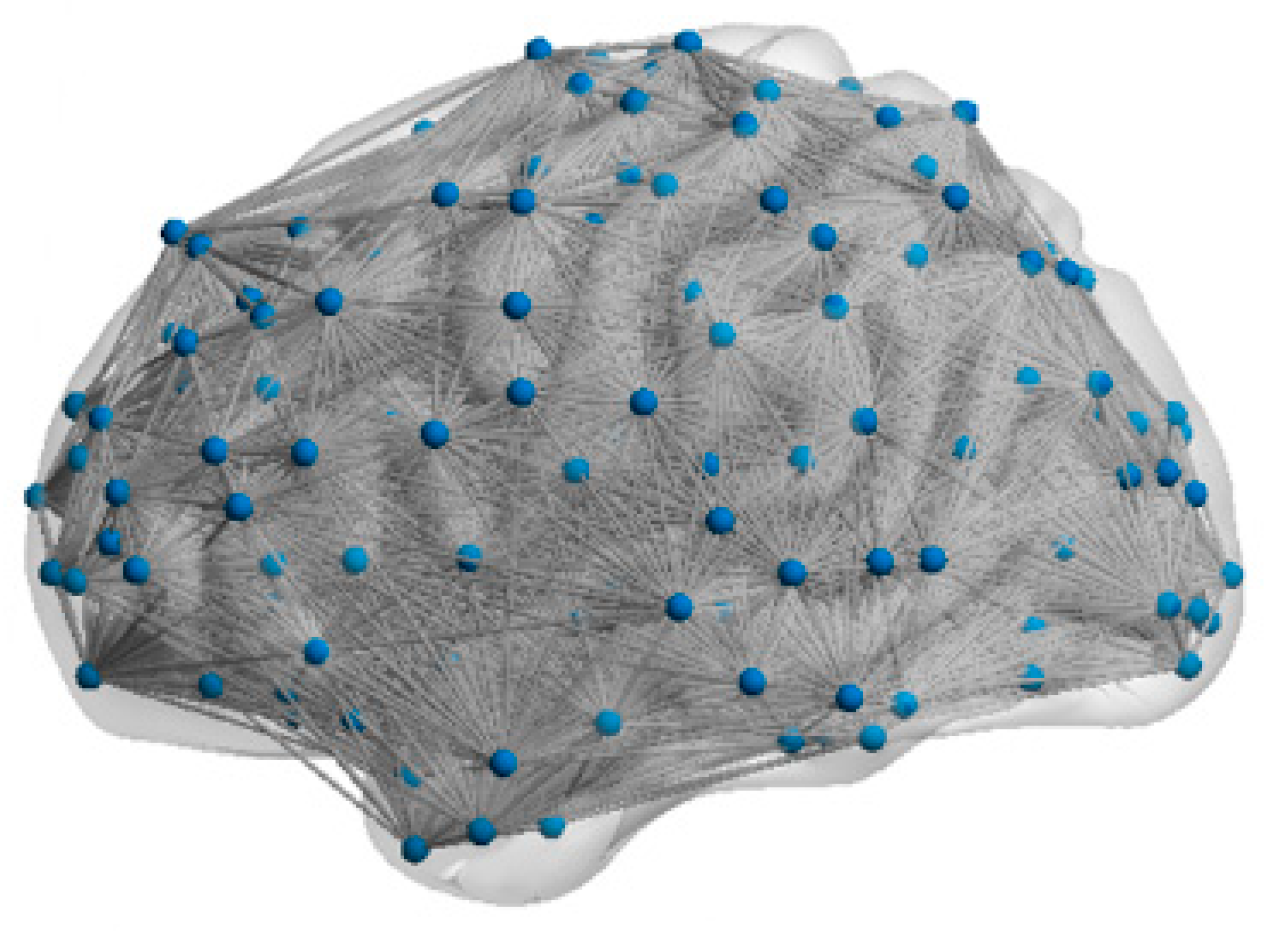

The topology reflects the connections formed among neurons and influences brain function. Van et al. [19] obtained functional brain networks (FBNs) from resting-state fMRI of healthy humans and discovered that the networks possessed small-world (SW) and scale-free (SF) features. The SW network balances a high clustering coefficient against a short path length, supporting efficient distributed signal processing. In contrast, the SF network exhibits pronounced heterogeneity in node connectivity by following a power-law degree distribution, and its hub-mediated routing improves information throughput. Drawing on these findings, scholars have constructed brain-like models with SW or SF features. For example, Budzinski et al. [20] constructed a small-world spiking neural network (SWSNN) using the Watts–Strogatz (WS) algorithm and discovered that, when the coupling strength was sufficiently high, this SWSNN transitioned from an asynchronous state to a synchronous state as the reconnection probability increased; Reis et al. [21] built a scale-free spiking neural network (SFSNN) using the Barabási–Albert (BA) algorithm and discovered that this SFSNN could inhibit burst synchronization caused by light pulse perturbation and keep synchronization low after the perturbation stopped. However, a topology created by an algorithm cannot reflect the real functional connections of the human brain. Through natural selection and evolution, the human brain has developed efficient computing power [22]. SNNs constrained by the FBN of the human brain therefore have the potential to improve neural information processing.
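The two generative algorithms mentioned above can be sketched in a few lines. The code below is a minimal, standard-library illustration of WS rewiring and BA preferential attachment that returns undirected adjacency sets; it is not the construction used in the cited studies or in this paper, and the function names, parameters, and seeds are assumptions made for the example.

```python
import random


def watts_strogatz(n, k, p, seed=0):
    """Ring lattice of n nodes, each linked to its k nearest neighbours
    (k even), with every lattice edge rewired with probability p."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(1, k // 2 + 1):
            adj[i].add((i + j) % n)
            adj[(i + j) % n].add(i)
    for j in range(1, k // 2 + 1):
        for i in range(n):
            old = (i + j) % n
            if old in adj[i] and rng.random() < p:
                # new endpoint: not i itself and not already a neighbour
                candidates = [t for t in range(n) if t != i and t not in adj[i]]
                if candidates:
                    new = rng.choice(candidates)
                    adj[i].discard(old)
                    adj[old].discard(i)
                    adj[i].add(new)
                    adj[new].add(i)
    return adj


def barabasi_albert(n, m, seed=0):
    """Grow a graph to n nodes; each new node attaches to m existing nodes
    chosen with probability proportional to their degree."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    targets = list(range(m))   # the first new node links to the m seed nodes
    repeated = []              # each node listed once per incident edge
    for new in range(m, n):
        for t in targets:
            adj[new].add(t)
            adj[t].add(new)
        repeated.extend(targets)
        repeated.extend([new] * m)
        chosen = set()
        while len(chosen) < m:  # degree-proportional sampling, no repeats
            chosen.add(rng.choice(repeated))
        targets = list(chosen)
    return adj


if __name__ == "__main__":
    sw = watts_strogatz(n=100, k=6, p=0.1)
    sf = barabasi_albert(n=100, m=3)
    print(max(len(v) for v in sw.values()), max(len(v) for v in sf.values()))
```

Comparing the maximum degree of the two graphs shows the difference described above: the WS graph keeps degrees close to `k`, whereas the BA graph develops a few highly connected hubs.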

An SNN possesses the capability to capture and transmit temporal signals through its spiking coding, which makes it superior in handling the temporal-related tasks [

23,

24]. The speech signals inherently exhibit prominent temporal characteristics, as the rhythm of phonemes, syllables, and words evolves over time. Thus, SR extends beyond the instantaneous classification of acoustic signals, encompassing the continuous monitoring and analysis of temporal dynamics in speech features across an extended time sequence [

25]. A liquid state machine (LSM) serves as a framework for recognition tasks, where the reserve layer formed an SNN [

26]. This SNN is responsible for transforming the input data from its original lower-dimensional space into a higher-dimensional space, where distinct categories tend to become more recognizable. Additionally, the independence of the reserve layer and reading function in the LSM allows for easier training of the reading function rather than the entire network [

27]. Hence, the LSM has been applied to the task of SR. Zhang et al. [

28] built an LSM utilizing recurrent SNN with a synaptic plasticity that conformed to Hebbian theory, and observed that its SR outperformed a recurrent SNN with a synaptic plasticity of long short-term memory on the TI-46 dataset. Deckers et al. [

29] built an LSM co-modulated by ES and IS, and observed that its SR accuracy outperformed the SNN modulated by the ES on the TI-46 speech corpus. Moreover, the performance of an SNN is influenced by its topology. Srinivasan et al. [

30] built an LSM with topology featuring a distributed multi-liquid topology, and observed that its performance outperformed the SNN with a random topology on the TI-46 speech corpus. In our previous research [

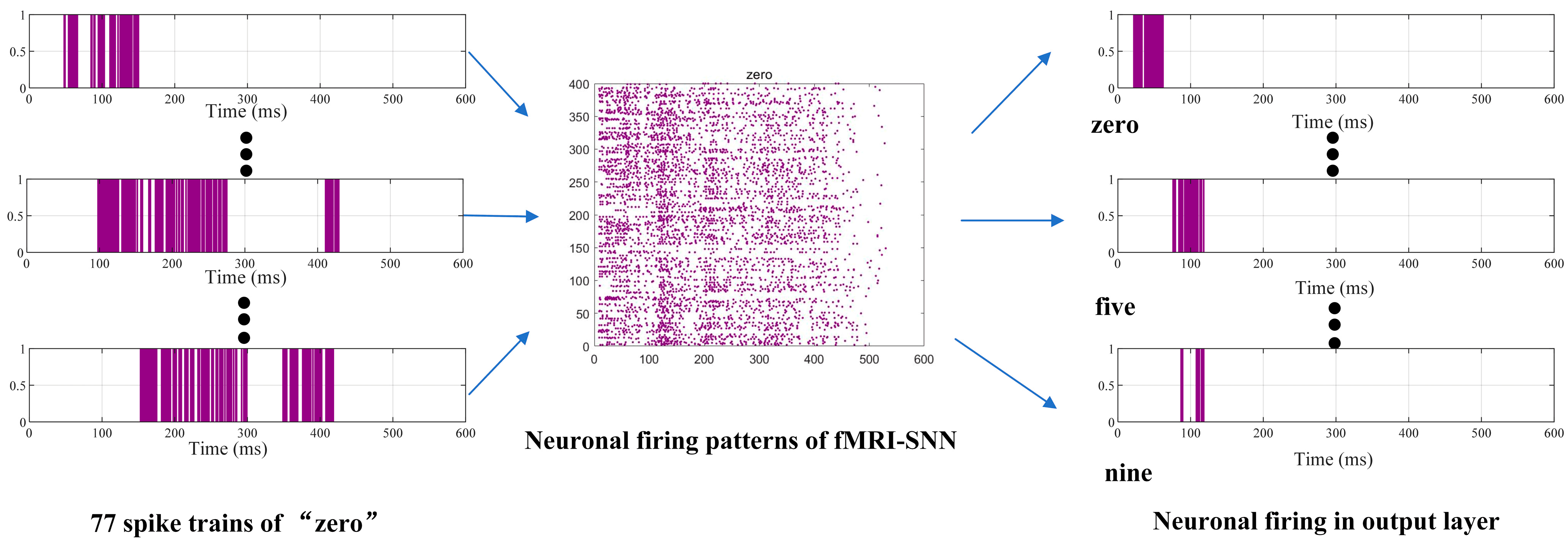

31,

32], we constructed a brain-like model called fMRI-SNN, whose topology was constrained by human brain fMRI data, and discovered that its SR accuracy outperformed that of SNNs with distinct topologies on the TI-46 speech corpus. These findings suggest that improving the bio-plausibility of SNN can enhance its performance, and SR tasks serve as an effective benchmark for certifying the performance of SNN.

Research in the field of biological neuroscience has shown that the human brain is robust to injury [

33]. Drawing on these findings, the robustness of brain-like models, including interference resistance and damage resistance, has been investigated. In terms of interference resistance, Zhang et al. [

34] found that a novel hybrid residual SNN could eliminate exterior electric-field noise by controlling the pulse frequency, with a recognition accuracy that was only 2.15% lower than that of an SNN without exterior electric-field noise on the neuromorphic DVS128 Gesture dataset. In our previous work [

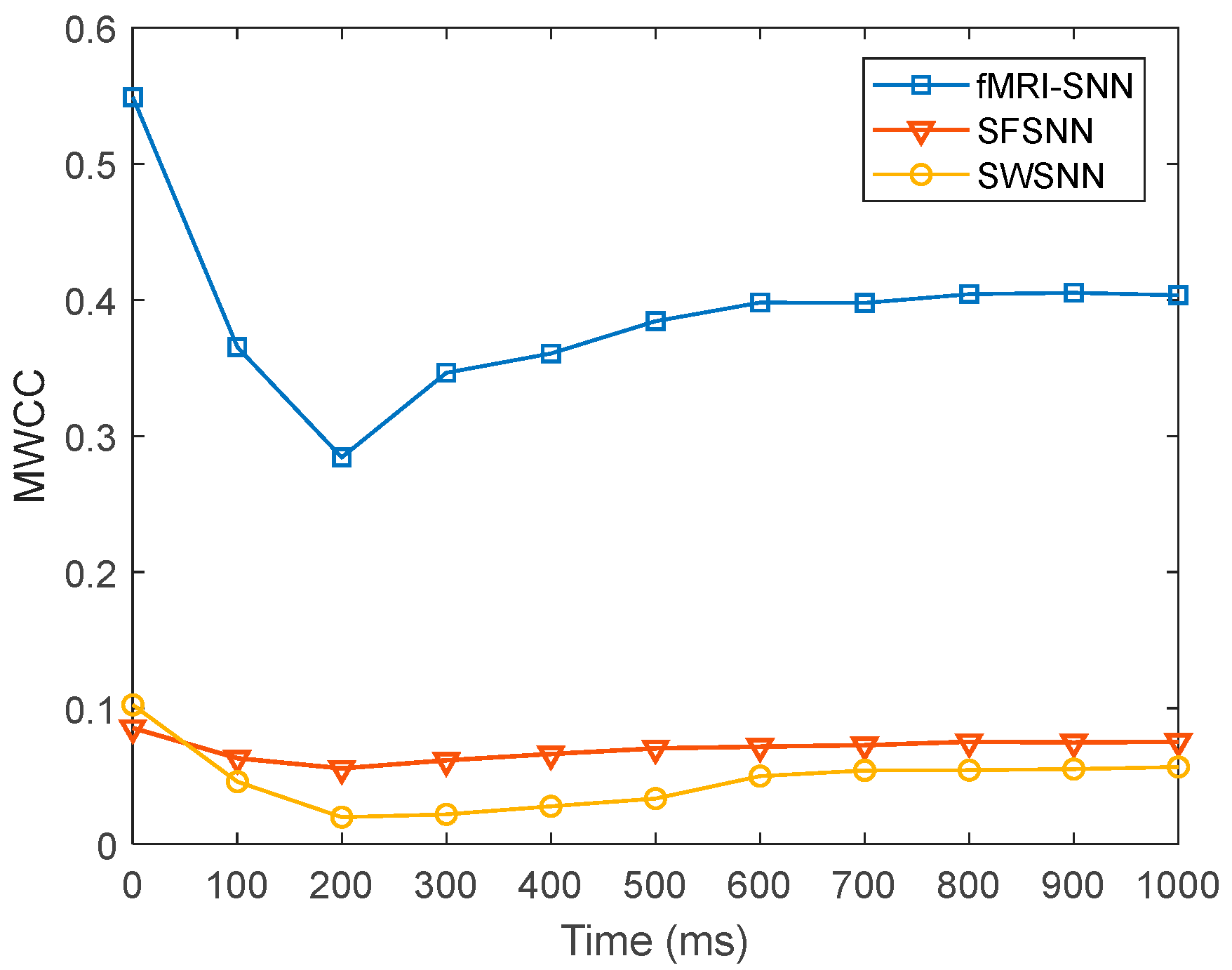

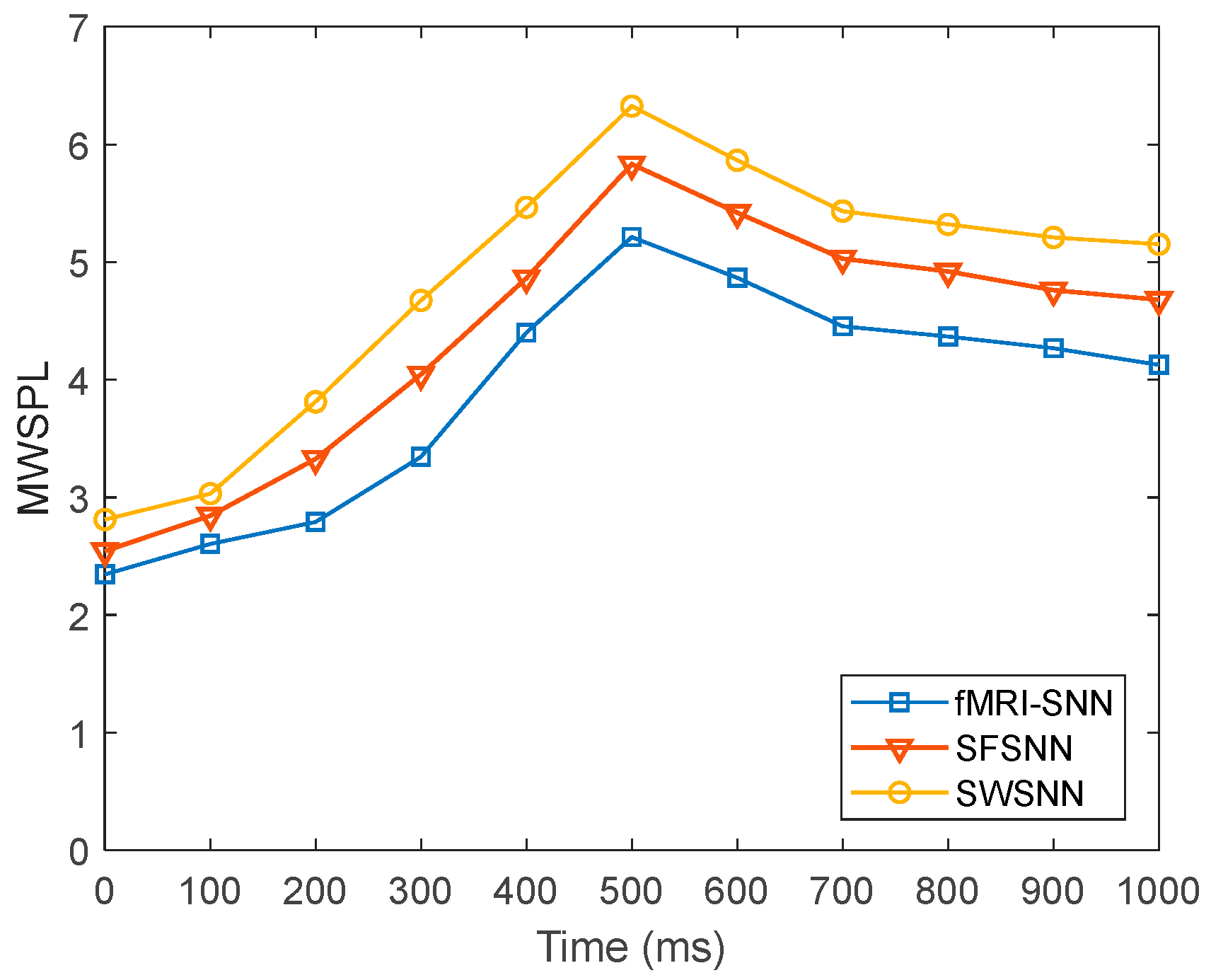

35], we found that, against pulse noise, the SWSNN exhibited superior interference resistance compared to an SFSNN by analyzing the neural electrical activity. In terms of damage resistance, Jang et al. [

36] found that against stochastic attacks, a deep neural network incorporating adaptable activation functions had a superior recognition performance to those networks without these same functions on the CIFAR-10 datasets. In our previous work [

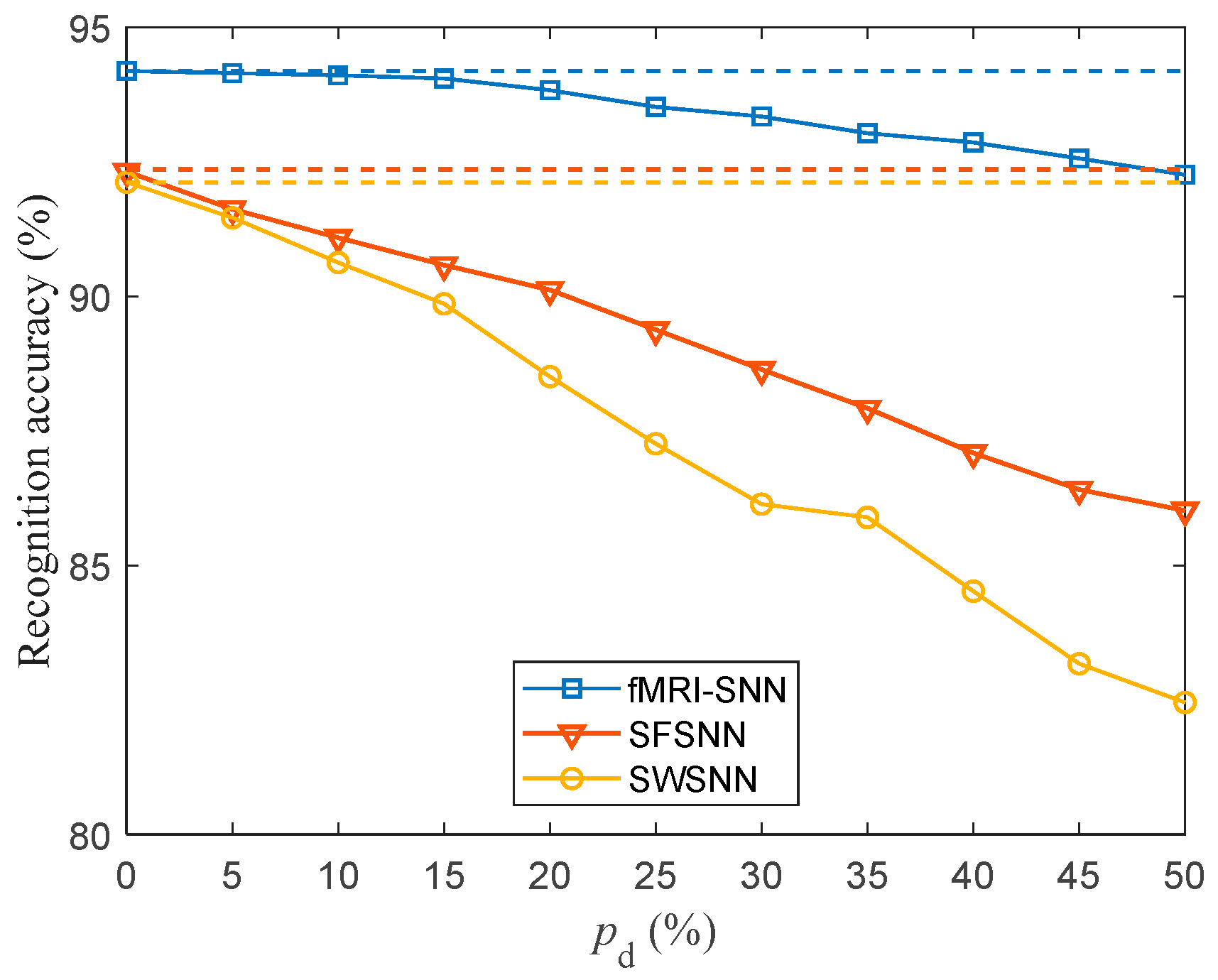

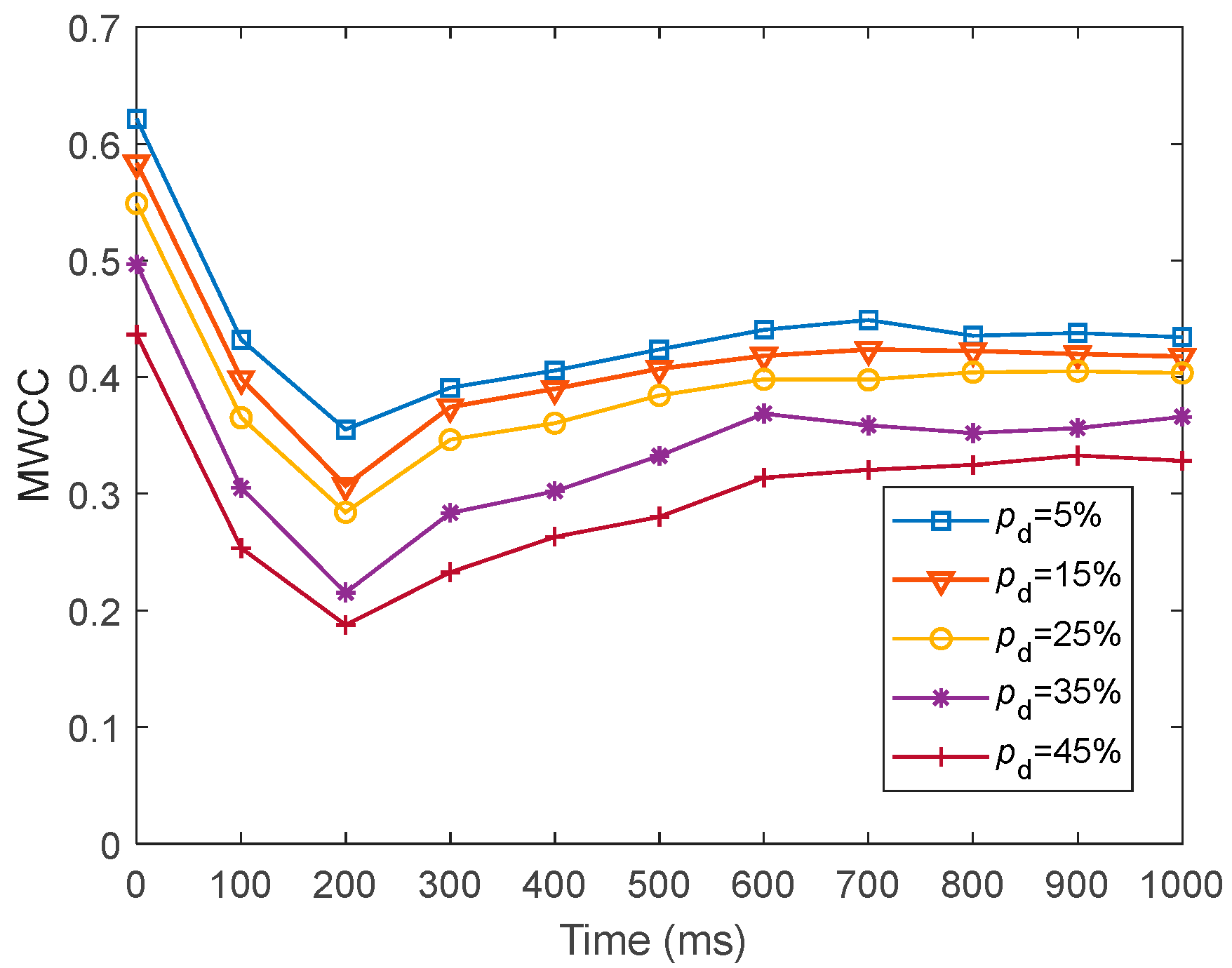

37], we found that against a targeted attack, the SWSNN exhibited superior damage resistance compared to SFSNN by analyzing the neural electrical activity. Nevertheless, the existing brain-like models currently lack bio-plausibility, and researching a brain-like model with bio-plausibility is expected to improve its robustness. The aim of this study is to construct a brain-like model with bio-plausibility to optimize its damage resistance. We construct an fMRI-SNN constrained by human FBN from fMRI data, then certify its damage resistance on the task of SR, and further investigate the damage-resistant mechanism.

The core contributions of this research are highlighted below:

We proposed an fMRI-SNN whose topology is constrained by human brain fMRI to improve its bio-plausibility.

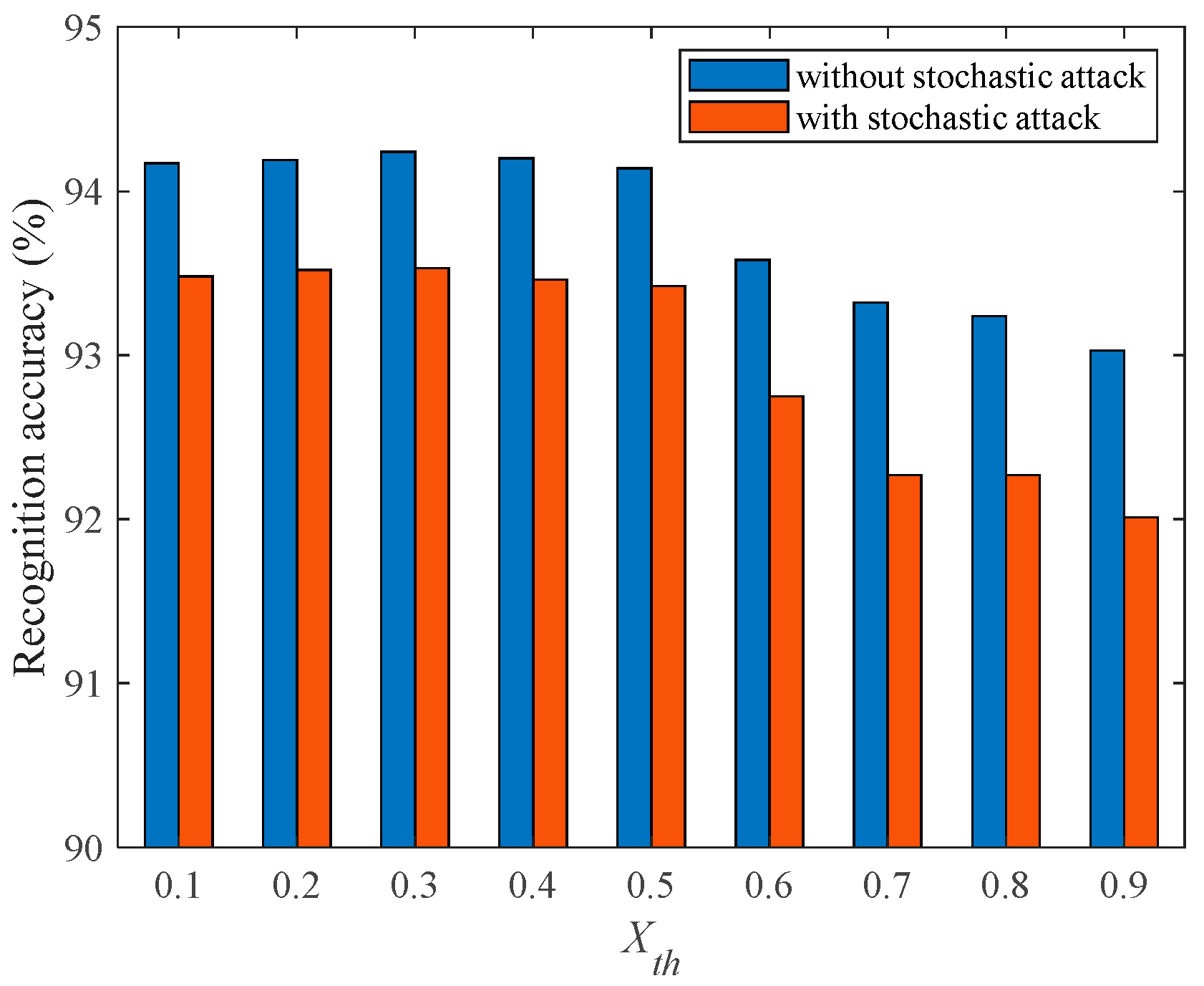

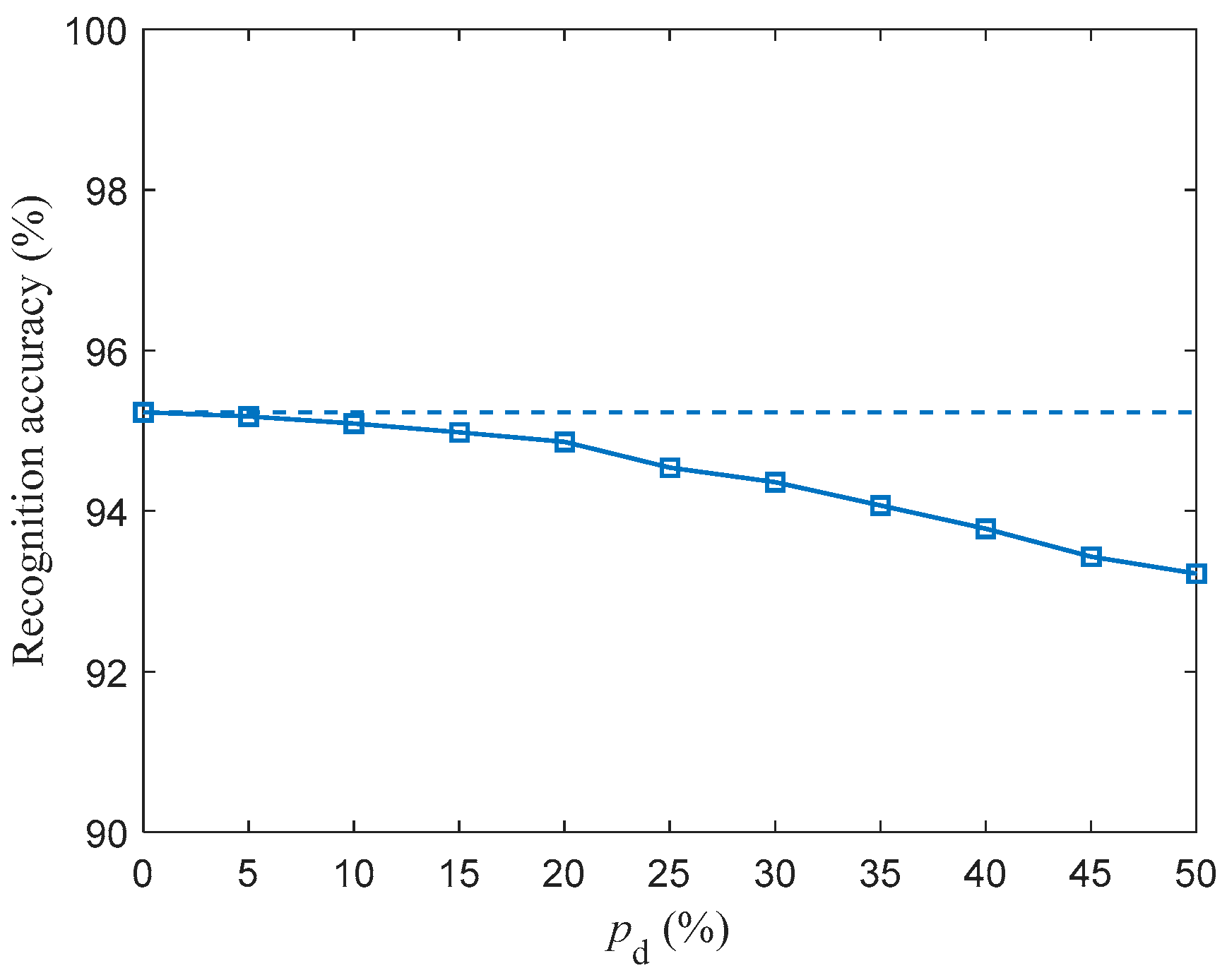

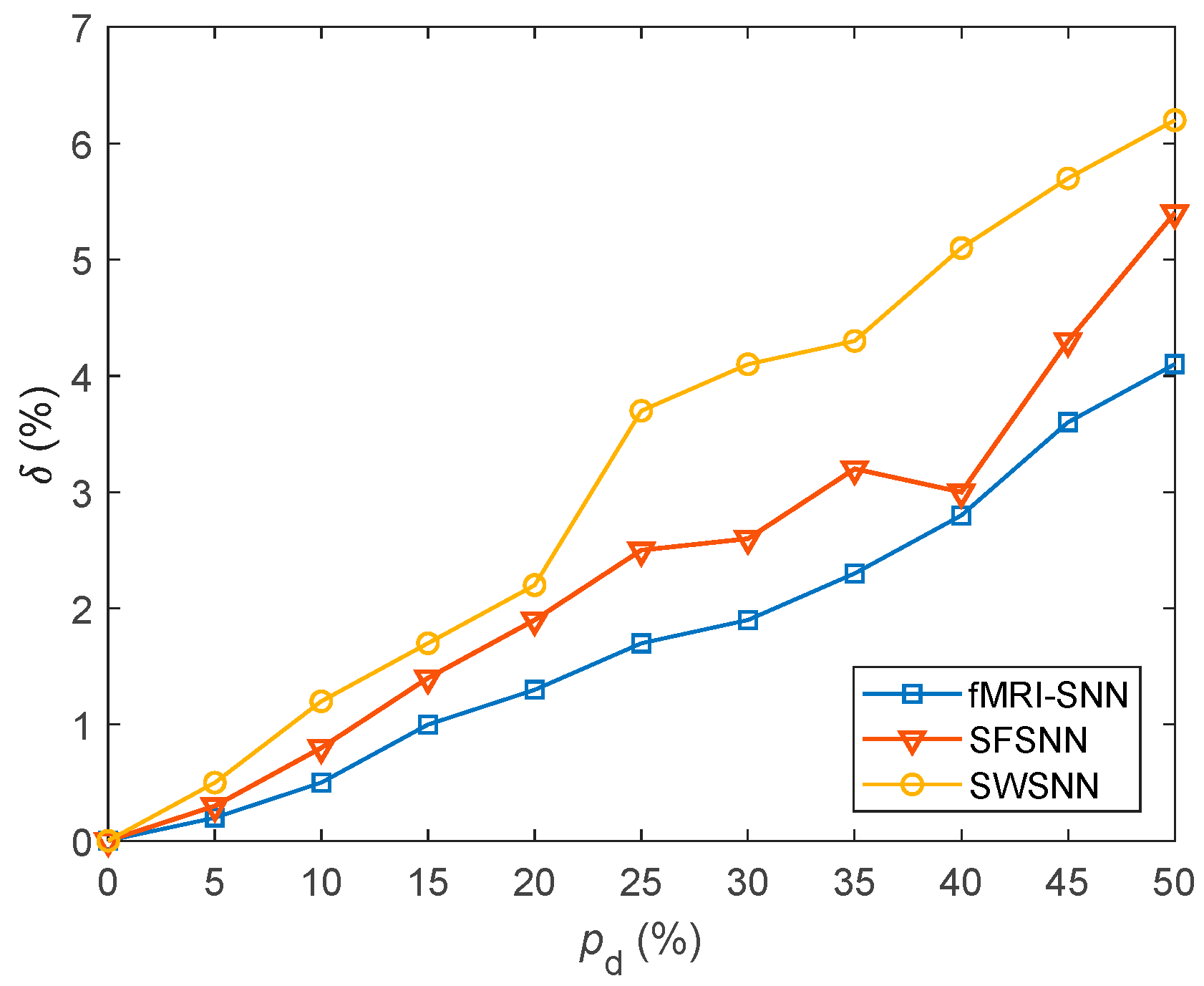

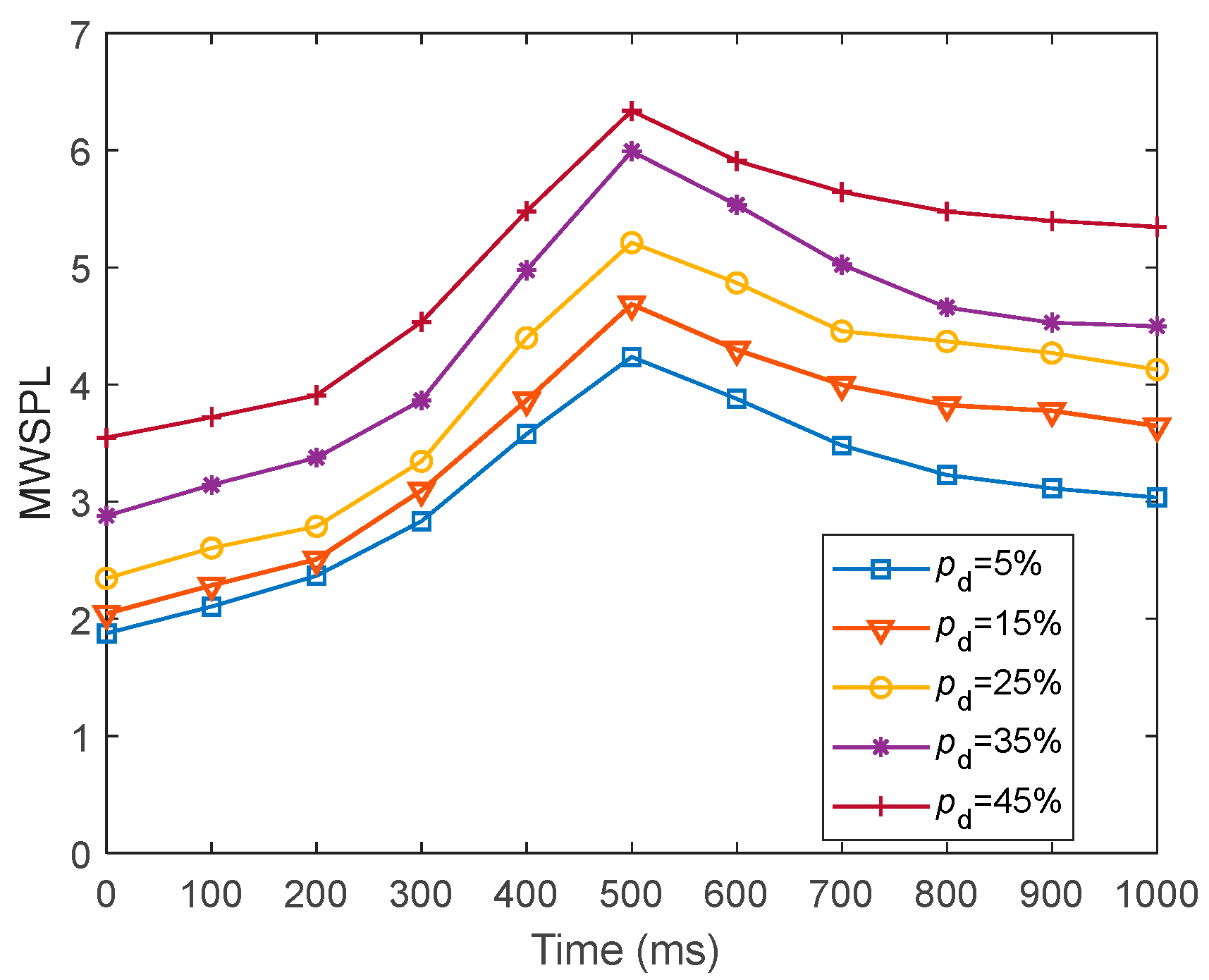

We investigated the SR accuracy of fMRI-SNN against stochastic attack to certify the damage resistance, and found that fMRI-SNN surpasses SNNs with distinct topologies in SR accuracy, notably maintaining similar accuracy levels before and after stochastic attack at the damage proportion below 30%.

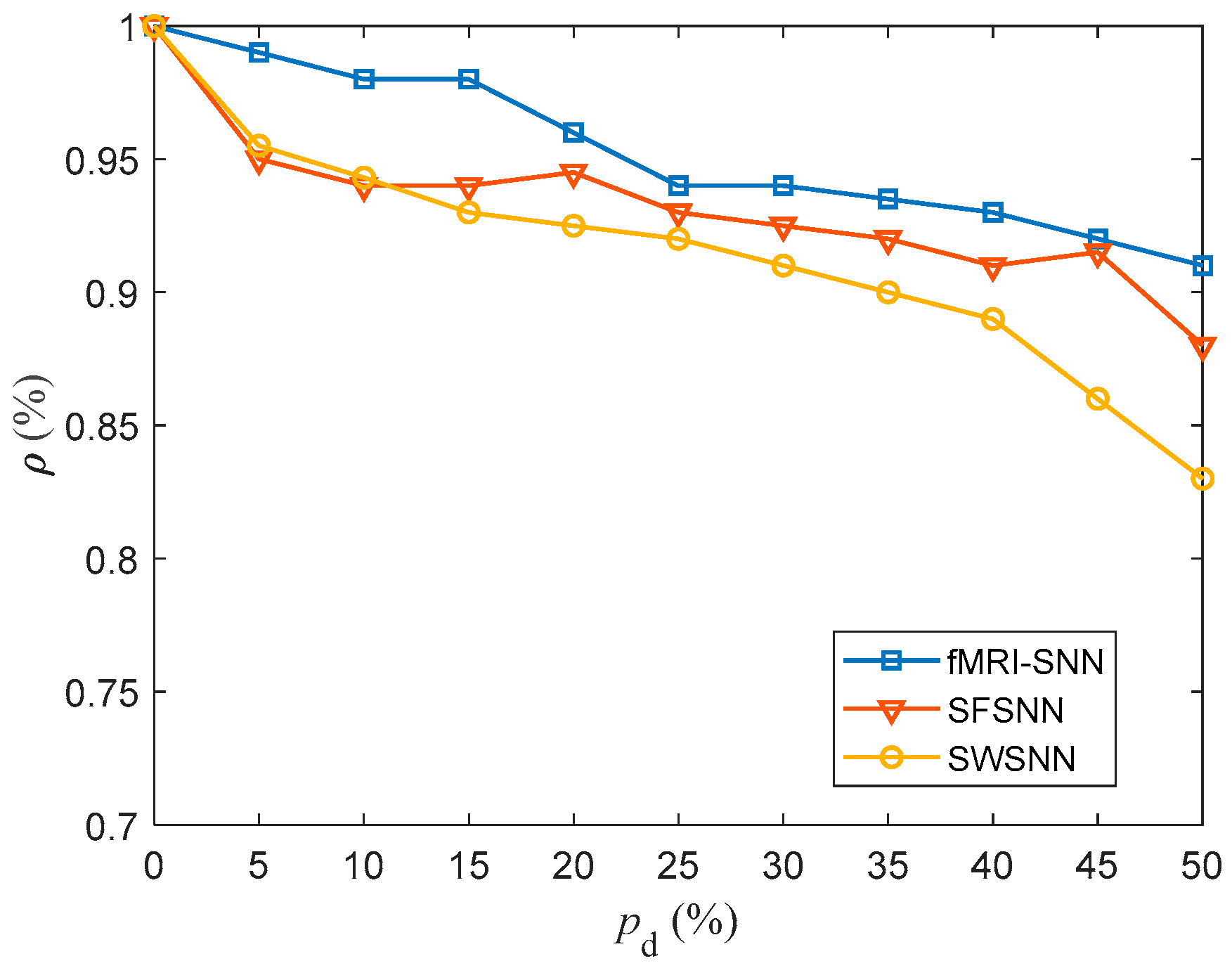

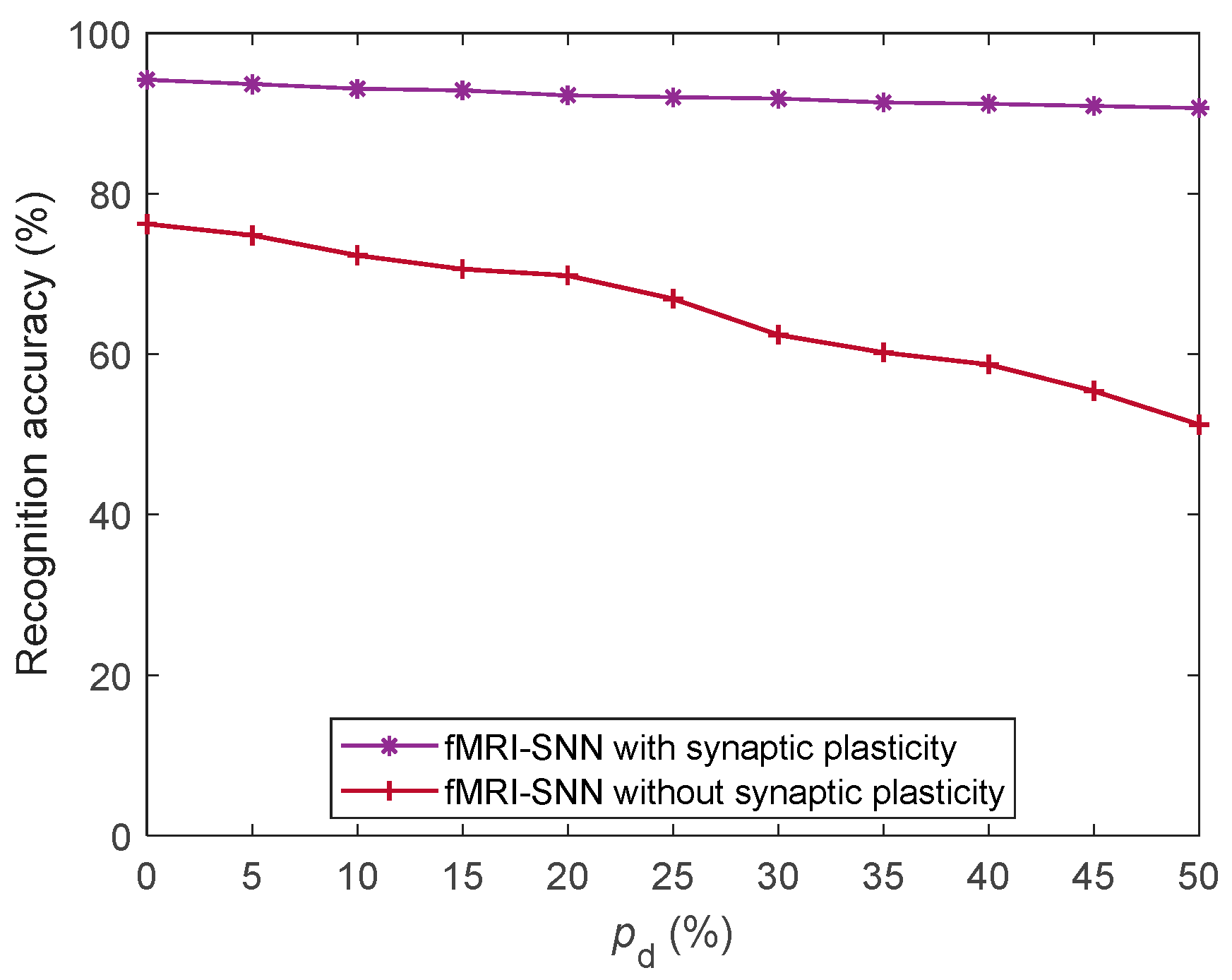

Furthermore, we discussed the damage-resistant mechanism of fMRI-SNN, and found that the change in neural electrical activity serves as an interior manifestation corresponding to the damage resistance of SNNs for recognition tasks, the synaptic plasticity serves as the inherent determinant of the damage resistance, and the topology serves as a determinant impacting the damage resistance.

The structure of this paper is as follows: Section 2 presents the method applied to build the fMRI-SNN; Section 3 verifies the damage resistance of the fMRI-SNN by speech recognition; Section 4 discusses the damage-resistant mechanism of the fMRI-SNN; finally, Section 5 concludes the paper.