1. Introduction

In the fields of computer-aided geometric design (CAGD) and computer graphics, significant progress has been made in shape optimization methods for parameterized free-form curves. The Ball curve, as one of the classic curves in CAGD, is primarily applied in mechanical design, vascular structure repair, and path planning due to its excellent properties in geometric modeling [1]. Since Ball introduced the rational cubic Ball curve in 1974 [2], research on Ball curves has continued to deepen. Wang extended the cubic Ball curve to higher-order generalized Ball curves [3], while Said further proposed the generalized Ball curve, extending it to arbitrary odd degrees and revealing its numerous advantageous properties in geometric modeling [4]. Hu et al. conducted an in-depth comparative study of generalized Ball curves and Bézier curves in terms of recursive evaluation and envelope properties [5]. In 2000, Wu introduced two new generalized Ball curves, namely the Said–Bézier generalized Ball curve and the Wang–Said generalized Ball curve (WSGB) [6]. Recently, Liu et al. proposed the h–Said–Ball basis function, enhancing modeling capabilities and improving evaluation efficiency through h-calculus [7]. These new curves exhibit more efficient recursive algorithms compared to traditional Bézier curves.

In curve approximation techniques, Sederberg and Farouki first introduced interval Bézier curves into curve approximation and formally proposed interval algorithms to ensure the stability and accuracy of computational results [8]. Tuohy et al. introduced the concept of interval B-spline curves and surfaces and studied the boundary properties of interval Bézier curves [8]. Lin and Rokne proposed disk Bézier curves and spherical Bézier curves, which demonstrated superior performance in geometric computations [9]. Lu et al. developed a Bézier curve degree reduction algorithm based on L2 norm approximation error control, significantly improving the stability and accuracy of degree reduction [10]. Quan et al. proposed a preprocessing asymptotic iterative approximation method to accelerate the convergence speed of tensor product Said–Ball surfaces, markedly enhancing computational efficiency and stability [11].

The degree reduction of curves and surfaces has long been a research focus in CAGD, particularly in complex geometric design, where the need for degree reduction is more pressing. Hu et al. conducted an in-depth study on the approximate degree reduction of Said–Ball curves and surfaces, proposing a stepwise degree reduction method, though it lowers the degree by only one at each step [5]. Chen and Lou developed a degree reduction algorithm for interval B-spline curves [12], while Tan and Fang systematically explored the degree reduction of interval WSGB curves [13]. Hu and Wang, based on Jacobi polynomials and quadratic programming theory, proposed an optimal multi-degree reduction algorithm [14]. Wang Hongkai achieved the exact degree reduction of Bézier curves through polynomial reparameterization, significantly simplifying the process and improving computational efficiency [15]. However, traditional degree reduction methods (e.g., least squares, geometric approximation, and classical optimization algorithms) exhibit significant limitations when handling complex curves like Said–Ball curves: first, their convergence speed is slow, failing to meet the demands of efficient computation; second, their accuracy is insufficient, particularly in high-dimensional spaces where they tend to fall into local optima, making it difficult to simultaneously preserve geometric features and minimize errors; and third, their robustness is poor, lacking stability for complex shapes or high-degree curves, resulting in degree reduction outcomes that deviate from expectations. Moreover, traditional methods struggle to find globally optimal solutions when dealing with curves exhibiting multimodal or nonlinear characteristics, further limiting their applicability.

In view of the aforementioned challenges, the degree reduction problem can essentially be formulated as an optimization task, making it well-suited for resolution through bio-inspired intelligent optimization algorithms. In recent years, such algorithms have demonstrated significant potential in addressing complex optimization problems, particularly excelling in global search capabilities, convergence performance, and algorithmic robustness. These methods draw inspiration from natural phenomena, animal behaviors, and evolutionary mechanisms, abstracting biological principles into mathematical models to simulate adaptive and efficient search strategies. According to the No Free Lunch (NFL) theorem, no single algorithm can achieve optimal performance across all types of optimization problems [16]. This highlights the necessity of designing specialized algorithms tailored to specific application domains. To address this need, researchers have continuously proposed and refined bio-inspired intelligent optimization algorithms to deliver more efficient and targeted solutions. For instance, Cheng et al., based on uniform experimental design theory, proposed an improved sparrow search algorithm (ISSA), inspired by the foraging behavior of sparrows. By incorporating a surrounding diversity metric, dynamic management strategies, and boundary update mechanisms, ISSA enhances population diversity and convergence efficiency and has been validated for its superior performance in UAV path planning [17]. Mahmoud et al. developed an enhanced hiking optimization algorithm (AEDHOA), drawing inspiration from human hiking behavior. The algorithm introduces four strategies to improve population diversity and convergence efficiency, effectively tackling high-dimensional feature selection problems while demonstrating strong global search capabilities and robustness [18]. These studies suggest that biomimetic intelligent optimization algorithms are powerful tools for addressing curve and surface degree reduction in CAGD.

Specifically, Hu et al. applied the grey wolf optimization algorithm (GWO), a bio-inspired swarm intelligence method, to investigate the multi-degree reduction of SG-Bézier curves [19]. Guo et al. employed a skewed normal cloud-modified whale optimization algorithm (WOA) to enhance the degree reduction performance for S-λ curves, leveraging its global search capabilities [20]. Additionally, Hu et al. proposed an enhanced golden jackal optimization algorithm (EGJO), which integrates oppositional learning, adaptive mutation, and binomial crossover strategies to improve the shape optimization of complex CSGC–Ball surfaces [21]. These studies collectively demonstrate the significant potential of biomimetic intelligent optimization algorithms in addressing complex curve and surface approximation problems, offering efficient and reliable solutions for high-order degree reduction tasks. However, existing biomimetic intelligent optimization algorithms still exhibit certain limitations when addressing degree reduction of complex curves. For instance, GWO excels in global search capabilities but tends to get trapped in local optima in high-dimensional problems and suffers from slow convergence [19]; WOA lacks sufficient local exploitation ability, particularly showing unstable performance in multimodal optimization problems [20]; and golden jackal optimization (GJO) struggles to maintain population diversity, often leading to premature convergence [21]. These shortcomings constrain the effectiveness of existing algorithms in complex curve degree reduction problems.

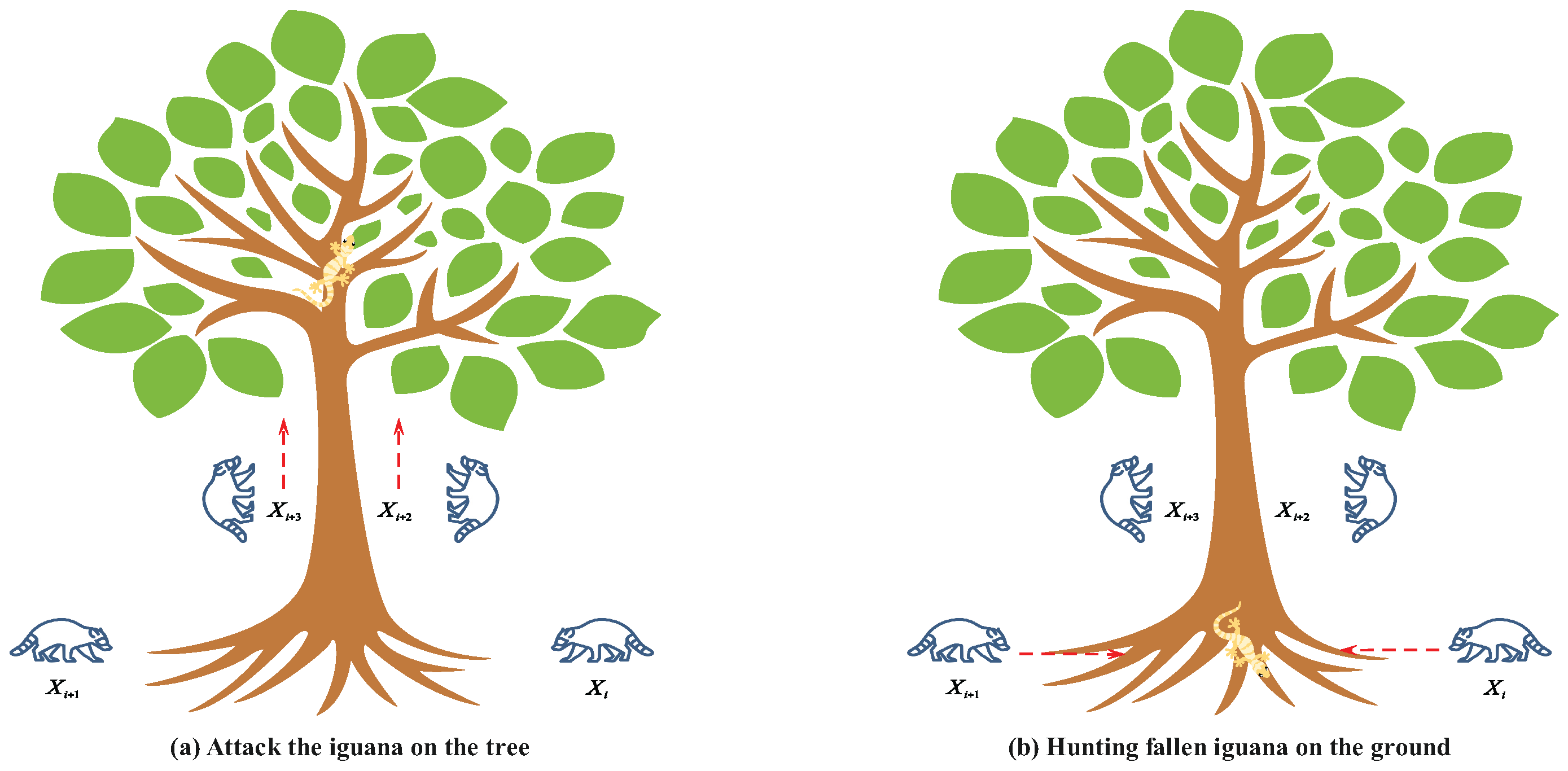

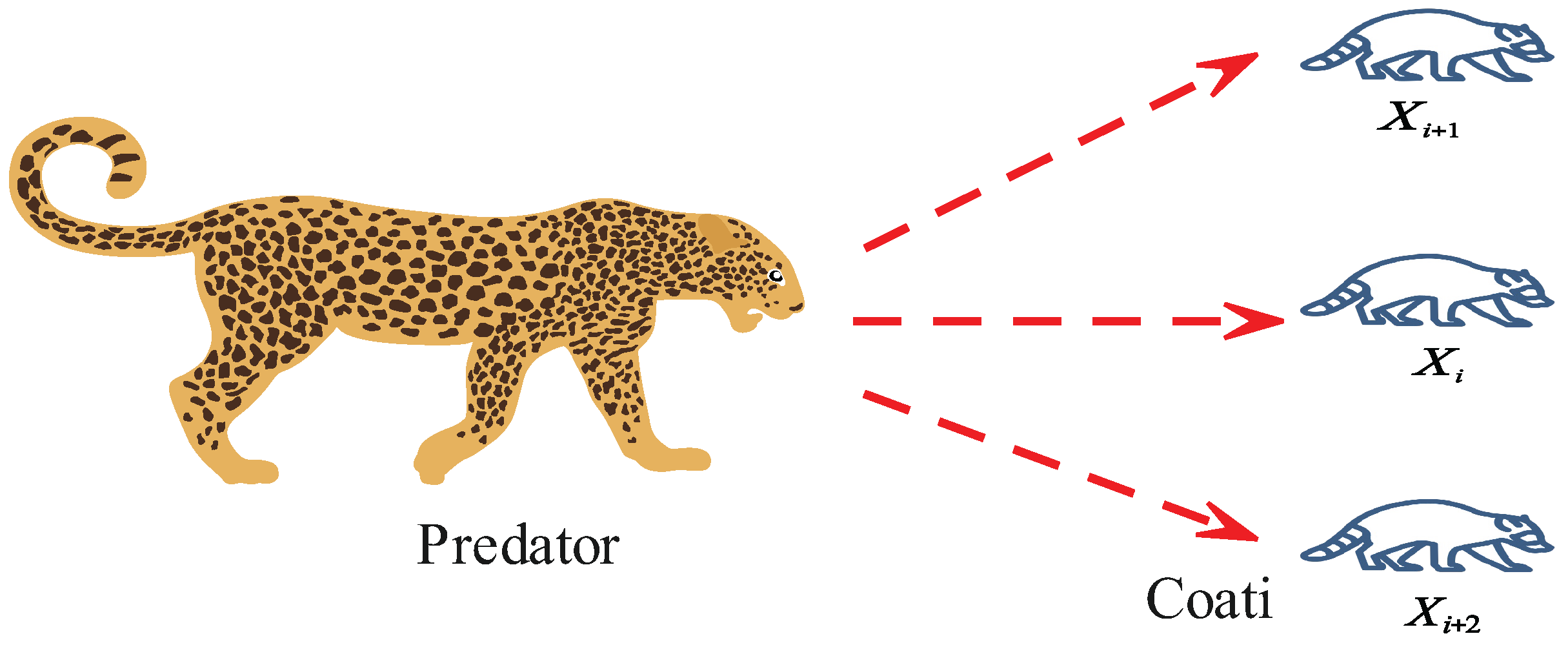

This paper addresses the limitations of the coati optimization algorithm [22], such as insufficient population diversity, limited local convergence capability, and a tendency to become trapped in local optima in complex optimization problems, by proposing a multi-strategy enhanced coati optimization algorithm (MSECOA) and applying it to the degree reduction approximation problem of Said–Ball curves. The main contributions of this paper are as follows:

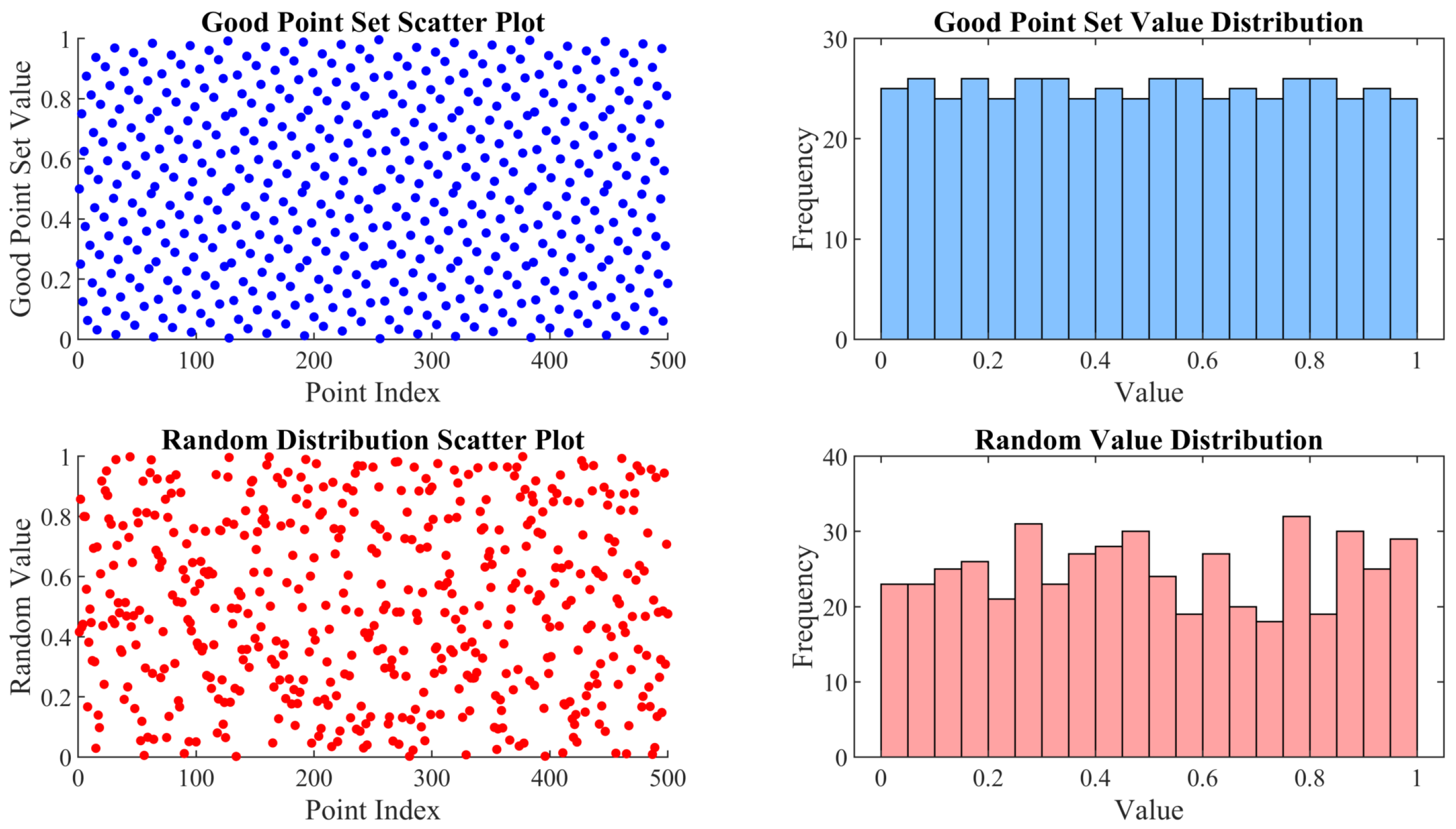

Construction of a Said–Ball curve degree reduction model: A new degree reduction approximation model for Said–Ball curves is proposed, with the objective function designed to minimize Euclidean distance and curvature error.

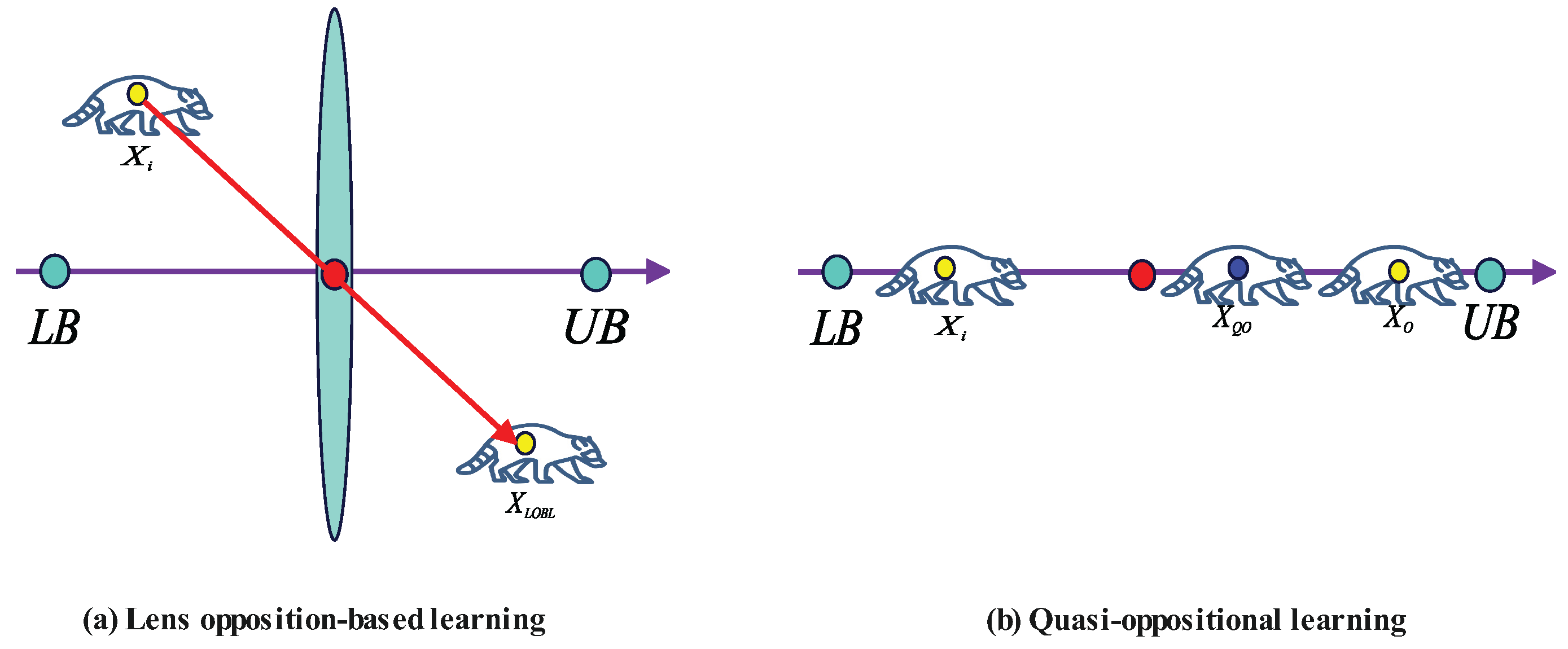

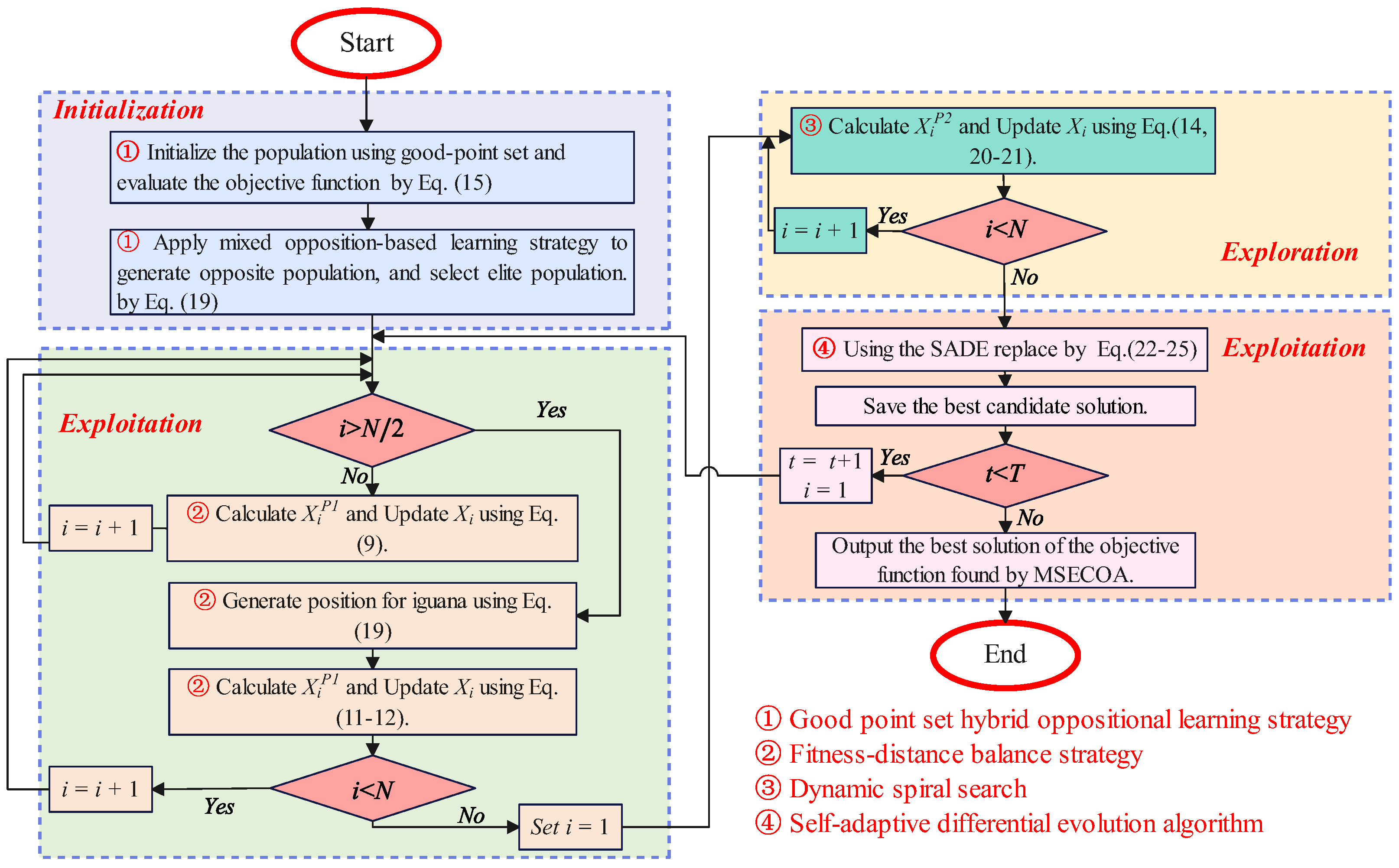

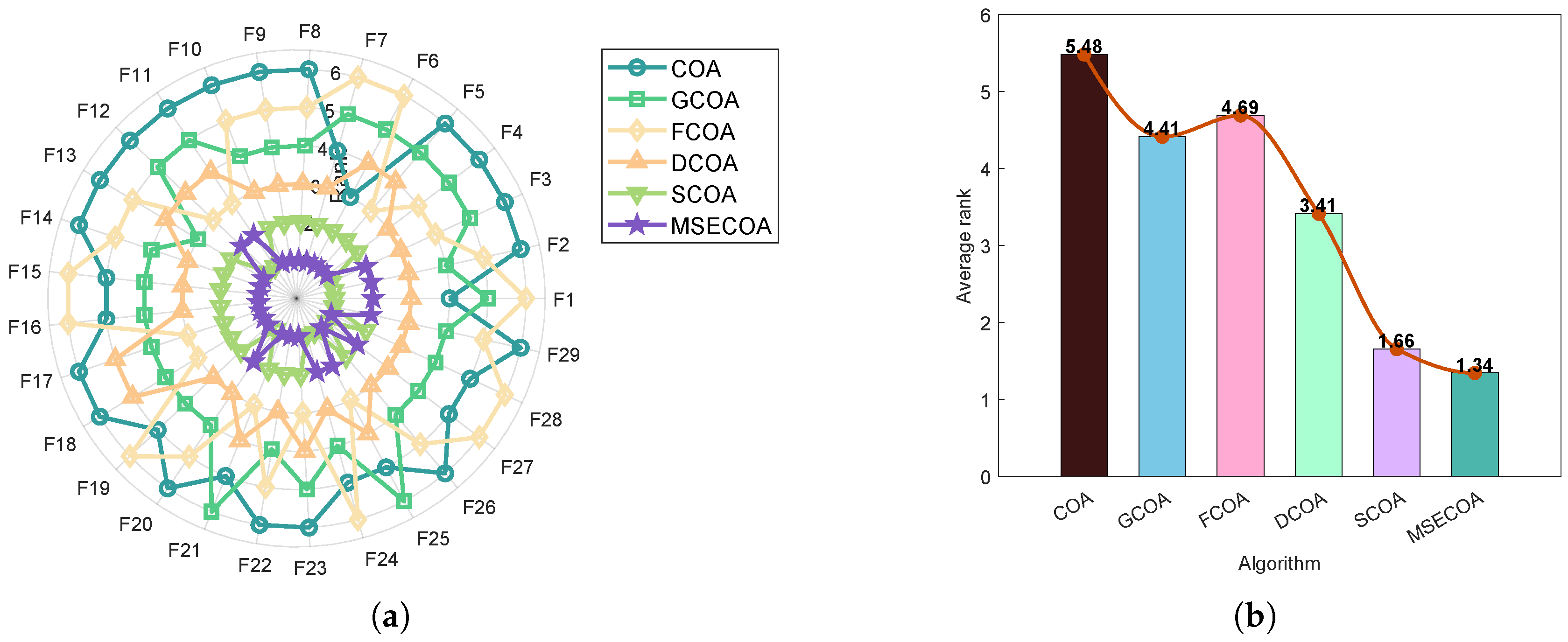

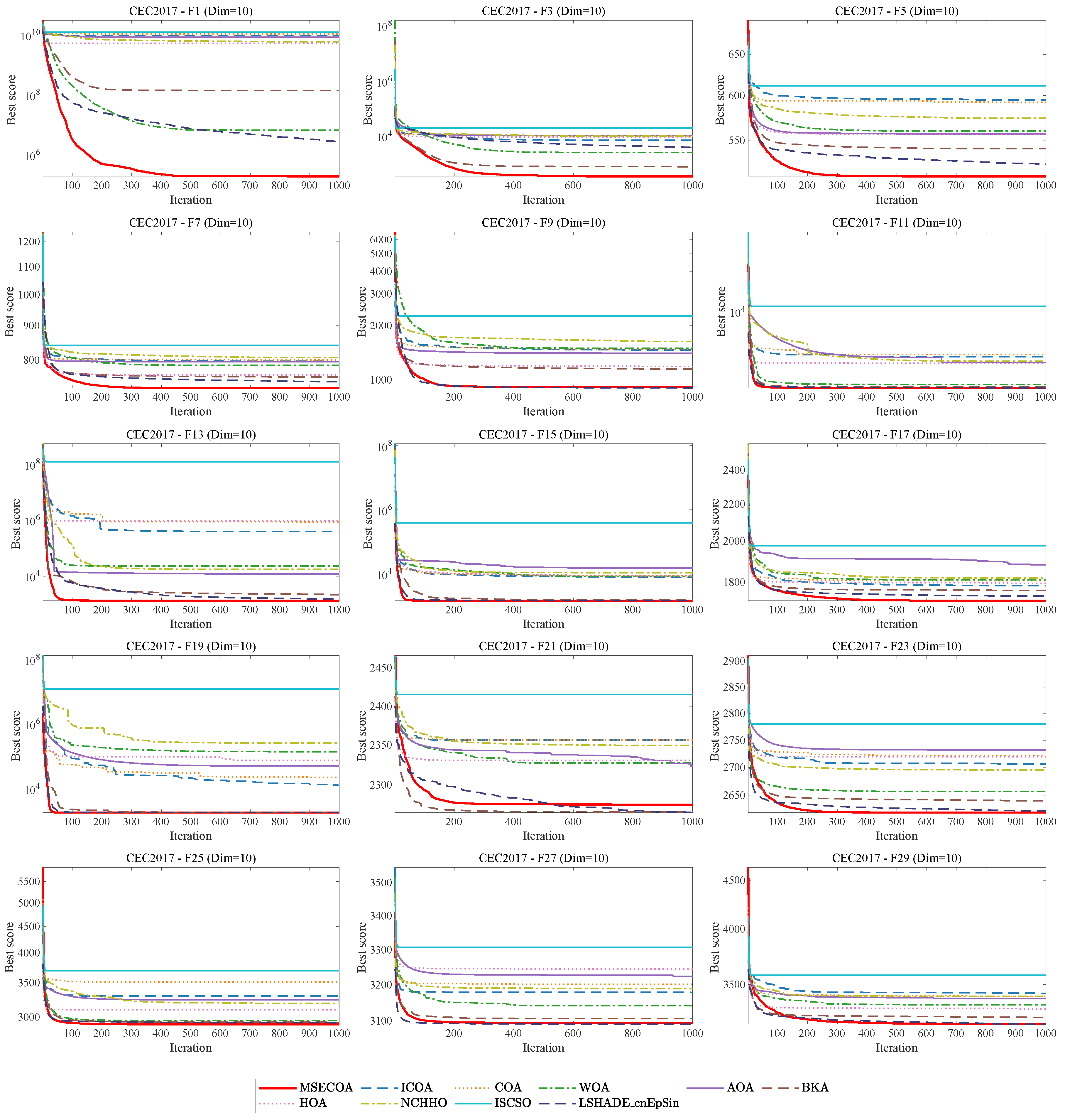

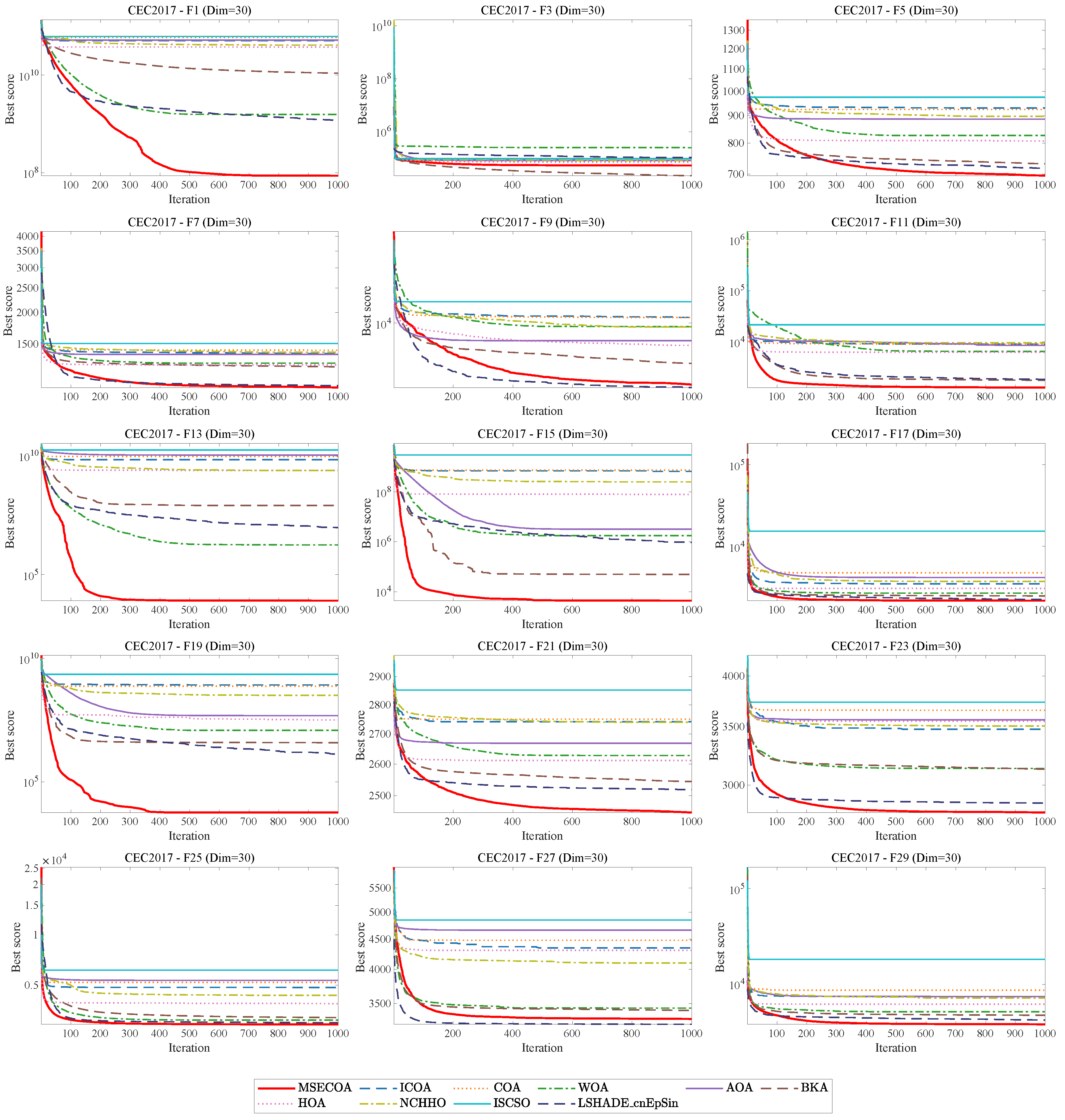

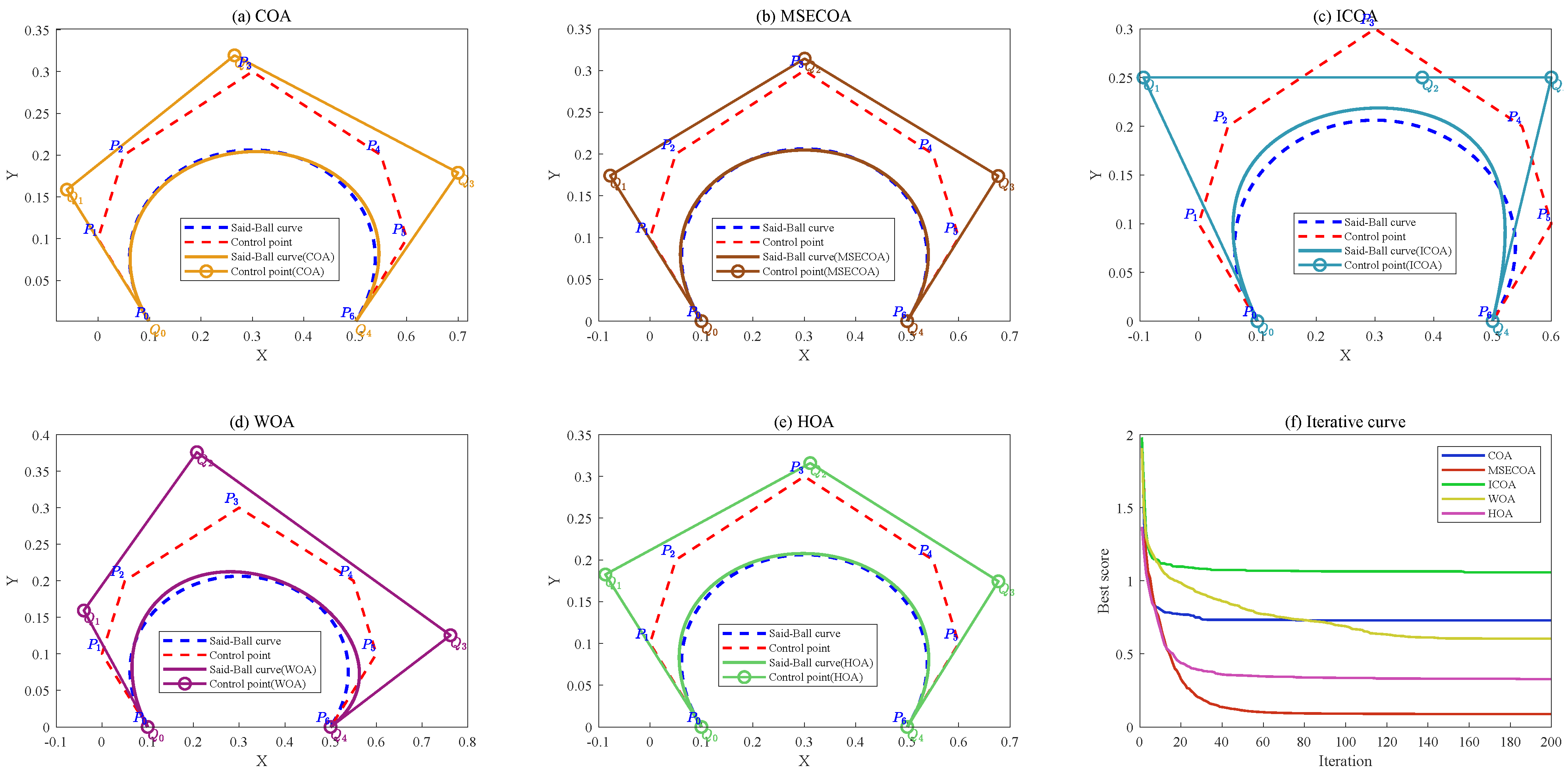

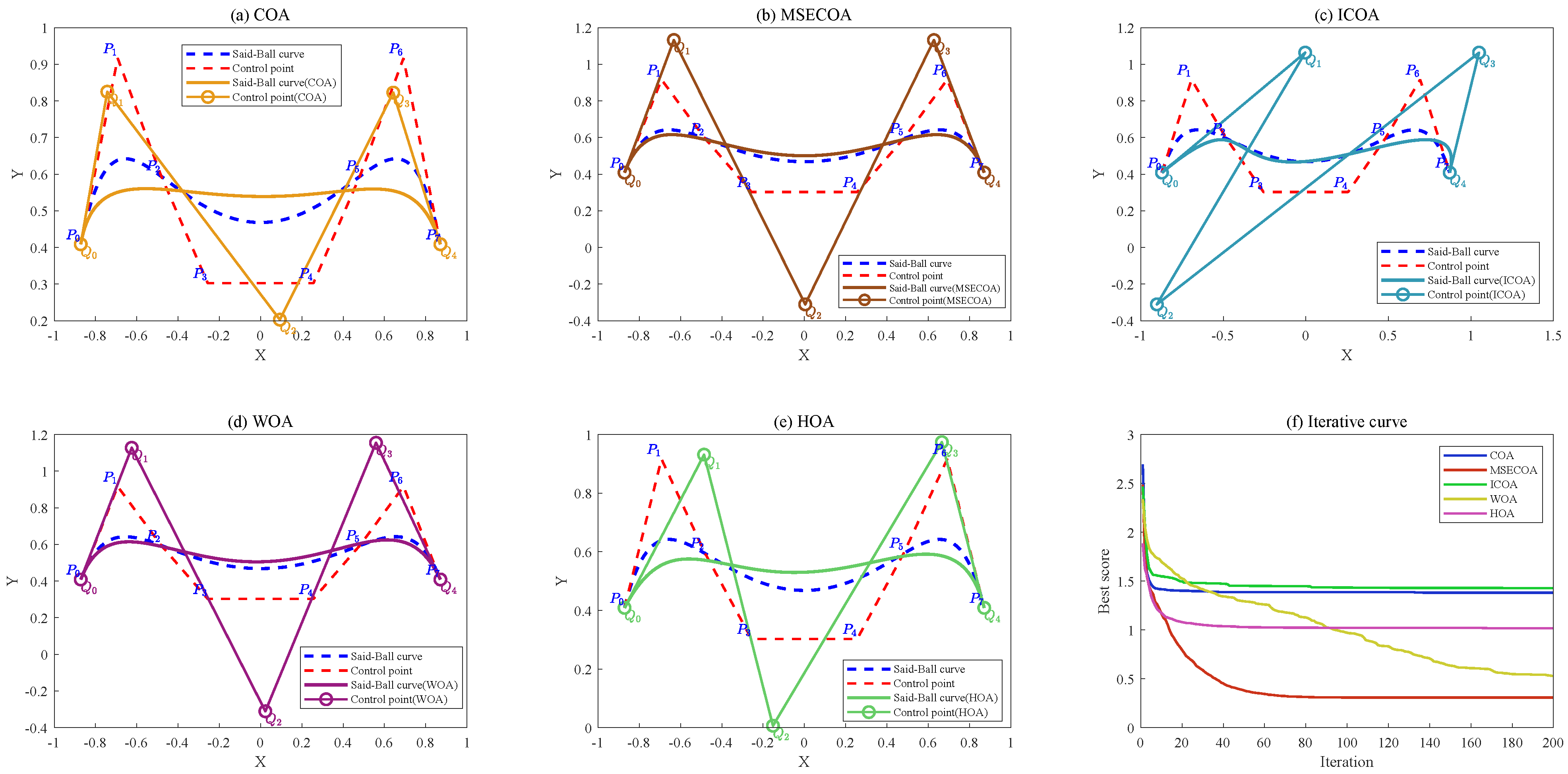

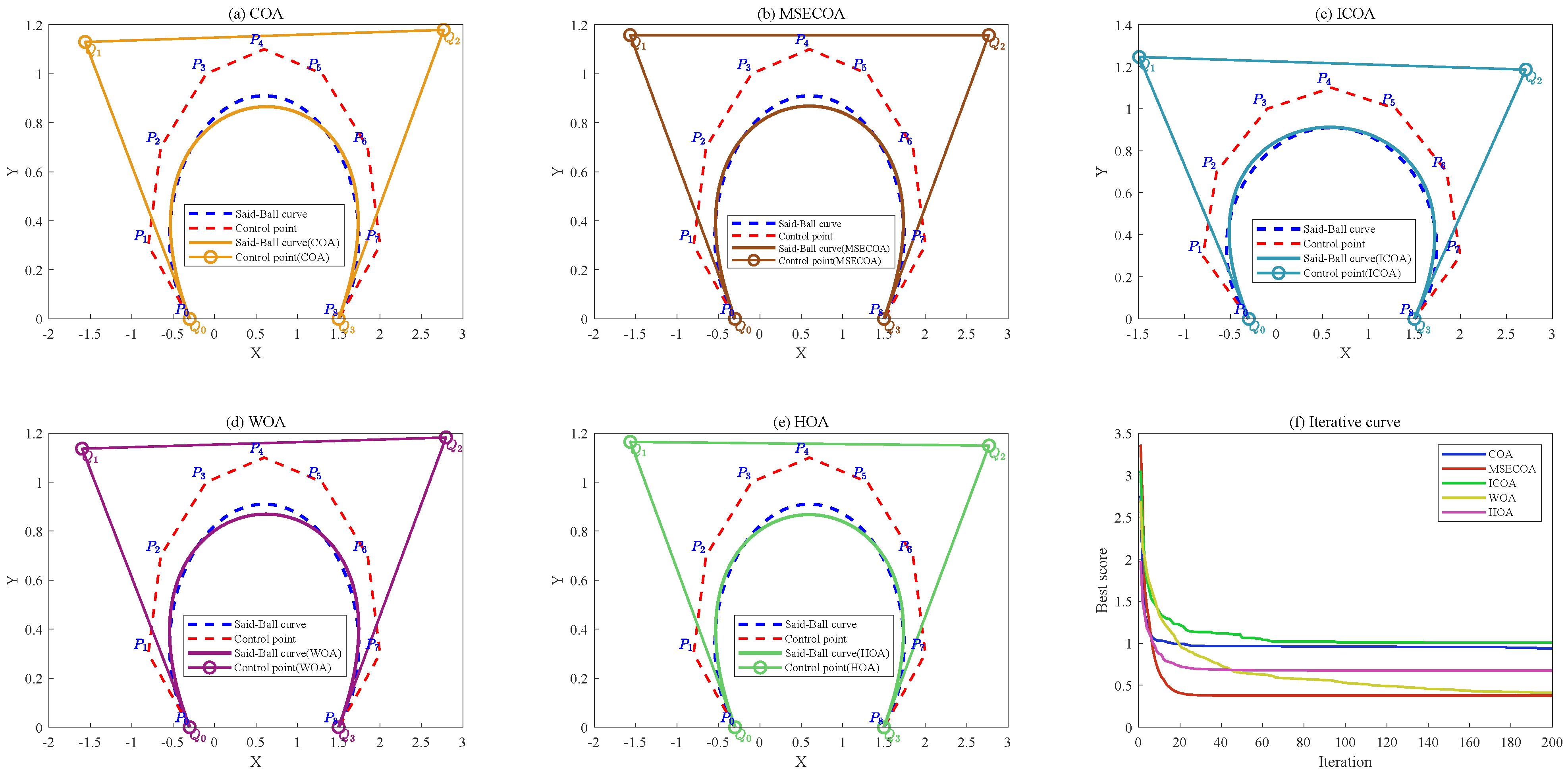

Proposal of a multi-strategy enhanced coati optimization algorithm: The initial population distribution is optimized using a hybrid oppositional learning strategy based on a good point set, while global search and local exploitation capabilities are enhanced through a fitness–distance balance strategy and a dynamic spiral search strategy. An adaptive differential evolution mechanism is introduced to improve convergence speed and robustness. Comparative experiments on the IEEE CEC2017 and CEC2022 test function suites, as well as four engineering constrained design problems, validate the MSECOA’s advantages in optimization accuracy, convergence speed, and robustness.

Validation of the MSECOA’s experimental performance in Said–Ball curve degree reduction: Through numerical examples involving four different degree reduction levels, the MSECOA’s efficiency in multi-degree reduction approximation of Said–Ball curves is demonstrated. Experimental results show that the MSECOA effectively preserves the geometric features of curves while reducing their degree, offering an efficient solution for complex curve degree reduction problems.
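To make the first contribution more concrete, the following Python sketch evaluates a Said–Ball curve and computes a sampled squared-distance error between an original curve and a lower-degree candidate. It is only an illustration under stated assumptions: the basis formulas are the standard Said–Ball definitions rather than anything quoted from this section, the function names (`said_ball_basis`, `curve_point`, `distance_objective`) and the uniform sampling are hypothetical choices, and the curvature term of the paper's objective is omitted because its exact form is given later in the paper, not here.

```python
from math import comb, dist


def said_ball_basis(n, t):
    """Values of the n + 1 Said-Ball basis functions of degree n at t in [0, 1]."""
    m = n // 2

    def left(i, u):
        # C(m+i, i) * u^i * (1-u)^(m+1): the form used for the low-index functions.
        return comb(m + i, i) * u**i * (1 - u) ** (m + 1)

    values = []
    for i in range(n + 1):
        if n % 2 == 0 and i == m:
            # Even degree only: the single middle basis function.
            values.append(comb(2 * m, m) * t**m * (1 - t) ** m)
        elif i <= m:
            values.append(left(i, t))
        else:
            # Symmetry: S_i(t) = S_{n-i}(1 - t).
            values.append(left(n - i, 1 - t))
    return values


def curve_point(ctrl, t):
    """Point on the Said-Ball curve with control points ctrl at parameter t."""
    basis = said_ball_basis(len(ctrl) - 1, t)
    dim = len(ctrl[0])
    return tuple(sum(b * p[k] for b, p in zip(basis, ctrl)) for k in range(dim))


def distance_objective(original_ctrl, reduced_ctrl, samples=101):
    """Mean squared Euclidean distance between two curves at uniform parameters.

    Assumption: a discrete stand-in for the distance part of the objective;
    the curvature error term is not included.
    """
    total = 0.0
    for s in range(samples):
        t = s / (samples - 1)
        total += dist(curve_point(original_ctrl, t), curve_point(reduced_ctrl, t)) ** 2
    return total / samples


# Hypothetical data: a quintic curve and a cubic candidate sharing its endpoints.
quintic = [(0, 0), (1, 2), (2, 3), (3, 3), (4, 2), (5, 0)]
cubic_guess = [(0, 0), (1.5, 3), (3.5, 3), (5, 0)]
error = distance_objective(quintic, cubic_guess)
```

In an optimization setting, the control points of the lower-degree curve would be the decision variables and a function like `distance_objective` would be the fitness to minimize.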

The structure of this paper is organized as follows:

Section 2 systematically elaborates degree reduction approximation problem of Said–Ball curves and constructs a degree reduction optimization model.

Section 3 provides a detailed introduction to core principles and mathematical formulation of the coati optimization algorithm (COA).

Section 4 presents a multi-strategy enhanced coati optimization algorithm (MSECOA), including pseudocode, flowcharts, and a theoretical analysis of its computational complexity.

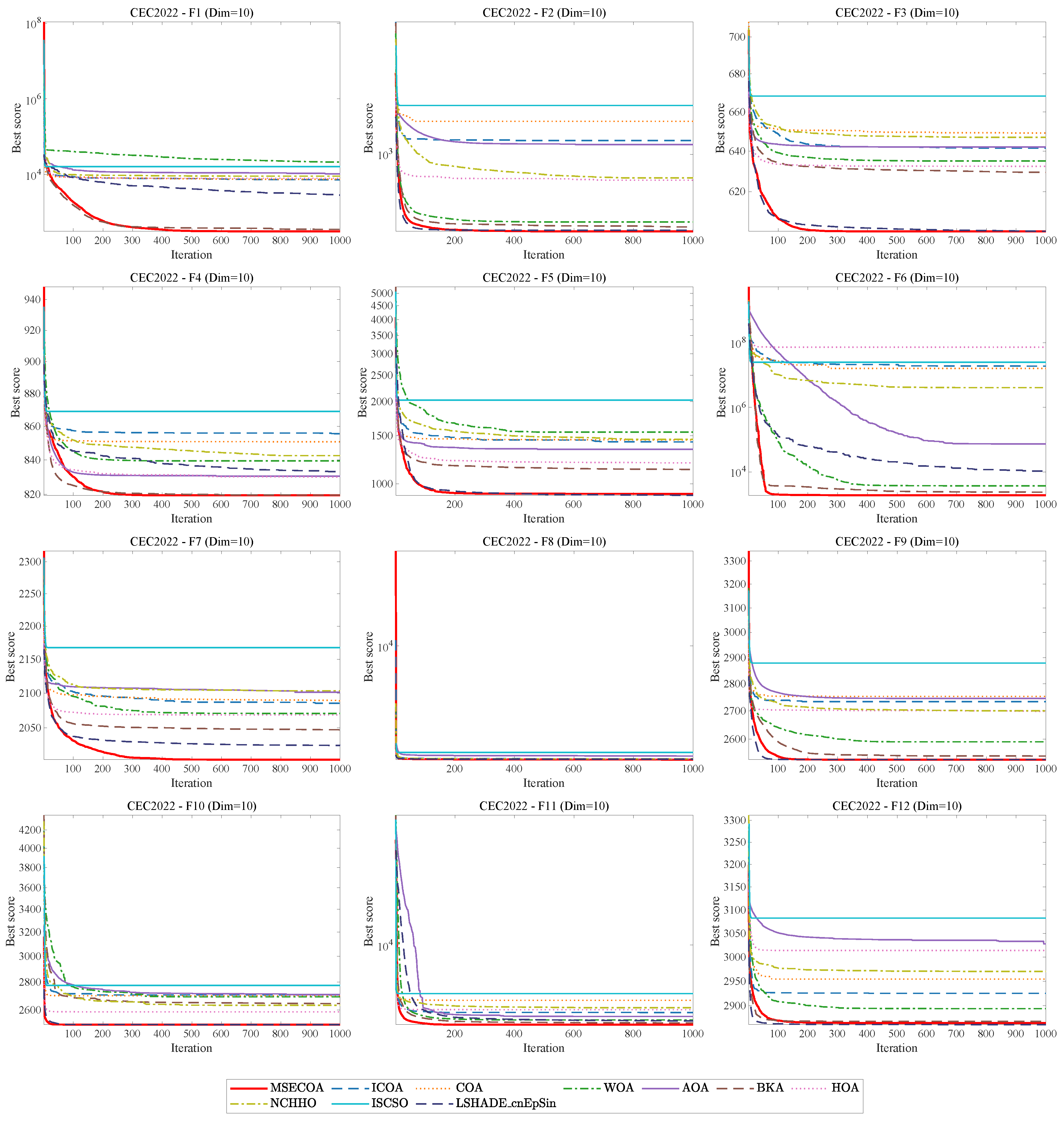

Section 5 comprehensively evaluates the MSECOA’s optimization performance using IEEE CEC2017 and CEC2022 benchmark functions.

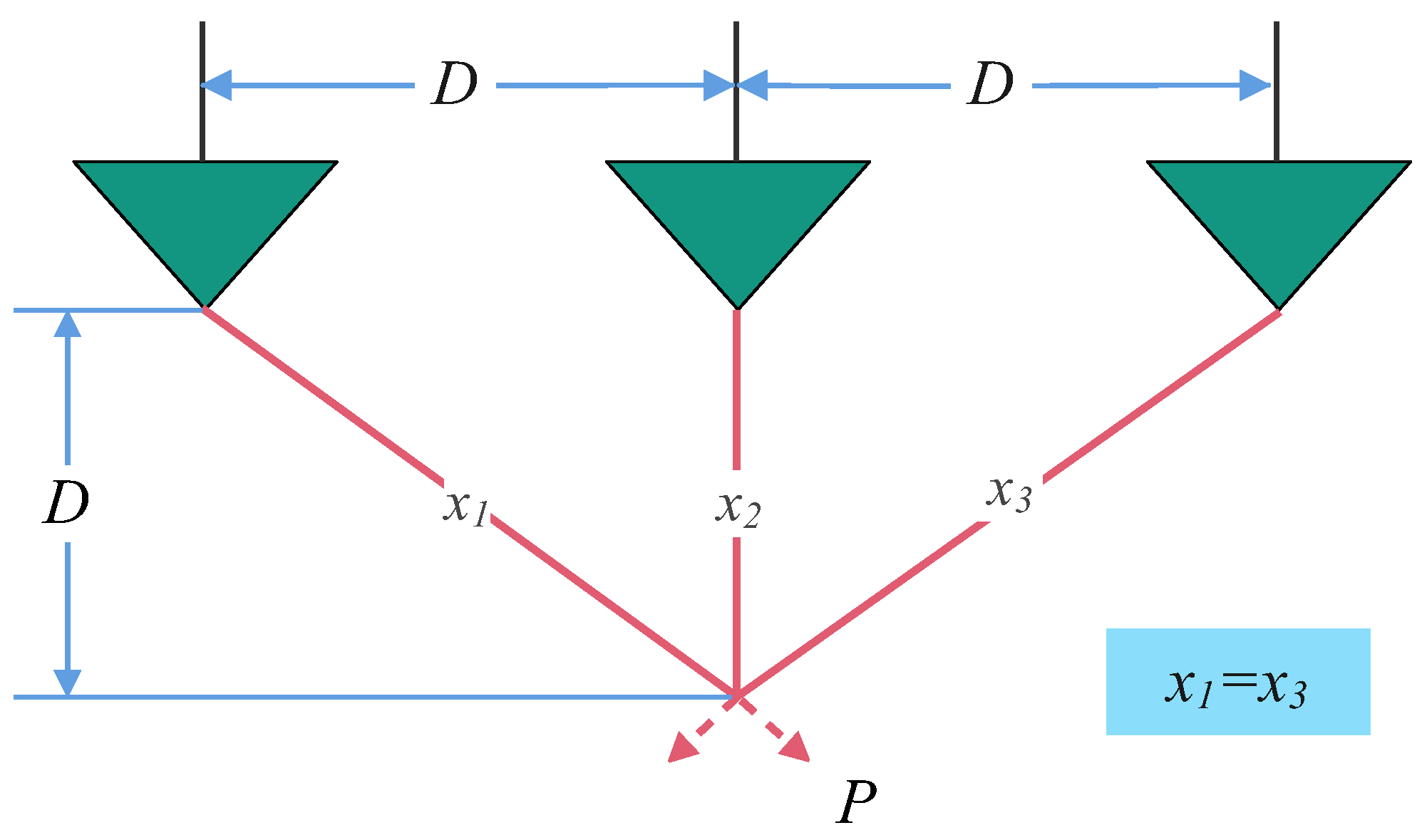

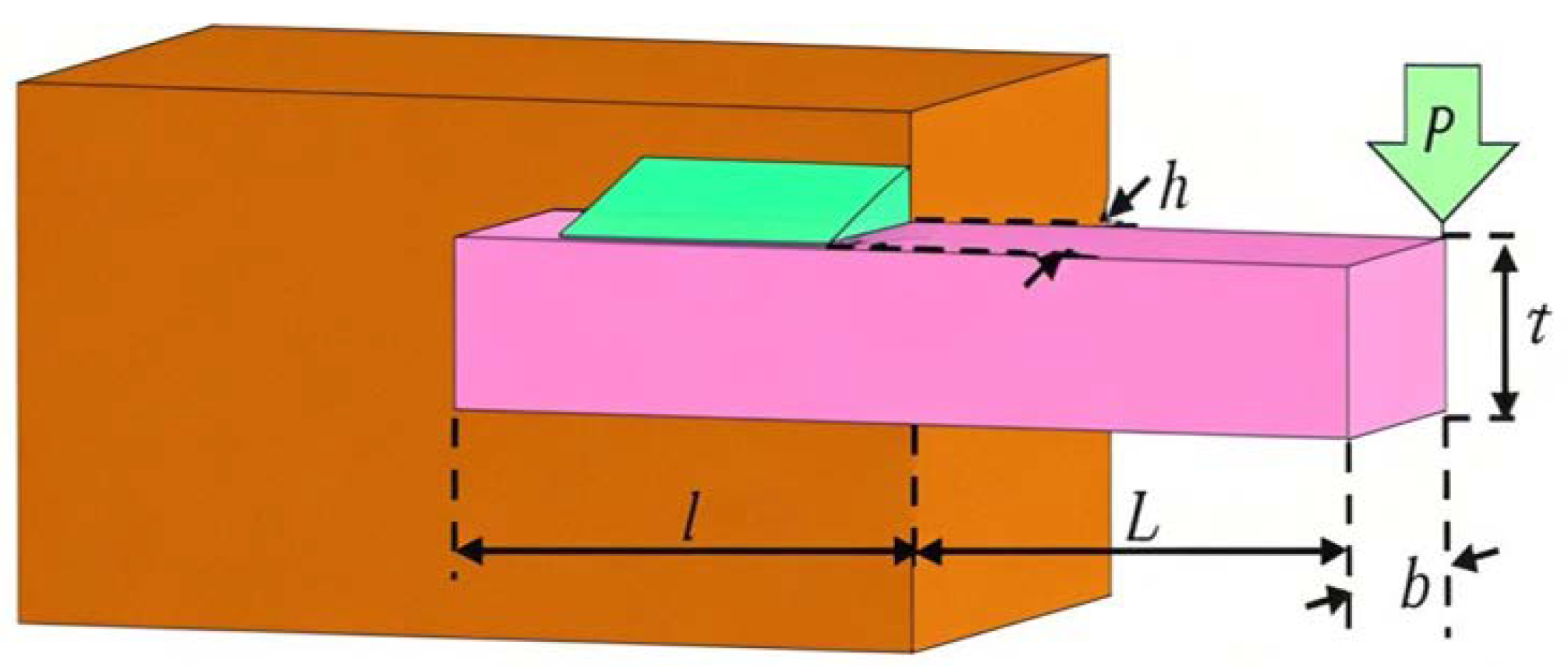

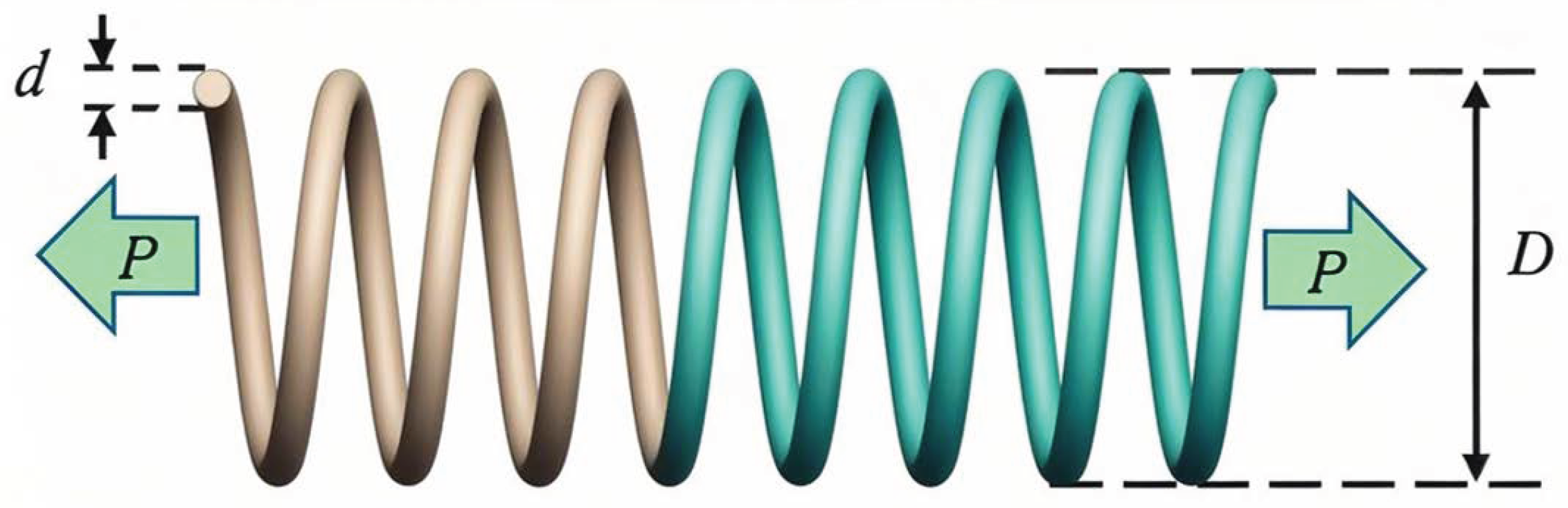

Section 6 applies the MSECOA to three typical engineering constrained optimization problems to verify its practical effectiveness.

Section 7 validates the MSECOA’s superior performance in Said–Ball curve degree reduction through four sets of numerical experiments with varying degree reduction levels. Finally,

Section 8 summarizes research contributions and innovations of this study and provides an outlook on future research directions.

8. Conclusions and Future Prospects

This paper addresses the degree reduction problem of Said–Ball curves by proposing a multi-strategy enhanced coati optimization algorithm (MSECOA). A degree reduction model is constructed from the Euclidean distance and integrated curvature information, and the MSECOA is used to solve it. The algorithm incorporates four strategies: good point set-based hybrid opposition learning, fitness–distance balance, dynamic spiral searching, and adaptive differential evolution. These enhancements improve the algorithm’s global search capability, local exploitation ability, and convergence speed. The experimental results show that the MSECOA outperforms the compared algorithms on the IEEE CEC2017 and CEC2022 test function suites and is practically applicable to four engineering constrained design problems. Furthermore, numerical experiments with four different degree reduction levels show that the MSECOA preserves the geometric features of a Said–Ball curve while reducing its degree, providing an efficient and reliable solution for complex curve degree reduction problems.
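As a rough illustration of the first strategy listed above, the Python sketch below builds an initial population from a good point set and then applies plain opposition-based selection. It rests on assumptions: the good point set uses the common cosine construction with the smallest prime p ≥ 2d + 3, the opposite of x is taken as lower + upper − x, and the names `good_point_set`, `opposite`, and `init_population` are hypothetical. The hybrid variant actually used in the MSECOA may differ in its details.

```python
import math


def smallest_prime_at_least(k):
    """Smallest prime p with p >= k (trial division, fine for small k)."""
    def is_prime(x):
        return x >= 2 and all(x % d for d in range(2, math.isqrt(x) + 1))
    while not is_prime(k):
        k += 1
    return k


def good_point_set(pop_size, lower, upper):
    """Good point set of pop_size points scaled into the box [lower, upper]."""
    dim = len(lower)
    p = smallest_prime_at_least(2 * dim + 3)
    population = []
    for i in range(1, pop_size + 1):
        point = []
        for j in range(1, dim + 1):
            r = 2.0 * math.cos(2.0 * math.pi * j / p)
            frac = (r * i) % 1.0  # fractional part of r * i, in [0, 1]
            point.append(lower[j - 1] + frac * (upper[j - 1] - lower[j - 1]))
        population.append(point)
    return population


def opposite(point, lower, upper):
    """Opposition-based learning: reflect each coordinate through the box centre."""
    return [lo + hi - x for x, lo, hi in zip(point, lower, upper)]


def init_population(fitness, pop_size, lower, upper):
    """Merge the good points with their opposites and keep the fittest pop_size.

    Assumption: minimization, with plain (not hybrid) opposition.
    """
    base = good_point_set(pop_size, lower, upper)
    candidates = base + [opposite(x, lower, upper) for x in base]
    return sorted(candidates, key=fitness)[:pop_size]


# Hypothetical usage on a 4-dimensional sphere function.
lower, upper = [-5.0] * 4, [5.0] * 4
sphere = lambda x: sum(v * v for v in x)
population = init_population(sphere, 20, lower, upper)
```

Because the selected population is drawn from a superset of the good point set, its best individual is never worse than the best good point, which is the usual motivation for pairing the two ideas.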

The potential applications of the MSECOA extend beyond the degree reduction of Said–Ball curves to broader areas of geometric modeling [

42,

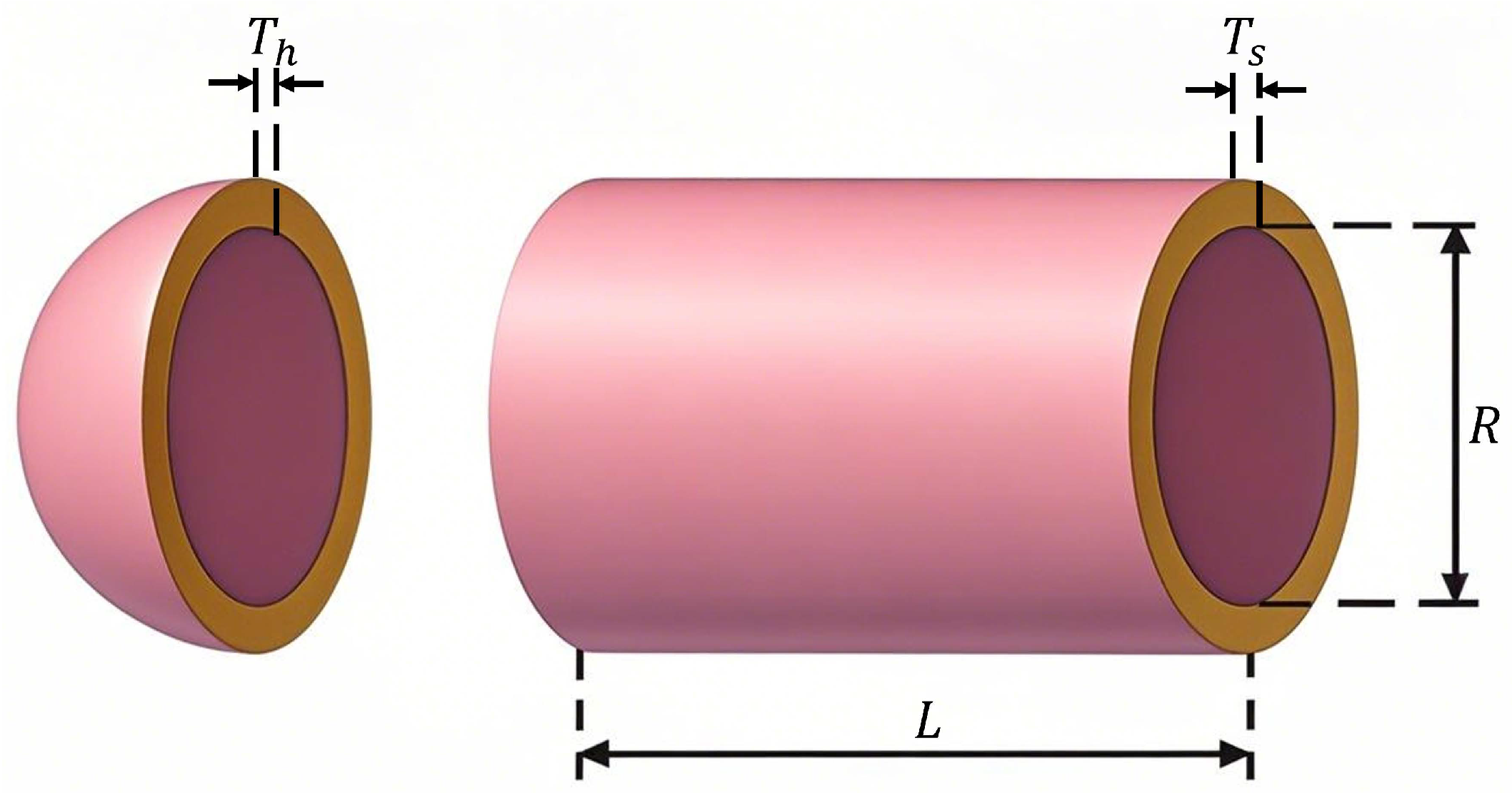

43]. In particular, the MSECOA demonstrates strong applicability in curve and surface modeling for complex real-world problems. For example, in vascular structure reconstruction, accurate curve approximation is essential for modeling intricate blood vessel geometries based on segmented medical imaging data. The algorithm’s ability to preserve shape characteristics during degree reduction makes it particularly suitable for reconstructing tubular anatomical structures, such as arteries and veins, under spatial constraints. Similarly, in pipeline design, where the precise geometric representation of pipe networks is crucial for layout optimization and structural integrity analysis, the MSECOA can be applied to generate high-fidelity free-form curves and surfaces, offering improved efficiency and accuracy.

Although the MSECOA has demonstrated notable performance advantages in current degree reduction tasks, future research will focus on further improving its convergence behavior and robustness. Promising research directions include extending the algorithm to handle curves defined over disk or ball domains, exploring its application in the degree reduction of Ball Said–Ball curves, and generalizing the framework to high-dimensional surface reduction, thereby addressing more complex geometric modeling challenges. Additionally, integrating reinforcement learning techniques to explore the MSECOA’s potential in intelligent geometric modeling represents a promising avenue for future studies.