A Novel Exploration Stage Approach to Improve Crayfish Optimization Algorithm: Solution to Real-World Engineering Design Problems

Abstract

1. Introduction

- Global search: MHAs can search the whole solution space effectively and find the best-fitting solution to the problem.

- Scalability: MHAs can be applied to high-dimensional, non-linear, and continuous or discrete problems.

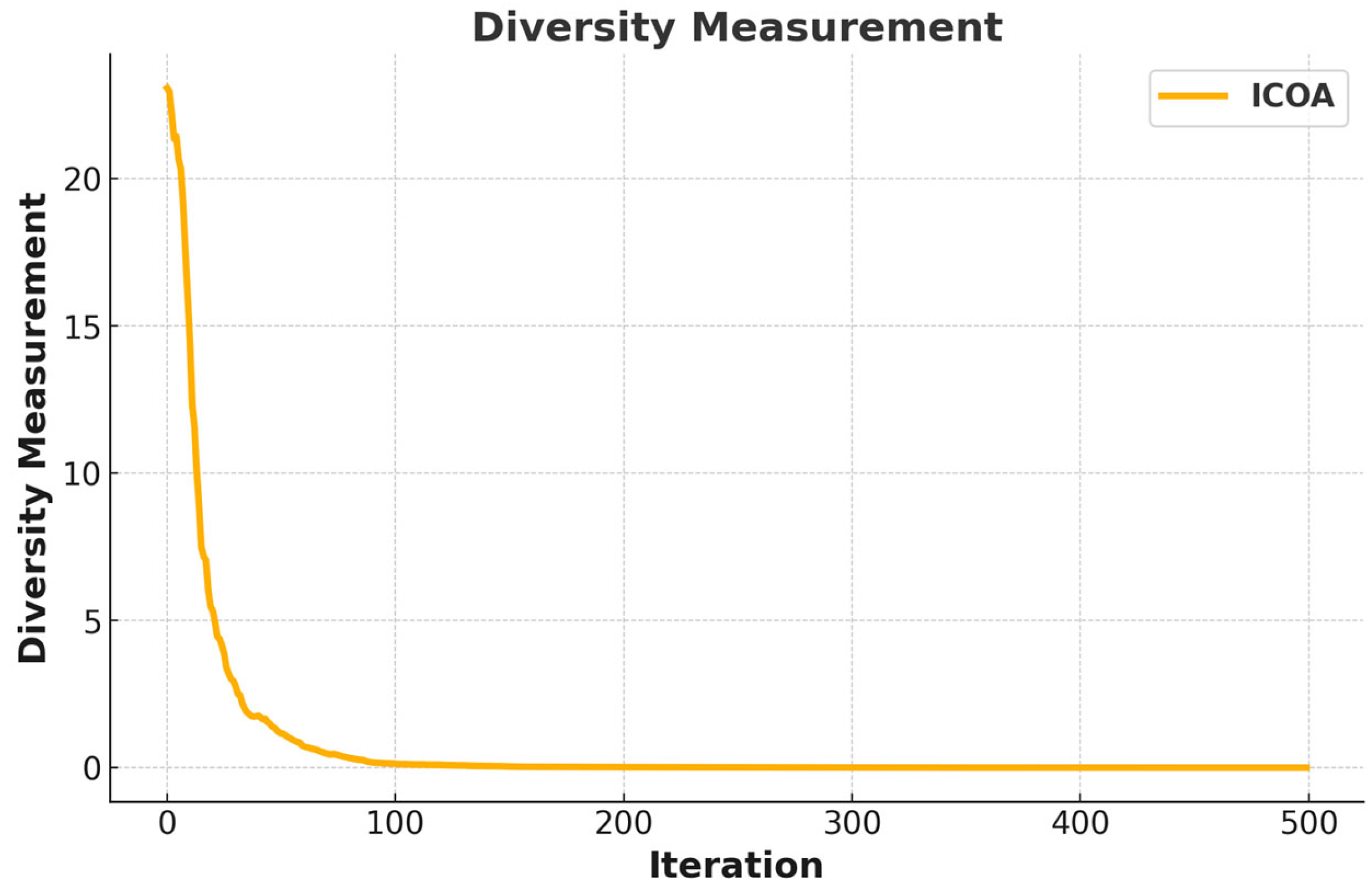

- Diversity: because global search scans the whole solution space, population diversity is produced.

- Adaptability: MHAs can be applied to various problems in finance, economics, engineering, medicine, etc.

- Computational efficiency: MHAs generate acceptable results in a reasonable time. This property makes them advantageous over exact methods for solving large-scale optimization problems.

- Gradient information: MHAs do not need gradient information. Moreover, the mathematical models of MHAs are simple.

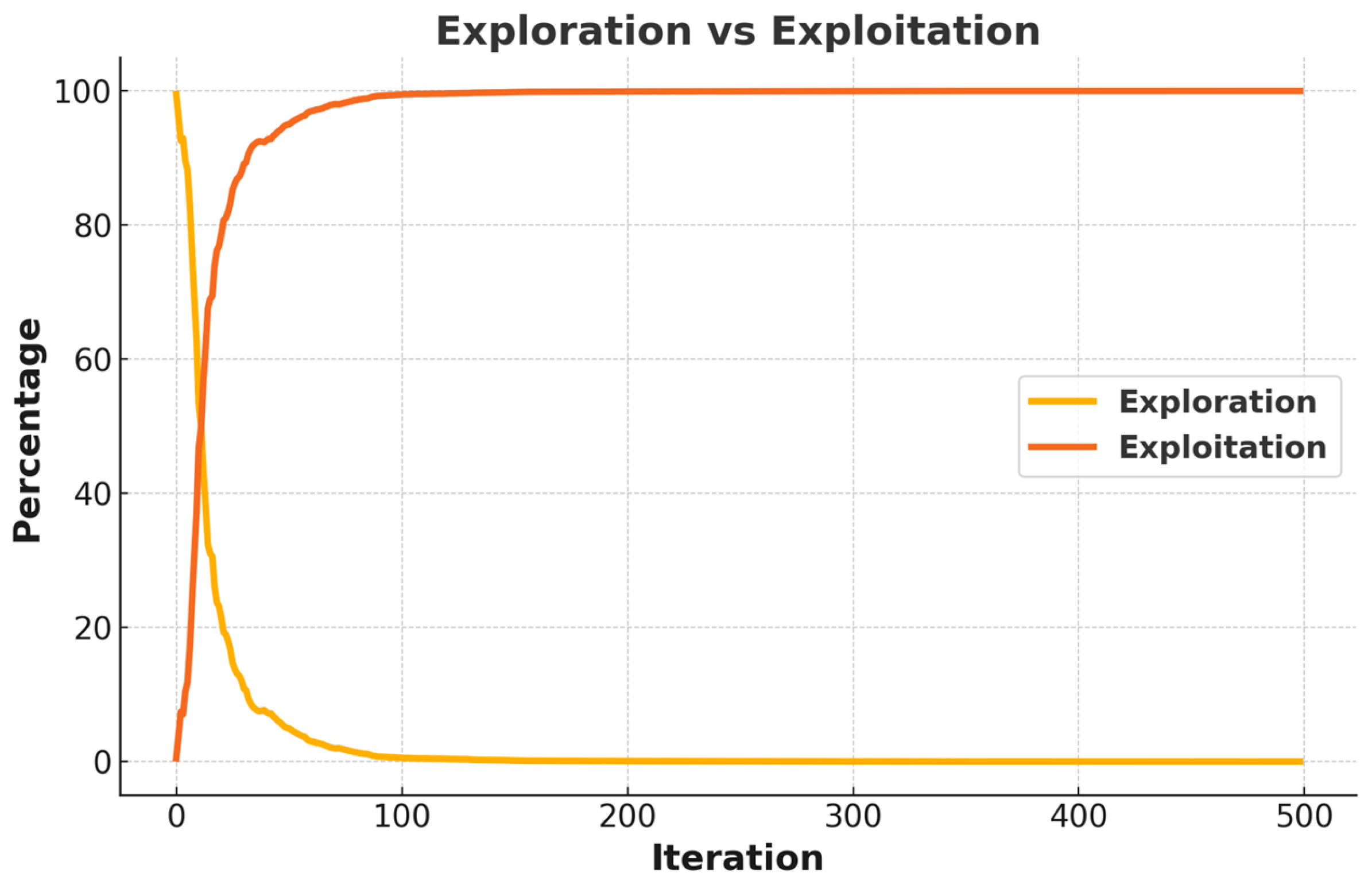

- In the competition stage of the COA, crayfish compete with one another to enter the cave. The original COA models this competition by distance only. The ICOA adds the step length and the locations of the crayfish to the model.

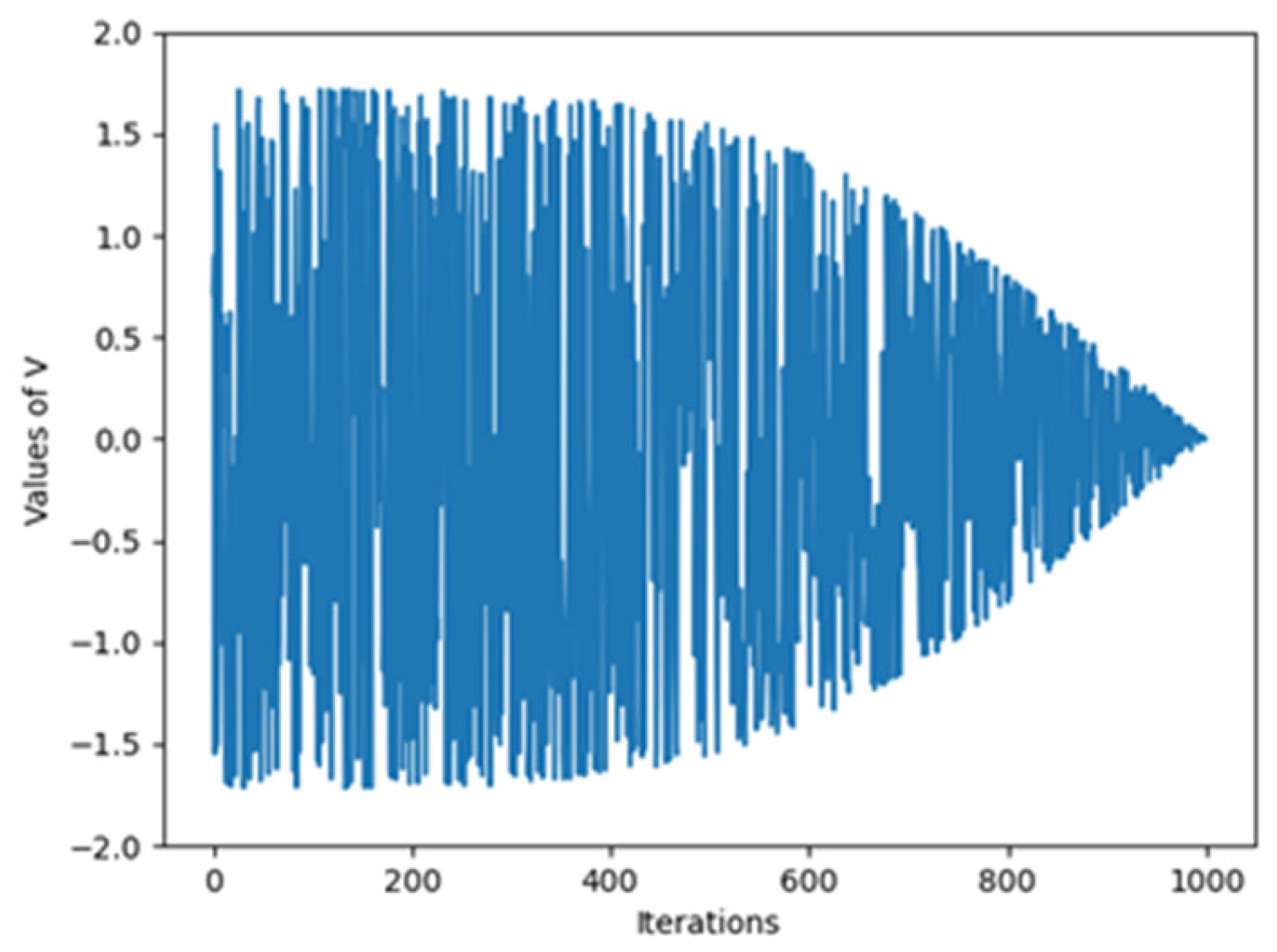

- The step length of the crayfish depends on the iteration: large steps are produced at low iteration numbers and small steps at high iteration numbers. As a result, the competition stage supports exploration at low iteration numbers and exploitation at high iteration numbers.

- In addition, in the competition stage of the ICOA, the cave location, which represents the best location, is removed from the mathematical model. The reason is to increase the exploration ability of the ICOA. The cave location remains available in the other stages.

- To verify the validity of the ICOA, it was compared to nine MHAs. The comparison used the CEC-2014 benchmark suite with 30 test functions, together with five engineering design problems.

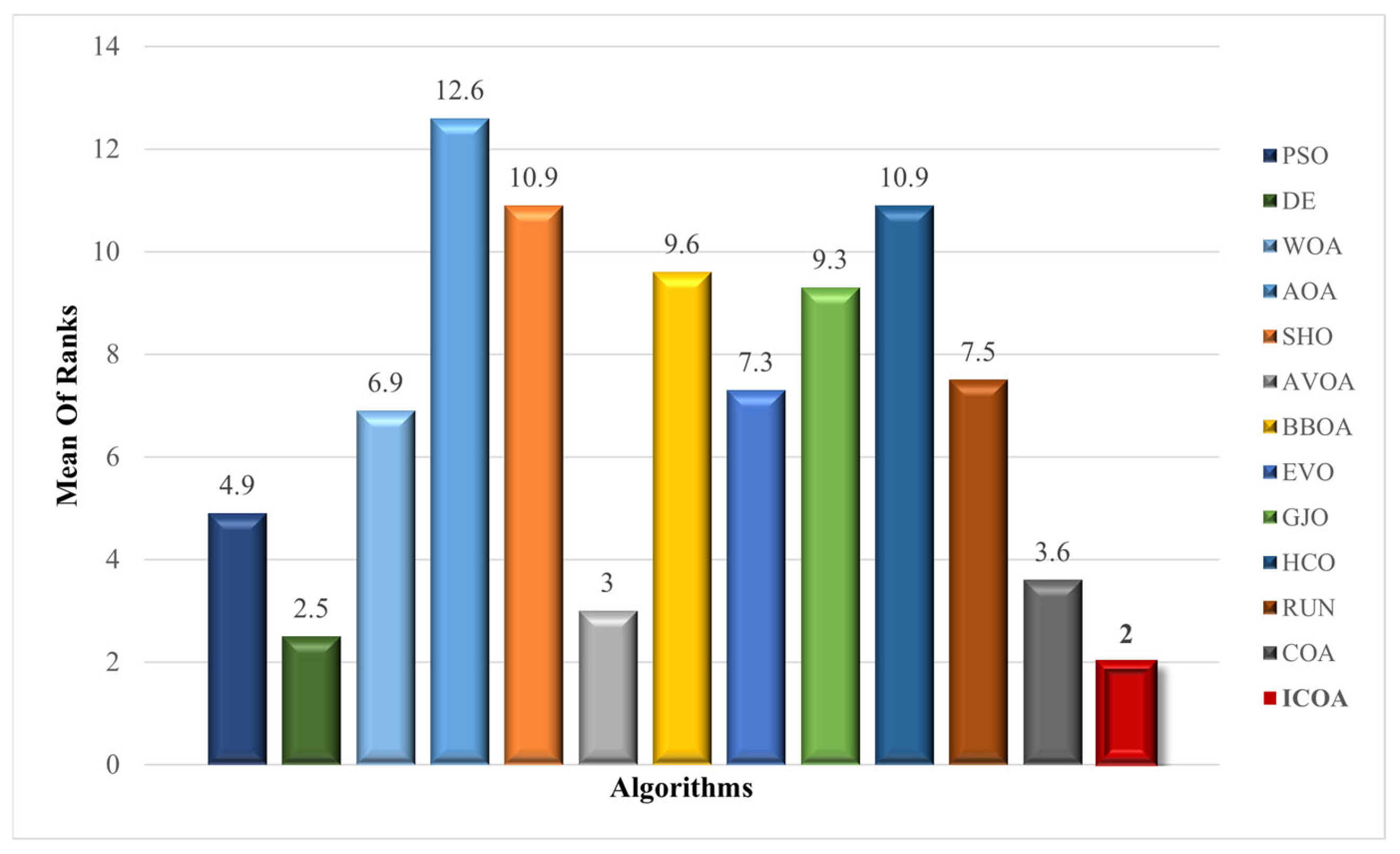

- The results are interpreted using the Wilcoxon Signed-Rank Test and the Friedman test.
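The iteration-dependent step length described in the list above can be illustrated with a minimal sketch. The linear decay used here is an assumption chosen only for illustration; it is not the paper's Equation (13), and the names `step_length`, `s_max`, and `s_min` are hypothetical.

```python
def step_length(t: int, t_max: int, s_max: float = 1.0, s_min: float = 0.0) -> float:
    """Hypothetical linearly decaying step length (NOT the paper's Equation (13))."""
    if not 1 <= t <= t_max:
        raise ValueError("t must lie in [1, t_max]")
    # Fraction of the run still remaining: 1.0 at t = 1, 0.0 at t = t_max.
    remaining = (t_max - t) / (t_max - 1) if t_max > 1 else 0.0
    return s_min + (s_max - s_min) * remaining


# Large steps early (exploration), small steps late (exploitation).
schedule = [step_length(t, 5) for t in range(1, 6)]
print(schedule)
```

Any monotonically decreasing schedule would show the same qualitative behavior; the linear form is simply the easiest to inspect.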

2. Crayfish Optimization Algorithm (COA)

2.1. Define Temperature and Crayfish Intake

2.2. Summer Resort Stage (Exploration)

2.3. Competition Stage (Exploitation)

2.4. Foraging Stage (Exploitation)

3. Improved Crayfish Optimization Algorithm

Algorithm 1. Pseudo-codes of algorithms.

| COA pseudo-code | ICOA pseudo-code |

|---|---|

| Input: T: maximum iteration, N: population size, D: variable dimension | Input: T: maximum iteration, N: population size, D: variable dimension |

| Output: the optimal search agent and its fitness value | Output: the optimal search agent and its fitness value |

| Generate initial population | Generate initial population |

| Calculate the fitness value of the population | Calculate the fitness value of the population |

| Define temperature temp by Equation (1) | Define temperature temp by Equation (1) |

| Define cave according to Equation (3) | Define cave according to Equation (3) |

| Crayfish conduct the summer resort stage according to Equation (4) | Crayfish conduct the summer resort stage according to Equation (4) |

| Crayfish compete for caves through Equation (6) | Updated competition stage, Equation (13) |

| The food intake p and food size are obtained by Equation (2) and Equation (9) | The food intake p and food size are obtained by Equation (2) and Equation (9) |

| Crayfish shred food by Equation (10) | Crayfish shred food by Equation (10) |

| Crayfish forage according to Equation (11) | Crayfish forage according to Equation (11) |

| Crayfish forage according to Equation (12) | Crayfish forage according to Equation (12) |

| Update fitness values | Update fitness values |

4. Computational Results and Discussions

4.1. Experimental Settings and Compared Algorithms

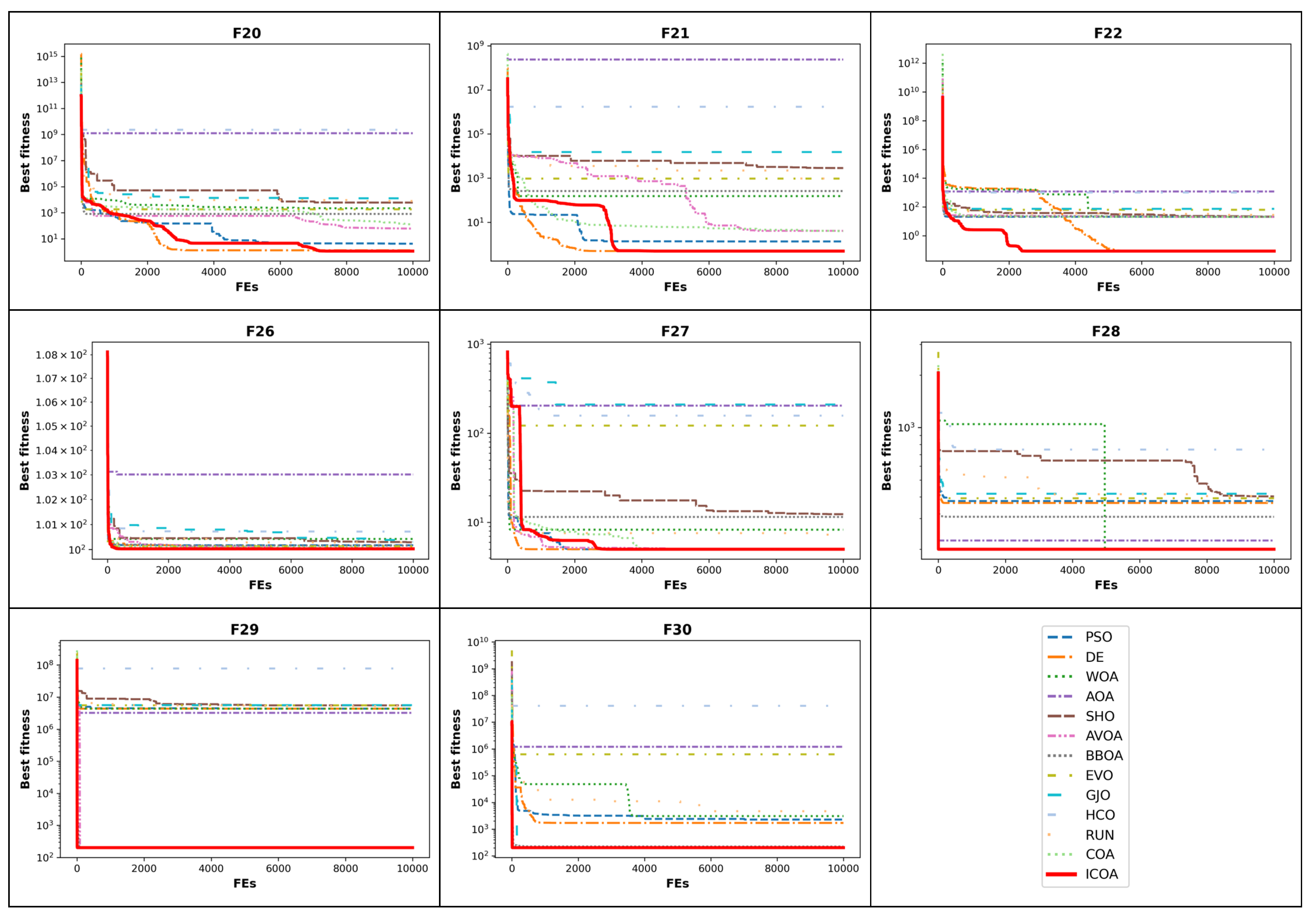

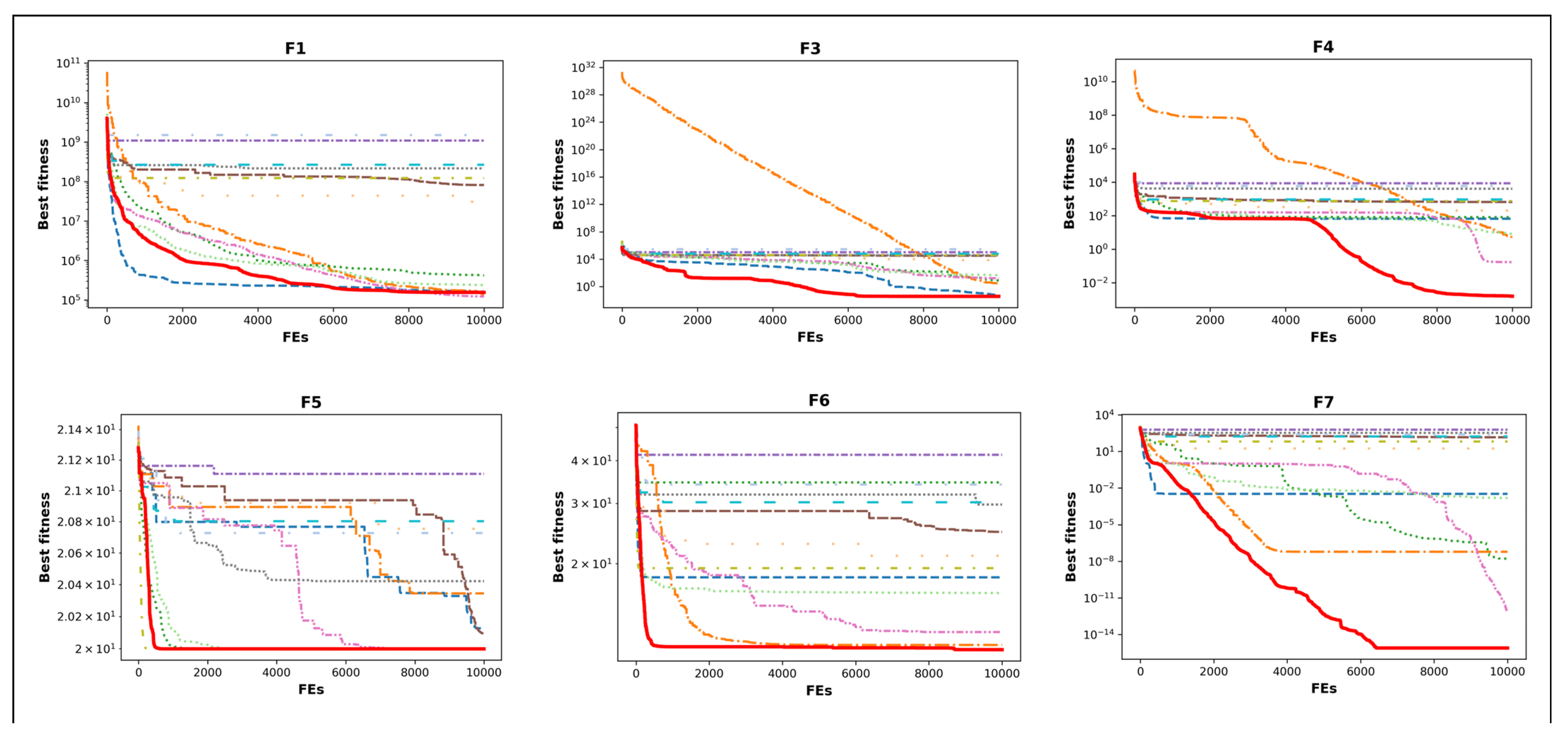

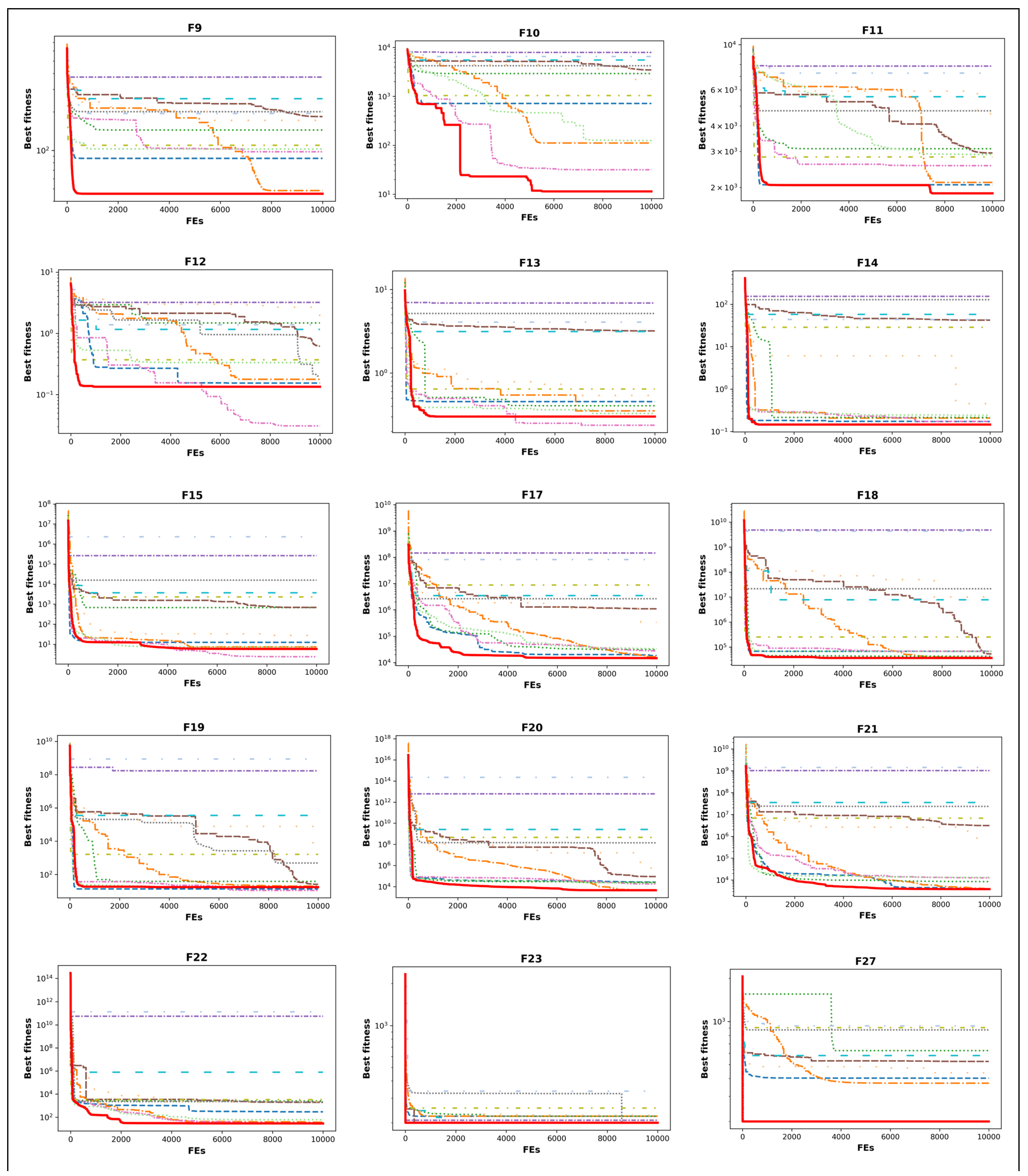

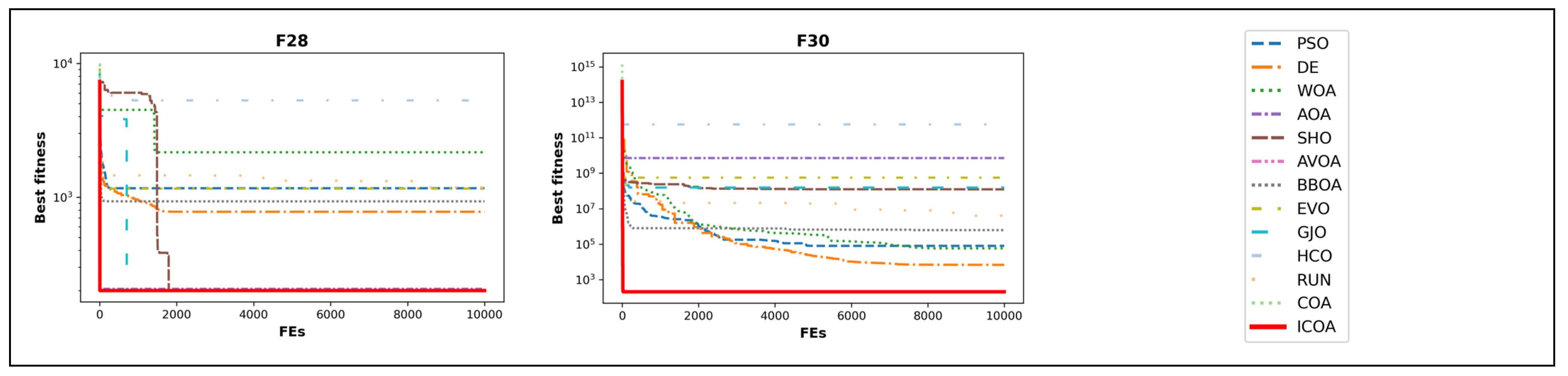

4.2. CEC-2014 Benchmark Function Results

4.3. Ablation Study: Evidence of Component-Wise Improvements

4.4. Scalability Analysis

4.5. Application of ICOA to Engineering Problems

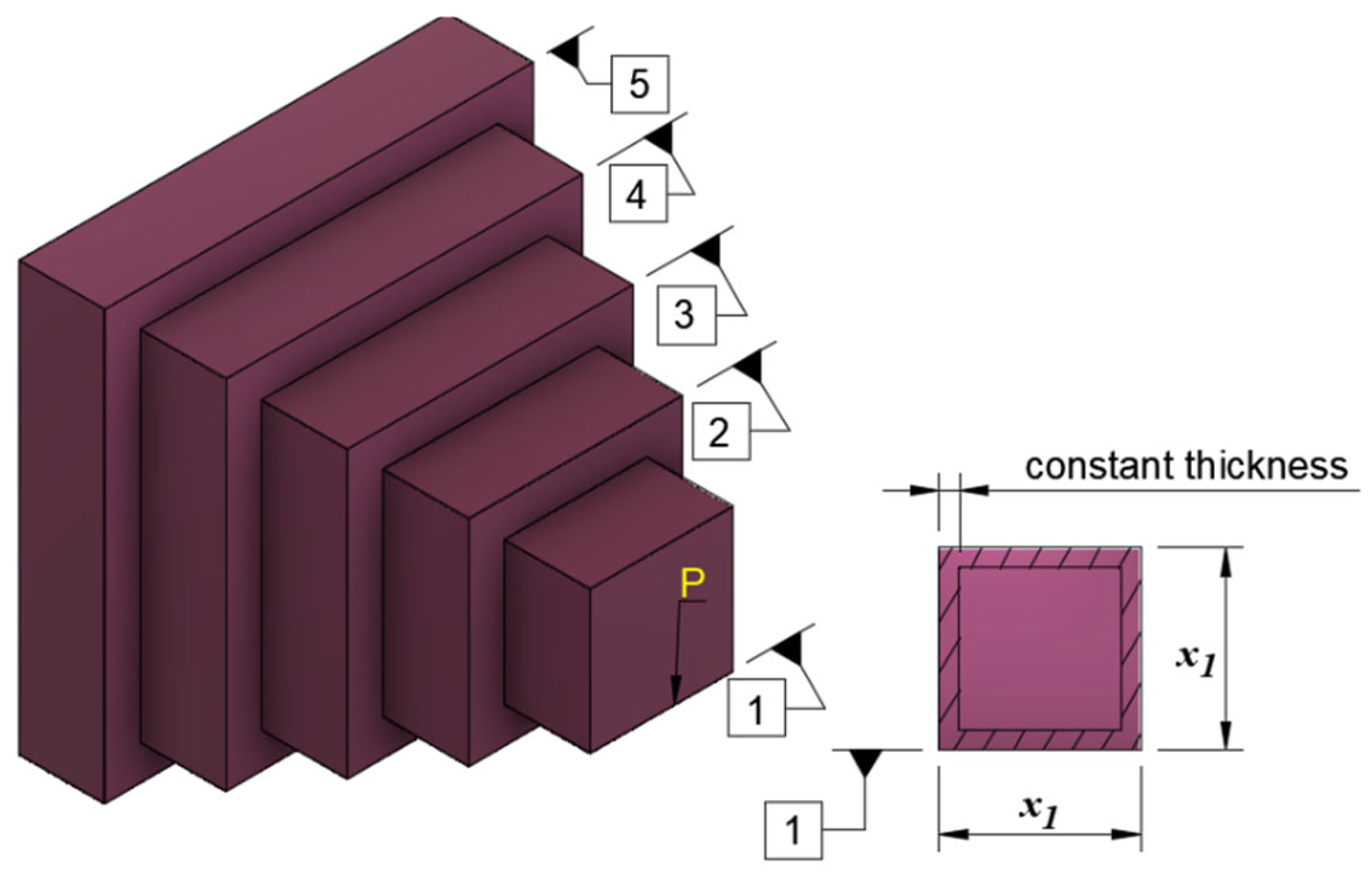

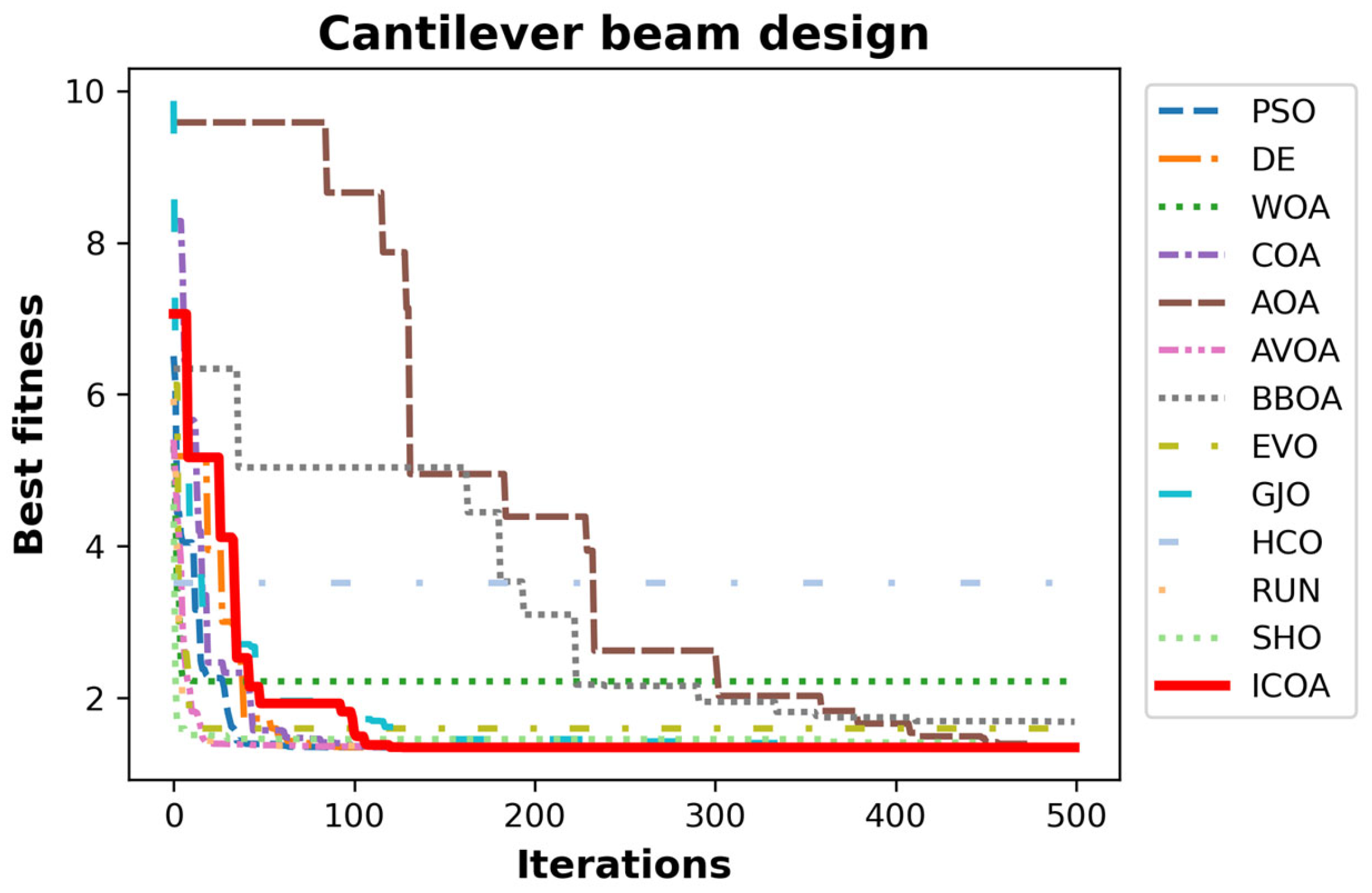

4.5.1. Cantilever Beam Design

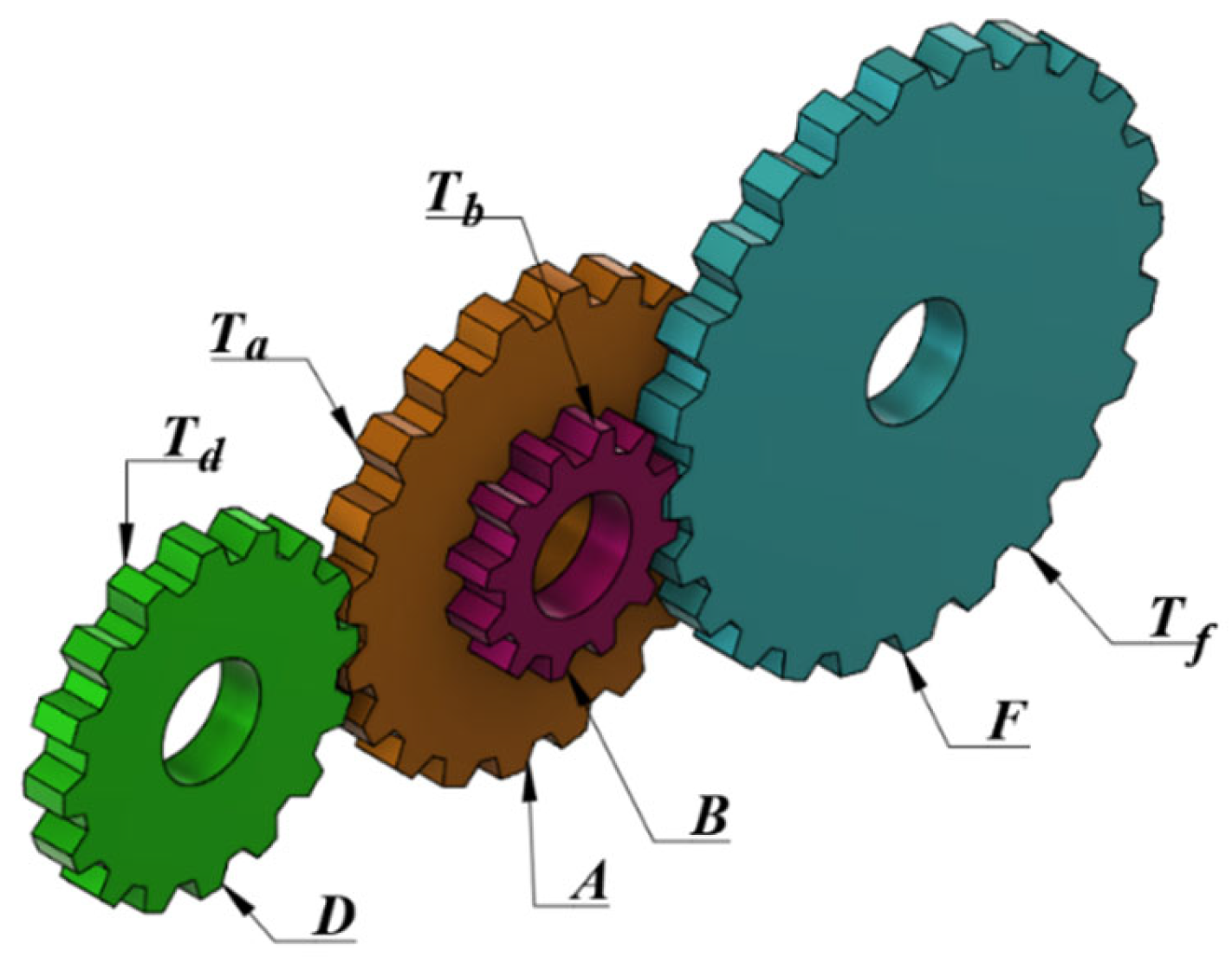

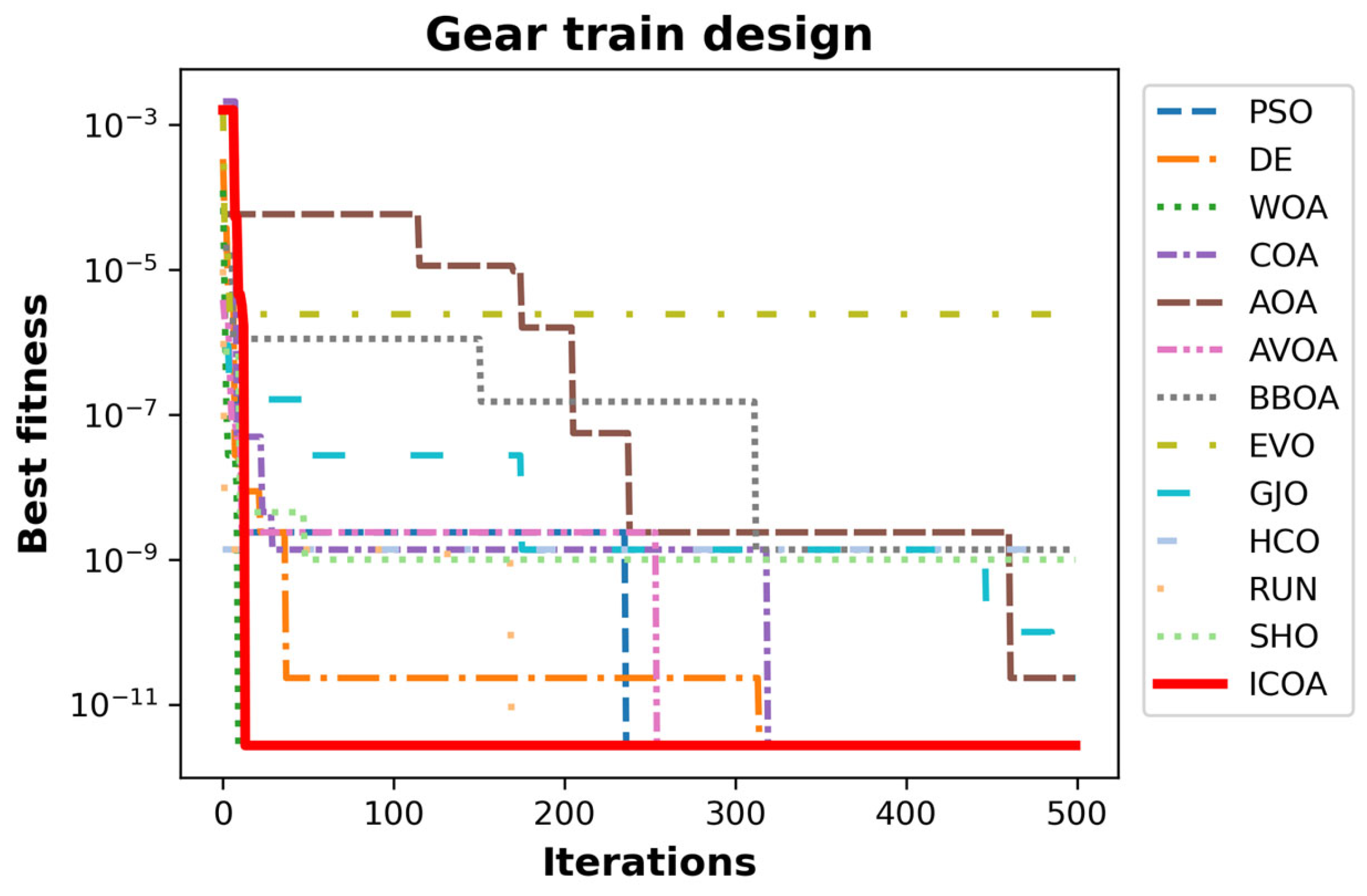

4.5.2. Gear Train Design

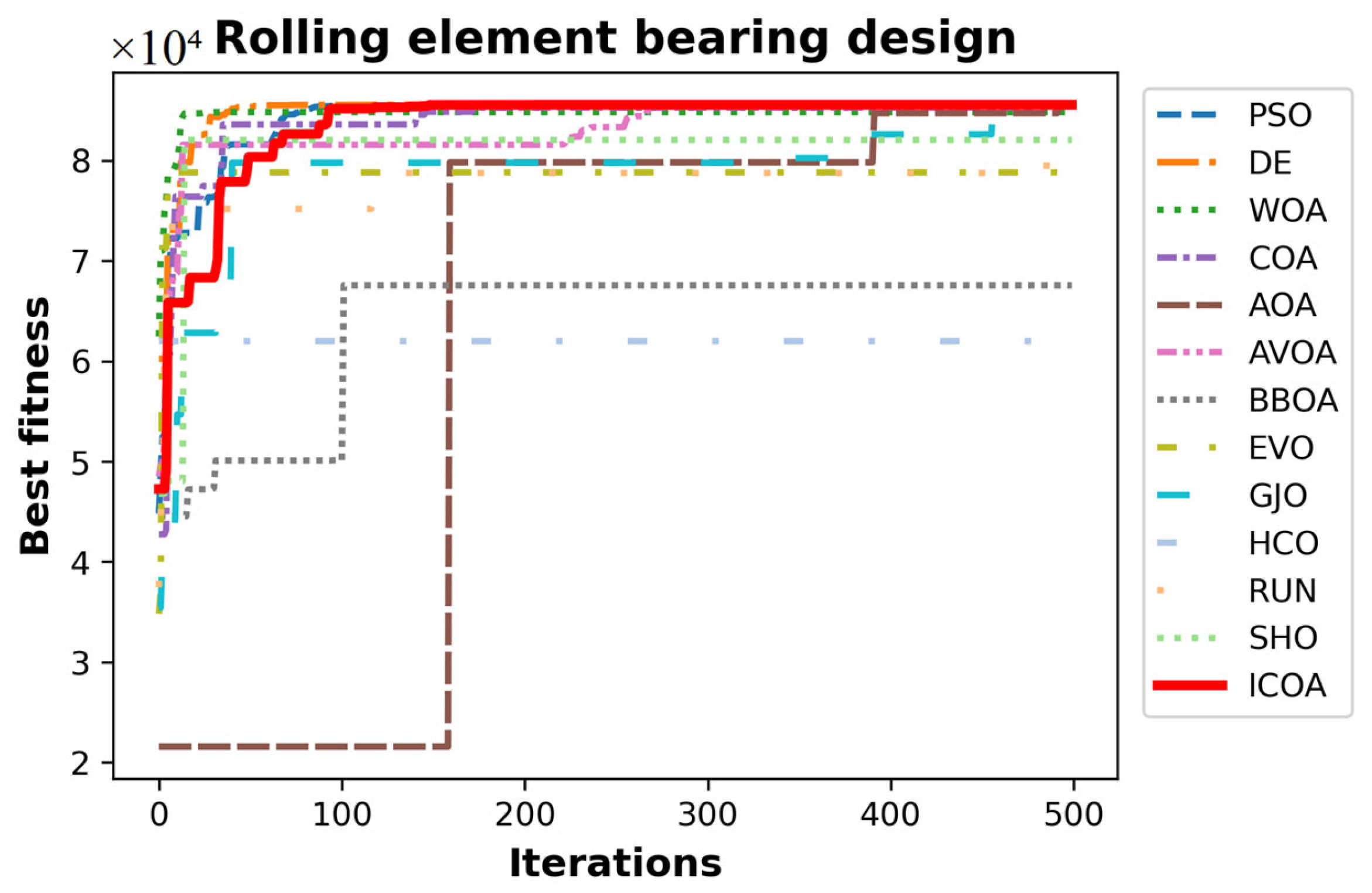

4.5.3. Rolling Element Bearing Design

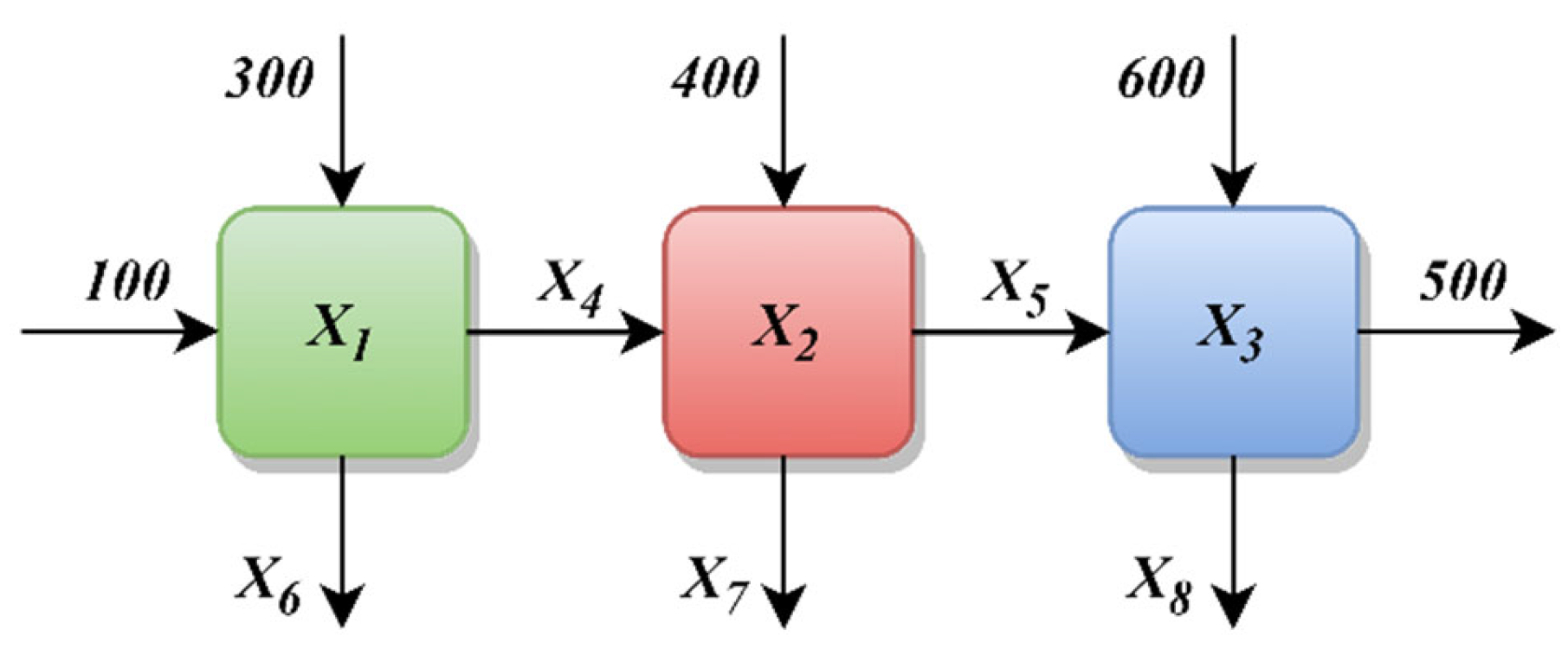

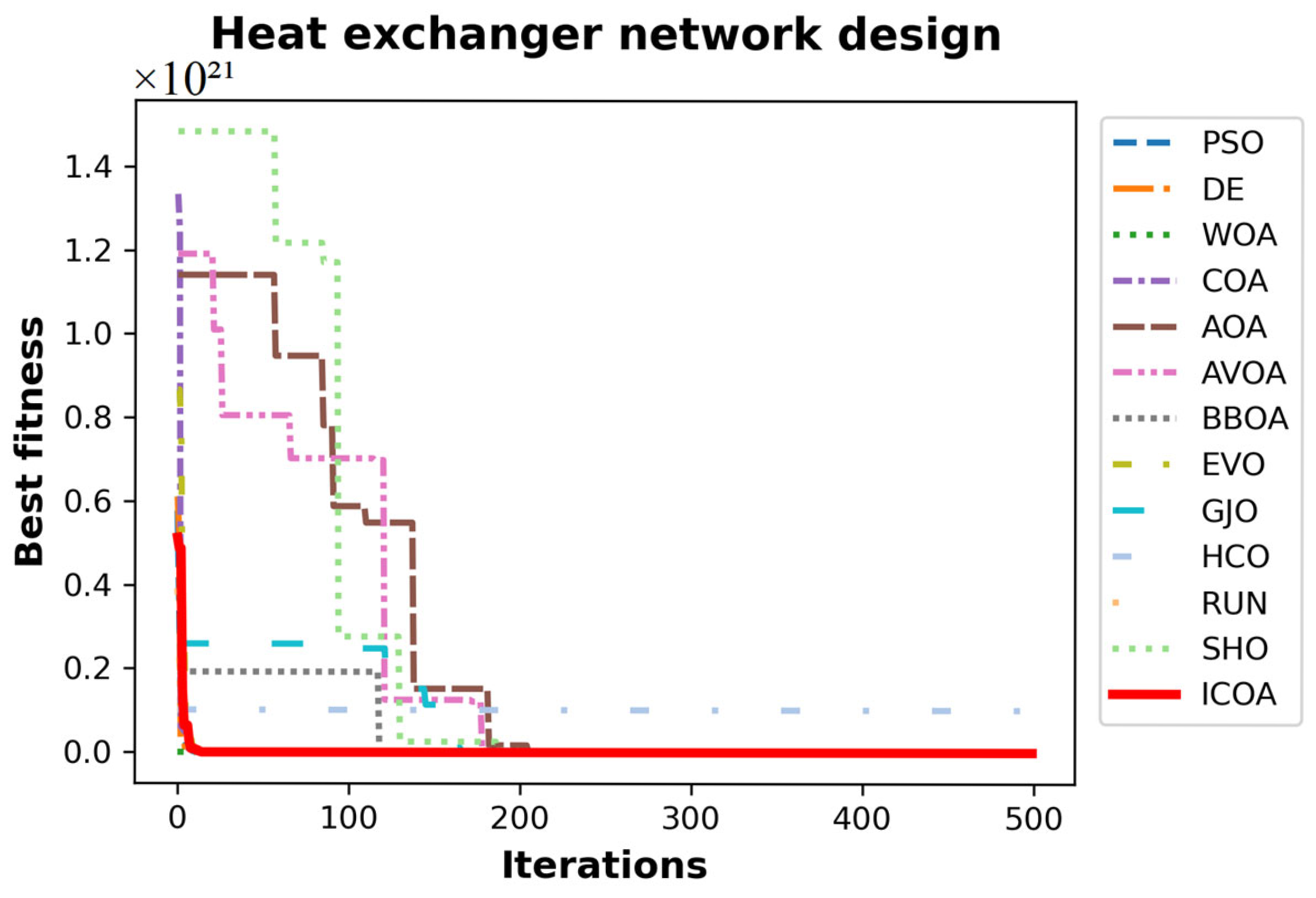

4.5.4. Heat Exchanger Network Design

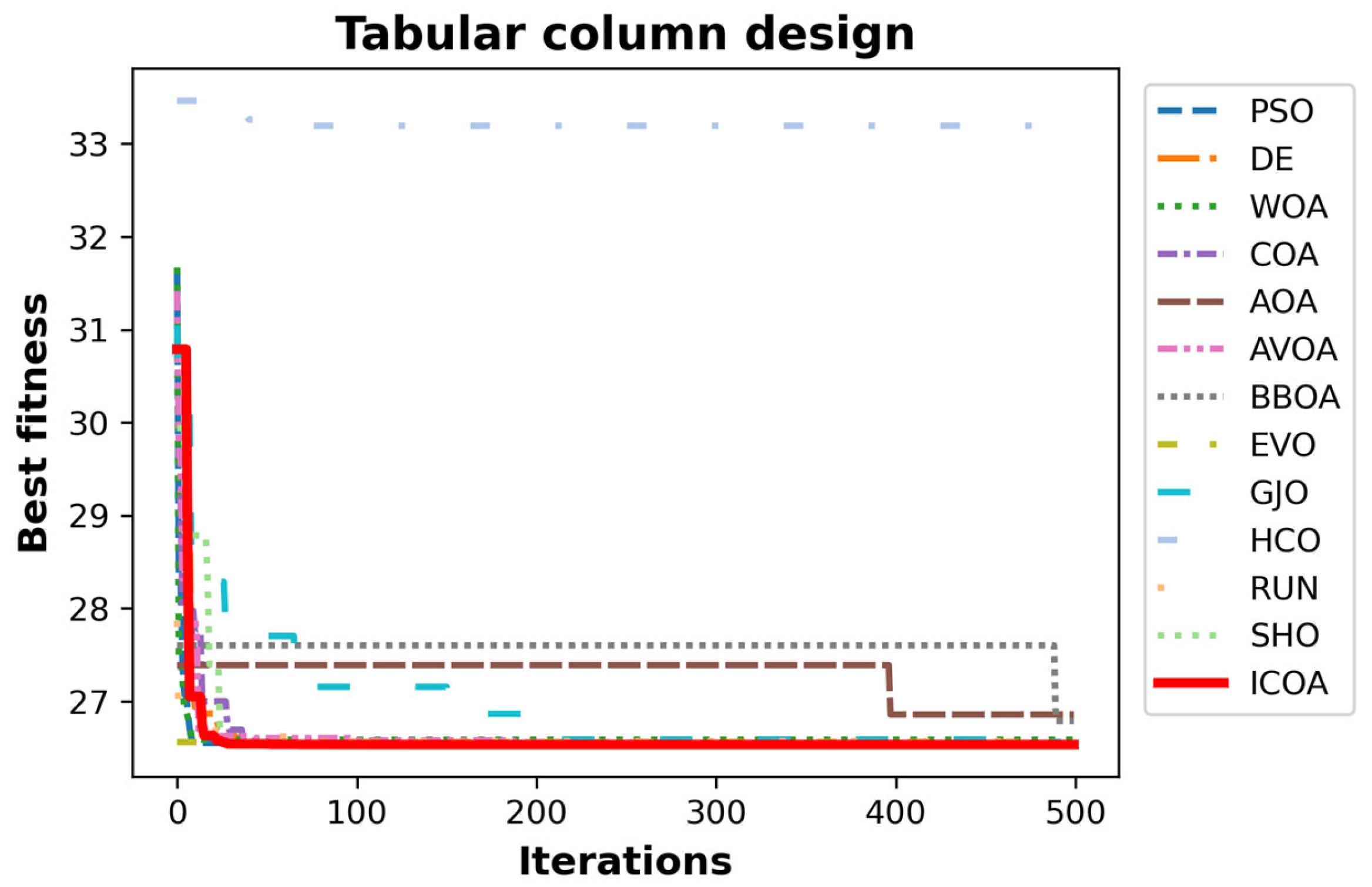

4.5.5. Tubular Column Design

4.6. Critical Assessment of ICOA Implementation

4.6.1. Algorithmic Strengths

4.6.2. Limitations and Drawbacks

4.6.3. Performance Assessment

4.6.4. Recommendations

5. ROAS Rate Problem

6. Discussion

7. Conclusions

- In the competition stage of the ICOA, the step length is inversely proportional to the iteration number. This lets the competition stage support exploration at low iteration numbers and exploitation at high iteration numbers.

- The randomness of the mapping vector increases the stochasticity of the ICOA.

- The CEC-2014 test results indicate that the ICOA is superior to its competitor algorithms. Notably, the ICOA's efficiency does not decrease as the dimension of the problem increases.

- In high-dimensional problems, the mapping vector updates only some dimensions at a time, which both reduces the computational load of the algorithm and limits excessive randomness.

- The convergence curves show that the ICOA has a better curve than the COA, which indicates that the improvements made in the competition stage are effective. The main reason the ICOA has a better slope is that it performs exploration at low iteration numbers and exploitation at high iteration numbers. The second reason is the mapping vector of the ICOA.

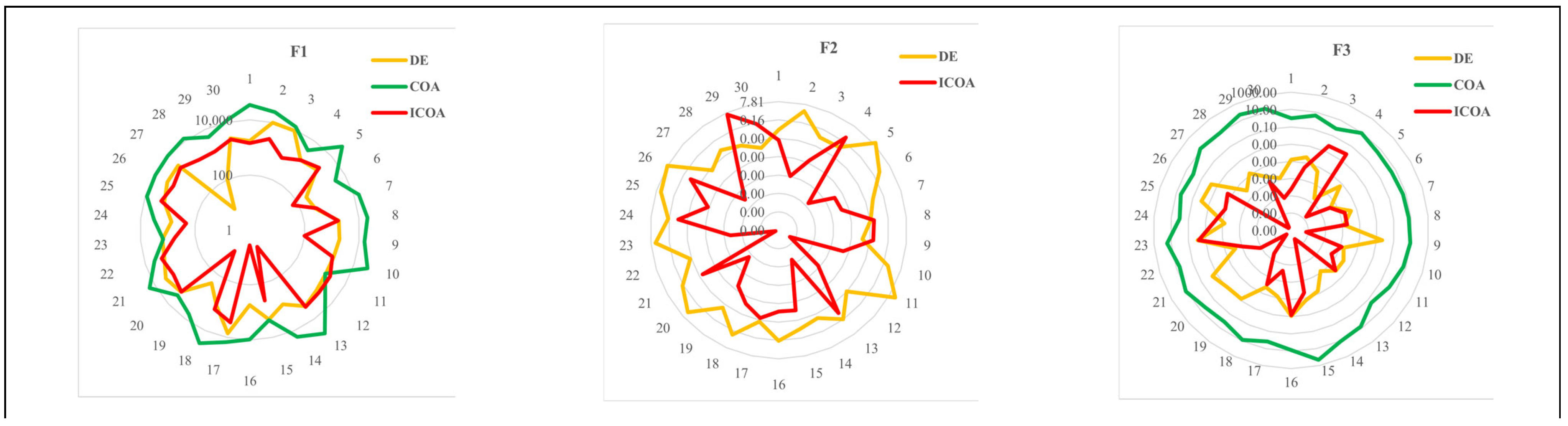

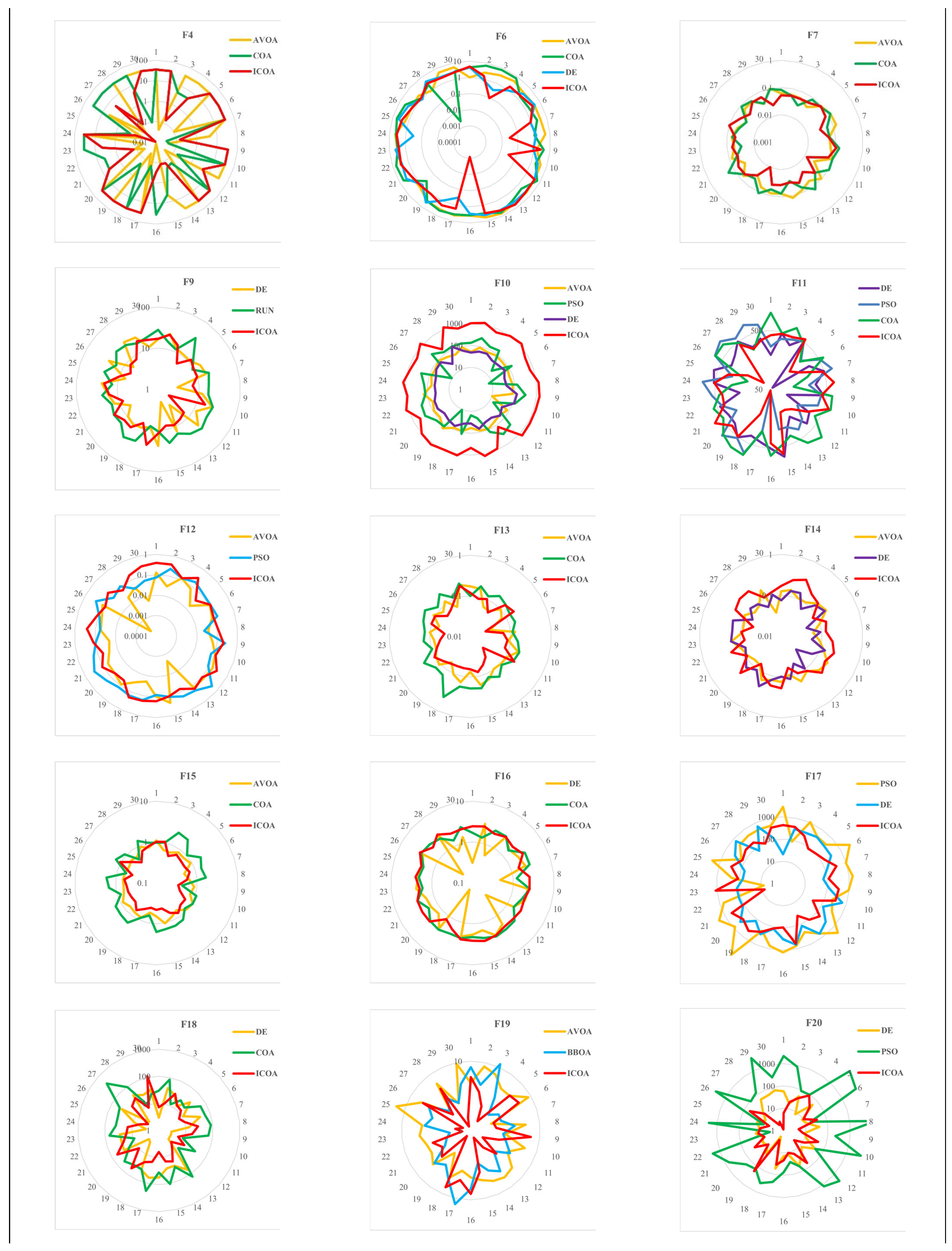

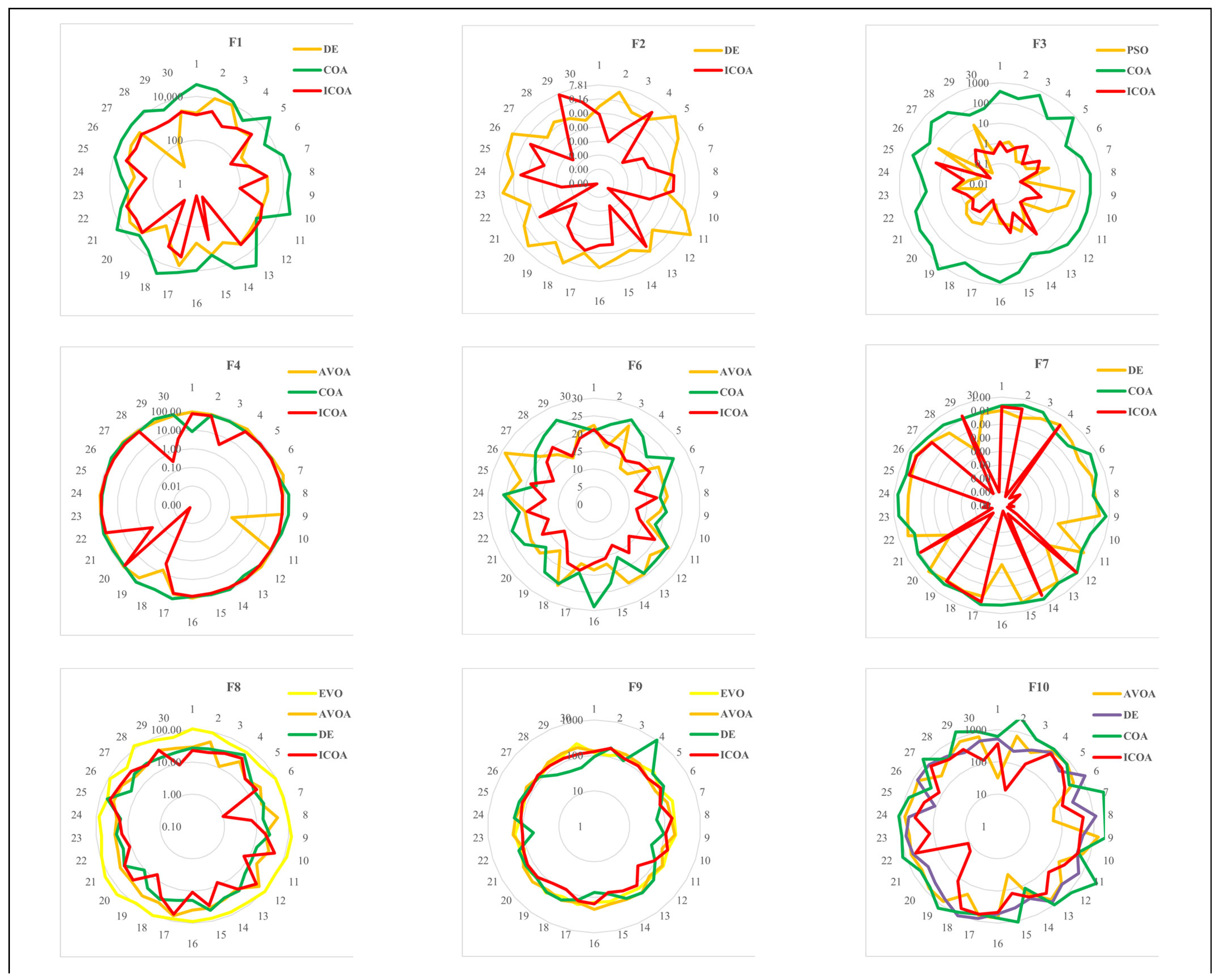

- The radar charts indicate that the ICOA has more consistent results than its competitors.

- The scalability analysis indicates that the ICOA is the optimizer least affected by an increase in dimension.

- The results on the engineering design problems suggest that the ICOA can solve real-world problems.

- The ICOA's success in the ROAS problem suggests that it can solve complex real-world problems. The reason for this success is the improvements made in the competition stage. Removing the cave location parameter helps the ICOA avoid being trapped in local minima.
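The idea of updating only some dimensions through a random mapping vector, as described in the conclusions above, can be sketched as follows. How the paper actually builds its mapping vector is not shown in this section, so the Bernoulli mask, the `rate` parameter, and the function name `apply_mapping_vector` are all assumptions made for illustration.

```python
import random


def apply_mapping_vector(position, candidate, rng, rate=0.5):
    """Copy `candidate` into `position` only where a random 0/1 mask is 1.

    Hypothetical sketch: the mask construction is an assumption,
    not the paper's definition of the mapping vector.
    """
    mapping = [1 if rng.random() < rate else 0 for _ in position]
    if not any(mapping):
        # Guarantee that at least one dimension is updated.
        mapping[rng.randrange(len(position))] = 1
    new_position = [c if m else p for p, c, m in zip(position, candidate, mapping)]
    return new_position, mapping


rng = random.Random(0)  # fixed seed so the run is repeatable
old = [0.0, 0.0, 0.0, 0.0]
cand = [1.0, 2.0, 3.0, 4.0]
new, mapping = apply_mapping_vector(old, cand, rng)
print(mapping, new)
```

Dimensions where the mask is 0 keep their old value, so each call evaluates a candidate that differs from the current position in only part of the search space.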

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Temperature | |

| Crayfish intake | |

| , and | Control parameter |

| The best temperature for crayfish | |

| Iteration | |

| Maximum iteration | |

| Cave location | |

| Best location | |

| Best location (current population) | |

| The position of the ith agent in the jth dimension at the tth iteration | |

| The position of the ith agent in the jth dimension at the (t + 1)th iteration | |

| The position of the randomly selected zth agent from the population in the jth dimension at the tth iteration | |

| Population size | |

| Randomly selected agent | |

| Food factor | |

| Location of food | |

| Fitness value of agent i | |

| Fitness value of the food | |

| Step length | |

| Mapping vector | |

| Dimension of the problem | |

| MHA | Meta-heuristic algorithms |

| FE | Function evaluations |

| WSRT | Wilcoxon Signed-Rank Test |

| PSO | Particle Swarm Optimization |

| DE | Differential Evolution |

| WOA | Whale Optimization Algorithm |

| AOA | Arithmetic Optimization Algorithm |

| AVOA | African Vultures Optimization Algorithm |

| GJO | Golden Jackal Optimization |

| SHO | Sea-Horse Optimizer |

| EVO | Energy Valley Optimizer |

| HCO | Human Conception Optimizer |

| RUN | RUNge Kutta optimizer |

| BBOA | Brown-Bear Optimization Algorithm |

| COA | Crayfish Optimization Algorithm |

| ICOA | Improved Crayfish Optimization Algorithm |

| ROAS | Return on advertising spend |

| AS | Advertising spend |

| R | Revenue |

| APO | Artificial Protozoa Optimizer |

| FLA | Flood Algorithm |

| GGO | Greylag Goose Optimization |

| HO | Hippopotamus Optimization |

| HOA | Hiking Optimization Algorithm |

| CFOA | Catch Fish Optimization Algorithm |

| EEFO | Electric Eel Foraging Optimization |

| PO | Puma Optimizer |

Appendix A

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Makarna lutfen | 1299.50 | 9532 | 156 | 1.64 | 130 | 18,940.01 | 14.57 | 42.51 | 136.33 | 225.42 |

| Baby biscuit | 462.85 | 8165 | 58 | 0.71 | 8 | 1277.16 | 2.76 | 18.41 | 56.69 | 206.04 |

| Biscuit | 16.56 | 4523 | 14 | 0.31 | 32 | 5251.80 | 317.14 | 15.22 | 3.66 | 145.51 |

| Organic | 116.36 | 1742 | 17 | 0.98 | 3 | 312.59 | 2.69 | 18.43 | 66.80 | 211.30 |

| Pasta | 402.09 | 6377 | 18 | 0.28 | 1 | 105.61 | 0.26 | 64.72 | 63.05 | 86.76 |

| Toddler food | 37.40 | 830 | 37 | 4.46 | 13 | 1667.82 | 44.59 | 21.00 | 45.06 | 101.03 |

| Baby semolina | 93.94 | 1180 | 33 | 2.80 | 21 | 2623.33 | 27.93 | 32.65 | 79.61 | 87.34 |

| Baby tarhana | 51.98 | 832 | 9 | 1.08 | 3 | 434.52 | 8.36 | 37.28 | 62.48 | 102.37 |

| Gluten-free pasta | 43.91 | 1207 | 9 | 0.75 | 6 | 612.40 | 13.95 | 12.37 | 36.38 | 53.18 |

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Makarna lutfen | 442.97 | 7497 | 83 | 1.11 | 92 | 13,709.16 | 30.95 | 73.57 | 59.09 | 124.99 |

| Baby semolina | 118.11 | 1905 | 45 | 2.36 | 9 | 1538.10 | 13.02 | 28.05 | 62.00 | 103.69 |

| Pasta | 148.75 | 3353 | 12 | 0.36 | 5 | 929.50 | 6.25 | 30.48 | 44.36 | 76.92 |

| Baby tarhana | 50.68 | 829 | 36 | 4.34 | 6 | 746.40 | 14.73 | 41.18 | 61.13 | 67.88 |

| Gluten-free pasta | 51.18 | 2177 | 51 | 2.34 | 5 | 822.50 | 16.07 | 13.30 | 23.51 | 45.05 |

| Pudding | 2.36 | 933 | 3 | 0.32 | 3 | 459.70 | 194.79 | 10.28 | 2.53 | 50.28 |

| Toddler food | 46.34 | 748 | 10 | 1.34 | 2 | 309.80 | 6.69 | 45.59 | 61.95 | 97.84 |

| Organic | 0.25 | 132 | 3 | 2.27 | 10 | 1405.63 | 5622.52 | 12.37 | 1.89 | 52.37 |

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Pudding | 1076.37 | 27,121 | 145 | 0.53 | 4 | 1106.6 | 1.03 | 14.46 | 39.69 | 74.4 |

| Makarna lutfen | 10,331.77 | 94,109 | 10,065 | 10.7 | 10,703 | 1,211,873.38 | 117.3 | 23.4 | 109.79 | 138 |

| Organic | 131.63 | 5060 | 87 | 1.72 | 6 | 599.19 | 4.55 | 38.69 | 26.01 | 72 |

| Pasta | 906.84 | 7287 | 31 | 0.43 | 10 | 661 | 0.73 | 28.17 | 124.45 | 145.71 |

| Baby curd | 9.86 | 242 | 9 | 3.72 | 13 | 1952.44 | 198.02 | 14.24 | 40.74 | 57 |

| Baby butter | 2.11 | 110 | 9 | 8.18 | 4 | 999.78 | 473.83 | 12.37 | 19.18 | 21 |

| Toddler food | 1449.46 | 15,005 | 252 | 1.68 | 93 | 11,648.8 | 8.04 | 40.98 | 96.6 | 541 |

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Makarna lutfen | 661.88 | 9722 | 83 | 0.85 | 138 | 20,105.91 | 30.38 | 63.96 | 68.08 | 74.65 |

| Toddler food | 206.31 | 2750 | 18 | 0.65 | 8 | 997.61 | 4.84 | 48.15 | 75.02 | 112.41 |

| Pasta | 404.93 | 5280 | 22 | 0.42 | 1 | 319.9 | 0.79 | 25.69 | 76.69 | 104.72 |

| Organic | 6.83 | 200 | 4 | 2 | 3 | 541.7 | 79.31 | 11.06 | 34.15 | 40.79 |

| Biscuit | 1115.59 | 13,124 | 30 | 0.23 | 8 | 1447.2 | 1.3 | 49.36 | 85 | 86.39 |

| Baby biscuit | 36.96 | 708 | 8 | 1.13 | 4 | 1134.6 | 30.7 | 38.62 | 52.2 | 102.37 |

| Baby semolina | 1566.92 | 19,175 | 509 | 2.65 | 189 | 21,785.87 | 13.9 | 25.14 | 81.72 | 163.4 |

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Makarna lutfen | 17,665.35 | 114,206 | 13,709 | 12 | 13,790 | 1,811,070.41 | 102.52 | 81.57 | 154.68 | 287 |

| Pasta | 7494.32 | 56,982 | 638 | 1.12 | 371 | 53,609.57 | 7.15 | 70.41 | 131.52 | 212 |

| Toddler food | 1784.95 | 12,867 | 349 | 2.71 | 111 | 14,323.48 | 8.02 | 41.9 | 138.72 | 370 |

| Baby semolina | 5198.98 | 37,666 | 955 | 2.54 | 342 | 38,720.82 | 7.45 | 25.08 | 138.03 | 910 |

| Baby tarhana | 2780.55 | 16,440 | 381 | 2.32 | 131 | 16,951.23 | 6.1 | 27.39 | 169.13 | 291 |

| Organic | 4511.94 | 17,223 | 337 | 1.96 | 26 | 3479.74 | 0.77 | 57.02 | 261.97 | 112 |

| Baby butter | 4389.9 | 60,975 | 308 | 0.51 | 27 | 3451.92 | 0.79 | 67.66 | 72 | 170 |

| Rice flour | 107.78 | 2770 | 24 | 0.87 | 10 | 1773 | 16.45 | 16.71 | 38.91 | 93.26 |

| Keywords | Advertising Spend | Num. of Impressions | Num. of Clicks | Hit Rate (%) | Sales Volume | Revenue | ROAS | Proposed CPM | Actual CPM | Selected CPM |

|---|---|---|---|---|---|---|---|---|---|---|

| Makarna lutfen | 300.51 | 6952 | 53 | 0.76 | 54 | 9519.31 | 31.68 | 54.93 | 43.23 | 65.21 |

| Rice flour | 183.78 | 6480 | 36 | 0.56 | 5 | 859.5 | 4.68 | 13.62 | 28.36 | 52.37 |

| Organic | 36.74 | 796 | 27 | 3.39 | 8 | 1515.2 | 41.24 | 12.37 | 46.16 | 61.02 |

| Pasta | 12.98 | 1311 | 12 | 0.92 | 6 | 931.4 | 71.76 | 20.57 | 9.9 | 57.29 |

| Toddler food | 3292.64 | 33,583 | 620 | 1.85 | 139 | 16,264.6 | 4.94 | 58.08 | 98.04 | 300 |

| Baby semolina | 9.83 | 253 | 36 | 14.23 | 20 | 2279 | 231.84 | 45.55 | 38.85 | 45 |

| Baby butter | 1655.21 | 79,968 | 583 | 0.73 | 28 | 5149.68 | 3.11 | 12.99 | 20.7 | 28 |

| Baby curd | 74.21 | 5589 | 60 | 1.07 | 18 | 2779.6 | 37.46 | 12.37 | 13.28 | 46 |
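The derived columns in the Appendix A tables can be cross-checked from the raw columns. The sketch below assumes the standard definitions (ROAS as revenue divided by advertising spend, hit rate as clicks per impression in percent, and actual CPM as spend per thousand impressions); these formulas are inferred from the tabulated values rather than quoted from the paper, and `campaign_metrics` is a hypothetical helper name.

```python
def campaign_metrics(spend: float, impressions: int, clicks: int, revenue: float) -> dict:
    """Derived advertising metrics under the standard definitions (assumed)."""
    return {
        "roas": revenue / spend,                       # return on advertising spend
        "hit_rate_pct": 100.0 * clicks / impressions,  # clicks per impression, in %
        "actual_cpm": 1000.0 * spend / impressions,    # cost per thousand impressions
    }


# First row of the first Appendix A table ("Makarna lutfen").
row = campaign_metrics(spend=1299.50, impressions=9532, clicks=156, revenue=18940.01)
rounded = {name: round(value, 2) for name, value in row.items()}
print(rounded)
```

Rounded to two decimals, the three values agree with the ROAS, Hit Rate (%), and Actual CPM entries reported for that row (14.57, 1.64, and 136.33).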


| Algorithms | Parameter Settings |

|---|---|

| PSO | |

| DE | |

| WOA | |

| AOA | |

| AVOA | |

| GJO | |

| SHO | |

| EVO | |

| HCO | |

| RUN | |

| BBOA | |

| COA | |

| ICOA | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Min | 1.34 × 10¹ | 8.78 × 10⁰ | 1.34 × 10¹ | 4.93 × 10⁷ | 2.20 × 10⁶ | 1.66 × 10³ | 2.23 × 10⁵ | 3.45 × 10⁶ | 5.77 × 10⁶ | 9.47 × 10⁷ | 1.33 × 10⁵ | 1.46 × 10³ | 3.59 × 10⁰ |

| | Ave | 3.02 × 10³ | 2.28 × 10³ | 3.47 × 10⁶ | 3.47 × 10⁸ | 8.95 × 10⁶ | 1.51 × 10⁴ | 4.36 × 10⁶ | 2.06 × 10⁷ | 1.21 × 10⁷ | 3.27 × 10⁸ | 6.41 × 10⁵ | 1.39 × 10⁴ | 1.44 × 10³ |

| | Std | 4.15 × 10³ | 2.37 × 10³ | 1.00 × 10⁷ | 2.12 × 10⁸ | 4.40 × 10⁶ | 8.14 × 10³ | 4.96 × 10⁶ | 1.45 × 10⁷ | 3.19 × 10⁶ | 1.89 × 10⁸ | 4.80 × 10⁵ | 1.15 × 10⁴ | 9.64 × 10² |

| F2 | Min | 5.23 × 10⁻² | 5.95 × 10⁻⁴ | 1.07 × 10¹ | 7.25 × 10⁹ | 5.72 × 10¹ | 2.24 × 10¹ | 1.67 × 10⁸ | 3.41 × 10⁷ | 6.51 × 10⁸ | 4.74 × 10⁹ | 2.61 × 10⁵ | 1.60 × 10⁻¹ | 1.99 × 10⁻¹¹ |

| | Ave | 2.54 × 10² | 2.07 × 10⁰ | 3.35 × 10³ | 1.04 × 10¹⁰ | 4.71 × 10⁸ | 3.27 × 10³ | 1.63 × 10⁹ | 6.61 × 10⁸ | 1.67 × 10⁹ | 1.08 × 10¹⁰ | 1.80 × 10⁷ | 1.67 × 10³ | 1.91 × 10⁻¹ |

| | Std | 7.45 × 10² | 5.85 × 10⁰ | 3.99 × 10³ | 2.13 × 10⁹ | 5.75 × 10⁸ | 3.86 × 10³ | 1.06 × 10⁹ | 4.73 × 10⁸ | 4.19 × 10⁸ | 3.51 × 10⁹ | 1.13 × 10⁷ | 2.57 × 10³ | 9.33 × 10⁻¹ |

| F3 | Min | 1.20 × 10⁻⁷ | 3.66 × 10⁻⁹ | 1.23 × 10² | 2.04 × 10⁴ | 1.31 × 10³ | 1.86 × 10¹ | 4.85 × 10² | 9.40 × 10² | 2.87 × 10³ | 5.96 × 10⁴ | 2.01 × 10² | 3.20 × 10⁻¹ | 2.66 × 10⁻¹³ |

| | Ave | 1.53 × 10⁻¹ | 1.09 × 10⁻³ | 9.06 × 10³ | 2.34 × 10⁶ | 4.04 × 10³ | 2.77 × 10² | 3.65 × 10³ | 1.60 × 10⁴ | 1.02 × 10⁴ | 1.56 × 10⁶ | 6.69 × 10² | 1.52 × 10¹ | 6.65 × 10⁻⁴ |

| | Std | 4.60 × 10⁻¹ | 2.40 × 10⁻³ | 8.86 × 10³ | 2.93 × 10⁶ | 2.23 × 10³ | 1.74 × 10² | 2.35 × 10³ | 1.79 × 10⁴ | 3.51 × 10³ | 4.77 × 10⁶ | 3.28 × 10² | 4.04 × 10¹ | 1.97 × 10⁻³ |
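The ranking step that underlies a Friedman-style comparison can be sketched on a small slice of the table above. The example uses only the Ave values of four algorithms (PSO, DE, COA, ICOA) on F1 to F3, so its output is an illustration of the procedure and not the paper's reported Friedman ranking, which covers all algorithms and functions. The helper name `mean_ranks` is hypothetical.

```python
def mean_ranks(scores: dict) -> dict:
    """Average per-function rank of each algorithm (lower score is better).

    Tied scores share the average of the ranks they occupy.
    """
    names = list(scores)
    n_funcs = len(next(iter(scores.values())))
    totals = dict.fromkeys(names, 0.0)
    for f in range(n_funcs):
        ordered = sorted(names, key=lambda name: scores[name][f])
        i = 0
        while i < len(ordered):
            j = i
            # Extend j over any run of tied scores.
            while j + 1 < len(ordered) and scores[ordered[j + 1]][f] == scores[ordered[i]][f]:
                j += 1
            shared_rank = (i + j) / 2 + 1  # 1-based average rank for the tie group
            for name in ordered[i:j + 1]:
                totals[name] += shared_rank
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


# Ave values for F1, F2, F3 copied from the table above (subset of algorithms).
ave = {
    "PSO": [3.02e3, 2.54e2, 1.53e-1],
    "DE": [2.28e3, 2.07e0, 1.09e-3],
    "COA": [1.39e4, 1.67e3, 1.52e1],
    "ICOA": [1.44e3, 1.91e-1, 6.65e-4],
}
ranks = mean_ranks(ave)
print(ranks)
```

On this slice the ICOA ranks first on every function and the original COA ranks last among the four, which is consistent with the Ave rows shown above.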

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F4 | Min | 4.76 × 10⁻² | 1.56 × 10⁻² | 1.44 × 10⁻² | 5.82 × 10² | 1.89 × 10⁰ | 1.22 × 10⁻² | 4.98 × 10¹ | 2.94 × 10¹ | 4.29 × 10¹ | 2.73 × 10² | 1.11 × 10⁰ | 3.33 × 10⁻² | 1.10 × 10⁻² |

| | Ave | 2.47 × 10¹ | 2.93 × 10¹ | 2.87 × 10¹ | 2.18 × 10³ | 5.74 × 10¹ | 1.90 × 10¹ | 1.86 × 10² | 1.37 × 10² | 6.31 × 10¹ | 1.24 × 10³ | 2.26 × 10¹ | 1.93 × 10¹ | 1.83 × 10¹ |

| | Std | 1.71 × 10¹ | 1.22 × 10¹ | 3.29 × 10¹ | 1.16 × 10³ | 3.30 × 10¹ | 1.72 × 10¹ | 1.24 × 10² | 7.67 × 10¹ | 1.83 × 10¹ | 5.92 × 10² | 1.52 × 10¹ | 1.69 × 10¹ | 1.68 × 10¹ |

| F5 | Min | 2.00 × 10¹ | 2.01 × 10¹ | 2.00 × 10¹ | 2.05 × 10¹ | 2.00 × 10¹ | 2.00 × 10¹ | 1.60 × 10¹ | 2.00 × 10¹ | 2.02 × 10¹ | 2.01 × 10¹ | 8.11 × 10⁰ | 1.99 × 10¹ | 2.00 × 10¹ |

| | Ave | 2.01 × 10¹ | 2.02 × 10¹ | 2.01 × 10¹ | 2.10 × 10¹ | 2.00 × 10¹ | 2.00 × 10¹ | 1.99 × 10¹ | 2.03 × 10¹ | 2.03 × 10¹ | 2.03 × 10¹ | 1.99 × 10¹ | 2.00 × 10¹ | 2.00 × 10¹ |

| | Std | 9.70 × 10⁻² | 5.48 × 10⁻² | 1.26 × 10⁻¹ | 1.68 × 10⁻¹ | 4.56 × 10⁻² | 3.23 × 10⁻² | 7.45 × 10⁻¹ | 3.32 × 10⁻¹ | 7.02 × 10⁻² | 1.15 × 10⁻¹ | 2.22 × 10⁰ | 1.37 × 10⁻² | 5.31 × 10⁻³ |

| F6 | Min | 8.86 × 10⁻¹ | 3.26 × 10⁻¹ | 6.64 × 10⁰ | 1.00 × 10¹ | 3.03 × 10⁰ | 9.03 × 10⁻¹ | 2.96 × 10⁰ | 1.11 × 10⁰ | 5.50 × 10⁰ | 4.63 × 10⁰ | 1.86 × 10⁰ | 2.49 × 10⁻³ | 8.44 × 10⁻⁴ |

| | Ave | 4.11 × 10⁰ | 2.55 × 10⁰ | 9.04 × 10⁰ | 1.22 × 10¹ | 5.10 × 10⁰ | 3.47 × 10⁰ | 5.40 × 10⁰ | 3.85 × 10⁰ | 6.62 × 10⁰ | 8.13 × 10⁰ | 3.55 × 10⁰ | 3.63 × 10⁰ | 2.27 × 10⁰ |

| | Std | 1.40 × 10⁰ | 1.50 × 10⁰ | 1.23 × 10⁰ | 9.29 × 10⁻¹ | 1.23 × 10⁰ | 1.53 × 10⁰ | 1.14 × 10⁰ | 1.62 × 10⁰ | 8.47 × 10⁻¹ | 1.85 × 10⁰ | 8.85 × 10⁻¹ | 2.06 × 10⁰ | 1.50 × 10⁰ |

| F7 | Min | 9.11 × 10⁻² | 3.94 × 10⁻² | 1.19 × 10⁰ | 7.65 × 10¹ | 1.21 × 10⁰ | 2.46 × 10⁻² | 3.07 × 10⁰ | 1.47 × 10⁰ | 7.17 × 10⁰ | 6.30 × 10⁰ | 8.28 × 10⁻¹ | 2.84 × 10⁻² | 9.86 × 10⁻³ |

| | Ave | 2.52 × 10⁻¹ | 1.50 × 10⁻¹ | 9.50 × 10⁰ | 1.89 × 10² | 1.20 × 10¹ | 6.88 × 10⁻² | 3.74 × 10¹ | 1.25 × 10¹ | 1.34 × 10¹ | 3.56 × 10¹ | 1.38 × 10⁰ | 7.44 × 10⁻² | 5.16 × 10⁻² |

| | Std | 1.81 × 10⁻¹ | 7.52 × 10⁻² | 6.08 × 10⁰ | 4.88 × 10¹ | 1.34 × 10¹ | 2.78 × 10⁻² | 2.78 × 10¹ | 8.45 × 10⁰ | 4.39 × 10⁰ | 1.83 × 10¹ | 2.65 × 10⁻¹ | 3.59 × 10⁻² | 2.32 × 10⁻² |

| F8 | Min | 5.97 × 10⁰ | 0.00 × 10⁰ | 9.95 × 10⁰ | 8.54 × 10¹ | 1.59 × 10¹ | 0.00 × 10⁰ | 1.50 × 10¹ | 4.38 × 10⁰ | 3.35 × 10¹ | 1.81 × 10¹ | 2.03 × 10⁰ | 1.29 × 10⁻⁹ | 0.00 × 10⁰ |

| | Ave | 1.85 × 10¹ | 2.17 × 10⁰ | 4.07 × 10¹ | 1.25 × 10² | 2.56 × 10¹ | 1.61 × 10⁰ | 2.72 × 10¹ | 1.15 × 10¹ | 4.06 × 10¹ | 3.99 × 10¹ | 1.21 × 10¹ | 5.35 × 10⁰ | 1.16 × 10⁰ |

| | Std | 8.27 × 10⁰ | 1.28 × 10⁰ | 1.77 × 10¹ | 1.57 × 10¹ | 7.59 × 10⁰ | 9.25 × 10⁻¹ | 7.37 × 10⁰ | 4.00 × 10⁰ | 4.47 × 10⁰ | 1.30 × 10¹ | 4.34 × 10⁰ | 7.85 × 10⁰ | 8.28 × 10⁻¹ |

| F9 | Min | 1.09 × 10¹ | 2.08 × 10⁰ | 1.59 × 10¹ | 6.99 × 10¹ | 2.31 × 10¹ | 9.95 × 10⁰ | 1.91 × 10¹ | 8.78 × 10⁰ | 3.69 × 10¹ | 1.81 × 10¹ | 8.25 × 10⁰ | 6.98 × 10⁰ | 1.99 × 10⁰ |

| | Ave | 2.56 × 10¹ | 1.39 × 10¹ | 5.02 × 10¹ | 9.37 × 10¹ | 3.41 × 10¹ | 2.36 × 10¹ | 2.91 × 10¹ | 1.93 × 10¹ | 4.93 × 10¹ | 3.52 × 10¹ | 1.92 × 10¹ | 3.16 × 10¹ | 1.19 × 10¹ |

| | Std | 8.74 × 10⁰ | 7.06 × 10⁰ | 1.73 × 10¹ | 8.99 × 10⁰ | 7.83 × 10⁰ | 7.86 × 10⁰ | 5.46 × 10⁰ | 6.33 × 10⁰ | 3.81 × 10⁰ | 1.46 × 10¹ | 6.14 × 10⁰ | 1.64 × 10¹ | 5.43 × 10⁰ |

| F10 | Min | 7.20 × 10⁰ | 2.72 × 10¹ | 3.01 × 10² | 1.09 × 10³ | 1.32 × 10² | 1.03 × 10¹ | 4.12 × 10² | 1.18 × 10¹ | 3.80 × 10² | 1.16 × 10³ | 7.23 × 10⁰ | 2.50 × 10⁻¹ | 1.30 × 10² |

| | Ave | 1.39 × 10² | 5.80 × 10¹ | 6.95 × 10² | 1.65 × 10³ | 4.37 × 10² | 7.82 × 10¹ | 7.36 × 10² | 2.23 × 10² | 7.26 × 10² | 1.64 × 10³ | 2.34 × 10² | 4.22 × 10² | 9.67 × 10² |

| Std | 9.15 × 101 | 2.57 × 101 | 2.50 × 102 | 2.27 × 102 | 1.43 × 102 | 2.53 × 101 | 2.02 × 102 | 1.68 × 102 | 1.46 × 102 | 2.40 × 102 | 1.62 × 102 | 3.24 × 102 | 3.51 × 102 | |

| F11 | Min | 5.69 × 101 | 5.49 × 101 | 2.45 × 102 | 1.78 × 103 | 2.70 × 102 | 1.43 × 102 | 1.15 × 102 | 2.38 × 102 | 7.16 × 102 | 9.45 × 102 | 3.34 × 102 | 1.29 × 102 | 5.22 × 101 |

| Ave | 4.53 × 102 | 3.75 × 102 | 1.13 × 103 | 2.28 × 103 | 6.61 × 102 | 6.40 × 102 | 7.64 × 102 | 8.08 × 102 | 1.13 × 103 | 1.50 × 103 | 5.99 × 102 | 5.19 × 102 | 3.61 × 102 | |

| Std | 2.16 × 102 | 1.48 × 102 | 3.48 × 102 | 2.61 × 102 | 2.04 × 102 | 2.40 × 102 | 2.72 × 102 | 2.34 × 102 | 1.59 × 102 | 2.80 × 102 | 1.64 × 102 | 2.20 × 102 | 1.85 × 102 | |

| F12 | Min | 2.29 × 10−2 | 4.32 × 10−2 | 3.91 × 10−1 | 1.88 × 100 | 5.16 × 10−2 | 2.26 × 10−4 | 1.15 × 10−1 | 7.44 × 10−2 | 4.15 × 10−1 | 6.89 × 10−1 | 3.42 × 10−1 | 4.92 × 10−2 | 4.01 × 10−2 |

| Ave | 1.43 × 10−1 | 2.17 × 10−1 | 8.38 × 10−1 | 4.04 × 100 | 2.36 × 10−1 | 6.93 × 10−2 | 4.06 × 10−1 | 3.34 × 10−1 | 7.39 × 10−1 | 1.45 × 100 | 8.19 × 10−1 | 3.05 × 10−1 | 1.55 × 10−1 | |

| Std | 9.34 × 10−2 | 1.33 × 10−1 | 2.47 × 10−1 | 1.16 × 100 | 1.35 × 10−1 | 5.31 × 10−2 | 1.57 × 10−1 | 1.92 × 10−1 | 1.95 × 10−1 | 4.31 × 10−1 | 2.00 × 10−1 | 1.83 × 10−1 | 9.60 × 10−2 | |

| F13 | Min | 1.72 × 10−1 | 4.15 × 10−2 | 2.73 × 10−1 | 2.07 × 100 | 3.77 × 10−1 | 3.26 × 10−2 | 3.26 × 10−1 | 2.30 × 10−1 | 3.96 × 10−1 | 1.64 × 10−1 | 1.31 × 10−1 | 9.67 × 10−2 | 2.49 × 10−2 |

| Ave | 4.61 × 10−1 | 1.55 × 10−1 | 6.59 × 10−1 | 4.83 × 100 | 7.33 × 10−1 | 1.05 × 10−1 | 1.41 × 100 | 4.39 × 10−1 | 7.01 × 10−1 | 1.19 × 100 | 2.64 × 10−1 | 1.62 × 10−1 | 8.43 × 10−2 | |

| Std | 2.09 × 10−1 | 6.28 × 10−2 | 2.37 × 10−1 | 1.07 × 100 | 3.98 × 10−1 | 4.01 × 10−2 | 9.51 × 10−1 | 1.34 × 10−1 | 1.10 × 10−1 | 8.64 × 10−1 | 7.27 × 10−2 | 5.97 × 10−2 | 4.10 × 10−2 | |

| F14 | Min | 4.02 × 10−2 | 3.12 × 10−2 | 1.04 × 10−1 | 2.47 × 101 | 2.26 × 10−1 | 3.90 × 10−2 | 1.04 × 100 | 2.38 × 10−1 | 9.88 × 10−1 | 3.04 × 10−1 | 8.05 × 10−2 | 6.85 × 10−2 | 5.30 × 10−2 |

| Ave | 2.20 × 10−1 | 1.08 × 10−1 | 4.58 × 10−1 | 3.98 × 101 | 1.54 × 100 | 1.17 × 10−1 | 1.06 × 101 | 2.55 × 100 | 3.14 × 100 | 7.90 × 100 | 1.76 × 10−1 | 2.01 × 10−1 | 1.56 × 10−1 | |

| Std | 1.59 × 10−1 | 4.43 × 10−2 | 3.64 × 10−1 | 7.46 × 100 | 2.41 × 100 | 4.03 × 10−2 | 7.33 × 100 | 2.18 × 100 | 9.37 × 10−1 | 4.33 × 100 | 4.36 × 10−2 | 8.29 × 10−2 | 6.57 × 10−2 | |

| F15 | Min | 7.14 × 10−1 | 5.68 × 10−1 | 3.24 × 100 | 1.14 × 103 | 1.78 × 100 | 5.19 × 10−1 | 2.77 × 100 | 2.68 × 100 | 6.49 × 100 | 1.13 × 104 | 1.84 × 100 | 4.30 × 10−1 | 3.44 × 10−1 |

| Ave | 2.33 × 100 | 1.45 × 100 | 5.89 × 101 | 1.55 × 104 | 4.49 × 100 | 7.64 × 10−1 | 4.74 × 101 | 9.06 × 101 | 1.22 × 101 | 1.35 × 105 | 2.98 × 100 | 1.24 × 100 | 6.17 × 10−1 | |

| Std | 1.33 × 100 | 5.40 × 10−1 | 7.12 × 101 | 1.65 × 104 | 3.33 × 100 | 1.51 × 10−1 | 1.37 × 102 | 2.77 × 102 | 7.99 × 100 | 1.29 × 105 | 5.83 × 10−1 | 4.35 × 10−1 | 1.89 × 10−1 | |

| F16 | Min | 1.35 × 100 | 1.52 × 10−1 | 2.63 × 100 | 3.89 × 100 | 2.20 × 100 | 1.80 × 100 | 2.26 × 100 | 2.57 × 100 | 3.44 × 100 | 2.38 × 100 | 1.77 × 100 | 1.24 × 100 | 1.24 × 100 |

| Ave | 2.41 × 100 | 1.66 × 100 | 3.45 × 100 | 4.31 × 100 | 2.93 × 100 | 2.47 × 100 | 2.88 × 100 | 3.38 × 100 | 3.65 × 100 | 3.39 × 100 | 2.47 × 100 | 2.20 × 100 | 2.32 × 100 | |

| Std | 4.00 × 10−1 | 8.31 × 10−1 | 3.41 × 10−1 | 1.81 × 10−1 | 3.18 × 10−1 | 3.01 × 10−1 | 2.85 × 10−1 | 4.04 × 10−1 | 1.02 × 10−1 | 4.52 × 10−1 | 3.52 × 10−1 | 5.01 × 10−1 | 3.92 × 10−1 | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F17 | Min | 7.58 × 100 | 2.08 × 101 | 6.77 × 102 | 5.83 × 105 | 4.62 × 103 | 8.60 × 102 | 6.22 × 102 | 6.34 × 103 | 8.93 × 103 | 6.20 × 105 | 1.60 × 103 | 2.29 × 102 | 7.40 × 100 |

| Ave | 1.15 × 103 | 2.73 × 102 | 1.95 × 103 | 7.14 × 106 | 9.24 × 103 | 2.62 × 103 | 2.74 × 103 | 1.39 × 105 | 4.33 × 104 | 1.05 × 107 | 8.92 × 103 | 4.89 × 103 | 2.51 × 102 | |

| Std | 1.68 × 103 | 2.07 × 102 | 1.21 × 103 | 1.03 × 107 | 4.33 × 103 | 1.42 × 103 | 2.56 × 103 | 4.26 × 105 | 3.09 × 104 | 8.43 × 106 | 4.51 × 103 | 5.48 × 103 | 2.20 × 102 | |

| F18 | Min | 4.95 × 100 | 2.95 × 100 | 1.32 × 102 | 1.33 × 106 | 1.03 × 104 | 1.35 × 101 | 1.13 × 103 | 6.16 × 103 | 1.78 × 104 | 3.90 × 106 | 1.32 × 103 | 8.46 × 100 | 2.91 × 100 |

| Ave | 4.62 × 101 | 2.39 × 101 | 5.22 × 103 | 1.48 × 108 | 1.62 × 104 | 1.06 × 103 | 9.66 × 103 | 1.99 × 106 | 1.94 × 104 | 1.20 × 108 | 4.11 × 103 | 6.23 × 101 | 1.99 × 101 | |

| Std | 1.45 × 102 | 1.86 × 101 | 4.31 × 103 | 1.61 × 108 | 2.97 × 103 | 1.18 × 103 | 4.91 × 103 | 6.97 × 106 | 4.36 × 102 | 1.08 × 108 | 2.38 × 103 | 7.53 × 101 | 2.19 × 101 | |

| F19 | Min | 1.66 × 100 | 3.18 × 100 | 6.80 × 100 | 1.22 × 104 | 4.32 × 100 | 2.05 × 100 | 2.02 × 100 | 5.92 × 100 | 7.99 × 100 | 1.44 × 105 | 4.23 × 100 | 1.58 × 100 | 1.16 × 100 |

| Ave | 1.08 × 101 | 5.17 × 100 | 3.07 × 101 | 7.63 × 106 | 8.31 × 100 | 5.72 × 100 | 4.51 × 100 | 3.80 × 104 | 2.98 × 101 | 3.29 × 106 | 9.35 × 100 | 7.12 × 100 | 3.55 × 100 | |

| Std | 7.61 × 100 | 1.10 × 100 | 3.93 × 101 | 1.41 × 107 | 1.66 × 100 | 2.39 × 100 | 2.42 × 100 | 1.77 × 105 | 9.25 × 101 | 3.33 × 106 | 2.61 × 100 | 4.86 × 100 | 2.20 × 100 | |

| F20 | Min | 4.20 × 100 | 1.96 × 100 | 2.18 × 103 | 1.26 × 109 | 5.94 × 103 | 6.04 × 101 | 8.07 × 102 | 1.81 × 103 | 1.24 × 104 | 2.30 × 109 | 7.94 × 103 | 1.30 × 102 | 1.13 × 100 |

| Ave | 1.82 × 103 | 2.63 × 101 | 1.18 × 104 | 2.34 × 1013 | 1.62 × 104 | 5.80 × 103 | 2.30 × 104 | 4.38 × 1010 | 4.50 × 104 | 4.27 × 1012 | 2.35 × 104 | 6.95 × 103 | 2.61 × 101 | |

| Std | 3.21 × 103 | 2.03 × 101 | 1.10 × 104 | 6.72 × 1013 | 6.85 × 103 | 5.21 × 103 | 2.08 × 104 | 2.27 × 1011 | 1.30 × 105 | 9.67 × 1012 | 1.18 × 104 | 9.02 × 103 | 3.48 × 101 | |

| F21 | Min | 1.36 × 100 | 4.98 × 10−1 | 3.68 × 100 | 7.10 × 105 | 2.87 × 103 | 4.12 × 100 | 2.61 × 102 | 9.54 × 102 | 1.52 × 104 | 1.72 × 106 | 9.62 × 102 | 4.01 × 100 | 4.97 × 10−1 |

| Ave | 4.32 × 101 | 4.09 × 100 | 5.21 × 102 | 5.18 × 107 | 5.86 × 103 | 2.18 × 102 | 2.44 × 103 | 3.11 × 105 | 1.43 × 105 | 3.96 × 107 | 2.50 × 103 | 5.56 × 101 | 3.91 × 100 | |

| Std | 4.41 × 101 | 3.79 × 100 | 8.02 × 102 | 5.72 × 107 | 1.18 × 103 | 1.94 × 102 | 2.54 × 103 | 5.71 × 105 | 1.39 × 105 | 3.23 × 107 | 1.26 × 103 | 5.47 × 101 | 8.48 × 100 | |

| F22 | Min | 2.06 × 101 | 8.56 × 10−2 | 2.07 × 101 | 1.19 × 103 | 2.13 × 101 | 2.03 × 101 | 2.10 × 101 | 6.34 × 101 | 7.39 × 101 | 1.01 × 103 | 2.83 × 101 | 2.03 × 101 | 8.54 × 10−2 |

| Ave | 1.83 × 102 | 5.29 × 102 | 1.20 × 102 | 3.61 × 1011 | 2.06 × 102 | 7.88 × 101 | 1.19 × 102 | 3.35 × 107 | 3.20 × 102 | 8.66 × 1010 | 4.31 × 101 | 1.35 × 102 | 9.83 × 101 | |

| Std | 9.83 × 101 | 8.17 × 102 | 1.14 × 102 | 1.34 × 1012 | 2.56 × 102 | 9.76 × 101 | 1.34 × 102 | 1.79 × 108 | 2.95 × 102 | 2.29 × 1011 | 4.05 × 101 | 1.69 × 102 | 1.07 × 102 | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F23 | Min | 2.37 × 102 | 2.37 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.43 × 102 | 2.00 × 102 | 2.52 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 2.42 × 102 | 2.37 × 102 | 2.38 × 102 | 2.05 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.52 × 102 | 2.00 × 102 | 2.73 × 102 | 2.04 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 1.65 × 100 | 1.65 × 100 | 1.29 × 101 | 1.12 × 100 | 0.00 × 100 | 0.00 × 100 | 0.00 × 100 | 5.66 × 100 | 0.00 × 100 | 1.90 × 101 | 1.13 × 101 | 0.00 × 100 | 0.00 × 100 | |

| F24 | Min | 1.16 × 102 | 1.11 × 102 | 1.23 × 102 | 1.88 × 102 | 1.16 × 102 | 1.15 × 102 | 1.29 × 102 | 1.21 × 102 | 1.50 × 102 | 1.23 × 102 | 1.15 × 102 | 1.16 × 102 | 1.10 × 102 |

| Ave | 1.35 × 102 | 1.25 × 102 | 1.68 × 102 | 2.00 × 102 | 1.45 × 102 | 1.32 × 102 | 1.63 × 102 | 1.57 × 102 | 1.56 × 102 | 1.63 × 102 | 1.25 × 102 | 1.98 × 102 | 1.21 × 102 | |

| Std | 1.18 × 101 | 5.39 × 100 | 3.01 × 101 | 2.73 × 100 | 2.67 × 101 | 1.60 × 101 | 2.53 × 101 | 2.33 × 101 | 3.99 × 100 | 2.47 × 101 | 6.09 × 100 | 1.54 × 101 | 6.75 × 100 | |

| F25 | Min | 1.55 × 102 | 2.00 × 102 | 1.66 × 102 | 2.00 × 102 | 1.60 × 102 | 2.00 × 102 | 1.82 × 102 | 2.02 × 102 | 2.00 × 102 | 2.01 × 102 | 1.55 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 1.95 × 102 | 2.00 × 102 | 1.99 × 102 | 2.00 × 102 | 1.97 × 102 | 2.00 × 102 | 1.99 × 102 | 2.04 × 102 | 2.00 × 102 | 2.06 × 102 | 1.95 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 1.42 × 101 | 1.45 × 10−13 | 7.34 × 100 | 3.58 × 10−2 | 9.47 × 100 | 0.00 × 100 | 4.35 × 100 | 1.65 × 100 | 0.00 × 100 | 1.71 × 100 | 1.49 × 101 | 0.00 × 100 | 0.00 × 100 | |

| F26 | Min | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 | 1.03 × 102 | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 | 1.01 × 102 | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 |

| Ave | 1.00 × 102 | 1.00 × 102 | 1.01 × 102 | 1.06 × 102 | 1.04 × 102 | 1.00 × 102 | 1.01 × 102 | 1.01 × 102 | 1.01 × 102 | 1.03 × 102 | 1.00 × 102 | 1.00 × 102 | 1.00 × 102 | |

| Std | 2.14 × 10−1 | 5.78 × 10−2 | 1.99 × 10−1 | 4.16 × 100 | 1.81 × 101 | 2.44 × 10−2 | 7.24 × 10−1 | 5.85 × 10−1 | 3.59 × 10−1 | 1.32 × 100 | 5.89 × 10−2 | 1.53 × 10−1 | 2.57 × 10−2 | |

| F27 | Min | 5.00 × 100 | 5.00 × 100 | 8.29 × 100 | 2.04 × 102 | 1.24 × 101 | 5.00 × 100 | 1.15 × 101 | 1.22 × 102 | 2.10 × 102 | 1.58 × 102 | 7.29 × 100 | 5.00 × 100 | 5.00 × 100 |

| Ave | 3.17 × 102 | 3.37 × 102 | 3.90 × 102 | 2.04 × 102 | 3.30 × 102 | 1.48 × 102 | 3.58 × 102 | 4.14 × 102 | 3.96 × 102 | 4.25 × 102 | 3.58 × 102 | 1.83 × 102 | 6.43 × 101 | |

| Std | 1.59 × 102 | 1.36 × 102 | 7.22 × 101 | 2.89 × 10−14 | 1.53 × 102 | 8.77 × 101 | 1.54 × 102 | 8.54 × 101 | 1.06 × 102 | 7.71 × 101 | 1.21 × 102 | 5.35 × 101 | 9.04 × 101 | |

| F28 | Min | 3.78 × 102 | 3.69 × 102 | 2.00 × 102 | 2.24 × 102 | 4.02 × 102 | 2.00 × 102 | 3.07 × 102 | 3.92 × 102 | 4.17 × 102 | 7.47 × 102 | 3.73 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 5.09 × 102 | 3.83 × 102 | 7.27 × 102 | 2.24 × 102 | 5.16 × 102 | 2.00 × 102 | 3.36 × 102 | 6.00 × 102 | 5.03 × 102 | 1.10 × 103 | 4.67 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 9.32 × 101 | 2.68 × 101 | 1.79 × 102 | 5.78 × 10−14 | 7.79 × 101 | 0.00 × 100 | 3.06 × 101 | 1.71 × 102 | 7.55 × 101 | 2.18 × 102 | 8.15 × 101 | 0.00 × 100 | 0.00 × 100 | |

| F29 | Min | 4.36 × 106 | 4.36 × 106 | 4.36 × 106 | 3.23 × 106 | 5.51 × 106 | 2.02 × 102 | 2.07 × 102 | 5.54 × 106 | 5.61 × 106 | 7.79 × 107 | 4.58 × 106 | 2.02 × 102 | 2.02 × 102 |

| Ave | 4.92 × 106 | 4.60 × 106 | 1.21 × 107 | 1.16 × 107 | 8.80 × 106 | 2.02 × 102 | 2.83 × 102 | 1.89 × 107 | 6.88 × 106 | 1.83 × 108 | 5.83 × 106 | 2.02 × 102 | 2.02 × 102 | |

| Std | 4.60 × 105 | 3.93 × 105 | 7.93 × 106 | 1.92 × 106 | 3.51 × 106 | 0.00 × 100 | 8.13 × 101 | 9.27 × 106 | 1.16 × 106 | 7.49 × 107 | 8.20 × 105 | 0.00 × 100 | 0.00 × 100 | |

| F30 | Min | 2.29 × 103 | 1.71 × 103 | 3.07 × 103 | 1.20 × 106 | 2.03 × 102 | 2.03 × 102 | 2.23 × 102 | 6.28 × 105 | 2.03 × 102 | 4.09 × 107 | 4.67 × 103 | 2.03 × 102 | 2.03 × 102 |

| Ave | 2.01 × 104 | 1.31 × 104 | 6.04 × 106 | 1.71 × 107 | 7.43 × 105 | 1.15 × 104 | 9.28 × 102 | 7.88 × 107 | 7.41 × 105 | 2.93 × 109 | 4.79 × 104 | 2.03 × 102 | 2.03 × 102 | |

| Std | 2.93 × 104 | 2.00 × 104 | 1.76 × 107 | 6.27 × 106 | 1.77 × 106 | 2.72 × 104 | 1.09 × 103 | 9.21 × 107 | 7.05 × 105 | 6.41 × 109 | 5.41 × 104 | 2.89 × 10−14 | 2.89 × 10−14 | |

| F. ave-rank | 5.07 | 3.93 | 8.57 | 11.87 | 7.68 | 3.50 | 7.48 | 9.43 | 9.37 | 11.83 | 5.67 | 4.48 | 2.12 | |
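
The "F. ave-rank" row presumably reports, for each algorithm, the mean of its per-function ranks, with a lower value indicating better overall performance. A minimal sketch of such a computation is given below. It assumes that ranks are assigned from the Ave values and that tied algorithms share the mean of the tied positions; neither assumption is confirmed by the table itself. The function name `average_ranks` is hypothetical, and the example uses only three Ave entries (F4, F6, F10) copied from the rows above for DE, COA, and ICOA, so the resulting ranks are illustrative and do not reproduce the 13-algorithm values in the table.

```python
def average_ranks(scores):
    """Mean rank per algorithm over all functions (lower score = better rank).

    scores: dict mapping algorithm name -> list of per-function values.
    Tied values share the mean of the positions they occupy (assumption).
    """
    names = list(scores)
    n_funcs = len(next(iter(scores.values())))
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ordered = sorted(names, key=lambda name: scores[name][f])
        i = 0
        while i < len(ordered):
            # Extend j over the block of algorithms tied with position i.
            j = i
            while (j + 1 < len(ordered)
                   and scores[ordered[j + 1]][f] == scores[ordered[i]][f]):
                j += 1
            shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
            for name in ordered[i:j + 1]:
                totals[name] += shared
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


# Ave values for F4, F6, F10 taken from the table rows above (three algorithms only).
scores = {
    "DE":   [29.3, 2.55, 58.0],
    "COA":  [19.3, 3.63, 422.0],
    "ICOA": [18.3, 2.27, 967.0],
}
ranks = average_ranks(scores)
for name, value in sorted(ranks.items(), key=lambda item: item[1]):
    print(f"{name}: {value:.2f}")
```

In this reduced example ICOA ranks first on F4 and F6 and last on F10, which illustrates how a single weak function raises the average rank without changing the overall ordering.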

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | Min | 1.63 × 105 | 1.39 × 105 | 4.20 × 105 | 1.09 × 109 | 8.16 × 107 | 1.23 × 105 | 2.15 × 108 | 1.23 × 108 | 2.66 × 108 | 1.50 × 109 | 3.09 × 107 | 2.30 × 105 | 1.56 × 105 |

| Ave | 1.29 × 106 | 6.62 × 105 | 3.08 × 106 | 1.77 × 109 | 2.90 × 108 | 7.25 × 105 | 6.27 × 108 | 4.45 × 108 | 4.26 × 108 | 3.31 × 109 | 6.17 × 107 | 9.73 × 105 | 5.26 × 105 | |

| Std | 1.18 × 106 | 4.54 × 105 | 2.76 × 106 | 2.36 × 108 | 1.13 × 108 | 3.76 × 105 | 1.95 × 108 | 1.74 × 108 | 8.46 × 107 | 9.37 × 108 | 2.25 × 107 | 6.49 × 105 | 3.23 × 105 | |

| F2 | Min | 1.27 × 100 | 1.11 × 100 | 3.08 × 100 | 6.48 × 1010 | 1.09 × 1010 | 8.73 × 101 | 4.24 × 1010 | 1.29 × 1010 | 2.25 × 1010 | 1.04 × 1011 | 1.66 × 109 | 1.13 × 102 | 9.70 × 10−1 |

| Ave | 2.73 × 105 | 1.68 × 104 | 1.13 × 104 | 7.96 × 1010 | 3.16 × 1010 | 1.62 × 104 | 5.77 × 1010 | 2.17 × 1010 | 3.05 × 1010 | 1.32 × 1011 | 2.79 × 109 | 1.62 × 104 | 9.55 × 103 | |

| Std | 7.92 × 105 | 1.44 × 104 | 1.41 × 104 | 5.74 × 109 | 8.77 × 109 | 1.18 × 104 | 8.36 × 109 | 5.54 × 109 | 4.01 × 109 | 1.40 × 1010 | 6.33 × 108 | 1.25 × 104 | 1.31 × 104 | |

| F3 | Min | 4.51 × 10−2 | 2.54 × 100 | 6.99 × 100 | 1.02 × 105 | 3.13 × 104 | 1.64 × 101 | 3.66 × 104 | 3.90 × 104 | 6.24 × 104 | 2.82 × 105 | 7.25 × 103 | 4.67 × 101 | 3.63 × 10−2 |

| Ave | 5.15 × 100 | 9.29 × 100 | 1.64 × 103 | 5.05 × 106 | 4.88 × 104 | 7.16 × 102 | 5.90 × 104 | 6.99 × 104 | 7.10 × 104 | 2.69 × 106 | 1.18 × 104 | 3.20 × 102 | 1.81 × 100 | |

| Std | 1.13 × 101 | 6.01 × 100 | 2.68 × 103 | 7.66 × 106 | 8.61 × 103 | 5.26 × 102 | 8.99 × 103 | 2.05 × 104 | 5.18 × 103 | 3.39 × 106 | 1.94 × 103 | 3.09 × 102 | 4.25 × 100 | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F4 | Min | 6.53 × 101 | 5.47 × 100 | 8.06 × 101 | 8.55 × 103 | 6.56 × 102 | 1.67 × 10−1 | 4.04 × 103 | 7.41 × 102 | 9.21 × 102 | 5.89 × 103 | 2.02 × 102 | 8.27 × 100 | 1.55 × 10−3 |

| Ave | 1.07 × 102 | 4.23 × 106 | 1.39 × 102 | 1.49 × 104 | 2.36 × 103 | 7.62 × 101 | 8.21 × 103 | 2.56 × 103 | 1.28 × 103 | 1.10 × 104 | 3.04 × 102 | 8.60 × 101 | 5.88 × 101 | |

| Std | 2.69 × 101 | 1.61 × 107 | 2.73 × 101 | 2.84 × 103 | 1.20 × 103 | 2.41 × 101 | 2.17 × 103 | 1.12 × 103 | 2.36 × 102 | 2.54 × 103 | 6.57 × 101 | 2.91 × 101 | 2.91 × 101 | |

| F5 | Min | 2.01 × 101 | 2.03 × 101 | 2.00 × 101 | 2.11 × 101 | 2.01 × 101 | 2.00 × 101 | 2.04 × 101 | 2.00 × 101 | 2.08 × 101 | 2.07 × 101 | 2.08 × 101 | 2.00 × 101 | 2.00 × 101 |

| Ave | 2.08 × 101 | 2.07 × 101 | 2.02 × 101 | 2.13 × 101 | 2.04 × 101 | 2.01 × 101 | 2.06 × 101 | 2.04 × 101 | 2.09 × 101 | 2.10 × 101 | 2.09 × 101 | 2.01 × 101 | 2.00 × 101 | |

| Std | 2.11 × 10−1 | 1.93 × 10−1 | 2.70 × 10−1 | 7.94 × 10−2 | 1.32 × 10−1 | 1.24 × 10−1 | 1.01 × 10−1 | 5.77 × 10−1 | 6.65 × 10−2 | 9.73 × 10−2 | 6.75 × 10−2 | 1.03 × 10−1 | 2.65 × 10−2 | |

| F6 | Min | 1.82 × 101 | 1.16 × 101 | 3.45 × 101 | 4.16 × 101 | 2.48 × 101 | 1.26 × 101 | 2.98 × 101 | 1.94 × 101 | 3.02 × 101 | 3.41 × 101 | 2.11 × 101 | 1.64 × 101 | 1.12 × 101 |

| Ave | 2.59 × 101 | 2.23 × 101 | 4.03 × 101 | 4.51 × 101 | 3.07 × 101 | 2.01 × 101 | 3.40 × 101 | 2.66 × 101 | 3.15 × 101 | 3.71 × 101 | 2.51 × 101 | 2.23 × 101 | 1.62 × 101 | |

| Std | 3.28 × 100 | 9.64 × 100 | 2.08 × 100 | 1.61 × 100 | 2.19 × 100 | 3.81 × 100 | 1.99 × 100 | 3.74 × 100 | 7.77 × 10−1 | 2.12 × 100 | 2.36 × 100 | 2.97 × 100 | 2.75 × 100 | |

| F7 | Min | 3.35 × 10−3 | 6.01 × 10−8 | 1.62 × 10−8 | 6.09 × 102 | 1.45 × 102 | 7.05 × 10−13 | 3.33 × 102 | 6.53 × 101 | 1.70 × 102 | 2.49 × 102 | 1.76 × 101 | 1.56 × 10−3 | 7.77 × 10−16 |

| Ave | 5.66 × 10−1 | 1.35 × 10−2 | 2.90 × 10−1 | 7.56 × 102 | 2.83 × 102 | 2.25 × 10−2 | 5.64 × 102 | 2.00 × 102 | 2.32 × 102 | 3.44 × 102 | 2.59 × 101 | 7.77 × 10−2 | 1.25 × 10−2 | |

| Std | 6.48 × 10−1 | 1.58 × 10−2 | 8.47 × 10−1 | 6.26 × 101 | 8.44 × 101 | 3.11 × 10−2 | 9.04 × 101 | 5.97 × 101 | 3.14 × 101 | 5.55 × 101 | 4.44 × 100 | 8.90 × 10−2 | 1.84 × 10−2 | |

| F8 | Min | 5.37 × 101 | 1.03 × 101 | 8.76 × 101 | 4.16 × 102 | 1.41 × 102 | 1.09 × 101 | 1.91 × 102 | 5.36 × 101 | 2.30 × 102 | 1.88 × 102 | 9.15 × 101 | 5.09 × 101 | 9.95 × 10−1 |

| Ave | 1.09 × 102 | 2.50 × 101 | 2.02 × 102 | 4.61 × 102 | 2.03 × 102 | 3.22 × 101 | 2.41 × 102 | 9.00 × 101 | 2.69 × 102 | 2.44 × 102 | 1.61 × 102 | 1.13 × 102 | 2.47 × 101 | |

| Std | 2.75 × 101 | 1.12 × 101 | 5.17 × 101 | 2.08 × 101 | 2.73 × 101 | 1.24 × 101 | 1.97 × 101 | 2.14 × 101 | 1.49 × 101 | 2.71 × 101 | 2.47 × 101 | 3.56 × 101 | 1.48 × 101 | |

| F9 | Min | 8.66 × 101 | 4.85 × 101 | 1.44 × 102 | 3.74 × 102 | 1.84 × 102 | 9.75 × 101 | 2.01 × 102 | 1.10 × 102 | 2.54 × 102 | 1.94 × 102 | 1.72 × 102 | 1.02 × 102 | 4.58 × 101 |

| Ave | 1.63 × 102 | 1.55 × 102 | 2.75 × 102 | 4.11 × 102 | 2.29 × 102 | 1.61 × 102 | 2.59 × 102 | 1.57 × 102 | 2.81 × 102 | 2.64 × 102 | 2.11 × 102 | 1.79 × 102 | 1.21 × 102 | |

| Std | 4.48 × 101 | 1.70 × 102 | 7.15 × 101 | 1.78 × 101 | 2.48 × 101 | 3.31 × 101 | 3.02 × 101 | 3.16 × 101 | 1.36 × 101 | 2.45 × 101 | 2.02 × 101 | 2.00 × 101 | 2.75 × 101 | |

| F10 | Min | 7.14 × 102 | 1.11 × 102 | 2.93 × 103 | 7.90 × 103 | 3.47 × 103 | 3.16 × 101 | 4.22 × 103 | 1.04 × 103 | 5.53 × 103 | 6.52 × 103 | 2.22 × 103 | 1.25 × 102 | 1.14 × 101 |

| Ave | 1.63 × 103 | 5.68 × 102 | 4.35 × 103 | 8.98 × 103 | 4.34 × 103 | 5.21 × 102 | 5.42 × 103 | 2.01 × 103 | 5.90 × 103 | 7.64 × 103 | 3.96 × 103 | 1.15 × 103 | 3.01 × 102 | |

| Std | 5.22 × 102 | 2.79 × 102 | 7.15 × 102 | 4.86 × 102 | 5.08 × 102 | 3.03 × 102 | 5.53 × 102 | 4.38 × 102 | 1.96 × 102 | 4.46 × 102 | 8.39 × 102 | 8.11 × 102 | 1.89 × 102 | |

| F11 | Min | 2.06 × 103 | 2.11 × 103 | 3.09 × 103 | 7.85 × 103 | 2.95 × 103 | 2.55 × 103 | 4.74 × 103 | 2.82 × 103 | 5.56 × 103 | 7.25 × 103 | 4.59 × 103 | 2.90 × 103 | 1.87 × 103 |

| Ave | 3.14 × 103 | 4.58 × 103 | 5.64 × 103 | 9.11 × 103 | 4.88 × 103 | 3.55 × 103 | 5.87 × 103 | 4.45 × 103 | 6.19 × 103 | 7.91 × 103 | 6.06 × 103 | 4.44 × 103 | 3.01 × 103 | |

| Std | 6.13 × 102 | 1.80 × 103 | 1.23 × 103 | 4.41 × 102 | 7.42 × 102 | 6.08 × 102 | 5.24 × 102 | 9.78 × 102 | 4.39 × 102 | 3.63 × 102 | 6.12 × 102 | 5.71 × 102 | 5.07 × 102 | |

| F12 | Min | 1.54 × 10−1 | 1.77 × 10−1 | 1.48 × 100 | 3.20 × 100 | 6.18 × 10−1 | 3.07 × 10−2 | 2.01 × 10−1 | 3.70 × 10−1 | 1.16 × 100 | 1.38 × 100 | 1.13 × 100 | 3.31 × 10−1 | 1.34 × 10−1 |

| Ave | 5.12 × 10−1 | 1.43 × 100 | 2.37 × 100 | 4.82 × 100 | 1.02 × 100 | 1.02 × 10−1 | 1.26 × 100 | 1.61 × 100 | 1.71 × 100 | 3.01 × 100 | 2.26 × 100 | 1.03 × 100 | 5.21 × 10−1 | |

| Std | 2.44 × 10−1 | 8.31 × 10−1 | 4.71 × 10−1 | 8.11 × 10−1 | 2.56 × 10−1 | 7.07 × 10−2 | 4.55 × 10−1 | 1.35 × 100 | 2.00 × 10−1 | 6.91 × 10−1 | 3.45 × 10−1 | 3.73 × 10−1 | 2.26 × 10−1 | |

| F13 | Min | 4.55 × 10−1 | 3.53 × 10−1 | 4.06 × 10−1 | 6.88 × 100 | 3.19 × 100 | 2.38 × 10−1 | 5.16 × 100 | 6.44 × 10−1 | 3.13 × 100 | 4.06 × 100 | 5.39 × 10−1 | 3.28 × 10−1 | 3.02 × 10−1 |

| Ave | 6.99 × 10−1 | 5.33 × 10−1 | 6.43 × 10−1 | 7.69 × 100 | 3.97 × 100 | 3.66 × 10−1 | 6.19 × 100 | 3.20 × 100 | 3.64 × 100 | 5.03 × 100 | 7.53 × 10−1 | 5.55 × 10−1 | 5.49 × 10−1 | |

| Std | 1.54 × 10−1 | 8.03 × 10−2 | 1.09 × 10−1 | 4.20 × 10−1 | 5.41 × 10−1 | 7.07 × 10−2 | 6.00 × 10−1 | 1.03 × 100 | 2.61 × 10−1 | 4.99 × 10−1 | 1.07 × 10−1 | 1.62 × 10−1 | 1.05 × 10−1 | |

| F14 | Min | 1.72 × 10−1 | 2.07 × 10−1 | 2.15 × 10−1 | 1.54 × 102 | 4.23 × 101 | 1.73 × 10−1 | 1.29 × 102 | 2.87 × 101 | 5.78 × 101 | 4.39 × 101 | 4.54 × 10−1 | 2.42 × 10−1 | 1.46 × 10−1 |

| Ave | 5.17 × 10−1 | 3.71 × 10−1 | 4.26 × 10−1 | 2.21 × 102 | 7.68 × 101 | 4.86 × 10−1 | 1.68 × 102 | 5.05 × 101 | 6.88 × 101 | 7.98 × 101 | 3.49 × 100 | 5.01 × 10−1 | 3.37 × 10−1 | |

| Std | 3.05 × 10−1 | 1.39 × 10−1 | 2.87 × 10−1 | 2.79 × 101 | 1.77 × 101 | 1.72 × 10−1 | 2.04 × 101 | 1.51 × 101 | 7.25 × 100 | 1.71 × 101 | 2.52 × 100 | 2.98 × 10−1 | 1.36 × 10−1 | |

| F15 | Min | 1.24 × 101 | 7.40 × 100 | 6.71 × 102 | 2.63 × 105 | 6.86 × 102 | 2.38 × 100 | 1.57 × 104 | 2.28 × 103 | 3.68 × 103 | 2.30 × 106 | 2.75 × 101 | 7.11 × 100 | 5.81 × 100 |

| Ave | 3.43 × 101 | 1.56 × 101 | 1.94 × 103 | 5.29 × 105 | 1.42 × 104 | 4.70 × 100 | 8.44 × 104 | 1.84 × 104 | 1.27 × 104 | 1.85 × 107 | 4.03 × 101 | 1.65 × 101 | 1.08 × 101 | |

| Std | 1.61 × 101 | 2.15 × 100 | 1.00 × 103 | 2.01 × 105 | 1.53 × 104 | 1.31 × 100 | 5.25 × 104 | 1.57 × 104 | 6.03 × 103 | 1.00 × 107 | 9.14 × 100 | 7.45 × 100 | 3.48 × 100 | |

| F16 | Min | 1.02 × 101 | 9.37 × 100 | 1.14 × 101 | 1.34 × 101 | 1.17 × 101 | 9.96 × 100 | 1.18 × 101 | 1.22 × 101 | 1.23 × 101 | 1.27 × 101 | 1.18 × 101 | 9.86 × 100 | 9.31 × 100 |

| Ave | 1.12 × 101 | 1.19 × 101 | 1.27 × 101 | 1.41 × 101 | 1.23 × 101 | 1.15 × 101 | 1.23 × 101 | 1.31 × 101 | 1.28 × 101 | 1.33 × 101 | 1.21 × 101 | 1.09 × 101 | 1.07 × 101 | |

| Std | 6.08 × 10−1 | 1.05 × 100 | 5.41 × 10−1 | 2.61 × 10−1 | 3.26 × 10−1 | 4.45 × 10−1 | 3.02 × 10−1 | 5.42 × 10−1 | 2.32 × 10−1 | 3.69 × 10−1 | 2.24 × 10−1 | 5.00 × 10−1 | 6.65 × 10−1 | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F17 | Min | 1.83 × 104 | 1.59 × 104 | 3.11 × 104 | 1.45 × 108 | 1.10 × 106 | 2.77 × 104 | 2.65 × 106 | 8.80 × 106 | 3.49 × 106 | 8.15 × 107 | 3.36 × 105 | 3.34 × 104 | 1.45 × 104 |

| Ave | 1.44 × 105 | 8.23 × 104 | 3.49 × 105 | 3.56 × 108 | 1.14 × 107 | 9.28 × 104 | 1.46 × 107 | 5.37 × 107 | 1.32 × 107 | 4.48 × 108 | 1.57 × 106 | 1.26 × 105 | 4.05 × 104 | |

| Std | 2.36 × 105 | 6.24 × 104 | 3.50 × 105 | 1.38 × 108 | 1.34 × 107 | 3.45 × 104 | 1.13 × 107 | 5.54 × 107 | 8.33 × 106 | 2.10 × 108 | 6.22 × 105 | 1.44 × 105 | 1.73 × 104 | |

| F18 | Min | 6.76 × 104 | 3.71 × 104 | 4.29 × 104 | 4.81 × 109 | 5.33 × 104 | 6.81 × 104 | 2.14 × 107 | 2.52 × 105 | 7.79 × 106 | 4.32 × 109 | 1.01 × 107 | 6.64 × 104 | 3.65 × 104 |

| Ave | 1.04 × 105 | 8.18 × 104 | 1.06 × 105 | 9.45 × 109 | 6.12 × 107 | 1.08 × 105 | 9.51 × 108 | 4.31 × 107 | 4.33 × 107 | 1.02 × 1010 | 3.81 × 107 | 1.12 × 105 | 7.81 × 104 | |

| Std | 2.66 × 104 | 2.65 × 104 | 3.41 × 104 | 2.73 × 109 | 1.50 × 108 | 2.47 × 104 | 8.51 × 108 | 9.94 × 107 | 2.05 × 107 | 3.66 × 109 | 1.47 × 107 | 3.08 × 104 | 2.63 × 104 | |

| F19 | Min | 1.35 × 101 | 2.01 × 101 | 3.86 × 101 | 1.71 × 108 | 2.58 × 101 | 1.10 × 101 | 4.90 × 102 | 1.62 × 103 | 3.56 × 105 | 8.75 × 108 | 1.89 × 103 | 1.67 × 101 | 1.74 × 101 |

| Ave | 3.58 × 102 | 2.41 × 101 | 1.81 × 103 | 1.51 × 109 | 1.53 × 105 | 5.15 × 101 | 9.25 × 106 | 8.71 × 105 | 2.42 × 106 | 5.00 × 109 | 1.28 × 105 | 1.38 × 102 | 1.29 × 102 | |

| Std | 9.96 × 102 | 3.15 × 100 | 2.97 × 103 | 7.43 × 108 | 6.62 × 105 | 8.74 × 101 | 1.91 × 107 | 2.49 × 106 | 2.65 × 106 | 2.56 × 109 | 1.01 × 105 | 4.57 × 102 | 2.75 × 102 | |

| F20 | Min | 2.64 × 104 | 4.76 × 103 | 2.52 × 104 | 6.06 × 1012 | 8.98 × 104 | 1.88 × 104 | 1.40 × 108 | 4.62 × 108 | 2.60 × 109 | 2.20 × 1014 | 5.67 × 105 | 2.26 × 104 | 4.56 × 103 |

| Ave | 8.71 × 104 | 4.57 × 104 | 8.79 × 104 | 1.47 × 1015 | 1.25 × 1010 | 4.93 × 104 | 6.04 × 1012 | 7.15 × 1011 | 1.14 × 1010 | 1.15 × 1016 | 1.49 × 107 | 9.23 × 104 | 3.50 × 104 | |

| Std | 4.18 × 104 | 6.64 × 104 | 2.77 × 104 | 1.18 × 1015 | 2.78 × 1010 | 2.04 × 104 | 1.64 × 1013 | 1.81 × 1012 | 9.02 × 109 | 1.40 × 1016 | 1.81 × 107 | 5.41 × 104 | 2.03 × 104 | |

| F21 | Min | 3.91 × 103 | 3.92 × 103 | 8.42 × 103 | 1.02 × 109 | 3.10 × 106 | 1.25 × 104 | 2.34 × 107 | 6.75 × 106 | 3.54 × 107 | 1.42 × 109 | 8.27 × 105 | 1.31 × 104 | 3.81 × 103 |

| Ave | 2.52 × 104 | 2.52 × 104 | 4.33 × 104 | 3.44 × 109 | 3.89 × 107 | 3.50 × 104 | 1.28 × 108 | 5.84 × 107 | 8.81 × 107 | 3.85 × 109 | 2.49 × 106 | 4.58 × 104 | 2.06 × 104 | |

| Std | 1.17 × 104 | 1.45 × 104 | 2.56 × 104 | 1.49 × 109 | 2.85 × 107 | 1.22 × 104 | 9.09 × 107 | 5.13 × 107 | 3.31 × 107 | 1.75 × 109 | 9.68 × 105 | 1.99 × 104 | 1.05 × 104 | |

| F22 | Min | 2.90 × 102 | 3.66 × 101 | 2.49 × 103 | 5.55 × 1010 | 1.92 × 103 | 3.02 × 101 | 2.11 × 103 | 3.28 × 103 | 7.80 × 105 | 1.33 × 1011 | 2.12 × 103 | 5.89 × 101 | 2.83 × 101 |

| Ave | 1.23 × 103 | 6.94 × 102 | 4.91 × 103 | 3.01 × 1013 | 2.85 × 103 | 4.99 × 102 | 7.25 × 107 | 4.33 × 1010 | 1.57 × 107 | 1.85 × 1014 | 8.36 × 103 | 7.14 × 102 | 3.63 × 102 | |

| Std | 7.20 × 102 | 7.41 × 102 | 1.52 × 103 | 3.86 × 1013 | 3.85 × 102 | 1.92 × 102 | 3.56 × 108 | 1.56 × 1011 | 2.25 × 107 | 2.20 × 1014 | 8.98 × 103 | 3.90 × 102 | 2.21 × 102 | |

| Func. | Index | PSO | DE | WOA | AOA | SHO | AVOA | BBOA | EVO | GJO | HCO | RUN | COA | ICOA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F23 | Min | 2.23 × 102 | 2.23 × 102 | 2.23 × 102 | 2.08 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.55 × 102 | 2.00 × 102 | 3.37 × 102 | 2.25 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 2.23 × 102 | 2.23 × 102 | 2.26 × 102 | 2.12 × 102 | 2.41 × 102 | 2.00 × 102 | 2.50 × 102 | 2.76 × 102 | 2.30 × 102 | 4.03 × 102 | 2.27 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 2.35 × 10−1 | 0.00 × 100 | 5.88 × 100 | 8.41 × 10−1 | 1.11 × 101 | 0.00 × 100 | 3.00 × 101 | 1.83 × 101 | 1.41 × 101 | 5.69 × 101 | 2.79 × 100 | 0.00 × 100 | 0.00 × 100 | |

| F24 | Min | 2.23 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | 2.47 × 102 | 2.01 × 102 | 2.43 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 |

| Ave | 2.42 × 102 | 2.01 × 102 | 2.01 × 102 | 2.02 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | 2.62 × 102 | 2.01 × 102 | 2.51 × 102 | 2.01 × 102 | 2.01 × 102 | 2.01 × 102 | |

| Std | 9.22 × 100 | 0.00 × 100 | 0.00 × 100 | 2.09 × 10−1 | 0.00 × 100 | 0.00 × 100 | 0.00 × 100 | 8.34 × 100 | 0.00 × 100 | 6.06 × 100 | 3.67 × 10−11 | 0.00 × 100 | 0.00 × 100 | |

| F25 | Min | 2.06 × 102 | 2.04 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.24 × 102 | 2.00 × 102 | 2.40 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 2.17 × 102 | 2.04 × 102 | 2.12 × 102 | 2.01 × 102 | 2.00 × 102 | 2.00 × 102 | 2.00 × 102 | 2.31 × 102 | 2.00 × 102 | 2.62 × 102 | 2.15 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 9.14 × 100 | 3.14 × 10−1 | 2.12 × 101 | 8.53 × 10−2 | 0.00 × 100 | 0.00 × 100 | 0.00 × 100 | 6.49 × 100 | 0.00 × 100 | 1.49 × 101 | 5.01 × 100 | 0.00 × 100 | 0.00 × 100 | |

| F26 | Min | 1.01 × 102 | 1.00 × 102 | 1.00 × 102 | 1.10 × 102 | 1.02 × 102 | 1.00 × 102 | 1.05 × 102 | 1.03 × 102 | 1.03 × 102 | 1.14 × 102 | 1.01 × 102 | 1.00 × 102 | 1.00 × 102 |

| Ave | 1.34 × 102 | 1.00 × 102 | 1.01 × 102 | 1.93 × 102 | 1.61 × 102 | 1.00 × 102 | 1.12 × 102 | 1.51 × 102 | 1.36 × 102 | 1.50 × 102 | 1.47 × 102 | 1.73 × 102 | 1.01 × 102 | |

| Std | 4.76 × 101 | 6.83 × 10−2 | 1.65 × 100 | 2.30 × 101 | 4.83 × 101 | 9.56 × 10−2 | 1.69 × 101 | 5.07 × 101 | 4.60 × 101 | 3.60 × 101 | 5.07 × 101 | 4.48 × 101 | 1.19 × 10−1 | |

| F27 | Min | 4.02 × 102 | 3.71 × 102 | 6.26 × 102 | 2.01 × 102 | 5.26 × 102 | 2.00 × 102 | 8.74 × 102 | 9.07 × 102 | 5.78 × 102 | 9.32 × 102 | 4.38 × 102 | 2.00 × 102 | 2.00 × 102 |

| Ave | 9.46 × 102 | 4.66 × 102 | 1.45 × 103 | 2.03 × 102 | 1.02 × 103 | 2.00 × 102 | 1.25 × 103 | 1.07 × 103 | 1.16 × 103 | 1.59 × 103 | 8.87 × 102 | 2.00 × 102 | 2.00 × 102 | |

| Std | 2.05 × 102 | 6.14 × 101 | 2.33 × 102 | 4.73 × 10−1 | 2.30 × 102 | 0.00 × 100 | 8.52 × 101 | 9.54 × 101 | 1.52 × 102 | 2.45 × 102 | 1.80 × 102 | 0.00 × 100 | 0.00 × 100 | |

| F28 | Min | 1.16 × 103 | 7.77 × 102 | 2.16 × 103 | 2.06 × 102 | 2.00 × 102 | 2.00 × 102 | 9.29 × 102 | 1.16 × 103 | 2.00 × 102 | 5.29 × 103 | 1.18 × 103 | 2.00 × 102 | 2.00 × 102 |

| Ave | 1.89 × 103 | 8.38 × 102 | 3.07 × 103 | 2.09 × 102 | 3.31 × 103 | 2.00 × 102 | 1.73 × 103 | 2.26 × 103 | 4.80 × 102 | 6.49 × 103 | 1.47 × 103 | 2.00 × 102 | 2.00 × 102 | |

| Std | 5.98 × 102 | 4.11 × 101 | 5.85 × 102 | 7.67 × 10−1 | 8.82 × 102 | 0.00 × 100 | 5.34 × 102 | 6.13 × 102 | 8.11 × 102 | 7.67 × 102 | 1.38 × 102 | 0.00 × 100 | 0.00 × 100 | |

| F29 | Min | 3.05 × 103 | 1.37 × 103 | 2.08 × 102 | 1.62 × 106 | 2.08 × 102 | 2.08 × 102 | 2.08 × 102 | 1.24 × 108 | 2.08 × 102 | 1.38 × 109 | 2.71 × 106 | 2.08 × 102 | 2.08 × 102 |

| Ave | 8.13 × 106 | 6.07 × 105 | 1.41 × 107 | 1.19 × 107 | 4.15 × 106 | 2.08 × 102 | 2.95 × 107 | 3.05 × 108 | 1.16 × 108 | 4.64 × 109 | 1.23 × 107 | 2.08 × 102 | 2.08 × 102 | |

| Std | 2.04 × 107 | 3.31 × 106 | 2.47 × 107 | 2.80 × 106 | 1.75 × 107 | 0.00 × 100 | 7.93 × 107 | 1.31 × 108 | 6.21 × 107 | 2.15 × 109 | 7.15 × 106 | 0.00 × 100 | 0.00 × 100 | |

| F30 | Min | 7.91 × 104 | 6.79 × 103 | 5.76 × 104 | 7.12 × 109 | 1.22 × 108 | 2.05 × 102 | 6.18 × 105 | 5.59 × 108 | 1.55 × 108 | 5.63 × 1011 | 4.02 × 106 | 2.05 × 102 | 2.05 × 102 |

| Ave | 1.92 × 107 | 1.10 × 104 | 4.33 × 106 | 2.44 × 1011 | 4.23 × 108 | 3.14 × 104 | 6.32 × 108 | 1.48 × 1010 | 4.70 × 108 | 1.64 × 1014 | 3.51 × 107 | 9.87 × 103 | 1.87 × 104 | |

| Std | 2.70 × 107 | 2.89 × 103 | 7.42 × 106 | 8.06 × 1010 | 3.06 × 108 | 4.59 × 104 | 6.96 × 108 | 2.70 × 1010 | 3.15 × 108 | 1.80 × 1014 | 3.46 × 107 | 2.43 × 104 | 6.20 × 104 | |

| F. ave-rank | 5.43 | 3.95 | 6.85 | 11.23 | 8.00 | 2.77 | 9.47 | 8.93 | 9.00 | 12.13 | 7.40 | 4.13 | 1.70 | |
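
The pairwise comparison table that follows lists T− and T+ values for each function. In a signed-rank comparison these are the sums of the ranks of the negative and positive paired differences, and for 30 paired runs without zero differences they add up to 30 · 31 / 2 = 465, which is the total seen in most rows. The sketch below shows how such rank sums could be obtained. It assumes the difference is taken as competitor minus ICOA, so that a large T+ favours ICOA; this sign convention is inferred from the table and not stated in it. The function name `signed_rank_sums` and the input data are hypothetical, and the sketch does not attempt to reproduce the reported p-values.

```python
def signed_rank_sums(competitor, proposed):
    """Return (T_minus, T_plus) for paired samples.

    Differences are competitor - proposed (assumed convention).
    Zero differences are dropped; tied |differences| share their mean rank.
    """
    diffs = [c - p for c, p in zip(competitor, proposed) if c != p]
    order = sorted(range(len(diffs)), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Extend j over the block of equal absolute differences.
        j = i
        while (j + 1 < len(order)
               and abs(diffs[order[j + 1]]) == abs(diffs[order[i]])):
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in order[i:j + 1]:
            ranks[k] = shared
        i = j + 1
    t_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    t_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return t_minus, t_plus


# Synthetic errors for 30 paired runs in which the proposed method always wins.
proposed_errors = list(range(30))
competitor_errors = [2 * k + 100 for k in range(30)]
t_minus, t_plus = signed_rank_sums(competitor_errors, proposed_errors)
print(t_minus, t_plus)
```

When every run favours the proposed method, all 30 ranks fall on the positive side, which corresponds to the rows of the table with T− = 0 and T+ = 465.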

| Func. | PSO-ICOA: p-Value | PSO-ICOA: T− | PSO-ICOA: T+ | PSO-ICOA: W | DE-ICOA: p-Value | DE-ICOA: T− | DE-ICOA: T+ | DE-ICOA: W | WOA-ICOA: p-Value | WOA-ICOA: T− | WOA-ICOA: T+ | WOA-ICOA: W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | 6.90 × 10−1 | 150 | 315 | ≈ | 3.29 × 10−1 | 147 | 318 | ≈ | 8.37 × 10−2 | 84 | 381 | ≈ |

| F2 | 2.49 × 10−10 | 18 | 447 | + | 2.87 × 10−11 | 71 | 394 | + | 2.87 × 10−11 | 0 | 465 | + |

| F3 | 1.66 × 10−2 | 38 | 427 | + | 2.87 × 10−11 | 82 | 383 | + | 2.87 × 10−11 | 0 | 465 | + |

| F4 | 3.11 × 10−1 | 145 | 319 | ≈ | 2.62 × 10−3 | 148 | 317 | + | 9.78 × 10−2 | 119 | 346 | ≈ |

| F5 | 1.34 × 10−3 | 103 | 362 | + | 2.87 × 10−11 | 0 | 465 | + | 1.06 × 10−8 | 2 | 463 | + |

| F6 | 2.51 × 10−5 | 23 | 442 | + | 1.56 × 10−3 | 188 | 227 | + | 2.87 × 10−11 | 0 | 465 | + |

| F7 | 5.23 × 10−11 | 0 | 465 | + | 4.88 × 10−8 | 15 | 450 | + | 2.87 × 10−11 | 0 | 465 | + |

| F8 | 2.87 × 10−11 | 0 | 465 | + | 6.10 × 10−3 | 96.5 | 365.5 | + | 2.87 × 10−11 | 0 | 465 | + |

| F9 | 3.08 × 10−8 | 8 | 457 | + | 2.94 × 10−1 | 170 | 295 | ≈ | 9.44 × 10−11 | 0 | 465 | + |

| F10 | 3.01 × 10−10 | 463 | 2 | - | 3.18 × 10−11 | 465 | 0 | - | 5.41 × 10−4 | 383 | 82 | - |

| F11 | 1.69 × 10−1 | 157 | 308 | ≈ | 1.10 × 10−1 | 175 | 290 | ≈ | 3.64 × 10−10 | 6 | 459 | + |

| F12 | 7.34 × 10−1 | 253 | 212 | ≈ | 5.10 × 10−2 | 129 | 336 | ≈ | 2.87 × 10−11 | 0 | 465 | + |

| F13 | 3.51 × 10−11 | 0 | 465 | + | 1.07 × 10−4 | 42 | 423 | + | 2.87 × 10−11 | 0 | 465 | + |

| F14 | 8.37 × 10−2 | 103 | 362 | ≈ | 3.59 × 10−3 | 364 | 101 | - | 5.66 × 10−6 | 29 | 436 | + |

| F15 | 1.04 × 10−10 | 0 | 465 | + | 4.37 × 10−9 | 3 | 462 | + | 2.87 × 10−11 | 0 | 465 | + |

| F16 | 2.58 × 10−6 | 214 | 251 | + | 1.12 × 10−9 | 404 | 61 | - | 2.58 × 10−6 | 0 | 465 | + |

| F17 | 2.51 × 10−5 | 38 | 427 | + | 2.87 × 10−11 | 195 | 270 | + | 3.88 × 10−11 | 0 | 465 | + |

| F18 | 2.20 × 10−1 | 175 | 290 | ≈ | 2.87 × 10−11 | 181 | 284 | + | 2.87 × 10−11 | 0 | 465 | + |

| F19 | 3.70 × 10−6 | 23 | 442 | + | 9.67 × 10−3 | 92 | 373 | + | 7.03 × 10−11 | 0 | 465 | + |

| F20 | 5.31 × 10−8 | 14 | 451 | + | 3.18 × 10−11 | 185 | 280 | + | 2.87 × 10−11 | 0 | 465 | + |

| F21 | 2.10 × 10−8 | 19 | 446 | + | 2.87 × 10−11 | 161 | 304 | + | 7.76 × 10−11 | 0 | 465 | + |

| F22 | 1.55 × 10−6 | 37 | 428 | + | 7.32 × 10−7 | 231 | 234 | + | 7.45 × 10−3 | 175 | 290 | + |

| F23 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.13 × 10−9 | 0 | 459 | + |

| F24 | 2.40 × 10−6 | 32 | 433 | + | 5.27 × 10−6 | 129 | 336 | + | 2.05 × 10−10 | 5 | 460 | + |

| F25 | 6.56 × 10−5 | 165 | 300 | + | 2.87 × 10−11 | 0 | 465 | + | 3.75 × 10−1 | 152 | 277 | ≈ |

| F26 | 2.87 × 10−11 | 0 | 465 | + | 9.27 × 10−3 | 110 | 355 | + | 2.87 × 10−11 | 0 | 465 | + |

| F27 | 3.70 × 10−6 | 26 | 439 | + | 5.82 × 10−7 | 10 | 455 | + | 8.56 × 10−11 | 0 | 465 | + |

| F28 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 5.32 × 10−10 | 1 | 464 | + |

| F29 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F30 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| Func. | AOA-ICOA p-Value | AOA-ICOA T− | AOA-ICOA T+ | AOA-ICOA W | SHO-ICOA p-Value | SHO-ICOA T− | SHO-ICOA T+ | SHO-ICOA W | AVOA-ICOA p-Value | AVOA-ICOA T− | AVOA-ICOA T+ | AVOA-ICOA W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.01 × 10−10 | 0 | 465 | + |

| F2 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F3 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F4 | 2.87 × 10−11 | 0 | 465 | + | 9.31 × 10−10 | 11 | 454 | + | 2.46 × 10−2 | 174 | 290 | + |

| F5 | 2.87 × 10−11 | 0 | 465 | + | 4.37 × 10−9 | 4 | 461 | + | 5.43 × 10−5 | 66 | 399 | + |

| F6 | 2.87 × 10−11 | 0 | 465 | + | 1.49 × 10−8 | 8 | 457 | + | 4.75 × 10−3 | 88 | 377 | + |

| F7 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.05 × 10−2 | 113 | 352 | + |

| F8 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.27 × 10−2 | 134.5 | 327.5 | + |

| F9 | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 0 | 465 | + | 8.70 × 10−8 | 17 | 448 | + |

| F10 | 5.32 × 10−10 | 2 | 463 | + | 1.66 × 10−7 | 451 | 14 | - | 3.51 × 10−11 | 465 | 0 | - |

| F11 | 2.87 × 10−11 | 0 | 465 | + | 1.55 × 10−6 | 36 | 429 | + | 2.20 × 10−5 | 46 | 419 | + |

| F12 | 2.87 × 10−11 | 0 | 465 | + | 1.25 × 10−2 | 109 | 356 | + | 9.50 × 10−5 | 414 | 51 | - |

| F13 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.76 × 10−2 | 123 | 342 | + |

| F14 | 2.87 × 10−11 | 0 | 465 | + | 1.15 × 10−10 | 1 | 464 | + | 1.35 × 10−2 | 355 | 110 | - |

| F15 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 5.41 × 10−4 | 81 | 384 | + |

| F16 | 2.87 × 10−11 | 0 | 465 | + | 8.94 × 10−1 | 17 | 448 | ≈ | 2.98 × 10−6 | 157 | 308 | + |

| F17 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 0 | 465 | + |

| F18 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.39 × 10−10 | 1 | 464 | + |

| F19 | 2.87 × 10−11 | 0 | 465 | + | 2.33 × 10−9 | 1 | 464 | + | 6.73 × 10−4 | 78 | 387 | + |

| F20 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.88 × 10−11 | 1 | 464 | + |

| F21 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.54 × 10−10 | 0 | 465 | + |

| F22 | 2.87 × 10−11 | 0 | 465 | + | 1.48 × 10−3 | 116 | 349 | + | 1.69 × 10−1 | 202 | 263 | ≈ |

| F23 | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ | 1 | 0 | 0 | ≈ |

| F24 | 2.87 × 10−11 | 0 | 465 | + | 1.11 × 10−7 | 9 | 456 | + | 2.60 × 10−4 | 67 | 398 | + |

| F25 | 2.87 × 10−11 | 0 | 465 | + | 8.24 × 10−1 | 87 | 102 | ≈ | 1 | 0 | 0 | ≈ |

| F26 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.08 × 10−1 | 304 | 161 | ≈ |

| F27 | 2.87 × 10−11 | 0 | 465 | + | 1.06 × 10−8 | 12 | 453 | + | 3.06 × 10−5 | 78 | 387 | + |

| F28 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F29 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F30 | 2.87 × 10−11 | 0 | 465 | + | 5.32 × 10−10 | 0 | 462 | + | 1.90 × 10−3 | 0 | 329 | + |

| Func. | BBOA-ICOA p-Value | BBOA-ICOA T− | BBOA-ICOA T+ | BBOA-ICOA W | EVO-ICOA p-Value | EVO-ICOA T− | EVO-ICOA T+ | EVO-ICOA W | GJO-ICOA p-Value | GJO-ICOA T− | GJO-ICOA T+ | GJO-ICOA W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F2 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F3 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F4 | 2.87 × 10−11 | 0 | 465 | + | 1.27 × 10−10 | 1 | 464 | + | 2.87 × 10−11 | 0 | 465 | + |

| F5 | 2.10 × 10−8 | 31 | 434 | + | 5.27 × 10−6 | 42 | 423 | + | 2.87 × 10−11 | 0 | 465 | + |

| F6 | 2.13 × 10−9 | 12 | 453 | + | 4.10 × 10−4 | 80 | 385 | + | 9.44 × 10−11 | 0 | 465 | + |

| F7 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F8 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F9 | 1.04 × 10−10 | 0 | 465 | + | 2.06 × 10−5 | 42 | 423 | + | 2.87 × 10−11 | 0 | 465 | + |

| F10 | 1.56 × 10−3 | 381 | 84 | - | 2.55 × 10−9 | 458 | 7 | - | 9.27 × 10−4 | 363 | 102 | - |

| F11 | 1.31 × 10−7 | 3 | 462 | + | 8.86 × 10−9 | 20 | 445 | + | 2.87 × 10−11 | 0 | 465 | + |

| F12 | 3.21 × 10−8 | 16 | 449 | + | 4.22 × 10−5 | 35 | 430 | + | 2.87 × 10−11 | 0 | 465 | + |

| F13 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F14 | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 1 | 464 | + | 2.87 × 10−11 | 0 | 465 | + |

| F15 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F16 | 3.59 × 10−1 | 19 | 446 | ≈ | 1.21 × 10−4 | 0 | 465 | + | 5.77 × 10−11 | 0 | 465 | + |

| F17 | 5.23 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F18 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F19 | 6.04 × 10−2 | 152 | 313 | ≈ | 6.37 × 10−11 | 1 | 464 | + | 3.88 × 10−11 | 0 | 465 | + |

| F20 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F21 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F22 | 1.41 × 10−3 | 154 | 311 | + | 1.94 × 10−9 | 6 | 459 | + | 6.73 × 10−4 | 92 | 373 | + |

| F23 | 1 | 0 | 0 | ≈ | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F24 | 6.37 × 10−11 | 1 | 464 | + | 4.40 × 10−10 | 6 | 459 | + | 2.87 × 10−11 | 0 | 465 | + |

| F25 | 7.60 × 10−2 | 87 | 242 | ≈ | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F26 | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F27 | 2.13 × 10−9 | 5 | 460 | + | 1.69 × 10−10 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F28 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F29 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F30 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.13 × 10−9 | 0 | 459 | + |

| Func. | HCO-ICOA p-Value | HCO-ICOA T− | HCO-ICOA T+ | HCO-ICOA W | RUN-ICOA p-Value | RUN-ICOA T− | RUN-ICOA T+ | RUN-ICOA W | COA-ICOA p-Value | COA-ICOA T− | COA-ICOA T+ | COA-ICOA W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.12 × 10−9 | 2 | 463 | + |

| F2 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 0 | 465 | + |

| F3 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + |

| F4 | 2.87 × 10−11 | 0 | 465 | + | 8.79 × 10−4 | 134 | 331 | + | 4.44 × 10−2 | 175 | 290 | + |

| F5 | 2.87 × 10−11 | 0 | 465 | + | 5.32 × 10−10 | 30 | 435 | + | 8.02 × 10−1 | 228 | 237 | ≈ |

| F6 | 4.73 × 10−11 | 1 | 464 | + | 2.76 × 10−4 | 77 | 388 | + | 1.01 × 10−2 | 104 | 361 | + |

| F7 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.25 × 10−2 | 102 | 363 | + |

| F8 | 2.87 × 10−11 | 0 | 465 | + | 3.18 × 10−11 | 0 | 465 | + | 1.73 × 10−2 | 110 | 355 | + |

| F9 | 1.27 × 10−10 | 0 | 465 | + | 2.35 × 10−5 | 68 | 397 | + | 1.44 × 10−6 | 20 | 445 | + |

| F10 | 5.22 × 10−9 | 1 | 464 | + | 4.37 × 10−9 | 455 | 10 | - | 6.78 × 10−7 | 435 | 30 | - |

| F11 | 2.87 × 10−11 | 0 | 465 | + | 1.13 × 10−5 | 37 | 428 | + | 6.82 × 10−3 | 107 | 358 | + |

| F12 | 2.87 × 10−11 | 0 | 465 | + | 3.51 × 10−11 | 0 | 465 | + | 6.04 × 10−4 | 79 | 386 | + |

| F13 | 3.88 × 10−11 | 0 | 465 | + | 9.44 × 10−11 | 2 | 463 | + | 8.02 × 10−8 | 13 | 452 | + |

| F14 | 3.18 × 10−11 | 0 | 465 | + | 1.43 × 10−1 | 147 | 318 | ≈ | 3.33 × 10−2 | 136 | 329 | + |

| F15 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.02 × 10−7 | 3 | 462 | + |

| F16 | 1.54 × 10−4 | 4 | 461 | + | 1.05 × 10−5 | 172 | 293 | + | 5.31 × 10−8 | 291 | 174 | - |

| F17 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.69 × 10−10 | 1 | 464 | + |

| F18 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.71 × 10−5 | 67 | 398 | + |

| F19 | 2.87 × 10−11 | 0 | 465 | + | 1.12 × 10−9 | 7 | 458 | + | 1.84 × 10−4 | 66 | 399 | + |

| F20 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 3.18 × 10−11 | 0 | 465 | + |

| F21 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.35 × 10−9 | 26 | 439 | + |

| F22 | 2.87 × 10−11 | 0 | 465 | + | 5.28 × 10−2 | 264 | 201 | ≈ | 1.90 × 10−3 | 117 | 348 | + |

| F23 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F24 | 1.54 × 10−10 | 2 | 463 | + | 1.25 × 10−2 | 134 | 331 | + | 3.31 × 10−10 | 1 | 464 | + |

| F25 | 2.87 × 10−11 | 0 | 465 | + | 1.07 × 10−6 | 114 | 351 | + | 1 | 0 | 0 | ≈ |

| F26 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1.44 × 10−6 | 31 | 434 | + |

| F27 | 7.03 × 10−11 | 1 | 464 | + | 7.04 × 10−10 | 0 | 465 | + | 1.23 × 10−9 | 10 | 455 | + |

| F28 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F29 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |

| F30 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 1 | 0 | 0 | ≈ |
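
The T− and T+ columns above are the rank sums of the Wilcoxon signed-rank test. As a minimal sketch of how such sums can be obtained from paired run results, the Python function below ranks the absolute paired differences and adds up the ranks by sign. The function name `signed_rank_sums`, the sign convention (competitor minus ICOA, so that a large T+ favours ICOA on a minimization problem), and the dropping of zero differences are assumptions made for illustration, not the authors' code. The sketch does not reproduce the p-values listed in the tables.

```python
def signed_rank_sums(competitor, icoa):
    """Return (t_minus, t_plus) for two equal-length lists of paired results.

    Assumed convention: d = competitor - icoa, zero differences are dropped,
    and tied |d| values share their average rank.
    """
    d = [c - i for c, i in zip(competitor, icoa) if c != i]
    n = len(d)
    # Indices of d sorted by absolute difference (ascending).
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        # Extend the block while the next |d| ties with the current one.
        while end + 1 < n and abs(d[order[end + 1]]) == abs(d[order[start]]):
            end += 1
        avg_rank = (start + end) / 2 + 1  # mean of 1-based ranks in the block
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        start = end + 1
    t_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    t_minus = sum(r for r, v in zip(ranks, d) if v < 0)
    return t_minus, t_plus


# Hypothetical data: 30 runs in which the competitor is always worse.
competitor_runs = [float(k + 1) for k in range(30)]
icoa_runs = [0.0] * 30
print(signed_rank_sums(competitor_runs, icoa_runs))  # (0, 465.0)
```

With n nonzero paired differences the two sums add up to n(n+1)/2, which is 465 for n = 30, the total seen in most rows above. When every paired difference is zero, both sums are 0, which is consistent with the rows that list 0 for both T− and T+.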

| Function Type | PSO-ICOA | DE-ICOA | WOA-ICOA | AOA-ICOA | SHO-ICOA | AVOA-ICOA |
|---|---|---|---|---|---|---|

| Unimodal | 2/1/0 | 2/1/0 | 2/1/0 | 3/0/0 | 3/0/0 | 3/0/0 |

| Multimodal | 8/4/1 | 7/3/3 | 11/1/1 | 13/0/0 | 11/1/1 | 10/0/3 |

| Hybrid | 5/1/0 | 6/0/0 | 6/0/0 | 6/0/0 | 6/0/0 | 5/1/0 |

| Composition | 8/0/0 | 8/0/0 | 7/1/0 | 8/0/0 | 6/2/0 | 3/5/0 |

| Total | 23/6/1 | 23/4/3 | 26/3/1 | 30/0/0 | 26/3/1 | 21/6/3 |

| Function Type | BBOA-ICOA | EVO-ICOA | GJO-ICOA | HCO-ICOA | RUN-ICOA | COA-ICOA |
|---|---|---|---|---|---|---|

| Unimodal | 3/0/0 | 3/0/0 | 3/0/0 | 3/0/0 | 3/0/0 | 3/0/0 |

| Multimodal | 11/1/1 | 12/0/1 | 12/0/1 | 13/0/0 | 11/1/1 | 10/1/2 |

| Hybrid | 5/1/0 | 6/0/0 | 6/0/0 | 6/0/0 | 5/1/0 | 6/0/0 |

| Composition | 6/2/0 | 8/0/0 | 6/2/0 | 8/0/0 | 8/0/0 | 3/5/0 |

| Total | 25/4/1 | 29/0/1 | 27/2/1 | 30/0/0 | 27/2/1 | 22/6/2 |
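
The win/tie/loss entries in the summary above can be tallied from the W columns of the pairwise comparison that precedes it. The sketch below does this for the PSO-ICOA column. The helper name `tally` is hypothetical, and the F1–F3, F4–F16, F17–F22, F23–F30 split is the standard CEC-2014 grouping into unimodal, multimodal, hybrid, and composition functions.

```python
from collections import Counter

# W symbols for PSO-ICOA, F1..F30, copied from the pairwise table,
# written as one string piece per function group.
PSO_W = "≈++" + "≈+++++-≈≈+≈++" + "+≈++++" + "++++++++"

# CEC-2014 function groups as 0-based, end-exclusive index ranges.
GROUPS = {
    "Unimodal": (0, 3),
    "Multimodal": (3, 16),
    "Hybrid": (16, 22),
    "Composition": (22, 30),
}


def tally(symbols):
    """Format the counts of '+', '≈' and '-' as wins/ties/losses."""
    counts = Counter(symbols)
    return f"{counts['+']}/{counts['≈']}/{counts['-']}"


summary = {name: tally(PSO_W[a:b]) for name, (a, b) in GROUPS.items()}
summary["Total"] = tally(PSO_W)
for name, wtl in summary.items():
    print(f"{name}: {wtl}")
```

For this column the tally gives 2/1/0, 8/4/1, 5/1/0, 8/0/0 and a total of 23/6/1, matching the PSO-ICOA entries in the summary.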

| Func. | PSO-ICOA p-Value | PSO-ICOA T− | PSO-ICOA T+ | PSO-ICOA W | DE-ICOA p-Value | DE-ICOA T− | DE-ICOA T+ | DE-ICOA W | WOA-ICOA p-Value | WOA-ICOA T− | WOA-ICOA T+ | WOA-ICOA W |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | 1.35 × 10−2 | 80 | 385 | + | 2.87 × 10−11 | 181 | 284 | + | 8.87 × 10−3 | 0 | 465 | + |

| F2 | 4.58 × 10−6 | 85 | 380 | + | 2.87 × 10−11 | 145 | 320 | + | 4.84 × 10−10 | 188 | 277 | + |

| F3 | 8.79 × 10−4 | 186 | 279 | + | 2.87 × 10−11 | 42 | 423 | + | 4.29 × 10−11 | 0 | 465 | + |

| F4 | 3.15 × 10−1 | 12 | 453 | ≈ | 2.87 × 10−11 | 79 | 386 | + | 3.70 × 10−6 | 0 | 465 | + |

| F5 | 2.87 × 10−11 | 0 | 465 | + | 2.87 × 10−11 | 0 | 465 | + | 2.95 × 10−8 | 14 | 451 | + |

| F6 | 6.04 × 10−2 | 1 | 464 | ≈ | 2.87 × 10−11 | 80 | 385 | + | 3.18 × 10−11 | 0 | 465 | + |

| F7 | 3.45 × 10−6 | 16 | 449 | + | 1.27 × 10−10 | 185 | 280 | + | 2.56 × 10−2 | 34 | 431 | + |

| F8 | 2.07 × 10−4 | 0 | 465 | + | 2.87 × 10−11 | 185 | 280 | + | 8.70 × 10−8 | 0 | 465 | + |

| F9 | 1.03 × 10−3 | 45 | 420 | + | 1.66 × 10−7 | 198 | 267 | + | 1.02 × 10−7 | 2 | 463 | + |

| F10 | 3.21 × 10−6 | 0 | 465 | + | 5.23 × 10−11 | 26 | 439 | + | 2.55 × 10−9 | 0 | 465 | + |

| F11 | 4.13 × 10−2 | 187 | 278 | + | 6.90 × 10−2 | 52 | 413 | ≈ | 4.00 × 10−9 | 0 | 465 | + |

| F12 | 8.02 × 10−1 | 228 | 237 | ≈ | 1.81 × 10−5 | 34 | 431 | + | 2.87 × 10−11 | 0 | 465 | + |