1. Introduction

Legged robots have emerged as a class of robots capable of navigating diverse and challenging environments where wheeled or tracked systems often fall short [1,2]. From uneven terrain to cluttered indoor spaces, legged robots provide superior mobility by mimicking the movement patterns of biological organisms [3]. Their ability to traverse complex surfaces, such as rocky landscapes, stairs, or loose soil, makes them well suited to applications in search and rescue, space exploration, and industrial inspection [4]. However, one of the main challenges in developing robust and efficient legged robots lies in achieving smooth transitions between gait patterns and operational conditions [5,6]. Abrupt shifts in motion, whether due to changes in speed, direction, or terrain type, can lead to instability, energy inefficiency, and mechanical stress [7,8].

Legged robots rely on a variety of gaits, which are coordinated patterns of limb movement that allow them to walk, run, jump, or climb [9]. These gaits are typically selected based on environmental conditions and the desired speed or energy consumption of the robot [10]. For instance, walking gaits are preferred at low speeds on rough terrain for stability, while running gaits are more effective for high-speed movement on flat surfaces [11,12]. However, the transition between these gaits, especially in dynamic or unpredictable environments, remains a challenge. Poorly timed or abrupt transitions can compromise balance, increase energy consumption, and cause mechanical failures [10]. Achieving smooth, adaptive transitions, such as from walking to running or during surface changes, is thus vital for maintaining the robot’s balance, stability, and efficiency [10].

In biological systems, animals transition effortlessly between gaits, adjusting their movement patterns based on terrain and speed [13]. This is enabled by Central Pattern Generators (CPGs), neural circuits capable of producing rhythmic outputs without requiring continuous sensory feedback [14,15]. These circuits are found in the spinal cords of vertebrates and control repetitive locomotion patterns such as walking, swimming, or flying [16]. Inspired by this biological mechanism, researchers have applied CPG-based control systems in robotics to generate smooth and adaptive limb coordination [5]. The use of spiking neurons within CPGs further enhances the biological realism and timing precision of these control systems [17], enabling robots to better emulate the gait modulation observed in animals [18].

Spiking neural networks (SNNs) have demonstrated great potential in bio-inspired robotic control due to their event-based, low-latency communication properties [19,20,21]. However, most existing studies using SNNs for locomotion rely on large-scale networks or are restricted to simulation environments, requiring high-performance computing platforms for real-time operation [22,23,24]. These factors limit their use in practical applications, especially on low-cost, resource-constrained robotic systems.

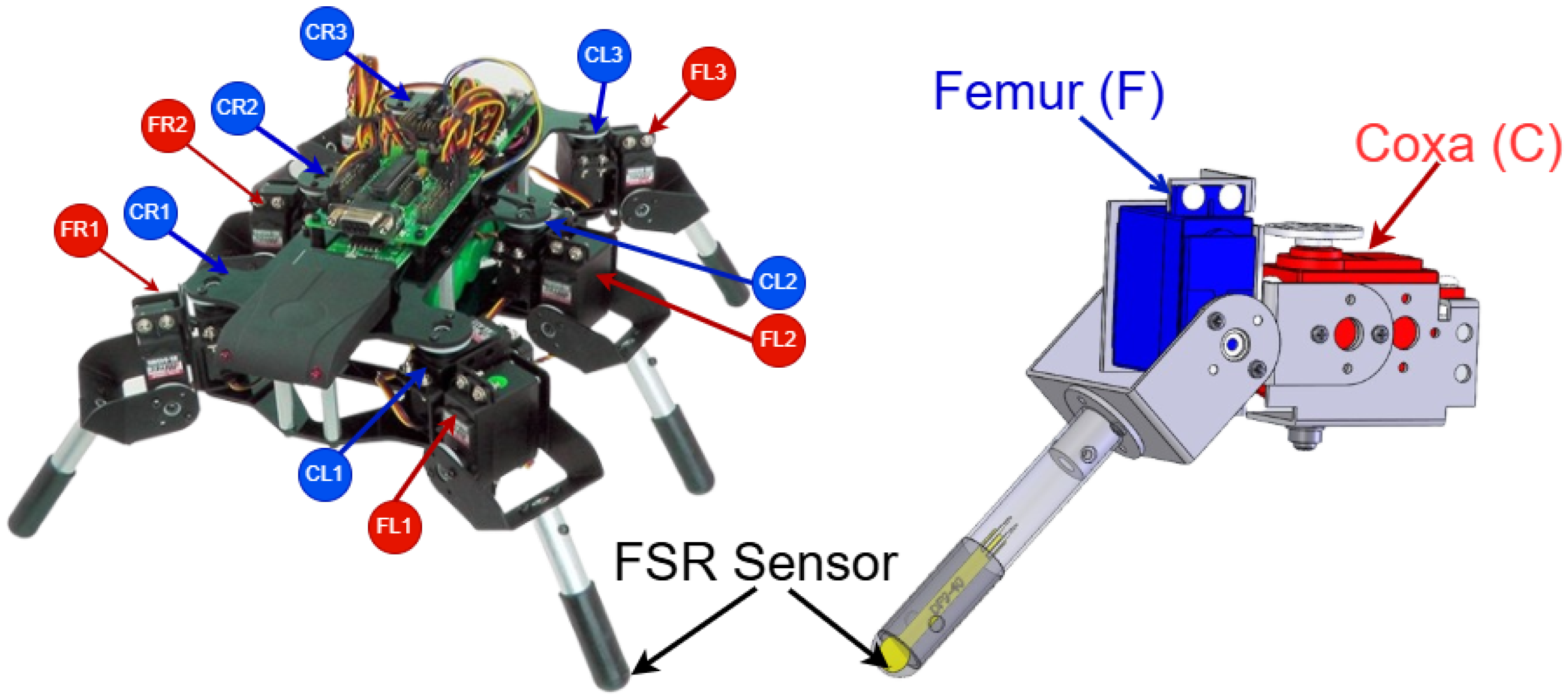

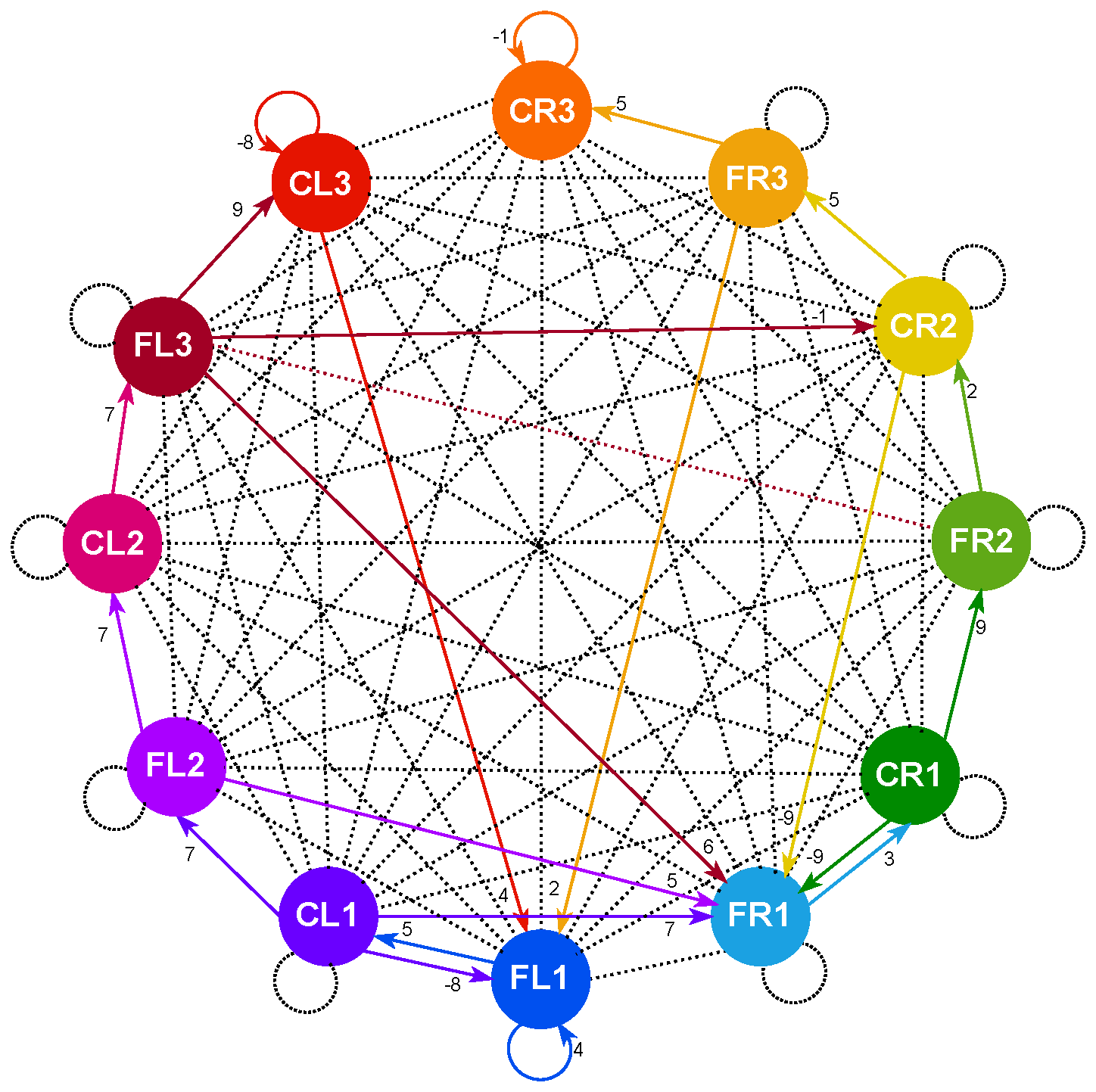

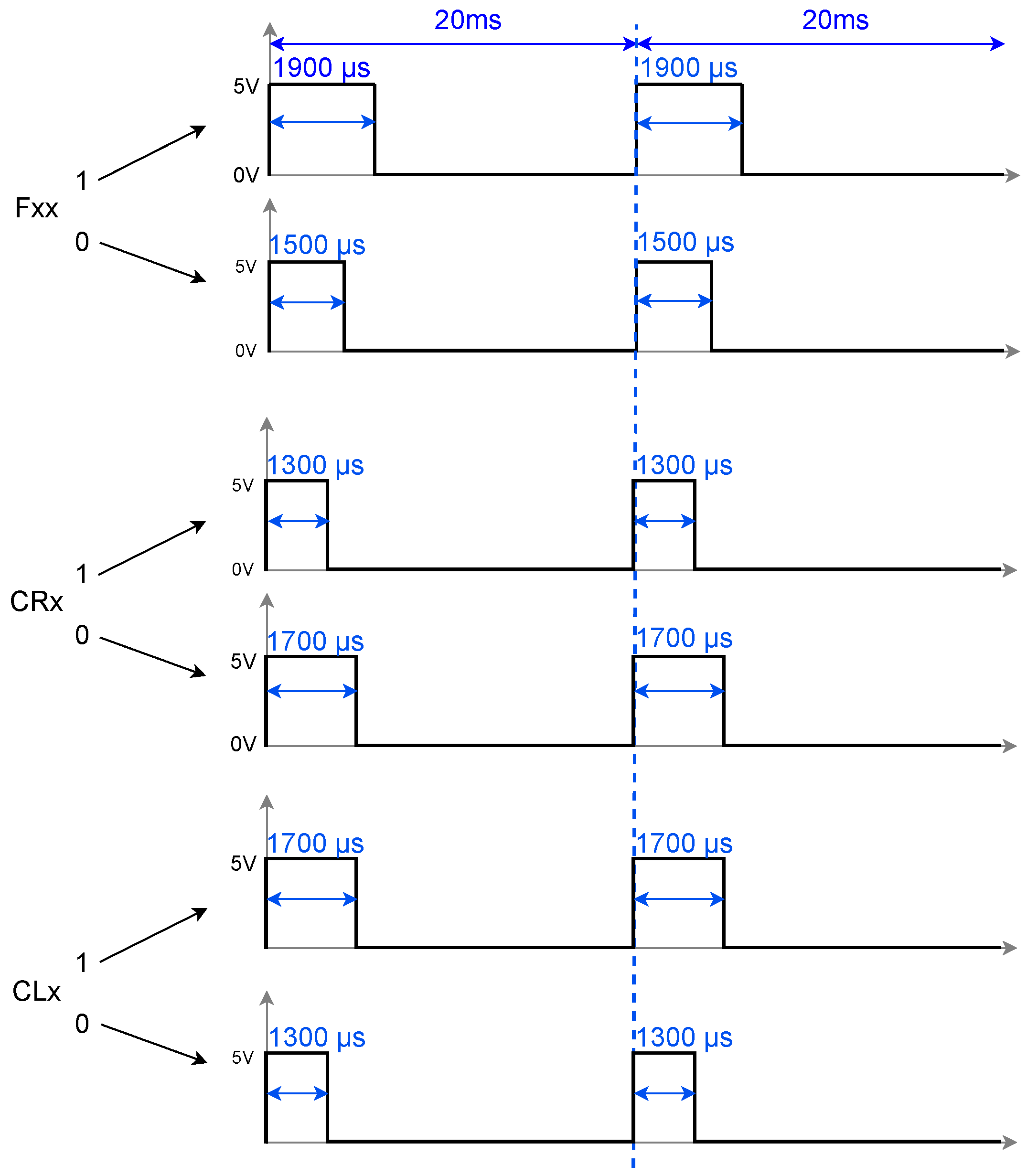

In this paper, we address this gap by introducing a novel, fully bio-inspired spiking CPG controller composed of only 12 neurons capable of producing distinct gait patterns and achieving smooth transitions between them. Gait changes are autonomously triggered based on terrain feedback, using a Force-Sensitive Resistor (FSR) sensor integrated into the hexapod’s leg. Crucially, this minimalist architecture enables real-time performance on low-power microcontrollers such as an Arduino board, demonstrating a level of computational and energy efficiency not seen in other SNN-based approaches.

Through simulation and extensive real-world experiments, we demonstrate that our system achieves nearly imperceptible gait transitions, with average stepping time errors below 5 milliseconds, while operating on minimal hardware. These results highlight a path forward for efficient, adaptive locomotion in field-ready legged robots.

The rest of this paper is organized as follows. Section 2 describes the robot architecture, the Spiking Central Pattern Generator (SCPG), the SPIKE-synchronization metric, and the hardware implementation. Section 3 presents the performance of our approach under various conditions, along with a detailed performance analysis. Section 4 discusses the results, and Section 5 concludes the paper.

Problem Statement

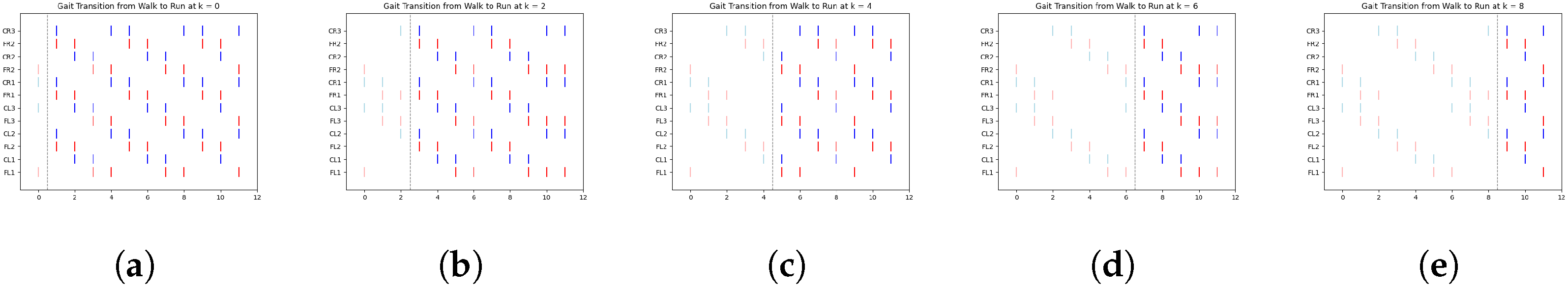

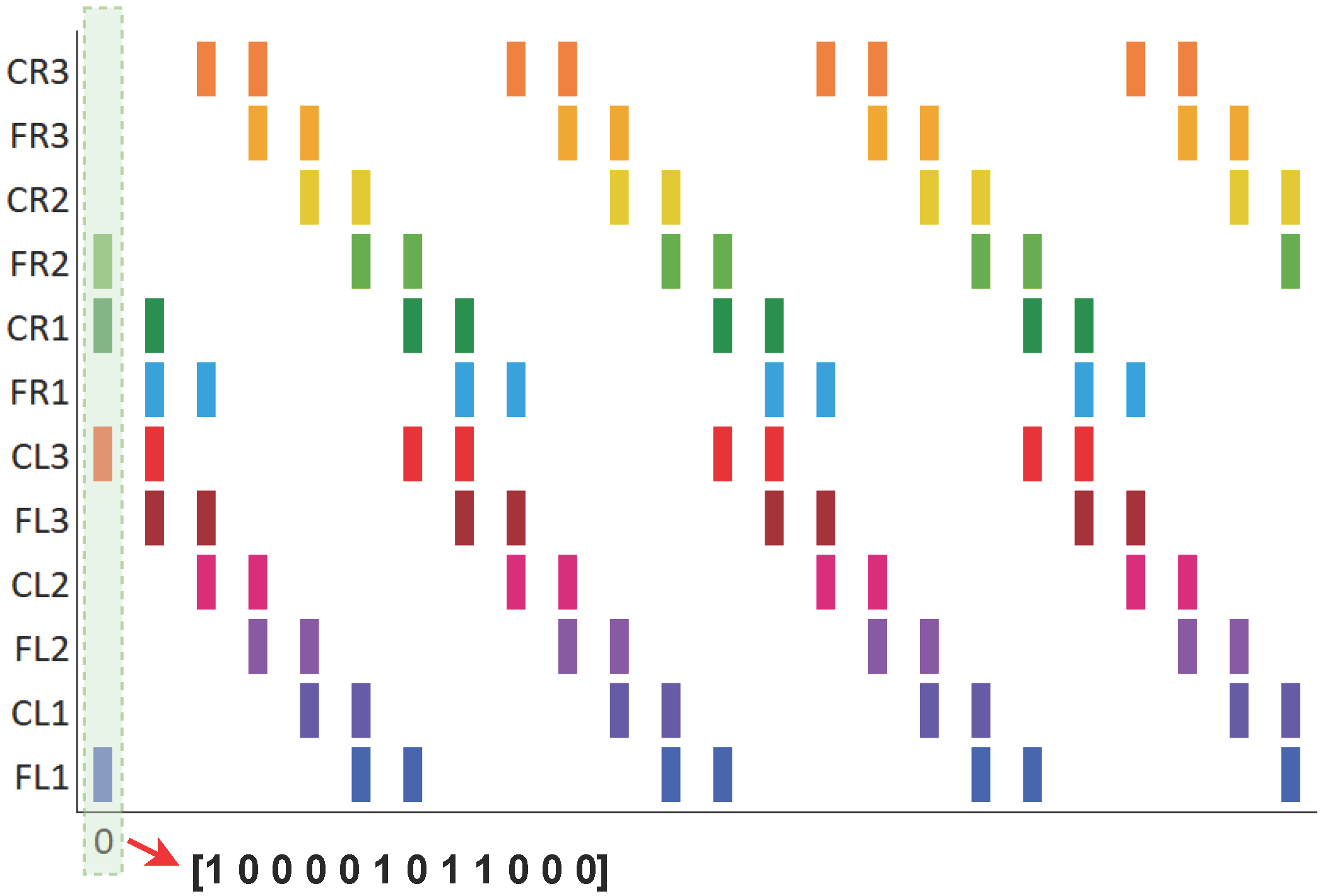

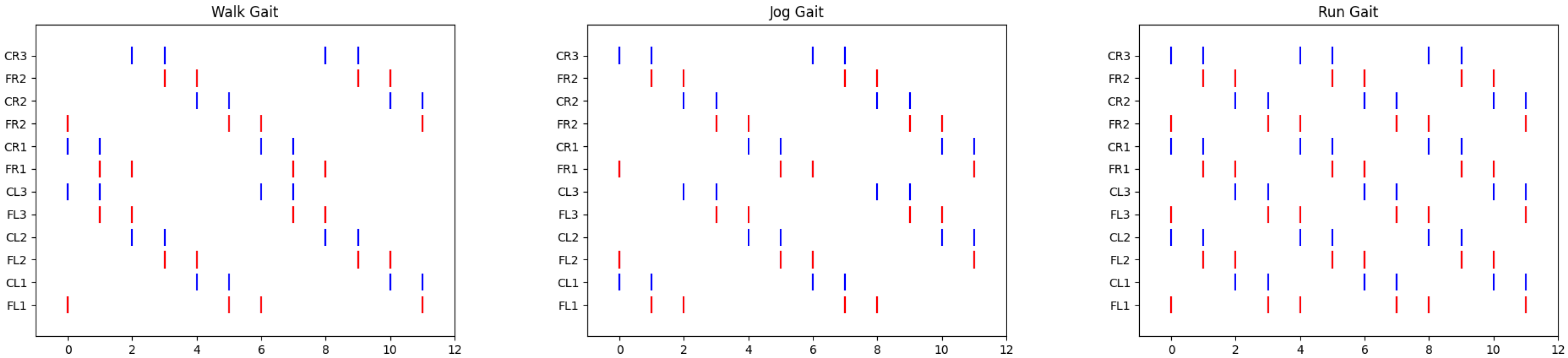

In [25], a spiking neural network was proposed to reproduce three biologically inspired gait patterns observed in stick insects (walk, jog, and run), with the aim of controlling the locomotion of legged robots. The transition between these gait patterns was managed by initializing a vector that defined the activation of each servomotor in the robot at time zero. In other words, each gait pattern had an initial vector of pulse values (0 or 1), from which the spiking neural network described by Equations (1) and (2) generated the periodic activation of the neurons (or servos) during the execution of the gait sequence. An example of these vectors is shown in Figure 1. The issue with this approach is that the transition between patterns did not account for the current execution time: the new gait started from its initial vector regardless of where the ongoing gait was in its cycle, leading to abrupt shifts that could affect or even damage the servos. This lack of synchronization increased the risk of the robot malfunctioning or crashing due to poor coordination between the servos during the transition.
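To make the phase problem concrete, the sketch below uses a toy binary network in which an initial 0/1 vector is propagated around a ring of neurons, producing a periodic activation pattern. It is a hypothetical stand-in: the ring connectivity `W`, the threshold `theta`, the helper names `run_toy_network` and `switch_disruption`, and the 6-neuron vectors are all assumptions for illustration and do not reproduce Equations (1) and (2) or the vectors of Figure 1. It counts how many neurons (servos) would flip state if a new gait were restarted from its time-zero vector without regard to the current phase.

```python
import numpy as np

def run_toy_network(initial_state, steps):
    """Iterate a hypothetical binary network s(k+1) = H(W s(k) - theta).

    W is a cyclic shift matrix, so every spike is handed to the next neuron
    in a ring. This is a stand-in for illustration, not Equations (1)-(2).
    """
    s = np.asarray(initial_state, dtype=int)
    n = s.size
    W = np.roll(np.eye(n, dtype=int), 1, axis=0)  # W[i, i-1] = 1 (with wrap-around)
    theta = 0.5  # hypothetical firing threshold
    states = [s]
    for _ in range(steps):
        s = (W @ s - theta > 0).astype(int)  # Heaviside step on the net input
        states.append(s)
    return np.array(states)

def switch_disruption(current_state, new_initial_state):
    """Count neurons whose activation flips if the new gait restarts at time zero."""
    return int(np.sum(np.asarray(current_state) != np.asarray(new_initial_state)))

# Hypothetical 6-neuron initial vectors (not taken from Figure 1).
walk0 = [1, 0, 0, 0, 0, 0]
run0 = [1, 0, 0, 1, 0, 0]

walk = run_toy_network(walk0, steps=6)
print(walk[6].tolist() == walk[0].tolist())  # True: the pattern repeats after 6 steps
print(switch_disruption(walk[1], run0))      # 3 flips for a phase-blind switch at k = 1
```

In this toy, the disruption depends on the time step at which the switch happens, which is the motivation for choosing the transition moment rather than always restarting at time zero.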

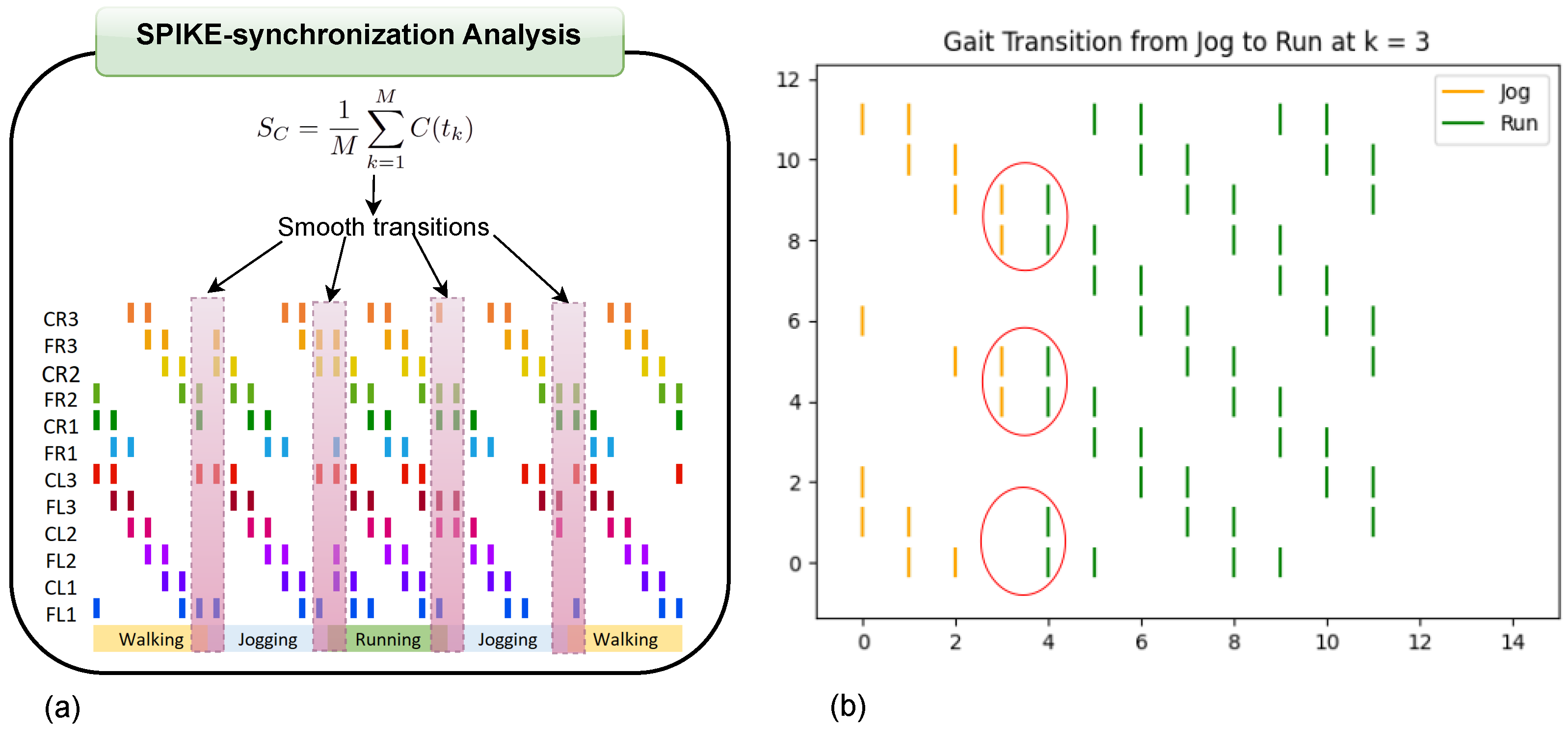

To address this issue, the solution proposed in this research enables smooth transitions between gaits by monitoring the activation pulses (spikes) over time and selecting the optimal moment for transitioning. To achieve this, we use an event-based metric called SPIKE-synchronization, which is described in detail in Section 2.

When a sensor input, e.g., from an FSR sensor, triggers a change in the corresponding term of the network (see Equation (1)), such as when the robot encounters an obstacle or the terrain type changes, the system evaluates the SPIKE-synchronization metric to determine the best timing for the gait transition. This ensures a smoother, more coordinated change, reducing the risk of servo damage or robot malfunction.
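For readers unfamiliar with the metric, the following is a minimal sketch of the commonly used SPIKE-synchronization definition due to Kreuz et al.: a spike counts as coincident when its nearest spike in the other train lies within an adaptive window equal to half the shortest neighboring inter-spike interval of either spike, and the score is the fraction of coincident spikes over both trains. Boundary handling (a spike with no neighbor gets an infinite window) and the convention that two empty trains score 1 are assumptions here and may differ from reference implementations such as PySpike or from the authors’ code.

```python
import math

def _half_min_isi(train, i):
    """Half of the shorter inter-spike interval adjacent to spike i (inf if it has no neighbor)."""
    gaps = []
    if i > 0:
        gaps.append(train[i] - train[i - 1])
    if i < len(train) - 1:
        gaps.append(train[i + 1] - train[i])
    return min(gaps) / 2 if gaps else math.inf

def _coincidences(a, b):
    """Count spikes in `a` whose nearest partner in `b` falls inside the adaptive window."""
    if not b:
        return 0
    count = 0
    for i, t in enumerate(a):
        j = min(range(len(b)), key=lambda k: abs(b[k] - t))  # nearest spike in b
        tau = min(_half_min_isi(a, i), _half_min_isi(b, j))  # adaptive coincidence window
        if abs(t - b[j]) < tau:
            count += 1
    return count

def spike_sync(a, b):
    """Fraction of all spikes that are coincident: 1 = fully synchronous, 0 = none."""
    a, b = sorted(a), sorted(b)
    total = len(a) + len(b)
    if total == 0:
        return 1.0  # assumption: two silent trains are treated as synchronous
    return (_coincidences(a, b) + _coincidences(b, a)) / total

print(spike_sync([1, 3, 5], [1, 3, 5]))   # 1.0
print(spike_sync([1, 5, 9], [2, 20, 40]))  # about 0.333
```

Because the window adapts to local spike rates, the same function works for slow and fast gaits without a hand-tuned time tolerance, which suits comparing gaits that run at different speeds.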

3. Results

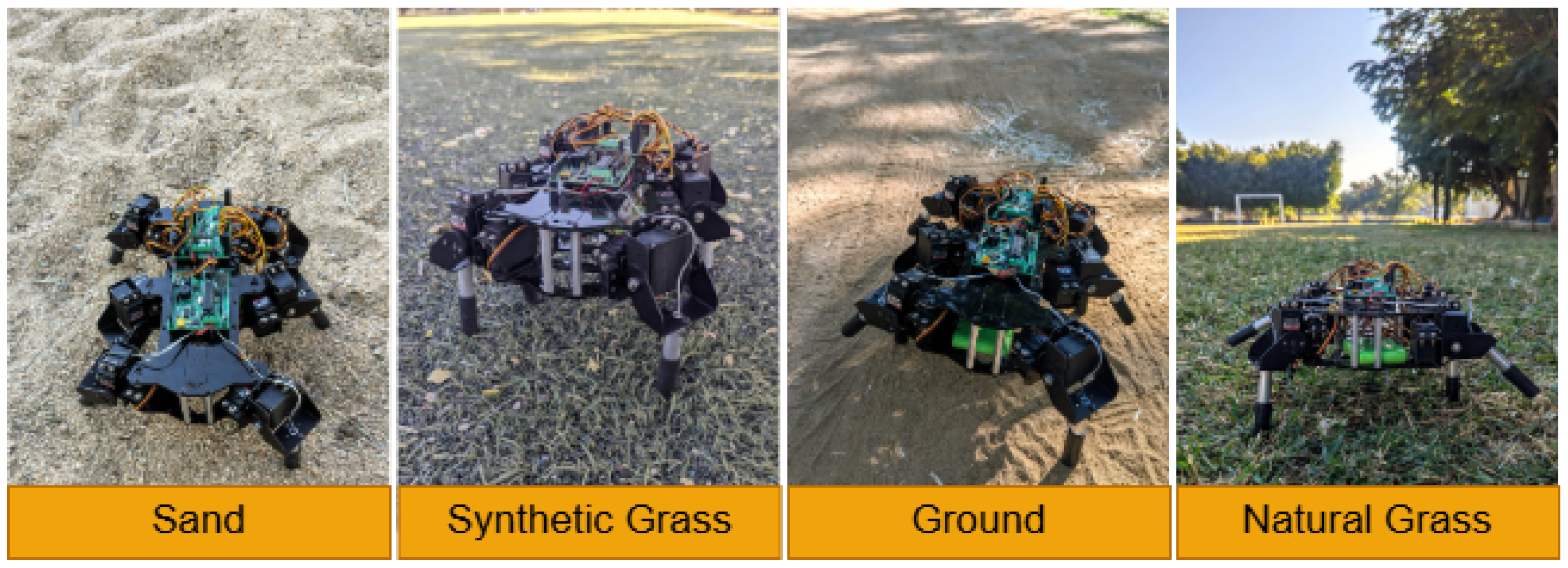

To validate our method, we tested our hexapod robot’s navigation across four different surfaces (sand, ground, synthetic grass, and natural grass), as shown in Figure 7. Equipped with Force-Sensitive Resistor (FSR) sensors, the robot can detect variations in terrain from changes in the resistance measured by the sensors.

Table 1 summarizes the mean resistance values observed for each type of terrain. These sensor readings serve as the input for triggering gait transitions: by identifying the resistance thresholds associated with each surface, the robot can adapt its gait dynamically.
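A threshold-based trigger of this kind can be sketched as follows. The band edges in `BAND_EDGES` are hypothetical placeholders rather than the Table 1 values, the bands are generic indices rather than named surfaces, and `classify_surface` and `TerrainMonitor` are illustrative names; the sketch only shows the idea of mapping a resistance reading to a surface band and raising a transition event when the band changes.

```python
from bisect import bisect_right

# Hypothetical band edges in ohms; real values would be derived from Table 1.
BAND_EDGES = [2_000.0, 5_000.0, 10_000.0]

def classify_surface(resistance_ohm):
    """Return a surface band index (0..len(BAND_EDGES)) for one FSR reading."""
    return bisect_right(BAND_EDGES, resistance_ohm)

class TerrainMonitor:
    """Flags a gait-transition event only when the classified surface changes."""

    def __init__(self):
        self.current_band = None

    def update(self, resistance_ohm):
        band = classify_surface(resistance_ohm)
        # The first reading only initializes the state; it never triggers a transition.
        changed = self.current_band is not None and band != self.current_band
        self.current_band = band
        return changed

monitor = TerrainMonitor()
events = [monitor.update(r) for r in [1_500, 1_800, 7_000, 7_200, 12_000]]
print(events)  # [False, False, True, False, True]
```

Since real FSR readings are noisy, a practical version would likely add averaging or hysteresis around each edge so that a reading hovering near a threshold does not trigger repeated transitions.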

The SPIKE-synchronization analysis for the different possible gait transitions is presented in Tables 2–6. In each table, the rows represent the patterns of the ongoing gait at time k, and the columns correspond to the possible patterns of the transitioning gait, except for the last column, which indicates the time step of the transitioning gait that best matches the ongoing gait at time k. Since a SPIKE-synchronization value closer to 1 indicates greater similarity, at each time step k all potential states of the transitioning gait are evaluated against the ongoing gait to identify the best match, thus enabling a smooth transition. When multiple patterns of the transitioning gait reach the highest value, the first one is selected for pairing.

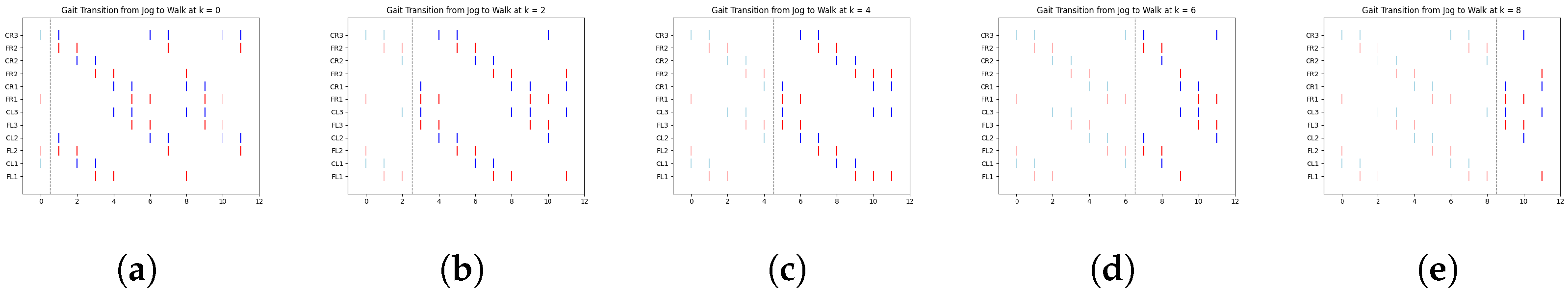

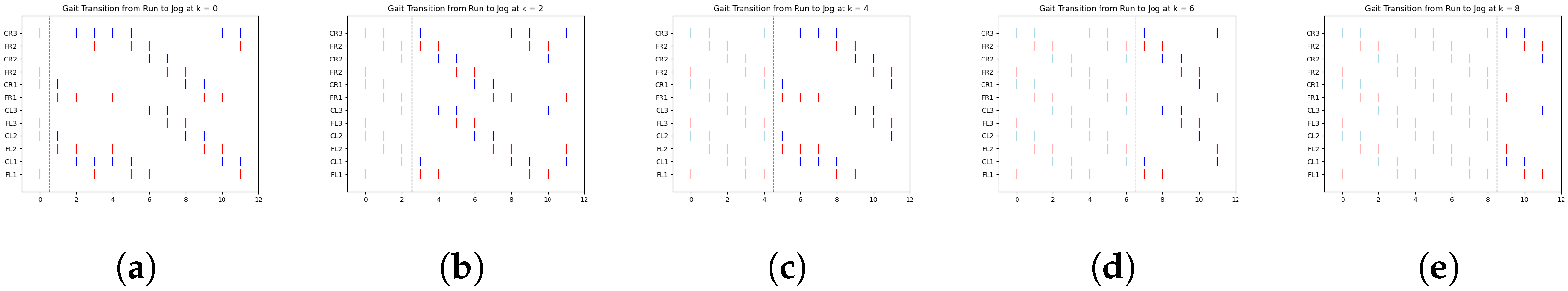

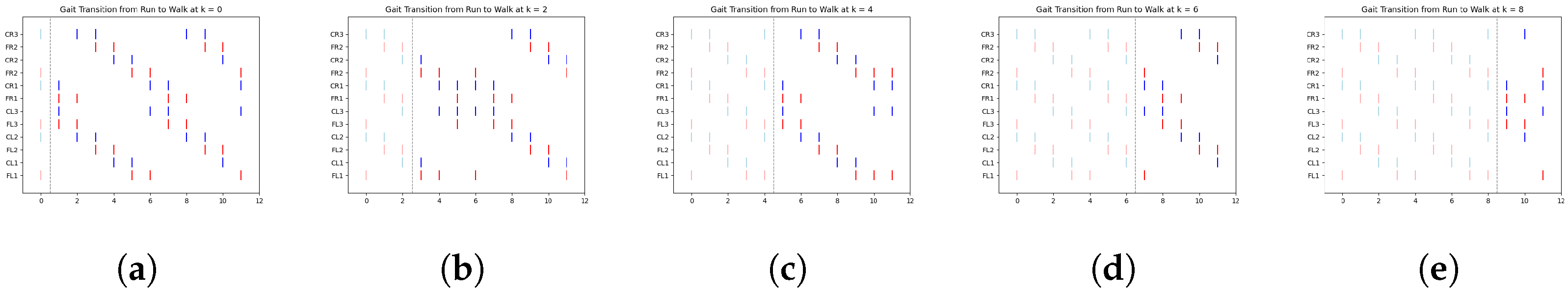

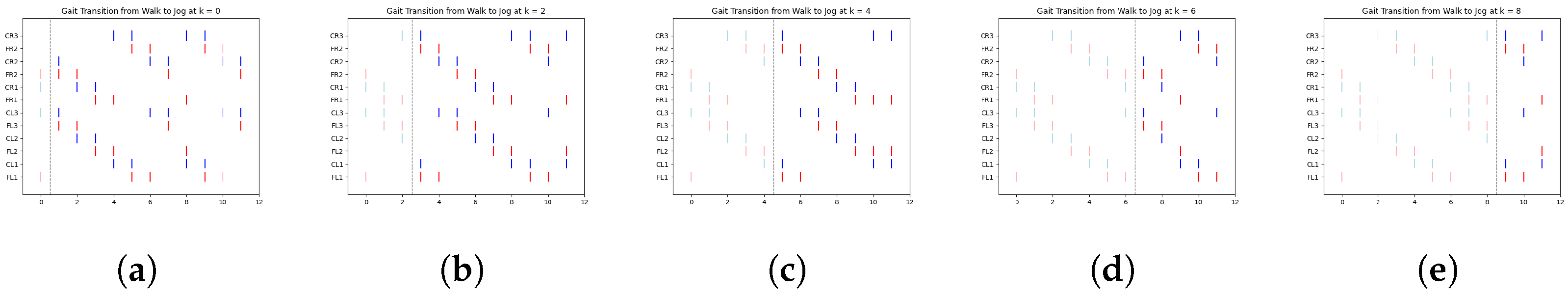

In Figure 8, we illustrate the transition from the ongoing gait (jog) to the transitioning gait (run) at early, middle, and late simulation times. Each panel includes a vertical dashed line marking the exact moment of transition from one gait to the other.

According to Table 4, Figure 8a shows the jog gait at simulation time 0, which transitions to the run gait pattern at simulation time 1. Similarly, Figure 8b shows the jog gait at simulation time 4, transitioning to the run gait pattern at simulation time 5. Finally, Figure 8c shows the jog gait at simulation time 8, transitioning to the run gait pattern at simulation time 9. The complete set of gait transitions is shown in Appendix A, in Figures A1–A6. A total of 60 experiments were conducted, with 10 trials for each transition type; for clarity and better visualization, only five trials per transition are presented.

The dashed line represents the point at which the FSR sensor detected a change in terrain, prompting a gait transition. The spikes are color-coded: red represents the activation of the motor controlling the coxa, while blue corresponds to the femur.

Although the simulation time shown in Appendix A (Figures A1–A6) extends up to 12 time steps, Tables 2–6 display data only up to time step 5. This is because the gaits are periodic and the pattern begins to repeat after this point; focusing on the initial time steps therefore lets us analyze the effectiveness of the transitions without redundancy.

One particularly interesting observation in these graphs is the shift in balance structure when transitioning between jog/walk and run, or vice versa. During these transitions, the robot switches between tripod and tetrapod balance structures, as described in [29]. This shift highlights the adaptability of the system to changes in terrain and gait demands and illustrates the robustness of the proposed approach.

4. Discussion

While biological inspiration has long guided research in robotic locomotion, the use of spiking neural networks (SNNs) in this field remains relatively limited. Most studies in gait generation rely on traditional control systems or oscillators, with only a few exploring the unique potential of spike-based, event-driven computation.

Among the existing SNN-based approaches, many employ large and complex networks to generate rhythmic locomotion patterns. For instance, systems such as those implemented on neuromorphic platforms like SpiNNaker or Loihi typically involve hundreds or even thousands of spiking neurons to model Central Pattern Generators (CPGs) or sensory–motor integration modules. Although these implementations have demonstrated biological plausibility and functional control, they come at the cost of high computational complexity and often require specialized hardware.

In contrast, our proposal introduces a fully bio-inspired architecture that operates with only 12 spiking neurons, which is significantly fewer than what has been reported in the literature. This minimalist design is capable of generating and transitioning between three distinct gait patterns—walk, jog, and run—without relying on complex training procedures or extensive synaptic configurations.

A key innovation in our system is the event-based transition mechanism, which is triggered by sensory input from a Force-Sensitive Resistor (FSR). This sensor detects terrain rigidity, allowing the robot to adapt its locomotion speed accordingly. To the best of our knowledge, no previous work has demonstrated such gait transitions driven by event-based sensory mechanisms in SNN-controlled robots.

Furthermore, the low computational demands of our network allow the entire system to run on a low-cost microcontroller platform, such as Arduino, as opposed to power-hungry processors or external compute units. This not only reduces cost and system complexity but also contributes to greater energy efficiency—an essential feature for autonomous, battery-powered robots.

As a future research direction, we envision extending this bio-inspired architecture by incorporating neuromorphic sensors, such as event-based vision sensors (e.g., Dynamic Vision Sensors (DVSs)) or tactile neuromorphic interfaces. These sensors would provide spiking input natively, enabling more seamless and biologically plausible integration with the SNN controller while also potentially allowing the robot to adapt to more complex and dynamic environments with enhanced sensory resolution and lower latency.

5. Conclusions

The aim of our work is to narrow the gap between basic research and applied research. In our previous studies, we designed a unique Spiking Central Pattern Generator capable of generating multiple gaits; the current work focuses on extending the capabilities of that proposal. In this regard, we integrated a transition mechanism between gaits into the locomotion of a hexapod robot, aiming to make these transitions as smooth as possible. To achieve this, we used an event-based metric that measures the degree of similarity between two spike trains (neural activations). This metric was chosen because the robot’s locomotion is controlled by a spiking neural network (SNN), which generates three gait patterns (walk, jog, and run) based on events, or spikes. These patterns are produced by a single network capable of transitioning from one gait to another, with the transitions triggered by FSR readings that reflect the terrain conditions.

A critical aspect of our approach, as observed in real-world experiments showcasing the robot’s gait transitions, is the deliberate modulation of locomotion speed in response to the terrain. Specifically, the robot slows down or speeds up based on the rigidity of the surface, as measured by an integrated Force-Sensitive Resistor (FSR) sensor. This adaptive behavior is fundamental to the system, allowing the robot to maintain stability and efficiency when navigating different environments. The delay observed during gait transitions, which reflects the time the robot needs to adjust its speed, is quantified by the stepping time error presented in Table 7. Notably, the transition error is nearly imperceptible, with a maximum average stepping time error of 0.004781 s (about 4.8 ms) in the worst-case scenario.
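As a rough illustration of how an average stepping time error can be computed from logged step timestamps, the sketch below assumes the error is the mean absolute deviation of measured step durations from a nominal step period. This definition, the function name `mean_stepping_time_error`, and the sample timestamps are assumptions for illustration only; the metric reported in Table 7 may be defined differently.

```python
def mean_stepping_time_error(step_times_s, nominal_period_s):
    """Mean absolute deviation (s) of measured step durations from a nominal step period.

    Assumed definition for illustration; the metric in Table 7 may be defined differently.
    """
    if len(step_times_s) < 2:
        raise ValueError("need at least two step timestamps")
    # Durations between consecutive step onsets.
    durations = [b - a for a, b in zip(step_times_s, step_times_s[1:])]
    return sum(abs(d - nominal_period_s) for d in durations) / len(durations)

# Hypothetical step timestamps (s) around a transition with a 0.5 s nominal period.
err = mean_stepping_time_error([0.0, 0.5, 1.004, 1.5], nominal_period_s=0.5)
print(f"{err:.6f} s")  # 0.002667 s
```

Under this assumed definition, a worst-case value of 0.004781 s would mean that, on average, each step deviated from its nominal duration by just under 5 ms.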

We conducted numerous experiments on a real hexapod robotic platform with a low-cost processing unit, namely Arduino. While not every trial resulted in a perfectly smooth transition, the majority achieved transitions that were nearly imperceptible, highlighting the potential of the proposed approach to improve robotic locomotion performance.

Although experimentation was carried out on a six-legged platform, the methodology is extensible to robots with varying numbers of limbs. Furthermore, integrating neuromorphic platforms such as SpiNNaker or Loihi could enhance the system, advancing toward a fully bio-inspired robotic locomotion framework. This paves the way for more energy-efficient and adaptable robots capable of navigating complex environments with improved robustness and flexibility.