1. Introduction

The exponential growth of technology has elevated the importance of optimization problems within a multitude of disciplines. As critical instruments for resolving these issues, optimization algorithms have evolved from their traditional iterations to sophisticated intelligent algorithms [1]. Early optimization techniques are predominantly based on mathematical formulations and analytical approaches, including linear and nonlinear programming, which prove to be effective for straightforward problems. However, these methods frequently falter when applied to complex, high-dimensional, and nonlinear optimization problems [2].

The surge in computing power and the development of big data technology have prompted researchers to investigate more adaptable and efficient optimization algorithms. This has led to the emergence of intelligent optimization algorithms [3]. By mimicking natural biological behaviors and group intelligence, these algorithms are capable of identifying approximate optimal solutions in the complex search space. Consequently, they exhibit a robust optimization performance and a high degree of adaptability [4].

The coati optimization algorithm (COA) [5], introduced by Dehghani in 2023, is a recently developed meta-heuristic algorithm that emulates the hunting behavior of coatis. Nevertheless, the initial population of COA is generated randomly, leading to a deficiency in diversity. In the hunting stage, the algorithm employs a random strategy, which lacks adaptability. Moreover, the behavior of evading natural enemies also relies on a random strategy. These factors contribute to an imbalance between COA’s global search and local optimization capabilities, predisposing it to become trapped in local optima, exhibiting limited global exploration, and demonstrating poor convergence accuracy. The analysis indicates that the COA algorithm, akin to other meta-heuristic algorithms (MA), inherits the common shortcomings associated with this class of algorithms [6].

The COA’s search process consists of two separate phases: exploration and exploitation. The former relates to the algorithm’s ability to search globally, which determines whether it can locate the optima. The latter concerns the algorithm’s proficiency in searching locally, which affects the rate at which the optimal values are produced. The COA’s performance depends directly on the balance achieved between exploration and exploitation. Nonetheless, according to the “No Free Lunch” theorem [7], no single algorithm can efficiently address all optimization problems. A significant challenge with meta-heuristic algorithms is their tendency to become trapped in local optima, and most of them struggle to circumvent this pitfall [8].

As optimization algorithms have evolved, a plethora of enhancements has been suggested to improve their efficacy [9]. Shang et al. [10] optimized the extreme learning machine using the improved zebra optimization algorithm. Wang et al. [11] developed a sound quality prediction model that incorporates extreme learning machine enhanced by fuzzy adaptive particle swarm optimization. Zhang et al. [12] introduced the chaotic adaptive sail shark optimization algorithm, which integrates the tent chaos strategy. Hassan et al. [13] put forth an improved butterfly optimization algorithm featuring nonlinear inertia weights and a bi-directional difference mutation strategy, along with decision coefficients and disturbance factors. Zhu et al. [14] proposed an adaptive strategy and a chaotic dyadic learning strategy implemented through the improved sticky mushroom algorithm. Yan et al. [15] proposed an On-Load Tap-Changer fault diagnosis method based on the weighted extreme learning machine optimized by an improved grey wolf algorithm. Gürses et al. [16] used the slime mold optimization algorithm, the marine predators algorithm, and the salp swarm algorithm for real-world engineering applications. Dehghani et al. [17] used the spring search algorithm to solve the engineering optimization problems.

Additionally, the COA is attracting considerable interest. Jia et al. [18] introduced a sound-based search encirclement strategy to enhance the COA, yet they overlooked the optimization of the initial population’s generation. Zhang et al. [19] enhanced the COA by applying it to practical engineering issues, albeit employing only a straightforward nonlinear strategy. Barak [20] suggested integrating the COA with the grey wolf optimization algorithm for tuning active suspension linear quadratic regulator controllers. Baş et al. [21] proposed the enhanced coati optimization algorithm, a nonlinear optimization algorithm that improves upon the COA by balancing its exploitation and exploration capacities, although it does not address the resolution of imbalances through the optimization of the exploitation phase. Wang et al. [22] utilized the enhanced COA in the context of wind power forecasting applications. Mu et al. [23] proposed a multi-strategy improved black-winged kite algorithm to select features. Zhou et al. [24] used an improved whale optimization algorithm in the engineering domain. Meng et al. [25] used a Q-learning-driven butterfly optimization algorithm to solve the green vehicle routing problem.

Based on the above analysis, this paper addresses the slow convergence of metaheuristic algorithms and their tendency to fall into local optima, with the aim of improving the optimization speed and performance of the COA. The proposed multi-strategy adaptive COA (MACOA) incorporates Lévy flight, a nonlinear inertia step factor, and an enhanced coati vigilante mechanism. The Lévy flight mechanism is employed during the initialization phase to distribute the initial solution positions more evenly, thus generating high-quality starting solutions and enriching the solution population. This addresses the poor quality and uneven distribution of the COA’s initial solutions. During the exploration phase, a nonlinear inertia weight parameter is incorporated to balance the local and global search abilities of the COA. During the exploitation phase, an enhanced coati vigilante mechanism is implemented to help the COA escape from local optima.

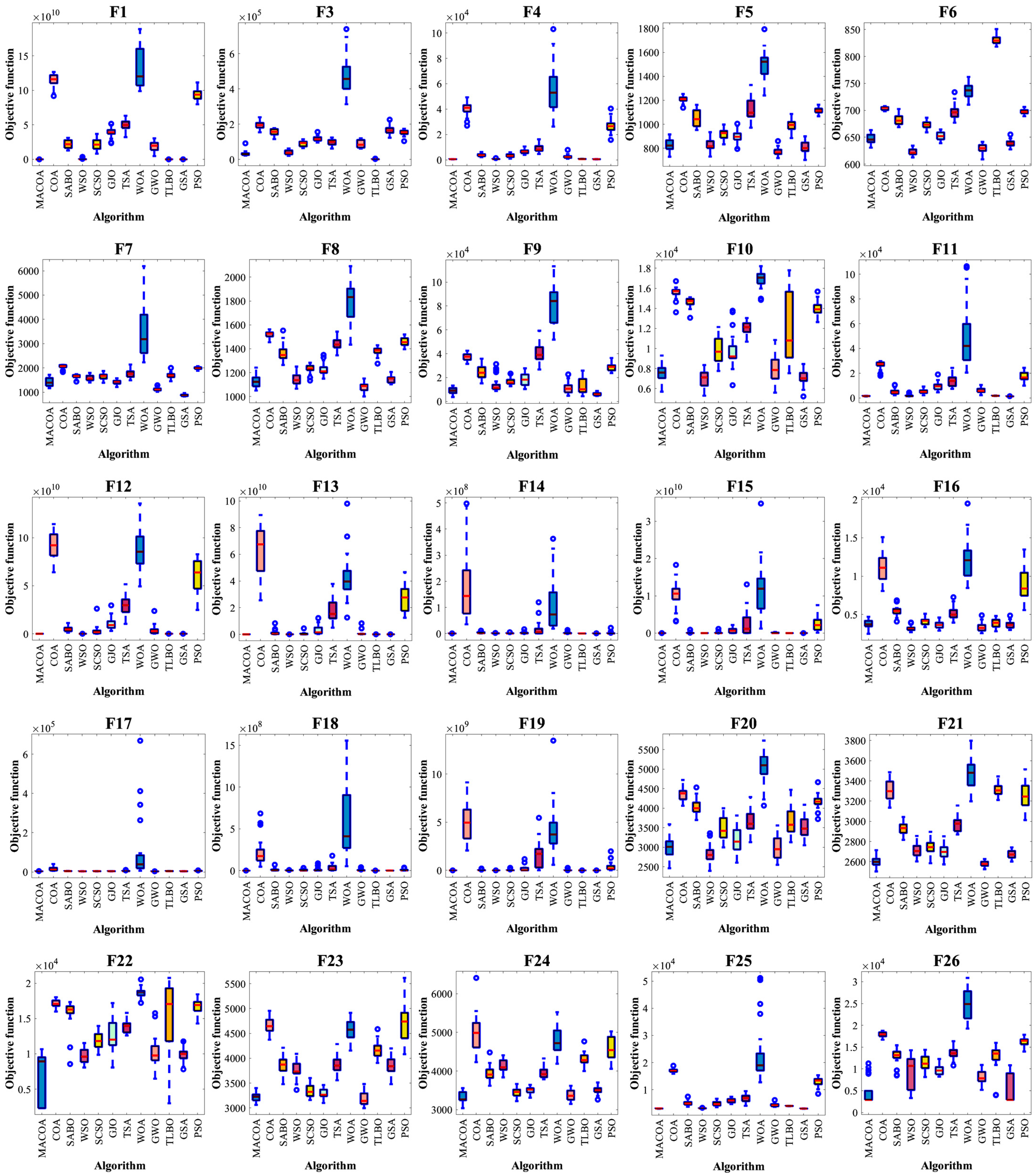

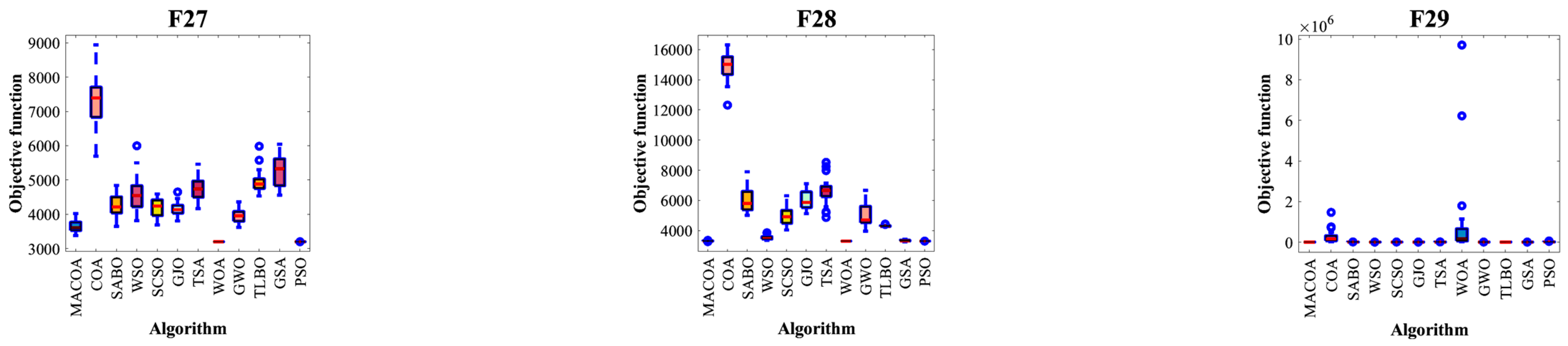

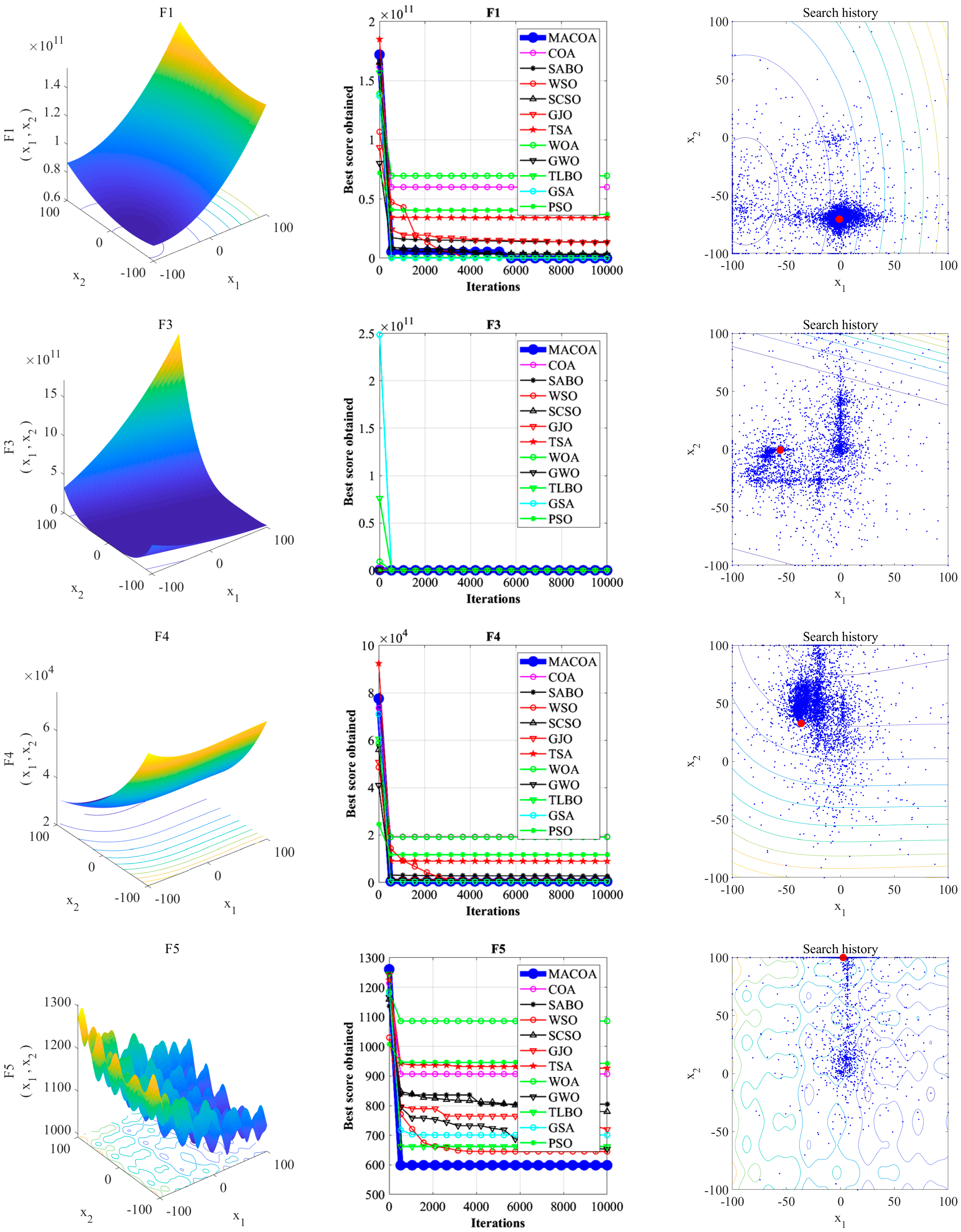

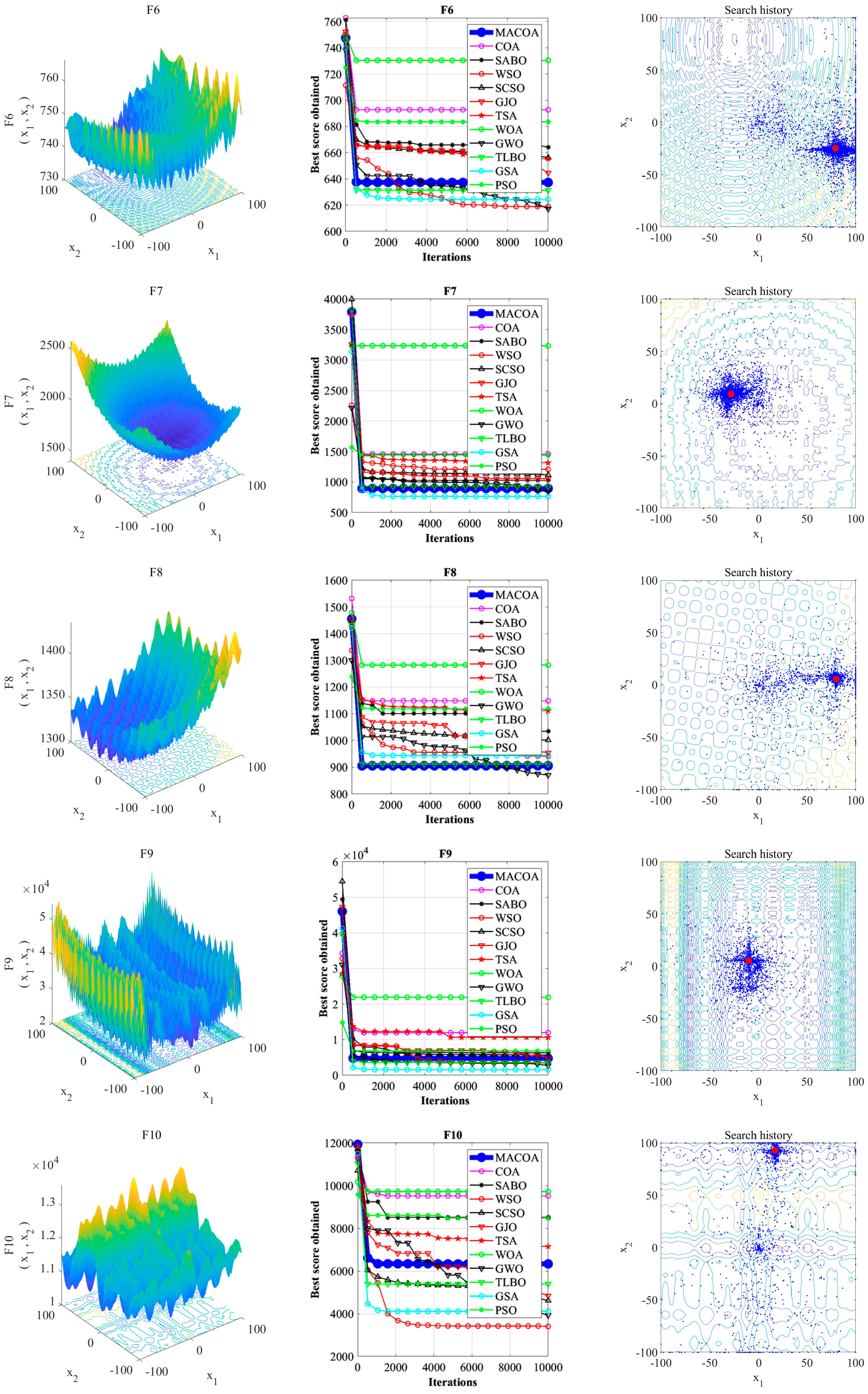

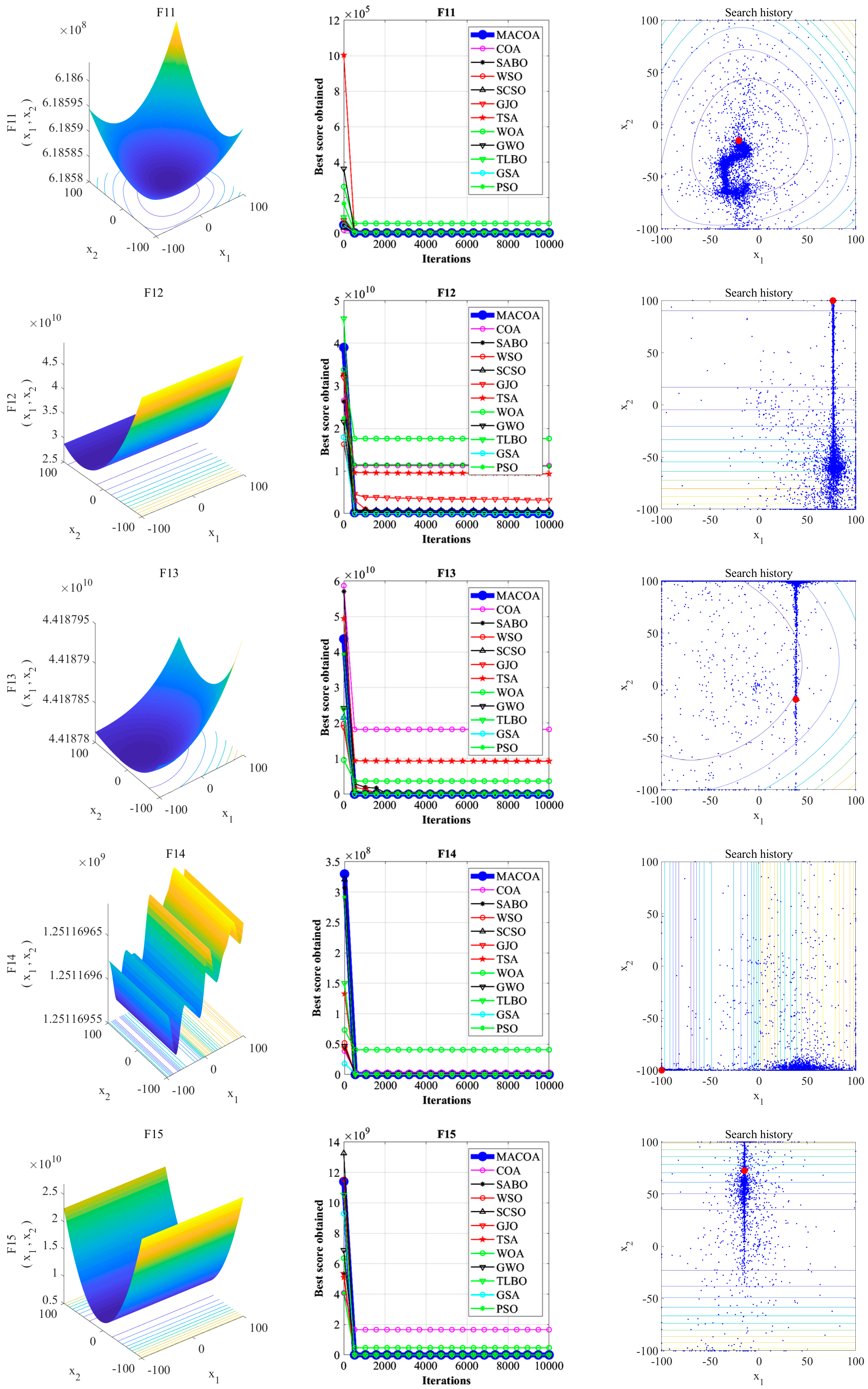

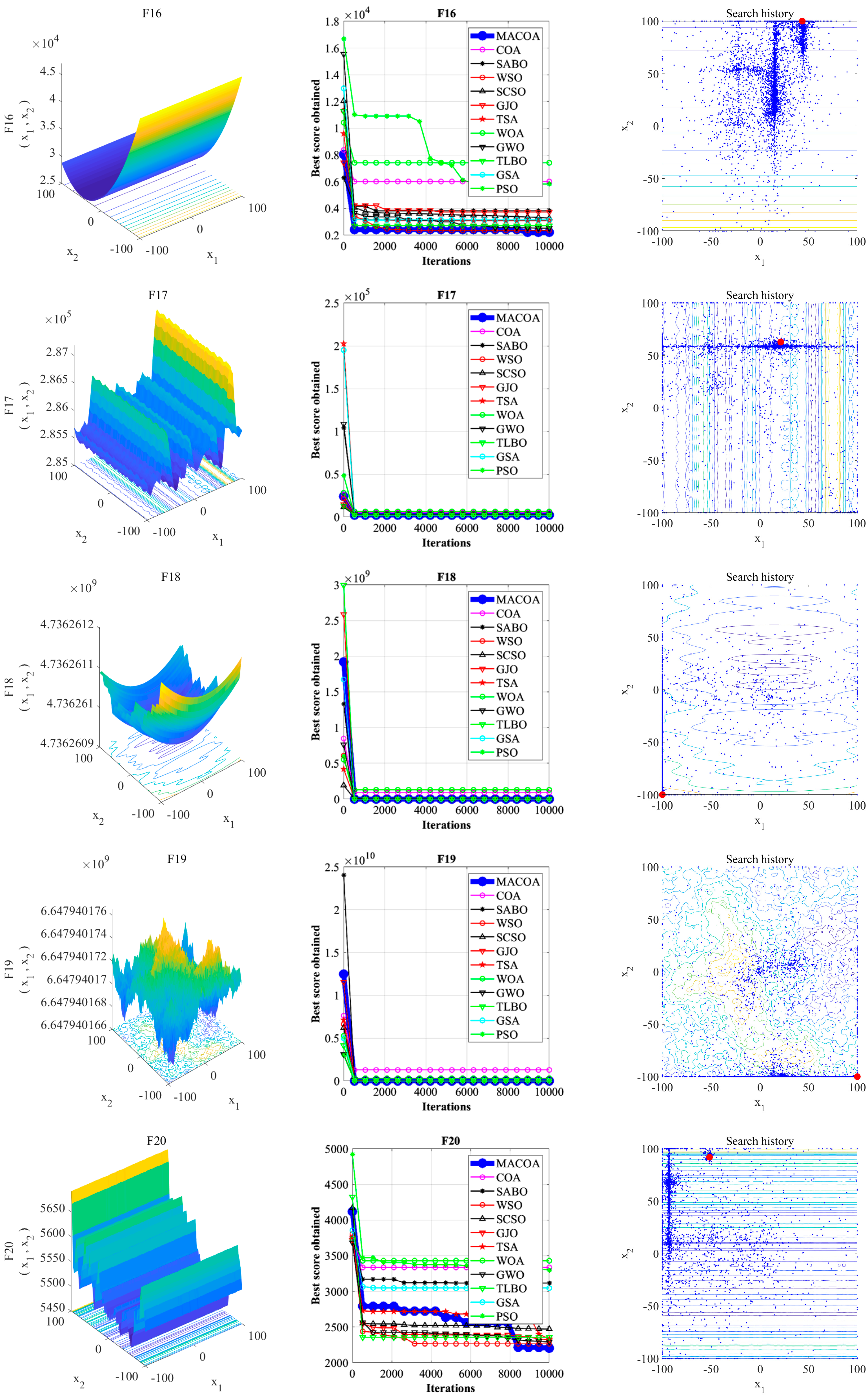

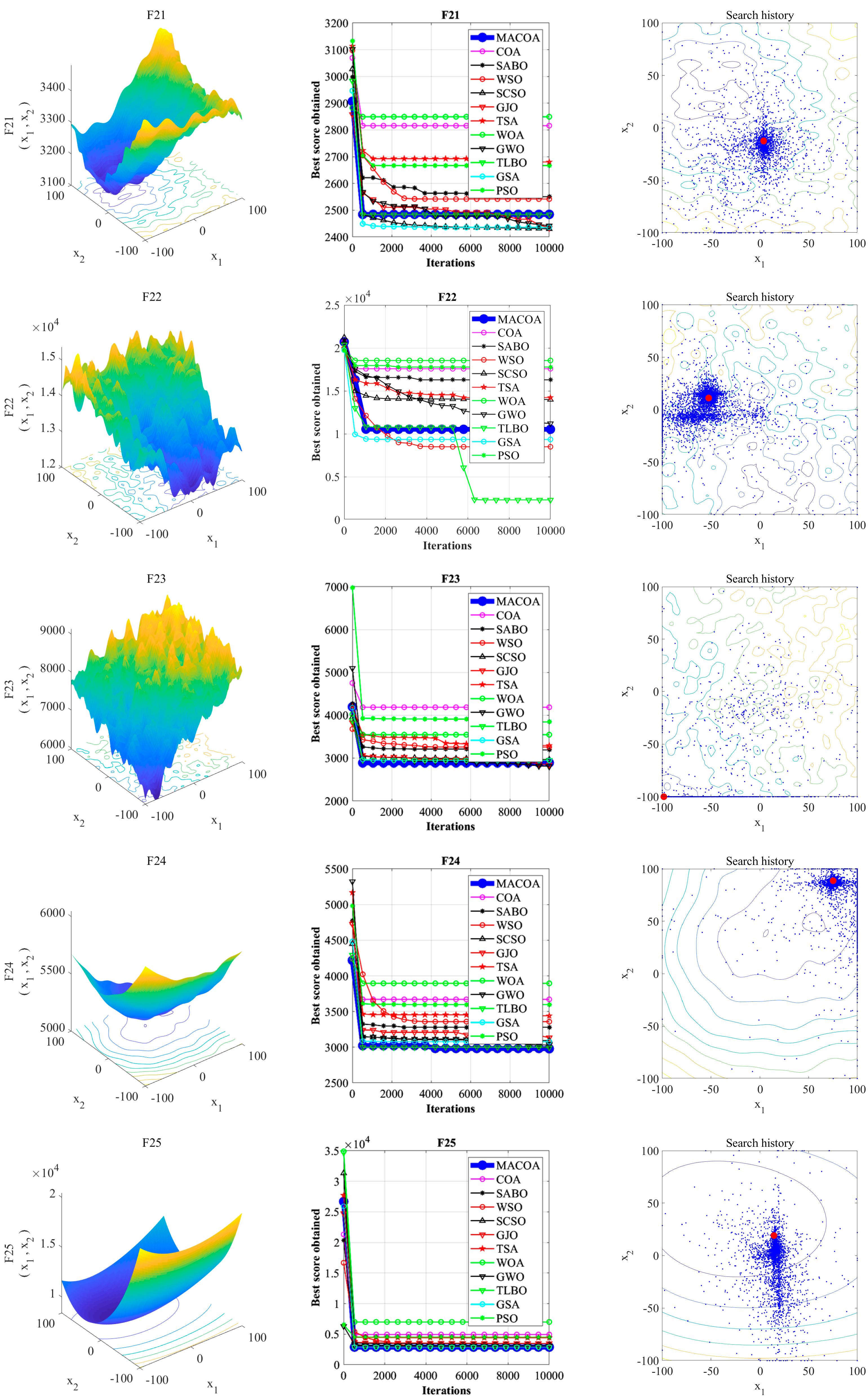

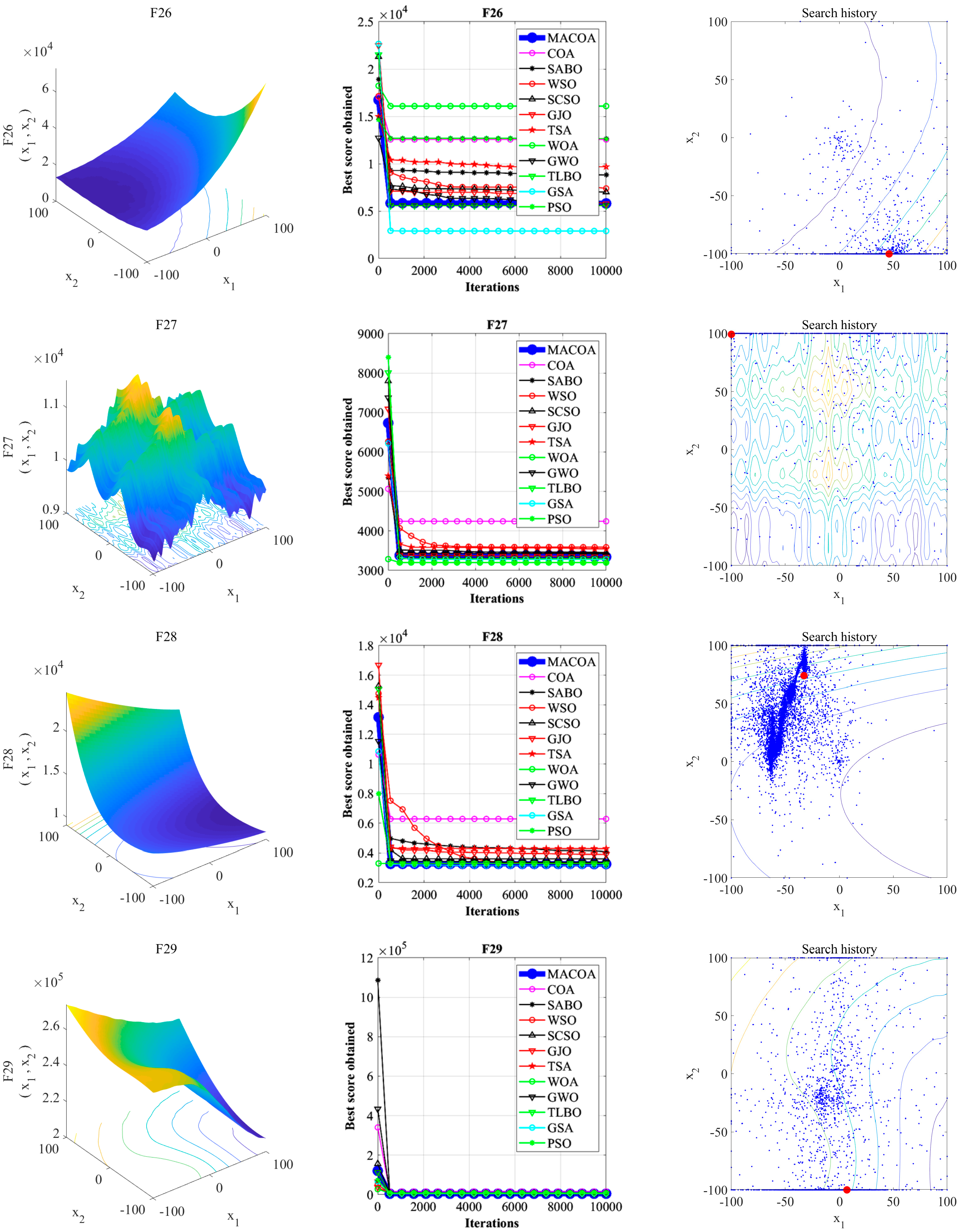

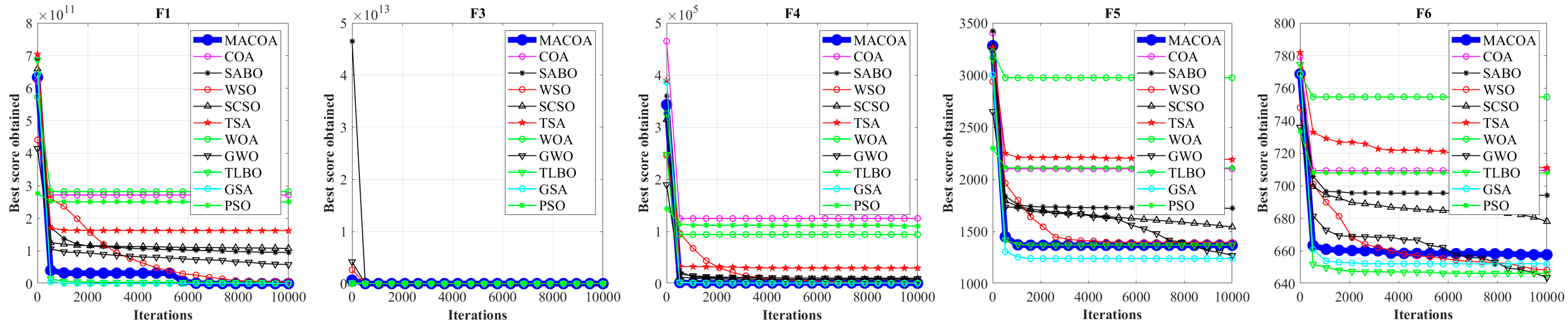

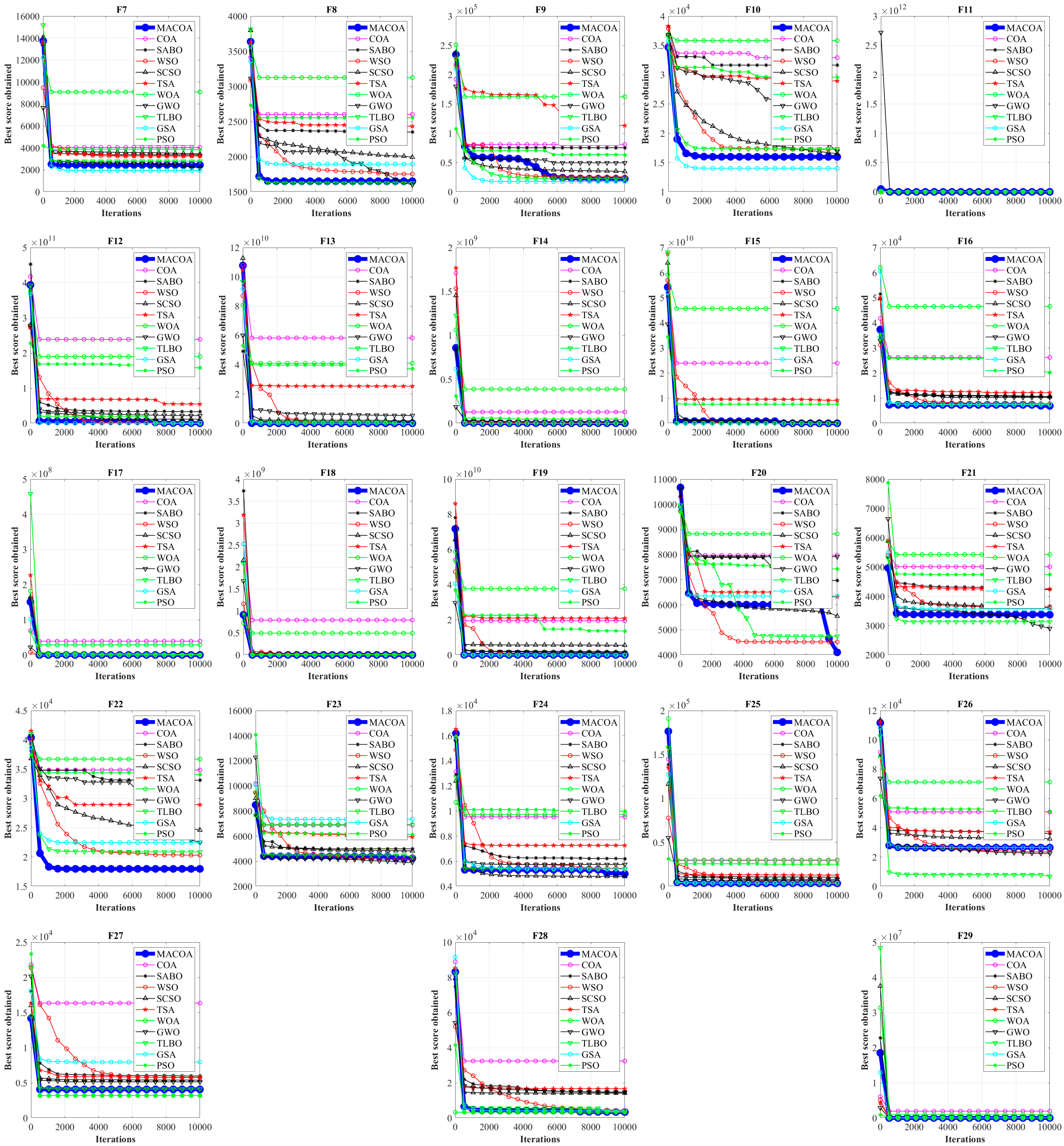

The search capability of MACOA is validated through experiments on the IEEE CEC2017 benchmark functions. MACOA is compared with 11 popular algorithms in 30, 50, and 100 dimensions. A comparative analysis of the convergence curves of all 12 algorithms across these dimensions, together with boxplots of the outcomes of multiple runs and search history graphs, shows that MACOA achieves better optimization results than the other algorithms. To further study the engineering applicability of MACOA, seven engineering problems, including the design of a gear train and a speed reducer, are used to test its optimization ability. The experimental results for these engineering design problems confirm the practical efficacy of MACOA.

3. Proposed Algorithm

Although the COA is an effective optimizer, its initial population is generated randomly. Furthermore, COA employs a random strategy during the hunting phase, and its behavior in avoiding natural enemies also relies on a random strategy. These factors contribute to an imbalance between the global search and local optimization abilities of COA, making it prone to converging on local optima, limiting its global exploration capacity, and reducing its convergence accuracy. To address these issues, we propose the following heuristic strategies.

3.1. Chaos Mapping for Lévy Flight

Conventional random strategies generate populations with certain drawbacks, such as a lack of population diversity, and their randomness may lead to the possibility that certain areas are over-explored. Therefore, a mapping process for randomly generated populations is necessary.

The chaotic mapping mechanism is highly uncertain and sensitive. It can generate complicated and unpredictable dynamic behaviors to achieve a broader range of exploration in the search space [26].

Lévy flight is a special random walk model that describes movement patterns with long-tailed distributions [27]. The mapping is used in optimization algorithms to improve the randomness, which can assist the algorithm in more effectively exploring the solution space. Therefore, the global optimization capability is increased [28]. Lévy flights are introduced in the initialization process of the MACOA, as shown in (7)–(9).

where X(t) denotes the location of the i-th coati, α is the weight that controls the step size, and β is a constant set to 1.5.
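The Lévy-flight initialization described above can be sketched as follows. This is a minimal illustration and not the formulation in (7)–(9): it assumes the steps are drawn with Mantegna's algorithm, a common way to sample Lévy-stable steps, and that a uniformly generated population is perturbed by these steps and clipped to the bounds. The function names `levy_steps` and `levy_init`, the value `alpha=0.01`, and the scaling by the search range are hypothetical choices; only β = 1.5 comes from the text.

```python
import math

import numpy as np


def levy_steps(size, beta=1.5, rng=None):
    """Draw heavy-tailed steps with Mantegna's algorithm (assumed sampler)."""
    rng = np.random.default_rng() if rng is None else rng
    # Standard deviation of the numerator Gaussian in Mantegna's formula.
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / beta)


def levy_init(n_coatis, dim, lower, upper, alpha=0.01, beta=1.5, seed=0):
    """Uniform initial population perturbed by Lévy steps (illustrative only)."""
    rng = np.random.default_rng(seed)
    # Conventional uniform random initialization inside the bounds.
    x = lower + rng.random((n_coatis, dim)) * (upper - lower)
    # Hypothetical perturbation: alpha scales the step relative to the range.
    x = x + alpha * levy_steps((n_coatis, dim), beta, rng) * (upper - lower)
    # Keep every coati inside the search space.
    return np.clip(x, lower, upper)


population = levy_init(n_coatis=30, dim=5, lower=-100.0, upper=100.0)
```

Most Lévy steps are small, while occasional long jumps spread individuals into regions that plain uniform sampling may leave sparsely covered.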

3.2. Nonlinear Inertia Step Size Factor

In the global optimization phase, premature convergence can hinder the algorithm’s ability to identify the global optimal solution. The incorporation of a nonlinear inertia step factor can mitigate the risk of premature convergence to local optima by dynamically adjusting the step size, thereby preserving the diversity of the population.

The introduction of a nonlinear inertia step size factor can greatly improve search efficiency and convergence performance, and the COA can dynamically adjust individuals’ search behavior. This dynamic adjustment mechanism effectively enhances the balance between exploration and exploitation. It also enhances adaptability and robustness.

Considering that updating a coati’s position is related to a coati’s current position, a nonlinear inertia step size factor is introduced. The factor adjusts the relationship between the coati’s position update and the current position information, depending on the individual coati’s position. Then, the factor is calculated by (10):

where Cn is a constant greater than 1 that controls the degree of nonlinearity; it is set to 2 in this method.

Initially, the value of ω is small, so the position update is less influenced by the current position. This gives the algorithm a broader search range and enhances its global exploration capability. As the search progresses, ω increases, and the influence of the current coati position grows, which helps the algorithm refine the optimal solution. This also improves the convergence speed and the local exploitation ability.
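To make the described behavior concrete, the sketch below uses a hypothetical power-law schedule ω = (t/T)^Cn. This particular form is an assumption chosen only because it starts small, grows over the iterations, and uses a constant greater than 1 to control the nonlinearity; it is not taken from (10). The names `inertia_factor`, `t`, and `t_max` are illustrative.

```python
def inertia_factor(t, t_max, c_n=2.0):
    """Hypothetical nonlinear inertia factor: small early, approaching 1 late."""
    if not 0 <= t <= t_max:
        raise ValueError("t must lie in [0, t_max]")
    # With c_n > 1 the curve stays low for longer, which prolongs exploration.
    return (t / t_max) ** c_n


# Sampled schedule for a 100-iteration run with Cn = 2 (the value used in the text).
schedule = [inertia_factor(t, 100) for t in (0, 25, 50, 75, 100)]
```

Under this assumed schedule, half of the run has passed before ω reaches 0.25, so the current position only dominates the update in the later iterations.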

The improved formula for modelling coati positions in the first stage is shown in (11):

3.3. Coati Vigilante Mechanism

In the local optimal search phase, the algorithm usually focuses on a certain region for detailed search. The vigilante mechanism can assist the algorithm in escaping local optima by introducing random perturbations or altering the direction of the search to enhance the algorithm’s exploration.

In the sparrow search algorithm, while some of the sparrows search for food, others act as vigilantes, responsible for monitoring the security of their surroundings and sounding an alarm when a potential threat is detected.

This mechanism not only improves the survival rate of the group but also facilitates the rapid dissemination of information. The introduction of the vigilante mechanism enables the algorithm to cope with complex optimization problems more effectively. In this way, the COA can maintain a higher degree of flexibility and dynamism in exploring the solution space [29].

Introducing the sparrow vigilante mechanism in the exploitation phase enhances the vigilance ability of the COA to search within an optional range. Coatis on the outskirts of the population swiftly relocate to a safe area when they sense danger. Coatis located in the center move around randomly to get closer to others in the population. The sparrow vigilante mechanism is formulated in (12).

where, in the notation of the original sparrow search algorithm, X_best represents the global optimal position in the current iteration, and β represents the step control parameter. K is randomly selected in [−1, 1]. f_i is the fitness value. f_g is the global best fitness value, and f_w is the worst one. ε is a very small constant.
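The vigilante update can be sketched as follows, using the form commonly given for the sparrow search algorithm rather than the exact expression in (12). The sketch assumes minimization, assumes the step control parameter is drawn from a standard normal distribution (as in the sparrow search algorithm), and uses the hypothetical names `vigilante_update`, `x_best`, `x_worst`, `f_g`, and `f_w`. Individuals worse than the global best move toward the best position; the best individual takes a small random step scaled by its distance to the worst position.

```python
import numpy as np


def vigilante_update(x, fitness, rng=None, eps=1e-50):
    """One sparrow-style vigilante step for a population (minimization assumed)."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    fitness = np.asarray(fitness, dtype=float)
    best, worst = np.argmin(fitness), np.argmax(fitness)
    x_best, x_worst = x[best], x[worst]
    f_g, f_w = fitness[best], fitness[worst]

    new_x = x.copy()
    for i in range(x.shape[0]):
        if fitness[i] > f_g:
            # Outskirts: jump to the neighborhood of the best position.
            beta = rng.normal(0.0, 1.0, x.shape[1])  # assumed N(0, 1) step control
            new_x[i] = x_best + beta * np.abs(x[i] - x_best)
        else:
            # Center: random walk; eps avoids division by zero.
            k = rng.uniform(-1.0, 1.0)
            new_x[i] = x[i] + k * np.abs(x[i] - x_worst) / ((fitness[i] - f_w) + eps)
    return new_x


positions = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
values = (positions ** 2).sum(axis=1)  # sphere function as a toy fitness
updated = vigilante_update(positions, values, rng=np.random.default_rng(0))
```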

Equation (12) can be improved to strengthen the global search capability. A dynamically adjusted step factor [30] is introduced, as shown in (13).

where the two dynamically adjusted step factors are defined in (14) and (15), respectively.

The introduction of these dynamic step factors enables the algorithm to adjust its search behavior dynamically. In the early iterations, the algorithm focuses on exploration, and in the later iterations it focuses on exploitation. These optimizations improve the adaptability and robustness of the COA.

3.4. Multi-Strategy Adaptive Coati Optimization Algorithm

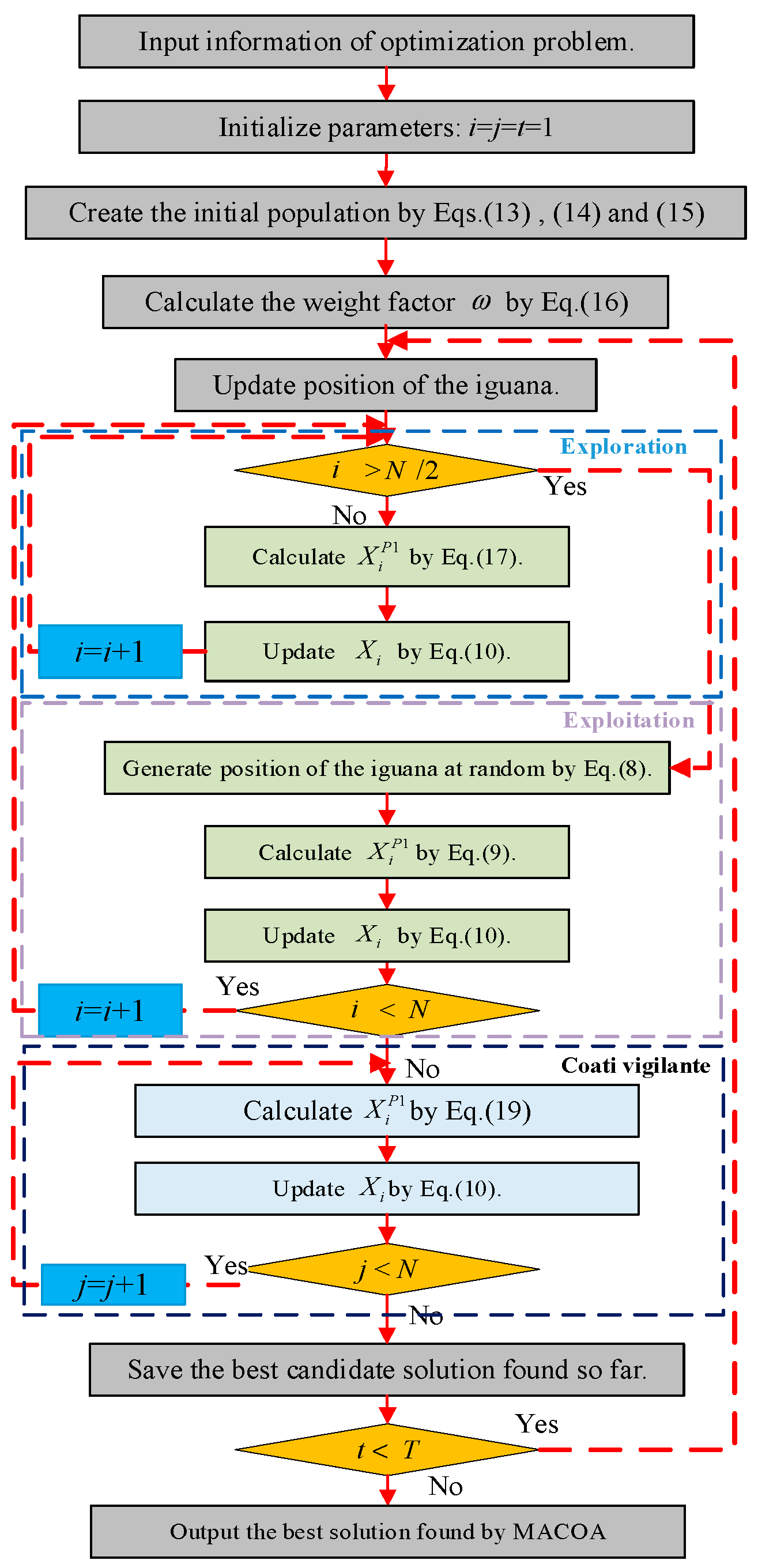

The detailed flowchart of the MACOA is presented in Figure 1. The pseudo-code for MACOA is given in Table 1.

5. Engineering Problems

A benchmark suite of real-world non-convex constrained optimization problems, together with established baseline results, is used to analyze the engineering problems. In these constrained engineering design problems, the constraints are handled through penalty terms, so that the optimization algorithm searches for the global optimum subject to the constraints. Problem difficulty within the benchmark is assessed using various evaluation criteria [43]. The algorithms COA, SABO, WSO, SCSO, GJO, TSA, SRS [44], MPA [45], and TLBO are included for comparative analysis, with each algorithm executed independently for 50 runs on all problems within the benchmark suite. Performance is evaluated based on feasibility rate (FR) and success rate (SR). FR represents the proportion of runs achieving at least one feasible solution within the maximum function evaluations. SR denotes the proportion of runs in which a feasible solution x satisfies f(x) − f(x*) ≤ 10⁻⁸ within the maximum function evaluations. This section uses tables of the best, standard deviation, mean, median, worst, FR, and SR values produced by the algorithms, convergence curves over 10,000 iterations, box plots from 50 runs, and search histories for statistical analysis.
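The two metrics can be computed from per-run results as in the following sketch. It assumes that each run reports its best objective value and whether that solution is feasible, and that both rates are expressed as percentages; the function name `feasibility_and_success_rate` and its arguments are hypothetical.

```python
import numpy as np


def feasibility_and_success_rate(best_values, feasible, f_star, tol=1e-8):
    """Return (FR, SR) in percent over a set of independent runs."""
    best_values = np.asarray(best_values, dtype=float)
    feasible = np.asarray(feasible, dtype=bool)
    # FR: share of runs that ended with a feasible solution.
    fr = 100.0 * feasible.mean()
    # SR: share of runs whose feasible solution is within tol of the known optimum.
    success = feasible & (best_values - f_star <= tol)
    sr = 100.0 * success.mean()
    return fr, sr


# Toy example with four runs and a known optimum of 1.0.
fr, sr = feasibility_and_success_rate(
    best_values=[1.0, 1.0 + 1e-9, 1.5, 0.9],
    feasible=[True, True, True, False],
    f_star=1.0,
)
```

In the toy example the last run reports a value below the optimum but is infeasible, so it counts toward neither metric.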

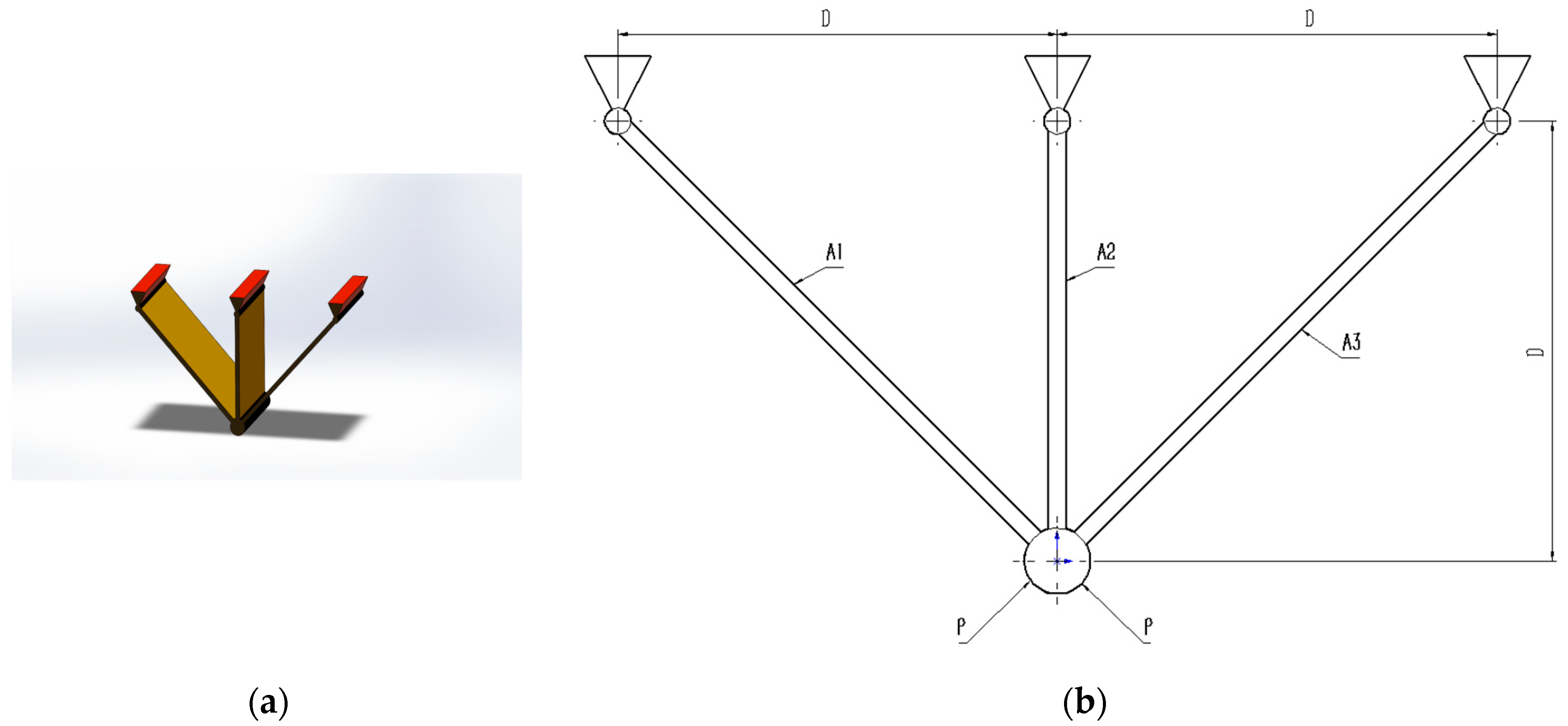

5.1. Three-Bar Truss Design Problem

The three-bar truss design problem is to minimize the volume while satisfying the stress constraints on each truss member. Figure 5 shows the geometry. Within the benchmark suite, the problem features D = 2 decision variables, g = 3 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 2.6389584338 × 10² [43].

The design problem for the three-bar truss can be outlined as follows:

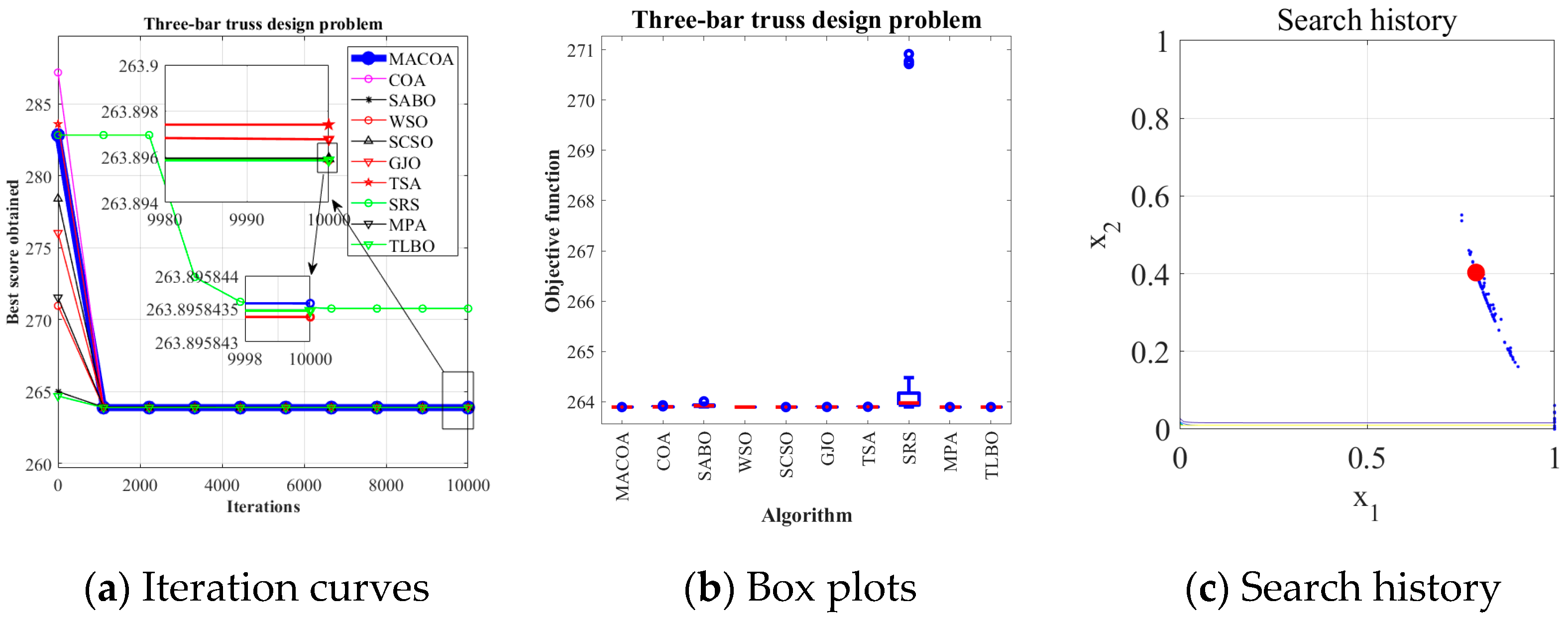
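As an illustration of how a penalty term turns this constrained problem into an unconstrained one, the sketch below uses the formulation of the three-bar truss that is common in the literature (bar length 100, load and allowable stress both 2, cross-sections in [0, 1]). These constants, the quadratic static penalty, and the weight `rho` are assumptions for illustration and may differ from the setup used in the experiments.

```python
import math

BAR_LENGTH = 100.0  # assumed bar length
LOAD = 2.0          # assumed applied load
STRESS = 2.0        # assumed allowable stress


def truss_volume(x):
    """Objective: structural volume for cross-sections x = (x1, x2)."""
    x1, x2 = x
    return (2.0 * math.sqrt(2.0) * x1 + x2) * BAR_LENGTH


def truss_constraints(x):
    """Stress constraints g1..g3; a design is feasible when every g <= 0."""
    x1, x2 = x
    denom = math.sqrt(2.0) * x1 ** 2 + 2.0 * x1 * x2
    g1 = (math.sqrt(2.0) * x1 + x2) / denom * LOAD - STRESS
    g2 = x2 / denom * LOAD - STRESS
    g3 = 1.0 / (math.sqrt(2.0) * x2 + x1) * LOAD - STRESS
    return [g1, g2, g3]


def penalized_volume(x, rho=1e6):
    """Static quadratic penalty: objective plus rho times squared violations."""
    violation = sum(max(0.0, g) ** 2 for g in truss_constraints(x))
    return truss_volume(x) + rho * violation


near_optimum = truss_volume((0.788675, 0.408248))  # close to the reported f(x*)
```

A metaheuristic then minimizes `penalized_volume` directly; feasible designs are scored by their volume alone, while infeasible ones are pushed away by the penalty.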

The experimental results in Table 10 show that MACOA has FR = 2 and SR = 10. MACOA’s FR is second only to that of MPA, while its SR is second only to those of MPA and TLBO. Moreover, the results of MACOA are significantly better than those of COA. Figure 6a illustrates the convergence of the best solutions of the ten algorithms. The box plot is displayed in Figure 6b, and it can be seen that MACOA has strong stability. Figure 6c shows the search history, which is concentrated in the neighborhood of the global optimal solution. Overall, these results show that MACOA outperforms COA.

5.2. Tension or Compression Spring Design Problem

The design of tension or compression springs represents a common optimization problem in mechanical engineering and structural design. The function of this device is to store and release energy.

Therefore, several parameters must be considered during the spring design process. Within the benchmark suite, the problem features D = 3 decision variables, g = 4 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 1.2665232788 × 10⁻² within the benchmark [43]. Figure 7 illustrates the tension or compression spring configuration.

The design problem for tension or compression springs can be outlined as follows:

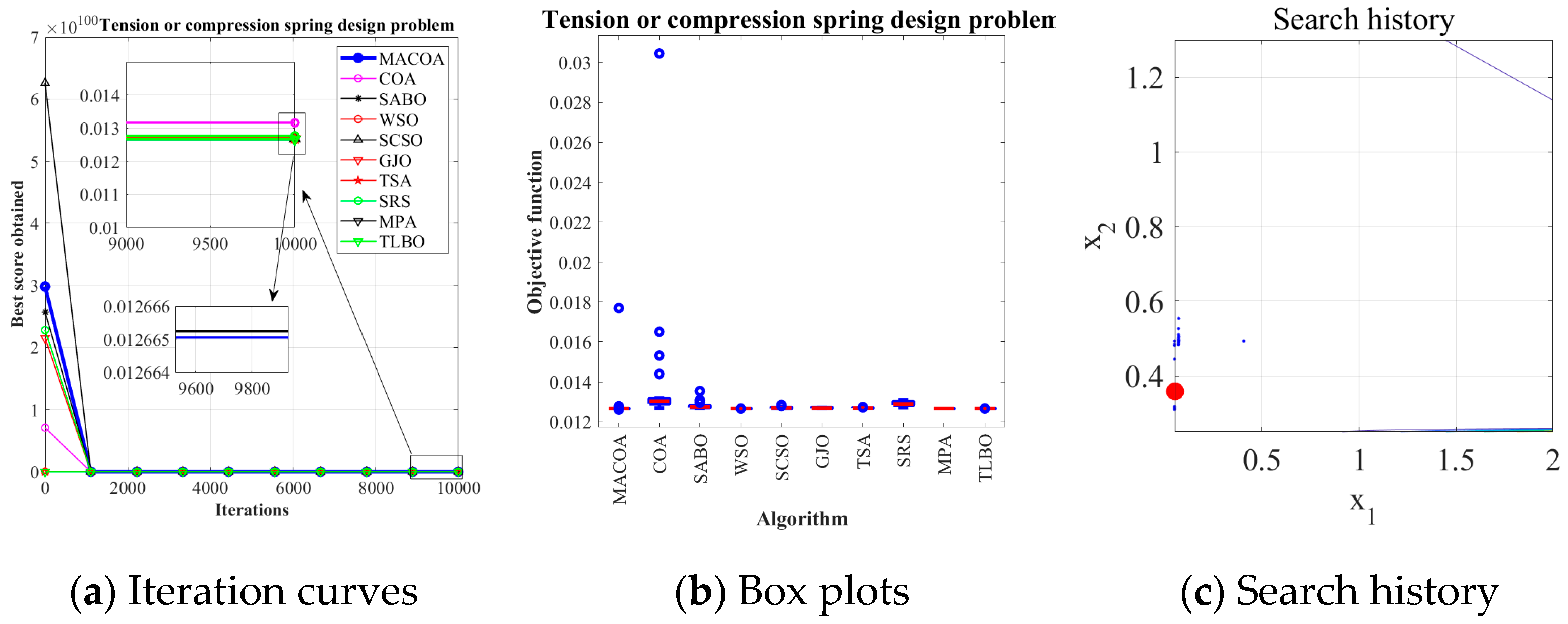

The experimental results in Table 11 show that MACOA has an FR of 98 and an SR of 98. These results indicate that MACOA has the highest FR among all the compared algorithms, and its SR is second only to those of WSO and MPA. Figure 8a illustrates the convergence of the best solutions of the ten algorithms. The box plot is displayed in Figure 8b, where MACOA has very few outliers. Figure 8c shows the search history. Although most of the search range lies on the boundary, most of the search history lies around the global optimum. Overall, these results show that MACOA outperforms COA.

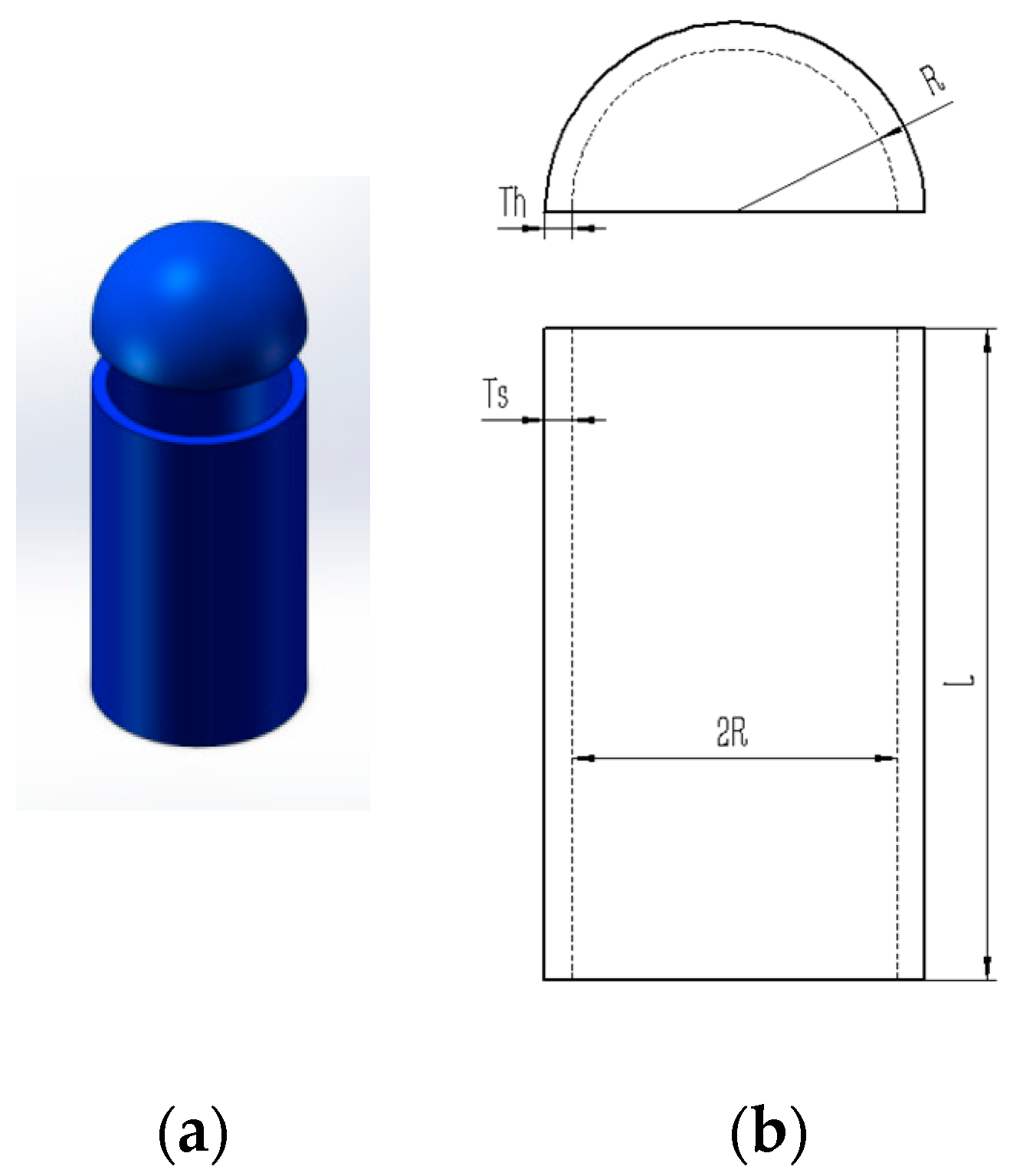

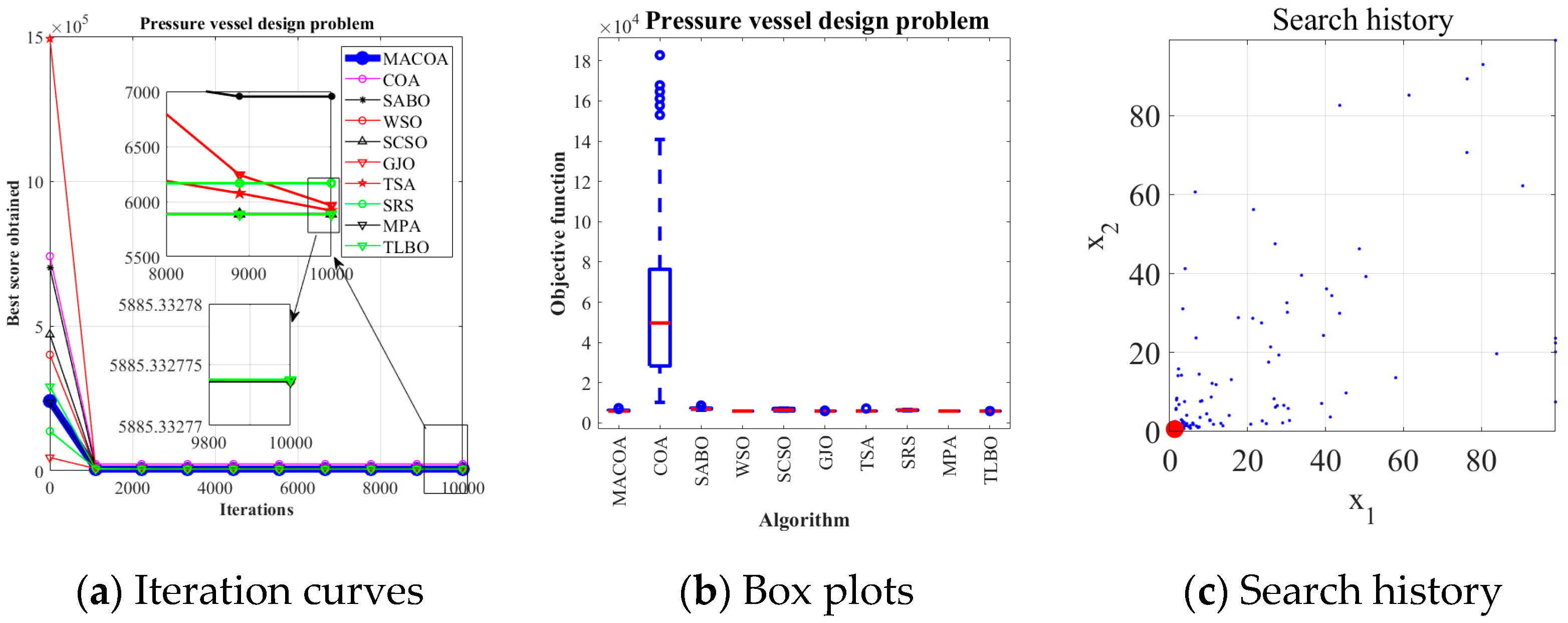

5.3. Pressure Vessel Design Problem

The pressure vessel design problem focuses on minimizing weight while maintaining structural integrity under high-pressure operating conditions. This involves optimizing design parameters, including material selection and wall thickness, within specified constraints to minimize the overall manufacturing costs. Within the benchmark suite, the problem features D = 4 decision variables, g = 4 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 5.8853327736 × 10³ [43]. Figure 9 illustrates the pressure vessel configuration. The design problem for the pressure vessel can be outlined as follows:

The results in Table 12 show that MACOA has the highest FR value among all the algorithms, with both FR and SR values of 98. Meanwhile, the SR value of MACOA is second only to those of TLBO and MPA. Figure 10a shows the convergence of the best solutions of the ten algorithms. Figure 10b shows the box plots, from which it can be seen that the outliers and quartiles of MACOA are concentrated, indicating a certain degree of stability. Figure 10c shows the search history of MACOA, most of which is concentrated near the region of the global optimal solution. These results show that MACOA performs competitively on this problem.

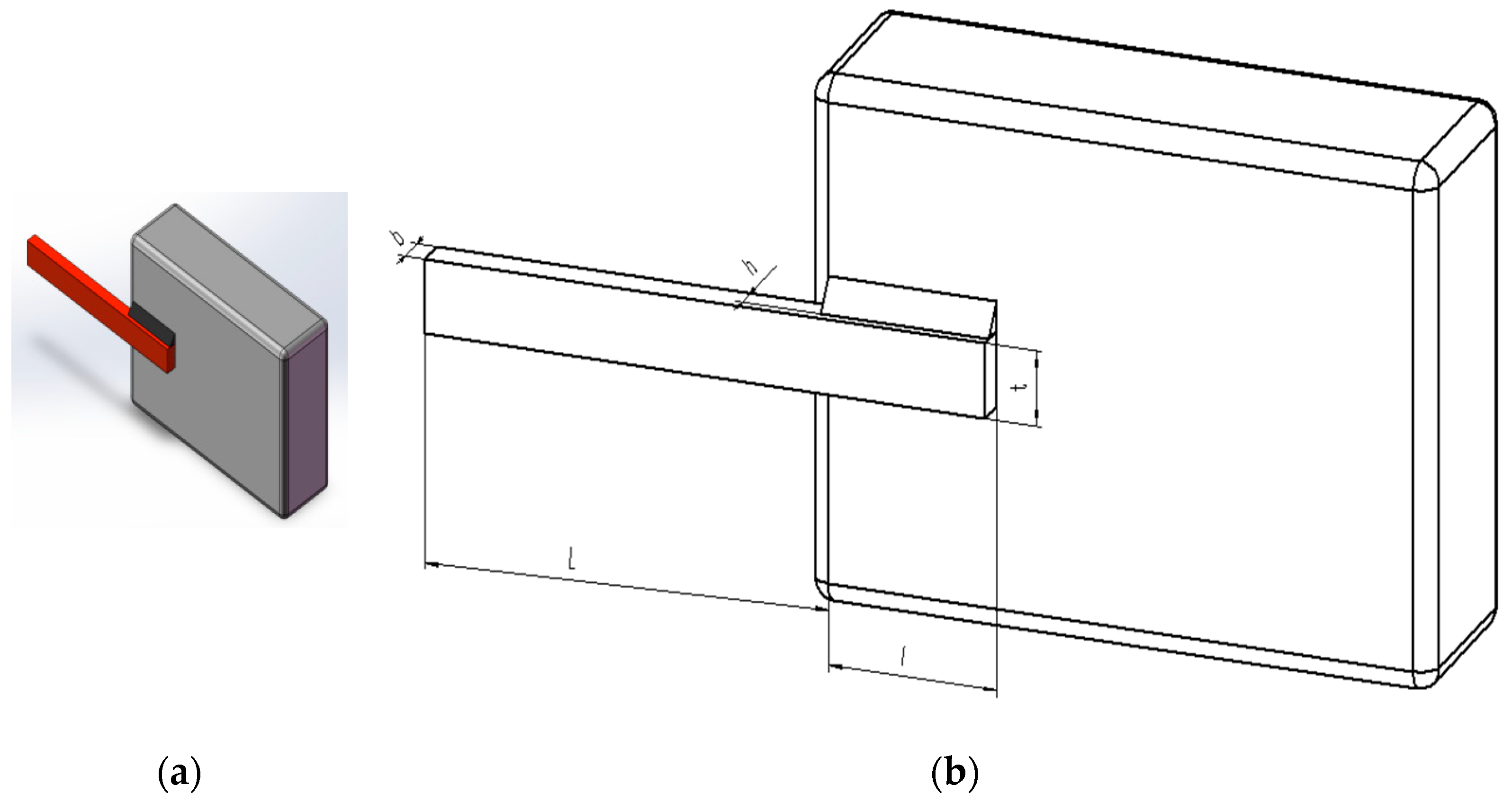

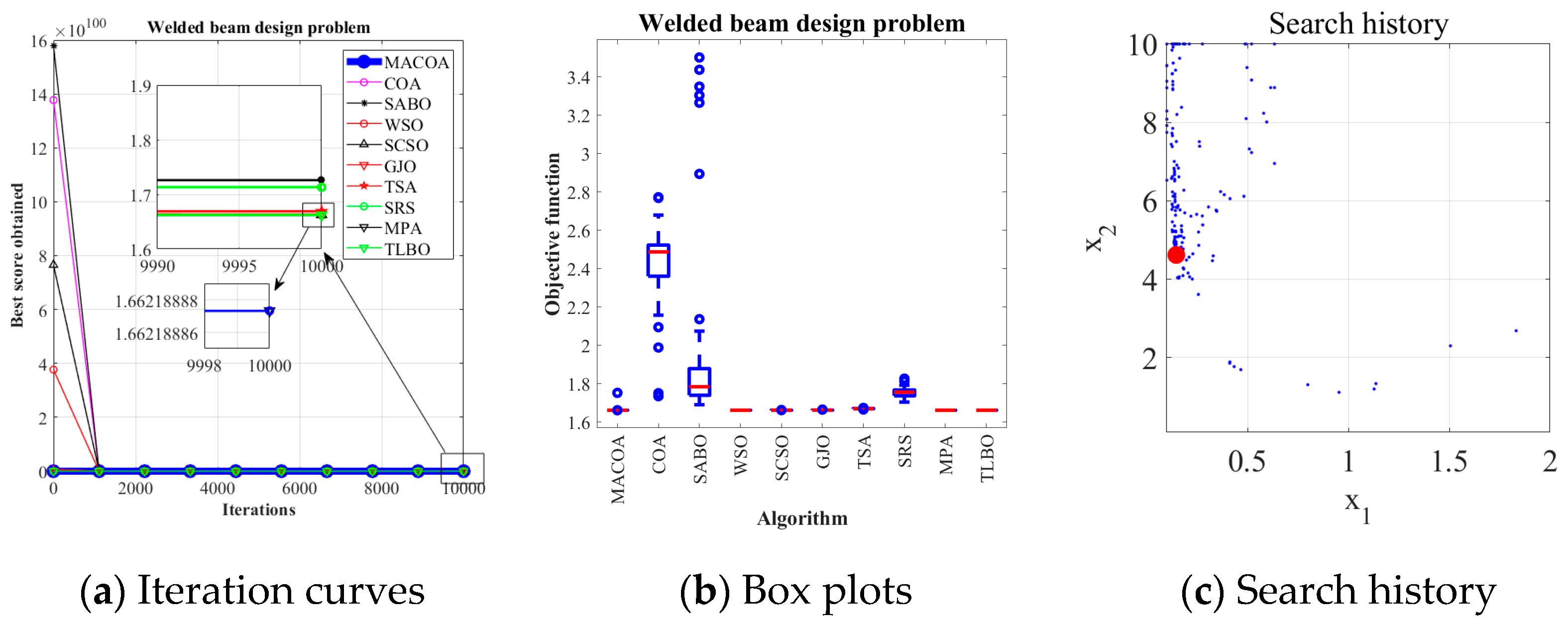

5.4. Welded Beam Design Problem

The welded beam design problem is to maximize structural performance while minimizing the beam’s weight by optimizing parameters such as weld dimensions, geometry, and placement, subject to specific constraints. Within the benchmark suite, the problem features D = 4 decision variables, g = 7 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 1.6702177263 [43]. Figure 11 describes the welded beam structure.

The design problem for the welded beam can be outlined as follows:

The MACOA results in Table 13 show that both FR and SR are 84, which is significantly better than COA on both metrics. Figure 12a shows the convergence of the best solutions found by the ten algorithms. Figure 12b shows the boxplots generated from 50 runs, where MACOA is far superior to COA in terms of the number of outliers and the median. Figure 12c shows the search history of MACOA, where most of the search points are clustered around the lower bound. These results clearly show the superior performance of MACOA compared to COA.

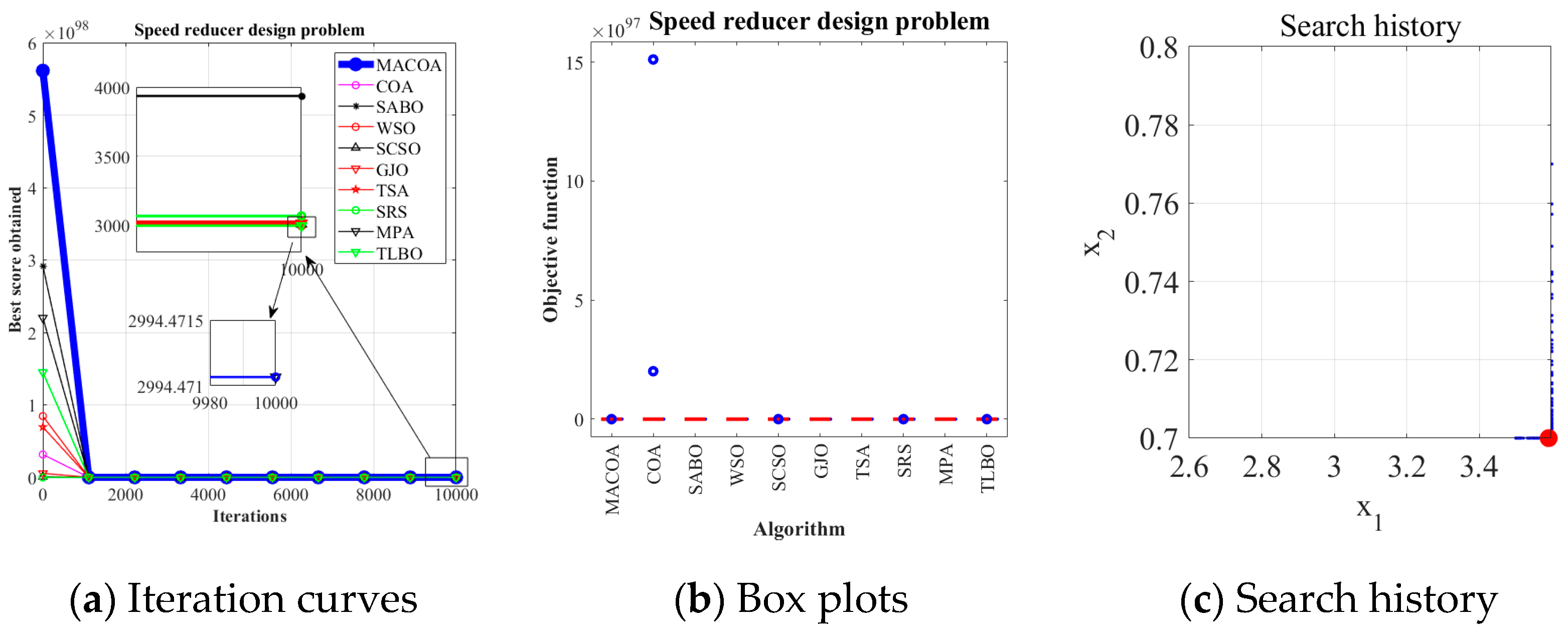

5.5. Speed Reducer Design Problem

The speed reducer design problem is a well-known optimization challenge in engineering design. Within the benchmark suite, the problem features D = 7 decision variables, g = 11 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 2.9944 × 10³ [43]. Figure 13 illustrates the speed reducer configuration.

The design problem for the speed reducer can be outlined as follows:

Table 14 shows that MACOA achieves FR = 46 and SR = 46, which are much higher than those of COA. Although WSO, MPA, and TLBO have slightly better FR and SR values, MACOA still outperforms the remaining algorithms, including COA. Figure 14a shows the convergence of the best solutions of all algorithms. Figure 14b shows the boxplots of 50 runs, demonstrating that MACOA has far fewer outliers than COA. Figure 14c shows the search history of MACOA, with most of the search points clustered around the boundary and the global optimal solution. From the above analysis, it can be concluded that MACOA is significantly better than COA.

5.6. Gear Train Design Problem

The gear train design problem is a classic engineering design problem. Its goal is to minimize the deviation of the gear ratio from its required value. Within the benchmark suite, the problem features D = 4 decision variables, g = 2 inequality constraints, and h = 0 equality constraints. The optimal value of the objective function is known to be f(x*) = 0 [43]. Figure 15 illustrates the gear train configuration.

The design problem for the gear train can be outlined as follows:

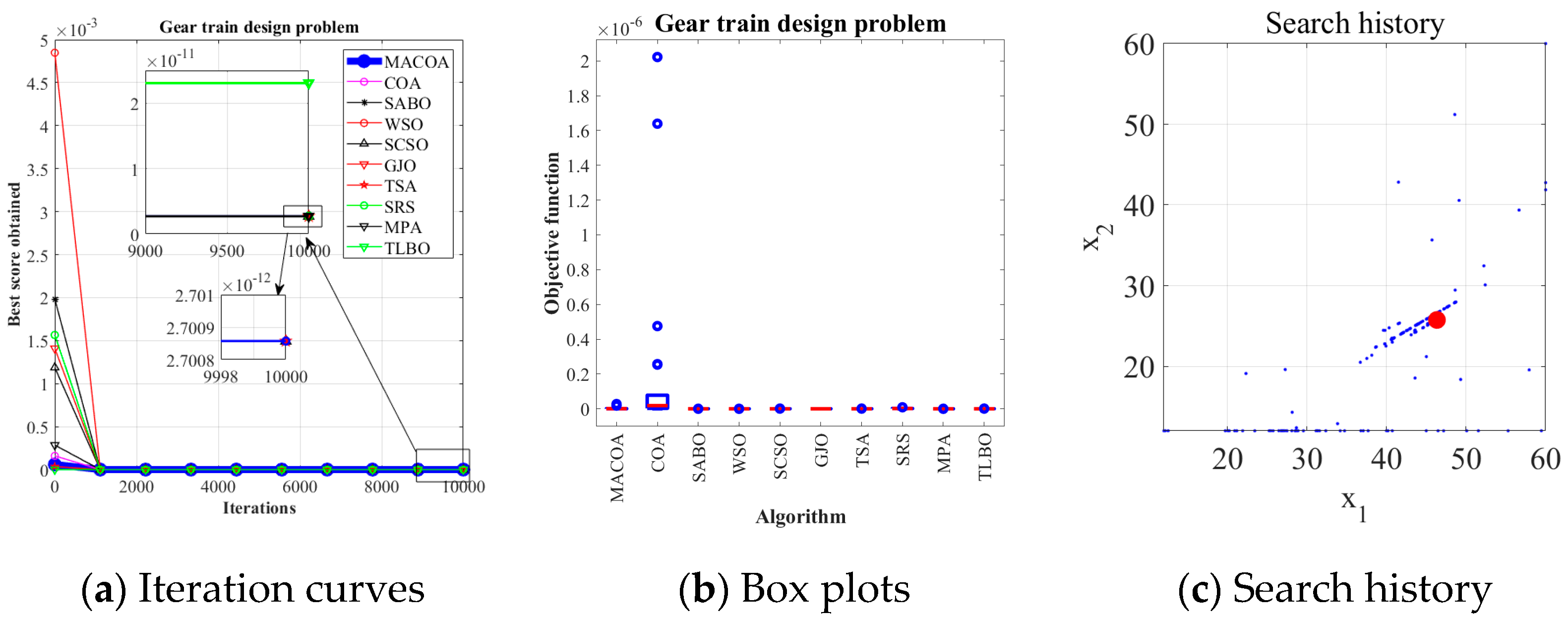

Table 15 shows that the SR of MACOA is 92%, which greatly exceeds that of COA. Figure 16a and Figure 16b show the iterative process and the box plot distribution of the optimal solutions for all the algorithms, respectively. Figure 16c shows the search history of MACOA over 10,000 iterations, with most of the search points located in the region of the global optimum. These results further highlight the improved performance of MACOA compared to COA.

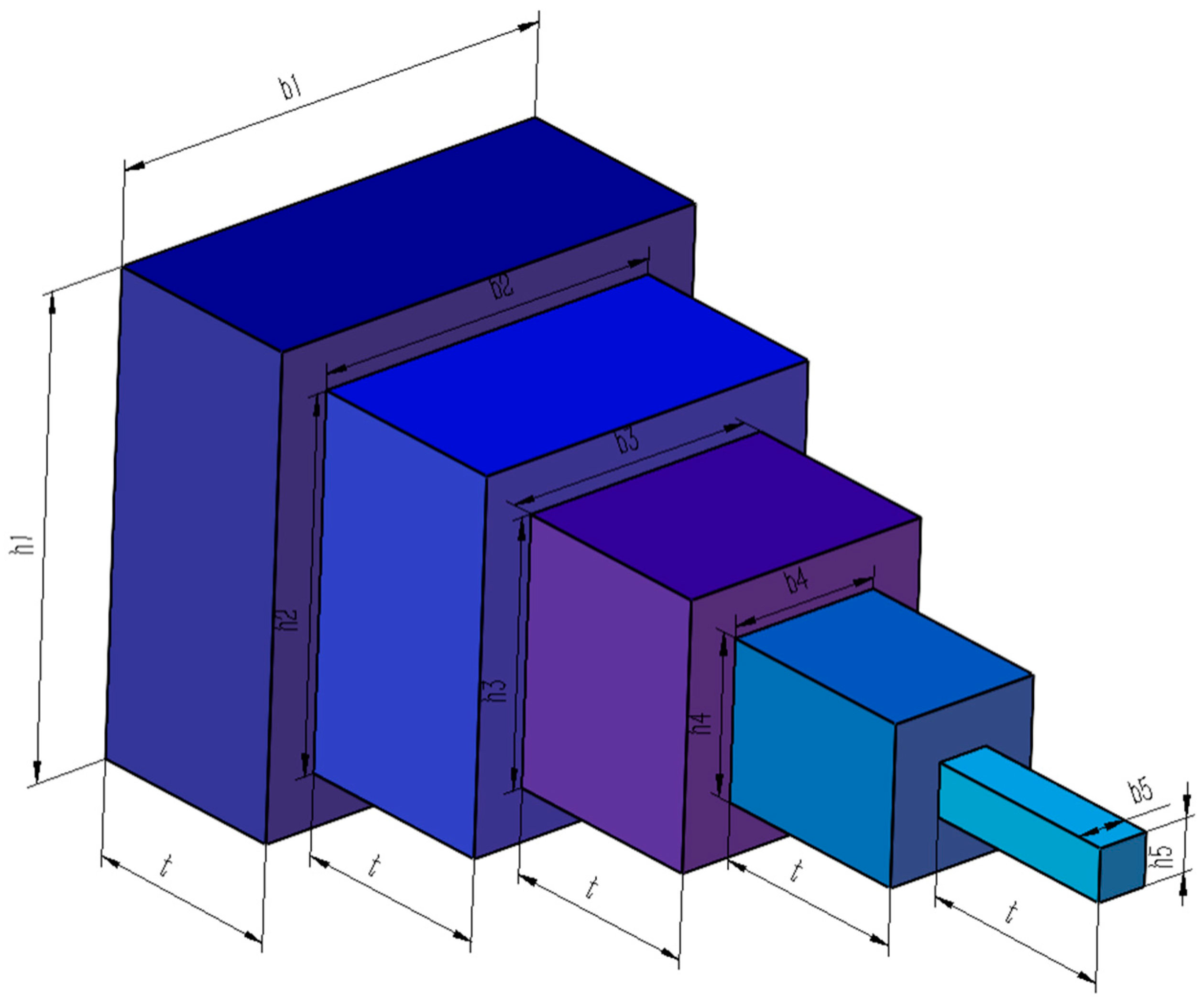

5.7. Cantilever Beam Design Problem

The design of a cantilever beam is a classic engineering design problem. Within the benchmark suite, the problem features D = 5 decision variables, g = 1 inequality constraint, and h = 0 equality constraints. The established optimal value of the objective function is f(x*) = 1.34 [43]. Figure 17 illustrates the cantilever beam configuration.

The design problem for the cantilever beam can be outlined as follows:

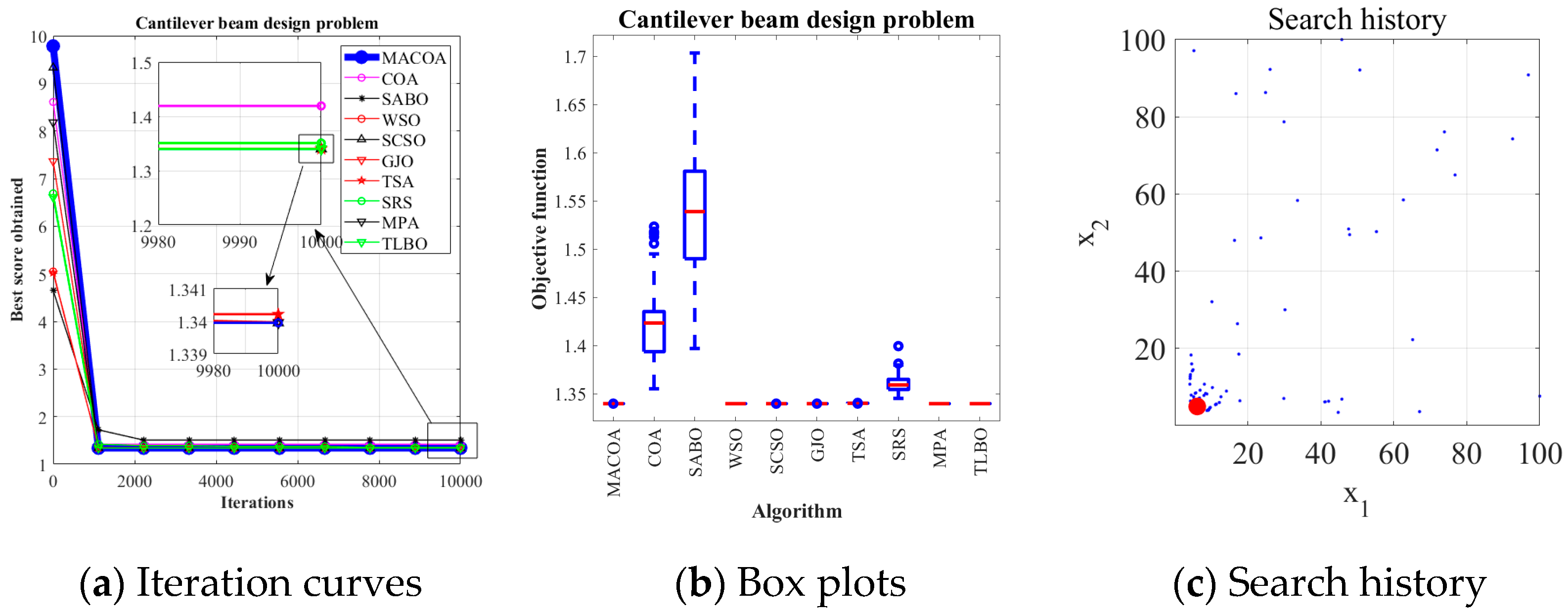
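For reference, the cantilever beam problem is often written in the literature as a weight objective over five section sizes with a single inequality constraint. The sketch below follows that common formulation; the coefficients and the function names `cantilever_weight` and `cantilever_constraint` are assumptions and may differ in detail from the benchmark version used here. The sample design is a near-optimal point reported in the literature for this formulation.

```python
def cantilever_weight(x):
    """Objective: beam weight for five section sizes x1..x5."""
    return 0.0624 * sum(x)


def cantilever_constraint(x):
    """Single inequality constraint; the design is feasible when g(x) <= 0."""
    coefficients = (61.0, 37.0, 19.0, 7.0, 1.0)
    return sum(c / xi ** 3 for c, xi in zip(coefficients, x)) - 1.0


# A near-optimal design for the assumed formulation.
design = (6.016, 5.309, 4.494, 3.502, 2.153)
weight = cantilever_weight(design)
```

The weight of this design is close to the optimum of 1.34 stated above, which suggests the assumed formulation is at least consistent with the benchmark value.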

Table 16 shows that MACOA has both FR and SR of 100. These metrics far exceed those of COA and are comparable to those of WSO, SCSO, MPA, and TLBO. Figure 18a shows the convergence of all the algorithms. Figure 18b shows the boxplot distribution, from which it can be seen that MACOA has better stability and convergence than COA. Figure 18c shows the search history of MACOA, where most of the search points are clustered around the global optimum. MACOA also demonstrates better population convergence. These results show that MACOA’s performance is very competitive: it outperforms COA and is on par with other strong optimization algorithms.

5.8. Summary of Engineering Problems

Examining the data gathered from tackling the seven engineering problems discussed above, it is clear that the MACOA algorithm excels compared to other algorithms regarding feasibility and success rate in most engineering problems. This demonstrates MACOA’s exceptional capability to solve constrained engineering problems.

6. Conclusions and Future Prospects

The challenge of slow convergence and the tendency of COA to converge to local optima are addressed in this paper. To mitigate these issues, MACOA is introduced, which integrates Lévy flight, nonlinear inertia weight factors, and the coati vigilante mechanism. The Lévy flight mechanism is introduced into the population initialization phase to improve the quality of initial solutions. Then, the nonlinear inertia weight factors are introduced in the exploration phase to improve COA’s global search capabilities and accelerate convergence. Additionally, the coati vigilante mechanism is implemented in the exploitation phase to enable the algorithm to quickly escape from local optima and address the imbalance between the exploration and exploitation capabilities of COA.

Experiments are conducted based on the IEEE CEC2017 test functions, comparing MACOA with 11 other popular algorithms across three dimensions. The analysis of convergence curves, boxplots, and search history results indicates that MACOA achieves the best performance on 9 test functions with an average ranking of 2.17 in the 30-dimensional experiment, 12 test functions with an average ranking of 1.90 in the 50-dimensional experiment, and 14 test functions with an average ranking of 1.76 in the 100-dimensional experiment. Overall, MACOA outperforms all compared algorithms in the CEC2017 test function experiments.

In application experiments, MACOA is tested on seven engineering problems. By analyzing the experimental results, it is clear that MACOA exhibits the best performance in four of these problems and outperforms COA in all application scenarios. Therefore, the proposed MACOA significantly improves the performance of COA and holds strong application value in constrained engineering optimization problems.

Although MACOA is effective overall, some areas still need improvement. In the standard test function experiments, MACOA did not perform as well as other algorithms on some functions. In future work, we will continue our research on several optimization strategies, particularly the nonlinear strategy in this study, where the adaptive parameters change with iterations. In addition, we are working on integrating MACOA into complex problems from other disciplines. These efforts will further validate the adaptability and effectiveness of MACOA in different domains.