Abstract

To overcome the low search efficiency and susceptibility to local optima of particle swarm optimization (PSO), this study proposes a multi-strategy PSO with information acquisition, referred to as IA-DTPSO. Firstly, the particles are initialized with a Sobol sequence to achieve a more uniform initial population distribution. Secondly, an update scheme based on information acquisition is established, which adopts different information processing methods according to the evaluation status of particles at different stages to improve the accuracy of information shared between particles. Then, Spearman’s correlation coefficient (SCC) is introduced to determine the dimensions that require reverse solution position updates, and the tangent flight strategy is used to enrich the single update method inherent in PSO. Finally, a dimension learning strategy is introduced to strengthen the activity of individual particles, thereby improving the diversity of the entire population. To analyze IA-DTPSO comprehensively, its exploration and exploitation (ENE) capability is first validated on CEC2022. Subsequently, IA-DTPSO is compared with other algorithms on different dimensions of CEC2022, and the results show that IA-DTPSO wins on 58.33% and 41.67% of the functions in the 10- and 20-dimensional cases, respectively. Finally, IA-DTPSO is employed to optimize the parameters of the time-dependent gray model (1,1,r,ξ,Csz) (TDGM (1,1,r,ξ,Csz)) and applied to simulate and predict total urban water resources (TUWRs) in China. Using four error evaluation indicators, the method is compared with other algorithms and existing models. The results show that the total MAPE (%) value obtained in simulation after IA-DTPSO optimization is 5.9439, the smallest error among all compared methods and models, verifying the effectiveness of this method for predicting TUWRs in China.

1. Introduction

Optimization is the process of determining the most efficient solution to a practical problem. For an optimization problem, it is necessary to first clarify its three basic elements: the number of decision variables, the range of each variable, and the objective function. At present, optimization techniques are employed in various domains such as business, engineering, science, and medicine [1,2,3,4]. However, classical numerical methods struggle to offer accurate solutions for non-convex problems with non-linear constraints, leading to extended computation time [5]. Meta-heuristics are key tools for developing efficient optimizers that can effectively solve challenging real-world problems [6,7]. Metaheuristic algorithms (MAs) can effectively traverse the solution space in uncertain environments to locate the global optimum or an approximation of it [8]. This means that although MAs cannot guarantee an exact solution, they can usually produce a near-optimal one [9]. MAs are highly scalable, can be designed and implemented directly, and avoid the difficulties of complex mathematical derivation [10,11]. Therefore, when traditional optimization techniques cannot deliver exact solutions, MAs become an alternative for quickly solving large-scale optimization problems [12]. The main features of MAs can be summarized as follows:

- It is an approximate method that is not specific to a particular problem.

- It is a process of continuously learning towards the optimal solution through trial and error.

- It demonstrates significant versatility and robustness.

- It is an optimization logic used to determine approximate solutions to complex global optimization problems.

All population-based MAs possess these characteristics and differ only in the operators and mechanisms they use. In addition, MAs rely on two essential search tactics, namely exploration and exploitation (ENE) [13,14]. Exploration is the ability to search the solution space on a global scale, which is associated with avoiding and escaping local optima. Exploitation refines promising solutions by searching their vicinity, which improves the local solution quality of MAs [15]. Therefore, whether an MA performs well depends on whether an appropriate balance can be achieved between these two tactics. This typically involves how to use search operators to effectively extract and utilize information, thereby generating more promising solutions to problems [16].

Thirty years ago, MAs represented by PSO [17] gained widespread recognition in the research community, and more and more MAs rapidly emerged under its influence. So far, thousands of MAs have been made public. According to different sources of inspiration, MAs can be broadly divided into four categories: swarm-behavior inspired, human-behavior inspired, evolution-phenomena inspired, and nature-science-phenomena inspired.

- (i) Swarm-behavior inspired: Swarm-behavior-inspired algorithms are techniques that mimic collaborative behavior in biological social systems to solve problems. They organize a large number of simple individual units (such as ants, bees, bird swarm agents) together, allowing them to interact and learn in complex environments, and jointly search for optimal solutions. In recent years, newly proposed population-based algorithms include: Whale Optimization Algorithm (WOA) [18], Northern Goshawk Optimization (NGO) [19], Bottlenose Dolphin Optimizer (BDO) [20], Nutcracker Optimization Algorithm (NOA) [21], Mantis Search Algorithm (MSA) [22], Genghis Khan Shark Optimizer (GKSO) [23], Black-winged kite algorithm (BKA) [24], Secretary Bird Optimization Algorithm (SBOA) [25], and Horned Lizard Optimization Algorithm (HLOA) [26].

- (ii) Human-behavior inspired: Human-behavior-inspired algorithms typically draw inspiration from human creativity, artistic thinking, and problem-solving approaches, simulating the process of humans making a series of decisions through team collaboration. In recent years, this type of algorithm includes: Enterprise Development Optimizer (EDO) [27], Hiking Optimization Algorithm (HOA) [28], Great Wall Construction Algorithm (GWCA) [29], Football Team Training Algorithm (FTTA) [30], Alpine Skiing Optimization (ASO) [31], Information Acquisition Optimizer (IAO) [32], Adolescent Identity Search Algorithm (AISA) [33], and Information Decision Search Algorithm (IDSE) [34].

- (iii) Evolution-phenomena inspired: Evolution-phenomena-inspired algorithms are mainly a type of computational technology that draws inspiration from biological evolution theory. These mainly include Genetic Algorithm (GA) [35], Genetic Programming (GP) [36], Evolutionary Programming (EP) [37], Evolutionary Strategy (ES) [38], Differential Evolution (DE) algorithm [39], Biogeography-based optimization (BBO) [40], Clonal Selection Algorithm (CSA) [41], and Alpha Evolution (AE) [42].

- (iv) Nature-science-phenomena inspired: Nature-science-phenomena-inspired algorithms mainly come from observations of natural phenomena and scientific laws in various fields. The latest achievements in this research direction mainly include: Tangent Search Algorithm (TSA) [43], Kepler Optimization Algorithm (KOA) [44], Exponential-Trigonometric Optimization (ETO) algorithm [45], Artemisinin Optimization (AO) algorithm [46], Weighted Average Algorithm (WAA) [5], Newton-Raphson-based Optimizer (NRBO) [47], Polar Lights Optimization (PLO) [48], and FATA morgana algorithm (FATA) [49].

In addition to these classic MAs, many improved versions of MAs have emerged in this field and been applied in various practical applications. Yan et al. [50] developed an enhanced human memory optimizer to solve engineering optimization problems. Hu et al. [51] studied a multi-strategy DE algorithm for the smooth path planning of multi-scale robots and obtained a motion path with higher smoothness. Gobashy et al. [7] used WOA to solve the problem of spontaneous potential energy anomalies caused by 2D tilted plates of infinite horizontal length. Li et al. [52] proposed an improved seagull optimizer for fault location in distribution networks. Jamal et al. [53] proposed an improved Pelican optimization algorithm to solve non-convex stochastic optimal power flow problems in power systems, thereby reducing generation costs and emissions.

Although the successive emergence of various new MAs has added great vitality to the field of intelligent optimization, existing MAs still have several limitations, which can be generalized as below:

- Difficulty in achieving the optimal balance of ENE, causing MAs to fall into local optima.

- Multiple operators are typically used to approximate the optimum, complicating the search scenario.

- Performance degradation in high-dimensional search space.

As one of the classic MAs, PSO has hundreds or even thousands of improved versions, but it is difficult to select the best among them because of the No Free Lunch (NFL) theorem, to which all MAs are subject [54]. This theorem states that no MA is universally applicable to all types of problems. That is to say, an MA may perform well on specific types of problems but be less effective on others. Furthermore, the basis vectors of the search operators in PSO typically determine the starting point of the search and are sampled directly from the solution set instead of being adaptively selected. In addition, particles rely excessively on information from two historical best positions and lack the capacity to gain information from other particles. Finally, PSO still suffers from an imbalance of ENE. In response to these shortcomings and in light of the NFL theorem, this study proposes a multi-strategy improved PSO (IA-DTPSO), which is based on the information acquisition strategy and incorporates four other improvement strategies as targeted auxiliary improvements. Compared with the large number of existing PSO variants, this method has a more novel structure and a more refined update method.

In recent years, combining MAs with predictive models has become a hot topic. However, existing prediction models have the characteristics of slow technological updates and slow application of new technologies. Usually, these models are mostly based on historical data, which makes it difficult to cope with complex changes in the future, thereby affecting prediction accuracy and efficiency. At present, this field has achieved relatively satisfactory optimization results by utilizing various hybrid MAs or other machine learning methods to process models. However, these methods often have low universality, and there is still room for improvement in terms of prediction accuracy and efficiency. In order to overcome these limitations, this study combines the proposed IA-DTPSO with the simulation and prediction of China’s TUWRs. Through the distinctive update method of PSO variants, the parameters of TDGM (1,1,r,ξ,Csz) are optimized step by step to achieve the solution of the simulation and prediction problem of China’s TUWRs.

This study’s main contributions are as below:

- (i) A multi-strategy PSO with information acquisition, referred to as IA-DTPSO, is proposed and the entire optimization process is modeled.

- (ii) The good ENE ability of IA-DTPSO is validated on CEC2022.

- (iii) IA-DTPSO is compared with 11 other algorithms on different dimensions of CEC2022, verifying the superiority of IA-DTPSO.

- (iv) IA-DTPSO and seven other algorithms are employed to optimize parameters of TDGM (1,1,r,ξ,Csz) and applied to predict TUWRs in China. In addition, the IA-DTPSO optimized model is compared with three existing models, and the results indicate that the model optimized by IA-DTPSO achieves the minimum error among the four error evaluation metrics in both comparisons.

The remainder of this study is organized as follows: Section 2 reviews the basic framework of PSO. Section 3 gives an optimization model for IA-DTPSO. Section 4 analyzes and discusses the experimental results of IA-DTPSO on CEC2022. Section 5 uses the proposed IA-DTPSO to optimize TDGM (1,1,r,ξ,Csz) and applies it to simulate and predict TUWRs in China. Section 6 summarizes this study and outlines prospects for future work.

2. The Classic PSO

PSO [16] regards a bird flock as a group of particles, each with its own trajectory, and the trajectory of a particle depends on its velocity v and position x. The velocity v at time t + 1 is calculated by Equation (1) [16]:

$$v_i^{t+1} = \omega v_i^{t} + c_1 r_1 \left(PB_i^{t} - x_i^{t}\right) + c_2 r_2 \left(GB^{t} - x_i^{t}\right) \tag{1}$$

where ω is the inertia weight, c1 and c2 represent the individual and social cognitive factors, respectively, and r1 and r2 are random numbers in [0, 1]. Assuming a population of N particles, $PB_i^{t}$ and $GB^{t}$ represent the historical best positions found by the i-th particle and by all N particles up to time t, respectively.

At time t + 1, x is updated according to the current position of the particle and the rate of change towards the next position, as shown in Equation (2) [16]:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \tag{2}$$
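As a minimal sketch of the velocity and position updates described above, the following Python function advances one particle by a single step. The function name `pso_step`, the default values of `w`, `c1`, and `c2`, and the choice to draw r1 and r2 independently for every dimension are illustrative assumptions, not settings taken from this study.

```python
import random


def pso_step(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Advance one particle by one iteration (all vectors are lists of floats).

    w, c1, c2 are placeholder values (assumption); r1 and r2 are drawn
    per dimension here, which is one common convention (assumption).
    """
    new_x, new_v = [], []
    for xj, vj, pj, gj in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        # Inertia term + pull towards personal best + pull towards global best.
        vj_next = w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        new_v.append(vj_next)
        # The new position is the old position plus the new velocity.
        new_x.append(xj + vj_next)
    return new_x, new_v
```

When a particle already sits on both its personal best and the global best, the two attraction terms vanish and only the inertia term moves it, which is one way to see why the classic update can stagnate.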

3. The Proposed IA-DTPSO

In this section, we introduce five strategies to optimize PSO and propose an improved PSO (IA-DTPSO) method. The proposed IA-DTPSO is described in detail below.

3.1. Sobol Sequence Initialization

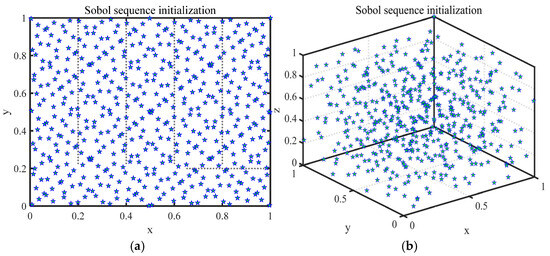

The distribution of the initial solutions strongly affects the convergence speed of MAs. A uniformly spread initial population can effectively improve the search efficiency of MAs. Therefore, this article initializes the population with a Sobol sequence [55] instead of the random initialization scheme in PSO. The Sobol sequence is a low-discrepancy sequence that uses deterministic quasi-random numbers instead of pseudo-random numbers to fill a multidimensional hypercube with points as evenly as possible, thereby generating wider coverage of the solution space. The initial population position generated by the Sobol sequence is shown in Equation (3) [55]:

$$x_i = lb + \mathrm{Sobol}_i \times (ub - lb) \tag{3}$$

where $\mathrm{Sobol}_i$ denotes the i-th point generated by the Sobol sequence, and ub and lb denote the upper and lower bounds, respectively. The population spatial distribution of Sobol sequence initialization is shown in Figure 1.

Figure 1. Population spatial distribution of Sobol sequence initialization. (a) Distribution in 2D space. (b) Distribution in 3D space.
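The mapping from a low-discrepancy point in the unit hypercube to the search bounds can be sketched as follows. To keep the example free of third-party dependencies, it uses a Halton sequence as a stand-in low-discrepancy generator; this is a swapped-in technique, not the Sobol generator used in this study, and in practice a Sobol generator such as `scipy.stats.qmc.Sobol` would take its place. The names `radical_inverse`, `init_population`, and `PRIMES` are hypothetical.

```python
PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]  # one Halton base per dimension


def radical_inverse(index, base):
    """Mirror the base-`base` digits of `index` around the radix point."""
    value, fraction = 0.0, 1.0 / base
    while index > 0:
        value += (index % base) * fraction
        index //= base
        fraction /= base
    return value


def init_population(n, lb, ub):
    """Scale n quasi-random points q in [0, 1)^D to lb + q * (ub - lb).

    Halton points stand in for Sobol points here (assumption for brevity).
    """
    dim = len(lb)
    if dim != len(ub) or dim > len(PRIMES):
        raise ValueError("bounds must match and use at most 10 dimensions")
    return [
        [lb[j] + radical_inverse(i, PRIMES[j]) * (ub[j] - lb[j]) for j in range(dim)]
        for i in range(1, n + 1)
    ]
```

Because the points are deterministic, repeated calls with the same arguments return the same population; scrambling or a random offset would be needed if independent runs should start from different populations.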

3.2. Information Acquisition Strategy

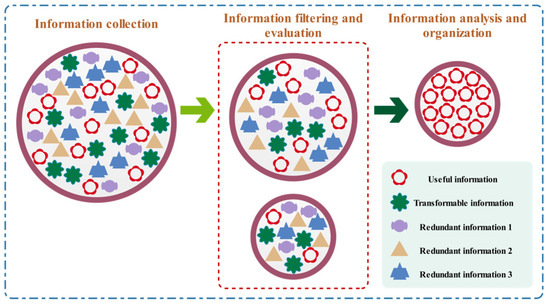

The information acquisition strategy collects and acquires useful information through three key stages: information gathering, information filtering and evaluation, and information analysis and organization.

3.2.1. Information Gathering

Information gathering is a crucial step in gaining valuable feedback. Therefore, particles use various approaches and utilize a variety of channels to gather information, forming a more complete initial information system. This procedure can be expressed as [32]:

where and are two randomly generated particles at time t. μ is used as a random number between [−1, 1] to control the strength and direction of particle information collection. Generally speaking, collecting more information is not necessarily better. A large amount of information may lead to new candidate solutions exceeding the global optimum, thereby weakening the exploitation of the equation.

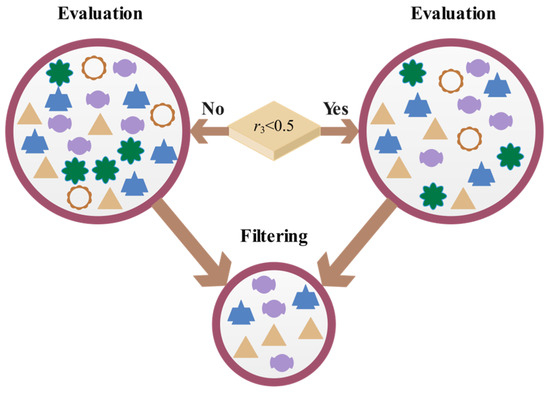

3.2.2. Information Filtering and Evaluation

After the particles have collected the information, they need to quickly identify the relevant useful information, and this key mechanism can be expressed as

where r3 is a random number between [0, 1], and rN is a particle randomly selected from N populations. is the error generated when subjective factors filter and evaluate information, defined by Equations (6)–(9) [32]:

where is the subjective influencing factor, which serves as a quantitative indicator of particle subjectivity. It may make two extreme judgments on the information, thereby changing the result of information acquisition. Owing to changes in subjective states, the evaluation of different particles or the same particle at different time points may vary. Another key factor is , which characterizes the algorithm’s ability to self-adjust based on the information quality at different iteration stages. Among it, represents the information quality factor, which avoids the algorithm from neglecting the basic requirements of information quality due to excessive optimization iteration dynamics. Furthermore, ri (i = 4, 5, 6, 7) is a random number between [0, 1] in these equations.

Figure 2 shows a schematic diagram of information filtering and evaluation, from which it can be seen that particles exhibit adaptive adjustment behavior when evaluating different information, which not only effectively eliminates unconventional information but also significantly improves the overall quality of information.

Figure 2. Schematic diagram of information filtering and evaluation.

3.2.3. Information Analysis and Organization

After filtering out the information, particles need to seek out existing valuable information. They increase the likelihood of obtaining the optimal target information by converting the convertible information identified in the preceding stage into valuable information. This operation can be shown by Equations (10) to (11) [32]:

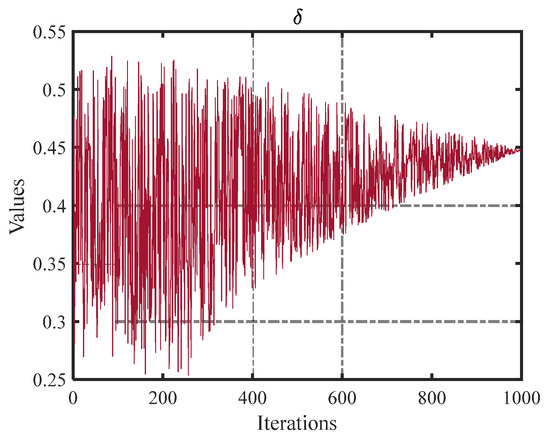

where ri (i = 8, 9, 10, 11) is a random number between [0, 1]. indicates a controlling factor, the trend of which is shown in Figure 3.

Figure 3. Trend plot of control factor .

During this process, particles can optimize the depth and breadth of this stage dynamically, according to the quality of the information, thus increasing the target information body’s accuracy. In the next iteration, the novel information subject will totally substitute the previous information subject. Figure 4 depicts the entire framework of the information acquisition strategy.

Figure 4. Schematic diagram of information acquisition strategy.

3.3. SCC Method

SCC is a method used to evaluate the statistical dependence between two ranking sequences (here, two candidate solution positions) [56]. By measuring the consistency of the ranking differences between two candidate solutions, the statistical correlation between the two rankings can be evaluated. The expression of this method is shown in Equation (12) [56]:

$$SC = 1 - \frac{6\sum_{i=1}^{N} z_i^{2}}{N\left(N^{2} - 1\right)} \tag{12}$$

where $z_i = m_i - n_i$, and $m_i$ and $n_i$ are the ranks of the i-th of the N individuals in the two sequences, respectively. When two sequences are completely identical, they are considered positively correlated. In this case, $m_i = n_i$, so $z_i = 0$ for each individual i and SC = 1. Similarly, when the two sequences are inconsistent, it can be inferred that SC < 1.
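The rank-difference form of Spearman’s coefficient described above can be sketched in a few lines of Python. The helper names `to_ranks` and `spearman_from_ranks` are hypothetical, and the sketch assumes there are no tied values, which is the condition under which this closed form is exact.

```python
def to_ranks(values):
    """Return 1-based ascending ranks of `values` (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0] * len(values)
    for position, k in enumerate(order, start=1):
        ranks[k] = position
    return ranks


def spearman_from_ranks(m, n):
    """Spearman coefficient from two rank lists via squared rank differences."""
    if len(m) != len(n) or len(m) < 2:
        raise ValueError("need two rank lists of equal length >= 2")
    size = len(m)
    squared_diff = sum((mi - ni) ** 2 for mi, ni in zip(m, n))
    return 1.0 - 6.0 * squared_diff / (size * (size ** 2 - 1))
```

Identical rankings give a coefficient of 1, fully reversed rankings give −1, and a non-positive value signals that the two sequences are not positively correlated.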

In IA-DTPSO, the SCC calculation method shown in Equation (12) is used to measure the correlation between GB and each particle in each dimension, which determines the dimensions that require an inverse solution position update. The specific calculation formulas are shown in Equations (13)–(15) [56]:

where j is the j-th dimension on the D-dimensional problem. This targeted non-complete reverse operation helps the algorithm improve computational accuracy while maintaining its fast convergence.

3.4. Tangent Flight Strategy

Because PSO has only one update method, which calculates the next position from the rate of change, this single search mode often carries the risk of convergence stagnation. Therefore, building on the PSO update method, this section uses the tangent flight strategy to compensate for this deficiency. The update formula obtained by combining Equations (1) and (2) and introducing the tangent flight strategy is shown in Equation (16) [43]:

where r12 is a random number between [0, 1]. In tangent flight, all motion equations are controlled by a global step, which takes the form of step × tan(θ), and θ is a random number between . step is the move’s size, and its calculation formula is

where r13 is a random number between [0, 1], the sign controls the direction of ENE, and the norm is a Euclidean norm.
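The step × tan(θ) form of the global step can be illustrated with the short sketch below. Only that product form comes from the text; the function name `tangent_flight_offset`, the uniform draw of θ, and the upper bound `theta_max` are hypothetical, since the range of θ is not reproduced here.

```python
import math
import random


def tangent_flight_offset(step, theta_max=math.pi / 2.5, rng=random):
    """Return one tangent-flight displacement, step * tan(theta).

    theta is drawn uniformly from [0, theta_max); theta_max is a
    placeholder value (assumption), kept below pi/2 so tan stays finite.
    """
    theta = rng.random() * theta_max
    return step * math.tan(theta)
```

Because tan(θ) grows without bound as θ approaches π/2, a larger `theta_max` produces occasional very long moves, while a smaller one keeps the displacement close to the scale of `step`.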

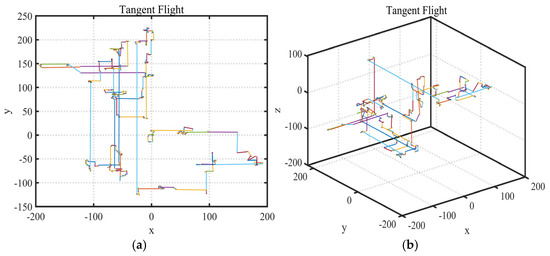

As shown in Figure 5a,b, the stride interval generated by tangent flight is large and the stride randomness is small, which keeps the search distance stable during the iteration process and greatly shortens the optimization iteration cycle of the algorithm. In addition, particles can obtain more information in this large step frequency search to get rid of local optima’s constraints.

Figure 5. Schematic diagram of tangent flight random walk. (a) Distribution in 2D space. (b) Distribution in 3D space.

3.5. Dimension Learning Strategy

Particles in PSO are passively guided only by PB and GB and cannot extract more effective information from other particles. With the introduced dimension learning strategy, particles can learn from the behavior of their neighbors. The radius between the particle and other candidate particles is calculated based on the Euclidean distance [57]:

The neighborhood of can be expressed as:

where is the Euclidean distance between and . Once the neighborhood of is constructed, a neighbor particle can be randomly selected from the j-th dimensional neighborhood for updating using Equation (20):

where r14 is a random number between [0, 1], is a randomly selected neighbor from the neighborhood , and is a randomly selected particle from N populations.

The dimension learning strategy increases the algorithm’s exploration capacity and its ability to retain population diversity by increasing the interaction between particles and their neighbors and by introducing other randomly selected particles from the population.
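A rough sketch of the neighborhood construction and per-dimension learning step is given below. It assumes a common form of dimension learning: the radius is the Euclidean distance between a particle and its candidate position, the neighborhood contains every particle within that radius, and each dimension moves by a random fraction of the difference between a random neighbor and a random population member. These forms, and the names `neighborhood` and `dimension_learning`, are assumptions and may differ from Equations (18)–(20).

```python
import math
import random


def euclidean(a, b):
    """Euclidean distance between two position vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def neighborhood(population, i, radius):
    """Indices of particles within `radius` of particle i (i itself included)."""
    return [k for k, p in enumerate(population) if euclidean(population[i], p) <= radius]


def dimension_learning(population, i, candidate, rng=random):
    """Build a learned position for particle i, one dimension at a time.

    Assumed update: x_ij + r * (neighbor_j - random_member_j), r in [0, 1).
    """
    radius = euclidean(population[i], candidate)
    neighbors = neighborhood(population, i, radius)
    learned = []
    for j in range(len(population[i])):
        n_idx = rng.choice(neighbors)            # random neighbor for this dimension
        r_idx = rng.randrange(len(population))   # random member of the whole population
        learned.append(
            population[i][j] + rng.random() * (population[n_idx][j] - population[r_idx][j])
        )
    return learned
```

Selecting the neighbor and the random member separately for each dimension means a single learned position can mix information from several particles.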

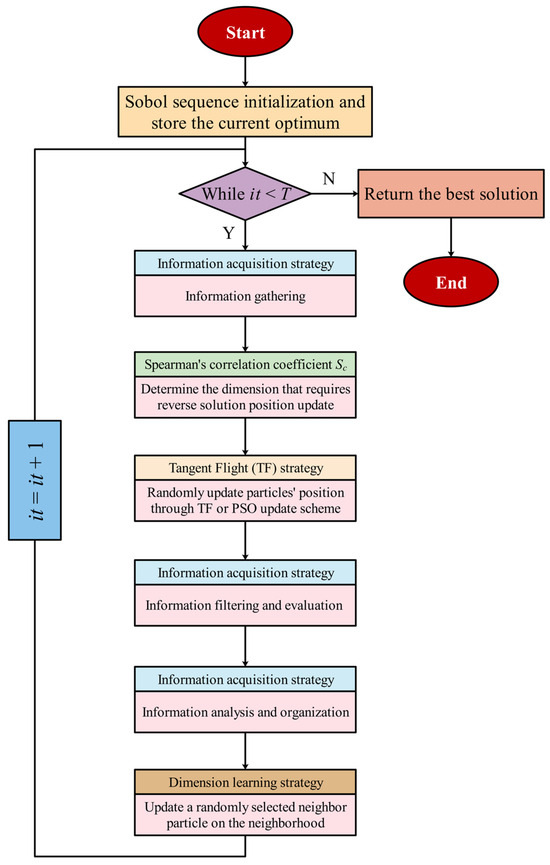

To present the structure and process of IA-DTPSO more intuitively, Algorithm 1 provides the pseudo-code of IA-DTPSO, and Figure 6 shows its flowchart.

| Algorithm 1: IA-DTPSO’s pseudo-code |
| --- |
| Start IA-DTPSO |
| Input: Number of particles (N) and iterations (T) |
| Output: The optimum |
| 1: Use Equation (3) for Sobol sequence initialization and store the current optimum |
| 2: While (it < T) Do |
| 3: For i = 1 to N Do |
| 4: Use Equation (4) to form the initial information system |
| 5: End For |
| 6: Update the parameter a using Equation (15) |
| 7: Calculate the Spearman’s correlation coefficient Sc using Equations (12) and (14) |
| 8: For i = 1 to N Do |
| 9: For j = 1 to D Do |
| 10: If Sc <= 0 |
| 11: For |
| 12: Use Equation (13) to determine the dimension that requires reverse solution position update |
| 13: End For |
| 14: End If |
| 15: End For |
| 16: End For |
| 17: For i = 1 to N Do |
| 18: Calculate the movement size step using Equation (17) |
| 19: Use Equation (16) for the tangent flight or PSO update scheme to randomly update particles’ position |
| 20: End For |
| 21: For i = 1 to N Do |
| 22: Exploration |
| 23: Update relevant parameters using Equations (6)–(9) |
| 24: Use Equation (5) for information filtering and evaluation process |
| 25: End |
| 26: Exploitation |
| 27: Update parameter using Equation (11) |
| 28: Use Equation (10) for information analysis and organization |
| 29: End |
| 30: End For |
| 31: For i = 1 to N Do |
| 32: Update radius using Equation (18) |
| 33: Construct the neighborhood using Equation (19) |
| 34: For j = 1 to D Do |
| 35: Update a randomly selected neighbor particle on the neighborhood using Equation (20) |
| 36: End For |
| 37: End For |
| 38: Compute fitness values and store the current optimum |
| 39: it = it + 1 |
| 40: End While |
| 41: Output the optimum |
| End IA-DTPSO |

Figure 6. The flowchart of the proposed IA-DTPSO.

3.6. Time Complexity Analysis of IA-DTPSO

In this section, we discuss the time complexity of IA-DTPSO. The time complexity of IA-DTPSO mainly depends on four parts: Sobol sequence initialization O(N × D), fitness evaluation O(5 × N), fitness ranking O(N × logN), and update O(5 × N × D). Therefore, the time complexity of IA-DTPSO is as follows:
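As a rough sketch of how the four parts combine, assume that initialization is performed once and that fitness evaluation, fitness ranking, and the position update are each performed once per iteration for T iterations. This per-iteration accounting is an assumption; under it, the overall cost can be written as:

```latex
\begin{aligned}
O(\text{IA-DTPSO})
  &= O(N \times D) + T \times \bigl[\, O(5 \times N) + O(N \log N) + O(5 \times N \times D) \,\bigr] \\
  &= O\bigl(T \times N \times (D + \log N)\bigr)
\end{aligned}
```

The constant factors of 5 disappear in the asymptotic form, and the update term N × D dominates whenever D exceeds log N.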

4. Experimental Results and Discussion

4.1. Experimental Design and Parameter Setting

In this section, we test IA-DTPSO against 11 comparison algorithms of different types on the 10- and 20-dimensional CEC2022 test suite [23]. This test suite provides a series of challenging test functions. As shown in Algorithm 1, given the inputs N and T, IA-DTPSO repeatedly updates particle positions until the optimum is output, and the entire process reflects its solving performance on these functions. CEC2022 is therefore an effective tool for fair comparison between different MAs. The comparison algorithms can be categorized into the following two types:

- (1) New MAs proposed in recent years: RUNge Kutta Optimizer (RUN) [58], Northern Goshawk Optimization (NGO) [19], Nutcracker Optimization Algorithm (NOA) [21], Genghis Khan Shark Optimizer (GKSO) [23], and IVY Algorithm (IVYA) [59].

- (2) PSO [17] and its various improved versions: Elite Archives-driven PSO (EAPSO) [60], Gaussian Quantum-behaved PSO (G-QPSO) [61], Hybrid algorithm based on Jellyfish Search PSO (HJSPSO) [62], single-objective variant PSO (PSO-sono) [63], and Multi-strategy PSO incorporating Snow Ablation Optimizer (SAO-MPSO) [64].

Table 1 displays the parameter settings for each MA. To reduce the influence of randomness on the experiment, all MAs are run independently 20 times on each test function, with N set to 100 and T set to 1000. The optimization results are evaluated through six evaluation metrics: Best, Worst, Mean, Wilcoxon Rank Sum Test (WRST), Friedman Test (FT), and Rank. In addition, the optimization results are evaluated through three error indicators: Standard deviation (Std), Root Mean Square Error (RMSE), and relative error (δ). All tests are conducted according to the equipment specifications displayed in Table 2.
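One way the three error indicators could be computed from the results of repeated runs is sketched below. The exact definitions are not stated in this section, so the sketch assumes a population standard deviation, an RMSE measured against the theoretical optimum, and a relative error of the mean with respect to the theoretical optimum. The function name `error_metrics` is hypothetical.

```python
import math
from statistics import mean, pstdev


def error_metrics(run_results, theoretical_optimum):
    """Summarize best-fitness values from independent runs.

    Assumed definitions: Std = population standard deviation,
    RMSE = root mean squared gap to the optimum,
    relative error = |mean - optimum| / |optimum|.
    """
    if theoretical_optimum == 0:
        raise ValueError("relative error is undefined for a zero optimum")
    gaps = [(value - theoretical_optimum) ** 2 for value in run_results]
    return {
        "std": pstdev(run_results),
        "rmse": math.sqrt(mean(gaps)),
        "relative_error": abs(mean(run_results) - theoretical_optimum)
        / abs(theoretical_optimum),
    }
```

For example, two runs ending at 301 and 303 on a function whose optimum is 300 give a standard deviation of 1, an RMSE of √5, and a relative error of 2/300.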

Table 1. Parameter settings of IA-DTPSO and other different types of MAs.

Table 2. PC configuration.

4.2. ENE Behavior Analysis

In order to further confirm that the improvement strategy proposed in this study is promising and effective in solving potential problems, this section discusses the trend of ENE rate changes of IA-DTPSO on CEC2022. The relevant formulas are as follows [64]:

where denotes all particles’ median on the j-th dimension. Divmax indicates the maximum diversity. E1% and E2% mean exploration rate and exploitation rate, respectively.
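The exploration and exploitation rates can be illustrated with the sketch below. It assumes the median-based diversity measure commonly used for this analysis: the diversity of one dimension is the mean absolute deviation of the particles from that dimension’s median, the population diversity is the average over dimensions, the exploration rate is the diversity relative to its maximum over the run, and the exploitation rate is the complementary gap. The function names `diversity` and `ene_rates` are hypothetical.

```python
from statistics import median


def diversity(population):
    """Average over dimensions of the mean absolute deviation from the median."""
    n, dim = len(population), len(population[0])
    total = 0.0
    for j in range(dim):
        column = [particle[j] for particle in population]
        med = median(column)
        total += sum(abs(med - value) for value in column) / n
    return total / dim


def ene_rates(div_history):
    """Return (exploration %, exploitation %) for each recorded diversity."""
    div_max = max(div_history)
    if div_max == 0:
        raise ValueError("diversity is zero throughout; rates are undefined")
    return [
        (div / div_max * 100.0, abs(div - div_max) / div_max * 100.0)
        for div in div_history
    ]
```

Under these assumed definitions the two rates always sum to 100%, so the curves cross exactly where both equal 50%.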

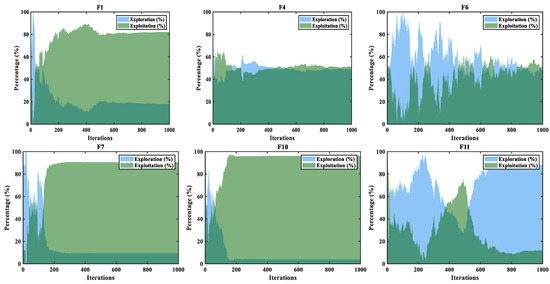

The two intersecting nonlinear curves in Figure 7 represent the ENE rates of IA-DTPSO on selected CEC2022 functions. These graphs show that the ENE curves rapidly approach their intersection point at the beginning of the iterations, which is attributed to the fact that the initial population produced by Sobol sequence initialization effectively improves the search efficiency of particles. Subsequently, ENE reaches the first equilibrium point, where the two curves cross and both rates equal 50%. This is because the update rules of Equations (5) and (10) in the information acquisition strategy effectively balance ENE. As shown for F1, F7, and F10 in Figure 7, after the first intersection, IA-DTPSO quickly transitions from exploration to exploitation, focusing on local exploitation and completing the search in subsequent iterations. This is due to the targeted non-complete reverse operation of SCC, which helps IA-DTPSO improve convergence accuracy. However, ENE may fluctuate and even intersect multiple times on certain functions. As shown for F6, the ENE curves intersect several times at 50% and eventually stabilize. This is because, in tangent flight, the random step frequency and size allow particles to obtain more information, and this random alternation between exploration and exploitation helps particles escape local optima. The dimension learning strategy enhances the algorithm’s exploration ability and its ability to maintain population diversity by increasing the interaction between particles and their neighbors. In particular, as shown for F11, the proportion of exploration continues to increase after 500 iterations, indicating that IA-DTPSO is still searching for the global optimum. In summary, the proposed strategies play their respective roles in solving CEC2022 and jointly promote the convergence of IA-DTPSO towards the theoretical optimum.

Figure 7. ENE trends of IA-DTPSO on CEC 2022 partial test functions.

4.3. Experimental Results and Analysis

Table 3 displays the statistical results of IA-DTPSO and other MAs on the 10-dimensional CEC2022, with the best data highlighted in bold. The Theoretical Optimal (TO) values for F1–F12 in CEC2022 are 300, 400, 600, 800, 900, 1800, 2000, 2200, 2300, 2400, 2600, and 2700, respectively. All values that reach the theoretical optimum in the experimental results of this section are replaced by “TO”. The more often an algorithm reaches TO, the better its performance. Firstly, IA-DTPSO achieves the smallest values on 7 out of 12 test functions, accounting for 58.33% of CEC2022. Secondly, IA-DTPSO performs particularly well on the uni-modal function (F1), hybrid functions (F6–F8), and composition functions (F9–F12), ranking in the top two on all of them. Finally, according to the final ranking results, IA-DTPSO has a mean rank of 1.833 and a mean FT of 2.681, both leading the other comparison MAs. This indicates that IA-DTPSO has a strong ability to solve complex optimization problems, and a smaller FT also means that IA-DTPSO has better stability. Whereas FT is designed for repeated testing across multiple groups, WRST is designed for pairwise comparison between two groups. Table 3 presents the WRST results at a significance level of 0.05. The symbol “-” indicates that a comparison algorithm is inferior to IA-DTPSO, “+” indicates the opposite, and “=” indicates performance similar to that of IA-DTPSO. The numbers of functions on which each comparison algorithm is superior/similar/inferior to IA-DTPSO are 2/0/10, 0/1/11, 4/3/5, 0/0/12, 0/1/11, 0/0/12, 1/2/9, 0/0/12, 3/2/7, 0/0/12, and 0/2/10, respectively. The WRST results show that NOA, IVYA, G-QPSO, and PSO-sono obtain the same test data and do not outperform IA-DTPSO on any function. RUN and GKSO also obtain the same test data: they perform similarly to IA-DTPSO on one function and are inferior to it on the others. Although NGO and HJSPSO, ranked second and third, respectively, outperform IA-DTPSO on some functions, they are inferior to it on more functions. Therefore, NGO and HJSPSO can be said to be competitive to a certain extent. In addition, PSO ranks seventh and is inferior to IA-DTPSO on 10 functions, indicating that IA-DTPSO has significantly improved optimization ability compared to PSO. However, PSO still outperforms IA-DTPSO on two functions, and further improving the performance of IA-DTPSO on these functions can be considered in the future.
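The mean rank used in the comparison above can be sketched as follows: the algorithms are ranked on each function, and the ranks are then averaged over all functions. The sketch assumes that ranking is based on one value per algorithm and function (for example the Mean), that smaller is better, and that tied algorithms share the average of their ranks. The names `rank_row` and `mean_ranks` are hypothetical.

```python
def rank_row(values):
    """Rank values ascending (1 = smallest); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the group while the next value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            ranks[k] = shared
        pos = end + 1
    return ranks


def mean_ranks(table):
    """table[f][a] is the result of algorithm a on function f."""
    per_function = [rank_row(row) for row in table]
    n_alg = len(table[0])
    return [sum(r[a] for r in per_function) / len(table) for a in range(n_alg)]
```

With three functions and three algorithms, the table `[[1, 2, 3], [2, 1, 3], [1, 1, 5]]` gives mean ranks of 1.5, 1.5, and 3.0, so the first two algorithms tie overall.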

Table 3. Statistical results of IA-DTPSO and other MAs on 10-dimensional CEC2022.

To further compare the performance differences between IA-DTPSO and the other MAs, Table 4 provides the error data of IA-DTPSO and the other algorithms on the 10-dimensional CEC2022. According to Table 4, the Std values of PSO are relatively high on F1 and F6, indicating that PSO is unstable across test functions. Meanwhile, the RMSE and δ values of PSO on F6 are also high, indicating that PSO has low accuracy on complex problems and inconsistent performance across runs. In addition to PSO, NOA, IVYA, and PSO-sono also perform poorly on complex problems, with low stability and accuracy. EAPSO and NGO perform well on simple problems but are still slightly inferior to IA-DTPSO on complex problems. IA-DTPSO obtains relatively small RMSE and δ values on most functions, suggesting that its accuracy is high enough to keep more experimental results close to the TO solution.

Table 4.

Errors of IA-DTPSO and other MAs on 10-dimensional CEC2022.

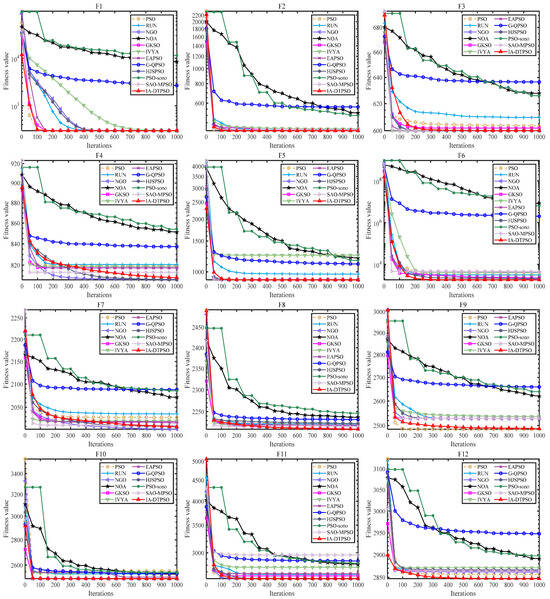

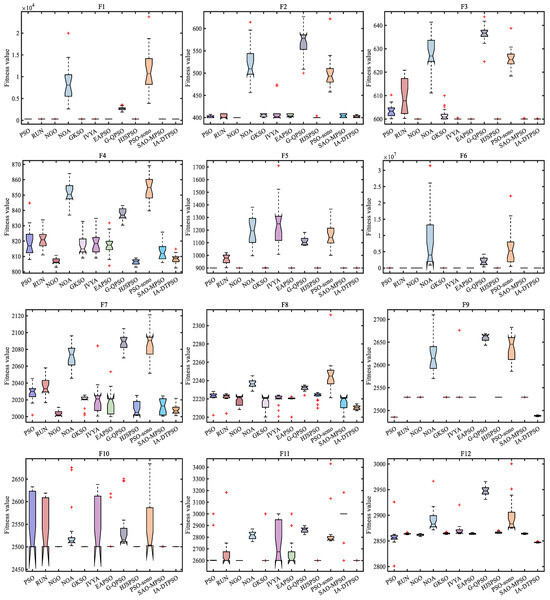

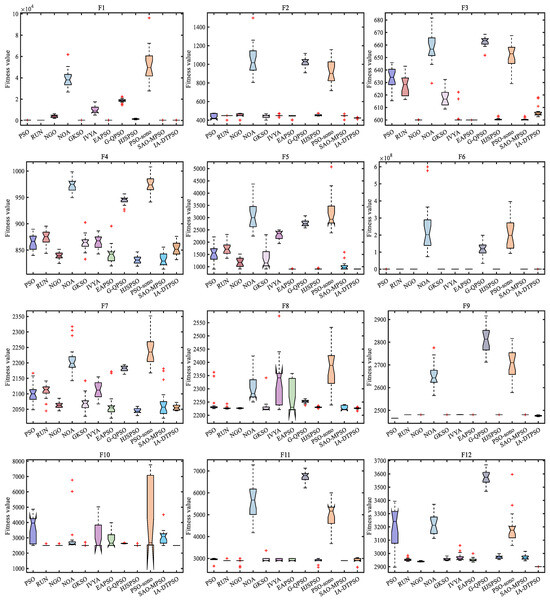

Figure 8 shows the convergence curves of IA-DTPSO and the other MAs on the 12 functions. IA-DTPSO converges quickly toward the global optimum in the early stage and finds the TO solution after about 100 iterations. This is attributed to the Sobol sequence initialization, which generates a good initial population and gives IA-DTPSO a strong starting point. In addition, when solving F4, the curve of IA-DTPSO is still descending after 1000 iterations, indicating that IA-DTPSO retains the capacity to approach the global optimum. This is attributed to the information acquisition strategy, which maintains the differences among particles and thus steadily increases population diversity. In the box plots shown in Figure 9, the boxes of IA-DTPSO are relatively narrow and positioned low, indicating good robustness and high accuracy.

Figure 8.

Convergence curves of IA-DTPSO and other MAs for addressing 10-dimensional CEC2022.

Figure 9.

Box plots of IA-DTPSO and other MAs for solving 10-dimensional CEC2022.

Table 5 presents the statistical results of IA-DTPSO and the other MAs on the 20-dimensional CEC2022. Firstly, IA-DTPSO achieves the best values on 5 of the 12 test functions, accounting for 41.67% of CEC2022. Secondly, IA-DTPSO performs particularly well on the uni-modal function (F1) and the hybrid functions (F6–F8), ranking in the top two on all of them. Finally, according to the final ranking results, IA-DTPSO has a mean rank of 2.417 and a mean FT of 3.242, both the lowest among the compared algorithms. This indicates that IA-DTPSO retains its optimization ability as the problem dimension increases. Table 5 also presents the WRST results of IA-DTPSO and the other MAs. For each comparison MA, the numbers of functions on which it is superior/similar/inferior to IA-DTPSO are 1/1/10, 0/2/10, 3/2/7, 0/0/12, 0/2/10, 1/0/11, 3/4/5, 0/0/12, 4/1/7, 0/0/12, and 4/3/5, respectively. NOA, G-QPSO, and PSO-sono obtain the same result (0/0/12) and do not outperform IA-DTPSO on any function. As on the 10-dimensional CEC2022, RUN and GKSO obtain the same result as each other (0/2/10): they perform similarly to IA-DTPSO on two functions and are inferior to it on the other ten. In addition, PSO ranks eighth; it outperforms IA-DTPSO on only one function and is inferior to it on ten, indicating that IA-DTPSO significantly improves on PSO. It is worth mentioning that HJSPSO and SAO-MPSO, ranked fourth and fifth, respectively, outperform IA-DTPSO on more functions (four each) than NGO and EAPSO, ranked second and third, respectively (three each). Combined with the mean rank outcomes, this suggests that there is no appreciable difference in performance among these four MAs.

Table 5.

Statistical results of IA-DTPSO and other MAs on 20-dimensional CEC2022.

Table 6 provides the error data of IA-DTPSO and the other algorithms on the 20-dimensional CEC2022. Judging from the best and worst values in Table 5, IA-DTPSO has smaller Std values on most functions; judging from the Mean values in Table 5, it also obtains smaller RMSE and δ values on most functions. Thus, IA-DTPSO has high accuracy and approaches the TO solution with small error on most functions, giving it the best overall performance among the compared algorithms. In addition, EAPSO and SAO-MPSO perform well on simple problems but are slightly inferior to IA-DTPSO on complex problems. PSO, NOA, IVYA, and PSO-sono still perform poorly on high-dimensional complex problems, showing low stability and accuracy.

Table 6.

Errors of IA-DTPSO and other MAs on 20-dimensional CEC2022.

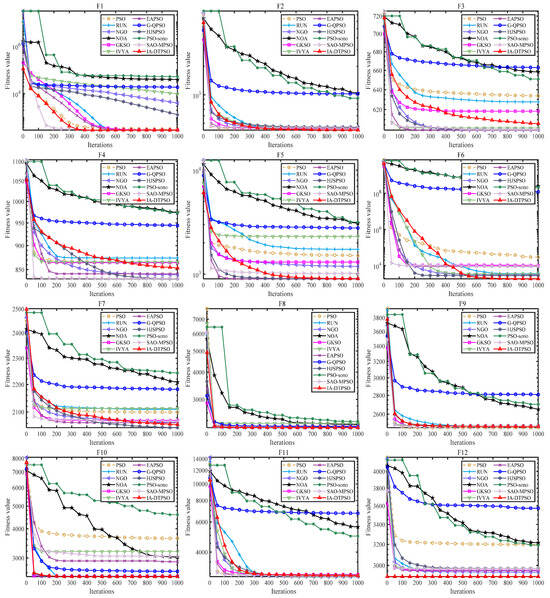

Figure 10 shows the convergence curves of IA-DTPSO and the other MAs on the 20-dimensional CEC2022. IA-DTPSO converges faster on most functions, and its convergence curve reaches a lower final value within the limited number of iterations. In addition, when solving F3, F4, and F7, the curve of IA-DTPSO is still descending at the final iteration, indicating that IA-DTPSO retains the capacity to approach the global optimum. Figure 11 shows the box plots of IA-DTPSO and the other MAs on the 20-dimensional CEC2022. The boxes of IA-DTPSO are relatively narrow and positioned low, indicating good robustness and high accuracy.

Figure 10.

Convergence curves of IA-DTPSO and other MAs for solving 20-dimensional CEC2022.

Figure 11.

Box plots of IA-DTPSO and other MAs for solving 20-dimensional CEC2022.

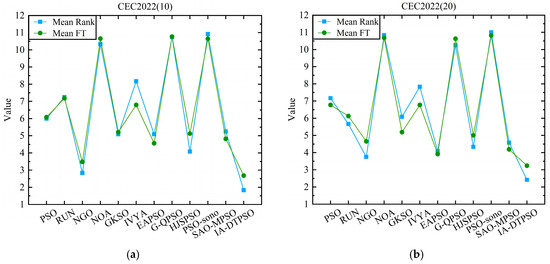

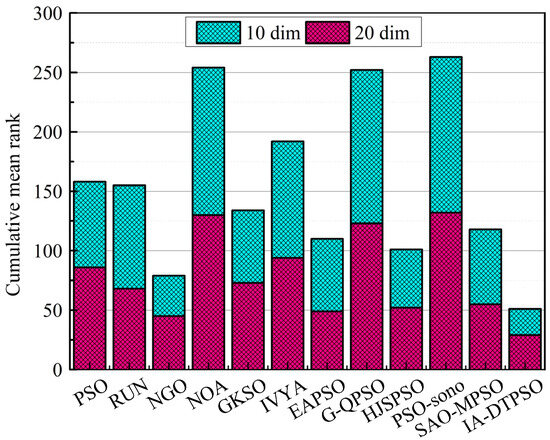

Figure 12 compares the mean rank and mean FT of IA-DTPSO and the other algorithms on the two dimensions of CEC2022 as line graphs, where (a) is the comparison in 10 dimensions and (b) is the comparison in 20 dimensions. In both sub-graphs, IA-DTPSO has the smallest mean rank and mean FT, which is attributed to its stable performance in both dimensions under the influence of the dimension learning strategy. In addition, because the two statistics are computed differently, the mean FT and mean rank of each algorithm differ slightly; the smaller this difference, the more stable the algorithm. Finally, Figure 13 shows a stacked bar chart of the ranks of IA-DTPSO and the other algorithms on the two dimensions, in which IA-DTPSO has the lowest cumulative column height. In summary, the overall performance of IA-DTPSO is clearly better than that of the other compared MAs.
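The mean rank used in these comparisons can be sketched as follows, assuming that algorithms are ranked per function by their mean result (1 = best, ties sharing the average rank) and that the ranks are then averaged over the functions. The helper names and the three-algorithm data set are hypothetical and only illustrate the calculation.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = best); tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j < len(order) and values[order[j]] == values[order[i]]:
            j += 1
        for k in range(i, j):
            ranks[order[k]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    return ranks


def mean_rank(results):
    """results maps algorithm name -> list of mean results, one per test function."""
    names = list(results)
    n_funcs = len(results[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ranks = average_ranks([results[name][f] for name in names])
        for name, r in zip(names, ranks):
            totals[name] += r
    return {name: totals[name] / n_funcs for name in names}


# Hypothetical mean errors of three algorithms on three functions.
demo = {"A": [1.0, 2.0, 0.5], "B": [2.0, 1.0, 0.5], "C": [3.0, 3.0, 4.0]}
print(mean_rank(demo))
```

Under this reading, the reported mean ranks of 1.833 and 2.417 correspond to rank sums of 22 and 29 over the 12 functions (22/12 ≈ 1.833 and 29/12 ≈ 2.417).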

Figure 12.

Comparative line graphs of mean rank and mean FT for each MA on different dimensions of CEC2022. (a) Comparison results on 10-dimensional CEC2022. (b) Comparison results on 20-dimensional CEC2022.

Figure 13.

Cumulative rank sum of IA-DTPSO and other MAs on different dimensions.

5. Simulation and Prediction of TUWRs in China Based on IA-DTPSO and TDGM(1,1,r,ξ,Csz)

We have verified the superiority of the proposed IA-DTPSO on the benchmark test set. In this section, we use IA-DTPSO to optimize TDGM (1,1,r,ξ,Csz) and apply the optimized model to simulate and predict TUWRs in China.

5.1. TDGM(1,1,r,ξ,Csz)

Definition 1.

Let a set of data sequences , is a one-time accumulation sequence of , as shown in Equation (26):

where the calculation formula for is shown in Equation (27):

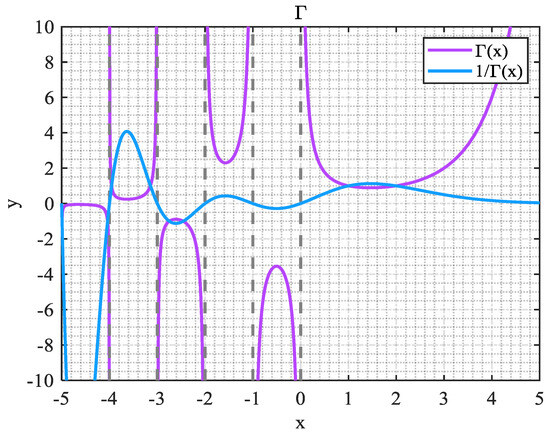

where function is utilized to optimize the space of order r in the model. Figure 14 shows the graph of function.

Figure 14.

Function.

It is not difficult to derive the expression for the inverse first-order accumulation sequence of from Definition 1, which will not be repeated here.

Definition 2.

is the average sequence generated by consecutive neighboring neighbors of , as shown in Equation (28):

where the calculation method of is shown in Equation (29):

Definition 3.

If , , and have the same definitions as above, then there are

Equation (30) is denoted as TDGM (1,1,r,ξ,Csz).

Theorem 1.

Let be computed as shown in Equation (31):

where Y and B are matrices of size (n − 1) × 1 and (n − 1) × 3, respectively, expressed as:

Theorem 2.

The r-order time response function of TDGM (1,1,r,ξ,Csz) is

where and

The proofs for TDGM (1,1,r,ξ,Csz) are given in reference [65].
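Theorem 1 estimates three model parameters from a matrix B of size (n − 1) × 3 and a vector Y of size (n − 1) × 1. Assuming that Equation (31) takes the ordinary least-squares form (BᵀB)⁻¹BᵀY that grey models conventionally use (an assumption, since the equation is not reproduced here), the computation can be sketched in plain Python as below. The entries of `B` and `Y` in the example are hypothetical placeholders and do not reproduce the definitions in reference [65].

```python
def least_squares(B, Y):
    """Solve min ||B p - Y||^2 through the normal equations (B^T B) p = B^T Y."""
    m = len(B[0])
    # Augmented normal-equation matrix [B^T B | B^T Y].
    A = [
        [sum(row[i] * row[j] for row in B) for j in range(m)]
        + [sum(row[i] * y for row, y in zip(B, Y))]
        for i in range(m)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, m):
            factor = A[r][col] / A[col][col]
            for c in range(col, m + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution.
    p = [0.0] * m
    for i in reversed(range(m)):
        tail = sum(A[i][j] * p[j] for j in range(i + 1, m))
        p[i] = (A[i][m] - tail) / A[i][i]
    return p


# Hypothetical 4 x 3 design matrix and responses generated from p = (0.5, 2, -1).
B = [[1.0, 1.0, 1.0], [2.0, 3.0, 1.0], [4.0, 2.0, 1.0], [3.0, 5.0, 1.0]]
Y = [0.5 * b[0] + 2.0 * b[1] - 1.0 for b in B]
print(least_squares(B, Y))
```

In the workflow of Section 5.4, an optimizer such as IA-DTPSO would search over the remaining model parameters while a step of this kind supplies the linear parameters for each candidate; this division of labour is an assumption about the workflow, not a statement from the source.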

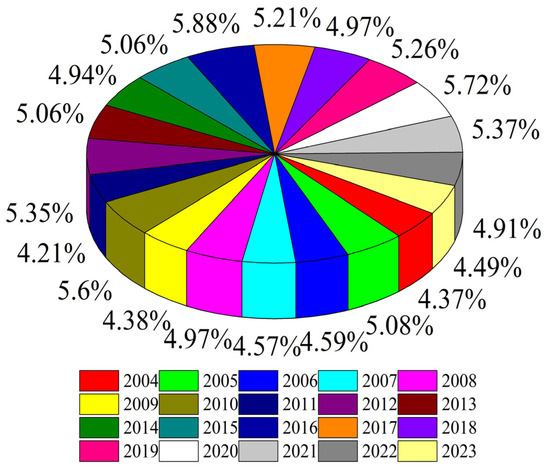

5.2. Investigation Data Analysis

TUWRs refer to the surface and underground water production formed by urban precipitation, that is, the sum of surface runoff and precipitation infiltration recharge [66]. Although China's water resources are abundant in total, they are also scarce and unevenly distributed [67]. With the acceleration of urbanization, the demand for water resources has increased sharply. To improve prediction accuracy and water resource utilization efficiency, this section selects the TUWRs in China from 2004 to 2023 for simulation and prediction. Table 7 presents the TUWRs data in China over these 20 years, sourced from the National Bureau of Statistics (https://data.stats.gov.cn/, accessed on 20 March 2025). These data are the input of the method: 75% of the data (2004–2018) are used as the training set and 25% (2019–2023) as the test set.
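The chronological split described above can be sketched as follows. The helper name `chronological_split` is hypothetical; the only facts taken from the text are the 2004–2023 range and the 75%/25% proportion.

```python
def chronological_split(series, train_fraction=0.75):
    """Split a time-ordered sequence into a leading training part and a trailing test part."""
    n_train = int(round(train_fraction * len(series)))
    return series[:n_train], series[n_train:]


years = list(range(2004, 2024))  # 20 annual observations
train_years, test_years = chronological_split(years)
print(train_years[0], train_years[-1], len(train_years))
print(test_years[0], test_years[-1], len(test_years))
```

The split is taken in time order rather than at random, so the test set always lies after the training set, as forecasting requires.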

Table 7.

TUWRs in China from 2004 to 2023 (Unit: Hundred million cubic meters).

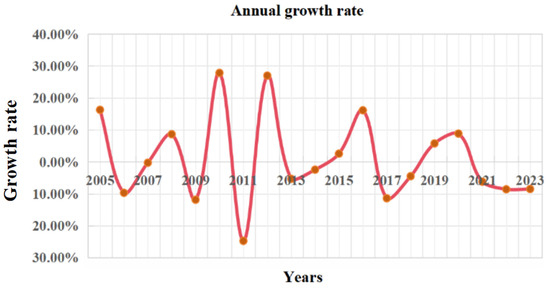

Figure 15 shows the distribution of the proportion of TUWRs in China from 2004 to 2023. China's TUWRs do not increase linearly over time, and the annual values are influenced by actual social conditions. Figure 16 shows the growth rate of TUWRs in China: the growth rate was the most significant from 2009 to 2013, while growth in the past two years has been relatively slow.

Figure 15.

Distribution of the proportion of TUWRs in China from 2004 to 2023.

Figure 16.

Growth rate of total urban annual water resources in China.

5.3. Model Evaluation Criteria

This study uses four error evaluation metrics to gauge the predictive performance of TDGM (1,1,r,ξ,Csz), including Absolute Percentage Error (APE), Mean Absolute Percentage Error (MAPE), simulation MAPE (MAPEsimulation), and prediction MAPE (MAPEprediction). The descriptions of these indicators are shown in Equations (35)–(38) [68]:

where and are the fitted value and the raw data, respectively.
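A minimal Python sketch of the four indicators is given below, assuming the standard definitions: APE is the absolute difference between the fitted value and the raw value divided by the raw value, in percent, and each MAPE is the mean APE over the corresponding set of points. The function names and the sample numbers are hypothetical, and which points enter each average (for example, whether the first point is included) is left to the caller.

```python
def ape(fitted, actual):
    """Absolute percentage error of one point, in percent."""
    return abs(fitted - actual) / abs(actual) * 100.0


def mape(fitted_values, actual_values):
    """Mean absolute percentage error over paired sequences, in percent."""
    if len(fitted_values) != len(actual_values) or not actual_values:
        raise ValueError("sequences must be non-empty and of equal length")
    total = sum(ape(f, a) for f, a in zip(fitted_values, actual_values))
    return total / len(actual_values)


def evaluate(fitted_values, actual_values, n_train):
    """MAPE on the simulation (training) part, the prediction (test) part, and overall."""
    return {
        "MAPE_simulation": mape(fitted_values[:n_train], actual_values[:n_train]),
        "MAPE_prediction": mape(fitted_values[n_train:], actual_values[n_train:]),
        "MAPE": mape(fitted_values, actual_values),
    }


# Hypothetical raw and fitted values (not data from Table 7).
actual = [100.0, 200.0, 400.0, 500.0]
fitted = [110.0, 190.0, 400.0, 450.0]
print(evaluate(fitted, actual, n_train=2))
```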

5.4. IA-DTPSO and Other Algorithms for Parameter Optimization and Prediction of TDGM (1,1,r,ξ,Csz)

This section uses IA-DTPSO, PSO [17], GKSO [23], IVYA [59], EAPSO [60], HJSPSO [62], PSO-sono [63], and SAO-MPSO [64] to optimize TDGM (1,1,r,ξ,Csz) and applies the optimized models to simulate and predict TUWRs in China. Table 8 shows the statistical results of IA-DTPSO and the other MAs, where SimD denotes the simulated data and ResE denotes the residuals. All values in Table 8 other than the true values for these years are model outputs. The MAPEsimulation (%) and MAPEprediction (%) of IA-DTPSO on the training and test sets are 5.6366 and 6.8041, respectively, and the total MAPE (%) is 5.9439; all three indicators are the smallest among the eight MAs. By comparison, the MAPEsimulation (%) and MAPEprediction (%) of PSO are 6.6432 and 7.2254, respectively, and its total MAPE (%) is 6.7964. IA-DTPSO therefore reduces the total MAPE by about 0.85 percentage points relative to PSO. Table 9 shows the parameters obtained by IA-DTPSO and the other algorithms after optimizing TDGM (1,1,r,ξ,Csz). Finally, Table 10 displays the forecast of TUWRs in China for the next five years. TUWRs in China are predicted to reach 3368.846 hundred million cubic meters in 2028, which would be the highest value since 2004. How to reasonably utilize and manage these water resources will be a challenge in the future.
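The reported totals can be cross-checked against the simulation and prediction values. The sketch below assumes that the total MAPE is the point-weighted average of the two partial MAPEs, with 14 simulated points (2005–2018, the first year being excluded) and 5 predicted points (2019–2023). These counts are an inference from the numbers, not a statement from the source, and the function name `pooled_mape` is hypothetical.

```python
def pooled_mape(mape_sim, n_sim, mape_pred, n_pred):
    """Point-weighted average of a simulation MAPE and a prediction MAPE."""
    return (mape_sim * n_sim + mape_pred * n_pred) / (n_sim + n_pred)


ia_dtpso_total = pooled_mape(5.6366, 14, 6.8041, 5)
pso_total = pooled_mape(6.6432, 14, 7.2254, 5)
print(round(ia_dtpso_total, 4), round(pso_total, 4))
```

Under this assumption the pooled values agree with the reported totals of 5.9439 and 6.7964 to within the rounding of the four-decimal inputs, whereas weighting with 15 simulated points gives about 5.928 for IA-DTPSO, which does not match.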

Table 8.

Statistical results of IA-DTPSO and other MAs for addressing TUWRs in China.

Table 9.

Parameter statistics of IA-DTPSO and other algorithms for solving the TUWRs in China.

Table 10.

Prediction results of TUWRs in China in the next five years.

5.5. Four Models for Simulating and Predicting TUWRs in China

In this section, the TDGM (1,1,r,ξ,Csz) model optimized by IA-DTPSO (referred to as ID_T) and the existing GM (1,1) [69], DGM (1,1) [70], and NGBM (1,1) [71] models are used to simulate and predict TUWRs in China. The statistical results are shown in Table 11. ID_T has a MAPEsimulation (%) and a MAPEprediction (%) of 5.6366 and 6.8041 on the training and test sets, respectively, and a total MAPE (%) of 5.9439. All three indicators are the smallest among the four compared models, further indicating the superiority of IA-DTPSO. Table 12 presents the forecasts of TUWRs in China for the next five years under the four models. The GM (1,1), DGM (1,1), and NGBM (1,1) models predict a relatively flat growth trend for the next five years, while the TDGM (1,1,r,ξ,Csz) model optimized by IA-DTPSO shows larger fluctuations in its forecast. This is closely related to the iterative and stochastic nature of meta-heuristic methods and allows the forecast to follow the data more closely.

Table 11.

Statistical results of solving TUWRs in China using different models.

Table 12.

Prediction results of TUWRs in China for the next five years under four different models.

6. Conclusions and Future Prospects

This study proposes a multi-strategy improved PSO (IA-DTPSO). Firstly, Sobol sequences are introduced to produce a wider coverage of the initial particles. Secondly, an update mechanism based on information acquisition is established, which applies three different types of information processing operations at different stages. Among them, information gathering is the preparatory stage in which particles obtain useful information, and the remaining two operations correspond to the ENE stages of the algorithm. This mechanism improves the overall quality of the information obtained by particles. Then, the SCC is introduced to gauge the correlation between GB and the particles in each dimension and thereby determine the dimensions that require reverse solution position updates, so that the algorithm improves computational accuracy without sacrificing convergence speed. In addition, combining the tangent flight strategy with the original update method of PSO prevents the algorithm from stagnating. Finally, the dimension learning strategy increases the interaction between particles, enhances overall particle vitality, and sustains population diversity.

In the experimental section, the trend of the ENE rate of IA-DTPSO on CEC2022 was first discussed, which supports the effectiveness of the proposed improvement strategies. Then, IA-DTPSO was compared with 11 other algorithms on two dimensions of CEC2022, and the results show that IA-DTPSO achieves the smallest mean rank and mean FT in both dimensions. According to the WRST results, in every pairwise comparison IA-DTPSO is superior on more functions than it is inferior. On this basis, this study uses IA-DTPSO to optimize the TDGM (1,1,r,ξ,Csz) model and applies it to simulate and predict TUWRs in China. Numerical experiments compare IA-DTPSO with seven other algorithms and three existing models, and the results show that TDGM (1,1,r,ξ,Csz) optimized by IA-DTPSO obtains the smallest errors on the evaluation indicators in both groups of comparisons, verifying the superiority of the proposed method. Finally, the TUWRs in China for the next five years are predicted, and the results show that by 2028 they will reach 3368.846 hundred million cubic meters. In summary, the proposed IA-DTPSO achieves good results in both numerical experiments and the simulation example.

However, IA-DTPSO still has some limitations. On the 10-dimensional CEC2022, it did not surpass PSO on two functions. In addition, the accuracy obtained by IA-DTPSO on F3, F4, and F11 of the 20-dimensional CEC2022 is not high. Further improving the performance of IA-DTPSO on these functions can be considered in the future. In future work, IA-DTPSO can also be applied to more existing models and compared with the optimized model in this study. IA-DTPSO may not be the best optimizer for every problem, but it is expected to be applicable in a wide range of fields. It can be used to attempt other complex optimization problems, such as engineering optimization [72], feature selection [73], and path planning [74].

Author Contributions

Conceptualization, J.W. and K.Y.; Methodology, Z.Z., J.W. and K.Y.; Software, Z.Z.; Validation, Z.Z.; Formal analysis, J.W. and K.Y.; Investigation, Z.Z. and K.Y.; Resources, J.W. and K.Y.; Data curation, Z.Z. and J.W.; Writing—original draft, Z.Z., J.W. and K.Y.; Writing—review & editing, Z.Z., J.W. and K.Y.; Visualization, Z.Z.; Supervision, K.Y.; Project administration, J.W. and K.Y.; Funding acquisition, K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (grant No. 52278047), the Key Research Projects of the Shaanxi Provincial Government Research Office in 2024 (grant No. 2024HZ1186), and the 2024 Shaanxi Provincial Communist Youth League and Youth Work Research Project (grant No. 2024HZ1236).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data generated or analyzed during the study are included in this published article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Zhang, J.; Wang, Y.; Yang, Y.; Ma, Y.; Dai, Z. Fault diagnosis and intelligent maintenance of industry 4.0 power system based on internet of things technology and thermal energy optimization. Therm. Sci. Eng. Prog. 2024, 55, 102902.

2. Li, Z.; Song, P.; Li, G.; Han, Y.; Ren, X.; Bai, L.; Su, J. AI energized hydrogel design, optimization and application in biomedicine. Mater. Today Bio 2024, 25, 101014.

3. Yaiprasert, C.; Hidayanto, A.N. AI-powered ensemble machine learning to optimize cost strategies in logistics business. Int. J. Inf. Manag. Data Insights 2024, 4, 100209.

4. Hong, Q.; Jun, M.; Bo, W.; Sichao, T.; Jiayi, Z.; Biao, L.; Tong, L.; Ruifeng, T. Application of Data-Driven technology in nuclear Engineering: Prediction, classification and design optimization. Ann. Nucl. Energy 2023, 194, 110089.

5. Cheng, J.; De Waele, W. Weighted average algorithm: A novel meta-heuristic optimization algorithm based on the weighted average position concept. Knowl.-Based Syst. 2024, 305, 112564.

6. Huang, L.; Wang, Y.; Guo, Y.; Hu, G. An Improved Reptile Search Algorithm Based on Lévy Flight and Interactive Crossover Strategy to Engineering Application. Mathematics 2022, 10, 2329.

7. Gobashy, M.; Abdelazeem, M. Metaheuristics Inversion of Self-Potential Anomalies. In Self-Potential Method: Theoretical Modeling and Applications in Geosciences; Biswas, A., Ed.; Springer International Publishing: Cham, Switzerland, 2021; pp. 35–103.

8. Peng, L.; Cai, Z.; Heidari, A.A.; Zhang, L.; Chen, H. Hierarchical Harris hawks optimizer for feature selection. J. Adv. Res. 2023, 53, 261–278.

9. Zamani, H.; Nadimi-Shahraki, M.H.; Mirjalili, S.; Gharehchopogh, F.S.; Oliva, D. A Critical Review of Moth-Flame Optimization Algorithm and Its Variants: Structural Reviewing, Performance Evaluation, and Statistical Analysis. Arch. Comput. Methods Eng. 2024, 31, 2177–2225.

10. Sahoo, S.K.; Houssein, E.H.; Premkumar, M.; Saha, A.K.; Emam, M.M. Self-adaptive moth flame optimizer combined with crossover operator and Fibonacci search strategy for COVID-19 CT image segmentation. Expert Syst. Appl. 2023, 227, 120367.

11. Li, X.; Lin, Z.; Lv, H.; Yu, L.; Heidari, A.A.; Zhang, Y.; Chen, H.; Liang, G. Advanced slime mould algorithm incorporating differential evolution and Powell mechanism for engineering design. iScience 2023, 26, 107736.

12. Minh, H.-L.; Sang-To, T.; Wahab, M.A.; Cuong-Le, T. A new metaheuristic optimization based on K-means clustering algorithm and its application to structural damage identification. Knowl.-Based Syst. 2022, 251, 109189.

13. Salcedo-Sanz, S. Modern meta-heuristics based on nonlinear physics processes: A review of models and design procedures. Phys. Rep. 2016, 655, 1–70.

14. Abualigah, L.; Diabat, A.; Geem, Z.W. A Comprehensive Survey of the Harmony Search Algorithm in Clustering Applications. Appl. Sci. 2020, 10, 3827.

15. Hu, G.; Guo, Y.; Sheng, G. Salp Swarm Incorporated Adaptive Dwarf Mongoose Optimizer with Lévy Flight and Gbest-Guided Strategy. J. Bionic Eng. 2024, 21, 2110–2144.

16. Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

17. Kennedy, J.; Eberhart, R. Particle swarm optimization. Proc. ICNN’95 - Int. Conf. Neural Netw. 1995, 4, 1942–1948.

18. Mahmood, S.; Bawany, N.Z.; Tanweer, M.R. A comprehensive survey of whale optimization algorithm: Modifications and classification. Indones. J. Electr. Eng. Comput. Sci. 2023, 29, 899–910.

19. Dehghani, M.; Hubálovský, Š.; Trojovský, P. Northern Goshawk Optimization: A New Swarm-Based Algorithm for Solving Optimization Problems. IEEE Access 2021, 9, 162059–162080.

20. Srivastava, A.; Das, D.K. A bottlenose dolphin optimizer: An application to solve dynamic emission economic dispatch problem in the microgrid. Knowl.-Based Syst. 2022, 243, 108455.

21. Abdel-Basset, M.; Mohamed, R.; Jameel, M.; Abouhawwash, M. Nutcracker optimizer: A novel nature-inspired metaheuristic algorithm for global optimization and engineering design problems. Knowl.-Based Syst. 2023, 262, 110248.

22. Abdel-Basset, M.; Mohamed, R.; Zidan, M.; Jameel, M.; Abouhawwash, M. Mantis Search Algorithm: A novel bio-inspired algorithm for global optimization and engineering design problems. Comput. Methods Appl. Mech. Eng. 2023, 415, 116200.

23. Hu, G.; Guo, Y.; Wei, G.; Abualigah, L. Genghis Khan shark optimizer: A novel nature-inspired algorithm for engineering optimization. Adv. Eng. Inform. 2023, 58, 102210.

24. Wang, J.; Wang, W.-C.; Hu, X.-X.; Qiu, L.; Zang, H.-F. Black-winged kite algorithm: A nature-inspired meta-heuristic for solving benchmark functions and engineering problems. Artif. Intell. Rev. 2024, 57, 98.

25. Fu, Y.; Liu, D.; Chen, J.; He, L. Secretary bird optimization algorithm: A new metaheuristic for solving global optimization problems. Artif. Intell. Rev. 2024, 57, 123.

26. Peraza-Vázquez, H.; Peña-Delgado, A.; Merino-Treviño, M.; Morales-Cepeda, A.B.; Sinha, N. A novel metaheuristic inspired by horned lizard defense tactics. Artif. Intell. Rev. 2024, 57, 59.

27. Truong, D.-N.; Chou, J.-S. Metaheuristic algorithm inspired by enterprise development for global optimization and structural engineering problems with frequency constraints. Eng. Struct. 2024, 318, 118679.

28. Oladejo, S.O.; Ekwe, S.O.; Mirjalili, S. The Hiking Optimization Algorithm: A novel human-based metaheuristic approach. Knowl.-Based Syst. 2024, 296, 111880.

29. Guan, Z.; Ren, C.; Niu, J.; Wang, P.; Shang, Y. Great Wall Construction Algorithm: A novel meta-heuristic algorithm for engineer problems. Expert Syst. Appl. 2023, 233, 120905.

30. Tian, Z.; Gai, M. Football team training algorithm: A novel sport-inspired meta-heuristic optimization algorithm for global optimization. Expert Syst. Appl. 2024, 245, 123088.

31. Yuan, Y.; Ren, J.; Wang, S.; Wang, Z.; Mu, X.; Zhao, W. Alpine skiing optimization: A new bio-inspired optimization algorithm. Adv. Eng. Softw. 2022, 170, 103158.

32. Wu, X.; Li, S.; Jiang, X.; Zhou, Y. Information acquisition optimizer: A new efficient algorithm for solving numerical and constrained engineering optimization problems. J. Supercomput. 2024, 80, 25736–25791.

33. Bogar, E.; Beyhan, S. Adolescent Identity Search Algorithm (AISA): A novel metaheuristic approach for solving optimization problems. Appl. Soft Comput. 2020, 95, 106503.

34. Wang, K.; Guo, M.; Dai, C.; Li, Z. Information-decision searching algorithm: Theory and applications for solving engineering optimization problems. Inf. Sci. 2022, 607, 1465–1531.

35. Holland, J.H. Genetic Algorithms. Sci. Am. 1992, 267, 66–73.

36. Banzhaf, W.; Koza, J.R.; Ryan, C.; Spector, L.; Jacob, C. Genetic programming. IEEE Intell. Syst. Their Appl. 2000, 15, 74–84.

37. Sinha, N.; Chakrabarti, R.; Chattopadhyay, P.K. Evolutionary programming techniques for economic load dispatch. IEEE Trans. Evol. Comput. 2003, 7, 83–94.

38. Bäck, T. Evolution strategies: An alternative evolutionary algorithm. In Artificial Evolution; Alliot, J.-M., Lutton, E., Ronald, E., Schoenauer, M., Snyers, D., Eds.; Springer: Berlin/Heidelberg, Germany, 1996; pp. 1–20.

39. Storn, R.; Price, K. Differential Evolution–A Simple and Efficient Heuristic for global Optimization over Continuous Spaces. J. Glob. Optim. 1997, 11, 341–359.

40. Simon, D. Biogeography-Based Optimization. IEEE Trans. Evol. Comput. 2008, 12, 702–713.

41. De Castro, L.N.; Von Zuben, F.J. The clonal selection algorithm with engineering applications. In Proceedings of the GECCO, Las Vegas, NV, USA, 8–12 July 2000; pp. 36–39.

42. Gao, H.; Zhang, Q. Alpha evolution: An efficient evolutionary algorithm with evolution path adaptation and matrix generation. Eng. Appl. Artif. Intell. 2024, 137, 109202.

43. Layeb, A. Tangent search algorithm for solving optimization problems. Neural Comput. Appl. 2022, 34, 8853–8884.

44. Abdel-Basset, M.; Mohamed, R.; Azeem, S.A.A.; Jameel, M.; Abouhawwash, M. Kepler optimization algorithm: A new metaheuristic algorithm inspired by Kepler’s laws of planetary motion. Knowl.-Based Syst. 2023, 268, 110454.

45. Luan, T.M.; Khatir, S.; Tran, M.T.; De Baets, B.; Cuong-Le, T. Exponential-trigonometric optimization algorithm for solving complicated engineering problems. Comput. Methods Appl. Mech. Eng. 2024, 432, 117411.

46. Yuan, C.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Wu, Z.; Chen, H. Artemisinin optimization based on malaria therapy: Algorithm and applications to medical image segmentation. Displays 2024, 84, 102740.

47. Sowmya, R.; Premkumar, M.; Jangir, P. Newton-Raphson-based optimizer: A new population-based metaheuristic algorithm for continuous optimization problems. Eng. Appl. Artif. Intell. 2024, 128, 107532.

48. Yuan, C.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Chen, H. Polar lights optimizer: Algorithm and applications in image segmentation and feature selection. Neurocomputing 2024, 607, 128427.

49. Qi, A.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Chen, H. FATA: An efficient optimization method based on geophysics. Neurocomputing 2024, 607, 128289.

50. Yan, J.; Hu, G.; Shu, B. MGCHMO: A dynamic differential human memory optimization with Cauchy and Gauss mutation for solving engineering problems. Adv. Eng. Softw. 2024, 198, 103793.

51. Hu, G.; Gong, C.; Shu, B.; Xu, Z.; Wei, G. DHRDE: Dual-population hybrid update and RPR mechanism based differential evolutionary algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2024, 431, 117251.

52. Li, Y.; Su, S.; Hu, F.; He, X.; Su, J.; Zhang, J.; Li, B.; Liu, S.; Man, W. A novel fault location method for distribution networks with distributed generators based on improved seagull optimization algorithm. Energy Rep. 2025, 13, 3237–3245.

53. Jamal, R.; Khan, N.H.; Ebeed, M.; Zeinoddini-Meymand, H.; Shahnia, F. An improved pelican optimization algorithm for solving stochastic optimal power flow problem of power systems considering uncertainty of renewable energy resources. Results Eng. 2025, 26, 104553.

54. Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

55. Sirsant, S.; Reddy, M.J. Improved MOSADE algorithm incorporating Sobol sequences for multi-objective design of Water Distribution Networks. Appl. Soft Comput. 2022, 120, 108682.

56. Dhargupta, S.; Ghosh, M.; Mirjalili, S.; Sarkar, R. Selective Opposition based Grey Wolf Optimization. Expert Syst. Appl. 2020, 151, 113389.

57. Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S. An improved grey wolf optimizer for solving engineering problems. Expert Syst. Appl. 2021, 166, 113917.

58. Ahmadianfar, I.; Heidari, A.A.; Gandomi, A.H.; Chu, X.; Chen, H. RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Syst. Appl. 2021, 181, 115079.

59. Ghasemi, M.; Zare, M.; Trojovský, P.; Rao, R.V.; Trojovská, E.; Kandasamy, V. Optimization based on the smart behavior of plants with its engineering applications: Ivy algorithm. Knowl.-Based Syst. 2024, 295, 111850.

60. Zhang, Y. Elite archives-driven particle swarm optimization for large scale numerical optimization and its engineering applications. Swarm Evol. Comput. 2023, 76, 101212.

61. Coelho, L.D.S. Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst. Appl. 2010, 37, 1676–1683.

62. Nayyef, H.M.; Ibrahim, A.A.; Zainuri, M.A.A.; Zulkifley, M.A.; Shareef, H. A Novel Hybrid Algorithm Based on Jellyfish Search and Particle Swarm Optimization. Mathematics 2023, 11, 3210.

63. Meng, Z.; Zhong, Y.; Mao, G.; Liang, Y. PSO-sono: A novel PSO variant for single-objective numerical optimization. Inf. Sci. 2022, 586, 176–191.

64. Hu, G.; Guo, Y.; Zhao, W.; Houssein, E.H. An adaptive snow ablation-inspired particle swarm optimization with its application in geometric optimization. Artif. Intell. Rev. 2024, 57, 332.

65. Zeng, B.; He, C.; Mao, C.; Wu, Y. Forecasting China’s hydropower generation capacity using a novel grey combination optimization model. Energy 2023, 262, 125341.

66. Guan, Y.; Xiao, Y.; Niu, R.; Zhang, N.; Shao, C. Characterizing the water resource-environment-ecology system harmony in Chinese cities using integrated datasets: A Beautiful China perspective assessment. Sci. Total Environ. 2024, 921, 171094.

67. Wang, F.; Wang, W.; Wu, Y.; Li, W. Assessment of water retention dynamic in water resource formation area: A case study of the northern slope of the Qinling Mountains in China. J. Hydrol. Reg. Stud. 2024, 56, 102063.

68. Hu, G.; Song, K.; Abdel-salam, M. Sub-population evolutionary particle swarm optimization with dynamic fitness-distance balance and elite reverse learning for engineering design problems. Adv. Eng. Softw. 2025, 202, 103866.

69. Yuan, C.Q.; Liu, S.F.; Fang, Z.G. Comparison of China’s primary energy consumption forecasting by using ARIMA (the autoregressive integrated moving average) model and GM(1,1) model. Energy 2016, 100, 384–390.

70. Ye, J.; Dang, Y.; Ding, S.; Yang, Y. A novel energy consumption forecasting model combining an optimized DGM (1, 1) model with interval grey numbers. J. Clean. Prod. 2019, 229, 256–267.

71. Wang, Z.X.; Hipel, K.W.; Wang, Q.; He, S.W. An optimized NGBM(1,1) model for forecasting the qualified discharge rate of industrial wastewater in China. Appl. Math. Model. 2011, 35, 5524–5532.

72. Hu, G.; Guo, Y.; Zhong, J.; Wei, G. IYDSE: Ameliorated Young’s double-slit experiment optimizer for applied mechanics and engineering. Comput. Methods Appl. Mech. Eng. 2023, 412, 116062.

73. Abdel-Salam, M.; Alzahrani, A.I.; Alblehai, F.; Zitar, R.A.; Abualigah, L. An improved Genghis Khan optimizer based on enhanced solution quality strategy for global optimization and feature selection problems. Knowl.-Based Syst. 2024, 302, 112347.

74. Hu, G.; Huang, F.; Seyyedabbasi, A.; Wei, G. Enhanced multi-strategy bottlenose dolphin optimizer for UAVs path planning. Appl. Math. Model. 2024, 130, 243–271.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).