Abstract

A multi-strategy enhanced version of the escape algorithm (mESC) is proposed to address two weaknesses of the escape algorithm (ESC): the difficulty of balancing exploration and exploitation, and low convergence accuracy. First, an adaptive perturbation factor strategy is employed to maintain population diversity. Second, a restart mechanism is introduced to enhance the exploration capability of mESC. Third, a dynamic centroid reverse learning strategy is designed to balance local exploitation. In addition, to accelerate global convergence, a boundary adjustment strategy based on the elite pool is proposed, which selects elite individuals to replace poor individuals. mESC is compared with recent metaheuristic algorithms and with high-performance and competition-winning algorithms on the CEC2022 test suite, and the numerical results confirm that mESC outperforms its competitors. Finally, the superiority of mESC is verified on several classic real-world optimization problems.

1. Introduction

1.1. Research Background

An optimization problem seeks the best value of an objective function subject to given constraints [1]. Such problems arise in many fields, including physical chemistry [2], biomedicine [3], economics and finance [4], logistics and operations management [5], science and technology, and machine learning [6]. They are also common in real-world tasks such as path planning, image processing, and feature selection. Through optimization, the best candidate solutions can be identified and overall performance improved.

1.2. Literature Review

Traditional methods for solving optimization problems, such as the conjugate gradient method and the momentum method, have obvious disadvantages such as low efficiency and unsatisfactory optimization results. Metaheuristic algorithms offer an efficient alternative. They include classic algorithms inspired by the concept of selective elimination, such as particle swarm optimization (PSO) [7] and differential evolution (DE) [8]. They also include algorithms inspired by animals, such as the zebra optimization algorithm (ZOA), based on zebra foraging and predator avoidance behavior [9]; moth flame optimization (MFO), inspired by the navigation behavior of moths [10]; spider wasp optimization (SWO), inspired by the survival behavior of spider wasps [11]; seahorse optimization (SHO) [12], based on the biological habits of seahorses in the ocean; the artificial hummingbird algorithm (AHA) [13], based on the flight and foraging of hummingbirds; and the dwarf mongoose optimization algorithm (DMOA) [14], based on the collective foraging behavior of dwarf mongooses.
Metaheuristic algorithms also include algorithms inspired by physics and chemistry, such as multi-verse optimization (MVO), based on the physical motion of celestial bodies in the universe, and the planetary optimization algorithm (POA), inspired by Newton’s law of gravity [15]. Others are inspired by human social behavior, such as the imperialist competition algorithm (ICA), inspired by competition between countries in which the strong absorb the weak [16], and teaching–learning based optimization (TLBO), which simulates the human processes of “teaching” and “learning” [17]. Further examples are sled dog optimization (SDO) [18], gray wolf optimization (GWO) [19], the osprey optimization algorithm (OOA) [20], dung beetle optimization (DBO) [21], the gravitational search algorithm (GSA) [22], and big bang big crunch (BBBC) [23]. In addition, some algorithms improve on existing ones, such as the enhanced bottlenose dolphin optimization (EMBDO) for drone path planning with four constraints [24], the improved Kepler optimization algorithm (CGKOA) for engineering optimization problems [25], the superior eagle optimization algorithm (SEOA) for path planning [26], and the improved artificial rabbit optimization (MNEARO) for several engineering problems [27].

However, it is unrealistic to expect one algorithm to solve all problems, so proposing new algorithms and improving existing ones remains an effective approach. ESC [28] was proposed in response to this demand. It is inspired by crowd evacuation behavior and simulates three types of crowd behavior. ESC demonstrated its competitiveness against other algorithms on two test suites and several optimization problems. However, when balancing exploration and exploitation, and when handling high-dimensional problems, ESC may fall into local optima. Therefore, to better unleash the potential of ESC and further improve its performance, this article proposes mESC.

mESC enhances the overall performance of ESC with four components: an adaptive perturbation factor strategy, a boundary adjustment strategy based on the elite pool, a dynamic centroid reverse learning strategy, and a restart mechanism. The adaptive perturbation factor strategy maintains population diversity during iteration. The restart mechanism enhances the exploration capability of mESC and prevents excessive convergence in the later stages of iteration. The boundary adjustment strategy based on the elite pool selects better individuals as candidate solutions and accelerates convergence. The dynamic centroid reverse learning strategy balances local exploitation, which improves convergence accuracy and strengthens local optimization.

1.3. Research Contribution

This study proposes a multi-strategy escape algorithm, mESC. The algorithm improves on the original ESC, and its performance is validated through multiple experimental metrics on a test suite against 26 competitors. In addition, mESC is applied to truss topology optimization and five engineering design optimization problems to confirm its superiority. The improved algorithm offers an additional method for solving optimization problems and improves solution accuracy on the problems tested.

1.4. Chapter Arrangement

Section 2 first describes the concept of ESC, then presents the proposed improvement strategies of mESC, and finally reports numerical experiments that verify the performance of the proposed algorithm. Section 3 confirms the practicality of the proposed algorithm through truss topology optimization and five engineering optimization problems. Section 4 summarizes the paper.

2. Related Work

2.1. Inspiration Source

The escape algorithm is based on the response of a crowd during evacuation in an emergency. It simulates the states and behaviors of the crowd during an emergency evacuation and divides the crowd into three groups: calm, gathering, and panic. The calm group moves steadily towards a safe zone, the gathering group is in a hesitant state, and the panic group cannot move smoothly towards a safe zone.

2.2. Mathematical Modeling of ESC

(1) Initialization

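ESC uses random initialization:

$$x_{i,j} = lb_j + r_{i,j}\,(ub_j - lb_j) \quad (1)$$

In the formula, $x_{i,j}$ is the position of the $i$th individual in the $j$th dimension, $lb_j$ and $ub_j$ represent the lower and upper bounds of the $j$th dimension, and the random variable $r_{i,j}$ follows a uniform distribution between 0 and 1.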
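As a minimal sketch of this initialization step, the Python snippet below samples every coordinate uniformly between its lower and upper bound. The function name `init_population`, the bounds of ±100, and the sizes in the usage line are illustrative assumptions, not values taken from the ESC source.

```python
import random


def init_population(pop_size, lower, upper, rng=None):
    """Sample pop_size positions uniformly inside per-dimension bounds."""
    rng = rng or random.Random()
    dim = len(lower)
    return [
        # rng.random() is uniform on [0, 1), so each coordinate stays in bounds.
        [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(dim)]
        for _ in range(pop_size)
    ]


# Hypothetical usage: 30 individuals in a 10-dimensional box.
population = init_population(30, [-100.0] * 10, [100.0] * 10, random.Random(0))
```

Passing a seeded `random.Random` instance keeps repeated runs reproducible, which is convenient when independent runs are compared later.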

Then, the fitness values of the population are calculated and sorted in ascending order. The best individuals are stored in the elite pool Epool; these elites are the best solutions discovered so far. The specific expression is as follows:
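The sorting and elite-pool step can be sketched in Python as follows, assuming a minimization problem. The pool size `pool_size` and the `sphere` objective are hypothetical placeholders, since this passage does not state the pool size.

```python
def sphere(x):
    """Toy objective used only for illustration (lower is better)."""
    return sum(v * v for v in x)


def build_elite_pool(population, fitness_fn, pool_size):
    """Sort by fitness in ascending order and copy the best individuals."""
    ranked = sorted(population, key=fitness_fn)
    return [list(ind) for ind in ranked[:pool_size]]


pop = [[3.0, 4.0], [0.5, 0.5], [1.0, 0.0], [2.0, 2.0]]
elite_pool = build_elite_pool(pop, sphere, pool_size=2)
```

Copying the selected individuals keeps the pool unchanged when the population is updated in place later.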

(2) Panic index

This value represents the panic level of the crowd during the evacuation process, expressed as follows:

The larger the value, the more chaotic the behavior becomes. As the iteration progresses, the panic level decreases and the crowd gradually adapts to the environment. In the equation, represents the current iteration count and represents the maximum iteration count.

(3) Exploration stage

The three states of calmness, gathering, and panic are grouped according to the ratio, which is in line with the actual behavioral state of the crowd in emergency situations. Most people are in a state of panic, and only a small number of people are calm.

Calm group update:

in the formula, represents the mean of all calm groups in the th dimension, is obtained according to the following equation,

In the formula, represents the group position of the calm group, represents the maximum and minimum values of the calm group in the th dimension, respectively, represents the individual’s adjustment value that satisfies , is a random value of 0 or 1, and is the adaptive Levy weight.

Gathering group update:

In the equation, is a random individual within the panic group, and the vector is obtained according to the following equation,

In the formula, is a random position, is the maximum and minimum values of the cooling group, and is similar to .

Panic group update:

Individuals in the panic group are influenced by members of the elite pool and by other randomly selected individuals,

In the formula, represents individuals in the elite pool, represents randomly selected individuals in the population, and the vector is obtained according to the following equation,

In the formula, is the group position of the panic group, and is the upper and lower bounds of the panic group.

(4) Exploitation stage

At this stage, all individuals remain calm and improve their position by approaching members of the elite pool. This process simulates the crowd gradually gathering towards the determined optimal exit,

In the formula, represents the individual’s position, and represents the currently obtained best solution or exit.

2.3. The Proposed mESC Algorithm

ESC performs well on simple low-dimensional problems, but its performance decreases significantly on complex multimodal and composition functions. It is prone to becoming trapped in local regions of the solution space, and the continuous loss of population diversity makes it difficult to keep exploration and exploitation in balance, which ultimately degrades overall performance. Therefore, this article proposes several improvement strategies and develops the mESC algorithm to address these shortcomings. The specific improvement strategies are as follows.

2.3.1. Adaptive Perturbation Factor Strategy

Generally speaking, population diversity decreases as an algorithm runs, and the significant loss of diversity in the later stages of iteration hinders full exploration by the population. To overcome this drawback, this article proposes an adaptive perturbation factor that adjusts the perturbation probability of individuals as the iteration progresses, thereby enriching population diversity. The specific expression is as follows:

is a random number in the equation.

As increases, it enhances the global search capability and avoids falling into local optima. However, as gradually decreases, it improves the local search accuracy and accelerates convergence. For continuous functions, when an individual has not yet found the optimal solution, there are better solutions nearby. The adaptive perturbation factor can adjust the individual extremum, global extremum, and position term to increase the possibility of finding the global optimal solution.

2.3.2. Restart Mechanism

ESC suffers from premature stagnation and from becoming trapped in local regions, and this article uses a restart mechanism [29] to mitigate both. The mechanism enhances the robustness of ESC, improves its global search capability, and eliminates some poor individuals, thereby improving convergence.

We set the worst individual factor in this article to 0.05, select the worst quantity as , and as the population size. The position update equation for these worst individuals is as follows:

satisfies the formula in the equation,

In the formula, follows a Gaussian distribution, represents the optimal individual, and is a random number.

In this strategy, the restart mechanism gives the algorithm the opportunity to jump out of local optima and explore other solution spaces, thereby increasing the probability of finding the global optimum. In addition, as the iteration progresses, the convergence speed may slow down. However, the restart mechanism is equivalent to using the fast convergence characteristics of the population in the initial stage of the iteration, and this mechanism can prompt the algorithm to continue optimization when facing local stagnation.
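The restart idea can be sketched in Python under stated assumptions. The update equation is not reproduced in this text, so the Gaussian step around the best individual, the step size `scale`, the clamping to the bounds, and the rounding of the worst-individual count are illustrative choices rather than the authors' exact rule. Only the factor 0.05 comes from the description above.

```python
import random


def sphere(x):
    """Toy objective used only for illustration (lower is better)."""
    return sum(v * v for v in x)


def restart_worst(population, fitness_fn, lower, upper,
                  worst_ratio=0.05, scale=0.1, rng=None):
    """Re-sample the worst individuals around the current best one."""
    rng = rng or random.Random()
    ranked = sorted(population, key=fitness_fn)  # ascending: best first
    best = list(ranked[0])
    n_worst = max(1, int(worst_ratio * len(ranked)))  # assumed rounding
    for ind in ranked[-n_worst:]:
        for j in range(len(ind)):
            # Assumed rule: Gaussian step scaled by the width of the range.
            step = rng.gauss(0.0, 1.0) * scale * (upper[j] - lower[j])
            ind[j] = min(max(best[j] + step, lower[j]), upper[j])
    return ranked
```

Only the tail of the ranking is modified, so the best individuals found so far are preserved while the restarted ones get a new chance to leave a stagnant region.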

2.3.3. Boundary Adjustment Strategy Based on Elite Pool

Equation (10) gives the positions of the entire population. Next, boundary handling is applied to these positions so that the algorithm remains correct and robust when individuals reach or leave the boundaries. Usually, an individual that exceeds the search boundary is simply assigned the boundary value, which can cause individuals to cluster on the boundary and hinder the search for the global optimum. This article introduces a boundary adjustment strategy based on the elite pool, which updates out-of-bound individuals using selected members of the elite pool.

Therefore, the position formula of the population is updated to,

In the formula, .

In this strategy, the elite pool stores the best solutions of each iteration so that they are not eliminated, and these elite solutions reduce wasted evaluations by concentrating the search on promising regions. Retaining diverse elite solutions helps prevent the population from converging prematurely to local solutions and enhances global search capability. Because elite solutions come from different regions of the population, they help the algorithm maintain a balance between exploration and exploitation. Once the positions of the elite solutions are known, the boundary can be adjusted so that the search proceeds in the area most likely to contain the global optimum.
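The contrast between plain clamping and an elite-based repair can be sketched as follows. The position formula is not reproduced in this text, so replacing each out-of-bound coordinate with the same coordinate of a randomly chosen elite is an assumed rule, and the function names are hypothetical.

```python
import random


def clip_to_bounds(position, lower, upper):
    """Conventional handling: clamp each coordinate to the nearest bound."""
    return [min(max(v, lo), hi) for v, lo, hi in zip(position, lower, upper)]


def repair_with_elite(position, lower, upper, elite_pool, rng=None):
    """Assumed elite-based handling: copy violating coordinates from an elite."""
    rng = rng or random.Random()
    repaired = list(position)
    for j, value in enumerate(position):
        if value < lower[j] or value > upper[j]:
            elite = rng.choice(elite_pool)  # elites are assumed to be in bounds
            repaired[j] = elite[j]
    return repaired


elites = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
x = [12.0, 0.3, -11.0]
clipped = clip_to_bounds(x, [-10.0] * 3, [10.0] * 3)
repaired = repair_with_elite(x, [-10.0] * 3, [10.0] * 3, elites, random.Random(0))
```

With clamping, every violating individual ends up exactly on the boundary, whereas the elite-based repair moves the violating coordinates back into regions that have already produced good solutions.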

2.3.4. Dynamic Centroid Reverse Learning Strategy

This article proposes a random centroid reverse learning strategy to improve ESC. Reverse learning (also known as opposition-based learning) considers the opposite of each existing solution alongside the solution itself; the better of the two is kept and used to guide the other individuals in the population. By combining reverse learning with centroids and introducing a random element, the robustness of mESC is improved. The specific form is as follows:

Generate integer , which is the number of randomly selected populations. Then, randomly select individuals from the current population and calculate their centroids, expressed as follows:

Then, generate the reverse solutions of the population with respect to the centroid, as shown below:

Finally, using the greedy rule, the top individuals with the best fitness values are selected from the union of the improved population and the population produced by random centroid reverse learning to form the new generation. The random centroid reverse learning strategy uses the good solutions obtained in the previous generation to guide the population, which improves accuracy, accelerates convergence, and enables a faster search near potentially optimal positions.

In this strategy, the centroid is updated dynamically as the population changes, so the algorithm can respond to those changes in time and avoid premature convergence. With reverse learning integrated, the reverse solutions expand the search space and increase population diversity, which helps the algorithm escape from local optima.
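The three steps above (random subset, centroid, reverse solutions, then greedy selection) can be sketched in Python. The reflection `2 * centroid - x` is the usual centroid-based opposition formula and is assumed here because the equations are not reproduced in this text. The sampling range for the subset size and the clamping to the bounds are also assumptions.

```python
import random


def sphere(x):
    """Toy objective used only for illustration (lower is better)."""
    return sum(v * v for v in x)


def centroid_reverse_learning(population, fitness_fn, lower, upper, rng=None):
    """Reflect the population through a random-subset centroid, keep the best N."""
    rng = rng or random.Random()
    n, dim = len(population), len(population[0])
    k = rng.randint(2, n)  # assumed range for the subset size
    subset = rng.sample(population, k)
    centroid = [sum(ind[j] for ind in subset) / k for j in range(dim)]
    reversed_pop = []
    for ind in population:
        opposite = [2.0 * centroid[j] - ind[j] for j in range(dim)]
        # Assumed repair: clamp reflected points back into the search box.
        reversed_pop.append(
            [min(max(v, lower[j]), upper[j]) for j, v in enumerate(opposite)]
        )
    merged = sorted(population + reversed_pop, key=fitness_fn)
    return merged[:n]  # greedy rule: best N of the 2N candidates
```

Because the original individuals are among the candidates, the best fitness in the returned population can never be worse than before the step.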

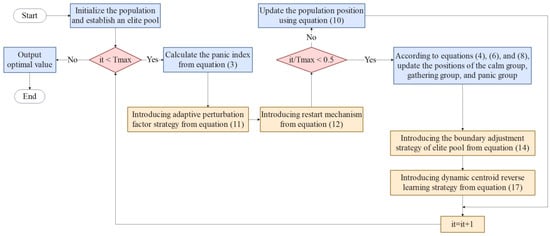

2.3.5. Specific Steps of mESC

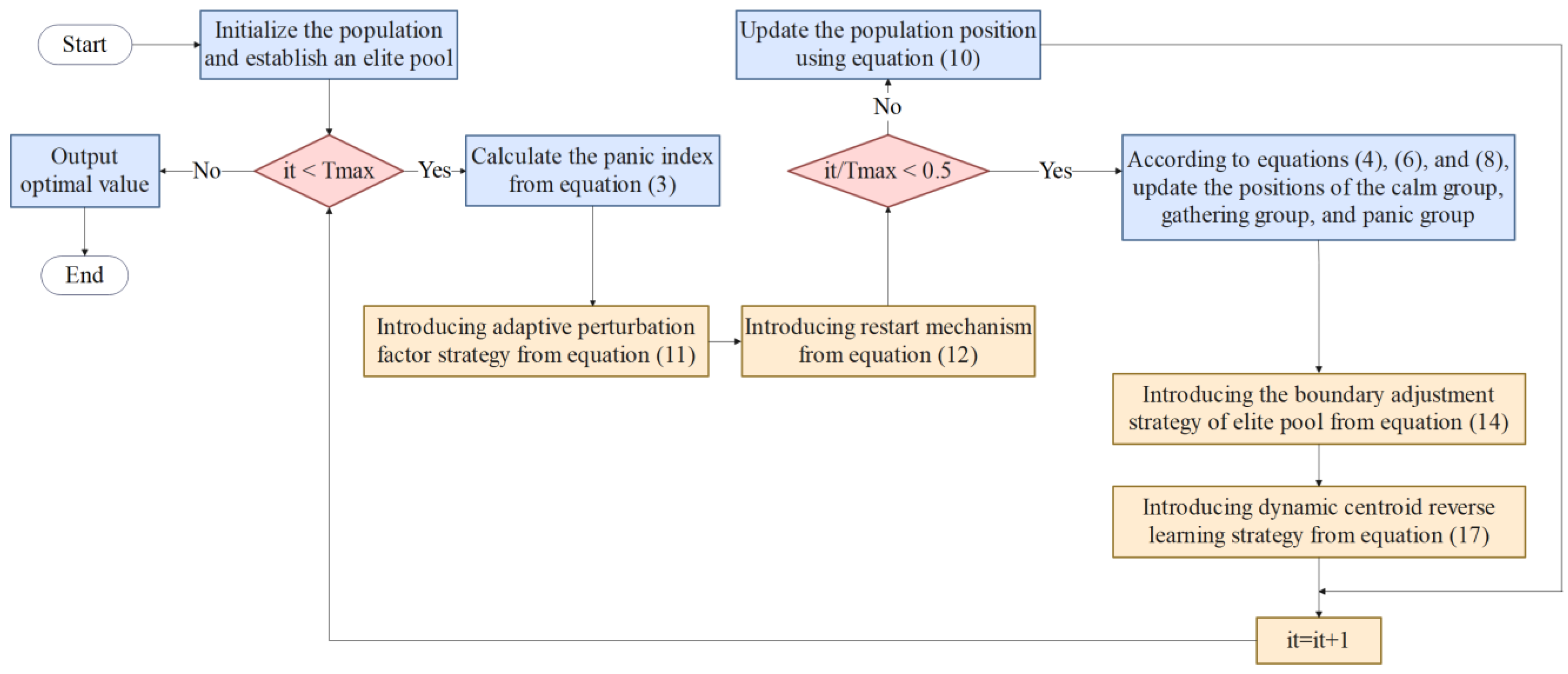

The pseudocode of the improved escape algorithm incorporating adaptive perturbation factor, restart mechanism, boundary adjustment strategy based on elite pool, and dynamic centroid reverse learning strategy, is listed in Algorithm 1. Figure 1 shows the flowchart of mESC.

| Algorithm 1 The proposed mESC |
| --- |
| Input: Dimension , Population , Population size , Maximum number of iterations , The worst individual ratio Pworst is 0.1 |
| Output: Optimal fitness value |
| 1: Randomly initialize the individual positions of the population using Equation (1) |
| 2: Sort the fitness values of the population in ascending order and record the current optimal individual |
| 3: Store the refined individuals in the elite pool of Equation (2) |
| 4: while do |
| 5: Calculate the panic index from Equation (3) |
| 6: |
| 7: for do |
| 8: for do |
| 9: |
| 10: |
| 11: end for |
| 12: end for |
| 13: if then |
| 14: Divide the population into three groups and update the three groups according to Equations (4), (6) and (8) |
| 15: else |
| 16: Equation (10) updates the population |
| 17: end |
| 18: for do |
| 19: |
| 20: |
| 21: end |
| 22: |
| 23: Calculate individual fitness values and update the elite pool based on the optimal value |
| 24: |
| 25: end while |
| 26: Return the optimal solution from the elite pool |

Figure 1. Proposed mESC flowchart.

2.4. Complexity Analysis of mESC

The time complexity of mESC consists of three parts: initialization, main iteration process, and dynamic centroid reverse learning: population size of , number of iterations of , dimension of , restart times of , and elite pool size of . The time complexity of mESC is as follows:

The space complexity of an algorithm describes how the required storage grows during its execution. The space complexity of mESC consists of population storage, fitness value storage, and elite pool storage. The space complexity contributed by the other strategies is . The space complexity of mESC is as follows:

2.5. Experimental Setup

We conducted two sets of comparative experiments to validate the performance of the proposed algorithm. The first set compared mESC with 16 recently proposed metaheuristic algorithms, including competitive algorithms proposed in 2023 and 2024 that have shown excellent performance on some problems. The second set compared mESC with 11 high-performance and competition-winning algorithms, including 3 winners of CEC competitions, 4 DE variants, and 3 PSO variants. These algorithms perform strongly in general and are often used as competitors in comparative experiments. Comparing mESC with both recent algorithms and established strong algorithms highlights its superiority.

This article will include some experimental tables and images in the Supplementary Materials. Among them, Tables S1 and S2 and Tables S3 and S4, respectively, show the results of two types of parameters in the 10 and 20 dimensions. Tables S5 and S6 and Tables S7 and S8 show the experimental results and Wilcoxon results of mESC and the novel metaheuristic algorithm in 10 and 20 dimensions, respectively. Tables S9 and S14 show the running times of mESC, the new metaheuristic algorithm, and the high-performance, winner algorithm, respectively. Tables S10 and S11 and Tables S12 and S13, respectively, show the experimental results and Wilcoxon results of mESC and high-performance, winner algorithm in 10 and 20 dimensions. Figures S1 and S2 show the convergence curves and boxplots of mESC and the novel metaheuristic algorithm, respectively. Figures S3 and S4 show the convergence curves and boxplots of mESC and high-performance, winner’s algorithm, respectively.

2.5.1. Benchmark Test Function

The CEC2022 test suite (D = 10, 20) is used to evaluate the performance of mESC in both comparative experiments. The population size is set to 30 and the maximum number of iterations to 500, and each algorithm is run independently 30 times.
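The evaluation protocol (fixed population size, fixed iteration budget, repeated independent runs) can be sketched as a small harness. Everything below is a stand-in: `random_search` is a hypothetical placeholder optimizer and not mESC, `sphere` replaces the CEC2022 functions, and the search range of ±100 is an assumption. Only the defaults 30, 500, and 30 come from the text.

```python
import random
import statistics


def sphere(x):
    """Toy objective standing in for a benchmark function."""
    return sum(v * v for v in x)


def random_search(objective, dim, lower, upper, pop_size, max_iter, rng):
    """Placeholder optimizer: best value among uniformly sampled points."""
    best = float("inf")
    for _ in range(max_iter):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, objective(x))
    return best


def run_protocol(optimizer, objective, dim, runs=30, pop_size=30,
                 max_iter=500, seed=0):
    """Run independent seeded trials and summarize the best values found."""
    results = [
        optimizer(objective, dim, -100.0, 100.0, pop_size, max_iter,
                  random.Random(seed + r))
        for r in range(runs)
    ]
    return statistics.mean(results), statistics.stdev(results)


# Reduced budget for a quick check; the defaults mirror the stated settings.
mean_best, std_best = run_protocol(random_search, sphere, dim=10,
                                   runs=5, max_iter=50)
```

Seeding each run separately keeps the runs independent while making the whole experiment repeatable.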

2.5.2. Parameter Settings

The new metaheuristic algorithms compared in the first set of experiments include Parrot Optimization (PO) [30], Geometric Mean Optimization (GMO) [31], Fata Morgana Algorithm (FATA) [32], Moss Growth Optimization (MGO) [33], Crested Porcupine Optimizer (CPO) [34], Polar Lights Optimization (PLO) [35], Newton–Raphson-based Optimizer (NRBO) [36], Information Acquisition Optimization (IAO) [37], Love Evolutionary Algorithm (LEA) [38], Escape Algorithm (ESC), Improved Artificial Rabbit Optimization (MNEARO), Artificial Hummingbird Algorithm (AHA), Dwarf Mongoose Optimization Algorithm (DMOA), Zebra Optimization Algorithm (ZOA), and Seahorse Optimization (SHO). The high-performance and competition-winning algorithms compared in the second set of experiments include Autonomous Particle Groups for Particle Swarm Optimization (AGPSO) [39], Integrating Particle Swarm Optimization and Gravitational Search Algorithm (CPSOGSA) [40], Improved Particle Swarm Optimization (TACPSO) [41], Bernstein–Levy Differential Evolution (BDE) [42], Bezier Search Differential Evolution (BeSD) [43], Multi Population Differential Evolution (MDE) [44], Improving Differential Evolution through Bayesian Hyperparameter Optimization (MadDE) [45], Improved LSHADE Algorithm (LSHADE-cnEpSin) [46], Improvement of L-SHADE Using Semi-parametric Adaptive Method (LSHADE-SPACMA) [47], and Improving SHADE with Linear Population Size Reduction (LSHADE) [48]. All parameters are given in Table 1.

Table 1. Parameter settings.

2.5.3. Empirical Test

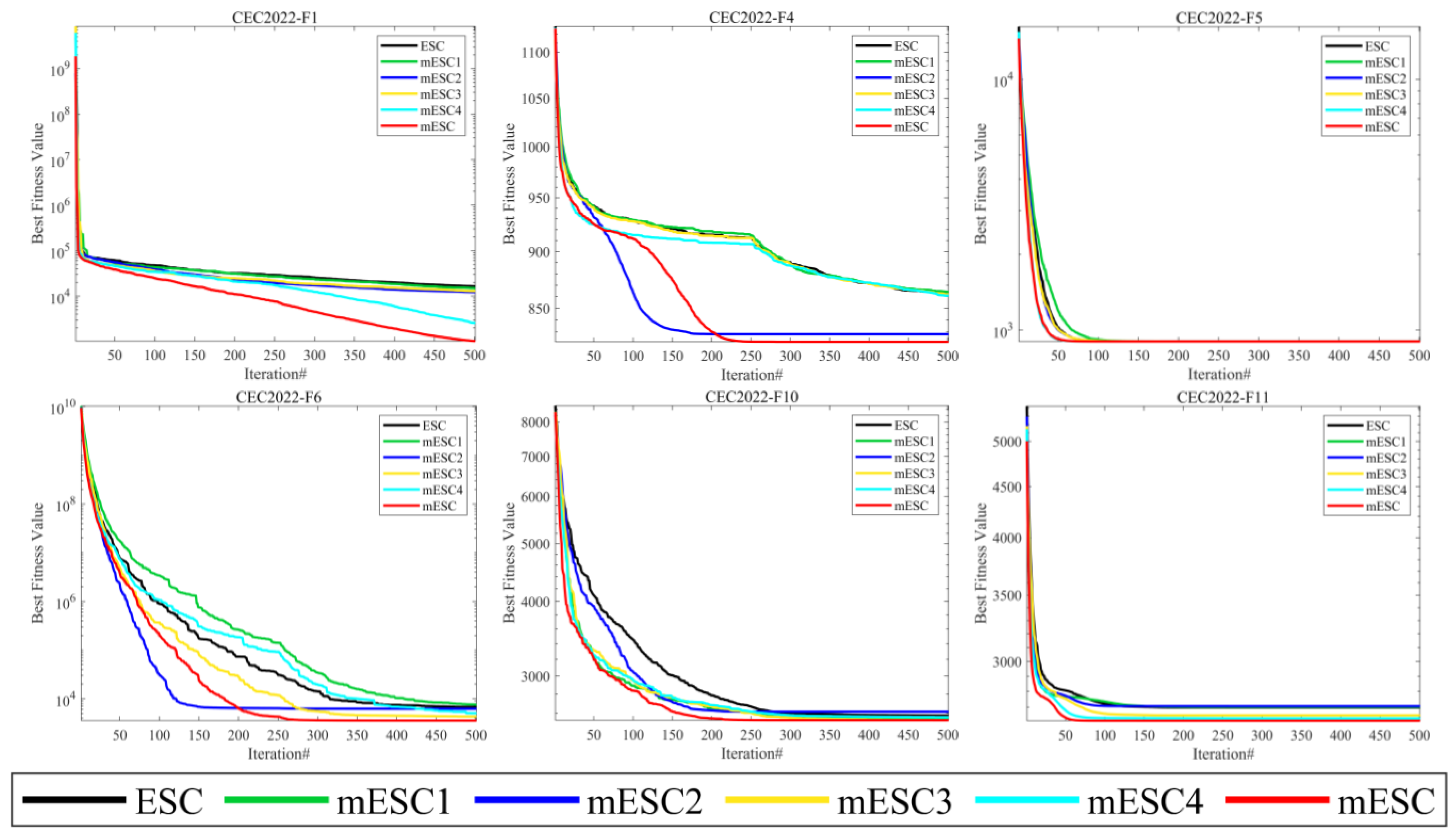

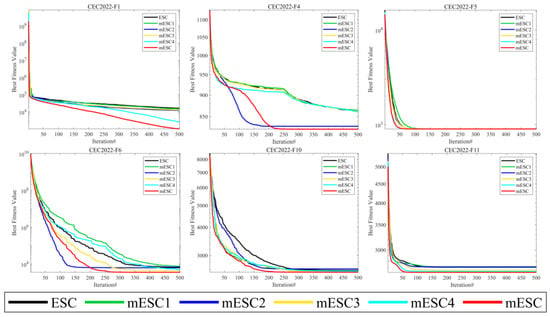

We define mESC1, mESC2, mESC3, and mESC4 as the variants that each add a single strategy to ESC: the adaptive perturbation factor, the restart mechanism, the boundary adjustment strategy based on the elite pool, and the dynamic centroid reverse learning strategy, respectively. We then compare mESC, ESC, mESC1, mESC2, mESC3, and mESC4 to analyze the impact of each individual strategy on the original ESC and on mESC.

Figure 2 shows the convergence of the six algorithms. On F1, mESC and mESC4 converge best, while the other competitors fall into local optima midway through the run; moreover, mESC converges 1 to 2 orders of magnitude better than mESC4. On F4, mESC and mESC2 have the closest convergence behavior. On F5, the convergence of the six algorithms is visually very close, but mESC converges fastest. On F6, mESC has the highest convergence accuracy, while mESC2 converges faster than mESC. On F10, mESC1, mESC3, mESC4, and mESC converge very similarly at the beginning of the run, but mESC reaches a higher convergence accuracy. On F11, mESC and mESC4 converge very similarly, while the convergence accuracy of the other algorithms is inferior to that of mESC. The four strategies play different roles in improving the algorithm because their mechanisms differ, and combining them produces better results.

Figure 2. Convergence curves of mESC, ESC, mESC1, mESC2, mESC3, and mESC4 on CEC2022.

Table 2 presents the experimental results on the 12 functions of the CEC2022 test suite. According to this table, mESC has the best overall performance. mESC has the best mean on 10 functions and the best standard deviation on four functions, indicating that the combination of the four improvement strategies is effective. mESC is optimal on the unimodal function and also achieves optimality on the basic functions. mESC3 performs best on the hybrid function F6, while mESC reaches the optimum on F7 and F8. mESC1 performs best on F10, outperforming mESC, while mESC performs better on the other functions. Overall, mESC performs best, which validates the effectiveness of the four strategies.

Table 2. Experimental results of ESC, mESC, mESC1, mESC2, mESC3, and mESC4 on CEC2022.

2.5.4. Sensitivity of the Parameters

In the proposed mESC, two introduced parameters affect the performance of the algorithm: the proportion of worst-performing individuals in the restart mechanism and the adjustment range of the adaptive perturbation factor. In this section, we determine these parameters on the CEC2022 test suite. The problem dimensions are the standard dimensions of the suite, 10 and 20, with a maximum of 500 iterations. The average values obtained with different parameter settings are shown in Tables S1–S4, and the ranking is given in the last row of each table.

The worst individual ratio determines the proportion of individuals replaced at each restart. A smaller value accelerates convergence but makes it easier to fall into local optima, while a larger value strengthens global search but significantly slows convergence. The parameter ranges from 0.05 to 0.20 in steps of 0.05. As shown in Tables S1 and S2, achieves better performance in both 10 and 20 dimensions based on the final ranking.

The adjustment range of the adaptive perturbation factor determines how much the factor can vary. When the problem requires fast convergence, this range can be reduced appropriately; when high solution quality is required, the range should be increased. In Tables S3 and S4, values are selected between 0.9 and 0.5 in increments gradually decreasing by 0.01. The results indicate that the most promising range is obtained when the parameter decreases from 0.7 to 0.5.

2.6. Experimental Analysis of mESC and New Metaheuristic Algorithm

As shown in Figure S1, mESC converges well compared with the other competitors on CEC2022 problems of different dimensions. In 10 dimensions, mESC converges fastest in the early stage on F1, F3, F5, and F7 and quickly finds the global optimum. ZOA converges fastest in the early stage on F4, while LEA and CPO fall into local optima prematurely on multiple functions. The optimization accuracy of mESC is much better than that of the other algorithms. On the 20-dimensional F4 problem, ZOA, SHO, and NRBO converge slightly faster than the proposed algorithm in the early stages of iteration, but mESC has the best convergence accuracy. On F3, F4, and F11, AHA, MNEARO, ESC, and mESC behave very similarly in the middle and later stages of iteration, but mESC reaches a higher convergence accuracy.

From Tables S5 and S6, it can be seen that mESC’s overall average ranking and its rankings on the individual benchmark functions are superior to those of the other competitors. In 10 dimensions, its overall ranking is 1.83 and it ranks first on 5 benchmark functions; MNEARO’s overall ranking is second only to mESC. In 20 dimensions, its overall ranking is 1.50 and it ranks first on 8 benchmark functions. MGO ranks worst in both dimensions.
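An overall average ranking of this kind can be computed by ranking the algorithms on each function and averaging the ranks. The sketch below assumes that lower mean values are better and that ties share the average of their ranks; the tie rule is an assumption. The numbers in the usage example are toy values, not results from the supplementary tables.

```python
def average_ranks(mean_values):
    """mean_values maps algorithm name -> list of per-function means."""
    names = list(mean_values)
    n_funcs = len(mean_values[names[0]])
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        ordered = sorted(names, key=lambda name: mean_values[name][f])
        i = 0
        while i < len(ordered):
            j = i
            # Extend j over algorithms tied with the one at position i.
            while (j + 1 < len(ordered)
                   and mean_values[ordered[j + 1]][f] == mean_values[ordered[i]][f]):
                j += 1
            shared_rank = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
            for name in ordered[i:j + 1]:
                totals[name] += shared_rank
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


# Toy data for three hypothetical algorithms on three functions.
toy = {"A": [1.0, 2.0, 3.0], "B": [2.0, 1.0, 3.0], "C": [3.0, 3.0, 1.0]}
ranks = average_ranks(toy)
```

In the toy data, A and B tie on the third function and each receives rank 2.5 there, which is why their averages coincide.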

Tables S7 and S8 present the statistical results of the Wilcoxon rank sum test on different dimensions of CEC2022. On the 10 dimensions, mESC outperforms PO, FATA, MGO, ECO, CPO, PLO, NRBO, LEA, SHO, ZOA, and MNEARO on all 12 benchmark functions. On the 20 dimensions, mESC outperforms PO, FATA, MGO, NRBO, ECO, CPO, PLO, LEA, SHO, ZOA, and MNEARO on 12 benchmark functions, and also outperforms mESC on five functions.

Figure S2 shows the boxplots of mESC and the other competitors in different dimensions of CEC2022. Overall, mESC has shorter boxes on most functions, especially F1, F3, F5, and F7 in 10 dimensions and F2, F9, F10, and F11 in 20 dimensions. This demonstrates the superiority and stability of mESC.

The running time of an algorithm is also an indicator of its performance. As shown in Table S9, CPO has the shortest running time and ranks first, while mESC ranks very low in running time in both dimensions, which is in line with our expectations. Because the added strategies improve performance at the cost of extra computation, we consider this trade-off acceptable.

2.7. Experimental Analysis of mESC and High-Performance, Winner Algorithm

The experimental results of mESC and the high-performance and competition-winning algorithms on CEC2022 are presented in Tables S10 and S11. In 10 dimensions, mESC outperforms the other competitors on 8 benchmark functions, ranking first overall with an average ranking of 1.58. MadDE, ranked second overall with an average ranking of 3.50, outperforms the proposed algorithm on two benchmark functions. MDE performs better than mESC on two benchmark functions, but its overall ranking is only fourth. Overall, the comparison with these competitors validates the superior performance of mESC.

Tables S12 and S13 show the Wilcoxon rank sum test results of mESC and the high-performance and competition-winning algorithms on the CEC2022 test suite (the symbol ♢ in the tables denotes $3.0199 \times 10^{-11}$). In 10 dimensions, BDE, BeSD, MDE, and MadDE outperform mESC on 2, 2, 2, and 3 benchmark functions, respectively, while mESC is superior to AGPSO, TACPSO, LSHADE-cnEpSin, and LSHADE on all 12 functions. In 20 dimensions, mESC outperforms the other seven competitors on 12 benchmark functions and performs comparably to CPSOGSA, TACPSO, and MDE on only one function. Therefore, the proposed strategies significantly improve the algorithm.
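For readers who want to reproduce such p-values, the following is a minimal pure-Python sketch of a two-sided rank sum test. It assumes the normal approximation with a continuity correction and omits the tie correction of the variance; whether the authors used exactly this variant is not stated. Under this approximation, two samples of 30 runs whose values do not overlap at all give a p-value of about $3.02 \times 10^{-11}$, which is consistent with the value denoted by ♢.

```python
import math


def rank_sum_p_value(x, y):
    """Two-sided Wilcoxon rank sum p-value via the normal approximation."""
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # tied values share the average rank
        i = j + 1
    n1, n2 = len(x), len(y)
    r1 = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # no tie correction (assumed)
    z = max(abs(r1 - mean) - 0.5, 0.0) / sd  # continuity correction
    return math.erfc(z / math.sqrt(2))


# Two fully separated samples of 30 values each (illustrative data).
p_separated = rank_sum_p_value(list(range(30)), list(range(100, 130)))
p_interleaved = rank_sum_p_value([1, 3, 5], [2, 4, 6])
```

A p-value at this floor therefore only says that every run of one algorithm beat every run of the other; it cannot distinguish how large the gap is.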

Figure S3 shows the convergence curves of mESC and the high-performance and competition-winning algorithms on CEC2022. In 10 dimensions, the convergence of mESC is superior to that of the other competitors. LSHADE-SPACMA, LSHADE, and CPSOGSA begin to fall into local optima midway through the iterations on F1 and F4. In 20 dimensions, the convergence speed of mESC improves as the dimension increases. The accuracy of mESC on F1 and F4 is significantly better than that of the other competitors, and some algorithms fall into local optima early on F3, F7, and F11, while mESC continues to optimize steadily.

Table S14 presents the comparison results of the running time between mESC and high-performance, winner algorithms. The running time ranking of mESC is 12th in both dimensions, which is in line with our initial expectations. LSHADE has the shortest running time and ranks first.

Figure S4 shows the boxplots of mESC and the other algorithms in different dimensions of CEC2022. Overall, mESC has shorter boxes for the vast majority of functions, especially F1, F5, and F7 in 10 dimensions and F1, F2, F4, and F8 in 20 dimensions. This indicates the stability of mESC.

3. Real World Application Solving

Next, we apply the proposed mESC to five engineering optimizations and two truss topology optimizations, highlighting its superiority through the results of solving these problems with mESC and other competitors.

3.1. mESC Optimization of Truss Topology Design Problem

Structural optimization design seeks a scheme that minimizes volume, minimizes cost, or maximizes stiffness under given constraints. Truss optimization aims to reduce weight as much as possible so that resources are used efficiently. The problem is formulated as follows:

Find

Minimize

is the cross-sectional area, is the mass value, is the mass density, and is the length of the rod.

Subject to

In the formula, represents stress, represents stress constraint, and represents binary bits, , and are displacement constraints, natural frequency constraints, and Euler buckling constraints, respectively; is a cross-sectional area constraint, and without truss connections, this truss topology is invalid, i.e., . The consideration of motion stability will ensure the smooth use of the truss, i.e., .

In the truss topology optimization, if the solved cross-sectional area is less than the critical area, it is assumed that the member is removed from the Truss; otherwise, the member is retained. If the loading node, the supporting node, and the non-erasable node are not connected through any Truss pole, the generated Truss topology is invalid (g6). In order to ensure the generation of a motion stable Truss structure, motion stability (g7) is included in the design constraints, which are mainly divided into the following two criteria:

(1) The degrees of freedom of the structure calculated using the Grubler criterion should be less than 1;

(2) The motion stability of the structure is checked by the positive definiteness of the stiffness matrix created by component connections, and the global stiffness matrix should be positive definite.
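The removal rule and the two stability criteria above can be sketched in a few lines. This is a minimal illustration rather than the implementation used in the experiments: all function names are hypothetical, the degree-of-freedom count assumes the simplified planar pin-jointed form (2 × nodes − members − support reactions), and positive definiteness is tested by attempting a Cholesky factorization of a symmetric stiffness matrix.

```python
from math import sqrt


def member_bits(areas, critical_area):
    """Return 1 for a retained member and 0 for one whose area is below the critical area."""
    return [1 if a >= critical_area else 0 for a in areas]


def truss_mass(areas, densities, lengths, bits, lumped_masses=()):
    """Mass of the retained members plus any lumped nodal masses (assumed objective form)."""
    members = sum(b * a * rho * length
                  for b, a, rho, length in zip(bits, areas, densities, lengths))
    return members + sum(lumped_masses)


def planar_dof(n_nodes, n_members, n_reactions):
    """Simplified degree-of-freedom count for a planar pin-jointed truss (an assumption)."""
    return 2 * n_nodes - n_members - n_reactions


def is_positive_definite(matrix, tol=1e-12):
    """Try a Cholesky factorization of a symmetric matrix; it succeeds only if the matrix is positive definite."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - s
                if pivot <= tol:
                    return False  # zero or negative pivot: not positive definite
                lower[i][j] = sqrt(pivot)
            else:
                lower[i][j] = (matrix[i][j] - s) / lower[j][j]
    return True


# Illustrative values only; they are not taken from the paper's tables.
areas = [2.0, 0.05, 1.5]
bits = member_bits(areas, critical_area=0.1)
mass = truss_mass(areas, [1.0, 1.0, 1.0], [10.0, 10.0, 10.0], bits)
dof_ok = planar_dof(n_nodes=3, n_members=3, n_reactions=3) < 1
stable = is_positive_definite([[2.0, -1.0], [-1.0, 2.0]])
mechanism = is_positive_definite([[1.0, -1.0], [-1.0, 1.0]])
```

In this toy case the second member falls below the critical area and is removed, the degree-of-freedom count is 0 (so criterion (1) holds), and the singular matrix in the last line fails the positive-definiteness test, as a mechanism would.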

To evaluate whether a design scheme satisfies the constraints, a penalty function [49] is introduced, as follows:

Penalty

In the formula, the penalty depends on the level of constraint violation over the active constraints, and the two penalty parameters are both set to 2. The Euler buckling coefficient is set to 4.0 and the mass is set to 5.0 kg.
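A penalty of this kind is often applied as a multiplicative factor on the structural mass. The sketch below assumes that form; the function name, the argument names, and the convention that a positive entry means a violated constraint are illustrative assumptions, with `eps1` and `eps2` standing in for the two parameters set to 2.

```python
def penalized_mass(mass, violations, eps1=2.0, eps2=2.0):
    """Scale the mass by (1 + eps1 * total violation) ** eps2 (assumed penalty form).

    `violations` holds one value per constraint; only positive values
    (violated constraints) contribute to the total.
    """
    total = sum(v for v in violations if v > 0)
    return mass * (1.0 + eps1 * total) ** eps2


# A feasible design is left unchanged; a violated one becomes heavier.
feasible = penalized_mass(100.0, [0.0, -0.5])
infeasible = penalized_mass(100.0, [0.1, 0.0])
```

With this form a feasible design keeps its true mass, so the ranking among feasible designs is unaffected by the penalty.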

3.1.1. Optimization of 24 Bar 2D Truss

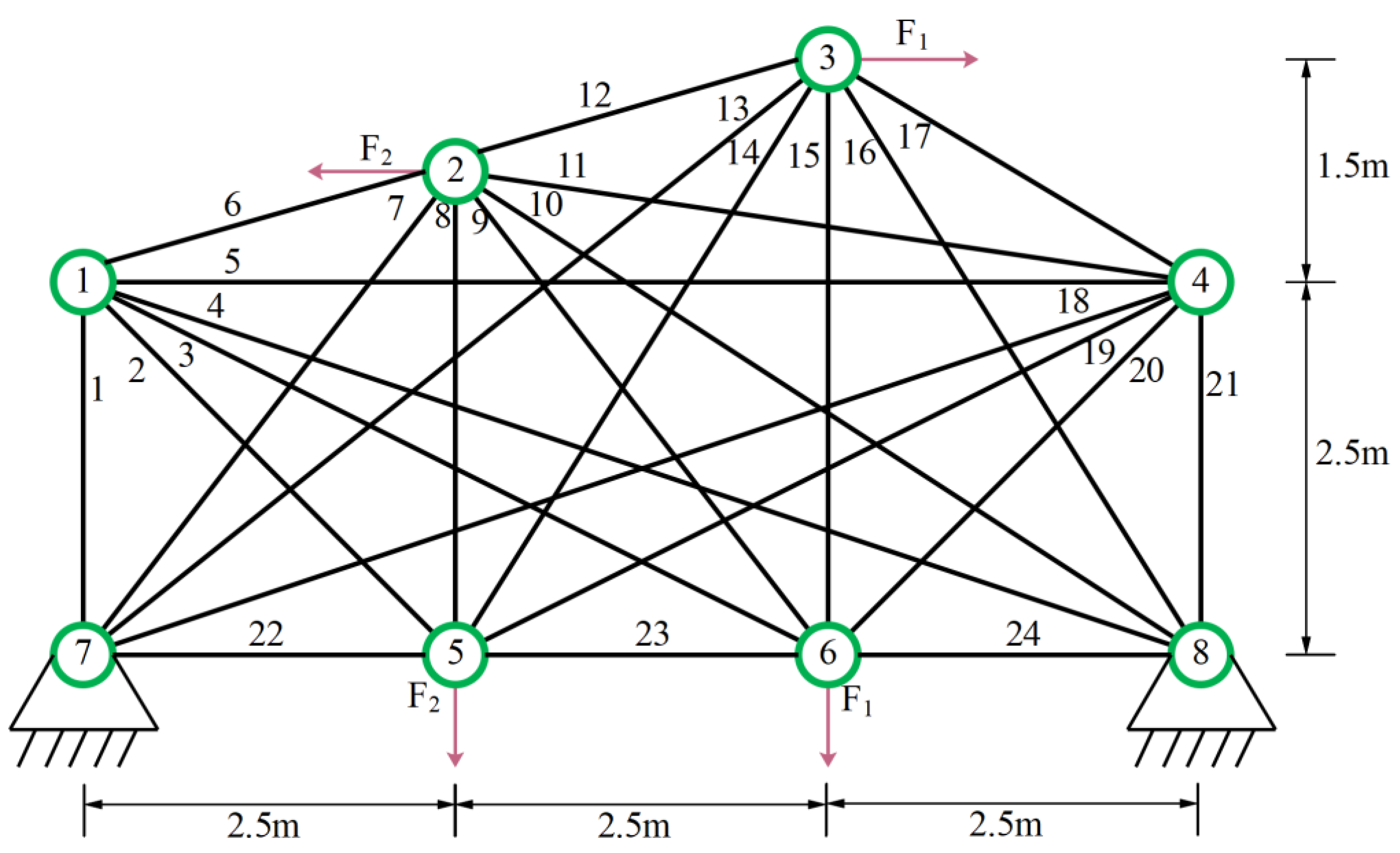

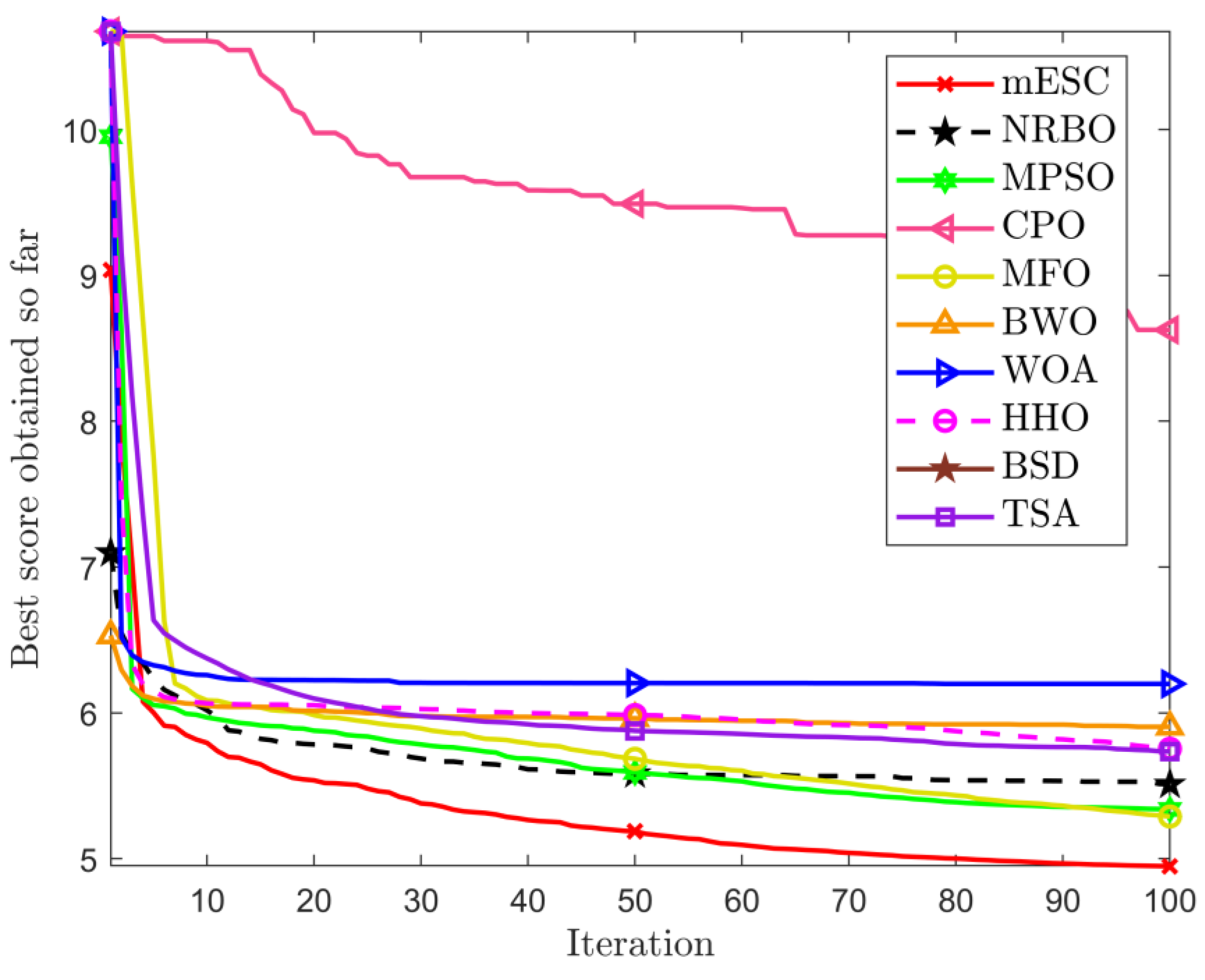

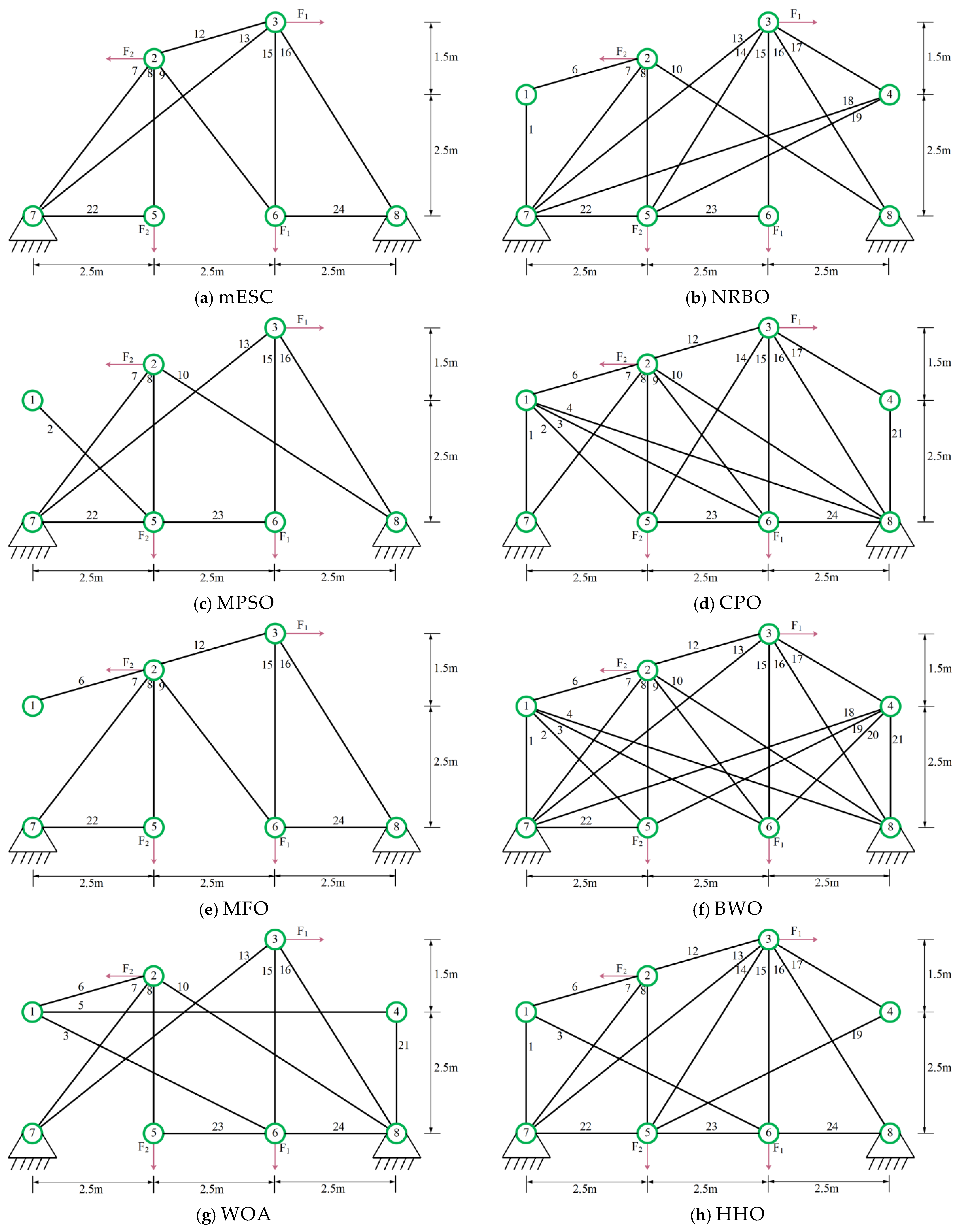

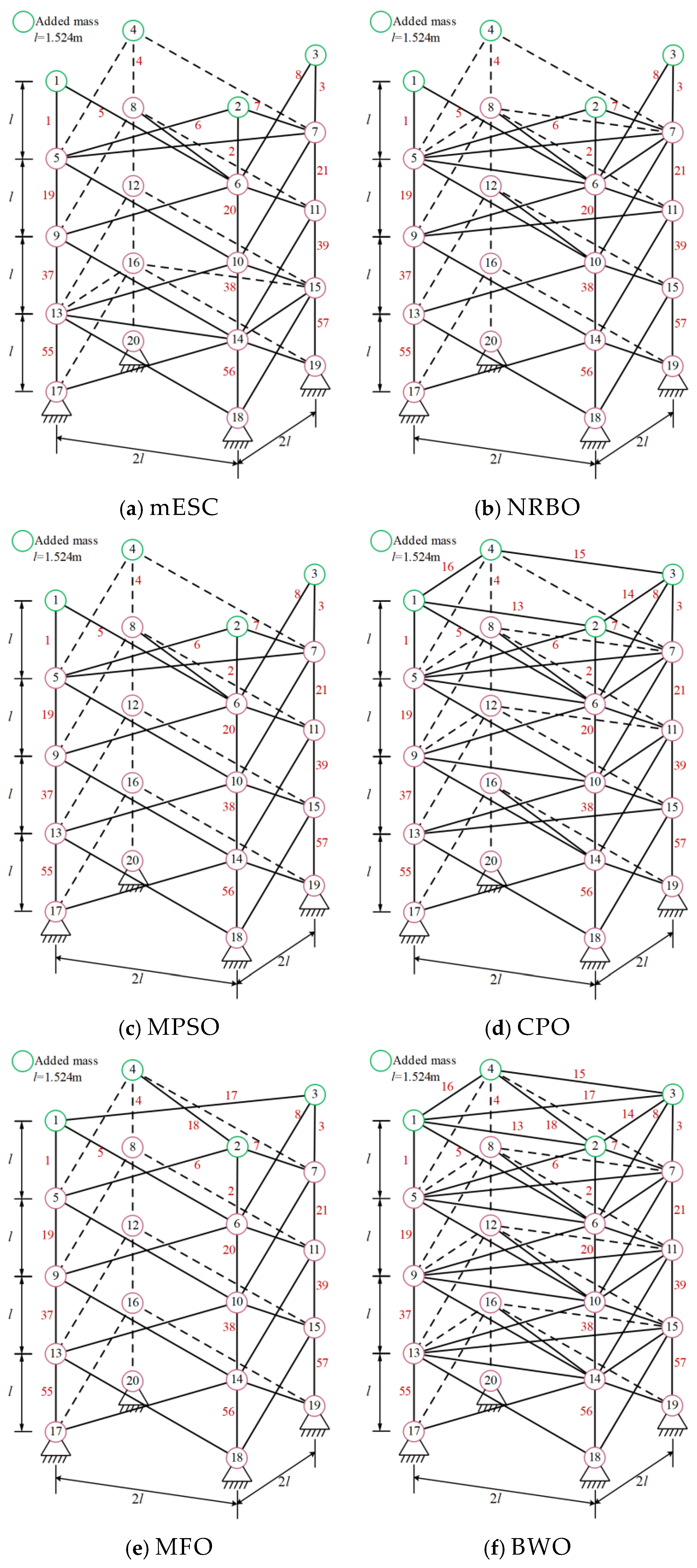

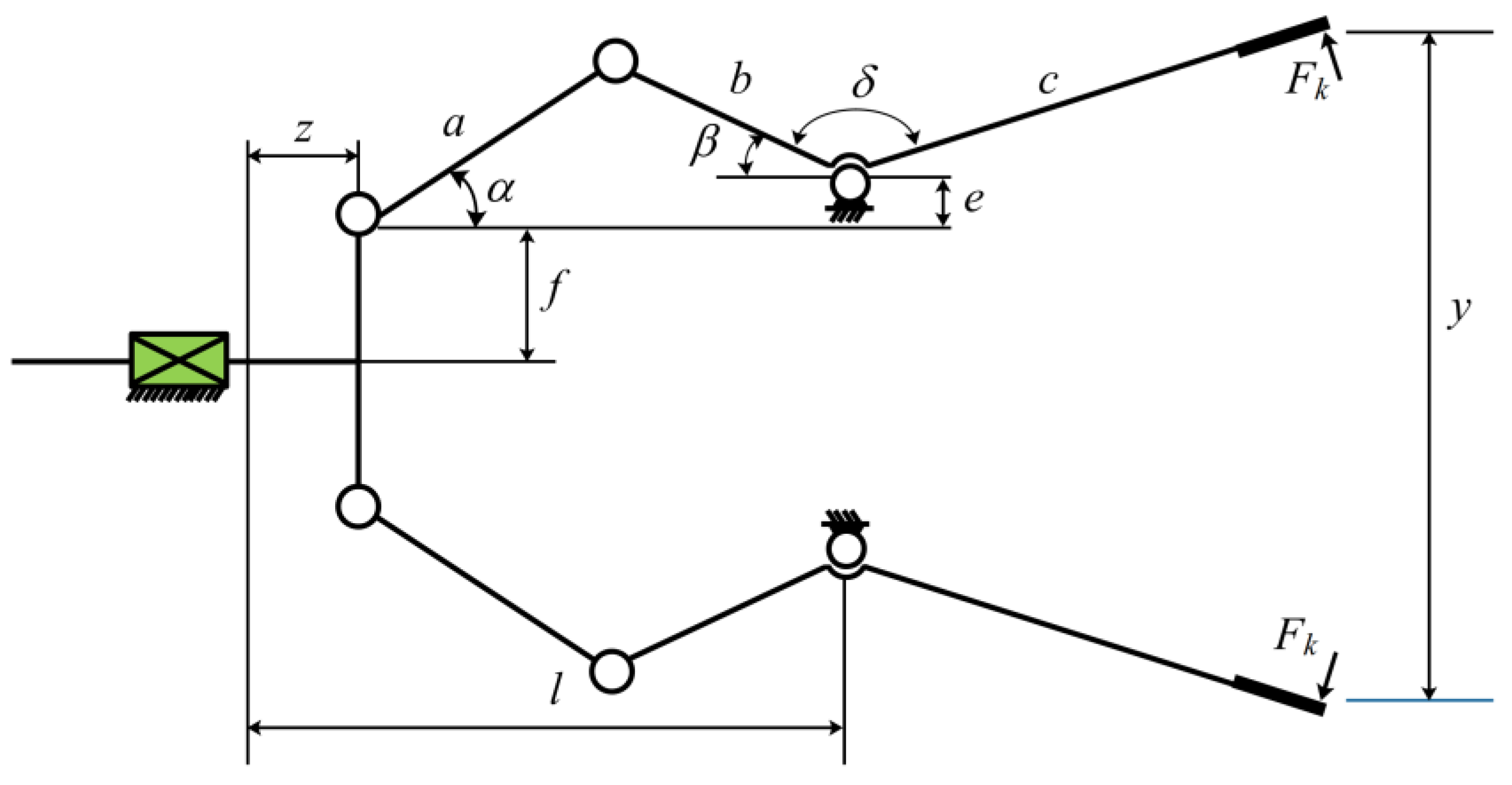

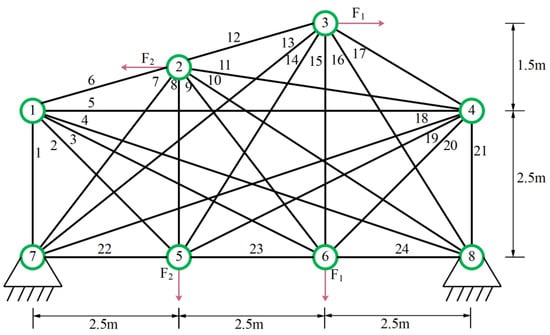

In the experiment, we selected nine algorithms as competitors: NRBO, MPSO [50], CPO, MFO, BWO, WOA, HHO [51], BSD [52], and TSA [53]. The structure is shown in Figure 3, and the design variables are the segmental members of the truss. The parameters required for the experiment are described in Table 3. The experimental results of all algorithms after 20 runs are shown in Table 4, where the best optimal weight and the best average value are marked in bold to compare the algorithms.

Figure 3.

24 bar truss structure.

Table 3.

Conditions for 24 bar truss structure.

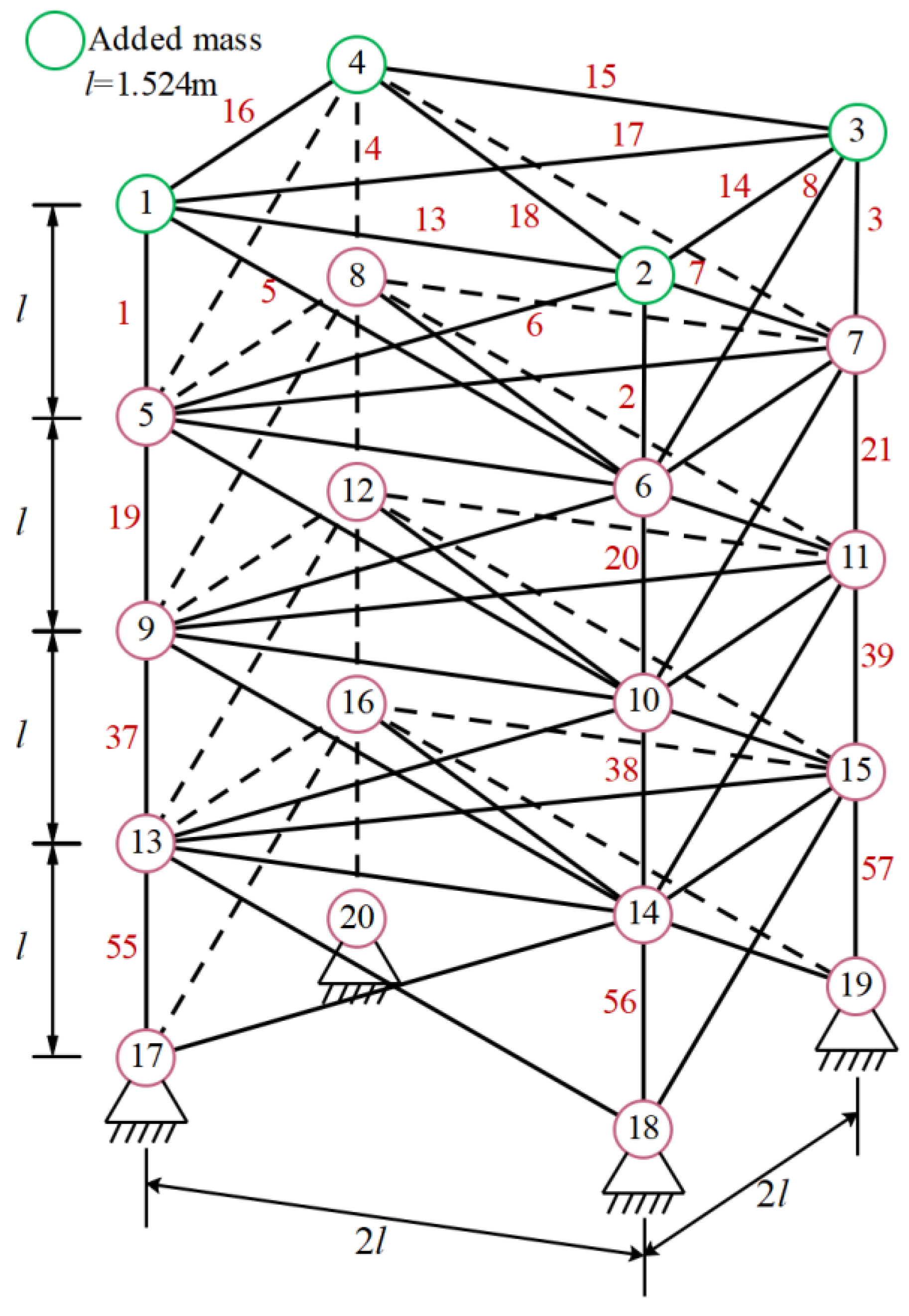

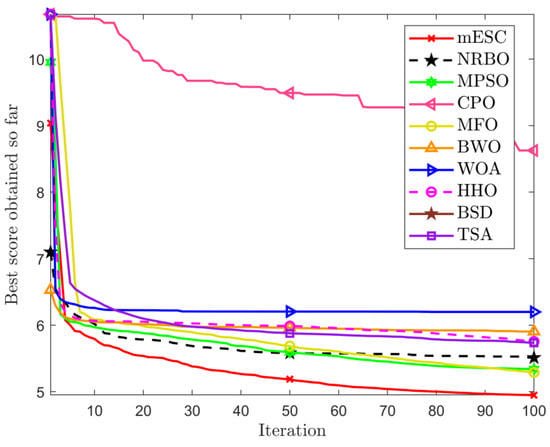

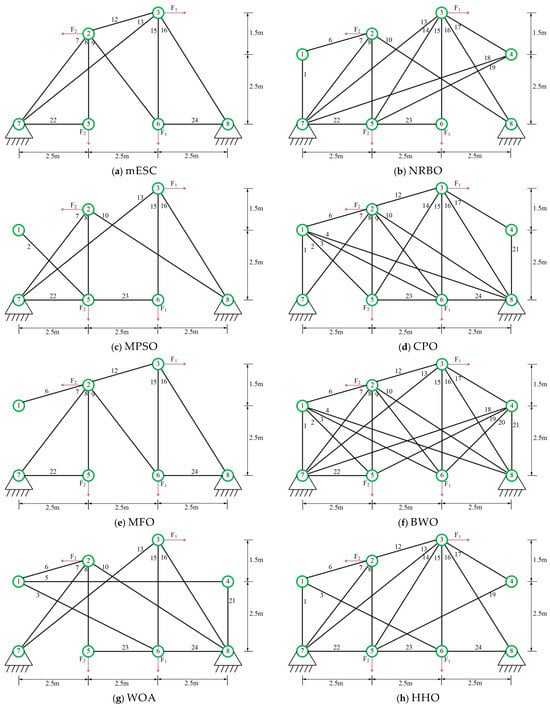

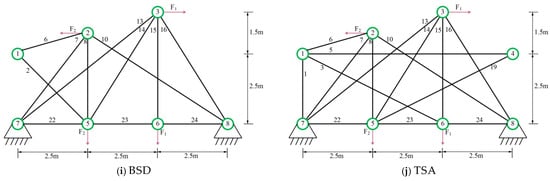

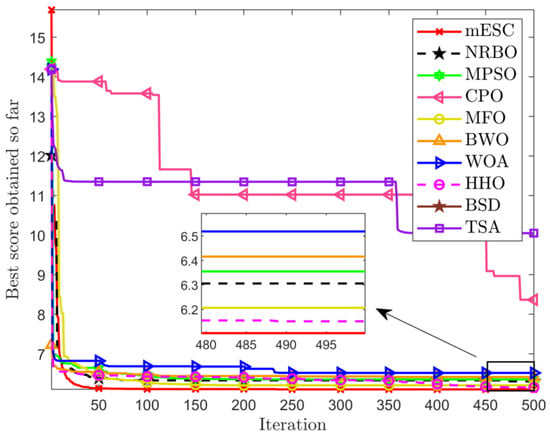

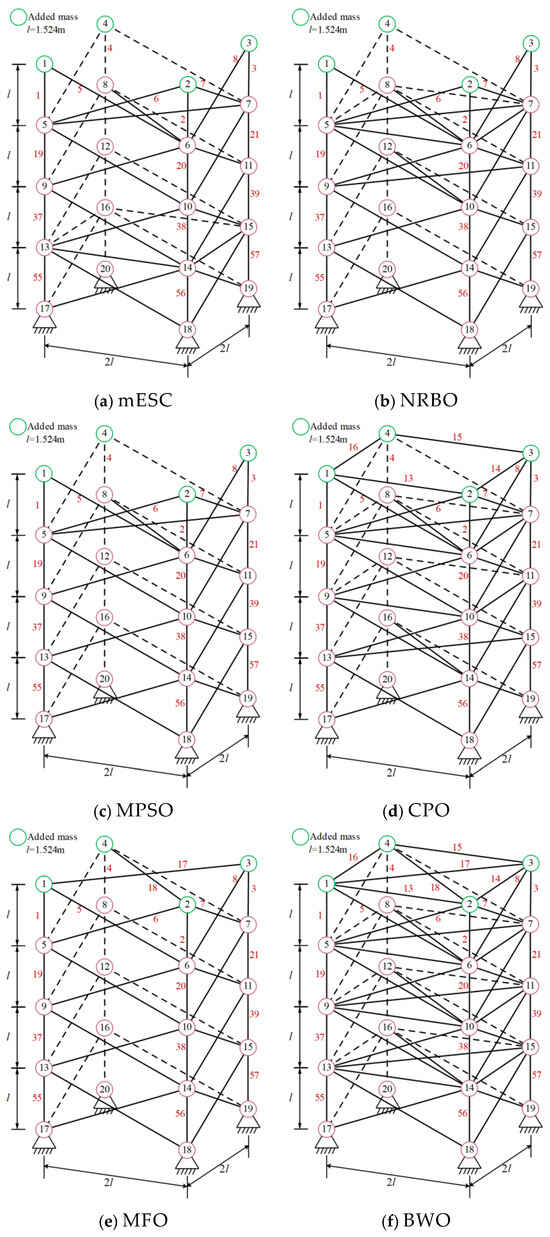

The data in Table 4 show that the optimal weight and overall average value found by mESC are generally the best, with a minimum weight of 121.5840 and an overall average value of 140.5701. This indicates that mESC solves this problem more stably than the other competitors. Figure 4 shows the convergence curves of the competitors on this problem. CPO begins to fall into local optima in the early and middle stages of iteration, whereas mESC performs better. Overall, the comprehensive performance of mESC has been validated on this optimization problem. Figure 5 shows the truss diagrams obtained by each algorithm after removing rods according to the experimental results; mESC removes the largest number of rods.

Table 4.

Optimization results of 24 bars.

Figure 4.

Comparison of convergence of various algorithms on 24 bars.

Figure 5.

Topology optimization of 24 bar truss using various algorithms.

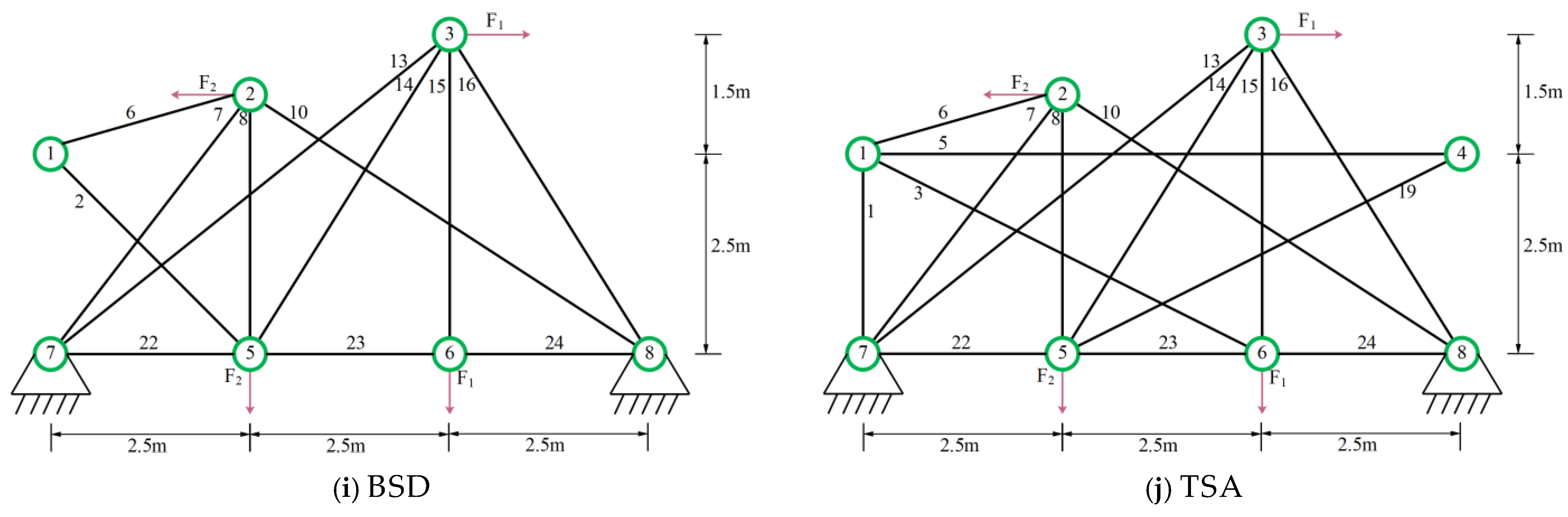

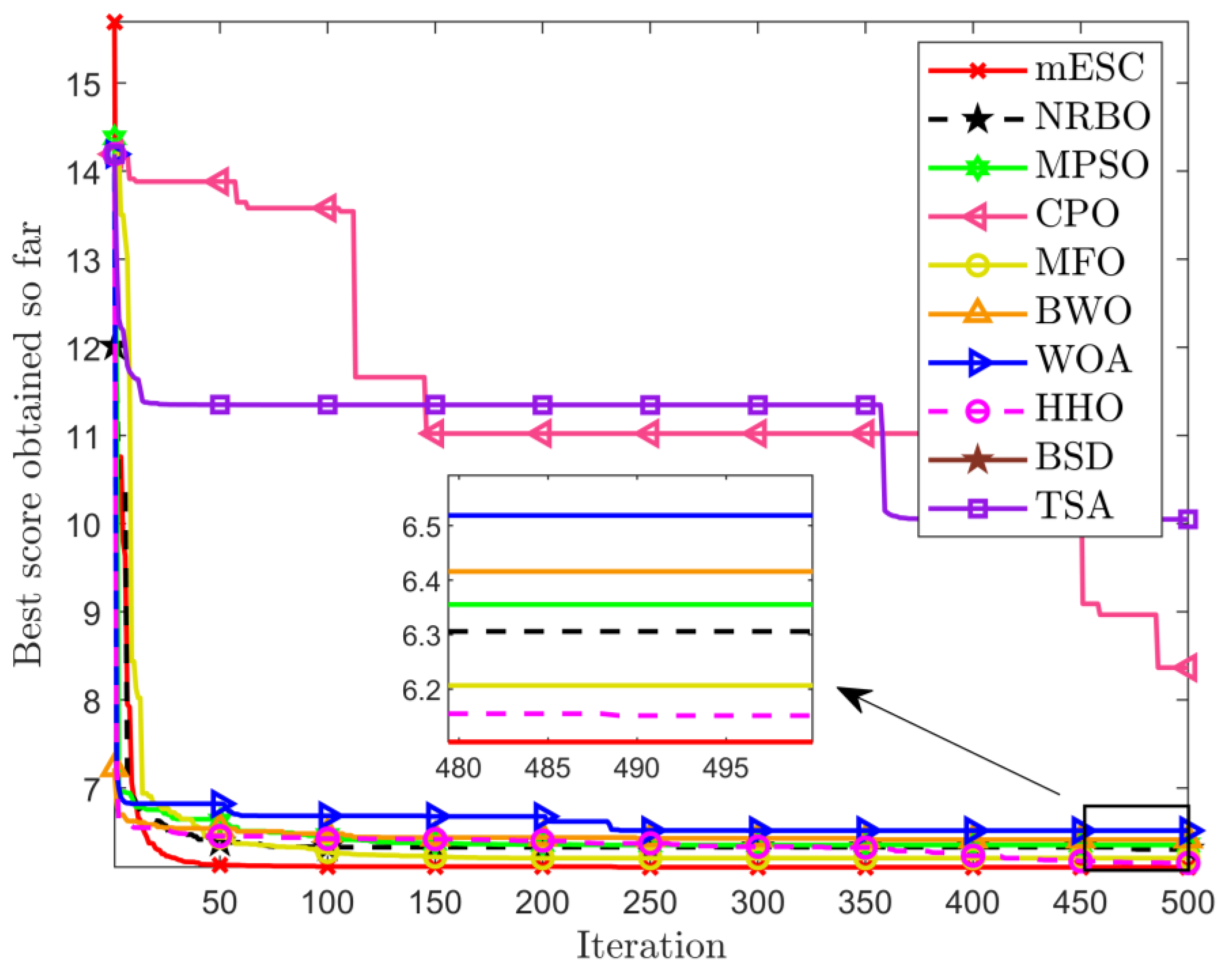

3.1.2. Optimization of 72 Bar 3D Truss

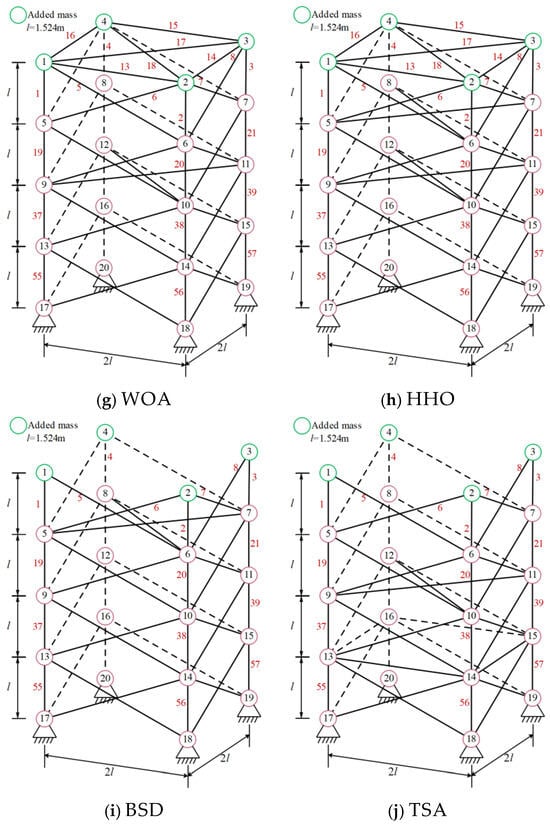

Figure 6 shows the 72 bar structure. The truss members are divided into 16 groups, with the top four nodes representing mass concentration points. The data and parameters for minimum weight optimization are given in Table 5. The minimum weights and average values obtained by each algorithm are shown in Table 6. The optimal weight and overall average value of mESC are generally the best, and its optimal weight of 443.7325 is the smallest among all compared algorithms, which confirms the superior performance of the proposed algorithm. Figure 7 shows the convergence curves of all algorithms, with mESC having the highest convergence accuracy. Figure 8 shows the truss structures optimized by the various algorithms.

Figure 6.

72 bar truss.

Table 5.

Setting of 72 bar truss structure.

Table 6.

Optimization of 72 bar truss structure.

Figure 7.

Convergence diagram of 72 bar truss.

Figure 8.

Topology optimization of 72 bar truss using various algorithms.

mESC achieves the optimal structure by removing six rods, which shows that it excels at solving such problems.

3.2. Engineering Problem

We use mESC to solve five engineering optimization problems: minimizing the weight of the reducer, welded beam design, the step cone pulley problem, the robot clamping problem, and the rolling element bearing problem. This article uses the static penalty method to handle the constraints in these problems, with the specific formula being:

Formula (22) combines the objective function with the constraints, each weighted by a positive penalty constant, and the two remaining parameters take the value 1 or 2.
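A static penalty of this type can be sketched as follows. The sketch assumes the common form in which each violated inequality constraint and each equality constraint adds a weighted power of its violation to the objective; the function name, argument names, default weights, and the convention g(x) ≤ 0 for feasible inequalities are illustrative assumptions and are not taken from Formula (22).

```python
def static_penalty(objective, inequalities, equalities=(),
                   ineq_weight=1e6, eq_weight=1e6, alpha=2, beta=2):
    """Penalized objective for minimization (assumed static penalty form).

    objective    : raw objective value f(x)
    inequalities : values g_i(x), feasible when g_i(x) <= 0
    equalities   : values h_j(x), feasible when h_j(x) == 0
    alpha, beta  : exponents, typically 1 or 2
    """
    ineq_term = sum(ineq_weight * max(0.0, g) ** alpha for g in inequalities)
    eq_term = sum(eq_weight * abs(h) ** beta for h in equalities)
    return objective + ineq_term + eq_term


# One satisfied and one violated inequality, plus one violated equality.
value = static_penalty(5.0, [-1.0, 0.5], [0.1],
                       ineq_weight=10.0, eq_weight=100.0, alpha=2, beta=1)
```

Because the weights stay fixed during the run, the penalized value equals the raw objective for any feasible design, and infeasible designs are pushed away in proportion to how strongly they violate the constraints.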

3.2.1. Minimize the Weight of the Reducer

This problem concerns the design of a reducer for a small aircraft engine and involves seven variables: the face width, the tooth module, the number of teeth on the small gear, the length of the first shaft, the length of the second shaft, the diameter of the first shaft, and the diameter of the second shaft. Its characteristics are as follows:

Minimize

Constraints:

Range:

To solve this problem, mESC was compared with NRBO, MPSO, CPO, BWO, WOA, HHO, TSA, AO [54], and GWO. According to Table 7, mESC provides the best design, with an optimal value of 2994.506339787.
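For orientation, the weight objective can be evaluated directly. The sketch assumes that the problem matches the seven-variable speed reducer benchmark widely used in the engineering optimization literature; the coefficients, the variable order, and the sample design are taken from that benchmark as an assumption and are not read from Table 7. Constraints are omitted.

```python
def reducer_weight(x):
    """Weight objective of the classic speed reducer benchmark (assumed formulation).

    x = (face width, module, pinion teeth, first shaft length,
         second shaft length, first shaft diameter, second shaft diameter)
    """
    b, m, z, l1, l2, d1, d2 = x
    return (
        0.7854 * b * m**2 * (3.3333 * z**2 + 14.9334 * z - 43.0934)
        - 1.508 * b * (d1**2 + d2**2)
        + 7.4777 * (d1**3 + d2**3)
        + 0.7854 * (l1 * d1**2 + l2 * d2**2)
    )


# Illustrative near-optimal design often quoted for this benchmark.
design = (3.5, 0.7, 17, 7.3, 7.71532, 3.350215, 5.286654)
weight = reducer_weight(design)
```

Under these assumptions the sample design evaluates to a weight of roughly 2994.5, the same order as the values reported for this problem.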

Table 7.

Optimization results of reducer design.

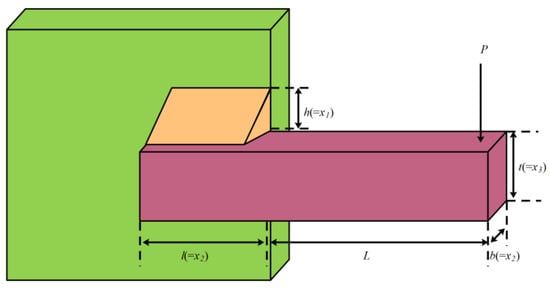

3.2.2. Welding Beam Design

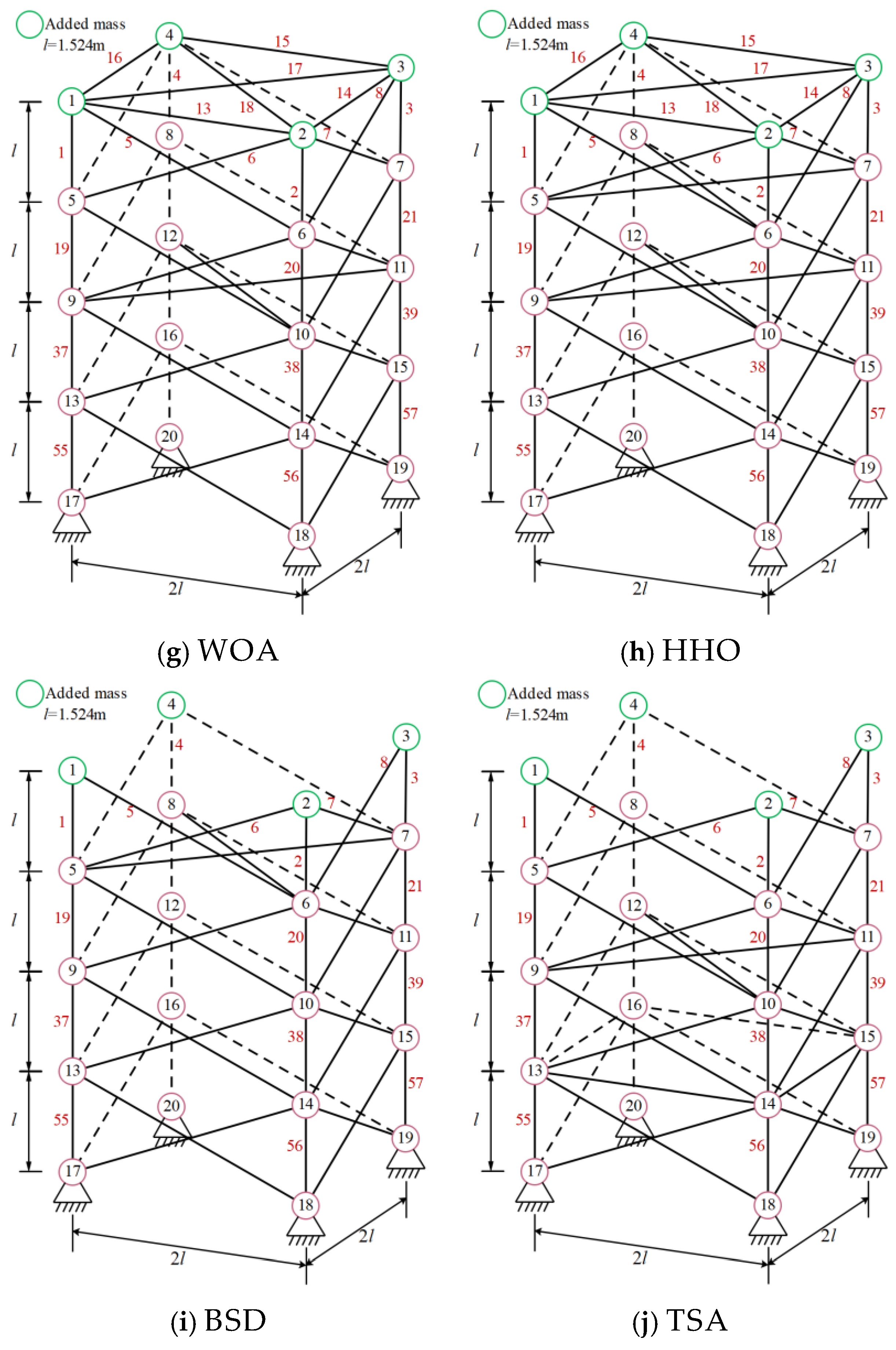

This design aims to minimize the cost of a welded beam [55] and involves four variables: the weld seam width, the clamped bar length, the bar height, and the bar thickness. The schematic diagram is shown in Figure 9, and the features are as follows:

Figure 9.

Welded beam structure.

Minimize

Constraints:

Range:

To solve this problem, mESC conducted comparative experiments with NRBO, MPSO, CPO, BWO, WOA, HHO, TSA, AO, and GWO. The optimal value obtained by mESC on this problem in Table 8 is 1.670306973.

Table 8.

Optimization results of welded beam design.

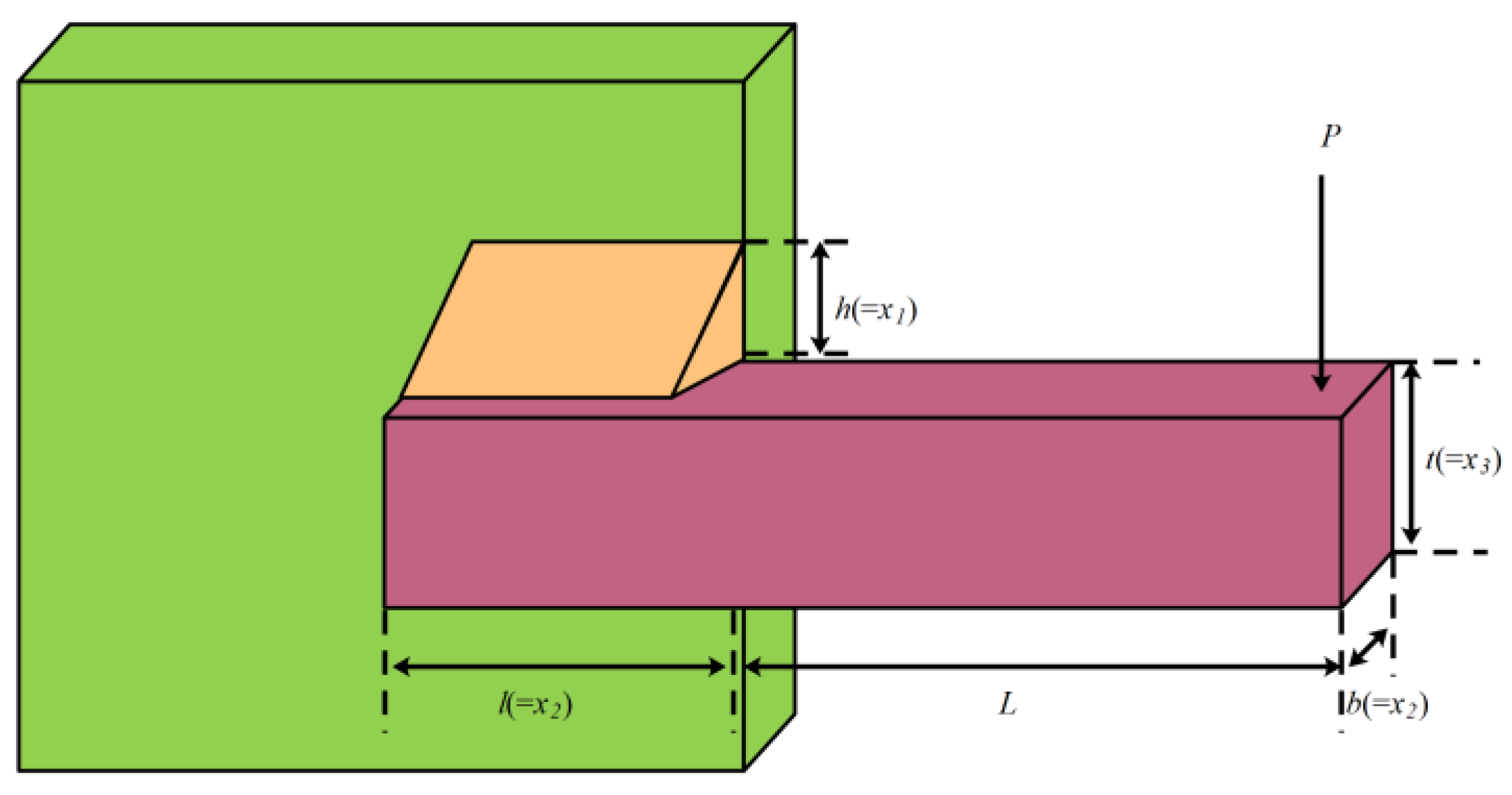

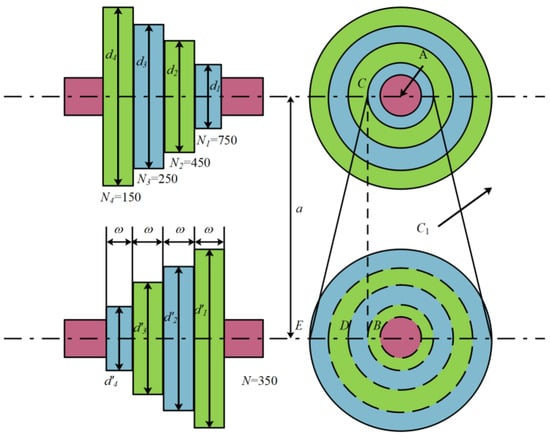

3.2.3. Step Cone Pulley Problem

The problem is to minimize the weight of the stepping cone pulley [56] and involves five variables: the diameters of the pulley steps and the pulley width. Figure 10 shows its design diagram:

Figure 10.

Stepping cone pulley.

Minimize

Constraints:

Among them,

To address this issue, comparative experiments were conducted in Table 9 between mESC and competitors such as MPSO, CPO, AVOA [57], BWO, WOA, HHO, TSA, AO, and GWO. The results showed that mESC achieved the best performance with an optimal value of 16.983218316.

Table 9.

Optimization results of stepping cone pulley.

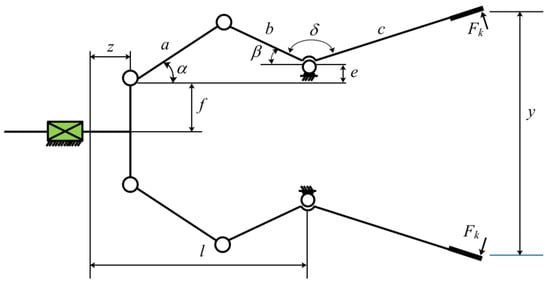

3.2.4. Robot Clamping Problem

This task studies the force that robot grippers can generate when grasping objects, while ensuring that the objects are not damaged and the grasping is stable [58]. Figure 11 is a structural diagram, characterized by the following:

Figure 11.

Robot clamping structure.

Minimize

Constraints:

Among them,

Range:

To address this issue, mESC conducted comparative experiments with MPSO, CPO, AVOA, BWO, WOA, HHO, TSA, AO, and GWO. Table 10 presents the design results, and mESC achieved the best performance with an optimal value of 16.983218316.

Table 10.

Optimization of robot gripping.

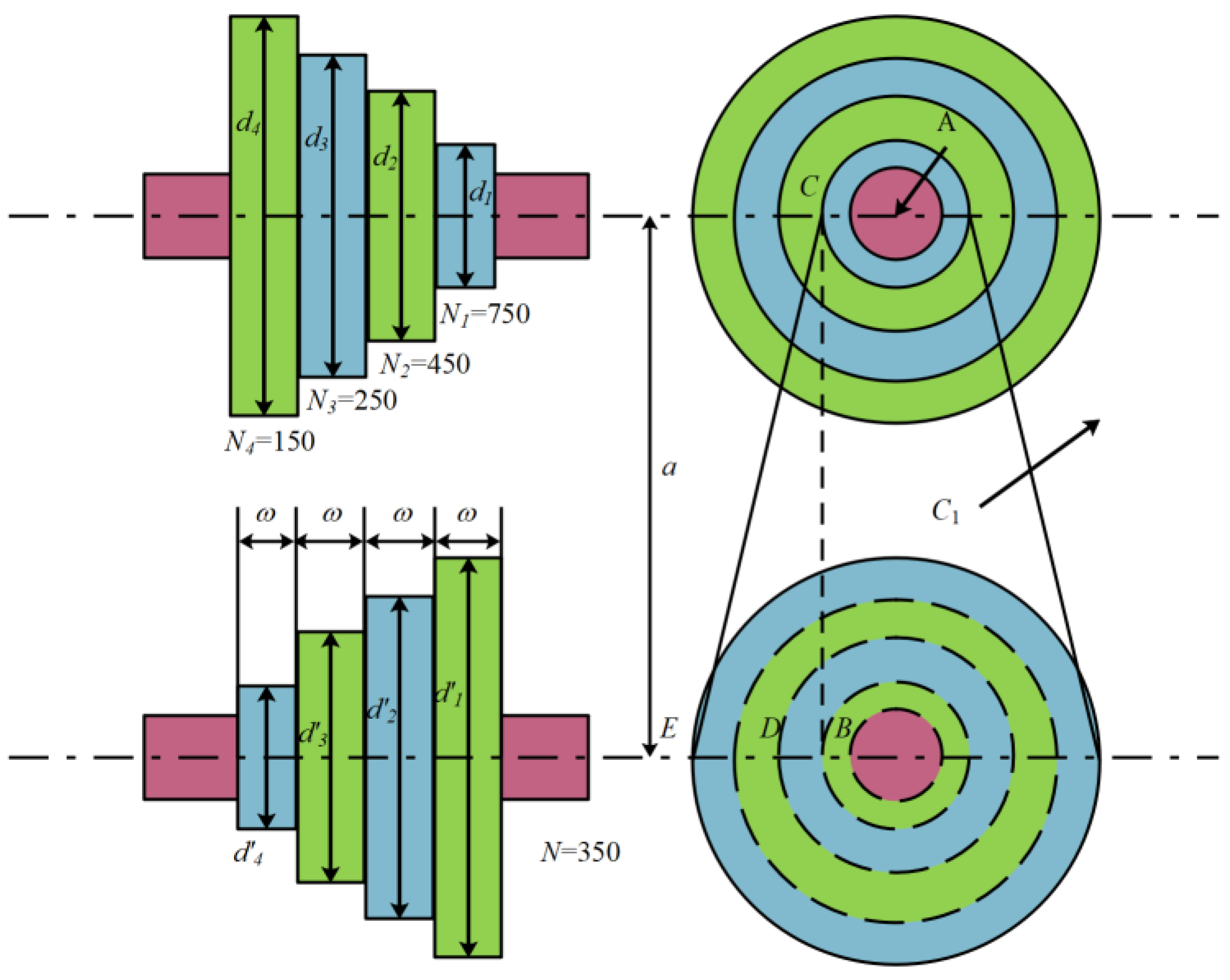

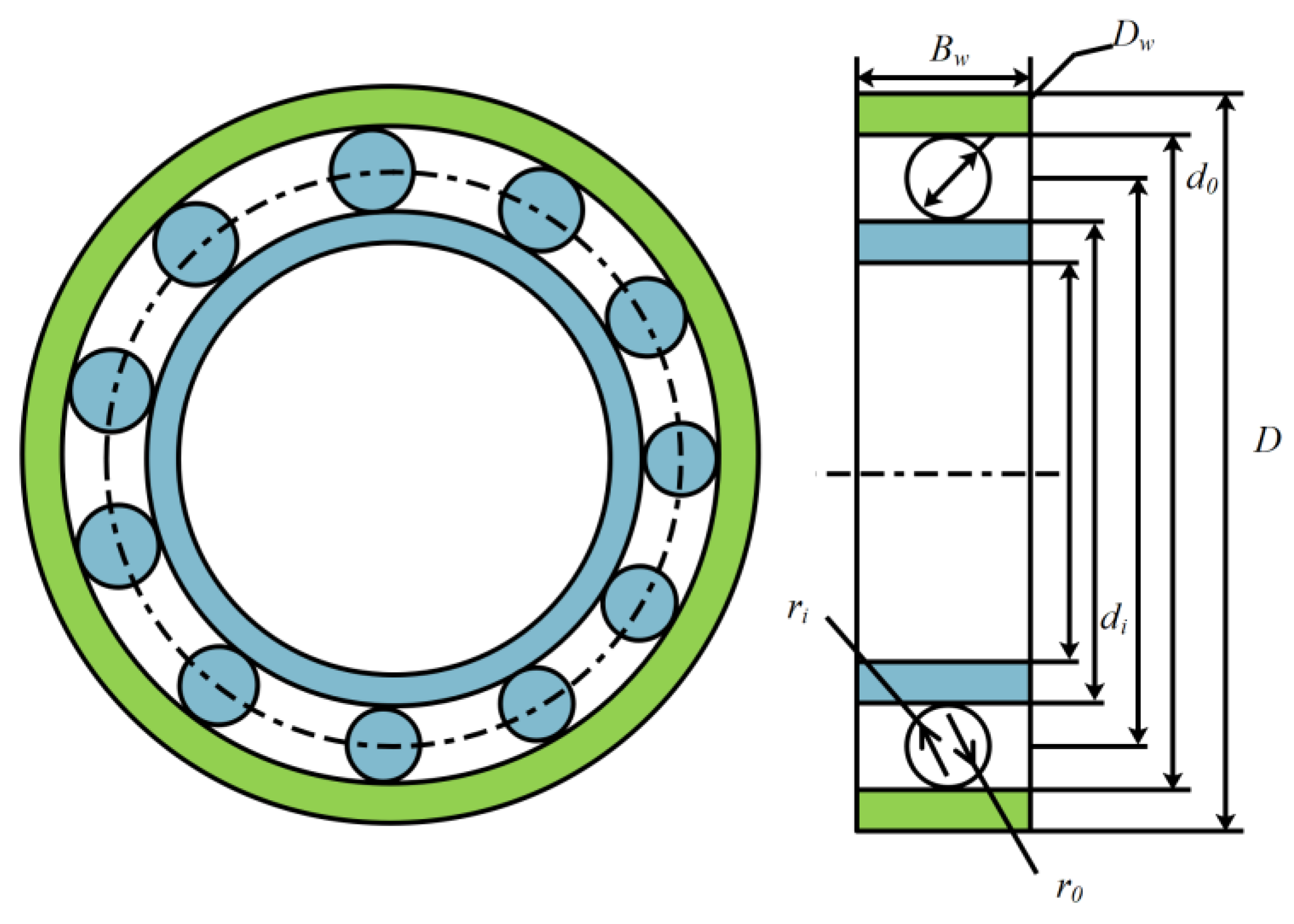

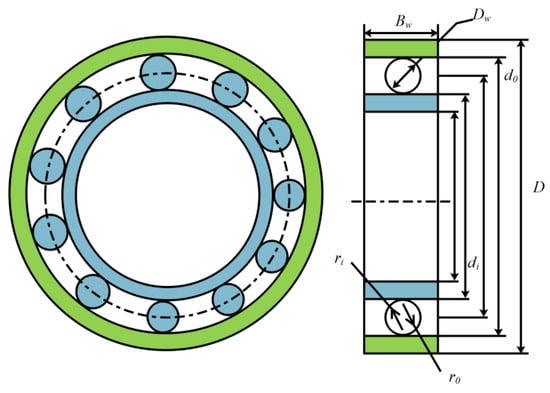

3.2.5. Rolling Element Bearings

This article applies mESC to optimize the design of rolling bearings. The schematic diagram is shown in Figure 12, with a total of 10 optimization parameters and the following characteristics:

Figure 12.

Rolling element bearing structure.

Minimize

Constraints:

where

Range:

To solve this problem, mESC was compared experimentally with MPSO, CPO, AVOA, BWO, WOA, HHO, TSA, AO, and GWO. mESC achieves the best performance, and its optimal value of 16,958.202286941 is given in Table 11.

Table 11.

Optimization of rolling element bearings.

4. Conclusions

This study proposes mESC, an improved version of ESC based on multi-strategy enhancement. It maintains population diversity through an adaptive perturbation factor strategy, improves global exploration through a restart mechanism, and balances local development through a dynamic centroid reverse learning strategy. Finally, an elite pool boundary adjustment strategy is used to accelerate population convergence. mESC was tested on the CEC2022 test suite and on the truss topology and engineering design problems, where it outperformed its competitors. In the future, we will further extend mESC, study new population update mechanisms, and apply it in areas such as feature selection, image segmentation, and information processing.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/biomimetics10040232/s1.

Author Contributions

Conceptualization, J.L. and L.C.; Methodology, J.L. and J.Y.; Software, J.L., J.Y. and L.C.; Validation, J.Y. and L.C.; Formal analysis, J.L. and L.C.; Investigation, J.L. and J.Y.; Resources, J.L., J.Y. and L.C.; Data curation, J.L. and L.C.; Writing—original draft, J.L., J.Y. and L.C.; Writing—review & editing, J.L., J.Y. and L.C.; Visualization, J.Y. and L.C.; Supervision, J.L. and L.C.; Project administration, J.L.; Funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Natural Science Research Program of the Shaanxi Education Department in China (grant No. 24JC021). This work is also supported by the 2024 Shaanxi Provincial Key R&D Program project in China (grant No. 2024GX-YBXM-529).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data generated or analyzed during the study are included in this published article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Krentel, M.W. The complexity of optimization problems. In Proceedings of the Eighteenth Annual ACM Symposium on Theory of Computing, Berkeley, CA, USA, 28–30 May 1986; pp. 69–76.

- Zhao, Y.; vom Lehn, F.; Pitsch, H.; Pelucchi, M.; Cai, L. Mechanism optimization with a novel objective function: Surface matching with joint dependence on physical condition parameters. Proc. Combust. Inst. 2024, 40, 105240.

- Alireza, M.N.; Mohamad, S.A.; Rana, I. Optimizing a self-healing gelatin/aldehyde-modified xanthan gum hydrogel for extrusion-based 3D printing in biomedical applications. Mater. Today Chem. 2024, 40, 102208.

- Ehsan, B.; Peter, B. A simulation-optimization approach for integrating physical and financial flows in a supply chain under economic uncertainty. Oper. Res. Perspect. 2023, 10, 100270.

- Xie, D.W.; Qiu, Y.Z.; Huang, J.S. Multi-objective optimization for green logistics planning and operations management: From economic to environmental perspective. Comput. Ind. Eng. 2024, 189, 109988.

- Ibham, V.; Aslan, D.K.; Sener, A.; Martin, S.; Muhammad, I. Machine learning of weighted superposition attraction algorithm for optimization diesel engine performance and emission fueled with butanol-diesel biofuel. Ain Shams Eng. J. 2024, 12, 103126.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95−International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Trojovská, E.; Dehghani, M.; Trojovský, P. Zebra Optimization Algorithm: A New Bio-Inspired Optimization Algorithm for Solving Optimization Algorithm. IEEE Access 2022, 10, 49445–49473.

- Seyedali, M. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Abdel-Basset, M.; Mohamed, R.; Jameel, M. Spider wasp optimizer: A novel meta-heuristic optimization algorithm. Artif. Intell. Rev. 2023, 56, 11675–11738.

- Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Sea-horse optimizer: A novel nature-inspired meta-heuristic for global optimization problems. Appl. Intell. 2023, 53, 11833–11860.

- Hao, W.G.; Wang, L.Y.; Mirjalili, S. Artificial hummingbird algorithm: A new bio-inspired optimizer with its engineering applications. Comput. Methods Appl. Mech. Eng. 2022, 388, 114194.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Dwarf Mongoose Optimization Algorithm: A New Bio-Inspired Metaheuristic Method for Engineering Optimization. Comput. Methods Appl. Mech. Eng. 2022, 391, 114570.

- Sang-To, T.; Hoang-Le, M.; Wahab, M.A. An efficient Planet Optimization Algorithm for solving engineering problems. Sci. Rep. 2022, 12, 8362.

- Atashpaz-Gargari, E.; Lucas, C. Imperialist competitive algorithm: An algorithm for optimization inspired by imperialistic competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007; pp. 4661–4667.

- Rao, R.V.; Savsani, V.J.; Vakharia, D.P. Teaching–learning-based optimization: An optimization method for continuous non-linear large scale problems. Inf. Sci. 2012, 183, 1–15.

- Hu, G.; Cheng, M.; Houssein, E.H.; Hussien, A.G.; Abualigah, L. SDO: A novel sled dog-inspired optimizer for solving engineering problems. Adv. Eng. Inform. 2024, 62, 102783.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Dehghani, M.; Trojovský, P. Osprey optimization algorithm: A new bio-inspired metaheuristic algorithm for solving engineering optimization problems. Front. Mech. Eng. 2023, 8, 1126450.

- Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2023, 79, 7305–7336.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Hakki, M.G.; Eksin, I.; Erol, O.K. A new optimization method: Big Bang-Big Crunch. Adv. Eng. Softw. 2006, 37, 106–111.

- Hu, G.; Huang, F.Y.; Seyyedabbasi, A.; Wei, G. Enhanced multi-strategy bottlenose dolphin optimizer for UAVs path planning. Appl. Math. Model. 2024, 130, 243–271.

- Hu, G.; Gong, C.S.; Li, X.X.; Xu, Z.Q. CGKOA: An enhanced Kepler optimization algorithm for multi-domain optimization problems. Comput. Methods Appl. Mech. Eng. 2024, 425, 116964.

- Hu, G.; Du, B.; Chen, K.; Wei, G. Super eagle optimization algorithm based three-dimensional ball security corridor planning method for fixed-wing UAVs. Adv. Eng. Inform. 2024, 59, 102354.

- Hu, G.; Huang, F.Y.; Chen, K.; Wei, G. MNEARO: A meta swarm intelligence optimization algorithm for engineering applications. Comput. Methods Appl. Mech. Eng. 2024, 419, 116664.

- Ouyang, K.; Fu, S.; Chen, Y.; Cai, Q.; Heidari, A.A.; Chen, H. Escape: An Optimizer based on crowd evacuation behaviors. Artif. Intell. Rev. 2024, 58, 19.

- Zhang, G.Z.; Fu, S.W.; Li, K.; Huang, H.S. Differential evolution with multi-strategies for UAV trajectory planning and point cloud registration. Appl. Soft Comput. 2024, 167 Pt C, 112466.

- Lian, J.B.; Hui, G.H.; Ma, L.; Zhu, T.; Wu, X.C.; Heidari, A.A.; Chen, Y.; Chen, H.L. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 2024, 172, 108064.

- Rezaei, F.; Safavi, H.R.; Abd Elaziz, M. GMO: Geometric mean optimizer for solving engineering problems. Soft Comput. 2023, 27, 10571–10606.

- Qi, A.; Zhao, D.; Heidari, A.A. FATA: An Efficient Optimization Method Based on Geophysics. Neurocomputing 2024, 607, 128289.

- Zheng, B.; Chen, Y.; Wang, C. The moss growth optimization (MGO): Concepts and performance. J. Comput. Des. Eng. 2024, 11, 184–221.

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Crested Porcupine Optimizer: A new nature-inspired metaheuristic. Knowl.-Based Syst. 2024, 284, 111257.

- Yuan, C.; Dong, Z.; Heidari, A.A.; Liu, L.; Chen, Y.; Chen, H.L. Polar lights optimizer: Algorithm and applications in image segmentation and feature selection. Neurocomputing 2024, 607, 128427.

- Sowmya, R.; Premkumar, M.; Jangir, P. Newton-Raphson-based optimizer: A new population-based metaheuristic algorithm for continuous optimization problems. Eng. Appl. Artif. Intell. 2024, 128, 107532.

- Wu, X.; Li, S.; Jiang, X. Information acquisition optimizer: A new efficient algorithm for solving numerical and constrained engineering optimization problems. J. Supercomput. 2024, 80, 25736–25791.

- Gao, Y.; Zhang, J.; Wang, Y.; Wang, J.; Qin, L. Correction to: Love evolution algorithm: A stimulus–value–role theory-inspired evolutionary algorithm for global optimization. J. Supercomput. 2024, 80, 15097–15099.

- Mirjalili, S.; Lewis, A.; Sadiq, A.S. Autonomous Particles Groups for Particle Swarm Optimization. Arab. J. Sci. Eng. 2014, 39, 4683–4697.

- Rather, S.A.; Bala, P.S. Constriction coefficient based particle swarm optimization and gravitational search algorithm for multilevel image thresholding. Expert Syst. 2021, 38, 12717.

- Vinodh, G.; Kathiravan, K.; Mahendran, G. Distributed Network Reconfiguration for Real Power Loss Reduction Using TACPSO. Int. J. Adv. Res. Electr. Electron. Instrum. Eng. 2017, 6, 7517–7525.

- Civicioglu, P.; Besdok, E. Bernstein-Levy differential evolution algorithm for numerical function optimization. Neural Comput. Appl. 2023, 35, 6603–6621.

- Akgungor, A.P.; Korkmaz, E. Bezier Search Differential Evolution algorithm based estimation models of delay parameter k for signalized intersections. Concurr. Comput. Pract. Exp. 2022, 34, e6931.

- Emin, K.A. Detection of object boundary from point cloud by using multi-population based differential evolution algorithm. Neural Comput. Appl. 2023, 35, 5193–5206.

- Biswas, S.; Saha, D.; De, S.; Cobb, A.D.; Jalaian, B.A. Improving Differential Evolution through Bayesian Hyperparameter Optimization. In Proceedings of the 2021 IEEE Congress on Evolutionary Computation (CEC), Krakow, Poland, 28 June–1 July 2021.

- Awad, N.H.; Ali, M.Z.; Suganthan, P.N. Ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood for solving CEC2017 benchmark problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia/San Sebastian, Spain, 5–8 June 2017.

- Mohamed, A.W.; Hadi, A.A.; Fattouh, A.M.; Jambi, K.M. L-SHADE with Semi Parameter Adaptation Approach for Solving CEC 2017 Benchmark Problems. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia/San Sebastian, Spain, 5–8 June 2017; pp. 1456–1463.

- Tanabe, R.; Fukunaga, A. Improving the search performance of SHADE using linear population size reduction. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation, Beijing, China, 6–11 July 2014; pp. 1658–1665.

- Tejani, G.G.; Savsani, V.J.; Bureerat, S.; Patel, V.K. Topology and size optimization of trusses with static and dynamic bounds by modified symbiotic organisms search. J. Comput. Civ. Eng. 2018, 32, 04017085.

- Bansal, A.K.; Gupta, R.A.; Kumar, R. Optimization of hybrid PV/wind energy system using Meta Particle Swarm Optimization (MPSO). In Proceedings of the India International Conference on Power Electronics, New Delhi, India, 28–30 January 2011.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H.L. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Civicioglu, P.; Besdok, E. Bernstain-search differential evolution algorithm for numerical function optimization. Expert Syst. Appl. 2019, 138, 112831.

- Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Hu, G.; Zhu, X.N.; Wei, G.; Chang, C.T. An improved marine predators algorithm for shape optimization of developable Ball surfaces. Eng. Appl. Artif. Intell. 2021, 105, 104417.

- Siddall, J.N. Optimal Engineering Design: Principles and Applications; CRC Press: Boca Raton, FL, USA, 1982.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Ekrem, Ö.; Aksoy, B. Trajectory planning for a 6-axis robotic arm with particle swarm optimization algorithm. Eng. Appl. Artif. Intell. 2023, 122, 106099.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).