Abstract

The hippopotamus optimization algorithm (HO) is a novel metaheuristic that solves optimization problems by simulating the behavior of hippopotamuses. However, the traditional HO algorithm may suffer performance degradation and become trapped in local optima when dealing with complex global optimization and engineering design problems. To address these problems, this paper proposes a modified hippopotamus optimization algorithm (MHO) that improves the convergence speed and solution accuracy of HO in three ways: it initializes the population with a sine chaotic map, changes the convergence factor in the growth mechanism, and incorporates a small-hole imaging reverse learning strategy. MHO is tested on 23 benchmark functions and successfully solves three engineering design problems. The experimental results show that MHO achieves the best performance on 13 of these functions and on all three design problems, escapes local optima faster, and achieves better rankings and stability than nine other metaheuristics. The proposed MHO algorithm offers fresh insights into practical engineering problems and parameter optimization.

1. Introduction

The goal of an optimization problem is to find the optimal (maximum or minimum) value of a given objective function under specified constraints. Such problems arise in a variety of disciplines, including computer science, mathematics, engineering, and economics. Every optimization problem consists of three components: the objective function, the constraints, and the decision variables [1].

Traditional optimization algorithms, such as linear programming, quadratic programming, and dynamic programming, provide a solid mathematical foundation and efficient solutions for deterministic, convex, and well-structured optimization problems. However, they usually require the problem to have a specific mathematical structure and are prone to becoming trapped in locally optimal solutions. For multimodal problems in particular, the global optimum is difficult to find, and the result depends strongly on the initial values [2]. Metaheuristic algorithms compensate for these limitations: they are flexible and adaptable, and they offer new tools and techniques for solving challenging real-world optimization problems. They are independent of the problem's form and do not require derivatives of the objective function [3].

Metaheuristics are high-level algorithms that model social behaviors or natural phenomena to find approximately optimal solutions to complex optimization problems. Based on their inspiration and working principles, the wide variety of metaheuristics can be divided into three groups: evolution-based algorithms, swarm intelligence-based algorithms, and physics-based algorithms [4]. Evolution-based algorithms simulate the natural law of survival of the fittest (Darwin's law) to drive the overall progress of the population toward the optimal solution [5]. The most prominent examples are genetic algorithms (GA) [6] and differential evolution (DE) [7]. GA simulates biological evolution and optimizes solutions through selection, crossover, and mutation; its strong global search ability makes it suitable for discrete optimization problems. DE generates new solutions from difference operations between individuals in the population and performs well on nonlinear and multimodal optimization problems. Swarm intelligence-based algorithms [8,9] aim to find a globally optimal solution by simulating the collective intelligence of a group, where each group is a biological population. The most representative examples are particle swarm optimization (PSO) [10] and ant colony optimization (ACO) [11], which use the cooperative behavior of a population to accomplish tasks that individuals cannot complete alone. PSO simulates the social behavior of bird flocks or fish schools and achieves global optimization through collaboration among individuals; it is simple, efficient, and suitable for continuous optimization problems. ACO simulates the foraging behavior of ants and optimizes paths through a pheromone mechanism, performing well on path optimization problems.
There are also many other popular algorithms, such as the artificial bee colony algorithm [12], which simulates the foraging behavior of bees and optimizes solutions through information sharing and collaboration; the bat algorithm [13], which simulates the echolocation behavior of bats and optimizes solutions by adjusting frequency and amplitude; and the gray wolf optimizer [14], which simulates the collaboration and competition between leaders and followers in gray wolf packs. All of these algorithms have strong global search capabilities. The firefly algorithm (FA), which simulates fireflies glowing to attract mates, optimizes solutions through light intensity and movement rules and is suited to multimodal optimization problems. The core idea of physics-based algorithms, of which simulated annealing (SA) [15] is the best-known example, is to base the search technique on natural processes or physical principles. SA mimics the annealing of solids and performs well on combinatorial optimization problems by controlling a "temperature" parameter that balances global exploration and local exploitation during the search. Other physics-based algorithms include the gravitational search algorithm (GSA) [16] and the water cycle algorithm (WCA) [17]. GSA simulates gravitational interactions between masses and uses their mutual attraction to optimize the solution, demonstrating strong global search capability. WCA, on the other hand, simulates the water cycle in nature and uses the convergence and dispersion of water flows to optimize the solution, which also gives it excellent global search performance.
In addition, hybrid optimization algorithms combine the features of two or more metaheuristics, incorporating different search mechanisms to improve performance. For example, the hybrid of particle swarm optimization and differential evolution (DEPSO) [18] combines the swarm intelligence of PSO with the mutation capability of DE. This allows DEPSO to balance global and local search efficiently and improves the efficiency and effectiveness of the optimization process, especially for global optimization problems in continuous spaces. The hippopotamus optimization (HO) algorithm, proposed by Amiri et al. [19] in 2024, is a new algorithm inspired by the behavior of hippopotamus populations. It is based on a three-phase model: hippopotamus positioning in rivers and ponds, defense strategies against predators, and escape strategies. HO stands out for its performance: it can quickly identify and converge to the optimal solution while effectively avoiding local minima. Its efficient local search strategy and fast convergence allow it to excel on complex problems, and it effectively balances global exploration and local exploitation, quickly finding high-quality solutions. This makes it an effective tool for solving complex optimization problems.

Currently, metaheuristic algorithms have broad application prospects in engineering optimization. Hu et al. [20] used four metaheuristic algorithms, namely the African vulture optimization algorithm (AVOA), teaching–learning-based optimization (TLBO), the sparrow search algorithm (SSA), and the gray wolf optimizer (GWO), to optimize a hybrid model, and proposed an integrated model for predicting the steady-state thermal performance of energy piles. Sun et al. [21] addressed industrial design problems by proposing a fuzzy logic particle swarm optimization algorithm with an associated constraint-handling method, in which PSO serves as the searcher and a set of fuzzy logic rules incorporating individual feasibility is designed to enhance its search ability. Wu et al. [22] addressed the limitations of ant colony optimization, such as blind early search, slow convergence, and low path smoothness, and proposed an ant colony optimization algorithm based on farthest point optimization and a multi-objective strategy. Palanisamy and Krishnaswamy [23] used a hybrid Harris hawks optimization and particle swarm optimization algorithm (HHO-PSO) for failure testing of wire ropes (hardness, wear analysis, tensile strength, and fatigue life) and adopted a hybrid artificial neural network and HHO model (ANN-HHO) to predict the performance of the tested wire ropes. Liu et al. [24] proposed an improved adaptive hierarchical optimization algorithm (HSMAOA) to overcome the premature convergence and local-optimum entrapment of the arithmetic optimization algorithm on complex optimization problems. Cui et al. [25] combined the whale optimization algorithm (WOA) with an attention mechanism (ATT) and convolutional neural networks (CNNs) to optimize the hyperparameters of an LSTM model, proposing a new load prediction model that addresses the over-reliance of most methods on default hyperparameter settings. Che et al. [26] used a circle chaotic map and a nonlinear function to improve WOA with multiple strategies and then used the improved WOA to optimize the key parameters of an LSTM, improving its performance and reducing modeling time. Elsisi [27] used a different learning process based on an improved gray wolf optimizer (IGWO) and fitness–distance balance (FDB) to balance the exploration and exploitation of the original gray wolf optimizer, and designed a new automated adaptive model predictive control (AMPC) scheme for self-driving cars that addresses parameter tuning and the uncertainty of the vision system. Karaman et al. [28] used the artificial bee colony (ABC) algorithm to search for the optimal hyperparameters and activation function of YOLOv5, improving its accuracy in colonoscopy. To minimize the wake effect, Yu and Zhang [29] proposed an adaptive moth flame optimization algorithm with enhanced exploration and exploitation capability (MFOEE) to optimize the turbine layout of wind farms. Dong et al. [30] improved the genetic algorithm (GA) according to the characteristics of flood avoidance path planning and proposed a hybrid ant colony and genetic optimization algorithm (ACO-GA) for the dynamic planning of evacuation paths during dam-break floods. Shanmugapriya et al. [31] proposed an IoT-based HESS energy management strategy for electric vehicles that uses the COA technique to optimize the weight parameters of a neural network and improve the SAGAN algorithm, with the aim of extending battery life.
Beşkirli and Dağ [32] proposed an improved CPA algorithm (I-CPA) based on an instructional factor strategy and applied it to solar photovoltaic (PV) module parameter identification to improve the accuracy and efficiency of PV model parameter estimation. Beşkirli and Dağ [33] also proposed a multi-strategy tree seed algorithm (MS-TSA) that improves global search capability and convergence performance by introducing an adaptive weighting mechanism, a chaotic elite learning method, and an experience-based learning strategy. It performs well on both the CEC2017 and CEC2020 benchmarks and achieves significant results in solar PV model parameter estimation. Liu et al. [34] proposed an improved DBO algorithm and applied it to the optimal design of off-grid hybrid renewable energy systems, evaluating energy cost with life cycle cost as the objective function. However, these algorithms face challenges of data size and complexity in practical applications and still tend to fall into local optima, with low efficiency and insufficient robustness, which limits their performance and applicability.

When solving real-world problems, the HO algorithm excels owing to its adaptability and robustness; it maintains stable performance across a wide range of optimization problems, making it a strong choice for fast and efficient optimization. Maurya et al. [35] used HO to optimize distributed generation planning and network reconfiguration under different load models in order to improve power grid performance. Chen et al. [36] addressed the limitations of the VMD algorithm by using the strong optimization capability of HO to achieve preliminary denoising, and proposed a single-sign-on modal identification method based on hippopotamus optimization and variational modal decomposition (HO-VMD) combined with singular value decomposition, regularized total least squares, and Prony (SVD-RTLS-Prony) algorithms. Ribeiro and Muñoz [37] used particle swarm optimization, hippopotamus optimization, and differential evolution to tune a controller that minimizes the root mean square (RMS) current of the batteries in an integrated vehicle simulation, thus mitigating battery stress events and prolonging battery life. Wang et al. [38] used an improved hippopotamus optimization algorithm (IHO) to improve the accuracy of solar photovoltaic (PV) output prediction; IHO addresses the limitations of traditional algorithms in search efficiency, convergence speed, and global search. Mashru et al. [39] proposed the multi-objective hippopotamus optimizer (MOHO), which excels at solving complex structural optimization problems. Abdelaziz et al. [40] used HO to optimize two key metrics and proposed a new optimization framework that copes with the volatility of renewable energy generation and unpredictable electric vehicle charging demand to enhance grid performance. Baihan et al. [41] proposed an optimizer-tuned CNN-LSTM approach that hybridizes the hippopotamus optimization algorithm (HOA) and the pathfinder algorithm (PFA) to improve the accuracy of sign language recognition. Amiri et al. [42] designed two new neuro-fuzzy networks trained with HO to create a noise-robust network with high accuracy and few parameters for detecting and isolating faults in power plant gas turbines. In addition to these applications, there are many other global optimization and engineering design problems. However, the no-free-lunch (NFL) theorem states that no optimization algorithm can solve all problems best [43]; each existing algorithm achieves the expected results only on certain types of problems, so improving HO is still necessary. Although HO has many advantages, its performance degrades on complex global optimization and engineering design problems, and it may still fall into local optima. In practical applications, its parameters and strategies must still be adjusted to the specific problem to realize its full potential. We therefore propose the MHO algorithm to enhance the ability of HO to solve these problems. The main contributions of this paper are as follows:

- Replace the original population initialization with a sine chaotic map to generate high-quality initial solutions and prevent HO from settling into local optima.

- Introduce a new convergence factor that alters the growth mechanism of the hippopotamus population during the exploration phase, improving the global search capability of HO.

- Incorporate a small-hole imaging reverse learning strategy into the phase in which hippopotamuses escape from predators, reducing interference between dimensions and expanding the search range to avoid local optima, thereby improving the performance of the algorithm.

- Test MHO on 23 benchmark functions, compare its optimization ability with that of other algorithms, and successfully solve three engineering design problems.

The structure of this paper is as follows: Section 2 presents the hippopotamus algorithm and three methods for enhancing the hippopotamus optimization algorithm; Section 3 presents experiments and analysis, including evaluating the experimental results and comparing the MHO algorithm with other algorithms; Section 4 applies MHO to three engineering design problems; and Section 5 provides a summary of the entire work.

2. Improved Algorithm

2.1. Sine Chaotic Map

A sine chaotic map [44] is a chaotic system that generates chaotic sequences through a nonlinear transformation of the sine function. Owing to its simple structure and high efficiency, it has become a typical representative of chaotic maps. Its mathematical expression is

$$x_{k+1} = \alpha \sin(\pi x_k), \quad x_k \in [0, 1] \tag{1}$$

where $k$ is a non-negative integer, $x_k$ denotes the value at the current iteration step, and $\alpha \in (0, 1]$ is the chaos control parameter.
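Equation (1) is cheap to iterate. The plain-Python sketch below is only an illustration; the function name `sine_map_sequence` and the parameter ranges $\alpha \in (0, 1]$, $x_0 \in [0, 1]$ are assumptions of this example. It shows that the orbit stays inside $[0, \alpha]$, and that a small $\alpha$ (with $\alpha\pi < 1$) makes the orbit decay to the fixed point 0 instead of behaving chaotically.

```python
import math


def sine_map_sequence(x0: float, alpha: float, length: int) -> list:
    """Iterate the sine map x_{k+1} = alpha * sin(pi * x_k) and return `length` values."""
    # Assumed parameter ranges for this sketch: alpha in (0, 1], x0 in [0, 1].
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    if not 0.0 <= x0 <= 1.0:
        raise ValueError("x0 must lie in [0, 1]")
    values = []
    x = x0
    for _ in range(length):
        x = alpha * math.sin(math.pi * x)  # one step of Equation (1)
        values.append(x)
    return values


if __name__ == "__main__":
    # alpha near 1: an irregular orbit that stays inside [0, alpha]
    print(sine_map_sequence(0.7, 0.99, 10))
    # small alpha (alpha * pi < 1): the orbit decays toward the fixed point 0
    print(sine_map_sequence(0.7, 0.2, 50)[-1])
```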

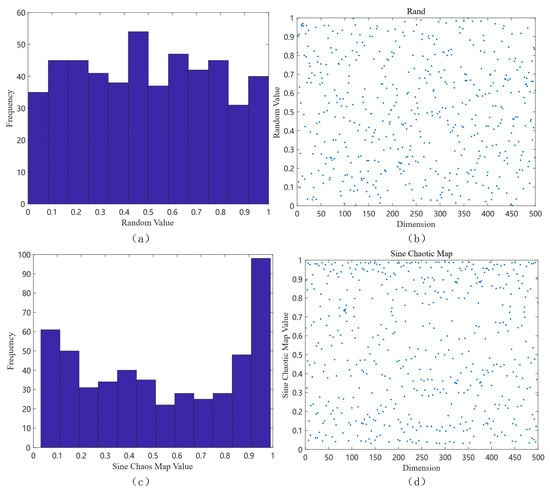

The sine map begins to exhibit chaotic behavior when the parameter α approaches 0.87, and stronger chaotic properties are observed as α approaches 1. Therefore, introducing the sine chaotic map into the random initialization of the hippopotamus optimization (HO) algorithm spreads the hippopotamus population evenly throughout the search space. This improves the diversity of the initial population, enhances the global search capability of HO, and helps it avoid local optima. Figure 1 compares the population distributions produced by the two initialization methods:

Figure 1.

Comparison of the distribution of algorithmic initialization: (a) histogram of frequency distribution of conventional random initialization; (b) scatter plot of the distribution of conventional random initialization in two-dimensional space; (c) histogram of frequency distribution of sinusoidal chaotic map initialization; and (d) scatter plot of the distribution of sinusoidal chaotic map initialization in two-dimensional space.

In the HO algorithm, each hippopotamus is a candidate solution to the optimization problem: its position in the search space represents the values of the decision variables. Each hippopotamus is therefore represented as a vector $X_i$, and the population of hippopotamuses is described by a matrix. As in other optimization algorithms, the initialization phase of HO generates random initial solutions, with the decision variables generated as follows:

$$x_{i,j} = lb_j + r \cdot (ub_j - lb_j), \quad i = 1, 2, \dots, N, \quad j = 1, 2, \dots, m \tag{2}$$

where $x_{i,j}$ denotes the position of the $i$-th candidate solution in the $j$-th dimension, $r$ is a random number in the range 0 to 1, and $lb_j$ and $ub_j$ represent the lower and upper limits of the $j$-th decision variable, respectively. Let $N$ denote the population size of the herd and $m$ the number of decision variables in the problem; the population matrix is then given by Equation (3).

$$\mathcal{X} = \begin{bmatrix} X_1 \\ \vdots \\ X_i \\ \vdots \\ X_N \end{bmatrix}_{N \times m} = \begin{bmatrix} x_{1,1} & \cdots & x_{1,j} & \cdots & x_{1,m} \\ \vdots & \ddots & \vdots & & \vdots \\ x_{i,1} & \cdots & x_{i,j} & \cdots & x_{i,m} \\ \vdots & & \vdots & \ddots & \vdots \\ x_{N,1} & \cdots & x_{N,j} & \cdots & x_{N,m} \end{bmatrix}_{N \times m} \tag{3}$$

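Consequently, the improved expression for the initialization phase is

$$x_{i,j} = lb_j + z_{i,j} \cdot (ub_j - lb_j) \tag{4}$$

where the uniform random number of Equation (2) is replaced by a value $z_{i,j}$ taken from a sine chaotic sequence, and furthermore,

$$z_{k+1} = \alpha \sin(\pi z_k) \tag{5}$$

where $\alpha$ is a parameter that controls the chaotic behavior and $z_0$ is an initial value.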
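A minimal NumPy sketch of Equations (4) and (5), placed next to the uniform initialization of Equation (2) for comparison. The text does not say how the chaotic sequence is assigned to individuals and dimensions, so this example assumes a single orbit seeded by $z_0$ and consumed row by row; the function names and the default α = 0.99 are illustrative choices, not the authors' settings.

```python
import numpy as np


def sine_chaotic_population(n_pop, lb, ub, alpha=0.99, z0=None, rng=None):
    """Equations (4)-(5): x_ij = lb_j + z_ij * (ub_j - lb_j), z taken from the sine map."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    m = lb.size
    rng = np.random.default_rng() if rng is None else rng
    # Assumption: one chaotic orbit, seeded by z0, fills the N x m matrix row by row.
    z = rng.uniform(0.01, 0.99) if z0 is None else float(z0)
    chaos = np.empty(n_pop * m)
    for k in range(chaos.size):
        z = alpha * np.sin(np.pi * z)  # Equation (5)
        chaos[k] = z
    return lb + chaos.reshape(n_pop, m) * (ub - lb)  # Equation (4)


def random_population(n_pop, lb, ub, rng=None):
    """Original HO initialization, Equation (2): x_ij = lb_j + r * (ub_j - lb_j)."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    rng = np.random.default_rng() if rng is None else rng
    return lb + rng.random((n_pop, lb.size)) * (ub - lb)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    chaotic = sine_chaotic_population(30, [-5.0, 0.0], [5.0, 10.0], rng=rng)
    uniform = random_population(30, [-5.0, 0.0], [5.0, 10.0], rng=rng)
    print(chaotic.shape, uniform.shape)
```

Because α sin(πz) never exceeds α, positions generated with α < 1 stay below $lb_j + \alpha(ub_j - lb_j)$; with α = 0.99 the top 1% of each range is simply never sampled.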

2.2. Change Growth Mechanism

The growth mechanism is a key component of the hippopotamus optimization algorithm that determines how the search strategy is updated to find better solutions based on current information.

In the original growth mechanism, the exploration phase of HO models the activity of each hippopotamus within the herd. The original authors divided the population into four groups: adult female hippopotamuses, young hippopotamuses, adult male hippopotamuses, and the dominant male hippopotamus (the leader of the herd). The dominant hippopotamus is determined at each iteration from the objective function value (the lowest value for minimization problems and the highest for maximization problems).

In a typical hippopotamus herd, several females are positioned around the males, and the herd leader defends the herd and its territory from possible attacks. When hippopotamus calves reach adulthood, the dominant male expels them from the herd. These expelled males must then attract females or compete for dominance with other established males. The position of a male hippopotamus of the herd in the lake or pond is represented mathematically by Equation (6).

$$X_i^{\text{Mhippo}}: \ x_{i,j}^{\text{Mhippo}} = x_{i,j} + y_1 \cdot \left(D_{\text{hippo}} - I_1 x_{i,j}\right), \quad i = 1, \dots, \left\lfloor \tfrac{N}{2} \right\rfloor, \quad j = 1, \dots, m \tag{6}$$

$$h = \begin{cases} I_2 \times \vec{r}_1 + (\sim Q_1) \\ 2 \times \vec{r}_2 - 1 \\ \vec{r}_3 \\ I_1 \times \vec{r}_4 + (\sim Q_2) \\ r_5 \end{cases} \tag{7}$$

In Equation (6), $X_i^{\text{Mhippo}}$ denotes the position of the male hippopotamus and $D_{\text{hippo}}$ the position of the dominant hippopotamus. In Equation (7), $\vec{r}_1, \dots, \vec{r}_4$ are random vectors between 0 and 1, $r_5$ is a random number between 0 and 1, and $I_1$ and $I_2$ are integers between 1 and 2. $MG_i$ is the mean of several randomly selected hippopotamuses, a selection that includes the currently considered hippopotamus with equal probability; $y_1$ is a random number between 0 and 1; and $Q_1$ and $Q_2$ are random integers that can be either 1 or 0.

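Equations (9) and (10) describe the position of the female or immature hippopotamus in the herd ($X_i^{\text{FBhippo}}$). Most immature hippos stay with their mothers, but out of curiosity they are sometimes separated from the herd or stray from their mothers. This behavior is governed by the convergence factor $T$ of Equation (8), where $t$ is the current iteration number and $T_{\max}$ is the maximum number of iterations.

$$T = \exp\left(-\frac{t}{T_{\max}}\right) \tag{8}$$

$$X_i^{\text{FBhippo}}: \ x_{i,j}^{\text{FBhippo}} = \begin{cases} x_{i,j} + h_1 \cdot \left(D_{\text{hippo}} - I_2\, MG_i\right), & T > 0.6 \\ \Xi, & \text{otherwise} \end{cases} \tag{9}$$

$$\Xi = \begin{cases} x_{i,j} + h_2 \cdot \left(MG_i - D_{\text{hippo}}\right), & r_6 > 0.5 \\ lb_j + r_7 \cdot \left(ub_j - lb_j\right), & \text{otherwise} \end{cases} \tag{10}$$

If the convergence factor $T$ is greater than 0.6, the immature hippo has distanced itself from its mother (Equation (9)). Otherwise, if $r_6$, a random number between 0 and 1, is greater than 0.5, the immature hippo has distanced itself from its mother but is still in or near the herd; if not, it has left the herd (Equation (10)). Equations (9) and (10) model this behavior of immature and female hippos. The coefficients $h_1$ and $h_2$ are numbers or vectors randomly chosen from the five scenarios of Equation (7), and $r_7$ in Equation (10) is a random number between 0 and 1. Equations (11) and (12) describe the position updates of the male and of the female or immature hippos, where $F_i$ denotes the objective function value:

$$X_i = \begin{cases} X_i^{\text{Mhippo}}, & F_i^{\text{Mhippo}} < F_i \\ X_i, & \text{otherwise} \end{cases} \tag{11}$$

$$X_i = \begin{cases} X_i^{\text{FBhippo}}, & F_i^{\text{FBhippo}} < F_i \\ X_i, & \text{otherwise} \end{cases} \tag{12}$$

Using the $h$ vectors and the $I_1$ and $I_2$ scenarios enhances the algorithm's global search and improves its exploration capability.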
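For concreteness, here is a NumPy sketch of one exploration step (Equations (6)–(12)) for a single hippo, following the original HO formulation of Amiri et al. [19] for a minimization problem. It uses the original factor of Equation (8), not the improved factor of Equation (13). Several details are assumptions of this example rather than statements of the paper: the random group size for $MG_i$ is drawn uniformly from 1 to N, $I_1, I_2, Q_1, Q_2$ are drawn once per call, and candidates are clipped to the bounds. The function name `phase1_update` is hypothetical.

```python
import numpy as np


def phase1_update(pop, fit, i, t, t_max, lb, ub, objective, rng):
    """One exploration step for hippo i (minimisation); pop and fit are updated in place."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    n, m = pop.shape
    x = pop[i].copy()
    dominant = pop[np.argmin(fit)].copy()              # D_hippo: best hippo so far
    i1, i2 = rng.integers(1, 3, size=2)                # I1, I2 in {1, 2}
    q1, q2 = rng.integers(0, 2, size=2)                # Q1, Q2 in {0, 1}
    group = rng.choice(n, size=rng.integers(1, n + 1), replace=False)
    mg = pop[group].mean(axis=0)                       # MG_i: mean of a random group
    # Equation (7): five scenarios for h; h1 and h2 are drawn independently
    h = [i2 * rng.random(m) + (1 - q1), 2 * rng.random(m) - 1, rng.random(m),
         i1 * rng.random(m) + (1 - q2), np.full(m, rng.random())]
    h1, h2 = h[rng.integers(5)], h[rng.integers(5)]
    # Equation (6): male hippo moves relative to the dominant hippo (y1 = rng.random())
    male = x + rng.random() * (dominant - i1 * x)
    # Equations (8)-(10): female/immature hippo driven by T = exp(-t / T_max)
    T = np.exp(-t / t_max)
    if T > 0.6:
        female = x + h1 * (dominant - i2 * mg)
    elif rng.random() > 0.5:                           # r6 > 0.5: stays near the herd
        female = x + h2 * (mg - dominant)
    else:                                              # leaves the herd: random position
        female = lb + rng.random() * (ub - lb)
    # Equations (11)-(12): greedy acceptance of each candidate
    for cand in (np.clip(male, lb, ub), np.clip(female, lb, ub)):
        f = objective(cand)
        if f < fit[i]:
            pop[i], fit[i] = cand, f
```

In the original HO, this step is applied to the first half of the population in each iteration; the greedy acceptance means a hippo's objective value can never get worse during this phase.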

The growth mechanism is improved by introducing a new convergence factor $T$, specifically designed to dynamically adjust the behavioral patterns of immature hippos. The improved formulation of $T$ is as follows:

where $t$ is the current iteration number and $T_{\max}$ is the maximum number of iterations.

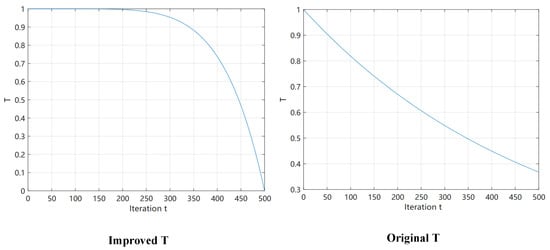

Plots of the convergence factors of Equations (8) and (13), before and after the improvement, are shown in Figure 2. When the improved convergence factor $T$ is above its threshold (Equation (14)), the simulated immature hippopotamuses show a higher propensity to explore within the herd or its surrounding area. This behavior drives the algorithm to refine its search in a local region close to the current optimal solution, enhancing its search accuracy and efficiency in that region. When $T$ is below the threshold and $r_6 > 0.5$, the immature hippo attempts to move away from the current optimal solution (Equation (15)). This prolongs the search, lowering the probability that the algorithm falls into a local optimum and enabling a more thorough investigation of the global solution space. In this way, the algorithm can identify and escape potential local optima more efficiently, increasing the probability of finding the global optimum. Otherwise, immature hippos perform random exploration, which maintains diversity and prevents premature convergence. By better simulating the natural behavior of hippos, this improvement enhances the search capability and adaptability of HO.

Figure 2.

Plots of convergence factor T before and after improvement.
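To make the role of the threshold concrete, the short sketch below counts how long the original schedule of Equation (8) keeps $T$ above 0.6. Solving $\exp(-t/T_{\max}) > 0.6$ gives $t < T_{\max} \ln(1/0.6) \approx 0.511\,T_{\max}$, so with the original factor the first branch of Equation (9) is active for roughly the first half of the run. The improved formula of Equation (13) is not reproduced here; the `factor` argument is a hypothetical hook where any schedule could be passed in for the same count.

```python
import math


def original_T(t: int, t_max: int) -> float:
    """Original HO convergence factor, Equation (8): T = exp(-t / T_max)."""
    return math.exp(-t / t_max)


def iterations_above(t_max: int, threshold: float = 0.6, factor=original_T) -> int:
    """Count iterations t = 1..t_max for which factor(t, t_max) > threshold."""
    return sum(1 for t in range(1, t_max + 1) if factor(t, t_max) > threshold)


if __name__ == "__main__":
    # Closed form for Equation (8): exp(-t / T_max) > 0.6  <=>  t < T_max * ln(1 / 0.6)
    print(iterations_above(500))        # -> 255
    print(500 * math.log(1 / 0.6))      # about 255.41
```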

2.3. Small-Hole Imaging Reverse Learning Strategy

The reverse learning strategy was proposed to address the tendency of most intelligent optimization algorithms to become trapped in local extrema [45]. Its core idea is to construct a reverse solution for each current solution during population optimization, compare the objective function values of the two, and carry the better one into the next iteration. Building on this approach, this study adopts the small-hole imaging reverse learning technique [46] to increase population diversity, which strengthens the global search capability of the algorithm and lets it approximate the global optimum more accurately.

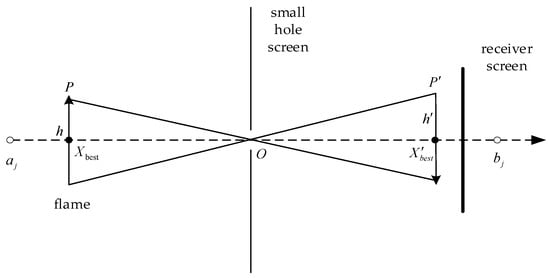

The principle of small-hole imaging is shown in Figure 3. The method combines pinhole imaging with the dimension-by-dimension reverse learning derived from lens imaging opposition-based learning (LensOBL) [47]. Its aim is to find a reverse solution for each dimension of a feasible solution, thus reducing the risk of the algorithm falling into a local optimum.

Figure 3.

Schematic diagram of small-hole imaging reverse learning.

Assume that, in a certain space, there is a flame of height $h$ whose projection on the X-axis is $x_j^{\text{best}}$ (the $j$-th dimension of the current optimal solution). The lower and upper bounds of the coordinate axis are $lb_j$ and $ub_j$ (the bounds of the $j$-th dimension), and a screen with a small hole is placed at the base point $O$, the midpoint $(lb_j + ub_j)/2$. Light from the flame passing through the small hole forms an inverted image of height $h^{*}$ on the receiving screen, which yields a reversed point $x_j^{*}$ on the X-axis (the reverse solution of the $j$-th dimension). From the principle of small-hole imaging, Equation (16) can be derived:

$$\frac{(lb_j + ub_j)/2 - x_j^{\text{best}}}{x_j^{*} - (lb_j + ub_j)/2} = \frac{h}{h^{*}} \tag{16}$$

Let $h/h^{*} = n$. Solving for $x_j^{*}$ gives Equation (17), and Equation (18) is obtained when $n = 1$:

$$x_j^{*} = \frac{lb_j + ub_j}{2} + \frac{lb_j + ub_j}{2n} - \frac{x_j^{\text{best}}}{n} \tag{17}$$

$$x_j^{*} = lb_j + ub_j - x_j^{\text{best}} \tag{18}$$

As Equation (18) shows, small-hole imaging reverse learning reduces to the general reverse learning strategy when $n = 1$. In that case, however, it only maps the current optimal position to a fixed reverse point, and this fixed point is frequently far from the global optimum. Therefore, by adjusting the distance between the receiving screen and the small-hole screen, that is, by changing the adjustment factor $n$, the algorithm can generate reverse points closer to the global optimum, helping it jump out of a local optimal region and move toward the global optimal region.
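The sketch below implements Equation (17) dimension by dimension, together with the greedy comparison described at the start of this subsection (keep whichever of the current best and its reverse point is better). It assumes minimization. This section does not say how MHO schedules $n$, so it is left as a plain parameter, and clipping the reverse point to the bounds is an added assumption. Both function names are hypothetical.

```python
import numpy as np


def pinhole_reverse(x_best, lb, ub, n=1.0):
    """Equation (17): dimension-wise small-hole imaging reverse point of x_best."""
    x_best = np.asarray(x_best, dtype=float)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    mid = (lb + ub) / 2.0                      # base point O of the small-hole screen
    # Same as mid + (mid - x_best) / n: reflection through O, shrunk by a factor n
    return mid + mid / n - x_best / n


def reverse_learning_step(x_best, f_best, lb, ub, objective, n=1.0):
    """Keep whichever of x_best and its reverse point has the lower objective value."""
    candidate = np.clip(pinhole_reverse(x_best, lb, ub, n), lb, ub)
    f_candidate = objective(candidate)
    if f_candidate < f_best:
        return candidate, f_candidate
    return np.asarray(x_best, dtype=float), f_best


if __name__ == "__main__":
    # n = 1 reduces to ordinary reverse learning, Equation (18): lb + ub - x
    print(pinhole_reverse([8.0], [0.0], [10.0], n=1.0))   # -> [2.]
    # n = 2 pulls the reverse point halfway back toward the midpoint 5
    print(pinhole_reverse([8.0], [0.0], [10.0], n=2.0))   # -> [3.5]
```

Larger values of $n$ keep the reverse point closer to the centre of the range, which is the knob the text describes for moving the reverse solution away from the fixed opposite point.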

The exploitation phase of the original hippopotamus algorithm describes a hippopotamus fleeing from a predator. When a hippopotamus cannot repel a predator with its defensive behaviors, it tries to leave the area to avoid the predator, looking for a safe location close to its current position. The original authors model this behavior in the third phase, which improves the algorithm's local search capability, by generating random positions close to the hippo's present location:

$$LB_j^{\text{local}} = \frac{lb_j}{t}, \quad UB_j^{\text{local}} = \frac{ub_j}{t}, \quad t = 1, 2, \dots, T_{\max} \tag{19}$$

$$X_i^{\text{HippoE}}: \ x_{i,j}^{\text{HippoE}} = x_{i,j} + r_{10} \cdot \left(LB_j^{\text{local}} + s_1 \cdot \left(UB_j^{\text{local}} - LB_j^{\text{local}}\right)\right), \quad i = 1, \dots, N, \quad j = 1, \dots, m \tag{20}$$

where $X_i^{\text{HippoE}}$ is the position of the hippo after escaping from the predator, searched to find the closest safe position, and $s_1$ is a vector or number randomly selected from the three scenarios of Equation (21). The scenarios in $s$ encourage better local search: $\vec{r}_{11}$ is a random vector between 0 and 1, $r_{10}$ and $r_{13}$ are random numbers in the range 0 to 1, and $r_{12}$ is a normally distributed random number. $t$ denotes the current iteration number, and $T_{\max}$ denotes the maximum number of iterations.

$$s = \begin{cases} 2 \times \vec{r}_{11} - 1 \\ r_{12} \\ r_{13} \end{cases} \tag{21}$$

If the fitness value improves at the new position, the hippopotamus is considered to have relocated to a safer area close to its original location, and its position is updated by Equation (22):

$$X_i = \begin{cases} X_i^{\text{HippoE}}, & F_i^{\text{HippoE}} < F_i \\ X_i, & \text{otherwise} \end{cases} \tag{22}$$
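A NumPy sketch of the escape step of Equations (19)–(22), following the original HO formulation for a minimization problem. It shows only the baseline escape move; the small-hole imaging reverse learning that MHO adds to this phase is not included. Clipping candidates to the global bounds is an assumption, and the function name `phase3_escape` is hypothetical.

```python
import numpy as np


def phase3_escape(pop, fit, t, lb, ub, objective, rng):
    """Equations (19)-(22): local search around each hippo; pop and fit updated in place."""
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    n, m = pop.shape
    lb_local, ub_local = lb / t, ub / t                  # Equation (19): bounds shrink with t
    for i in range(n):
        # Equation (21): 2*r11 - 1 (vector), r12 ~ N(0, 1), r13 ~ U(0, 1)
        s = [2 * rng.random(m) - 1,
             np.full(m, rng.standard_normal()),
             np.full(m, rng.random())]
        s1 = s[rng.integers(3)]
        # Equation (20): r10 is a uniform random number in [0, 1)
        cand = pop[i] + rng.random() * (lb_local + s1 * (ub_local - lb_local))
        cand = np.clip(cand, lb, ub)
        f = objective(cand)
        if f < fit[i]:                                   # Equation (22): greedy acceptance
            pop[i], fit[i] = cand, f
```

Because the local bounds are divided by $t$, the step size shrinks as the run progresses, which is what turns this phase into a local, exploitation-oriented search.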

Incorporating the small-hole imaging reverse learning strategy into HO can effectively improve the diversity and optimization efficiency of the algorithm. The strategy enhances population diversity and expands the search range through chaotic sequences, while mapping the optimal solution dimension by dimension to reduce interdimensional interference and improve global search capability. It also improves stability, lowers the risk of becoming trapped in a local optimum, dynamically adjusts the search range, and balances global search with local exploitation, all of which help the algorithm find a better solution in each iteration.

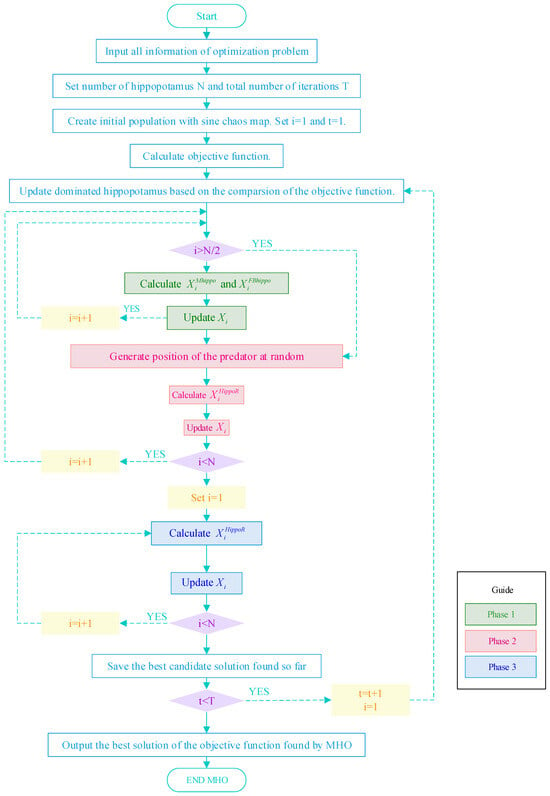

2.4. Algorithmic Process

The procedure of MHO is shown in the flowchart in Figure 4. First, an initial population is created using the sine chaotic map, and the iteration and individual counters are initialized. The algorithm then proceeds in three phases. In the first phase (the exploration phase), the hippopotamuses update their positions in the river or pond: Equations (6) and (14) are used to calculate the positions of the male and female hippos, respectively, and Equations (11) and (12) update their positions. The second phase, hippopotamus defense against predators, is the same as in the original hippopotamus algorithm. In the third phase, the hippopotamus escapes from the predator: its final position is calculated, and its nearest safe position is updated using Equation (17). Once every individual has been processed, the iteration counter is incremented and the individual counter is reset for the next iteration. When the maximum number of iterations is reached, MHO outputs the best objective function solution it has found.

Figure 4.

Flowchart of MHO algorithm.

2.5. Computational Complexity

Time complexity is a basic measure of algorithm efficiency; this paper analyzes it using big-O notation [48]. Assuming that the population size is $N$, the dimension is $D$, and the number of iterations is $T_{\max}$, the time complexities of HO and MHO are as follows:

The standard HO algorithm consists of two phases: random population initialization and subsequent hippo position updates. The initialization phase of HO has a time complexity of $O(N \times D)$. In the position update phase, the hippopotamuses update their positions in the river or pond, defend themselves, and escape from predators. Each iteration costs $O(N \times D)$, so after $T_{\max}$ iterations the cost accumulates to $O(T_{\max} \times N \times D)$. Therefore, the total time complexity of HO is $O(N \times D) + O(T_{\max} \times N \times D)$, that is, $O(T_{\max} \times N \times D)$.

The proposed MHO algorithm consists of three phases: population initialization based on the sine chaotic map, hippopotamus position updating, and small-hole imaging reverse learning. The sine chaotic map-based initialization costs $O(N \times D)$. The position update phase is very similar to that of HO, with the same time complexity of $O(T_{\max} \times N \times D)$. The small-hole imaging reverse learning phase is executed in every iteration and costs at most $O(N \times D)$ per iteration, giving a total of at most $O(T_{\max} \times N \times D)$. Thus, the overall time complexity of MHO is $O(T_{\max} \times N \times D)$, the same order as that of HO, which indicates that the proposed enhancement strategies do not reduce the solution efficiency of the algorithm.
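As a worked check of this argument, the three MHO phase costs can be summed explicitly. Here $N$ is the population size, $D$ the dimension, and $T_{\max}$ the number of iterations, and we take the worst case in which the reverse-learning step touches every dimension of every individual in each iteration:

$$
\begin{aligned}
O(\text{MHO}) &= \underbrace{O(N D)}_{\text{chaotic initialization}} + \underbrace{O(T_{\max} N D)}_{\text{position updates}} + \underbrace{O(T_{\max} N D)}_{\text{reverse learning}} \\
&= O(T_{\max} N D) = O(\text{HO})
\end{aligned}
$$

Since $T_{\max} \geq 1$, the initialization term is dominated, and the two added strategies change only the constant factor, not the order of growth.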

3. Experiment

In this section, a series of experiments is designed to validate the effectiveness of the improved HO algorithm. Twenty-three benchmark test functions are used to evaluate MHO and to compare it with nine other metaheuristic algorithms. In addition, ablation experiments are conducted to examine the contribution of the individual components of MHO.

3.1. Experimental Setup and Evaluation Criteria

To ensure fairness and validity, MHO and the other nature-inspired algorithms are all implemented in MATLAB 2019b and run under Windows 10 on the same computer, equipped with a 12th Gen Intel(R) Core(TM) i5-12600KF 3.70 GHz processor and 16 GB of RAM. The performance of the algorithms is evaluated using the following criteria:

Mean: the average of the results obtained by the algorithm over multiple independent runs on a benchmark function. The mean reflects the general ability of the algorithm to find the optimal solution, i.e., its expected performance; a lower mean indicates that the algorithm finds better solutions on average over multiple runs. It is calculated as in Equation (23):

$$\mathrm{Mean} = \frac{1}{n}\sum_{i=1}^{n} f_i \tag{23}$$

where $n$ is the number of runs and $f_i$ denotes the result of the $i$-th run.

Standard deviation (Std): the standard deviation of the results obtained over multiple runs on a test function. A smaller standard deviation indicates more stable performance, which usually means that the algorithm is more robust. It is calculated as in Equation (24).

Rank: the algorithms are ranked using the Friedman test; the lower the mean and Std, the better the rank (i.e., the smaller the rank number). Algorithms with identical results share the same, averaged rank. “Rank-Count” is the sum of an algorithm's ranks, “Ave-Rank” is its average rank, and “Overall-Rank” is its final position among all compared algorithms.
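The criteria above can be computed with a few lines of Python. This is a sketch under assumptions: Equation (24) is not reproduced here, so the sample standard deviation (denominator n − 1, which is also MATLAB's `std` default) is assumed; ties are given the average of the positions they occupy, which is consistent with the half-integer Rank-Count values reported in this paper; and ranking is done on a single score (e.g., the mean), since the exact way mean and Std are combined is not specified in this section. The function names are hypothetical.

```python
import statistics

def mean_and_std(results):
    """Mean over n runs (Equation (23)) and sample standard deviation (n - 1 denominator)."""
    return statistics.mean(results), statistics.stdev(results)

def average_ranks(scores):
    """Rank scores ascending (lower is better); tied scores share the average of their positions."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        # extend the block while the next score ties with the current one
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # average of the 1-based positions pos+1 .. end+1
        for j in range(pos, end + 1):
            ranks[order[j]] = shared
        pos = end + 1
    return ranks

def rank_table(score_matrix):
    """score_matrix[f][a] = score of algorithm a on function f; returns Rank-Count and Ave-Rank."""
    per_function = [average_ranks(row) for row in score_matrix]
    n_alg = len(score_matrix[0])
    rank_count = [sum(r[a] for r in per_function) for a in range(n_alg)]
    ave_rank = [rc / len(score_matrix) for rc in rank_count]
    return rank_count, ave_rank
```

For example, if two algorithms both reach 0 on a function and a third reaches 3.5, the first two each receive rank 1.5 and the third receives rank 3.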

3.2. Test Functions

To test the performance of MHO, 23 benchmark functions with different characteristics are used; their details, including the dimensionality (Dim), the domain, and the known theoretical optimum, are listed in Table 1. The functions fall into three categories: single-peak (unimodal) functions F1–F7, multimodal functions F8–F13, and fixed-dimension multimodal functions F14–F23. A single-peak function has only one global optimum and no local optima, so it is suitable for evaluating the convergence speed and exploitation capability of an optimization algorithm. A multimodal function has multiple local optima but only one global optimum, so it is commonly used to test the global search capability of an algorithm and its ability to avoid falling into local optima. Fixed-dimension multimodal functions are defined for a specific dimension, so their complexity and difficulty are fixed and do not change with the dimension, which ensures consistent and comparable tests.

Table 1.

Benchmark functions.

3.3. Sensitivity Analysis

MHO is a population-based optimizer that searches through iterative computation, so its results can be expected to depend on the number of fitness evaluations, FEs = p × t, where p is the population size and t is the number of iterations. Most studies in the literature fix FEs at 15,000, i.e., p = 30 and t = 500. However, different p and t settings can affect the performance of the algorithm. We therefore test three combinations with the same budget (20/750, 30/500, and 60/250) to analyze their effects on MHO. Seventeen test functions were randomly selected for this sensitivity analysis, and the results are shown in Table 2.
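The three p/t settings compared in Table 2 (20/750, 30/500, and 60/250) spend the same nominal budget, which a short check confirms:

```python
# Nominal fitness-evaluation budget FEs = p * t for the three settings compared in Table 2
settings = [(20, 750), (30, 500), (60, 250)]  # (population size p, iterations t)
budgets = {f"{p}/{t}": p * t for p, t in settings}
print(budgets)  # {'20/750': 15000, '30/500': 15000, '60/250': 15000}
```

This nominal count ignores the evaluations spent on initialization and on any extra evaluations made by the reverse-learning step, so the actual number of evaluations per run may be somewhat higher.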

Table 2.

Sensitivity analysis of p/t.

As can be seen in Table 2, for three of the functions the same best results are achieved under all settings. For six of the functions, the 20/750 setting gives the smallest standard deviation, while the 30/500 setting gives smaller Std values on two functions. Rank-Count is the sum of the ranks of a p/t setting over all functions; the 30/500 setting has the smallest Rank-Count, 32.5. After the Friedman test, 30/500 also takes first place in the final ranking (Overall-Rank), so it gives the best results and is adopted as the fixed parameter setting for the experiments in this paper.

3.4. Experimental Results

Comparative experiments were conducted on the 23 test functions above for MHO, HO, and three HO variants (HO1, HO2, and HO3), which were also compared with the Harris hawks optimization algorithm (HHO) [49], the honey badger algorithm (HBA) [50], the dung beetle optimization algorithm (DBO) [51], particle swarm optimization (PSO), and the whale optimization algorithm (WOA) [52]. HO1 is HO with the population initialization replaced by the sine chaotic map (Equation (4)); HO2 is HO with the small-hole imaging reverse learning process added (Equation (17)); and HO3 is HO with the improved growth mechanism (Equation (13)). All algorithms are evaluated with uniform parameter settings to ensure fairness, and each algorithm is run independently 50 times. The experimental results are shown in Table 3.

Table 3.

Experimental results of MHO and its comparison algorithms.

Table 3 reports the performance of MHO and the comparison algorithms on the benchmark functions. On the single-peak functions, MHO outperforms the other algorithms in both mean and standard deviation on several functions and is slightly inferior to HHO on others, although on one of these it is second only to HHO in both mean and standard deviation. On one single-peak function, MHO is better than all other algorithms except the variant HO2. Among the multimodal functions, MHO performs best on some functions; it is inferior to HHO on two functions, but on one of them its mean is second only to HHO and better than those of the remaining algorithms. For the fixed-dimension test functions, MHO outperforms the other algorithms in both mean and standard deviation on six functions, and on the remaining four its mean is the best even though its standard deviation is slightly worse than those of some other algorithms.

In summary, MHO shows a clear advantage on the benchmark functions, with strong optimization performance and stability on single-peak, multimodal, and fixed-dimension functions alike. These results indicate that MHO is effective in solving complex optimization problems.

3.5. Friedman Test

The Friedman test [53] is a non-parametric statistical test that ranks the algorithms on each problem and compares their average ranks. It is an effective tool for comparing the performance of optimization algorithms, assessing statistical significance, and evaluating robustness, and therefore supports well-founded algorithm selection. Because it relies on ranks rather than raw objective values, it allows a fair comparison across problems and reduces the bias caused by the choice of specific problems.

Therefore, to further compare the overall performance of the 10 algorithms, they are ranked using the Friedman test. Table 4 shows the performance rankings of the 10 algorithms, including MHO, on 17 functions randomly selected from the 23 benchmark functions. MHO has a rank sum of 50.5, an average rank of 2.1597, and a final ranking of 1, indicating the best overall performance. The Friedman test thus confirms that MHO performs better than the other algorithms.
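The average ranks reported in Table 4 feed directly into the Friedman statistic. The sketch below computes the standard Friedman chi-square from a matrix of per-problem ranks; it is a generic illustration, and this section does not state which variant of the statistic or which post hoc test was used. The function name is hypothetical.

```python
def friedman_statistic(rank_rows):
    """Friedman chi-square from per-problem ranks.

    rank_rows[i][j] is the rank of algorithm j on problem i (1 = best, ties averaged).
    Returns (chi2, average ranks); chi2 has k - 1 degrees of freedom.
    """
    n = len(rank_rows)       # number of problems (benchmark functions)
    k = len(rank_rows[0])    # number of algorithms
    avg = [sum(row[j] for row in rank_rows) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return chi2, avg
```

With k = 10 algorithms the statistic is compared against the χ² distribution with 9 degrees of freedom; at α = 0.05 the critical value is about 16.92, so larger values indicate that the algorithms' average ranks differ significantly.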

Table 4.

Performance ratings of MHO and its comparative algorithms.

3.6. Convergence Analysis

A convergence curve shows how an algorithm approaches the optimal solution over the iterations and gives a simple, intuitive comparison of the strengths and weaknesses of one or more algorithms. Convergence analysis is therefore a key step in verifying whether MHO can stably find the optimal or a near-optimal solution of an optimization problem.

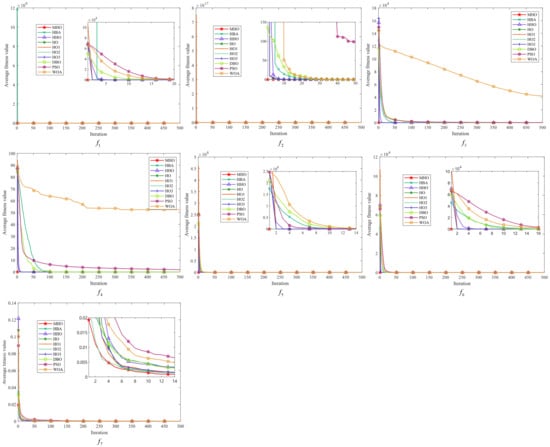

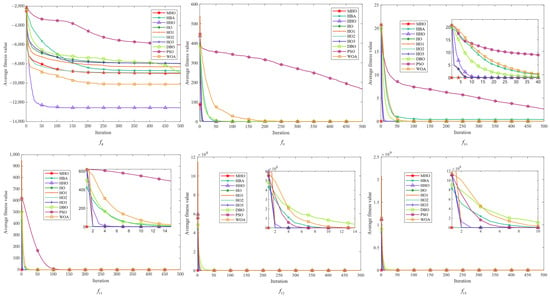

In this study, the average fitness value of the objective function is used to evaluate convergence, and each algorithm runs for up to 500 iterations. The convergence curves of the ten algorithms, including MHO, on the 23 benchmark functions are plotted and analyzed. Figure 5, Figure 6 and Figure 7 show the convergence curves for the single-peak, multimodal, and fixed-dimension functions, respectively.
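A convergence curve of this kind can be built from the per-iteration fitness records of the independent runs. The sketch assumes that each run's curve is its best-so-far fitness and that the plotted curve is the iteration-wise average over runs; the exact averaging used for Figures 5 to 7 is not detailed in this section, and the function names are hypothetical.

```python
def best_so_far(history):
    """Running minimum of the fitness recorded at each iteration of one run."""
    curve, best = [], float("inf")
    for value in history:
        best = min(best, value)  # a minimization problem never loses its best solution
        curve.append(best)
    return curve

def average_convergence_curve(runs):
    """Average the best-so-far curves of several independent runs, iteration by iteration."""
    curves = [best_so_far(h) for h in runs]
    n_iter = len(curves[0])
    return [sum(c[t] for c in curves) / len(curves) for t in range(n_iter)]
```

For 50 runs of 500 iterations each, `runs` would be a list of 50 lists of length 500, and the result is a single 500-point curve; such curves are usually drawn on a logarithmic fitness axis.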

Figure 5.

Convergence plots of single-peak function.

Figure 6.

Convergence plots of multi-peak function.

Figure 7.

Convergence plots of fixed-dimensional multimodal function.

Figure 5 shows the convergence curves for the single-peak functions. On all seven functions, MHO starts from the lowest initial value, indicating that it finds good-quality solutions at the early stage. Except on one function, the curves of the variant HO2 are similar to those of MHO, and both achieve the best convergence speed and accuracy, which reflects the effectiveness of the small-hole imaging reverse learning strategy. Apart from PSO on one function and WOA on another, all curves converge. On one function, MHO does not converge as quickly as some of the other algorithms, but it reaches the smallest final value, so its overall performance is still better.

Figure 6 shows the convergence curves for the multi-peak functions. Again, the curves of MHO and HO2 are similar on all six functions. On one function, the fitness values of MHO and the other algorithms are significantly lower than those of HHO; on the remaining functions, the advantage of MHO is similar to that observed on the single-peak functions. On one function, MHO and HO2 (green line) converge first, followed by HO, HO1, HO3, and HHO, whose four curves are similar; PSO shows the slowest convergence and the worst fitness values on that function.

Figure 7 shows the fixed-dimension multimodal functions. On one function, the overall differences between the algorithms are small, although the detailed curves differ noticeably. MHO shows the same characteristic on all of these functions: a rapid decline in the early iterations, indicating fast convergence. Other algorithms also converge quickly on particular functions, but MHO reaches lower fitness values. On several of these functions HHO has the worst overall performance, whereas MHO converges quickly and reaches the optimal solutions on all of them. Although other algorithms also perform well on specific functions, MHO is the most competitive overall in these tests.

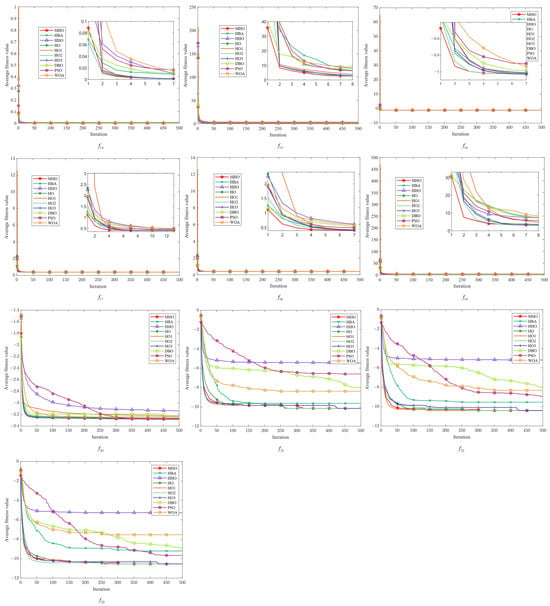

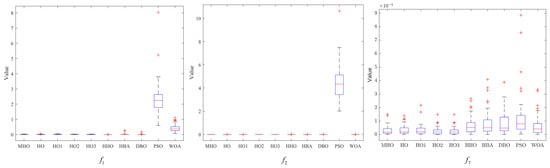

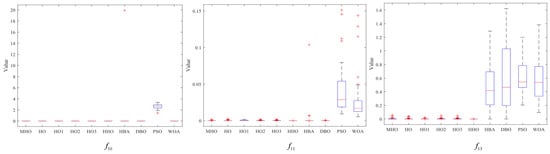

3.7. Stability Analysis

In this section, boxplots are used to analyze the stability of all the algorithms, again using the results of 50 independent runs on the 23 benchmark functions. Figure 8, Figure 9 and Figure 10 show the boxplots for the single-peak, multi-peak, and fixed-dimension multimodal functions, respectively. As an example, the boxplot of one of the functions shown in Figure 7 is used to explain how the boxplots are read.

Figure 8.

Boxplots of single-peak function.

Figure 9.

Boxplots of multi-peak function.

Figure 10.

Boxplots of multimodal functions with fixed dimensions.

In the boxplot, the red horizontal line marks the median; a lower median indicates better performance on the test function, and it is the primary metric for comparing the algorithms. MHO has a low median, and all algorithms except HHO perform similarly. The blue boxes (for example, those of DBO and WOA) show the interquartile range (IQR); a smaller IQR indicates more stable performance, so MHO, HO, HO1, HO2, HO3, HHO, HBA, and WOA all show good stability. The red crosses mark outliers, and fewer outliers indicate better stability. Here, only HHO, HBA, and PSO have outliers, implying that the other algorithms are more stable. The gray dotted lines are the whiskers; longer whiskers indicate more dispersed results, and the long whisker of DBO shows that it performs poorly. Stability is assessed by combining these indicators. For brevity, only representative examples are shown.
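The quantities read off a boxplot can be computed directly from the 50 run results of one algorithm. This sketch assumes the usual 1.5 × IQR whisker rule, which is also the default of MATLAB's `boxplot`; quartiles are computed with Python's `statistics.quantiles` (inclusive method), whose interpolation may differ slightly from MATLAB's. The function name is hypothetical.

```python
import statistics

def box_stats(results, whisker=1.5):
    """Median, quartiles, IQR, whisker ends and outliers for one algorithm's run results."""
    data = sorted(results)
    q1, med, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lo_fence, hi_fence = q1 - whisker * iqr, q3 + whisker * iqr
    inside = [v for v in data if lo_fence <= v <= hi_fence]          # points the whiskers can reach
    outliers = [v for v in data if v < lo_fence or v > hi_fence]     # drawn as red crosses
    return {"median": med, "q1": q1, "q3": q3, "iqr": iqr,
            "whiskers": (inside[0], inside[-1]), "outliers": outliers}
```

A single bad run, such as a value of 100 among results between 1 and 9, leaves the median and IQR almost unchanged but shows up as an outlier, which is why the median and IQR are read together with the number of outliers.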

Among the single-peak functions shown in Figure 8, MHO has the lowest median, the fewest outliers, and the smallest interquartile range, indicating better stability, while PSO is the least stable. The other four single-peak functions, which are not shown, follow the same general trend as the representative cases.

Among the multi-peak functions shown in Figure 9, MHO has the lowest median and good stability, with only slightly more outliers than HHO on one function. The functions not shown follow the same general trend as the representative cases, with MHO slightly less stable than HHO and PSO performing the worst.

Among the fixed-dimension multimodal functions shown in Figure 10, MHO has the lowest median, the fewest outliers, and the best stability, while HHO performs the worst. The functions not shown follow the same general trend. Taken together, the boxplot analysis indicates that MHO has the best overall stability.

4. Application to Engineering Design Problems

To further confirm the effectiveness of MHO in solving global optimization problems, three typical constrained engineering problems are examined in this section: the speed reducer design [54], the gear train design [55], and the step-cone pulley design [56]. Because of their complex, nonlinear constraints, these problems are not only important in engineering practice but also serve as good benchmarks for evaluating optimization methods. Each experiment consists of 50 independent runs with a maximum of 50 iterations per run, and the performance of MHO is compared with that of the other algorithms.

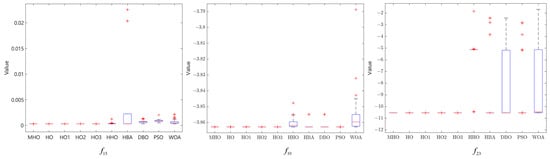

4.1. Speed Reducer Design Problem

Speed reducers are key components of mechanical drive systems. The design of the speed reducer shown in Figure 11 is challenging because it involves seven design variables: the face width, the module of the teeth, the number of teeth on the pinion, the length of the first shaft between the bearings, the length of the second shaft between the bearings, the diameter of the first shaft, and the diameter of the second shaft. The objective is to minimize the total weight of the gearbox subject to 11 constraints, which cover the bending stress of the gear teeth, the surface stress, the lateral deflections of shafts 1 and 2 due to the transmitted forces, and the stresses in shafts 1 and 2. The mathematical model is given in Equation (25).

Figure 11.

Speed reducer design problem diagram.
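Equation (25) is not reproduced in this section, so the sketch below assumes the formulation of the speed reducer problem that is standard in the metaheuristics literature, with variables ordered as in the description above (face width, module, pinion teeth, shaft lengths, shaft diameters). The static quadratic penalty is also an assumption, since the constraint-handling method is not stated here; the function names are hypothetical.

```python
import math

def speed_reducer_weight(x):
    """Gearbox weight for x = [x1..x7] (face width, module, pinion teeth, l1, l2, d1, d2)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2**2 * (3.3333 * x3**2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6**2 + x7**2)
            + 7.4777 * (x6**3 + x7**3)
            + 0.7854 * (x4 * x6**2 + x5 * x7**2))

def speed_reducer_constraints(x):
    """The 11 inequality constraints g_i(x) <= 0 of the common formulation."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27 / (x1 * x2**2 * x3) - 1,                                          # bending stress of teeth
        397.5 / (x1 * x2**2 * x3**2) - 1,                                    # surface stress
        1.93 * x4**3 / (x2 * x3 * x6**4) - 1,                                # deflection of shaft 1
        1.93 * x5**3 / (x2 * x3 * x7**4) - 1,                                # deflection of shaft 2
        math.sqrt((745 * x4 / (x2 * x3))**2 + 16.9e6) / (110 * x6**3) - 1,   # stress in shaft 1
        math.sqrt((745 * x5 / (x2 * x3))**2 + 157.5e6) / (85 * x7**3) - 1,   # stress in shaft 2
        x2 * x3 / 40 - 1,                                                    # geometric limits
        5 * x2 / x1 - 1,
        x1 / (12 * x2) - 1,
        (1.5 * x6 + 1.9) / x4 - 1,                                           # shaft design rules
        (1.1 * x7 + 1.9) / x5 - 1,
    ]

def penalized(x, rho=1e6):
    """Objective plus a static quadratic penalty on constraint violations (assumed scheme)."""
    violation = sum(max(0.0, g) ** 2 for g in speed_reducer_constraints(x))
    return speed_reducer_weight(x) + rho * violation

# Variable bounds of the common formulation (x3, the number of teeth, is usually an integer)
BOUNDS = [(2.6, 3.6), (0.7, 0.8), (17, 28), (7.3, 8.3), (7.3, 8.3), (2.9, 3.9), (5.0, 5.5)]
```

Evaluating `penalized` at the frequently reported near-optimal design x ≈ (3.5, 0.7, 17, 7.3, 7.7153, 3.3502, 5.2867) gives a weight of roughly 2994.5, with all constraints satisfied up to rounding; a metaheuristic such as MHO would minimize `penalized` within `BOUNDS`.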

MHO is compared with nine other algorithms on the speed reducer design problem. The results in Table 5 show that MHO obtains the lowest weight (objective value) of all the algorithms.

Table 5.

Comparison of the results for the speed reducer design problem.

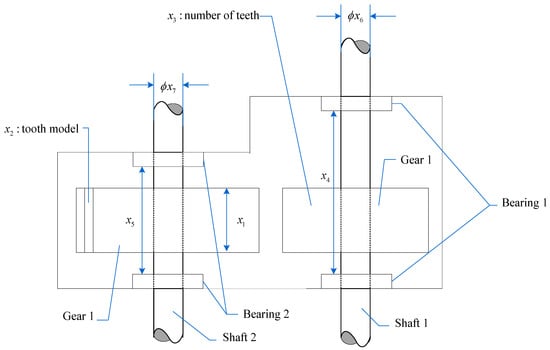

4.2. Gear Train Design Problem

The gear train design problem shown in Figure 12 aims to minimize the deviation of the gear ratio from its required value. The problem has four integer decision variables, which represent the numbers of teeth of the four gears. The mathematical model is given in Equation (26).

Figure 12.

Gear system design problem diagram.
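Equation (26) is not reproduced in this section; the sketch assumes the formulation that is standard in the literature, f(x) = (1/6.931 − x2·x3/(x1·x4))² with every x_i an integer in [12, 60]. The search space is small (49⁴ ≈ 5.8 million designs), and because f depends only on the products x2·x3 and x1·x4, the exact optimum can be found by enumeration, which gives a useful reference for judging metaheuristic results. The names are hypothetical.

```python
import bisect
from itertools import product

TARGET = 1 / 6.931   # required gear ratio in the common formulation (assumed)
LO, HI = 12, 60      # allowed numbers of teeth (assumed bounds)

def gear_ratio_error(x):
    """Squared deviation of the gear ratio x2*x3/(x1*x4) from the target ratio."""
    x1, x2, x3, x4 = x
    return (TARGET - (x2 * x3) / (x1 * x4)) ** 2

def exhaustive_search():
    """Exact optimum by enumeration over the achievable products x2*x3 and x1*x4."""
    pairs = {}
    for a, b in product(range(LO, HI + 1), repeat=2):
        pairs.setdefault(a * b, (a, b))   # keep one pair of teeth counts per product
    dens = sorted(pairs)
    best_err, best_x = float("inf"), None
    for num in pairs:                      # candidate value of x2*x3
        ideal = num / TARGET               # x1*x4 that would match the target exactly
        k = bisect.bisect_left(dens, ideal)
        for j in (k - 1, k):               # ratio is monotone in x1*x4: check both neighbours
            if 0 <= j < len(dens):
                err = (TARGET - num / dens[j]) ** 2
                if err < best_err:
                    (x2, x3), (x1, x4) = pairs[num], pairs[dens[j]]
                    best_err, best_x = err, (x1, x2, x3, x4)
    return best_err, best_x
```

For the frequently reported design x = (43, 16, 19, 49) the error is about 2.7 × 10⁻¹², so an algorithm's result on this problem can be compared directly with the value returned by `exhaustive_search()`.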

MHO is applied to the gear train design problem, and its results are compared with those of nine other algorithms. As shown in Table 6, the optimal value obtained by MHO is lower than those of the other nine algorithms, indicating that MHO performs best on this problem.

Table 6.

Comparison of the results of gear train design problem.

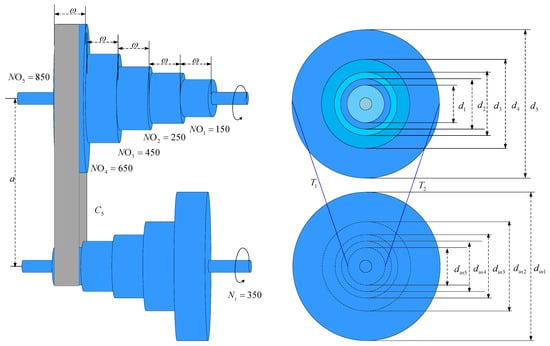

4.3. Step-Cone Pulley Problem

A step-cone pulley consists of two or more cone pulleys (steps) joined together, as shown in Figure 13. The problem has five design variables: the diameter of the pulley at each of the four steps and the width of the belt and pulleys. The goal is to minimize the weight of the step-cone pulley subject to 11 nonlinear constraints, which ensure that the transmitted power is at least 0.75 hp. Equation (27) gives the mathematical model of the step-cone pulley problem.

Figure 13.

Step-cone pulley problem diagram.

MHO is applied to the step-cone pulley problem and compared with nine alternative algorithms, using 50 independent runs with a maximum of 50 iterations per run. The results in Table 7 show that MHO performs best.

Table 7.

Comparison of the results of step-cone pulley problem.

5. Conclusions and Outlook

In this paper, we propose a modified hippopotamus optimization algorithm (MHO) that improves the performance of HO and addresses its tendency to become trapped in local optima.

The introduction of the sine chaotic map to initialize the population improves the diversity and randomness of the hippopotamus population, which enables the hippopotamus optimization algorithm to achieve a better balance between global and local searching, thus improving the initial solution quality as well as the convergence speed.

Introducing a new convergence factor into the hippopotamus position update helps prevent premature convergence. In addition, the small-hole imaging reverse learning strategy maps the current optimal solution dimension by dimension, which avoids interference between dimensions and expands the search range of the algorithm.

MHO was tested on 23 benchmark functions and compared with HO, its variants, and other metaheuristics in terms of the mean and standard deviation of the results. MHO achieves the best mean and standard deviation on thirteen test functions and fails to do so on only five. The sensitivity analysis, the Friedman test, and the convergence and stability analyses show that MHO ranks higher, is more stable, and escapes local optima faster. To further verify its ability to solve global optimization problems, MHO was applied to three engineering design problems, on which it obtained the best results among the compared algorithms. These experiments indicate that, compared with the existing algorithms tested, MHO has a stronger global search capability and explores the solution space more efficiently, thereby searching for potential optimal solutions more comprehensively. By dynamically adjusting its search direction and step size, MHO also adapts better to complex optimization problems and converges faster. In local search, MHO locates the optimal solution more accurately, and its mechanisms help it avoid being trapped in local optima. Overall, MHO shows higher robustness and outperforms the other nine compared algorithms on both the benchmark functions and the engineering design problems.

Nevertheless, MHO still tends to converge to local optima on certain functions in global optimization, and its performance on the complex speed reducer design problem is not very stable. In future research, we will continue to improve the exploration and exploitation capabilities of MHO and apply it to a wider range of problems, such as multi-objective optimization and neural networks.

Author Contributions

T.H.: writing—review and editing, software, formal analysis, and conceptualization. H.W.: writing—review and editing, writing—original draft, software, and methodology. T.L.: visualization, supervision, resources, and data curation. Q.L.: writing—review and editing, visualization, funding acquisition, methodology, and conceptualization. Y.H.: supervision, resources, validation, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Anhui Provincial Colleges and Universities Collaborative Innovation Project (GXXT-2023-068), and the Anhui University of Science and Technology Graduate Innovation Fund Project (2023CX2086).

Data Availability Statement

The data generated from the analysis in this study can be found in this article. This study does not report the original code, which is available for academic purposes from the lead contact. Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

Acknowledgments

We would like to thank the School of Electrical and Information Engineering at Anhui University of Science and Technology for providing the laboratory.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Dhiman, G.; Garg, M.; Nagar, A.; Kumar, V.; Dehghani, M. A novel algorithm for global optimization: Rat swarm optimizer. J. Ambient Intell. Humaniz. Comput. 2021, 12, 8457–8482.

- Gharaei, A.; Shekarabi, S.; Karimi, M. Modelling and optimal lot-sizing of the replenishments in constrained, multi-product and bi-objective EPQ models with defective products: Generalised cross decomposition. Int. J. Syst. Sci. 2020, 7, 262–274.

- Sun, Y.; Chen, Y. Multi-population improved whale optimization algorithm for high dimensional optimization. Appl. Soft Comput. 2024, 112, 107854.

- Shen, Y.; Zhang, C.; Gharehchopogh, F.; Mirjalili, S. An improved whale optimization algorithm based on multi-population evolution for global optimization and engineering design problems. Expert Syst. Appl. 2023, 215, 119269.

- Slowik, A.; Kwasnicka, H. Evolutionary algorithms and their applications to engineering problems. Neural Comput. Appl. 2020, 32, 12363–12379.

- Baluja, S.; Caruana, R. Removing the Genetics from the Standard Genetic Algorithm. In Proceedings of the Twelfth International Conference on Machine Learning, Tahoe City, CA, USA, 9–12 July 1995.

- Coelho, L.; Mariani, V. Improved differential evolution algorithms for handling economic dispatch optimization with generator constraints. Energy Convers. Manag. 2006, 48, 1631–1639.

- Ma, H.; Ye, S.; Simon, D.; Fei, M. Conceptual and numerical comparisons of swarm intelligence optimization algorithms. Soft Comput. 2017, 21, 3081–3100.

- Tang, J.; Liu, G.; Pan, Q. A Review on Representative Swarm Intelligence Algorithms for Solving Optimization Problems: Applications and Trends. IEEE-CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995.

- Socha, K.; Dorigo, M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008, 185, 1155–1173.

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report TR06; Erciyes University: Kayseri, Türkiye, 2005. Available online: https://abc.erciyes.edu.tr/pub/tr06_2005.pdf (accessed on 15 July 2024).

- Yang, X.; Hossein Gandomi, A. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Yu, V.F.; Jewpanya, P.; Redi, A.; Tsao, Y.C. Adaptive neighborhood simulated annealing for the heterogeneous fleet vehicle routing problem with multiple crossdocks. Comput. Oper. Res. 2021, 129, 105205.

- Rashedi, E.; Nezamabadipour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm—A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110–111, 151–166.

- Wang, S.H.; Li, Y.Z.; Yang, H.Y. Self-adaptive mutation differential evolution algorithm based on particle swarm optimization. Appl. Soft Comput. 2019, 81, 105496.

- Shanmugapriya, P.; Kumar, T.S.; Kirubadevi, S.; Prasad, P.V. IoT based energy management strategy for hybrid electric storage system in EV using SAGAN-COA approach. J. Energy Storage 2024, 104, 114315.

- Hu, S.J.; Kong, G.Q.; Zhang, C.S.; Fu, J.H.; Li, S.Y.; Yang, Q. Data-driven models for the steady thermal performance prediction of energy piles optimized by metaheuristic algorithms. Energy 2024, 313, 134000.

- Sun, B.; Peng, P.; Tan, G.; Pan, M.; Li, L.; Tian, Y. A fuzzy logic constrained particle swarm optimization algorithm for industrial design problems. Appl. Soft Comput. 2024, 167, 112456. Available online: https://api.semanticscholar.org/CorpusID:274134625 (accessed on 15 July 2024).

- Wu, S.; Dong, A.; Li, Q.; Wei, W.; Zhang, Y.; Ye, Z. Application of ant colony optimization algorithm based on farthest point optimization and multi-objective strategy in robot path planning. Appl. Soft Comput. 2024, 167, 112433.

- Palanisamy, S.K.; Krishnaswamy, M. Optimization and forecasting of reinforced wire ropes for tower crane by using hybrid HHO-PSO and ANN-HHO algorithms. Int. J. Fatigue 2024, 190, 108663.

- Liu, J.; Zhao, J.; Li, Y.; Zhou, H. HSMAOA: An enhanced arithmetic optimization algorithm with an adaptive hierarchical structure for its solution analysis and application in optimization problems. Thin-Walled Struct. 2025, 206, 112631.

- Cui, X.; Zhu, J.; Jia, L.; Wang, J.; Wu, Y. A novel heat load prediction model of district heating system based on hybrid whale optimization algorithm (WOA) and CNN-LSTM with attention mechanism. Energy 2024, 312, 133536.

- Che, Z.; Peng, C.; Yue, C. Optimizing LSTM with multi-strategy improved WOA for robust prediction of high-speed machine tests data. Chaos Soliton Fract. 2024, 178, 114394.

- Elsisi, M. Optimal design of adaptive model predictive control based on improved GWO for autonomous vehicle considering system vision uncertainty. Appl. Soft Comput. 2024, 158, 111581.

- Karaman, A.; Pacal, I.; Basturk, A.; Akay, B.; Nalbantoglu, U.; Coskun, S.; Sahin, O.; Karaboga, D. Robust real-time polyp detection system design based on YOLO algorithms by optimizing activation functions and hyper-parameters with artificial bee colony (ABC). Expert Syst. Appl. 2023, 221, 119741.

- Yu, X.; Zhang, W. Address wind farm layout problems by an adaptive Moth-flame Optimization Algorithm. Appl. Soft Comput. 2024, 167, 112462.

- Dong, K.; Yang, D.W.; Sheng, J.B.; Zhang, W.D.; Jing, P.R. Dynamic planning method of evacuation route in dam-break flood scenario based on the ACO-GA hybrid algorithm. Int. J. Disaster Risk Reduct. 2024, 100, 104219.

- Liu, X.; Wang, J.S.; Zhang, S.B.; Guan, X.Y.; Gao, Y.Z. Optimization scheduling of off-grid hybrid renewable energy systems based on dung beetle optimizer with convergence factor and mathematical spiral. Renew. Energy 2024, 237, 121874.

- Beşkirli, A.; Dağ, İ. I-CPA: An Improved Carnivorous Plant Algorithm for Solar Photovoltaic Parameter Identification Problem. Biomimetics 2023, 8, 569.

- Beşkirli, A.; Dağ, İ.; Kiran, M.S. A tree seed algorithm with multi-strategy for parameter estimation of solar photovoltaic models. Appl. Soft Comput. 2024, 167, 112220.

- Amiri, M.H.; Hashjin, N.M.; Montazeri, M.; Mirjalili, S.; Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 2024, 14, 5032. Available online: https://api.semanticscholar.org/CorpusID:268083241 (accessed on 15 July 2024).

- Maurya, P.; Tiwari, P.; Pratap, A. Application of the hippopotamus optimization algorithm for distribution network reconfiguration with distributed generation considering different load models for enhancement of power system performance. Electr. Eng. 2024.

- Chen, Y.; Wu, F.; Shi, L.; Li, Y.; Qi, P.; Guo, X. Identification of Sub-Synchronous Oscillation Mode Based on HO-VMD and SVD-Regularized TLS-Prony Methods. Energies 2024, 17, 5067. [Google Scholar] [CrossRef]

- Ribeiro, A.N.; Muñoz, D.M. Neural network controller for hybrid energy management system applied to electric vehicles. J. Energy Storage 2024, 104, 114502. [Google Scholar] [CrossRef]

- Wang, H.; Binti Mansor, N.N.; Mokhlis, H.B. Novel Hybrid Optimization Technique for Solar Photovoltaic Output Prediction Using Improved Hippopotamus Algorithm. Appl. Sci. 2024, 14, 7803. [Google Scholar] [CrossRef]

- Mashru, N.; Tejani, G.G.; Patel, P.; Khishe, M. Optimal truss design with MOHO: A multi-objective optimization perspective. PLoS ONE. 2024, 19, e0308474. Available online: https://api.semanticscholar.org/CorpusID:271905232 (accessed on 15 July 2024). [CrossRef]

- Abdelaziz, M.A.; Ali, A.A.; Swief, R.A.; Elazab, R. Optimizing energy-efficient grid performance: Integrating electric vehicles, DSTATCOM, and renewable sources using the Hippopotamus Optimization Algorithm. Sci. Rep. 2024, 14, 28974. [Google Scholar] [CrossRef]

- Baihan, A.; Alutaibi, A.I.; Alshehri, M.; Sharma, S.K. Sign language recognition using modified deep learning network and hybrid optimization: A hybrid optimizer (HO) based optimized CNNSa-LSTM approach. Sci. Rep. 2024, 14, 26111.

- Amiri, M.H.; Hashjin, N.M.; Najafabadi, M.K.; Beheshti, A.; Khodadadi, N. An innovative data-driven AI approach for detecting and isolating faults in gas turbines at power plants. Expert Syst. Appl. 2025, 263, 125497.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. Available online: https://dl.acm.org/doi/10.1109/4235.585893 (accessed on 15 July 2024).

- Wang, M.M.; Song, X.G.; Liu, S.H.; Zhao, X.Q.; Zhou, N.R. A novel 2D Log-Logistic–Sine chaotic map for image encryption. Nonlinear Dyn. 2025, 113, 2867–2896.

- Mahdavi, S.; Rahnamayan, S.; Deb, K. Opposition based learning: A literature review. Swarm Evol. Comput. 2018, 39, 1–23.

- Jiao, J.; Li, J. Enhanced fireworks algorithm based on particle swarm optimization and reverse learning of small-hole imaging experiment. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022.

- Yu, F.; Guan, J.; Wu, H.; Chen, Y.; Xia, X. Lens imaging opposition-based learning for differential evolution with Cauchy perturbation. Appl. Soft Comput. 2024, 152, 111211.

- Phalke, S.; Vaidya, Y.; Metkar, S. Big-O Time Complexity Analysis Of Algorithm. In Proceedings of the International Conference on Signal and Information Processing (IConSIP), Pune, India, 26–27 August 2022.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H.L. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

- Xue, J.; Shen, B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J. Supercomput. 2022, 79, 7305–7336.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Röhmel, J. The permutation distribution of the Friedman test. Comput. Stat. Data Anal. 1997, 26, 83–99.

- Golinski, J. Optimal synthesis problems solved by means of nonlinear programming and random methods. J. Mech. 1970, 5, 287–309.

- Huang, Y.; Liu, Q.; Song, H.; Han, T.; Li, T. CMGWO: Grey wolf optimizer for fusion cell-like P systems. Heliyon 2024, 10, e34496.

- Han, T.; Li, T.; Liu, Q.; Huang, Y.; Song, H. A Multi-Strategy Improved Honey Badger Algorithm for Engineering Design Problems. Algorithms 2024, 17, 573.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).