1. Introduction

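For decades, optimization challenges have constituted a major research focus across diverse real-world domains. These problems center on maximizing or minimizing a target function subject to specific constraints. A single-objective optimization problem can be modeled as in Equation (1).

$$
\begin{aligned}
\min \quad & f(\mathbf{x}), \quad \mathbf{x} = (x_1, x_2, \ldots, x_D) \\
\text{s.t.} \quad & g_i(\mathbf{x}) \le 0, \quad i = 1, \ldots, m \\
& h_j(\mathbf{x}) = 0, \quad j = 1, \ldots, p \\
& lb \le \mathbf{x} \le ub
\end{aligned}
\tag{1}
$$

In this context, $f(\mathbf{x})$ represents the objective function, where $\mathbf{x}$ is a $D$-dimensional vector consisting of the variables $x_1$ through $x_D$. The functions $g_i(\mathbf{x})$ and $h_j(\mathbf{x})$ represent the inequality constraints and equality constraints, respectively. The variables $lb$ and $ub$ denote the lower and upper bounds of $\mathbf{x}$, respectively.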
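Because most metaheuristics are written for unconstrained problems, a constrained model of this kind is often folded into a single penalized objective. The sketch below illustrates one common way to do this, a static quadratic penalty. It assumes minimization, inequality constraints written as g(x) <= 0, and equality constraints written as h(x) = 0. The function name `penalized`, the penalty weight `rho`, and the tolerance `tol` are hypothetical choices for illustration, not values taken from this paper.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Func = Callable[[Vector], float]


def penalized(f: Func, ineq: Sequence[Func] = (), eq: Sequence[Func] = (),
              rho: float = 1e6, tol: float = 1e-4) -> Func:
    """Wrap a constrained minimization problem as an unconstrained one.

    rho and tol are illustrative defaults (assumptions), not tuned values.
    """
    def wrapped(x: Vector) -> float:
        # g(x) <= 0 is feasible, so only positive values count as violation.
        violation = sum(max(0.0, g(x)) ** 2 for g in ineq)
        # An equality constraint is treated as satisfied when |h(x)| <= tol.
        violation += sum(max(0.0, abs(h(x)) - tol) ** 2 for h in eq)
        return f(x) + rho * violation
    return wrapped


# Toy problem: minimize x0^2 + x1^2 subject to x0 + x1 >= 1.
def objective(x: Vector) -> float:
    return x[0] ** 2 + x[1] ** 2


def g1(x: Vector) -> float:
    return 1.0 - x[0] - x[1]  # rewritten in the form g1(x) <= 0


cost = penalized(objective, ineq=[g1])
feasible_cost = cost([0.5, 0.5])    # on the constraint boundary, no penalty
infeasible_cost = cost([0.0, 0.0])  # violates the constraint, heavily penalized
```

Any unconstrained optimizer can then minimize `cost` directly. Other constraint-handling schemes, such as feasibility rules or adaptive penalties, fit the same wrapper pattern.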

Optimization problems are ubiquitous across various fields, including industry, economics, and engineering design. However, with advancements in technology and human progress, existing methods are insufficient to address the increasingly complex optimization challenges. Therefore, identifying efficient and robust approaches for these complex optimization problems remains a significant challenge.

Optimization techniques are typically divided into two main types: deterministic methods and stochastic methods. Deterministic methods are typically based on mathematical models and seek optimal solutions to optimization problems through analytical approaches, offering strong theoretical foundations and precision. However, deterministic approaches often falter when applied to challenges characterized by complexity, multi-modality, and nonlinearity, particularly those that resist straightforward modeling. These methods are frequently plagued by significant issues, including high computational expenses and a tendency to become trapped in local optima. As a result, the efficacy of traditional optimization methods is increasingly constrained when confronted with the scale and complexity of modern problems. To overcome these challenges, stochastic optimization methods, particularly metaheuristic algorithms, have become a prominent focus of optimization research. Drawing inspiration from natural phenomena—such as biological, physical, and social behaviors—these approaches are characterized by robust global search capabilities and effective local optimization abilities. This synergy provides a significant advantage for addressing complex optimization challenges. While the vast majority of metaheuristic algorithms are designed for unconstrained optimization, they can also be applied to constrained problems by transforming them into an unconstrained format. Metaheuristic algorithms proposed over the past few decades are mainly classified into four categories: physics-based algorithms, evolution-based algorithms, human behavior-based algorithms, and swarm intelligence algorithms.

Physics-based algorithms: These algorithms are inspired by natural phenomena, laws, and mechanisms in physics, leveraging phenomena such as motion, gravity, repulsion, and energy conservation between objects to develop optimization algorithms. Examples include Simulated Annealing (SA) [1], Circle Search Algorithm (CSA) [2], Geyser inspired Algorithm (GEA) [3], Big Bang-Big Crunch Algorithm (BBBC) [4], Subtraction-Average-Based Optimizer (SABO) [5], Elastic Deformation Optimization Algorithm (EDOA) [6], Kepler Optimization Algorithm (KOA) [7], Rime Optimizer (RIME) [8], Central Force Optimization (CFO) [9], Quadratic Interpolation Optimization (QIO) [10], Water Cycle Algorithm (WCA) [11], Sine Cosine Algorithm (SCA) [12], Homonuclear Molecules Optimization (HMO) [13], Turbulent Flow of Water Optimization (TFWO) [14], Newton-Raphson-Based Optimizer (NRBO) [15], Gradient-Based Optimizer (GBO) [16], Intelligent Water Drops Algorithm (IWDA) [17], Equilibrium Optimizer (EO) [18], Fick’s Law Algorithm (FLA) [19], and The Great Wall Construction Algorithm (GWCA) [20].

Evolution-based algorithms: Inspired by Darwin’s theory of natural selection and genetic evolution, these algorithms model processes such as species evolution, survival of the fittest, genetic inheritance, and mutation, which collectively enhance the quality of a population over time. Examples include Forest Optimization Algorithm (FOA) [21], Tree-Seed Algorithm (TSA) [22], Biogeography-Based Optimization (BBO) [23], Artificial Infectious Disease (AID) [24], Genetic Algorithm (GA) [25], Genetic Programming (GP) [26], Fungal Growth Optimizer (FGO) [27], Evolutionary Programming (EP) [28], Differential Evolution (DE) [29], Black Widow Optimization (BWO) [30], Human Felicity Algorithm (HFA) [31], and Fungi Kingdom Expansion (FKE) [32].

Human behavior-based algorithms: Inspired by human society, decision-making processes, learning, and psychological behavior patterns, these algorithms aim to find global optima by simulating human intelligence in decision-making, learning, collaboration, competition, and environmental adaptation. Typical human behavior-based algorithms include City Councils Evolution (CCE) [33], War Strategy Optimization (WSO) [34], Hiking Optimization Algorithm (HOA) [35], Teaching–Learning-Based Optimization (TLBO) [36], Political Optimizer (PO) [37], Volleyball Premier League (VPL) [38], Social Evolution and Learning Optimization (SELO) [39], Dream Optimization Algorithm (DOA) [40], Tabu Search (TS) [41], Football Team Training Algorithm (FTTA) [42], Soccer League Competition (SLC) [43], Brain Storm Optimization (BSO) [44], Kids Learning Optimizer (KLO) [45], Hunter Prey Optimization (HPO) [46], Exchange Market Algorithm (EMA) [47], Hunger Games Search (HGS) [48], Social Learning Optimization (SLO) [49], and Mountaineering Team-Based Optimization (MTBO) [50].

Swarm intelligence algorithms: These algorithms are inspired by the cooperative behavior of biological groups in nature. They achieve optimization tasks through simple interactions and collaboration between individuals. Notable swarm intelligence algorithms include Particle Swarm Optimization (PSO) [51], Zebra Optimization Algorithm (ZOA) [52], Moth-Flame Optimization (MFO) [53], Harris Hawks Optimization (HHO) [54], Artificial Rabbits Optimization (ARO) [55], Golden Jackal Optimization (GJO) [56], Ant Colony Algorithm (ACA) [57], Cuckoo Search (CS) [58], Snake Optimizer (SO) [59], Marine Predators Algorithm (MPA) [60], Secretary Bird Optimization Algorithm (SBOA) [61], Artificial Gorilla Troops Optimizer (GTO) [62], Bat-inspired Algorithm (BA) [63], Whale Optimization Algorithm (WOA) [64], Grey Wolf Optimizer (GWO) [65], Sand Cat Swarm Optimization (SCSO) [66], Nutcracker Optimizer (NOA) [67], Aquila Optimizer (AO) [68], Black-winged Kite Algorithm (BKA) [69], Crested Porcupine Optimizer (CPO) [70], Hippopotamus Optimization Algorithm (HO) [71], African Vultures Optimization Algorithm (AVOA) [72], Sparrow Search Algorithm (SSA) [73], Dung Beetle Optimizer (DBO) [74], and Honey Badger Algorithm (HBA) [75].

Building on this groundwork, many scholars have focused on improving the efficacy of existing algorithms. Among these, PSO [51] has gained significant attention as one of the most widely studied swarm intelligence optimization algorithms in recent years, owing to its efficiency. Although traditional PSO exhibits robust global optimization capabilities, it is prone to premature convergence. To address this issue, many researchers have proposed modifications to PSO, resulting in a range of improved variants. For example, Zhi et al. [76] proposed the Adaptive Multi-Population Particle Swarm Optimization (AMP-PSO) algorithm for the cooperative path planning of several Autonomous Underwater Vehicles (AUVs). This algorithm incorporated a multi-population grouping strategy and an interaction mechanism among the particle swarms. By dividing particles based on their fitness, one leader swarm and several follower swarms were established, significantly improving the optimization performance. Huang et al. [77] developed the ACVDEPSO algorithm, in which particle velocity was represented as cylindrical vectors to facilitate path searching. This work also incorporated a challenger mechanism based on differential evolution operators to reduce the algorithm’s susceptibility to local optima, which in turn substantially accelerated convergence. Li et al. [78] proposed the Pyramid Particle Swarm Optimization (PPSO) algorithm, which employed a pyramid structure to allocate particles to different levels according to their fitness. Within each level, particles competed in pairs to determine winners and losers. Losers collaborated with their corresponding winners to optimize performance, while winners interacted with particles from higher levels, including the top level of the pyramid. This methodology bolstered the algorithm’s capacity for global exploration.

Similar to PSO, GWO [65] has also emerged as a widely applied swarm intelligence optimization algorithm in recent years. GWO derives its core concepts from the social hierarchy and hunting behavior of grey wolves, and it has been widely adopted for optimization problems across numerous engineering disciplines. For instance, Qiu et al. [79] introduced an Improved Grey Wolf Optimizer (IGWO) that integrates multiple strategies to enhance performance. The algorithm employs a Lens Imaging-based Opposition Learning method and a nonlinear convergence strategy that uses cosine variation to control parameters, thus balancing global exploration and local exploitation. Additionally, drawing inspiration from the Tunicate Swarm Algorithm (TSA) [80] and PSO, a nonlinear parameter adjustment strategy was incorporated into the position update equation. This was further combined with corrections based on both individual historical best positions and global best positions, which improved convergence efficiency. Zhang et al. [81] proposed an enhanced version of GWO, known as VGWO-AWLO, which incorporates velocity guidance, adaptive weights, and the Laplace operator. The algorithm first introduces a dynamic adaptive weighting mechanism based on uniform distribution, enabling the control parameter $a$ to vary nonlinearly and dynamically, which facilitates smooth transitions between the exploration and exploitation phases. Second, a novel velocity-based position update equation was developed, along with an individual memory function to enhance local search capabilities and accelerate convergence toward the optimal solution. Third, a Laplace crossover operator strategy was employed to further enhance population diversity, effectively preventing the grey wolf population from becoming trapped in local optima. MELGWO, an enhanced variant of GWO, was introduced by Ahmed et al. [82]. This method combines memory mechanisms, evolutionary operators, and stochastic local search techniques. Additionally, the algorithm incorporates Linear Population Size Reduction (LPSR) [83] to further boost performance. Beyond PSO and GWO, many researchers have also focused on improving other swarm intelligence optimization algorithms to enhance their efficiency and effectiveness, though these advancements are not discussed in detail here.
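To make the role of the control parameter concrete, the sketch below contrasts the linear schedule of the standard GWO, in which the parameter decreases from 2 to 0 over the run, with one possible cosine-shaped schedule. The cosine form is an illustrative assumption only; it is not the formula used in IGWO [79] or VGWO-AWLO [81], and the function names are hypothetical.

```python
import math


def a_linear(t: int, t_max: int) -> float:
    """Standard GWO schedule: decreases linearly from 2 to 0."""
    return 2.0 * (1.0 - t / t_max)


def a_cosine(t: int, t_max: int) -> float:
    """Illustrative nonlinear schedule (an assumption, not from [79] or [81]).

    It also runs from 2 to 0, but stays higher than the linear schedule in
    the first half of the run and lower in the second half.
    """
    return 1.0 + math.cos(math.pi * t / t_max)


t_max = 100
early = (a_linear(25, t_max), a_cosine(25, t_max))  # cosine value is larger
late = (a_linear(75, t_max), a_cosine(75, t_max))   # cosine value is smaller
```

A schedule of this shape spends more iterations with a large parameter value, which favors exploration early, and then contracts faster toward exploitation.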

Among the numerous metaheuristic algorithms, Differential Evolution (DE) [29] stands out. Proposed by Storn and Price in the mid-1990s, DE utilizes difference vectors between individuals for mutation, integrating this with crossover and selection to guide the population search. DE is renowned for its simple structure, strong robustness, and superior convergence performance. Its powerful performance has laid a foundation for the subsequent development of metaheuristic algorithms.
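The mutation, crossover, and selection steps described above can be sketched as one generation of the classic DE/rand/1/bin scheme. This is a minimal illustration that assumes minimization and a population of at least four individuals. The function name `de_rand_1_bin` and the defaults `F = 0.5` and `CR = 0.9` are hypothetical choices, and bound handling is omitted for brevity.

```python
import random
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def de_rand_1_bin(pop: List[Vector], fit: List[float],
                  f_obj: Callable[[Vector], float],
                  F: float = 0.5, CR: float = 0.9,
                  rng: Optional[random.Random] = None
                  ) -> Tuple[List[Vector], List[float]]:
    """Run one DE/rand/1/bin generation and return the new population."""
    rng = rng or random.Random()
    n, dim = len(pop), len(pop[0])
    new_pop, new_fit = [], []
    for i in range(n):
        # Mutation: base vector plus a scaled difference of two other vectors.
        r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
        mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d]) for d in range(dim)]
        # Binomial crossover: j_rand guarantees at least one mutant component.
        j_rand = rng.randrange(dim)
        trial = [mutant[d] if (rng.random() < CR or d == j_rand) else pop[i][d]
                 for d in range(dim)]
        # Greedy selection: keep the trial only if it is not worse.
        trial_fit = f_obj(trial)
        if trial_fit <= fit[i]:
            new_pop.append(trial)
            new_fit.append(trial_fit)
        else:
            new_pop.append(pop[i])
            new_fit.append(fit[i])
    return new_pop, new_fit


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


rng = random.Random(0)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(3)] for _ in range(10)]
fit = [sphere(x) for x in pop]
initial_best = min(fit)
for _ in range(50):
    pop, fit = de_rand_1_bin(pop, fit, sphere, rng=rng)
```

Because selection is greedy, the fitness of each individual never gets worse from one generation to the next, which is part of what makes DE simple and robust.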

In the decades that followed, numerous DE variants continued to demonstrate superior performance. In 2009, SaDE (Self-Adaptive Differential Evolution) [84], an improved variant of DE, won the CEC competition. The key feature of SaDE is its ability to self-adapt its control parameters, primarily the scaling factor $F$ and crossover probability $CR$. The scaling factor $F$ governs the magnitude of the mutation, while the crossover probability $CR$ controls the degree of recombination. This adaptive mechanism allows SaDE to tailor its behavior to different problems without manual parameter tuning, thereby reducing the cost of human intervention.

In 2014, LSHADE [85], another DE variant, won the CEC competition. The LSHADE algorithm marked a significant milestone in the evolution of DE. LSHADE introduced a linear population size reduction mechanism, which further enhanced convergence performance and solidified the dominance of DE-based algorithms in subsequent competitions.
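As a rough illustration of linear population size reduction, the sketch below computes the target population size as a linear function of the number of function evaluations used so far, then drops the worst individuals to reach it. The function names, the minimum size of 4, and the toy data are assumptions for illustration; the exact settings in [85] may differ.

```python
from typing import List, Tuple


def lpsr_size(nfe: int, max_nfe: int, n_init: int, n_min: int = 4) -> int:
    """Target population size after nfe of max_nfe function evaluations.

    Interpolates linearly from n_init (at nfe = 0) to n_min (at nfe = max_nfe).
    """
    return round(n_init + (n_min - n_init) * nfe / max_nfe)


def shrink(pop: List[List[float]], fit: List[float],
           target: int) -> Tuple[List[List[float]], List[float]]:
    """Keep the `target` best individuals (minimization assumed)."""
    order = sorted(range(len(pop)), key=lambda i: fit[i])[:target]
    return [pop[i] for i in order], [fit[i] for i in order]


# Toy usage: 100 individuals at the start, 52 at the halfway point.
size_start = lpsr_size(0, 1000, 100)
size_half = lpsr_size(500, 1000, 100)
size_end = lpsr_size(1000, 1000, 100)
small_pop, small_fit = shrink([[0.0], [1.0], [2.0]], [5.0, 1.0, 3.0], 2)
```

A large early population supports exploration, while the shrinking population concentrates the remaining evaluation budget on the most promising individuals.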

In 2017, LSHADE-cnEpSin [86] emerged as one of the winners of the CEC-2017 competition. As an enhancement of LSHADE, this algorithm employs a parameter adaptation strategy adjusted by a sinusoidal function, enabling it to exhibit different behaviors at various search stages. This achieves a better balance between global exploration and local exploitation. More high-performing variants were subsequently proposed within the LSHADE framework. For example, LSHADE_SPACMA [87] is another notable advanced variant, frequently featured as a state-of-the-art representative in recent benchmark comparisons. First, the algorithm employs a dedicated elimination and generation mechanism to strengthen local search. Second, it uses a mutation operator built upon an enhanced semi-parametric adaptation method and rank-based selection pressure to steer the evolutionary path. Third, LSHADE_SPACMA features an elite-based external archive, which both preserves the diversity of external solutions and promotes faster convergence.

The strong momentum of the DE algorithm family did not wane. IUDE [88], jDE100 [89], and IMODE [90] subsequently won the CEC competitions in 2018, 2019, and 2020, respectively. The continuous evolution of these CEC-winning algorithms clearly demonstrates the robustness and longevity of the DE framework, as well as its exceptional potential for solving complex real-parameter optimization problems.

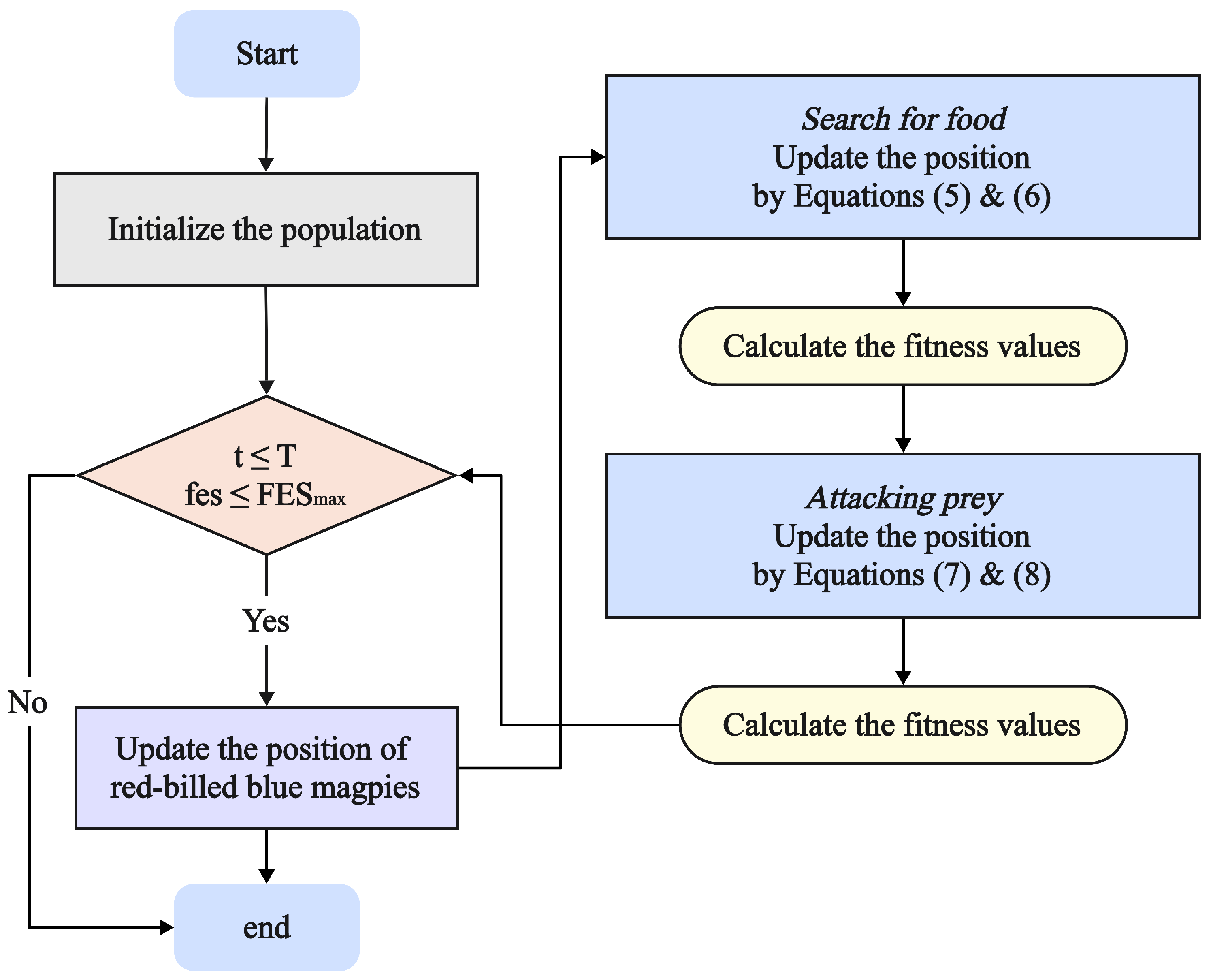

The Red-billed Blue Magpie Optimizer (RBMO) is a novel swarm intelligence optimization algorithm introduced by Xun et al. in 2024 [91]. The algorithm was successfully applied to the 3D path planning problem for UAVs, yielding impressive results. To achieve its optimization goals, RBMO models the primary foraging strategies of the red-billed blue magpie, namely its techniques for finding food, attacking prey, and caching supplies. However, the algorithm’s effectiveness is limited by an insufficient global search capability, which makes it vulnerable to premature convergence to local optima. To address these shortcomings, Zhang et al. [92] introduced an improved version of the algorithm, known as ES-RBMO, which incorporates an elite strategy. This enhanced version aims to mitigate the issue of local optima by preserving the best-performing (elite) individuals. However, although Zhang et al. emphasized that ES-RBMO effectively reduces the likelihood of becoming trapped in local optima, it does not resolve the inherent limitations in RBMO’s search expressions, which can still lead to difficulties in escaping local optima.

Despite the continuous emergence of new metaheuristic algorithms and improved versions of existing algorithms in recent years, according to the “No Free Lunch” theorem [

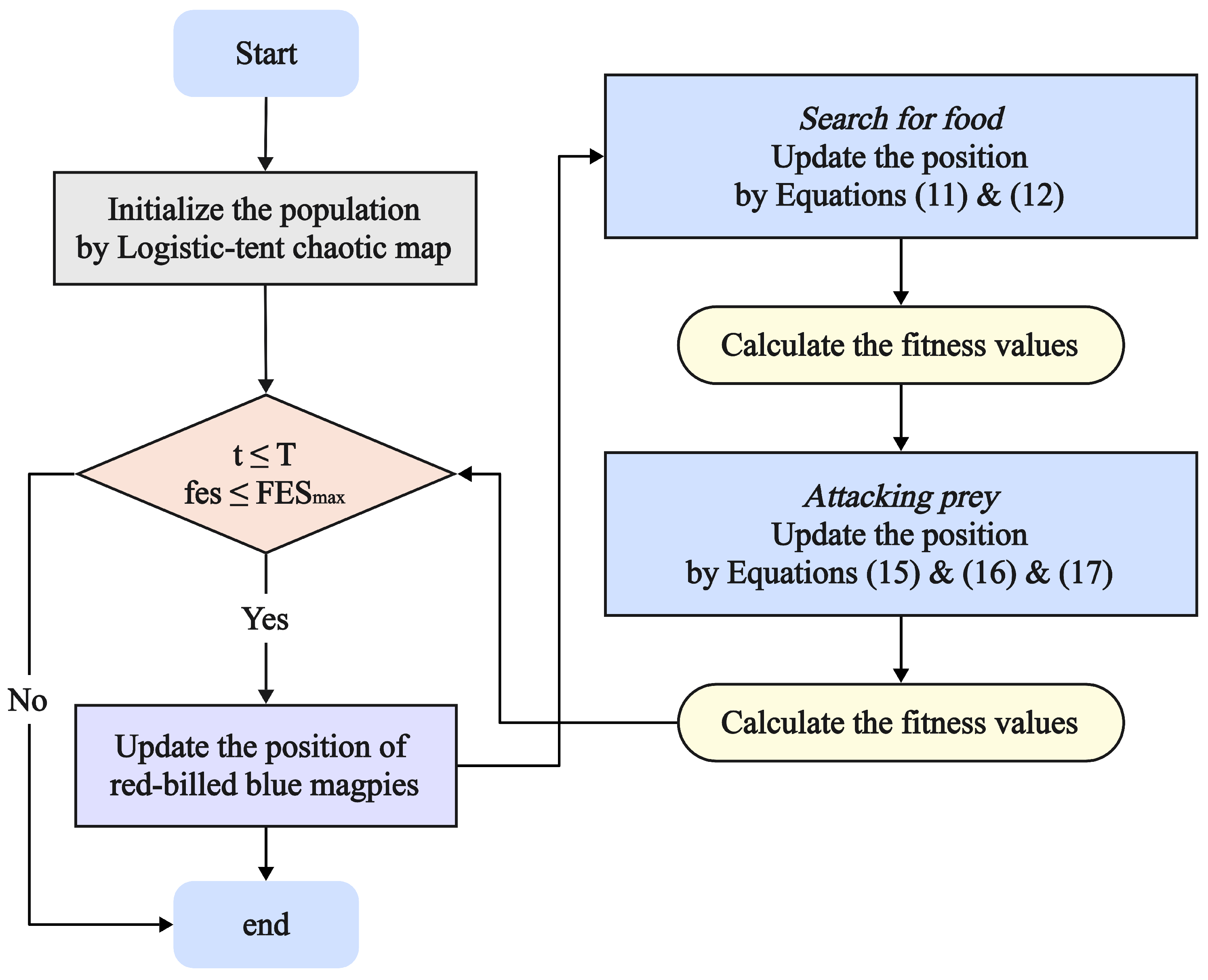

93], no single algorithm is universally applicable to all problems. Therefore, it remains necessary to continue searching for a metaheuristic algorithm capable of solving the majority of optimization problems. To address the limitations of the classical RBMO algorithm, expand its application scope, and identify methods capable of solving a broader range of optimization problems, this paper proposes an improved version of the RBMO algorithm, termed IRBMO. First, IRBMO introduces a balance factor that dynamically adjusts the weight between the mean and the global optimum, enabling particles to effectively obtain optimal state information from the population, thereby accelerating convergence and improving solution adaptability. Second, it incorporates multiple strategies to enhance population diversity. Third, Jacobi curves [

94] and Lévy Flight [

95] are integrated into the search phase to mitigate RBMO’s inability to escape local optima, significantly improving its ability to avoid premature convergence.
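Lévy flight steps are commonly generated with Mantegna's algorithm, which combines two Gaussian samples to approximate a heavy-tailed step distribution. The sketch below shows that generator. Whether IRBMO uses exactly this generator, and the stability index `beta = 1.5`, are assumptions here, and the function names are hypothetical.

```python
import math
import random
from typing import Optional


def levy_sigma(beta: float = 1.5) -> float:
    """Scale of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    den = math.gamma((1.0 + beta) / 2.0) * beta * 2.0 ** ((beta - 1.0) / 2.0)
    return (num / den) ** (1.0 / beta)


def levy_step(beta: float = 1.5, rng: Optional[random.Random] = None) -> float:
    """Draw one Levy-distributed step: mostly small, occasionally very large."""
    rng = rng or random.Random()
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero (practically never hit)
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1.0 / beta)


rng = random.Random(42)
steps = [levy_step(rng=rng) for _ in range(1000)]
```

Most draws are short, which supports local refinement, while the rare long jumps give a stagnating individual a chance to leave the basin of a local optimum.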

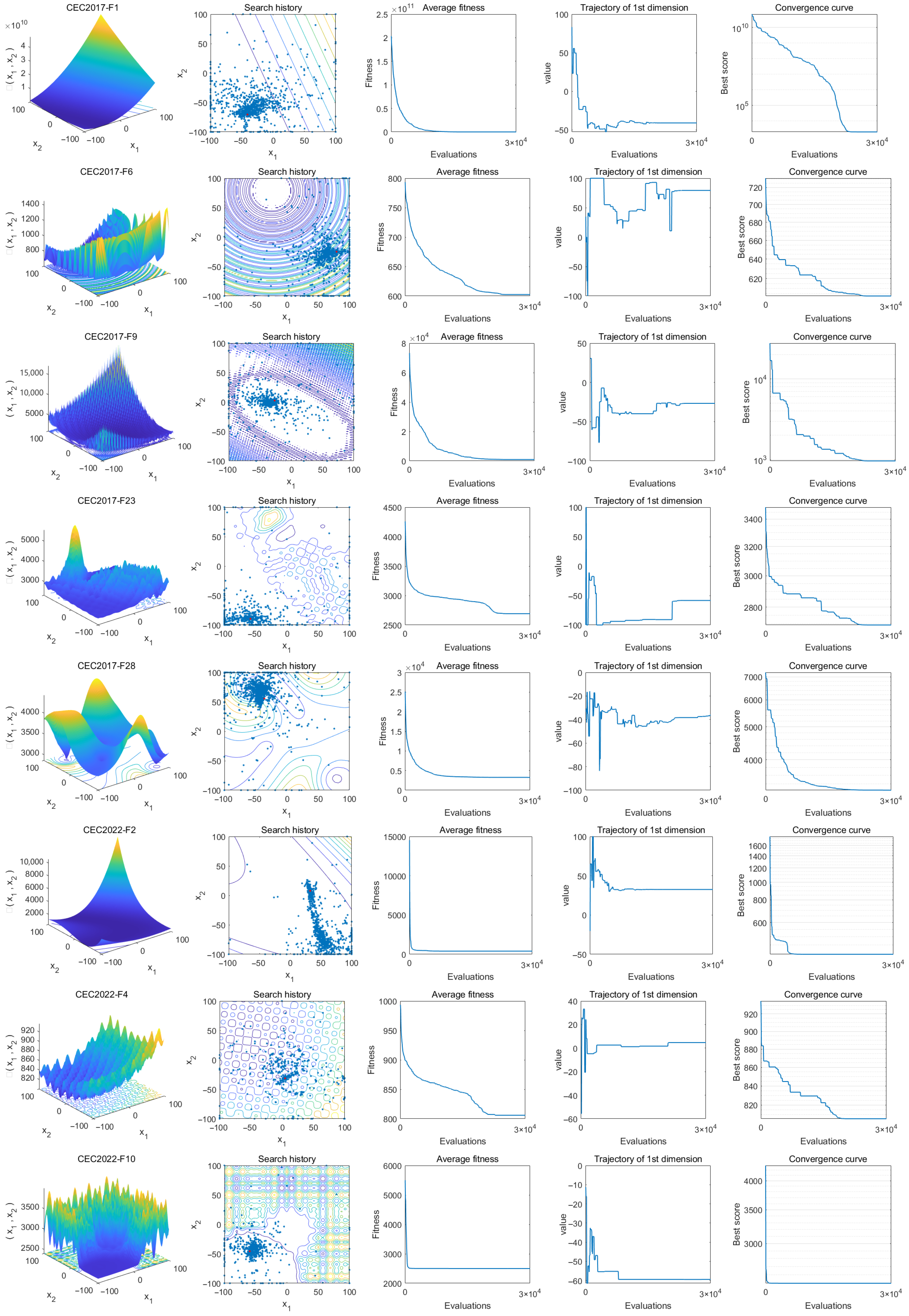

Experimental results on the CEC benchmark set, real-world engineering constrained optimization problems, and 3D UAV path planning in mountainous terrain demonstrate that IRBMO significantly improves upon the classical RBMO, achieving satisfactory results. In this paper, we highlight the following key contributions:

Improving Escape from Local Optima: To address the drawbacks of the classical RBMO algorithm, such as premature convergence, excessive reliance on the mean, and the inability to escape local optima, IRBMO is proposed.

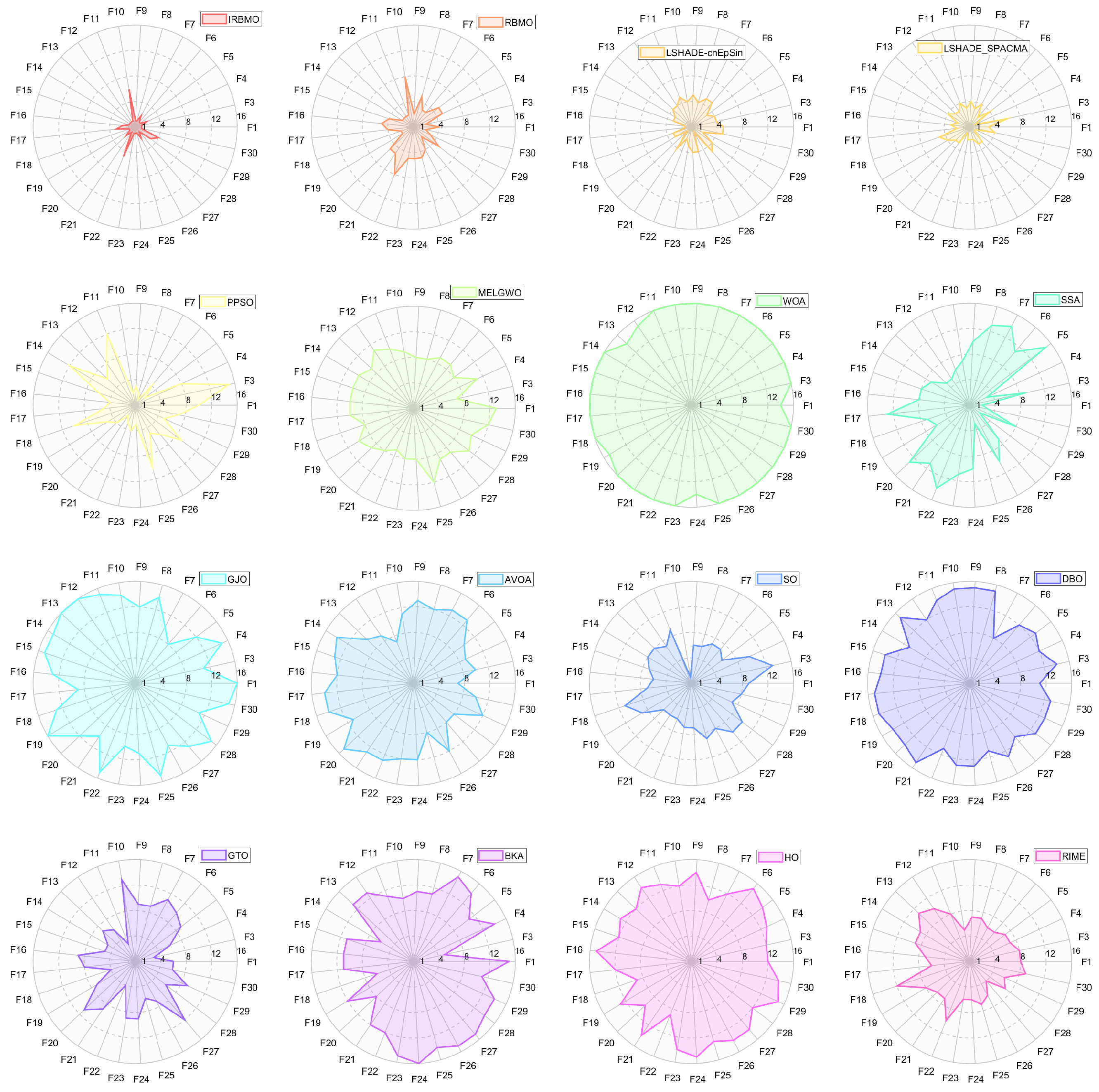

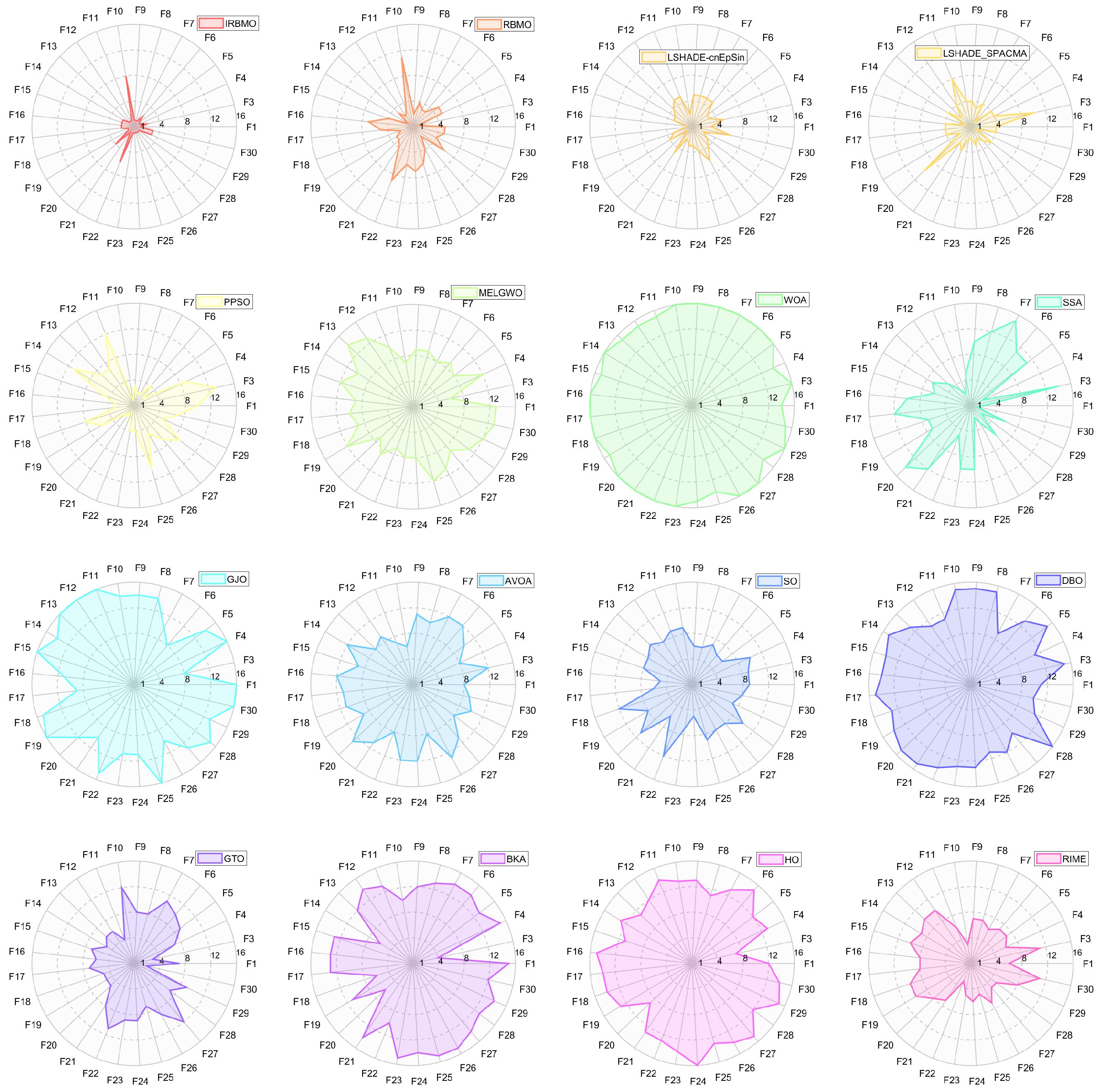

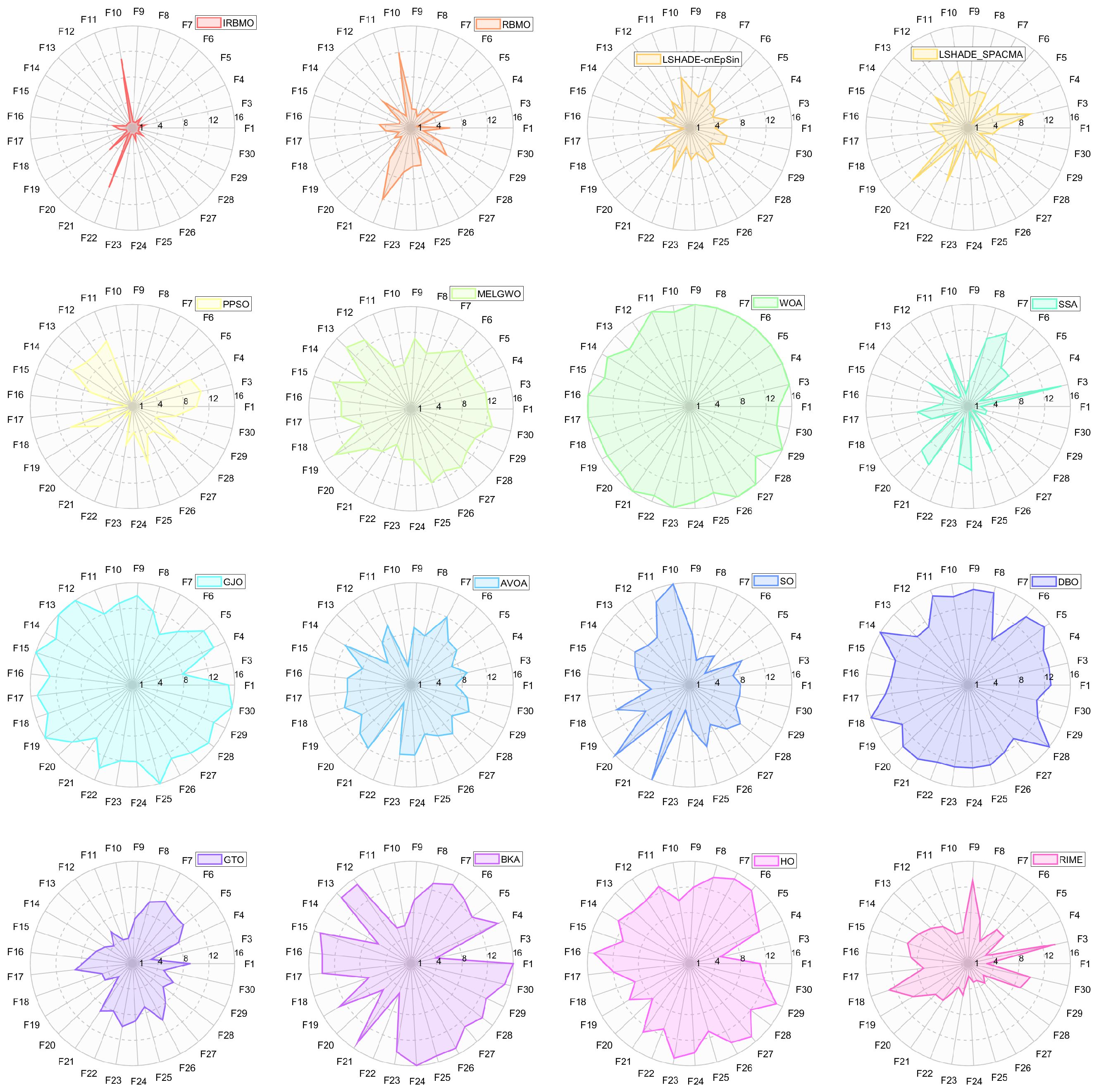

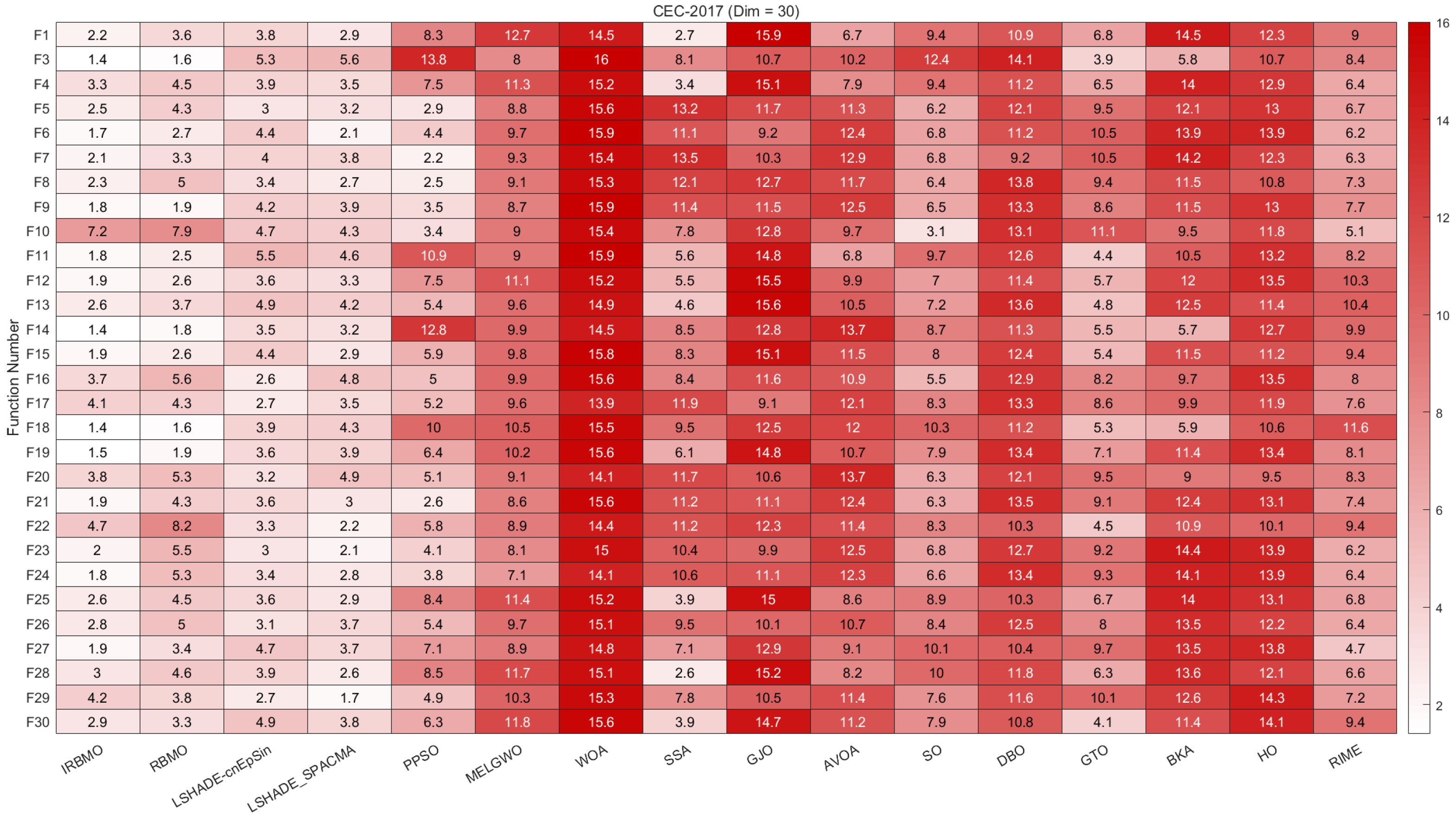

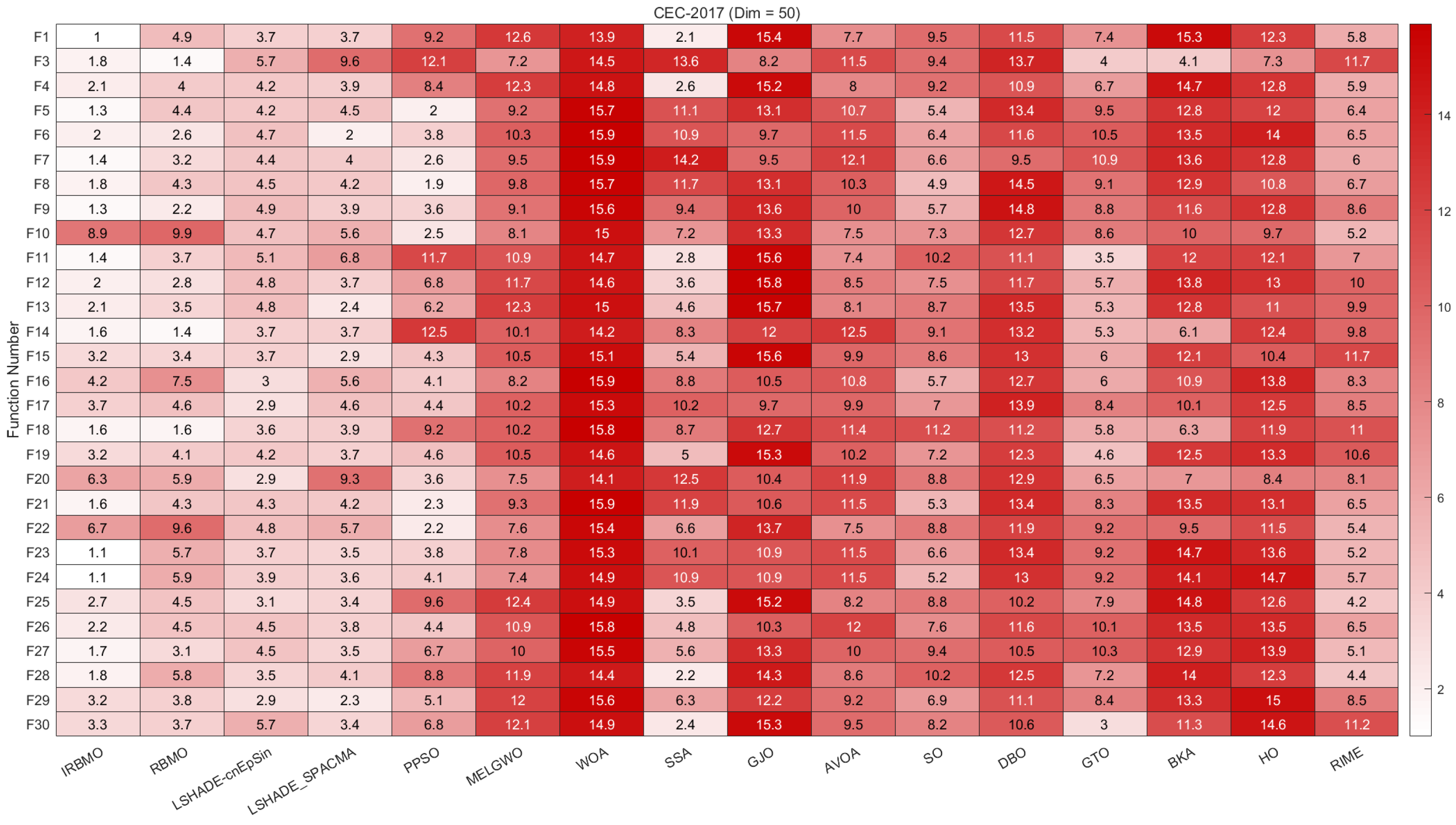

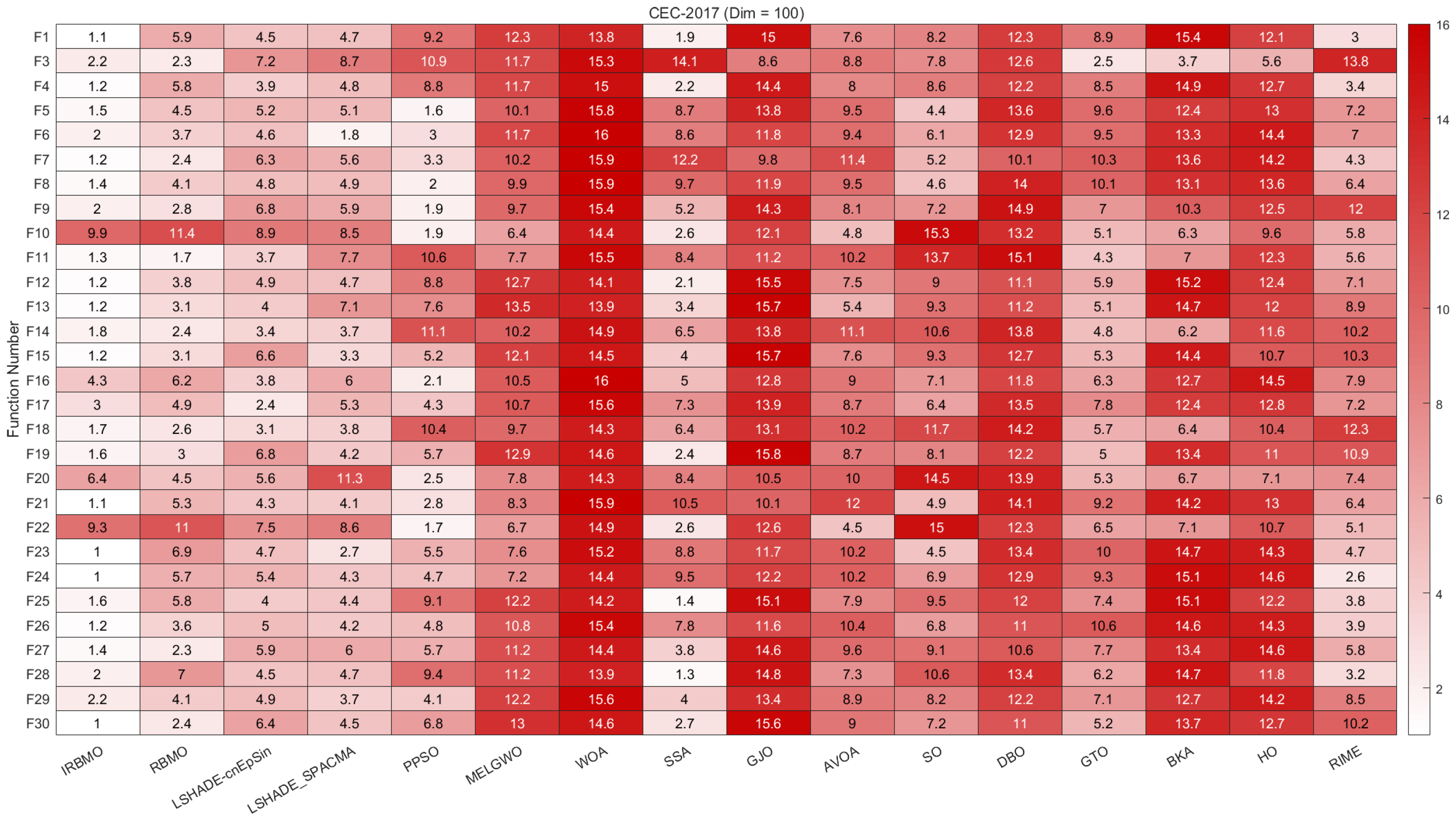

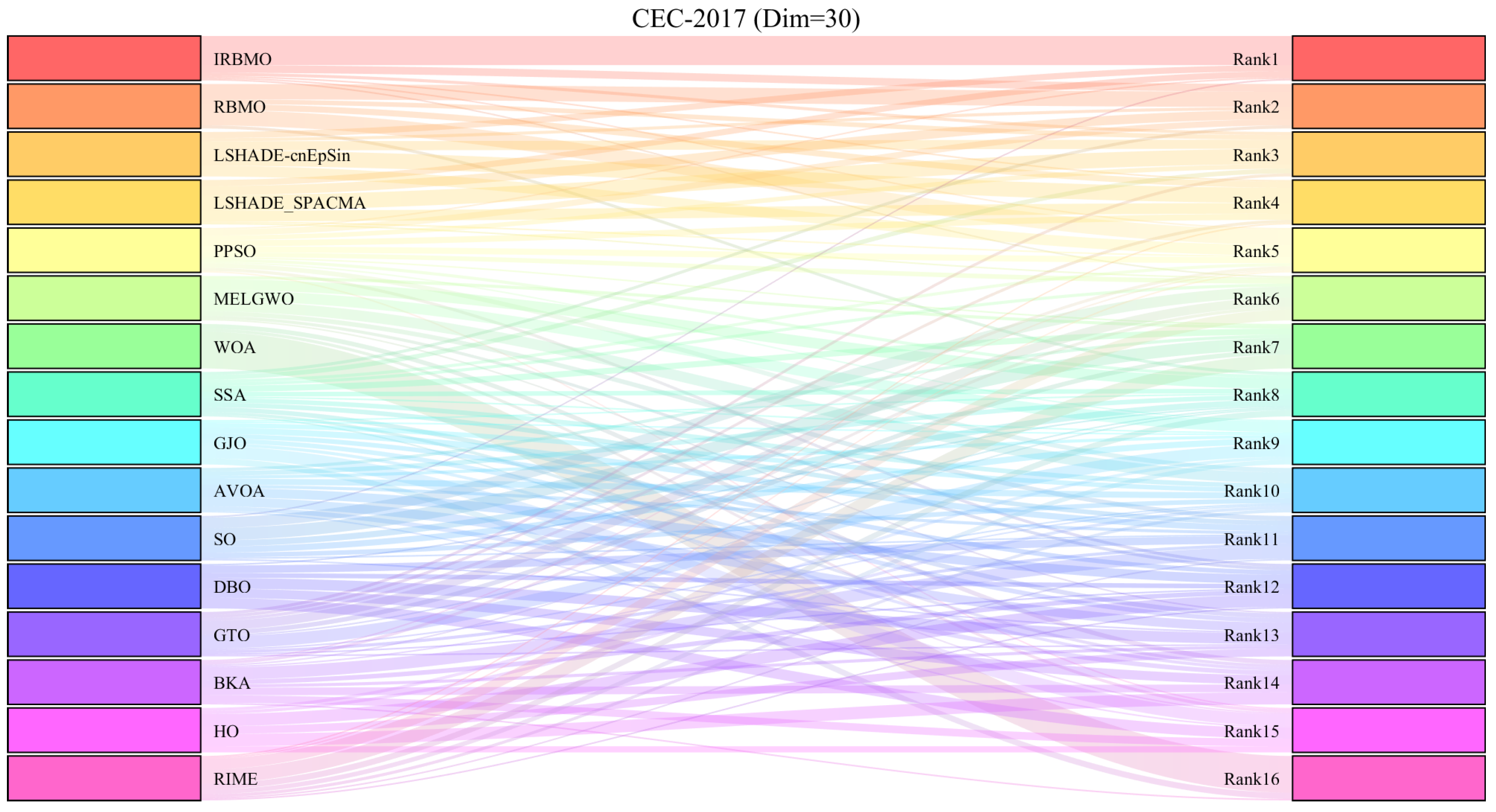

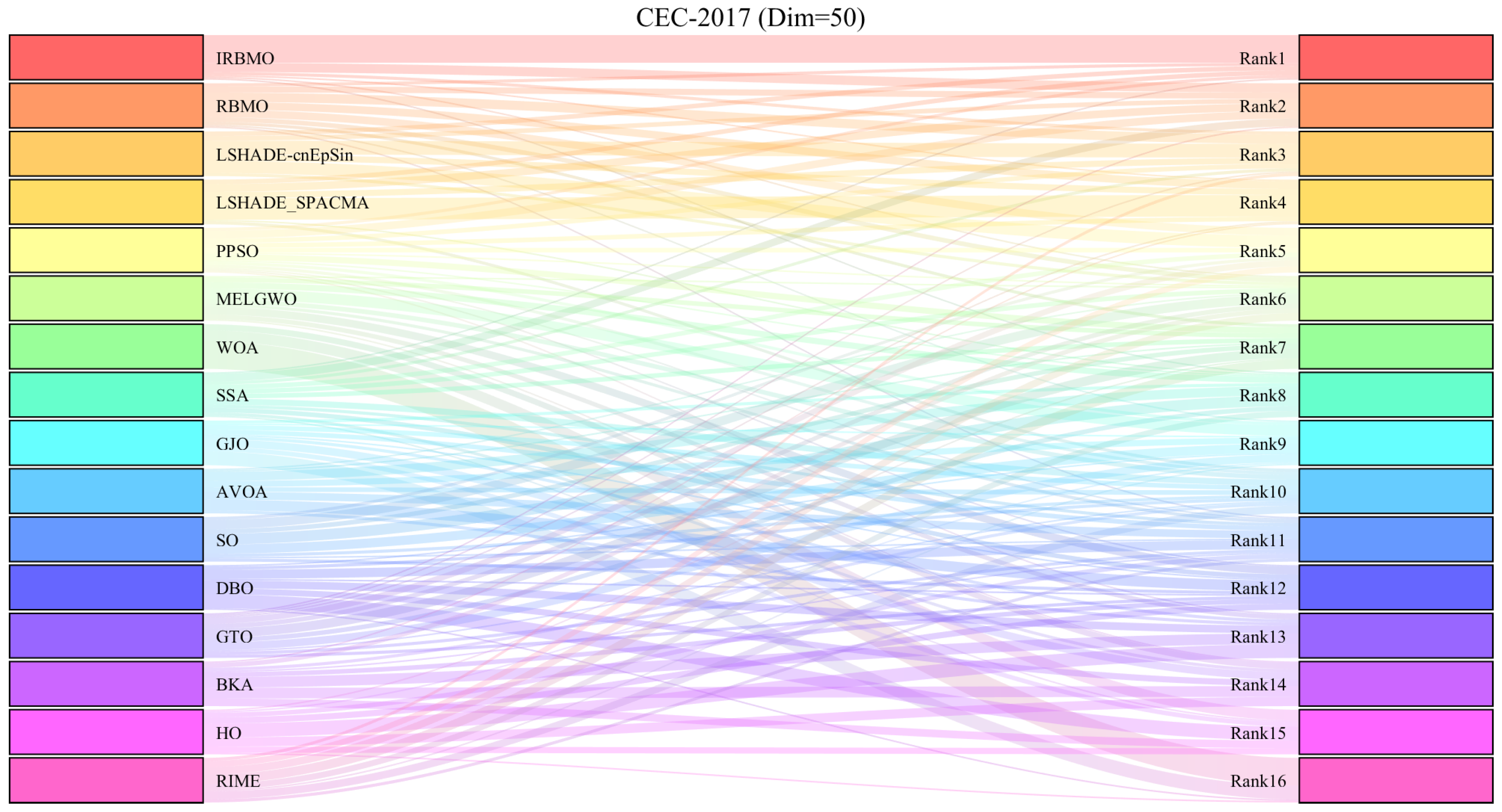

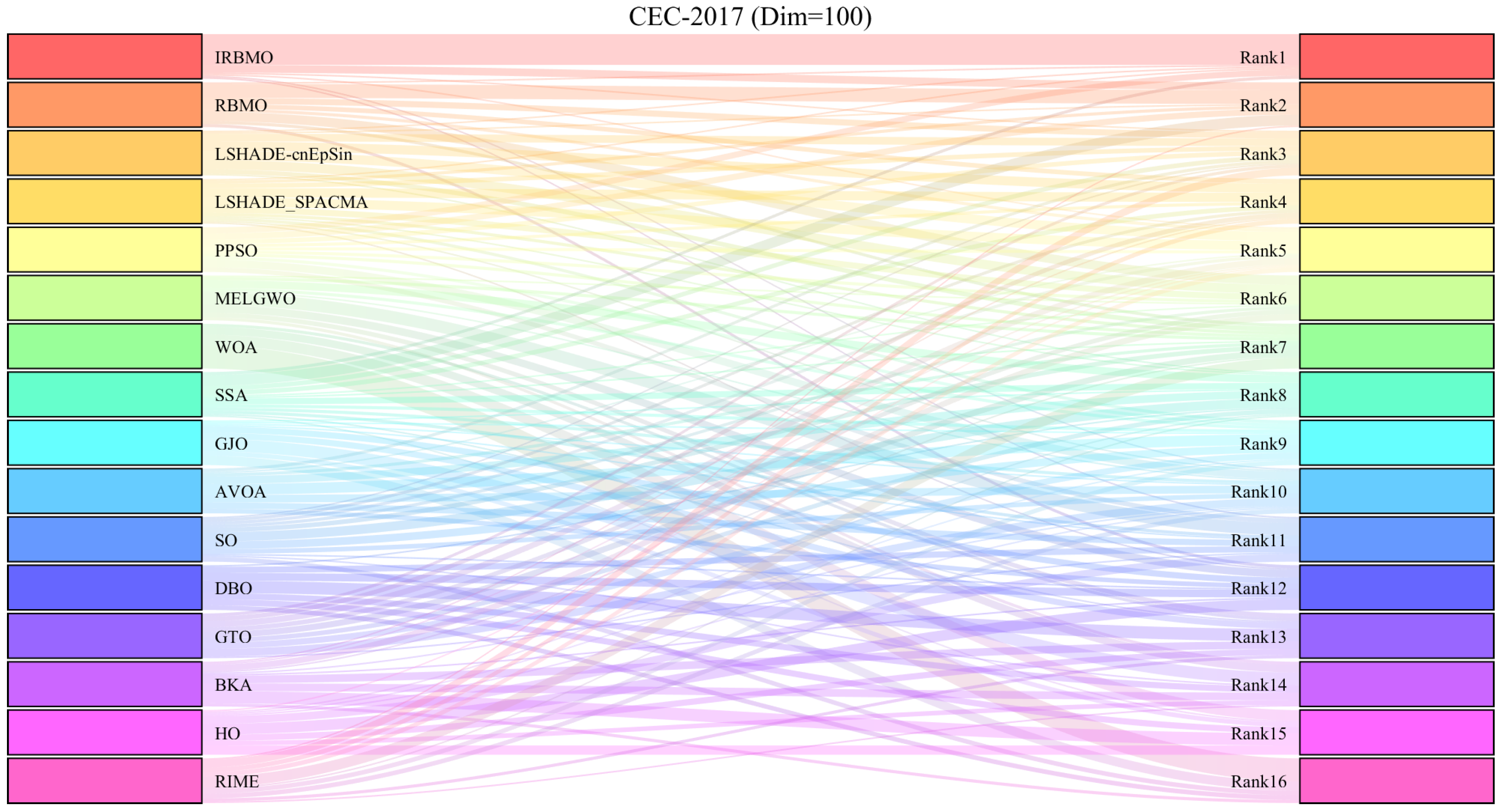

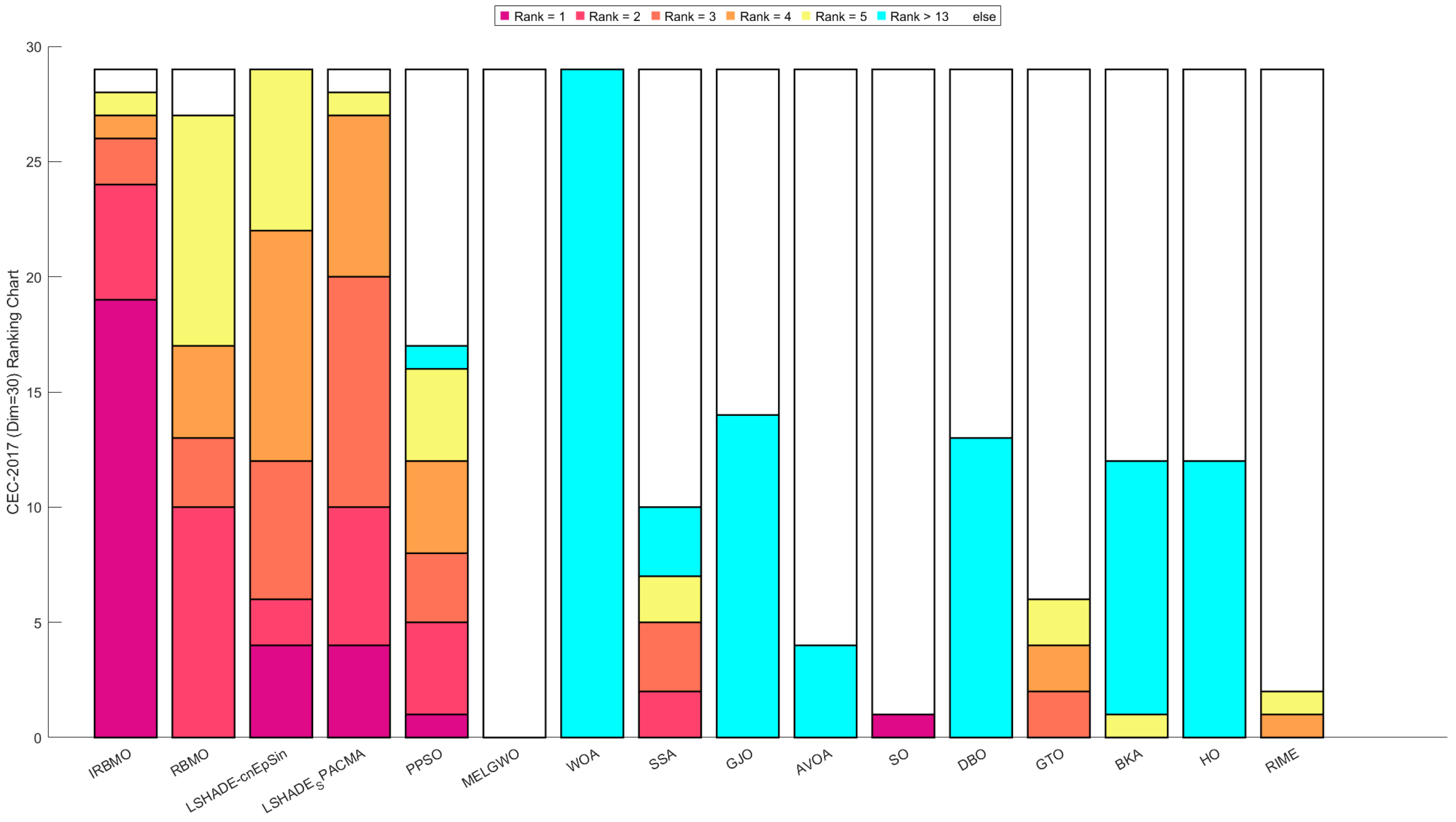

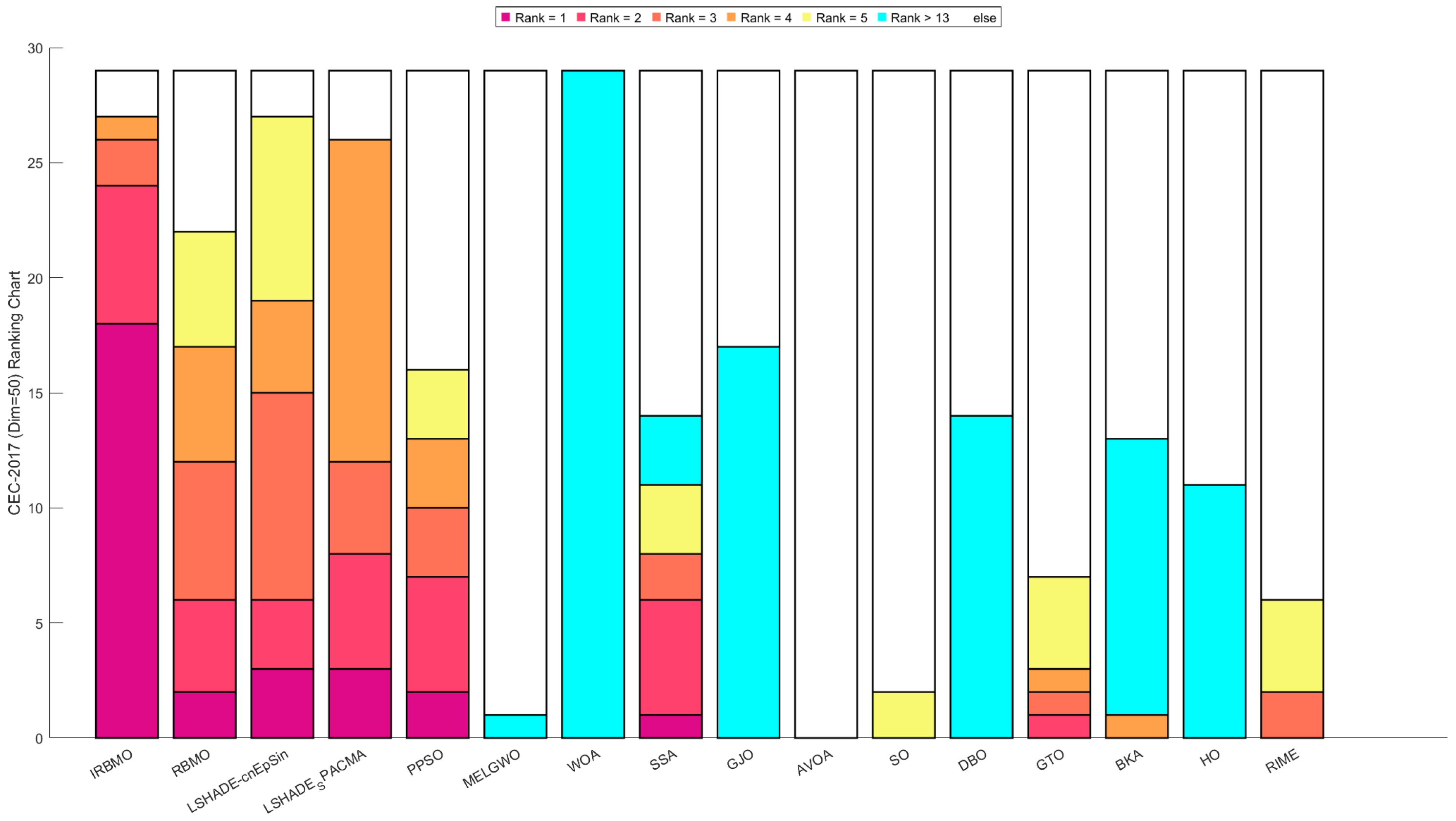

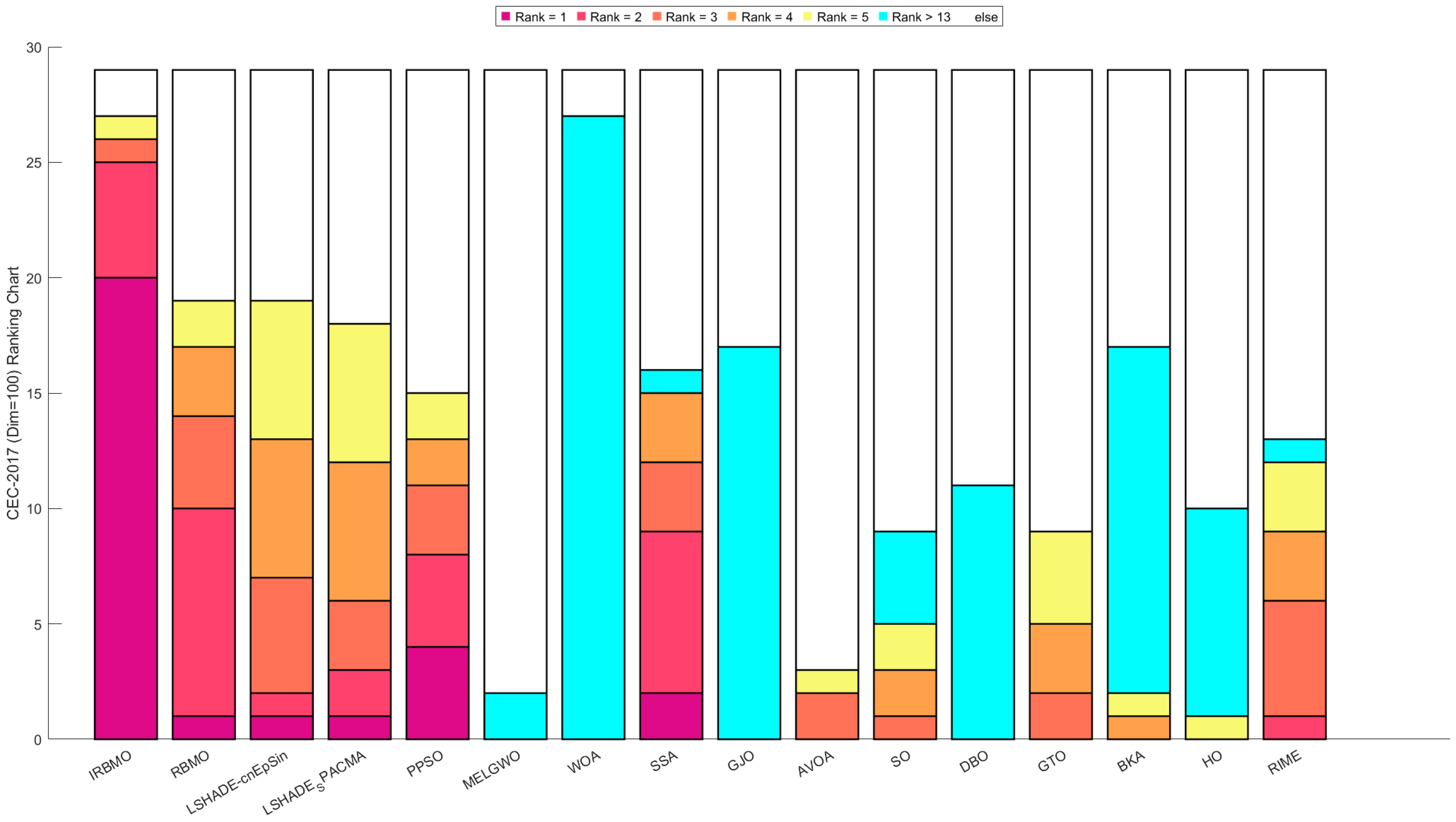

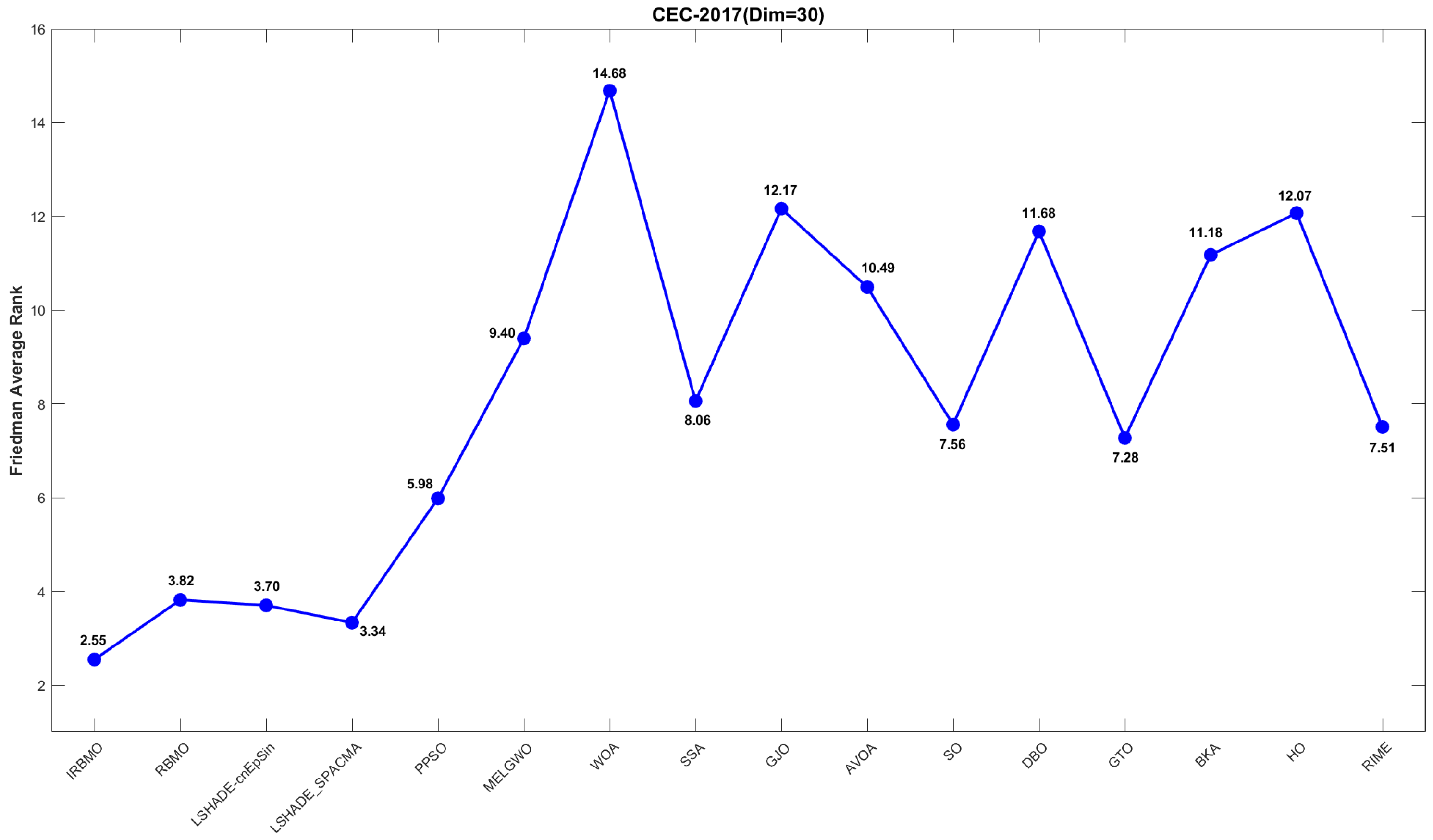

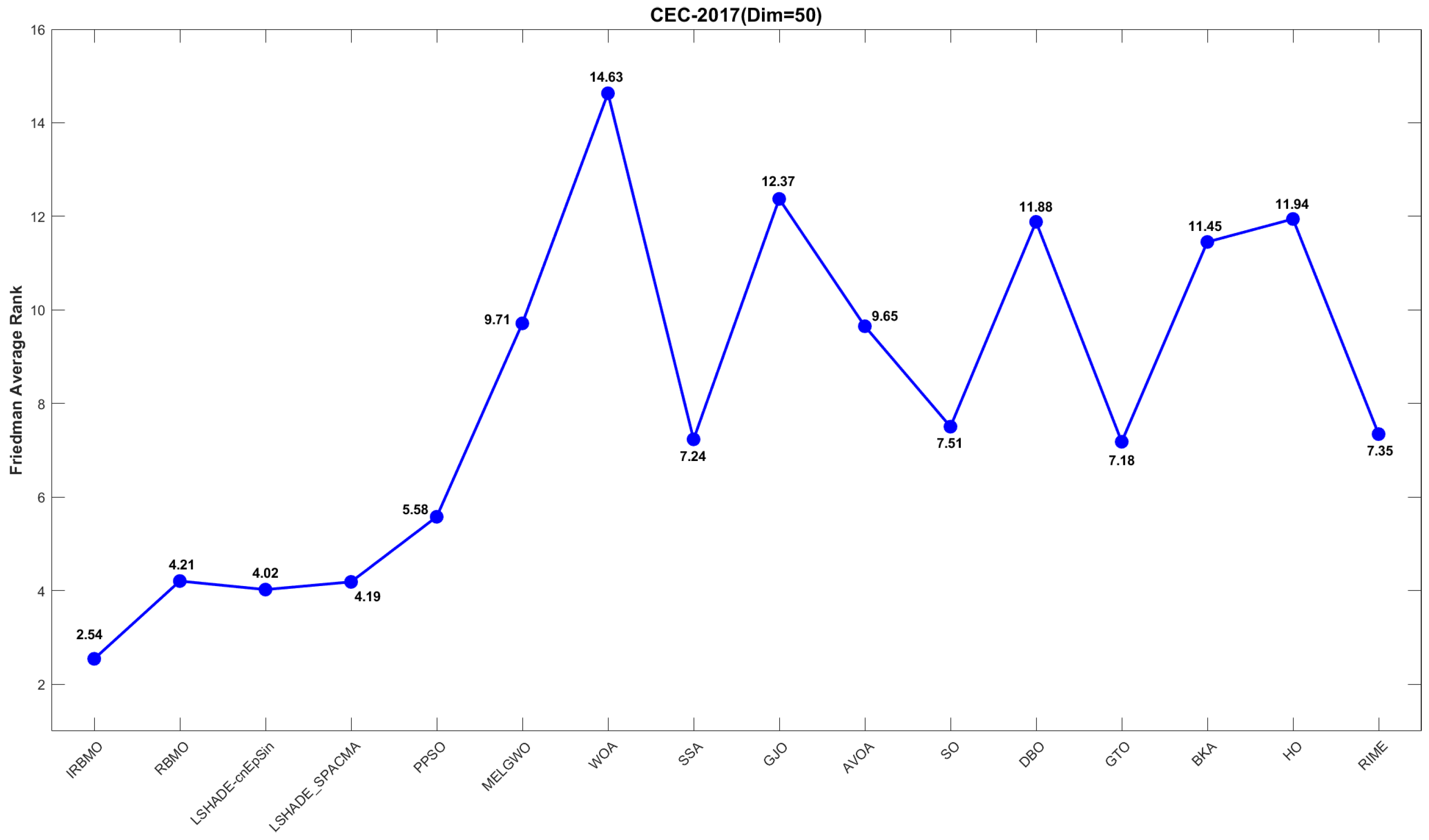

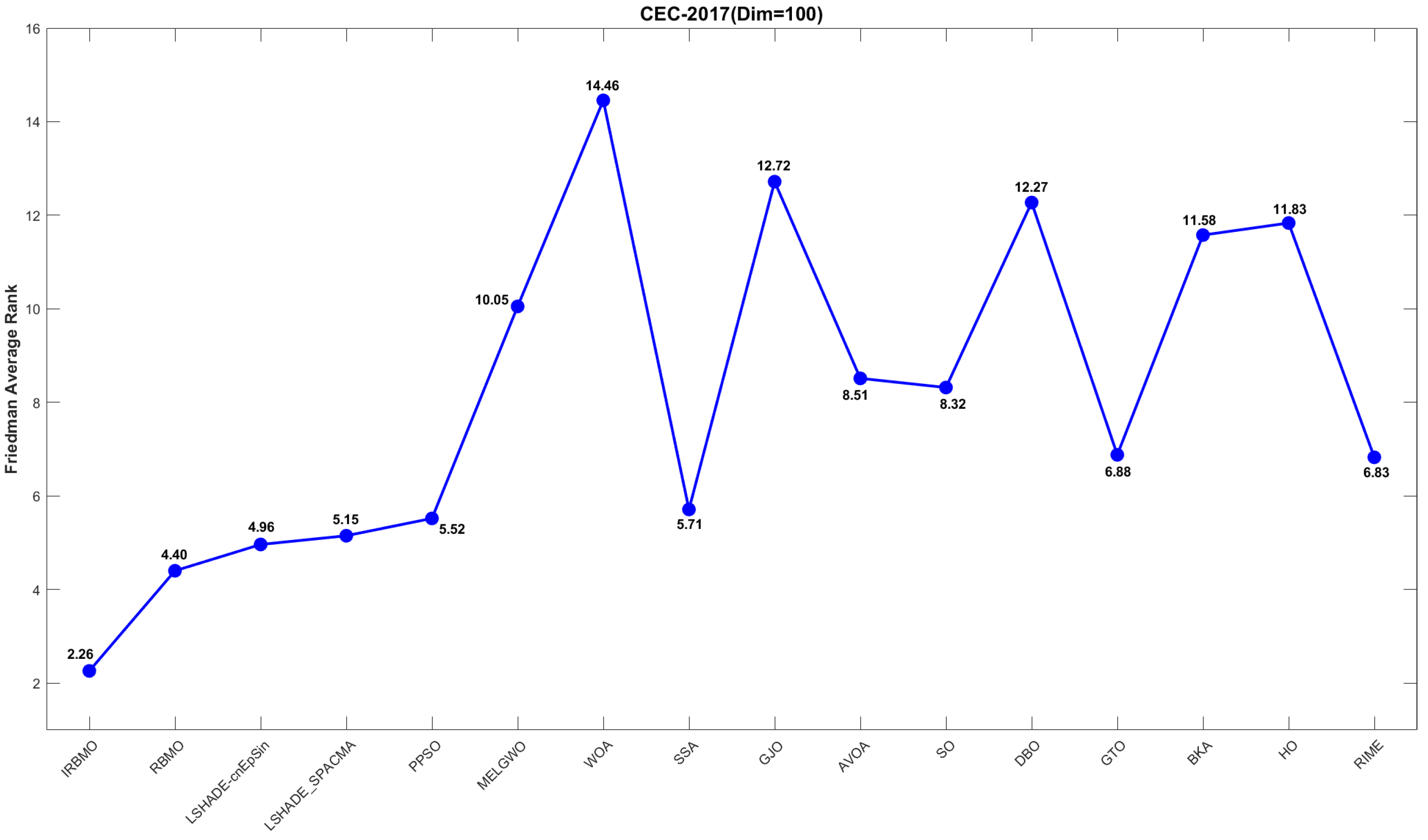

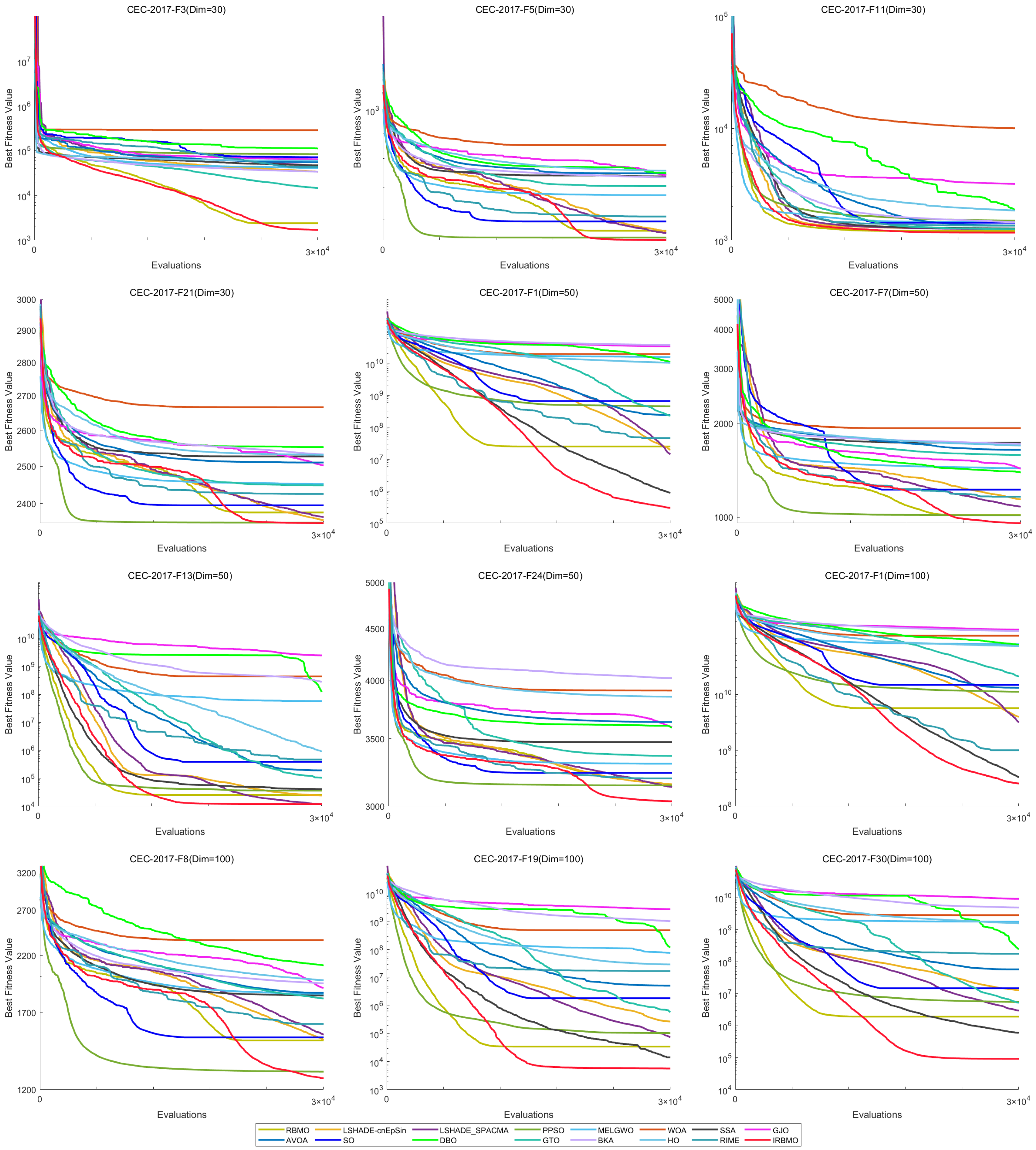

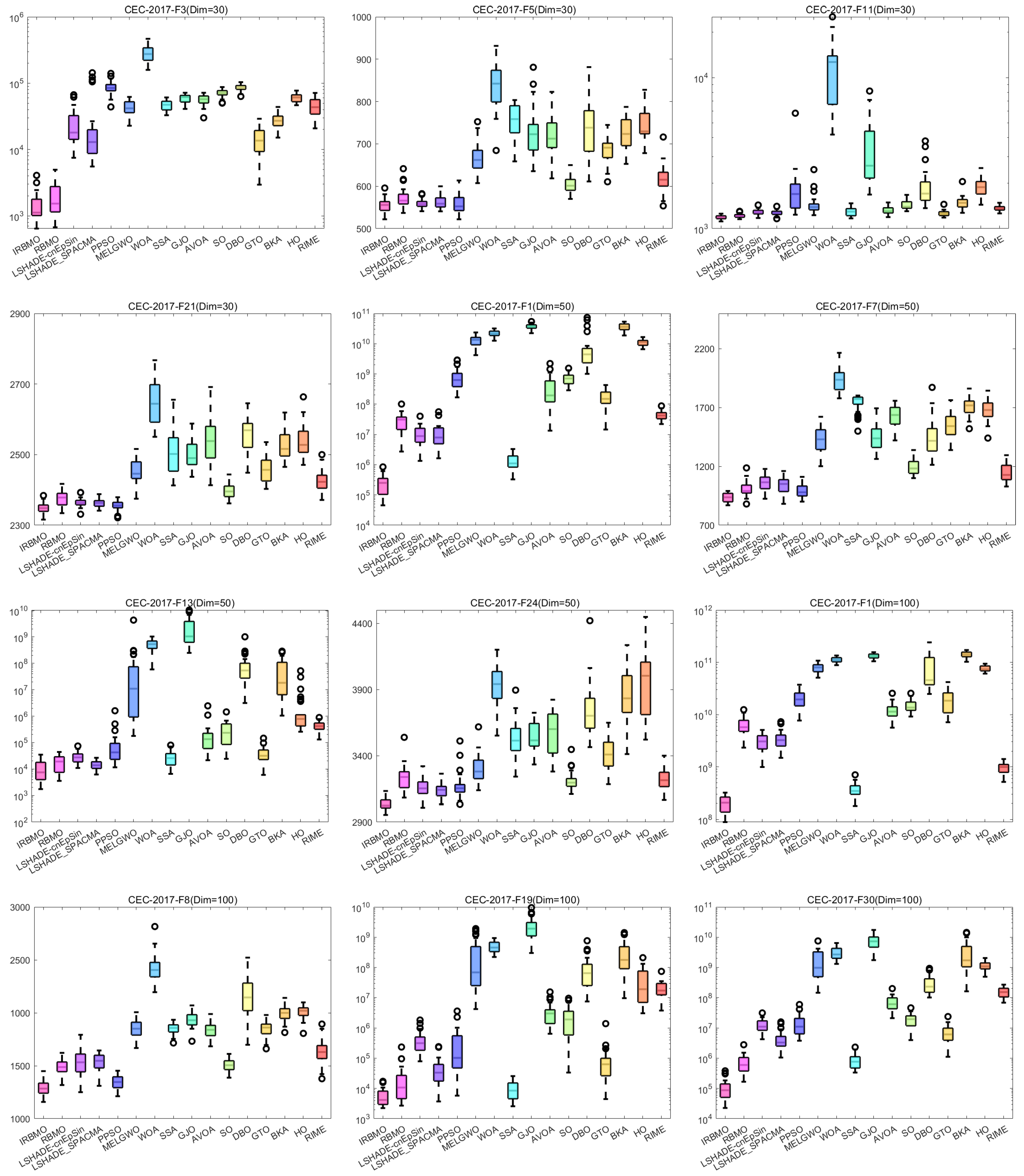

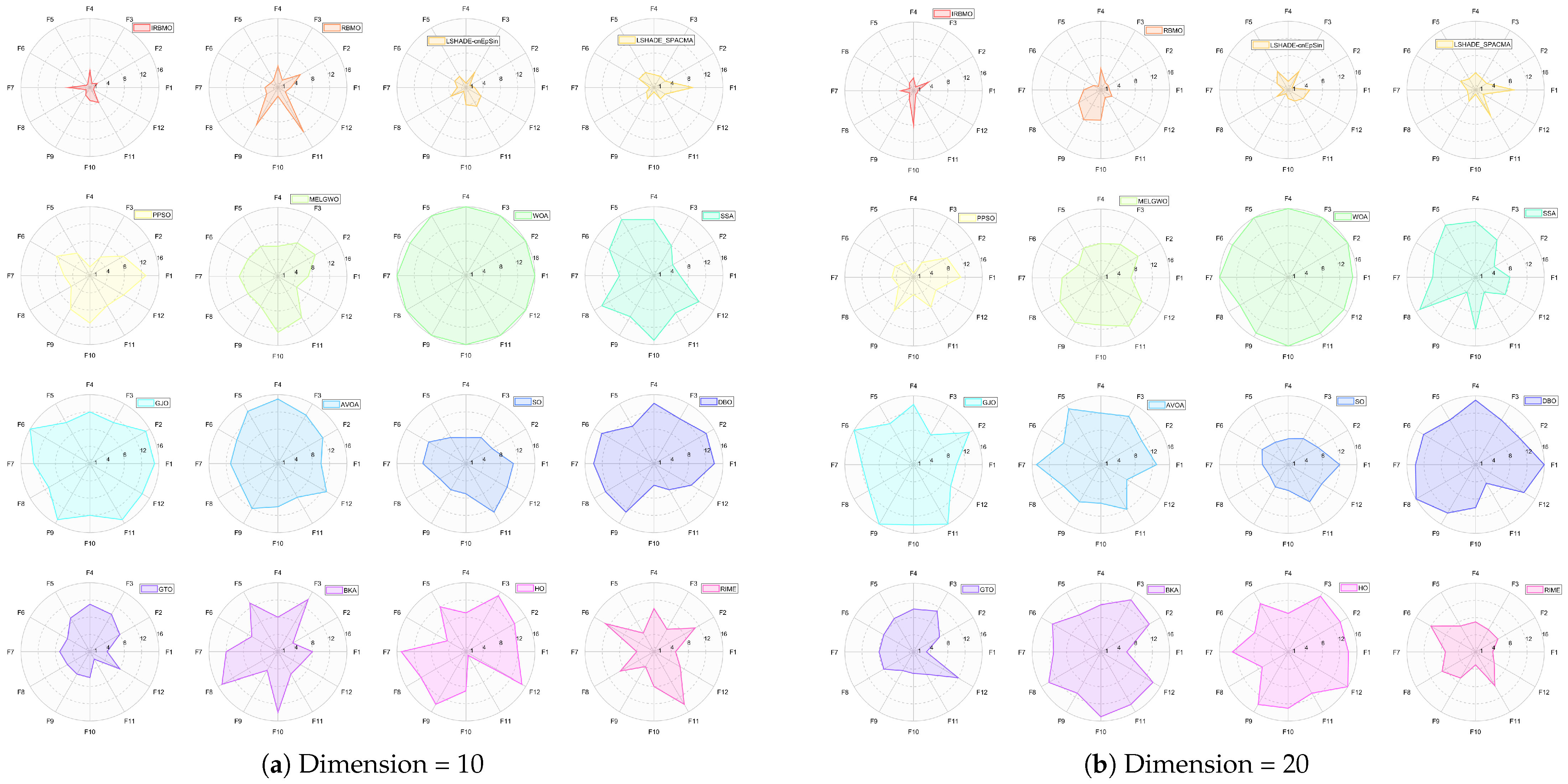

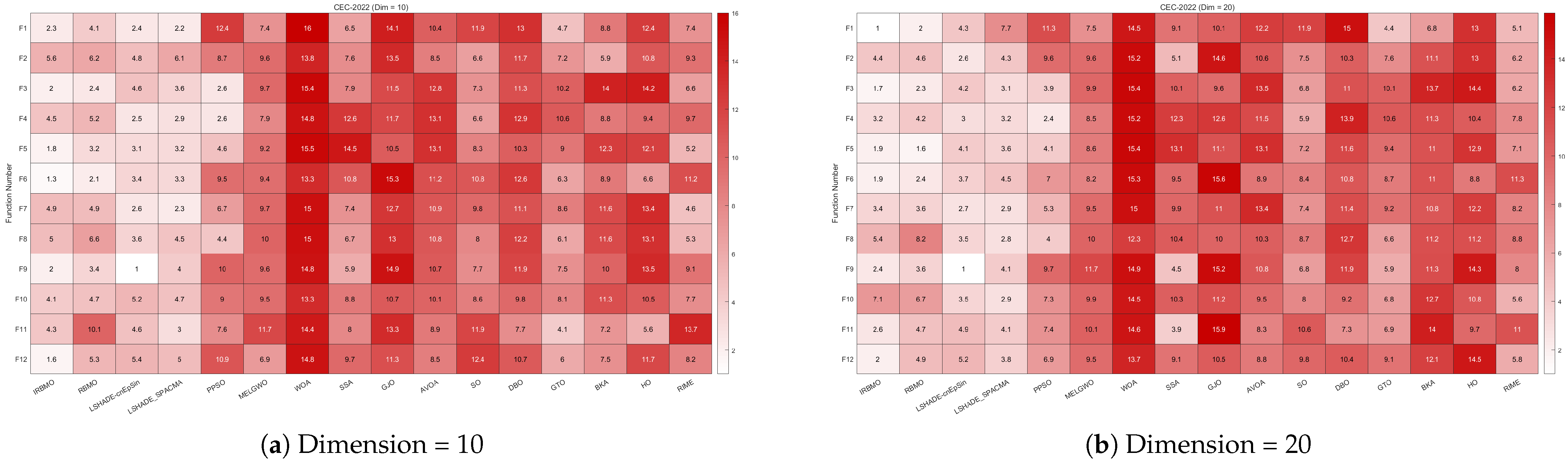

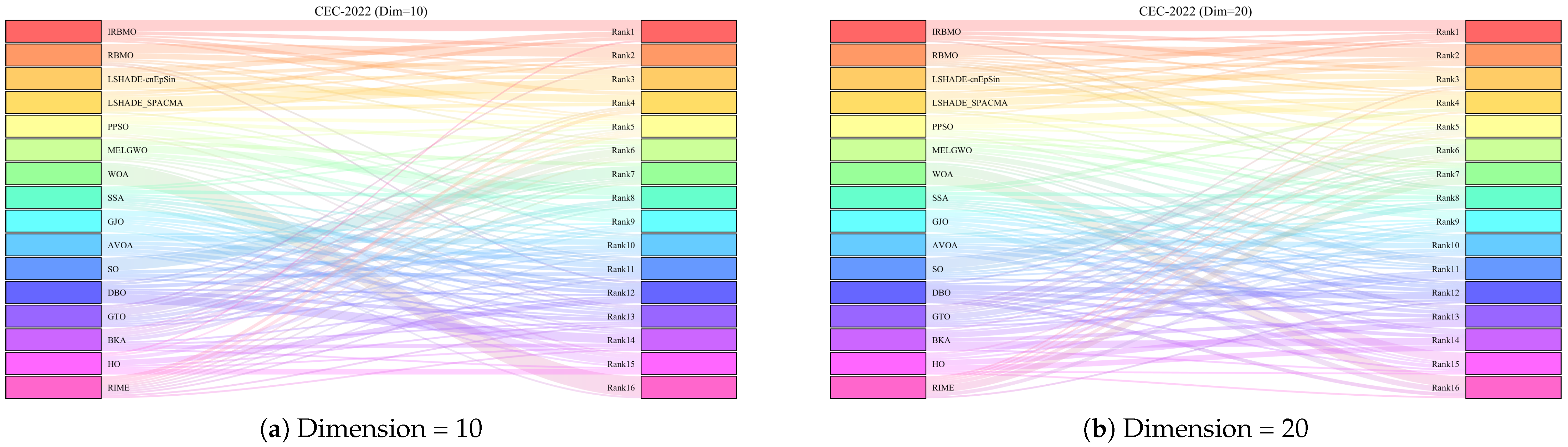

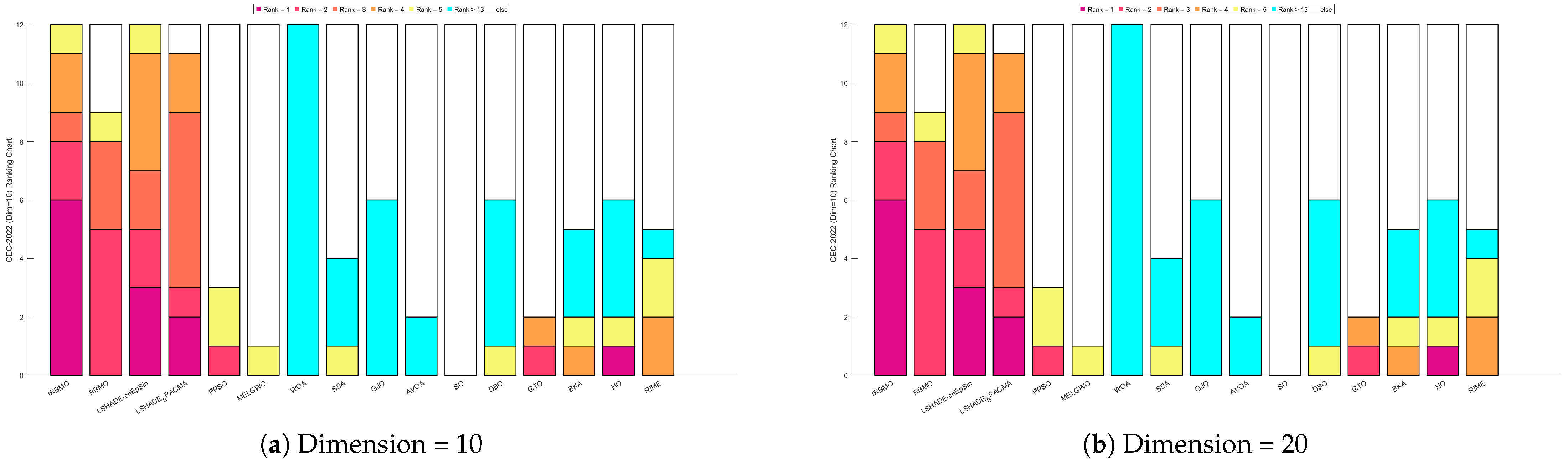

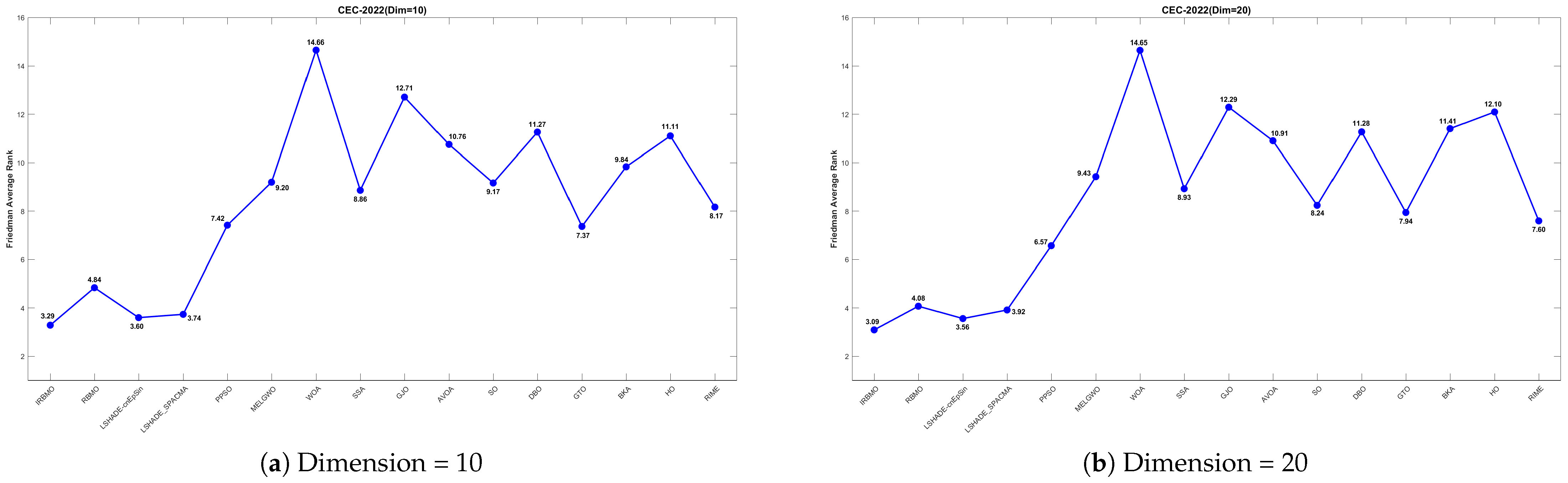

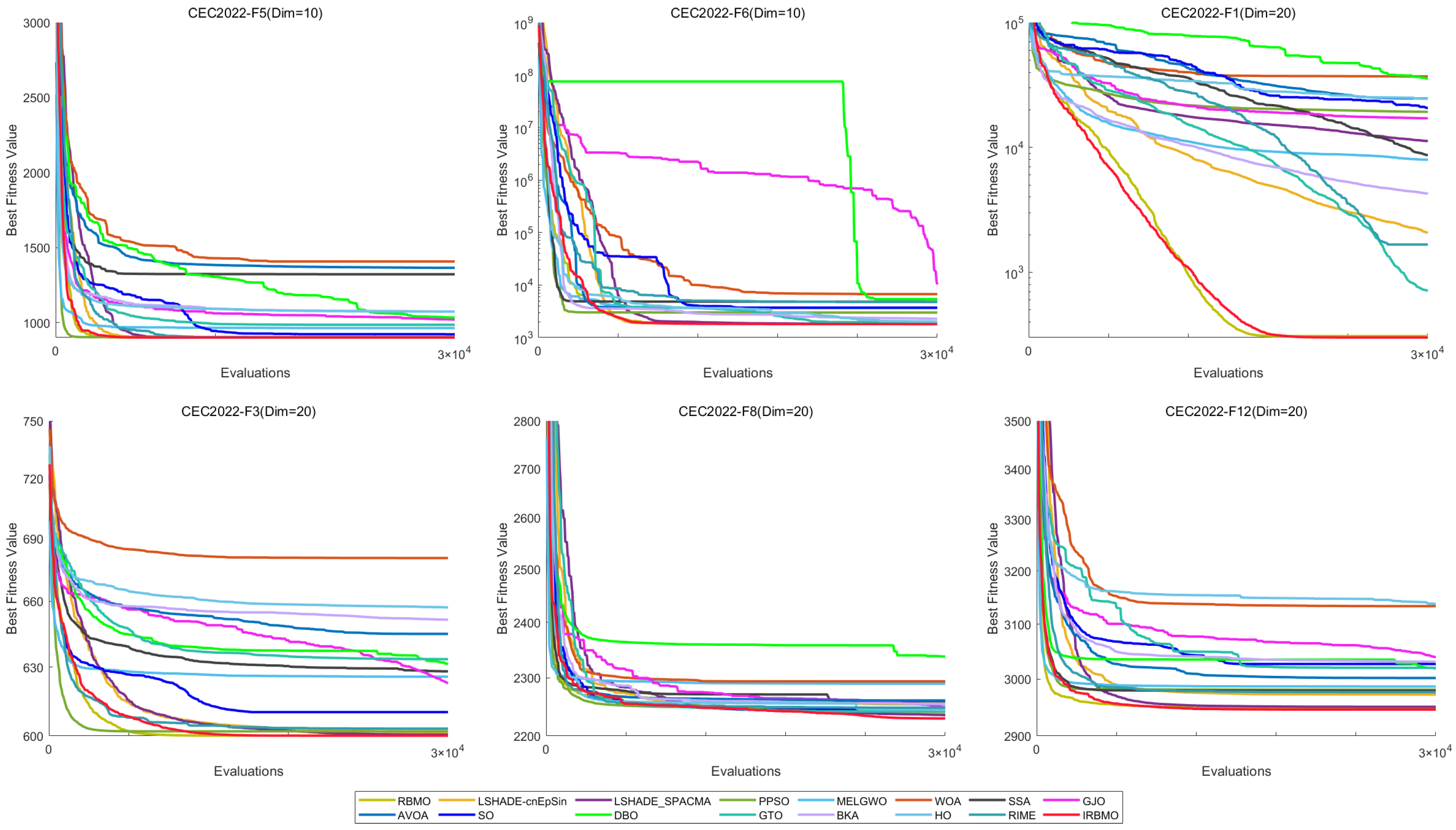

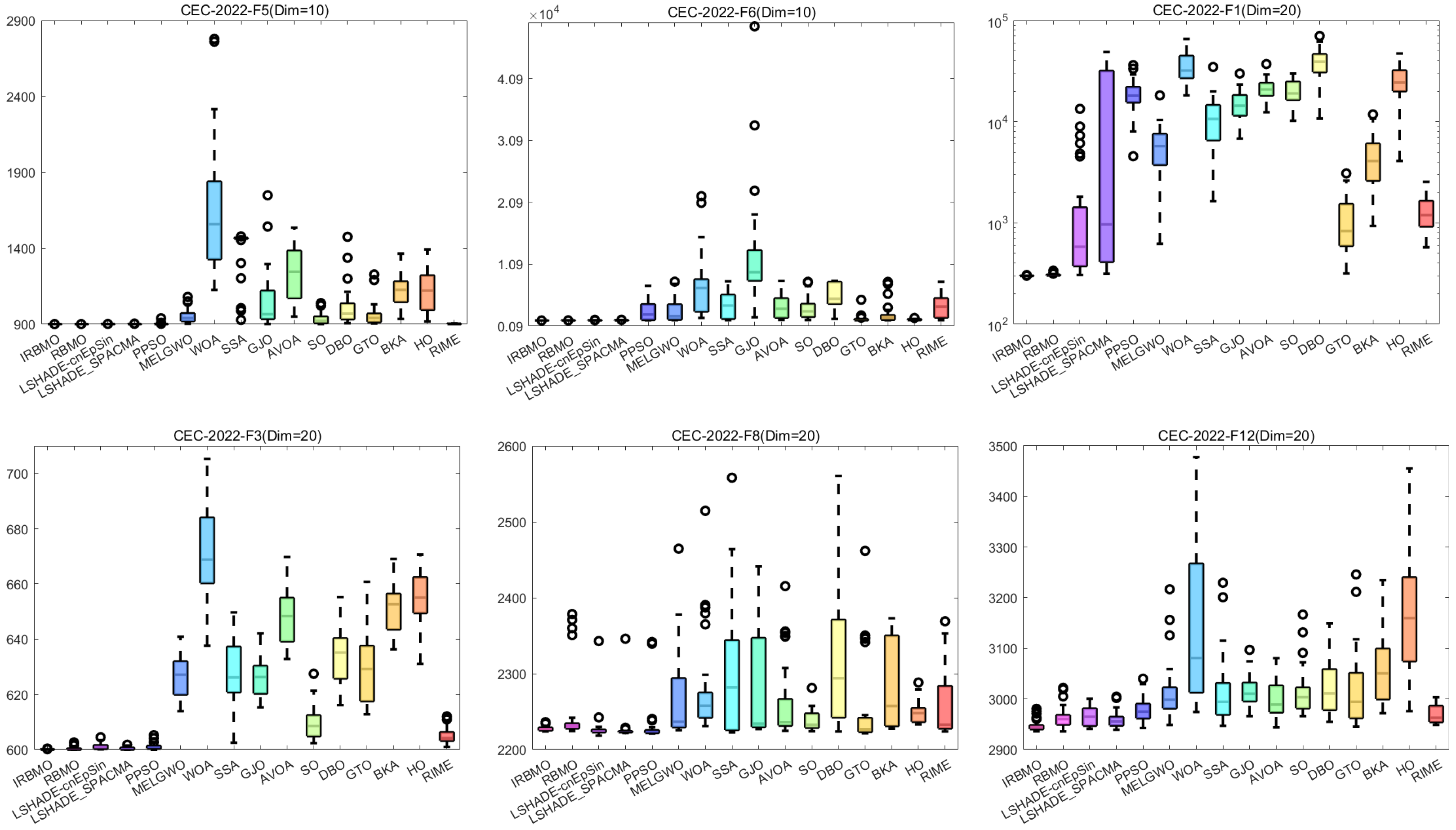

Comprehensive Benchmark Testing: Sixteen algorithms are compared on the CEC-2017 test suite (30, 50, and 100 dimensions) and the CEC-2022 test suite (10 and 20 dimensions): award-winning CEC competition algorithms, widely used algorithms or their improved versions, recently proposed high-performance and highly cited algorithms, the classical RBMO, and the proposed IRBMO. Radar charts, heat maps, Sankey diagrams, stacked bar charts, and line charts are used to visualize their rankings and Friedman mean ranks across the test sets. The Wilcoxon rank-sum test is used to determine whether IRBMO’s performance differs significantly from that of the other algorithms, and box plots visualize the performance stability of each method. The results on the CEC test sets show that IRBMO surpassed the other 15 algorithms on a majority of test functions, highlighting its stability, robustness, effectiveness, and capacity to avoid local optima.

Real-World Constrained Engineering Problems: The performance of 11 algorithms was evaluated across four real-world engineering constrained problems to validate the potential of IRBMO in addressing such challenges.

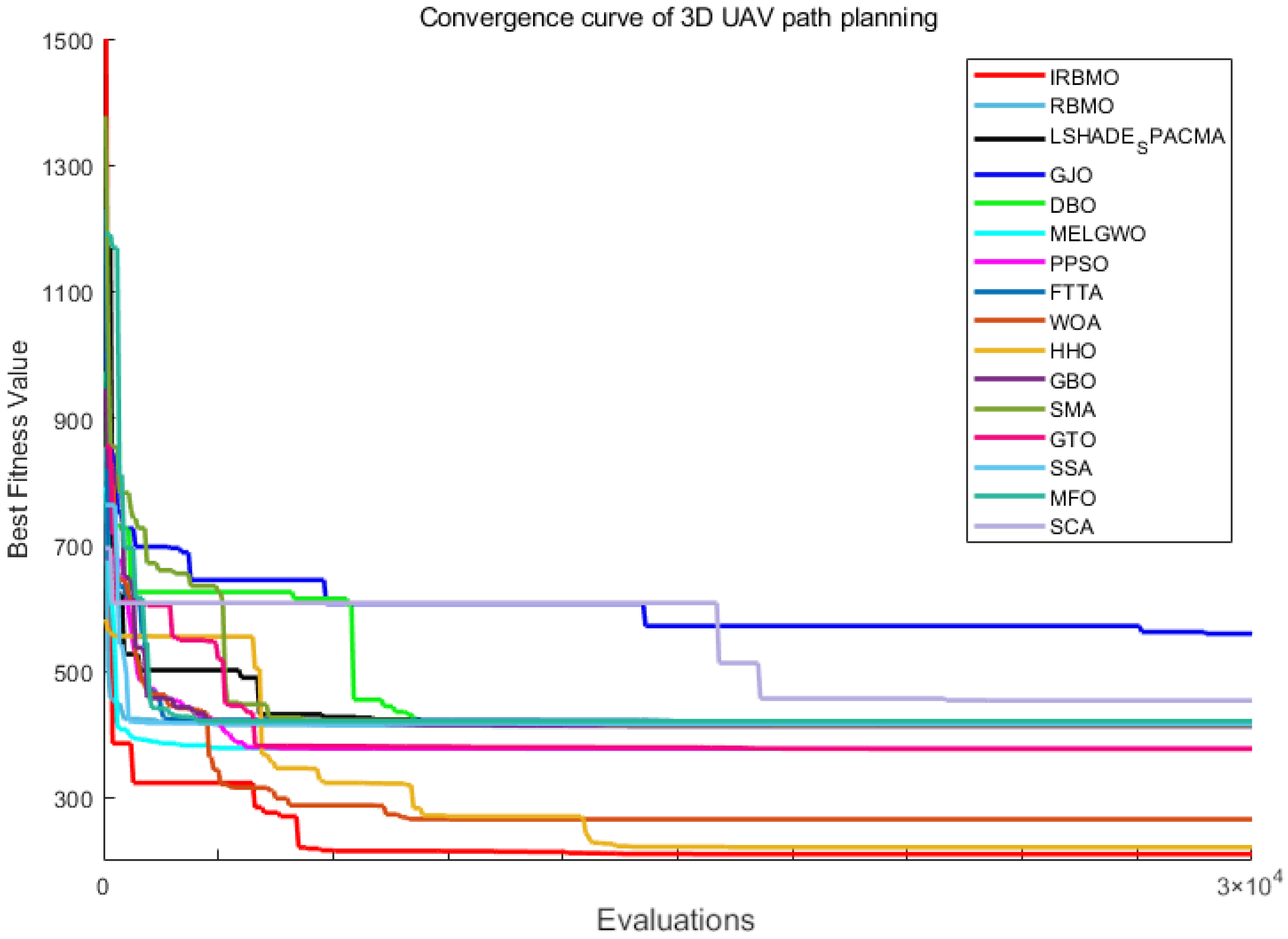

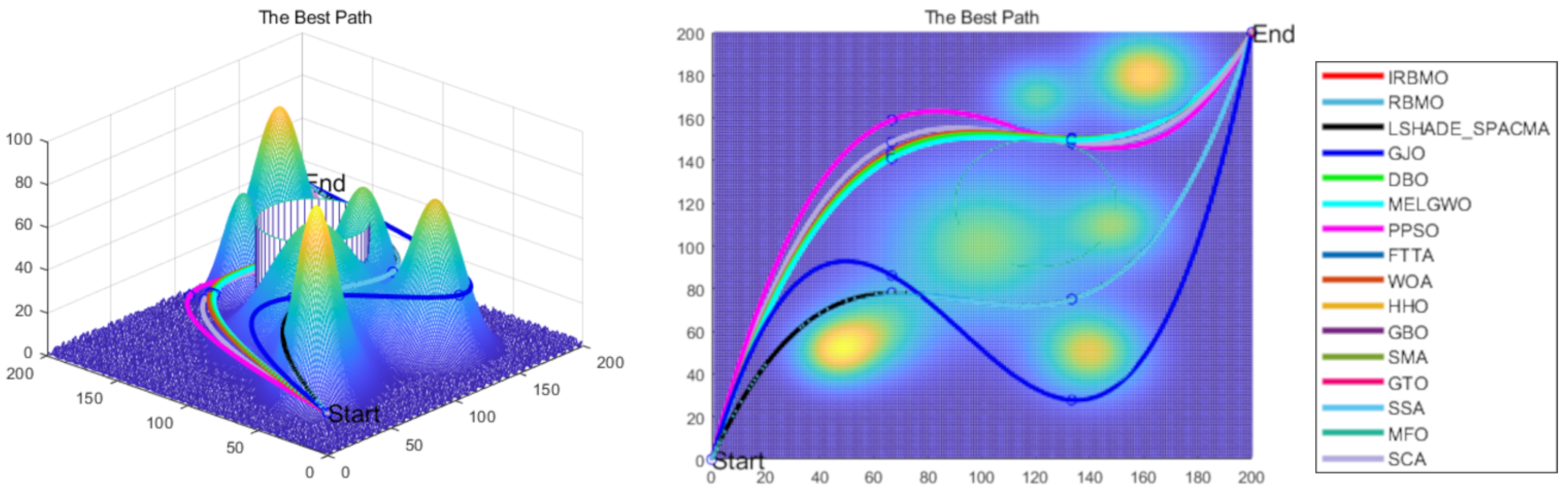

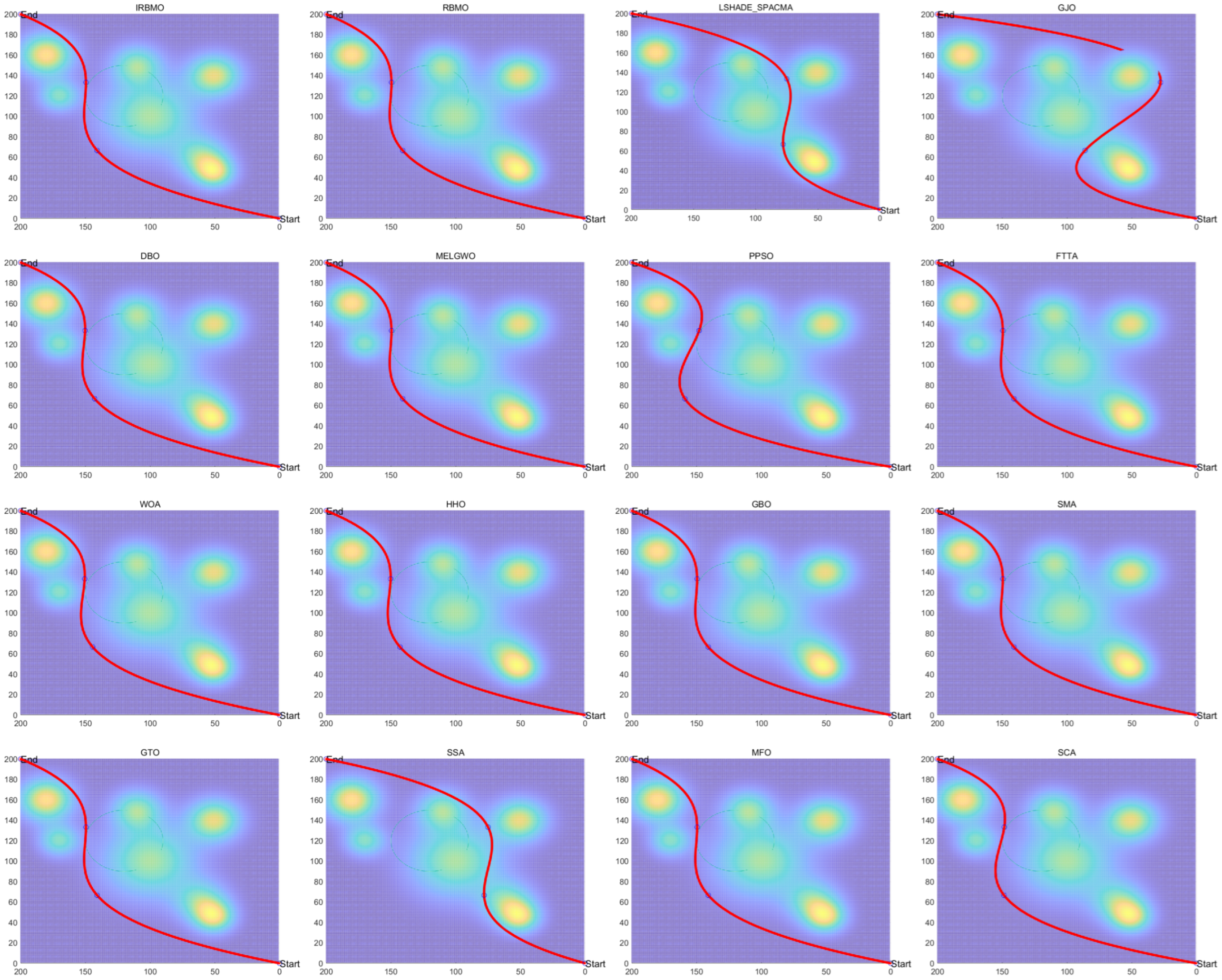

3D Path Planning for UAVs: IRBMO is applied to the 3D path planning problem for UAVs, and its performance is compared against 15 algorithms. Results validate IRBMO’s competitive edge and suitability for tackling 3D path planning problems relative to other state-of-the-art algorithms.

The IRBMO algorithm effectively mitigates several inherent limitations of traditional path planning methods, including navigation challenges in complex terrain environments and susceptibility to local optima. Compared to existing approaches, IRBMO demonstrates notable advancements in UAV path planning, achieving higher computational efficiency and operational feasibility. These improvements address critical gaps in prior research while offering practical solutions to persistent challenges in path planning methodologies.

7. Conclusions

This paper proposes an improved Red-billed Blue Magpie Optimizer (IRBMO) to address the limitations of the original RBMO algorithm, specifically its tendency to become trapped in local optima and insufficient global exploration capabilities when solving complex optimization problems.

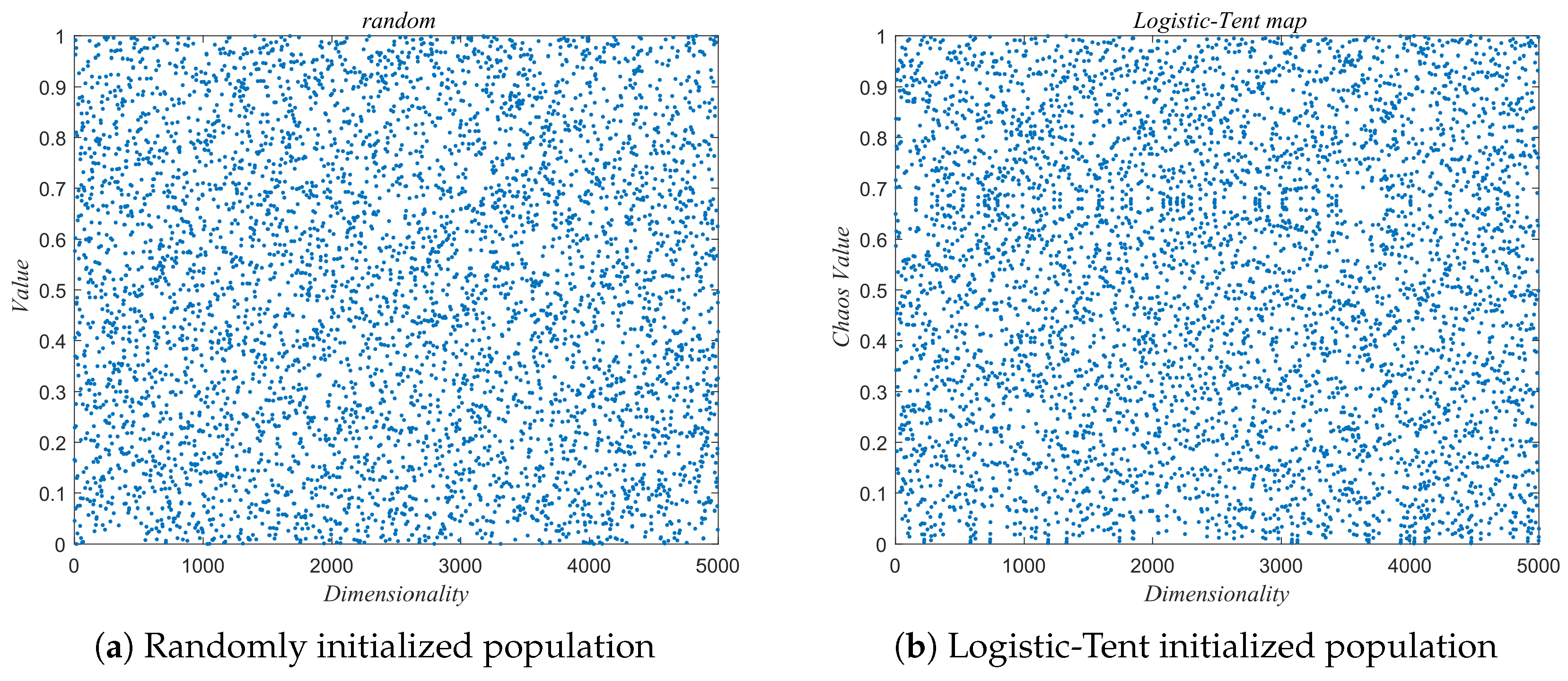

First, a Logistic-tent chaotic map is introduced to initialize the population. Compared to the random initialization in the standard RBMO, this strategy significantly enhances the diversity of the initial population, enabling the algorithm to more uniformly explore the entire solution space during the initial search phase, thereby laying a solid foundation for subsequent global optimization and rapid convergence.
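As an illustration of chaotic initialization, the sketch below uses one commonly cited formulation of the Logistic-tent map and scales the resulting sequence into the search bounds. The exact map and parameter used by IRBMO may differ; the control parameter `r = 3.99`, the starting value `seed = 0.37`, and the function names are assumptions.

```python
from typing import List, Sequence


def logistic_tent(x: float, r: float = 3.99) -> float:
    """One iteration of a Logistic-tent map on [0, 1) (assumed formulation)."""
    if x < 0.5:
        y = r * x * (1.0 - x) + (4.0 - r) * x / 2.0
    else:
        y = r * x * (1.0 - x) + (4.0 - r) * (1.0 - x) / 2.0
    return y % 1.0


def chaotic_population(n: int, dim: int, lb: Sequence[float],
                       ub: Sequence[float], r: float = 3.99,
                       seed: float = 0.37) -> List[List[float]]:
    """Build n individuals by iterating the map and scaling into [lb, ub)."""
    x = seed  # hypothetical starting value in (0, 1); avoid the fixed point 0
    pop = []
    for _ in range(n):
        individual = []
        for d in range(dim):
            x = logistic_tent(x, r)
            individual.append(lb[d] + x * (ub[d] - lb[d]))
        pop.append(individual)
    return pop


init_pop = chaotic_population(20, 3, [-10.0] * 3, [10.0] * 3)
```

The chaotic sequence is deterministic for a given starting value, so in practice the starting value would itself be drawn at random for each run.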

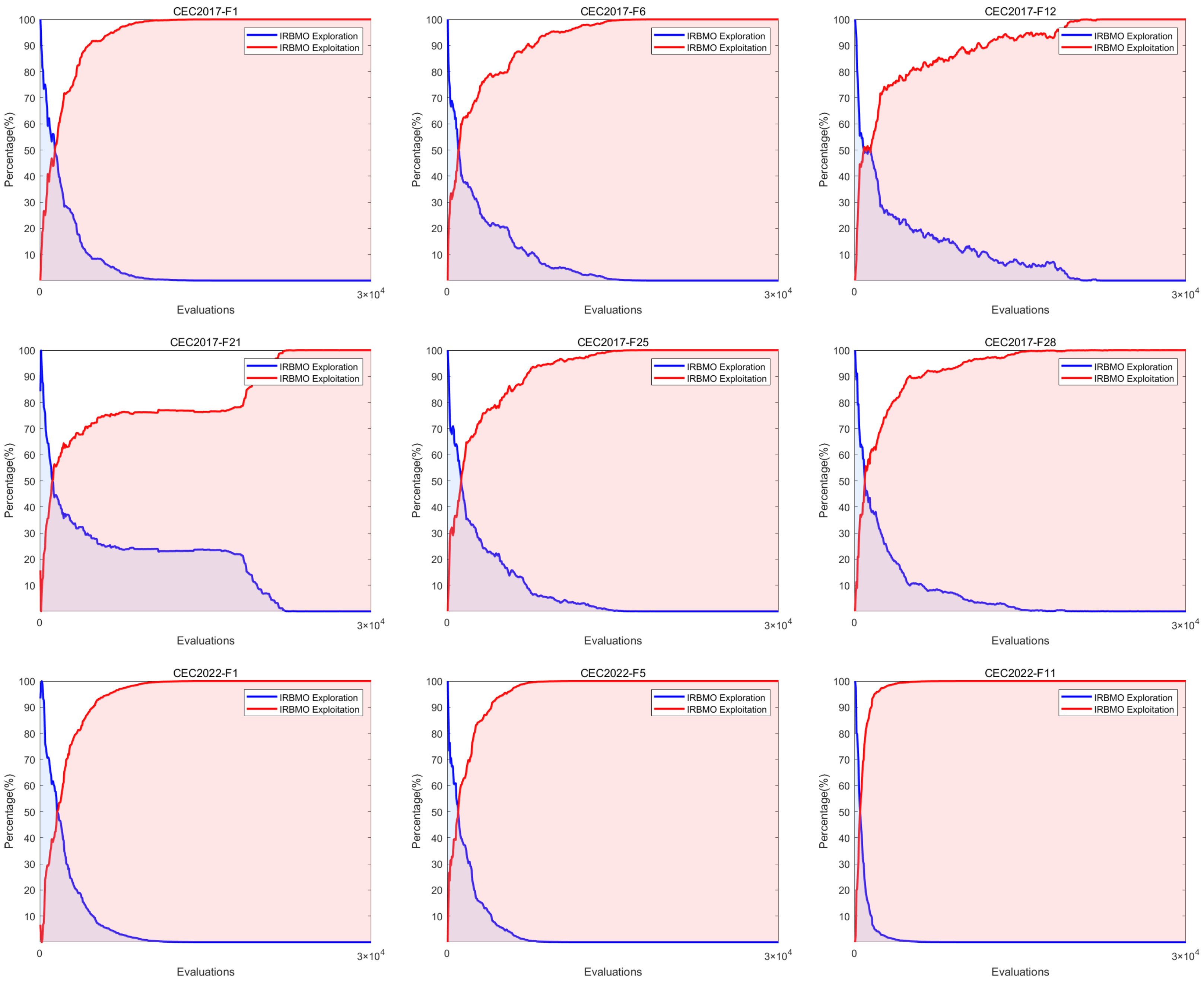

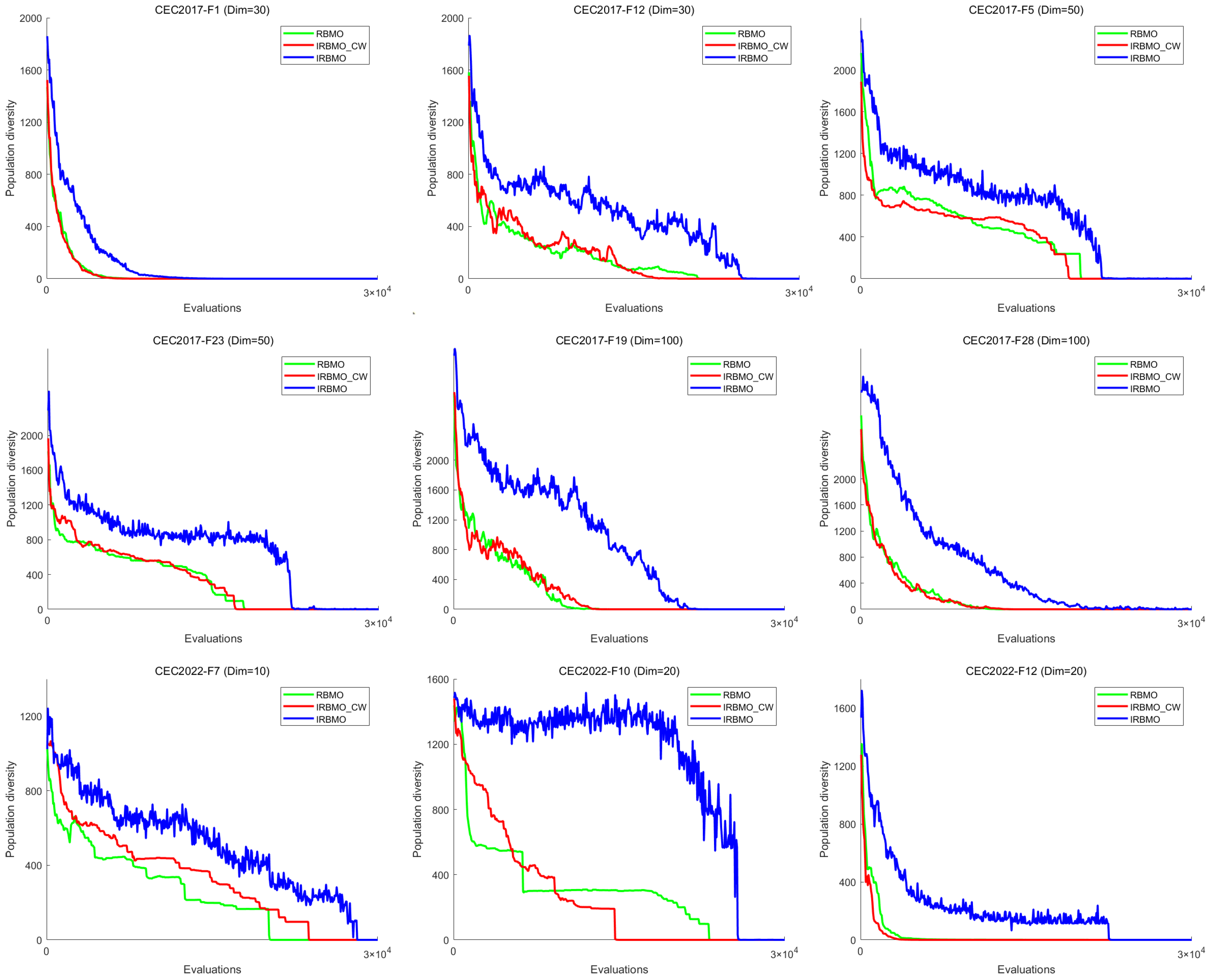

Second, to overcome the convergence stagnation caused by the original algorithm’s over-reliance on the population mean, a dynamic balance factor is introduced during the search phase. This factor dynamically adjusts the weights of the global optimal solution and the population mean in guiding the search direction, thereby achieving a better equilibrium between exploration and exploitation. Furthermore, a hybrid strategy integrating the Jacobi Curve and Lévy Flight is proposed. The long- and short-step jumping characteristics of Lévy Flight enhance the algorithm’s global perturbation capability, while the Jacobi Curve provides a nonlinear local perturbation mechanism. Combined, these mechanisms significantly improve the algorithm’s ability to escape local optima during late-stage convergence.

To validate the performance of IRBMO, comprehensive comparisons were conducted against 15 benchmark algorithms, including classical RBMO, CEC competition-winning algorithms, and state-of-the-art metaheuristics proposed in recent years, using the CEC-2017 (30-, 50-, and 100-dimensional) and CEC-2022 (10- and 20-dimensional) test suites. Experimental results demonstrate that IRBMO achieves superior performance on most test functions, with significantly enhanced convergence accuracy, stability, and robustness compared to competitors, particularly on high-dimensional complex problems. Furthermore, IRBMO was applied to four classical constrained engineering design problems and a complex 3D UAV path planning task. Results confirm its potential for practical engineering applications, as it consistently identifies higher-quality solutions.

While IRBMO performs strongly in this study, several limitations warrant further work. For instance, its convergence speed on some multimodal functions still leaves room for improvement relative to the best-performing competitors. Future work will focus on developing parameter self-adaptation mechanisms to strengthen the algorithm’s generality and on extending IRBMO to broader domains, such as multi-objective optimization, neural architecture search, and large-scale scheduling problems.