1. Introduction

Swarm intelligence algorithms are meta-heuristic algorithms that mimic the collective behavior of natural biological populations to address complex optimization problems [1,2,3,4]. The core idea is to achieve intelligent overall behavior through simple rules and local information exchange among individuals. In recent years, researchers have developed a range of swarm intelligence algorithms inspired by observing and simulating the living habits of organisms. For example, the Slime Mould Algorithm (SMA) [5] mimics the foraging behavior of slime molds and uses adaptive weighting to adjust its search direction, making it suitable for high-dimensional and multimodal optimization. However, it tends to converge slowly and often becomes trapped in local optima. The Tunicate Swarm Algorithm (TSA) [6] simulates the jet propulsion and group foraging behavior of tunicates, enhancing global exploration through a conflict avoidance mechanism. Nonetheless, it involves multiple parameters, which may lead to performance degradation and poor adaptability in high-dimensional settings. The Snake Optimizer (SO) [7] employs a male–female dual-population mechanism to increase population diversity, but its convergence accuracy is limited. The Particle Swarm Optimization (PSO) algorithm [8] has a simple principle and converges quickly, but it is prone to getting stuck in local optima and is sensitive to parameter settings. The Arctic Puffin Optimization (APO) algorithm [9] employs a versatile multi-stage strategy that integrates aerial flight and underwater foraging behaviors to better adapt to complex environments. However, like many such algorithms, it suffers from an insufficiently smooth transition between the exploration and exploitation phases and from complex parameter adjustment. The Differentiated Creative Search (DCS) algorithm [10] boosts search efficiency via cooperative evolution and multi-strategy fusion, yet it suffers from high implementation complexity and pronounced parameter sensitivity. More generally, these algorithms exhibit weak global exploration, a tendency to become trapped in local optima, and low convergence accuracy on high-dimensional problems. Given these shortcomings, in particular low convergence accuracy and an imbalance between exploration and exploitation, several algorithmic improvements have been proposed. For example, Xia et al. [11] introduced a meta-learning-based alternating minimization method that replaces handcrafted strategies with learned adaptive ones, achieving substantial performance gains. Long et al. [12] proposed an enhanced PSO algorithm that uses opposition-based learning to improve initial population quality, continuous mapping to enhance neighborhood search, and particle perturbation to increase diversity and avoid local optima. Cai et al. [13] proposed a multi-strategy differentiated creative search method that introduces co-evolution to improve the efficiency of DCS, integrates a composite fitness-distance evaluation to balance the transition between exploration and exploitation, and applies linear population size reduction to further enhance performance. Guo et al. [14] proposed a “sweep-rotate” gait that enhances the ability of planetary rovers to escape from granular terrain; their Bayesian optimization-based escape strategy clarifies the influence and ranges of the variables, improving optimization accuracy. Tian et al. [15] proposed an adaptive improvement to address the low efficiency of traditional Jump Markov Chain Monte Carlo algorithms. By dynamically adjusting the proposal distribution and employing parallel annealing, they significantly improved the efficiency and convergence of curve-fitting calculations.

Typical engineering optimization problems exhibit nonlinear, non-differentiable, or multimodal characteristics that hinder the application of traditional gradient-based methods. Metaheuristic algorithms are particularly well suited to such problems because they perform a global search without requiring gradient information. Numerous engineering optimization problem models have already been developed. For instance, Kumar et al. [16] addressed classical problems, including tension/compression spring design, which minimizes spring weight by optimizing three design variables subject to four constraints. Zhou et al. [17] developed a physics-informed neural network framework for fatigue life prediction that incorporates partial differential inequalities derived from experimental data as physical constraints. Tao et al. [18] proposed a turbine blade tip design methodology based on free-form deformation, developing a thermo-aerodynamic optimization framework that employs large-scale variable optimization.

The APO algorithm, a novel swarm intelligence algorithm introduced by Wang et al. in 2024 [9], mimics the survival and predation behaviors of the Arctic puffin. While its multi-stage foraging strategy provides a promising optimization framework, empirical studies reveal that the original APO is not exempt from the common pitfalls of swarm intelligence algorithms. These include premature convergence, susceptibility to local optima, and an inadequate balance between exploration and exploitation on certain problems, which ultimately limit its convergence precision and practical applicability. To alleviate these issues, several improvements have been proposed. Fakhouri et al. [19] introduced an adaptive differential evolution strategy that uses adaptive parameter control and external archives to strengthen global exploration and convergence efficiency. Sun and Wang [20] proposed a dual-strategy approach combining elite opposition-based learning with adaptive t-distribution mutation to improve initial population quality and achieve a better balance between global exploration and local exploitation. Zhang [21] introduced an elite reverse learning strategy that significantly improved convergence speed and the effective performance of communication systems. Su and Jiang [22] integrated a Gaussian mutation strategy to balance global exploration with local exploitation and employed elite opposition-based learning to expand the search space and enhance population diversity. Nevertheless, as stated by the no-free-lunch theorem [23], no single optimization algorithm can outperform all others on every possible problem. Although the aforementioned strategies enhance the optimization performance of APO, it remains prone to local optima and to an imbalance between exploration and exploitation.

Therefore, this paper proposes two improvements to APO. First, a mirror opposition-based learning mechanism with an adaptive mirroring factor is introduced. During the search, both the current solutions and their dynamically generated mirror opposites are treated as feasible solutions, which increases population diversity and the efficiency of locating optimal solutions and thereby improves convergence accuracy. Second, a dynamic differential evolution strategy with an adaptive scaling factor is incorporated to support precise optimization and strengthen the algorithm’s ability to escape local optima.

The remainder of this paper is structured as follows:

Section 2 presents the fundamental concepts of the standard APO.

Section 3 describes the fundamental concepts of the improved APO (IAPO) using mathematical formulations and flowcharts, along with corresponding pseudocode.

Section 4 conducts ablation experiments on the proposed improvement strategies and analyzes the performance of the IAPO against eight comparison algorithms.

Section 5 applies nine algorithms to three engineering optimization design problems. Finally, the paper concludes and discusses potential directions for future research.

The key contributions of this study are listed below:

- (a) A mirror opposition-based learning mechanism is introduced to enhance the quality of the initial population and expand the search scope. An adaptive parameter-driven dynamic differential evolution strategy is also integrated. By adjusting parameters adaptively, the algorithm prioritizes global exploration in early iterations and shifts focus to local exploitation in later stages, thereby achieving an automatic balance between exploration and exploitation and improving the ability to escape local optima.

- (b) Comprehensive experiments are conducted on 20 benchmark functions, the CEC 2019 test set, and the CEC 2022 test set, which collectively validate the superiority of the proposed IAPO. Furthermore, simulation experiments on three engineering application problems demonstrate that IAPO outperforms other comparative algorithms in both robustness and practical applicability.

3. IAPO

To address three key limitations of the APO algorithm, namely sluggish early-stage convergence, a propensity to become trapped in local optima, and an inadequate balance between exploration and exploitation, we propose an improved APO (IAPO). IAPO incorporates a mirror opposition-based learning mechanism and a dynamic differential evolution strategy.

3.1. Mirror Opposition-Based Learning Mechanism

In many optimization scenarios, the search process often starts with random initial values and gradually approaches the optimal solution. When initial random values are close to the optimum, the problem can be solved efficiently. However, in the worst case, if the initial values lie opposite to the optimal region, the optimization process becomes time-consuming.

Without prior knowledge, it is difficult to ensure favorable initial solutions. Logically, the solution space can be explored more effectively by considering both the current candidate solutions and their opposites, and introducing opposite solutions as feasible candidates can improve the efficiency of locating the global optimum. This idea is rooted in the concept of opposition-based learning [24,25], in which the opposite $\tilde{x}$ of a candidate solution $x$ within the bounds $[lb, ub]$ is defined dimension-wise as:

$$\tilde{x} = lb + ub - x$$

Based on this concept, Yao et al. [26] proposed a mirror opposition-based learning mechanism inspired by convex lens imaging. Unlike conventional opposition-based learning, which produces a fixed opposite solution, the mirror opposition-based mechanism introduces an adaptive perturbation via a mirror factor $q$, enabling dynamic adjustment of the opposite solutions. This not only improves optimization accuracy but also maintains convergence speed [27]. The updated position of an individual is given by:

where $q$ is the mirror factor, $ub$ is the upper bound of the position, and $lb$ is the lower bound of the position.

The adaptive update formula for the mirror factor $q$ is:

More generally, as a machine learning strategy, opposition-based learning can be used to extend existing learning algorithms.
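A minimal Python sketch of the two opposition schemes is given below, as an illustration rather than the paper’s implementation. The `opposite` function implements the classic opposite point lb + ub − x. Because the exact mirror-position and mirror-factor formulas (Formulas (9) and (10)) are not reproduced here, `mirror_opposite` assumes the lens-imaging form (lb + ub)/2 + (lb + ub)/(2q) − x/q that is common in lens-imaging opposition-based learning, and `mirror_factor` uses a hypothetical linear schedule. The greedy replacement in `mirror_step` follows steps 5–11 of Algorithm 1.

```python
from typing import Callable, List

Vector = List[float]


def opposite(x: Vector, lb: Vector, ub: Vector) -> Vector:
    """Classic opposition-based learning: lb + ub - x in every dimension."""
    return [l + u - xi for xi, l, u in zip(x, lb, ub)]


def mirror_factor(t: int, T: int) -> float:
    """Hypothetical schedule (assumption): q grows linearly from 1 to 10 over the run."""
    return 1.0 + 9.0 * t / T


def mirror_opposite(x: Vector, lb: Vector, ub: Vector, q: float) -> Vector:
    """Assumed lens-imaging form (lb+ub)/2 + (lb+ub)/(2q) - x/q, clipped to the bounds."""
    out = []
    for xi, l, u in zip(x, lb, ub):
        c = (l + u) / 2.0
        xo = c + c / q - xi / q
        out.append(min(max(xo, l), u))  # keep the opposite inside the search box
    return out


def mirror_step(pop: List[Vector], fit: List[float], f: Callable[[Vector], float],
                lb: Vector, ub: Vector, t: int, T: int) -> None:
    """Replace each individual by its mirror opposite only if it is strictly better (minimization)."""
    q = mirror_factor(t, T)
    for i, x in enumerate(pop):
        cand = mirror_opposite(x, lb, ub, q)
        fc = f(cand)
        if fc < fit[i]:
            pop[i], fit[i] = cand, fc


def sphere(v: Vector) -> float:
    return sum(z * z for z in v)


pop, fit = [[4.0, 9.0]], [97.0]
mirror_step(pop, fit, sphere, [-5.0, -5.0], [5.0, 10.0], t=0, T=500)
print(pop, fit)  # [[-4.0, -4.0]] [32.0]
```

With q = 1 the assumed mirror form reduces to the classic opposite point; larger q pulls the opposite toward the centre of the search box, which is one way an adaptive factor can vary the perturbation over the run.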

3.2. Dynamic Differential Evolution Strategy

The Differential Evolution (DE) algorithm [28] shares its conceptual foundation with genetic algorithms: both employ random initialization to generate a starting population, use fitness values to guide selection, and proceed iteratively through mutation, crossover, and selection operations. APO, however, tends to converge near local optima in the later stages of optimization, which increases the risk of premature convergence. To mitigate this limitation, a dynamic differential evolution strategy with an adaptive scaling factor is incorporated. The procedure is outlined below.

The initial value of the scaling factor and the crossover probability $CR$ were determined through preliminary parameter sensitivity experiments within the range [0, 1]. A parameter set that was consistently effective across most test problems was selected to maintain both effective adaptation and population diversity:

This paper employs the DE/rand/1 mutation strategy, which is selected for its independence from the current best solution. This characteristic helps maintain population diversity and enhances the algorithm’s ability to escape local optima. Furthermore, its simple structure allows smooth integration with the proposed adaptive parameter mechanism.

$$v_i = x_{r_1} + F\,(x_{r_2} - x_{r_3})$$

where $r_1$, $r_2$, and $r_3$ are distinct integers randomly selected from the range $[1, N]$ (excluding $i$), and $F$ is an adaptive scaling factor calculated as follows:

During the early iterations, the scaling factor $F$ remains relatively high to promote global exploration. As the search progresses, $F$ gradually decreases to shift the focus toward local refinement.
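The mutation step can be sketched in Python as follows. DE/rand/1 itself is standard; the linear decay in `adaptive_F` and the default values `F0 = 0.8` and `F_min = 0.2` are hypothetical placeholders, since the paper’s adaptive formula and tuned values are not reproduced here. The sketch only illustrates the intended behavior of a large F early and a small F late.

```python
import random
from typing import List

Vector = List[float]


def adaptive_F(t: int, T: int, F0: float = 0.8, F_min: float = 0.2) -> float:
    """Hypothetical schedule: F decays linearly from F0 at t = 0 to F_min at t = T."""
    return F_min + (F0 - F_min) * (1.0 - t / T)


def rand1_mutation(pop: List[Vector], i: int, F: float, rng: random.Random) -> Vector:
    """DE/rand/1: v_i = x_r1 + F * (x_r2 - x_r3), with r1, r2, r3 distinct and different from i."""
    if len(pop) < 4:
        raise ValueError("DE/rand/1 needs at least four individuals")
    others = [k for k in range(len(pop)) if k != i]
    r1, r2, r3 = rng.sample(others, 3)  # three distinct indices, none equal to i
    return [a + F * (b - c) for a, b, c in zip(pop[r1], pop[r2], pop[r3])]


rng = random.Random(1)
population = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(6)]
F = adaptive_F(t=100, T=500)  # about 0.68, still in the exploratory range
v0 = rand1_mutation(population, 0, F, rng)
```

Because the base vector x_r1 is random rather than the current best, the mutant does not collapse toward a single attractor, which is the diversity argument the text gives for choosing DE/rand/1.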

Next, the crossover operation combines the mutation vector $v_i$ with the target vector $x_i$ to produce a trial vector:

$$u_i = (u_{i,1}, u_{i,2}, \ldots, u_{i,D})$$

where each component is defined by:

$$u_{i,j} = \begin{cases} v_{i,j}, & \text{if } rand_j \le CR \text{ or } j = j_{rand} \\ x_{i,j}, & \text{otherwise} \end{cases}$$

where $u_{i,j}$ is the $j$-th dimension value of the $i$-th new individual, $rand_j$ denotes a uniformly distributed random number in [0, 1] generated for the $j$-th dimension, and $CR$ is the crossover probability. $D$ denotes the dimension of the population, and $j_{rand}$ is a random integer in $[1, D]$, which ensures that the mutation vector contributes at least one component.

Finally, a greedy selection operation is performed based on fitness comparison:

$$x_i^{t+1} = \begin{cases} u_i^{t}, & \text{if } f(u_i^{t}) < f(x_i^{t}) \\ x_i^{t}, & \text{otherwise} \end{cases}$$

where $f(\cdot)$ denotes the fitness function. This ensures that the population evolves toward better solutions over successive generations, maintaining selection pressure toward higher-quality regions of the search space.
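Binomial crossover and greedy selection can be sketched in Python as below, assuming minimization. Indices are 0-based, so `rng.randrange(D)` stands in for the random integer in [1, D], and the strict `<` matches the comparison in step 28 of Algorithm 1. The function names are illustrative.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def binomial_crossover(x: Vector, v: Vector, CR: float, rng: random.Random) -> Vector:
    """u_j = v_j if rand_j <= CR or j == j_rand, else x_j."""
    D = len(x)
    j_rand = rng.randrange(D)  # guarantees at least one component comes from v
    return [v[j] if (j == j_rand or rng.random() <= CR) else x[j] for j in range(D)]


def crossover_and_select(x: Vector, fx: float, v: Vector, f: Callable[[Vector], float],
                         CR: float, rng: random.Random) -> Tuple[Vector, float]:
    """Build the trial vector and keep it only if it strictly improves the fitness."""
    u = binomial_crossover(x, v, CR, rng)
    fu = f(u)
    if fu < fx:
        return u, fu
    return x, fx


def sphere(z: Vector) -> float:
    return sum(c * c for c in z)


rng = random.Random(0)
x = [3.0, 4.0]
new_x, new_f = crossover_and_select(x, sphere(x), [0.0, 0.0], sphere, CR=0.9, rng=rng)
```

Here any trial vector takes at least one zero from the mutant, so its fitness is at most 16 and the selection always accepts it over the target’s fitness of 25.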

3.3. Algorithm Flow

The pseudocode of IAPO is shown as Algorithm 1:

| Algorithm 1. IAPO |
|---|
| Input: Population size N, maximum iterations T, problem dimension D. |
| Output: Global best position Xgbest, global best fitness f(Xgbest). |
| 1. Initialize parameters N, T; randomly initialize the population Xi (i = 1, …, N) and evaluate the fitness f(Xi) of each individual. |
| 2. Initialize the local best solution f(Xlbest) and position Xlbest. |
| 3. Initialize the global best solution f(Xgbest) and position Xgbest. |
| 4. for t = 1 → T |
| 5. Calculate the mirror factor q using Formula (10). |
| 6. for i = 1 → N |
| 7. Calculate the mirror position Xi′ using Formula (9). |
| 8. if f(Xi′) < f(Xi) |
| 9. Xi = Xi′, f(Xi) = f(Xi′) |
| 10. end if |
| 11. end for |
| 12. for i = 1 → N |
| 13. Calculate the behavior transition coefficient B using Formula (7). |
| 14. if B > 0.5 |
| 15. Calculate the new candidate positions using Formulas (2)–(3) (aerial search and swooping predation). |
| 16. Update the individual position through comparison. |
| 17. else |
| 18. Calculate the new candidate positions using Formulas (4)–(6) (gathering foraging, intensifying search, and predator avoidance). |
| 19. Update the individual position through comparison. |
| 20. end if |
| 21. end for |
| 22. Update f(Xlbest), Xlbest, f(Xgbest), and Xgbest. |
| 23. for i = 1 → N |
| 24. Calculate the mutation vector Vi using Formulas (13)–(15). |
| 25. Calculate the trial vector Ui using Formulas (16)–(17). |
| 26. end for |
| 27. for i = 1 → N |
| 28. if f(Ui) < f(Xi) |
| 29. Xi = Ui, f(Xi) = f(Ui) |
| 30. end if |
| 31. end for |
| 32. Update f(Xgbest) and Xgbest. |
| 33. end for |
| 34. Return: Xgbest and f(Xgbest) |

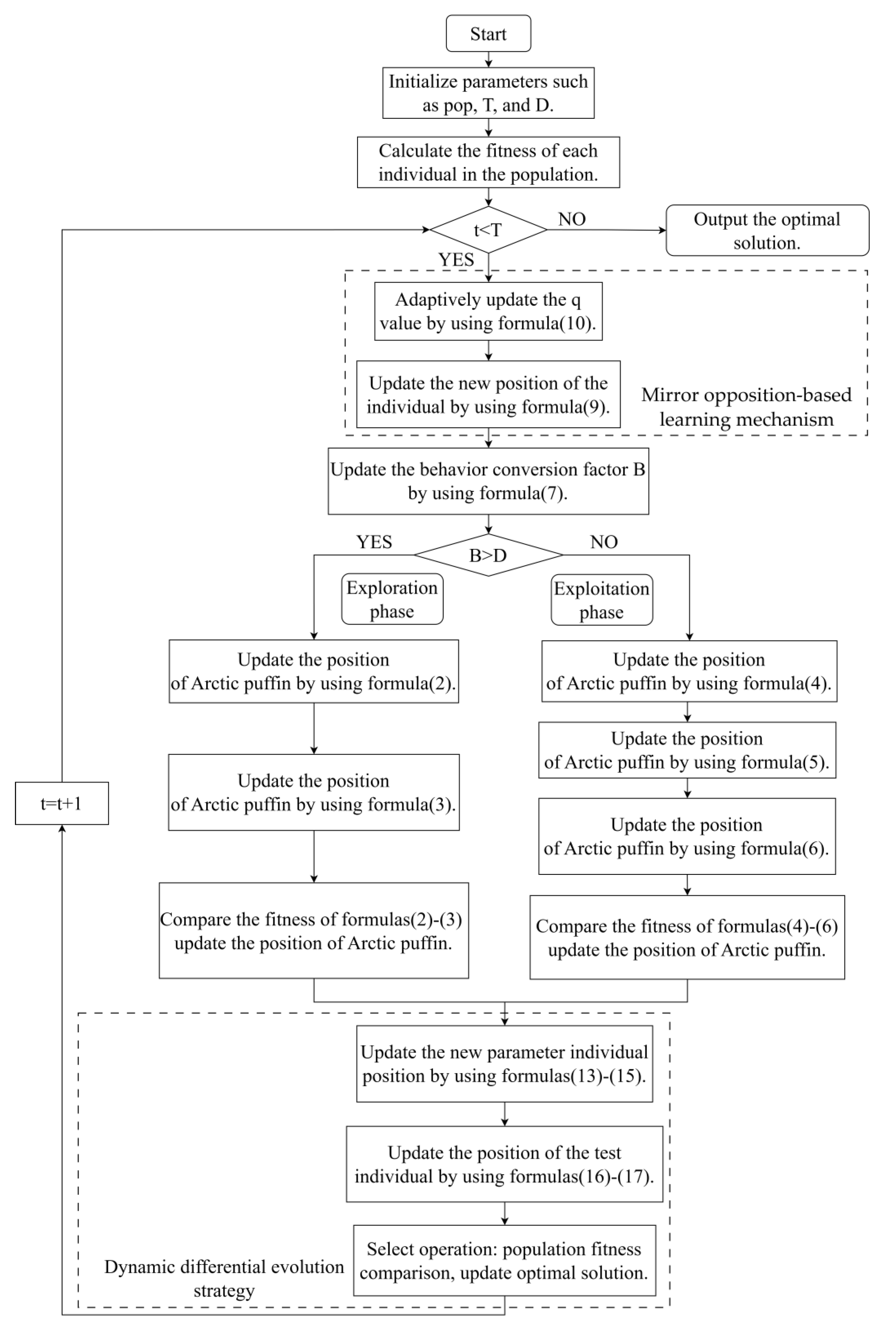

To clearly describe the overall solution logic of IAPO, a flowchart of the algorithm is shown in Figure 1.

3.4. Time Complexity Analysis

Time complexity is a key metric for evaluating algorithmic performance because it determines how the running time grows with the problem size. The following analysis compares the time complexity of the standard APO and the improved IAPO algorithm.

Assume that the population size of Arctic puffins is $N$, the maximum number of iterations is $T$, and the dimension is $D$.

(1) Time complexity of APO

The APO algorithm operates through three sequential phases: population initialization, aerial flight for global exploration, and underwater foraging for local exploitation. The time complexities of these three stages are as follows:

Initialization phase: The algorithm iterates $N$ times, generating a $D$-dimensional vector each time, so the time complexity is $O(N \cdot D)$.

Exploration phase: This phase includes two strategies (aerial search and swooping predation), each looping $N$ times and generating a new $D$-dimensional solution each time, with a complexity of $O(N \cdot D)$ per iteration. After $T$ iterations, the time complexity is $O(T \cdot N \cdot D)$.

Exploitation phase: This phase applies three distinct strategies (gathering foraging, intensifying search, and predator avoidance), and the position updates for all three strategies require $N \times D$ calculations each time. After $T$ iterations, the time complexity is $O(T \cdot N \cdot D)$.

Hence, the combined time complexity of all APO components is $O(N \cdot D + T \cdot N \cdot D) = O(T \cdot N \cdot D)$.

(2) Complexity analysis of the mirror opposition-based learning mechanism

This mechanism diversifies the search by creating an opposition-based counterpart for each individual, which requires an inverse calculation for every dimension of every individual in the population. A single execution therefore has a complexity of $O(N \cdot D)$, and after $T$ iterations the total time complexity is $O(T \cdot N \cdot D)$.

(3) Time complexity of the dynamic differential evolution strategy

The core operations of the dynamic differential evolution strategy include mutation, crossover, and selection. The time complexities of these three operations during the iteration phase are as follows:

Mutation operation: For each individual, three random individuals are selected to calculate a $D$-dimensional differential vector, with a complexity of $O(N \cdot D)$.

Crossover operation: Crossover is performed on each dimension of each individual according to the crossover probability, with a complexity of $O(N \cdot D)$.

Selection operation: The fitness of each target individual is compared with that of its trial individual, with a complexity of $O(N)$.

Total iteration complexity: After $T$ iterations of the above three operations, the total time complexity is $O(T \cdot (2N \cdot D + N)) = O(T \cdot N \cdot D)$.

(4) Time complexity of IAPO

The total time complexity of the IAPO algorithm is the sum of the time complexities of the APO algorithm, the mirror opposition-based learning mechanism, and the dynamic differential evolution strategy:

$$O(T \cdot N \cdot D) + O(T \cdot N \cdot D) + O(T \cdot N \cdot D) = O(T \cdot N \cdot D)$$

In summary, the time complexity of IAPO is of the same order as that of the standard APO; the two added strategies contribute only a constant-factor overhead per iteration and do not significantly increase the computational burden of the algorithm.

4. Simulation Experiments and Result Analysis

The performance of the proposed IAPO was rigorously evaluated on a comprehensive set of test functions comprising 20 classical benchmarks, the CEC 2019 set, and the CEC 2022 set. Basic information on these test sets is provided in Table 1, Table 2, and Table 3, respectively.

The 20 benchmark functions listed in Table 1 [29] are divided into three groups with distinct characteristics to comprehensively assess the algorithm’s performance. F1–F7 are unimodal functions, which have a single global optimum and no local optima; they primarily test the convergence speed of the algorithm in straightforward optimization scenarios. F8–F13 [30] are multimodal functions, which contain one global optimum and multiple local optima; they are used to evaluate the algorithm’s ability to balance global exploration and local exploitation. F14–F20 [31] are fixed-dimensional multimodal functions, which test the robustness of an algorithm’s exploration and exploitation capabilities under fixed, low dimensions. The CEC 2019 test set [32], shown in Table 2, includes 10 objective functions. Functions F1 to F3 vary in dimension and search range, while F4 to F10 are 10-dimensional. The theoretical optimum of each function in this set is 1. The CEC 2022 test set [33], detailed in Table 3, comprises twelve functions with dimensions of 10 and 20, encompassing unimodal (F1), basic (F2–F5), hybrid (F6–F8), and composition (F9–F12) types. Many of the CEC 2019 and CEC 2022 functions are multimodal [34] and present significant optimization challenges.

To ensure fair comparisons, all algorithms were coded in MATLAB R2022a and run on a computer with an AMD Ryzen 5 processor and 16 GB of RAM. The same parameters were used for all algorithms: population size N = 30 and maximum iterations T = 500. Each algorithm was run 30 times independently, and the best value (Best), mean value (Mean), and standard deviation (Std) of the results were used as evaluation indicators.
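The evaluation protocol (30 independent runs summarized by Best, Mean, and Std) can be sketched in Python as follows. The `optimizer` argument is a hypothetical callable that takes a run seed and returns the final best fitness; `toy_random_search` is only a stand-in with the same evaluation budget N × T = 30 × 500 and is not one of the compared algorithms. `statistics.stdev` is the sample standard deviation (normalized by n − 1), which matches the default normalization of MATLAB’s `std`.

```python
import random
import statistics
from typing import Callable, Dict, List


def summarize_runs(final_fitness: List[float]) -> Dict[str, float]:
    """Best, Mean and Std of the final fitness values of independent runs (minimization)."""
    return {
        "Best": min(final_fitness),
        "Mean": statistics.mean(final_fitness),
        "Std": statistics.stdev(final_fitness),  # sample standard deviation (n - 1)
    }


def evaluate(optimizer: Callable[[int], float], runs: int = 30) -> Dict[str, float]:
    """Run a (hypothetical) optimizer once per seed and summarize its final best fitness."""
    return summarize_runs([optimizer(seed) for seed in range(runs)])


def toy_random_search(seed: int, evals: int = 30 * 500) -> float:
    """Stand-in optimizer: pure random search on a 2-D sphere, for illustration only."""
    rng = random.Random(seed)
    return min(sum(rng.uniform(-100, 100) ** 2 for _ in range(2)) for _ in range(evals))


stats = evaluate(toy_random_search)
```

Seeding each run with its index keeps the 30 runs independent yet reproducible.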

4.1. Ablation Study on Improvement Strategies

IAPO enhances the standard APO by incorporating two strategies: a mirror opposition-based learning mechanism and a dynamic differential evolution strategy. To analyze the contribution of each strategy, two variant algorithms were constructed:

(1) BAPO: APO enhanced with the mirror opposition-based learning mechanism.

(2) DAPO: APO enhanced with the dynamic differential evolution strategy.

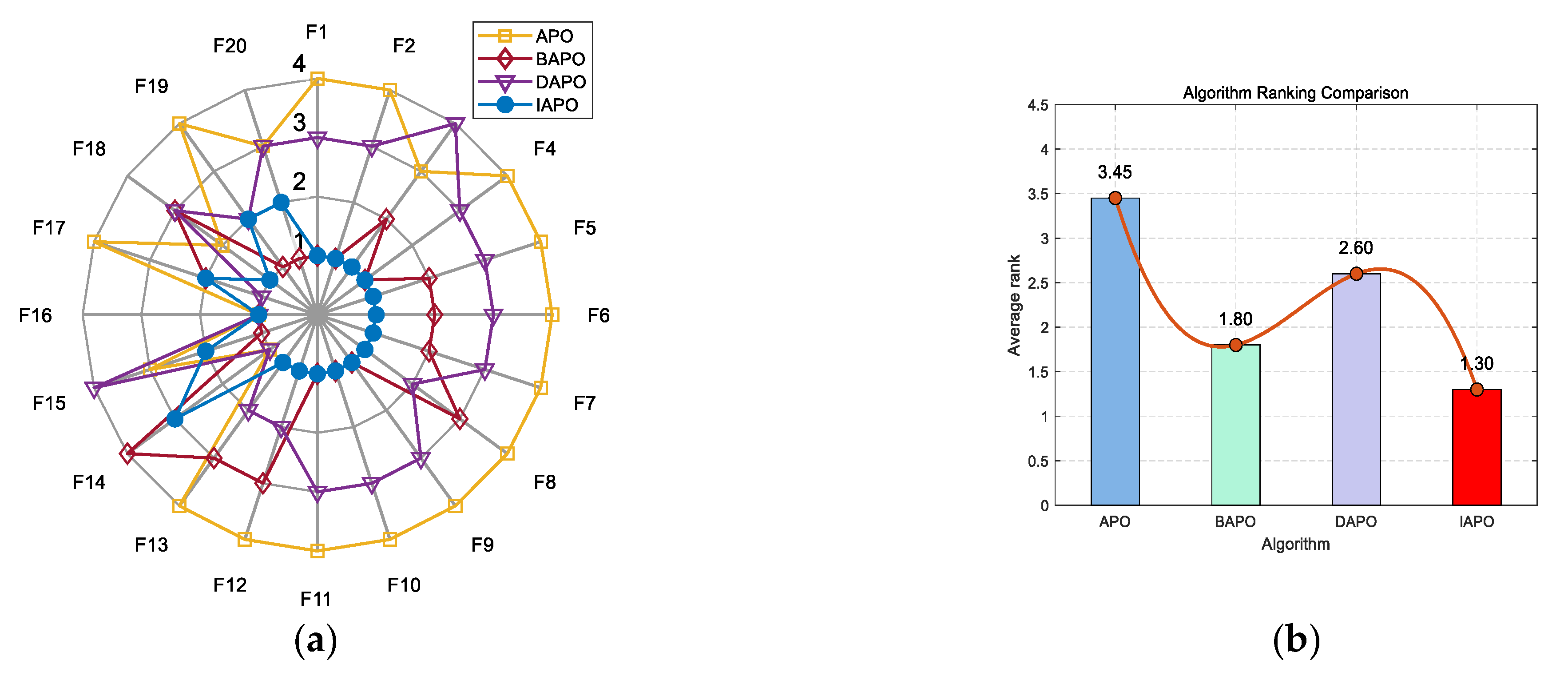

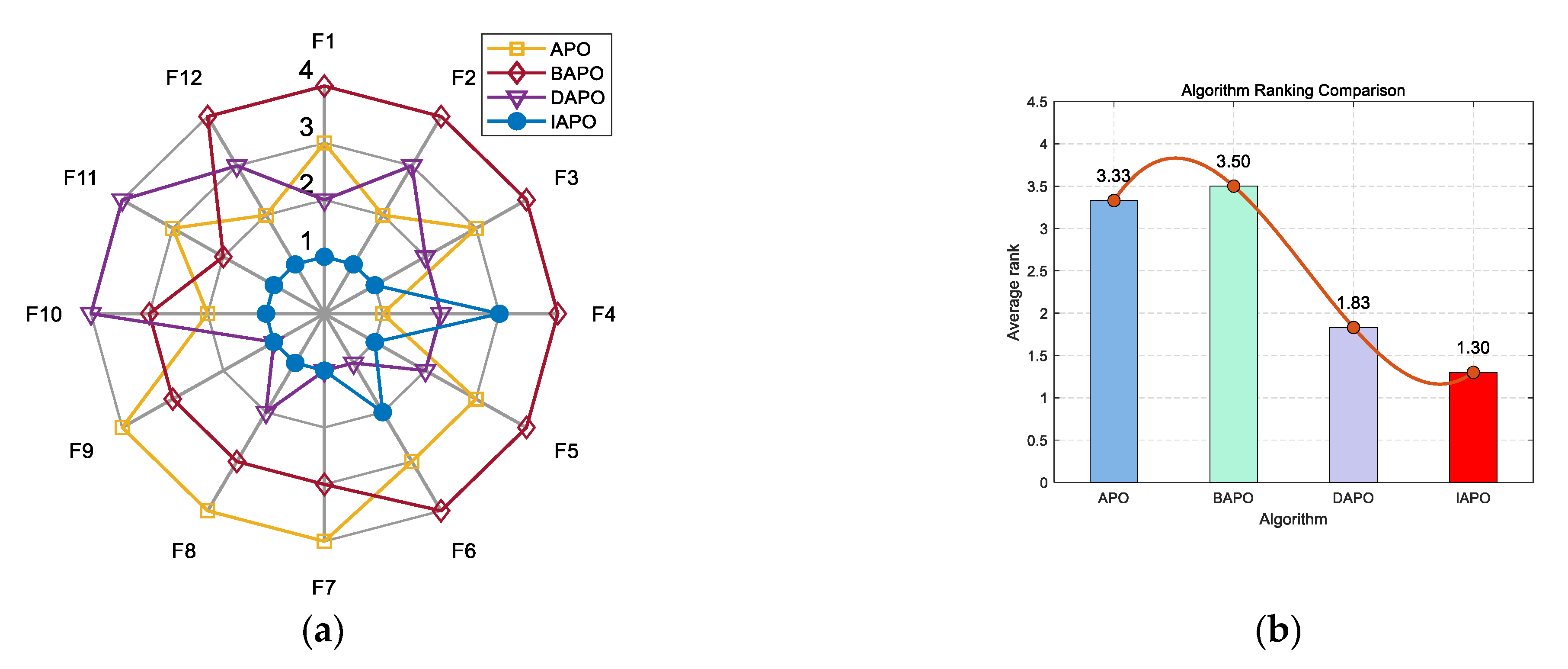

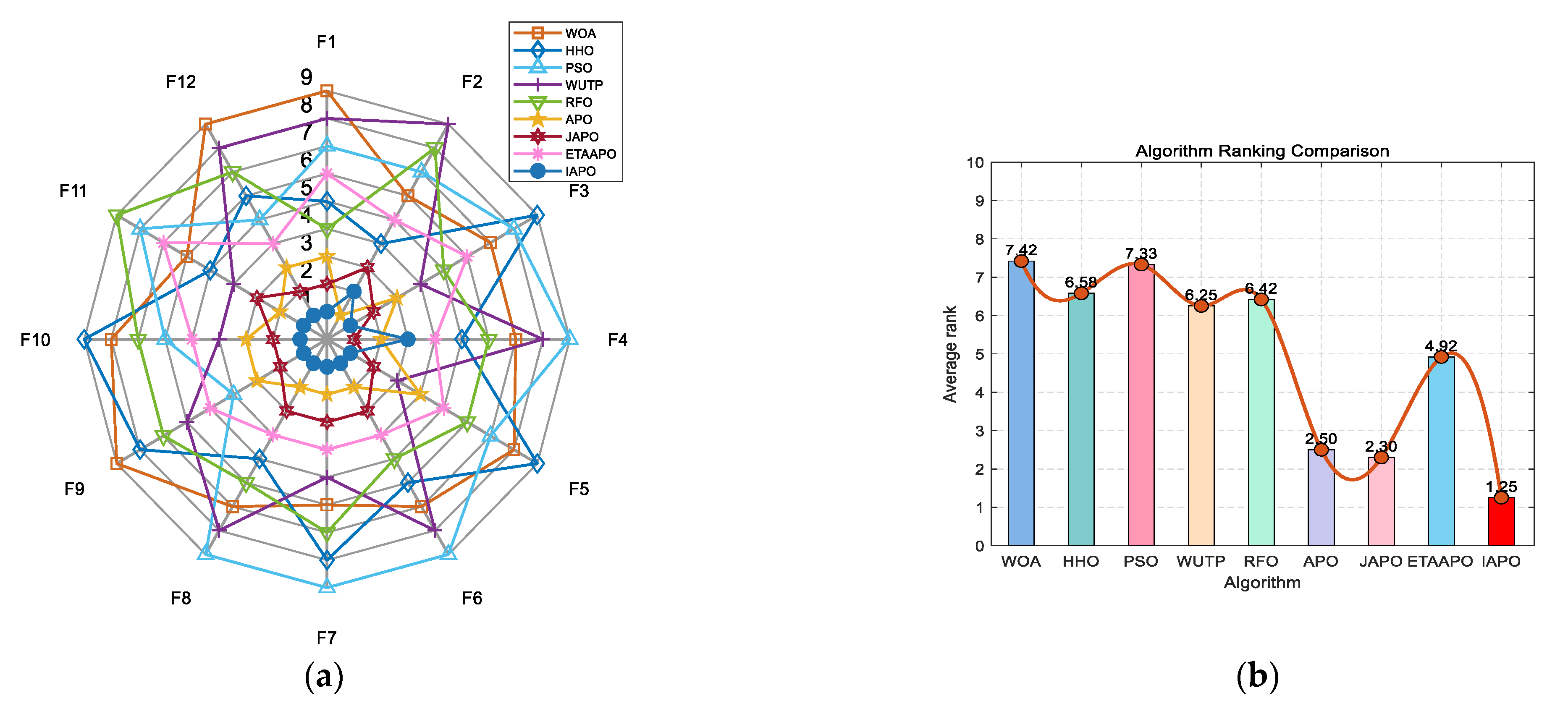

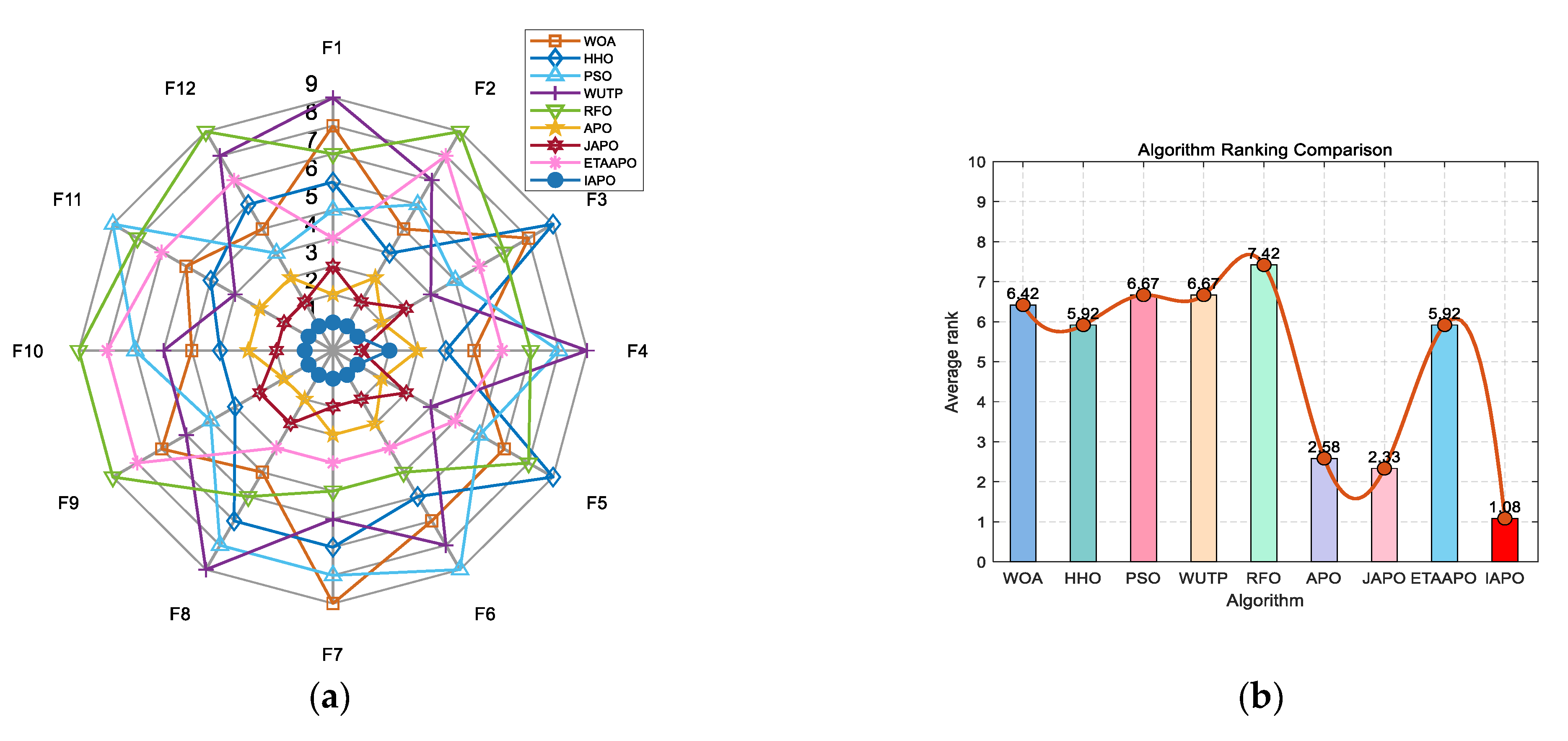

IAPO, APO, BAPO, and DAPO were evaluated on three distinct sets: the 20 benchmark functions and the CEC 2019 and CEC 2022 test sets. The results of the ablation study are visualized using radar charts and ranking bar charts: Figure 2 corresponds to the 20 benchmark functions, Figure 3 to CEC 2019, and Figure 4 and Figure 5 to the 10- and 20-dimensional CEC 2022 sets, respectively.

In the accompanying radar charts, each radial axis corresponds to a specific test function, with larger values indicating poorer performance. A smaller enclosed area suggests better overall performance. Across all figures, IAPO (blue circle) consistently occupies positions closer to the center and covers the smallest area, indicating superior performance and stability. Similarly, in the ranking bar charts, IAPO consistently ranks first, validating the effectiveness of integrating both improvement strategies.

4.2. Experiments on Benchmark Functions

For a comprehensive evaluation of IAPO’s performance, comparative experiments were conducted on the benchmark functions detailed in Table 1. The following representative swarm intelligence algorithms were selected for comparison: the well-established Whale Optimization Algorithm (WOA) [35], Harris Hawks Optimization (HHO) [36], and Particle Swarm Optimization (PSO) [8]; the more recently proposed Water Uptake and Transport in Plants (WUTP) algorithm [37] and Rüppell’s Fox Optimizer (RFO) [38]; two improved APO variants, JAPO [19] and ETAAPO [20]; and the standard APO algorithm. Parameter settings for each algorithm were set as follows: IAPO( , , ), WOA( , ), PSO( , , ), WUTP( , ), RFO( , ), APO( , ), JAPO( , , , , ), ETAAPO( , ). The optimization results of all algorithms on the 30-dimensional functions F1–F13 and the fixed-dimensional functions F14–F20 are summarized in Table 4, where bold numbers indicate the optimal values.

For the unimodal functions F1–F4, IAPO reached the theoretical optimum of zero in every run, so its best value, mean, and standard deviation were all zero, significantly outperforming the other eight algorithms. This demonstrates IAPO’s strong global search capability and stability. On functions F5–F7, IAPO also ranked first in all three evaluation metrics. On the multimodal functions F8–F13, which contain numerous local optima, IAPO attained the theoretical optimum on F8 with the smallest standard deviation, indicating a strong ability to avoid local optima. On F9–F11, HHO and IAPO delivered identical, excellent results. For F12 and F13, IAPO again outperformed all other algorithms across all metrics.

On the fixed-dimensional multimodal functions (F14–F20), the performance of the nine algorithms was comparable on F16. IAPO performed relatively poorly on F14, ranking sixth. On F18 and F19, IAPO’s standard deviation was slightly higher than those of ETAAPO and JAPO, placing it second. However, on F15, F17, and F20, IAPO achieved the best convergence accuracy, the best average performance, and the smallest standard deviation, validating its robustness and its effective balance between exploration and exploitation on these fixed-dimensional problems.

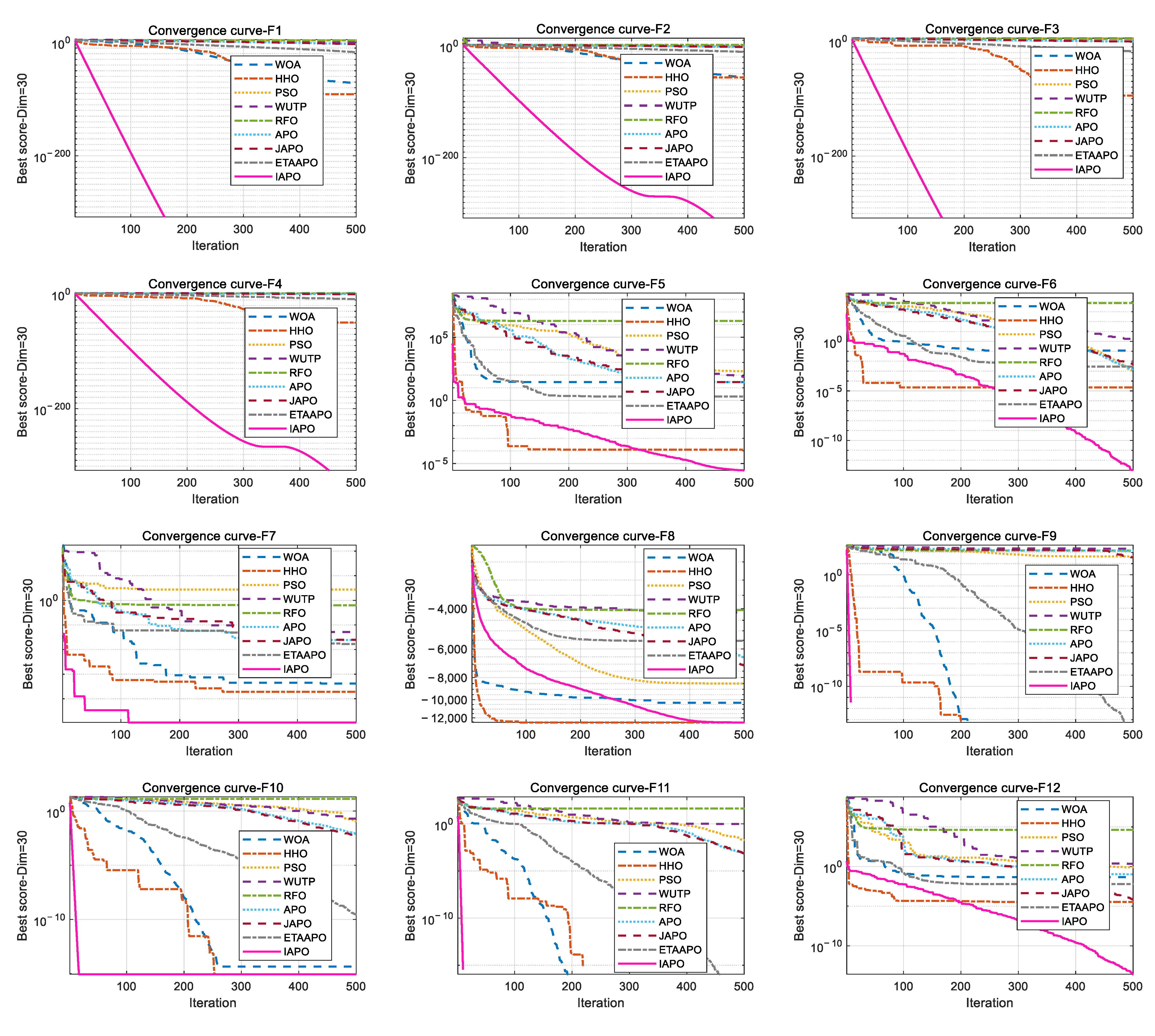

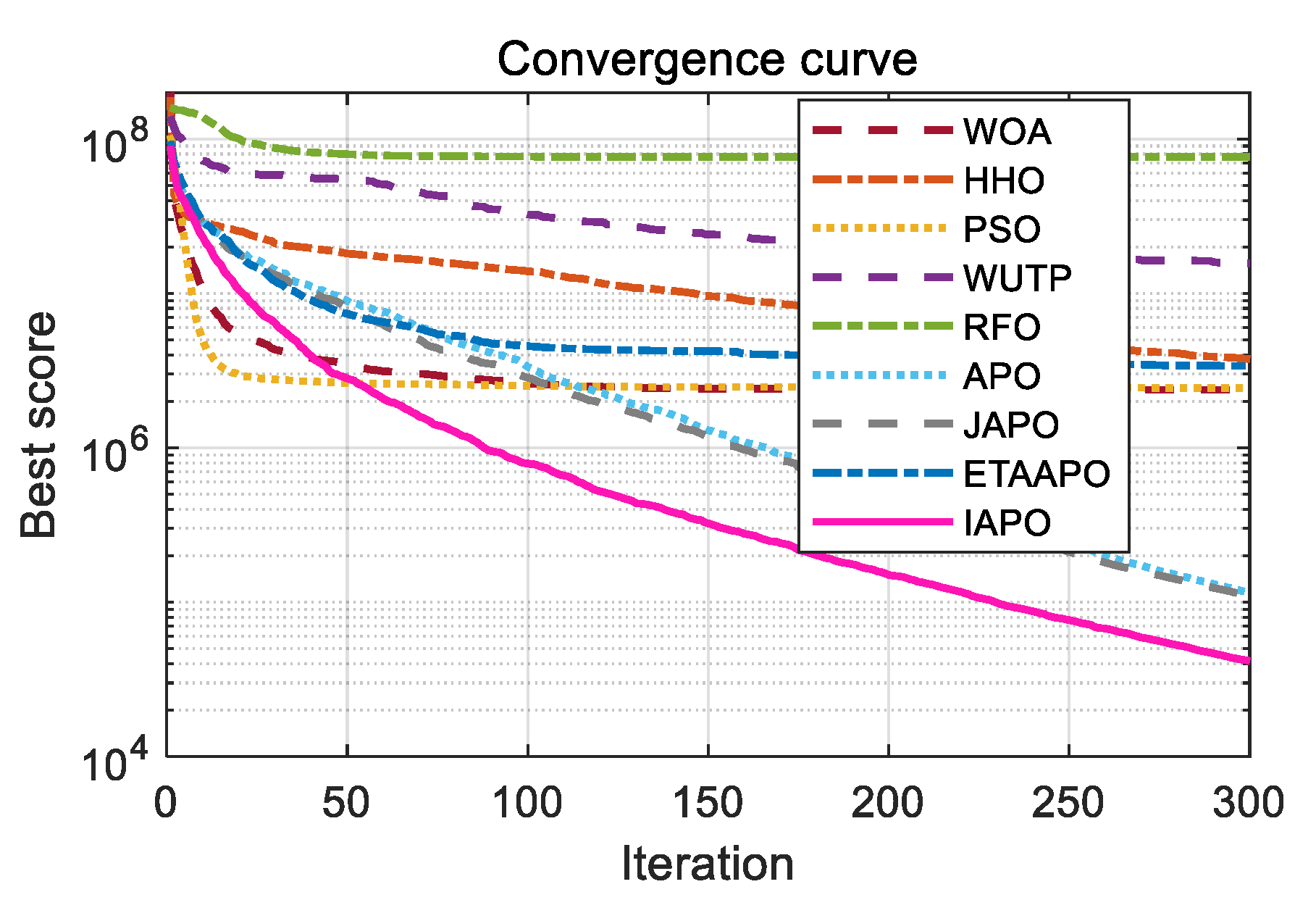

To further illustrate the convergence behavior, the convergence curves of IAPO and the other algorithms are plotted in Figure 6. For most test functions, including F1 to F4, F7, F9 to F11, F15, and F17 to F20, the IAPO curve converges rapidly and attains high precision early in the process. By the 50th iteration, IAPO already shows a clear advantage in both convergence speed and convergence accuracy. On functions F5, F6, F12, and F13, IAPO approaches its best values after approximately 200 iterations, and its curve continues to decline gradually up to iteration 500, reflecting sustained search refinement. Notably, on F7 and F8, IAPO repeatedly escapes local optima and finds better solutions, demonstrating a strong ability to avoid premature convergence. Overall, these results confirm that IAPO maintains high optimization accuracy and fast convergence across a variety of benchmark problems.

Figure 7 presents a radar chart and a bar chart summarizing the ranking of each algorithm across the 20 benchmark functions. In the radar chart, IAPO (blue circles) encloses the smallest area and is consistently positioned closer to the center, indicating superior and more stable performance. The bar chart confirms that IAPO achieves the highest overall ranking, underscoring its effectiveness compared with the other algorithms.

4.3. Experiments on CEC 2019 Test Functions

Following the experimental setup used for the benchmark functions in Section 4.2, the proposed IAPO algorithm was compared with WOA, HHO, PSO, WUTP, RFO, APO, JAPO, and ETAAPO. The results on the CEC 2019 test set are detailed in Table 5, with all parameter configurations consistent with those described in Section 4.2.

As shown in Table 5, IAPO achieves competitive results on most test functions and generally exhibits smaller standard deviations than the other algorithms, indicating more stable performance. Among the ten CEC 2019 functions, IAPO ranks first on eight (F1, F2, F4–F8, and F10). On F3, IAPO’s best value is slightly worse than that of JAPO, but its mean accuracy is the highest among all algorithms. On F9, IAPO’s mean value ranks third, behind JAPO and ETAAPO. Overall, IAPO ranks first on 80% of the functions, confirming its effectiveness in handling complex optimization problems.

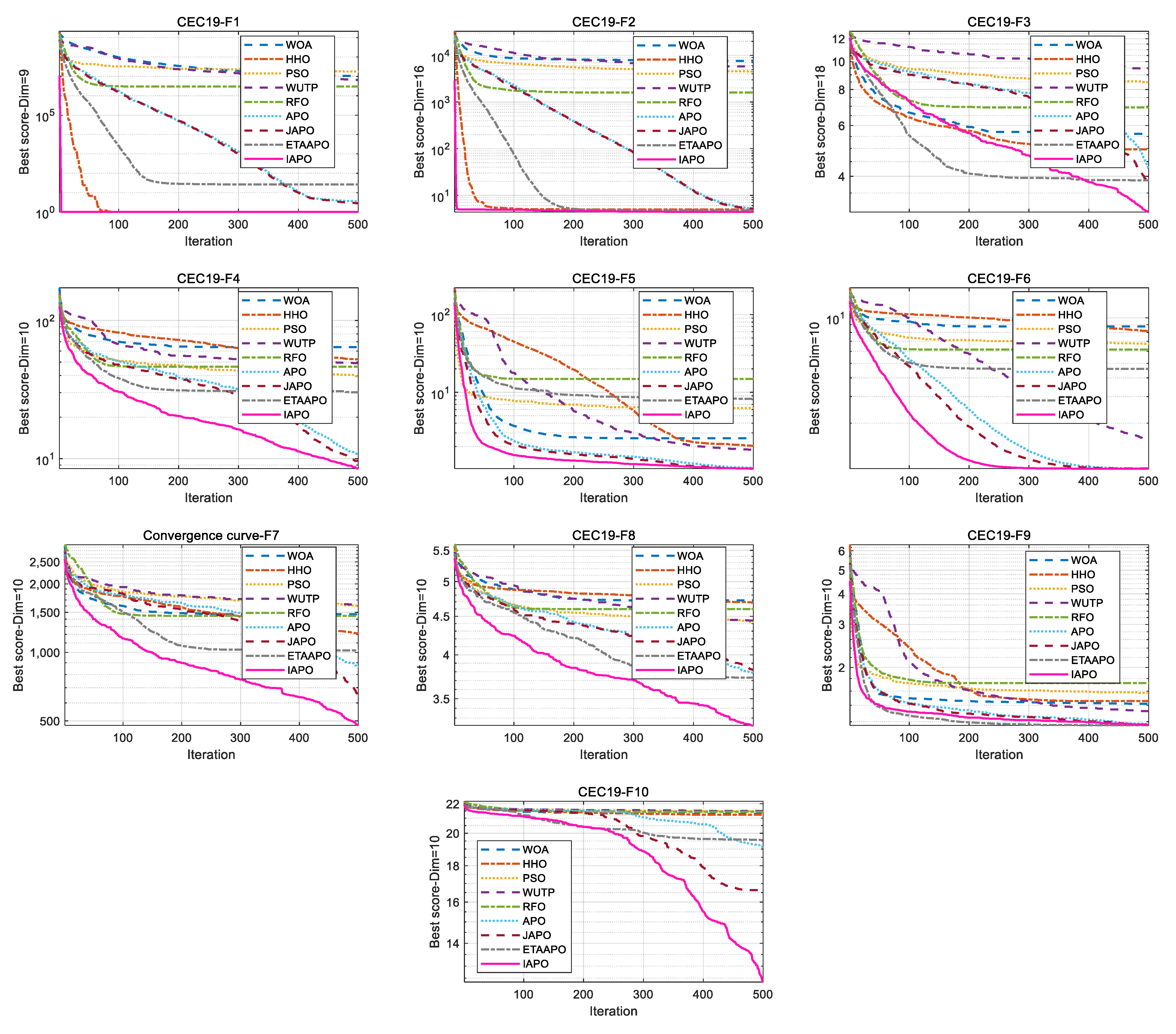

Figure 8 displays the convergence curves of IAPO and the comparison algorithms on the CEC 2019 test set. The results show that IAPO converges rapidly to lower fitness values on most functions. On F1, F2, F5, and F6, it reaches high precision within a small number of iterations, and by the 50th iteration its accuracy and convergence speed are already significantly better than those of the other algorithms. On functions such as F3, F4, F8, and F10, IAPO repeatedly escapes local optima and continues to refine the solution, demonstrating a strong ability to avoid local optima.

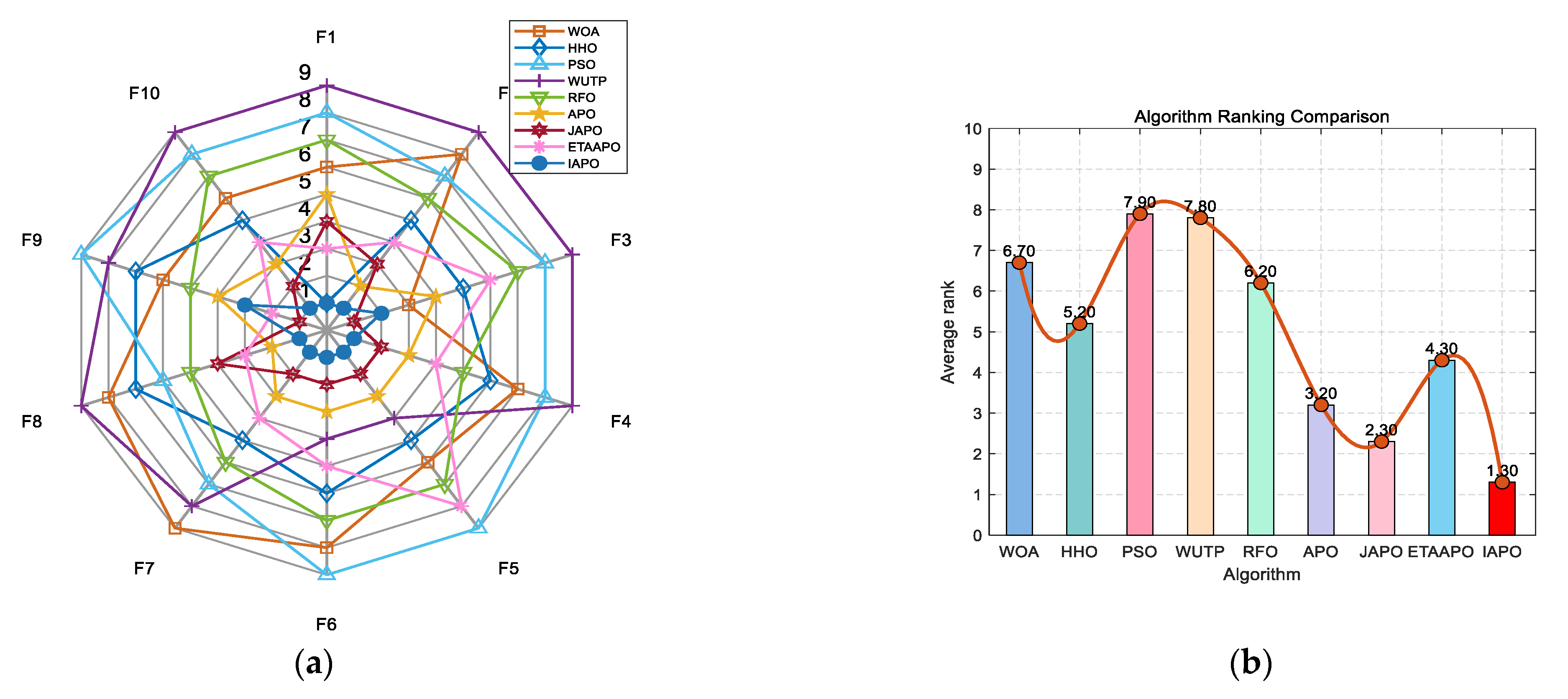

Figure 9 summarizes the ranking of each algorithm using a radar chart and a bar chart. In the radar chart, IAPO (blue circle) covers the smallest area and is positioned closest to the center, reflecting its superior and consistent performance. The bar chart further confirms that IAPO achieves the highest overall ranking.

4.4. Experiments on the CEC 2022 Test Set

The IAPO algorithm was compared with eight other swarm intelligence algorithms (WOA, HHO, PSO, WUTP, RFO, APO, JAPO, and ETAAPO) using the same parameter settings as in Section 4.2. The optimization results for the 10- and 20-dimensional CEC 2022 sets are presented in Table 6 and Table 7, respectively.

As shown in Table 6, for the 10-dimensional case, IAPO ranked first on 10 of the 12 functions. On F2, IAPO ranked second, slightly behind APO, while on F4 it placed third, with a marginal difference from the top two algorithms, APO and JAPO. In the 20-dimensional setting (Table 7), IAPO ranked first on 11 functions and second on F4, closely following JAPO. Overall, IAPO ranked first on 21 of the 24 function instances (87.5%) in the CEC 2022 test set.

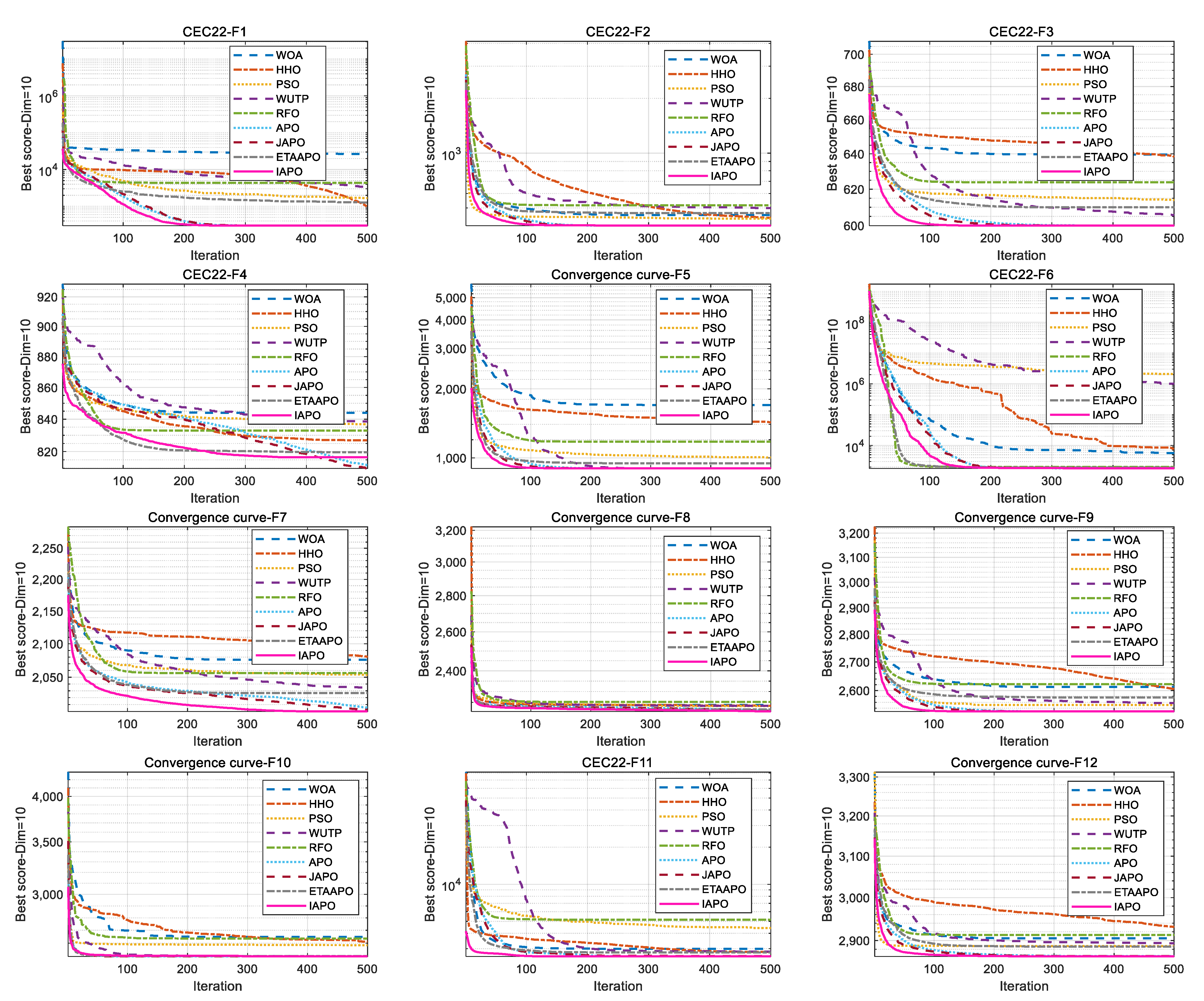

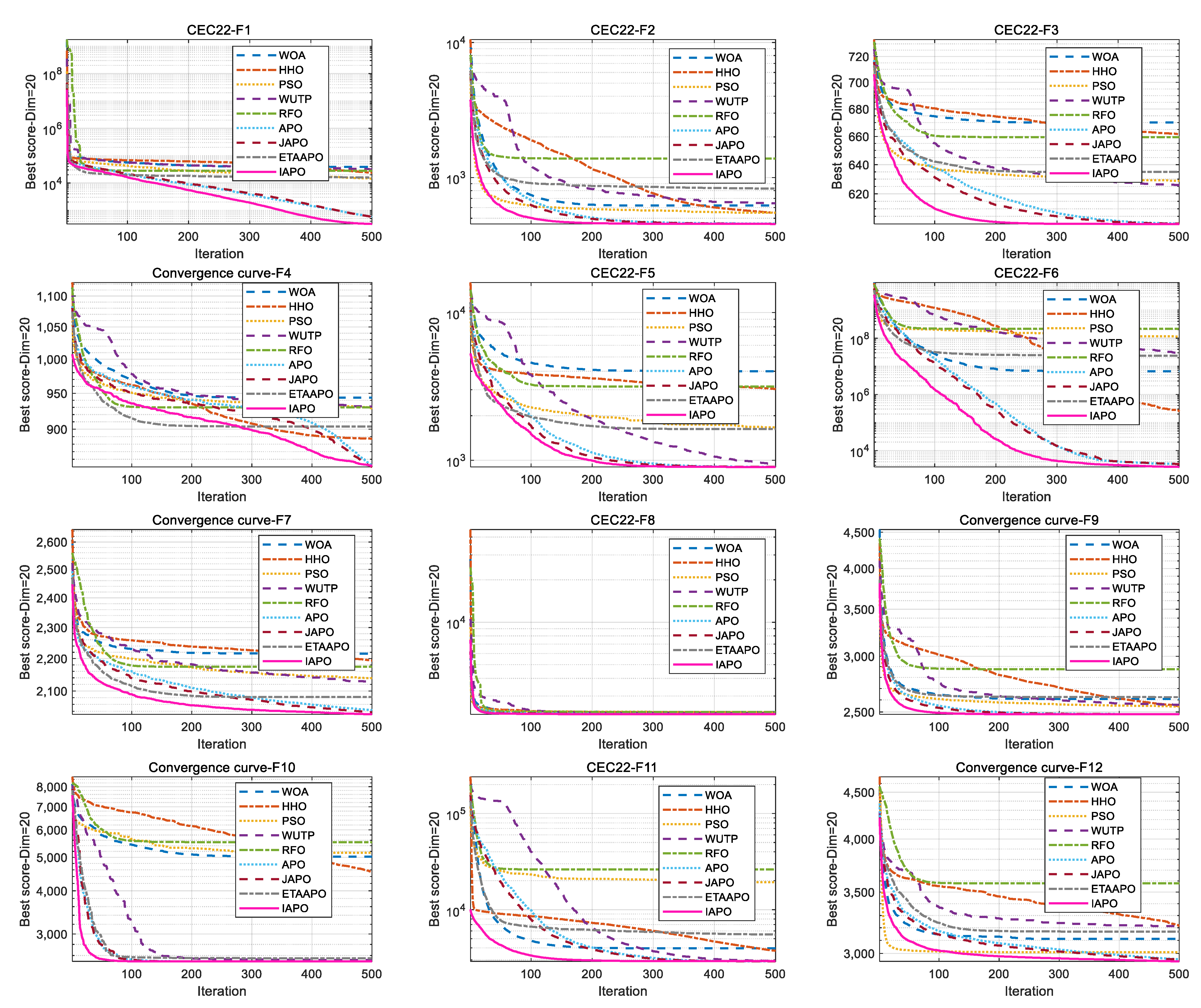

Figure 10 and Figure 11 illustrate the convergence curves of IAPO and the comparison algorithms for the 10- and 20-dimensional sets, respectively. In both figures, IAPO converges more rapidly than the other algorithms, with smoother convergence curves and fewer instances of stagnation in local optima. This indicates that IAPO offers not only faster convergence but also stronger global search capability and robustness on these challenging optimization problems.

Figure 12 and Figure 13 present the ranking comparisons based on the 10- and 20-dimensional CEC 2022 sets, respectively. In the radar charts, IAPO (blue circle) occupies the smallest area and is consistently positioned near the center, reflecting its stable and superior performance across most functions. The accompanying bar charts confirm that IAPO achieves the highest overall ranking, further validating its effectiveness.

In summary, IAPO exhibits rapid initial convergence, which is facilitated by the mirror opposition-based learning mechanism. This mechanism creates a high-quality, diverse population, providing a favorable starting point for the optimization process. Furthermore, IAPO performs strongly on complex multimodal functions, primarily owing to its adaptive differential evolution strategy, which dynamically balances global exploration and local exploitation throughout the iterations. By continually introducing new individuals, this strategy enables the algorithm to escape local optima consistently.

4.5. Nonparametric Statistical Analysis Using Wilcoxon Rank-Sum and Friedman Tests

To provide a statistically rigorous comparison of algorithm performance beyond basic metrics such as the best, mean, and standard deviation, this experiment employs the Wilcoxon rank-sum test at a 95% confidence level (significance level 0.05). The test assesses whether the differences between IAPO and each comparison algorithm are statistically significant and was implemented in MATLAB R2022a using the ranksum(x,y) function. A p-value below the 0.05 threshold indicates a statistically significant difference between the two algorithms, whereas a value at or above it indicates no significant difference. In the results, the symbols “+”, “=”, and “−” denote that IAPO performs significantly better than, shows no significant difference from, or performs significantly worse than the comparison algorithm, respectively.

The Wilcoxon test statistics for IAPO across the different test sets are summarized in Table 8. Expressed as the number of functions on which IAPO wins/ties/loses against the comparison algorithms, the results are 142/15/3 for the 20 benchmark functions, 70/8/2 for the CEC 2019 test set, 91/3/2 for the 10-dimensional CEC 2022 set, and 94/2/0 for the 20-dimensional CEC 2022 set. Each triple sums to the number of functions multiplied by the eight comparison algorithms (for example, 142 + 15 + 3 = 20 × 8 = 160). The prevalence of p-values below 0.05 indicates that IAPO’s performance differs significantly from that of the other algorithms on most functions.
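The sketch below shows one way to turn two 30-run samples into the “+”, “=”, and “−” labels. It is a from-scratch rank-sum test using the large-sample normal approximation with tie and continuity corrections; MATLAB’s ranksum may select a different method (for example, an exact one) in some cases, so p-values can differ slightly. Deciding the direction of a significant difference by comparing mean final fitness (minimization) is an assumption, because the text does not state that rule. ASCII "-" stands in for “−”.

```python
import math
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def ranksum_p(x: Sequence[float], y: Sequence[float]) -> float:
    """Two-sided rank-sum p-value (normal approximation, tie and continuity corrected)."""
    nx, ny = len(x), len(y)
    n = nx + ny
    pooled = list(x) + list(y)
    w = sum(average_ranks(pooled)[:nx])  # rank sum of the first sample
    mean_w = nx * (n + 1) / 2.0
    counts: Dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var_w = nx * ny / 12.0 * ((n + 1) - ties / (n * (n - 1)))
    if var_w <= 0.0:  # every value identical: no evidence of a difference
        return 1.0
    z = max(abs(w - mean_w) - 0.5, 0.0) / math.sqrt(var_w)
    return math.erfc(z / math.sqrt(2.0))  # equals 2 * (1 - Phi(z))


def wilcoxon_symbol(iapo: Sequence[float], other: Sequence[float], alpha: float = 0.05) -> str:
    """'+' IAPO significantly better, '-' significantly worse, '=' no significant difference."""
    if ranksum_p(iapo, other) >= alpha:
        return "="
    mean_iapo = sum(iapo) / len(iapo)
    mean_other = sum(other) / len(other)
    return "+" if mean_iapo < mean_other else "-"  # assumption: direction by mean fitness


iapo_runs = [0.01 * k for k in range(30)]
other_runs = [1.0 + 0.01 * k for k in range(30)]
print(wilcoxon_symbol(iapo_runs, other_runs))  # +
```

When two algorithms both reach the same value in every run (for example, zero on F1–F4), the tie-corrected variance becomes zero and the sketch reports “=”, which is the natural outcome for identical samples.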

To further validate the overall performance ranking of IAPO against the comparison algorithms, the non-parametric Friedman test was conducted. For each test function, the algorithms were ranked based on their optimization results (with Rank 1 assigned to the best performer). If multiple algorithms achieved identical results, they were assigned an average rank. The test was implemented in MATLAB 2022a using the friedman(data) function. The Friedman test compares the average ranks of all algorithms across all functions, where a lower average rank indicates superior overall optimization performance. The calculation of the average rank is shown in Equation (18).

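$$\bar{R}_{i,j}=\frac{1}{N}\sum_{k=1}^{N} r_{i,j}^{k},\qquad \bar{R}_{i}=\frac{1}{M}\sum_{j=1}^{M}\bar{R}_{i,j} \tag{18}$$

where $\bar{R}_{i,j}$ denotes the average rank of the $i$-th algorithm over $N$ independent runs on the $j$-th test function, $N$ is the number of independent runs, $r_{i,j}^{k}$ denotes the rank of the $i$-th algorithm among all algorithms in the $k$-th run, $\bar{R}_{i}$ is the overall average rank of the $i$-th algorithm, and $M$ is the number of test functions.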
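The average-rank calculation of Equation (18) can be sketched in Python as follows. The data layout (functions × runs × algorithms) and the function names are assumptions made for illustration; as described above, tied algorithms within a run share the average rank. Only the average ranks are computed here, not the Friedman test statistic or the p-value that MATLAB's friedman function also reports.

```python
def average_ranks(values):
    """Ascending ranks (1 = best for minimization); tied values share the mean rank."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_average_ranks(results):
    """results[j][k][i]: final fitness of algorithm i in run k on test function j.

    Ranks are averaged over the N runs of each function, then over the M functions.
    """
    m = len(results)            # M: number of test functions
    n_alg = len(results[0][0])  # number of algorithms
    overall = [0.0] * n_alg
    for runs in results:
        n_runs = len(runs)      # N: number of independent runs
        per_function = [0.0] * n_alg
        for run in runs:
            for i, r in enumerate(average_ranks(run)):
                per_function[i] += r / n_runs  # average rank on this function
        for i in range(n_alg):
            overall[i] += per_function[i] / m  # overall average rank
    return overall


# Two functions, two runs, three algorithms (toy numbers, not results from this paper).
toy = [
    [[1, 2, 3], [1, 3, 2]],
    [[5, 5, 1], [2, 1, 3]],
]
print(friedman_average_ranks(toy))  # [1.625, 2.125, 2.25]
```

Because every run distributes the ranks 1 to K among K algorithms, the overall average ranks always sum to K(K + 1)/2, which is a quick sanity check on the ranking step.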

Table 9 shows the Friedman test results comparing IAPO with the eight comparison algorithms. IAPO achieved the lowest average rank on every test set: 1.15 on the 20 benchmark functions, 2.02 on the CEC 2019 test set, 1.50 on the 10-dimensional CEC 2022 set, and 1.48 on the 20-dimensional CEC 2022 set. IAPO therefore ranks first overall among all nine algorithms, indicating the best overall optimization performance in this comparison.

5. Experiments in Practical Engineering Optimization Problems

The applicability of IAPO to practical engineering design is further assessed using three constrained optimization problems: the planetary gear train design, the heat exchanger network design (case 1), and the blending-pooling-separation problem. The performance of IAPO is compared with that of WOA, HHO, PSO, WUTP, RFO, APO, JAPO, and ETAAPO.

All experiments were conducted in MATLAB 2022a under identical hardware and software conditions. Each algorithm was run 30 times independently with a population size of 30 and a maximum of 300 iterations, and the best, mean, and standard deviation (Std) of the results were recorded as evaluation metrics.
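For reference, the three metrics can be computed from the 30 final objective values of each algorithm as shown below. This short sketch assumes minimization (Best is the smallest value) and that Std is the sample standard deviation with an N − 1 denominator, which is the default of MATLAB's std; the helper name summarize_runs is hypothetical.

```python
from statistics import mean, stdev


def summarize_runs(final_values):
    """Best, Mean, and Std of the final objective values from independent runs.

    Minimization is assumed; stdev uses the sample (N - 1) denominator.
    """
    return {
        "Best": min(final_values),
        "Mean": mean(final_values),
        "Std": stdev(final_values),
    }


print(summarize_runs([1.0, 2.0, 3.0, 4.0]))
```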

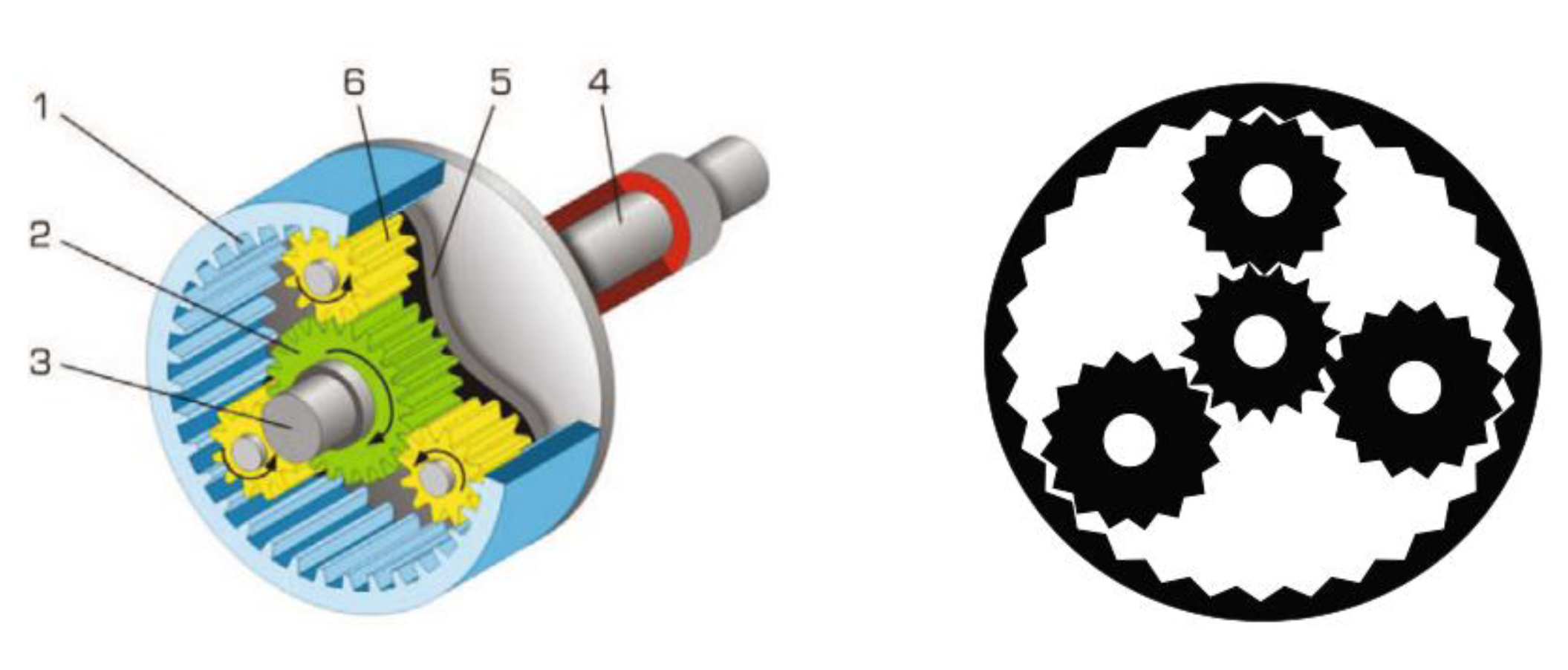

5.1. Planetary Gear Train Design Problem

The planetary gear train design problem [39] is a constrained optimization task in mechanical power transmission systems; a schematic is shown in Figure 14. Formulated for automotive applications, the problem aims to minimize the maximum gear ratio error of a planetary transmission system. The design variables comprise six integer variables (the numbers of gear teeth) and three discrete variables (two gear modules and the number of planetary gears), giving nine variables subject to 11 constraints.

Mathematically, the optimization model can be expressed as:

Consider variable

Minimize

Where

The optimization results of IAPO and other comparison algorithms on the planetary gear design problem are summarized in

Table 10, including the recorded best, mean, and standard deviation values. The corresponding optimal design variable sets for each algorithm are summarized in

Table 11, which indicates that IAPO achieves the smallest values in all three performance metrics. Specifically, IAPO obtains the best objective value of 5.2559 × 10

−1 with the following optimal variable set: (35, 26, 25, 24, 20, 87, 1.5461, 2.0606, 1.4739). The convergence curve is illustrated in

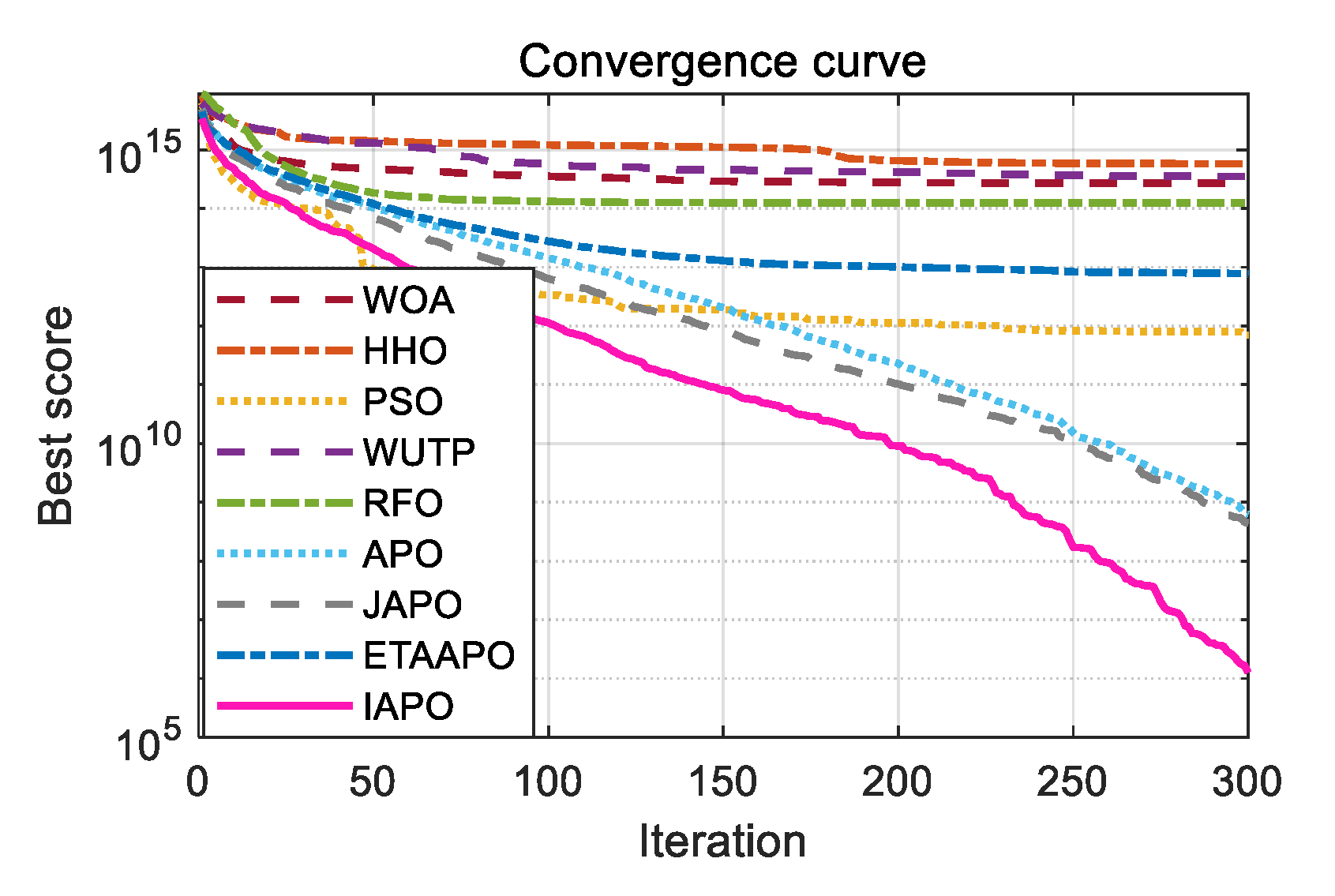

Figure 15, where IAPO exhibits the fastest convergence rate, with its curve consistently occupying the lowest position. These results demonstrate that IAPO is well-suited for this engineering problem and effectively minimizes the maximum gear ratio error.

5.2. Heat Exchanger Network Design Problem (Case 1)

The heat exchanger network design problem [40] concerns the optimal configuration of heat exchanger structures and is a complex engineering optimization challenge. A cold fluid stream must be heated by three hot fluids with distinct inlet temperatures, and the aim is to minimize the heat transfer area of the exchangers. This is the first case of the heat exchanger network design problem. Its formulation comprises a nonlinear objective function, two nonlinear and six linear equality constraints, and nine linear inequality constraints that account for temperature limitations.

Mathematically, the optimization model can be expressed as:

Minimize: .

The optimization results of IAPO and the comparison algorithms on the heat exchanger network design problem (Case 1) are summarized in Table 12 and Table 13. Table 12 presents the best, mean, and standard deviation values, while Table 13 lists the minimum area and the corresponding optimal variables obtained by each algorithm. IAPO achieves the smallest values in all three performance metrics, attaining a minimum heat transfer area of 1.09E+03 with the optimal variable set (0.809, 98.130, 78.244, 0.483, 1999921.277, 101.902, 100.008, 599.992, 700.008). The convergence curves in Figure 16 show that IAPO converges fastest, with its curve consistently lying lowest. These results confirm that IAPO is well suited to this problem and effectively minimizes the heat transfer area.

5.3. Blending-Pooling-Separation Problem

This problem [16] describes a typical chemical engineering unit operation in which a three-component feed mixture is separated into two multi-component product streams through a network of separators and splitting, blending, and pooling operations, ultimately yielding high-purity products. The operating cost of each separator is proportional to its flow rate, and mass balances must hold around each unit operation. The objective is to minimize the total cost while satisfying the material balances, component allocations, and flow constraints. The problem has a linear objective function, 15 nonlinear equality constraints, 17 linear equality constraints, and five linear inequality constraints. The decision variables x1 to x38 describe the flows in the network.

Mathematically, the optimization model can be expressed as:

Minimize: .

The optimization results of IAPO and the comparison algorithms on the blending-pooling-separation problem are summarized in Table 14 and Table 15. Table 14 reports the best, mean, and standard deviation values, while Table 15 provides the minimum cost and the corresponding optimal variables for each algorithm. IAPO achieves the smallest values in all three metrics, attaining the lowest cost of 1.49E+04 with the optimal variable set (32.5, 92.4, 59.9, 115.4, 28.2, 24.5, 6.8, 17.9, 3.8, 0.7, 2.2, 0.2, 80.7, 48.8, 15.4, 0.6, 32.3, 37.5, 28.9, 9.1, 0.6, 0.6, 0.1, 0.4, 0.5, 0.2, 0.6, 0.5, 0.5, 0.4, 0.4, 0.2, 0.4, 0.0, 0.9, 0.4, 0.4, 0.2). The convergence curves in Figure 17 confirm that IAPO converges most rapidly, with its curve consistently lying lowest. These findings demonstrate that IAPO is effective for this problem and successfully minimizes the total system cost.

To position this work within current research trends, IAPO is also compared with several recently proposed algorithms using results reported in the literature, as shown in Table 16. IAPO performs best on the 20 benchmark functions, applies well to the engineering problems, and is robust, giving relatively strong overall performance.

6. Conclusions and Expectations

This paper proposes an improved Arctic Puffin Optimization (IAPO) algorithm to address the slow initial convergence, susceptibility to local optima, and poor exploration-exploitation balance of the standard APO. The proposed IAPO algorithm integrates a lens imaging opposition-based learning mechanism with a dynamic differential evolution strategy. Three categories of metaheuristic algorithms are selected for comparison: classical algorithms, recently proposed swarm intelligence algorithms, and improved variants of APO. Comprehensive experiments were conducted on 20 benchmark functions and the CEC 2019 and CEC 2022 test sets. The results show that IAPO achieves higher accuracy, faster convergence, and better robustness, securing first-place average rankings of 1.35, 1.30, 1.25, and 1.08 on the 20 benchmark functions, CEC 2019, and the 10- and 20-dimensional CEC 2022 test sets, respectively. In addition, in three engineering optimization experiments, IAPO obtained the best solutions of 5.2559 × 10⁻¹, 1.09 × 10³, and 1.49 × 10⁴ for the respective problems, ranking first in all cases. These results further support the practical value of the algorithm. However, IAPO also has limitations. Its performance was occasionally inferior to that of the standard APO on a small number of test functions. Moreover, although the adaptive strategy reduces the risk of premature convergence, it cannot guarantee escape from local optima in all scenarios.

Given that IAPO demonstrates superior overall performance on the test sets and the three engineering problems despite suboptimal results on a few specific tests, future work will focus on the following directions: (1) applying IAPO to a wider range of practical engineering problems; (2) replacing the maximum iteration limit with a maximum number of fitness evaluations as the stopping criterion to ensure experimental fairness; (3) conducting comparative experiments between IAPO and more state-of-the-art algorithms on additional benchmark datasets; (4) selecting appropriate strategies according to the characteristics of different optimization problems, or combining IAPO with other algorithms; and (5) extending IAPO to multi-objective optimization problems.