1. Introduction

In modern industrial production systems, equipment failures not only cause enormous economic losses but may also trigger serious safety accidents [1]. Therefore, accurate and timely fault detection has become a critical technology for ensuring industrial safety and production efficiency [2]. Industrial fault detection is essentially a complex optimization problem [3] that requires finding optimal feature data in high-dimensional feature spaces to achieve accurate identification and classification of fault patterns [4]. Meanwhile, in numerous industrial applications such as engineering design [5], parameter tuning [6], and resource allocation, the solution quality of optimization problems directly affects system performance and economic benefits. However, real-world fault detection problems often exhibit complex characteristics such as high dimensionality, multi-modality, nonlinearity, and strong coupling [7], making traditional mathematical optimization methods inadequate for handling such challenging problems.

Metaheuristic optimization algorithms [8], as a class of intelligent optimization techniques that do not rely on problem-specific mathematical properties, have demonstrated tremendous application potential in fault detection feature selection, classifier parameter optimization, and various engineering optimization problems due to their powerful global search capabilities and good adaptability [9]. These algorithms design effective search strategies to solve complex optimization problems by simulating intelligent phenomena in nature, such as biological evolution [10], swarm behavior [11], and physical processes [12]. In recent years, researchers have proposed numerous metaheuristic algorithms, including Pied Kingfisher Optimizer (PKO) [13], Red Fox Optimizer (RFO) [14], Sine Cosine Algorithm (SCA) [15], Genetic Algorithm (GA) [16], Particle Swarm Optimization (PSO) [17], Differential Evolution (DE) [18], Grey Wolf Optimizer (GWO) [19], and Whale Optimization Algorithm (WOA) [20]. Notably, Pramanik et al. [21] proposed a hybrid algorithm combining GA and GWO to select and reduce feature dimensions. Dhal et al. [22] proposed a hybrid PSO and GWO algorithm, named BGWOPSO, for binary feature selection. Abinayaa [23] proposed a hybrid optimization framework that integrates GWO with DE to address the high-dimensionality and redundancy issues in EEG signal feature selection. These algorithms have achieved significant success in industrial applications.

The Black-winged Kite Algorithm (BKA) [24] is a recently proposed bio-inspired metaheuristic optimization algorithm that achieves global optimization search by simulating the unique hovering hunting behavior and seasonal migration patterns of black-winged kites [25]. BKA abstracts the attacking behavior of black-winged kites into a local fine search mechanism and transforms their migration behavior into a global exploration strategy, theoretically possessing good exploration-exploitation balance capabilities [26]. BKA has demonstrated competitive advantages in handling standard benchmark optimization problems, laying the foundation for its application in fault detection and engineering optimization. However, in complex high-dimensional fault detection scenarios, the original BKA is prone to local optima traps [27], leading to significantly reduced detection accuracy and reliability when dealing with similar fault patterns.

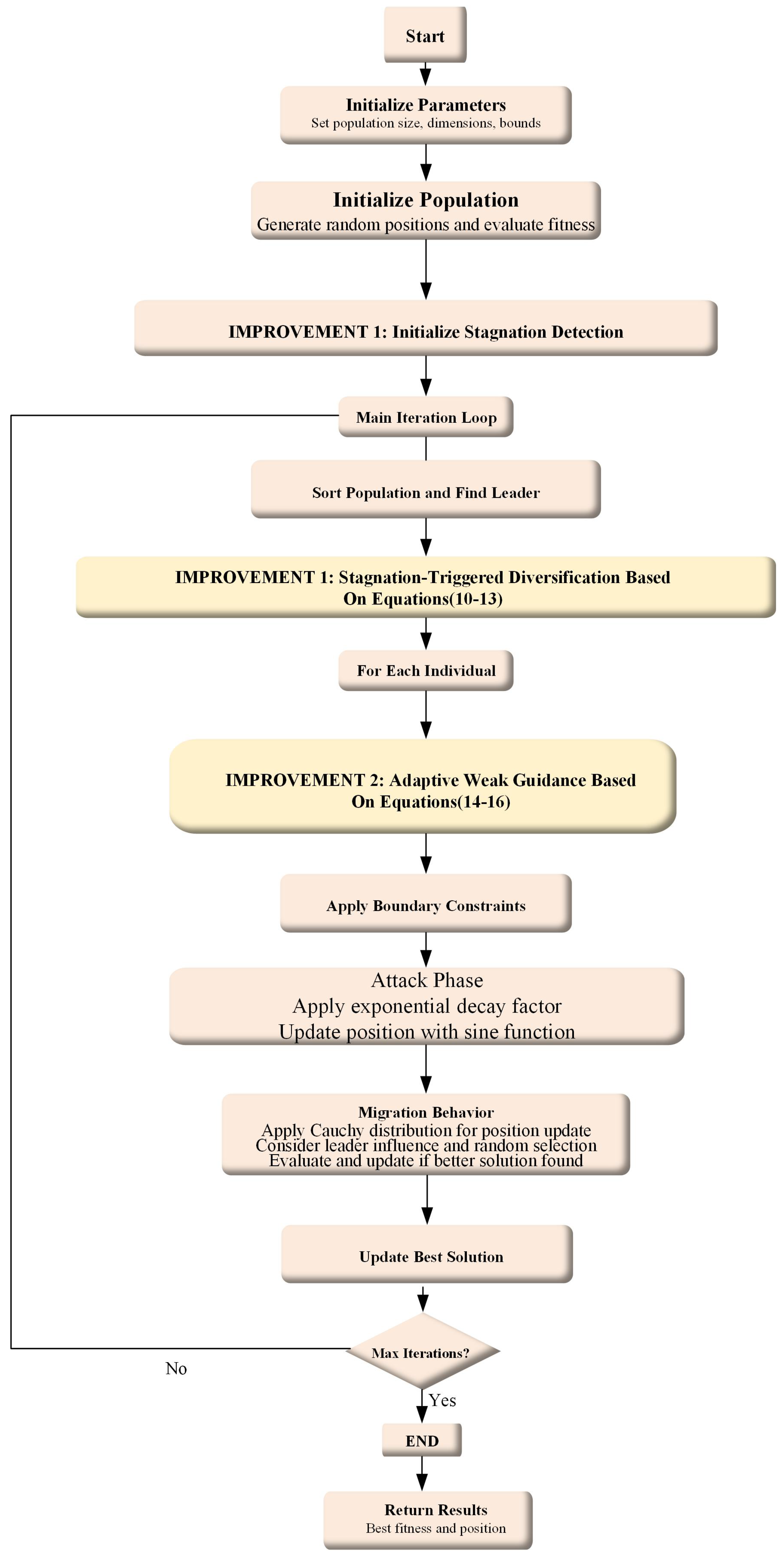

To address the aforementioned problems, this paper proposes an Improved Black-winged Kite Algorithm (IBKA). The algorithm significantly enhances the optimization performance of the original BKA by integrating stagnation-triggered diversification and adaptive weak guidance mechanisms. Specifically, the main technical contributions of this research include:

Development of an improved BKA algorithm that integrates stagnation-triggered diversification and adaptive weak guidance mechanisms. The former employs an intelligent stagnation detection strategy to monitor the algorithm’s convergence status in real-time, systematically applying mild random perturbations to the worst-performing individuals to enhance population diversity when consecutive stagnation is detected. The latter adopts a conditionally triggered elite guidance strategy to provide subtle directional guidance from the optimal solution to poorly performing individuals in the later stages of the algorithm. The synergistic effect of these dual mechanisms effectively resolves local optima trap problems while significantly improving convergence efficiency, all while maintaining the algorithm’s exploration capabilities.

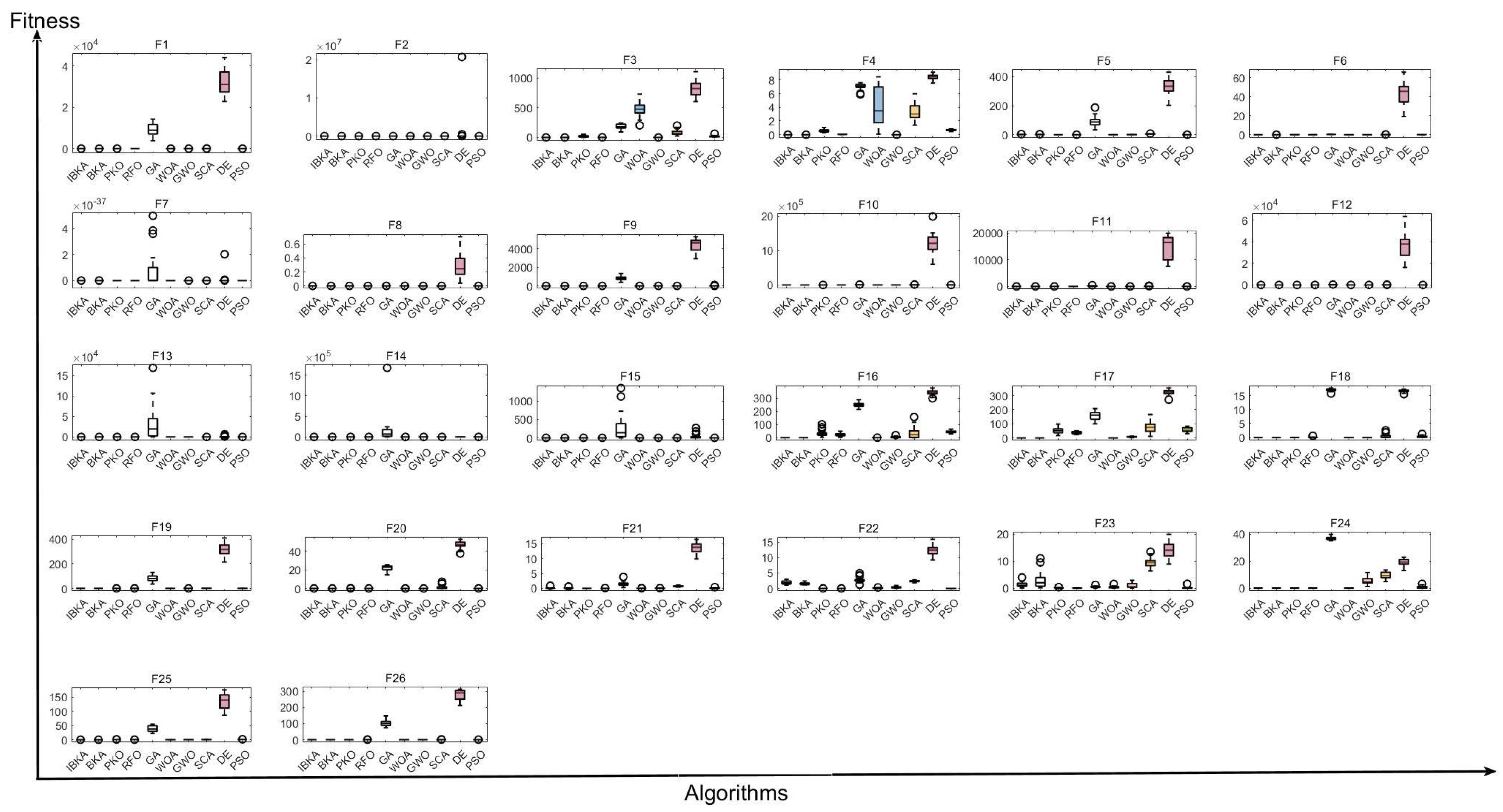

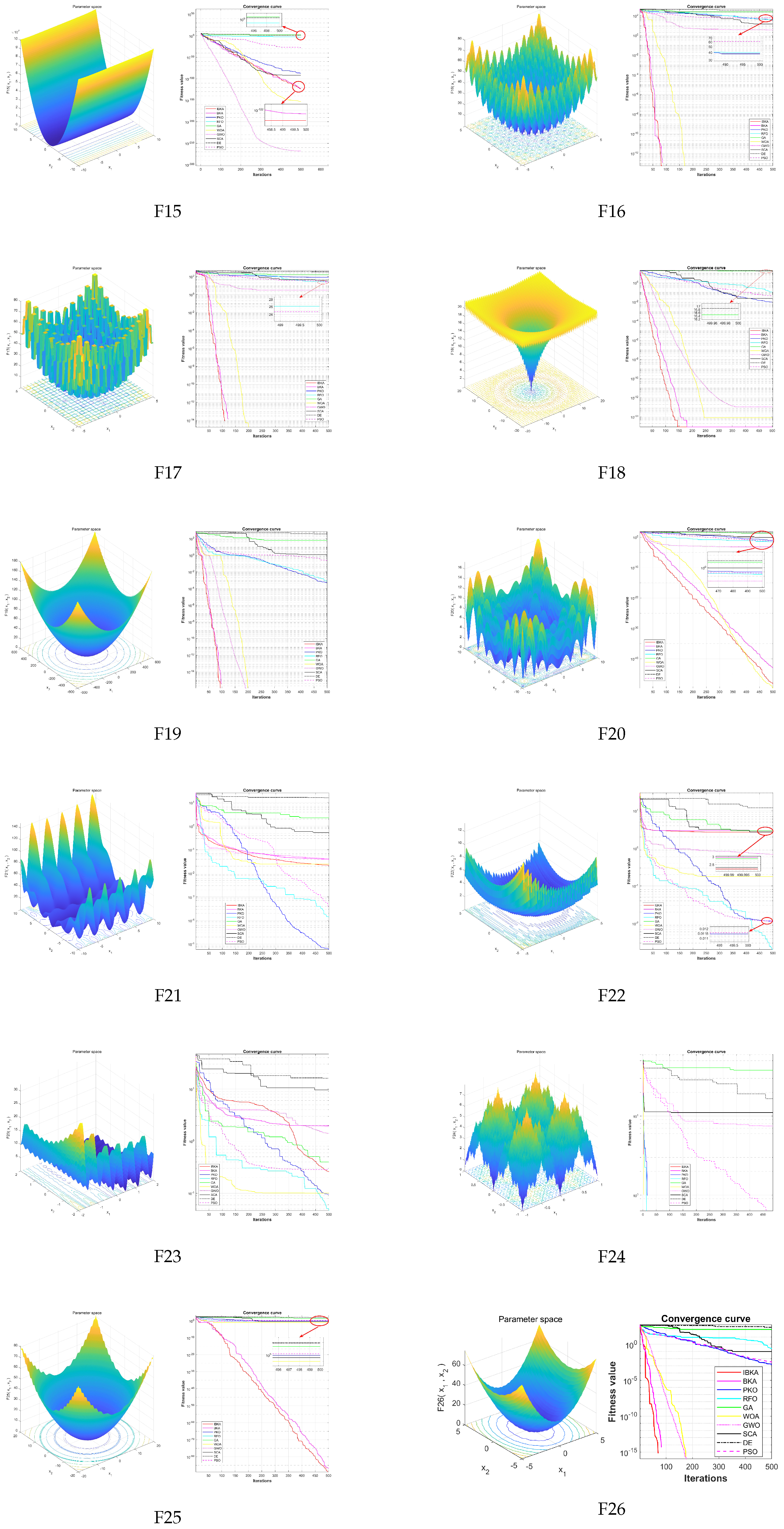

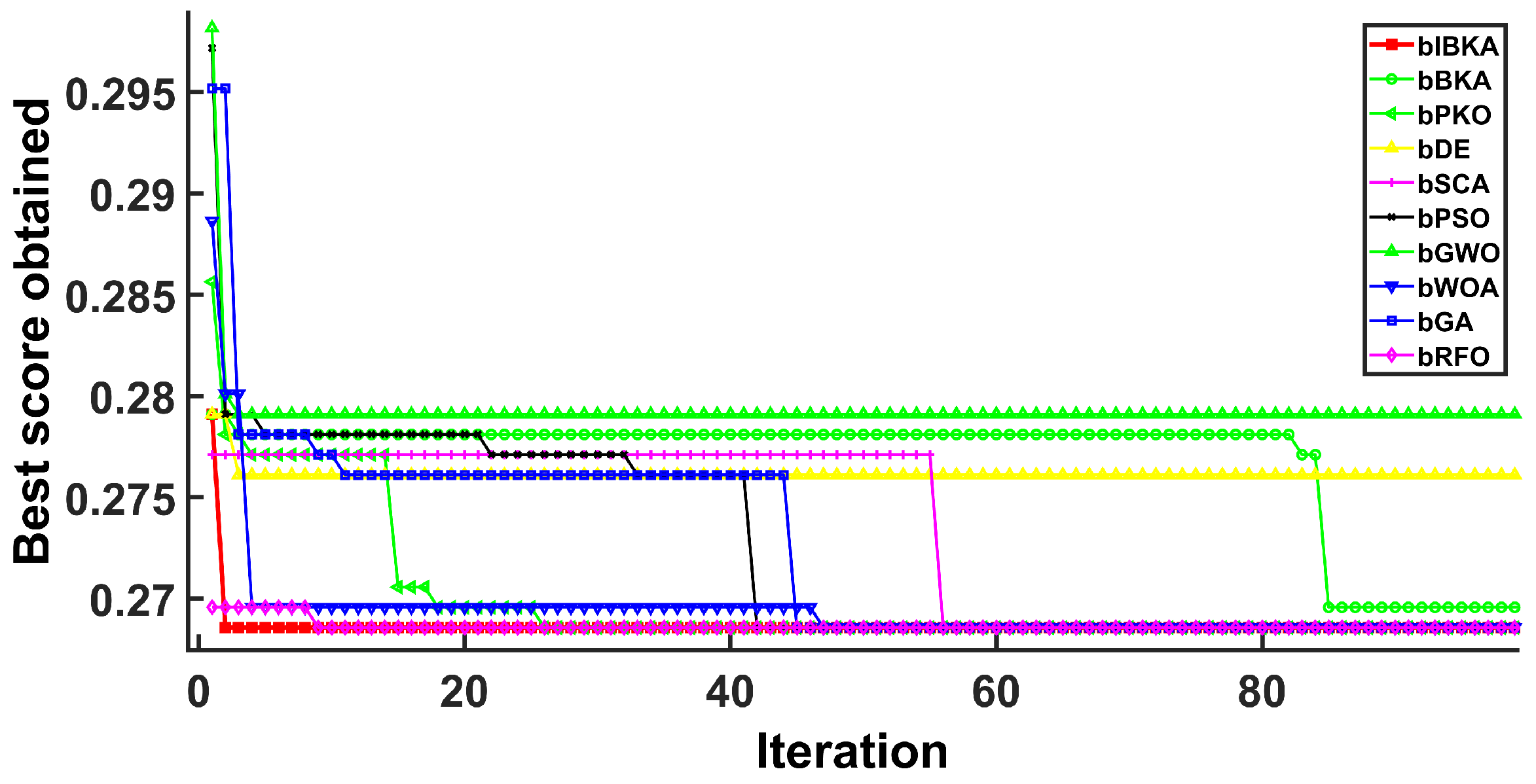

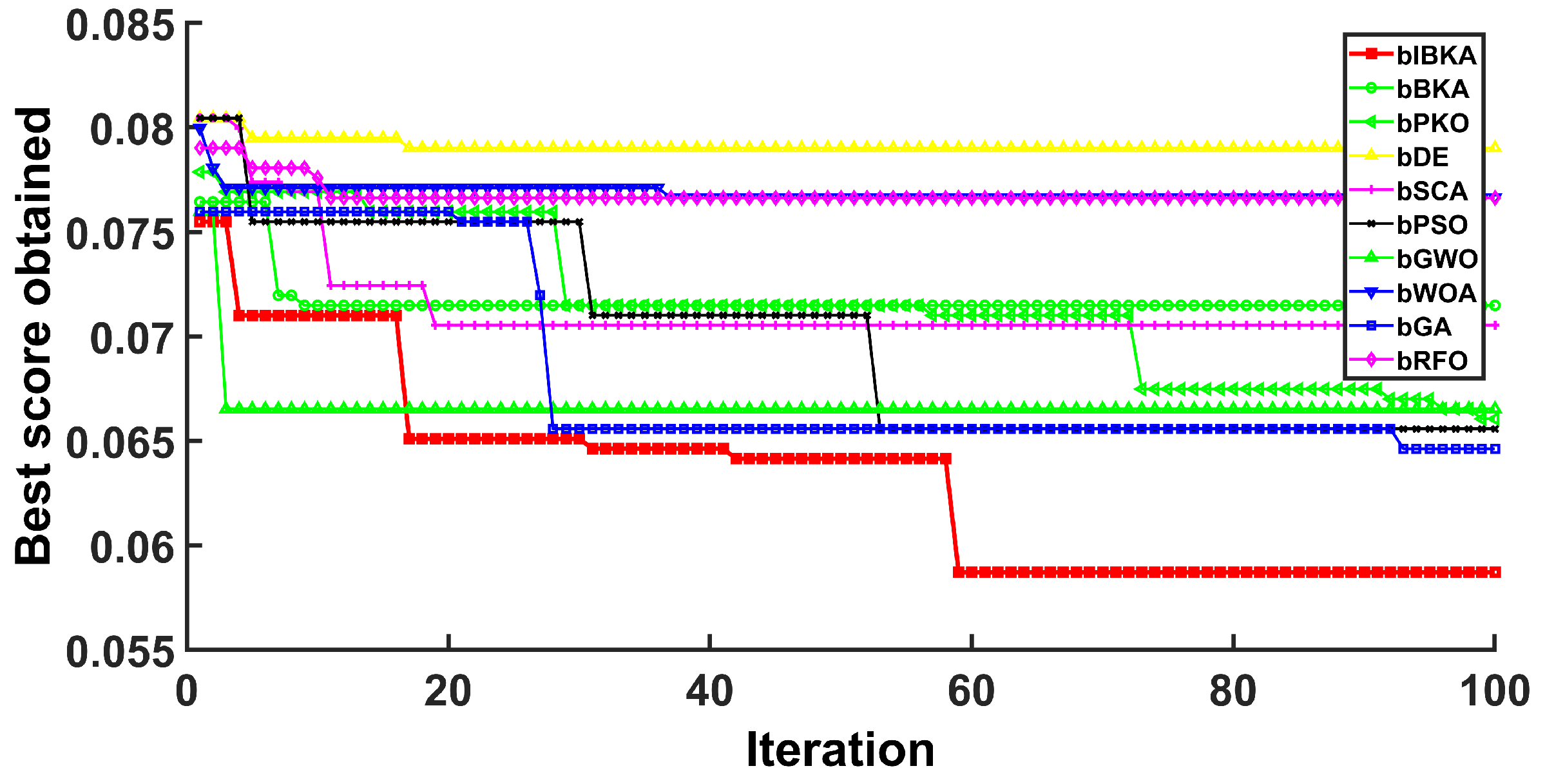

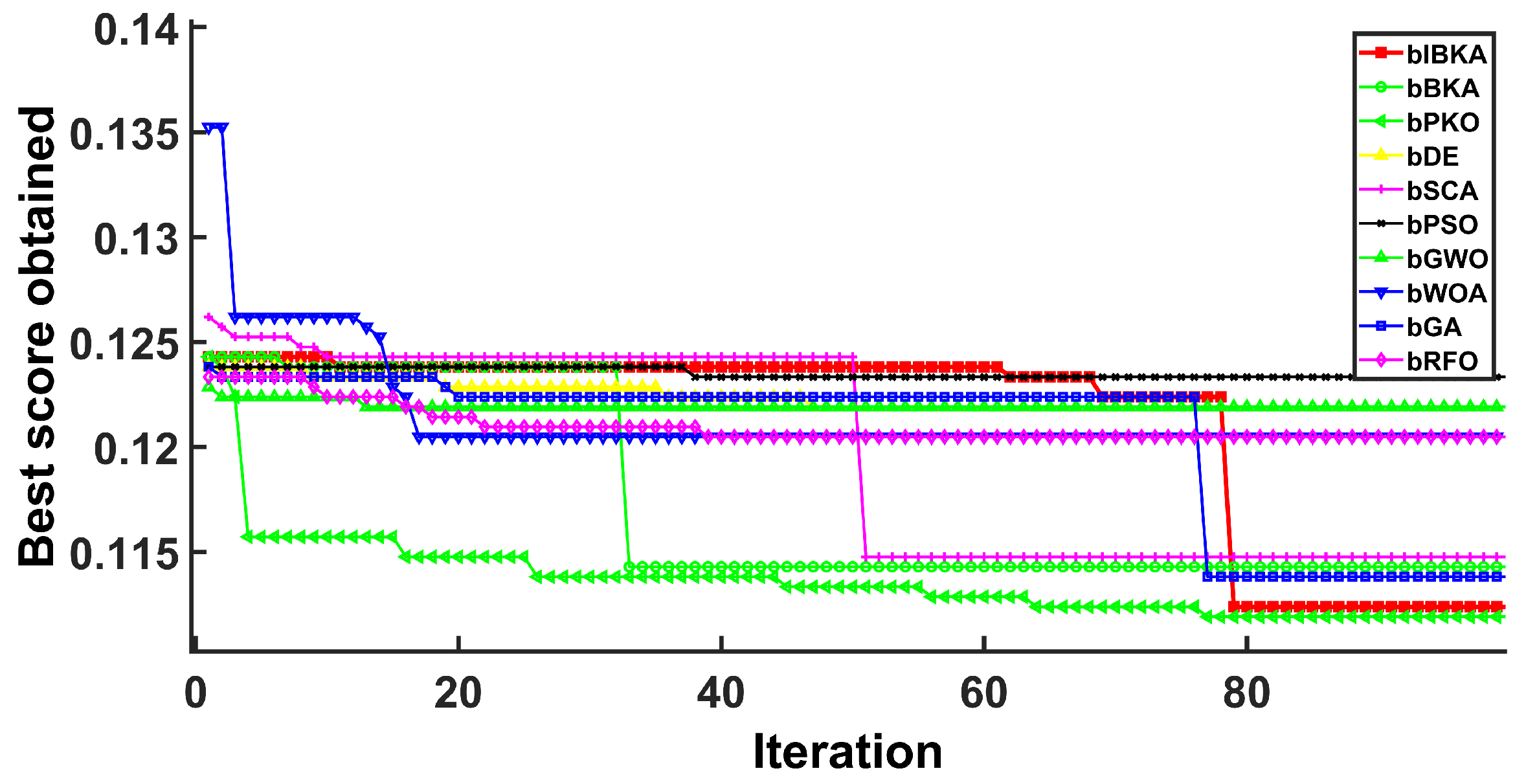

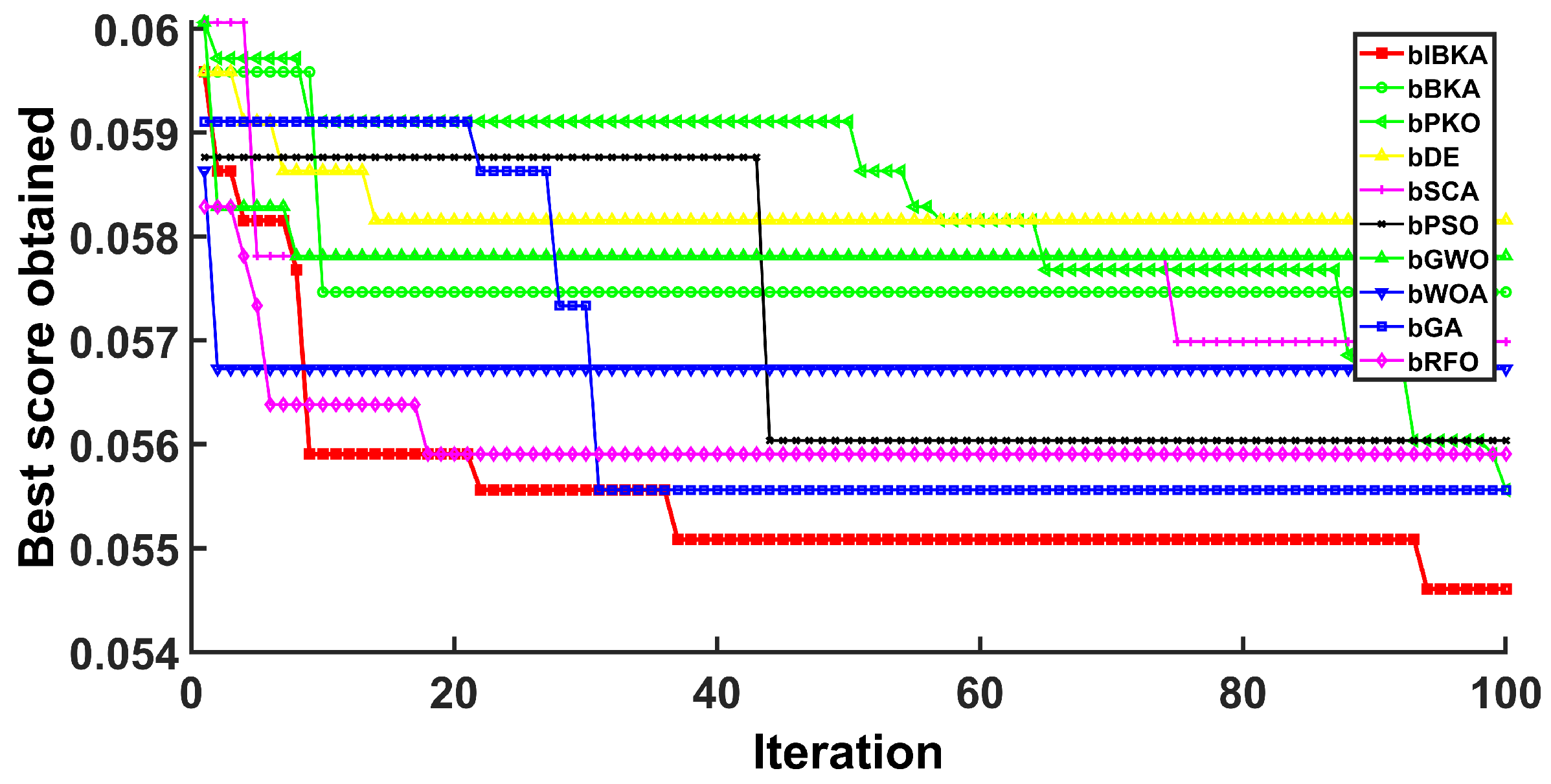

Comprehensive performance evaluation of IBKA and its binary variant (bIBKA) in terms of statistical significance and convergence speed. Comparison with nine state-of-the-art algorithms (including PSO, GWO, and RFO) validates the superior performance of the proposed algorithms.

Application to industrial fault detection, where bIBKA is used for feature selection, pioneering its application in the fault detection domain.

The remainder of this paper is organized as follows. Section 2 presents the optimization process of the BKA. Section 3 describes the proposed IBKA and bIBKA in detail. Section 4 presents the results and analysis of IBKA and bIBKA performance on test functions and fault detection datasets. Section 5 concludes the paper.

2. Black-Winged Kite Algorithm

The BKA is a nature-inspired metaheuristic optimization framework that emulates the foraging and migratory behaviors exhibited by black-winged kites in their natural habitat [28]. The biological behaviors of the black-winged kite that form the core of the BKA are illustrated in Figure 1. These small diurnal raptors, belonging to the family Accipitridae, demonstrate a distinctive hunting strategy characterized by sustained hovering behavior, wherein they maintain stationary flight positions at optimal altitudes while conducting aerial surveillance of the terrain below. Upon prey identification, they execute rapid vertical descents with remarkable precision to capture their quarry, demonstrating exceptional spatial awareness and timing accuracy. Beyond their hunting prowess, black-winged kites exhibit complex seasonal migration patterns that reflect their adaptive response to environmental variability and resource availability. These migratory behaviors encompass long-distance movements between breeding and wintering territories, with individuals demonstrating remarkable navigational capabilities and route optimization strategies. The migration process involves multiple decision-making stages, including departure timing, route selection, and stopover site utilization, all contributing to overall fitness and survival.

The BKA incorporates these biological mechanisms through a dual-phase optimization approach: a hovering phase that simulates aerial surveillance behavior, enabling comprehensive exploration of the solution space, and a diving phase that mimics precision strike mechanisms, facilitating intensive exploitation of promising regions. Additionally, the algorithm integrates migration-inspired mechanisms for population diversity maintenance and global search capabilities, ensuring robust performance across diverse optimization landscapes. This bio-inspired approach leverages the evolutionary advantages of these avian behaviors, providing an effective balance between exploration and exploitation in complex optimization problems.

2.1. Population Initialization

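The BKA starts by randomly generating a population of $N$ candidate solutions and distributing them throughout the search space:

$$X_i^{0} = lb + \mathrm{rand}(1, D) \odot (ub - lb), \quad i = 1, 2, \ldots, N$$

where $X_i^{0}$ represents the initial position of the $i$-th kite, $D$ is the problem dimension, $lb$ and $ub$ are the lower and upper bounds of the search space, respectively, $\mathrm{rand}(1, D)$ generates a random vector of size $D$, and $\odot$ denotes element-wise multiplication.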
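As an illustration of the initialization step described above, the following is a minimal sketch in plain Python. The function name `init_population`, the scalar bounds shared by all dimensions, and the example values are assumptions made for this sketch rather than details given in the text.

```python
import random


def init_population(n_kites, dim, lower, upper, rng):
    """Sample n_kites positions uniformly inside [lower, upper]^dim.

    Assumption: the same scalar bounds apply to every dimension.
    """
    return [
        [lower + rng.random() * (upper - lower) for _ in range(dim)]
        for _ in range(n_kites)
    ]


# Hypothetical example: 5 kites in a 3-dimensional search space.
rng = random.Random(0)
population = init_population(n_kites=5, dim=3, lower=-10.0, upper=10.0, rng=rng)
for kite in population:
    print([round(v, 3) for v in kite])
```

Passing an explicit `random.Random` instance keeps runs reproducible, which is convenient when several algorithms are compared on the same initial population.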

2.2. Attacking Behavior

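The attacking behavior simulates the precision hunting strategy [29] of black-winged kites. During this phase, each kite performs a local search around its current position. The intensity of this search is governed by:

$$n = 0.05 \, e^{-2 (t/T)^{2}}$$

where $t$ is the current iteration, $T$ is the maximum number of iterations, and $n$ is a time-varying parameter that controls the intensity of local search.

The position update mechanism for attacking behavior follows two strategies based on a probability parameter $p$:

Strategy 1 (with probability $p$):

$$X_i^{new} = X_i^{t} + n \, (1 + \sin r) \, X_i^{t}$$

Strategy 2 (with probability $1 - p$):

$$X_i^{new} = X_i^{t} + n \, \bigl(2\,\mathrm{rand}(1, D) - 1\bigr) \odot X_i^{t}$$

where $X_i^{t}$ is the position of the $i$-th kite at iteration $t$, $X_i^{new}$ is the resulting candidate position, $r$ is a random number, and $\mathrm{rand}(1, D)$ generates a random vector.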
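The attacking step can be sketched as follows. This is a hedged illustration, not the authors' implementation: the constants `0.05` and `2.0` in `step_scale`, the value `p=0.9` in the usage lines, the assignment of the two strategies to the branches, and all function names are assumptions for this sketch.

```python
import math
import random


def step_scale(t, max_iter):
    """Time-varying local-search intensity (assumed constants 0.05 and 2.0).

    The value shrinks as t approaches max_iter, so late steps are finer.
    """
    return 0.05 * math.exp(-2.0 * (t / max_iter) ** 2)


def attack_step(position, t, max_iter, p, rng):
    """Return a candidate position produced by one attacking move."""
    n = step_scale(t, max_iter)
    if rng.random() < p:
        # Strategy 1: a single random number r scales the whole position.
        r = rng.random()
        return [x + n * (1.0 + math.sin(r)) * x for x in position]
    # Strategy 2: an independent random factor in [-1, 1) per dimension.
    return [x + n * (2.0 * rng.random() - 1.0) * x for x in position]


# Hypothetical usage: one kite at iteration 10 of 100.
rng = random.Random(42)
kite = [1.0, -2.0, 3.0]
candidate = attack_step(kite, t=10, max_iter=100, p=0.9, rng=rng)
print(candidate)
```

Both strategies perturb each coordinate in proportion to its current value, so the move stays within a relative band of at most `2 * n` around the current position.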

2.3. Migration Behavior

The migration behavior represents the global exploration capability of the algorithm, inspired by the seasonal movement patterns of black-winged kites. This mechanism enables the algorithm to explore distant regions of the search space and avoid local optima.

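The migration factor is calculated as:

$$m = 2 \sin\left(r + \frac{\pi}{2}\right)$$

The Cauchy distribution is employed to generate step sizes for migration:

$$C(0, 1) = \tan\bigl(\pi (u - 0.5)\bigr)$$

where $u$ is a uniformly distributed random variable.

The position update for migration behavior depends on the fitness comparison:

Case 1: If $f(X_i^{t}) < f(X_r^{t})$ (current kite is better than a randomly selected kite):

$$X_i^{new} = X_i^{t} + C(0, 1) \odot \bigl(X_i^{t} - X_{best}^{t}\bigr)$$

Case 2: If $f(X_i^{t}) \geq f(X_r^{t})$ (current kite is worse than or equal to a randomly selected kite):

$$X_i^{new} = X_i^{t} + C(0, 1) \odot \bigl(X_{best}^{t} - m \, X_i^{t}\bigr)$$

where $X_i^{t}$ is the position of the $i$-th kite at iteration $t$, $X_i^{new}$ is the resulting candidate position, $f(\cdot)$ is the fitness value, $X_r^{t}$ is a randomly selected kite from the population, $X_{best}^{t}$ is the best solution found so far, and $C(0, 1)$ represents the Cauchy-distributed random vector of dimension $D$.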
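A minimal sketch of the migration step follows, assuming minimization. The function names, the form of the migration factor `m`, and the way the best solution enters each case are assumptions for illustration; only the use of a Cauchy step generated from a uniform variable and the fitness comparison against a randomly selected kite are taken from the description above.

```python
import math
import random


def cauchy_vector(dim, rng):
    """Standard Cauchy samples via the inverse CDF: tan(pi * (u - 0.5))."""
    return [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(dim)]


def migrate_step(position, fitness, rand_fitness, best, rng):
    """Return a candidate position produced by one migration move.

    fitness      -- objective value of the current kite
    rand_fitness -- objective value of a randomly selected kite
    best         -- best position found so far
    """
    m = 2.0 * math.sin(rng.random() + math.pi / 2.0)  # assumed migration factor
    c = cauchy_vector(len(position), rng)
    if fitness < rand_fitness:
        # Case 1: the current kite is better than the random kite.
        return [x + ci * (x - b) for x, ci, b in zip(position, c, best)]
    # Case 2: the current kite is worse than or equal to the random kite.
    return [x + ci * (b - m * x) for x, ci, b in zip(position, c, best)]


# Hypothetical usage with made-up fitness values.
rng = random.Random(7)
best = [0.1, -0.2, 0.05]
kite = [1.5, 0.4, -2.0]
candidate = migrate_step(kite, fitness=3.2, rand_fitness=1.1, best=best, rng=rng)
print(candidate)
```

Because the Cauchy distribution has heavy tails, most steps are small but occasional steps are very large, which is what lets a kite jump to distant regions of the search space.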

2.4. Selection Mechanism

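A greedy selection mechanism is applied whenever the position is updated, either after an attacking or a migration step:

$$X_i^{t+1} = \begin{cases} X_i^{new}, & \text{if } f(X_i^{new}) < f(X_i^{t}) \\ X_i^{t}, & \text{otherwise} \end{cases}$$

where $X_i^{new}$ is the candidate position produced by the update, $X_i^{t}$ is the current position of the $i$-th kite, and $f(\cdot)$ represents the objective function to be minimized.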
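The greedy selection rule can be illustrated with a short sketch. The helper name `greedy_select` and the sphere test function are hypothetical choices for this example; ties are resolved in favor of the current position, which is an assumption.

```python
def greedy_select(current, candidate, objective):
    """Keep the candidate only if it strictly lowers the objective value."""
    if objective(candidate) < objective(current):
        return candidate
    return current


def sphere(x):
    """Simple minimization test function: sum of squares."""
    return sum(v * v for v in x)


# An improving candidate replaces the current position...
kept_better = greedy_select([1.0, 1.0], [0.5, 0.5], sphere)
# ...while a worse candidate is discarded.
kept_current = greedy_select([1.0, 1.0], [2.0, 2.0], sphere)
print(kept_better, kept_current)
```

Under this rule the objective value of each kite never increases from one iteration to the next, which is also why a population can stall once no update produces an improvement.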

5. Conclusions

This paper presents an improved Black-winged Kite Algorithm and its binary variant for addressing global optimization and fault detection challenges. The proposed Stagnation-Triggered Diversification Mechanism effectively prevents premature convergence by monitoring the population’s convergence state and applying targeted perturbations to stagnant individuals. The Adaptive Weak Guidance Mechanism further enhances optimization efficiency through a conditional elite guidance strategy, which is particularly beneficial during the late optimization phase. Comprehensive evaluations on 26 benchmark functions demonstrate IBKA’s superior performance in solution quality and convergence speed. Furthermore, experimental results across semiconductor manufacturing, mechanical system, and software fault detection scenarios validate that bIBKA achieves significant improvements in detection accuracy and reliability compared to existing algorithms while maintaining computational efficiency.

While these results validate the effectiveness of IBKA under the tested conditions, several important considerations emerge when extending this work to broader industrial contexts. The evaluation on static benchmark functions and fault datasets provides a solid foundation for understanding the algorithm’s core capabilities, though real-world deployments may encounter dynamic fault patterns where the fixed stagnation threshold could benefit from adaptive tuning mechanisms. Additionally, the fault detection datasets inherently exhibit class imbalance characteristic of real-world industrial applications, where normal operations significantly outnumber fault occurrences; in such cases, the reported accuracy values are naturally influenced by the performance of the majority class, while F1-scores provide more balanced indicators of the algorithm’s capability in detecting minority fault classes, which are often the most critical for industrial safety.
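The effect of class imbalance on accuracy and F1-score can be made concrete with a small, entirely hypothetical example. The label counts and the two sets of predictions below are invented for illustration and are not drawn from the datasets used in this paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """F1-score of the positive (fault) class; 0.0 if no true positives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical data: 95 normal samples (0) and 5 fault samples (1).
y_true = [0] * 95 + [1] * 5
# Predictor A labels everything as normal.
always_normal = [0] * 100
# Predictor B raises 6 false alarms but catches 4 of the 5 faults.
detector = [1] * 6 + [0] * 89 + [1] * 4 + [0] * 1

for name, y_pred in [("always_normal", always_normal), ("detector", detector)]:
    print(name, round(accuracy(y_true, y_pred), 3), round(f1_score(y_true, y_pred), 3))
```

In this example the trivial predictor reaches an accuracy of 0.95 with an F1-score of 0, while the detector that actually finds faults scores a lower accuracy of 0.93 but an F1-score of about 0.53, which shows why F1 is the more informative metric for minority fault classes.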