Engineer-Centred Design Factors and Methodological Approach for Maritime Autonomy Emergency Response Systems

Abstract

1. Introduction

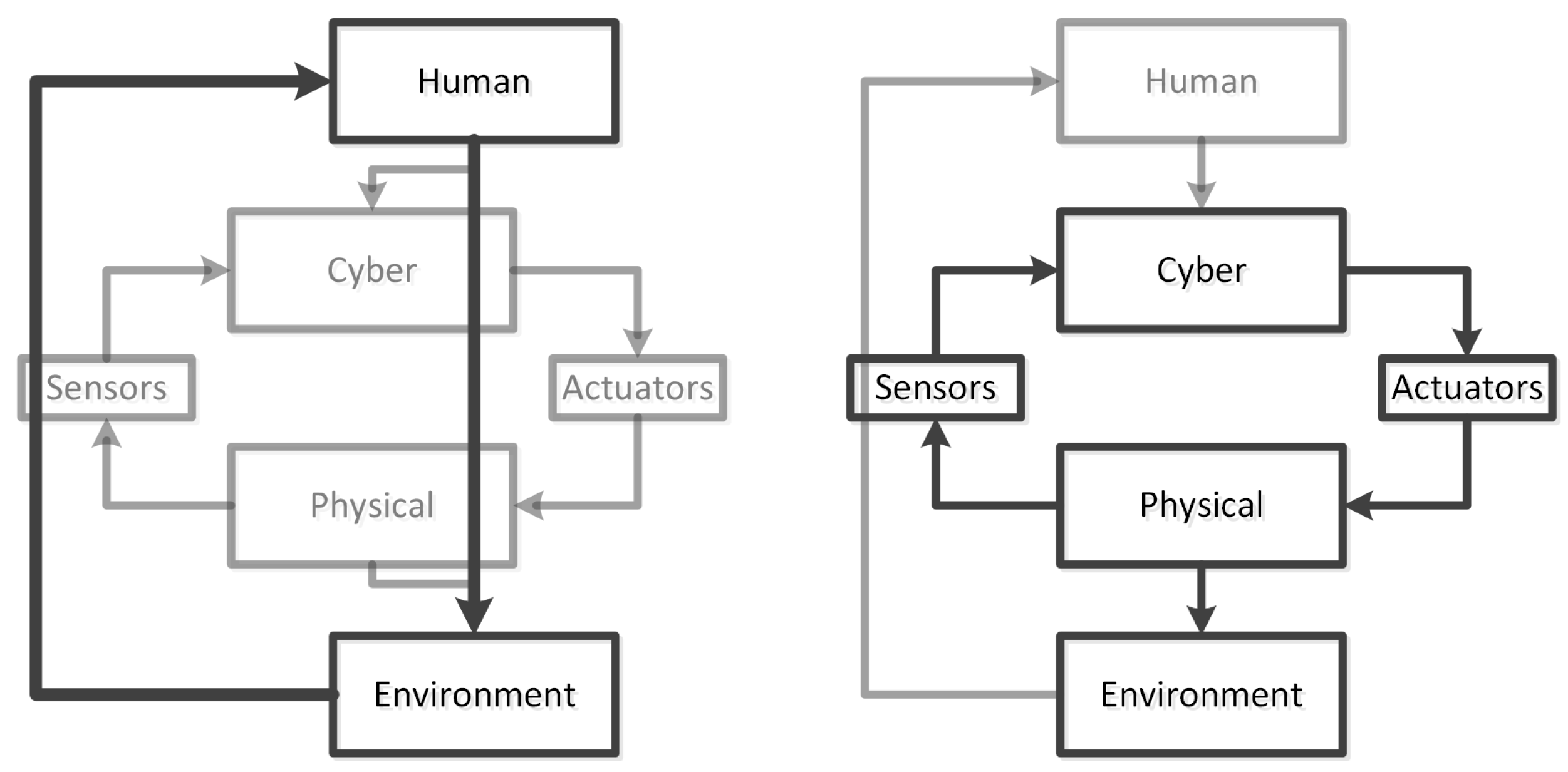

2. Theoretical Background

2.1. (Cognitive Systems) Engineering

2.2. Safety and Systems Engineering

2.3. A (Cognitive) System Engineering?

3. Methodology

3.1. Case Description

3.2. Data Collection and Analysis

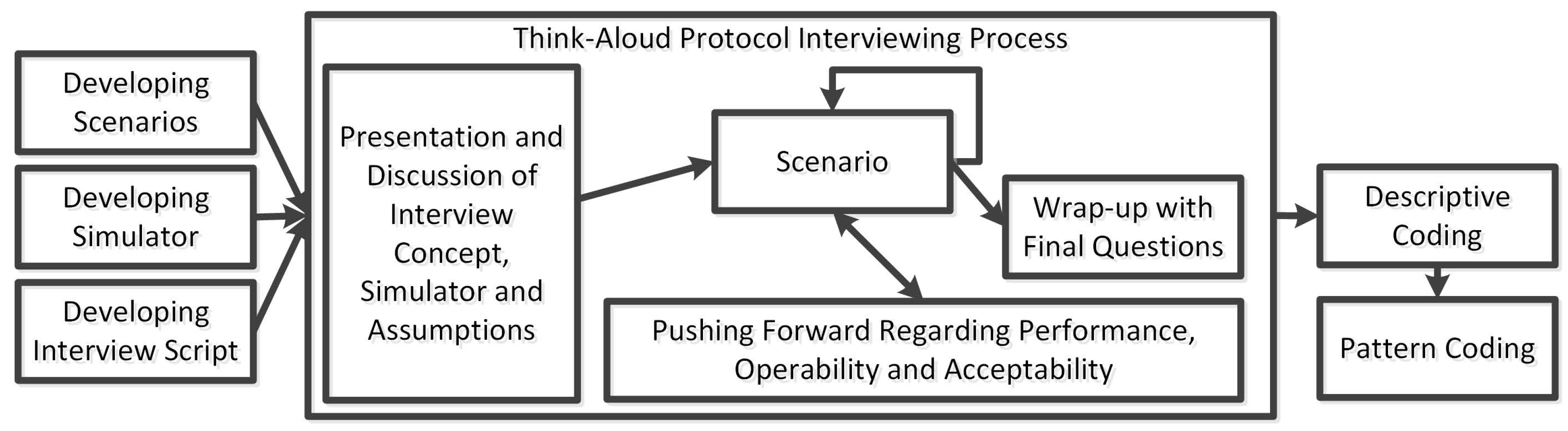

3.2.1. Data Collection Preparations

3.2.2. Methodological Choices

3.2.3. Implementation of Interviews

3.2.4. Data Analysis

4. Results and Analysis

4.1. On Fundamental Demands on Cognition and Collaboration

4.1.1. Design for Domain Experts

Interviewer: Do you need a shipmaster’s degree, and a few courses …

Interviewee: No, you don’t need that. What we are doing here is a computer game. A fifteen-year-old can do it, if he is interested … When things are happening, if this fifteen-year-old sees that here there is a problem, if this fifteen-year-old can bring in his expert team … then he just calls and says … or she of course … that this is something we need to look closer at. Here there is something that can become a problem according to the information I am getting here.

(Preceding discussion about what sensors and information are available, such as data from the Automatic Identification System (AIS). In the meantime the interviewee misses changes to the sensor data, but reacts when finally seeing the camera data.)

Interviewee: That is great. Perfect. Exactly what I want. So, there was a boat without AIS coming from there.

Interviewer: So, what would you have thought if you had been alerted about a boat showing up?

Interviewee: No, that is alright … that it was so close that it wanted to change course …

(Small discussion about where in the interface the information was available, scrolling back and forth the interviewee indicates that the information from the camera was the only thing required to understand the situation.)

Interviewee: So, if the MASS has done that correction, then everything is fine. Then you don’t need to do anything more.

Interviewer: Do you need to know anything more from the ship to decide if it was a correct course correction, or the right thing to do …

Interviewee: No, not anything more than what preceded that …that he created a new route …

(The interviewee is alerted to a group of kayaks by a MASS. He dismisses them as they should be clearly recognizable on camera and radar.)

Interviewer: So, no need to worry?

Interviewee: No, I don’t think so. Well, there could be … what it could be … but then I have to trust the sensors, and there is a warning. Perhaps one should be alert just afterwards … no-one has stopped …so that the course correction was not enough. So, they do not turn around. Perhaps they change their minds because they are worried. Then it is a new situation. So, because of that you can be on the lookout to make sure the situation is resolved. To help the system a bit …

Interviewer: More like sending in information to indicate that one of the kayaks is hesitating, going back?

Interviewee: Yes, that I could for example have seen on the camera, or on the radar … that one echo is going back … they are splitting up …and that is what could happen, that things do not play out like you thought it would. Then you need to help out a bit.

4.1.2. Ensure a Dynamic, yet Appropriately Narrow, Focus

Interviewee: However, we provide feedback at every critical point on the map. It is very seldom that we do not provide feedback. We ask follow-up questions and provide feedback … it is an area that requires a lot of … we are more active with information and questions, this communication to help and assist the crews. We are very active.

Interviewer: And what is it that you typically help with?

Interviewee: We let them know who they are going to meet and which intentions they have. It is going to take that way, or that way … it is going there, but south of [geographical location] or north of [geographical location]. So we know … help them visualize the routes of the rest of the traffic. It is a bit what [other SMA employee] and others work with, which the future should be like, that you can see these routes in your sea chart … so we help them to visualize this with information.

(The scenario involves a MASS that is closing in on an area with diving activity. The interviewee discussed at length that there are a lot of factors that have to be considered, for which information might not be available. He was then challenged in regard to missing information from the MASS.)

Interviewer: When would you start to worry if it [the MASS] just continued?

Interviewee: I would start to worry, in this case, because it is not very far away … I would start to worry now. If it is within my mandate I would slow down the ship, and I would contact whoever is responsible for taking the decisions.

Interviewer: So, you would at least have the authority to slow down ships. Would that include dropping the anchor if you were really pressed for time?

Interviewee: Yes, I think that would have to be … that it would be something that a traffic operator would be able to do, because that is something that you could have to … some kind of emergency action to avoid accidents and there is no time to call anyone. You just have to make a decision. It should be within the possibilities for a traffic operator to just drop the anchor.

Interviewee 1: It is like always, you have to filter, you do that all the time. Like the sea chart … when I am piloting [a specific ship] I do not have all the information presented in the sea chart … I do not have to know the names of all the islands. You can filter quite a lot.

Interviewer: How much would you filter?

Interviewee 2: It depends on whether you know the area or not.

Interviewee 1: Yes, and what you are doing. If you need to anchor a ship then you need to know where cables and pipes are laid out. That information is perhaps not super-important otherwise. It depends on what you are doing.

(This is followed by a short discussion on different configurations of the interface.)

Interviewee 1: Another example, say I am piloting a ship with a very clean interface … Then one of my colleagues takes over and has a completely different preference. If something happens and I have to pilot the ship. Then perhaps I am stressed and then you have to understand the system very quickly … and suddenly you need more detailed information about depths because you are piloting the ship outside the area you are usually in and know. It is like [Interviewee 2] says, you have to take an individual position on how much information you want presented … how comfortable you are with the equipment.

Interviewer: So, you can be placed in a sudden, unexpected situation and some people want to prepare for that?

Interviewee 1: Yes.

Interviewee: We have internal guidelines to try to avoid ship meetings at one certain point in the [geographical area], at [geographical location]. But that is something most captains and pilots are aware of anyway, they do not want to meet there. So, they basically avoid it by themselves. But … if, for some reason two ships would be on a … are about to meet at [geographical location], especially if there are mist or foggy conditions then we are supposed to talk to them about it and get at least one of them to change their speed.

4.1.3. Accept Uncertain Information Sources

Interviewee: About this diving incident here. It becomes very important here that incidents like that are reported to somebody … you get … the surveillance area for anyone surveying these ships becomes very, very large. For us, we are just VTS operators within a certain area, but anything could happen at any time, at any point on a ship’s route from A to B, and it can be outside of the VTS areas too. Someone starts a diving operation where there is no authority looking … how would you get this information?

4.2. On Methodological Choices

4.2.1. Characterising the Context

Interviewer: You were thinking about contacting someone with a deeper technical understanding. How much of an expert are we talking about?

Interviewee: If it was a manned ship then you would just have called the engineering room to get a yes or no answer …or we have this problem and we need this much time to fix it. During this time you need to reduce the load or something like that.

Interviewee: Much of what I do today is phone calls, as I am working in operations. Are there divers in the water? Is this restriction applicable? This could just as easily be solved using a website through which I could interact with restriction areas.

4.2.2. Eliciting Multiple Explanations

(Interviewee removes a warning without realizing that a few components on the monitored MASS have broken down. This is followed by a short discussion on the interface.)

Interviewer: So the information you need is that it has decided to change its route, and it has, and that you can see?

Interviewee: Yes, and then I did a safety check, and it looked correct, although I noted that it did not end up at the same destination, but I think that is just a … he could solve that by adding to the route. You can change that next time around, so you do not have to be confused about that. Rerouted … then it is more believable. Otherwise I do not think …

(Short discussion about other parts of the interface regarding other routes.)

Interviewer: If there were other ships in the passage, now there are none, but if there were and it decided to just move ahead … what do you think would be required then?

Interviewee: Then … when I checked the route there were no AIS targets there, but if there was one there then that route has had to change. That is a bit tricky, because then you would have had to monitor that ship using its camera when moving past that other ship. Then it becomes difficult. Then you have to pilot … or at least monitor … because the sensors would detect the other ship …

5. Discussion

5.1. To Design for Handling Unknown Unknowns

- The need to design for domain experts is supported by existing guidelines on human-centred design. These guidelines already insist on the operator being included in the design process, which should provide engineers with a path to accessing domain knowledge.

- Nor does the need to dynamically direct the attention of the ROC operators imply a need to extend the current guidance on engineering practice. Addressing this fundamental demand requires engineers to understand the capabilities of the system well, i.e., what the limitations of control in different environmental conditions are, what is an unavoidable obstacle, etc. Transferring such explicit knowledge is the aim of system and safety engineering processes.

- However, the need to accept uncertain information sources when handling unknown unknowns puts other requirements on engineering. It requires engineers to understand the cognition and motivations of more stakeholders than the ROC operator. Furthermore, these stakeholders will most likely also be operationally and managerially independent from the engineering firm’s customers, i.e., the organisations operating MASSs and ROCs [64].

5.2. To Make Relevant Methodological Choices

5.3. Limitations

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AIS | Automatic Identification System |
| E-OCVM | European Operational Concept Validation Methodology |
| MASS | Maritime Autonomous Surface Ship |
| MDPI | Multidisciplinary Digital Publishing Institute |
| ROC | Remote Operations Centre |
| TAPI | Think-Aloud Protocol Interviewing |
| VTS | Vessel Traffic Service |

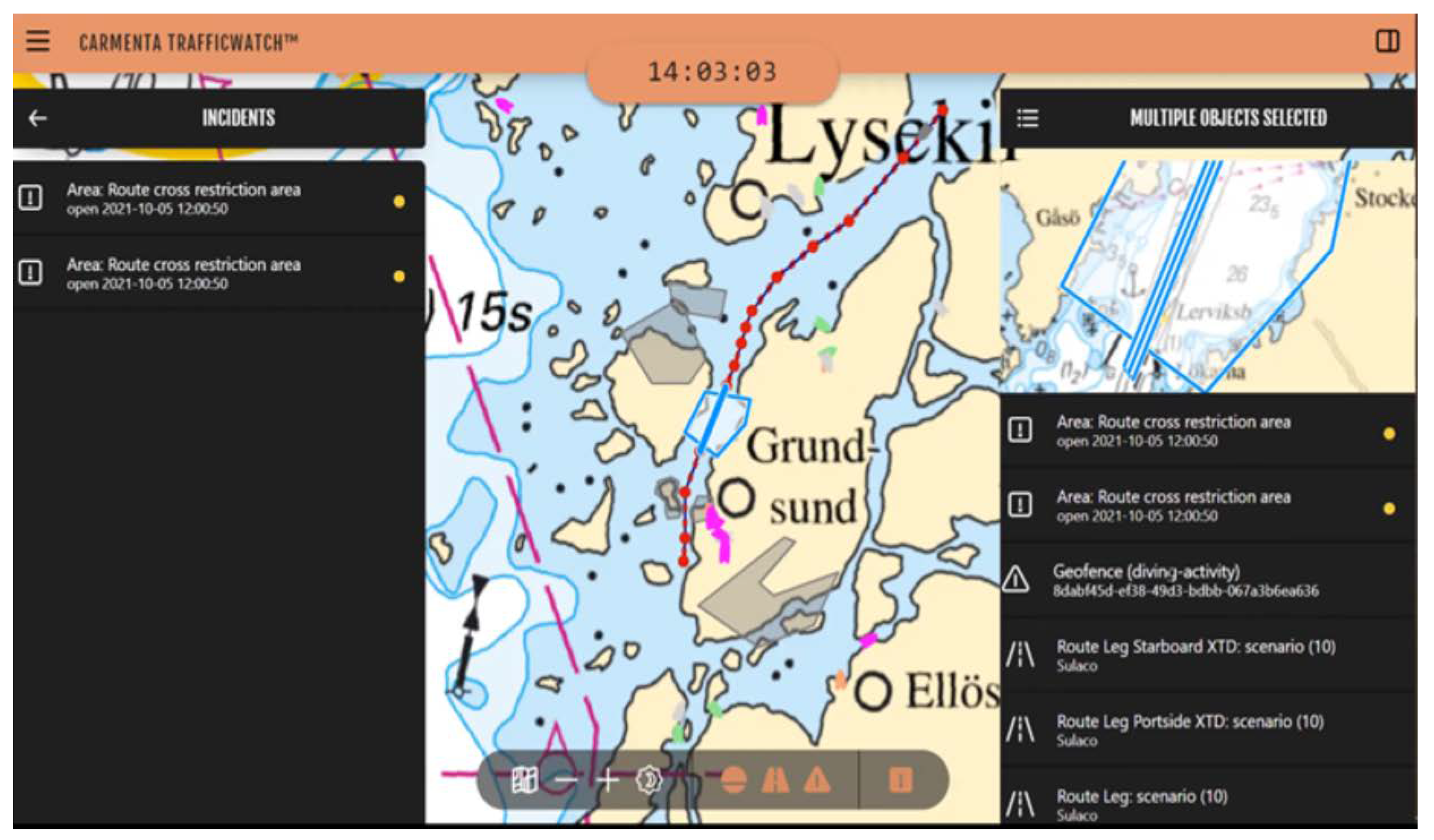

Appendix A. The Graphical User Interface

- Lists of different types of vessels.

- Lists of routes.

- Information about and from specific vessels, routes and restriction areas, including, for instance, the location of a vessel, camera images, and the purpose of a restriction area.

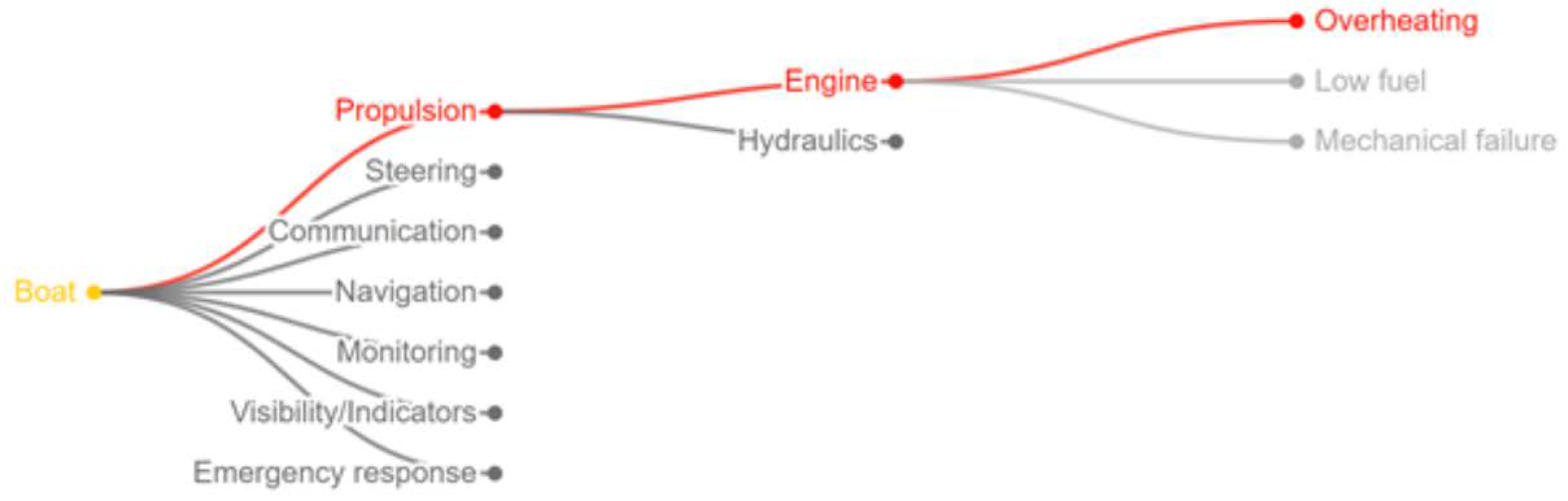

- A simple system health view in the form of a dynamic tree structure, color coded to indicate faults. This view was included to prompt practitioners to start talking about possible faults in MASSs and how they would like to diagnose and handle them. One example of this view is shown in Figure A2.
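As an illustration only, the system health view described above can be thought of as a tree in which each node shows the worst status found among its descendants. The following Python sketch assumes a three-level colour scale (green, yellow, red) and hypothetical component names; neither is taken from the actual interface.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple

# Assumed severity order for illustration: a higher number is a worse status.
SEVERITY = {"green": 0, "yellow": 1, "red": 2}


@dataclass
class HealthNode:
    name: str
    status: str = "green"  # status of this component on its own
    children: List["HealthNode"] = field(default_factory=list)

    def colour(self) -> str:
        """Return the worst colour found in this node or any descendant."""
        worst = self.status
        for child in self.children:
            candidate = child.colour()
            if SEVERITY[candidate] > SEVERITY[worst]:
                worst = candidate
        return worst

    def faults(self, path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, str]]:
        """Yield (path, status) for every component that is not green."""
        here = path + (self.name,)
        if self.status != "green":
            yield "/".join(here), self.status
        for child in self.children:
            yield from child.faults(here)


# Hypothetical vessel with one failed and one degraded component.
radar = HealthNode("radar", "red")
ais = HealthNode("ais")
engine = HealthNode("engine", "yellow")
vessel = HealthNode("MASS-1", children=[
    HealthNode("sensors", children=[radar, ais]),
    HealthNode("propulsion", children=[engine]),
])

top_colour = vessel.colour()
fault_list = list(vessel.faults())
```

In this sketch the top-level node turns red as soon as any component fails, while `faults()` gives the operator the path down to each affected component.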

Appendix B. The Scenarios

Appendix B.1. MASS Breakdown, Autonomous Rerouting

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS has a system health breakdown (variable breakdown). MASS updates state and sees risk on route, prompting route recalculation.

   - A health data message is sent to ROC.

   - A route update message is sent to ROC (including reason for change). This leads to the ROC operator being notified.

4. ROC looks up action to take for malfunction, which is to increase the safety margins. ROC infrastructure (also) detects that the increased safety margins overlap a hazard, and generates a notification.

   - Variability, as in different types of breakdowns affecting MASS capability differently:

     - Simple breakdown with complete loss of functionality.

     - Complex breakdown where capability is reduced rather than completely lost.

5. Operators see notifications. (Prompted by vessel and self-identified.)

6. ROC updates vessel information:

   - Route change

   - Capability reduction

7. Operators can look at notifications, MASS information, etc. (MASS and ROC situational awareness.)
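Steps 3 and 4 of this scenario can be sketched as follows. This is a minimal Python illustration, not the implementation used in the study: it assumes circular safety zones and hazards in flat x/y coordinates (metres), and the lookup table of margin factors is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List

# Hypothetical lookup table: malfunction -> factor applied to the safety margin.
MARGIN_FACTOR = {"engine": 2.0, "radar": 1.5, "ais": 1.0}


@dataclass
class Vessel:
    name: str
    x: float
    y: float
    safety_margin: float  # metres


@dataclass
class Hazard:
    name: str
    x: float
    y: float
    radius: float  # metres


def overlaps(vessel: Vessel, hazard: Hazard) -> bool:
    """True if the vessel's safety circle intersects the hazard circle."""
    distance = math.hypot(hazard.x - vessel.x, hazard.y - vessel.y)
    return distance < vessel.safety_margin + hazard.radius


def handle_health_message(vessel: Vessel, malfunction: str,
                          hazards: List[Hazard]) -> List[str]:
    """Apply the looked-up action and return notifications for the operator."""
    vessel.safety_margin *= MARGIN_FACTOR.get(malfunction, 1.0)
    notifications = [f"{vessel.name}: {malfunction} malfunction reported"]
    for hazard in hazards:
        if overlaps(vessel, hazard):
            notifications.append(f"{vessel.name}: safety margin overlaps {hazard.name}")
    return notifications


mass = Vessel("MASS-1", 0.0, 0.0, safety_margin=200.0)
shoal = Hazard("shoal", 500.0, 0.0, radius=150.0)

overlap_before = overlaps(mass, shoal)                  # margin 200 m: no overlap
notes = handle_health_message(mass, "engine", [shoal])  # margin doubled to 400 m
```

The example shows why the ROC-side check matters in the scenario: the hazard is harmless with the nominal margin and only becomes relevant once the breakdown has enlarged it.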

Appendix B.2. MASS Breakdown, No Autonomous Rerouting

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS has a system health breakdown (variable breakdown).

   - A health data message is sent to ROC.

   - Variability, as in different types of breakdowns affecting capability differently (incident can thus be different, but here we use a grounding incident as an example): Simple breakdown with complete loss of functionality, and complex breakdown where capability is reduced rather than completely lost.

4. ROC looks up action to take for malfunction, which is to increase the safety margins. ROC infrastructure (also) detects that the increased safety margins overlap a hazard, and generates a notification.

   - Variability, as in:

     - That the restricted area could be due to different global weather phenomena not updated on MASS.

     - The MASS could have an old map (so, a health message was probably sent much earlier, but was not critical at that point in time).

5. Operators see incidents and notifications. (Prompted by vessel and self-identified.)

6. ROC updates vessel information:

   - Capability reduction

7. Operators can look at notifications, MASS information, etc. (MASS and ROC situational awareness.)

Appendix B.3. Multi-Malfunctions in MASS Subsystems

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS has a system health breakdown (engine malfunction). MASS updates state and sees risk on route, prompting route recalculation.

   - A health data message is sent to ROC.

   - A route update message is sent to ROC (including reason for change). This leads to the ROC operator being notified.

4. ROC looks up actions to take for engine malfunction; it is decided to increase the safety margins. ROC infrastructure (also) detects that the increased safety margins overlap a hazard, and generates a notification.

5. MASS has a system health breakdown (AIS malfunction). (Prompts no route change.)

   - A health data message is sent to ROC.

6. MASS has a system health breakdown (radar malfunction). MASS updates state and sees risk in current speed, prompting route recalculation.

   - A health data message is sent to ROC.

   - A route update message is sent to ROC (including reason for change). This leads to the ROC operator being notified.

7. Operators see incidents and notifications. (Prompted by vessel and self-identified.)

8. ROC updates vessel information:

   - Route change

   - Capability reduction

9. Operators can look at notifications, MASS information, etc. (MASS and ROC situational awareness.)
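The message sequence in this scenario can be replayed with a small Python sketch. The message classes, field names and the rule that only route updates notify the operator are assumptions made for illustration; they follow the wording of the steps above but do not describe the actual ROC software.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class HealthMessage:
    vessel: str
    component: str  # the subsystem reporting a breakdown


@dataclass
class RouteUpdate:
    vessel: str
    reason: str  # the reason for change sent along with the new route


@dataclass
class VesselInfo:
    reduced_capabilities: List[str] = field(default_factory=list)
    route_version: int = 1
    operator_notifications: List[str] = field(default_factory=list)


def apply(info: VesselInfo, msg: Union[HealthMessage, RouteUpdate]) -> VesselInfo:
    """Update the ROC's vessel information with one incoming message."""
    if isinstance(msg, HealthMessage):
        info.reduced_capabilities.append(msg.component)
    elif isinstance(msg, RouteUpdate):
        info.route_version += 1
        info.operator_notifications.append(f"route changed: {msg.reason}")
    return info


# Steps 3, 5 and 6: engine, AIS and radar malfunctions in sequence.
messages = [
    HealthMessage("MASS-1", "engine"), RouteUpdate("MASS-1", "engine malfunction"),
    HealthMessage("MASS-1", "ais"),  # prompts no route change
    HealthMessage("MASS-1", "radar"), RouteUpdate("MASS-1", "radar malfunction"),
]

info = VesselInfo()
for message in messages:
    apply(info, message)
```

Under these assumptions the AIS malfunction is recorded as a capability reduction but produces no route-change notification, so the operator only sees it by inspecting the vessel information.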

Appendix B.4. Restricted Area on Route, Seen by MASS Situational Awareness

1. MASS sends initial route to TW.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS sends notification of route change, including reason (which is restricted area on route).

   - Variability, as the restricted area could be due to:

     - Different local weather phenomena identifiable by MASS (e.g., fog).

     - An old map (so, a health message probably sent much earlier, but which was not critical at that point in time).

4. Operator receives notification that MASS has changed course due to restricted area on MASS route.

5. Operator investigates notification (ROC situational awareness), not seeing the restricted area.

6. Operator investigates MASS. (MASS situational awareness.)

Appendix B.5. Other Ship on Collision Course with MASS, Autonomous Rerouting

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS sends notification of route change, including reason (which is ship on collision course).

4. Operator receives notification that MASS has changed course due to another ship being on collision course with MASS.

5. Operator investigates notification (ROC situational awareness), not seeing the ship.

6. Operator investigates MASS. (MASS situational awareness.)

   - Variability in reason for collision risk due to different ships seen on radar and camera, i.e., ferry, super-tanker, kayaks, speedboat, slow cruiser and fishing boat.

   - Information via radio from, e.g., fishing boat.

Appendix B.6. Other Ship on Collision Course with MASS, No Autonomous Rerouting

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. Operator receives notification that ship is on collision course with MASS.

4. Operator investigates notification. (ROC situational awareness.)

5. Operator checks whether MASS sees same indication, which it does not. (MASS situational awareness.)
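The final step, in which the operator compares what the ROC and the MASS each perceive, amounts to a comparison of two sets of indications. The Python sketch below assumes that both sides identify contacts and areas by shared identifiers, which is a simplification; the identifiers used are hypothetical.

```python
from typing import Dict, Iterable, List


def awareness_gap(roc_view: Iterable[str], mass_view: Iterable[str]) -> Dict[str, List[str]]:
    """Split indications into those only the ROC sees, only the MASS sees, and shared ones."""
    roc, mass = set(roc_view), set(mass_view)
    return {
        "roc_only": sorted(roc - mass),
        "mass_only": sorted(mass - roc),
        "shared": sorted(roc & mass),
    }


# Hypothetical example: the ROC has a collision warning that the MASS does not report.
gap = awareness_gap(
    roc_view={"ferry-17", "restricted-area-3"},
    mass_view={"restricted-area-3", "kayak-group"},
)
```

Here `gap["roc_only"]` corresponds to the situation in this scenario, while `gap["mass_only"]` corresponds to the scenarios where the operator does not see what prompted the MASS to change its route.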

Appendix B.7. New Route from MASS Crosses Restricted Area

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. MASS notices object in route. MASS sends notification of route change, including reason (which is ship on collision course).

   - Variability in different ships detected, i.e., ferry, super-tanker, kayaks, speedboat, slow cruiser, and fishing boat.

4. ROC receives notification that MASS has changed course due to another ship being on collision course with MASS.

5. ROC receives notification that MASS route interferes with restricted areas.

6. Operator investigates notification. (ROC situational awareness.)

   - Variability in different restricted areas, i.e., dangerous, fog, restricted, etc.

7. Operator checks whether MASS sees same indication, which it does not. (MASS situational awareness.)

8. ROC checks route and sees that it interferes with restricted area. (ROC situational awareness.)
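The check in the last step, whether a route interferes with a restricted area, can be illustrated with a simple geometric test. The Python sketch below assumes a route given as waypoints in flat x/y coordinates and a circular restricted area; real restriction areas and chart projections are more involved, and the coordinates are made up.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate leg: a and b coincide
    # Project p onto the line through a and b, clamped to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def legs_crossing_area(route: Sequence[Point], centre: Point, radius: float) -> List[int]:
    """Return the indices of route legs that pass within `radius` of `centre`."""
    return [
        i for i in range(len(route) - 1)
        if distance_to_segment(centre, route[i], route[i + 1]) <= radius
    ]


# Hypothetical case: the evasive route bends into a diving area that the
# original route passed at a safe distance.
original_route = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
new_route = [(0.0, 0.0), (10.0, 5.0), (20.0, 0.0)]
diving_area_centre, diving_area_radius = (10.0, 6.0), 2.0

crossed_before = legs_crossing_area(original_route, diving_area_centre, diving_area_radius)
crossed_after = legs_crossing_area(new_route, diving_area_centre, diving_area_radius)
```

In the example the original route stays clear of the area, whereas both legs of the new route touch it, which is the kind of conflict the ROC is notified about in step 5.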

Appendix B.8. Restricted Area on Route, Seen in ROC Situational Awareness

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. ROC receives alert from external system that there is a temporary restriction area along the MASS route.

4. Operator investigates notification. (ROC situational awareness.)

5. Operator checks whether MASS sees same indication, which it does not. (MASS situational awareness.)

Appendix B.9. Several Other Ships on Collision Course with MASS

1. MASS sends initial route to ROC.

2. MASS sends continuous position updates which can be seen by operators in real time.

3. ROC backend discovers that the route crosses an area with other vessels (it receives these objects from AIS).

4. Operator receives multiple notifications that ships are on collision course with MASS.

5. Operator investigates notification. (ROC situational awareness.)

   - Variability in reason for collision risk:

     - Planned route on wrong side of fairway.

     - Area of fog.

     - Restricted area due to drifting, burning ship on route.

6. Operator checks whether MASS sees same indication, which it does not. (MASS situational awareness.)
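The scenario does not state how the ROC backend decides that a ship is on collision course. One common approach, assumed here purely for illustration, is to compute the closest point of approach (CPA) from AIS positions and velocities. The Python sketch below uses flat x/y coordinates in metres and velocities in metres per second; the thresholds and vessel data are hypothetical.

```python
import math
from typing import List, Tuple

Vector = Tuple[float, float]


def cpa(own_pos: Vector, own_vel: Vector,
        other_pos: Vector, other_vel: Vector) -> Tuple[float, float]:
    """Return (time_to_cpa, distance_at_cpa) for two vessels on straight courses."""
    rx, ry = other_pos[0] - own_pos[0], other_pos[1] - own_pos[1]  # relative position
    vx, vy = other_vel[0] - own_vel[0], other_vel[1] - own_vel[1]  # relative velocity
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        t = 0.0  # identical velocities: the separation never changes
    else:
        # Time at which the separation is smallest; never look into the past.
        t = max(0.0, -(rx * vx + ry * vy) / speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def collision_notifications(mass_pos: Vector, mass_vel: Vector,
                            targets: List[Tuple[str, Vector, Vector]],
                            min_distance: float, horizon: float) -> List[str]:
    """Names of AIS targets that come closer than min_distance within the horizon."""
    flagged = []
    for name, pos, vel in targets:
        t, d = cpa(mass_pos, mass_vel, pos, vel)
        if d < min_distance and t <= horizon:
            flagged.append(name)
    return flagged


# Hypothetical traffic picture: the MASS heads north at 5 m/s.
ais_targets = [
    ("ferry", (1000.0, 1000.0), (-5.0, 0.0)),      # crossing from the east
    ("fishing boat", (2000.0, 0.0), (0.0, 5.0)),   # parallel course, 2 km abeam
    ("kayaks", (0.0, -500.0), (0.0, -1.0)),        # astern and moving away
]
ferry_cpa = cpa((0.0, 0.0), (0.0, 5.0), (1000.0, 1000.0), (-5.0, 0.0))
flagged = collision_notifications((0.0, 0.0), (0.0, 5.0), ais_targets,
                                  min_distance=200.0, horizon=600.0)
```

A check of this kind relies entirely on AIS data, so it cannot flag vessels without AIS, which is one reason the scenario has the operator compare the result with what the MASS itself perceives.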

Appendix B.10. Multi-Notifications from MASS

1. Several MASSs send initial route to ROC.

2. Several MASSs send continuous position updates which can be seen by operators in real time.

3. Weather change prompts increase of risk contours across large area with several MASSs.

4. Several MASSs send notification of route change, including reason (which is ships at risk of grounding).

5. One MASS does not, but operator receives notification that this MASS is at risk of grounding.

6. Operator investigates notifications. (ROC situational awareness.)

   - Variability in different restricted areas, i.e., dangerous, fog, restricted, etc.

7. Operator checks whether MASS (1) sees same indication, which it does not.

References

- Norwegian Forum for Autonomous Ships. Definitions for Autonomous Merchant Ships. Report. 2017. Available online: https://nfas.autonomous-ship.org/wp-content/uploads/2020/09/autonom-defs.pdf (accessed on 30 April 2022).

- Iwanaga, S. Legal Issues Relating to the Maritime Autonomous Surface Ships’ Development and Introduction to Services. Master’s Thesis, World Maritime University, Malmö, Sweden, 2019. [Google Scholar]

- Institute for Energy Technology. Identification of Information Requirements in ROC Operations Room; Technical Report; Institute for Energy Technology: Kjeller, Norway, 2020. [Google Scholar]

- Fan, C.; Wróbel, K.; Montewka, J.; Gil, M.; Wan, C.; Zhang, D. A framework to identify factors influencing navigational risk for Maritime Autonomous Surface Ships. Ocean Eng. 2020, 202, 107188. [Google Scholar] [CrossRef]

- Outcome of the Regulatory Scoping Exercise for the Use of Maritime Autonomous Surface Ships (MASS); MSC. 1/Circ. 1638; 2021; International Maritime Organization: London, UK.

- Lloyd’s Register. ShipRight, Design and Construction, Additional Design Procedures, Design Code for Unmanned Marine Systems; Lloyd’s Register Group Limited: London, UK, 2017.

- Bainbridge, L. Ironies of automation. Automatica 1983, 19, 129–135. [Google Scholar] [CrossRef]

- Wiener, E.L. Human Factors of Advanced Technology (“Glass Cockpit”) Transport Aircraft; Technical Report; NASA Ames Research Center: Mountain View, CA, USA, 1989.

- Stanton, N. Product design with people in mind. Hum. Factors Consum. Prod. 1998, 1–17. [Google Scholar]

- Standard ISO 21448:2022; Road Vehicles—Safety of the Intended Functionality. International Organization for Standardization: Geneva, Switzerland, 2019.

- Dekker, S.W.; Woods, D.D. To intervene or not to intervene: The dilemma of management by exception. Cogn. Technol. Work. 1999, 1, 86–96. [Google Scholar] [CrossRef]

- Löscher, I.; Axelsson, A.; Vännström, J.; Jansson, A. Eliciting strategies in revolutionary design: Exploring the hypothesis of predefined strategy categories. Theor. Issues Ergon. Sci. 2018, 19, 101–117. [Google Scholar] [CrossRef]

- Norman, D.A. Cognitive engineering. User Centered Syst. Des. 1986, 31, 61. [Google Scholar]

- Norman, D. Things That Make Us Smart: Defending Human Attributes in the Age of the Machine; Addison Wesley Publishing Co.: Reading, MA, USA, 1993. [Google Scholar]

- Ashby, W.R. An Introduction to Cybernetics; Chapman and Hall Ltd.: London, UK, 1956. [Google Scholar]

- Hollnagel, E.; Woods, D.D. Joint Cognitive Systems: Foundations of Cognitive Systems Engineering; CRC Press: Boca Raton, FL, USA, 2005. [Google Scholar]

- Rasmussen, J.; Pejtersen, A.M.; Goodstein, L.P. Cognitive Systems Engineering; Wiley: Hoboken, NJ, USA, 1994. [Google Scholar]

- Wiener, E.L.; Curry, R.E. Flight-deck automation: Promises and problems. Ergonomics 1980, 23, 995–1011. [Google Scholar] [CrossRef]

- Sarter, N.B.; Woods, D.D.; Billings, C.E. Automation surprises. In Handbook of Human Factors and Ergonomics, 2nd ed.; Wiley: Hoboken, NJ, USA, 1997; pp. 1926–1943. [Google Scholar]

- Endsley, M.R. Toward a theory of situation awareness in dynamic systems. Hum. Factors 1995, 37, 85–104. [Google Scholar] [CrossRef]

- Leveson, N.G. Engineering a Safer World: Systems Thinking Applied to Safety; The MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Baxter, G.; Sommerville, I. Socio-technical systems: From design methods to systems engineering. Interact. Comput. 2011, 23, 4–17. [Google Scholar] [CrossRef] [Green Version]

- Reason, J. Managing the Risks of Organizational Accidents; Routledge: Abingdon, UK, 2016. [Google Scholar]

- Herbert, S. The architecture of complexity. Proc. Am. Philos. Soc. 1962, 106, 467–482. [Google Scholar]

- Checkland, P. Systems Thinking, Systems Practice; John Wiley & Sons: New York, NY, USA, 1981. [Google Scholar]

- Kelly, T. Software Certification: Where is Confidence Won and Lost? In Addressing Systems Safety Challenges; Anderson, T., Dale, C., Eds.; Safety Critical Systems Club: York, UK, 2014. [Google Scholar]

- Maier, M.W. The Art of Systems Architecting; CRC Press: Boca Raton, FL, USA, 2000. [Google Scholar]

- Department of Defense, Systems Management College. Systems Engineering Fundamentals; Technical Report; Defense Acquisition University Press: Fort Belvoir, VA, USA, 2001. [Google Scholar]

- Standard ISO/TS 18152:2010; Ergonomics of Human-System Interaction—Specification for the Process Assessment of Human-System Issues. International Organization for Standardization: Geneva, Switzerland, 2010.

- Standard ISO/IEC/IEEE 15288:2015; Systems and Software Engineering—System Life Cycle Processes. International Organization for Standardization: Geneva, Switzerland, 2015.

- National Research Council. Managing Risks. In Human-System Integration in the System Development Process: A New Look; National Academies Press: Washington, DC, USA, 2007; Book Section 4; pp. 75–90. [Google Scholar] [CrossRef]

- Asplund, F.; Holland, G.; Odeh, S. Conflict as software levels diversify: Tactical elimination or strategic transformation of practice? Saf. Sci. 2020, 126, 104682. [Google Scholar] [CrossRef]

- Brown, J.S.; Duguid, P. Knowledge and organization: A social-practice perspective. Organ. Sci. 2001, 12, 198–213. [Google Scholar] [CrossRef] [Green Version]

- Bolisani, E.; Scarso, E. The place of communities of practice in knowledge management studies: A critical review. J. Knowl. Manag. 2014, 18, 366–381. [Google Scholar] [CrossRef]

- Lesser, E.L.; Storck, J. Communities of practice and organizational performance. IBM Syst. J. 2001, 40, 831–841. [Google Scholar] [CrossRef] [Green Version]

- Leonard, D.; Sensiper, S. The role of tacit knowledge in group innovation. Calif. Manag. Rev. 1998, 40, 112–132. [Google Scholar] [CrossRef]

- Asplund, F.; McDermid, J.; Oates, R.; Roberts, J. Rapid Integration of CPS Security and Safety. IEEE Embed. Syst. Lett. 2018, 11, 111–114. [Google Scholar] [CrossRef] [Green Version]

- Choi, B.C.; Pak, A.W. Multidisciplinarity, interdisciplinarity and transdisciplinarity in health research, services, education and policy: 1. Definitions, objectives, and evidence of effectiveness. Clin. Investig. Med. 2006, 29, 351–364. [Google Scholar]

- Choi, B.C.; Pak, A.W. Multidisciplinarity, interdisciplinarity, and transdisciplinarity in health research, services, education and policy: 2. Promotors, barriers, and strategies of enhancement. Clin. Investig. Med. 2007, 30, E224–E232. [Google Scholar] [CrossRef] [Green Version]

- Nooteboom, B. Learning by interaction: Absorptive capacity, cognitive distance and governance. J. Manag. Gov. 2000, 4, 69–92. [Google Scholar] [CrossRef]

- Nooteboom, B.; Van Haverbeke, W.; Duysters, G.; Gilsing, V.; Van den Oord, A. Optimal cognitive distance and absorptive capacity. Res. Policy 2007, 36, 1016–1034. [Google Scholar] [CrossRef] [Green Version]

- Wenger, E. Communities of practice and social learning systems. Organization 2000, 7, 225–246. [Google Scholar] [CrossRef]

- Szulanski, G. The process of knowledge transfer: A diachronic analysis of stickiness. Organ. Behav. Hum. Decis. Process. 2000, 82, 9–27. [Google Scholar] [CrossRef] [Green Version]

- Rogers, E.M. Diffusion Networks. In Diffusion of Innovations, 4th ed.; The Free Press: New York, NY, USA, 1995; Book Section 8. [Google Scholar]

- Nochur, K.S.; Allen, T.J. Do nominated boundary spanners become effective technological gatekeepers? (technology transfer). IEEE Trans. Eng. Manag. 1992, 39, 265–269. [Google Scholar] [CrossRef]

- Haas, A. Crowding at the frontier: Boundary spanners, gatekeepers and knowledge brokers. J. Knowl. Manag. 2015, 19, 1029–1047. [Google Scholar] [CrossRef]

- Rasmussen, J.; Pejtersen, A.M.; Goodstein, L.P. Work Domain Analysis. In Cognitive Systems Engineering; John Wiley & Sons, Inc.: New York, NY, USA, 1994; Book Section 2. [Google Scholar]

- De Weck, O.L.; Roos, D.; Magee, C.L. From Inventions to Systems. In Engineering Systems: Meeting Human Needs in a Complex Technological World; MIT Press: Cambridge, MA, USA, 2011; Book Section 1; pp. 1–22. [Google Scholar]

- Sjöfartsverket. Available online: https://www.sjofartsverket.se/en/ (accessed on 19 March 2022).

- Trafikverket. Available online: https://www.trafikverket.se/en/ (accessed on 19 March 2022).

- Färjerederiet, T. Vision 45, Den Gula Färjan ska bli Grön; Report; Trafikverket: Borlänge, Sweden, 2020.

- Elwinger, T.; Ödeen, J. Functional Specification, Operator Environment & Integrated Shipboard System; Report; Sjöfartsverket: Norrkoping, Sweden, 2020.

- Borst, C.; Bijsterbosch, V.A.; Van Paassen, M.; Mulder, M. Ecological interface design: Supporting fault diagnosis of automated advice in a supervisory air traffic control task. Cogn. Technol. Work 2017, 19, 545–560. [Google Scholar] [CrossRef]

- Metzger, U.; Parasuraman, R. Automation in future air traffic management: Effects of decision aid reliability on controller performance and mental workload. In Decision Making in Aviation; Routledge: London, UK, 2017; pp. 345–360. [Google Scholar]

- E-OCVM, European Operational Concept Validation Methodology, Version 3.0, Volume I; European Organisation for the Safety of Air Navigation: Brussels, Belgium, 2010.

- Woods, D.; Dekker, S. Anticipating the effects of technological change: A new era of dynamics for human factors. Theor. Issues Ergon. Sci. 2000, 1, 272–282. [Google Scholar] [CrossRef]

- Quinn Patton, M. Qualitative Interviewing. In Qualitative Research and Evaluation Methods; Sage Publications, Inc.: London, UK, 2015; Book Section 7. [Google Scholar]

- Brinkmann, S.; Kvale, S. Conducting an Interview. In InterViews, Learning the Craft of Qualitative Research Interviewing, 3rd ed.; Sage Publications, Inc.: London, UK, 2015; Book Section 7; pp. 125–147. [Google Scholar]

- Wahl, T. Exploring a Supervisory Interface for a Fleet of of Semi-Autonomous Vessels. Master’s Thesis, Aalto University, Espoo, Finland, 2019. [Google Scholar]

- Quinn Patton, M. Fieldwork Strategies and Observation Methods. In Qualitative Research and Evaluation Methods; Sage Publications, Inc.: London, UK, 2015; Book Section 6. [Google Scholar]

- Glomsrud, J.A.; Ødegårdstuen, A.; Clair, A.L.S.; Smogeli, Ø. Trustworthy versus explainable AI in autonomous vessels. In Proceedings of the International Seminar on Safety and Security of Autonomous Vessels (ISSAV) and European STAMP Workshop and Conference (ESWC), Helsinki, Finland, 17–20 September 2019; pp. 37–47. [Google Scholar]

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2000, 30, 286–297.

- McDonald, N. Organisational Resilience and Industrial Risk. In Resilience Engineering: Concepts and Precepts; Ashgate: Aldershot, UK, 2006; Chapter 11; pp. 155–180.

- Maier, M.W. Architecting principles for systems-of-systems. Syst. Eng. J. Int. Counc. Syst. Eng. 1998, 1, 267–284.

- Paul, S.; Whittam, G. Business angel syndicates: An exploratory study of gatekeepers. Ventur. Cap. 2010, 12, 241–256.

- Whelan, E.; Donnellan, B.; Golden, W. Knowledge Diffusion in Contemporary R&D Groups; Re-Examining the Role of the Technological Gatekeeper. In Knowledge Management and Organizational Learning; King, W.R., Ed.; Springer: Boston, MA, USA, 2009; pp. 80–93.

- Tushman, M.L. Special boundary roles in the innovation process. Adm. Sci. Q. 1977, 22, 587–605.

- Marrone, J.A. Team boundary spanning: A multilevel review of past research and proposals for the future. J. Manag. 2010, 36, 911–940.

- Asplund, F.; Ulfvengren, P. Work functions shaping the ability to innovate: Insights from the case of the safety engineer. Cogn. Technol. Work 2021, 23, 143–159.

- Leite, L.; Rocha, C.; Kon, F.; Milojicic, D.; Meirelles, P. A survey of DevOps concepts and challenges. ACM Comput. Surv. (CSUR) 2019, 52, 1–35.

- Claps, G.G.; Svensson, R.B.; Aurum, A. On the journey to continuous deployment: Technical and social challenges along the way. Inf. Softw. Technol. 2015, 57, 21–31.

- Dosi, G. Technological paradigms and technological trajectories: A suggested interpretation of the determinants and directions of technical change. Res. Policy 1982, 11, 147–162.

- Knudsen, T.; Levinthal, D.A. Two faces of search: Alternative generation and alternative evaluation. Organ. Sci. 2007, 18, 39–54.

- Nicolini, D.; Mengis, J.; Swan, J. Understanding the role of objects in cross-disciplinary collaboration. Organ. Sci. 2012, 23, 612–629.

- Hassall, M.E.; Sanderson, P.M. A formative approach to the strategies analysis phase of cognitive work analysis. Theor. Issues Ergon. Sci. 2014, 15, 215–261.

| | Human and Technology Integration | Operating Procedures | Communications Requirements |
|---|---|---|---|
| Performance | A delay introduced by the operational concept. | Communication with irrelevant stakeholders. | A search for irrelevant information. |
| Operability | Confusion introduced by operational concept. | Not all relevant information sought. | |
| Acceptability | Request for information not in operational concept. | | |

| Interviewee | Maritime Experience | Background |
|---|---|---|
| 1 | 20+ years | Shipmaster’s degree, Merchant Navy, Professional Maritime Education |
| 2 | 5+ years | Shipmaster’s degree, Vessel Traffic Services (VTSs) |
| 3 | 25+ years | Shipmaster’s degree, Navy, VTSs |
| 4 | 10+ years | Shipmaster’s degree, Offshore industry, Passenger traffic, VTSs |
| 5 | 25+ years | Shipmaster’s degree, Pilotage, VTSs |
| 6 | 15+ years | Shipmaster’s degree, Merchant Navy, VTSs |
| 7 | 20+ years | Shipmaster’s degree, Merchant Navy, Passenger traffic |
| 8 | 15+ years | Maritime engineer, Passenger traffic |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asplund, F.; Ulfvengren, P. Engineer-Centred Design Factors and Methodological Approach for Maritime Autonomy Emergency Response Systems. Safety 2022, 8, 54. https://doi.org/10.3390/safety8030054