Development and Reliability Review of an Assessment Tool to Measure Competency in the Seven Elements of the Risk Management Process: Part Three—Evaluation of the Group Results from the RISK Tool

Abstract

1. Introduction

- risk management for WHS is often conducted in an ad hoc manner by individuals or teams who are not competent in the seven elements of risk management, which likely compromises outcomes and is especially critical in high-risk industries;

- risk management for WHS is better undertaken in teams that are collectively optimised according to competence in the seven elements of the risk management process;

- to recruit/select a collectively optimised team, a tool is required to measure an individual’s competence in the seven elements of the risk management process.

- Establishing the context;

- Risk identification;

- Risk analysis;

- Risk evaluation;

- Risk treatment;

- Communication and consultation;

- Monitoring and reviewing.

1.1. Work Teams?

“a group of somewhere between two and 12 individuals performing a common task, albeit with specialist roles”.

1.2. The Benefits and Limitations of Using Teams in the Workplace

1.3. Consideration of Biases in Work Teams

1.4. Assembling Work Teams—Homogeneous vs. Heterogeneous Teams

2. Aim

3. Method

3.1. Background to Previous Study Methods

3.2. Introduction to This Study’s Methods

3.3. Participants

3.4. Procedure and Material

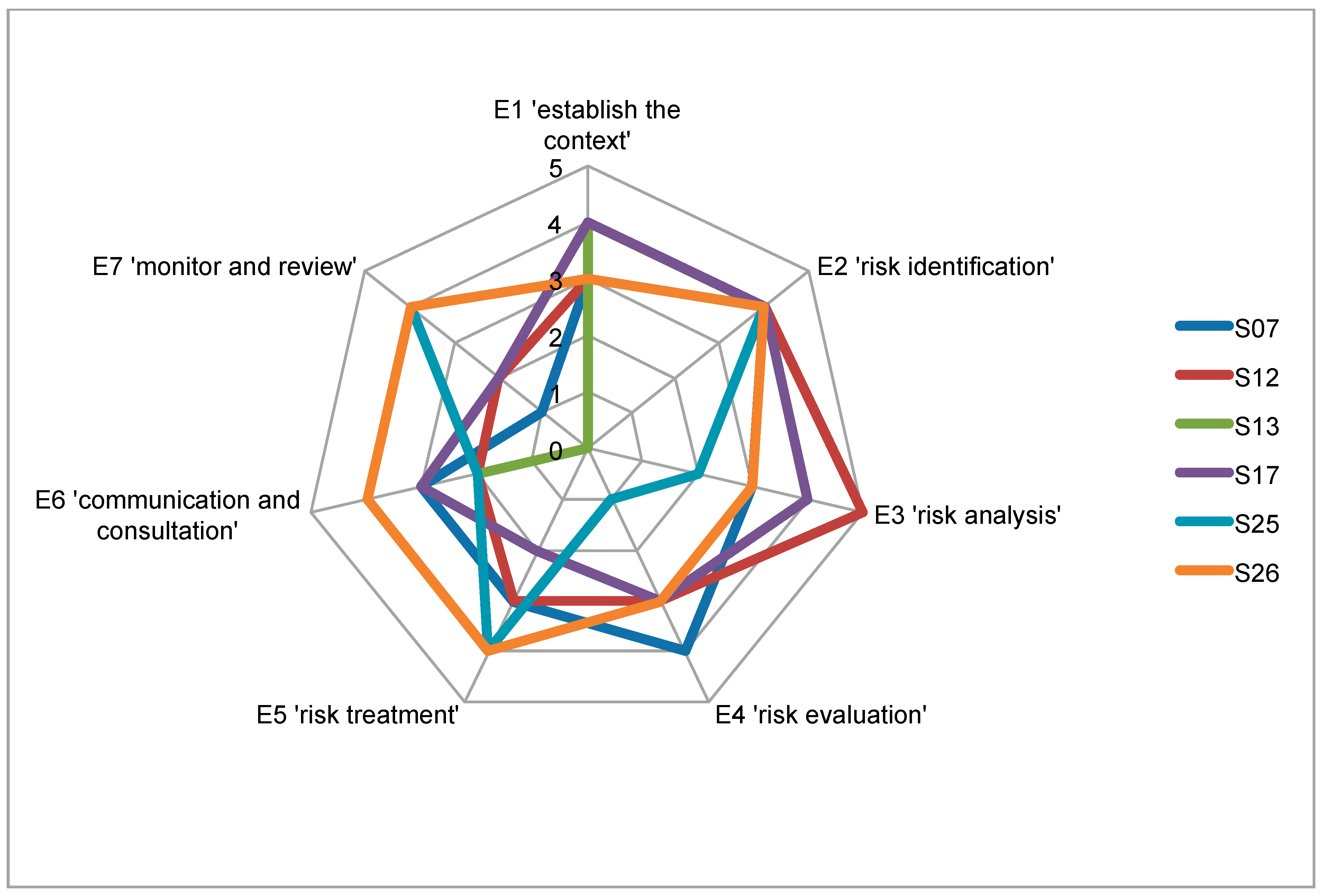

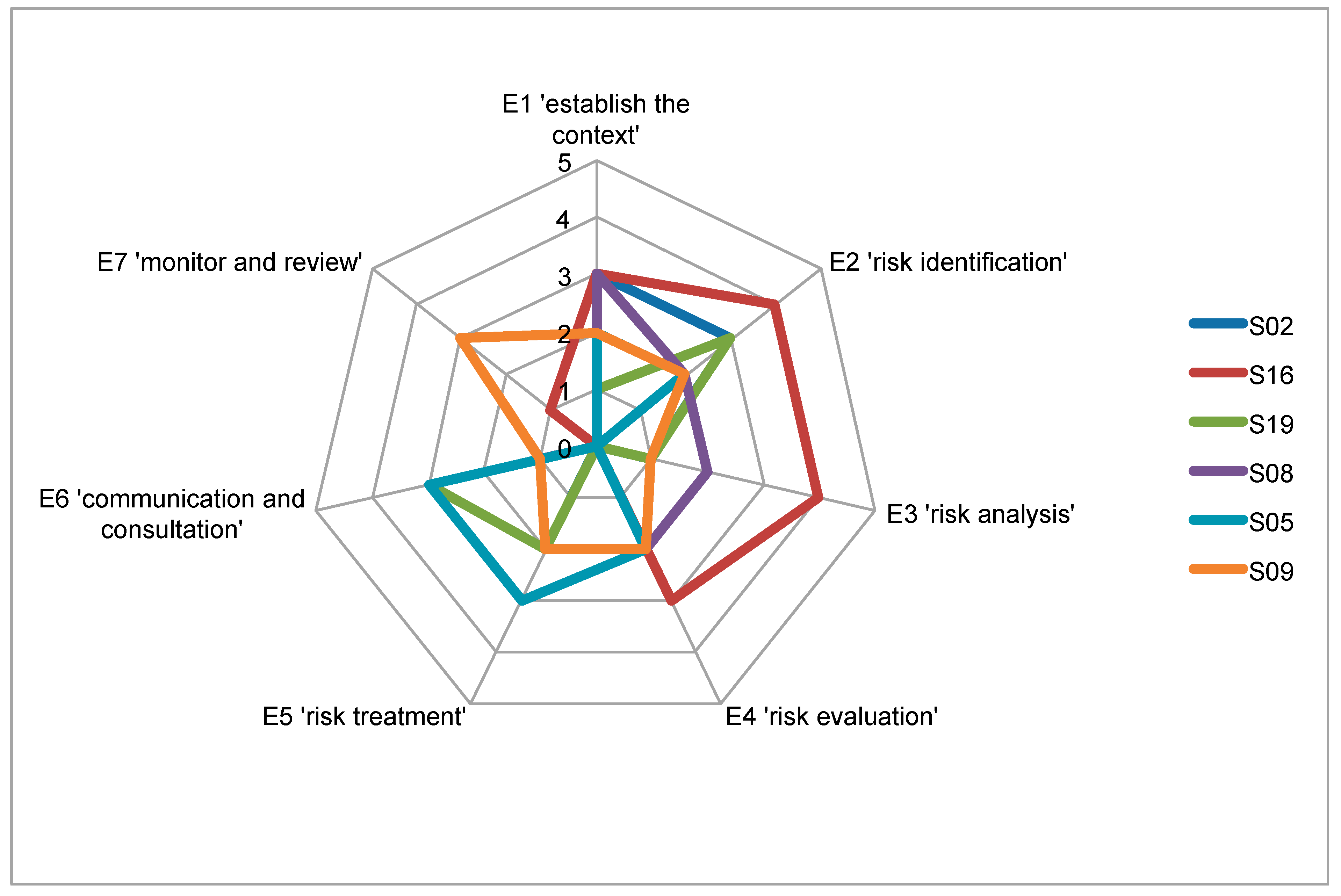

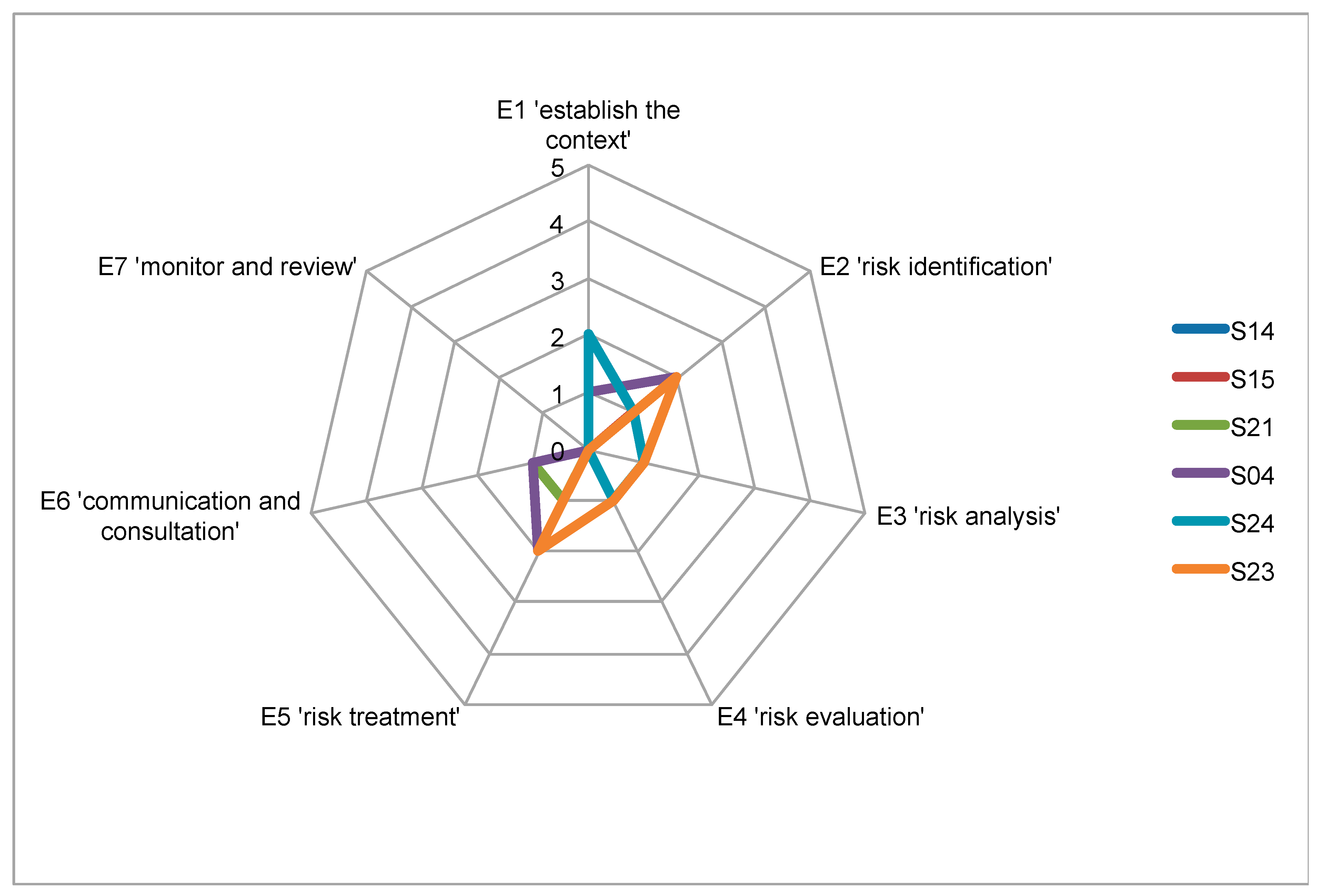

3.4.1. Team Selection

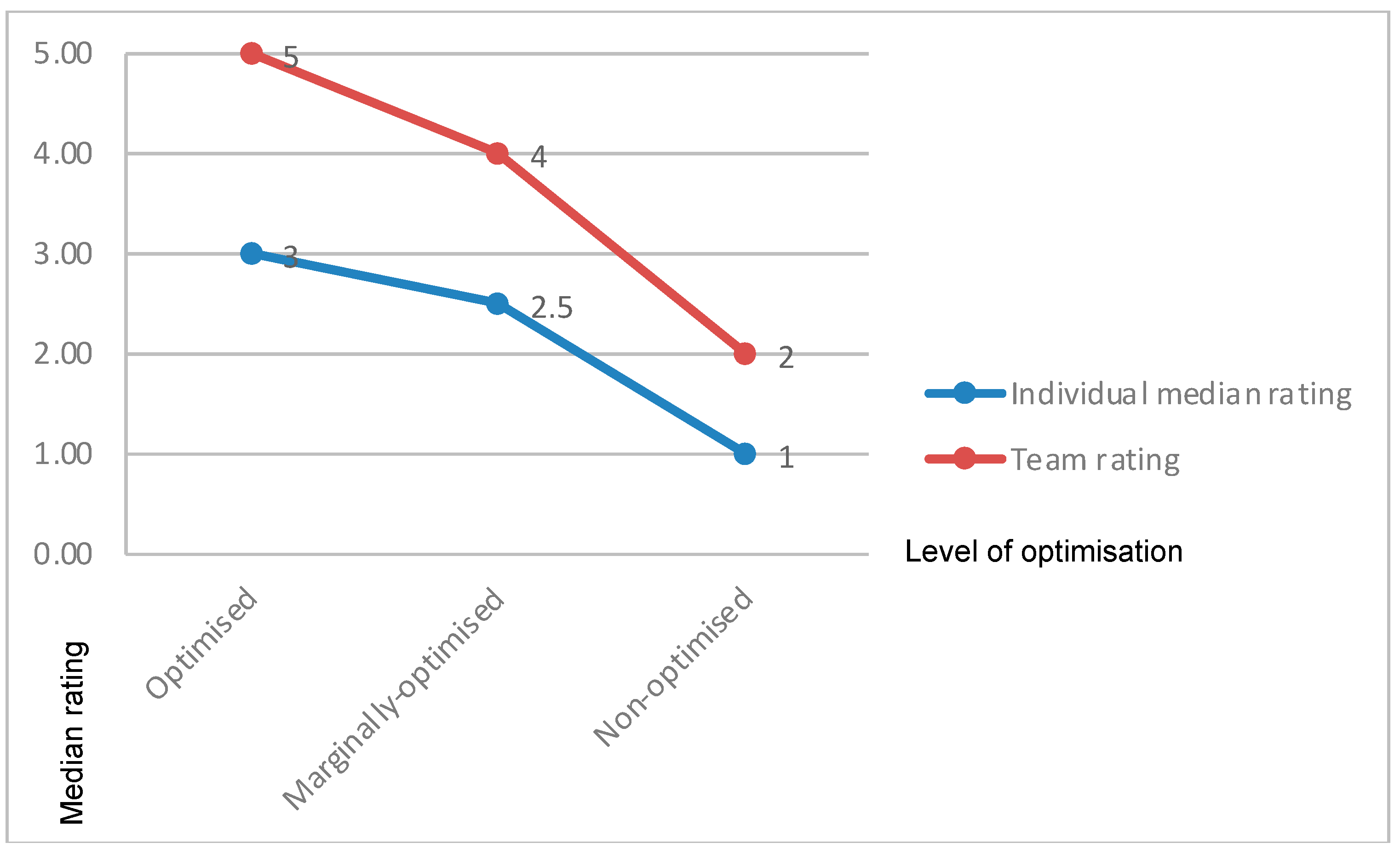

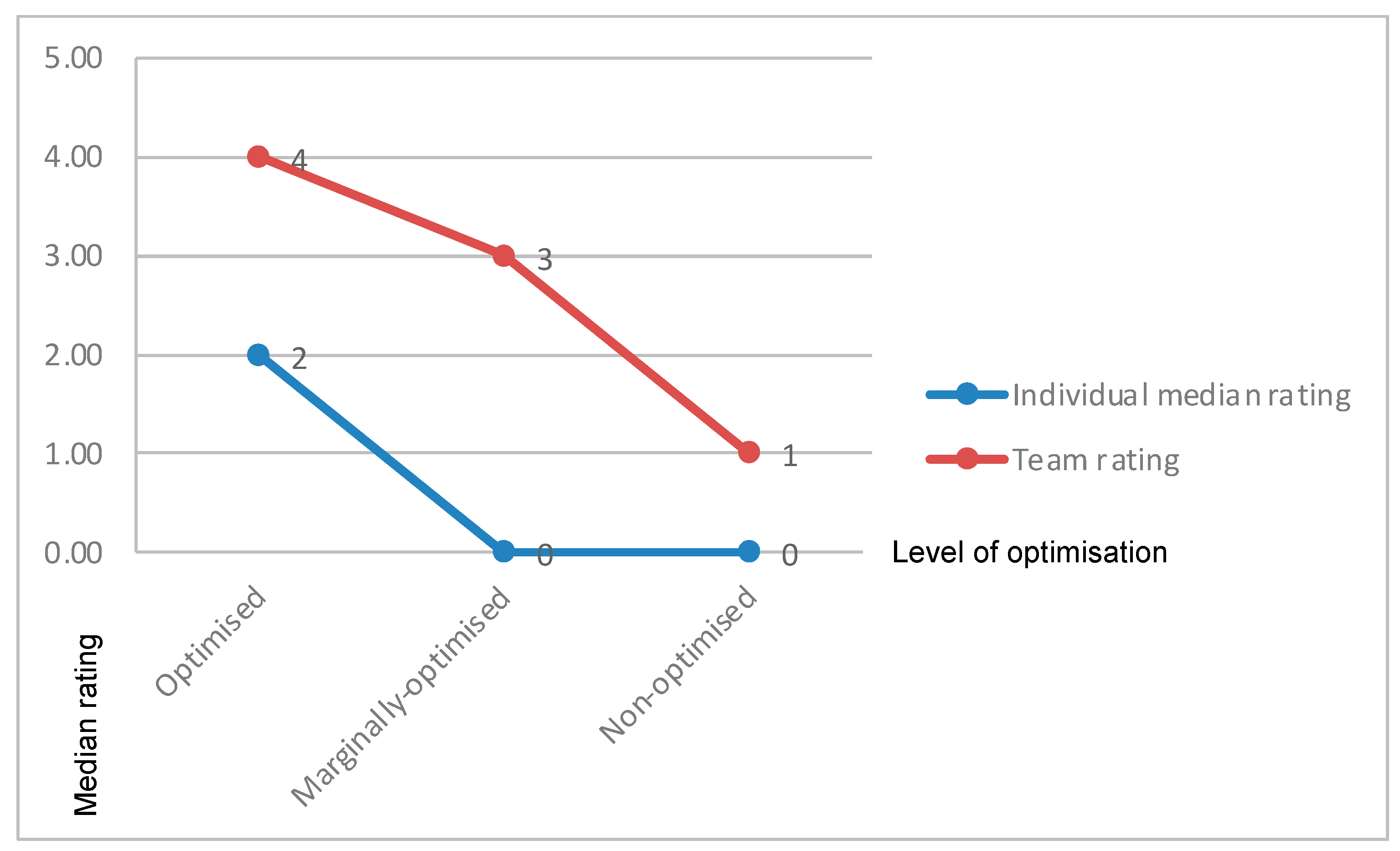

- Team A, the collectively optimised team, had at least one person who was rated as having some high level (4) or expert level (5) competency per element;

- Team B, the marginally optimised team, had at least one person who was rated as having some high level (4) or just above average (3) competency per element;

- Team C, the sub-optimised team, comprised people whose ratings ranged from no competency (0) to just below average competency (2) per element.
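The three team definitions above can be read as a rule on the best rating available in a team for each of the seven elements. The following is a minimal sketch of that reading, not the authors' selection procedure: the function name, the data layout, and the treatment of the Team B rule as "at least a 3 on every element, but not a 4 on all of them" are assumptions made for illustration, and the example ratings are hypothetical.

```python
from typing import Dict, List

ELEMENTS = 7  # the seven elements of the risk management process


def classify_team(members: Dict[str, List[int]]) -> str:
    """Return 'A', 'B', 'C' or 'unclassified' for a team of 0-5 ratings.

    Assumed reading of the team definitions:
      A: every element has at least one member rated 4 or 5
      B: every element has at least one member rated 3 or above
      C: no member is rated above 2 on any element
    """
    for name, ratings in members.items():
        if len(ratings) != ELEMENTS or any(not 0 <= r <= 5 for r in ratings):
            raise ValueError(f"{name}: expected {ELEMENTS} ratings in 0-5")

    # Best rating available in the team for each element.
    best = [max(r[i] for r in members.values()) for i in range(ELEMENTS)]

    if all(b >= 4 for b in best):
        return "A"
    if all(b >= 3 for b in best):
        return "B"
    if all(b <= 2 for b in best):
        return "C"
    return "unclassified"


# Hypothetical ratings, for illustration only.
optimised = {"p1": [5, 4, 2, 1, 4, 3, 2], "p2": [2, 2, 4, 5, 1, 4, 4]}
sub_optimised = {"p3": [2, 1, 0, 2, 1, 2, 0], "p4": [1, 2, 2, 0, 2, 1, 1]}
print(classify_team(optimised), classify_team(sub_optimised))
```

Taking the per-element maximum reflects the idea of collective optimisation: a team is covered on an element as soon as one member is competent in it.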

3.4.2. Team Exercise

- welcome participants on arrival;

- be a neutral guide, focussing on group etiquette only and not providing risk management content, e.g., encouraging input from all team members, keeping the team on task, reducing distractions, and reminding the team about time limits, appropriate language and respect for others’ opinions.

- Emergency management procedures.

- Facility and comfort arrangement familiarity.

- Participants would complete the same risk scenario exercise they had previously completed (Marling et al. [3]), but this time as a team. Each participant was given a copy of the scenario narrative and the associated diagram.

- They could use the computer in the room to search for any reference material.

- They could use the whiteboard and butcher’s paper to assist them.

- As previously advised, the forum would be filmed (at this point, the video recording commenced).

- Participants were asked to affirm verbally that they were aware of the ethical considerations and were willing to proceed on that basis, including their right to withdraw from the risk scenario exercise at any time for any reason.

- Participants were told that they might find it helpful to spend the first five minutes determining the process they would use to complete the risk scenario exercise (i.e., a meta-process). Houghton et al. [18] suggest that establishing procedures (presumably for the team process) may counter biases, rather than relying on team membership alone to negate them.

3.5. Analysis Strategy

4. Results

4.1. Inter-Rater Reliability Testing

4.2. Team Performance

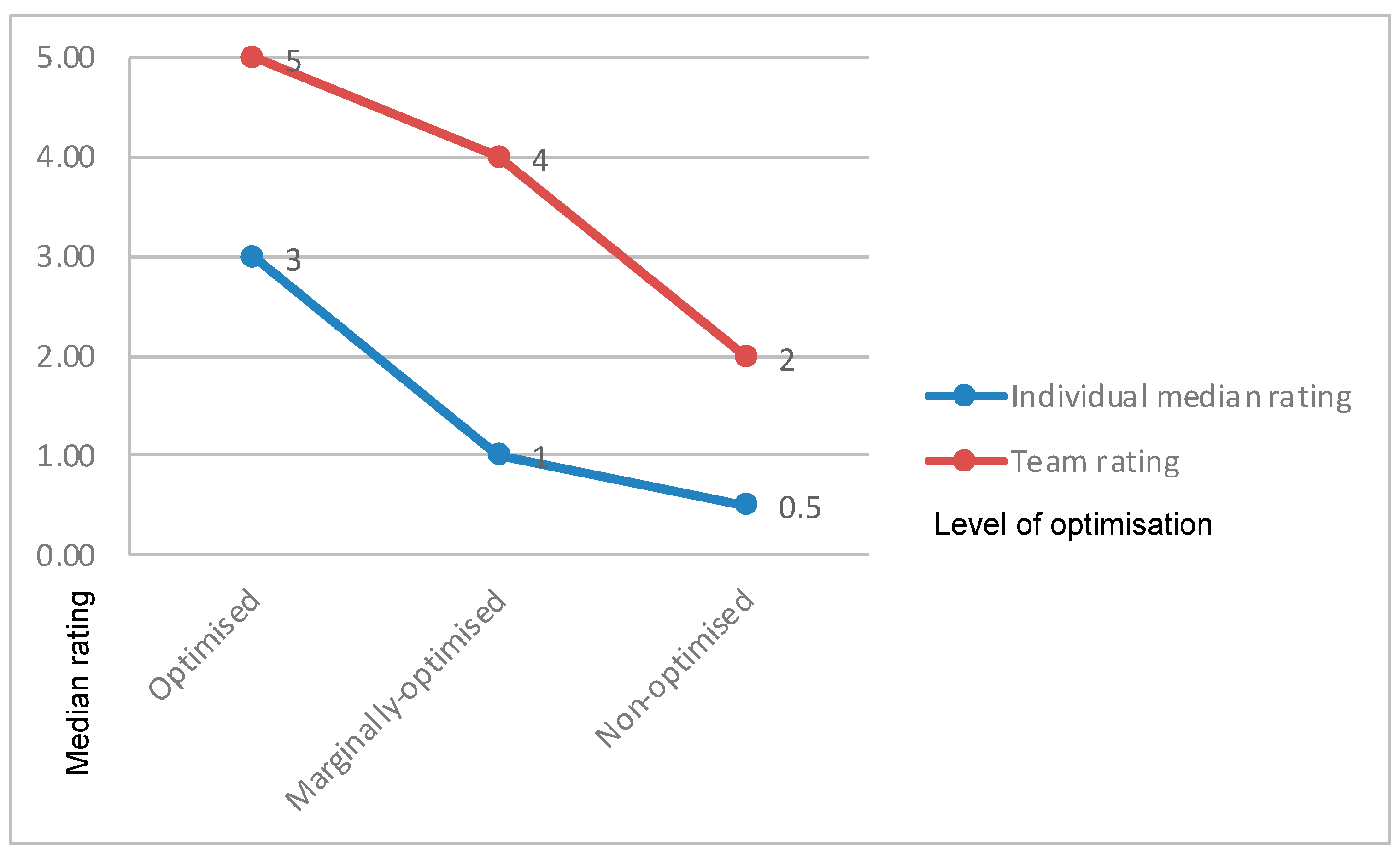

4.2.1. E1 ‘Establishing the Context’

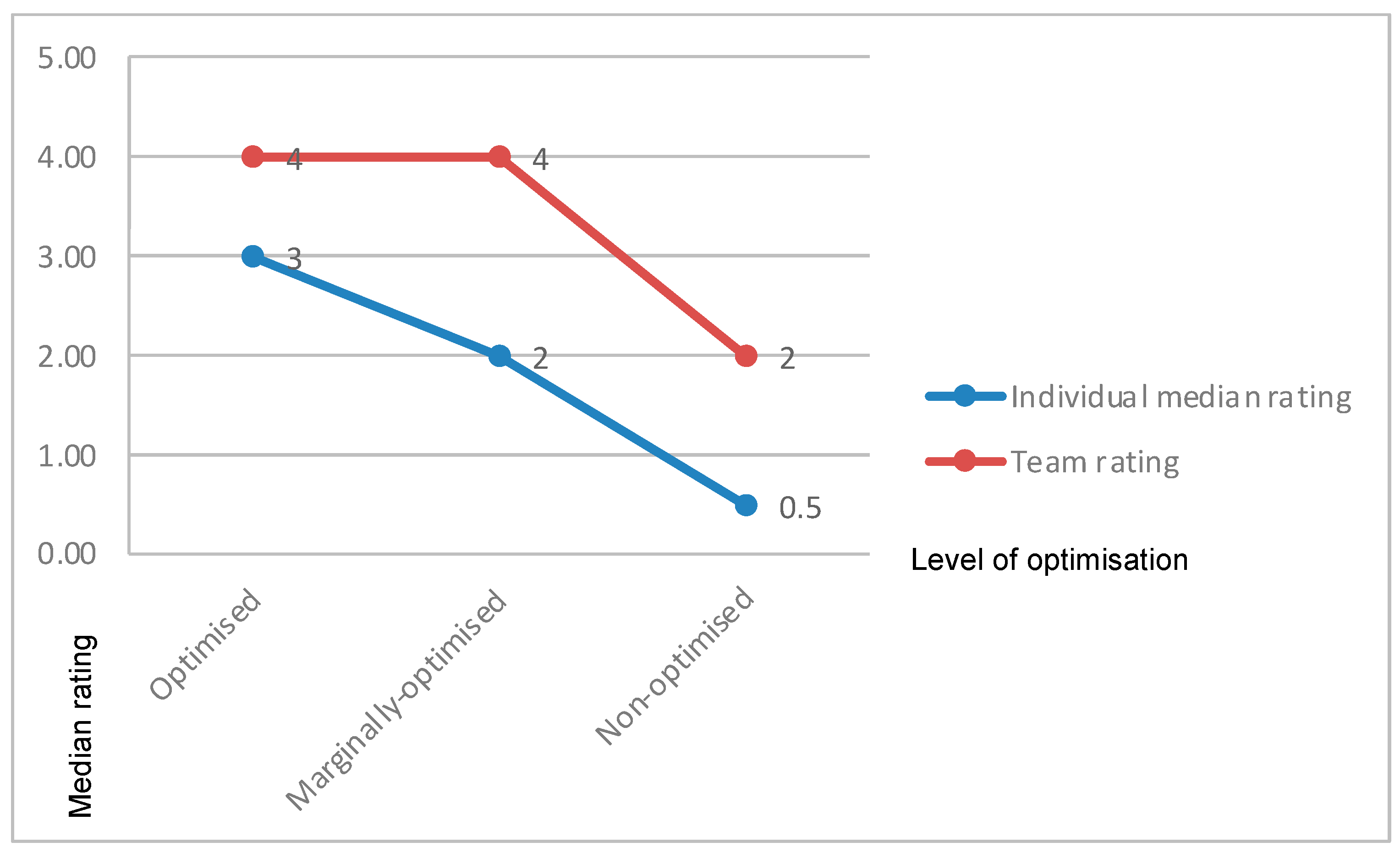

4.2.2. E2 ‘Risk Identification’

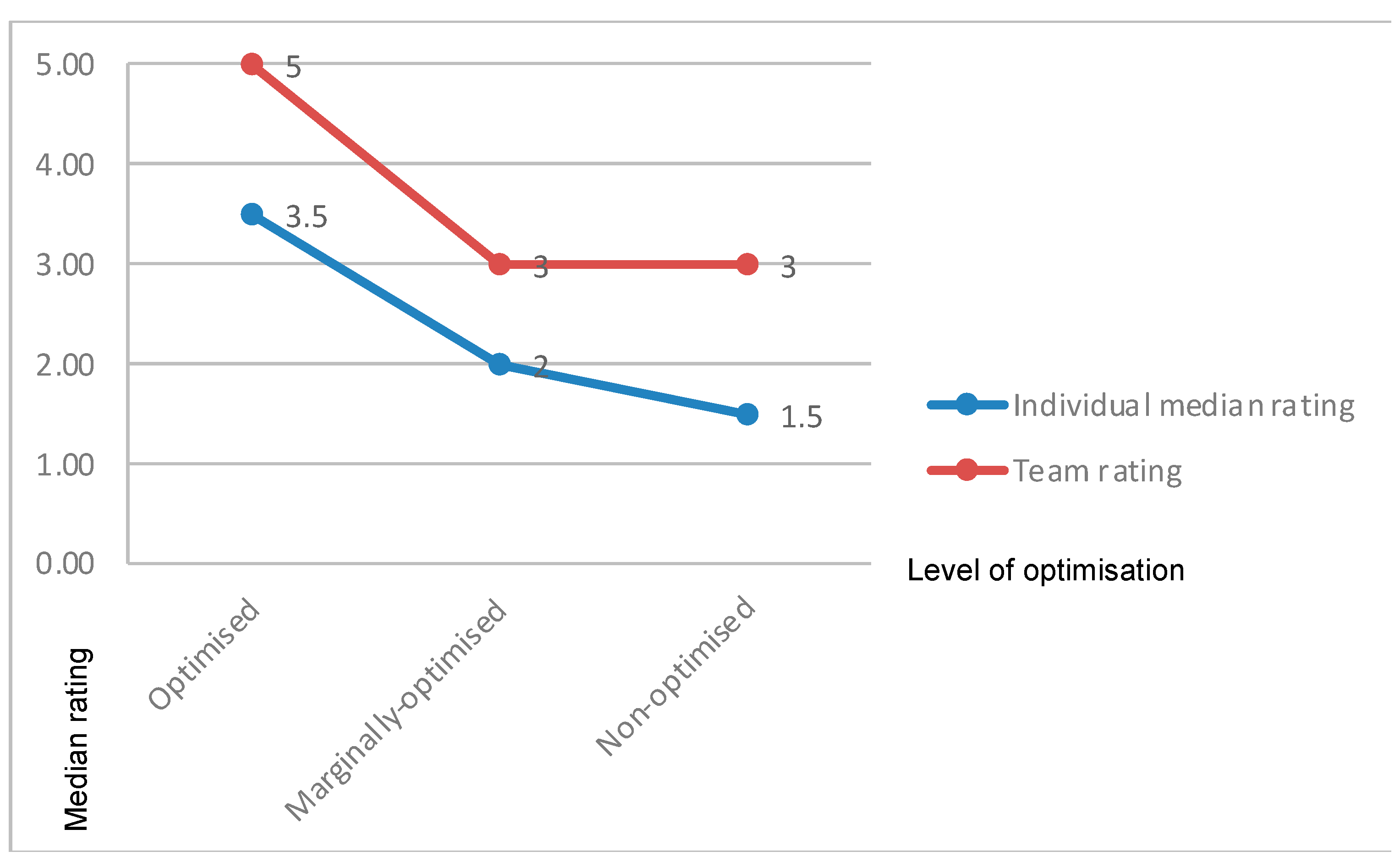

4.2.3. E3 ‘Risk Analysis’

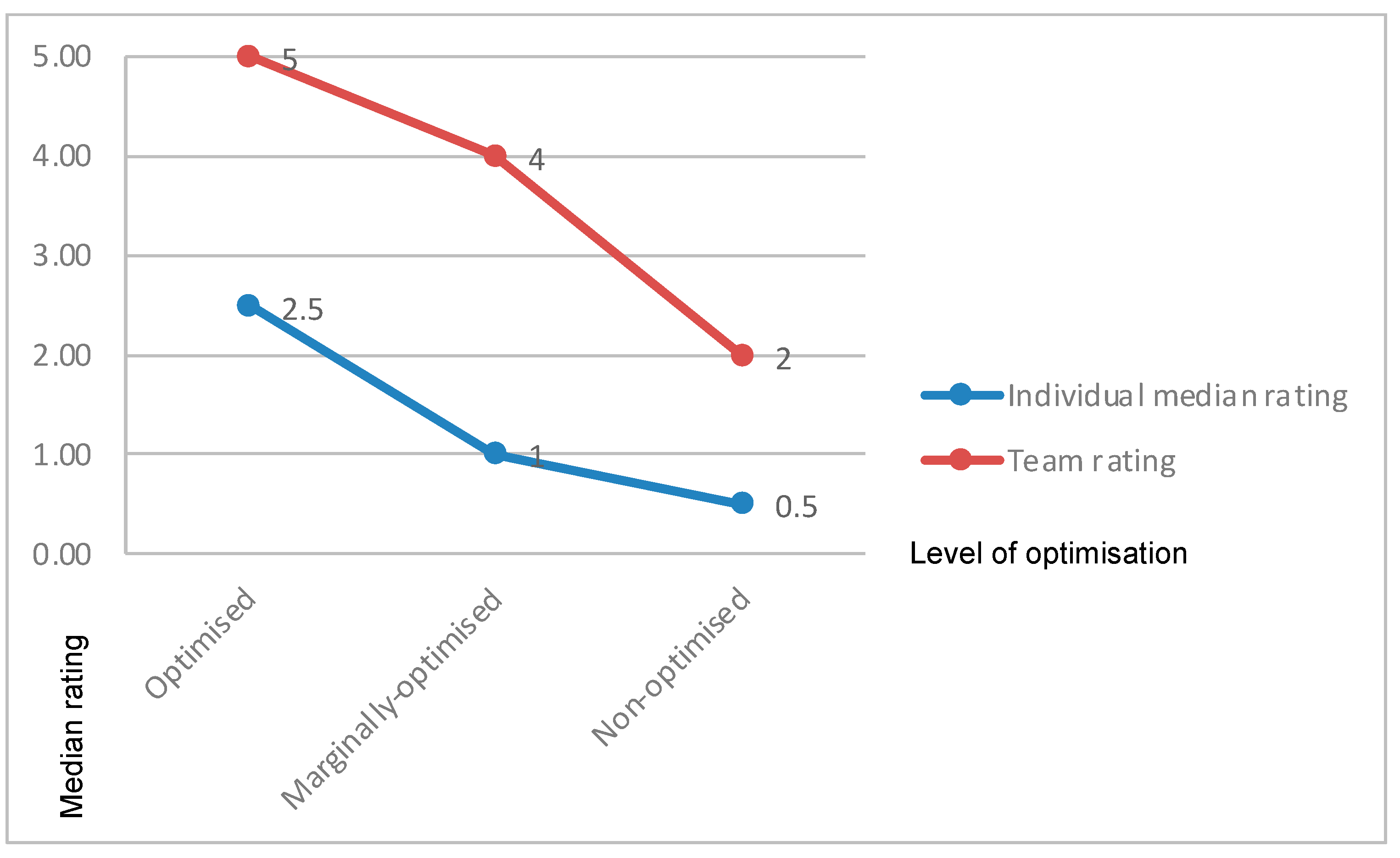

4.2.4. E4 ‘Risk Evaluation’

4.2.5. E5 ‘Risk Treatment’

4.2.6. E6 ‘Communication and Consultation’

4.2.7. E7 ‘Monitor and Review’

5. Discussion

6. Overall Outcomes and Recommendations for Future Work

6.1. Recommendations for Future Work

6.1.1. Further Research Regarding the Use of Teams to Conduct the Risk Management Process

6.1.2. RISKometric

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Group Code | E1 O1 | E1 O2 | E1 A | E2 O1 | E2 O2 | E2 A | E3 O1 | E3 O2 | E3 A | E4 O1 | E4 O2 | E4 A | E5 O1 | E5 O2 | E5 A | E6 O1 | E6 O2 | E6 A | E7 O1 | E7 O2 | E7 A |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Group A | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 4 | 4 | 4 | 5 | 5 | 5 | 4 | 5 | 5 | 4 | 4 | 4 |
| Group B | 4 | 3 | 4 | 3 | 4 | 4 | 4 | 4 | 4 | 3 | 4 | 4 | 3 | 3 | 3 | 4 | 3 | 4 | 3 | 3 | 3 |
| Group C | 2 | 2 | 2 | 4 | 3 | 3 | 2 | 2 | 2 | 2 | 2 | 2 | 3 | 4 | 3 | 2 | 2 | 2 | 1 | 1 | 1 |
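As a descriptive check on the ratings in the table above, the two observers' scores can be compared element by element. The sketch below counts exact agreements and the largest gap between O1 and O2 for each group. It is an illustration only and is not presented as the inter-rater reliability test used in the study; the function and variable names are hypothetical.

```python
# Observer ratings transcribed from the Appendix A table (elements E1 to E7).
RATINGS = {
    "Group A": {"O1": [5, 5, 5, 4, 5, 4, 4], "O2": [5, 4, 5, 4, 5, 5, 4]},
    "Group B": {"O1": [4, 3, 4, 3, 3, 4, 3], "O2": [3, 4, 4, 4, 3, 3, 3]},
    "Group C": {"O1": [2, 4, 2, 2, 3, 2, 1], "O2": [2, 3, 2, 2, 4, 2, 1]},
}


def agreement_summary(o1, o2):
    """Count exact agreements and the largest absolute gap between raters."""
    diffs = [abs(a - b) for a, b in zip(o1, o2)]
    return {
        "exact": sum(d == 0 for d in diffs),  # elements rated identically
        "max_gap": max(diffs),                # worst disagreement in points
        "n": len(diffs),                      # number of elements compared
    }


summary = {g: agreement_summary(r["O1"], r["O2"]) for g, r in RATINGS.items()}
for group, result in summary.items():
    print(group, result)
```

On these data the observers never differ by more than one rating point on any element.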

References

- Marling, G.J. Optimising Risk Management Team Processes. Ph.D. Thesis, University of Queensland, Brisbane, Australia, 2015.

- Marling, G.J.; Horberry, T.; Harris, J. Development and reliability of an assessment tool to measure competency in the seven elements of the risk management process: Part one—The RISKometric. Safety 2020, 7, 1.

- Marling, G.; Horberry, T.; Harris, J. Development and Reliability Review of an Assessment Tool to Measure Competency in the Seven Elements of the Risk Management Process: Part Two—Evaluation of the Individual Results from the RISKometric Tool. Safety 2022, 8, 71.

- Mathieu, J.; Maynard, M.T.; Rapp, T.; Gilson, L. Team Effectiveness 1997–2007: A Review of Recent Advancements and a Glimpse into the Future. J. Manag. 2008, 34, 410–476.

- Kozlowski, S.W.J.; Bell, B.S. Work groups and teams in organizations. In Handbook of Psychology: Industrial and Organizational Psychology; Borman, W.C., Ilgen, D.R., Klimoski, R.J., Eds.; Wiley: London, UK, 2003; Volume 12, pp. 333–375.

- Cohen, S.G.; Bailey, D.E. What makes teams work: Group effectiveness research from the shop floor to the executive suite. J. Manag. 1997, 23, 239–290.

- Devine, D.J. A review and integration of classification systems relevant to teams in organizations. Group Dyn. Theory Res. Pract. 2002, 6, 291–310.

- Hackman, R. Groups that Work and Those that Don’t; Jossey-Bass: San Francisco, CA, USA, 1990.

- Sundstrom, E. The challenges of supporting work team effectiveness. In Supporting Work Team Effectiveness; Sundstrom, E., Associates, Eds.; Jossey-Bass: San Francisco, CA, USA, 1999; pp. 3–23.

- Reason, J.; Andersen, H.B. Errors in a Team Context; Mohawc Belgirate Workshop: Belgirate, Italy, 1991.

- Guzzo, R.A.; Dickson, M.W. Teams in organizations: Recent research on performance and effectiveness. Annu. Rev. Psychol. 1996, 47, 307–338.

- Sasou, K.; Reason, J. Team errors: Definition and taxonomy. Reliab. Eng. Syst. Saf. 1999, 65, 1–9.

- Bernsen, N.O.; Reason, J. Errors in a Team Context; Mohawc Belgirate Workshop: Belgirate, Italy, 1993.

- Salas, E.; Dickinson, T.L.; Converse, S.A. Toward an Understanding of Team Performance and Training. In Teams: Their Training and Performance; Swezey, R.W., Salas, E., Eds.; Ablex: Norwood, NJ, USA, 1992; pp. 3–29.

- McEwan, D.; Ruissen, G.R.; Eys, M.A.; Zumbo, B.D.; Beauchamp, M.R. The Effectiveness of Teamwork Training on Teamwork Behaviors and Team Performance: A Systematic Review and Meta-Analysis of Controlled Interventions. PLoS ONE 2017, 12, e0169604.

- Hackman, J.R.; Katz, N. Group behavior and performance. In Handbook of Social Psychology, 5th ed.; Fiske, S.T., Gilbert, D.T., Lindzey, G., Eds.; John Wiley & Sons: West Sussex, UK, 2010; Volume 2, pp. 1251–1280.

- Robbins, H.; Finley, M. The New Why Teams Don’t Work. 2011. Available online: www.readhowyouwant.com (accessed on 11 March 2023).

- Hogan, R.; Raza, S.; Driskell, J.E. Personality, Team Performance, and Organizational Context. In Psychology and Productivity; Springer: Boston, MA, USA, 1988; pp. 93–103.

- Houghton, S.M.; Simon, M.; Aquino, K.; Goldberg, C.B. No Safety in Numbers: Persistence of Biases and Their Effects on Team Risk Perception and Team Decision Making. Group Organ. Manag. 2000, 25, 325–353.

- Kaplan, R.S.; Leonard, D.; Mikes, A. Novel Risks; Working Paper Summaries, Working Knowledge; Harvard Business School: Boston, MA, USA, 27 March 2020.

- Cooper, A.C.; Woo, C.Y.; Dunkelberg, W.C. Entrepreneurs’ perceived chance of success. J. Bus. Ventur. 1988, 3, 97–108.

- Feeser, H.R.; Willard, G.E. Founding strategy and performance: A comparison of high and low growth high tech firms. Strateg. Manag. J. 1990, 11, 87–98.

- McCarthy, S.M.; Schoorman, F.D.; Cooper, A.C. Reinvestment decisions by entrepreneurs: Rational decision-making or escalation of commitment? J. Bus. Ventur. 1993, 8, 9–24.

- Tversky, A.; Kahneman, D. Judgment under uncertainty: Heuristics and biases. Science 1974, 185, 1124–1131.

- Langer, E.J. The illusion of control. J. Pers. Soc. Psychol. 1975, 32, 311–328.

- Russo, J.E.; Schoemaker, P.J.H. Managing overconfidence. Sloan Manag. Rev. 1992, 33, 7–17.

- Petriglieri, G.; Wood, J.D. The invisible revealed: Collusion as an entry to the team unconscious. Trans. Anal. J. 2003, 33, 332–343.

- Blanding, M. Crowdsourcing Is Helping Hollywood Reduce the Risk of Movie-Making; Working Paper Summaries, Working Knowledge; Harvard Business School: Boston, MA, USA, 2019.

- Sundstrom, E.; De Meuse, K.P.; Futrell, D. Work Teams: Applications and effectiveness. Am. Psychol. 1990, 45, 120–133.

- van Knippenberg, D.; Schippers, M.C. Workgroup diversity. Annu. Rev. Psychol. 2007, 58, 515–541.

- Mello, A.S.; Ruckes, M.E. Team Composition. J. Bus. 2006, 79, 1019–1039.

- Harrison, D.A.; Price, K.H.; Bell, M.P. Beyond relational demography: Time and the effects of surface- and deep-level diversity on work group cohesion. Acad. Manag. J. 1998, 41, 96–107.

- Hoffman, R.L.; Maier, N.R.F. Quality and acceptance of problem solutions by members of homogeneous and heterogeneous groups. J. Abnorm. Soc. Psychol. 1961, 62, 401–407.

- van Knippenberg, D.; De Dreu, C.K.W.; Homan, A.C. Work group diversity and group performance: An integrative model and research agenda. J. Appl. Psychol. 2004, 89, 1008.

- Carrillo, J.D.; Gromb, D. Culture in Organisations: Inertia and Uniformity; Discussion Paper # 3613; Centre for Economic Policy Research: London, UK, 2002.

- Bantel, K.; Jackson, S. Top management and innovations in banking: Does the composition of the top team make a difference? Strateg. Manag. J. 1989, 10, 107–124.

- Magjuka, R.J.; Baldwin, T.T. Team-based employee involvement programs: Effects of design and administration. Pers. Psychol. 1991, 44, 793–812.

- Jackson, S.E.; May, K.E.; Whitney, K. Understanding the dynamics of configuration in decision making teams. In Team Effectiveness and Decision Making in Organizations; Jossey-Bass: San Francisco, CA, USA, 1995; pp. 204–261.

- Brewer, M.B. In-group bias in the minimal intergroup situation: A cognitive-motivational analysis. Psychol. Bull. 1979, 86, 307–324.

- Brewer, M.B.; Brown, R.J. Intergroup relations. In Handbook of Social Psychology, 2nd ed.; Gilbert, D.T., Fiske, S.T., Eds.; McGraw-Hill: New York, NY, USA, 1998; Volume 4, pp. 554–594.

- Tajfel, H.; Turner, J.C. The social identity theory of intergroup behavior. In Psychology of Intergroup Relations; Worchel, S., Austin, W., Eds.; Nelson Hall: Chicago, IL, USA, 1986; pp. 7–24.

- O’Reilly, C.A.; Caldwell, D.F.; Barnett, W.P. Work Group Demography, Social Integration, and Turnover. Admin. Sci. Q. 1989, 34, 21–37.

- Murnighan, J.K.; Conlon, D.E. The dynamics of intense work groups: A study of British string quartets. Admin. Sci. Q. 1991, 36, 165–186.

- Campion, M.A.; Medsker, G.J.; Higgs, C. Relations between work group characteristics and effectiveness: Implications for designing effective work groups. Pers. Psychol. 1993, 46, 823–847.

- O’Reilly, C.A.; Flatt, S. Executive Team Demography, Organisational Innovation and Firm Performance; University of California: Berkeley, CA, USA, 1989; (Unpublished manuscript).

- Ancona, D.G.; Caldwell, D.F. Demography and design: Predictors of new product team performance. Organ. Sci. 1992, 3, 321–341.

- Triandis, H.C.; Kurowski, L.L.; Gelfand, M.J. Workplace diversity. In Handbook of Industrial and Organizational Psychology, 2nd ed.; Consulting Psychologists Press: Palo Alto, CA, USA, 1994; Volume 4, pp. 769–827.

- Field, A. Discovering Statistics Using IBM SPSS Statistics; Sage Publications Ltd.: London, UK, 2012.

- ERM Initiative Faculty; Greizinger, T. Collaborative Risk Management Fundamentals. N.C. State Poole College of Management, Enterprise Risk Management Initiative. 2013. Available online: https://erm.ncsu.edu/library/article/collaborative-risk-management-fundamentals (accessed on 11 March 2023).

- Emery, F.E. Characteristics of socio-technical systems. In The Emergence of a New Paradigm of Work; ANU/CCE: Canberra, Australia, 1959.

- Trist, E. Occasional Paper 2: The Evolution of Socio-Technical Systems: A Conceptual Framework and an Action Research Project; Ontario Ministry of Labour: Toronto, ON, Canada, 1981.

- Hayes, J. Safety Decision Making in High Hazard Organisations at the Production/Maintenance Interface—A Literature Review; Australian National University: Canberra, Australia, 2006.

- Flin, R.; O’Connor, P.; Crichton, M. Safety at the Sharp End: A Guide to Non-Technical Skills; Ashgate Publishing Ltd.: Hampshire, UK, 2008.

- Lyon, B.K.; Hollcroft, B. Risk Assessments Top 10 Pitfalls & Tips for Improvement. Prof. Saf. 2012, 57, 28–34.

- Zou, P.X.W.; Sunindijo, R.Y. Skills for managing safety risk, implementing safety task, and developing positive safety climate in construction project. Autom. Constr. 2013, 34, 92–100.

- Burnham, M. Targeting zero. Prof. Saf. 2015, 60, 40–45.

| | Study 1 | Study 2 | Study 3 |
|---|---|---|---|
| Participants | 26 | 26 | 26 |
| Aim | Develop and evaluate an assessment tool to assess the competence of individuals in different elements of the risk management process. | Compare individuals’ RISKometric results with their performance in a risk scenario exercise, thereby providing a reliability review for the RISKometric (n = 18). Identify whether performance in the risk scenario exercise remains stable over time (n = 8). | Determine whether a team collectively competent in risk management would perform a risk scenario exercise better than less optimised teams (n = 18). Determine whether teams performed this task better than individuals (n = 26). |
| Materials | RISKometric | RISKometric; risk scenario exercise | RISKometric (used to form groups); risk scenario exercise |
| Outcome focus | Individual difference | Individual difference; individual difference across time | Group difference; group vs. individual difference |
| Outcome measure | Evaluation ratings (0–5) given by participant, peer, upline and downline colleagues | Correlation between RISKometric ratings and risk scenario exercise performance (as evaluated by two observers) | Risk scenario exercise performance (as evaluated by two observers) |
| Reference | Marling et al. [2] | Marling et al. [3] | Current study |

| | Team A | Team B | Team C |
|---|---|---|---|
| Risk management experience | | | |
| Collective | 136 years | 203 years | 178 years |
| M | 20.00 years | 34.50 years | 34.50 years |
| R | 8–44 years | 25–43 years | 8–44 years |
| Interquartile 1 | 9.75 years | 27.50 years | 24.25 years |
| Interquartile 2 | 33.25 years | 39.25 years | 35.75 years |
| Interquartile range | 23.50 years | 11.75 years | 11.50 years |
| Age | | | |
| M | 40.50 years | 54.00 years | 51.50 years |
| R | 29–61 years | 43–60 years | 28–62 years |
| Interquartile 1 | 30.00 years | 46.25 years | 42.50 years |
| Interquartile 2 | 54.00 years | 58.00 years | 54.50 years |
| Interquartile range | 24.00 years | 11.75 years | 12.00 years |
| Level | | | |
| Board manager/senior executive | 0 | 1 | 0 |
| Senior manager | 2 | 3 | 2 |
| Middle manager | 1 | 1 | 1 |
| Supervisors/foremen/team leaders | 1 | 1 | 1 |
| Operators/workers | 2 | 0 | 2 |
| Gender | | | |
| Male | 5 | 6 | 4 |
| Female | 1 | 0 | 2 |
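The spread figures in the table above can be checked with simple arithmetic. The sketch below assumes that the rows labelled "Interquartile 1" and "Interquartile 2" are the lower and upper quartiles, so that their difference should equal the reported interquartile range. It also assumes six members per team, which is inferred from the level and gender counts rather than stated in the table. All names in the code are hypothetical.

```python
import math

TEAM_SIZE = 6  # assumption: inferred from the level and gender counts per team

# Risk management experience rows transcribed from the table (years).
EXPERIENCE = {
    "Team A": {"collective": 136, "q1": 9.75, "q3": 33.25, "iqr": 23.50},
    "Team B": {"collective": 203, "q1": 27.50, "q3": 39.25, "iqr": 11.75},
    "Team C": {"collective": 178, "q1": 24.25, "q3": 35.75, "iqr": 11.50},
}


def iqr_matches(row):
    """True when the reported IQR equals upper quartile minus lower quartile."""
    return math.isclose(row["q3"] - row["q1"], row["iqr"])


def mean_experience(row, team_size=TEAM_SIZE):
    """Average years of experience per member, from the collective total."""
    return row["collective"] / team_size


for team, row in EXPERIENCE.items():
    print(team, iqr_matches(row), round(mean_experience(row), 2))
```

Under these assumptions the reported interquartile ranges are internally consistent for all three teams.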

| Observer | Median | First quartile (Q1) | Third quartile (Q3) |
|---|---|---|---|
| O1 | 4.0 | 2.5 | 4.0 |
| O2 | 4.0 | 2.5 | 4.0 |
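The median and quartile values above appear consistent with pooling each observer's 21 ratings from Appendix A (three groups by seven elements). The sketch below recomputes them with Python's standard library. The source does not state which quartile convention was used; the "exclusive" method is an assumption, chosen here because it reproduces the tabulated values.

```python
from statistics import median, quantiles

# Ratings per observer, pooled across Groups A, B and C (Appendix A order).
O1 = [5, 5, 5, 4, 5, 4, 4,  4, 3, 4, 3, 3, 4, 3,  2, 4, 2, 2, 3, 2, 1]
O2 = [5, 4, 5, 4, 5, 5, 4,  3, 4, 4, 4, 3, 3, 3,  2, 3, 2, 2, 4, 2, 1]


def summarise(ratings):
    """Median plus lower and upper quartiles (exclusive method, assumed)."""
    q1, _, q3 = quantiles(ratings, n=4, method="exclusive")
    return {"median": median(ratings), "q1": q1, "q3": q3}


for name, ratings in (("O1", O1), ("O2", O2)):
    print(name, summarise(ratings))
```

Both observers give the same summary because their pooled ratings contain the same number of each score, even though they differ on individual elements.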

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Marling, G.; Horberry, T.; Harris, J. Development and Reliability Review of an Assessment Tool to Measure Competency in the Seven Elements of the Risk Management Process: Part Three—Evaluation of the Group Results from the RISK Tool. Safety 2024, 10, 29. https://doi.org/10.3390/safety10010029
