1. Introduction

The astonishing visual abilities of some insect species under extremely dim light conditions have attracted the attention of researchers for many years [1,2,3]. Nocturnal insects need to cope with the degradation of visual information arising from shot noise and transducer noise. Filtering in the spatial and temporal domains has been realized in denoising algorithms developed for removing noise from movies recorded under dim light conditions (e.g., [4,5]). Removing noise from static images is an even more difficult task because the temporal domain is not available for filtering. It is still challenging to remove noise from real-world camera images while avoiding artifacts and preserving object contours and image sharpness. The problem is that the noise statistics of camera images differ considerably from the Gaussian or salt-and-pepper noise often added to images to demonstrate the performance of denoising methods. The bionic night vision algorithm proposed by Hartbauer [6] was originally developed to remove noise from dim light images, but it needs to be modified to become applicable to real-world noisy images that exhibit lower noise levels in three color channels. Here, I describe a modified version of this bionic spatial-domain denoising algorithm and apply it to a real-world image dataset.

Images taken with a CCD or CMOS camera under various light conditions often suffer from imperfections and sensor noise. In recent decades, the statistical properties of real-world noise have been studied for CCD and CMOS image sensors [7,8,9,10]. Real-world noise has five major sources: photon shot noise, fixed pattern noise, dark current, readout noise, and quantization noise (for further detail, see [11]). Therefore, the denoising of real-world images is still a challenging problem [10], and image databases containing noisy and noise-reduced camera images of the same scenes are needed. Xu et al., 2018 [11], computed the mean image of static scenes to obtain the “ground truth” image for real-world noisy camera images. Sampling the same pixel many times (e.g., 500 times) and computing the average value approximates the true pixel value and substantially reduces image noise. The resulting image dataset was made available to the public (https://github.com/csjunxu/PolyU-Real-World-Noisy-Images-Dataset, accessed on 1 March 2023) and contains 40 different scenes captured using five cameras from the three leading camera manufacturers: Canon EOS (5D Mark II, 80D, 600D), Nikon (D800), and Sony (A7 II). This image dataset consists of 100 images and was used in this study to test the performance of a modified version of the bionic night vision algorithm described in [6].
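The effect of this averaging can be illustrated with a small simulation. The sketch below assumes a flat synthetic scene and additive, signal-independent Gaussian noise, which is a simplification of the real sensor noise described above; the helper names are hypothetical. Under these assumptions the residual error of the mean image falls roughly as sigma/sqrt(N) for N frames, which is why averaging about 500 frames yields a usable ground truth.

```python
import random


def simulate_captures(truth, n_frames, sigma, seed=0):
    """Return n_frames noisy copies of a static scene.

    Assumption: additive, signal-independent Gaussian noise with std sigma.
    """
    rng = random.Random(seed)
    return [[[px + rng.gauss(0.0, sigma) for px in row] for row in truth]
            for _ in range(n_frames)]


def mean_image(frames):
    """Pixel-wise mean of a stack of equally sized gray-value frames."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def rms_error(a, b):
    """Root-mean-square difference between two images."""
    sq = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return (sum(sq) / len(sq)) ** 0.5


truth = [[100.0] * 16 for _ in range(16)]  # flat synthetic scene
frames = simulate_captures(truth, n_frames=500, sigma=10.0)
single_error = rms_error(frames[0], truth)  # close to sigma
averaged_error = rms_error(mean_image(frames), truth)  # close to sigma / sqrt(500)
print(f"single frame: {single_error:.2f}, mean of 500 frames: {averaged_error:.2f}")
```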

Typically, noise reduction can be achieved by applying linear and non-linear filters (for a review of methods, see [12,13]). Linear smoothing or median filtering can reduce noise but also smooths out edges, resulting in a blurred image. An alternative and improved denoising method is total variation (TV) minimization, described in [14]. Its objective is to minimize the total variation within an image, which can be approximately characterized as the integral of the norm of the image gradient. Non-local means (NL-means) filtering is an influential denoising technique that preserves both image sharpness and the fidelity of object contours [15]. Furthermore, bilateral filtering is a robust non-linear denoising algorithm that weights neighboring pixels by both their spatial proximity and their radiometric similarity [16]. While bilateral filtering is computationally efficient, its filter parameters are difficult to calibrate [17], and this algorithm is known to produce artifacts such as staircase effects and inverse contours. Alternatively, image denoising can be performed in the frequency domain: the Fourier transform of the original image is filtered and then inverse-transformed, which reduces noise while avoiding undesirable blurring (e.g., [18,19]). However, frequency-domain methods tend to introduce multiple undesirable artifacts and cannot uniformly enhance all image components. In contrast, wavelet-domain hidden Markov models have yielded promising results in image denoising, particularly when applied to diagnostic images [20,21,22]. More recently, deep learning artificial neural networks (ANNs) have been employed for image denoising [23,24]. However, compared with more straightforward denoising algorithms, the outputs of ANNs are less predictable.
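The bilateral weighting can be made concrete with a brute-force sketch for a gray-value image stored as a list of rows. It follows the textbook formulation (a spatial Gaussian multiplied by a range Gaussian on gray-value differences); the function name and the default values of radius, sigma_s and sigma_r are illustrative assumptions, not the parameters used in this study.

```python
import math


def bilateral_filter(img, radius=2, sigma_s=2.0, sigma_r=20.0):
    """Brute-force bilateral filter for a 2-D gray-value image (list of rows).

    Each output pixel is a weighted mean of its neighbours; the weight is the
    product of a spatial Gaussian (pixel distance) and a range Gaussian
    (gray-value difference), so pixels across a strong edge contribute little.
    """
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            centre = img[r][c]
            w_sum = v_sum = 0.0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < rows and 0 <= cc < cols):
                        continue  # skip neighbours outside the image
                    diff = img[rr][cc] - centre
                    w = (math.exp(-(dr * dr + dc * dc) / (2 * sigma_s ** 2))
                         * math.exp(-(diff * diff) / (2 * sigma_r ** 2)))
                    w_sum += w
                    v_sum += w * img[rr][cc]
            out[r][c] = v_sum / w_sum
    return out


# A sharp step from 0 to 200: the edge should survive filtering
step = [[0.0] * 4 + [200.0] * 4 for _ in range(8)]
filtered_step = bilateral_filter(step)
```

Because the range weight across a 200-gray-value step is vanishingly small, the edge survives, while small fluctuations in flat regions are averaged out. The same range weight illustrates why the parameters are hard to tune: a small sigma_r leaves noise untouched, a large one blurs edges.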

Recently, powerful algorithms have been developed for denoising real-world camera images that address two problems: the different noise levels in the three color channels of color images [25] and the fact that noise is signal-dependent and varies between local patches [11]. In the latter study, the authors proposed an algorithm based on a trilateral weighted sparse coding (TWSC) scheme for real-world color images. Instead of denoising all color channels of RGB images with different levels of noise, the present study applied denoising only to the Y channel of YUV-transformed images and used a single hard threshold for an adaptive local averaging procedure [6] to enhance the quality of real-world noisy images with complex noise statistics. This simple algorithm was executed in parallel on a multi-core processor, and the results were compared with five common denoising algorithms.
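A minimal sketch of the "denoise only the Y channel" strategy follows. The text does not state which YUV variant was used; the analogue BT.601 coefficients here are an assumption, as are all function names. Any 2-D gray-value filter can be passed in as denoise_2d, and rounding and clipping to 8-bit values are omitted.

```python
def rgb_to_yuv(r, g, b):
    """RGB -> analogue YUV with ITU-R BT.601 luma weights (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.492 * (b - y), 0.877 * (r - y)


def yuv_to_rgb(y, u, v):
    """Algebraic inverse of rgb_to_yuv."""
    r = y + v / 0.877
    b = y + u / 0.492
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def denoise_luma_only(rgb_img, denoise_2d):
    """Filter only the Y plane with denoise_2d; U and V pass through unchanged.

    rgb_img is a list of rows of (r, g, b) tuples; denoise_2d maps a 2-D list
    of Y values to a filtered 2-D list of the same shape.
    """
    yuv = [[rgb_to_yuv(*px) for px in row] for row in rgb_img]
    y_plane = [[px[0] for px in row] for row in yuv]
    y_filtered = denoise_2d(y_plane)
    return [[yuv_to_rgb(yf, u, v) for yf, (_, u, v) in zip(frow, row)]
            for frow, row in zip(y_filtered, yuv)]


pixels = [[(10.0, 200.0, 30.0), (255.0, 0.0, 128.0)],
          [(64.0, 64.0, 64.0), (0.0, 128.0, 255.0)]]
# Toy "filter" that raises Y by 10: every RGB channel should rise by 10
brighter = denoise_luma_only(pixels, lambda plane: [[v + 10.0 for v in row] for row in plane])
```

Only one of three planes is filtered, which is where the roughly two-thirds saving in processing time comes from.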

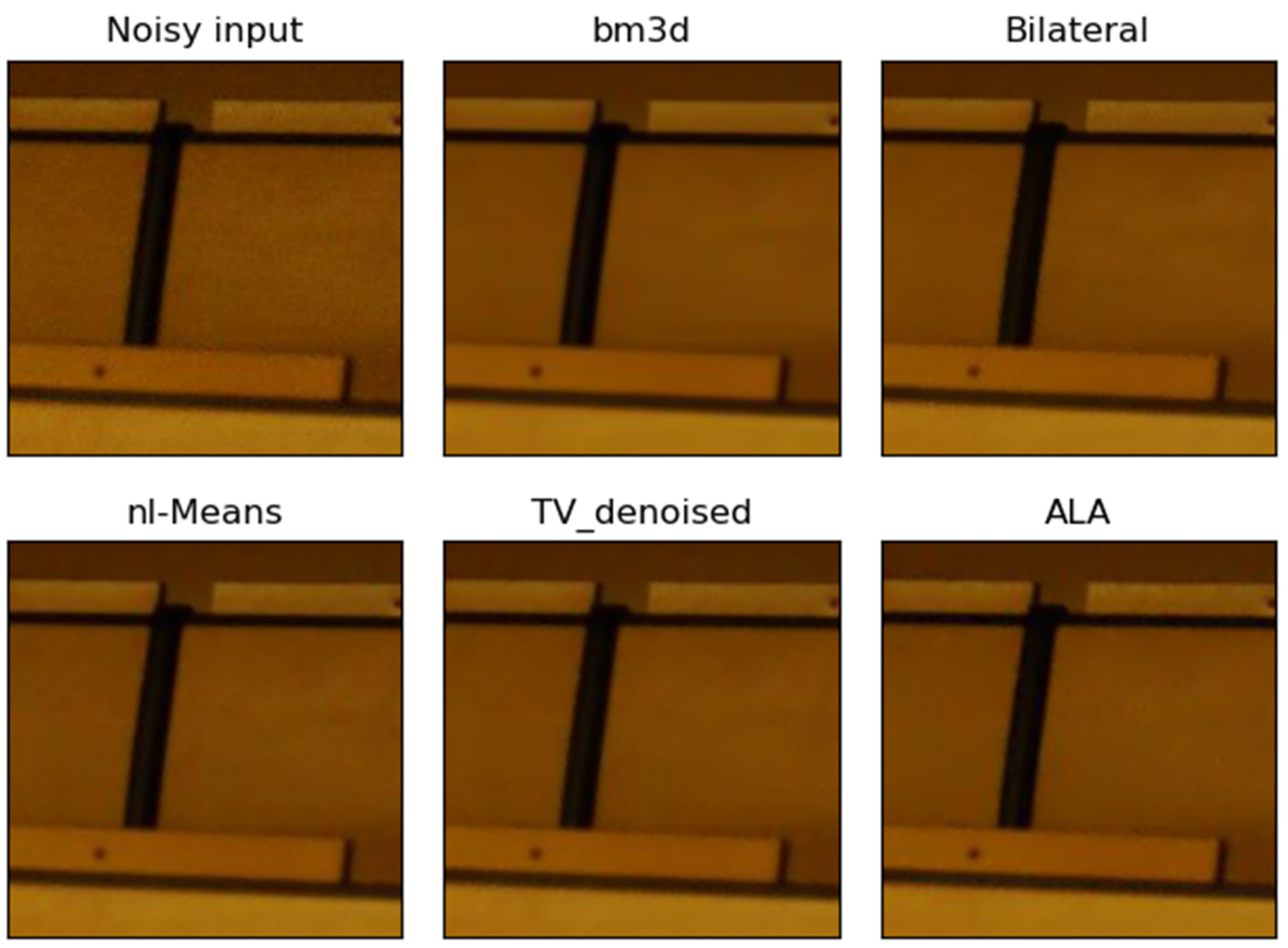

3. Image Denoising Results

Adaptive local averaging (ALA) effectively removed sensor noise from the real-world images that were taken in various settings using five different camera models. However, ALA filtering slightly reduced image sharpness, so a second filter step was needed to enhance it (Figure 1). A visual comparison shows that the performance of this two-step filter is comparable to that of the common image denoising filters NL-means and TV (for examples, see Figure 2, Figure 3, Figure 4 and Figure 5). Bm3d filtering achieved the best denoising performance and in many cases preserved image sharpness. Bilateral filtering left some noise behind in dark image regions (Figure 2 and Figure 3), and the wavelet filter generated disturbing staircase artifacts at object contours. Therefore, the results for the wavelet filter are not shown in Figure 2, Figure 3, Figure 4 and Figure 5. The staircase artifact was completely absent in the output of the two-step image processing filter described in this study.
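The first step of the two-step filter can be sketched as follows. This is a hypothetical reconstruction, not the published ALA implementation: it assumes, in line with the neuronal-summation analogy and the single hard threshold Th, that the averaging window around each pixel grows until its summed gray value reaches Th, capped at the maximum radius of 20 pixels. Square windows and the summed-area table are choices made for this sketch, and Th must be supplied by the caller because Equation (1) is not reproduced here.

```python
def integral_image(img):
    """Summed-area table with an extra leading row and column of zeros."""
    rows, cols = len(img), len(img[0])
    s = [[0.0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0.0
        for c in range(cols):
            row_sum += img[r][c]
            s[r + 1][c + 1] = s[r][c + 1] + row_sum
    return s


def window_sum(s, r0, c0, r1, c1):
    """Sum of img rows r0..r1-1 and columns c0..c1-1, read from the table."""
    return s[r1][c1] - s[r0][c1] - s[r1][c0] + s[r0][c0]


def adaptive_local_average(img, th, max_radius=20):
    """Hypothetical ALA sketch: grow a square window until its sum reaches th.

    The window stops growing at max_radius even if th is never reached.
    """
    rows, cols = len(img), len(img[0])
    s = integral_image(img)
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for radius in range(max_radius + 1):
                r0, r1 = max(0, r - radius), min(rows, r + radius + 1)
                c0, c1 = max(0, c - radius), min(cols, c + radius + 1)
                total = window_sum(s, r0, c0, r1, c1)
                if total >= th:
                    break  # enough signal collected: stop enlarging the window
            out[r][c] = total / ((r1 - r0) * (c1 - c0))
    return out


# Dim checkerboard (0/2 gray values): dark pixels are averaged over larger windows
checker = [[2.0 if (r + c) % 2 else 0.0 for c in range(9)] for r in range(9)]
smoothed = adaptive_local_average(checker, th=9.0)
```

Under this assumption, bright pixels that exceed Th on their own are left untouched, whereas dark, noisy regions are averaged over larger windows, loosely mirroring spatial summation in dim light.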

ALA filtering of the whole real-world image dataset resulted in an average PSNR value of 38.0 ± 3.22 dB (Equation (3)), and additional image sharpening increased this PSNR value to 39.0 ± 2.75 dB. The average PSNR values calculated using Equation (3) were significantly higher for the five common denoising filters than for the two-step filter described here (p < 0.01, N = 100, Mann–Whitney U test, see Table 1). Calculating PSNR values from the ground-truth images and denoised images (using Equation (3)) resulted in rather similar average PSNR values for all filters, with slightly higher values for the bm3d, NL-means and TV filters compared to the two-step filter (see Table 1). To estimate the image denoising performance without comparing input and output images, PSNR values were also computed using Equation (4). The PSNR values of the output of the five common image denoising algorithms were very similar, whereas two-step image denoising resulted in a slightly higher average PSNR value of 9.25 dB (see Table 1). However, this small difference between filter variants is not significant (p > 0.05, N = 100, Mann–Whitney U test). No filter applied in this study affected the brightness of the output images; the average brightness of the gray-value-transformed images was 96 for the whole image dataset. The mean SSIM index was very similar for all filter variants tested in this study (Table 1). Interestingly, SSIM was highest for the bilateral filter, even though it removed sensor noise less effectively than bm3d filtering. In contrast, when the ground-truth image was compared with the filter output, mean SSIM was highest for the bm3d filter (see Table 1).
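For reference, a full-reference PSNR and a simplified SSIM are sketched below. The sketch assumes that Equation (3) is the standard definition PSNR = 10 log10(MAX^2 / MSE) on 8-bit gray values; the no-reference Equation (4) is not reproduced. The SSIM here is evaluated over the whole image as a single window, whereas standard implementations (e.g., structural_similarity in scikit-image) average it over local sliding windows, so absolute values will differ.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Full-reference PSNR in dB: 10 * log10(MAX^2 / MSE); infinite if identical."""
    sq = [(a - b) ** 2 for ra, rb in zip(reference, test) for a, b in zip(ra, rb)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(x_img, y_img, max_value=255.0):
    """SSIM evaluated over the whole image as a single window (simplification)."""
    x = [v for row in x_img for v in row]
    y = [v for row in y_img for v in row]
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    c1 = (0.01 * max_value) ** 2  # stabilising constants from the SSIM definition
    c2 = (0.03 * max_value) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

Both metrics depend on which image serves as the reference (noisy input or ground truth), which is why the two comparisons in Table 1 can rank the filters differently.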

Dividing images into four segments of equal size increased the processing speed but resulted in line artifacts after application of the ALA filter. Therefore, an overlap of 20 pixels (equal to the maximum radius of the ALA filter) between adjacent image segments was necessary to enable parallel processing without generating horizontal and vertical line artifacts. The average processing time of the two-step image denoising filter in a Python script environment (PyCharm version 2023.1 with the Anaconda Python interpreter) was 36.6 ± 18.2 s for input images of 512 × 512 pixels (cropped image dataset), about three times faster than single-core computing. Compiling the ALA filter function to C code would likely increase the processing speed further.
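The overlap strategy can be sketched as follows: each quadrant is padded with up to 20 neighbouring pixels, filtered independently, and only its un-padded core is written back, so pixels near a seam see the same neighbourhood as in whole-image filtering. The function names, the ProcessPoolExecutor choice and the box_mean stand-in for the ALA filter are assumptions of this sketch.

```python
from concurrent.futures import ProcessPoolExecutor

OVERLAP = 20  # maximum ALA radius reported in the text


def box_mean(img, radius=1):
    """Stand-in local filter (hypothetical; replace with the ALA filter)."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [img[rr][cc]
                    for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1))]
            out[r][c] = sum(vals) / len(vals)
    return out


def split_quadrants(img, overlap=OVERLAP):
    """Cut an image into four quadrants, each padded by overlap pixels of its neighbours."""
    rows, cols = len(img), len(img[0])
    mid_r, mid_c = rows // 2, cols // 2
    tiles = []
    for r0, r1 in ((0, mid_r), (mid_r, rows)):
        for c0, c1 in ((0, mid_c), (mid_c, cols)):
            pr0, pr1 = max(0, r0 - overlap), min(rows, r1 + overlap)
            pc0, pc1 = max(0, c0 - overlap), min(cols, c1 + overlap)
            padded = [row[pc0:pc1] for row in img[pr0:pr1]]
            # keep the core rectangle and its offset inside the padded tile
            tiles.append((padded, (r0, r1, c0, c1), (r0 - pr0, c0 - pc0)))
    return tiles


def merge_quadrants(filtered_tiles, tiles, rows, cols):
    """Paste only the un-padded core of every filtered tile back into place."""
    out = [[0.0] * cols for _ in range(rows)]
    for filtered, (_, (r0, r1, c0, c1), (off_r, off_c)) in zip(filtered_tiles, tiles):
        for r in range(r0, r1):
            out[r][c0:c1] = filtered[r - r0 + off_r][off_c:off_c + (c1 - c0)]
    return out


def filter_in_parallel(img, filter_2d, executor_map=None, overlap=OVERLAP):
    """Filter the four padded quadrants concurrently and stitch the cores together.

    executor_map=None uses a 4-process pool; pass the built-in map for a serial run.
    """
    tiles = split_quadrants(img, overlap)
    padded = [t[0] for t in tiles]
    if executor_map is None:
        with ProcessPoolExecutor(max_workers=4) as pool:
            filtered = list(pool.map(filter_2d, padded))
    else:
        filtered = list(executor_map(filter_2d, padded))
    return merge_quadrants(filtered, tiles, len(img), len(img[0]))


# Serial run of the tiling logic on a synthetic 50 x 60 image
image = [[float((r * 7 + c * 3) % 11) for c in range(60)] for r in range(50)]
tiled = filter_in_parallel(image, box_mean, executor_map=map)
```

With overlap=0 the stitched result generally differs from whole-image filtering along the seams, which mirrors the line artifacts reported above. On platforms that start worker processes by spawning (Windows, macOS), the process-pool path should be called from inside an `if __name__ == "__main__":` block.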

4. Discussion

The filtering results show that the combination of “adaptive local averaging” (ALA) and image sharpening leads to high-quality output images when real-world camera images with complex noise statistics are taken as input (see Figure 2, Figure 3, Figure 4 and Figure 5). Visual inspection shows that the denoising performance of this two-step filter, a special kind of local means filter, is comparable to that of NL-means and TV filters. Bm3d filtering removed sensor noise more effectively than the two-step filter, but some of its output images appeared slightly blurry (for example, Figure 4). In contrast, the bilateral filter was often less effective in removing noise from dark image regions, and the wavelet filter generated staircase artifacts next to object contours (data not shown). The ALA filter was originally inspired by the neuronal summation of adjacent photoreceptor cells of nocturnal insects, such as Megalopta genalis, where spatial integration of image information in lamina neurons leads to denoising and enables night vision [2]. The drawback of any local averaging filter is that output images are often blurry. Therefore, it was necessary to enhance image sharpness by applying an unsharp mask with a fixed radius of five pixels to obtain an image quality comparable to that of the ground-truth images (see Figure 1).
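Unsharp masking adds back a scaled difference between the image and a blurred copy of it. The sketch below uses a box blur with the five-pixel radius mentioned above; the blur kernel, the amount of 1.0 and the clipping to [0, 255] are assumptions, since the text only specifies the radius (Gaussian blurs are more common in practice).

```python
def box_blur(img, radius):
    """Mean over a (2*radius+1)^2 window, clipped at the image border."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            vals = [img[rr][cc]
                    for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1))]
            out[r][c] = sum(vals) / len(vals)
    return out


def unsharp_mask(img, radius=5, amount=1.0, lo=0.0, hi=255.0):
    """Sharpen: img + amount * (img - blurred), clipped to [lo, hi]."""
    blurred = box_blur(img, radius)
    return [[min(hi, max(lo, p + amount * (p - b))) for p, b in zip(row, brow)]
            for row, brow in zip(img, blurred)]


# A step edge gains undershoot on the dark side and overshoot on the bright side
edge = [[50.0] * 10 + [150.0] * 10 for _ in range(20)]
sharpened = unsharp_mask(edge)
```

Flat regions are unchanged because the image and its blurred copy agree there; only transitions within the five-pixel radius are amplified.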

PSNR values are often computed to compare the performance of different denoising filters. The similar PSNR values of all noise filters applied in this study (see Table 1) demonstrate a rather high performance of the two-step filter. The significantly lower average PSNR value of this filter, obtained by comparing the noisy input with the filtered output, is likely a consequence of the large number of tested images (N = 100), which renders even small differences statistically significant. Using Equation (3) for PSNR calculation can be problematic because even weak denoising filters yield high PSNR values when the output image is similar to the noisy input (i.e., when denoising performance is low). This is also reflected in the high average SSIM value of the bilateral filter (see Table 1), which is unlikely to reflect the denoising performance of this filter because bm3d filtering as well as all other filters removed sensor noise much more effectively (see Figure 2, Figure 3, Figure 4 and Figure 5). The same problem becomes obvious when comparing the SSIM values that were calculated for the ground-truth images and the denoised output (Table 1). In this case, the bm3d filter had the highest SSIM value, which indicates a high similarity between the filter output and the ground-truth images. Wavelet denoising had the lowest SSIM value, likely because its staircase artifacts reduced image quality. The SSIM value obtained with the two-step filter described in this study was similar to that of the bm3d, TV and NL-means filters when ground-truth images were compared with the corresponding filtered images. This result is surprising given the simplicity of the ALA filter, which only performs adaptive local averaging. Interestingly, on the same noisy image dataset as in this study, various elaborate denoising algorithms resulted in slightly lower PSNR values (maximum = 37.81 dB for the TWSC filter; ref. [11]). However, it is difficult to compare PSNR values between studies because several equations exist for calculating PSNR values, and in this study the PSNR values were calculated after the gray value transformation of color images. To circumvent this problem, I also calculated PSNR values using Equation (4), which revealed a slightly higher average PSNR value for two-step denoising compared to the five common denoising filters.
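The PSNR pitfall described above can be reproduced with a toy example. Assuming a flat synthetic scene, additive Gaussian noise and the standard PSNR definition (the paper's Equations (3) and (4) are not reproduced), a "filter" that returns its input unchanged obtains an infinite PSNR against the noisy input although it removes no noise, whereas the ranking reverses when the ground truth is the reference. The function names are hypothetical.

```python
import math
import random


def psnr(reference, test, max_value=255.0):
    """Full-reference PSNR in dB (infinite for identical images)."""
    sq = [(a - b) ** 2 for ra, rb in zip(reference, test) for a, b in zip(ra, rb)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


def compare_filters(size=32, sigma=10.0, seed=1):
    """Score a do-nothing filter and an ideal flat-scene filter against two references."""
    rng = random.Random(seed)
    truth = [[100.0] * size for _ in range(size)]  # flat synthetic scene
    noisy = [[p + rng.gauss(0.0, sigma) for p in row] for row in truth]
    weak = [row[:] for row in noisy]  # "filter" that removes no noise at all
    mean_val = sum(map(sum, noisy)) / (size * size)
    strong = [[mean_val] * size for _ in range(size)]  # ideal for a flat scene
    return {
        "vs_input": (psnr(noisy, weak), psnr(noisy, strong)),
        "vs_truth": (psnr(truth, weak), psnr(truth, strong)),
    }


scores = compare_filters()
print(scores)
```

Scored against the noisy input, the do-nothing filter wins; scored against ground truth, the strong filter wins, which is the discrepancy discussed above.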

In recent years, modern artificial neural network approaches have been developed for image denoising (e.g., [23,24]). Noise-free ground-truth images are essential for ANN training, and the image dataset used in this study would offer this possibility, although the number of images is rather small for splitting the data into training and test datasets. In a follow-up study, the performance of the ALA filter will be compared with modern ANN-based noise filters using larger image libraries such as the Smartphone Image Denoising Dataset (SIDD), which consists of 30,000 noisy images from 10 scenes.

A major benefit of the ALA filter is that it depends on only a single threshold parameter (Th) that can be derived from global image statistics (see Equation (1)). In contrast, several parameters need to be carefully adjusted for all other filters applied in this study. For example, the NL-means filter uses a smoothing parameter and four other parameters (tau, alpha, beta, gamma) that affect denoising performance and image sharpness. Using Equation (1), it was possible to calculate Th for ALA denoising in a way that enhanced the quality of real-world noisy images exhibiting various levels of noise and different degrees of image brightness. To account for differences in image statistics, the calculation of Th had to take both the median image brightness and an estimate of the noise level into account (see Equation (1)).

The ALA denoising described in this study is computationally demanding because the averaging diameter is calculated for each pixel individually, which restricts local averaging to a small image region. To increase the processing speed, denoising was only performed on the brightness channel of YUV-transformed images. This saved 2/3 of the processing time compared to denoising all color channels of RGB images, whose different noise statistics need to be taken into account for high-quality image denoising [28]. Processing speed was further enhanced by splitting input images into four equal segments for multi-core parallel processing. In this study, parallel processing increased the computation speed almost threefold; the gain depends on the number of processors that are simultaneously available. Nevertheless, none of these methods is sufficient to process large images in a short period of time because the computational demand increases non-linearly with image size. For practical purposes, it will be necessary to compile the ALA algorithm as C code to enhance the processing speed on standard computer hardware. Theoretically, the processing speed could also be increased by running ALA in a multi-processor environment supported by modern computation clusters. Other options are fast graphics processing units (GPUs) or FPGA (field-programmable gate array) hardware. In the latter case, processing speed increases due to the parallel architecture of FPGA boards [27,29]. Simple algorithms, like the ALA filter, can be implemented in reconfigurable FPGA hardware [30], which is considered a practical way to obtain high computing performance (Xilinx Inc. System Generator for Digital Signal Processing; http://www.xilinx.com/tools/dsp.htm, accessed on 3 August 2023). Recently, image denoising based on the ALA filter was successfully implemented on a Trenz Electronic FPGA hardware platform for denoising high-resolution 16-bit mammography images (the prototype is shown in Figure 6). This hardware enables parallel execution of ALA image denoising on many image segments at the same time.