Intelligent Performance Evaluation in Rowing Sport Using a Graph-Matching Network

Abstract

1. Introduction

2. Related Works

2.1. The OpenPose System
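When run with its `--write_json` option, OpenPose writes one JSON file per frame. Each detected person carries a flat `pose_keypoints_2d` array of consecutive (x, y, confidence) values. The Python sketch below regroups that array into per-joint triples so that later steps can work with joints directly. It is an illustration, not code from this study: the function name `parse_openpose_frame` and the two-joint sample frame are hypothetical, and a real BODY_25 frame has 25 joints per person.

```python
import json
from typing import List, Tuple

# One joint as (x, y, confidence); x and y are image pixel coordinates.
Keypoint = Tuple[float, float, float]


def parse_openpose_frame(text: str) -> List[List[Keypoint]]:
    """Regroup each person's flat pose_keypoints_2d array into (x, y, c) triples."""
    data = json.loads(text)
    people: List[List[Keypoint]] = []
    for person in data.get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        if len(flat) % 3 != 0:
            raise ValueError("pose_keypoints_2d length is not a multiple of 3")
        people.append(
            [(float(flat[k]), float(flat[k + 1]), float(flat[k + 2]))
             for k in range(0, len(flat), 3)]
        )
    return people


# Hypothetical, truncated frame: one person with only two joints.
frame = '{"version": 1.3, "people": [{"pose_keypoints_2d": [320.5, 110.2, 0.91, 318.0, 160.7, 0.88]}]}'
people = parse_openpose_frame(frame)
print(people[0][1])  # (318.0, 160.7, 0.88)
```

OpenPose typically reports joints it fails to detect with zero confidence, so filtering on a confidence threshold before computing features is a reasonable precaution.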

2.2. Graph Neural Networks

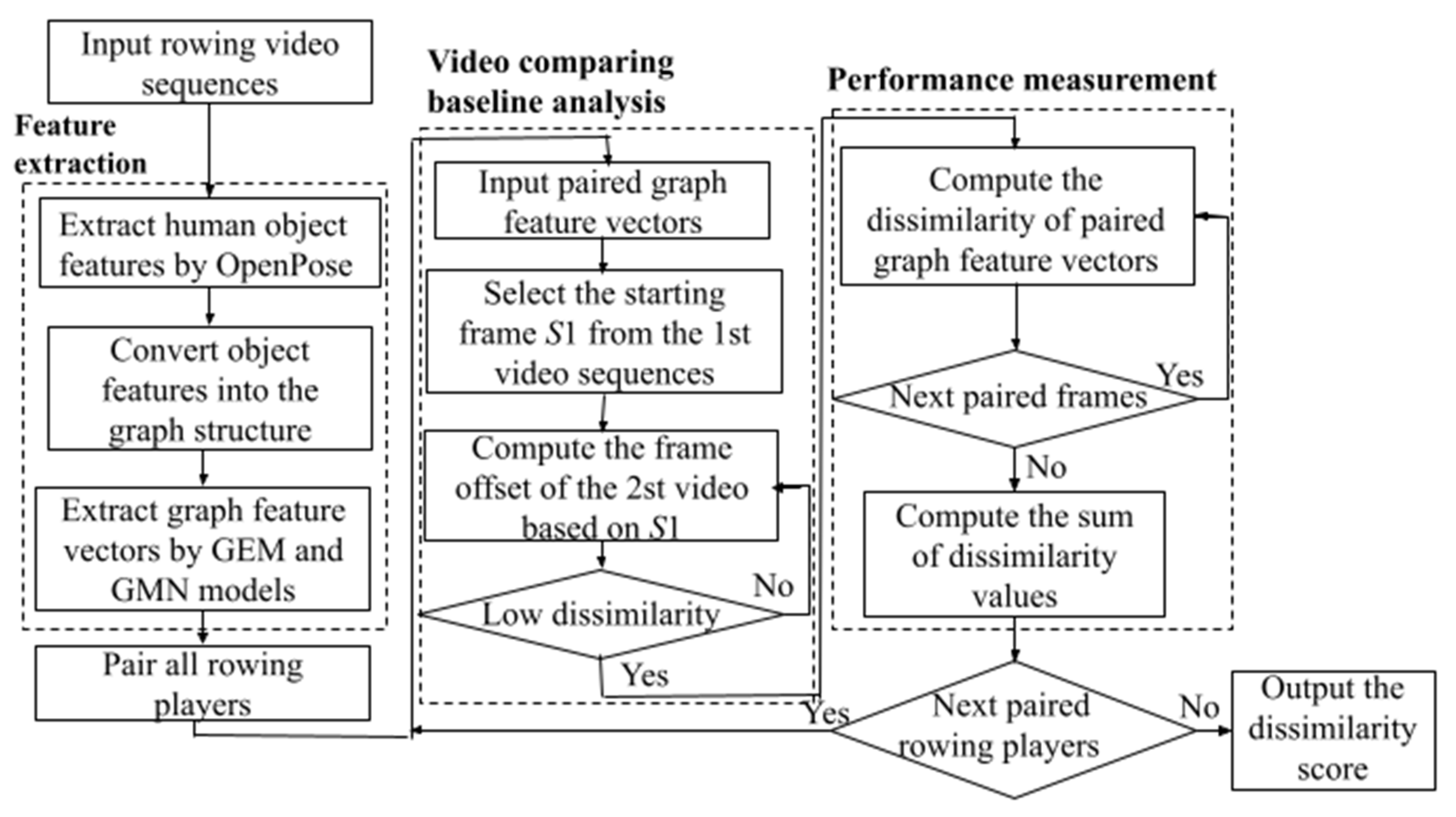

3. The Proposed Approach

3.1. Feature Extraction
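Posture features are often expressed as joint angles, such as the knee angle during the drive. One hedged way to obtain such a feature from 2D keypoints is the angle at a joint between its two adjacent limb segments u and v, given by theta = arccos(u·v / (|u| |v|)). The sketch below assumes pixel coordinates for three joints that have already been identified. The function `joint_angle` and the hip, knee, and ankle values are hypothetical and do not represent the paper's actual feature set.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at joint b (degrees, 0-180) between segments b->a and b->c."""
    u = (a[0] - b[0], a[1] - b[1])
    v = (c[0] - b[0], c[1] - b[1])
    nu, nv = math.hypot(*u), math.hypot(*v)
    if nu == 0.0 or nv == 0.0:
        raise ValueError("coincident keypoints: angle is undefined")
    cos_theta = (u[0] * v[0] + u[1] * v[1]) / (nu * nv)
    # Clamp so floating-point drift cannot push acos outside its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical hip, knee, and ankle positions from one video frame.
hip, knee, ankle = (100.0, 200.0), (150.0, 200.0), (150.0, 250.0)
print(round(joint_angle(hip, knee, ankle), 1))  # 90.0
```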

3.2. Baseline Comparison Analysis

3.2.1. The Input Data for the Model

3.2.2. The Graph-Embedding Model (GEM)
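For reference, the graph-embedding model of Li et al. (2019) encodes each graph independently. Several rounds of message passing update the node states, and a gated sum then turns them into one vector per graph, so two poses can be compared by the distance between their vectors. The NumPy sketch below follows that outline under simplifying assumptions: single linear maps with `tanh` stand in for the MLPs, the weights are random rather than trained, and node features are hypothetical normalized (x, y) joint coordinates. All names (`init_params`, `embed`, `gem_distance`) are illustrative.

```python
import numpy as np


def init_params(d_node, d_msg, d_graph, seed=0):
    """Random, untrained weights; a real model would learn these."""
    rng = np.random.default_rng(seed)
    return {
        "W_msg": rng.normal(0.0, 0.5, (2 * d_node, d_msg)),        # message from [h_i, h_j]
        "W_node": rng.normal(0.0, 0.5, (d_node + d_msg, d_node)),  # update from [h_i, m_i]
        "W_gate": rng.normal(0.0, 0.5, (d_node, d_graph)),         # per-node gate
        "W_out": rng.normal(0.0, 0.5, (d_node, d_graph)),          # per-node contribution
    }


def propagate(h, edges, p):
    """One message-passing round over an undirected edge list."""
    m = np.zeros((h.shape[0], p["W_msg"].shape[1]))
    for i, j in edges:
        m[i] += np.tanh(np.concatenate([h[i], h[j]]) @ p["W_msg"])
        m[j] += np.tanh(np.concatenate([h[j], h[i]]) @ p["W_msg"])
    return np.tanh(np.concatenate([h, m], axis=1) @ p["W_node"])


def embed(x, edges, p, n_layers=2):
    """Propagate, then gate-and-sum node states into one graph vector."""
    h = x
    for _ in range(n_layers):
        h = propagate(h, edges, p)
    gate = 1.0 / (1.0 + np.exp(-(h @ p["W_gate"])))  # sigmoid gate per node
    return (gate * (h @ p["W_out"])).sum(axis=0)


def gem_distance(x1, x2, edges, p):
    """Euclidean distance between the two graph embeddings."""
    return float(np.linalg.norm(embed(x1, edges, p) - embed(x2, edges, p)))


# Hypothetical 5-joint chain with normalized (x, y) joint coordinates.
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
pose_a = np.array([[0.0, 0.0], [0.1, 0.3], [0.2, 0.6], [0.4, 0.7], [0.6, 0.6]])
pose_b = pose_a + 0.02
p = init_params(d_node=2, d_msg=8, d_graph=16)
print(gem_distance(pose_a, pose_b, edges, p))
```

Because the readout is a sum over nodes, relabeling the joints (with the edge list relabeled to match) leaves the embedding unchanged up to floating-point error. This is what makes the comparison independent of node order.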

3.2.3. The Graph-Matching Network (GMN)
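The graph-matching network of Li et al. (2019) differs from the embedding model because each propagation step also attends across the pair of graphs. For node i of one graph, a_{j→i} = softmax_j(s(h_i, h_j)) over the nodes j of the other graph, and the matching vector mu_i = Σ_j a_{j→i}(h_i − h_j) is fed into the node update. The sketch below implements that cross-graph term using a dot-product similarity (Li et al. allow other vector similarities). It uses a simplified update in which the within-graph message is a plain sum of neighbor states. The functions `cross_graph_attention` and `gmn_layer`, along with the random inputs, are hypothetical, untrained illustrations and do not reproduce this paper's network.

```python
import numpy as np


def softmax_rows(s):
    """Row-wise softmax, shifted by the row max for numerical stability."""
    e = np.exp(s - s.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def cross_graph_attention(h1, h2):
    """Matching vectors mu for both graphs using dot-product similarity."""
    s = h1 @ h2.T              # s[i, j] = h1_i . h2_j, shape (n1, n2)
    a12 = softmax_rows(s)      # attention of graph-1 nodes over graph-2 nodes
    a21 = softmax_rows(s.T)    # attention of graph-2 nodes over graph-1 nodes
    # Each attention row sums to 1, so
    # sum_j a_ij (h1_i - h2_j) = h1_i - sum_j a_ij h2_j.
    mu1 = h1 - a12 @ h2
    mu2 = h2 - a21 @ h1
    return mu1, mu2, a12, a21


def neighbor_sum(h, edges):
    """Simplified within-graph message: sum of neighbor states."""
    m = np.zeros_like(h)
    for i, j in edges:
        m[i] += h[j]
        m[j] += h[i]
    return m


def gmn_layer(h1, e1, h2, e2, W):
    """One step: h_i <- tanh([h_i, m_i, mu_i] @ W) for both graphs."""
    mu1, mu2, _, _ = cross_graph_attention(h1, h2)
    new1 = np.tanh(np.concatenate([h1, neighbor_sum(h1, e1), mu1], axis=1) @ W)
    new2 = np.tanh(np.concatenate([h2, neighbor_sum(h2, e2), mu2], axis=1) @ W)
    return new1, new2


# Hypothetical node states for a reference pose and an athlete's pose.
rng = np.random.default_rng(0)
h_ref = rng.normal(size=(5, 4))
h_ath = rng.normal(size=(5, 4))
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
W = rng.normal(scale=0.5, size=(12, 4))  # maps [h, m, mu] (3 x 4 dims) back to 4 dims
h_ref, h_ath = gmn_layer(h_ref, edges, h_ath, edges, W)
print(h_ref.shape, h_ath.shape)  # (5, 4) (5, 4)
```

In Li et al.'s formulation, several such layers are followed by the same per-graph aggregation as in the embedding model, and the two resulting vectors are compared. That final step is omitted here.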

3.3. Time-Period Similarity Processing

4. Results and Analysis

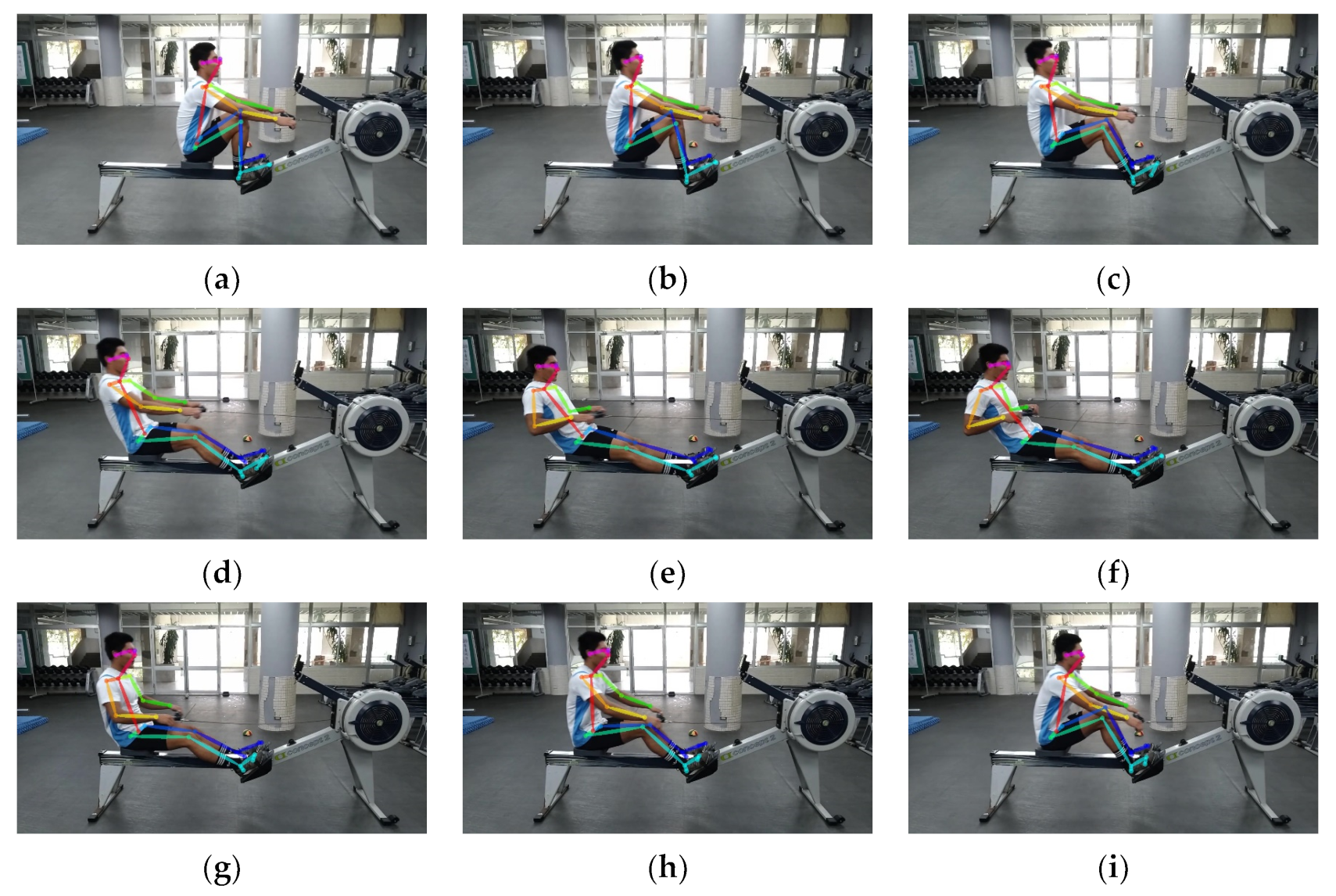

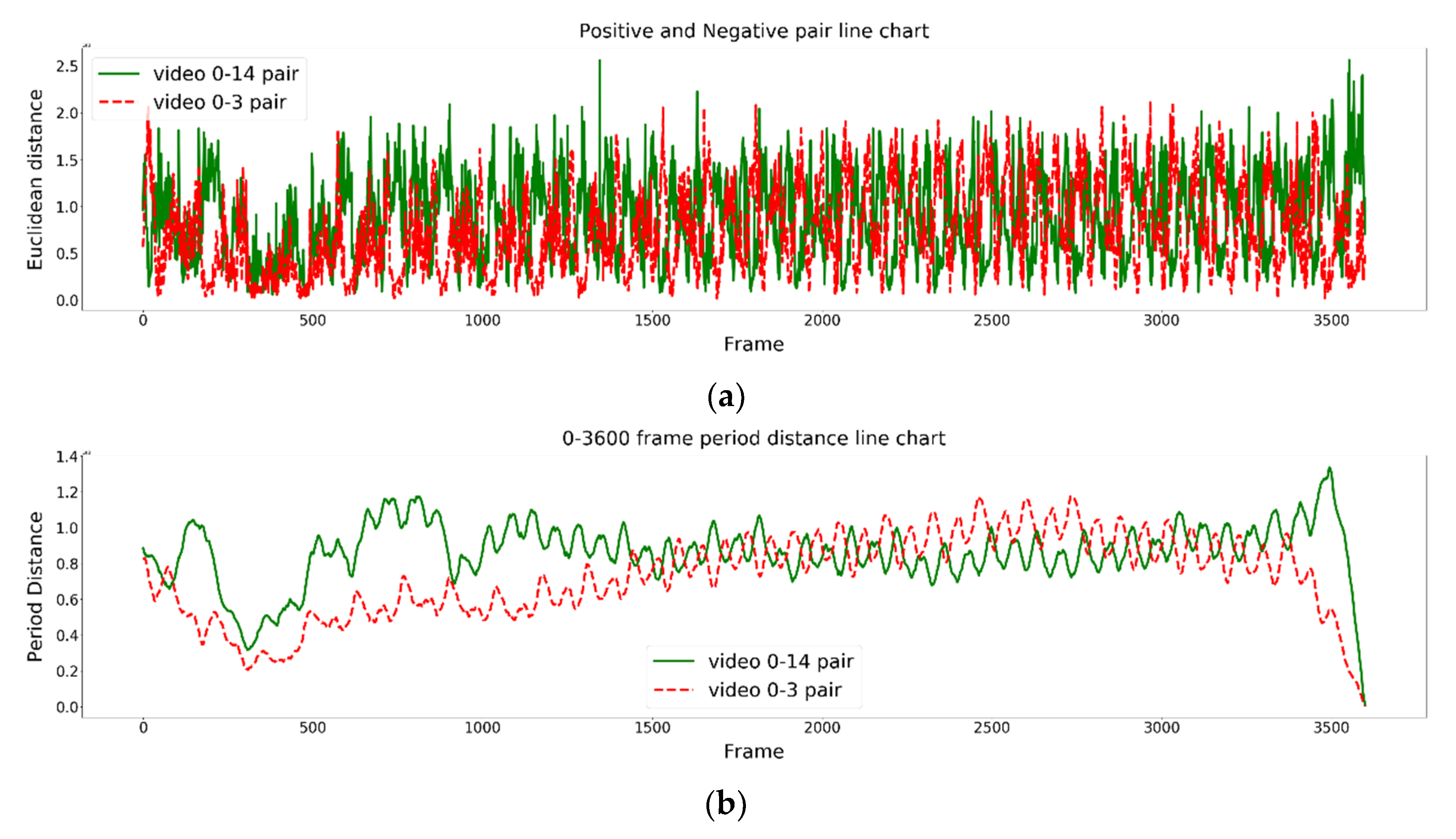

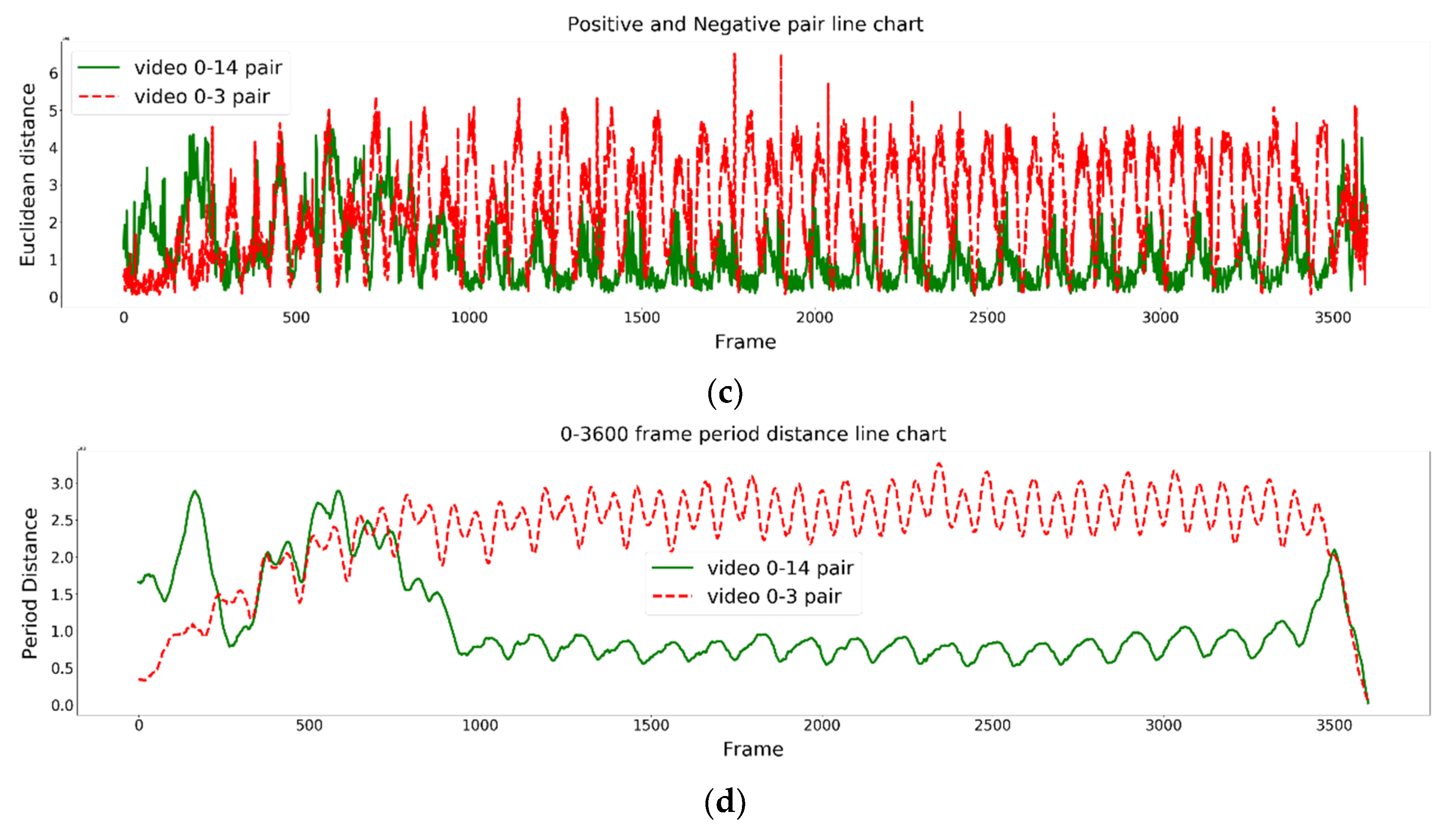

4.1. The Rowing Posture Similarity Analysis

4.2. Rowing Posture Analysis

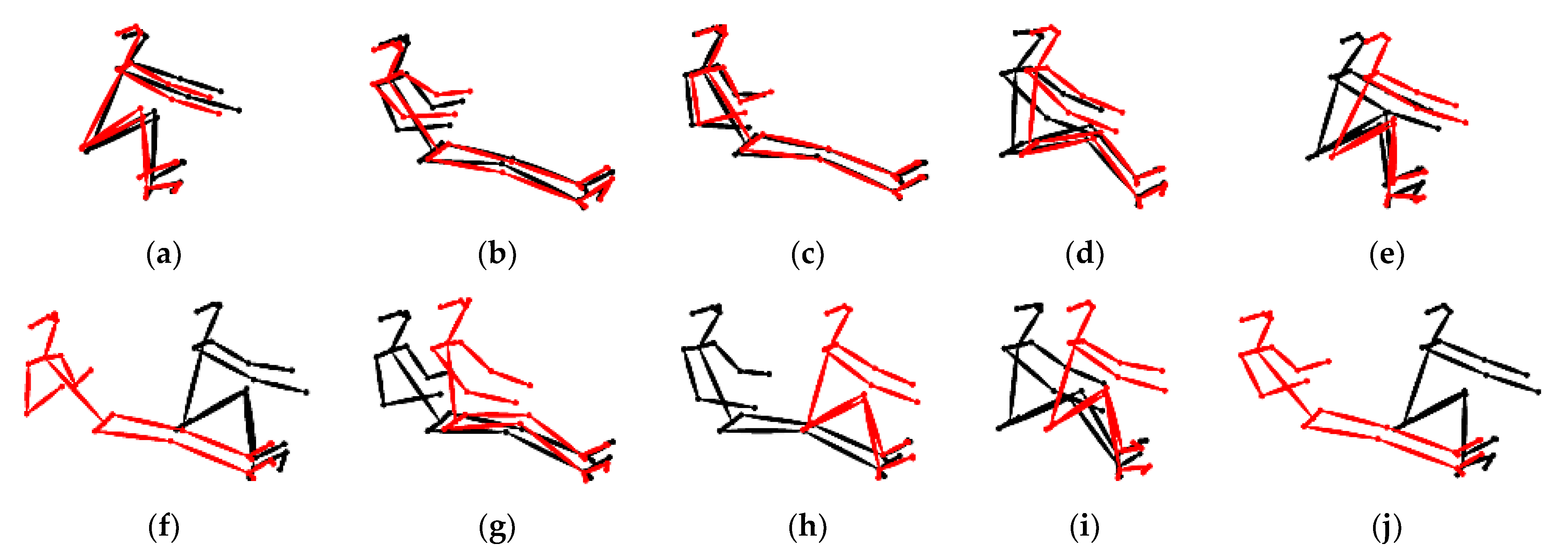

4.3. Visual Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | – | 2.93 | 2.40 | 4.89 | 2.11 | 2.83 | 2.32 | 4.15 | 3.26 | 2.84 | 3.85 | 4.03 | 2.83 | 3.29 | 1.43 |
| 1 | 2.93 | – | 2.28 | 3.21 | 2.65 | 2.79 | 2.39 | 3.17 | 2.90 | 3.01 | 3.36 | 3.11 | 3.50 | 3.19 | 2.63 |
| 2 | 2.40 | 2.28 | – | 3.39 | 2.39 | 2.87 | 2.68 | 3.37 | 3.05 | 3.13 | 3.51 | 2.72 | 3.21 | 3.05 | 2.38 |
| 3 | 4.89 | 3.21 | 3.39 | – | 3.09 | 3.25 | 2.34 | 2.43 | 3.33 | 3.67 | 2.54 | 1.23 | 5.14 | 3.36 | 3.92 |
| 4 | 2.11 | 2.65 | 2.39 | 3.09 | – | 2.77 | 1.40 | 4.85 | 4.32 | 2.66 | 4.45 | 2.86 | 2.25 | 3.38 | 2.71 |
| 5 | 2.83 | 2.79 | 2.87 | 3.25 | 2.77 | – | 2.55 | 2.56 | 2.44 | 2.31 | 3.73 | 3.20 | 2.87 | 3.16 | 2.47 |
| 6 | 2.32 | 2.39 | 2.68 | 3.25 | 1.40 | 2.55 | – | 3.09 | 2.95 | 1.53 | 2.90 | 2.75 | 3.02 | 2.64 | 1.85 |
| 7 | 4.15 | 3.17 | 3.37 | 2.43 | 4.85 | 2.56 | 3.09 | – | 2.08 | 2.70 | 1.90 | 2.32 | 5.06 | 2.62 | 2.48 |
| 8 | 3.26 | 2.90 | 3.05 | 3.33 | 4.32 | 2.44 | 2.95 | 2.08 | – | 2.68 | 2.61 | 3.77 | 3.41 | 2.92 | 2.22 |
| 9 | 2.84 | 3.01 | 3.13 | 3.67 | 2.66 | 2.31 | 1.53 | 2.70 | 2.68 | – | 3.66 | 3.61 | 3.35 | 2.64 | 2.17 |
| 10 | 3.85 | 3.36 | 3.51 | 2.54 | 4.45 | 3.73 | 2.90 | 1.90 | 2.61 | 3.66 | – | 2.50 | 6.98 | 3.84 | 2.55 |
| 11 | 4.03 | 3.11 | 2.72 | 1.23 | 2.86 | 3.20 | 2.75 | 2.32 | 3.77 | 3.61 | 2.50 | – | 5.04 | 3.12 | 3.62 |
| 12 | 2.83 | 3.50 | 3.21 | 5.14 | 2.25 | 2.87 | 3.02 | 5.06 | 3.41 | 3.35 | 6.98 | 5.04 | – | 3.46 | 3.15 |
| 13 | 3.29 | 3.19 | 3.05 | 3.36 | 3.38 | 3.16 | 2.64 | 2.62 | 2.92 | 2.64 | 3.84 | 3.12 | 3.46 | – | 2.51 |
| 14 | 1.43 | 2.63 | 2.38 | 3.92 | 2.71 | 2.47 | 1.85 | 2.48 | 2.22 | 2.17 | 2.55 | 3.62 | 3.15 | 2.51 | – |

| No. | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | – | 10.14 | 8.01 | 16.40 | 6.97 | 10.22 | 8.24 | 13.67 | 11.70 | 10.31 | 11.33 | 15.09 | 8.86 | 11.63 | 4.77 |
| 1 | 10.14 | – | 7.95 | 10.89 | 9.13 | 10.47 | 8.44 | 10.93 | 10.59 | 11.43 | 10.58 | 12.19 | 11.48 | 11.70 | 9.71 |
| 2 | 8.01 | 7.95 | – | 11.03 | 7.62 | 10.65 | 9.31 | 11.32 | 10.85 | 11.71 | 10.70 | 10.46 | 9.91 | 10.90 | 8.15 |
| 3 | 16.40 | 10.89 | 11.03 | – | 9.96 | 11.48 | 7.97 | 8.01 | 11.62 | 13.20 | 7.76 | 3.37 | 17.37 | 11.48 | 14.75 |
| 4 | 6.97 | 9.13 | 7.62 | 9.96 | – | 9.59 | 4.34 | 15.88 | 14.43 | 9.09 | 13.21 | 10.38 | 7.01 | 11.18 | 9.50 |
| 5 | 10.22 | 10.47 | 10.65 | 11.48 | 9.59 | – | 9.64 | 9.31 | 9.49 | 9.32 | 12.34 | 13.29 | 9.37 | 123.1 | 9.52 |
| 6 | 8.24 | 8.44 | 9.31 | 7.97 | 4.34 | 9.64 | – | 11.13 | 11.05 | 4.65 | 8.35 | 10.54 | 11.08 | 9.49 | 7.38 |
| 7 | 13.67 | 10.93 | 11.32 | 8.01 | 15.88 | 9.31 | 11.13 | – | 7.04 | 9.76 | 5.73 | 8.39 | 16.02 | 9.29 | 8.60 |
| 8 | 11.70 | 10.59 | 10.85 | 11.62 | 14.43 | 9.49 | 11.05 | 7.04 | – | 10.38 | 8.15 | 15.20 | 10.99 | 10.74 | 8.13 |
| 9 | 10.31 | 11.43 | 11.71 | 13.20 | 9.09 | 9.32 | 4.65 | 9.76 | 10.38 | – | 12.15 | 15.28 | 11.26 | 10.22 | 8.00 |
| 10 | 11.33 | 10.58 | 10.70 | 7.76 | 13.21 | 12.34 | 8.35 | 5.73 | 8.15 | 12.15 | – | 8.22 | 20.63 | 12.44 | 7.56 |
| 11 | 15.09 | 12.19 | 10.46 | 3.37 | 10.38 | 13.29 | 10.54 | 8.39 | 15.20 | 15.28 | 8.22 | – | 18.73 | 12.36 | 14.59 |
| 12 | 8.86 | 11.48 | 9.91 | 17.37 | 7.01 | 9.37 | 11.08 | 16.02 | 10.99 | 11.26 | 20.63 | 18.73 | – | 11.22 | 11.05 |
| 13 | 11.63 | 11.70 | 10.90 | 11.48 | 11.18 | 123.1 | 9.49 | 9.29 | 10.74 | 10.22 | 12.44 | 12.36 | 11.22 | – | 9.02 |
| 14 | 4.77 | 9.71 | 8.15 | 14.75 | 9.50 | 9.52 | 7.38 | 8.60 | 8.13 | 8.00 | 7.56 | 14.59 | 11.05 | 9.02 | – |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, C.-C.; Lin, C.-S.; Chen, Y.-T.; Chen, W.-H.; Chen, C.-H.; Chen, I.-C. Intelligent Performance Evaluation in Rowing Sport Using a Graph-Matching Network. J. Imaging 2023, 9, 181. https://doi.org/10.3390/jimaging9090181
