Abstract

This work tackles the problem of image restoration, a crucial task in many fields of applied sciences, focusing on removing degradation caused by blur and noise during the acquisition process. Drawing inspiration from the multi-penalty approach based on the Uniform Penalty principle, discussed in previous work, here we develop a new image restoration model and an iterative algorithm for its effective solution. The model incorporates pixel-wise regularization terms and establishes a rule for parameter selection, aiming to restore images through the solution of a sequence of constrained optimization problems. To achieve this, we present a modified version of the Newton Projection method, adapted to multi-penalty scenarios, and prove its convergence. Numerical experiments demonstrate the efficacy of the method in eliminating noise and blur while preserving the image edges.

1. Introduction

Image restoration is an important task in many areas of applied sciences, since digital images are frequently degraded by blur and noise during the acquisition process. Image restoration can be mathematically formulated as the linear inverse problem [1]

$$ b = Ax + e, \tag{1} $$

where $b$ and $x$, respectively, are vectorized forms of the observed image and the exact image to be restored, $A$ is the linear operator modeling the imaging system, and $e$ represents Gaussian white noise with mean zero and standard deviation $\sigma$. The image restoration problem (1) is inherently ill-posed, and regularization strategies based on prior information about the unknown image are usually employed in order to effectively restore $x$ from $b$.
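As a concrete illustration of model (1), the sketch below simulates a blurred, noisy observation under the assumption of periodic boundary conditions, so that $A$ acts as a circular convolution applied with 2-D FFTs. The helper names (`gaussian_psf`, `psf_to_otf`, `blur`, `simulate`) are hypothetical and are not taken from the authors' code.

```python
import numpy as np


def gaussian_psf(size, variance):
    """Normalized, centred isotropic Gaussian PSF on a size-by-size grid (size odd)."""
    r = np.arange(size) - size // 2
    X, Y = np.meshgrid(r, r, indexing="ij")
    psf = np.exp(-(X**2 + Y**2) / (2.0 * variance))
    return psf / psf.sum()


def psf_to_otf(psf, shape):
    """Eigenvalues of the BCCB blurring matrix A: zero-pad the PSF to the image
    size, move its centre to pixel (0, 0) and take the 2-D FFT."""
    k = psf.shape[0]
    padded = np.zeros(shape)
    padded[:k, :k] = psf
    padded = np.roll(padded, shift=(-(k // 2), -(k // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def blur(x, otf):
    """Apply A to the image x (circular convolution, periodic boundaries)."""
    return np.real(np.fft.ifft2(otf * np.fft.fft2(x)))


def simulate(x, psf, sigma, seed=0):
    """Return b = A x + e, with e Gaussian white noise of mean 0 and std sigma."""
    rng = np.random.default_rng(seed)
    return blur(x, psf_to_otf(psf, x.shape)) + sigma * rng.standard_normal(x.shape)
```

For instance, `simulate(x, gaussian_psf(15, 2.0), sigma)` blurs `x` with a Gaussian PSF of variance 2, as in the experiments of Section 3; the PSF support size 15 is an arbitrary choice made for the sketch.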

In a variational framework, image restoration can be reformulated as a constrained optimization problem of the form

$$ \min_{x \in \Omega} \; \frac{1}{2}\|Ax - b\|^2 + \mathcal{R}(x), \tag{2} $$

whose objective function contains an $\ell_2$-based term, imposing consistency of the model with the data, and a regularization term $\mathcal{R}(x)$, forcing the solution to satisfy some a priori properties. Here and henceforth, the symbol $\|\cdot\|$ denotes the Euclidean norm. The constraint $x \in \Omega$ imposes characteristics on the solution that are often dictated by the physics underlying the data acquisition process. Since image pixels are known to be nonnegative, a typical choice for $\Omega$ is the positive orthant.

The quality of the restored images strongly depends on the choice of the regularization term, which, in a very general framework, can be expressed as

$$ \mathcal{R}(x) = \sum_{i=1}^{p} \lambda_i \psi_i(x), \tag{3} $$

where the positive scalars $\lambda_i$ are regularization parameters and the $\psi_i$ are regularization functions, for $i = 1, \dots, p$. The multi-penalty approach (3) allows one to impose several regularity properties on the desired solution; however, a crucial issue in its realization is the need for reliable strategies for choosing the regularization parameters $\lambda_i$, $i = 1, \dots, p$.
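The structure of (3) can be made concrete in a few lines: each penalty $\psi_i$ is a function of the image, each $\lambda_i$ is a positive weight, and the single-penalty case is simply $p = 1$. The two penalties used below (an $\ell_1$ norm and a squared $\ell_2$ norm, i.e. the elastic-net pair) are illustrative choices only, and the function names are hypothetical.

```python
import numpy as np


def multi_penalty(x, lambdas, penalties):
    """Evaluate R(x) = sum_i lambda_i * psi_i(x) for positive parameters lambda_i."""
    if len(lambdas) != len(penalties):
        raise ValueError("one regularization parameter is needed per penalty")
    if any(lam <= 0 for lam in lambdas):
        raise ValueError("regularization parameters must be positive")
    return sum(lam * psi(x) for lam, psi in zip(lambdas, penalties))


def l1_norm(x):
    """Sparsity-promoting penalty ||x||_1."""
    return float(np.abs(x).sum())


def l2_squared(x):
    """Energy (Ridge-type) penalty ||x||_2^2."""
    return float(np.sum(x * x))


x = np.array([1.0, -2.0, 0.0])
print(multi_penalty(x, [0.5, 0.1], [l1_norm, l2_squared]))  # 0.5 * 3 + 0.1 * 5
```

Passing the penalties as plain functions keeps the sketch agnostic about whether each $\psi_i$ is smooth or nonsmooth.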

For this reason, the most common and best-known approach in the literature is single-penalty regularization, also known as Tikhonov-like regularization, which corresponds to the choice $p = 1$:

$$ \mathcal{R}(x) = \lambda \psi(x). \tag{4} $$
In image restoration, smooth functions based on the $\ell_2$-norm or convex nonsmooth functions such as Total Variation, the $\ell_1$ norm, or Total Generalized Variation are usually used for $\psi$ in (4) [2,3]. Even in the case $p = 1$, the development of suitable parameter choice criteria is still an open question. Recent literature shows a growing interest in multi-penalty regularization, with a significant number of researchers focusing on scenarios involving two penalty terms. Notably, elastic net regression, widely used in statistics, is an example of a multi-penalty regularization technique, combining the $\ell_1$ and $\ell_2$ penalties of the Lasso and Ridge methods. Most of this literature addresses the development of suitable rules for parameter selection. Lu, Pereverzev et al. [4,5] have extensively investigated two $\ell_2$-based terms, introducing a refined discrepancy principle to compute the two regularization parameters, along with its numerical implementation. The issue of parameter selection is further discussed in [6], where a generalized multi-parameter version of the L-curve criterion is proposed, and in [7], which suggests a methodology based on the GCV method. Reichel and Gazzola [8] propose regularization terms of the form

$$ \mathcal{R}(x) = \sum_{i=1}^{p} \lambda_i \|L_i x\|^2, \tag{5} $$

where the $L_i$ are suitable regularization matrices, and present a method to determine the regularization parameters based on the discrepancy principle. Fornasier et al. [9] proposed a modified discrepancy principle for multi-penalty regularization and provided theoretical background for this a posteriori rule. Works such as [10,11,12,13,14] also explore multi-penalty regularization for unmixing problems, employing two penalty terms based on different $\ell_p$-type norms. The study [15] assesses two-penalty regularization, incorporating $\ell_0$ and $\ell_2$ penalty terms to tackle nonlinear ill-posed problems, and analyzes its regularizing properties. In [16], an automated spatially adaptive regularization model combining harmonic and Total Variation (TV) terms is introduced. This model depends on two regularization parameters and two edge information matrices; although the edge information matrices are updated dynamically during the iterations, the regularization parameters must be fixed in advance. Calatroni et al. [17] present a space-variant generalized Gaussian regularization approach for image restoration, emphasizing its applicative potential. In [18], a multi-penalty point-wise approach based on the Uniform Penalty principle is considered and analyzed for general linear inverse problems, introducing two iterative methods, UPenMM and GUPenMM, and analyzing their convergence.

Here, we extend the methodology developed in [18] to image restoration problems and perform a comparative analysis with state-of-the-art regularization methods for this application. We propose to find an estimate $x^*$ of $x$ satisfying

where $\epsilon$ is a positive scalar and $L$ is the discrete Laplacian operator. This model, named MULTI, is specifically tailored to the image restoration problem. Observe that MULTI incorporates a pixel-wise regularization term and includes a rule for choosing the parameters. We formulate an iterative algorithm for computing the solution of (6). Once the regularization parameters are fixed at each outer iteration, the resulting constrained minimization subproblem is efficiently solved by a customized version of the Newton Projection (NP) method, in which the Hessian matrix is approximated by a Block Circulant with Circulant Blocks (BCCB) matrix that is easily invertible in Fourier space. This modified version of NP was designed in [19] for single-penalty image restoration under Poisson noise; here it is adapted to multi-penalty regularization, and its convergence can be established in this setting as well.
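Model (6) involves the discrete Laplacian $L$. Assuming periodic boundary conditions (the same boundary assumption under which Section 2 treats the approximate Hessian as a BCCB matrix), $L$ is itself BCCB: it can be applied with array shifts, and its eigenvalues are the 2-D FFT of its stencil. The sketch below uses the 5-point stencil, which is an assumption since the text does not specify the discretization, and checks that the two ways of applying $L$ agree. Helper names are hypothetical.

```python
import numpy as np


def laplacian(x):
    """5-point discrete Laplacian of a 2-D image with periodic boundaries."""
    return (np.roll(x, 1, axis=0) + np.roll(x, -1, axis=0)
            + np.roll(x, 1, axis=1) + np.roll(x, -1, axis=1) - 4.0 * x)


def laplacian_eigenvalues(shape):
    """Eigenvalues of the BCCB matrix L: FFT of the stencil centred at (0, 0)."""
    stencil = np.zeros(shape)
    stencil[0, 0] = -4.0
    stencil[1, 0] = stencil[-1, 0] = stencil[0, 1] = stencil[0, -1] = 1.0
    return np.fft.fft2(stencil)


rng = np.random.default_rng(0)
x = rng.random((16, 16))
via_fft = np.real(np.fft.ifft2(laplacian_eigenvalues(x.shape) * np.fft.fft2(x)))
print(np.allclose(via_fft, laplacian(x)))  # both applications of L coincide
```

The eigenvalues are real and nonpositive, with a zero eigenvalue at the zero frequency, reflecting the fact that $L$ annihilates constant images.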

The principal contributions of this work are summarized as follows:

- We propose a variational pixel-wise regularization model tailored for image restoration and derived from the theoretical model developed in [18].

- We devise an algorithm capable of effectively and efficiently solving the proposed model.

- Through numerical experiments, we demonstrate that the proposed approach can proficiently eliminate noise and blur in smooth areas of an image while preserving its edges.

2. Materials and Methods

In this section, we present the iterative algorithm that generates a sequence of approximations converging to the solution of (6).

Starting from an initial guess $x^{(0)}$ taken as the observed image $b$, the corresponding initial guess $\lambda^{(0)}$ of the regularization parameters is computed as:

where

and $\mathcal{N}_i$ is a neighborhood of size $R \times R$ (with $R$ odd) of the $i$-th pixel.

The subsequent iterates are obtained by the update formulas reported in steps 3–5 of Algorithm 1. The iterations are stopped when the relative distance between two successive regularization parameter vectors is smaller than a fixed tolerance.

Algorithm 1.
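The following is only a structural sketch of the outer loop of Algorithm 1, written under explicit assumptions: (i) the pixel-wise rule is taken to be $\lambda_i = \|Ax - b\|^2 / (N (S_i + \epsilon))$, with $S_i$ the largest squared Laplacian value in the $R \times R$ neighborhood of pixel $i$, a form suggested by the Uniform Penalty principle of [18] but not confirmed by the text; (ii) the constrained subproblem of step 3 is delegated to a user-supplied solver, for example an NP-like method; (iii) the loop stops when the relative change of $\lambda$ is below a tolerance `tau` or after 20 iterations, the cap reported in Section 3. All names are hypothetical.

```python
import numpy as np


def laplacian(x):
    """5-point discrete Laplacian with periodic boundaries."""
    return (np.roll(x, 1, axis=0) + np.roll(x, -1, axis=0)
            + np.roll(x, 1, axis=1) + np.roll(x, -1, axis=1) - 4.0 * x)


def neighbourhood_max(v, R):
    """Maximum of v over the R-by-R window (R odd) centred at each pixel,
    with periodic wrap-around at the image border."""
    h = R // 2
    out = np.full(v.shape, -np.inf)
    for di in range(-h, h + 1):
        for dj in range(-h, h + 1):
            out = np.maximum(out, np.roll(v, (di, dj), axis=(0, 1)))
    return out


def update_lambda(x, residual_sq, eps, R):
    """ASSUMED pixel-wise rule: lambda_i = ||Ax - b||^2 / (N * (S_i + eps))."""
    S = neighbourhood_max(laplacian(x) ** 2, R)
    return residual_sq / (x.size * (S + eps))


def outer_loop(b, apply_A, solve_subproblem, eps, R, tau, max_outer=20):
    """x^(0) = b; alternate subproblem solves and parameter updates until the
    relative change of lambda drops below tau (hypothetical structure)."""
    x = b.copy()
    lam = update_lambda(x, np.sum((apply_A(x) - b) ** 2), eps, R)
    for k in range(1, max_outer + 1):
        x = solve_subproblem(lam)  # step 3: constrained minimization, lambda fixed
        new_lam = update_lambda(x, np.sum((apply_A(x) - b) ** 2), eps, R)
        converged = np.linalg.norm(new_lam - lam) <= tau * np.linalg.norm(lam)
        lam = new_lam
        if converged:
            break
    return x, lam, k
```

Because the parameter rule is isolated in `update_lambda`, a different pixel-wise formula can be substituted without touching the loop.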

Algorithm 1 is well defined, and we experimentally observe a converging behaviour. Its formal convergence proof is obtained in [18] (theorem 3.4) for the case , since in this case, Algorithm 1 corresponds to UPenMM. Otherwise, to preserve convergence, we should introduce a correction as proposed in the Generalized Uniform Penalty method (GUPenMM) [18]. However, even without this correction, we obtained good-quality results and we prefer here to investigate Algorithm 1 because, in the case of large scale image restoration problems, it is much more convenient from a computational point of view. Moreover, we verified that the results obtained with such a correction are qualitatively comparable with those given by Algorithm 1, as the human eye cannot distinguish differences smaller than a few gray levels.

At each outer iteration, the constrained minimization subproblem (step 3 of Algorithm 1) is efficiently solved by a tailored version of the NP method, in which the Hessian matrix is approximated by a BCCB matrix that is easily invertible in Fourier space.

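Let us denote by $f$ the function to be minimized at step 3 of Algorithm 1:

and by $g = \nabla f$ its gradient, where the outer iteration index $k$ has been omitted for ease of notation. Moreover, at the NP iteration $\ell$, let $g_{\mathcal{I}}^{(\ell)}$ denote the reduced gradient:

$$ \left(g_{\mathcal{I}}^{(\ell)}\right)_i = \begin{cases} g_i^{(\ell)}, & i \notin \mathcal{I}^{(\ell)}, \\ 0, & i \in \mathcal{I}^{(\ell)}, \end{cases} $$

where $\mathcal{I}^{(\ell)}$ is the set of indices [20]:

$$ \mathcal{I}^{(\ell)} = \left\{ i \;:\; 0 \le x_i^{(\ell)} \le \delta^{(\ell)} \ \text{and} \ g_i^{(\ell)} > 0 \right\}, $$

with

$$ \delta^{(\ell)} = \min\left\{ \bar{\delta},\; \left\| x^{(\ell)} - \left[ x^{(\ell)} - g^{(\ell)} \right]^+ \right\| \right\}, $$

$[\cdot]^+$ denoting the projection onto the positive orthant, and $\bar{\delta}$ is a small positive parameter.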
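Assuming the Bertsekas-type definitions of [20], in which index $i$ is treated as active when $0 \le x_i \le \delta$ and $g_i > 0$, with $\delta = \min\{\bar\delta, \|x - [x - g]^+\|\}$, the index set and the reduced gradient reduce to a few array operations. The function names and the argument `delta_bar` are hypothetical.

```python
import numpy as np


def active_set(x, g, delta_bar):
    """Boolean mask of indices with 0 <= x_i <= delta and g_i > 0, where
    delta = min(delta_bar, ||x - [x - g]^+||) (Bertsekas-type rule)."""
    w = np.linalg.norm(x - np.maximum(x - g, 0.0))
    delta = min(delta_bar, w)
    return (x >= 0.0) & (x <= delta) & (g > 0.0)


def reduced_gradient(g, active):
    """Copy of the gradient with the active components set to zero."""
    g_red = g.copy()
    g_red[active] = 0.0
    return g_red


x = np.array([0.0, 0.5, 2.0])
g = np.array([1.0, -1.0, 1.0])
act = active_set(x, g, delta_bar=1.0)  # only the first index is active
print(reduced_gradient(g, act))
```

The second index is free despite being near the bound because its gradient component is negative, i.e. a descent step moves it away from zero.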

The Hessian matrix has the form

where $\Lambda$ is the diagonal matrix with diagonal elements $\lambda_1, \dots, \lambda_N$.

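A general iteration of the proposed NP-like method has the form

$$ x^{(\ell+1)} = \left[ x^{(\ell)} + \alpha^{(\ell)} p^{(\ell)} \right]^+, $$

where $p^{(\ell)}$ is the search direction, $\alpha^{(\ell)}$ is the step length, and $[\cdot]^+$ denotes the projection onto the positive orthant.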

At each iteration $\ell$, the computation of $p^{(\ell)}$ requires the solution of the linear system

where $\tilde{H}$ is the following approximation to the Hessian matrix:

Under periodic boundary conditions, the approximate Hessian $\tilde{H}$ is a BCCB matrix, and system (11) can be efficiently solved in Fourier space by means of Fast Fourier Transforms. Therefore, despite its simplicity, the BCCB approximation is efficient, since it allows the linear system to be solved in $O(N \log N)$ operations, $N$ being the number of pixels, and effective, as shown by the numerical results. Finally, given the solution of (11), the search direction $p^{(\ell)}$ is obtained as

The step length $\alpha^{(\ell)}$ is computed with the variant of the Armijo rule discussed in [20], as the first element of the sequence $\{\beta^m\}$, $m = 0, 1, 2, \dots$, such that

where and .
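The sufficient-decrease inequality is the one of [20]; as an assumption, the sketch below uses the Bertsekas form $f(x) - f(x(\alpha)) \ge \eta \big(-\alpha \sum_{i \notin \mathcal{I}} g_i p_i + \sum_{i \in \mathcal{I}} g_i (x_i - x_i(\alpha))\big)$, with $x(\alpha) = [x + \alpha p]^+$ and trial steps $\alpha = \beta^m$. The defaults `beta=0.5` and `eta=1e-4` are placeholders, not the values used in the paper.

```python
import numpy as np


def projected_armijo(f, x, g, p, active, beta=0.5, eta=1e-4, max_backtracks=50):
    """Return (alpha, x_new): the first alpha = beta**m, m = 0, 1, ..., such that
    x_new = [x + alpha p]^+ satisfies the Bertsekas-type sufficient decrease test."""
    fx = f(x)
    free = ~active
    for m in range(max_backtracks):
        alpha = beta ** m
        x_new = np.maximum(x + alpha * p, 0.0)  # projection onto x >= 0
        predicted = (-alpha * np.dot(g[free], p[free])
                     + np.dot(g[active], x[active] - x_new[active]))
        if fx - f(x_new) >= eta * predicted:
            return alpha, x_new
    raise RuntimeError("no acceptable step found")
```

Raising an error when backtracking fails, rather than returning the last trial, keeps the returned step and iterate consistent with each other.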

We observe that the approximate Hessian $\tilde{H}$ does not change over the inner iterations $\ell$ and is positive definite; hence, it satisfies

Consequently, the results given in [19] for single-penalty image restoration under Poisson noise can be applied here to prove the convergence of the NP-like iterations to critical points.
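The specific approximation $\tilde H$ used by the method is not reproduced in this sketch. Purely to illustrate why a BCCB system is cheap to solve, it assumes $\tilde H = A^T A + \mu L^T L$, with a single scalar $\mu > 0$ standing in for the pixel-wise parameters (a hypothetical simplification) and with $A$ and $L$ BCCB under periodic boundary conditions. Both matrices are then diagonalized by the 2-D FFT, so a solve costs a few FFTs, i.e. $O(N \log N)$ operations.

```python
import numpy as np


def bccb_solve(r, eig_A, eig_L, mu):
    """Solve (A^T A + mu L^T L) z = r when A and L are real BCCB matrices.

    eig_A, eig_L: eigenvalues of A and L, i.e. the 2-D FFTs of their kernels
    centred at pixel (0, 0). In the Fourier basis, A^T A + mu L^T L is diagonal
    with entries |eig_A|^2 + mu |eig_L|^2, which must all be nonzero.
    """
    denom = np.abs(eig_A) ** 2 + mu * np.abs(eig_L) ** 2
    return np.real(np.fft.ifft2(np.fft.fft2(r) / denom))
```

The denominator is nonzero whenever the null spaces of $A$ and $L$ intersect only at zero, which for a normalized blur and the Laplacian holds because the blur preserves the mean (the only frequency where the Laplacian symbol vanishes).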

The stopping criteria for the NP-like method are based on the relative distance between two successive iterates and on the relative projected gradient norm. In addition, a maximum number of NP iterations is fixed.
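A sketch of these stopping tests follows, assuming the usual projected gradient for the constraint $x \ge 0$ and assuming that the iterations stop as soon as either relative quantity falls below the tolerance (the text does not state how the two criteria are combined). The function names are hypothetical; the default cap of 1000 iterations is the value reported in Section 3.

```python
import numpy as np


def projected_gradient(x, g):
    """Projected gradient for x >= 0: g_i where x_i > 0, min(g_i, 0) where x_i = 0."""
    return np.where(x > 0.0, g, np.minimum(g, 0.0))


def np_should_stop(x_new, x_old, g_new, pg0_norm, tol, it, max_it=1000):
    """Stop on small relative step, small relative projected gradient, or iteration cap.
    pg0_norm is the projected gradient norm at the first NP iterate."""
    tiny = np.finfo(float).tiny
    rel_step = np.linalg.norm(x_new - x_old) / max(np.linalg.norm(x_old), tiny)
    rel_pg = np.linalg.norm(projected_gradient(x_new, g_new)) / max(pg0_norm, tiny)
    return bool(rel_step <= tol or rel_pg <= tol or it >= max_it)
```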

3. Numerical Experiments

All the experiments were performed under Windows 10 and MATLAB R2021a on a desktop computer (Intel(R) Core(TM) i5-8250U CPU @ 1.60 GHz). We evaluated the quality of the restorations quantitatively by the relative error (RE), the improvement in signal-to-noise ratio (ISNR), and the mean structural similarity index (MSSIM). The MSSIM is defined by Wang et al. [21], and the ISNR is calculated as

$$ \mathrm{ISNR} = 10 \log_{10} \frac{\|b - x\|^2}{\|\hat{x} - x\|^2}, $$

where $\hat{x}$ is the restored image, $x$ is the reference image, and $b$ is the blurred, noisy image.
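The error measures can be computed as in the following sketch. RE is taken here as the usual relative error in the Euclidean norm, which the text names but does not define; MSSIM is omitted. Function names are hypothetical.

```python
import numpy as np


def relative_error(x_rest, x_ref):
    """RE = ||x_rest - x_ref|| / ||x_ref||."""
    return np.linalg.norm(x_rest - x_ref) / np.linalg.norm(x_ref)


def isnr(x_rest, x_ref, b):
    """ISNR in dB: 10 log10(||b - x_ref||^2 / ||x_rest - x_ref||^2)."""
    return 10.0 * np.log10(np.sum((b - x_ref) ** 2) / np.sum((x_rest - x_ref) ** 2))


x_ref = np.ones(4)
b = x_ref + 1.0        # error of the data: squared norm 4
x_rest = x_ref + 0.1   # error of the restoration: squared norm 0.04
```

A positive ISNR means that the restoration is closer to the reference than the degraded data; in this toy case the error norm is reduced by a factor of 10, which corresponds to 20 dB, and the RE is 0.1.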

Four reference images were used in the experiments: galaxy, mri, leopard, and elaine, shown in Figure 1, Figure 2, Figure 3 and Figure 4. The first three images have size , while the elaine image is . In order to define the test problems, each reference image was convolved with two PSFs corresponding to a Gaussian blur with variance 2, generated by the psfGauss function from the MATLAB toolbox Restore-Tool [1], and an out-of-focus blur with radius 5, obtained with the function fspecial from the MATLAB Image Processing Toolbox. The resulting blurred image was then corrupted by Gaussian noise with different values of the noise level . The values were used.
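The two ingredients of the test problems that are not covered by a Gaussian PSF, the out-of-focus kernel and the noise, can be sketched as follows. The out-of-focus PSF is approximated by a normalized indicator of a disk of radius 5; MATLAB's `fspecial('disk', 5)` additionally anti-aliases the boundary pixels, so the two kernels are close but not identical. The text does not define the noise level, so the sketch assumes the common convention $\sigma = \text{(noise level)} \cdot \|Ax\| / \sqrt{N}$, which is a hypothetical choice. Function names are hypothetical.

```python
import numpy as np


def disk_psf(radius):
    """Simplified out-of-focus PSF: normalized indicator of a disk
    (no anti-aliasing, unlike MATLAB's fspecial('disk', radius))."""
    r = np.arange(-radius, radius + 1)
    X, Y = np.meshgrid(r, r, indexing="ij")
    psf = (X**2 + Y**2 <= radius**2).astype(float)
    return psf / psf.sum()


def add_relative_noise(blurred, noise_level, seed=0):
    """ASSUMED convention: sigma = noise_level * ||A x|| / sqrt(N)."""
    rng = np.random.default_rng(seed)
    sigma = noise_level * np.linalg.norm(blurred) / np.sqrt(blurred.size)
    return blurred + sigma * rng.standard_normal(blurred.shape), sigma
```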

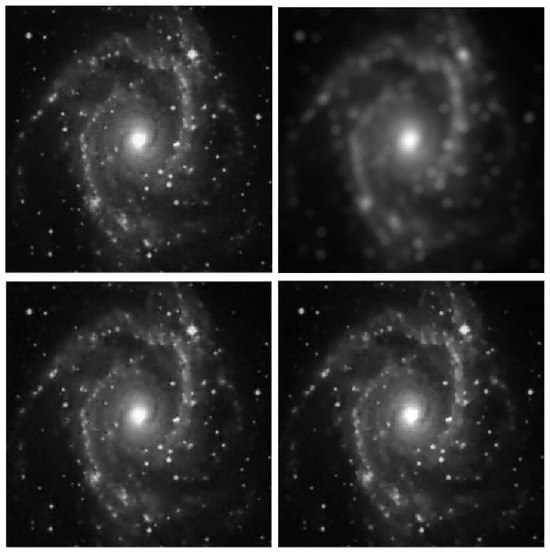

Figure 1.

galaxy test problem: out-of-focus blur; . Top row: original (left) and blurred (right) images. Bottom row: MULTI (left) and TGV (right) restorations.

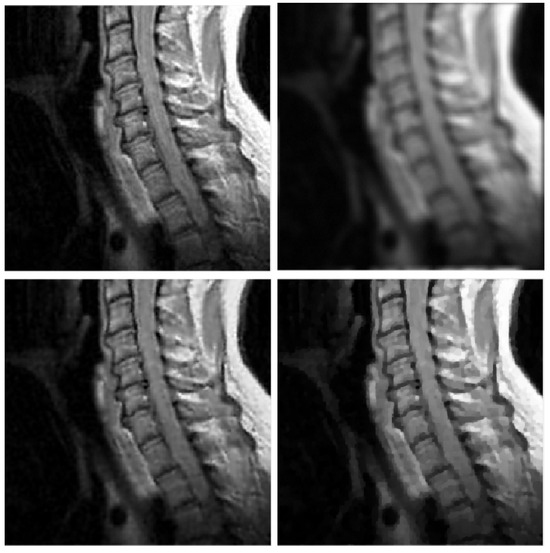

Figure 2.

mri test problem: out-of-focus blur; . Top row: original (left) and blurred (right) images. Bottom row: MULTI (left) and TGV (right) restorations.

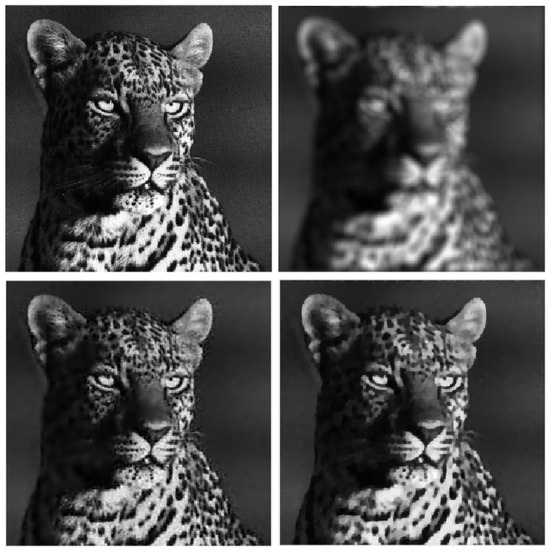

Figure 3.

leopard test problem: out-of-focus blur; . Top row: original (left) and blurred (right) images. Bottom row: MULTI (left) and TGV (right) restorations.

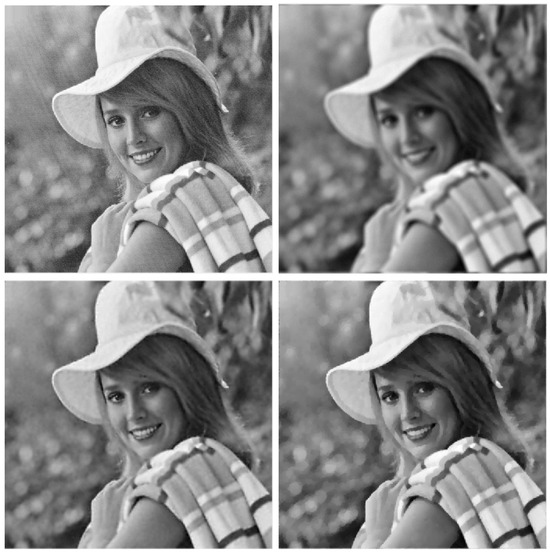

Figure 4.

elaine test problem: out-of-focus blur; . Top row: original (left) and blurred (right) images. Bottom row: MULTI (left) and TGV (right) restorations.

We compared the proposed pixel-wise multi-penalty regularization model (MULTI) with some commonly used state-of-the-art methods based on a variational approach. In particular, we considered Tikhonov (TIKH) [22], Total Variation (TV) [2], and Total Generalized Variation (TGV) [3] regularization with nonnegativity constraints. Tikhonov and TV are classical regularizers. It is well known that Tikhonov regularization tends to oversmooth images and fails to preserve sharp edges. Conversely, TV regularization better preserves sharp edges but often produces staircase effects. TGV was proposed more recently to overcome these drawbacks of Tikhonov and TV regularization, namely blurring and staircasing. Therefore, we compared MULTI with TGV in order to demonstrate the capacity of MULTI to preserve both sharp features and smooth transitions.

In our numerical experiments, the regularization parameter values for TIKH, TV, and TGV were chosen heuristically by minimizing the relative error values. The Alternating Direction Method of Multipliers (ADMM) was used for the solution of the TV-based minimization problem, while for TIKH, we used the Scaled Gradient Projection (SGP) method with Barzilai and Borwein rules for the step length selection [23]. Regarding the TGV regularization, the RESPOND method [24] was used. We remark that RESPOND has been originally proposed for the restoration of images corrupted by Poisson noise by using Directional Total Generalized Variation regularization. It has been adapted here to deal with TGV-based restoration of images under Gaussian noise. The MATLAB implementation for Poisson noise is available on GitHub at the url https://github.com/diserafi/respond (accessed on 18 September 20).

The tolerance , in the outer loop of MULTI in Algorithm 1 step 7, was , while the maximum number of iterations was 20. Regarding the NP method, a tolerance of was used and the maximum number of iterations was 1000.

The size of the neighborhood in (8) was pixels for all tests except for galaxy, where a neighborhood was used.

The values of the parameter in (6), used in the various tests, are in the range . In order to compare all the algorithms at their best performance, the values used in each test are reported in Table 1, where we observe that the value of is proportional to the noise level. The parameter represents a threshold and, in general, should have a small value when compared to the non-null values of . We note that at the cost of adjusting a single parameter , it is possible to achieve point-wise optimal regularization.

Table 1.

Values for the parameter in (7).

Table 2, Table 3, Table 4 and Table 5 report the numerical results for all the test problems. The last column of the Tables shows the values of the regularization parameter used for TIKH, TV, and TGV, while for MULTI it reports the norm of the regularization parameter vector computed by Algorithm 1. Column 7 shows the number of RESPOND, ADMM, and SGP iterations for TGV, TV, and TIKH, respectively; for MULTI, it shows the number of outer iterations, with the number of NP iterations in parentheses.

Table 2.

Numerical results for the galaxy test problem. Column Iters shows the number of RESPOND, ADMM and SGP iterations for TGV, TV and TIKH, respectively. For the MULTI algorithm, it shows the number of outer iterations and NP iterations in parenthesis. The best results are highlighted in bold. Column shows the used values of the regularization parameter for TIKH, TV and TGV while, for MULTI, it reports the norm of the regularization parameters vector computed by Algorithm 1.

Table 3.

Numerical results for the mri test problem. Column Iters shows the number of RESPOND, ADMM, and SGP iterations for TGV, TV, and TIKH, respectively. For the MULTI algorithm, it shows the number of outer iterations and NP iterations in parenthesis. Column shows the used values of the regularization parameter for TIKH, TV, and TGV while, for MULTI, it reports the norm of the regularization parameters vector computed by Algorithm 1. The best results are highlighted in bold.

Table 4.

Numerical results for the leopard test problem. Column Iters shows the number of RESPOND, ADMM, and SGP iterations for TGV, TV, and TIKH, respectively. For the MULTI algorithm, it shows the number of outer iterations and NP iterations in parenthesis. Column shows the used values of the regularization parameter for TIKH, TV, and TGV while, for MULTI, it reports the norm of the regularization parameters vector computed by Algorithm 1. The best results are highlighted in bold.

Table 5.

Numerical results for the elaine test problem. Column Iters shows the number of RESPOND, ADMM, and SGP iterations for TGV, TV, and TIKH, respectively. For the MULTI algorithm, it shows the number of outer iterations and NP iterations in parenthesis. Column shows the used values of the regularization parameter for TIKH, TV, and TGV while, for MULTI, it reports the norm of the regularization parameters vector computed by Algorithm 1. The best results are highlighted in bold.

In Table 2, Table 3, Table 4 and Table 5, we note that for the case of Gaussian blur, MULTI consistently achieves the best results, as highlighted in bold. This is evident from its higher MSSIM and ISNR values and lower RE values. However, for the Out-of-focus case, there are three instances (representing ) where TGV exhibits superior error parameters. Furthermore, our observations indicate that TGV consistently outperforms both TIKH and TV in terms of accuracy. Therefore, in Figure 1, Figure 2, Figure 3 and Figure 4 we only represent the images obtained by MULTI and TGV in the out-of-focus case, with , as this is a very challenging case.

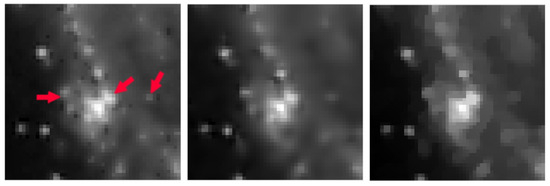

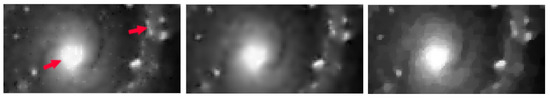

The strength of MULTI is evident from some details of the reconstructed images. In Figure 5, Figure 6, Figure 7 and Figure 8 we show cropped details of the original images and compare them with the MULTI and TGV reconstructions. Figure 5 shows a detail of the galaxy with a few stars over a dark background; here, the image sparsity is better preserved by MULTI. Figure 6 shows the galaxy centre, a smooth area that is well recovered by MULTI, while TGV shows staircasing. In Figure 7, we observe that the leopard’s whiskers and fur spots are better reproduced by MULTI. Moreover, Figure 8 shows that MULTI better preserves the local characteristics of the image, avoiding flattening the smooth areas while preserving the sharp contours: a smooth area such as the cheek is better represented by MULTI, without staircase effects, and areas with strong contours, such as the teeth and the eyes, are better depicted. In summary, these examples show the ability of MULTI to preserve different image structures, such as narrow peaks and smooth areas, by using local regularization parameters that are inversely proportional to the local curvature approximated by the discrete Laplacian.

Figure 5.

galaxy test problem: out-of-focus blur; . A detail of the original image (left), MULTI restoration (centre), and TGV restoration (right). Red arrows highlight the different image features.

Figure 6.

galaxy test problem: out-of-focus blur; . A detail of the original image (left), MULTI restoration (centre), and TGV restoration (right). Red arrows highlight the different image features.

Figure 7.

leopard test problem: out-of-focus blur; . A detail of the original image (left), MULTI restoration (centre), and TGV restoration (right). Red arrows highlight the different image features.

Figure 8.

elaine test problem: out-of-focus blur; . A detail of the original image (left), MULTI restoration (centre), and TGV restoration (right). Red arrows highlight the different image features.

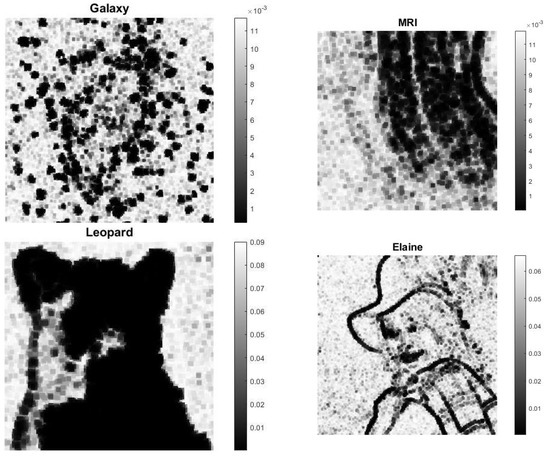

The regularization parameters computed by MULTI are represented in Figure 9; the adherence of the regularization parameters’ values to the image content is clear, showing larger values in areas where the image is flat and smaller values where there are pronounced gradients (edges). The range of the parameters automatically adjusts according to the different test types.

Figure 9.

Computed regularization parameters: out-of-focus blur, .

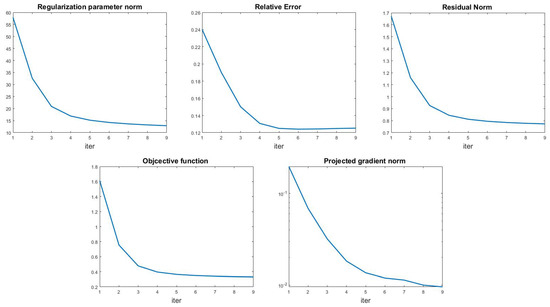

Finally, Figure 10 shows an example of the algorithm's behavior for the leopard test with out-of-focus blur: the top row reports the history of the regularization parameter norm, the relative error, and the residual norm, and the bottom row shows the decrease of the objective function and of the projected gradient norm. The relative error flattens after a few iterations, and the same behavior can be observed in all the other tests. Therefore, we used a relatively large tolerance in the outer loop of Algorithm 1, which makes it possible to obtain good regularization parameters and accurate restorations in a few outer iterations. Even in the most difficult case (Table 4, row 12), the total computation time is 285 s, which indicates the efficiency of the algorithm.

Figure 10.

Leopard test problem (out-of-focus blur, ). Top line: regularization parameters norm (left), relative error (middle), and residual norm (right) history for the multi-penalty model. Bottom line: objective function (left) and projected gradient norm history (right).

4. Conclusions

Despite the interest in multi-penalty regularization shown by recent literature, its main drawback lies in the difficult computation of the regularization parameters. Our work proposes a pixel-wise regularization model to tackle the important task of image restoration, concentrating on eliminating degradation originating from blur and noise. We show that multi-penalty regularization can be realized by an algorithm that efficiently and automatically computes a large number of regularization parameters. The numerical results confirm the algorithm’s ability to remove noise and blur while preserving the edges of the image. Such an approach can be exploited in different real-world imaging applications, such as computed tomography, super-resolution, and biomedical imaging in general. Further analysis of the properties of the proposed algorithm will be the subject of future work.

Author Contributions

Conceptualization, F.Z.; methodology, G.L.; software, G.L.; validation, G.L.; formal analysis, G.L.; investigation, G.L., V.B. and F.Z.; data curation, V.B. and G.L.; writing—original draft preparation, G.L.; writing—review and editing, V.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the Istituto Nazionale di Alta Matematica, Gruppo Nazionale per il Calcolo Scientifico (INdAM-GNCS).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hansen, P.C.; Nagy, J.G.; O’Leary, D.P. Deblurring Images: Matrices, Spectra, and Filtering; SIAM: Philadelphia, PA, USA, 2006.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D 1992, 60, 259–268.

- Bredies, K.; Kunisch, K.; Pock, T. Total generalized variation. SIAM J. Imaging Sci. 2010, 3, 492–526.

- Lu, S.; Pereverzev, S.V. Multi-parameter regularization and its numerical realization. Numer. Math. 2011, 118, 1–31.

- Lu, S.; Pereverzev, S.V.; Shao, Y.; Tautenhahn, U. Discrepancy curves for multi-parameter regularization. J. Inverse Ill-Posed Probl. 2010, 18, 655–676.

- Belge, M.; Kilmer, M.E.; Miller, E.L. Efficient determination of multiple regularization parameters in a generalized L-curve framework. Inverse Probl. 2002, 18, 1161.

- Brezinski, C.; Redivo-Zaglia, M.; Rodriguez, G.; Seatzu, S. Multi-parameter regularization techniques for ill-conditioned linear systems. Numer. Math. 2003, 94, 203–228.

- Gazzola, S.; Reichel, L. A new framework for multi-parameter regularization. BIT Numer. Math. 2016, 56, 919–949.

- Fornasier, M.; Naumova, V.; Pereverzyev, S.V. Parameter choice strategies for multipenalty regularization. SIAM J. Numer. Anal. 2014, 52, 1770–1794.

- Kereta, Z.; Maly, J.; Naumova, V. Linear convergence and support recovery for non-convex multi-penalty regularization. arXiv 2019, arXiv:1908.02503v1.

- Naumova, V.; Peter, S. Minimization of multi-penalty functionals by alternating iterative thresholding and optimal parameter choices. Inverse Probl. 2014, 30, 125003.

- Kereta, Ž.; Maly, J.; Naumova, V. Computational approaches to non-convex, sparsity-inducing multi-penalty regularization. Inverse Probl. 2021, 37, 055008.

- Naumova, V.; Pereverzyev, S.V. Multi-penalty regularization with a component-wise penalization. Inverse Probl. 2013, 29, 075002.

- Grasmair, M.; Klock, T.; Naumova, V. Adaptive multi-penalty regularization based on a generalized lasso path. Appl. Comput. Harmon. Anal. 2020, 49, 30–55.

- Wang, W.; Lu, S.; Mao, H.; Cheng, J. Multi-parameter Tikhonov regularization with the ℓ0 sparsity constraint. Inverse Probl. 2013, 29, 065018.

- Zhang, T.; Chen, J.; Wu, C.; He, Z.; Zeng, T.; Jin, Q. Edge adaptive hybrid regularization model for image deblurring. Inverse Probl. 2022, 38, 065010.

- Calatroni, L.; Lanza, A.; Pragliola, M.; Sgallari, F. A flexible space-variant anisotropic regularization for image restoration with automated parameter selection. SIAM J. Imaging Sci. 2019, 12, 1001–1037.

- Bortolotti, V.; Landi, G.; Zama, F. Uniform multi-penalty regularization for linear ill-posed inverse problems. arXiv 2023, arXiv:2309.14163.

- Landi, G.; Loli Piccolomini, E. An improved Newton projection method for nonnegative deblurring of Poisson-corrupted images with Tikhonov regularization. Numer. Algorithms 2012, 60, 169–188.

- Bertsekas, D.P. Projected Newton methods for optimization problems with simple constraints. SIAM J. Control Optim. 1982, 20, 221–246.

- Wang, S.; Rehman, A.; Wang, Z.; Ma, S.; Gao, W. SSIM-motivated rate-distortion optimization for video coding. IEEE Trans. Circuits Syst. Video Technol. 2011, 22, 516–529.

- Tikhonov, A.N. On the solution of ill-posed problems and the method of regularization. In Doklady Akademii Nauk; Russian Academy of Sciences: Moscow, Russia, 1963; Volume 151, pp. 501–504.

- Bonettini, S.; Prato, M. New convergence results for the scaled gradient projection method. Inverse Probl. 2015, 31, 095008.

- di Serafino, D.; Landi, G.; Viola, M. Directional TGV-based image restoration under Poisson noise. J. Imaging 2021, 7, 99.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).