Proposals Generation for Weakly Supervised Object Detection in Artwork Images

Abstract

1. Introduction

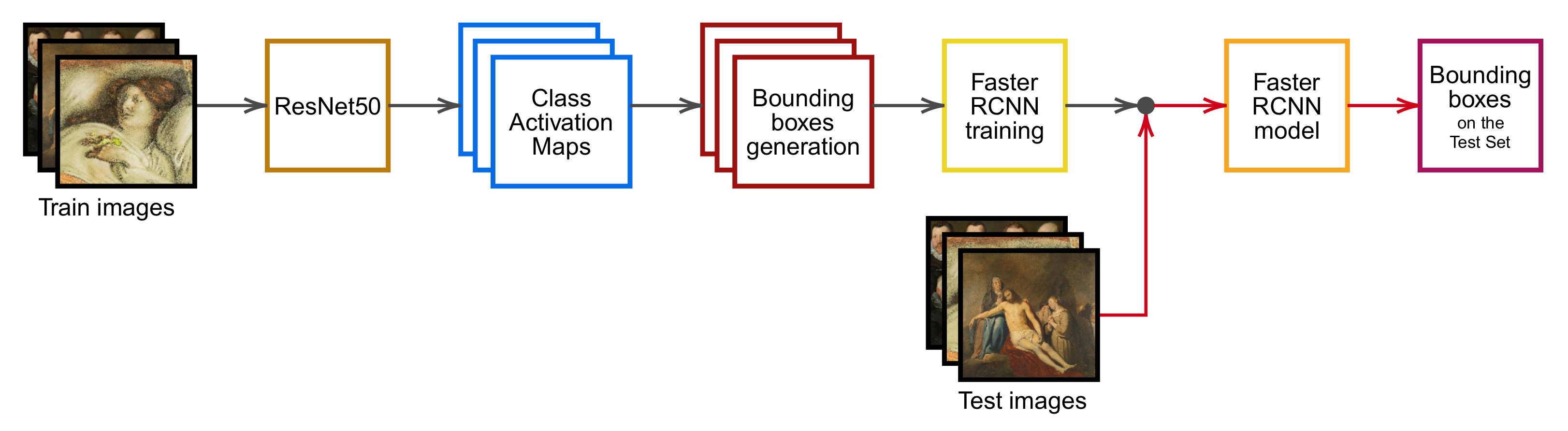

- We propose a weakly supervised object detection (WSOD) approach built from three components, each of which can be customized, that works on non-natural data sets where state-of-the-art architectures fail. The pipeline consists of an existing ResNet-50 classifier, a CAM method paired with a dynamic thresholding technique that generates pseudo-ground truth (pseudo-GT) bounding boxes, and a Faster R-CNN object detector that localizes classes inside images.

- We evaluate performance on two artwork data sets (ArtDL 2.0 and IconArt [16]), which are annotated for WSOD and whose complexity has been demonstrated in previous studies [17,18]. Our approach reaches ≈41.5% mAP on ArtDL 2.0 and ≈17% mAP on IconArt, where state-of-the-art techniques reach at most ≈25% and ≈15% mAP, respectively.

- We provide a qualitative analysis that highlights the ability of the object detector to correctly localize multiple classes and instances even in complex artwork scenes. While the object detector can uncover features that are not found by the original classifier, failure examples show that the model sometimes suffers from the inaccuracy of the pseudo-GT annotations used for training.

- For our analysis, we have extended an existing data set (ArtDL [14]) with 1697 manually annotated bounding boxes on 1625 images.
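The first contribution above describes pseudo-GT boxes obtained from class activation maps with a dynamic, percentile-based threshold. The following is a minimal sketch of that idea, not the authors' implementation. It assumes that the threshold is the q-th percentile of the activation values of a single map, that cells at or above the threshold are grouped into 4-connected regions, and that each region's enclosing rectangle becomes one pseudo-GT box. The names `percentile`, `pseudo_gt_boxes`, and `toy_cam`, and the default of 90, are hypothetical choices made for illustration.

```python
from collections import deque


def percentile(values, q):
    """Linearly interpolated q-th percentile (0-100) of a non-empty list."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def pseudo_gt_boxes(cam, q=90.0):
    """Return (x_min, y_min, x_max, y_max) boxes, in CAM grid coordinates,
    around 4-connected regions whose activation is >= the q-th percentile.

    Assumption: this thresholding rule is an illustrative stand-in for the
    paper's dynamic thresholding, whose exact definition is not given here.
    """
    height, width = len(cam), len(cam[0])
    threshold = percentile([v for row in cam for v in row], q)
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or cam[y][x] < threshold:
                continue
            # Breadth-first search over one connected high-activation region.
            queue = deque([(y, x)])
            seen[y][x] = True
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cy, cx = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and not seen[ny][nx] and cam[ny][nx] >= threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((x0, y0, x1, y1))
    return boxes


# Hypothetical 5x5 activation map for one class.
toy_cam = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.9, 0.8, 0.0, 0.0],
    [0.0, 0.7, 0.9, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.6],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
print(pseudo_gt_boxes(toy_cam, q=80.0))
print(pseudo_gt_boxes(toy_cam, q=90.0))
```

Raising the percentile keeps only the strongest activations, so the isolated weak response in the toy map is dropped at q = 90 while the main region survives. In a real pipeline the map would first be resized to the image resolution so that the boxes can serve as training annotations for the detector.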

2. Related work

2.1. Weakly Supervised Object Detection

2.2. Automated Artwork Image Analysis

3. Methods

3.1. Classification Architecture

Discriminative Region Suppression

3.2. Class Activation Maps

Percentile as a Standard for Thresholding

3.3. Object Detector

4. Evaluation

4.1. Data Sets

4.1.1. ArtDL 2.0

4.1.2. IconArt

4.2. Quantitative Analysis

4.2.1. Classification

4.2.2. Pseudo-GT Generation

4.2.3. Weakly Supervised Object Detection

4.3. Qualitative Analysis

4.3.1. Positive Examples

4.3.2. Negative Examples

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Joshi, K.A.; Thakore, D.G. A survey on moving object detection and tracking in video surveillance system. Int. J. Soft Comput. Eng. 2012, 2, 44–48.

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057.

- Kaur, A.; Singh, Y.; Neeru, N.; Kaur, L.; Singh, A. A Survey on Deep Learning Approaches to Medical Images and a Systematic Look up into Real-Time Object Detection. Arch. Comput. Methods Eng. 2022, 29, 2071–2111.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Castellano, G.; Vessio, G. Deep learning approaches to pattern extraction and recognition in paintings and drawings: An overview. Neural Comput. Appl. 2021, 33, 12263–12282.

- Nguyen, M.H.; Torresani, L.; De La Torre, F.; Rother, C. Weakly supervised discriminative localization and classification: A joint learning process. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1925–1932.

- Siva, P.; Xiang, T. Weakly supervised object detector learning with model drift detection. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 343–350.

- Song, H.O.; Girshick, R.; Jegelka, S.; Mairal, J.; Harchaoui, Z.; Darrell, T. On learning to localize objects with minimal supervision. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1611–1619.

- Zhang, D.; Han, J.; Cheng, G.; Yang, M.H. Weakly Supervised Object Localization and Detection: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021; early access.

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Carballal, A.; Santos, A.; Romero, J.; Machado, P.; Correia, J.; Castro, L. Distinguishing paintings from photographs by complexity estimates. Neural Comput. Appl. 2018, 30, 1957–1969.

- Rodriguez, C.S.; Lech, M.; Pirogova, E. Classification of style in fine-art paintings using transfer learning and weighted image patches. In Proceedings of the 2018 12th International Conference on Signal Processing and Communication Systems (ICSPCS), Cairns, Australia, 17–19 December 2018; pp. 1–7.

- Milani, F.; Fraternali, P. A Dataset and a Convolutional Model for Iconography Classification in Paintings. J. Comput. Cult. Herit. 2021, 14, 1–18.

- Gonthier, N.; Gousseau, Y.; Ladjal, S. An analysis of the transfer learning of convolutional neural networks for artistic images. In Proceedings of the International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 546–561.

- Gonthier, N.; Gousseau, Y.; Ladjal, S.; Bonfait, O. Weakly supervised object detection in artworks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Pinciroli Vago, N.O.; Milani, F.; Fraternali, P.; da Silva Torres, R. Comparing CAM Algorithms for the Identification of Salient Image Features in Iconography Artwork Analysis. J. Imaging 2021, 7, 106.

- Gonthier, N.; Ladjal, S.; Gousseau, Y. Multiple instance learning on deep features for weakly supervised object detection with extreme domain shifts. Comput. Vis. Image Underst. 2022, 214, 103299.

- Song, H.O.; Lee, Y.J.; Jegelka, S.; Darrell, T. Weakly-supervised discovery of visual pattern configurations. Adv. Neural Inf. Process. Syst. 2014, 27, 1–9.

- Kumar, M.; Packer, B.; Koller, D. Self-paced learning for latent variable models. Adv. Neural Inf. Process. Syst. 2010, 23, 1–9.

- Cinbis, R.G.; Verbeek, J.; Schmid, C. Weakly supervised object localization with multi-fold multiple instance learning. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 189–203.

- Wan, F.; Liu, C.; Ke, W.; Ji, X.; Jiao, J.; Ye, Q. C-mil: Continuation multiple instance learning for weakly supervised object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2199–2208.

- Hoffman, J.; Pathak, D.; Darrell, T.; Saenko, K. Detector discovery in the wild: Joint multiple instance and representation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2883–2891.

- Huang, Z.; Zou, Y.; Bhagavatula, V.; Huang, D. Comprehensive attention self-distillation for weakly-supervised object detection. arXiv 2020, arXiv:2010.12023.

- Zhang, S.; Ke, W.; Yang, L.; Ye, Q.; Hong, X.; Gong, Y.; Zhang, T. Discovery-and-Selection: Towards Optimal Multiple Instance Learning for Weakly Supervised Object Detection. arXiv 2021, arXiv:2110.09060.

- Yuan, Q.; Sun, G.; Liang, J.; Leng, B. Efficient Weakly-Supervised Object Detection With Pseudo Annotations. IEEE Access 2021, 9, 104356–104366.

- Lv, P.; Hu, S.; Hao, T.; Ji, H.; Cui, L.; Fan, H.; Xu, M.; Xu, C. Contrastive Proposal Extension with LSTM Network for Weakly Supervised Object Detection. arXiv 2021, arXiv:2110.07511.

- Bilen, H.; Vedaldi, A. Weakly supervised deep detection networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2846–2854.

- Tang, P.; Wang, X.; Bai, X.; Liu, W. Multiple instance detection network with online instance classifier refinement. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2843–2851.

- Tang, P.; Wang, X.; Bai, S.; Shen, W.; Bai, X.; Liu, W.; Yuille, A. Pcl: Proposal cluster learning for weakly supervised object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 176–191.

- Gao, M.; Li, A.; Yu, R.; Morariu, V.I.; Davis, L.S. C-wsl: Count-guided weakly supervised localization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 152–168.

- Yang, K.; Li, D.; Dou, Y. Towards precise end-to-end weakly supervised object detection network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8372–8381.

- Zeng, Z.; Liu, B.; Fu, J.; Chao, H.; Zhang, L. Wsod2: Learning bottom-up and top-down objectness distillation for weakly-supervised object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8292–8300.

- Ren, Z.; Yu, Z.; Yang, X.; Liu, M.Y.; Lee, Y.J.; Schwing, A.G.; Kautz, J. Instance-aware, context-focused, and memory-efficient weakly supervised object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10598–10607.

- Chen, Z.; Fu, Z.; Jiang, R.; Chen, Y.; Hua, X.S. Slv: Spatial likelihood voting for weakly supervised object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12995–13004.

- Shen, Y.; Ji, R.; Chen, Z.; Wu, Y.; Huang, F. UWSOD: Toward Fully-Supervised-Level Capacity Weakly Supervised Object Detection. Adv. Neural Inf. Process. Syst. 2020, 33, 1–15.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016.

- Chan, L.; Hosseini, M.S.; Plataniotis, K.N. A comprehensive analysis of weakly-supervised semantic segmentation in different image domains. Int. J. Comput. Vis. 2021, 129, 361–384.

- Zhang, D.; Zeng, W.; Yao, J.; Han, J. Weakly supervised object detection using proposal-and semantic-level relationships. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 3349–3363.

- Fang, L.; Xu, H.; Liu, Z.; Parisot, S.; Li, Z. EHSOD: CAM-Guided End-to-end Hybrid-Supervised Object Detection with Cascade Refinement. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10778–10785.

- Wang, H.; Li, H.; Qian, W.; Diao, W.; Zhao, L.; Zhang, J.; Zhang, D. Dynamic pseudo-label generation for weakly supervised object detection in remote sensing images. Remote Sens. 2021, 13, 1461.

- Wang, J.; Yao, J.; Zhang, Y.; Zhang, R. Collaborative learning for weakly supervised object detection. arXiv 2018, arXiv:1802.03531.

- Shen, Y.; Ji, R.; Wang, Y.; Wu, Y.; Cao, L. Cyclic guidance for weakly supervised joint detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 697–707.

- Gao, Y.; Liu, B.; Guo, N.; Ye, X.; Wan, F.; You, H.; Fan, D. C-midn: Coupled multiple instance detection network with segmentation guidance for weakly supervised object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 16–20 June 2019; pp. 9834–9843.

- Yang, K.; Zhang, P.; Qiao, P.; Wang, Z.; Dai, H.; Shen, T.; Li, D.; Dou, Y. Rethinking Segmentation Guidance for Weakly Supervised Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 946–947.

- Diba, A.; Sharma, V.; Pazandeh, A.; Pirsiavash, H.; Van Gool, L. Weakly supervised cascaded convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 914–922.

- Wei, Y.; Shen, Z.; Cheng, B.; Shi, H.; Xiong, J.; Feng, J.; Huang, T. Ts2c: Tight box mining with surrounding segmentation context for weakly supervised object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 434–450.

- Kim, B.; Han, S.; Kim, J. Discriminative Region Suppression for Weakly-Supervised Semantic Segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 1754–1761.

- Shao, F.; Chen, L.; Shao, J.; Ji, W.; Xiao, S.; Ye, L.; Zhuang, Y.; Xiao, J. Deep Learning for Weakly-Supervised Object Detection and Localization: A Survey. Neurocomputing 2022, 496, 192–207.

- Cetinic, E.; She, J. Understanding and creating art with AI: Review and outlook. arXiv 2021, arXiv:2102.09109.

- Lecoutre, A.; Negrevergne, B.; Yger, F. Recognizing art style automatically in painting with deep learning. In Proceedings of the Asian Conference on Machine Learning, Seoul, Korea, 15–17 November 2017; pp. 327–342.

- Sabatelli, M.; Kestemont, M.; Daelemans, W.; Geurts, P. Deep transfer learning for art classification problems. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Van Noord, N.; Postma, E. Learning scale-variant and scale-invariant features for deep image classification. Pattern Recognit. 2017, 61, 583–592.

- Bongini, P.; Becattini, F.; Bagdanov, A.D.; Del Bimbo, A. Visual question answering for cultural heritage. Iop Conf. Ser. Mater. Sci. Eng. 2020, 949, 012074.

- Garcia, N.; Ye, C.; Liu, Z.; Hu, Q.; Otani, M.; Chu, C.; Nakashima, Y.; Mitamura, T. A dataset and baselines for visual question answering on art. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 92–108.

- Geun, O.W.; Jong-Gook, K. Visual Narrative Technology of Paintings Based on Image Objects. In Proceedings of the 2019 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 16–18 October 2019; pp. 902–905.

- Lu, Y.; Guo, C.; Dai, X.; Wang, F.Y. Data-efficient image captioning of fine art paintings via virtual-real semantic alignment training. Neurocomputing 2022, 490, 163–180.

- Ginosar, S.; Haas, D.; Brown, T.; Malik, J. Detecting people in cubist art. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 101–116.

- Smirnov, S.; Eguizabal, A. Deep learning for object detection in fine-art paintings. In Proceedings of the 2018 Metrology for Archaeology and Cultural Heritage (MetroArchaeo), Cassino, Italy, 22–24 October 2018; pp. 45–49.

- Marinescu, M.C.; Reshetnikov, A.; López, J.M. Improving object detection in paintings based on time contexts. In Proceedings of the 2020 International Conference on Data Mining Workshops (ICDMW), Sorrento, Italy, 17–20 November 2020; pp. 926–932.

- Strezoski, G.; Worring, M. Omniart: A large-scale artistic benchmark. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2018, 14, 1–21.

- Couprie, L.D. Iconclass: An iconographic classification system. Art Libr. J. 1983, 8, 32–49.

- Crowley, E.J.; Zisserman, A. Of gods and goats: Weakly supervised learning of figurative art. Learning 2013, 8, 14.

- Inoue, N.; Furuta, R.; Yamasaki, T.; Aizawa, K. Cross-domain weakly-supervised object detection through progressive domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5001–5009.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Cetinic, E.; Lipic, T.; Grgic, S. Fine-tuning convolutional neural networks for fine art classification. Expert Syst. Appl. 2018, 114, 107–118.

- Zhu, Y.; Zhou, Y.; Ye, Q.; Qiu, Q.; Jiao, J. Soft proposal networks for weakly supervised object localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1841–1850.

- Yi, S.; Li, X.; Ma, H. WSOD with PSNet and Box Regression. arXiv 2019, arXiv:1911.11512.

- Omeiza, D.; Speakman, S.; Cintas, C.; Weldermariam, K. Smooth grad-cam++: An enhanced inference level visualization technique for deep convolutional neural network models. arXiv 2019, arXiv:1908.01224.

- Bae, W.; Noh, J.; Kim, G. Rethinking class activation mapping for weakly supervised object localization. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 618–634.

- Jo, S.; Yu, I.J. Puzzle-CAM: Improved localization via matching partial and full features. arXiv 2021, arXiv:2101.11253.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Generalized Gradient-Based Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018.

- Fu, R.; Hu, Q.; Dong, X.; Guo, Y.; Gao, Y.; Li, B. Axiom-based grad-cam: Towards accurate visualization and explanation of cnns. arXiv 2020, arXiv:2008.02312.

- Ramaswamy, H.G. Ablation-cam: Visual explanations for deep convolutional network via gradient-free localization. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 983–991.

- Jiang, P.T.; Zhang, C.B.; Hou, Q.; Cheng, M.M.; Wei, Y. LayerCAM: Exploring Hierarchical Class Activation Maps. IEEE Trans. Image Process. 2021, 30, 5875–5888.

- Tagaris, T.; Sdraka, M.; Stafylopatis, A. High-Resolution Class Activation Mapping. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 4514–4518.

- Gao, W.; Wan, F.; Pan, X.; Peng, Z.; Tian, Q.; Han, Z.; Zhou, B.; Ye, Q. TS-CAM: Token Semantic Coupled Attention Map for Weakly Supervised Object Localization. arXiv 2021, arXiv:2103.14862.

- Belharbi, S.; Sarraf, A.; Pedersoli, M.; Ben Ayed, I.; McCaffrey, L.; Granger, E. F-CAM: Full Resolution Class Activation Maps via Guided Parametric Upscaling. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2022; pp. 3490–3499.

- Kim, J.; Choe, J.; Yun, S.; Kwak, N. Normalization Matters in Weakly Supervised Object Localization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3427–3436.

- Jiang, H.; Learned-Miller, E. Face detection with the faster R-CNN. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 650–657.

- Córdova, M.; Pinto, A.; Hellevik, C.C.; Alaliyat, S.A.A.; Hameed, I.A.; Pedrini, H.; Torres, R.d.S. Litter Detection with Deep Learning: A Comparative Study. Sensors 2022, 22, 548.

- Xie, Z.; Ji, C. Single and multiwavelength detection of coronal dimming and coronal wave using faster R-CNN. Adv. Astron. 2019, 2019, 7821025.

- Singh, K.K.; Lee, Y.J. Hide-and-seek: Forcing a network to be meticulous for weakly-supervised object and action localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3544–3553.

- Choe, J.; Shim, H. Attention-based dropout layer for weakly supervised object localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2219–2228.

- Zhang, X.; Wei, Y.; Feng, J.; Yang, Y.; Huang, T.S. Adversarial complementary learning for weakly supervised object localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1325–1334.

- Zhang, Y.; Bai, Y.; Ding, M.; Li, Y.; Ghanem, B. W2f: A weakly-supervised to fully-supervised framework for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 928–936.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Torres, R.N.; Milani, F.; Fraternali, P. ODIN: Pluggable Meta-annotations and Metrics for the Diagnosis of Classification and Localization. In Proceedings of the International Conference on Machine Learning, Optimization, and Data Science, Lake District, UK, 4–8 October 2021; pp. 383–398.

- Ou, J.R.; Deng, S.L.; Yu, J.G. WS-RCNN: Learning to Score Proposals for Weakly Supervised Instance Segmentation. Sensors 2021, 21, 3475.

- Tian, Z.; Shen, C.; Wang, X.; Chen, H. Boxinst: High-performance instance segmentation with box annotations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 5443–5452.

- Lan, S.; Yu, Z.; Choy, C.; Radhakrishnan, S.; Liu, G.; Zhu, Y.; Davis, L.S.; Anandkumar, A. DISCOBOX: Weakly Supervised Instance Segmentation and Semantic Correspondence from Box Supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3406–3416.

- Cetinic, E. Iconographic image captioning for artworks. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; pp. 502–516.

- Yang, Z.; Zhang, Y.J.; Rehman, S.U.; Huang, Y. Image captioning with object detection and localization. In Proceedings of the International Conference on Image and Graphics, Shanghai, China, 13–15 September 2017; pp. 109–118.

- Yao, T.; Pan, Y.; Li, Y.; Mei, T. Exploring visual relationship for image captioning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 684–699.

- Yao, T.; Pan, Y.; Li, Y.; Mei, T. Hierarchy parsing for image captioning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2621–2629.

| | Set | Virgin Mary | Antony of Padua | Dominic | Francis of Assisi | Jerome | John the Baptist | Paul | Peter | Sebastian | Mary Magd. | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Classification images | Train | 9515 | 115 | 234 | 784 | 939 | 943 | 419 | 949 | 448 | 727 | 15073 |
| Classification images | Val. | 1189 | 14 | 30 | 98 | 117 | 97 | 52 | 118 | 56 | 90 | 1861 |
| Classification images | Test | 1189 | 14 | 29 | 98 | 118 | 99 | 52 | 119 | 56 | 90 | 1864 |
| Object detection images | Val. | 1063 | 23 | 30 | 98 | 101 | 101 | 40 | 86 | 54 | 85 | 1625 |
| Object detection images | Test | 283 | 26 | 29 | 85 | 99 | 81 | 34 | 84 | 47 | 68 | 823 |
| Annotated bounding boxes | Val. | 1076 | 23 | 30 | 98 | 102 | 101 | 40 | 87 | 55 | 85 | 1697 |
| Annotated bounding boxes | Test | 283 | 26 | 29 | 85 | 99 | 81 | 34 | 84 | 47 | 68 | 836 |

| | Set | Angel | Child Jesus | Crucifixion | Mary | Nudity | Ruins | Saint Sebastian | None | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| Classification images | Train | 600 | 755 | 86 | 1065 | 956 | 234 | 75 | 947 | 2978 |
| Classification images | Test | 627 | 750 | 107 | 1086 | 1007 | 264 | 82 | 924 | 2977 |
| Object detection images | Test | 261 | 313 | 107 | 446 | 403 | 114 | 82 | 623 | 1480 |
| Annotated bounding boxes | Test | 1043 | 320 | 109 | 502 | 759 | 194 | 82 | N/A | 3009 |

| Method | ArtDL 2.0 Precision | ArtDL 2.0 Recall | ArtDL 2.0 F1 | ArtDL 2.0 AP | IconArt Precision | IconArt Recall | IconArt F1 | IconArt AP |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 [14] | 0.727 | 0.698 | 0.691 | 0.716 | 0.715 | 0.679 | 0.642 | 0.725 |
| ResNet-50 + DRS | 0.658 | 0.658 | 0.649 | 0.701 | 0.717 | 0.619 | 0.656 | 0.731 |
| Mi-max [18] | 0.040 | 0.850 | 0.090 | 0.176 | 0.240 | 0.970 | 0.360 | 0.540 |

| Method | Virgin Mary | Antony of Padua | Dominic | Francis of Assisi | Jerome | John the Baptist | Paul | Peter | Sebastian | Mary Magd. |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet-50 | 0.973 | 0.548 | 0.498 | 0.746 | 0.784 | 0.805 | 0.469 | 0.733 | 0.822 | 0.781 |
| ResNet-50 + DRS | 0.959 | 0.737 | 0.575 | 0.782 | 0.781 | 0.707 | 0.345 | 0.663 | 0.790 | 0.675 |
| Mi-max | 0.768 | 0.016 | 0.017 | 0.059 | 0.266 | 0.208 | 0.049 | 0.074 | 0.390 | 0.189 |

| Method | Angel | Child Jesus | Crucifixion | Mary | Nudity | Ruins | Saint Sebastian |
|---|---|---|---|---|---|---|---|
| ResNet-50 | 0.739 | 0.848 | 0.794 | 0.888 | 0.821 | 0.764 | 0.219 |
| ResNet-50 + DRS | 0.702 | 0.841 | 0.833 | 0.883 | 0.818 | 0.789 | 0.250 |
| Mi-max | 0.548 | 0.547 | 0.765 | 0.694 | 0.651 | 0.412 | 0.138 |

| Method | DRS | Fixed Threshold: Threshold | Fixed Threshold: mAP | PaS: Threshold | PaS: Percentile | PaS: mAP |
|---|---|---|---|---|---|---|
| CAM [37] | ✗ | 95 | 0.251 | | | |
| CAM [37] | ✓ | 95 | | | | |
| Grad-CAM [74] | ✗ | 90 | | | | |
| Grad-CAM [74] | ✓ | 75 | | | | |
| Grad-CAM++ [75] | ✗ | 95 | | | | |
| Grad-CAM++ [75] | ✓ | 80 | | | | |
| Smooth Grad-CAM++ [71] | ✗ | 85 | | | | |
| Smooth Grad-CAM++ [71] | ✓ | 80 | | | | |

| Architecture | ArtDL 2.0 | IconArt |
|---|---|---|
| PCL [30] | 0.248 | 0.059 * |
| CASD [24] | 0.135 | 0.045 |
| UWSOD [36] | 0.076 | 0.062 |
| Mi-max [18] | 0.082 | 0.145 * |
| CAM + PaS | 0.403 | 0.032 |
| Ours | 0.415 | 0.166 |

| Architecture | Virgin Mary | Antony of Padua | Dominic | Francis of Assisi | Jerome | John the Baptist | Paul | Peter | Sebastian | Mary Magd. |
|---|---|---|---|---|---|---|---|---|---|---|
| PCL | 0.478 | 0.024 | 0.005 | 0.122 | 0.476 | 0.204 | 0.059 | 0.191 | 0.370 | 0.554 |
| CASD | 0.301 | 0.011 | 0.035 | 0.059 | 0.344 | 0.057 | 0.010 | 0.112 | 0.072 | 0.351 |
| UWSOD | 0.018 | 0.063 | 0.033 | 0.022 | 0.022 | 0.034 | 0.018 | 0.019 | 0.023 | 0.014 |
| Mi-max | 0.142 | 0.016 | 0.000 | 0.000 | 0.128 | 0.112 | 0.024 | 0.040 | 0.219 | 0.136 |
| CAM + PaS | 0.242 | 0.341 | 0.254 | 0.282 | 0.604 | 0.308 | 0.268 | 0.613 | 0.418 | 0.697 |
| Ours | 0.490 | 0.230 | 0.322 | 0.294 | 0.551 | 0.468 | 0.245 | 0.540 | 0.446 | 0.556 |

| Architecture | Angel | Child Jesus | Crucifixion | Mary | Nudity | Ruins | Saint Sebastian |
|---|---|---|---|---|---|---|---|
| PCL | 0.029 | 0.003 | 0.010 | 0.263 | 0.023 | 0.014 | 0.072 |
| CASD | 0.002 | 0.000 | 0.200 | 0.049 | 0.014 | 0.023 | 0.028 |
| UWSOD | 0.089 | 0.000 | 0.020 | 0.016 | 0.003 | 0.076 | 0.112 |
| Mi-max | 0.043 | 0.067 | 0.357 | 0.156 | 0.240 | 0.152 | 0.001 |
| CAM + PaS | 0.010 | 0.002 | 0.076 | 0.009 | 0.028 | 0.052 | 0.046 |
| Ours | 0.009 | 0.017 | 0.589 | 0.019 | 0.243 | 0.061 | 0.221 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Milani, F.; Pinciroli Vago, N.O.; Fraternali, P. Proposals Generation for Weakly Supervised Object Detection in Artwork Images. J. Imaging 2022, 8, 215. https://doi.org/10.3390/jimaging8080215
