Practical Application of Augmented/Mixed Reality Technologies in Surgery of Abdominal Cancer Patients

Abstract

1. Introduction

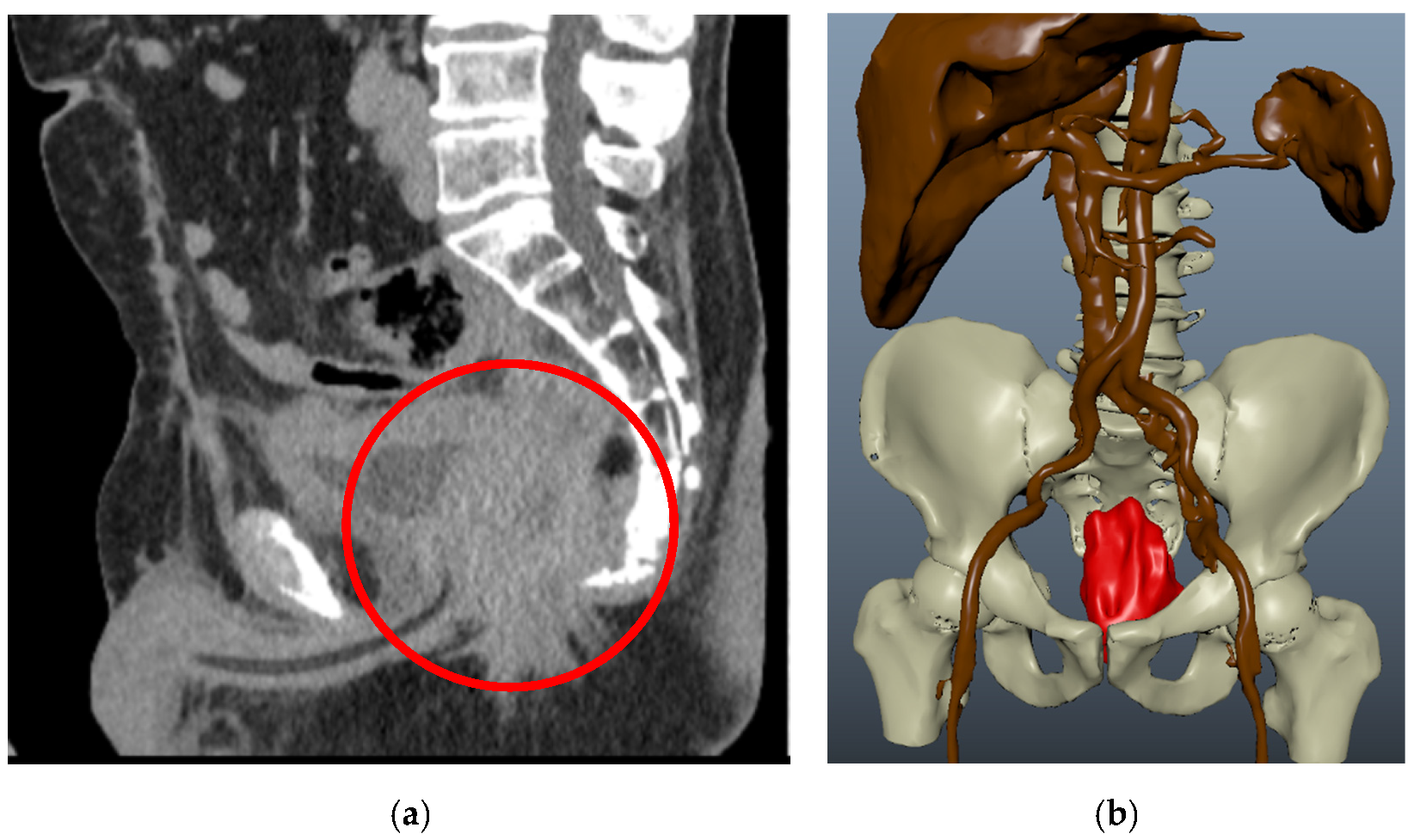

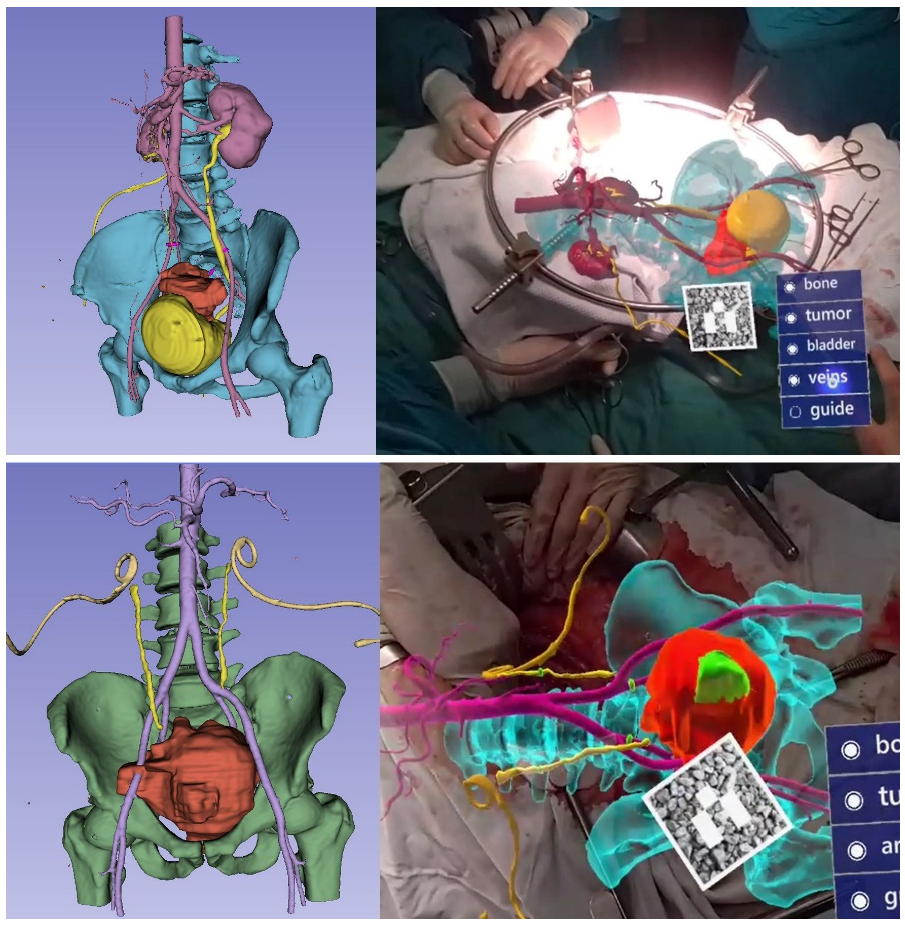

2. Materials and Methods

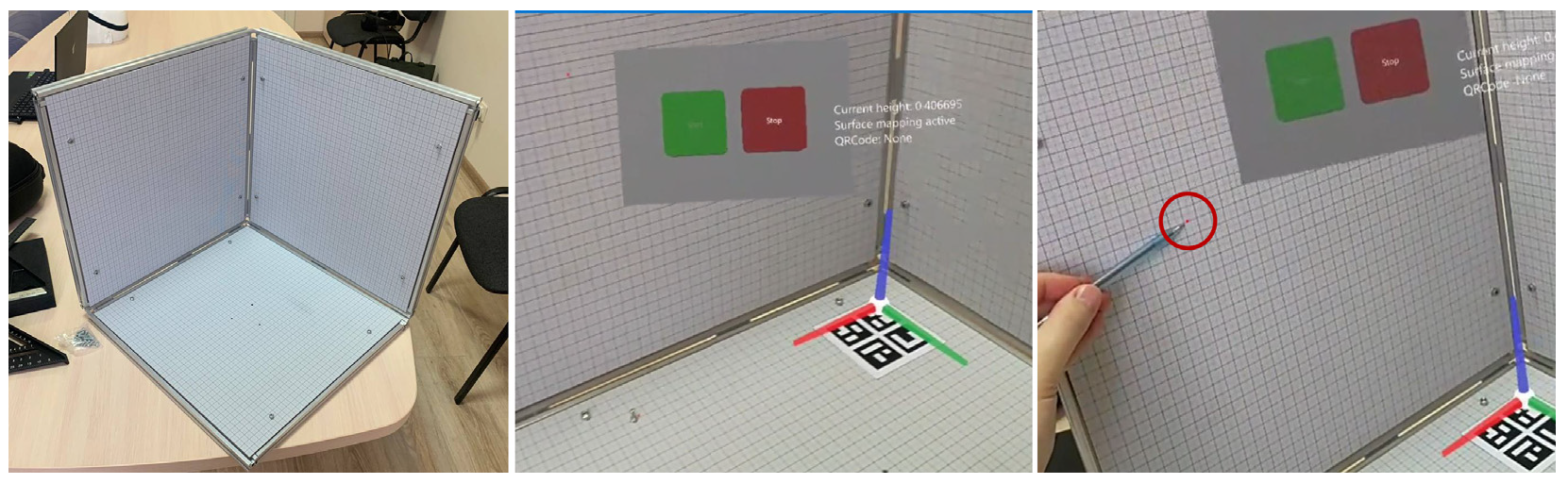

Materials

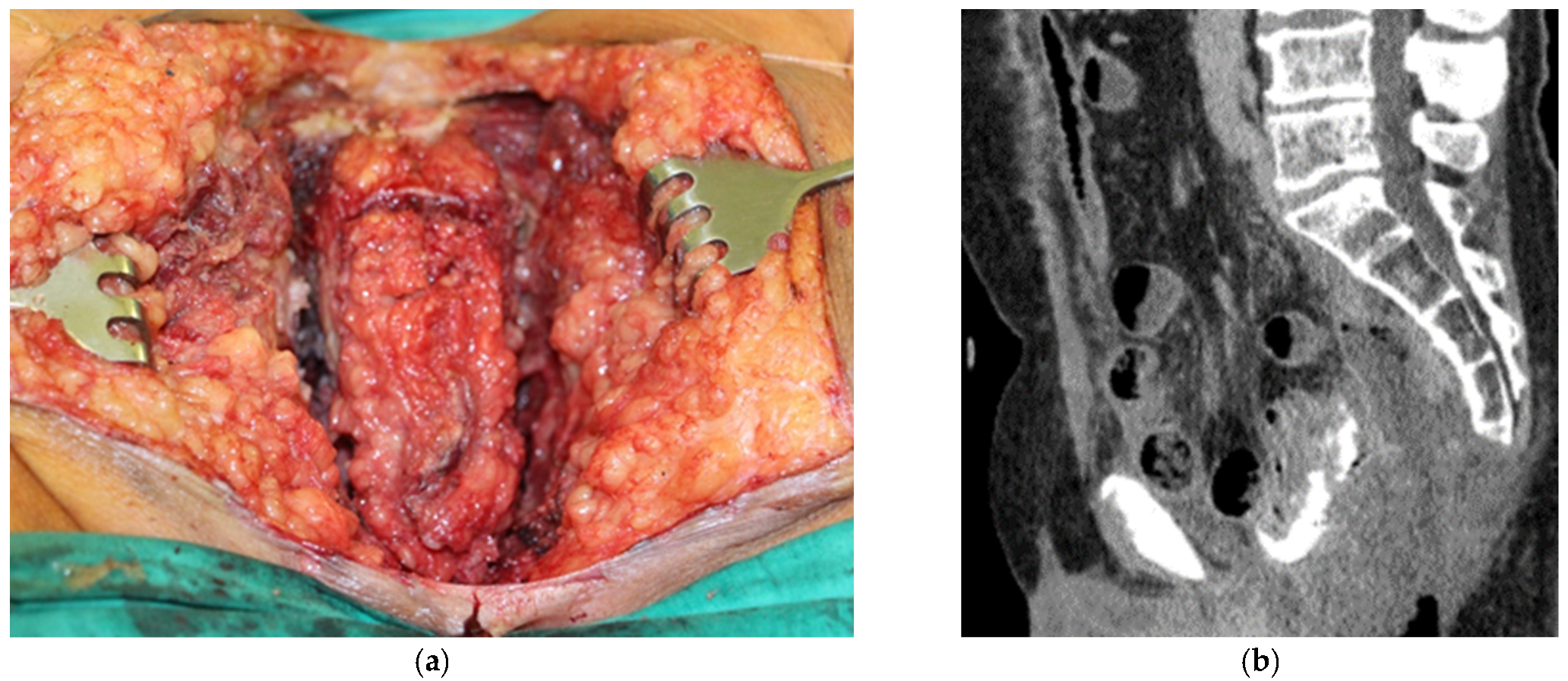

3. Results

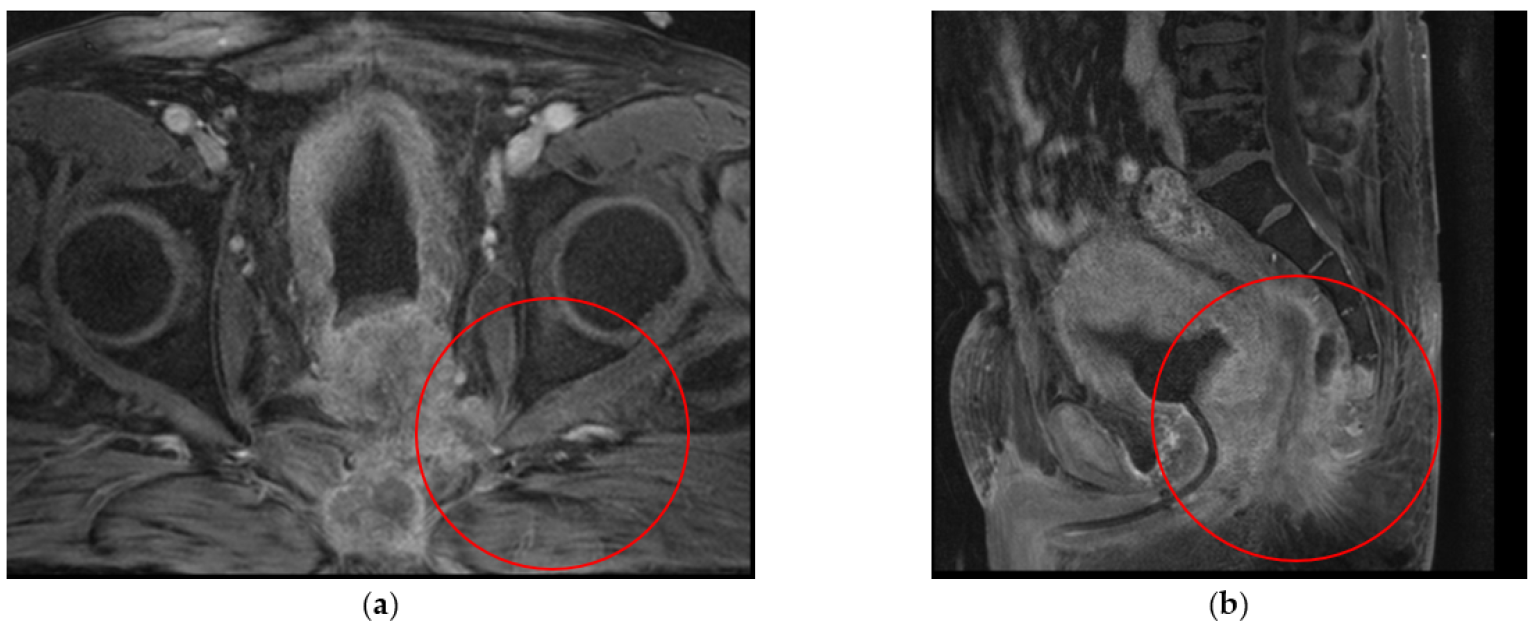

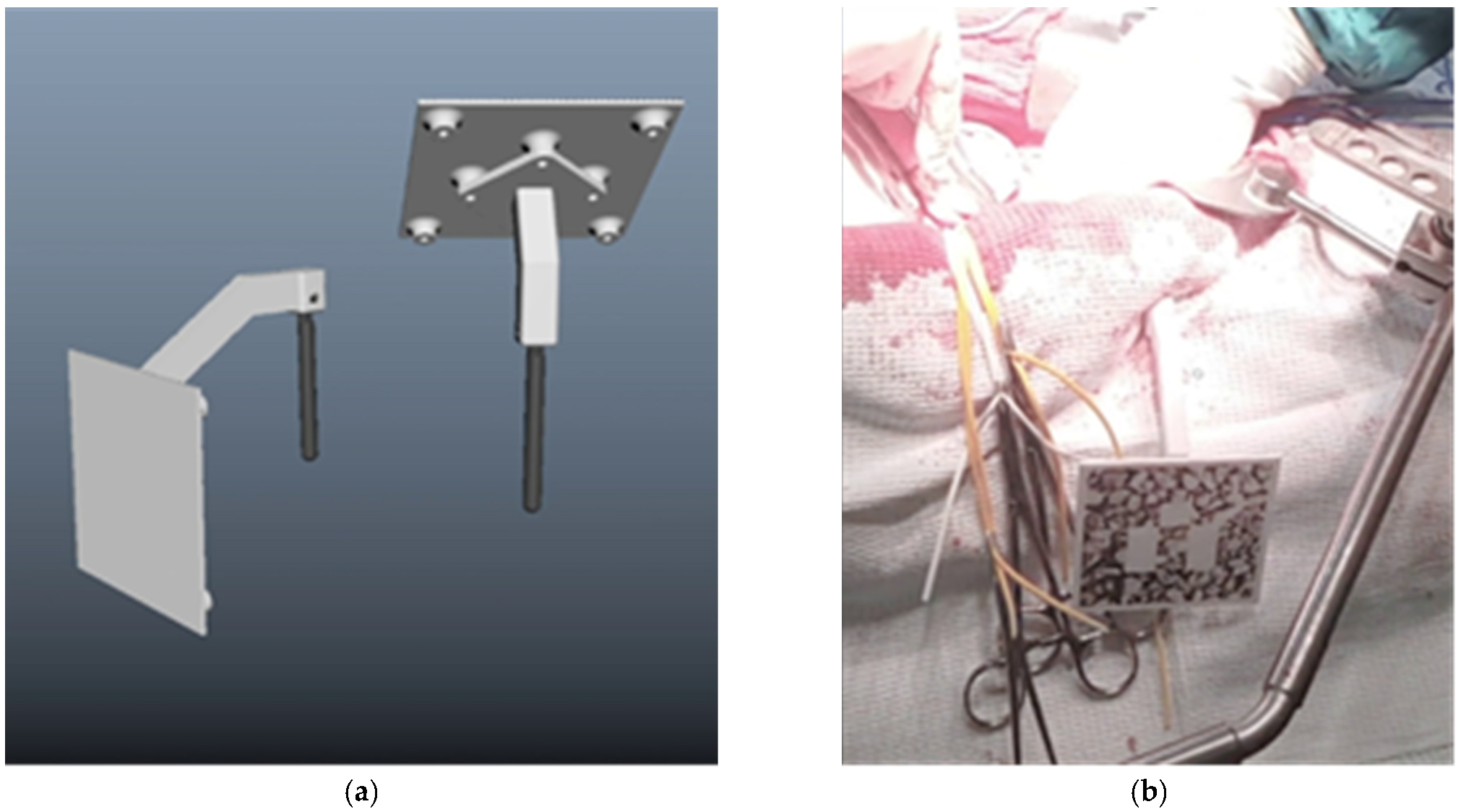

3.1. Clinical Experience in Cases with Invasive Markers

3.2. Clinical Experience in Cases with Noninvasive (Skin-Based) Markers

3.3. Hologram Positioning Accuracy
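As an illustration only, the following Python sketch assumes one common way to quantify hologram positioning accuracy. It measures the Euclidean distance between each landmark as placed in the hologram and the same landmark measured on the patient, then summarizes the errors as mean, root-mean-square (RMS), and maximum values. The function names, the millimetre units, and the sample coordinates are hypothetical; they are not the authors' protocol or data.

```python
import math
from typing import Sequence, Tuple

# Hypothetical representation: a landmark is an (x, y, z) coordinate in millimetres.
Point = Tuple[float, float, float]


def landmark_errors(planned: Sequence[Point], observed: Sequence[Point]) -> list[float]:
    """Euclidean distance between each hologram landmark and its measured
    counterpart on the patient (same units as the input coordinates)."""
    if len(planned) != len(observed):
        raise ValueError("landmark lists must have the same length")
    return [math.dist(p, o) for p, o in zip(planned, observed)]


def summarize(errors: Sequence[float]) -> dict[str, float]:
    """Mean, root-mean-square and maximum positioning error."""
    n = len(errors)
    if n == 0:
        raise ValueError("no landmarks to summarize")
    return {
        "mean": sum(errors) / n,
        "rms": math.sqrt(sum(e * e for e in errors) / n),
        "max": max(errors),
    }


if __name__ == "__main__":
    # Hypothetical coordinates for illustration only, not measured data.
    planned = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
    observed = [(3.0, 4.0, 0.0), (10.0, 0.0, 5.0), (0.0, 10.0, 0.0)]
    print(summarize(landmark_errors(planned, observed)))
```

With the sample data, the first two landmarks are each 5 mm off and the third coincides exactly, so the maximum error is 5 mm and the mean is about 3.3 mm. Reporting the maximum alongside the mean is a deliberate choice: a single badly placed landmark can matter even when the average looks small.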

4. Discussion

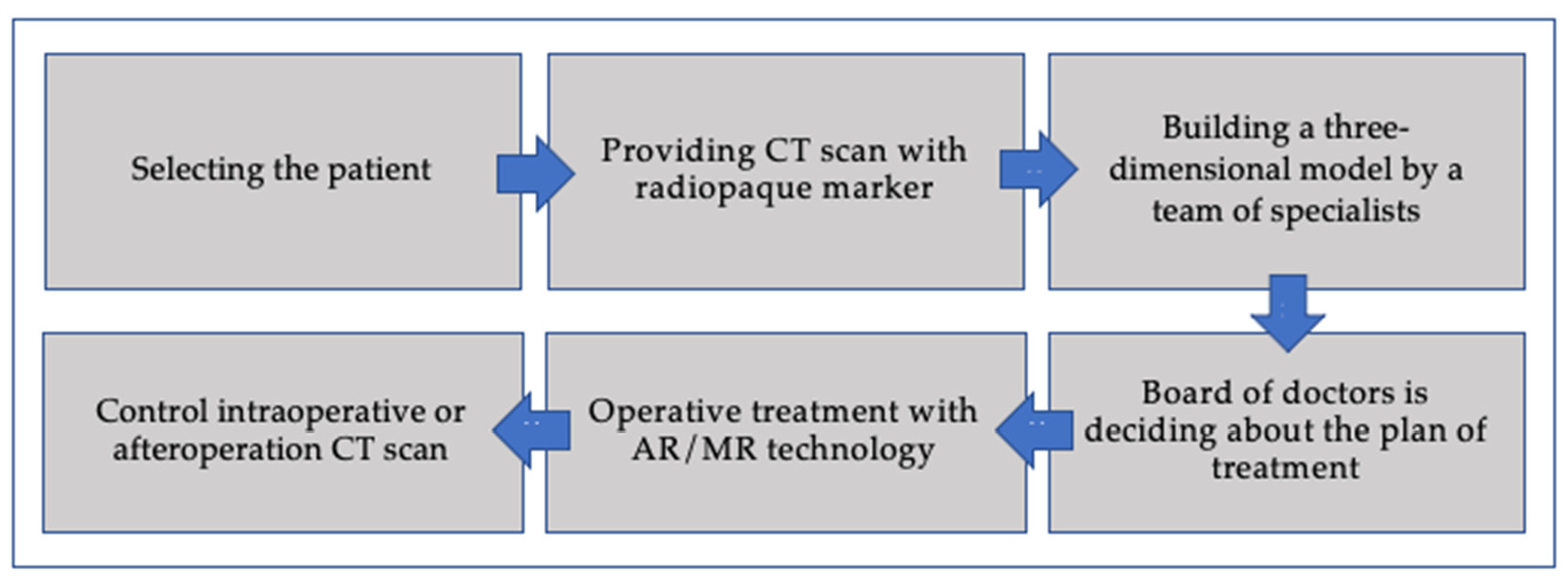

- Algorithm for the application of augmented reality in surgery

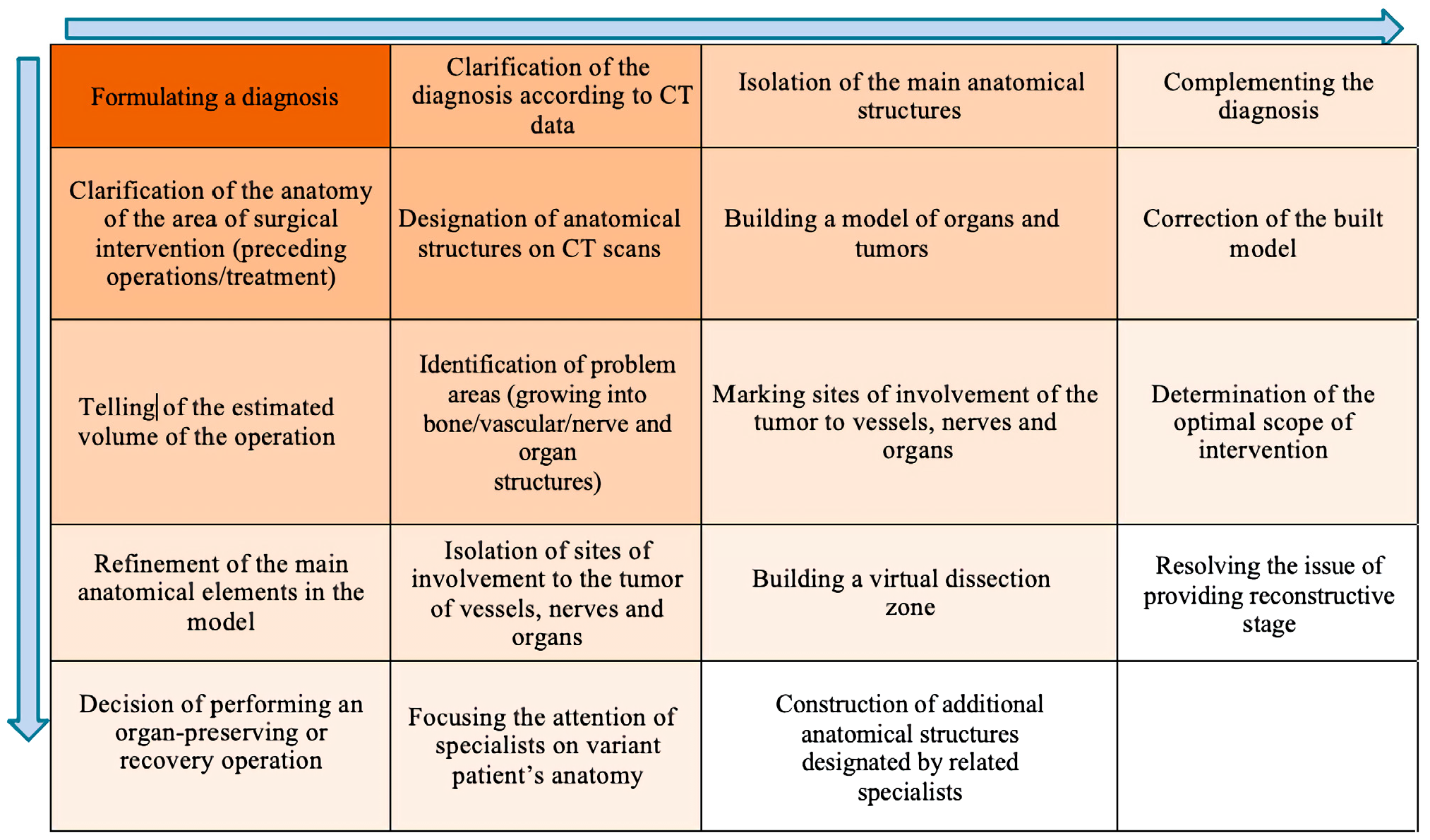

- Algorithm for creating a three-dimensional model of a surgical procedure by a multidisciplinary team.

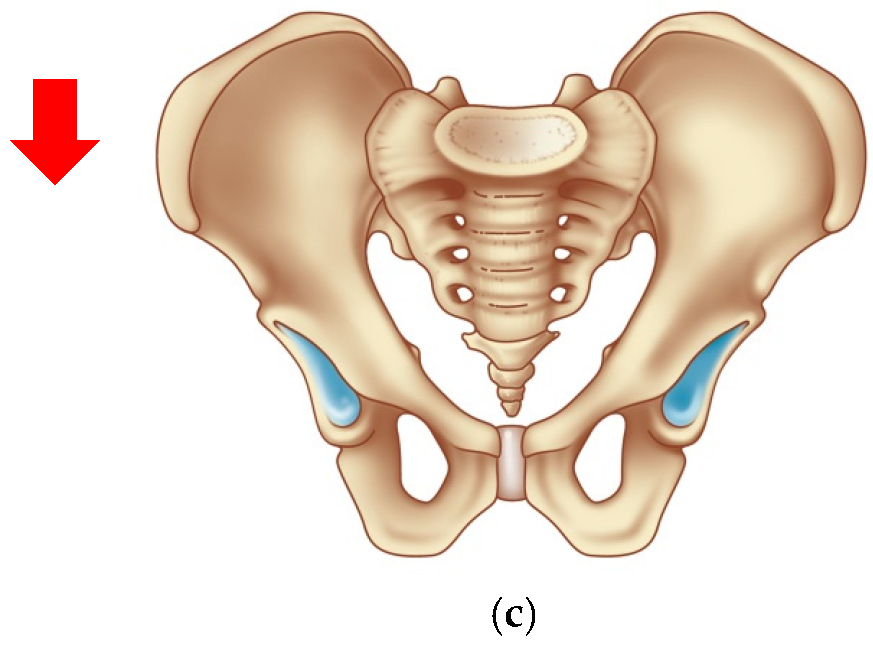

- Bone fixation of a marker

- Design with two (or more) markers to compensate for changes in patient position

- Magnetic fixation of a marker

- Gesture control menu

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ID | Diagnosis | Operation | Duration, min |
|---|---|---|---|
| 1 | Cancer of the lower rectum cT3N1M0/pT3N1aM0 Lvi(+) IIIB st. 1 | Infralevator evisceration of the small pelvis, resection of the small intestine, cholecystectomy, resection of the coccyx, bilateral ureterocutaneostomy. | 380 |
| 2 | Cancer of the lower ampulla of the rectum pT3cN1aM0 IIIB st. 1 | Infralevator evisceration of the small pelvis with distal resection of the coccyx and sacrum at the S5 level (en bloc removal of the tumor together with the bladder, prostate gland, and distal part of the sacrum). | 335 |
| 3 | Ovarian cancer T3cNxM1 IVa st. 1 | Combined cytoreductive (initially optimal) operation: posterior supralevator evisceration of the small pelvis. Resection of the right dome of the diaphragm. Resection of the greater and lesser omentum. Obstructive resection of the sigmoid colon. Total peritonectomy. Cholecystectomy, splenectomy, appendectomy. Resection of the right ureter (Figure 8). | 390 |

| ID | Diagnosis | Operation | Duration, min |
|---|---|---|---|
| 1 | Bladder cancer T4bN3M1 (Lim) IV 1 | Radical cystectomy. Lymph node dissection along the aorta, in the aortocaval space, and along the course of the iliac vessels. | 330 |
| 2 | Cervical cancer pT1b2N0M0 Ib st. Recurrence with formation of vesicovaginal and rectovaginal fistulas 1 | Pelvic evisceration (radical cystectomy with formation of a Bricker ileal conduit, resection of the vaginal stump, anterior resection of the rectum). | 90 |
| 3 | Cervical cancer cT2bN0M0 IIb st. 1 | Diagnostic laparotomy (revision of the abdominal cavity revealed multiple areas of carcinomatosis throughout the abdominal cavity; a radical operation was technically impossible). | 110 |
| 4 | Hepaticocholedochal stricture | Laparotomy. Rehepaticojejunostomy with a long Roux loop. | 270 |
| 5 | Recurrent chondrosarcoma of the 11th rib on the left with spread to the dome of the diaphragm and left kidney 1 | Not completed due to clinical exacerbation. | N/A |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ivanov, V.M.; Krivtsov, A.M.; Strelkov, S.V.; Smirnov, A.Y.; Shipov, R.Y.; Grebenkov, V.G.; Rumyantsev, V.N.; Gheleznyak, I.S.; Surov, D.A.; Korzhuk, M.S.; et al. Practical Application of Augmented/Mixed Reality Technologies in Surgery of Abdominal Cancer Patients. J. Imaging 2022, 8, 183. https://doi.org/10.3390/jimaging8070183

Ivanov VM, Krivtsov AM, Strelkov SV, Smirnov AY, Shipov RY, Grebenkov VG, Rumyantsev VN, Gheleznyak IS, Surov DA, Korzhuk MS, et al. Practical Application of Augmented/Mixed Reality Technologies in Surgery of Abdominal Cancer Patients. Journal of Imaging. 2022; 8(7):183. https://doi.org/10.3390/jimaging8070183

Chicago/Turabian Style: Ivanov, Vladimir M., Anton M. Krivtsov, Sergey V. Strelkov, Anton Yu. Smirnov, Roman Yu. Shipov, Vladimir G. Grebenkov, Valery N. Rumyantsev, Igor S. Gheleznyak, Dmitry A. Surov, Michail S. Korzhuk, et al. 2022. "Practical Application of Augmented/Mixed Reality Technologies in Surgery of Abdominal Cancer Patients" Journal of Imaging 8, no. 7: 183. https://doi.org/10.3390/jimaging8070183

APA Style: Ivanov, V. M., Krivtsov, A. M., Strelkov, S. V., Smirnov, A. Y., Shipov, R. Y., Grebenkov, V. G., Rumyantsev, V. N., Gheleznyak, I. S., Surov, D. A., Korzhuk, M. S., & Koskin, V. S. (2022). Practical Application of Augmented/Mixed Reality Technologies in Surgery of Abdominal Cancer Patients. Journal of Imaging, 8(7), 183. https://doi.org/10.3390/jimaging8070183