Abstract

Today, the processing and analysis of mammograms is quite an important field of medical image processing. Small defects in images can lead to false conclusions. This is especially true when the distortion occurs due to minor malfunctions in the equipment. In the present work, an algorithm for eliminating a defect is proposed, which includes a change in intensity on a mammogram and deteriorations in the contrast of individual areas. The algorithm consists of three stages. The first is the defect identification stage. The second involves improvement and equalization of the contrasts of different parts of the image outside the defect. The third involves restoration of the defect area via a combination of interpolation and an artificial neural network. The mammogram obtained as a result of applying the algorithm shows significantly better image quality and does not contain distortions caused by changes in brightness of the pixels. The resulting images are evaluated using Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) and Naturalness Image Quality Evaluator (NIQE) metrics. In total, 98 radiomics features are extracted from the original and obtained images, and conclusions are drawn about the minimum changes in features between the original image and the image obtained by the proposed algorithm.

1. Introduction

Neoplasms in the mammary gland represent the most common cancer type among women. Recently, the number of new patients diagnosed with breast cancer reached approximately two million per year [1,2]. The early diagnosis of lesions of the mammary gland provides good opportunities for the elimination of these abnormalities. Therefore, medical organizations around the world are trying to provide frequent and easily accessible mammography services [3].

Both mobile mammography and stationary mammography are widely used, providing different levels of detail and image quality. Mobile mammography stations often produce low-quality images. A previous paper [4] compared the image quality levels between stationary and mobile services and concluded that the quality of images from mobile devices is insufficient. Stationary and mobile devices, due to their high workload, may experience disturbances in the imaging matrix or other defects that affect the image quality. False-positive results increase the overall medical costs for society, while false-negative results do not allow the early detection of diseases.

Due to their popularity, mobile mammography services should at least provide images of acceptable quality, and the image quality should have a minimal impact on cancer detection. Due to the radiation received, it is highly undesirable to take “extra” mammographic images [5]. However, image defects are often not critical and specialists can correctly interpret the mammograms. Despite this, when conducting automatic analyses, the attained quality is not sufficient.

Image quality is especially important in automatic analysis for the correct characterization of features [6] and for the accurate operation of artificial neural networks [7,8,9,10]. It should also be taken into account that for the elimination of image defects, algorithms are used that take up a significant part of the diagnostic time.

Various approaches are used to improve mammogram images. For example, in [11], a method based on a non-subsampled shearlet transform was used to eliminate image noise. In [12], the linearly quantile separated histogram equalization grey relational analysis method was presented. This method enhanced the local and global contrast, highlighting the desired areas. In [13], several methods for eliminating noise were proposed, and the median filter, max and min filters, and Wiener filter were considered.

Dynamic unsharp masking in a Laplacian pyramid was proposed in [14] to improve the image and to better highlight the internal structure of the mammogram. In [15], a comparison of different methods of cancer detection on mammogram images was carried out. The following procedures were proposed to improve the quality: low-pass filtering, Gaussian smoothing, subsampling operations, and morphological operations. To eliminate noise, median filtering with a 3 by 3 window was also used [16,17]. In [18], various image quality improvement algorithms were presented, such as the synthetic minority over-sampling technique to improve the training set; a Gaussian pyramid for image scaling with minimal loss; histogram equalization, an adaptive mean, median filters, log transforms, and a Wiener filter to increase contrast; and thresholding of the pixel intensity to eliminate artifacts. In [19], an image filtering method based on the use of a fractal mask was presented.

The most commonly used method is contrast-limited adaptive histogram equalization (CLAHE) in combination with certain other algorithms. For example, in [20], to increase the image contrast, CLAHE was used with an entropy-based intuitionistic fuzzy method. In [21], the image was first converted to negative and then equalization was applied. In [22], CLAHE was combined with a discrete wavelet transform to increase the image contrast. In [23], several methods of image enhancement were compared: the combination of CLAHE with bilateral filtering, a log transform, histogram equalization, a Gaussian filter, a Laplacian filter, and median filters.

In the present work, we consider the processing of mammograms whose images have a clearly defined defective strip with a strong increase in brightness, up to the complete illumination of the image in the area of the defect. Similar defects can occur for detectors that are in motion. As an example, normal ray detection is carried out first, which is followed by a “hardware failure” expressed in the form of a band that differs significantly in characteristics from a “good” image. Then, further detection is carried out with violations, which can cause both hardware and software distortions. These distortions are expressed as a loss of contrast or the appearance of noise and interference. In the present work, violations that are visible as blurring of the image are considered. Existing noise elimination algorithms cannot cope with such a “complex” defect. Simultaneously with the defect, there is also a decrease in the contrast of one-half of the image. An algorithm for restoring areas with a non-linear increase or decrease in image brightness is proposed. In the area of the defect, where there is a complete loss of information, the image is interpolated and restored by an artificial neural network. The reconstructed mammogram is obtained by overlaying two images: one interpolated (carrying information about the light lines on the mammogram) and one reconstructed by a neural network (carrying information about the background).

The processed image should have approximately the same contrast; the vessels, fibrous and glandular tissues contained in the image should be well “read”. We use the BRISQUE and NIQE metrics to assess the perception of the entire image, as well as ten other metrics to assess the accuracy of recovering a lost image (an image in the band area). A comparison is also made on 98 radiomics features extracted from the original and obtained images.

2. Materials and Methods

Mammogram images contain artefacts and may have low contrast, which can significantly complicate the diagnosis process. The image recovery algorithm contains the following steps:

- Determining the core of the defect (black pixels and excessively bright pixels);

- Defect determination. Restoration of pixels outside the core;

- Equalizing the contrast of the entire image, except for the defect;

- Restoration of the core of the defect by the interpolation algorithm;

- Selection of light lines on the restored image;

- Restoration of the background of the core of the defect and of the area adjacent to the core with an artificial neural network;

- Overlaying of the interpolation results (highlighted lines) on the background obtained by the neural network.

Next, we take a closer look at some of the algorithms used.

2.1. Improving the Image Contrast

One of the main tasks in image processing is to increase the contrast. Contrast creates a visual difference that distinguishes an object from the background and other objects. The main goal is to improve the visual quality of the image. Histogram equalization (HE) is a commonly used method for modifying a histogram. However, the HE is a global image adjustment method that cannot effectively improve the local contrast, as the effect will be very poor in some situations. Therefore, the contrast-limited adaptive histogram equalization (CLAHE) method is used.

In the present work, we use the CLAHE algorithm to remove noise and enhance the contrast of mammograms. The filter parameters directly depend on the modes of the intensity distributions on opposite sides of the defect. In the Python implementation, we use grid_size = 8 by default, while clip_limit is set on a case-by-case basis. Other contrast enhancement algorithms can also be used, for example the multi-fractal method [16].

2.2. Recovery of the Lost Image

The classic approach for recovering a lost image is interpolation or approximation. Algorithms based on the Fourier spectrum, performing phase and amplitude reconstruction, are also used [24]. The main idea of this method is the sequential calculation of the image spectrum, with changes made only to the defect without changing the rest of the image [25,26,27].

In recent years, artificial neural networks have also been widely used [28,29]. The main advantage of the method is the high speed of work on high-resolution images with an acceptable level of restoration quality and the simplicity of the training set.

In the present work, two neural networks are used for the algorithm: a coarse–fine network for coarse gap filling in an image compressed to 256 × 256 and a refine network for more accurate gap filling in an image compressed to 512 × 512.

To improve the accuracy of filling a gap in the original image, the following parameters are calculated:

- The contextual residual, which is the difference between an original image and an image obtained after downsampling to 512 × 512 and further upsampling to an original resolution;

- Attention scores, which act as characteristics of the region affinity of the part of the image outside the gap to the gap filled by the neural network. They are used to transfer image structure information outside the gap to the inside.

Based on these two parameters, aggregated residuals are calculated, which are then added to the filled gap for sharpening.
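The contextual residual described above can be illustrated on a toy image. The following is only a minimal sketch: block averaging for downsampling, nearest-neighbour upsampling, and an integer scale factor are assumptions made for brevity (the text resizes to 512 × 512 and does not name the resampling kernels), and the function names are hypothetical. The attention-score step is not reproduced here.

```python
def downsample(img, f):
    """Block-average an image (list of rows) by an integer factor f.

    Assumes the height and width are divisible by f.
    """
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[y * f + dy][x * f + dx] for dy in range(f) for dx in range(f)) / (f * f)
            for x in range(w // f)
        ]
        for y in range(h // f)
    ]


def upsample(img, f):
    """Nearest-neighbour upsampling by an integer factor f."""
    h, w = len(img), len(img[0])
    return [[img[y // f][x // f] for x in range(w * f)] for y in range(h * f)]


def contextual_residual(img, f):
    """Original image minus its downsampled-then-upsampled version."""
    low = upsample(downsample(img, f), f)
    return [[img[y][x] - low[y][x] for x in range(len(img[0]))] for y in range(len(img))]
```

By construction, adding the residual back to the low-resolution reconstruction returns the original pixels, which is why the residual can carry the high-frequency detail lost in the compressed image.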

2.3. Image Binarization

Binarization is one of the effective ways to determine the threshold level of shades of gray for stratification of the original image into the analyzed object and background. The quality of the image binarization process is improved as a necessary condition for increasing the efficiency of selection (detection) of given objects [30]. The binarization threshold is defined as an optimization problem in determining the maximum measure of distinguishability of classes [31] or is chosen empirically. Binarization is also actively used in mammography to eliminate image noise [32,33]. Other approaches are also used to highlight an image against the background; for example, the thresholding method and morphological operations were effectively applied in [17].

The main parameter of such a transformation is the threshold, which represents a value with which the brightness of each pixel is compared. Various binarization methods exist that can be conditionally divided into two groups: global and local. In the former case, the threshold value remains unchanged during the entire binarization process. In the latter case, the image is divided into regions, in each of which a local threshold is calculated. There also exist methods (for example the Otsu method) in which a threshold is automatically calculated that minimizes the average segmentation error, i.e., the average error from deciding whether image pixels belong to an object or background.
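As an illustration of an automatically calculated global threshold, the sketch below follows the usual formulation of the Otsu method, which selects the gray level that maximizes the between-class variance of the two pixel classes. It is a plain-Python sketch for 8-bit intensities; the function name and the flat list input are assumptions made for the example, and it is not the thresholding used in the present work (which is empirical).

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form one class, pixels with value > t the other.
    `pixels` is a flat iterable of integers in [0, levels - 1].
    """
    hist = [0] * levels
    total = 0
    for p in pixels:
        hist[p] += 1
        total += 1
    sum_all = sum(i * h for i, h in enumerate(hist))

    w_low = 0        # number of pixels in the lower class
    sum_low = 0      # sum of intensities in the lower class
    best_t, best_var = 0, -1.0
    for t in range(levels):
        w_low += hist[t]
        sum_low += t * hist[t]
        if w_low == 0:
            continue  # lower class still empty
        w_high = total - w_low
        if w_high == 0:
            break     # upper class empty from here on
        mean_low = sum_low / w_low
        mean_high = (sum_all - sum_low) / w_high
        # Between-class variance up to the constant factor 1 / total**2.
        var_between = w_low * w_high * (mean_low - mean_high) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```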

The main goal of binarization is a radical reduction in the amount of information that one has to work with. In the present work, incomplete thresholding with a global threshold (determined empirically) is used to extract light areas in the image.

3. Results

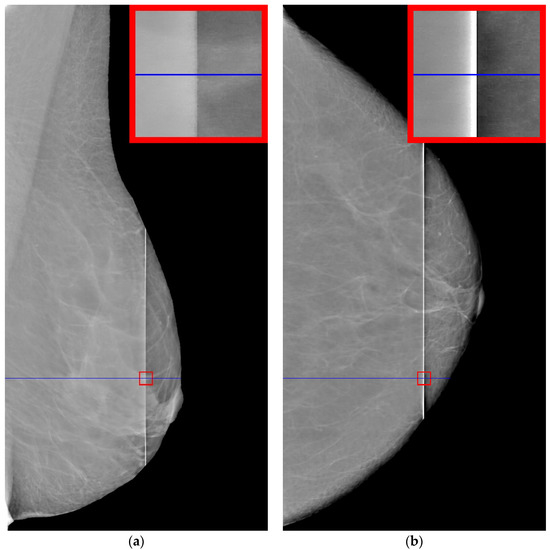

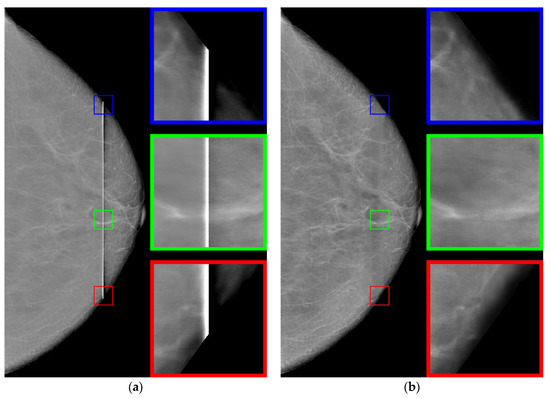

The original image of 4052 by 5539 pixels contains a vertical defect, which consists of a sharp increase in the intensity of the image in the area of the defect. The defect may have a different width along the vertical line. The defect width can range from several tens to hundreds of pixels. The increase in brightness in the defect strip can be quite significant, in some cases reaching full illumination (maximum brightness). Examples of such mammograms are shown in Figure 1. The mammograms are taken retrospectively from patient records.

Figure 1.

Examples of defective mammograms. The size of the red squares is 100 by 100: (a) mammogram with unilluminated defect; (b) mammogram with illuminated defect.

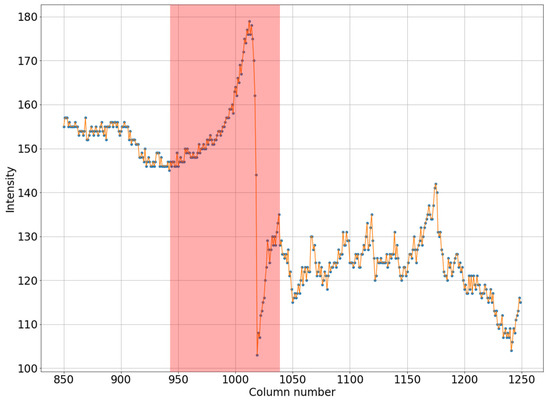

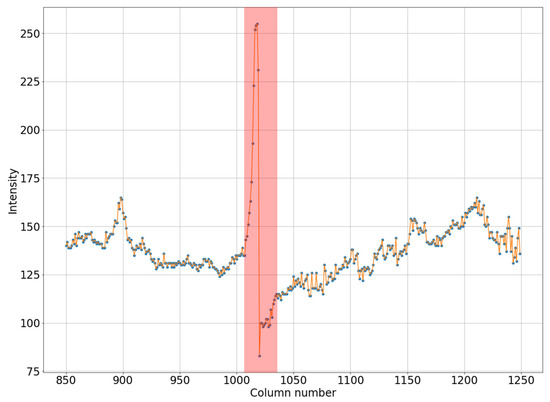

It can be seen in Figure 1a that the intensity does not reach maximum values in the region of the defect. However, the area of increased brightness is quite large. In Figure 1b, there is an illuminated defect, and in this area there is a complete loss of information. Unlike images in which the brightness increase is slow, in this case the brightness increase is quite sharp. This can be clearly seen in Figure 2 and Figure 3.

Figure 2.

Horizontal section of a mammogram with a wide low-intensity defect which corresponds to the blue stripe in Figure 1a.

Figure 3.

Horizontal section of a mammogram with a narrow high-intensity defect, which corresponds to the blue stripe in Figure 1b.

Let us note the following. A defect always shows an increase in pixel intensity on one side with a simultaneous decrease in intensity on the other side of the defect. In addition, the width of the defect correlates with its intensity. A wide defect corresponds to low-intensity changes. Conversely, a narrow defect corresponds to high-intensity distortions. For the proposed algorithm, it is necessary that one of the areas have an image with high contrast, corresponding to an operational device.

Let us consider the results of the step-by-step reconstruction of the mammogram image with illustrations using the example shown in Figure 1b.

3.1. Determination of Defects

At the initial stage, we determine the contour of the breast. This operation can be performed by moving from the borders of the image toward the center until the pixel color stops being black or exceeds a specified low value. Next, we find the core of the defect. We consider the core of the defect to be the part of the image where the intensity is more than 220 or equal to zero. Note that there may not be a defect core in a particular horizontal section. Let us explain this with the example of Figure 1a. Here, it can be seen that the brightness of the strip in the middle is lower than in the upper and lower parts. Thus, in this case, the defect core will be only at the ends of the vertical defect. When the core of the defect is not connected, we connect the individual sections with straight lines, resulting in a vertically similar area.
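The two operations described above (scanning inward from the image borders, then flagging core pixels) can be sketched for a single horizontal section as follows. This is a minimal illustration under stated assumptions: the row is a plain list of 8-bit intensities, the "specified low value" is represented by a hypothetical parameter `low`, and the function names are invented for the example. Connecting disconnected core sections with straight lines is not shown.

```python
def contour_bounds(row, low=10):
    """Scan inward from both borders until a pixel brighter than `low` is met.

    Returns inclusive (left, right) indices; left > right means the row
    contains no tissue.
    """
    left = 0
    while left < len(row) and row[left] <= low:
        left += 1
    right = len(row) - 1
    while right >= left and row[right] <= low:
        right -= 1
    return left, right


def core_columns(row, bright=220, low=10):
    """Columns inside the contour whose intensity is above `bright` or exactly zero."""
    left, right = contour_bounds(row, low)
    return [x for x in range(left, right + 1) if row[x] > bright or row[x] == 0]
```

A row that returns an empty list corresponds to the case noted above, where a particular horizontal section has no defect core.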

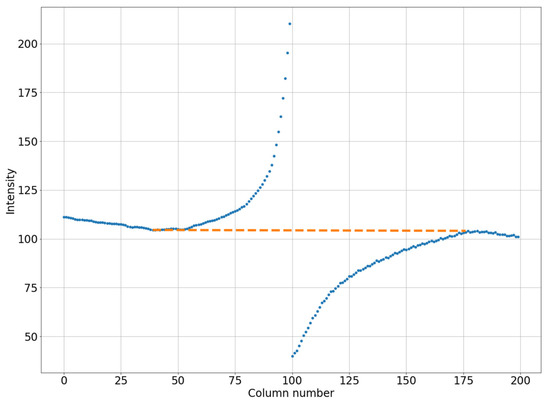

In the next step, the areas to the left and right of the defect core are covered with curvilinear quadrilaterals measuring 100 × 100 pixels (in practice, this area has an arcuate shape along the vertical). Then, we calculate the average pixel intensity for each column of the selected quadrilateral. We determine the minimum intensity value on the illuminated side and the maximum intensity value on the darkened side, then connect the obtained data with a straight line, as shown in Figure 4. We determine the difference between the average values in each column (blue line in Figure 4) and the straight line values (orange line in Figure 4). The resulting value is subtracted from the intensity value of each point of the column, thereby adjusting the brightness of the pixels in the defect area.

Figure 4.

An example of linearization of the column-average intensity in a small square (200 by 100 pixels). The blue dotted line is for the original image. The orange dotted line is for the smoothed image.

Let us repeat this action for all columns of the rectangle. Evidently, as the distance from the defect core increases, the change in the intensity values decreases in each column of this area, correcting the average pixel values to the desired level. The outer borders of the upper and lower rectangles coincide with the contour of the breast, and their vertical size can be less than 100 pixels.
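The column-wise brightness correction can be sketched as follows. This is one possible reading of the procedure, not a definitive implementation: the per-column offset (column mean minus the straight-line value) is assumed to be subtracted from every pixel of that column, the two end values of the straight line are passed in by the caller as hypothetical parameters `start` and `end`, and the block is treated as a plain rectangle rather than a curvilinear quadrilateral.

```python
def column_means(block):
    """Average intensity of each column of a rectangular block (list of rows)."""
    h = len(block)
    return [sum(row[x] for row in block) / h for x in range(len(block[0]))]


def linearize_columns(block, start, end):
    """Shift each column so that its mean lies on the line from `start` to `end`."""
    means = column_means(block)
    w = len(means)
    out = [row[:] for row in block]
    for x in range(w):
        # Value of the straight line at column x.
        target = start + (end - start) * x / (w - 1) if w > 1 else start
        offset = means[x] - target
        for y in range(len(block)):
            # Subtract the offset and keep the result in the 8-bit range.
            out[y][x] = min(255, max(0, block[y][x] - offset))
    return out
```

After the correction, the column means follow the straight line exactly unless clipping to the range 0 to 255 occurs.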

In the next step, we will remove the “blurring” of the image of the area with low contrast.

3.2. Equalizing Contrast in the Image

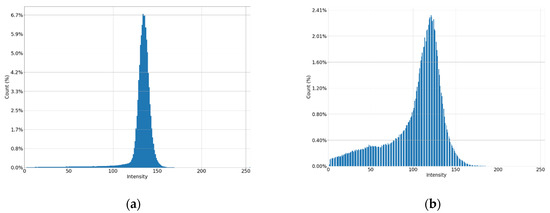

Let us estimate the contrast of the image to the left and to the right of the defect. The blurrier (lower contrast) image shown in Figure 5a corresponds to the mammogram shown in Figure 1b. A high-contrast “uncorrupted” image of the same mammogram is shown in Figure 5b.

Figure 5.

Histograms of parts of the image: (a) low contrast image; (b) high contrast image.

Let us improve the “corrupted” image. For this purpose, we use the CLAHE method with the clip_limit parameter, which must be determined by the ratio of low- and high-contrast images. We calculate the mode and standard deviation (stdA and stdB) for the distribution of pixel brightness on both sides of the defect. We determine the CLAHE parameter using the following formula:

clip_limit = 1.11 − 0.023 × (stdB − stdA)

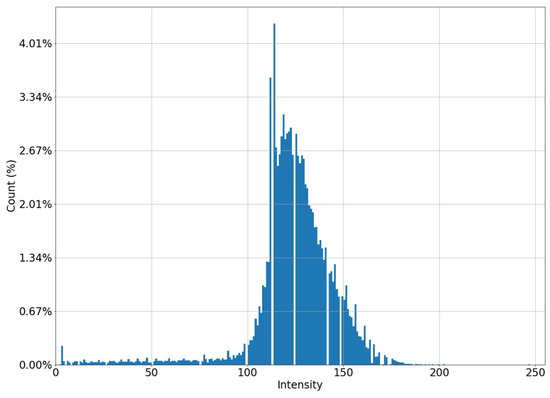

This formula is empirically derived as a regression model built on optimal clip_limit values for multiple mammograms. As a result, we improve the image for the non-contrasting part by changing the histogram from Figure 5a to Figure 6.
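The regression formula above is simple enough to express directly in code. The sketch below is only an illustration: the function names are hypothetical, the use of the population standard deviation is an assumption, and the text does not state which side of the defect is A and which is B, so the caller has to decide.

```python
from statistics import pstdev


def clip_limit_from_std(std_a, std_b):
    """Empirical regression from the text: 1.11 - 0.023 * (stdB - stdA)."""
    return 1.11 - 0.023 * (std_b - std_a)


def clip_limit_from_pixels(pixels_a, pixels_b):
    """Compute the clip limit from the pixel brightness values on each side.

    Assumption: population standard deviation (the text does not specify).
    """
    return clip_limit_from_std(pstdev(pixels_a), pstdev(pixels_b))
```

With OpenCV, for instance, such a value could be passed as `clipLimit` to `cv2.createCLAHE` together with `tileGridSize=(8, 8)`; whether the authors used OpenCV is not stated in the text.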

Figure 6.

Histogram of a “low-contrast” image after applying the CLAHE algorithm.

After this stage, the entire image, except for the core of the defect, has approximately the same contrast. Now let us proceed directly to the restoration of the image inside the core of the defect.

3.3. Restoring the Image in the Core of the Defect

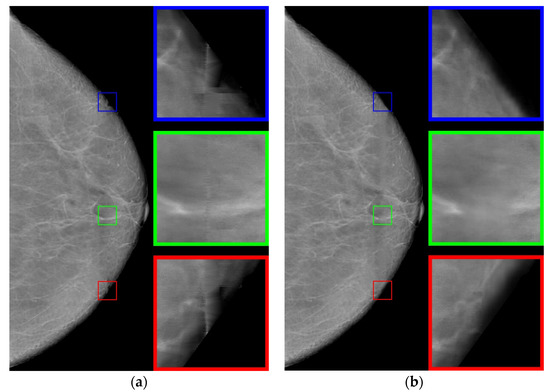

Using the interpolation method, we restore the image in the strip. The use of polynomials of higher degrees introduces artifacts into the image; therefore, we use polynomials of the first degree. The resulting image is shown in Figure 7a. Note that the use of polynomials of the second or third degree does not significantly change the result.
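First-degree interpolation across the strip can be sketched for one image row as follows. The row-wise direction (filling each horizontal section between the last good pixel on the left and the first good pixel on the right) is an assumption based on the vertical orientation of the defect, and the function name and arguments are hypothetical.

```python
def interpolate_strip(row, first, last):
    """Linearly fill row[first..last] from the neighbours row[first-1] and row[last+1].

    Assumes 1 <= first <= last <= len(row) - 2, so both neighbours exist.
    """
    left, right = row[first - 1], row[last + 1]
    span = last - first + 2  # distance between the two known pixels
    out = row[:]
    for x in range(first, last + 1):
        t = (x - first + 1) / span  # fractional position between the neighbours
        out[x] = left + t * (right - left)
    return out
```

Because each row is filled independently, neighbouring rows can end up with visibly different gradients, which is consistent with horizontal striping in the interpolated strip.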

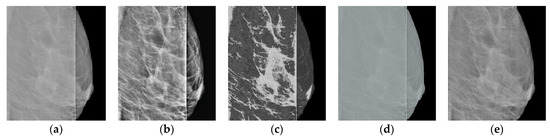

Figure 7.

Restored images: (a) using the interpolation method; (b) using the neural network.

Let us analyze enlarged images from small squares. It can be seen that the edge images (blue and red squares) are poorly restored. The images inside are approximated well enough, retaining the “white streaks”. However, with this approach, there are small horizontal stripes (green square), which is a usual occurrence with approximation by polynomials of a small degree.

Now we apply an artificial neural network trained on other data [29], based on the architecture of generative adversarial networks (GANs), which are very often used to generate natural images and videos. The resulting image is shown in Figure 7b. With the neural network, the image is well restored at the boundaries (blue and red squares). However, the image inside the defect is blurred, although the overall background is preserved well.

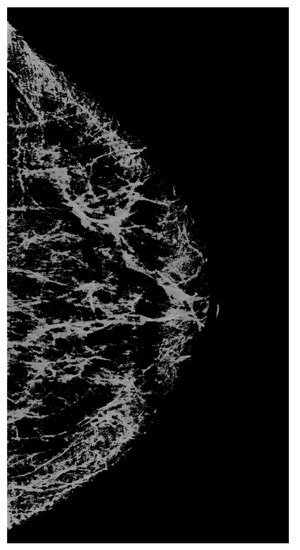

Let us proceed as follows. We take the white streaks from the image restored by interpolation (defect pattern) and transfer them to the image obtained by the neural network (defect background). To do this, we apply the CLAHE method with clip_limit = 20, significantly increasing the contrast. Then, we binarize the result. We attribute to the background the pixels with intensity levels of at most 2/3 of the maximum brightness (170), whereas the pixels with intensity levels ranging from 171 to 255 are attributed to the informative part of the image. We obtain a binary mask (Figure 8).

Figure 8.

A mask that carries information about significant lines in the image.

Using the mask, we select the necessary data (from Figure 7a) and apply them to the image containing the background (Figure 7b). As a result, we obtain the image shown in Figure 9b.
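The masking and overlay steps can be sketched as follows. This is a minimal illustration with images held as lists of rows; the function names are hypothetical, and the contrast-enhanced image is assumed to have been produced beforehand (the CLAHE step with clip_limit = 20 is not reproduced here).

```python
def binary_mask(enhanced, threshold=170):
    """True where the contrast-enhanced pixel is in the range 171 to 255."""
    return [[v > threshold for v in row] for row in enhanced]


def overlay(interpolated, background, mask):
    """Take masked pixels from the interpolated image and the rest from the background."""
    return [
        [i if m else b for i, b, m in zip(i_row, b_row, m_row)]
        for i_row, b_row, m_row in zip(interpolated, background, mask)
    ]
```

Here `interpolated` plays the role of the image in Figure 7a and `background` the role of the image in Figure 7b.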

Figure 9.

(a) The original image and (b) the restored image.

3.4. Comparison of the Algorithm Versus Other Methods

A comparison of the results obtained with the proposed algorithm versus the results obtained with existing methods for improving the image quality is shown in Table 1. Here, the most common metrics for measuring image quality without a reference, namely BRISQUE [34] and NIQE [35], are used. It can be seen that the method proposed in the present work achieves the best value for the considered images. The following methods are used for comparison: CLAHE with clip_limit = 5; the MedGA medical image enhancement method [36] based on genetic algorithms; and the SCL-LLE neural network [37] for improving the quality of the input image. The results of processing the images shown in Figure 1 with these methods are presented in Figure 10 and Figure 11.

Table 1.

Evaluation of various existing methods for improving image quality as well as of the proposed method for images from Figure 1.

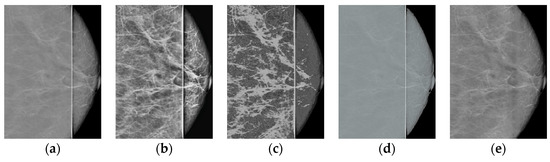

Figure 10.

Results of processing shown in Figure 1a: (a) original image; (b) CLAHE; (c) MedGA; (d) SCL-LLE; (e) proposed method.

Figure 11.

Comparison of results for Figure 1b: (a) original image; (b) CLAHE; (c) MedGA; (d) SCL-LLE; (e) proposed method.

Thus, the proposed method in terms of BRISQUE and NIQE metrics achieves the best value for the images considered in the work. Additionally, note that the MedGA and SCL-LLE methods do not remove the vertical defective band.

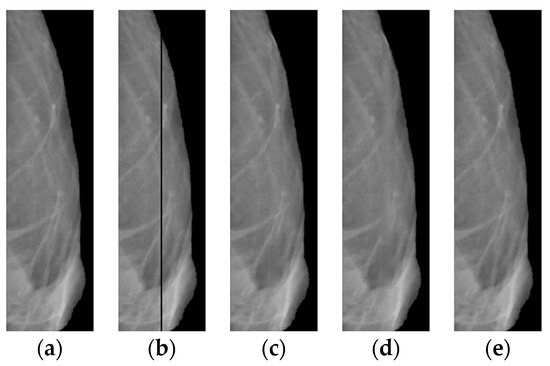

3.5. Estimating the Accuracy of Recovering a Lost Image

Let us evaluate the algorithm used for recovering a lost image. For this purpose, we use the “good” (right) part of the mammogram image from Figure 1a. On the original image (Figure 12a), we cut out a rectangular strip 5 pixels wide (see Figure 12b). First, we restore the lost image in the strip using the classical interpolation method; the results of the restoration are shown in Figure 12c. Then, we apply an algorithm based on a neural network to the defective image (Figure 12b).

Figure 12.

Right side of the image shown in Figure 1a: (a) original; (b) after cutting out the rectangular strip; (c) with interpolated band; (d) with a strip reconstructed by a neural network; (e) with the strip recovered by the proposed algorithm.

The following reference metrics are used here to assess the overlay efficiency: SSIM [38], VIF [39], VSI [40], CW_SSIM [41], MS_SSIM [42], FSIM [43], DISTS [44], GMSD [45], LPIPS-VGG [46], and NLPD [47]. The results for all metrics (Table 2) show that after the overlay, the image is better in comparison with the separate results for the application of the neural network and interpolation processes.

Table 2.

Evaluation of methods for restoring a “lost” image in a strip artificially cut out on the right side of the image shown in Figure 1a.

3.6. Radiomics and Statistical Analyses

Let us evaluate the features of four groups of images: the original image and three transformed images obtained by the algorithms discussed above, namely the proposed method, MedGA, and SCL-LLE. These evaluations are carried out using radiomics, which is used to extract a large number of features from medical images. Radiomics is also frequently used in the detection of various visually detectable diseases and abnormalities [48,49]. In the present work, the PyRadiomics Python package [50] is used for feature calculations.

The Radiomics values not only indicate the quantitative histogram and texture characteristics of a medical image, but are also used as input data for machine learning [51,52,53]. Therefore, it is important to evaluate these parameters in the converted images in order to assess the degree of change in the original image. Since the method proposed in the work has significantly improved the perception of the picture, it is also necessary that the characteristic features not be “spoiled” in this case.

Let us single out 93 features: 18 first-order statistical descriptors and 75 texture features. Then, we compare the obtained values using one-way analysis of variance (ANOVA) and Tukey’s post hoc honestly significant difference (HSD) test to identify significantly different features [49]. ANOVA is used here to determine whether there are any statistically significant differences between the group of original images and the groups of processed images. In other words, one-way analysis of variance compares the means among groups (in our case, four groups), determining whether at least one of these means is statistically different from the others.

Specifically, it tests the null hypothesis:

where is the group mean and is the group number. If the ANOVA returns a statistically significant result (p-value below 0.05), we accept the alternative hypothesis that there are at least two group means that are statistically significantly different from each other. We then look at radiomics features with p < 0.05 in a one-way ANOVA to find the percentage of radiomics features that differ between at least two groups. The ANOVA does not provide information about which group is significantly different from the others, but only that at least two groups are statistically different. For this reason, the Tukey HSD test must be used. This is a post hoc test based on Student’s t-distribution, which is useful for determining which of the group pairs are significantly different from each other. A parameter for each pair, namely the Tukey HSD Q-statistic, is calculated as follows:

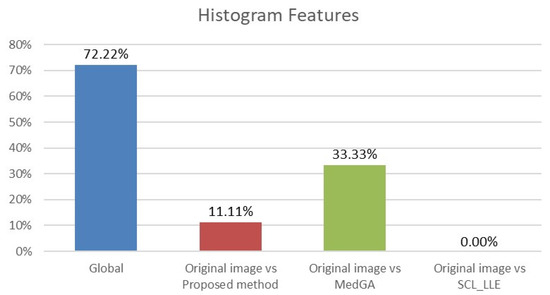

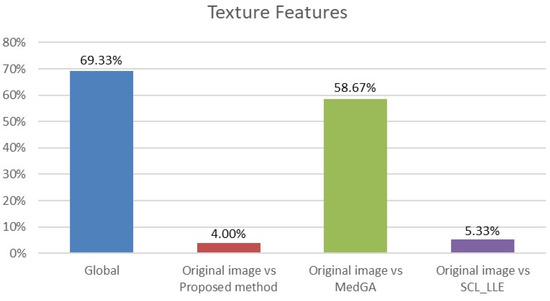

where and are average values of the compared samples; n is the sample size; is the within-group dispersion. Then, the p-value of the comparison of the observed Q-statistic and the Q-critical one is calculated. Finally, radiomics features with Tukey HSD p-values < 0.05 are examined to determine the percentage of features that differ between the original image and the considered approaches. This makes it clear which of the approaches shows a statistically significant difference. The results for histogram features (First-order features) and for texture features are shown in Figure 13 and Figure 14.

Figure 13.

Analysis of ANOVA and HSD tests showing differences in histogram features (18 features) across four groups (blue column) and differences considering pairs of groups: first group vs. second group (red column), first group vs. third group (green column), and first group vs. fourth group (purple column).

Figure 14.

Analysis of ANOVA and HSD tests showing differences in texture features (75 features) across four groups (blue column) and differences across pairs of groups: first group vs. second group (red column), first group vs. third group (green column), and first group vs. fourth group (purple column).
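For a single radiomics feature, the two statistics behind these figures can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions: groups of equal size, the standard one-way ANOVA F-ratio, and the Q-statistic taken as the absolute difference of two group means divided by the square root of the within-group mean square over the group size. The function names are hypothetical, and the p-values (which require the F and studentized range distributions) are not computed; in practice, library routines such as `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd` could be used instead.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, ms_within) for a list of groups of feature values."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(v for g in groups for v in g)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the values around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within, ms_within


def tukey_q(group_i, group_j, ms_within, n):
    """Tukey HSD Q-statistic for one pair of groups with common size n."""
    return abs(mean(group_i) - mean(group_j)) / (ms_within / n) ** 0.5
```

For example, with three groups `[1, 2, 3]`, `[2, 3, 4]`, and `[5, 6, 7]`, the within-group mean square is 1 and the F-ratio is 13.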

Analyzing the data in the histograms, we can conclude that the method proposed in this work leaves practically all of the features without significant changes, except for 5 features. For histogram features, these are the maximum intensity value and the range of intensity spread. For textural features, these are the gray-level size zone matrix (GLSZM) features: short run emphasis (SRE), size-zone non-uniformity normalized (SZNN), and small area emphasis (SAE) features.

4. Discussion

The problem solved in the present study is purely practical. The defect is not significant enough to justify purchasing new mammographs; however, it cannot be technically eliminated. The proposed algorithm improves the images, making them acceptable for analysis by a specialist or for further computer processing.

In the algorithm, when restoring the background of a defect, a neural network trained on third-party data (not mammograms) can be used, since this is sufficient to restore the background. Table 2 shows scores on ten metrics for three algorithms for recovering a lost image. For all metrics, the combined use of interpolation and a neural network gives the best results. Additionally, note that the algorithm can be easily applied to mammograms of any size.

If assessed visually without zooming in, the mammogram reconstructed by the interpolation algorithm (Figure 7a) looks quite contrasty. However, when zoomed in (colored squares in Figure 7a), it becomes clear that this is definitely not enough, and further improvement of the image is needed. A comparison of the proposed algorithm versus other approaches in terms of BRISQUE and NIQE metrics (Table 1) indicates that it provides better results. Adding “restoration” of the band to other approaches will lead only to an insignificant improvement in their metrics shown in Table 1, and will not change the overall score.

In total, 98 radiomics features are extracted from the original and “improved” images. Two differences in histogram features are explained by the presence of an overexposed band in the original image. If the band is excluded from consideration, then only three different textural features remain. We also note that in addition to the visual component, the MedGA method also significantly changes the features of radiomics, as indicated by the values of 33.33% and 58.67% of features that differ from the original image.

5. Conclusions

An algorithm for eliminating defects in a mammogram distorted by equipment is proposed. The mammogram has a defective strip in the form of a sharp change in the intensity of the pixels in this area up to a complete loss of information in certain areas of the strip. In addition, one part of the image has a lower contrast than the other part.

The algorithm combines the following steps:

- Defect highlighting;

- Equalization of image contrast outside the defect;

- Restoration of a defect by a combination of interpolation and artificial neural network.

One of the significant results of this work is an effective combination of two approaches to image restoration. One algorithm restores the background, while the other one restores the “significant” image. The results of the two algorithms are then combined to form a new high-quality image without loss of radiomics features.

Author Contributions

Conceptualization, D.C. and D.T.; methodology, D.T.; software, Z.K. and A.Z.; validation, D.G., Z.K., D.T. and D.C.; formal analysis, D.C.; investigation, Z.K. and A.Z.; resources, D.G.; data curation, D.G., Z.K. and A.Z.; writing—original draft preparation, D.T.; writing—review and editing, D.T.; visualization, Z.K. and A.Z.; supervision, D.C.; project administration, D.T.; funding acquisition, D.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are based on information taken retrospectively from patient case reports.

Acknowledgments

This paper has been supported by the Kazan Federal University Strategic Academic Leadership Program (“PRIORITY-2030”).

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).