Abstract

This paper describes a new open data set, consisting of images of a chessboard collected underwater with different refractive indices, which allows for investigation of the quality of different radial distortion correction methods. The refractive index is regulated by the degree of salinity of the water. The collected data set consists of 662 images, and the chessboard cell corners are manually marked for each image (for a total of nodes). Two different mobile phone cameras were used for the shooting: telephoto and wide-angle. With the help of the collected data set, the practical applicability of the formula for correction of the radial distortion that occurs when the camera is submerged underwater was investigated. Our experiments show that the radial distortion correction formula makes it possible to correct images with high precision, comparable to the precision of classical calibration algorithms. We also show that this correction method is resistant to small inaccuracies in the indication of the refractive index of water. The data set, as well as the accompanying code, are publicly available.

1. Introduction

Various kinds of aberrations occur in optical systems. Therefore, when shooting with a camera other than a pinhole one, distortions such as radial distortion (RD) [1] may appear in the image. RD can be eliminated by calibrating the camera, during which parameters are estimated for subsequent software distortion compensation.

In the air, the refractive index (and, hence, the distortion parameters) barely changes, which allows the camera calibration to be carried out only once to obtain undistorted images. However, after submersion of the camera underwater, the distortion parameters change significantly due to the refraction of light waves when passing through the water–glass–air interfaces.

The exploration of underwater RD correction methods and the study of their characteristics are relevant to many applied scientific fields. Underwater video analytics systems, for instance, are being developed to monitor the population status of forage fish in closed reservoirs [2]. The quality of the RD correction turns out to be crucial for the high-quality performance of such a system. A related problem has been described in the work [3], where a computer vision system was suggested as an alternative to electrofishing for spawning fish “sampling”. The authors noted that an important factor affecting the quality of its operation is proper underwater RD correction. The author of [4] came to similar conclusions in the context of an underwater object tracking task. Furthermore, in the work [5], the authors noted the importance of RD correction for the study of fish behaviour in their natural environment.

The authors of the work [6] focused on a system for autonomous mapping of the ocean floor, and demonstrated a number of experimental results using real and synthetic data. Based on these results, they explicitly showed the strong effect of even small errors in the estimated RD parameters on the quality of the constructed map. The same problem has been faced by scientists during the creation of a system for three-dimensional reconstruction of cultural heritage objects underwater [7]. The task of eliminating RD caused by the submersion of a lens underwater has also arisen in a work devoted to the creation of a visual odometry algorithm for an underwater autonomous vehicle [8].

A study that particularly deserves mentioning is [9], in which the authors encountered an underwater RD calibration problem in the task of improving image aesthetics. Often, to enhance the image, scene depth map restoration is performed; for example, it is necessary to simulate the bokeh effect. The resulting distorted structure of the depth map can, on the contrary, lead to a decrease in aesthetics. Moreover, manufacturers of mobile phones and smartphones are currently extensively switching to the new IP68 [10] standard, with increased requirements for the stability of underwater performance, which has led to growth in the popularity of amateur underwater photography.

The classic approach [11] for underwater RD correction is repeating calibration after submersion, which is a rather expensive and time-consuming process: a set of images of a special calibration object from different angles must be collected, followed by performing an automated search for RD correction parameters, the quality of which is determined by the prepared set of calibration images.

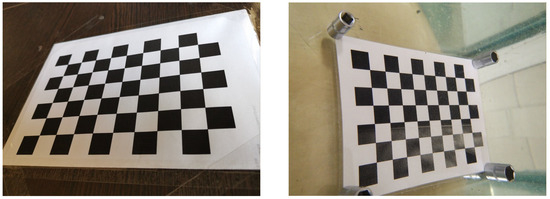

Most modern mobile phone cameras are sufficiently well pre-calibrated for shooting in the air, where RD is typically almost absent. In contrast, when underwater distortion appears, recalibration is required (Figure 1); however, due to the high labour consumption and complexity, its execution is not always justified.

Figure 1. Examples of images made with the same camera in the air (left) and underwater (right).

Although it has been shown in practice that such a shooting protocol allows underwater RD to be eliminated with sufficient quality [12], it is not applicable to every shooting scenario. For example, in amateur photography, under strict limits on the duration of the underwater shooting session, or when the operator’s expertise is limited, such an approach will generally not work. The average mobile phone user is more likely to delete a distorted image than to figure out how to fix it.

The problem of RD correction parameter estimation is also aggravated by the fact that the refractive index may differ in different water reservoirs; consequently, the correction parameters found for some reservoir may not be suitable for another. The same may be true for a single reservoir, if its salinity or the temperature of the water changes [13]. It turns out that either the camera must be recalibrated for every submersion, or the necessity of recalibration must somehow be assessed.

Thus, the question arises: “Is it possible not to recalibrate, but to recalculate the parameters of the RD correction, knowing the refractive index of water?” An answer to this question was first proposed in [14]. In that study, the authors conducted a number of experiments, on the basis of which they derived an empirical formula for radial distortion correction depending on the refractive index. Later, in the work [15], the authors presented similar results, which were obtained theoretically rather than experimentally.

In addition to deriving the RD correction formula, the authors of [15] demonstrated its accuracy on a real image. However, a number of questions remain, the answers to which require a full-fledged experiment:

- How accurate is such analytical calibration?

- How does the accuracy of the initial calibration in the air affect the RD correction quality for this type of water?

- How does the inaccuracy of water refractive index selection affect the RD correction quality?

This work is devoted to answering these questions, thereby assessing the practical applicability of the RD compensation formula proposed in [15].

However, to answer these questions, a data set of underwater images which allows us to numerically assess the quality of distortion correction is required; for example, a set of images of some calibration object with a known structure. We could not find such an open data set. Therefore, a new set of images of a chessboard with manually marked cell corners was assembled, called SWD (Salt Water Distortion), which is described in detail in Section 4 of this work.

The new results of numerical experiments confirming the practical applicability of the RD correction formula are described in Section 5.1 and Section 5.2. Section 5.3 evaluates the quality of the performance of an automatic detector of chessboard cell corners in underwater images, used as a tool to assess the quality of associated algorithms.

2. Reference Calibration Algorithm

In the work [16], it has been shown that the use of the pinhole camera model underwater has some restrictions, as the refractive index underwater depends on the wavelength. However, according to [17], for 19 °C distilled water, the refractive index varies from 1.332 at a wavelength of 656 nm to at a wavelength of 404 nm. As such variation is insignificant, the resulting angular difference in refraction is insignificant. The authors of [2] came to a similar conclusion.

An alternative to using the pinhole camera model is a more complex model, the so-called non-single viewpoint model (nSVP). While all incident rays pass through one point in the traditional camera model, in nSVP they fall on a caustic curve [18]. As the authors of [19] have mentioned, the standard calibration object (chessboard) is not suitable in this case. Instead, a new calibration object was proposed: a perforated lattice, which is illuminated by two different wavelengths of light, forming an exactly known scheme of point light sources.

Thus, as the pinhole camera model is quite accurate, and the complications of the model lead to significant complication of the calibration procedure and the data collection process, only RD correction algorithms based on the classical pinhole camera model were studied in this work.

In this category, there exist many algorithms for RD calibration [20], which can be divided into the following groups:

- Calibration algorithms calculating RD correction parameters based on a series of images of a calibration object; for example, a chessboard [11], a flat object with evenly spaced LED bulbs [21], or an arbitrary flat textured object [22].

- Calibration algorithms based on active vision, calculating the parameters of RD correction from a series of scene images; in this case, information about camera movement is known during calibration [23].

- Self-calibration algorithms that calculate the RD correction parameters by checking the correctness of the epipolar constraint for a series of images of the same scene taken from different angles [24].

- Self-calibration algorithms that calculate camera parameters from a single-shot image [25,26].

As the purpose of this work is to check the formula for RD parameter re-calculation in laboratory conditions, the algorithm described in [11] is considered in our work as the most suitable reference; hereafter, we denote this algorithm by “classic”.

It is worth mentioning that, while the chosen classic algorithm aims to address more complex problems (i.e., not only RD correction), this algorithm is used only as a reference and to have an adequate baseline for quality assessment of the RD correction formula.

3. Methods

3.1. Classic Algorithm for Radial Distortion Correction

The classic algorithm works with a set of images of a flat calibration object with periodic structure (e.g., a chessboard) and known geometric parameters, taken from various angles. In these images, special points are defined (e.g., the nodes of a chessboard). This method is a traditional approach, in which the parameters of the distortion model are calculated simultaneously with the internal parameters of the camera (in the pinhole camera model), by solving a non-linear optimization problem.

The calibration algorithm typically uses a standard distortion model [27] describing radially symmetric distortions. The software implementation of this algorithm in the OpenCV [28] library uses a model in which the transformation of the image point coordinates (in the plane of the pinhole camera screen) is set as follows:

$$x' = x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6), \qquad y' = y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6), \tag{1}$$

where $(x, y)$ denotes the initial image point coordinates; $(x', y')$ denotes the coordinates of this image point after distortion correction; $r^2 = x^2 + y^2$; and $k_1$, $k_2$, $k_3$ are the distortion coefficients.
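A radially symmetric polynomial model of this kind can be illustrated with a few lines of NumPy. This is a minimal sketch, assuming the three-coefficient radial part commonly used in OpenCV (each normalized coordinate is scaled by 1 + k1·r² + k2·r⁴ + k3·r⁶, tangential terms omitted); the function name `apply_radial_model` is hypothetical and is not part of OpenCV or of the accompanying code.

```python
import numpy as np


def apply_radial_model(points, k1, k2=0.0, k3=0.0):
    """Scale normalized points of shape (N, 2) by the radial polynomial factor.

    Assumption: coordinates are given in the plane of the pinhole camera
    screen (i.e. already divided by the focal length and centred on the
    principal point), and only radial terms are modelled.
    """
    pts = np.asarray(points, dtype=float)
    r2 = np.sum(pts**2, axis=1, keepdims=True)  # squared radius per point
    factor = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    return pts * factor
```

With all coefficients equal to zero the transformation is the identity, which is roughly the situation reported later for the in-air images of a pre-calibrated smartphone camera.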

3.2. Formula for Radial Distortion Correction

In [15], it was shown that, with a known refractive index of water, the correction of the distortion occurring when the camera is immersed can be described by converting the coordinates of the image in the plane of the pinhole camera screen, as follows:

$$x_a = \frac{x_w}{\sqrt{n^2 + (n^2 - 1)\, r_w^2}}, \qquad y_a = \frac{y_w}{\sqrt{n^2 + (n^2 - 1)\, r_w^2}}, \tag{2}$$

where $(x_a, y_a)$ and $(x_w, y_w)$ denote the coordinates of the object on the pinhole camera screen when shooting in the air and underwater, respectively; $r_w^2 = x_w^2 + y_w^2$; and n is the refractive index of water.

This formula is obtained by assuming infinitesimal thickness of the material separating water and air.
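The correction can be sketched in code under the assumption just stated, together with the further assumptions of a flat interface perpendicular to the optical axis and a pinhole located at the interface. With Snell's law, sin θ_a = n sin θ_w, a point observed underwater at normalized radius r_w = tan θ_a maps to r_a = tan θ_w = r_w / sqrt(n² + (n² − 1) r_w²). The code below is our reading of that relation, not code taken from [15] or from the SWD repository; the function names and the intrinsic matrix `K` are hypothetical.

```python
import numpy as np


def underwater_to_air(points_w, n):
    """Map normalized underwater points (N, 2) to their in-air positions.

    Assumption: flat, infinitely thin interface with the pinhole on it, so
    that r_a = r_w / sqrt(n^2 + (n^2 - 1) * r_w^2) follows from Snell's law.
    """
    pts = np.asarray(points_w, dtype=float)
    r2 = np.sum(pts**2, axis=1, keepdims=True)
    return pts / np.sqrt(n**2 + (n**2 - 1.0) * r2)


def correct_pixels(pixels, K, n):
    """Apply the correction to pixel coordinates (N, 2).

    K is a 3x3 intrinsic matrix, assumed to come from the in-air calibration.
    """
    K = np.asarray(K, dtype=float)
    px = np.asarray(pixels, dtype=float)
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    # Pixel -> normalized screen coordinates.
    normalized = np.column_stack(((px[:, 0] - cx) / fx, (px[:, 1] - cy) / fy))
    corrected = underwater_to_air(normalized, n)
    # Normalized -> pixel coordinates.
    return np.column_stack((corrected[:, 0] * fx + cx, corrected[:, 1] * fy + cy))
```

Under these assumptions the corrected coordinates shrink by a factor of about 1/n near the principal point, so any comparison of correction errors should be made after normalizing the scale of the corrected points.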

4. Calibration Data Set of Images in Salt Water (SWD)

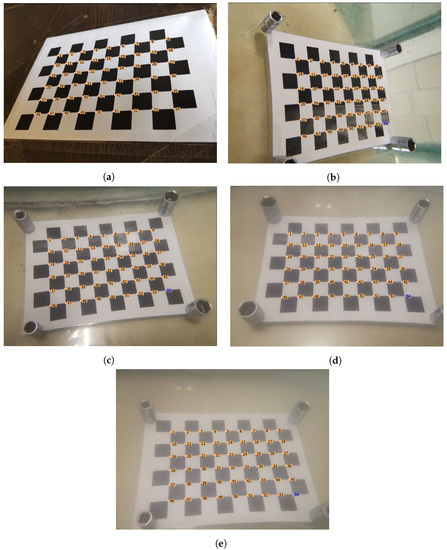

Shooting was conducted using a modern mobile phone (Huawei Mate 20 Pro). The data set was obtained using two cameras: a telephoto (focal length mm) camera and a wide-angle (focal length mm) camera. The image size was the same for both cameras, and was equal to pixels. A centimetre chessboard was used as a calibration object, with a side length per chessboard cell of cm ( cells).

Underwater image collection was carried out by submerging the cameras into an aquarium filled with tap water (salinity less than ). The non-salty water image set contained 48 and 56 images for each camera, correspondingly. Then, the salinity was increased, using table salt, to 13, 27, or , with the required amount of salt having been pre-calculated, as the spatial measurements of the used aquarium were known. The obtained salt water image sets consisted of 34, 86, and 88 images for the telephoto camera and 47, 89, and 80 images for the wide-angle camera, respectively.

The coordinates of the cell corners of the chessboard image for all collected images were marked manually. The coordinates of each point were specified with sub-pixel accuracy and, depending on the situation, either a point in the middle of a pixel, a point on a pixel grid, or a middle point of the border of two adjacent pixels was assigned to the corner pre-image of the chessboard. Examples of images and the used markup are provided in Figure 2. The collected data set is publicly available (https://github.com/Visillect/SaltWaterDistortion (accessed on 11 October 2022)).

Figure 2.

Example images from SWD data set and cell corner markup: (a) Captured in the air; (b) captured underwater (salinity ); (c) captured underwater (salinity ); (d) captured underwater (salinity ); and (e) captured underwater (salinity ).

It is also worth noting the technical difficulties that arose during the collection of the data set, which affected its appearance:

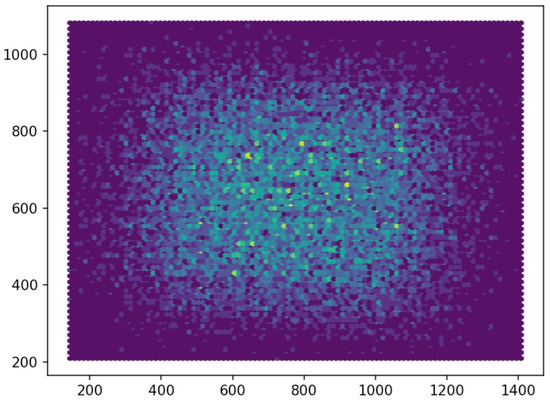

- After the shutter of the smartphone camera was released, the image was processed programmatically from the RAW format; as a result, the final image was cropped in a way that was not displayed on the smartphone screen at the time of shooting. Thus, it was difficult to capture the board at the edge of the image, where the distortion is known to be maximal. An example histogram of the distribution of board cell corners in the original image is shown in Figure 3.

Figure 3. Histogram of the corner distribution over the image area in the SWD data set for the telephoto camera.

- Due to water movement during shutter release and further image processing, many photos turned out cloudy. These photos had to be excluded as, due to the turbidity, some cell corners of the chessboard became indistinguishable.

- With an increase in salinity, the water turbidity also increased, as the salt used was not pure enough and contained impurities.

- Laminated paper with the chessboard image was used for shooting underwater. Because of this, light glare appeared at some angles. In such photos, it became difficult to determine the location of the chess grid corners, and they were also manually excluded.

5. Experimental Results

5.1. Precision of the Correction Formula

In this work, all numerical experiments were carried out on images captured only by a telephoto smartphone camera.

The initial calibration of the smartphone camera was carried out in air, using the classical method with images taken in the air from the SWD data set. Thus, the original RD of the lenses was eliminated, and the associated correction parameters were fixed. For images corrected with these parameters, the RD correction formula with varying index n was applied to the underwater photography. The marked points on the chessboard for all images in the experiment were also re-calculated, according to these parameters, using Formula (1). Based on analysis of the structure of the transformed set of marked points, the precision of the correction was evaluated.

To correctly compare the results of such correction with the classical method, the RD correction parameters underwater were calculated using the classical method for each degree of salinity separately. After that, using the obtained RD correction parameters, the marked points were transformed. Similarly to above, the precision of the correction algorithm was evaluated based on the obtained results.

In this paper, a software implementation presented in the OpenCV [28,29] library was used for the classical calibration method. An example of image correction using both methods is shown in Figure 4.

Figure 4.

Examples of images (from left to right): Taken underwater (salinity ) with parameters for correcting radial distortion when shooting in air; corrected using the classical calibration method; and corrected by Formula (2).

The experiment was carried out the same way for each level of salinity. Optimal distortion correction coefficients were selected through cross-validation (the size of the training set was , and the validation set was ) on the training set (i.e., with the exception of 10 test images).

To assess the quality of the radial distortion effect correction, the structure of the transformed set of marked points was analysed: the better that the points corresponding to one straight line of chessboard corners fit to a straight line, the better the calibration effect. In this paper, two metrics were used to estimate the quality of calibration:

- Metric 1. The standard deviation of the cell corners from the straight line approximating them, determined using the OLS method, was estimated.

- Metric 2. The distance from the straight line constructed through the corners of the chessboard and the most distant cell corner corresponding to it.

The second metric is especially important for quantifying the effect, as it is more likely that the furthest line will be at the border of the image, where RD correction errors are particularly pronounced.
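The two metrics can be sketched as follows for the corners of a single chessboard line. This is a minimal illustration rather than the accompanying code: it assumes that the OLS line is fitted along the axis with the larger extent (so that near-vertical lines are handled), that deviations are measured as perpendicular distances, that the "standard deviation" is the root mean square of those distances, and that Metric 2 uses the same fitted line. All function names are hypothetical.

```python
import numpy as np


def line_deviations(points):
    """Perpendicular distances of points (N, 2) from their OLS line."""
    pts = np.asarray(points, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    # Regress along the dominant axis so near-vertical lines stay well-posed.
    if np.ptp(x) < np.ptp(y):
        x, y = y, x
    slope, intercept = np.polyfit(x, y, 1)
    return np.abs(slope * x - y + intercept) / np.hypot(slope, 1.0)


def metric_1(points):
    """RMS deviation of the corners from the fitted line."""
    d = line_deviations(points)
    return float(np.sqrt(np.mean(d**2)))


def metric_2(points):
    """Distance from the fitted line to the most distant corner."""
    return float(np.max(line_deviations(points)))
```

For perfectly collinear corners both values are zero; residual curvature after correction shows up first in `metric_2`, since it is driven by the single worst corner.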

For each set of images corresponding to different salinity indices, four errors were calculated:

- M1. The average value of metric 1 on the entire set of images for all lines.

- M2. The maximum value of metric 1 on the entire set of images among all lines.

- M3. The average value of metric 2 on the entire set of images for all lines.

- M4. The average value of metric 2 on the entire set of images for the most distant lines (for each image, one such line was chosen).

The point coordinates were multiplied by the same number, such that the length of the largest chessboard side for each image was 1000 pixels. This normalization was carried out to eliminate the influence of the scaling factor when correcting the image. The results of the experiment are presented in Table 1.
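The normalization step can be illustrated with a short sketch. It assumes that the marked corners of one image are stored as a grid of shape (rows, cols, 2) and that the "largest chessboard side" is the longest of the four sides spanned by the outer marked corners; both the storage layout and the function name `normalize_board` are assumptions made for illustration.

```python
import numpy as np


def normalize_board(corners, target=1000.0):
    """Scale a (rows, cols, 2) corner grid so its longest side equals target."""
    grid = np.asarray(corners, dtype=float)
    top_left, top_right = grid[0, 0], grid[0, -1]
    bottom_left, bottom_right = grid[-1, 0], grid[-1, -1]
    sides = [
        np.linalg.norm(top_right - top_left),
        np.linalg.norm(bottom_right - bottom_left),
        np.linalg.norm(bottom_left - top_left),
        np.linalg.norm(bottom_right - top_right),
    ]
    return grid * (target / max(sides))
```

Because every coordinate of an image is multiplied by the same factor, straight lines stay straight and only the units of the resulting errors change.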

Table 1. Comparison of the precision of radial distortion correction using the classical method and Formula (2).

It can be concluded from the table that the errors in the air after applying the classical method differed only by a few hundredths of a pixel from the errors before applying the method, which is reasonable as most cameras (and especially smartphone cameras) are designed and optimised for shooting in the air. At the same time, errors in water at all levels of salinity, after applying the classical calibration method and the correction Formula (2), were much lower than the errors obtained before RD correction. The correction precision of both methods was comparable; in some cases, the correction error using Formula (2) turned out to be even lower than that obtained when using the classical method. From this, we can conclude that the proposed formula is applicable for the correction of RD that occurs when a camera is submerged underwater.

5.2. Dependence of the Correction Precision on Salinity

As is well-known, the refractive index of water is affected by salinity and temperature [13]. In this paper, to conduct experiments with different refractive indices, the degree of salinity of water at room temperature was varied. The salinity of water is easier to control technically; moreover, it influences the refractive index of water more significantly.

Four sets of underwater images with a telephoto camera from the SWD data set collected in water with different salinity levels were used for the experiment. The refractive index corresponds to distilled water, while corresponds to saline solution (i.e., the salinity of the Dead Sea) [30]. The and salt solutions corresponded to and , respectively.

For each of these sets, correction was performed using Formula (2) with different values of the specified refractive index. The results of the experiments are presented in Table 2. The experiment showed that even significant changes in the salinity index only slightly affected the precision of the final correction; namely, the precision did not change by more than .

Table 2. Dependence of RD correction error when using Formula (2) on the accuracy of specifying the water refraction index.

It should also be noted that, for all experiments, the error increased with an increase in the refractive index. This was due to the imperfection of the image normalization method: with an increase in the refraction parameter, the degree of image distortion increases, which leads to an increase in the final error when normalizing the largest chessboard side to a size of a thousand pixels.

From the results of this experiment, it can be concluded that the correction of radial distortion by Formula (2) with a refractive index of provides acceptable precision, in most cases.

5.3. Quality of the Automatic Chessboard Cell Corner Detector

To automatically assess the quality of the RD correction algorithms, it was necessary to be able to programmatically search for cell corners in the calibration object (i.e., the chessboard). This feature was provided by the findChessboardCorners function in the OpenCV library. A logical question arises: “Is it possible to use this function to assess the quality of correction in experiments with underwater images without using a data set?”.

To answer this question, it was considered sufficient to compare the detection result with the marked points in the SWD data set. For each image, the largest Euclidean distance between a pair of corresponding points was estimated. The comparison results are presented in Table 3.
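A comparison of this kind can be sketched as follows. The helper names are hypothetical, and two assumptions are made: the manual markup is available as an array of corner coordinates in the same row-major order that the detector uses, and the detector may return the corners in reversed order, so both orderings are tried. Only `cv2.findChessboardCorners` itself is an OpenCV function; OpenCV is imported inside `detect_corners` so that the comparison helper works without it.

```python
import numpy as np


def detect_corners(gray, pattern_size):
    """Detect inner chessboard corners; returns an (N, 2) array or None.

    pattern_size is (corners per row, corners per column), as in OpenCV.
    """
    import cv2  # imported here so max_corner_error stays usable without OpenCV

    found, corners = cv2.findChessboardCorners(gray, pattern_size)
    return corners.reshape(-1, 2) if found else None


def max_corner_error(detected, marked):
    """Largest Euclidean distance between corresponding corners."""
    det = np.asarray(detected, dtype=float).reshape(-1, 2)
    ref = np.asarray(marked, dtype=float).reshape(-1, 2)
    if det.shape != ref.shape:
        raise ValueError("detected and marked corner counts differ")
    direct = np.linalg.norm(det - ref, axis=1).max()
    # Assumption: the detector may enumerate the board from the opposite end.
    reverse = np.linalg.norm(det[::-1] - ref, axis=1).max()
    return float(min(direct, reverse))
```

Images on which the detector fails entirely (a `None` result) have to be counted separately, as they contribute no distance at all.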

Table 3. Accuracy evaluation of chessboard cell corner detection using the OpenCV library findChessboardCorners function.

The results clearly demonstrate that the accuracy of the cell corner detector was significantly lower than the accuracy of correction. Large values in the column with maximum errors indicate that there were outliers that made at least the M2 and M4 metrics uninformative. Finally, the results in the table indicate that, with increasing salinity and turbidity of the water, the reliability of this measurement method decreases.

6. Conclusions

In this article, we described a new open data set for evaluating the accuracy of underwater radial distortion calibration algorithms under different refractive indices. The data set consists of 662 images of a chessboard collected with two different cameras, with the location of cell corners marked manually.

Based on the collected data set, a number of experiments were conducted to assess the practical applicability of a radial distortion correction formula when the camera is submerged underwater. According to the experimental results, the precision of RD correction using the formula was not inferior to a full-fledged calibration procedure for specific operating conditions. We also showed that the inaccuracy of specifying the refractive index of water does not significantly affect the precision of the correction and, so, it can be set equal to .

Thus, this article experimentally confirmed that the use of the radial distortion correction formula not only significantly simplifies and reduces the cost of operating a camera underwater, but also maintains the calibration accuracy at a sufficient level.

Author Contributions

Data collection and preparation, software, research and experiments, visualization, writing, and editing: D.S.; review and editing: D.P.; supervision, review, and editing: E.E.; resources and writing: I.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Russian Foundation for Basic Research (19-29-09075).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data set, as well as the accompanying code for all of the experiments described in the article, are publicly available at https://github.com/Visillect/SaltWaterDistortion (accessed on 14 October 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Conrady, A.E. Decentred Lens-Systems. Mon. Not. R. Astron. Soc. 1919, 79, 384–390.

2. Shortis, M. Camera Calibration Techniques for Accurate Measurement Underwater. Sensors 2015, 15, 30810–30826.

3. Ellender, B.; Becker, A.; Weyl, O.; Swartz, E. Underwater video analysis as a non-destructive alternative to electrofishing for sampling imperilled headwater stream fishes. Aquat. Conserv. Mar. Freshw. Ecosyst. 2012, 22, 58–65.

4. Pavin, A.M. Identifikatsiya podvodnykh ob”ektov proizvol’noi formy na fotosnimkakh morskogo dna [Identification of underwater objects of any shape on photos of the sea floor]. Podvodnye Issledovaniya i Robototekhnika [Underw. Res. Robot.] 2011, 2, 26–31.

5. Somerton, D.; Glendhill, C. Report of the National Marine Fisheries Service Workshop on Underwater Video Analysis. In Proceedings of the National Marine Fisheries Service Workshop on Underwater Video Analysis, Seattle, WA, USA, 4–6 August 2004.

6. Elibol, A.; Möller, B.; Garcia, R. Perspectives of auto-correcting lens distortions in mosaic-based underwater navigation. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–6.

7. Skarlatos, D.; Agrafiotis, P. Image-Based Underwater 3D Reconstruction for Cultural Heritage: From Image Collection to 3D. Critical Steps and Considerations. In Visual Computing for Cultural Heritage; Springer: Cham, Switzerland, 2020; pp. 141–158.

8. Botelho, S.; Drews-Jr, P.; Oliveira, G.; Figueiredo, M. Visual odometry and mapping for Underwater Autonomous Vehicles. In Proceedings of the 6th Latin American Robotics Symposium (LARS 2009), Valparaiso, Chile, 29–30 October 2009; pp. 1–6.

9. Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. arXiv 2018, arXiv:1811.01343.

10. Degrees of Protection Provided by Enclosures (IP Code). International Standard IEC 60529:1989+AMD1:1999+AMD2:2013. 2013. Available online: https://webstore.iec.ch/publication/2452 (accessed on 1 April 2022).

11. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

12. Heikkila, J. Geometric Camera Calibration Using Circular Control Points. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1066–1077.

13. Quan, X.; Fry, E.S. Empirical equation for the index of refraction of seawater. Appl. Opt. 1995, 34, 3477–3480.

14. Lavest, J.M.; Rives, G.; Lapresté, J.T. Underwater Camera Calibration; Springer: Berlin/Heidelberg, Germany, 2000; pp. 654–668.

15. Konovalenko, I.; Sidorchuk, D.; Zenkin, G. Analysis and Compensation of Geometric Distortions, Appearing when Observing Objects under Water. Pattern Recognit. Image Anal. 2018, 28, 379–392.

16. Sedlazeck, A.; Koch, R. Perspective and Non-perspective Camera Models in Underwater Imaging—Overview and Error Analysis. In Advances in Computer Communication and Computational Sciences; Springer: Berlin/Heidelberg, Germany, 2012; pp. 212–242.

17. Daimon, M.; Masumura, A. Measurement of the refractive index of distilled water from the near-infrared region to the ultraviolet region. Appl. Opt. 2007, 46, 3811–3820.

18. Huang, L.; Zhao, X.; Huang, X.; Liu, Y. Underwater camera model and its use in calibration. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 1519–1523.

19. Yau, T.; Gong, M.; Yang, Y.H. Underwater Camera Calibration Using Wavelength Triangulation. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2499–2506.

20. Long, L.; Dongri, S. Review of Camera Calibration Algorithms. In Advances in Computer Communication and Computational Sciences; Springer: Singapore, 2019; pp. 723–732.

21. Zhang, Y.; Zhou, F.; Deng, P. Camera calibration approach based on adaptive active target. In Proceedings of the Fourth International Conference on Machine Vision (ICMV 2011), Singapore, 9–10 December 2012; Volume 8350, p. 83501G.

22. Brunken, H.; Gühmann, C. Deep learning self-calibration from planes. In Proceedings of the Twelfth International Conference on Machine Vision (ICMV 2019), Amsterdam, The Netherlands, 16–18 November 2019; Volume 11433, p. 1114333L.

23. Duan, Y.; Ling, X.; Zhang, Z.; Liu, X.; Hu, K. A Simple and Efficient Method for Radial Distortion Estimation by Relative Orientation. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6840–6848.

24. Lehtola, V.; Kurkela, M.; Ronnholm, P. Radial Distortion from Epipolar Constraint for Rectilinear Cameras. J. Med. Imaging 2017, 3, 8.

25. Kunina, I.; Gladilin, S.; Nikolaev, D. Blind radial distortion compensation in a single image using fast Hough transform. Comput. Opt. 2016, 40, 395–403.

26. Xue, Z.; Xue, N.; Xia, G.S.; Shen, W. Learning to calibrate straight lines for fisheye image rectification. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1643–1651.

27. Brown, D.C. Decentering distortion of lenses. Photogramm. Eng. Remote Sens. 1966, 32, 444–462.

28. Open Source Computer Vision Library. Available online: https://opencv.org (accessed on 7 July 2020).

29. OpenCV Documentation: Camera Calibration and 3D Reconstruction. Available online: https://docs.opencv.org/2.4/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html (accessed on 7 July 2020).

30. CRC Handbook. CRC Handbook of Chemistry and Physics, 85th ed.; CRC Press: Boca Raton, FL, USA, 2004; pp. 8–71.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).