1. Introduction

Image enhancement is the process of improving the quality of an image for both human viewers and other automated image processing techniques [1]. Although there are many books that discuss the techniques for image enhancement for grayscale images, the generalization of these techniques to color images is not straightforward because color images have more factors to be considered than grayscale images [2], and those factors, e.g., hue, saturation and lightness, are mutually dependent. This situation makes it more challenging to enhance color images than grayscale ones.

The performance evaluation of image-enhancement techniques has also been a main topic in the field of image processing research and development. For example, Puniani and Arora used two quantitative measures: the contrast improvement index (CII) and Tenengrad measure [3], both of which focus on the local contrast of target images. However, the quality of color images is not evaluated by contrast only. Huang et al. [4] used two color image quality metrics, the color naturalness index (CNI) and color colorfulness index (CCI), based on luminance, hue and saturation, to demonstrate that the closer CNI is to 1 in the range $[0, 1]$, the more natural the color of an image, and the best range for CCI is between 16 and 20, according to [5]. These results show the mutual dependence of color attributes, and the relationship between saturation and colorfulness. When the luminance or intensity is predetermined by an appropriate contrast enhancement technique, which is the case that we discuss in this paper, we can improve the colorfulness by increasing saturation while preserving hue.

Color image enhancement is one of the main issues in digital image processing for human visual systems and computer vision. A color has three attributes: hue, saturation and intensity (or lightness), which are modified by color image enhancement. Among the three attributes of a color, hue determines the appearance of the color. Therefore, it is often preferable to preserve hue in the process of color image enhancement. A typical way of preserving hue is color space conversion, i.e., we convert an RGB (red, green and blue) color, which is a typical format for storing colors in computers, into the HSI (hue, saturation and intensity) color, then modify saturation and intensity, and finally return the modified HSI color to the original RGB color space. However, such a naive procedure may cause a gamut problem in that the modified color may be beyond the color gamut. A gamut problem, which is also called the out-of-gamut problem [6], is a common problem in color image processing, where color space transformations between an RGB color space and other color spaces compatible with human perception are utilized. Different color spaces or color coordinate systems have a different color gamut, and color image processing in each color space is independent of other color spaces. Therefore, the domain and range of gamut mapping do not match generally, which may influence the results of color image processing. For example, Yang and Kwok proposed a gamut clipping method for avoiding such out-of-gamut problems [6]. However, clipping any value of color attributes may cause the degeneration of different colors into the same color.

To overcome the gamut problem, Naik and Murthy proposed a scheme for hue-preserving color image enhancement without a gamut problem [2], which was surveyed by Bisla [7]. Han et al. also presented an equivalent method to theirs from the point of view of 3D color histogram equalization [8]. However, as Naik and Murthy pointed out, their scheme always decreases the saturation in a common condition [2]. To alleviate such a decrease in saturation, Yang and Lee proposed a modified hue-preserving gamut mapping method, which outputs the color with a higher saturation than that given by Naik and Murthy’s method [9]. Yang and Lee’s method partitions the range of luminance, which is a normalized intensity, into three parts, corresponding to dark, middle and bright colors. For a dark or bright color, their method first enhances the saturation, and then applies Naik and Murthy’s method to the enhanced color. However, for a color with the luminance in the middle range, Yang and Lee’s method is the same as that of Naik and Murthy. Therefore, we cannot improve the saturation of such colors, even if we adopt the method of Yang and Lee. Yu et al. proposed a method for improving the saturation, using three bisecting planes of an RGB color cube, and demonstrated that their method achieves higher saturation, compared with the methods of Naik and Murthy, and Yang and Lee [10]. Park and Lee proposed a piecewise linear gamut mapping method, which balances color saturation while avoiding color clipping [11].

In this paper, we propose a method for hue-preserving saturation improvement by eliminating the middle part, where Yang and Lee’s method is the same as that of Naik and Murthy, which decreases the saturation. As a result, we can minimize the decrease in saturation caused by hue-preserving color image enhancement. We assume that the hue, saturation and intensity are defined in the HSI color model [12]. We first compute the target intensities for all pixels by histogram equalization (HE) [13] or histogram specification (HS), which is also called histogram matching [14], where an RGB color cube-based method [15] is adopted for further improvement of saturation. Then, we project each color in a given input image onto a surface in an RGB color space, which bisects the RGB color cube into dark and bright parts. The surface spreads to the edges of the RGB color cube far from the center of the cube, which denotes a neutral gray point. Therefore, the projection of an RGB color point onto the surface can increase the saturation. After that, we move the saturation-improved color to the final color point, corresponding to the target intensity. In our experiment, we compare the proposed method with those of Naik and Murthy, and Yang and Lee, and demonstrate the effectiveness of the proposed method.

In this work, we address the research question of how to maximize the color saturation in the context of hue-preserving color image enhancement. The reason why we want to preserve the original hue is that we want to keep the image contents unchanged, before and after the process of color image enhancement. For example, if red becomes green by a hue-changing color image enhancement, then a red apple will become a green one, which will alter the appearance of the image greatly. In order to keep the appearance unchanged, we consider it important for the color image enhancement to preserve the hue. Previous works had subregions in an RGB color space in which the colors always fade by the enhancement procedure. To avoid the effect of color fading, we try to eliminate the subregion for maximizing the effect of the saturation improvement. This elimination of the subregion is our main contribution.

The rest of this paper is organized as follows: Section 2 summarizes the histogram manipulation methods used in the following sections. Section 3 summarizes Naik and Murthy’s method and that of Yang and Lee for hue-preserving color image enhancement, and proposes a method for improving the saturation of Yang and Lee’s method by avoiding the direct use of Naik and Murthy’s method in the middle range of the intensity axis. Section 4 shows our experimental results, which are discussed in Section 5. Finally, Section 6 concludes this paper.

2. Histogram Manipulation

Let $P = [\mathbf{p}_{ij}]$ be an RGB (red, green and blue) color image for $i = 1, 2, \dots, m$ and $j = 1, 2, \dots, n$, where $\mathbf{p}_{ij} = [r_{ij}, g_{ij}, b_{ij}]^T$ denotes the RGB color vector of a pixel at the two-dimensional point $(i, j)$ on the image $P$ with $m$ rows and $n$ columns of pixels, and the superscript $T$ denotes the matrix transpose. Assume that $P$ is a 24-bit true color image; then, every element of $\mathbf{p}_{ij}$ is an integer between 0 and 255 such that $r_{ij}, g_{ij}, b_{ij} \in \{0, 1, \dots, 255\}$, i.e., we equally assign 8 bits to each element to express $2^8 = 256$ levels. The intensity of $\mathbf{p}_{ij}$ is defined by $l_{ij} = r_{ij} + g_{ij} + b_{ij}$, from which we have $0 \le l_{ij} \le 765$ for all $i$ and $j$. Instead of the intensity, LHS (luminance, hue and saturation) and YIQ (luminance (Y), in-phase and quadrature components in the NTSC (National Television System Committee) color space) color spaces define the luminance $L$ or the Y component $Y$: $L = Y = 0.299r + 0.587g + 0.114b$ for a normalized RGB color vector $[r, g, b]^T$ with $r, g, b \in [0, 1]$. Both of them can be expressed as the inner product of a constant vector and an RGB color vector, and are proportional to the length of the RGB color vector. Therefore, they have essentially equivalent information.

In this section, we briefly summarize two histogram manipulation (HM) methods: histogram equalization (HE) and histogram specification (HS).

2.1. Histogram Equalization

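Histogram equalization (HE) is an effective method for contrast enhancement as used by Naik and Murthy [2]. The histogram of the intensity image of $P$ can be equalized as follows. Let $\mathbf{h} = [h_0, h_1, \dots, h_{765}]$ be the histogram over the intensity levels $0, 1, \dots, 765$ (the sums of three 8-bit components); then, the $l$th element of $\mathbf{h}$ is given by $h_l = \sum_{i=1}^{m} \sum_{j=1}^{n} \delta_{l, l_{ij}}$, where $l_{ij}$ is the intensity of the pixel at $(i, j)$ and $\delta_{l, l'}$ denotes the Kronecker delta defined by $\delta_{l, l'} = 1$ if $l = l'$, and 0 if $l \neq l'$. Next, let $\mathbf{H} = [H_0, H_1, \dots, H_{765}]$ be the cumulative histogram of $\mathbf{h}$; then, the $l$th element of $\mathbf{H}$ is given by $H_l = \sum_{k=0}^{l} h_k$. Then, HE transforms an intensity $l_{ij}$ into the following:

$$l'_{ij} = \mathrm{round}\left(765\,\frac{H_{l_{ij}}}{H_{765}}\right), \quad (1)$$

where the ‘round’ operator rounds a given argument toward the nearest integer, and $H_{765} = mn$.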
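The HE mapping can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes that the intensity is the sum of three 8-bit components (so the largest level is 765), and the function name `equalize_intensity` and the round-half-up rule are choices made here for the example.

```python
import math


def equalize_intensity(intensities, max_level=765):
    """Histogram-equalize a flat list of integer intensities in [0, max_level].

    max_level=765 assumes intensity = r + g + b for 8-bit components.
    """
    # Histogram: hist[l] counts the pixels whose intensity equals l.
    hist = [0] * (max_level + 1)
    for l in intensities:
        hist[l] += 1

    # Cumulative histogram: cum[l] = hist[0] + ... + hist[l].
    cum = []
    running = 0
    for count in hist:
        running += count
        cum.append(running)

    # Map every intensity through the normalized cumulative histogram and
    # round to the nearest integer (half-up; a choice made for this sketch).
    n_pixels = len(intensities)
    return [math.floor(max_level * cum[l] / n_pixels + 0.5) for l in intensities]


equalized = equalize_intensity([10, 10, 10, 200])
```

Here three of the four pixels share the darkest level, so that level is pushed up to three quarters of the available range, while the brightest pixel is mapped to the maximum level.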

2.2. Histogram Specification

Histogram specification (HS) assumes that a target histogram is given, and transforms the original histogram into the target one. Let

be a target histogram into which we want to transform the original histogram of intensity, and let

be the cumulative histogram of

, i.e., the

th element of

is given by

. Then, HS transforms an intensity

into the following:

As a reasonable choice for the target histogram, we have proposed an RGB color cube-based method as follows [

15]: We compute the area of the cross section of a normalized RGB color cube

and an equi-intensity plane

, where

,

, and

denotes the Euclidean norm, as a function of

:

from which the target histogram is given by the following:

for

. We also have the integral of

as follows:

from which the target cumulative histogram is given by the following:

for

. This method regards the area of the cross section between the color cube and a plane perpendicular to the intensity axis as the value of the target histogram. Since the wider the cross section becomes, the more saturated colors it contains, it is expected that HS with the obtained target histogram can produce saturation-enhanced images.
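To make the idea concrete, the sketch below computes the area of the cross section of the unit cube cut by a plane $r + g + b = l$ and uses it as a target histogram for HS. It is a hedged illustration only: the parameterization of the plane by $l \in [0, 3]$, the sampling of 766 levels, the nearest-from-above matching rule and all function names are assumptions made here, and the normalization in the referenced method may differ.

```python
import bisect
import itertools
import math


def cross_section_area(l):
    """Area of the cut of the unit cube [0, 1]^3 by the plane r + g + b = l."""
    c = math.sqrt(3) / 2
    if l <= 0 or l >= 3:
        return 0.0
    if l <= 1:
        return c * l * l                      # growing triangle near black
    if l <= 2:
        return c * (-2 * l * l + 6 * l - 3)   # hexagon in the middle
    return c * (3 - l) ** 2                   # shrinking triangle near white


def normalized_cumulative(values):
    """Cumulative sums divided by the total, so the last entry is 1.0."""
    cum = list(itertools.accumulate(values))
    return [c / cum[-1] for c in cum]


def specify_intensity(intensities, max_level=765):
    """Match the intensity histogram to the cube cross-section profile."""
    hist = [0] * (max_level + 1)
    for l in intensities:
        hist[l] += 1
    source_cdf = normalized_cumulative(hist)
    target_hist = [cross_section_area(3.0 * k / max_level)
                   for k in range(max_level + 1)]
    target_cdf = normalized_cumulative(target_hist)
    # For each input level, take the first target level whose cumulative
    # value reaches the source cumulative value (assumed matching rule).
    return [min(bisect.bisect_left(target_cdf, source_cdf[l]), max_level)
            for l in intensities]


specified = specify_intensity([0, 100, 100, 400, 765])
```

The area peaks at $l = 1.5$, where the cut is a regular hexagon, so the target histogram concentrates pixels around mid intensities, where the most saturated colors are available.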

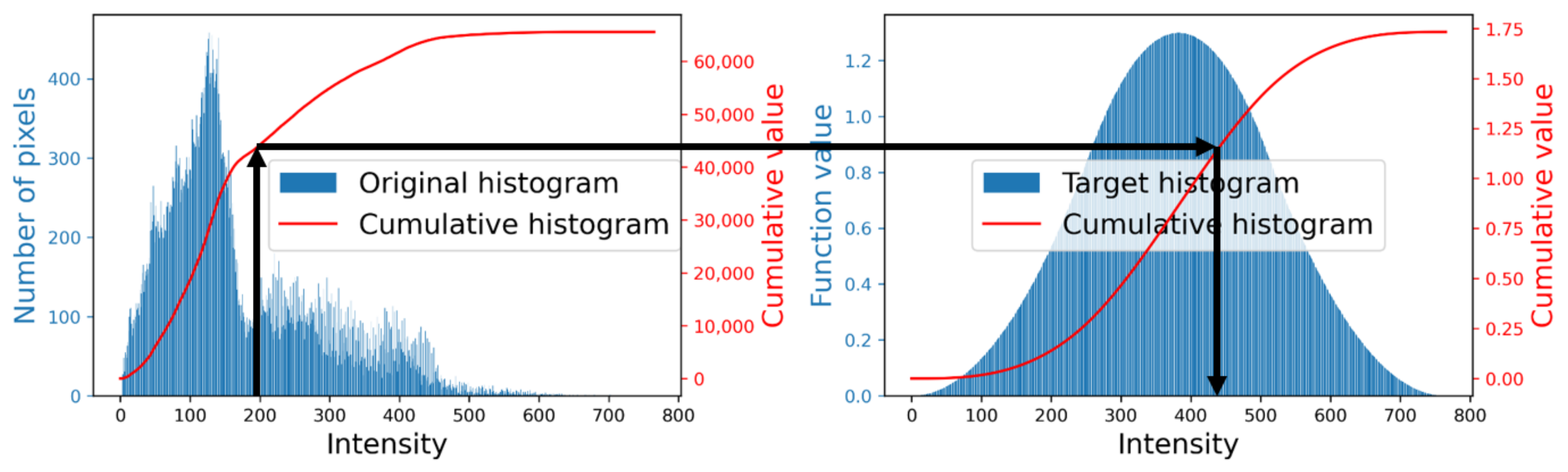

Figure 1 illustrates the procedure of HS based on this method. In this figure, the left graph shows an original intensity histogram (the blue bars) of a color image shown in Figure 2a and its cumulative version (the red curve), and the right graph shows the target histogram (the blue bars) in (4) and its cumulative version (the red curve) in (6). We first extend an arrow upward from a point on the horizontal axis of the left graph, which denotes an input intensity, to the red curve. Then we turn right, and move to the right graph to find the intersection point with another red curve. Finally, we go down from the intersection point to the horizontal axis of the right graph, on which the indicated point gives the output intensity.

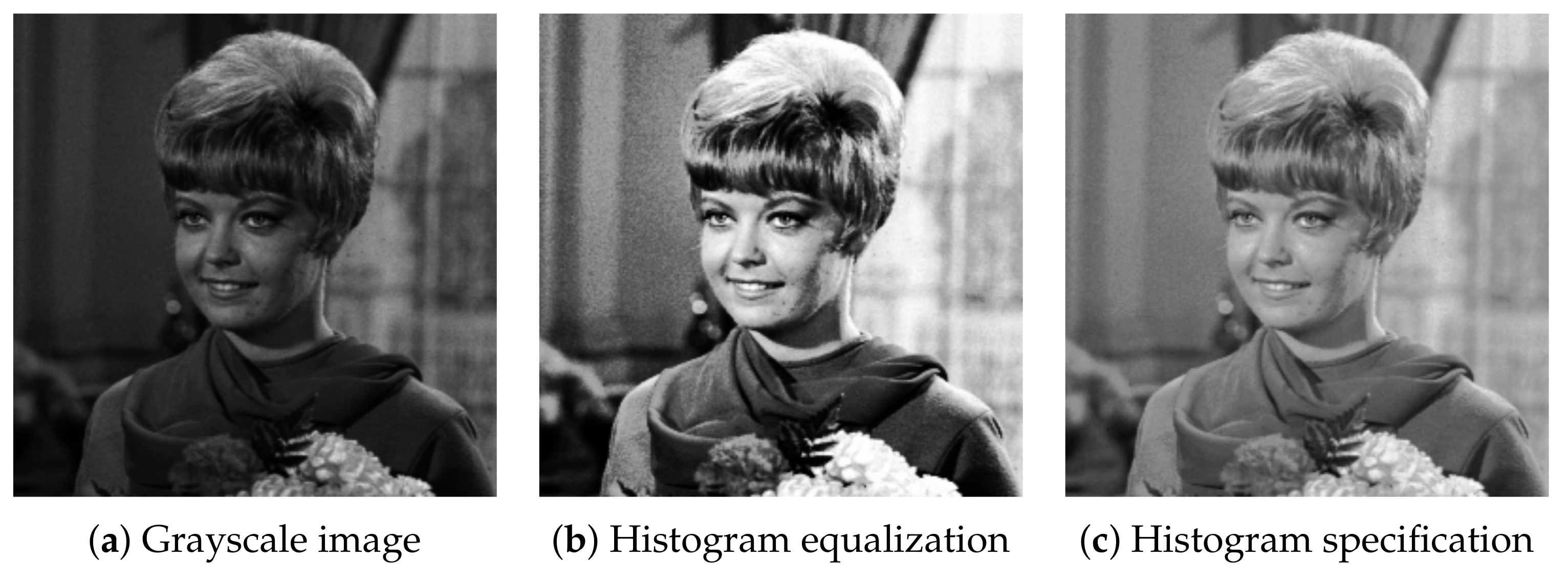

Figure 3 shows an example of HM for a grayscale image, where the original image in Figure 3a, which is in the standard image database (SIDBA) [16], is slightly dark. HE in Figure 3b enhances the contrast; however, an excessive enhancement makes some details invisible, e.g., a leaf on the girl’s chest is assimilated into her scarf. On the other hand, HS in Figure 3c enhances the overall details moderately.

3. Hue-Preserving Color Image Enhancement

A straightforward way of applying HM of an intensity image to the corresponding color image may be to rescale all colors to adjust their intensities to targets as follows:

where

and

denote the original and target intensities, respectively. However, this may cause a gamut problem when

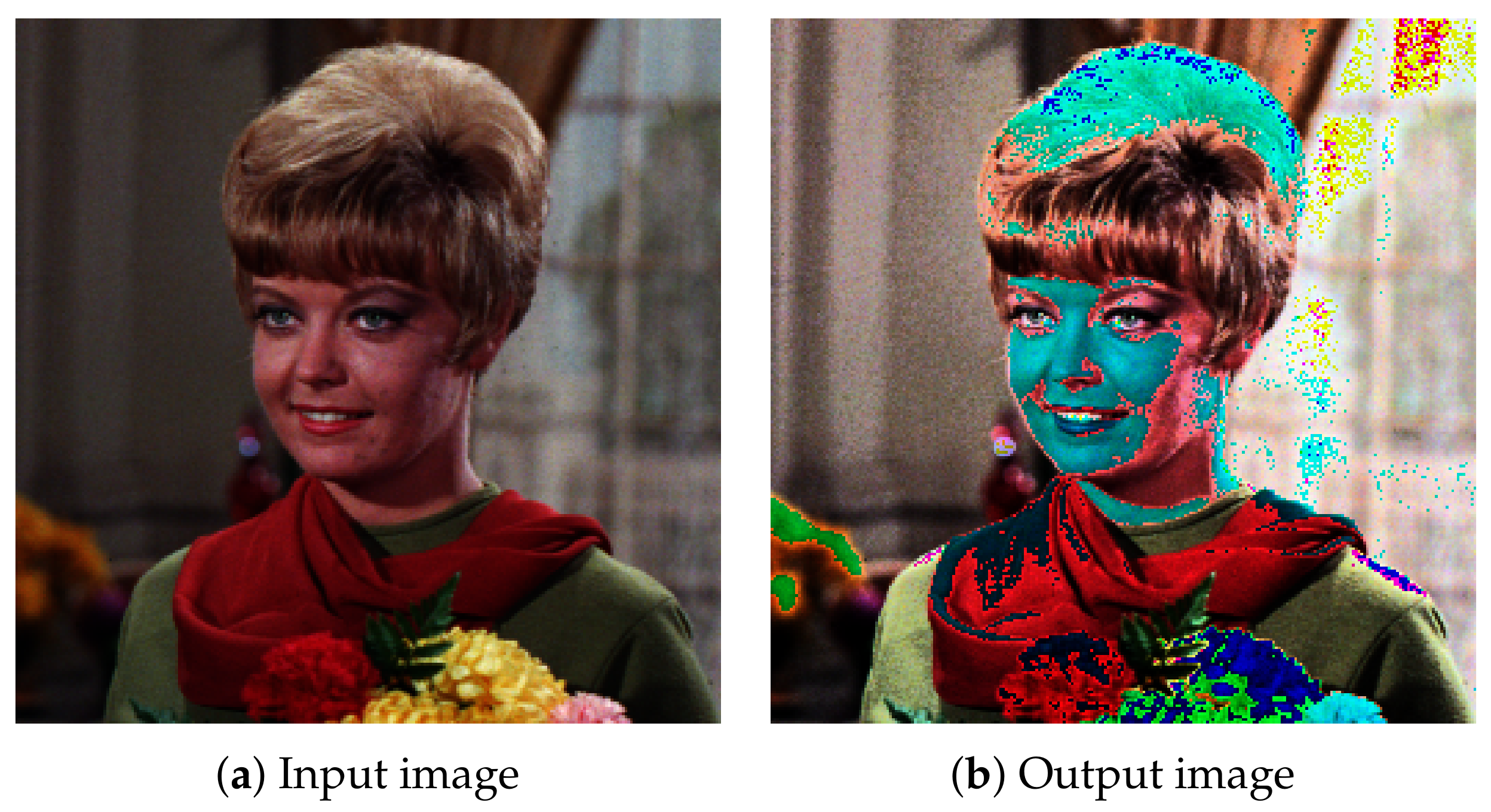

goes out of the range of color gamut. For example, a color image in

Figure 2a is broken by the method in (

7) with

computed by HE as shown in

Figure 2b, where the computed color values are simply saved with the ‘Image.save’ function in Python Image Library (PIL).
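A small numerical example shows how the plain rescaling leaves the gamut. The sketch assumes normalized RGB components in $[0, 1]$ and intensity defined as their sum; the function name `rescale_to_target` and the sample color are hypothetical.

```python
def rescale_to_target(p, target):
    """Scale a normalized RGB color so that r + g + b equals `target`."""
    l = sum(p)
    return [c * target / l for c in p]


color = [0.9, 0.3, 0.0]                   # intensity 1.2
scaled = rescale_to_target(color, 2.4)    # doubles every component
out_of_gamut = any(c > 1.0 for c in scaled)
```

The target intensity is reached, but the red component is pushed well above 1, so the color can no longer be stored without clipping.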

3.1. Naik and Murthy’s Method

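To avoid the above gamut problem, Naik and Murthy proposed a method that ensures that the modified colors always go into the color gamut as follows [2]: Let $\mathbf{p} = [r, g, b]^T$ be an RGB color vector in a normalized RGB color cube $[0, 1]^3$ for notational simplicity, and assume that a target intensity $l'$ is given. Then, we compute the intensity of $\mathbf{p}$ as $l = \mathbf{1}^T\mathbf{p} = r + g + b$, where $\mathbf{1} = [1, 1, 1]^T$, and compare it with $l'$. If $l' \le l$, then (7) is adopted without a gamut problem because $l'/l \le 1$ ensures that $\mathbf{p}' = (l'/l)\,\mathbf{p} \in [0, 1]^3$.

On the other hand, if $l' > l$, then (7) may cause a gamut problem. To avoid this, we transform $\mathbf{p}$ into the corresponding CMY (cyan, magenta and yellow) color vector as $\mathbf{q} = \mathbf{1} - \mathbf{p}$. The intensity of $\mathbf{q}$ is given by $\mathbf{1}^T\mathbf{q} = 3 - l$. Similarly, the target intensity in the CMY color space is given by $3 - l'$. Then we compute the modified CMY color vector $\mathbf{q}' = \frac{3 - l'}{3 - l}\,\mathbf{q}$, which does not cause any gamut problem in the CMY color space because $3 - l' < 3 - l$ or $(3 - l')/(3 - l) < 1$. Finally, we transform $\mathbf{q}'$ back into the RGB color vector as the following:

$$\mathbf{p}' = \mathbf{1} - \mathbf{q}' = \mathbf{1} - \frac{3 - l'}{3 - l}\,(\mathbf{1} - \mathbf{p}). \quad (8)$$

Naik and Murthy’s method is summarized in Algorithm 1.

| Algorithm 1 Naik and Murthy’s method |

- Require: RGB color vector $\mathbf{p}$, target intensity $l'$
- Ensure: Modified RGB color vector $\mathbf{p}'$
- 1: Compute the intensity of $\mathbf{p}$ as $l = \mathbf{1}^T\mathbf{p}$;
- 2: if $l' \le l$ then
- 3: $\mathbf{p}' = (l'/l)\,\mathbf{p}$;
- 4: else
- 5: $\mathbf{p}' = \mathbf{1} - \frac{3 - l'}{3 - l}\,(\mathbf{1} - \mathbf{p})$;
- 6: end if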
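Algorithm 1 can be sketched as a short Python function. The sketch assumes normalized RGB components and intensity defined as their sum, so that the target lies in $[0, 3]$; the function name `naik_murthy` and the guard for a pure black input are additions made here for the example.

```python
def naik_murthy(p, target):
    """Move a normalized RGB color to the target intensity without leaving
    the unit cube: scale toward black, or scale its CMY complement."""
    l = sum(p)
    if target <= l:
        if l == 0:
            # Pure black with a zero target: nothing to scale (assumed guard).
            return [0.0, 0.0, 0.0]
        alpha = target / l                 # alpha <= 1: shrink toward black
        return [alpha * c for c in p]
    beta = (3 - target) / (3 - l)          # beta < 1: shrink toward white
    return [1 - beta * (1 - c) for c in p]


brightened = naik_murthy([0.9, 0.3, 0.0], 2.4)   # rescaling alone would overflow
darkened = naik_murthy([0.9, 0.3, 0.0], 0.6)
```

Both outputs hit the requested intensity, and every component stays inside $[0, 1]$, which is the property the plain rescaling lacks when the target exceeds the original intensity.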

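We can see that the above method does not increase the saturation defined, for an RGB color vector $\mathbf{p}$ and $\mathbf{1} = [1, 1, 1]^T$, by the following:

$$S(\mathbf{p}) = \left\| \left( I - \tfrac{1}{3}\,\mathbf{1}\mathbf{1}^T \right) \mathbf{p} \right\|, \quad (9)$$

which is the distance from the intensity axis joining the black and white vertices to $\mathbf{p}$ [12], where $I$ denotes the $3 \times 3$ identity matrix. We have proved that the following equations hold for any nonnegative numbers $\alpha$ and $\beta$ [15]:

$$S(\alpha\mathbf{p}) = \alpha\,S(\mathbf{p}), \quad (10)$$

$$S(\mathbf{1} - \beta(\mathbf{1} - \mathbf{p})) = \beta\,S(\mathbf{p}). \quad (11)$$

Lines 3 and 5 in Algorithm 1 correspond to the cases where $\alpha = l'/l \le 1$ and $\beta = (3 - l')/(3 - l) < 1$ in the above Equations (10) and (11), with $l$ and $l'$ the original and target intensities, both of which mean a non-increase in saturation.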
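The two scaling properties are easy to check numerically. The sketch below assumes the saturation is the Euclidean distance from a normalized RGB color to the gray axis $r = g = b$; the function name `saturation` and the sample values are hypothetical.

```python
import math


def saturation(p):
    """Distance from the gray axis r = g = b to the color p."""
    mean = sum(p) / 3.0
    return math.sqrt(sum((c - mean) ** 2 for c in p))


p = [0.6, 0.5, 0.3]
alpha, beta = 0.5, 0.75

# Scaling toward black multiplies the saturation by alpha.
toward_black = [alpha * c for c in p]
# Scaling the CMY complement (toward white) multiplies it by beta.
toward_white = [1 - beta * (1 - c) for c in p]

ratio_black = saturation(toward_black) / saturation(p)
ratio_white = saturation(toward_white) / saturation(p)
```

With both factors below 1, either branch of Naik and Murthy's method shrinks the distance to the gray axis, which is the fading effect discussed in this section.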

3.2. Yang and Lee’s Method

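To increase the saturation, Yang and Lee modified Naik and Murthy’s method as follows [9]. First, we divide the normalized RGB color space into three parts by two planes perpendicular to the intensity axis: $\mathbf{1}^T\mathbf{p} = 1$ and $\mathbf{1}^T\mathbf{p} = 2$, where $\mathbf{1} = [1, 1, 1]^T$. If a color vector $\mathbf{p}$ satisfies $l = \mathbf{1}^T\mathbf{p} \le 1$, then the intensity of $\mathbf{p}$ is boosted by $1/l$ to 1 as $\tilde{\mathbf{p}} = \mathbf{p}/l$. After that, $\tilde{\mathbf{p}}$ is passed to the function $\mathrm{NM}(\tilde{\mathbf{p}}, l')$, which computes Naik and Murthy’s method in Algorithm 1 for a target intensity $l'$ to obtain a modified color vector $\mathbf{p}'$.

On the other hand, if $\mathbf{p}$ satisfies $l \ge 2$, then the intensity of $\mathbf{p}$ is dropped down to 2 to increase the saturation as follows. First, we transform $\mathbf{p}$ into the CMY color vector as $\mathbf{q} = \mathbf{1} - \mathbf{p}$ whose intensity is $3 - l$. Next, the intensity is boosted up by $1/(3 - l)$ to 1 as $\tilde{\mathbf{q}} = \mathbf{q}/(3 - l)$. After that, we transform $\tilde{\mathbf{q}}$ back into the RGB color vector as $\tilde{\mathbf{p}} = \mathbf{1} - \tilde{\mathbf{q}}$. Finally, $\tilde{\mathbf{p}}$ is passed to the function $\mathrm{NM}$ to obtain a modified color vector $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$. If $1 < l < 2$, then we simply apply Naik and Murthy’s method to $\mathbf{p}$ as $\mathbf{p}' = \mathrm{NM}(\mathbf{p}, l')$.

Yang and Lee’s method is summarized in Algorithm 2.

| Algorithm 2 Yang and Lee’s method. |

- Require: RGB color vector $\mathbf{p}$, target intensity $l'$
- Ensure: Modified RGB color vector $\mathbf{p}'$
- 1: Compute the intensity of $\mathbf{p}$ as $l = \mathbf{1}^T\mathbf{p}$;
- 2: if $l \le 1$ then
- 3: $\tilde{\mathbf{p}} = \mathbf{p}/l$;
- 4: $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$;
- 5: else if $l \ge 2$ then
- 6: $\tilde{\mathbf{p}} = \mathbf{1} - (\mathbf{1} - \mathbf{p})/(3 - l)$;
- 7: $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$;
- 8: else
- 9: $\mathbf{p}' = \mathrm{NM}(\mathbf{p}, l')$;
- 10: end if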
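Algorithm 2 can be sketched on top of a small implementation of Algorithm 1. This is an illustration under assumptions: normalized RGB components, intensity as their sum, inclusive thresholds at 1 and 2, and guards for pure black and pure white that are added here. The names `naik_murthy`, `yang_lee` and `saturation` are hypothetical.

```python
import math


def naik_murthy(p, target):
    """Algorithm 1: reach the target intensity while staying in the cube."""
    l = sum(p)
    if target <= l:
        return [0.0, 0.0, 0.0] if l == 0 else [target / l * c for c in p]
    beta = (3 - target) / (3 - l)
    return [1 - beta * (1 - c) for c in p]


def yang_lee(p, target):
    """Algorithm 2: pre-boost dark and bright colors, then apply Algorithm 1."""
    l = sum(p)
    if 0 < l <= 1:
        p = [c / l for c in p]                    # push to the plane l = 1
    elif 2 <= l < 3:
        p = [1 - (1 - c) / (3 - l) for c in p]    # push to the plane l = 2
    # Colors with 1 < l < 2 (and pure black or white) are passed unchanged.
    return naik_murthy(p, target)


def saturation(p):
    """Distance from the gray axis r = g = b to the color p."""
    mean = sum(p) / 3.0
    return math.sqrt(sum((c - mean) ** 2 for c in p))


dark = [0.3, 0.1, 0.1]                  # intensity 0.5, in the dark part
plain = naik_murthy(dark, 1.0)
boosted = yang_lee(dark, 1.0)
```

For this dark color both methods reach the same target intensity, but the pre-boosted result lies farther from the gray axis than the result of Algorithm 1 alone.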

We have proved that the saturation given by Yang and Lee’s method is greater than or equal to that given by Naik and Murthy’s method [15]. However, as described on line 9 in Algorithm 2, Yang and Lee’s method directly uses Naik and Murthy’s method for colors whose intensity lies strictly between the two dividing planes. Therefore, Yang and Lee’s method also does not increase the saturation of any color whose intensity falls in this middle range.

Regarding the drawback of Yang and Lee’s method, Park and Lee recently proposed a piecewise linear gamut mapping method, which employs multiplicative and additive color mapping to improve the color saturation [11]. However, as pointed out by the authors, the color shifting in the additive algorithm used in the middle part may, in rare cases, cause a gamut problem. Therefore, they added an exceptional procedure to avoid the problem. In the next subsection, we consider a gamut problem-free method.

3.3. Middle Part Elimination

In this subsection, we propose a method for eliminating the middle part in Yang and Lee’s method, where Naik and Murthy’s method is directly used without boosting the saturation of colors. More precisely, Yang and Lee’s method divides the RGB color space into three disjoint parts with two planes perpendicular to the intensity axis: $l \le 1$, $1 < l < 2$ and $l \ge 2$, where $l = r + g + b$ is the intensity of a normalized color $\mathbf{p} = [r, g, b]^T$; in the middle part, satisfying $1 < l < 2$, their method cannot increase the saturation, just as Naik and Murthy’s method cannot. On the other hand, we divide the RGB color space into two parts with a boundary surface: $l \le 1 + m$ and $l > 1 + m$, where $m$ denotes the median of $r$, $g$ and $b$, so that $1 + m$ serves as the boundary threshold. We refer to this change from tripartition to bipartition as the middle part elimination.

The method described in this subsection is a refined version of Yu’s method [

10], which uses three planes for bisecting an RGB color cube. Those planes have unused regions redundantly, which are removed in the refined method. Moreover, the algorithm is simplified as described in Algorithm 3.

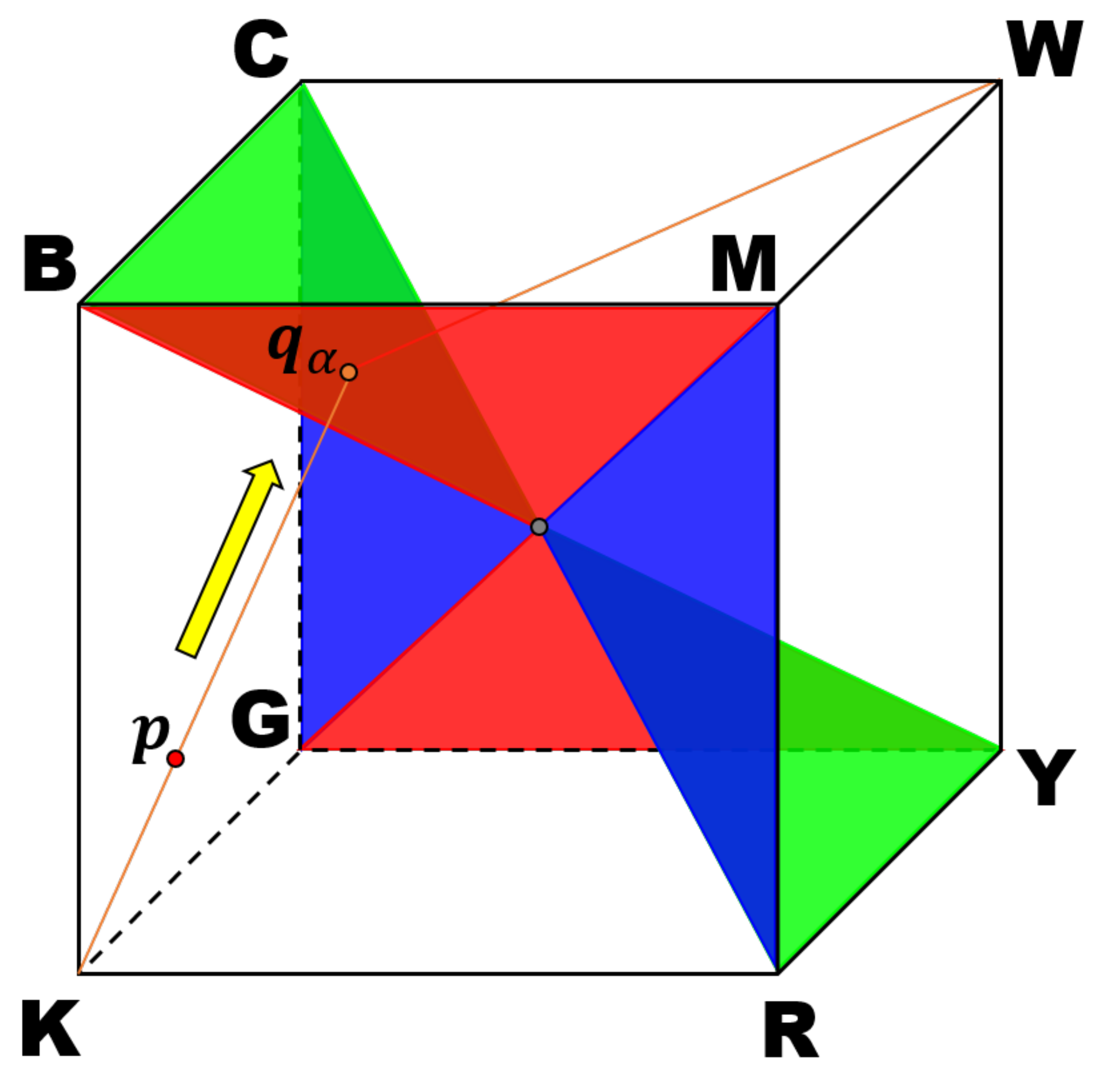

Figure 4 shows the proposed boundary surface, which bisects the RGB color space, illustrated by a cube, into black-leaning and white-leaning parts. The letters attached to the vertices of the cube denote the eight basic colors, normalized as black $[0, 0, 0]^T$, red $[1, 0, 0]^T$, green $[0, 1, 0]^T$, blue $[0, 0, 1]^T$, cyan $[0, 1, 1]^T$, magenta $[1, 0, 1]^T$, yellow $[1, 1, 0]^T$ and white $[1, 1, 1]^T$. The boundary surface is painted in red, green and blue based on the coplanarity of each color point. Unlike Yang and Lee’s division of the RGB color space into three parts, the proposed bisection eliminates the middle part where Naik and Murthy’s method is directly used. Therefore, the drawback of their methods will be successfully avoided in the proposed method. For example, a color point $\mathbf{p}$ in this figure is first projected onto the boundary surface along the direction of the yellow arrow, where the end point is denoted by $\tilde{\mathbf{p}}$. Note that $\tilde{\mathbf{p}}$ is more distant from the intensity axis than $\mathbf{p}$, which means that $\tilde{\mathbf{p}}$ has higher saturation than $\mathbf{p}$, i.e., by projecting a color point onto the boundary surface as shown in Figure 4, we can always increase the saturation.

Figure 5 shows an orthographic view of the boundary surface in Figure 4 from the white point to the black point hidden behind the boundary surface, which forms a regular hexagon composed of six regular triangles colored in red, green and blue that correspond to the colors in Figure 4. The sides of those triangles correspond to the edges of the cube in Figure 4. A color $\mathbf{p} = [r, g, b]^T$ satisfies one of the inequalities written in the triangles onto which $\mathbf{p}$ is also orthographically projected. We can see that the median value of the RGB components in each triangle corresponds to the color of the triangle. This correspondence gives us an idea that elongates an RGB color vector $\mathbf{p}$ to the boundary surface shown in Figure 4 to increase the saturation prior to the application of Naik and Murthy’s method, i.e., we can select a plane onto which a color is projected, according to the median value of the RGB components.

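In Figure 4, each pair of triangles with the same color is coplanar, where the normal vectors of red, green and blue planes are given by $\mathbf{n}_r = [0, 1, 1]^T$, $\mathbf{n}_g = [1, 0, 1]^T$ and $\mathbf{n}_b = [1, 1, 0]^T$, respectively. For a color $\mathbf{p} = [r, g, b]^T$, we first select the median value in $\{r, g, b\}$ as follows:

$$m = \mathrm{median}(r, g, b), \quad (12)$$

where the ‘median’ operator selects the median value from the given values. Then, we project $\mathbf{p}$ onto the plane with the normal vector $\mathbf{n} \in \{\mathbf{n}_r, \mathbf{n}_g, \mathbf{n}_b\}$ associated with the component that attains the median, to increase the saturation. Since $\mathbf{n}$ has a zero at the median component and ones elsewhere, $\mathbf{n}^T\mathbf{p} = l - m$ with the intensity $l = r + g + b$. The detailed procedure is as follows. The procedure is divided into two parts according to the position of $\mathbf{p}$ in the RGB color space separated by the boundary surface.

If $\mathbf{n}^T\mathbf{p} \le 1$, then we compute the intersection point of a line $\alpha\mathbf{p}$, where $\alpha$ is a parameter, and a plane $\mathbf{n}^T\mathbf{x} = 1$. From these equations, we have $\alpha = 1/(l - m)$ where $l - m = \mathbf{n}^T\mathbf{p}$, that is, the intersection point is given by $\tilde{\mathbf{p}} = \mathbf{p}/(l - m)$. Then, we apply Naik and Murthy’s method with the target intensity $l'$ to obtain the modified color as $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$.

On the other hand, if $\mathbf{n}^T\mathbf{p} > 1$, then we compute the intersection point of a line passing through the white point $\mathbf{1} = [1, 1, 1]^T$ and $\mathbf{p}$, which is expressed as $\mathbf{1} - \beta(\mathbf{1} - \mathbf{p})$, and a plane $\mathbf{n}^T\mathbf{x} = 1$. From these equations, we have $\beta = 1/(2 - l + m)$, that is, the intersection point is given by $\tilde{\mathbf{p}} = \mathbf{1} - (\mathbf{1} - \mathbf{p})/(2 - l + m)$. Then, we apply Naik and Murthy’s method to obtain the modified color as $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$.

This method is summarized in Algorithm 3.

In this algorithm, before applying Naik and Murthy’s method at line 9, every color $\mathbf{p}$ is modified to $\tilde{\mathbf{p}}$ so that the middle part where Naik and Murthy’s method is directly applied to $\mathbf{p}$ is eliminated. We can confirm that the saturation of $\tilde{\mathbf{p}}$ is not smaller than that of $\mathbf{p}$ as follows. Line 5 in Algorithm 3 corresponds to $\alpha = 1/(l - m)$ in (10), and the denominator is not greater than 1 as $l - m \le 1$, which means that $\alpha \ge 1$. Therefore, we find that $S(\tilde{\mathbf{p}}) \ge S(\mathbf{p})$ by (10). On the other hand, line 7 in Algorithm 3 corresponds to $\beta = 1/(2 - l + m)$ in (11), and the denominator is smaller than 1 as $l - m > 1$, which means that $\beta > 1$. Therefore, we conclude that $S(\tilde{\mathbf{p}}) > S(\mathbf{p})$ by (11).

| Algorithm 3 The proposed method. |

- Require: RGB color vector $\mathbf{p}$, target intensity $l'$
- Ensure: Modified RGB color vector $\mathbf{p}'$
- 1: Compute the intensity of $\mathbf{p}$ as $l = \mathbf{1}^T\mathbf{p}$;
- 2: $m = \mathrm{median}(r, g, b)$;
- 3: Compute $\mathbf{n}^T\mathbf{p} = l - m$;
- 4: if $\mathbf{n}^T\mathbf{p} \le 1$ then
- 5: $\tilde{\mathbf{p}} = \mathbf{p}/(l - m)$;
- 6: else
- 7: $\tilde{\mathbf{p}} = \mathbf{1} - (\mathbf{1} - \mathbf{p})/(2 - l + m)$;
- 8: end if
- 9: $\mathbf{p}' = \mathrm{NM}(\tilde{\mathbf{p}}, l')$;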
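The middle part elimination can be sketched as follows. This is a hedged reading of the procedure, not the authors' code: it assumes normalized RGB components, intensity as their sum, and a boundary surface where the largest and smallest components add up to 1 (equivalently, intensity minus median equals 1). The function names and the guards for pure black and pure white are additions made here.

```python
import math


def naik_murthy(p, target):
    """Algorithm 1: reach the target intensity while staying in the cube."""
    l = sum(p)
    if target <= l:
        return [0.0, 0.0, 0.0] if l == 0 else [target / l * c for c in p]
    beta = (3 - target) / (3 - l)
    return [1 - beta * (1 - c) for c in p]


def project_to_boundary(p):
    """Push a color onto the assumed boundary surface max + min = 1."""
    d = sum(p) - sorted(p)[1]          # intensity minus median = max + min
    if d <= 1:
        # Black-leaning side: stretch away from black (skip pure black).
        return [c / d for c in p] if d > 0 else list(p)
    if d < 2:
        # White-leaning side: stretch away from white.
        return [1 - (1 - c) / (2 - d) for c in p]
    return list(p)                     # pure white: nothing to stretch


def proposed(p, target):
    """Project to the boundary surface first, then apply Algorithm 1."""
    return naik_murthy(project_to_boundary(p), target)


def saturation(p):
    """Distance from the gray axis r = g = b to the color p."""
    mean = sum(p) / 3.0
    return math.sqrt(sum((c - mean) ** 2 for c in p))


mid = [0.6, 0.5, 0.3]                  # intensity 1.4, in the former middle part
plain = naik_murthy(mid, 1.8)
improved = proposed(mid, 1.8)
```

For this mid-intensity color, which Yang and Lee's method would hand to Algorithm 1 unchanged, the projection step yields the same target intensity with a larger distance from the gray axis.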

4. Experimental Results

In this section, we show the experimental results of hue-preserving color image enhancement with Naik and Murthy’s method [2], Yang and Lee’s method [9] and the proposed method.

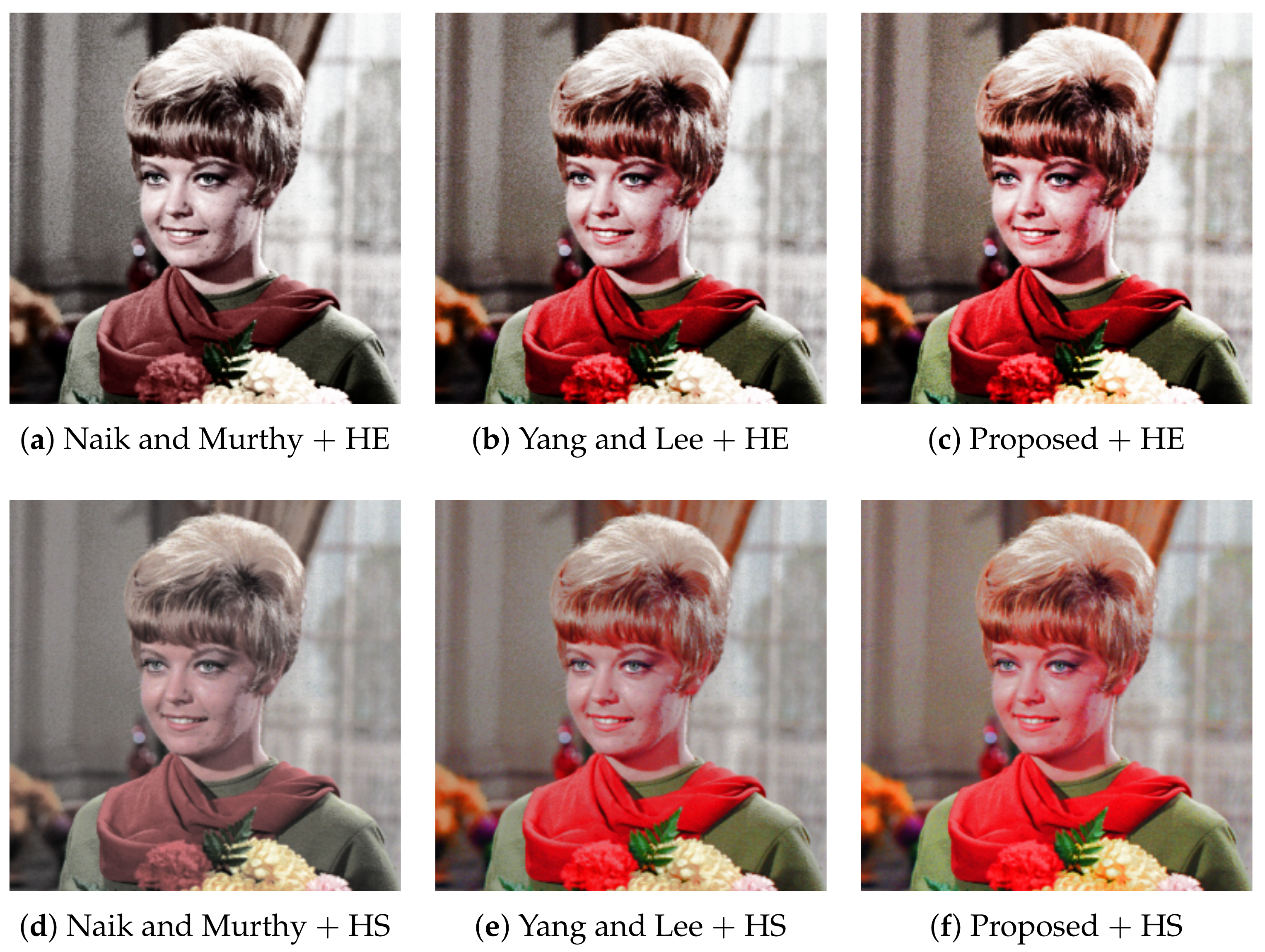

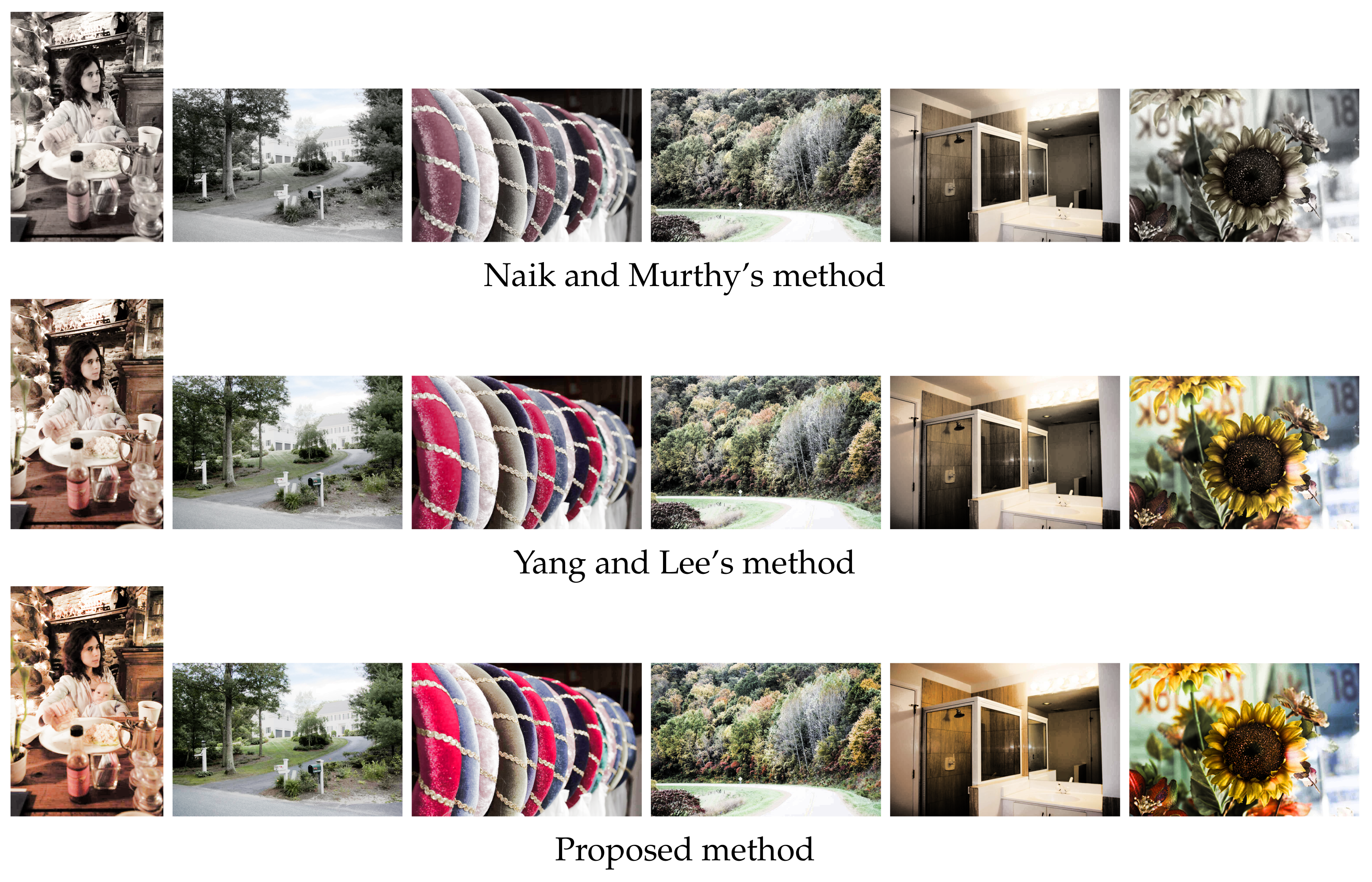

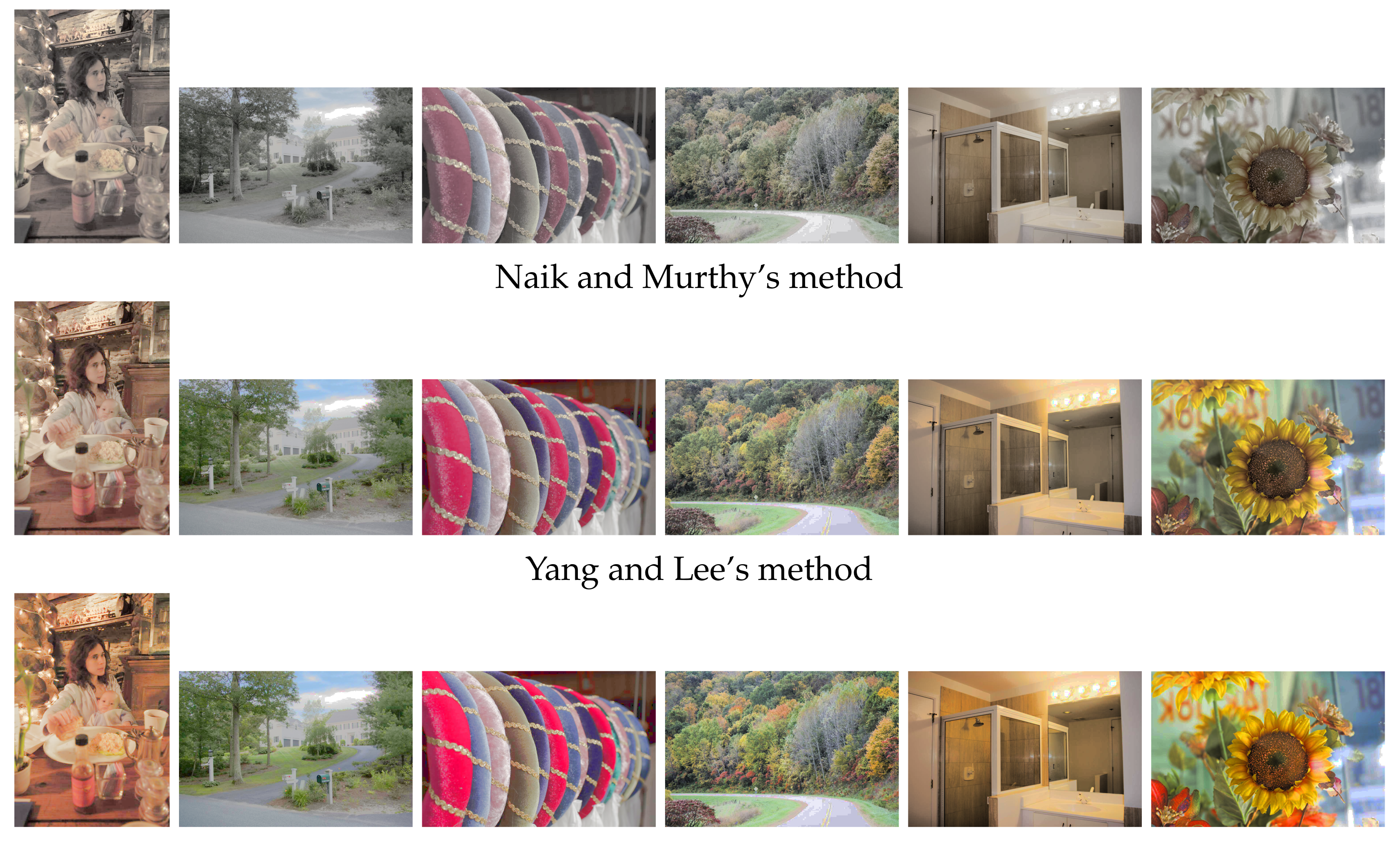

Figure 6 shows six enhanced color images computed from the common input image in Figure 2a. In Figure 6a, the contrast is enhanced by HE, compared with that of the original image, and the gamut problem is avoided by Naik and Murthy’s method successfully. However, the saturation is deteriorated as shown in Section 3.1. In Figure 6b, Yang and Lee’s method recovers the saturation better than that of Naik and Murthy. In Figure 6c, the proposed method improves the saturation further than that of Yang and Lee. This result coincides with the results of Yu’s method [10]. Figure 6d shows the result of HS combined with Naik and Murthy’s method, where the contrast is suppressed while the saturation is improved, compared with Figure 6a. Figure 6e of Yang and Lee’s method shows a more colorful image than Figure 6d. Figure 6d,e coincide with the results of Inoue’s method [15]. Figure 6f of the proposed method shows further improved saturation than Figure 6e, specifically for the regions of the facial skin and curtain in the background.

Table 1 shows the mean saturation of each image in Figure 6, which numerically confirms that the proposed method achieved maximum saturation among the compared methods for both HE and HS.

As well as the compared methods, the proposed method is also applicable to other intensity transformation methods. For example, we show the results of the gamma correction (GC) in

Figure 7, where we choose

to obtain subjectively better results.

The compared three methods preserve the intensity of every input color image as shown in

Figure 8, where we can see the luminance [

9] images, which are the normalized intensity images, as

, corresponding to the enhanced color images in

Figure 6. The intensity images in the top row are identical to the histogram equalized-grayscale image in

Figure 3b, and those in the bottom row are identical to the histogram specified-grayscale image in

Figure 3c.

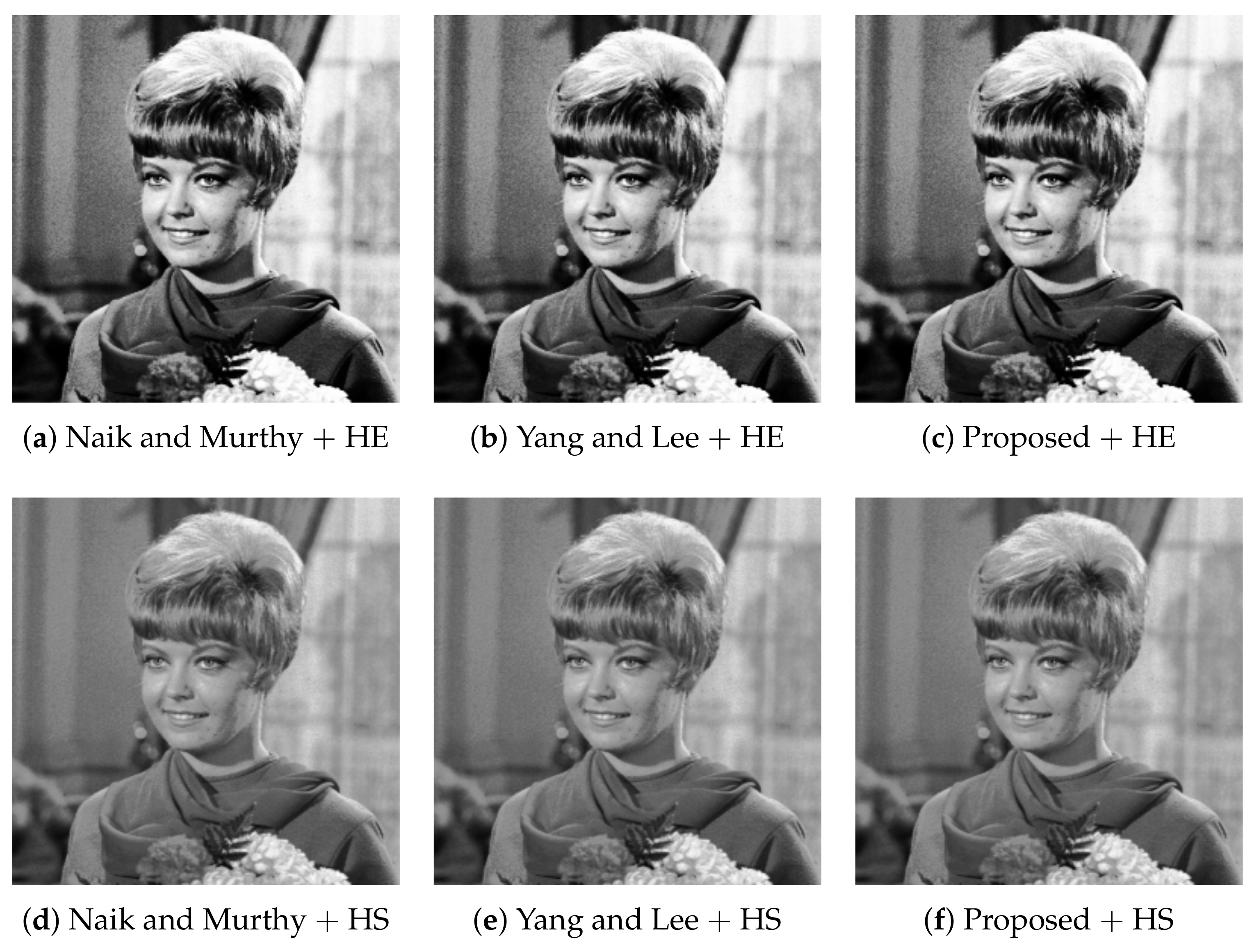

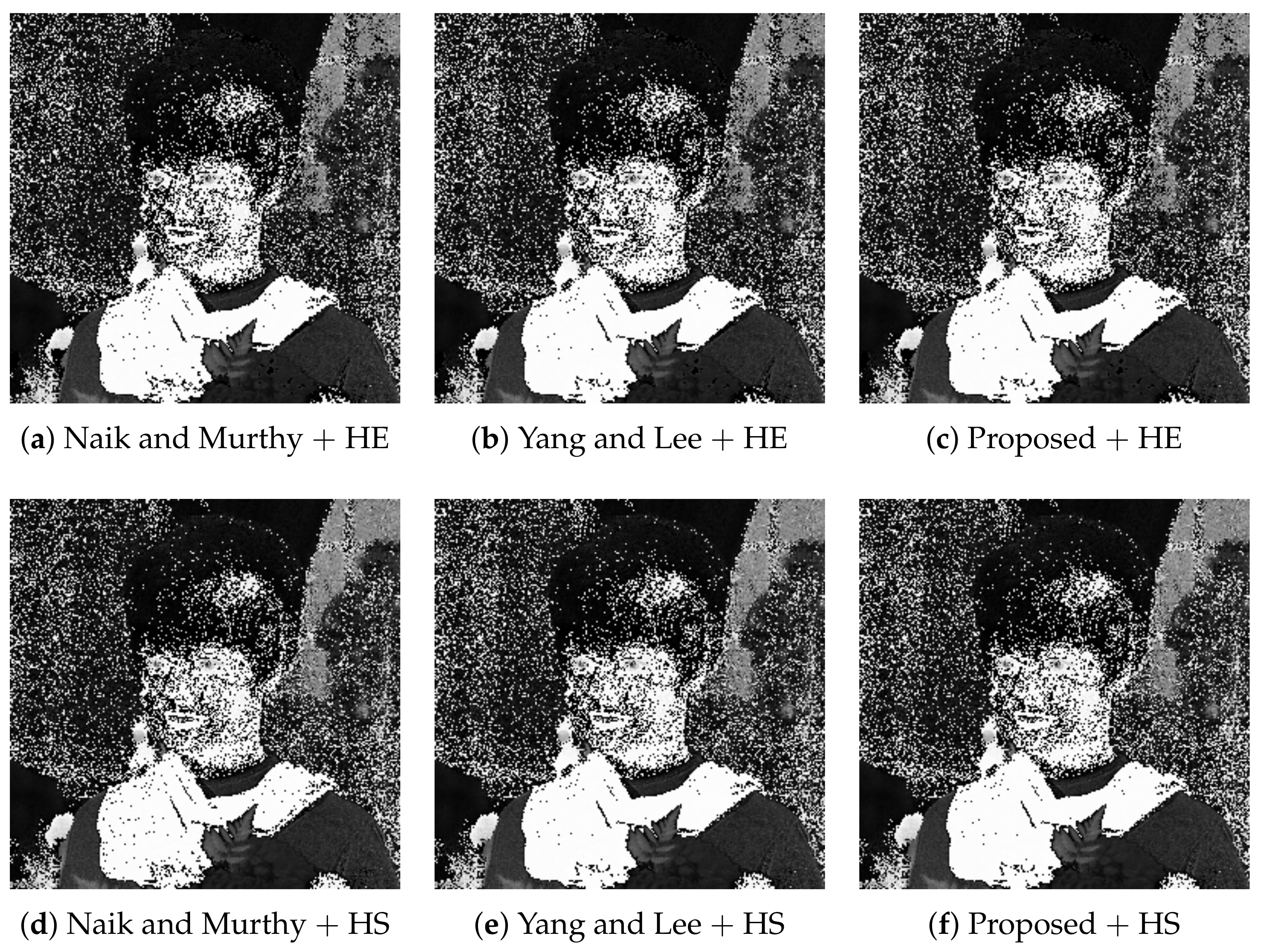

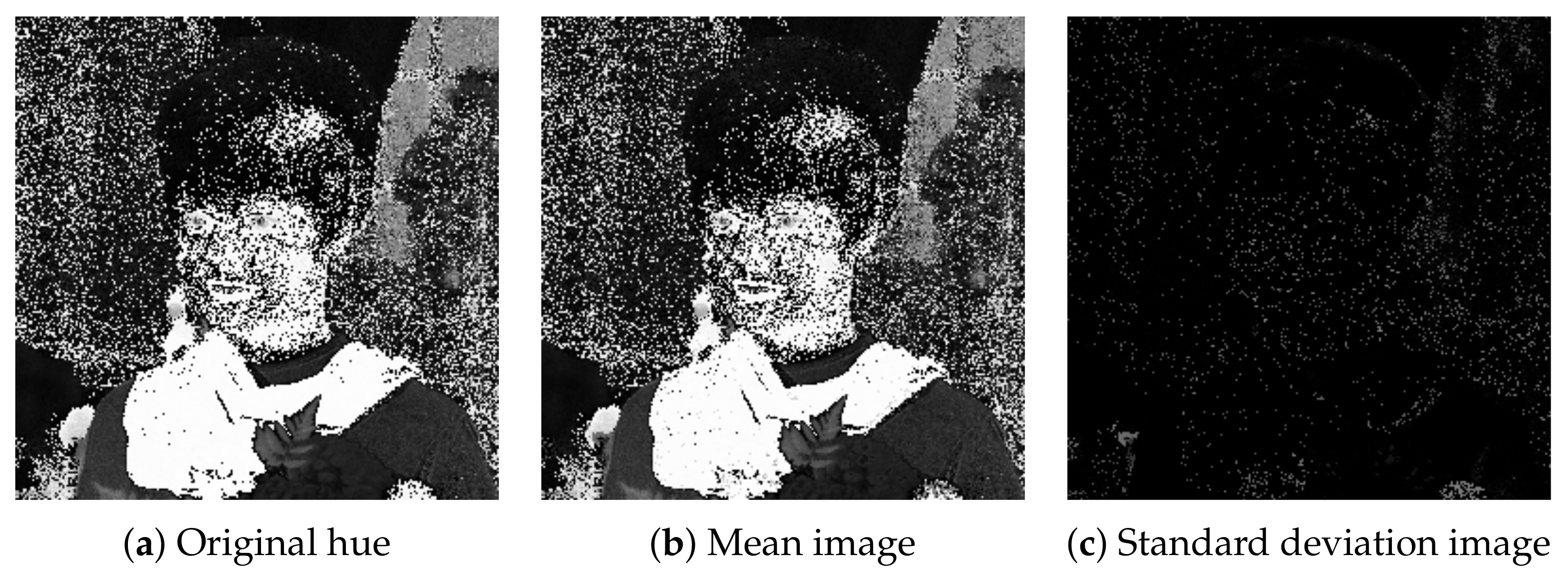

We also computed hue images from the color images in Figure 6 as shown in Figure 9, where each hue value is computed from the RGB color values on the basis of the definition of hue given by Equation (6.2–2) in reference [12]. All images in Figure 9 are similar to the original hue image shown in Figure 10a. We observed that the hue values contain numerical errors whose maximum standard deviation is 0.50 for normalized RGB color vectors. The mean and standard deviation of hue values at every pixel are visualized in Figure 10b,c, respectively. In Figure 10c, the black and white pixels indicate the standard deviation values 0 and 1, respectively.
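Hue preservation can be checked numerically with a small sketch. It assumes the commonly used HSI hue formula (an angle in degrees computed from the RGB differences), which may differ in detail from the cited equation; the function name `hue_degrees`, the convention of returning 0 for achromatic colors and the sample values are choices made here.

```python
import math


def hue_degrees(p):
    """Hue angle in degrees from a normalized RGB color (HSI-style formula)."""
    r, g, b = p
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0:
        return 0.0                    # achromatic: hue undefined, 0 by convention
    # Clamp against rounding before taking the arc cosine.
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    return theta if b <= g else 360.0 - theta


original = [0.6, 0.5, 0.3]
toward_black = [0.5 * c for c in original]
toward_white = [1 - 0.75 * (1 - c) for c in original]

hues = [hue_degrees(c) for c in (original, toward_black, toward_white)]
```

Scaling a color toward black or toward white, the two moves used by the compared methods, leaves the computed hue unchanged up to floating-point rounding, so visible hue differences in saved images come from quantization rather than from the mappings.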

Figure 11 shows hue histograms of the color images in Figure 6, where the vertical and horizontal axes of each graph denote the number of pixels and hue in degrees, respectively. The blue and red lines denote the original and enhanced histograms, which are similar to each other. The small differences between them mainly result from the quantization errors caused by recording color values in an image file format.

Next,

Figure 12 shows six color images selected from an image dataset by Afifi et al. [

17], where the first three images are underexposed, and the last three ones are overexposed. We selected the images in

Figure 12 based on their relative exposure values (EVs), that is, the underexposed images have smaller than or equal to

relative EVs, and the overexposed images have greater than or equal to

relative EVs. As the dataset was originally rendered using raw images taken from the MIT-Adobe FiveK dataset, their dataset follows the original license of the MIT-Adobe FiveK dataset [

18].

Figure 13 shows the results of HE applied to the images in Figure 12, where Naik and Murthy’s method in the top row enhances the contrast without a gamut problem for both under- and overexposed images; however, the resultant images look like sepia or grayscale ones with faded colors. Yang and Lee’s method in the middle row recovers the color saturation compared with the above results. The proposed method in the bottom row improves the saturation further than the above two results.

Figure 14 also shows the results of HS applied to the images in Figure 12, where we obtained more natural results of contrast enhancement than the results of HE in Figure 13.

Figure 15 shows two graphs, (a) and (b), of the mean saturation of the above images in Figure 13 and Figure 14 enhanced by HE and HS, respectively, where the vertical and horizontal axes of each graph denote the mean saturation and the images numbered from 1 to 6 in Figure 12. The green, yellow, orange and red bars denote the original image in Figure 2a, Naik and Murthy’s method, Yang and Lee’s method and the proposed method, respectively, which show that Naik and Murthy’s method decreases the mean saturation from the original values, Yang and Lee’s method outperforms that of Naik and Murthy, and the proposed method outperforms that of Yang and Lee, quantitatively. In Figure 15, the standard deviation of saturation in each image is also shown with an error bar, where the longer the error bar becomes, the more varied the saturation values in the enhanced image are.

Table 2 summarizes the mean saturation of the images in Figure 13 and Figure 14 obtained by the compared methods, where the proposed method achieves the maximum values among the compared methods for both HE and HS.

Similarly, Table 3 summarizes the standard deviation of saturation for all compared methods, where the proposed method achieves the maximum values among the compared methods for both HE and HS.

In

Figure 15, we find that the standard deviation of saturation in an image can be larger than the mean saturation. For example, we show the histogram of saturation of image No. 3 enhanced by the proposed method with HE in

Figure 16, where the mean saturation is 42.1 and the standard deviation is 50.8. All values of the saturation are included in the range

.

We also evaluated the performance with the color colorfulness index (CCI) defined in (10) in [4] as shown in Figure 17, where the vertical and horizontal axes denote the CCI value and image numbers, respectively. The value of CCI varies from 0 (achromatic image) to the maximum (most colorful image) [4]. For both HE (a) and HS (b), the proposed method denoted by red bars achieves the highest values among the compared methods.

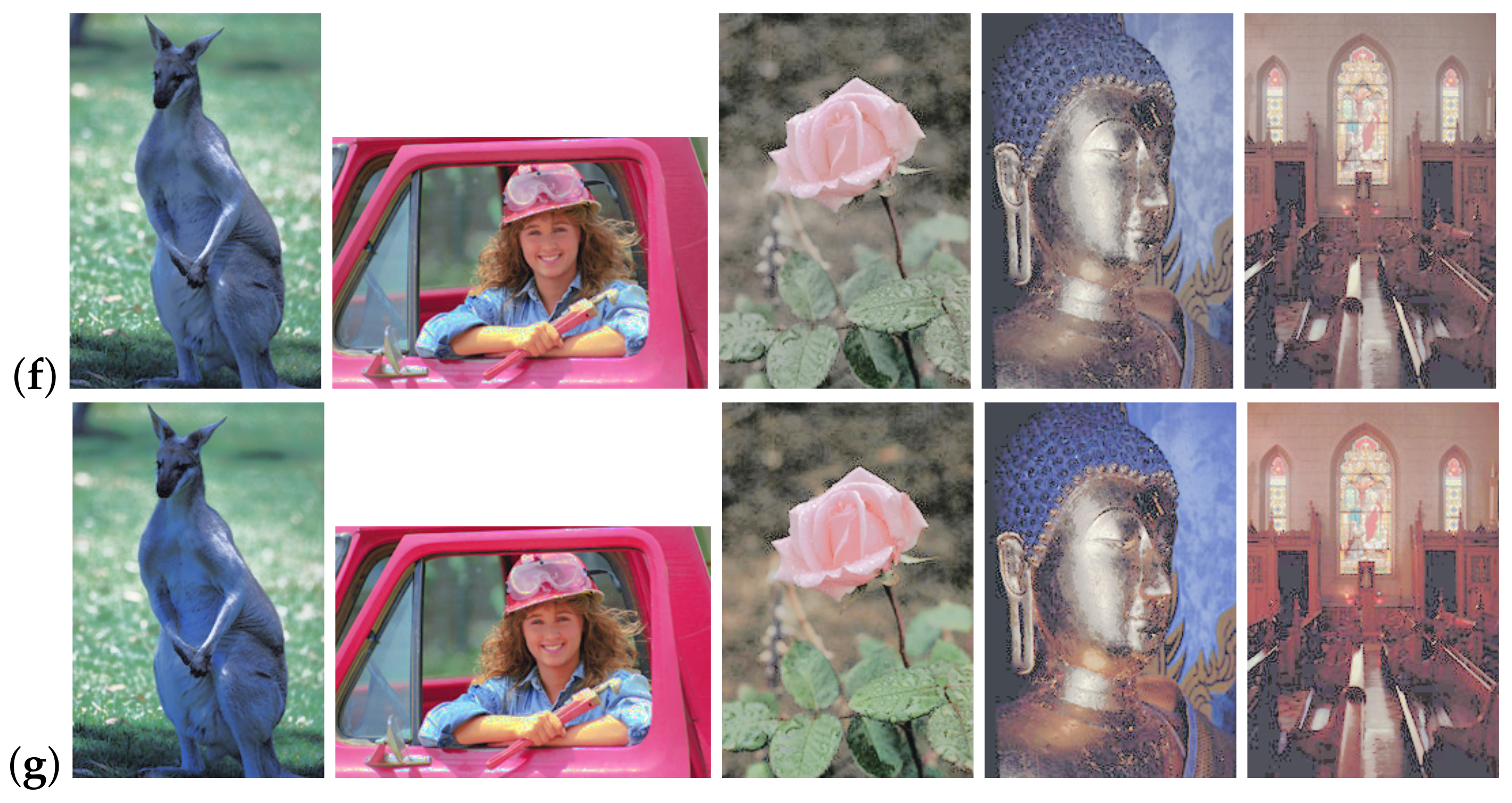

Finally, Figure 18 shows an assembled result for browsing the performance of compared methods, where five original images in row (a) are enhanced by six different combinations of compared methods to obtain the enhanced images listed in rows (b) to (g). These results also support the effectiveness of the proposed method visually. Rows (b), (c) and (d) show the images enhanced by Naik and Murthy’s method, Yang and Lee’s method and the proposed method with HE; rows (e), (f) and (g) show those with HS. These results exemplify that HE enhances the contrast strongly, and HS used in this paper enhances the saturation dominantly.

5. Discussion

The above experimental results demonstrate the validity of the theoretical properties of the compared methods, i.e., Naik and Murthy’s method gives faded colors, Yang and Lee’s method recovers the weak point of Naik and Murthy’s method, and the proposed method improves the color saturation further than that of Yang and Lee by eliminating the middle part of their method where they directly use Naik and Murthy’s method, which generates faded colors almost every time.

For generating the target intensity image required for color image enhancement, we compared HE and HS in the above experiments, where we observed that HE frequently enhanced the contrast excessively. On the other hand, the adopted RGB color cube-based HS achieved moderate enhancement results in a parameter-free manner as well as the conventional HE. Therefore, we would like to recommend its adoption as an alternative to HE for contrast enhancement.

In this paper, we used a conventional method for HE and HS that is based on the cumulative histograms. On the other hand, Nikolova and Steidl have proposed a more sophisticated method for exact HS [19,20], which can be substituted for the conventional one to produce better results of color image enhancement in the proposed method.