Data Augmentation Using Background Replacement for Automated Sorting of Littered Waste

Abstract

1. Introduction

2. Related Work

3. Datasets

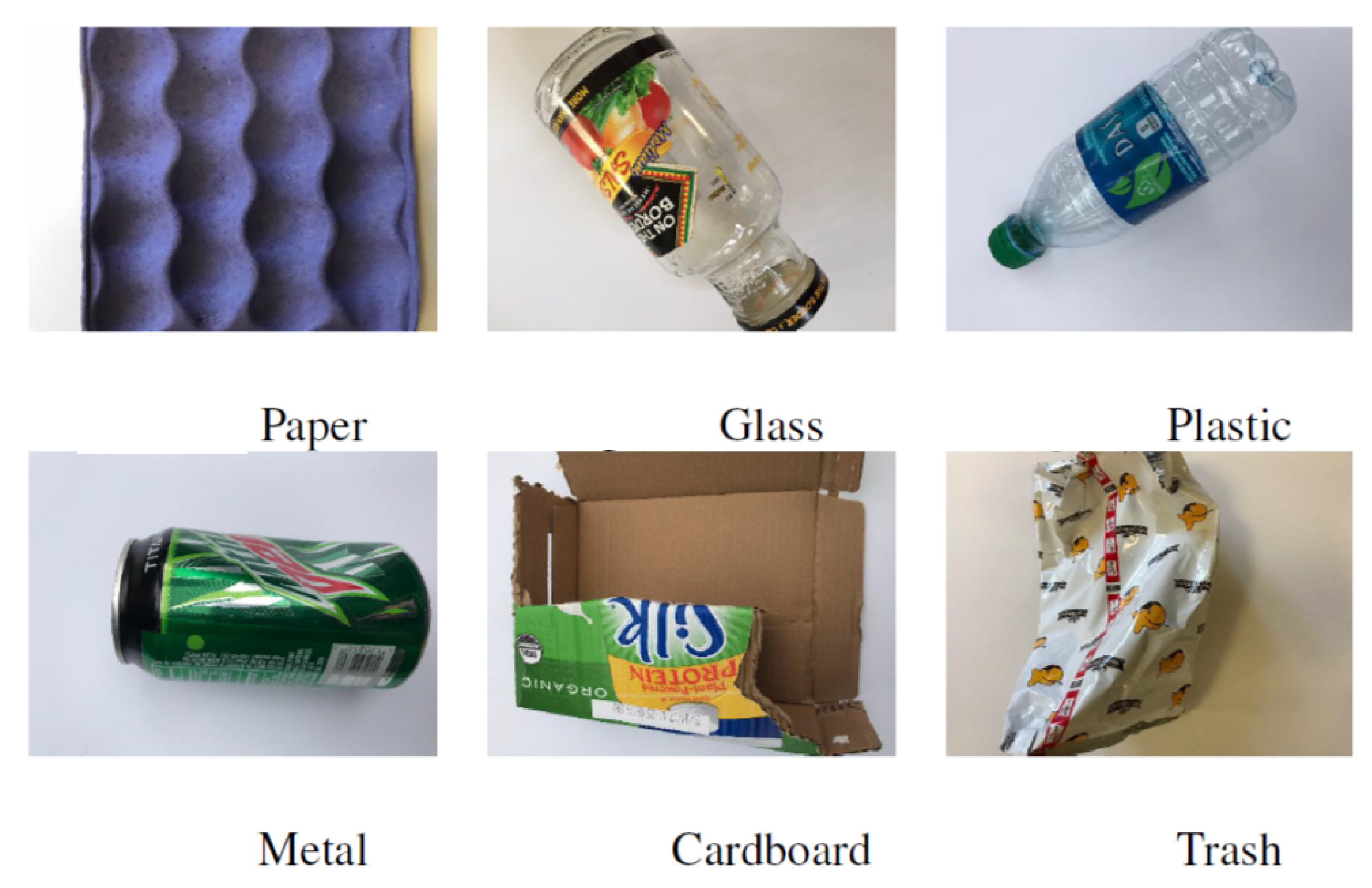

3.1. An Existing Conveyor-Belt-Oriented Dataset

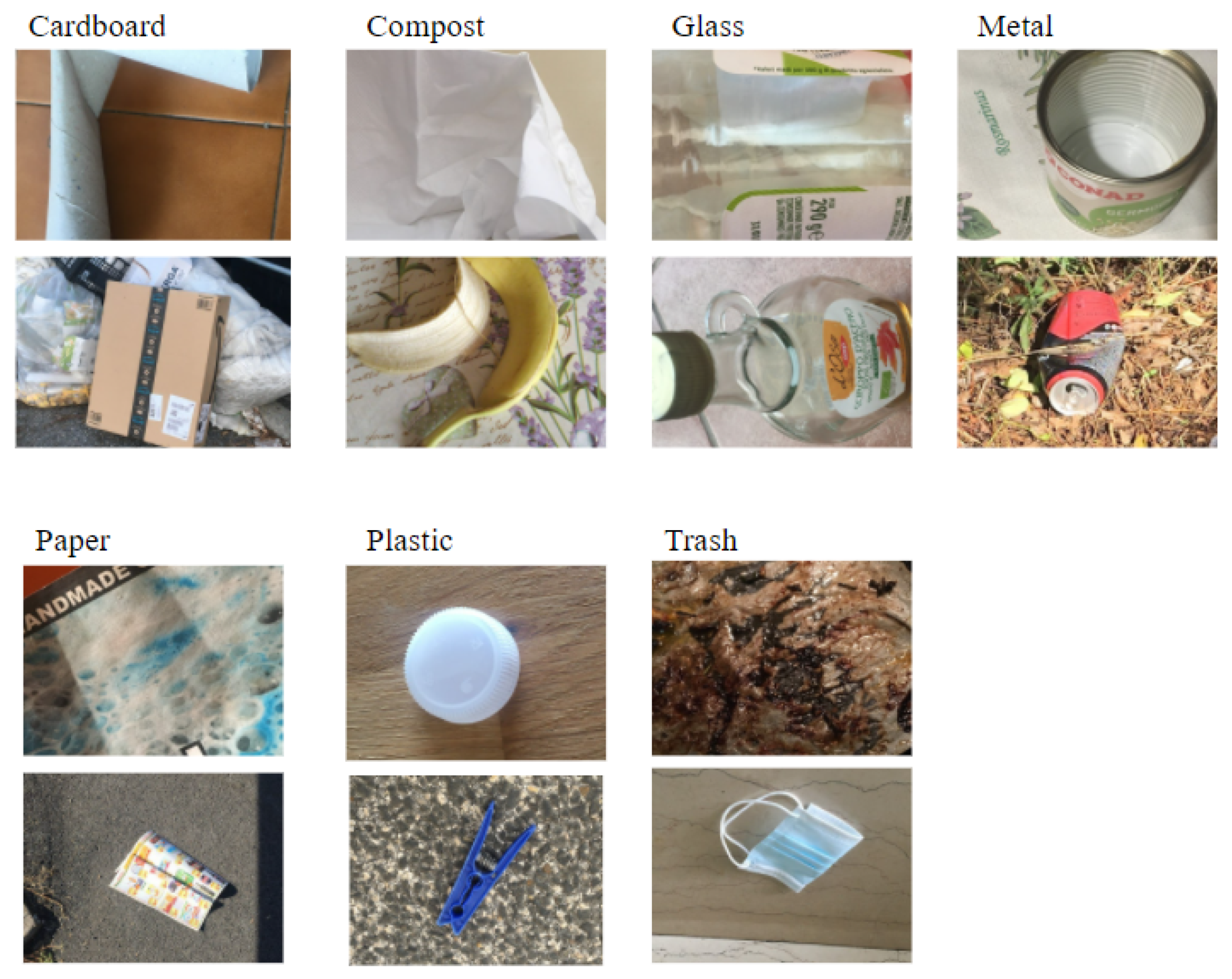

3.2. Littered Waste Testset

4. Methods and Implementation

4.1. Data Augmentation with Background Replacement

- Select&Crop selects the waste item in an image from a conveyor-belt-oriented dataset, crops it, and removes the background, replacing it with transparency. This is feasible because images in conveyor-belt-oriented datasets are generally taken against a uniform background, so waste can be selected and cropped with high quality using existing, widely available image processing libraries. In our study, we used the OpenCV Python library.

- Littered Waste Background Selection pseudo-randomly selects a background from the available repositories. In our study, backgrounds come from two sources: (1) license-free images found on Unsplash (https://unsplash.com, accessed on 5 August 2021); (2) background pictures produced in the present study. The backgrounds are randomly selected among pictures of surfaces with different textures and lighting, as waste can be found anywhere (see Figure 4).

- Merge is the simplest module: it merges the cropped image with the new background. The final output is a novel image with its trash classification label (see Figure 5).
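The three modules above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the study used the OpenCV Python library, whereas this sketch uses plain NumPy thresholding as a stand-in. The function names, the `tol` threshold, the assumption that the belt color is known in advance, and the toy images are all hypothetical.

```python
import random
import numpy as np


def select_and_crop(image, bg_color, tol=30):
    """Select&Crop: return an RGBA crop whose uniform background is transparent."""
    # Assumption: the belt color is known; pixels far from it count as waste.
    diff = np.abs(image.astype(np.int32) - np.asarray(bg_color, dtype=np.int32))
    mask = diff.max(axis=2) > tol
    if not mask.any():
        raise ValueError("no foreground found")
    ys, xs = np.nonzero(mask)
    y0, y1 = ys.min(), ys.max() + 1
    x0, x1 = xs.min(), xs.max() + 1
    # Alpha is 255 on the object and 0 on the removed background.
    alpha = (mask[y0:y1, x0:x1] * 255).astype(np.uint8)
    return np.dstack([image[y0:y1, x0:x1], alpha])


def select_background(backgrounds, rng):
    """Background Selection: pseudo-random pick from the available backgrounds."""
    return rng.choice(backgrounds)


def merge(crop_rgba, background, top, left, label):
    """Merge: alpha-blend the crop onto the background and keep the class label."""
    out = background.copy()
    h, w = crop_rgba.shape[:2]
    alpha = crop_rgba[..., 3:4].astype(np.float32) / 255.0
    region = out[top:top + h, left:left + w].astype(np.float32)
    blended = alpha * crop_rgba[..., :3] + (1.0 - alpha) * region
    out[top:top + h, left:left + w] = blended.astype(np.uint8)
    return out, label


# Toy data: a uniform gray "belt" image with a 3x3 colored object on it.
belt = np.full((8, 8, 3), 200, dtype=np.uint8)
belt[2:5, 3:6] = (10, 120, 30)
backgrounds = [np.full((16, 16, 3), v, dtype=np.uint8) for v in (40, 90, 160)]

rng = random.Random(0)  # seeded so the pseudo-random selection is repeatable
crop = select_and_crop(belt, bg_color=(200, 200, 200))
augmented, label = merge(crop, select_background(backgrounds, rng),
                         top=4, left=5, label="plastic")
```

Real photographs would need a more robust segmentation step than a fixed color threshold, and the paste position and scale could also be randomized; both are left out here to keep the sketch short.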

4.2. Two Automated Waste Sorting Systems

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Category | Entries | Percentage |
|---|---|---|---|
| TrashNet Categories | Cardboard | 403 | 15 |
| | Glass | 501 | 18 |
| | Metal | 410 | 15 |
| | Paper | 594 | 22 |
| | Plastic | 482 | 17 |
| | Trash | 184 | 7 |
| | Compost | 177 | 6 |
| Total | | 2751 | 100 |

| | Category | Entries | Percentage |
|---|---|---|---|
| TrashNet Categories | Cardboard | 15 | 13 |
| | Glass | 11 | 10 |
| | Metal | 11 | 10 |
| | Paper | 15 | 13 |
| | Plastic | 26 | 23 |
| | Trash | 16 | 14 |
| | Compost | 19 | 17 |
| Total | | 114 | 100 |

| Layer (Type) | Output Shape | Param |
|---|---|---|
| Conv2D | (None, 64, 64, 96) | 34,944 |
| MaxPooling2D | (None, 32, 32, 96) | 0 |
| Conv2D | (None, 32, 32, 192) | 460,992 |
| MaxPooling2D | (None, 16, 16, 192) | 0 |
| Conv2D | (None, 16, 16, 288) | 497,952 |
| Conv2D | (None, 16, 16, 288) | 746,784 |
| Conv2D | (None, 16, 16, 192) | 497,856 |
| MaxPooling2D | (None, 7, 7, 192) | 0 |
| Flatten | (None, 9408) | 0 |
| Dense | (None, 4096) | 38,539,264 |
| Dense | (None, 4096) | 16,781,312 |
| Dense | (None, 7) | 28,679 |
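The parameter counts in the layer table can be checked by hand. The sketch below assumes a 3-channel (RGB) input and kernel sizes of 11, 5, 3, 3, and 3 for the five convolutional layers. Neither is stated in the table; they are the usual AlexNet choices and they do reproduce the listed counts. The helper names are hypothetical.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights of a square kernel plus one bias per filter."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(in_features, units):
    """One weight per input-unit pair plus one bias per unit."""
    return in_features * units + units


# (kernel size, input channels, filters); kernel sizes are assumptions.
conv_specs = [(11, 3, 96), (5, 96, 192), (3, 192, 288), (3, 288, 288), (3, 288, 192)]
# (input features, units); 7 * 7 * 192 = 9408 is the Flatten output in the table.
dense_specs = [(7 * 7 * 192, 4096), (4096, 4096), (4096, 7)]

conv_counts = [conv2d_params(*spec) for spec in conv_specs]
dense_counts = [dense_params(*spec) for spec in dense_specs]
total_params = sum(conv_counts) + sum(dense_counts)

for count in conv_counts + dense_counts:
    print(f"{count:,}")
```

The final layer has 7 units, matching the seven waste categories (the six TrashNet categories plus Compost).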

| AlexNet | NotAug | BackRep & BackRep | NotAug | LittleAug |
|---|---|---|---|---|
| Conveyor-belt-oriented test | | | | |
| Accuracy | | | | |
| Macro AVG F1 | | | | |
| Micro AVG F1 | | | | |
| Littered Waste test | | | | |
| Accuracy | | | | |
| Macro AVG F1 | | | | |
| Micro AVG F1 | | | | |

| InceptionV4 | NotAug | BackRep & BackRep | NotAug | LittleAug |
|---|---|---|---|---|
| Conveyor-belt-oriented test | | | | |
| Accuracy | | | | |
| Macro AVG F1 | | | | |
| Micro AVG F1 | | | | |
| Littered Waste | | | | |
| Accuracy | | | | |
| Macro AVG F1 | | | | |
| Micro AVG F1 | | | | |
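The three metrics named in the result tables (accuracy, macro-averaged F1, and micro-averaged F1) can be computed as in the sketch below. It is a plain-Python illustration on made-up toy labels, not the evaluation code used in the study; the function name and the example data are hypothetical.

```python
from collections import Counter


def classification_scores(y_true, y_pred):
    """Return (accuracy, macro F1, micro F1) for single-label predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    def f1(tp_, fp_, fn_):
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Macro: average the per-class F1 scores, so every class weighs the same.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro: pool the counts over all classes, then compute a single F1.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    accuracy = sum(tp.values()) / len(y_true)
    return accuracy, macro, micro


# Toy labels for illustration only.
y_true = ["glass", "glass", "paper", "paper", "metal", "metal"]
y_pred = ["glass", "paper", "paper", "paper", "metal", "glass"]
accuracy, macro_f1, micro_f1 = classification_scores(y_true, y_pred)
```

When every sample has exactly one true label and one predicted label, micro-averaged F1 coincides with accuracy, while macro-averaged F1 gives small classes such as Trash and Compost the same weight as large ones.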

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Patrizi, A.; Gambosi, G.; Zanzotto, F.M. Data Augmentation Using Background Replacement for Automated Sorting of Littered Waste. J. Imaging 2021, 7, 144. https://doi.org/10.3390/jimaging7080144