A Methodology for Semantic Enrichment of Cultural Heritage Images Using Artificial Intelligence Technologies

Abstract

1. Introduction

- An end-to-end methodology and case study for semantic enrichment of cultural images.

- A technique for building and exploiting CV tools for digital humanities by employing iterative annotation of sample images by experts.

- A vocabulary for enriching cultural images in general and images related to food and drink in particular.

- A benchmarking data set which could serve as a ground truth for future research.

- A discussion of the lessons, challenges, and future directions.

2. State of the Art

2.1. Computer Vision in Digital Humanities

Convolutional Neural Networks

2.2. Semantic Web Technologies

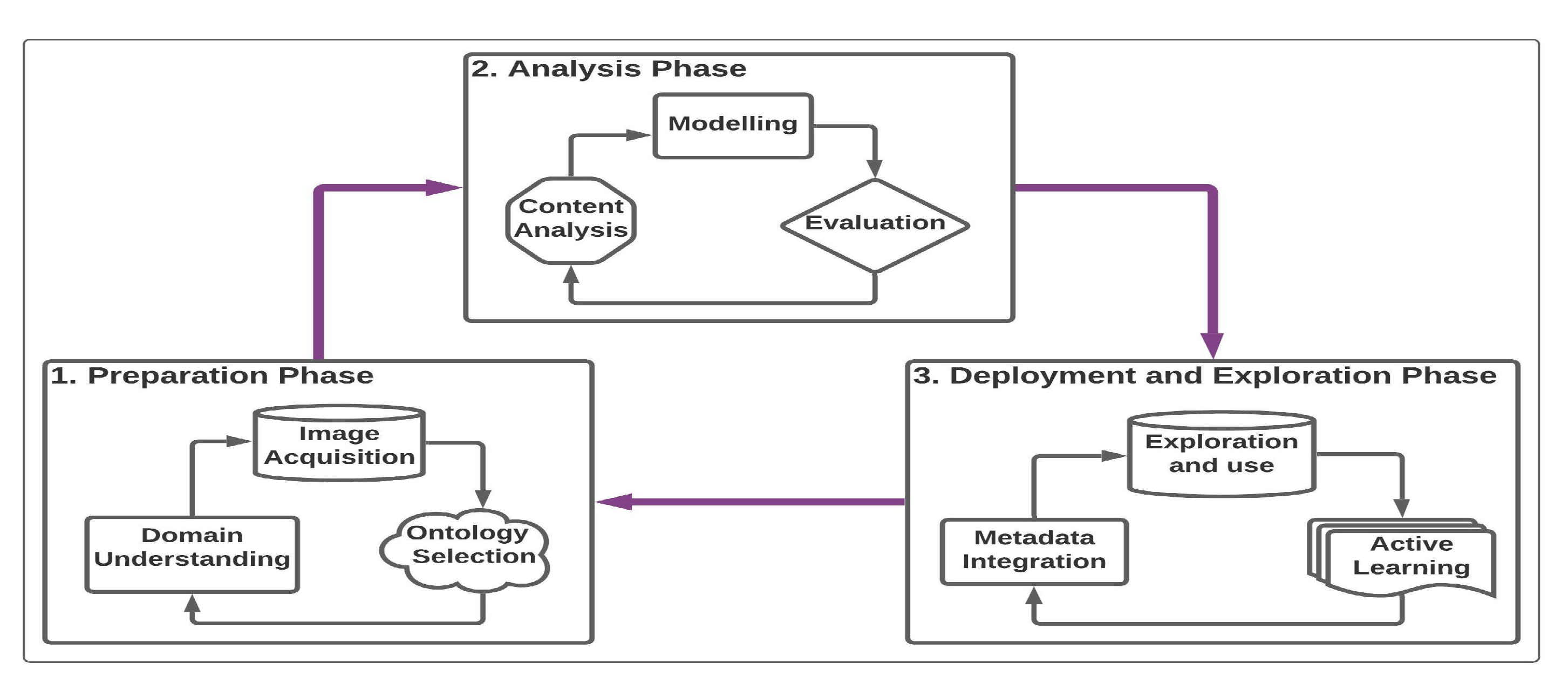

3. Methodology for Enhancing the Visibility of Cultural Images

3.1. Phase-1: Preparation Phase

3.1.1. Domain Understanding

3.1.2. Image Acquisition

3.1.3. Ontology Selection

3.2. Phase-2: Analysis Phase

3.2.1. Analysis of the Content of Images

3.2.2. Preparation of Training Data

3.2.3. Training and Selection of Best Performing Model

3.3. Phase-3: Integration and Exploitation Phase

3.3.1. Integration of Results

| @prefix <list all your prefixes here>. <#TripleMap1> a rr:TriplesMap ; rr:logicalTable [ rr:tableName "PREDICTIONS" ] ; rr:subjectMap [ rr:template "https://www.europeana.eu/en/item/{IMAGE_NAME}" ; rr:class edm:webResource ] ; rr:predicateObjectMap [ rr:predicate dc:description ; rr:predicate rdfs:comment ; rr:objectMap [ rr:column "LABEL" ] ] ; rr:predicateObjectMap [ rr:predicate dc:description ; rr:predicate rdfs:comment ; rr:objectMap [ rr:column "LABEL_CONF" ] ] . <#TripleMap2> a rr:TriplesMap ; rr:logicalTable [ rr:sqlQuery """ SELECT * FROM PREDICTIONS WHERE LABEL = 'Appealing' """ ] ; rr:subjectMap [ rr:template "https://www.europeana.eu/en/item/{IMAGE_NAME}" ] ; rr:predicateObjectMap [ rr:predicate dc:subject ; rr:objectMap [ rr:template "http://purl.obolibrary.org/obo/MFOEM_000039" ] ] . |
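To make the effect of the mapping concrete, the following minimal Python sketch writes out the triples that the two triples maps would be expected to produce for rows of the PREDICTIONS table. It is not an R2RML processor. The helper name `rows_to_ntriples` is hypothetical, the prefixes are expanded to the namespaces used elsewhere in this article, and the sample row reuses values from the serialized results in Section 4.3.2.

```python
# Illustrative only: emulates the output of the two triples maps by hand.
# A real deployment would run the mapping through an R2RML engine.
EDM = "http://www.europeana.eu/schemas/edm/"
DC = "http://purl.org/dc/elements/1.1/"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
APPEALING_IRI = "http://purl.obolibrary.org/obo/MFOEM_000039"
ITEM_TEMPLATE = "https://www.europeana.eu/en/item/{IMAGE_NAME}"


def rows_to_ntriples(rows):
    """Return N-Triples lines for PREDICTIONS rows (hypothetical helper).

    Each row is a dict keyed by the column names used in the mapping:
    IMAGE_NAME, LABEL and LABEL_CONF. Values are inserted verbatim;
    no IRI or literal escaping is attempted in this sketch.
    """
    lines = []
    for row in rows:
        subject = "<" + ITEM_TEMPLATE.format(IMAGE_NAME=row["IMAGE_NAME"]) + ">"
        # TripleMap1: type the resource and attach both literal columns
        lines.append(f"{subject} <{RDF_TYPE}> <{EDM}webResource> .")
        for column in ("LABEL", "LABEL_CONF"):
            for predicate in (DC + "description", RDFS + "comment"):
                lines.append(f'{subject} <{predicate}> "{row[column]}" .')
        # TripleMap2: only rows labelled 'Appealing' get the ontology concept
        if row["LABEL"] == "Appealing":
            lines.append(f"{subject} <{DC}subject> <{APPEALING_IRI}> .")
    return lines


sample = [{
    "IMAGE_NAME": "2059511/data_foodanddrink_24255",
    "LABEL": "Appealing",
    "LABEL_CONF": "Appealing:95.69",
}]
triples = rows_to_ntriples(sample)
for line in triples:
    print(line)
```

Each row yields one type triple and four literal triples. Rows labelled "Appealing" additionally receive the `dc:subject` link to the ontology concept.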

3.3.2. Supporting Efficient Exploitation

4. Case Study

4.1. Phase-1: Preparation Phase

4.1.1. Understanding and Defining the Domain

4.1.2. Image Acquisition

4.1.3. Ontology Selection

4.2. Phase-2: Analysis Phase

4.2.1. Analysis of the Contents of the Images

4.2.2. Manual Annotation for Generating Training Data

4.2.3. Round-1

4.2.4. Round-2

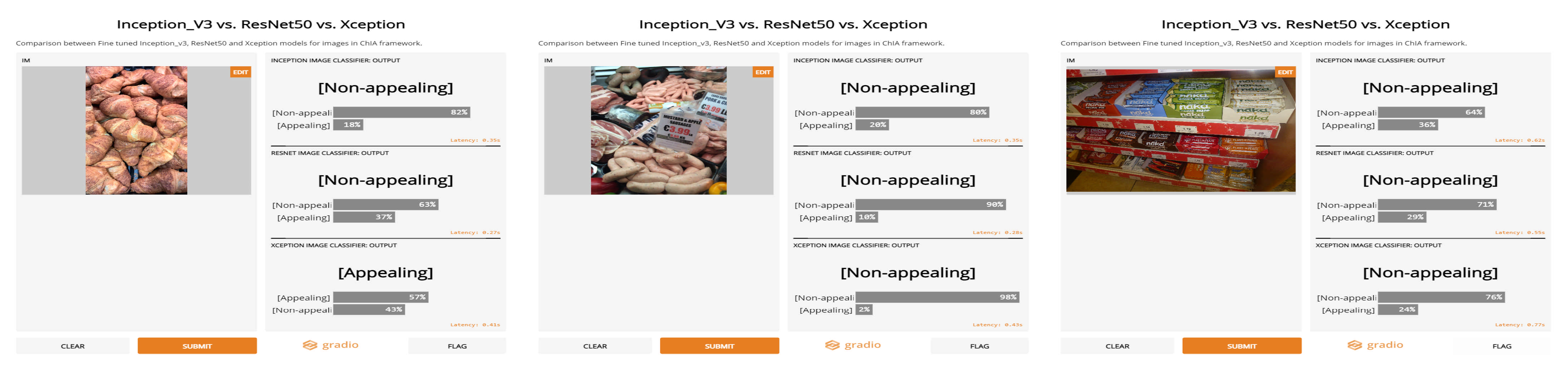

4.2.5. Round-3

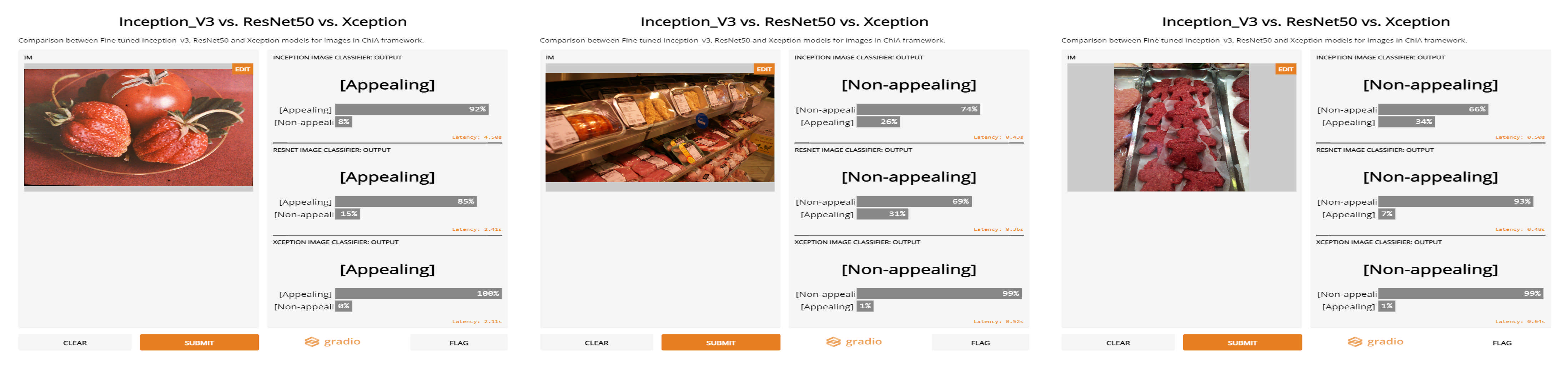

4.2.6. Round-4

4.3. Phase-3: Integration and Exploitation Phase

4.3.1. Moving towards Large Scale Annotation

4.3.2. Integration of Results

| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> a <http://www.europeana.eu/schemas/edm/webResource> ; <http://www.w3.org/2000/01/rdf-schema#comment> "Appealing" , "Appealing:95.69" ; <http://purl.org/dc/elements/1.1/description> "Appealing" , "Appealing:95.69" ; <http://purl.org/dc/elements/1.1/subject> <http://purl.obolibrary.org/obo/MFOEM_000039> . <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> a <http://www.europeana.eu/schemas/edm/webResource> ; <http://www.w3.org/2000/01/rdf-schema#comment> "Appealing" , "Appealing:96.33" ; <http://purl.org/dc/elements/1.1/description> "Appealing" , "Appealing:96.33" ; <http://purl.org/dc/elements/1.1/subject> <http://purl.obolibrary.org/obo/MFOEM_000039> . <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24245> a <http://www.europeana.eu/schemas/edm/webResource> ; <http://www.w3.org/2000/01/rdf-schema#comment> "Non-appealing" , "Non-appealing:81.24" ; <http://purl.org/dc/elements/1.1/description> "Non-appealing" , "Non-appealing:81.24" . <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24263> a <http://www.europeana.eu/schemas/edm/webResource> ; <http://www.w3.org/2000/01/rdf-schema#comment> "Appealing" , "Appealing:91.9" ; <http://purl.org/dc/elements/1.1/description> "Appealing" , "Appealing:91.9" ; <http://purl.org/dc/elements/1.1/subject> <http://purl.obolibrary.org/obo/MFOEM_000039> . |

4.3.3. Supporting Efficient Exploration

| prefix obo: <http://purl.obolibrary.org/obo/> prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> prefix dc: <http://purl.org/dc/elements/1.1/> prefix edm: <http://www.europeana.eu/schemas/edm/> select ?subject ?predicate ?object where{ ?subject ?predicate ?object. ?subject rdf:type edm:webResource. ?subject dc:subject obo:MFOEM_000039. } limit 15 |

| subject | predicate | object |
|---|---|---|
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | dc:subject | obo:MFOEM_000039 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | dc:description | Appealing |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | dc:description | Appealing:95.69 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | rdfs:comment | Appealing |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | rdfs:comment | Appealing:95.69 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24255> | rdf:type | edm:webResource |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | dc:subject | obo:MFOEM_000039 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | dc:description | Appealing |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | dc:description | Appealing:96.33 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | rdfs:comment | Appealing |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | rdfs:comment | Appealing:96.33 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24264> | rdf:type | edm:webResource |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24263> | dc:subject | obo:MFOEM_000039 |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24263> | dc:description | Appealing |
| <https://www.europeana.eu/en/item/2059511/data_foodanddrink_24263> | dc:description | Appealing:91.9 |
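The two constraints in the query (the subject is an `edm:webResource` and carries `dc:subject obo:MFOEM_000039`) can be illustrated without a triple store. The sketch below is a plain-Python emulation over a small in-memory list of triples, not a SPARQL engine. The function name `select_appealing` is hypothetical, and the sample triples are a subset of the serialized results in Section 4.3.2, written with the query's prefixes.

```python
# Triples as (subject, predicate, object), using the prefixes of the query.
ITEM = "https://www.europeana.eu/en/item/2059511/data_foodanddrink_"
triples = [
    (ITEM + "24255", "rdf:type", "edm:webResource"),
    (ITEM + "24255", "dc:description", "Appealing"),
    (ITEM + "24255", "dc:subject", "obo:MFOEM_000039"),
    (ITEM + "24245", "rdf:type", "edm:webResource"),
    (ITEM + "24245", "dc:description", "Non-appealing"),
]


def select_appealing(data, limit=15):
    """Return all triples of subjects matching both query constraints.

    Hypothetical helper: keeps input order, whereas a SPARQL endpoint
    may return rows in any order when no ORDER BY is given.
    """
    typed = {s for s, p, o in data
             if p == "rdf:type" and o == "edm:webResource"}
    tagged = {s for s, p, o in data
              if p == "dc:subject" and o == "obo:MFOEM_000039"}
    wanted = typed & tagged
    return [t for t in data if t[0] in wanted][:limit]


rows = select_appealing(triples)
for s, p, o in rows:
    print(s, p, o)
```

Only the image tagged with the ontology concept is returned. The image labelled "Non-appealing" has no `dc:subject` link and is therefore filtered out, which mirrors the result listing above.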

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image_Name | Label | Confidence |
|---|---|---|
| https://image1 | Concept 1 | 85% |
| https://image1 | Concept 2 | 100% |
| https://image1 | Concept 3 | 40% |
| ... | ... | ... |
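Predictions in this layout can be held in a relational table that serves as the logical table of the mapping in Section 3.3.1. The sketch below uses an in-memory SQLite database purely for illustration. The column names IMAGE_NAME, LABEL and LABEL_CONF come from that mapping, the sample values come from the serialized results in Section 4.3.2, and the rule that LABEL_CONF is the label and confidence joined by a colon is an assumption inferred from literals such as "Appealing:95.69".

```python
import sqlite3

# (image, label, confidence) rows; values reuse the results in Section 4.3.2
predictions = [
    ("2059511/data_foodanddrink_24255", "Appealing", 95.69),
    ("2059511/data_foodanddrink_24245", "Non-appealing", 81.24),
    ("2059511/data_foodanddrink_24264", "Appealing", 96.33),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE PREDICTIONS (IMAGE_NAME TEXT, LABEL TEXT, LABEL_CONF TEXT)"
)
# Assumption: LABEL_CONF stores "<label>:<confidence>"
conn.executemany(
    "INSERT INTO PREDICTIONS VALUES (?, ?, ?)",
    [(name, label, f"{label}:{conf}") for name, label, conf in predictions],
)

# Same filter as the SQL query in the second triples map
appealing = conn.execute(
    "SELECT IMAGE_NAME, LABEL_CONF FROM PREDICTIONS "
    "WHERE LABEL = 'Appealing' ORDER BY IMAGE_NAME"
).fetchall()
print(appealing)
conn.close()
```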

| | Round-1 | | | | | | Round-2 | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | A001 | A002 | A003 | A004 | A005 | | A001 | A002 | A004 | A005 | A006 |
| Task-1: Fruit/Non-fruit. | | | | | | | | | | | |
| A001 | 1.000 | 0.928 | 0.892 | 0.907 | 0.886 | A001 | 1.000 | 0.943 | 0.928 | 0.913 | 0.912 |
| A002 | 0.928 | 1.000 | 0.892 | 0.938 | 0.897 | A002 | 0.943 | 1.000 | 0.912 | 0.866 | 0.865 |
| A003 | 0.892 | 0.892 | 1.000 | 0.923 | 0.923 | A004 | 0.928 | 0.912 | 1.000 | 0.913 | 0.881 |
| A004 | 0.907 | 0.938 | 0.923 | 1.000 | 0.918 | A005 | 0.913 | 0.866 | 0.913 | 1.000 | 0.897 |
| A005 | 0.886 | 0.897 | 0.923 | 0.918 | 1.000 | A006 | 0.912 | 0.865 | 0.881 | 0.897 | 1.000 |
| Task-2: Formal/Informal. | | | | | | | | | | | |
| A001 | 1.000 | 0.330 | 0.252 | 0.316 | −0.091 | A001 | 1.000 | 0.168 | 0.255 | 0.167 | 0.125 |
| A002 | 0.330 | 1.000 | 0.210 | 0.306 | 0.153 | A002 | 0.168 | 1.000 | 0.095 | 0.419 | 0.489 |
| A003 | 0.252 | 0.210 | 1.000 | 0.051 | −0.031 | A004 | 0.255 | 0.095 | 1.000 | 0.089 | 0.208 |
| A004 | 0.316 | 0.306 | 0.051 | 1.000 | −0.028 | A005 | 0.167 | 0.419 | 0.089 | 1.000 | 0.520 |
| A005 | −0.091 | 0.153 | −0.031 | −0.028 | 1.000 | A006 | 0.125 | 0.489 | 0.208 | 0.520 | 1.000 |
| Task-3: Appealing/Non-appealing. | | | | | | | | | | | |
| A001 | 1.000 | 0.659 | 0.296 | 0.534 | 0.317 | A001 | 1.000 | 0.472 | 0.526 | 0.336 | 0.569 |
| A002 | 0.659 | 1.000 | 0.325 | 0.453 | 0.268 | A002 | 0.472 | 1.000 | 0.565 | 0.454 | 0.366 |
| A003 | 0.296 | 0.325 | 1.000 | 0.424 | 0.370 | A004 | 0.526 | 0.565 | 1.000 | 0.439 | 0.386 |
| A004 | 0.534 | 0.453 | 0.424 | 1.000 | 0.454 | A005 | 0.336 | 0.454 | 0.439 | 1.000 | 0.205 |
| A005 | 0.317 | 0.268 | 0.370 | 0.454 | 1.000 | A006 | 0.569 | 0.366 | 0.386 | 0.205 | 1.000 |
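The table itself does not name the agreement statistic. Assuming the pairwise scores are Cohen's kappa values, each cell can be computed from two annotators' labels for the same images, as in the minimal sketch below. The function name `cohen_kappa` and the ten sample annotations are hypothetical and are not data from the study.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both raters
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each rater's marginal label frequencies
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical annotations for ten images (not data from the study)
rater_1 = ["fruit", "fruit", "non-fruit", "fruit", "non-fruit",
           "fruit", "non-fruit", "non-fruit", "fruit", "fruit"]
rater_2 = ["fruit", "fruit", "non-fruit", "non-fruit", "non-fruit",
           "fruit", "non-fruit", "fruit", "fruit", "fruit"]
kappa = cohen_kappa(rater_1, rater_2)
print(round(kappa, 3))
```

Here the raters agree on 8 of 10 images (observed agreement 0.8), while their marginal frequencies give a chance agreement of 0.52, so kappa is (0.8 − 0.52) / (1 − 0.52) ≈ 0.583. A value of 1 indicates perfect agreement, and values near or below 0 indicate agreement no better than chance, which is how the negative entries in the table would be read under this assumption.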

| Concept | Definition |
|---|---|
| Fruit/Non-fruit | Fruit: a fruit is something that grows on a tree or bush and which contains seeds or a stone covered by a substance that you can eat. (e.g., strawberry, nut, tomato, peach, banana, green beans, melon, apple). Non-fruit: images that do not feature any type of fruit (for fruit definition see above) |
| Formal/Informal | Formal: arranged in a very controlled way or according to certain rules; an official situation or context. Informal: a relaxed environment, an unofficial situation or context, disorderly arrangement. |
| Appealing/Non-appealing | Appealing: an image that is a pleasure to look at. A food image that is pleasing to the eye, desirable to eat and good for food. Non-appealing: an image that is not a pleasure to look at. |

| | A001 | A002 | A004 | A005 | A006 | A007 |
|---|---|---|---|---|---|---|
| A001 | 1.000 | 0.293 | 0.335 | 0.330 | 0.164 | 0.274 |
| A002 | 0.293 | 1.000 | 0.475 | 0.483 | 0.190 | 0.042 |
| A004 | 0.335 | 0.475 | 1.000 | 0.648 | 0.156 | 0.082 |
| A005 | 0.330 | 0.483 | 0.648 | 1.000 | 0.123 | 0.061 |
| A006 | 0.164 | 0.190 | 0.156 | 0.123 | 1.000 | −0.025 |
| A007 | 0.274 | 0.042 | 0.082 | 0.061 | −0.025 | 1.000 |

| Model | Training Accuracy | Validation Accuracy | Test Accuracy |
|---|---|---|---|
| Fine tuned ResNet50 | 83.51% | 83.81% | 80% |
| Fine tuned Inception_V3 | 92.61% | 87.62% | 90% |
| Fine tuned Xception | 93.2% | 88.1% | 85.56% |
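The criterion used to pick the best performing model is not stated alongside the table, so the sketch below simply ranks the three fine-tuned models by a chosen accuracy column. The `Result` type and the `best_by` helper are hypothetical, and the accuracies are copied from the table above.

```python
from typing import NamedTuple


class Result(NamedTuple):
    model: str
    train: float
    validation: float
    test: float


# Accuracies (%) copied from the table above
results = [
    Result("Fine tuned ResNet50", 83.51, 83.81, 80.0),
    Result("Fine tuned Inception_V3", 92.61, 87.62, 90.0),
    Result("Fine tuned Xception", 93.2, 88.1, 85.56),
]


def best_by(metric, rows):
    """Return the row with the highest value of the named accuracy column."""
    return max(rows, key=lambda row: getattr(row, metric))


best_val = best_by("validation", results)
best_test = best_by("test", results)
print("best on validation:", best_val.model)
print("best on test:", best_test.model)
```

For these figures the two criteria disagree: Xception leads on validation accuracy, while Inception_V3 leads on test accuracy. The selection rule therefore needs to be fixed before the results are inspected.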

| | A001 | A002 | A004 | A005 | A006 |
|---|---|---|---|---|---|
| A001 | 1.000 | 0.223 | 0.287 | 0.057 | 0.293 |
| A002 | 0.223 | 1.000 | 0.245 | 0.090 | 0.163 |
| A004 | 0.287 | 0.245 | 1.000 | 0.133 | 0.200 |
| A005 | 0.057 | 0.090 | 0.133 | 1.000 | 0.085 |
| A006 | 0.293 | 0.163 | 0.200 | 0.085 | 1.000 |

| Model | Training Accuracy | Validation Accuracy | Test Accuracy |
|---|---|---|---|
| Fine tuned ResNet50 | 87.47% | 84.5% | 83.85% |
| Fine tuned Inception_V3 | 81.68% | 85.5% | 88.46% |
| Fine tuned Xception | 95.98% | 88.5% | 90.77% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abgaz, Y.; Rocha Souza, R.; Methuku, J.; Koch, G.; Dorn, A. A Methodology for Semantic Enrichment of Cultural Heritage Images Using Artificial Intelligence Technologies. J. Imaging 2021, 7, 121. https://doi.org/10.3390/jimaging7080121
