Time- and Resource-Efficient Time-to-Collision Forecasting for Indoor Pedestrian Obstacles Avoidance

Abstract

1. Introduction

1.1. Technical Context

1.2. Related Work

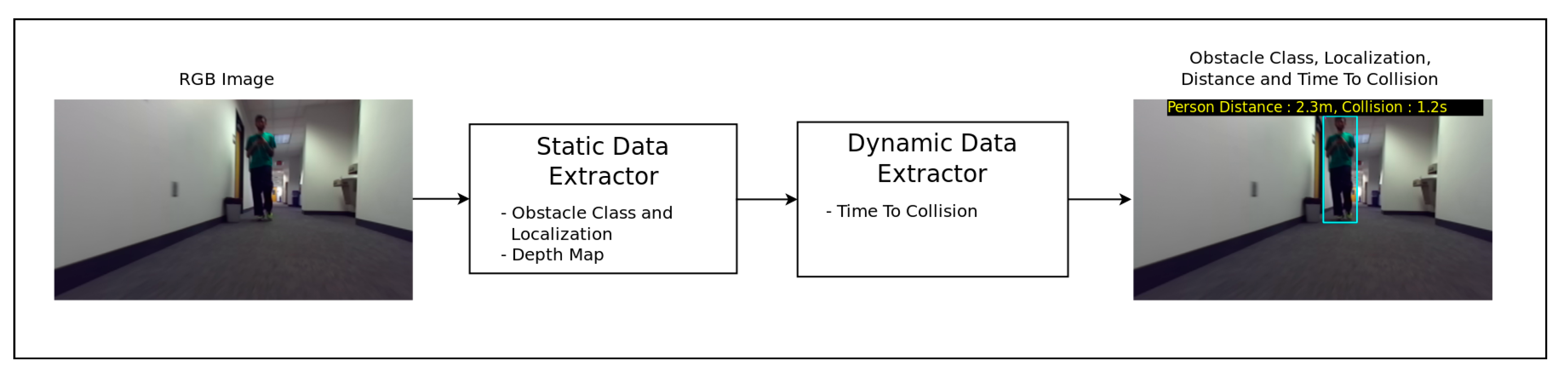

2. Proposed Model

2.1. Obstacle Detection Algorithm for the SmartGlasses Navigation System Application

- A static data extractor that computes the scene depth map and detects and localizes obstacles;

- A dynamic data extractor that stacks the data computed by the first module over multiple frames and uses the resulting temporal cues to predict the Time-to-Collision with the closest detected obstacle.
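The two-module pipeline above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The per-frame features (median depth, minimum depth, normalized box area), the ReLU fully connected regressor, and the names `extract_static_features`, `init_mlp` and `mlp_regressor` are all hypothetical. The "M layers of K neurons" parameterization is borrowed from the layout of the network-configuration grid reported in the results. The weights are random, so the output only demonstrates the data flow and is not a meaningful Time-to-Collision.

```python
import numpy as np


def extract_static_features(depth_map, box):
    """Hypothetical per-frame descriptor of the closest obstacle.

    Returns the median depth, the minimum depth inside the bounding box and
    the box area as a fraction of the image area.
    """
    x1, y1, x2, y2 = box
    patch = depth_map[y1:y2, x1:x2]
    h, w = depth_map.shape
    area = (x2 - x1) * (y2 - y1) / (h * w)
    return np.array([np.median(patch), patch.min(), area])


def init_mlp(in_dim, k_neurons, m_layers, rng):
    """Random weights for m_layers hidden layers of k_neurons each, scalar output."""
    dims = [in_dim] + [k_neurons] * m_layers + [1]
    weights = [rng.normal(0.0, 0.1, (a, b)) for a, b in zip(dims[:-1], dims[1:])]
    biases = [np.zeros(b) for b in dims[1:]]
    return weights, biases


def mlp_regressor(x, weights, biases):
    """Forward pass: ReLU hidden layers followed by a linear output unit."""
    for W, b in zip(weights[:-1], biases[:-1]):
        x = np.maximum(0.0, x @ W + b)
    return float((x @ weights[-1] + biases[-1])[0])


# Toy run: six synthetic depth frames with a fixed obstacle box.
rng = np.random.default_rng(0)
n_frames = 6
depth_frames = [rng.uniform(1.0, 5.0, (120, 160)) for _ in range(n_frames)]
boxes = [(60, 40, 100, 100)] * n_frames
# Dynamic stage input: static features stacked along the time axis.
stacked = np.concatenate(
    [extract_static_features(d, b) for d, b in zip(depth_frames, boxes)]
)
weights, biases = init_mlp(stacked.size, k_neurons=60, m_layers=8, rng=rng)
ttc = mlp_regressor(stacked, weights, biases)  # untrained, so not a real TTC
```

Keeping the static features compact (a few numbers per frame rather than full images) is what makes stacking many frames cheap in this sketch.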

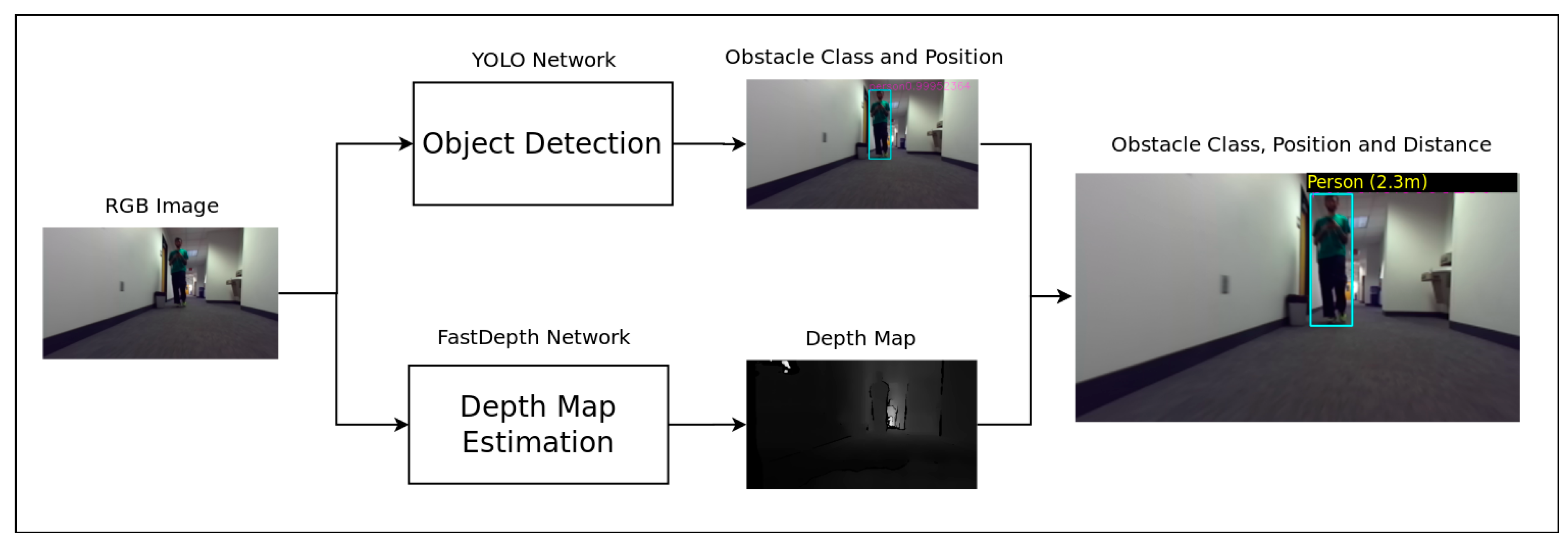

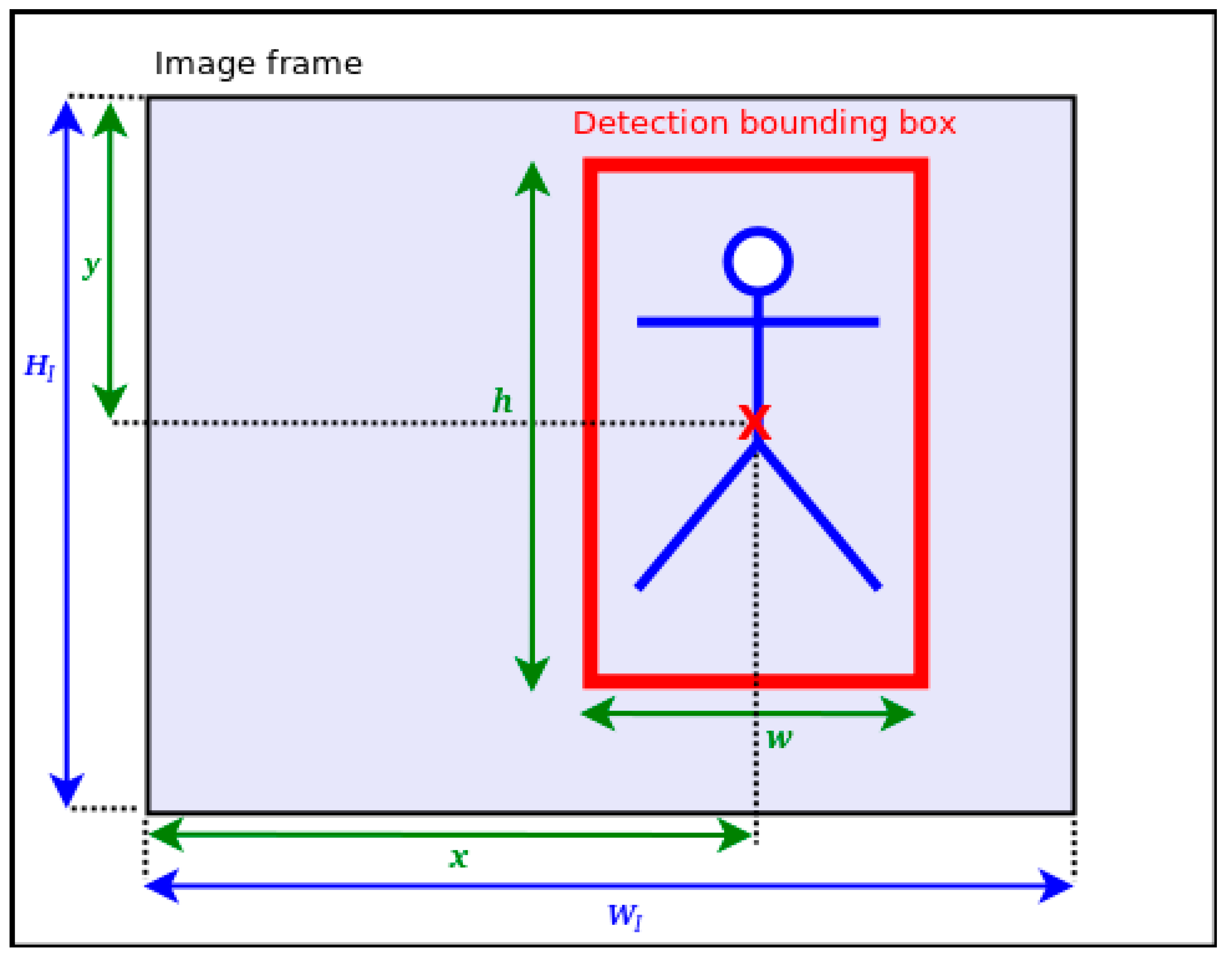

2.1.1. Static Data Extractor

- “Person”: this class represents the mobile obstacles the smartglasses user would encounter (pedestrians, vehicles).

- “Chair”, “desk”: these classes are examples of “static” obstacles the smartglasses user would have to avoid in his/her path.
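One way to combine these detector classes with the depth map to pick out the closest obstacle is sketched below. It assumes that an obstacle's distance can be summarized by the median depth inside its bounding box and that detections arrive as `(label, box)` pairs. The class grouping and the function `closest_obstacle` are hypothetical illustrations, not the paper's code.

```python
import numpy as np

# Hypothetical grouping of detector labels into the two obstacle types above.
MOBILE_CLASSES = {"person"}
STATIC_CLASSES = {"chair", "desk"}


def closest_obstacle(depth_map, detections):
    """Return (label, box, median_depth, kind) of the nearest detection, or None.

    detections: list of (label, (x1, y1, x2, y2)) in pixel coordinates.
    """
    best = None
    for label, (x1, y1, x2, y2) in detections:
        if label in MOBILE_CLASSES:
            kind = "mobile"
        elif label in STATIC_CLASSES:
            kind = "static"
        else:
            continue  # not an obstacle class of interest
        patch = depth_map[y1:y2, x1:x2]
        if patch.size == 0:
            continue  # degenerate box
        # The median is less sensitive than the mean to background pixels in the box.
        d = float(np.median(patch))
        if best is None or d < best[2]:
            best = (label, (x1, y1, x2, y2), d, kind)
    return best


# Synthetic scene: a person at 1.5 m, a chair at 2.5 m, background at 4 m.
depth = np.full((100, 100), 4.0)
depth[20:60, 10:40] = 1.5
depth[50:90, 60:95] = 2.5
detections = [("person", (10, 20, 40, 60)), ("chair", (60, 50, 95, 90)), ("tv", (0, 0, 5, 5))]
result = closest_obstacle(depth, detections)
```

Here `result` is the person detection at 1.5 m, tagged as a mobile obstacle; the "tv" box is ignored because it belongs to neither group.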

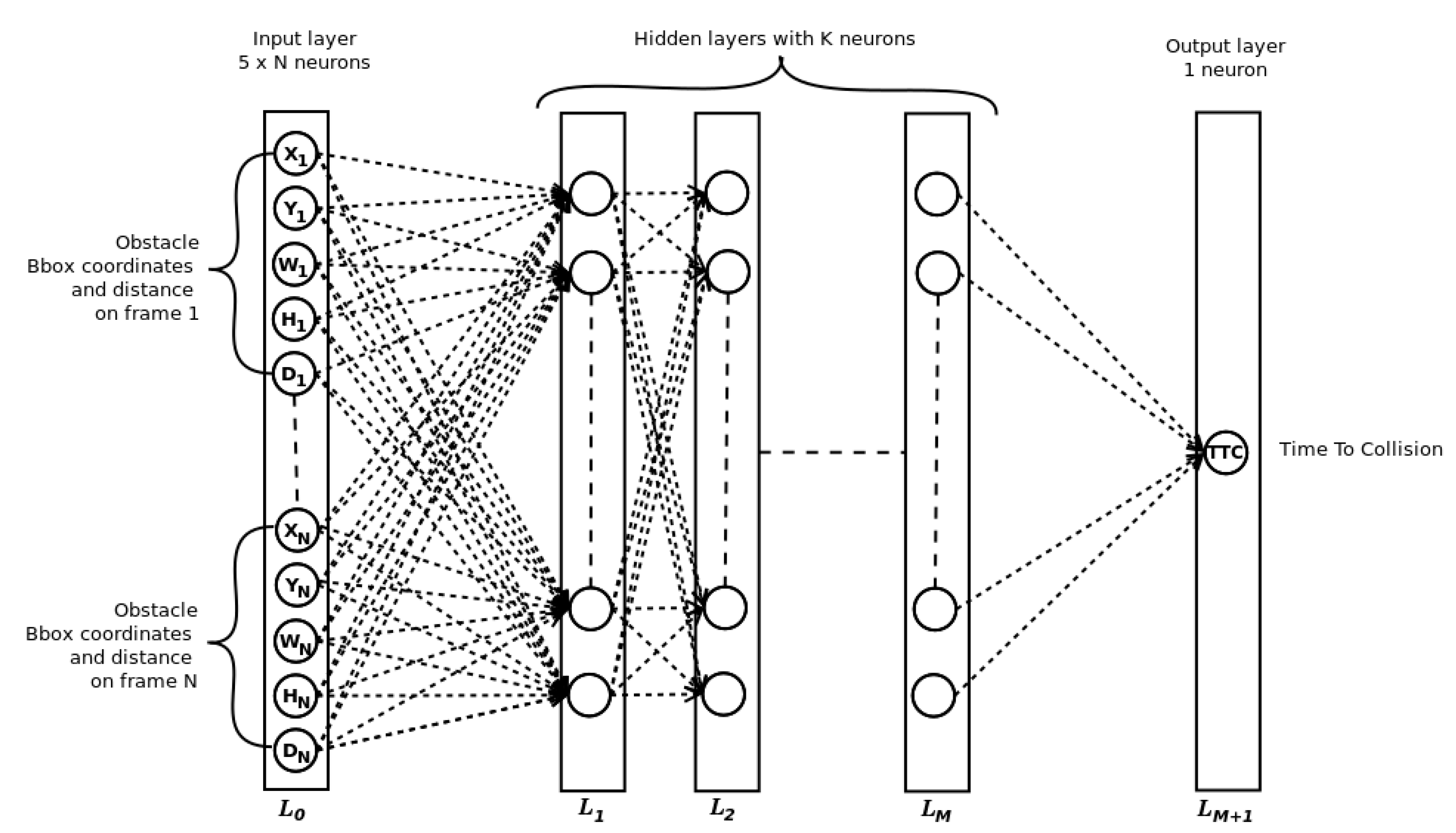

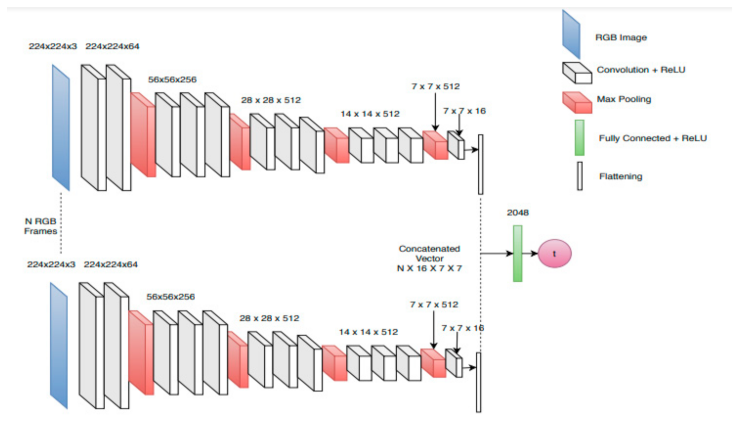

2.1.2. Dynamic Data Extractor

2.2. Datasets

- The RGB video sequence with the incoming obstacles in the view;

- The corresponding depth map, obtained either from a stereo camera disparity map, from LIDAR point cloud coordinates, or from the output of a monocular depth estimation algorithm;

- The bounding box of the obstacles annotated either manually or with the help of a state-of-the-art detection algorithm;

- The ground-truth Time-to-Collision value for each frame at which a prediction is made (we treat Time-to-Collision estimation as a regression problem).
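A sketch of how the four components of a training sample might be assembled is given below. Two pieces are assumptions, not statements about how these datasets were built. The first is the standard rectified-stereo relation $Z = fB/d$ (focal length $f$ in pixels, baseline $B$, disparity $d$), used for the stereo option. The second is a per-frame Time-to-Collision label derived from a known frame of contact at a constant frame rate. `TTCSample`, `disparity_to_depth` and `ttc_labels` are hypothetical names.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class TTCSample:
    rgb: np.ndarray    # H x W x 3 video frame
    depth: np.ndarray  # H x W depth map, in metres
    box: tuple         # (x1, y1, x2, y2) of the obstacle
    ttc: float         # regression target, in seconds


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Rectified stereo: Z = f * B / d; zero disparity maps to infinity."""
    disparity = np.asarray(disparity, dtype=np.float64)
    with np.errstate(divide="ignore"):
        return np.where(disparity > 0, focal_px * baseline_m / disparity, np.inf)


def ttc_labels(n_frames, collision_frame, fps):
    """TTC in seconds for frames 0..n_frames-1, assuming contact at collision_frame."""
    t = np.arange(n_frames)
    return (collision_frame - t) / fps


depths = disparity_to_depth([10.0, 0.0], focal_px=500.0, baseline_m=0.1)
labels = ttc_labels(n_frames=5, collision_frame=4, fps=2.0)
sample = TTCSample(
    rgb=np.zeros((4, 4, 3)), depth=np.ones((4, 4)), box=(0, 0, 2, 2), ttc=float(labels[0])
)
```

With these inputs, `depths` is `[5.0, inf]` and `labels` counts down `[2.0, 1.5, 1.0, 0.5, 0.0]` seconds to the contact frame.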

3. Experimental Results

3.1. Network Configuration

3.2. Number of Input Frames

3.3. Time-to-Collision Estimation Results on the Near-Collision Test Set

3.4. Results on the Acquired Test Set

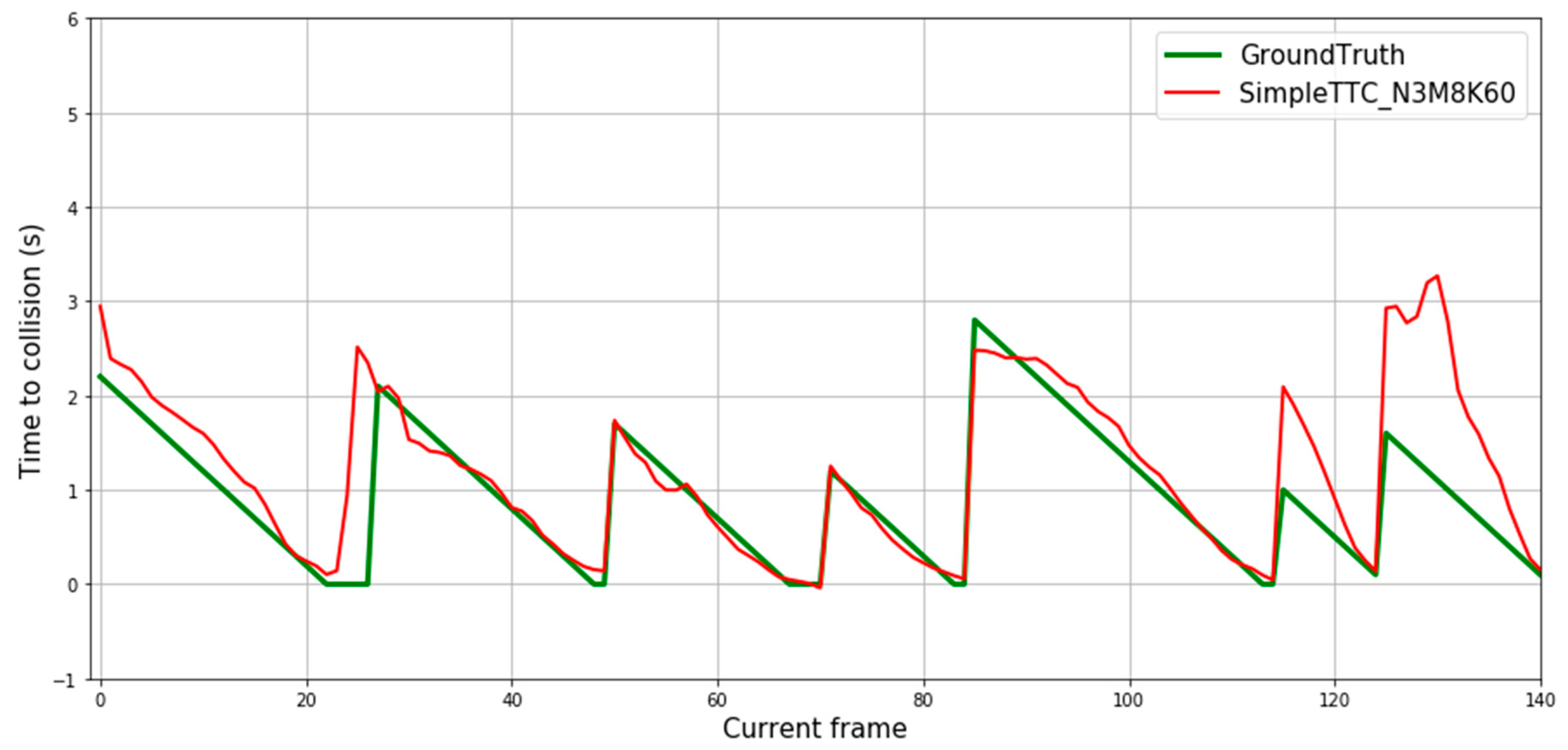

3.4.1. Results on Moving Obstacles

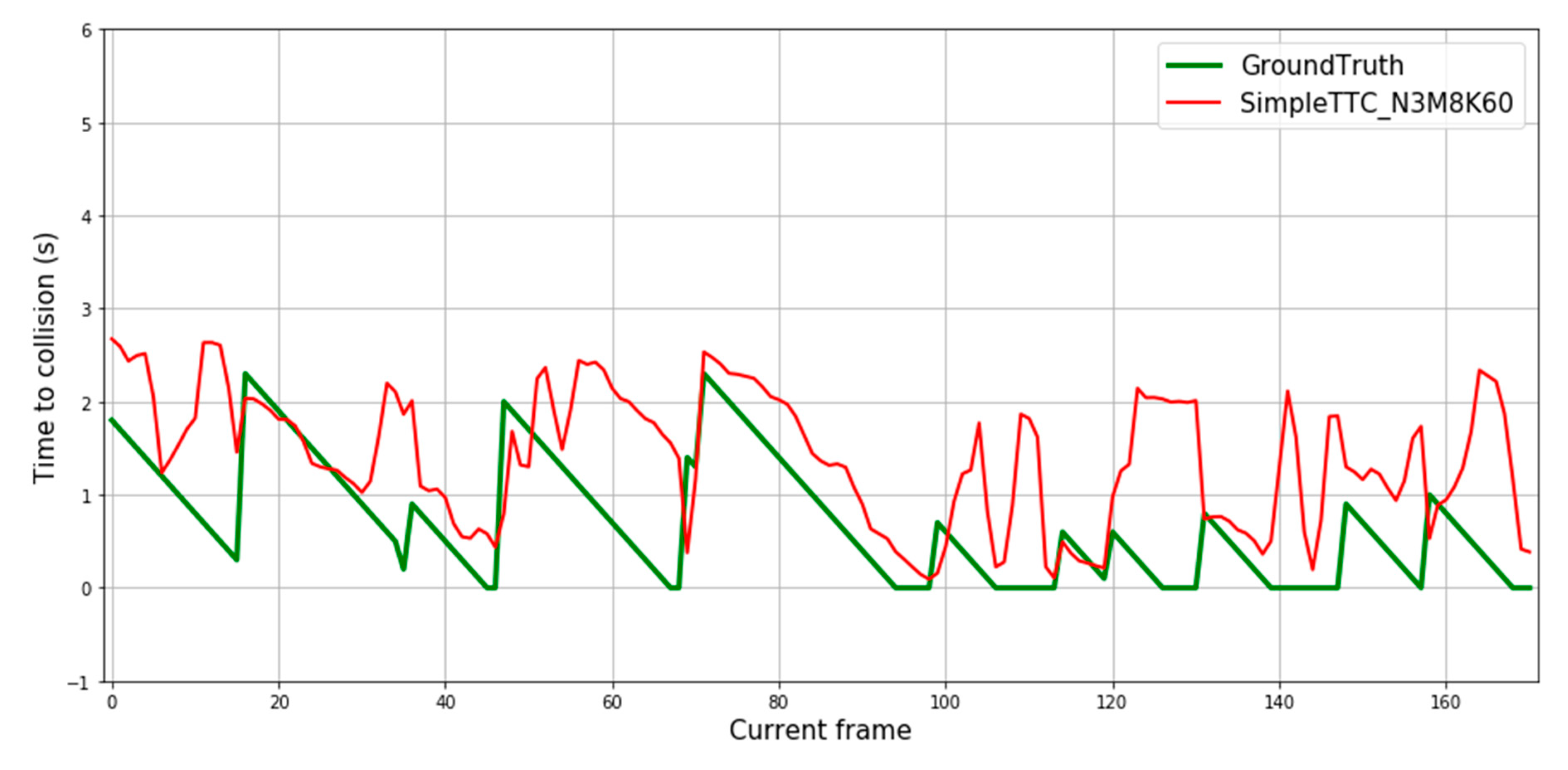

3.4.2. Results on Static Obstacles

3.5. Computational Efficiency

3.6. Comparison with the Near-Collision Network

3.7. Comparison with an Improved Version of the Near-Collision Network

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| K Neurons \ M Layers (mean ± std, s) | 2 | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| 10 | 0.383 ± 0.440 | 0.387 ± 0.414 | 0.334 ± 0.381 | 1.140 ± 0.796 | 1.135 ± 0.816 |
| 20 | 0.361 ± 0.395 | 0.368 ± 0.419 | 0.394 ± 0.425 | 0.332 ± 0.384 | 1.140 ± 0.797 |
| 30 | 0.358 ± 0.410 | 0.366 ± 0.417 | 0.335 ± 0.395 | 0.338 ± 0.390 | 1.138 ± 0.803 |
| 40 | 0.361 ± 0.413 | 0.368 ± 0.416 | 0.334 ± 0.385 | 0.334 ± 0.396 | 1.140 ± 0.797 |
| 50 | 0.339 ± 0.383 | 0.365 ± 0.405 | 0.324 ± 0.383 | 0.340 ± 0.400 | 0.352 ± 0.414 |
| 60 | 0.353 ± 0.405 | 0.359 ± 0.405 | 0.339 ± 0.394 | 0.320 ± 0.375 | 0.328 ± 0.395 |
| 70 | 0.333 ± 0.374 | 0.354 ± 0.422 | 0.339 ± 0.396 | 0.323 ± 0.389 | 0.333 ± 0.399 |
| 80 | 0.341 ± 0.388 | 0.356 ± 0.418 | 0.350 ± 0.408 | 0.324 ± 0.391 | 0.321 ± 0.386 |
| 90 | 0.334 ± 0.373 | 0.340 ± 0.408 | 0.334 ± 0.395 | 0.340 ± 0.407 | 0.335 ± 0.406 |
| 100 | 0.367 ± 0.404 | 0.362 ± 0.407 | 0.344 ± 0.408 | 0.326 ± 0.387 | 0.324 ± 0.387 |
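Reading the grid programmatically makes the selection criterion explicit. The snippet below copies only the mean value of each cell from the table above and picks the configuration with the lowest mean error. Selecting on the mean alone, ignoring the standard deviation, is an assumption made for illustration.

```python
import numpy as np

layers = [2, 4, 6, 8, 10]                          # M (columns)
neurons = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]  # K (rows)
# Mean values copied from the table above (rows: K neurons, columns: M layers).
mean = np.array([
    [0.383, 0.387, 0.334, 1.140, 1.135],
    [0.361, 0.368, 0.394, 0.332, 1.140],
    [0.358, 0.366, 0.335, 0.338, 1.138],
    [0.361, 0.368, 0.334, 0.334, 1.140],
    [0.339, 0.365, 0.324, 0.340, 0.352],
    [0.353, 0.359, 0.339, 0.320, 0.328],
    [0.333, 0.354, 0.339, 0.323, 0.333],
    [0.341, 0.356, 0.350, 0.324, 0.321],
    [0.334, 0.340, 0.334, 0.340, 0.335],
    [0.367, 0.362, 0.344, 0.326, 0.324],
])

# Lowest mean error over the whole grid.
i, j = np.unravel_index(np.argmin(mean), mean.shape)
best_k, best_m = neurons[i], layers[j]

# Cells whose mean error exceeds 1 s stand out from the rest of the grid.
high_error = [(neurons[a], layers[b]) for a, b in zip(*np.where(mean > 1.0))]
```

Under this criterion the best cell is K = 60, M = 8 (0.320 s), the same value reported for SimpleTTC in the input-data comparison. Several other cells (0.321 to 0.324 s) lie within a few milliseconds of it, far inside the reported standard deviations. The five cells above 1 s all combine few neurons (K ≤ 40) with many layers (M = 8 or 10).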

| Number of Frames | Mean (s) | Std (s) |
|---|---|---|
| 2 | 0.350 | 0.435 |
| 3 | 0.327 | 0.389 |
| 4 | 0.321 | 0.376 |
| 5 | 0.332 | 0.398 |
| 6 | 0.320 | 0.375 |
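The number of input frames N determines how many per-frame feature vectors are concatenated into each input. The sketch below builds overlapping N-frame windows from a sequence. It assumes per-frame features are already available as a `(T, D)` array and that each window predicts the Time-to-Collision at its last frame; `stack_windows` is a hypothetical helper, not the paper's code.

```python
import numpy as np


def stack_windows(features, n_frames):
    """Stack per-frame feature vectors into overlapping windows of n_frames.

    features: (T, D) array. Returns a (T - n_frames + 1, n_frames * D) array
    whose row i concatenates frames i .. i + n_frames - 1 in temporal order.
    """
    features = np.asarray(features)
    T, D = features.shape
    if T < n_frames:
        return np.empty((0, n_frames * D), dtype=features.dtype)
    # Window shape spans n_frames rows and all D columns -> (T-n+1, 1, n, D).
    windows = np.lib.stride_tricks.sliding_window_view(features, (n_frames, D))
    return windows.reshape(T - n_frames + 1, n_frames * D)


features = np.arange(12).reshape(6, 2)  # 6 frames, 2 features each
windows = stack_windows(features, n_frames=4)
```

Here `windows` has shape `(3, 8)`: the first row holds frames 0 to 3 (`0..7`) and the last row frames 2 to 5 (`4..11`). With N-frame windows, the first prediction of a sequence is only available after N frames have been observed. By the table above, 4 frames (0.321 s) therefore cost only 0.001 s of mean error relative to 6 frames (0.320 s) while needing two fewer frames before the first prediction.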

| Input Data | Mean (s) | Std (s) |
|---|---|---|
| RGB | 0.846 | 0.666 |
| Masked RGB | 0.672 | 0.567 |
| SimpleTTC (Ours) | 0.320 | 0.375 |
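The relative reduction in mean error implied by the table above can be computed directly. This is plain arithmetic on the reported means; given the large standard deviations, it says nothing about statistical significance.

```python
# Mean errors (s) copied from the input-data comparison table.
baseline = {"RGB": 0.846, "Masked RGB": 0.672}
ours = 0.320

# Percentage reduction of the mean error relative to each baseline input.
reduction = {name: 100.0 * (err - ours) / err for name, err in baseline.items()}
```

This gives a reduction of about 62.2% relative to RGB input and about 52.4% relative to masked RGB input.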

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Urban, D.; Caplier, A. Time- and Resource-Efficient Time-to-Collision Forecasting for Indoor Pedestrian Obstacles Avoidance. J. Imaging 2021, 7, 61. https://doi.org/10.3390/jimaging7040061